Extensibility of U-Net Neural Network Model for Hydrographic Feature Extraction and Implications for Hydrologic Modeling

Abstract

1. Introduction

2. Materials and Methods

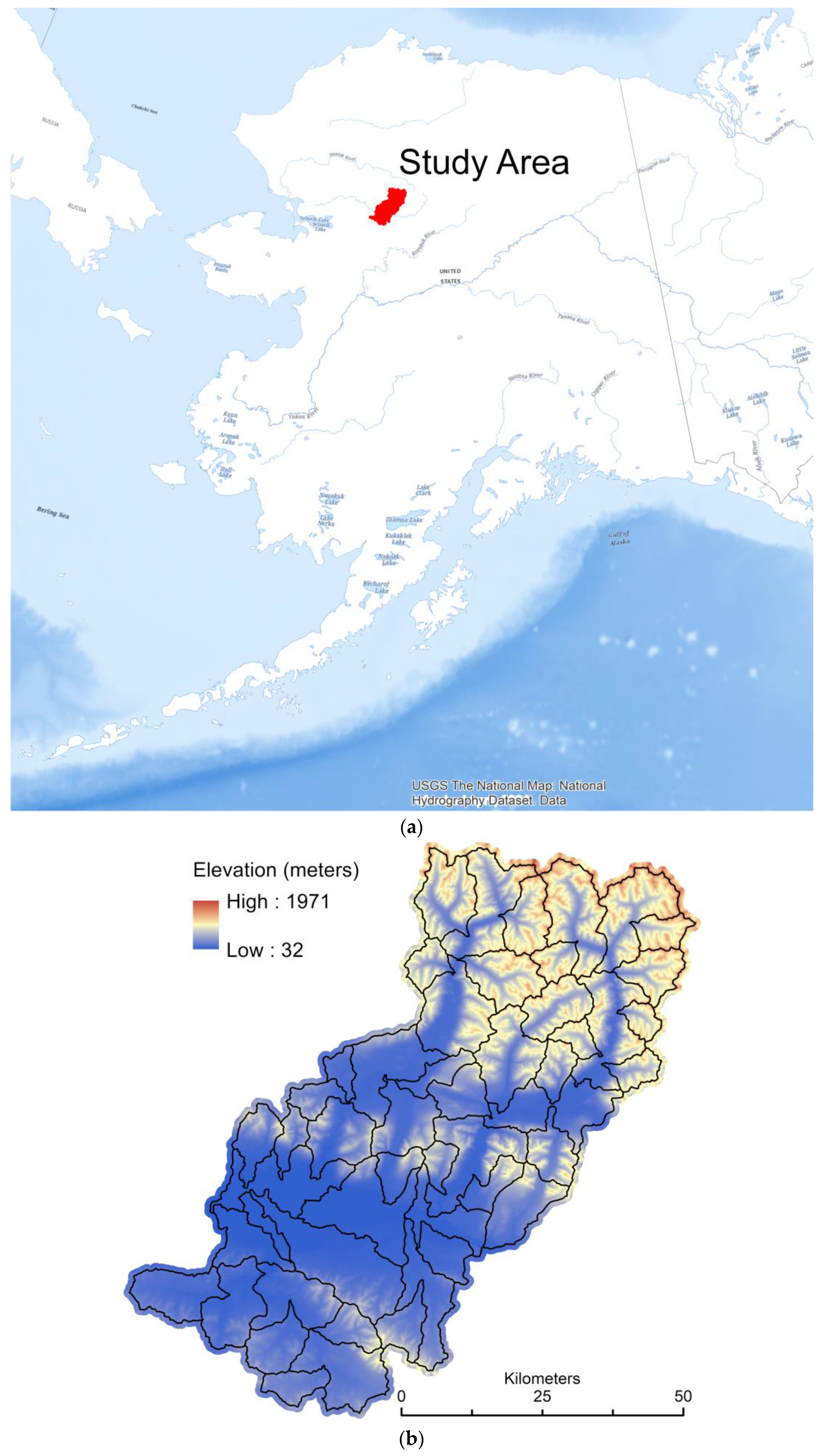

2.1. Study Area and Data

2.1.1. IfSAR and Auxiliary Image Data

2.1.2. Reference Hydrography

2.2. Input Feature Layers

2.3. U-Net Model Architecture

Selection of Training Samples

2.4. HPC Processing Environment

2.5. Design for Extensibility

2.6. Accuracy Metrics

2.7. Significance of Layers

2.8. Weighted Flow Accumulation Network Extraction

3. Results and Discussion

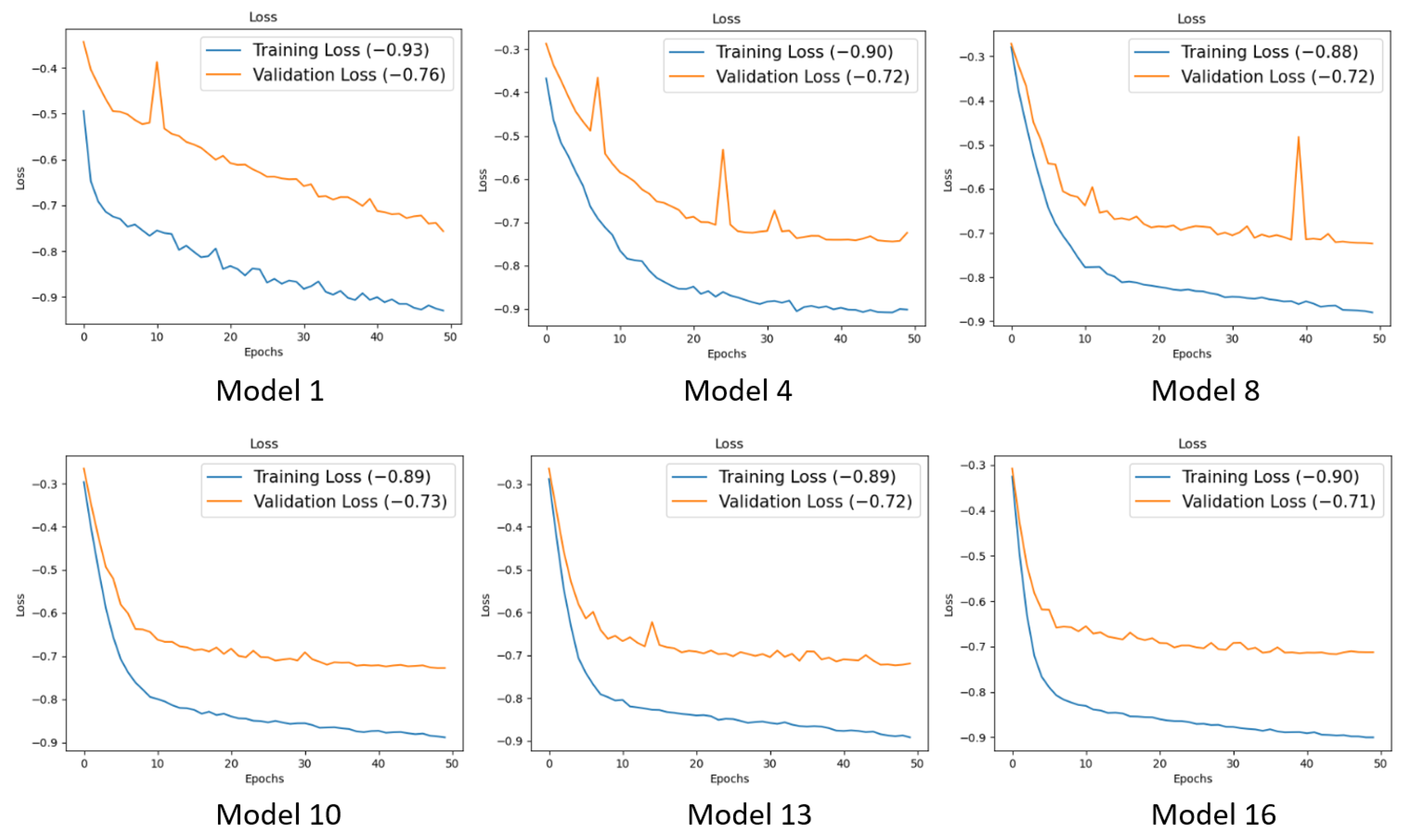

3.1. Model Training

3.2. Model Test Results

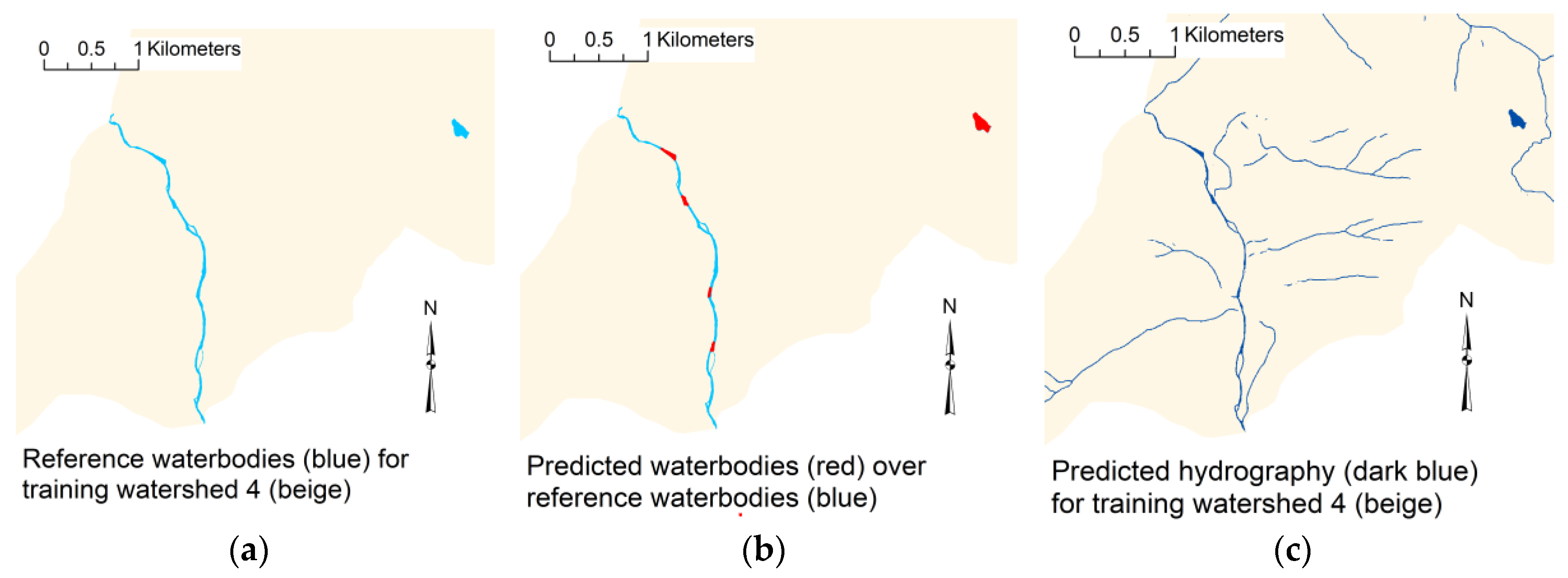

3.2.1. Model Waterbody Tests

3.2.2. Spatial Relations of Model Results

3.2.3. Review of Reference Hydrography

3.3. Significance of Model Layers

3.4. Flow Accumulation Network Extraction

4. Summary and Recommendations

- Hydrography prediction accuracies averaging near 70 percent can be achieved by training the described U-Net model on about 15 percent of the project area, using reference data of the same quality as that used in this study. Little is gained by including training data beyond 15 percent of the study area.

- Evaluation of predicted waterbodies yields F1-scores averaging 77 percent for the tested watersheds. Accuracies are positively correlated with the area-to-line ratio of hydrographic content in the watersheds; that is, U-Net waterbody predictions are highly accurate for watersheds with larger waterbodies but less accurate for watersheds composed mostly of finely detailed waterbodies and drainage channels.

- Precision values are 7 to 30 percent higher than recall values, which indicates that predicted water pixels are likely to fall within reference water pixels; however, the models do not predict enough water pixels.

- Layer significance testing indicates that the SWM (2-D shallow-water channel depth model) layer contributes the most information to the U-Net model predictions, averaging 71 to 93 percent, which is more than 20 percent higher than the next most influential layer.

- Augmenting U-Net predictions with D-8 flow accumulation network features improves connectivity, which increases recall but decreases precision by a larger margin, leading to F1-scores averaging 63 percent, about 5 percent lower than predictions without augmentation. Comparisons with satellite image data and with the most influential layer, SWM, indicate that predicted flow paths and reference hydrography both follow probable flow paths but sometimes take alternate routes.

- (1) Better verification of reference hydrography data, or use of hydrographic features compiled at a higher level of detail than 1:24,000 scale (24k);

- (2) Eliminating uncertain reference features from training data, and ensuring that training windows have minimal overlap and are distributed over the full range of conditions, with consideration of the area-to-line ratios of hydrographic feature content; and

- (3) Continuing model training until a learning rate plateau is reached.
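The D-8 augmentation discussed in the summary routes each cell's flow to its single steepest downslope neighbor and then accumulates contributing cells downstream. The following is a minimal pure-Python sketch of that idea, assuming a small, already hydrologically conditioned DEM held in nested lists (no depression filling or flat resolution is attempted). The function names and the optional per-cell `weights` argument, which hints at how a weighted flow accumulation could be formed (for example, from model water probabilities), are illustrative assumptions rather than the processing used in the study.

```python
import math

# D-8 neighbor offsets as (row, col) steps: E, SE, S, SW, W, NW, N, NE.
OFFSETS = [(0, 1), (1, 1), (1, 0), (1, -1), (0, -1), (-1, -1), (-1, 0), (-1, 1)]


def d8_receivers(dem):
    """For each cell, return the (row, col) of its steepest downslope
    neighbor, or None when no neighbor is lower (pits and flats)."""
    rows, cols = len(dem), len(dem[0])
    receivers = [[None] * cols for _ in range(rows)]
    for r in range(rows):
        for c in range(cols):
            best_slope, best = 0.0, None
            for dr, dc in OFFSETS:
                nr, nc = r + dr, c + dc
                if 0 <= nr < rows and 0 <= nc < cols:
                    # Drop divided by distance: 1 for cardinal, sqrt(2) for diagonal steps.
                    slope = (dem[r][c] - dem[nr][nc]) / math.hypot(dr, dc)
                    if slope > best_slope:
                        best_slope, best = slope, (nr, nc)
            receivers[r][c] = best
    return receivers


def flow_accumulation(dem, weights=None):
    """Accumulate flow downstream along D-8 directions. Each cell starts with
    its own weight (1.0 when weights is None, i.e., a plain cell count)."""
    rows, cols = len(dem), len(dem[0])
    receivers = d8_receivers(dem)
    acc = [[1.0 if weights is None else float(weights[r][c]) for c in range(cols)]
           for r in range(rows)]
    # A receiver is always strictly lower than its donor, so visiting cells from
    # highest to lowest guarantees each donor is finished before it is passed on.
    order = sorted(((dem[r][c], r, c) for r in range(rows) for c in range(cols)),
                   reverse=True)
    for _, r, c in order:
        target = receivers[r][c]
        if target is not None:
            acc[target[0]][target[1]] += acc[r][c]
    return acc


if __name__ == "__main__":
    ramp = [[5, 4, 3], [4, 3, 2], [3, 2, 1]]
    for row in flow_accumulation(ramp):
        print(row)
```

Running it prints `[1.0, 1.0, 1.0]`, `[1.0, 2.0, 3.0]`, and `[1.0, 3.0, 9.0]`: every cell of the ramp drains to the lowest corner, which accumulates all nine cells. Channel networks are typically extracted by thresholding such an accumulation grid; the threshold is a modeling choice not specified here.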

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

Disclaimer

References

1. Maidment, D.R. Conceptual framework for the national flood interoperability experiment. J. Am. Water Resour. Assoc. 2016, 53, 245–257.

2. Chen, B.; Krajewski, W.; Goska, R.; Young, N. Using LiDAR surveys to document floods: A case study of the 2008 Iowa flood. J. Hydrol. 2017, 553, 338–349.

3. Regan, R.S.; Juracek, K.E.; Hay, L.E.; Markstrom, S.L.; Viger, R.J.; Driscoll, J.M.; Lafontaine, J.H.; Norton, P.A. The U.S. Geological Survey National Hydrologic Model infrastructure: Rationale, description, and application of a watershed-scale model for the conterminous United States. Environ. Model. Softw. 2019, 111, 192–203.

4. Simley, J.D.; Carswell, W.J., Jr. The National Map–Hydrography. U.S. Geological Survey Fact Sheet; 2009. Available online: http://pubs.usgs.gov/fs/2009/3054/ (accessed on 30 April 2020).

5. Wright, W.; Nielsen, B.; Mullen, J.; Dowd, J. Agricultural groundwater policy during drought: A spatially differentiated approach for Flint River Basin. In Proceedings of the Agricultural and Applied Economics Association 2012 Annual Meeting, Seattle, WA, USA, 12–14 August 2012.

6. Schultz, L.D.; Heck, M.P.; Hockman-Wert, D.; Allai, T.; Wenger, S.; Cook, N.A.; Dunham, J.B. Spatial and temporal variability in the effects of wildfire and drought on thermal habitat for a desert trout. J. Arid. Environ. 2017, 145, 60–68.

7. Poppenga, S.K.; Gesch, D.B.; Worstell, B.B. Hydrography change detection: The usefulness of surface channels derived from LiDAR DEMS for updating mapped hydrography. J. Am. Water Resour. Assoc. 2013, 49, 371–389.

8. Terziotti, S.; Adkins, K.; Aichele, S.; Anderson, R.; Archuleta, C. Testing the waters: Integrating hydrography and elevation in national hydrography mapping. AWRA Water Resour. IMPACT 2018, 20, 28–29.

9. Maune, D.F. Digital Elevation Model (DEM) Data for the Alaska Statewide Digital Mapping Initiative (SDMI). Alaska DEM Workshop Whitepaper; 2008. Available online: http://agc.dnr.alaska.gov/documents/Alaska_SDMI_DEM_Whitepaper_Final.pdf (accessed on 30 April 2020).

10. Montgomery, L. Alaska’s Outdated Maps Make Flying a Peril, But High-Tech Fix Is Gaining Ground. Anchorage Daily News, 15 October 2014. Available online: https://www.adn.com/aviation/article/alaska-s-outdated-maps-make-flying-peril-high-tech-fix-gaining-ground/2014/10/15/ (accessed on 10 May 2021).

11. Clubb, F.J.; Mudd, S.M.; Milodowski, D.T.; Hurst, M.D.; Slater, L.J. Objective extraction of channel heads from high-resolution topographic data. Water Resour. Res. 2014, 50, 5.

12. Passalacqua, P.; Do Trung, T.; Foufoula-Georgiou, E.; Sapiro, G.; Dietrich, W.E. A geometric framework for channel network extraction from lidar: Nonlinear diffusion and geodesic paths. J. Geophys. Res. 2010, 115, F01002.

13. Woodrow, K.; Lindsay, J.B.; Berg, A.A. Evaluating DEM conditioning techniques, elevation source data, and grid resolution for field-scale hydrological parameter extraction. J. Hydrol. 2016, 540, 1022–1029.

14. Wilson, J.; Lam, C.; Deng, Y. Comparison of the performance of flow-routing algorithms used in GIS-based hydrologic analysis. Hydrol. Process. 2007, 21, 1026–1044.

15. Tarboton, D.G.; Schreuders, K.A.T.; Watson, D.W.; Baker, M.E. Generalized terrain-based flow analysis of digital elevation models. In Proceedings of the 18th World IMACS/MODSIM Congress, Cairns, Australia, 13–17 July 2009; Available online: http://mssanz.org.au/modsim09 (accessed on 10 May 2021).

16. Shin, S.; Paik, K. An improved method for single flow direction calculation in grid digital elevation models. Hydrol. Process. 2017, 31, 1650–1661.

17. Metz, M.; Mitasova, H.; Harmon, R.S. Efficient extraction of drainage networks from massive, radar-based elevation models with least cost path search. Hydrol. Earth Syst. Sci. 2011, 15, 667–678.

18. Bernhardt, H.; Garcia, D.; Hagensieker, R.; Mateo-Garcia, G.; Lopez-Francos, I.; Stock, J.; Schumann, G.; Dobbs, K.; Kalaitzis, F. Waters of the United States: Mapping America’s Waters in Near Real-Time. In Earth Science, Artificial Intelligence 2020: Ad Astra per Algorithmos; Frontier Development Lab, National Aeronautics and Space Administration; SETI Institute: Mountain View, CA, USA, 2020; pp. 243–269.

19. Lin, P.; Pan, M.; Wood, E.F.; Yamazaki, D.; Allen, G.H. A new vector-based global river network dataset accounting for variable drainage density. Sci. Data 2021, 8, 1–9.

20. Chen, Y.; Fan, R.; Yang, X.; Wang, J.; Latif, A. Extraction of urban water bodies from high-resolution remote-sensing imagery using deep learning. Water 2018, 10, 585.

21. Chen, Y.; Tang, L.; Kan, Z.; Bilal, M.; Li, Q. A novel water body extraction neural network (WBE-NN) for optical high-resolution multispectral imagery. J. Hydrol. 2020, 588, 125092.

22. Xu, Z.; Jiang, Z.; Shavers, E.J.; Stanislawski, L.V.; Wang, S. A 3D Convolutional neural network method for surface water mapping using lidar and NAIP imagery. In Proceedings of the ASPRS-International Lidar Mapping Forum, Denver, CO, USA, 25–31 January 2019.

23. Wang, G.; Wu, M.; Wei, X.; Song, H. Water identification from high-resolution remote sensing images based on multidimensional densely connected convolutional neural networks. Remote Sens. 2020, 12, 795.

24. Shaker, A.; Yan, W.Y.; LaRocque, P.E. Automatic land-water classification using multispectral airborne LiDAR data for near-shore and river environments. ISPRS J. Photogramm. Remote Sens. 2019, 152, 94–108.

25. Stanislawski, L.V.; Brockmeyer, T.; Shavers, E.J. Automated road breaching to enhance extraction of natural drainage networks from elevation models through deep learning. In Proceedings of the ISPRS Technical Commission IV Symposium, Delft, The Netherlands, 1–5 October 2018.

26. Stanislawski, L.V.; Brockmeyer, T.; Shavers, E. Automated extraction of drainage channels and roads through deep learning. Abstr. Int. Cartogr. Assoc. 2019, 1, 350.

27. Ronneberger, O.; Fischer, P.; Brox, T. U-net: Convolutional networks for biomedical image segmentation. In International Conference on Medical Image Computing and Computer-Assisted Intervention; Springer: Cham, Switzerland; New York, NY, USA, 2015.

28. Zhang, Z.; Liu, Q.; Wang, Y. Road extraction by deep residual U-Net. IEEE Geosci. Remote Sens. Lett. 2018, 15, 749–753.

29. Feng, W.; Sui, H.; Huang, W.; Xu, C.; An, K. Water Body Extraction from Very High-Resolution Remote Sensing Imagery Using Deep U-Net and a Superpixel-Based Conditional Random Field Model. IEEE Geosci. Remote Sens. Lett. 2018, 16, 618–622.

30. Deumlich, D.; Schmidt, R.; Sommer, M. A multiscale soil–landform relationship in the glacial-drift area based on digital terrain analysis and soil attributes. J. Plant Nutr. Soil Sci. 2010, 173, 843–851.

31. Newman, D.R.; Lindsay, J.B.; Cockburn, J.M.H. Evaluating metrics of local topographic position for multiscale geomorphometric analysis. Geomorphology 2018, 312, 40–50.

32. Stepinski, T.; Jasiewicz, J. Geomorphons—A new approach to classification of landform. In Proceedings of Geomorphometry; Hengl, T., Evans, I.S., Wilson, J.P., Gould, M., Eds.; Elsevier: Redlands, CA, USA, 2011; pp. 109–112.

33. Xu, Z.; Wang, S.; Stanislawski, L.V.; Jiang, Z.; Jaroenchai, N.; Sainju, A.M.; Shavers, E.; Usery, E.L.; Chen, L. An attention U-Net model for detection of fine-scale hydrologic streamlines. Environ. Model. Softw. 2021, 140, 104992.

34. Shavers, E.; Stanislawski, L.V. Channel cross-section analysis for automated stream head identification. Environ. Model. Softw. 2020, 132, 104809.

35. Yuan, Q.; Shen, H.; Li, T.; Li, Z.; Li, S.; Jiang, Y.; Xu, H.; Tan, W.; Yang, Q.; Wang, J.; et al. Deep learning in environmental remote sensing: Achievements and challenges. Remote Sens. Environ. 2020, 241, 111716.

36. Gargiulo, M.; Dell’Aglio, D.A.G.; Iodice, A.; Riccio, D.; Ruello, G. Integration of Sentinel-1 and Sentinel-2 Data for Land Cover Mapping Using W-Net. Sensors 2020, 20, 2969.

37. Nemni, E.; Bullock, J.; Belabbes, S.; Bromley, L. Fully Convolutional Neural Network for Rapid Flood Segmentation in Synthetic Aperture Radar Imagery. Remote Sens. 2020, 12, 2532.

38. Lu, D.; Weng, Q. A survey of image classification methods and techniques for improving classification performance. Int. J. Remote Sens. 2007, 28, 823–870.

39. U.S. Geological Survey. 5 Meter Alaska Digital Elevation Models (DEMs)-USGS National Map 3DEP Downloadable Data Collection. U.S. Geological Survey, 2017. Available online: https://www.sciencebase.gov/catalog/item/5641fe98e4b0831b7d62e758 (accessed on 10 May 2021).

40. U.S. Geological Survey. Alaska Digital Surface Models (DSMs)-USGS National Map 3DEP Downloadable Data Collection. U.S. Geological Survey, 2017. Available online: https://www.sciencebase.gov/catalog/item/543e6a6ae4b0fd76af69cf47 (accessed on 10 May 2021).

41. U.S. Geological Survey. Alaska Orthorectified Radar Intensity Image-USGS National Map 3DEP Downloadable Data Collection. U.S. Geological Survey, 2017. Available online: https://www.sciencebase.gov/catalog/item/543e6acde4b0fd76af69cf4a (accessed on 10 May 2021).

42. U.S. Geological Survey. The National Map Download Client. 2021, U.S. Geological Survey, U.S. Department of Interior. Available online: https://apps.nationalmap.gov/ (accessed on 10 May 2021).

43. Kampes, B.; Blaskovich, M.; Reis, J.J.; Sanford, M.; Morgan, K. Fugro GEOSar airborne dual-band IFSAR DTM processing. In Proceedings of the ASPRS 2011 Annual Conference, Milwaukee, WI, USA, 1–5 May 2011.

44. Archuleta, C.M.; Terziotti, S. Elevation-Derived Hydrography—Representation, Extraction, Attribution, and Delineation Rules. In Techniques and Methods; U.S. Geological Survey: Reston, VA, USA, 2020.

45. Terziotti, S.; Archuleta, C.M. Elevation-Derived Hydrography Acquisition Specifications. In Techniques and Methods; U.S. Geological Survey: Reston, VA, USA, 2020.

46. Jasiewicz, J.; Stepinski, T. Geomorphons—A pattern recognition approach to classification and mapping of landforms. Geomorphology 2013, 182, 147–156.

47. Doneus, M. Openness as visualization technique for interpretative mapping of airborne lidar derived digital terrain models. Remote Sens. 2013, 5, 6427–6442.

48. Perona, P.; Malik, J. Scale-space and edge detection using anisotropic diffusion. IEEE Trans. Pattern Anal. Mach. Intell. 1990, 12, 629–639.

49. Sangireddy, H.; Stark, C.P.; Kladzyk, A.; Passalacqua, P. GeoNet: An open source software for the automatic and objective extraction of channel heads, channel network, and channel morphology from high resolution topography data. Environ. Model. Softw. 2016, 83, 58–73.

50. Mitasova, H.; Thaxton, C.; Hofierka, J.; McLaughlin, R.; Moore, A.; Mitas, L. Path sampling method for modeling overland water flow, sediment transport, and short-term terrain evolution in Open Source GIS. Dev. Water Sci. 2004, 55, 1479–1490.

51. Moore, I.D.; Grayson, R.B.; Ladson, A.R. Digital Terrain Modeling: A Review of Hydrological, Geomorphological, and Biological Applications. Hydrol. Process. 1991, 5, 3–30.

52. Zakšek, K.; Oštir, K.; Kokalj, Ž. Sky-view factor as a relief visualization technique. Remote Sens. 2011, 3, 398–415.

53. Kennelly, P.J.; Stewart, A.J. General sky models for illuminating terrains. Int. J. Geogr. Inf. Sci. 2014, 28, 383–406.

54. Tompson, J.; Goroshin, R.; Jain, A.; LeCun, Y.; Bregler, C. Efficient Object Localization Using Convolutional Networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 7–12 June 2015.

55. Ioffe, S.; Szegedy, C. Batch normalization: Accelerating Deep Network Training by Reducing Internal Covariate Shift. In Proceedings of the 32nd International Conference on Machine Learning, Lille, France. J. Mach. Learn. Res. 2015, 37, 9.

56. Agarap, A.F. Deep Learning Using Rectified Linear Units (ReLU). arXiv 2019, arXiv:1803.08375.

57. Kingma, D.P.; Ba, J. Adam: A Method for Stochastic Optimization. arXiv 2015, arXiv:1412.6980v9.

58. Dice, L.R. Measures of the amount of ecologic association between species. Ecology 1945, 26, 297–302.

59. Zou, K.H.; Warfield, S.K.; Bharatha, A.; Tempany, C.M.C.; Kaus, M.R.; Haker, S.J.; Wells, W.M., III; Jolesz, F.A.; Kikinis, R. Statistical validation of image segmentation quality based on a spatial overlap index. Acad. Radiol. 2004, 11, 178–189.

60. Kang, Y.; Gao, S.; Roth, R.E. Transferring multiscale map styles using generative adversarial networks. Int. J. Cartogr. 2019, 5, 115–141.

61. Tarboton, D.G.; Ames, D.P. Advances in the mapping of flow networks from digital elevation data. In Bridging the Gap: 2001, Meeting the World’s Water and Environmental Resources Challenges; American Society of Civil Engineers: Reston, VA, USA, 2001; pp. 1–10.

62. Hashim, S.; Naim, W.M. Evaluation of vertical accuracy of airborne IFSAR and open source Digital elevation models (DEMs) based on GPS observation. Int. J. Comput. Commun. Instrum. Eng. (IJCCIE) 2015, 2, 114–120.

63. Guritz, R.; Ignatov, D.M.; Broderson, D.; Heinrichs, T. Southeast Alaska LiDAR, Orthoimagery, and IFSAR mapping for ADOTPJ Roads to Resources. In Proceedings of the Alaska Surveying and Mapping Conference, Anchorage, AK, USA, 17 February 2016.

64. Andersen, H.-E.; Reutebuch, S.E.; McGaughey, R.J. Accuracy of an IFSAR-derived digital terrain model under a conifer forest canopy. Can. J. Remote Sens. 2005, 31, 283–288.

65. Kavzoglu, T. Increasing the accuracy of neural network classification using refined training data. Environ. Model. Softw. 2009, 24, 850–858.

66. Tarboton, D.G. A new method for the determination of flow directions and upslope areas in grid digital elevation models. Water Resour. Res. 1997, 33, 309–319.

67. Ehlschlaeger, C. Using the AT search algorithm to develop hydrologic models from digital elevation data. In Proceedings of the International Geographic Information Systems (IGIS) Symposium, Baltimore, MD, USA, 18–19 March 1989.

68. Mitas, L.; Mitasova, H. Distributed soil erosion simulation for effective erosion prevention. Water Resour. Res. 1998, 34, 505–516.

69. GRASS GIS 7.8.6dev Reference Manual. 2021. Available online: https://grass.osgeo.org/grass78/manuals/r.sim.water.html (accessed on 3 June 2021).

| Layer Name | Source | Description | Reference |
|---|---|---|---|
| Non-linear filtered DTM | USGS */GeoNet | IfSAR-derived 5-m elevation model filtered with a non-linear diffusion filter to remove noise and enhance edges | [48] |
| IfSAR DSM (resampled) | USGS */open-source | Elevation model representing the highest elevation on the surface, including vegetation and buildings | [40] |
| IfSAR DTM (resampled) | USGS */open-source | Elevation model representing the land surface | [39] |
| IfSAR ORI (resampled) | USGS */open-source | Orthorectified radar backscatter intensity image | [41] |
| Curvature | GRASS | Normalized sum of surface curvature in the x and y directions, generated from the filtered DTM | [49] |
| Geomorphon (10-cell radius) | GRASS | Identifies terrain landforms such as ridge, valley, and slope by analyzing the elevation distribution within a 10-cell radius; values are landform class IDs | [46] |
| 2-D shallow-water channel depth model | GRASS | Storm water drainage model that considers the amount and duration of rain, surface friction, and surface water volume, producing a water depth raster | [50] |
| Topographic wetness index | GRASS | Natural log of the ratio of a cell's contributing upslope area to the tangent of its local slope | [51] |
| Negative openness (5-cell radius) | RVT ** | Mean angle between nadir and the horizon over 32 directions surrounding a cell | [47] |
| Positive openness (5-cell radius) | RVT ** | Mean angle between zenith and the horizon over 32 directions surrounding a cell | [47] |
| Sky view factor | RVT ** | Amount of incoming “light” from a diffuse hemisphere centered on a cell; the more of the hemisphere visible from the cell (i.e., the lower the surrounding horizon), the higher the value | [52] |
| Sky illumination | RVT ** | Hillshade generated assuming diffuse illumination | [53] |
| Topographic position index (3 × 3 kernel) | open source | Difference between a cell's elevation and the average elevation of the cells in the 3 × 3 window surrounding it | [30] |
| Topographic position index (11 × 11 kernel) | open source | Difference between a cell's elevation and the average elevation of the cells in the 11 × 11 window surrounding it | [30] |
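The topographic position index layers in the table above are simple enough to compute directly. The sketch below assumes that the window mean excludes the center cell and that windows are clipped at the grid edge; the table does not specify either choice, so the open-source implementation used in the study may differ. The function name is hypothetical. Negative values mark cells lower than their surroundings (valley or channel positions), and positive values mark cells higher than their surroundings.

```python
def topographic_position_index(dem, size=3):
    """TPI = cell elevation minus the mean elevation of the other cells in a
    size x size window centered on it (window clipped at the grid edge)."""
    if size < 3 or size % 2 == 0:
        raise ValueError("size must be an odd integer >= 3")
    half = size // 2
    rows, cols = len(dem), len(dem[0])
    tpi = [[0.0] * cols for _ in range(rows)]
    for r in range(rows):
        for c in range(cols):
            total, count = 0.0, 0
            for nr in range(max(0, r - half), min(rows, r + half + 1)):
                for nc in range(max(0, c - half), min(cols, c + half + 1)):
                    if (nr, nc) != (r, c):  # exclude the center cell itself
                        total += dem[nr][nc]
                        count += 1
            tpi[r][c] = dem[r][c] - total / count if count else 0.0
    return tpi


if __name__ == "__main__":
    channel = [[3, 2, 3], [3, 1, 3], [3, 2, 3]]
    print(topographic_position_index(channel)[1][1])  # -1.75
```

Calling the same function with `size=11` gives the larger-kernel variant; a wider window responds to broader valleys and ridges, while the 3 × 3 window highlights fine local relief.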

| Metric (%) | Training Watersheds: Minimum | Training Watersheds: Maximum | Training Watersheds: Average | Test Watersheds: Minimum | Test Watersheds: Maximum | Test Watersheds: Average |
|---|---|---|---|---|---|---|
| Precision | 81.7 | 94.7 | 86.0 | 52.1 | 92.9 | 72.3 |
| WFA Precision | 48.4 | 88.8 | 64.6 | 34.1 | 88.1 | 56.0 |
| Recall | 66.9 | 90.4 | 79.2 | 34.6 | 94.5 | 63.9 |
| WFA Recall | 72.6 | 91.6 | 84.0 | 45.5 | 95.7 | 71.9 |
| F1-Score | 73.5 | 92.4 | 82.4 | 44.8 | 93.7 | 67.6 |
| WFA F1-Score | 59.9 | 90.2 | 72.8 | 41.5 | 91.7 | 62.8 |
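The precision, recall, and F1-score values in the preceding table compare predicted and reference water pixels. A minimal sketch of those definitions for two binary masks follows, assuming a strict pixel-by-pixel comparison with no spatial tolerance; the helper names are hypothetical, and the study's accuracy procedure (Section 2.6) may differ. For binary masks the F1-score is identical to the Dice coefficient, 2TP/(2TP + FP + FN).

```python
def confusion_counts(predicted, reference):
    """Count true positives, false positives, and false negatives for two
    equally sized binary masks (1 = water, 0 = non-water)."""
    tp = fp = fn = 0
    for p_row, r_row in zip(predicted, reference):
        for p, r in zip(p_row, r_row):
            if p and r:
                tp += 1  # water in both prediction and reference
            elif p:
                fp += 1  # predicted water not in the reference
            elif r:
                fn += 1  # reference water the model missed
    return tp, fp, fn


def precision_recall_f1(predicted, reference):
    """Return (precision, recall, F1); each is 0.0 when undefined."""
    tp, fp, fn = confusion_counts(predicted, reference)
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1


if __name__ == "__main__":
    reference = [[1, 1, 0], [1, 1, 0], [0, 0, 0]]
    predicted = [[1, 1, 0], [0, 0, 0], [0, 0, 1]]
    p, r, f1 = precision_recall_f1(predicted, reference)
    print(f"precision={p:.3f} recall={r:.3f} F1={f1:.3f}")
```

In this toy example two of the three predicted water pixels are correct while half of the reference water pixels are missed, so precision (0.667) exceeds recall (0.500); this is the under-prediction pattern the summary reports for the U-Net models.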

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Stanislawski, L.V.; Shavers, E.J.; Wang, S.; Jiang, Z.; Usery, E.L.; Moak, E.; Duffy, A.; Schott, J. Extensibility of U-Net Neural Network Model for Hydrographic Feature Extraction and Implications for Hydrologic Modeling. Remote Sens. 2021, 13, 2368. https://doi.org/10.3390/rs13122368
