Abstract

In this paper, we propose a new approach for sub-pixel co-registration based on Fourier phase correlation combined with the Harris detector. Because the standard phase correlation method achieves only pixel-level accuracy, another approach is required to reach sub-pixel matching precision. We first applied the Harris corner detector to extract corners from both the reference and sensed images. Then, we identified their corresponding points using phase correlation between the image pairs. To achieve sub-pixel registration accuracy, two optimization algorithms were used. The effectiveness of the proposed method was tested with very high-resolution (VHR) remote sensing images, including Pleiades satellite images and aerial imagery. Compared with the speeded-up robust features (SURF)-based method, phase correlation with the Blackman window function produced 91% more matches with high reliability. Moreover, the optimization analysis revealed that the Nelder–Mead algorithm outperforms the two-point step size gradient algorithm in both localization accuracy and computation time. The proposed approach achieves an accuracy better than 0.5 pixels and outperforms the SURF-based method. It achieves sub-pixel accuracy in the presence of noise and produces large numbers of correct matching points.

1. Introduction

Image registration is an important image-processing task in applications such as automatic change detection, data fusion, motion tracking, and image mosaicking. When images of the same location taken on different dates or from different viewpoints need to be compared, they must be geometrically registered to one another. In image registration, the image to be registered, called the sensed image, is aligned with a reference image. Accurate registration enables the detection and monitoring of changes between images that would otherwise be difficult to distinguish from the effects of sub-pixel misregistration.

In remote sensing applications, registration is most often carried out manually, which is not feasible for large numbers of images. Hence, automated techniques that require little or no operator supervision are needed. Automatic image registration is a classical problem and an active research topic [1,2,3,4,5,6,7,8]. Although considerable research has been conducted, significant challenges remain. There is, therefore, a critical need for an accurate, robust, and efficient image registration algorithm that requires minimal or no operator supervision to register multi-temporal remote sensing images.

The most common image registration techniques are reviewed in [9,10,11,12]. Existing methods can be broadly divided into two major categories: area-based and feature-based techniques. Several studies have examined how to improve the accuracy and reliability of both groups of techniques and how to mitigate their limitations.

Feature-based techniques involve two stages. First, features are extracted from the reference and sensed images. Second, the features are matched to establish correct correspondences and to estimate the optimal transformation model between the image pair. The feature space includes corners, edges, and regions from the reference and sensed images. Because corners are invariant to imaging geometry, they can yield more precise results [12].

Point-feature methods first detect a set of candidate feature points by applying an interest operator to both images [2,5,6,7,8,9,10,11,12,13,14,15]. In [16,17,18,19], the authors review different feature detectors and suggest combining several detectors to obtain the best performance. Comparisons between detectors have shown that performance declines as the change in viewpoint increases. Although repeatability is the most commonly employed measure for assessing the accuracy of extracted interest points, their spatial distribution also plays a crucial role in registration: the points should be distributed as widely as possible over the entire image to ensure precise registration [16]. The most popular algorithm is the Harris corner detector [20], which remains a reference technique. Its success stems from its ability to detect stable and repeatable interest points across various image deformations [21,22]. Once feature points have been detected, they are matched by comparing local descriptors such as the scale-invariant feature transform (SIFT) or speeded-up robust features (SURF). The SIFT descriptor was developed by Lowe [23] to achieve invariance to image scaling, rotation, and illumination variations. The SIFT operator can find reliable point correspondences between images despite errors arising from small geometric distortions [24]. However, SIFT-based registration of remote sensing images suffers from low precision and slow speed [25,26].

By comparison, area-based techniques compute a similarity measure from the raw pixel values of a small template in the reference image and compare it with a similar region in the sensed image. A review of related work, however, indicates that classical algorithms are highly sensitive to changes in pixel intensity introduced by local distortions, illumination differences, shadows, and changes in viewing angle, as well as by the use of images from different sensors. Typical similarity measures are cross-correlation, with or without prefiltering, and measures based on Fourier invariance properties [27,28], such as phase correlation (PC) [29]. Arya proposed a normalized correlation algorithm based on M-estimators that reduces the effect of noisy pixels [30]. In [31], M-estimators in intensity correlation were found to perform better than the mutual information (MI) measure for medical images. Georgescu and Meer suggested implementing a gradient descent algorithm using M-estimators and radiometric correction [32]. Eastman [33] surveyed several image registration schemes used in major satellite ground systems, in which registration was performed on a database of carefully selected control point image chips, using normalized correlation in local regions after topographic correction and cloud masking.
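As a minimal illustration of an area-based similarity measure, the sketch below computes zero-mean normalized cross-correlation (NCC) between a template and every same-sized window of an image by brute force. The helpers `ncc` and `match_template_ncc` are hypothetical names written with NumPy, not the method of any cited work. The usage shows that NCC is invariant to a linear gain and offset change in intensity; it offers no such protection against the nonlinear intensity changes listed above.

```python
import numpy as np

def ncc(a: np.ndarray, b: np.ndarray) -> float:
    """Zero-mean normalized cross-correlation of two equally sized patches."""
    a = a - a.mean()
    b = b - b.mean()
    denom = np.sqrt((a * a).sum() * (b * b).sum())
    return 0.0 if denom == 0 else float((a * b).sum() / denom)

def match_template_ncc(image: np.ndarray, template: np.ndarray):
    """Exhaustive search: return (best score, (row, col) of the best window's top-left corner)."""
    th, tw = template.shape
    best_score, best_pos = -np.inf, None
    for r in range(image.shape[0] - th + 1):
        for c in range(image.shape[1] - tw + 1):
            score = ncc(image[r:r + th, c:c + tw], template)
            if score > best_score:
                best_score, best_pos = score, (r, c)
    return best_score, best_pos

# Usage: the template is a gain/offset-modified copy of an image patch.
rng = np.random.default_rng(0)
image = rng.random((30, 30))
template = 2.0 * image[5:13, 7:15] + 3.0
score, pos = match_template_ncc(image, template)
```

The exhaustive double loop is kept for clarity; practical implementations compute the same score with FFT-based or integral-image accelerations.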

Researchers have increasingly applied phase correlation to image registration [3,34,35]. Leprince et al. [36] built an automated correction workflow based on phase correlation, called COSI-Corr. Scheffler et al. [37] proposed AROSICS, a phase-correlation-based approach that uses cloud masking. Fourier-based registration has been adapted to handle images that are translated, rotated, and scaled with respect to each other [38,39,40]. Chen et al. [39] proposed a matching algorithm based on both the shift-invariant Fourier transform and the scale-invariant Mellin transform to match images that are rotated and scaled. Abdelfattah and Nicolas [41] demonstrated that the invariant Fourier–Mellin transform (FMT) registers interferometric synthetic aperture radar images more precisely than standard correlation.

For VHR remote sensing images taken from different viewpoints, previously proposed approaches face several challenges in sub-pixel registration: increased relief displacement, the need for more control points, and increased data volume. There is therefore a need for accurate, robust, and automatic sub-pixel correlation algorithms that produce a high number of correct matches with a homogeneous geometric distribution and have low sensitivity to noise, outliers, and occlusions. To achieve these objectives, an appropriate method should be selected for each of the following four components [42]: (1) a feature space, (2) a search space, (3) a search strategy, and (4) a similarity metric.

Here, we propose a hybrid algorithm that combines Harris corner detection and Fourier phase correlation to co-register very high-resolution remote sensing images. The approach uses phase correlation to reduce sensitivity to noise and Harris corners to improve efficiency [43]. The proposed method comprises three major steps: (1) feature extraction; (2) Fourier phase matching; and (3) phase-matching optimization. First, corners are extracted with the Harris corner detector, which provides useful reference points whose positions still contain sub-pixel errors. We then refine the corner locations to sub-pixel accuracy using optimization techniques: the proposed phase correlation approach is formulated as an optimization problem that minimizes a cost function.

2. Brief Overview of Related Work

In this section, we first summarize representative hybrid registration methods that combine area-based and feature-based approaches, and then discuss sub-pixel phase correlation methods.

2.1. Hybrid Registration Approach

In recent years, various hybrid approaches combining area-based and feature-based methods have been developed to achieve reliable and precise image registration [8]. In [44], the authors proposed a hybrid image registration method based on an entropy-based detector and a robust similarity measure. Their approach consists of two steps: the extraction of scale-invariant salient region features from each image, and the estimation of correspondences using a joint correspondence between multiple pairs of region features and the entropy correlation coefficient. Experimental results on medical images showed that the method is highly robust to image noise. In [45], a wavelet-based hierarchical pyramid is combined with MI and SIFT to align medical images. This approach was compared with several image registration methods, namely cross-correlation, MI-based hierarchical registration, registration using SIFT features, and the hybrid registration technique described in [46]; the study compared the performance of the proposed method with that of the same algorithms applied in the spatial domain. Experimental results showed that the registration methods in the wavelet domain achieved better performance than those in the spatial domain. Ezzeldeen et al. conducted a comparative study of a fast Fourier transform (FFT)-based technique, a contour-based technique, a wavelet-based technique, a Harris–pulse coupled neural network (PCNN)-based technique, and a Harris–moment-based technique for Landsat Thematic Mapper (TM) and SPOT remote sensing images. They found FFT to be the most appropriate technique, although it had the largest RMSE (above two), whereas the wavelet-based technique detected the greatest number of control points in both images.

In [47], a two-stage registration algorithm integrating feature-based and area-based methods was proposed for remote sensing images at different spatial resolutions (3.7, 16, and 30 m). In the first stage, corresponding points are detected and matched using the SIFT algorithm. After outliers are removed using random sample consensus (RANSAC), a weight function assigns weights to the feature points to improve the performance of the local projective model. A second registration is then applied at a finer scale using Huber estimation and the structure tensor (ST) to minimize the influence of the remaining outliers on the transformation model. The results show that the algorithm achieves reliable registration accuracy across varied terrain features.

In [48], another approach was proposed based on phase correlation and the Harris affine detector for images of outdoor buildings. First, the overlapping areas of the reference and sensed images are determined using translation parameters recovered by phase correlation. Then, interest points are detected using the Harris affine detector and matched using normalized cross-correlation (NCC). The experimental results show that the feature matching method achieves an accuracy of about 69.2% for 13 matched feature point pairs.

More recently, new hybrid approaches have been developed [47,48]. In [47], the registration is applied twice; resampling the sensed image between the two stages introduces aliasing into the similarity measure and may therefore reduce the accuracy of any correlation process, and hence of the registration [49]. In [48], the overlapping areas between the reference and sensed images are first determined by phase correlation, but image matching is then performed with a normalized cross-correlation algorithm. In addition, that approach does not address outliers, which are inevitable, particularly in real data sets.

Although most approaches achieve reasonable results, their performance tends to be heavily affected by local distortions. The characteristics of each type of local distortion must be considered when designing a robust registration approach for VHR remote sensing images. Our choice of algorithms was therefore dictated primarily by the following criteria: (1) the features should be robust to noise; (2) a large number of evenly distributed control points is required for an accurate result; (3) the matching method should be robust to noise; (4) control points should be localized with sub-pixel accuracy using optimization algorithms; (5) the method should handle inevitable outliers; (6) it should rapidly process large data volumes, which affect processing speed; (7) it should operate automatically, without requiring manual experience or a priori knowledge; and (8) it should allow selection of a transformation function suited to each type of geometric difference between the images.

2.2. Fine Registration Using Phase Correlation

Precise image matching is a crucial step in registering multi-source remote sensing images, which may have significantly different illumination. For such images, matching algorithms often achieve only pixel-level accuracy because of noise and differences between the acquired image pairs. Therefore, sub-pixel correlation algorithms that enhance the accuracy, robustness, and efficiency of matching are needed. To address frequency-dependent noise due to illumination or sensor changes, phase correlation, which relies on the invariance properties of the Fourier transform, is a good candidate.

Several sub-pixel phase correlation approaches have been developed; they are generally classified into two major categories [31,46,50,51]. In the first category, the sub-pixel displacement is estimated in the spatial domain, after the inverse Fourier transform, by interpolating or fitting the correlation surface over a neighborhood of the peak [6]. The sinc function is one of the most commonly used fitting models [52,53,54]. Argyriou modified the conventional sinc function with Gaussian weighting to fit the exact sub-pixel peak location, which better approximates the noisy correlation surface [55]. Another alternative estimates the true peak location accurately by optimizing the correlation function, defined as the inverse Fourier transform of the cross-power spectrum [56,57]. Sub-pixel methods computed in the spatial domain are sensitive to aliasing errors, which the inverse transform of the cross-power spectrum spreads over the correlation surface [58].
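The spatial-domain idea can be illustrated with the simplest fitting model: a three-point parabola fitted separably along each axis around the integer peak of a correlation surface. This is a hypothetical NumPy sketch (`parabolic_offset` and `subpixel_peak` are illustrative names). A parabola is a cruder model than the sinc-based fits cited above and is known to bias estimates on real POC peaks, so the example only shows the interpolation step itself.

```python
import numpy as np

def parabolic_offset(c_minus: float, c0: float, c_plus: float) -> float:
    """Vertex offset of the parabola through (-1, c_minus), (0, c0), (1, c_plus)."""
    denom = c_minus - 2.0 * c0 + c_plus
    return 0.0 if denom == 0 else 0.5 * (c_minus - c_plus) / denom

def subpixel_peak(surface: np.ndarray):
    """Integer argmax of a (circular) correlation surface, refined by 3-point parabolic fits."""
    h, w = surface.shape
    iy, ix = np.unravel_index(np.argmax(surface), surface.shape)
    # Neighbours are taken with wrap-around, as for a surface obtained from an inverse FFT.
    dx = parabolic_offset(surface[iy, (ix - 1) % w], surface[iy, ix], surface[iy, (ix + 1) % w])
    dy = parabolic_offset(surface[(iy - 1) % h, ix], surface[iy, ix], surface[(iy + 1) % h, ix])
    return iy + dy, ix + dx

# Usage: a sampled paraboloid is recovered exactly by this model.
yy, xx = np.mgrid[0:32, 0:32]
surface = -((yy - 10.25) ** 2 + (xx - 20.4) ** 2)
peak_y, peak_x = subpixel_peak(surface)
```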

In contrast, the second category avoids these artifacts by estimating the sub-pixel shifts directly in the frequency domain. Three algorithms have been proposed: the singular value decomposition (SVD) method [59], the 2D fitting method [60], and nonlinear optimization [49,61]. Hoge used the SVD method, based on the Eckart–Young–Mirsky theorem, to determine sub-pixel shifts by finding the optimal rank-one approximation of the normalized cross-power spectrum matrix [62]. In [61], a robust nonlinear optimization was proposed that estimates the sub-pixel displacements by maximizing the norm, with respect to the Hermitian inner product, of the projection of the computed normalized cross-spectrum onto the continuous space of theoretical cross-spectra. In [49], another optimization method was proposed based on the Frobenius norm, which minimizes the difference between the computed and theoretical cross-power spectra. More recently, the authors of [3] combined enhanced sub-pixel PC matching with a block-based phase congruency feature detector to precisely estimate translations between satellite images from different sensors. In [63], another approach focused on the effects of aliasing on shift estimation and estimated the translation parameters using a least-squares fit to a two-dimensional data set. Foroosh et al. [52] extended the phase correlation method to sub-pixel accuracy using down-sampled images.
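The SVD idea can be sketched in a few lines of NumPy. For a pure translation with f(x, y) = g(x − x0, y − y0), the normalized cross-power spectrum is the rank-one matrix whose rows carry exp(−i ωy y0) and whose columns carry exp(−i ωx x0), so the dominant singular vectors have linear phases with slopes −y0 and −x0. The function name, the band limit (only |ω| < 0.5π is used, to avoid aliased high frequencies), and the least-squares line fit on the unwrapped phases are assumptions of this sketch, not details taken from [59] or [62]. Phase unwrapping requires small shifts, so in practice the method is applied to the residual after integer-pixel phase correlation.

```python
import numpy as np

def svd_subpixel_shift(f: np.ndarray, g: np.ndarray, band: float = 0.5, eps: float = 1e-12):
    """Estimate (x0, y0) with f(x, y) = g(x - x0, y - y0) from a rank-one fit of Q = F G* / |F G*|."""
    rows, cols = f.shape
    cross = np.fft.fft2(f) * np.conj(np.fft.fft2(g))
    Q = np.fft.fftshift(cross / (np.abs(cross) + eps))  # frequencies in increasing order
    wy = np.fft.fftshift(2 * np.pi * np.fft.fftfreq(rows))
    wx = np.fft.fftshift(2 * np.pi * np.fft.fftfreq(cols))
    ky = np.abs(wy) < band * np.pi                      # keep low frequencies only
    kx = np.abs(wx) < band * np.pi
    U, S, Vh = np.linalg.svd(Q[np.ix_(ky, kx)])
    # Dominant singular vectors: angle(U[:, 0]) ~ -wy*y0 + c and angle(Vh[0]) ~ -wx*x0 - c.
    phase_y = np.unwrap(np.angle(U[:, 0]))
    phase_x = np.unwrap(np.angle(Vh[0, :]))
    y0 = -np.polyfit(wy[ky], phase_y, 1)[0]
    x0 = -np.polyfit(wx[kx], phase_x, 1)[0]
    return float(x0), float(y0)

# Usage: build f(x, y) = g(x - 1.3, y + 0.7) with the Fourier shift theorem.
rng = np.random.default_rng(1)
g = rng.random((64, 64))
wy = 2 * np.pi * np.fft.fftfreq(64)[:, None]
wx = 2 * np.pi * np.fft.fftfreq(64)[None, :]
f = np.real(np.fft.ifft2(np.fft.fft2(g) * np.exp(-1j * (wx * 1.3 + wy * -0.7))))
x0, y0 = svd_subpixel_shift(f, g)
```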

To improve the accuracy of registration algorithms at the sub-pixel level, the optimization method should be initialized appropriately so that it converges to the optimal solution [49]. In addition, designing or selecting the best sub-pixel phase correlation method for a specific problem should take into account the kind of features extracted, the matching strategy, and the sources of distortion in the images to be registered [42]. Although most phase-matching approaches achieve reasonable results, their performance tends to be heavily affected by edge effects. It is therefore of interest to develop a co-registration algorithm that combines high quality, robustness to noise, and low computational cost. Common strategies for obtaining robust algorithms involve the optimization of nonlinear cost functions [2,21], which can also significantly reduce the total computational cost. In the present contribution, inspired by the co-registration techniques presented by Leprince et al. [49] and Alba [64], we propose an efficient sub-pixel registration approach based on an iterative optimization algorithm.

3. Materials and Methods

Our co-registration approach can be divided into four major steps. First, corners are extracted from the reference and sensed images. Second, the corresponding points in the sensed and reference images are identified using phase correlation. Third, the positions of the corresponding points are measured with sub-pixel accuracy by minimizing a cost function. Fourth, outliers are addressed before the spatial transformation model is computed, to achieve more accurate registration. All experiments were performed in MATLAB on a desktop computer running 64-bit Windows 10, with a 2.30 GHz Intel i5-6200U CPU and 12 GB of memory.

3.1. Harris Corners Extraction

The fundamental step in image registration is choosing which features to use for robust matching in order to determine the optimal transformation between the two images [11]. To achieve reliable matching, corner points are chosen as matching features because they are among the most stable features in an image: they are largely invariant to rotation and robust to moderate scale and viewpoint changes [42]. Because the optimal transformation parameters are computed from these features, a sufficient number of invariant features, evenly distributed across the image, is required.

The proposed approach begins by detecting interest points with a suitable corner detector. The Harris corner detector has been shown to provide more precise and stable results than earlier detectors [65,66], so we use it to detect accurate corners and thereby enhance registration performance. Moreover, because precise homologous feature points with a homogeneous spatial distribution over the images are important for reliably determining the parameters of the spatial transformation model [67], our approach first subdivides the image into nine sub-regions and then extracts Harris corner points in each sub-region.

This subdivision promotes a uniform distribution of points throughout the image and avoids out-of-memory errors, since each sub-region is processed separately.
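The per-sub-region extraction can be sketched in NumPy as follows. This is a minimal illustration, not the MATLAB implementation used in this study: it assumes a 3×3 box window instead of the usual Gaussian weighting, the common constant k = 0.04, a simple 3×3 non-maximum suppression, and a hypothetical fixed number of corners kept per cell. The response R = det(M) − k·trace(M)² is computed cell by cell, so memory use scales with the cell size rather than the full image.

```python
import numpy as np

def box_sum(a: np.ndarray, r: int = 1) -> np.ndarray:
    """Sum over a (2r+1)x(2r+1) neighbourhood with edge padding (stand-in for Gaussian weighting)."""
    p = np.pad(a, r, mode="edge")
    h, w = a.shape
    return sum(p[r + dy:r + dy + h, r + dx:r + dx + w]
               for dy in range(-r, r + 1) for dx in range(-r, r + 1))

def harris_response(img: np.ndarray, k: float = 0.04) -> np.ndarray:
    iy, ix = np.gradient(img.astype(float))  # derivatives along rows (y) and columns (x)
    sxx, syy, sxy = box_sum(ix * ix), box_sum(iy * iy), box_sum(ix * iy)
    return sxx * syy - sxy ** 2 - k * (sxx + syy) ** 2  # det(M) - k * trace(M)^2

def local_maxima(R: np.ndarray) -> np.ndarray:
    """True where R is >= all of its 8 neighbours."""
    h, w = R.shape
    p = np.pad(R, 1, mode="constant", constant_values=-np.inf)
    neigh = np.max([p[1 + dy:1 + dy + h, 1 + dx:1 + dx + w]
                    for dy in (-1, 0, 1) for dx in (-1, 0, 1)], axis=0)
    return R >= neigh

def harris_per_subregion(img: np.ndarray, n_per_cell: int = 20, grid: int = 3):
    """Detect Harris corners independently in each cell of a grid x grid partition."""
    rows = np.linspace(0, img.shape[0], grid + 1, dtype=int)
    cols = np.linspace(0, img.shape[1], grid + 1, dtype=int)
    corners = []
    for i in range(grid):
        for j in range(grid):
            R = harris_response(img[rows[i]:rows[i + 1], cols[j]:cols[j + 1]])
            cand = np.argwhere(local_maxima(R) & (R > 0))
            cand = cand[np.argsort(R[cand[:, 0], cand[:, 1]])[::-1][:n_per_cell]]
            corners += [(int(rows[i] + rr), int(cols[j] + cc)) for rr, cc in cand]
    return corners

# Usage: a checkerboard has corner-like junctions in every one of the nine cells.
yy, xx = np.mgrid[0:63, 0:63]
checker = (((yy // 8) + (xx // 8)) % 2).astype(float)
pts = harris_per_subregion(checker, n_per_cell=5)
```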

3.2. Point Correspondence Using Fourier Phase Matching

After distinctive control points (CPs) are extracted from the reference image, the corresponding CPs are found by Fourier phase matching: for each corner in the reference image, the best match is sought among a set of candidate points in the sensed image. The candidate corner with the maximum magnitude of the inverse Fourier transform of the normalized cross-power spectrum is taken as the correct corresponding point.
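The candidate search can be sketched as follows: for each candidate template, the integer-grid POC surface is computed as the inverse FFT of the normalized cross-power spectrum, and the candidate with the highest peak is kept. The function names are hypothetical, and the optional `window` argument (for example, a 2D Blackman window of the template size) is an assumption of this sketch. The returned integer shift (dx, dy) follows the convention ref(x, y) = cand(x − dx, y − dy).

```python
import numpy as np

def poc_surface(ref_tpl, cand_tpl, window=None, eps=1e-12):
    """Integer-grid POC surface: inverse FFT of the normalized cross-power spectrum."""
    if window is not None:
        ref_tpl, cand_tpl = ref_tpl * window, cand_tpl * window
    cross = np.fft.fft2(ref_tpl) * np.conj(np.fft.fft2(cand_tpl))
    return np.real(np.fft.ifft2(cross / (np.abs(cross) + eps)))

def best_candidate(ref_tpl, cand_tpls, window=None):
    """Return (index, peak height, integer shift (dx, dy)) of the candidate with the highest POC peak."""
    best = None
    for idx, cand in enumerate(cand_tpls):
        surface = poc_surface(ref_tpl, cand, window)
        iy, ix = np.unravel_index(np.argmax(surface), surface.shape)
        h, w = surface.shape
        # Map wrap-around indices to signed shifts.
        shift = (int(ix - w if ix > w // 2 else ix), int(iy - h if iy > h // 2 else iy))
        peak = float(surface[iy, ix])
        if best is None or peak > best[1]:
            best = (idx, peak, shift)
    return best

# Usage: one unrelated candidate and one circularly shifted copy of the reference.
rng = np.random.default_rng(6)
ref = rng.random((32, 32))
candidates = [rng.random((32, 32)), np.roll(ref, (2, -3), axis=(0, 1))]
idx, peak, shift = best_candidate(ref, candidates)
```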

Phase correlation methods used to estimate the displacement with sub-pixel accuracy [2,18,19,21,29] are affected by aliasing and edge effects, which mostly appear at higher frequencies. Limiting the bandwidth of the cross-spectrum may therefore be essential to suppress these artifacts. Stone recommended masking the phase-correlation matrix by retaining only the frequencies at which the magnitude of the cross-spectrum exceeds a certain threshold [63].
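A minimal sketch of this kind of magnitude-based frequency masking is shown below. The relative threshold (a fraction of the largest cross-spectrum magnitude) and the function name are assumptions for illustration; the threshold rule used by Stone [63] may differ. Masked bins are set to zero, so they contribute nothing to the POC surface.

```python
import numpy as np

def masked_cross_spectrum(f, g, rel_threshold=1e-3):
    """Normalized cross-power spectrum keeping only bins where |F G*| > rel_threshold * max|F G*|."""
    cross = np.fft.fft2(f) * np.conj(np.fft.fft2(g))
    mag = np.abs(cross)
    keep = mag > rel_threshold * mag.max()
    Q = np.zeros_like(cross)
    Q[keep] = cross[keep] / mag[keep]
    return Q, keep

# Usage: band-limited test images, so the high-frequency bins carry no signal.
rng = np.random.default_rng(1)
G = np.fft.fft2(rng.standard_normal((32, 32)))
freq = np.abs(np.fft.fftfreq(32))
G[(freq[:, None] > 0.25) | (freq[None, :] > 0.25)] = 0
g = np.real(np.fft.ifft2(G))
f = np.roll(g, (1, 2), axis=(0, 1))  # f(x, y) = g(x - 2, y - 1)
Q, keep = masked_cross_spectrum(f, g)
peak = np.unravel_index(np.argmax(np.real(np.fft.ifft2(Q))), Q.shape)  # (row, col)
```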

In addition, image features close to the image edges can impair the identification of translational motion between two images. Applying a 2D spatial Blackman or Blackman–Harris window greatly reduces image-boundary effects in the Fourier domain [63]. However, as reported in [59], the Blackman window also removes a significant amount of the signal energy, whereas the Hann window achieves a good compromise between noise reduction and loss of the desired phase information [49]. The goal of denoising is to choose a frequency mask that removes the undesired signal while preserving the necessary information.

The most successful phase-matching methods typically apply Hann or Blackman windows to reduce the impact of edge effects on phase correlation. Although phase matching can provide accurate results on its own, windowing the images first is important to improve these results further, and identifying efficient image denoising techniques remains a critical challenge. To choose a suitable window for the reference and sensed images, we evaluated the effect of applying the Blackman and Hann windows before the Fourier transform, with the aim of minimizing the loss of image intensity information and therefore obtaining a more accurate estimate of the translational shifts.
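Both windows can be built as separable 2D windows from NumPy's 1D `np.blackman` and `np.hanning`. The sketch below (with hypothetical helper names) applies them to a template and compares the fraction of a constant signal's energy that each window retains, which illustrates why the Blackman window discards more signal energy than the Hann window.

```python
import numpy as np

def window_2d(shape, kind="blackman"):
    """Separable 2D window: outer product of two 1D NumPy windows."""
    rows, cols = shape
    fn = {"blackman": np.blackman, "hann": np.hanning}[kind]
    return np.outer(fn(rows), fn(cols))

def windowed(template, kind="blackman"):
    """Weight a template by a 2D window before its Fourier transform."""
    return template * window_2d(template.shape, kind)

blackman = window_2d((32, 32), "blackman")
hann = window_2d((32, 32), "hann")
# Fraction of a constant unit signal's energy that survives each window.
energy_blackman = (blackman ** 2).mean()
energy_hann = (hann ** 2).mean()
```

Both windows taper to zero at the template borders, which is what suppresses the edge discontinuities; the Blackman window tapers more aggressively.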

3.3. Sub-Pixel Translation Estimation

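Among the existing sub-pixel registration techniques, Fourier-domain methods are highly robust against correlated and frequency-dependent noise [4,19,20,21,22]. Most of these methods are variations of the original phase-correlation method [28]. The translation $(x_0, y_0)$ between two images related by $f(x, y) = g(x - x_0, y - y_0)$ can be recovered in either the spatial or the frequency domain. In the spatial-domain approach [15,19], the inverse Fourier transform of the normalized cross-power spectrum is computed, which yields an approximate impulse: a function that is close to zero everywhere except at the displacement $(x_0, y_0)$ that optimally registers the two images. Because this function is sampled on the pixel grid, the estimate is limited to integer-pixel accuracy. Sub-pixel accuracy requires finding the continuous displacement that maximizes the inverse Fourier transform of the normalized cross-power spectrum, defined as the phase-only correlation (POC) function. The normalized cross-power spectrum of the images is defined by:

$$Q(\omega_x, \omega_y) = \frac{F(\omega_x, \omega_y)\, G^{*}(\omega_x, \omega_y)}{\left| F(\omega_x, \omega_y)\, G^{*}(\omega_x, \omega_y) \right|} = e^{-i(\omega_x x_0 + \omega_y y_0)},$$

where $F$ and $G$ are the discrete Fourier transforms of $f$ and $g$, $^{*}$ denotes complex conjugation, and $\omega_x$ and $\omega_y$ are the frequency variables along columns and rows, respectively.

The POC function is given by

$$r(x, y) = \frac{1}{MN} \sum_{\omega_x} \sum_{\omega_y} \operatorname{Re}\left\{ Q(\omega_x, \omega_y)\, e^{i(\omega_x x + \omega_y y)} \right\},$$

with $\omega_x = 2\pi k / M$ and $\omega_y = 2\pi l / N$, where $k$ and $l$ are integer frequency indices, and $M$ and $N$ are, respectively, the width and height of the input images.

The problem of finding the extrema of the POC function with sub-pixel accuracy is equivalent to finding the zeros of $\nabla r$. The derivative of $r$ takes the form of a vector-valued bivariate gradient function, given by:

$$\nabla r(x, y) = \left[ r_x(x, y),\; r_y(x, y) \right]^{T},$$

with

$$r_x(x, y) = -\frac{1}{MN} \sum_{\omega_x} \sum_{\omega_y} \omega_x \sin\left( \phi(\omega_x, \omega_y) + \omega_x x + \omega_y y \right)$$

and

$$r_y(x, y) = -\frac{1}{MN} \sum_{\omega_x} \sum_{\omega_y} \omega_y \sin\left( \phi(\omega_x, \omega_y) + \omega_x x + \omega_y y \right),$$

obtained by setting $Q(\omega_x, \omega_y) = e^{i\phi(\omega_x, \omega_y)}$.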
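The difference between the integer-grid and continuous POC can be checked numerically. The NumPy sketch below assumes r(x, y) = (1/MN) Σ Re{Q exp(i(ωx x + ωy y))} with Q = F G*/|F G*|, and evaluates r and its gradient by summing over all discrete frequencies. On integer positions it reproduces the inverse FFT surface; at the true fractional shift it reaches about 1 with a vanishing gradient. An odd image size is used so that there is no Nyquist bin and the Fourier-shifted test image stays real. The function names are illustrative.

```python
import numpy as np

def normalized_cross_spectrum(f, g, eps=1e-12):
    """Q = F G* / |F G*| (rows = y, columns = x)."""
    cross = np.fft.fft2(f) * np.conj(np.fft.fft2(g))
    return cross / (np.abs(cross) + eps)

def poc(Q, x, y):
    """POC value r(x, y) and gradient (r_x, r_y) at a continuous position."""
    wy = 2 * np.pi * np.fft.fftfreq(Q.shape[0])[:, None]
    wx = 2 * np.pi * np.fft.fftfreq(Q.shape[1])[None, :]
    qe = Q * np.exp(1j * (wx * x + wy * y))
    r = qe.real.sum() / Q.size
    grad = np.array([-(wx * qe.imag).sum(), -(wy * qe.imag).sum()]) / Q.size
    return r, grad

# Usage: f(x, y) = g(x - 3.3, y + 2.6).
rng = np.random.default_rng(2)
g = rng.random((63, 63))
wy = 2 * np.pi * np.fft.fftfreq(63)[:, None]
wx = 2 * np.pi * np.fft.fftfreq(63)[None, :]
f = np.real(np.fft.ifft2(np.fft.fft2(g) * np.exp(-1j * (wx * 3.3 + wy * -2.6))))
Q = normalized_cross_spectrum(f, g)
surface = np.real(np.fft.ifft2(Q))    # r sampled on the integer grid only
r_true, grad_true = poc(Q, 3.3, -2.6)  # off-grid evaluation at the true shift
```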

This stage computes the sub-pixel displacement that minimizes the cost function $-r$, that is, maximizes the POC function. The process is iterated until it converges to a sufficiently small neighborhood of the optimum or reaches a maximum number of iterations. A drawback of phase correlation is that it copes poorly with heavily noisy data. Therefore, both a good initial estimate of the displacement and an optimal filter that reduces noise without losing the desired information are required.

The derivative of the cost function has greater high-frequency content than $r$ itself, because each frequency term is weighted by $\omega_x$ or $\omega_y$; thus, $\nabla r$ is very sensitive to noise and border effects. For this reason, two methods are compared for the local search that minimizes the objective function: the first (Nelder–Mead) uses only function evaluations, whereas the second (TPSS) also requires the first derivative to update the solution point.

3.3.1. Nelder–Mead (NM) Optimization

The Nelder–Mead (NM) method [68] was designed for the unconstrained minimization of an objective function of n variables using a simplex of (n + 1) vertices. For two variables, the simplex is a triangle, so the algorithm requires three starting points. Here, the first vertex is the integer solution $(x_0, y_0)$, and the other two are $(x_0 \pm 0.5, y_0)$ and $(x_0, y_0 \pm 0.5)$, where each sign is chosen toward the better neighbor. At each iteration, the NM algorithm moves the worst vertex of the current simplex toward a better region of the search space (by reflection, expansion, or contraction), or shrinks the whole simplex toward the best vertex. Finally, the vertex with the best objective value is returned.
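A self-contained NumPy sketch of this refinement is given below: it finds the integer POC peak, builds the three-vertex starting simplex described above, and minimizes the cost −r with a simplified Nelder–Mead (reflection, expansion, inside contraction, shrink). Several details are assumptions of the sketch: the "better neighbor" is taken as the larger of the two integer neighbors on the POC grid, r is evaluated as (1/MN) Σ Re{Q exp(i(ωx x + ωy y))}, and the tolerance and iteration limit are illustrative. The function names are hypothetical.

```python
import numpy as np

def poc_value(Q, x, y):
    """Continuous POC r(x, y) = (1/MN) * sum Re{Q exp(i(wx*x + wy*y))}."""
    wy = 2 * np.pi * np.fft.fftfreq(Q.shape[0])[:, None]
    wx = 2 * np.pi * np.fft.fftfreq(Q.shape[1])[None, :]
    return float(np.real(Q * np.exp(1j * (wx * x + wy * y))).mean())

def nelder_mead(fun, simplex, tol=1e-7, max_iter=500):
    """Simplified Nelder-Mead: reflection, expansion, inside contraction, shrink."""
    pts = [np.asarray(p, dtype=float) for p in simplex]
    vals = [fun(p) for p in pts]
    for _ in range(max_iter):
        order = np.argsort(vals)
        pts, vals = [pts[i] for i in order], [vals[i] for i in order]
        if max(np.linalg.norm(p - pts[0]) for p in pts[1:]) < tol:
            break
        centroid = np.mean(pts[:-1], axis=0)
        xr = 2 * centroid - pts[-1]                   # reflection of the worst vertex
        fr = fun(xr)
        if fr < vals[0]:
            xe = 3 * centroid - 2 * pts[-1]           # expansion
            fe = fun(xe)
            pts[-1], vals[-1] = (xe, fe) if fe < fr else (xr, fr)
        elif fr < vals[-2]:
            pts[-1], vals[-1] = xr, fr
        else:
            xc = 0.5 * (centroid + pts[-1])           # inside contraction
            fc = fun(xc)
            if fc < vals[-1]:
                pts[-1], vals[-1] = xc, fc
            else:                                     # shrink toward the best vertex
                pts = [pts[0]] + [0.5 * (pts[0] + p) for p in pts[1:]]
                vals = [vals[0]] + [fun(p) for p in pts[1:]]
    return pts[int(np.argmin(vals))]

def nm_subpixel_shift(f, g, eps=1e-12):
    """Integer POC peak, then NM minimization of -r from the three-point starting simplex."""
    cross = np.fft.fft2(f) * np.conj(np.fft.fft2(g))
    Q = cross / (np.abs(cross) + eps)
    surface = np.real(np.fft.ifft2(Q))
    n_rows, n_cols = Q.shape
    iy, ix = np.unravel_index(np.argmax(surface), surface.shape)
    x0 = int(ix - n_cols if ix > n_cols // 2 else ix)
    y0 = int(iy - n_rows if iy > n_rows // 2 else iy)
    sx = 0.5 if surface[iy, (ix + 1) % n_cols] >= surface[iy, (ix - 1) % n_cols] else -0.5
    sy = 0.5 if surface[(iy + 1) % n_rows, ix] >= surface[(iy - 1) % n_rows, ix] else -0.5
    simplex = [(x0, y0), (x0 + sx, y0), (x0, y0 + sy)]
    return nelder_mead(lambda p: -poc_value(Q, p[0], p[1]), simplex)

# Usage: f(x, y) = g(x - 3.3, y + 2.6) on an odd-sized grid.
rng = np.random.default_rng(5)
g = rng.random((63, 63))
wy = 2 * np.pi * np.fft.fftfreq(63)[:, None]
wx = 2 * np.pi * np.fft.fftfreq(63)[None, :]
f = np.real(np.fft.ifft2(np.fft.fft2(g) * np.exp(-1j * (wx * 3.3 + wy * -2.6))))
x_hat, y_hat = nm_subpixel_shift(f, g)
```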

3.3.2. The Two-Point Step Size (TPSS) Gradient

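The TPSS method [21] is a gradient algorithm that maximizes the POC function, that is, minimizes the cost $-r$, by iteratively moving along the steepest-descent direction of the cost until convergence is achieved.

The updating equation for any gradient algorithm has the following iterative form:

$$\mathbf{p}_{k+1} = \mathbf{p}_k - \alpha_k \mathbf{g}_k,$$

where $\mathbf{p}_k$ is the displacement estimate at iteration $k$, $\mathbf{g}_k = -\nabla r(\mathbf{p}_k)$ is the gradient of the cost at $\mathbf{p}_k$, $\mathbf{p}_0$ is a given starting point, and the scalar step size $\alpha_k$ is given by:

$$\alpha_k = \frac{\mathbf{s}_{k-1}^{T} \mathbf{s}_{k-1}}{\mathbf{s}_{k-1}^{T} \mathbf{y}_{k-1}},$$

where $\mathbf{s}_{k-1} = \mathbf{p}_k - \mathbf{p}_{k-1}$ and $\mathbf{y}_{k-1} = \mathbf{g}_k - \mathbf{g}_{k-1}$.

Iterations stop when $\lVert \mathbf{g}_k \rVert < \varepsilon$, where $\varepsilon$ is a pre-specified small scalar.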
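The two-point step size update can be sketched in NumPy as a Barzilai–Borwein descent on the cost −r, starting from the integer POC peak. The initial step α0 = 0.1, the positivity check on s·y, and the clipping of the step to [1e-4, 1] are safeguards added in this sketch and are not stated in the text; the gradient is computed analytically from the frequency-domain sums, assuming r(x, y) = (1/MN) Σ Re{Q exp(i(ωx x + ωy y))}. The function names are hypothetical.

```python
import numpy as np

def poc_and_gradient(Q, x, y):
    """r(x, y) and grad r = (r_x, r_y) from the frequency-domain sums."""
    wy = 2 * np.pi * np.fft.fftfreq(Q.shape[0])[:, None]
    wx = 2 * np.pi * np.fft.fftfreq(Q.shape[1])[None, :]
    qe = Q * np.exp(1j * (wx * x + wy * y))
    r = qe.real.sum() / Q.size
    grad = np.array([-(wx * qe.imag).sum(), -(wy * qe.imag).sum()]) / Q.size
    return r, grad

def tpss_refine(Q, start, alpha0=0.1, eps=1e-8, max_iter=100):
    """Two-point step size (Barzilai-Borwein) descent on the cost -r."""
    p = np.asarray(start, dtype=float)
    g = -poc_and_gradient(Q, p[0], p[1])[1]  # gradient of the cost -r
    alpha = alpha0
    for _ in range(max_iter):
        if np.linalg.norm(g) < eps:          # stopping rule ||g_k|| < eps
            break
        p_new = p - alpha * g
        g_new = -poc_and_gradient(Q, p_new[0], p_new[1])[1]
        s, y = p_new - p, g_new - g
        sy = float(s @ y)
        # BB step s's / s'y, with a positivity check and clipping as safeguards.
        alpha = float(np.clip((s @ s) / sy, 1e-4, 1.0)) if sy > 0 else alpha0
        p, g = p_new, g_new
    return p

# Usage: f(x, y) = g(x - 3.3, y + 2.6); start from the integer peak (3, -3).
rng = np.random.default_rng(5)
g = rng.random((63, 63))
wy = 2 * np.pi * np.fft.fftfreq(63)[:, None]
wx = 2 * np.pi * np.fft.fftfreq(63)[None, :]
f = np.real(np.fft.ifft2(np.fft.fft2(g) * np.exp(-1j * (wx * 3.3 + wy * -2.6))))
cross = np.fft.fft2(f) * np.conj(np.fft.fft2(g))
Q = cross / (np.abs(cross) + 1e-12)
p_hat = tpss_refine(Q, start=(3, -3))
```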

3.4. Detection of Outliers

The choice of key points plays a crucial role in achieving accurate registration, and co-registration can be inaccurate if outliers are present. Dealing with outliers is therefore necessary before computing a spatial transformation model [69,70], and robust algorithms are required to identify and handle them efficiently. Our approach uses the Random Sample Consensus (RANSAC) algorithm to filter outliers. RANSAC, proposed by Fischler and Bolles [71], is one of the most robust techniques for detecting and handling a large percentage of outliers in a key-point set. It repeatedly selects a minimal random subset of points, fits a model to it, and evaluates how well the remaining data agree with that model until the best solution is found. The basic steps are as follows: (1) a minimal random sample of point-wise correspondences is drawn and used to estimate a model by minimizing a cost function, namely the sum of the inlier distance errors; (2) all other correspondences are checked against the fitted model, and a point whose residual exceeds a threshold is considered an outlier; (3) the estimated model with a sufficient number of inliers is retained as the optimal transformation; and (4) all inliers are used to re-estimate the model. The RANSAC algorithm is listed in Algorithm 1.

Algorithm 1: RANSAC Algorithm
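The steps above can be sketched for an affine model as follows. This is a classic inlier-counting RANSAC in NumPy, written for illustration: the affine model, the 1-pixel residual threshold, the fixed number of iterations, and the function names are assumptions, not the settings of this study. Each iteration fits a model to a minimal sample of three correspondences, counts the correspondences whose residual falls below the threshold, and keeps the model with the most inliers; the final model is re-estimated by least squares on all inliers.

```python
import numpy as np

def fit_affine(src, dst):
    """Least-squares affine model: dst ~= [x, y, 1] @ A, with A of shape (3, 2)."""
    X = np.hstack([src, np.ones((len(src), 1))])
    A, *_ = np.linalg.lstsq(X, dst, rcond=None)
    return A

def apply_affine(A, pts):
    return np.hstack([pts, np.ones((len(pts), 1))]) @ A

def ransac_affine(src, dst, threshold=1.0, n_iter=500, seed=0):
    """Keep the minimal-sample model with the most inliers, then refit on all inliers."""
    rng = np.random.default_rng(seed)
    best = np.zeros(len(src), dtype=bool)
    for _ in range(n_iter):
        sample = rng.choice(len(src), size=3, replace=False)  # minimal set for an affine model
        A = fit_affine(src[sample], dst[sample])
        inliers = np.linalg.norm(apply_affine(A, src) - dst, axis=1) < threshold
        if inliers.sum() > best.sum():
            best = inliers
    return fit_affine(src[best], dst[best]), best

# Usage: 50 correspondences, the first 10 of which are corrupted.
rng = np.random.default_rng(3)
src = rng.uniform(0, 500, size=(50, 2))
A_true = np.array([[1.01, 0.02], [-0.015, 0.99], [4.0, -2.5]])
dst = apply_affine(A_true, src)
dst[:10] += 30.0
A_est, inliers = ransac_affine(src, dst)
```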

3.5. Transformation Model

Precise registration requires a transformation that correctly models the distortions expected in the images [11]; the transformation is selected according to the nature of those distortions. The most commonly used transformations are global transformations, which map the entire image with a single function. The registration of satellite imagery has generally been modeled as a global deformation, mainly using affine and polynomial transformations [1,72]. For very high spatial resolution imagery of rugged terrain, however, a global transformation may not handle local distortions. Global transformations that can accommodate slight local variations include thin-plate splines (TPS) [1,73] and multiquadric (MQ) transformations. To handle local geometric distortions, several local mapping functions have been proposed; they are more accurate but time-consuming, and they need large numbers of evenly distributed features to represent the local variation [74]. Goshtasby [75] found that TPS and MQ functions are effective for registering remote sensing images with few local geometric differences and a small number of widely spread points. By comparison, piecewise linear (PL) transformation is more appropriate for images with local geometric differences, because local accuracy is maintained in a small neighborhood around the corresponding control points [76,77]. When the correspondences are noisy, weighted mean and weighted linear methods are preferred to PL, TPS, and MQ, because inaccuracies in the control points are smoothed and the rational weights adapt to the density and organization of the control points [77]. Here, we use TPS and polynomial transformations to register VHR remote sensing images.
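As an illustration of the TPS model, the NumPy sketch below fits an exact interpolating 2D thin-plate spline (no smoothing term) with the radial basis U(r) = r² log r² plus an affine part, by solving the standard bordered linear system. The function names and the absence of regularization are assumptions of this sketch. The fitted mapping passes through every control point, and when the target is purely affine the spline weights vanish and the affine part is recovered.

```python
import numpy as np

def tps_kernel(r):
    """Thin-plate radial basis U(r) = r^2 log(r^2), with U(0) = 0."""
    with np.errstate(divide="ignore", invalid="ignore"):
        u = r ** 2 * np.log(r ** 2)
    return np.nan_to_num(u)

def fit_tps(src, dst):
    """Solve for TPS weights (n, 2) and affine part (3, 2) mapping src -> dst exactly."""
    n = len(src)
    K = tps_kernel(np.linalg.norm(src[:, None, :] - src[None, :, :], axis=2))
    P = np.hstack([np.ones((n, 1)), src])
    L = np.zeros((n + 3, n + 3))
    L[:n, :n], L[:n, n:], L[n:, :n] = K, P, P.T
    sol = np.linalg.solve(L, np.vstack([dst, np.zeros((3, 2))]))
    return sol[:n], sol[n:]

def apply_tps(src, weights, affine, pts):
    """Evaluate the fitted TPS mapping at arbitrary points (m, 2)."""
    U = tps_kernel(np.linalg.norm(pts[:, None, :] - src[None, :, :], axis=2))
    return U @ weights + np.hstack([np.ones((len(pts), 1)), pts]) @ affine

# Usage: a smooth non-affine distortion of 12 control points.
rng = np.random.default_rng(7)
src = rng.uniform(0, 10, size=(12, 2))
dst = src + np.column_stack([0.05 * np.sin(src[:, 1]), 0.03 * np.cos(src[:, 0])])
weights, affine = fit_tps(src, dst)
fitted = apply_tps(src, weights, affine, src)
```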

3.6. Workflow of the Proposed Approach

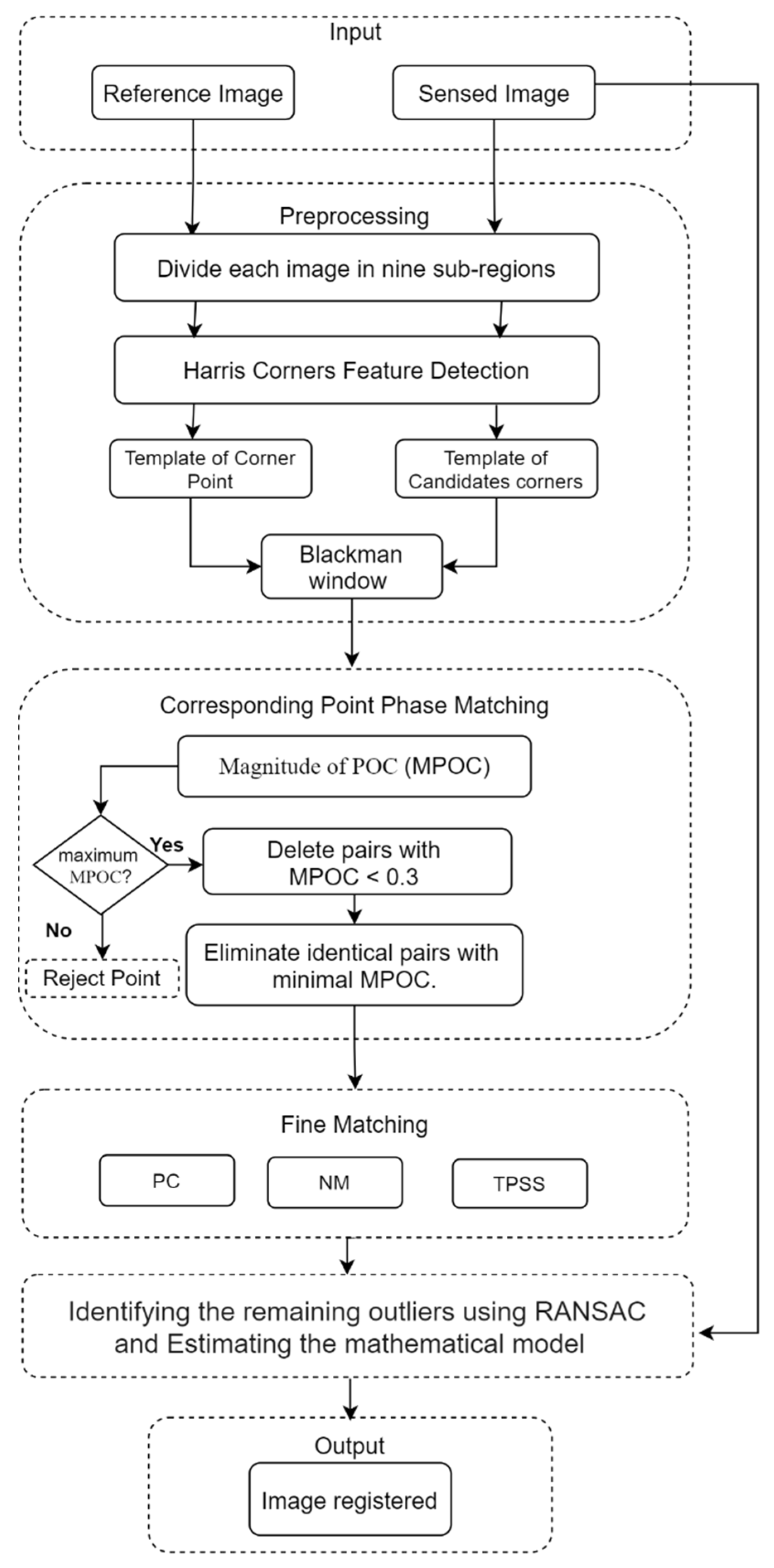

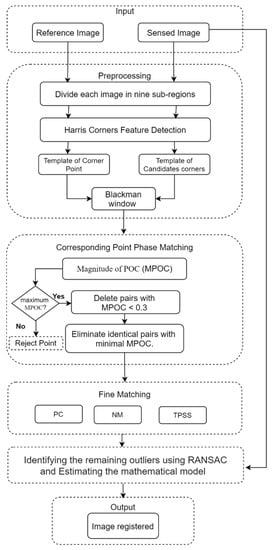

The proposed hybrid algorithm used for sub-pixel co-registration can be summarized in the following steps (Figure 1):

Figure 1.

The flowchart of our methodology. CP: control point; DB: database; PC: phase correlation; NM: Nelder–Mead; TPSS: two-point step size.

- Extract Harris corners from the sensed and reference images for each of the nine sub-regions.

- For each control point CPi in the reference image, weight the reference template and the candidate template centered on each of its k nearest neighbors in the sensed image by a Blackman window.

- Compute the discrete Fourier transform of each windowed template.

- Compute the normalized cross-power spectrum and the POC function between the reference template and each candidate template.

- Take the candidate point with the maximum POC magnitude as the corresponding point.

- Eliminate pairs whose score is less than 0.3; for many-to-one matches, also eliminate the matches with the lower scores.

- Deal with outliers using the RANSAC algorithm.

- Compute the integer-pixel displacement of each corresponding point pair using phase correlation.

- Using these displacements as initial approximations, apply the two optimization algorithms (NM and TPSS) to find the sub-pixel displacements that maximize the POC function r.

- Compute the parameters of the transformation model by least-squares minimization.

- Apply model transformations to align the sensed image with the reference image.
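The score-based elimination in the steps above can be sketched in plain Python. The helper `filter_matches` and the tuple layout (reference id, sensed id, POC score) are hypothetical; the sketch reads the many-to-one rule as keeping, for each sensed point, only the highest-scoring reference match, which is one interpretation of the step rather than a confirmed detail.

```python
def filter_matches(matches, min_score=0.3):
    """matches: iterable of (ref_id, sensed_id, score).

    Keep each reference point's best candidate, drop scores below min_score,
    and resolve many-to-one matches by keeping only the highest-scoring pair."""
    best_per_ref = {}
    for ref_id, sen_id, score in matches:
        if ref_id not in best_per_ref or score > best_per_ref[ref_id][1]:
            best_per_ref[ref_id] = (sen_id, score)
    best_per_sensed = {}
    for ref_id, (sen_id, score) in best_per_ref.items():
        if score < min_score:
            continue
        if sen_id not in best_per_sensed or score > best_per_sensed[sen_id][1]:
            best_per_sensed[sen_id] = (ref_id, score)
    return sorted((ref_id, sen_id, score) for sen_id, (ref_id, score) in best_per_sensed.items())

# Usage: reference points 1 and 2 both pick sensed point 11; point 3 scores below 0.3.
matches = [(0, 10, 0.8), (0, 11, 0.5), (1, 11, 0.6), (2, 11, 0.7), (3, 12, 0.2)]
kept = filter_matches(matches)
```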

3.7. Evaluation Criteria

We evaluated the overall performance of our proposed co-registration workflow using the precision of phase matching and the root mean square error (RMSE) of measured displacements before and after the registration.

The RMSE is computed as:

$$\mathrm{RMSE} = \sqrt{\frac{1}{n} \sum_{i=1}^{n} \left[ (x_{ti} - x_{ri})^2 + (y_{ti} - y_{ri})^2 \right]},$$

where $i$ is the CP number, $n$ is the number of evaluated points, $x_{ti}$ and $y_{ti}$ are the true coordinates, and $x_{ri}$ and $y_{ri}$ are the retransformed coordinates of CP$_i$.

Matching precision, used to evaluate feature matching performance, is the ratio of the number of correct matches to the total number of matches:

$$\mathrm{Precision} = \frac{N_c}{N_c + N_w},$$

where $N_c$ is the number of correct matches and $N_w$ is the number of wrong matches.
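Both criteria are simple to compute. The plain-Python sketch below (with hypothetical function names) evaluates the RMSE over checkpoint pairs and the matching precision from counts of correct and wrong matches; the numbers in the usage lines are illustrative, not results from this study.

```python
import math

def rmse(true_pts, retransformed_pts):
    """RMSE over n checkpoints given (x_t, y_t) and (x_r, y_r) coordinate pairs."""
    n = len(true_pts)
    total = sum((xt - xr) ** 2 + (yt - yr) ** 2
                for (xt, yt), (xr, yr) in zip(true_pts, retransformed_pts))
    return math.sqrt(total / n)

def matching_precision(n_correct, n_wrong):
    """Nc / (Nc + Nw)."""
    return n_correct / (n_correct + n_wrong)

# Usage with illustrative numbers.
err = rmse([(0.0, 0.0), (1.0, 1.0)], [(0.3, 0.4), (1.0, 1.0)])  # sqrt(0.25 / 2)
prec = matching_precision(9, 1)
```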

4. Results and Discussion

In this section, we first present the results obtained with the proposed method for automatically finding corresponding points and accurately registering the sensed image. We then evaluate the effects of different window functions on the correlation measures. After identifying a suitable window function, in a third series of experiments we apply the Nelder–Mead and TPSS optimization methods to improve the matching accuracy. The proposed methods were evaluated against ground-truth sub-pixel shifts.

4.1. Descriptions of Experimental Data

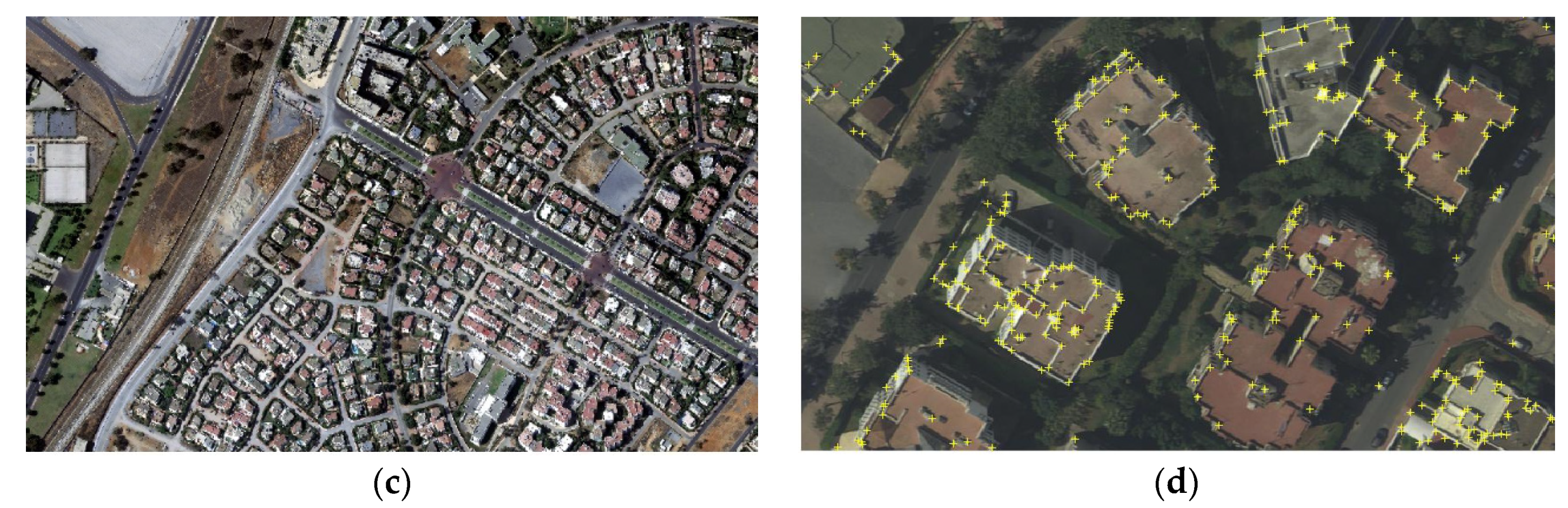

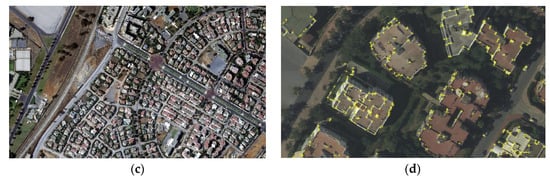

The reliability and validity of the proposed method were evaluated with two sets of VHR remote sensing images acquired by different sensors: a Pleiades multispectral scene of Fes and aerial images of Rabat. Their specific parameters are given in Tables 1 and 2, and the aerial imagery is displayed in Figure 2. Each set contains a reference image and one or more sensed images, registered on the basis of their metadata and georeferencing information. The elevation ranges from approximately 180 to 837 m at the Fes site and from 2 to 332 m at the Rabat site.

Table 1.

Specific parameters of the Pleiades images.

Table 2.

Specific parameters of aerial images.

Figure 2.

Aerial photography used in the experiments: (a) reference image acquired in 2009, (b) sensed image acquired in 2014, (c) sensed image acquired in 2016, (d) sensed image with Harris corners.

4.2. Large-Scale Displacements Estimation with Pixel-Level Accuracy

In this section, we evaluate the effect of the window functions on phase matching and identify the corresponding control points using phase correlation with the window function that yields the largest number of matches.

4.2.1. Effect of Two Window Functions on the Correlation Measures

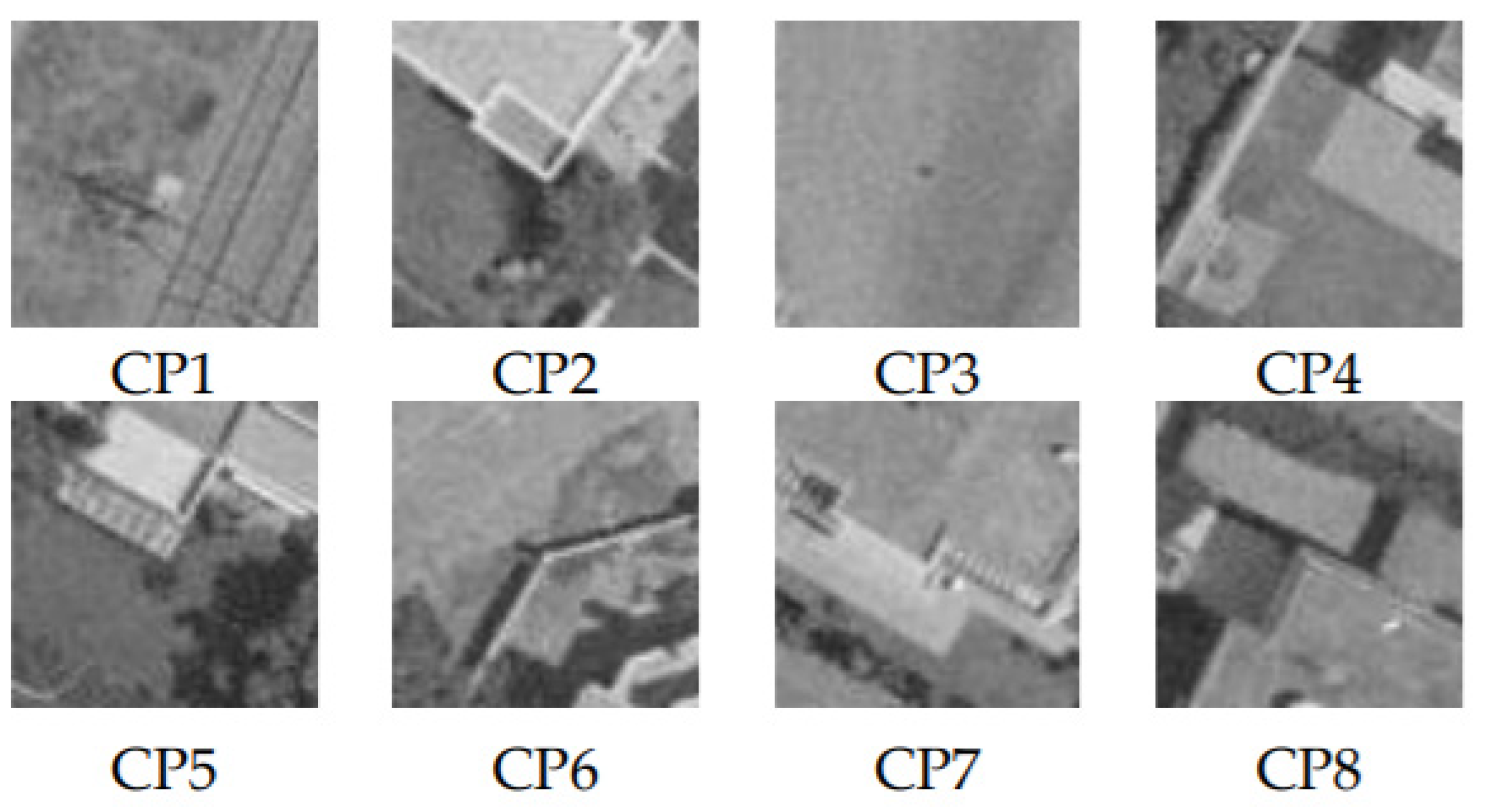

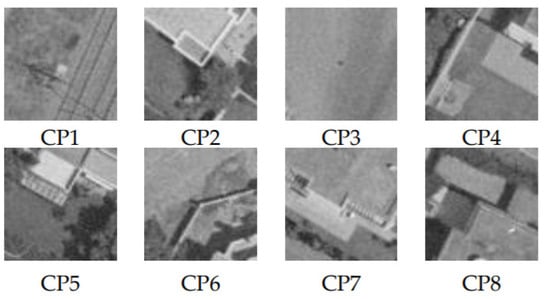

Here, phase matching results for the 2014–2016 pair of aerial images are presented and discussed. To choose the optimal window, we applied the Blackman and Hann window functions to the templates of the sensed and reference images centered on 128 Harris points. A correlation was considered successful if the magnitude of the POC peak was at least 0.3. After outlier removal, 110 corresponding point pairs remained. Examples of the reference templates are shown in Figure 3. The results show that applying the Blackman window to both the reference and sensed images generated more correct matches than the Hann window; however, the Hann window produced more accurate results. Both the Blackman and Hann windows are robust to noise and improve matching accuracy. The results are summarized in Table 3.
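The window settings compared in Table 3 (BW, HW, BW-HW and HW-BW) amount to choosing which separable 2D window weights each template. A minimal NumPy sketch with hypothetical function names follows; `np.hanning` is NumPy's Hann window. For a 16 × 16 template, the Blackman window tapers more strongly, so its mean weight is lower than that of the Hann window.

```python
import numpy as np

WINDOWS_1D = {"blackman": np.blackman, "hann": np.hanning}


def window_2d(kind, size):
    """Separable 2D window: outer product of a 1D window with itself."""
    w = WINDOWS_1D[kind](size)
    return np.outer(w, w)


def weight_templates(ref, sen, ref_kind="blackman", sen_kind="blackman"):
    """Weight reference and sensed templates, possibly with different windows.

    ref_kind="blackman", sen_kind="hann" corresponds to the BW-HW setting;
    swapping the two gives HW-BW.
    """
    size = ref.shape[0]
    return ref * window_2d(ref_kind, size), sen * window_2d(sen_kind, size)


bw = window_2d("blackman", 16)
hw = window_2d("hann", 16)
print(round(float(bw.mean()), 4), round(float(hw.mean()), 4))  # Blackman mean is smaller
```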

Figure 3.

Examples of aerial image templates used in the experiments.

Table 3.

Statistics of the matching errors using Blackman and Hann window functions, expressed in pixels. BW: Blackman window; HW: Hann window; BW-HW: reference patches weighted with BW and sensed patches weighted with HW; and HW-BW: reference patches weighted with HW and sensed patches weighted with BW.

4.2.2. Performance of Phase Matching

Table 4 shows the results of phase matching alone and of our algorithm for the aerial images. For the first algorithm, which uses phase correlation only, the results confirm that phase matching alone does not always provide a precise correlation, particularly for multipixel displacements between the reference and sensed images, consistent with the results of Leprince et al. [49]. To improve matching accuracy, we applied a hybrid algorithm that combines phase correlation with a feature-based technique. After extracting interest points with the Harris corner detector, we identified the corresponding feature matches by phase correlation of template images centered on the Harris corners. The Harris corners thus provide good initial positions, which are then refined by phase matching. This approach avoids the decorrelation caused by multipixel displacements and yields displacement estimates with pixel-level precision.
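A Harris detector of the kind used here to seed phase matching can be sketched with NumPy alone. This is an illustrative implementation, not the authors' code: the Gaussian scale `sigma = 1`, the Harris constant `k = 0.04`, the 3 × 3 non-maximum suppression and all function names are assumptions. The returned (row, col) corners are the pixel-level positions around which templates would be cut for phase correlation.

```python
import numpy as np


def gaussian_kernel(sigma):
    """Normalized 1D Gaussian kernel truncated at 3 sigma."""
    radius = int(3 * sigma)
    x = np.arange(-radius, radius + 1)
    k = np.exp(-x ** 2 / (2 * sigma ** 2))
    return k / k.sum()


def smooth(img, sigma):
    """Separable Gaussian smoothing with zero padding at the borders."""
    k = gaussian_kernel(sigma)
    out = np.apply_along_axis(lambda v: np.convolve(v, k, mode="same"), 0, img)
    return np.apply_along_axis(lambda v: np.convolve(v, k, mode="same"), 1, out)


def harris_response(img, sigma=1.0, k=0.04):
    """Harris measure det(M) - k * trace(M)^2 of the smoothed structure tensor."""
    iy, ix = np.gradient(img.astype(float))
    sxx = smooth(ix * ix, sigma)
    syy = smooth(iy * iy, sigma)
    sxy = smooth(ix * iy, sigma)
    return sxx * syy - sxy ** 2 - k * (sxx + syy) ** 2


def harris_corners(img, n_best=10, sigma=1.0, k=0.04):
    """(row, col) of the n_best strongest positive 3 x 3 local maxima."""
    resp = harris_response(img, sigma, k)
    pad = np.pad(resp, 1, mode="constant", constant_values=-np.inf)
    h, w = resp.shape
    neigh = np.stack([pad[i:i + h, j:j + w] for i in range(3) for j in range(3)])
    is_max = (resp >= neigh.max(axis=0)) & (resp > 0)
    ys, xs = np.nonzero(is_max)
    order = np.argsort(resp[ys, xs])[::-1][:n_best]
    return [(int(y), int(x)) for y, x in zip(ys[order], xs[order])]
```

Edges give a negative response (one large eigenvalue of the structure tensor), so only corner-like neighborhoods survive the `resp > 0` test.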

Table 4.

Results for the PM only and the PM using Harris corners with different types of window functions. PM: Phase Matching. The unit of RMSE is pixels.

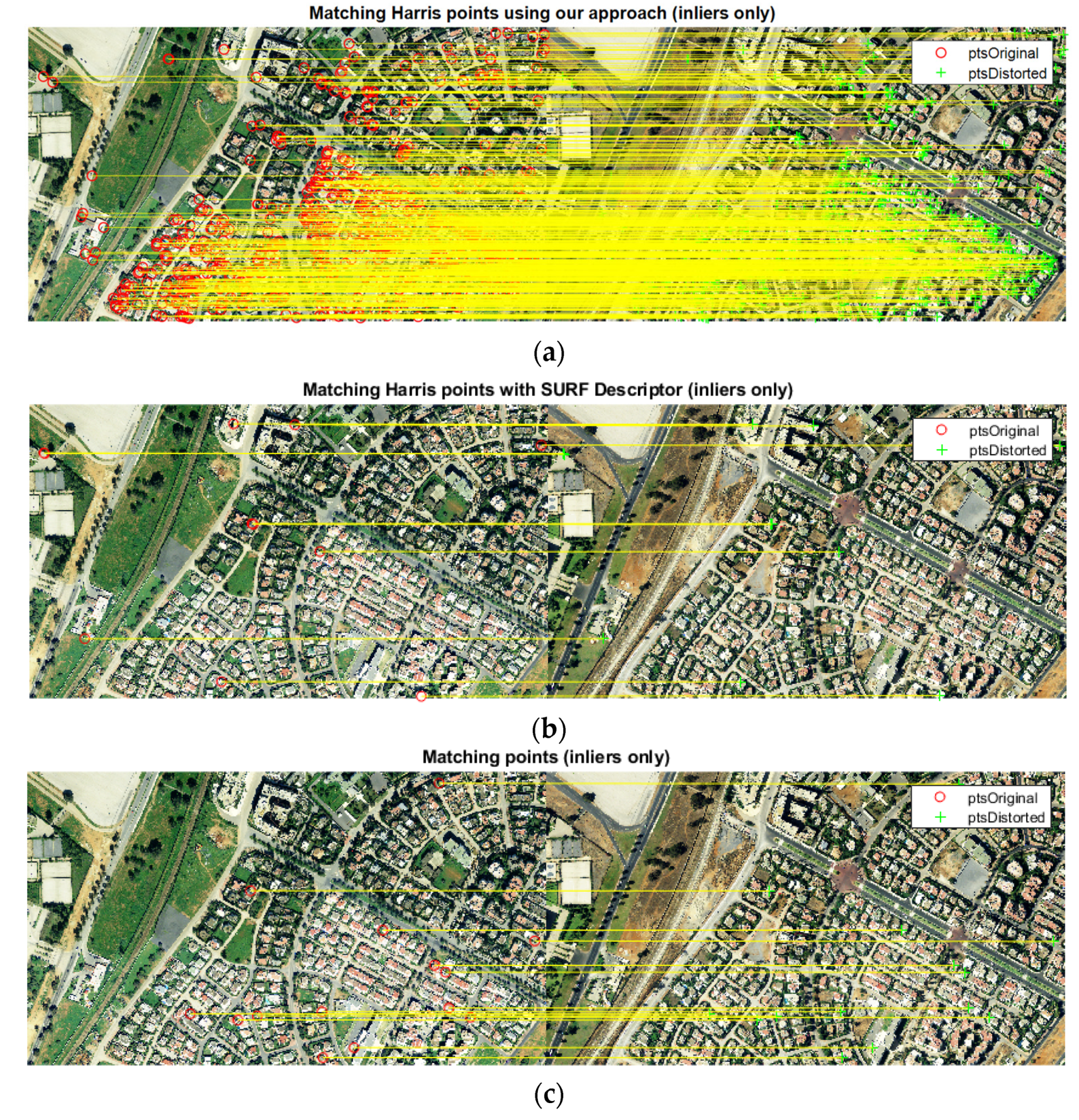

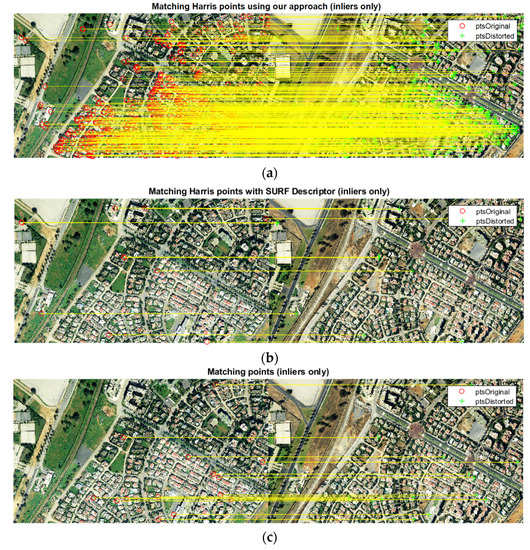

Table 5 compares the correlation results of our approach with those of the SURF-based method. The matching scores indicate that the proposed approach performs better than the SURF descriptor: for the aerial images, the average matching precisions are about 0.1 and 0.02, respectively, and for the satellite images, the average matching scores are about 0.11 and 0.15, respectively. The main difference between the two methods is the number of correct matches: our approach provides the largest number of corresponding feature points for both the aerial and satellite images (Figure 4 shows the aerial case). In terms of computation time, our approach requires an average of 237.5 min for the satellite images because of the large number of corresponding points, whereas SURF-based matching obtains a result in a shorter time.

Table 5.

Comparison between time consumption and true matching rate of the proposed method and SURF-based matching. (1) Aerial image 2014–2016 (45,792 SURF corners and 35,274 Harris corners). (2) Satellite imagery 2013–2014 (1,760,353 SURF corners and 1,094,728 Harris corners). MinQuality: minimum accepted quality of corners.

Figure 4.

Matching result comparison after RANSAC for aerial imagery 2014–2016: (a) our approach without optimization, (b) Harris corners with SURF descriptor, (c) SURF-based method (SURF corners with SURF descriptor).

The success of the proposed approach is largely due to three choices: the Blackman and Hann windows, which suppress edge effects; the use of 16 × 16 pixel patches, since larger patches increased the number of incorrectly matched points; and the RANSAC algorithm for outlier removal. These settings were selected after examining the results of many phase matching trials under various experimental conditions. The experiments demonstrated that, with VHR remote sensing images, the proposed method finds a large number of correct matches with a homogeneous spatial distribution.
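Outlier rejection with RANSAC can be illustrated with a first-order (affine) model fitted to the matched point pairs. The model choice, the 1-pixel inlier threshold, the iteration count and the function names are assumptions of this sketch; the text does not state which model or parameters were used inside RANSAC.

```python
import numpy as np


def fit_affine(src, dst):
    """Least-squares affine fit dst ~ [x, y, 1] @ A, with A of shape (3, 2)."""
    X = np.column_stack([src, np.ones(len(src))])
    A, *_ = np.linalg.lstsq(X, dst, rcond=None)
    return A


def apply_affine(A, pts):
    return np.column_stack([pts, np.ones(len(pts))]) @ A


def ransac_affine(src, dst, threshold=1.0, n_iter=500, seed=0):
    """Return the affine model refitted on the largest consensus set, and its mask."""
    rng = np.random.default_rng(seed)
    best = np.zeros(len(src), dtype=bool)
    for _ in range(n_iter):
        idx = rng.choice(len(src), size=3, replace=False)   # minimal sample
        A = fit_affine(src[idx], dst[idx])
        resid = np.linalg.norm(apply_affine(A, src) - dst, axis=1)
        inliers = resid < threshold
        if inliers.sum() > best.sum():
            best = inliers
    return fit_affine(src[best], dst[best]), best
```

Refitting on all inliers at the end, rather than keeping the three-point model, uses every consistent match for the final estimate.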

4.3. Enhanced Sub-Pixel Displacement Estimation

To achieve sub-pixel accuracy, we tested two optimization approaches that maximize the POC function. To assess the quality and accuracy of this automated process on the aerial and satellite images, we manually relocated the corresponding points in the sensed image for 40 control points.
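Sub-pixel refinement by POC maximization can be sketched by evaluating the inverse DFT of the normalized cross-power spectrum at non-integer shifts and passing its negative to a Nelder–Mead simplex search started from the integer phase-correlation estimate. The simplified Nelder–Mead routine below (reflection, expansion, inside contraction and shrink only), the mean removal, the default rectangular window and all function names are assumptions of this sketch; a library optimizer such as SciPy's `minimize(method="Nelder-Mead")` could be used instead.

```python
import numpy as np


def poc_spectrum(ref, sen, window=None, eps=1e-12):
    """Normalized cross-power spectrum R = G F* / |G F*| of two templates."""
    if window is None:
        window = np.ones(ref.shape)
    F = np.fft.fft2((ref - ref.mean()) * window)
    G = np.fft.fft2((sen - sen.mean()) * window)
    cross = G * np.conj(F)
    return cross / (np.abs(cross) + eps)


def poc_at(R, d):
    """POC value at a (possibly non-integer) shift d = (dy, dx)."""
    fy = np.fft.fftfreq(R.shape[0])[:, None]
    fx = np.fft.fftfreq(R.shape[1])[None, :]
    return float(np.real(np.mean(R * np.exp(2j * np.pi * (fy * d[0] + fx * d[1])))))


def nelder_mead(f, x0, step=0.5, xtol=1e-5, max_iter=500):
    """Simplified Nelder-Mead minimizer with the standard coefficients."""
    x0 = np.asarray(x0, dtype=float)
    simplex = [x0] + [x0 + step * e for e in np.eye(len(x0))]
    values = [f(x) for x in simplex]
    for _ in range(max_iter):
        order = np.argsort(values)
        simplex = [simplex[i] for i in order]
        values = [values[i] for i in order]
        if max(np.linalg.norm(x - simplex[0]) for x in simplex[1:]) < xtol:
            break
        centroid = np.mean(simplex[:-1], axis=0)
        xr = centroid + (centroid - simplex[-1])              # reflection
        fr = f(xr)
        if fr < values[0]:
            xe = centroid + 2.0 * (centroid - simplex[-1])    # expansion
            fe = f(xe)
            simplex[-1], values[-1] = (xe, fe) if fe < fr else (xr, fr)
        elif fr < values[-2]:
            simplex[-1], values[-1] = xr, fr
        else:
            xc = centroid + 0.5 * (simplex[-1] - centroid)    # inside contraction
            fc = f(xc)
            if fc < values[-1]:
                simplex[-1], values[-1] = xc, fc
            else:                                             # shrink towards best
                simplex = [simplex[0] + 0.5 * (x - simplex[0]) for x in simplex]
                values = [f(x) for x in simplex]
    return simplex[int(np.argmin(values))]


def refine_shift_nm(ref, sen, init_shift, window=None):
    """Sub-pixel (dy, dx) maximizing the POC, starting from an integer estimate."""
    R = poc_spectrum(ref, sen, window)
    return nelder_mead(lambda d: -poc_at(R, d), init_shift)
```

For example, starting from an integer estimate such as (2, -2), `refine_shift_nm(ref, sen, (2, -2))` searches only the main lobe of the POC peak, which is why a good pixel-level initialization matters.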

A comparison of the two-point step size and Nelder–Mead algorithms is shown in Table 6. Both optimization algorithms provide the same accuracy in the displacement estimates. The RMSE values obtained with the NM algorithm are similar to those of the solution without optimization, both algorithms being initialized with the same displacement obtained by phase correlation. In addition, when the NM algorithm iteratively adjusts the east/west and north/south displacements to maximize the POC function, the resulting estimates give the same interest point localizations as the Harris detector.

Table 6.

Evaluation of the improved sub-pixel shift estimation using two optimization algorithms (TPSS: two-point step size; NM: Nelder–Mead). The unit of RMSE is pixels.

The main difference between the two-point step size and Nelder–Mead algorithms is the computation time: the two-point step size algorithm (107 min) is more time-consuming than the Nelder–Mead algorithm, which required an average of 3 min for the same number of corners (excluding the preprocessing and the phase matching of corresponding points). Overall, our sub-pixel approach based on Nelder–Mead optimization performed better in terms of the number of correct matches, localization accuracy, and computation time.
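For comparison, a two-point step size (TPSS) method is a gradient scheme whose step length is computed from the last two iterates, as in the Barzilai–Borwein rule. The sketch below applies it to minus the POC evaluated at a sub-pixel shift, using the analytic gradient of the inverse-DFT expression. The BB1 step formula, the step clamp, the fallback step `alpha0`, the stopping tolerance and the function names are assumptions; this is not the authors' implementation.

```python
import numpy as np


def poc_spectrum(ref, sen, eps=1e-12):
    """Normalized cross-power spectrum of two mean-removed templates."""
    F = np.fft.fft2(ref - ref.mean())
    G = np.fft.fft2(sen - sen.mean())
    cross = G * np.conj(F)
    return cross / (np.abs(cross) + eps)


def poc_and_grad(R, d):
    """POC value at shift d = (dy, dx) and its gradient with respect to d."""
    fy = np.fft.fftfreq(R.shape[0])[:, None]
    fx = np.fft.fftfreq(R.shape[1])[None, :]
    terms = R * np.exp(2j * np.pi * (fy * d[0] + fx * d[1]))
    value = np.real(terms.mean())
    grad = np.array([np.real((2j * np.pi * fy * terms).mean()),
                     np.real((2j * np.pi * fx * terms).mean())])
    return float(value), grad


def refine_shift_tpss(R, x0, alpha0=0.1, gtol=1e-8, max_iter=100):
    """Minimize -POC with Barzilai-Borwein (two-point) step sizes."""
    x = np.asarray(x0, dtype=float)
    g = -poc_and_grad(R, x)[1]                 # gradient of the objective -POC
    alpha = alpha0
    for _ in range(max_iter):
        if np.linalg.norm(g) < gtol:
            break
        x_new = x - alpha * g
        g_new = -poc_and_grad(R, x_new)[1]
        s, y = x_new - x, g_new - g
        sy = float(s @ y)
        alpha = float(s @ s) / sy if sy > 0 else alpha0   # BB1 step, with fallback
        alpha = min(max(alpha, 1e-3), 1.0)                # safeguard on the step
        x, g = x_new, g_new
    return x
```

Each iteration costs one full-spectrum evaluation of the value and gradient, whereas Nelder–Mead needs only function values; which method is faster in practice depends on the number of iterations each requires.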

4.4. Validation of the Proposed Registration Method

After sub-pixel refinement, the corresponding points were used to estimate the transformation model for each data set. The proposed approach found 150, 619, and 33,565 corresponding points in aerial image 2014, aerial image 2016, and satellite image 2014, respectively. We registered the three images using thin plate spline (TPS) and polynomial transformations in PCI Geomatica.

The misregistration was measured on a set of independent check points, separately in the x and y dimensions, for both the TPS and first-order polynomial transformations. As shown in Table 7, the first-order polynomial transformation performs better than TPS, which is also time-consuming for large images. The proposed approach aligned aerial image 2016 with an average accuracy of 0.3 pixels in the x and y directions. The accuracy was lower for aerial image 2014, which was co-registered with the 2009 aerial image acquired with an analogue camera. For the satellite image, the co-registration accuracy was about 0.6 pixels.
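The first-order polynomial mapping and its evaluation on independent check points can be sketched as follows. The paper performed this step in PCI Geomatica; the NumPy code below is only an illustration, uses ordinary (unweighted) least squares, and its function names are hypothetical. The x and y mappings are fitted separately, and the check-point RMSE is reported per axis.

```python
import numpy as np


def design(pts):
    """Rows [1, x, y] for a first-order polynomial in x and y."""
    pts = np.asarray(pts, dtype=float)
    return np.column_stack([np.ones(len(pts)), pts[:, 0], pts[:, 1]])


def fit_first_order(src, dst):
    """Fit x' = a0 + a1*x + a2*y and y' = b0 + b1*x + b2*y by least squares."""
    X = design(src)
    dst = np.asarray(dst, dtype=float)
    a, *_ = np.linalg.lstsq(X, dst[:, 0], rcond=None)
    b, *_ = np.linalg.lstsq(X, dst[:, 1], rcond=None)
    return a, b


def checkpoint_rmse(a, b, chk_src, chk_dst):
    """Per-axis RMSE (x, y) of the fitted mapping on independent check points."""
    X = design(chk_src)
    chk_dst = np.asarray(chk_dst, dtype=float)
    ex = X @ a - chk_dst[:, 0]
    ey = X @ b - chk_dst[:, 1]
    return float(np.sqrt(np.mean(ex ** 2))), float(np.sqrt(np.mean(ey ** 2)))
```

Keeping the check points out of the fit is what makes the reported RMSE an estimate of registration accuracy rather than of fitting residuals.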

Table 7.

Registration accuracy using two transformation functions. TPS: thin plate spline. (1) Aerial image 2009–2014. (2) Aerial image 2014–2016. (3) Satellite imagery 2013–2014. The unit of RMSE is meters.

5. Conclusions

Very high-resolution remote sensing images usually contain obvious local geometric distortions, which become more pronounced as spatial resolution increases and sensor altitude decreases. To co-register such images more effectively, this paper proposes a hybrid registration approach that combines Harris corners with phase correlation and offers two advantages. First, using Harris feature points improves the reliability and efficiency of Fourier phase matching. Second, the approach is less sensitive to image noise and makes sub-pixel accuracy achievable.

Because Harris corners are located only at pixel level, two optimization algorithms were used to refine the localization to sub-pixel accuracy. The proposed approach was used to co-register aerial and satellite imagery and was validated in terms of precision and robustness to noise.

The experimental results show that both the Blackman and Hann window functions are robust to noise and improve matching accuracy. Applying the Blackman window to both the reference and sensed images generates more correct matches than the Hann window and results in a correct matching rate of 0.8. In addition, the correct matching rate of the proposed approach is higher than that of the SURF-based method. The experiments also demonstrate that the proposed approach based on the NM optimization algorithm performs better in terms of the number of correct matches, localization accuracy, and computation time. The sensed image was then registered using polynomial and TPS transformations, and the parameters of the polynomial mapping function were estimated by weighted least squares. The experiments confirmed that the proposed approach can achieve registration accuracy better than 0.5 pixels and is more robust to noise. Our approach offers a better compromise between accuracy and the number of correspondences. Its advantages are as follows: (1) it produces a large number of true correspondences located with sub-pixel accuracy; (2) corner-based phase matching performs well and produces more precise and stable results; (3) it reduces the computation cost because correlation is computed only at corners, and it allows accurate error evaluation because errors are easier to detect and measure.

Three limitations of our approach remain to be addressed. First, the adopted phase correlation approach recovers only the translation between the reference and sensed images. Second, the computation time is high; it could be reduced with a coarse-to-fine registration strategy and more powerful hardware. Third, the control points used to assess the optimization algorithms were relocated manually, so their localization may contain errors, because it is difficult to localize the same corner with sub-pixel accuracy. Therefore, the performance and efficacy of the optimization methods should be revalidated with simulated data.

Our future work will focus on the Fourier–Mellin transform to match images that are translated, rotated, and scaled, with the aim of improving sub-pixel registration accuracy for satellite and drone imagery.

Author Contributions

L.R. developed the methods, carried out the experiments, and wrote the manuscript. I.S. and M.E. supervised the research. All the authors analyzed the results and improved the manuscript. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Acknowledgments

The authors would like to thank the editor and anonymous reviewers for their valuable comments on the improvement of this paper. The authors also thank the National Agency of Land Registry, Cadaster and Cartography (ANCFCC) and the Royal Center of Remote Sensing (CRTS) for providing aerial images.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Bentoutou, Y.; Taleb, N.; Kpalma, K.; Ronsin, J. An automatic image registration for applications in remote sensing. IEEE Trans. Geosci. Remote Sens. 2005, 43, 2127–2137.

- Dong, Y.; Jiao, W.; Long, T.; Liu, L.; He, G. Eliminating the Effect of Image Border with Image Periodic Decomposition for Phase Correlation Based Remote Sensing Image Registration. Sensors 2019, 19, 2329.

- Ye, Z.; Kang, J.; Yao, J.; Song, W.; Liu, S.; Luo, X.; Xu, Y.; Tong, X. Robust Fine Registration of Multisensor Remote Sensing Images Based on Enhanced Subpixel Phase Correlation. Sensors 2020, 20, 4338.

- Fan, R.; Hou, B.; Liu, J.; Yang, J.; Hong, Z. Registration of Multiresolution Remote Sensing Images Based on L2-Siamese Model. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2021, 14, 237–248.

- Tondewad, M.P.S.; Dale, M. Remote Sensing Image Registration Methodology: Review and Discussion. Procedia Comput. Sci. 2020, 171, 2390–2399.

- Tong, X.; Ye, Z.; Xu, Y.; Gao, S.; Xie, H.; Du, Q.; Liu, S.; Xu, X.; Liu, S.; Luan, K.; et al. Image Registration With Fourier-Based Image Correlation: A Comprehensive Review of Developments and Applications. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2019, 12, 4062–4081.

- Lee, W.; Sim, D.; Oh, S.-J. A CNN-Based High-Accuracy Registration for Remote Sensing Images. Remote Sens. 2021, 13, 1482.

- Feng, R.; Du, Q.; Shen, H.; Li, X. Region-by-Region Registration Combining Feature-Based and Optical Flow Methods for Remote Sensing Images. Remote Sens. 2021, 13, 1475.

- Shah, U.S.; Mistry, D. Survey of Image Registration techniques for Satellite Images. Int. J. Sci. Res. Dev. 2014, 1, 2448–2452.

- Eastman, R. Survey of image registration methods. In Image Registration for Remote Sensing; Cambridge University Press: Cambridge, UK, 2011; pp. 35–78.

- Brown, L.G. A survey of image registration techniques. ACM Comput. Surv. 1992, 24, 325–376.

- Zitová, B.; Flusser, J. Image registration methods: A survey. Image Vis. Comput. 2003, 21, 977–1000.

- Bhowmik, A.; Gumhold, S.; Rother, C.; Brachmann, E. Reinforced Feature Points: Optimizing Feature Detection and Description for a High-Level Task. arXiv 2020, arXiv:1912.00623.

- Li, K.; Zhang, Y.; Zhang, Z.; Lai, G. A Coarse-to-Fine Registration Strategy for Multi-Sensor Images with Large Resolution Differences. Remote Sens. 2019, 11, 470.

- Ma, J.; Jiang, X.; Fan, A.; Jiang, J.; Yan, J. Image Matching from Handcrafted to Deep Features: A Survey. Int. J. Comput. Vis. 2021, 129, 23–79.

- Mikolajczyk, K.; Schmid, C. A performance evaluation of local descriptors. IEEE Trans. Pattern Anal. Mach. Intell. 2003, 27, 1615–1630.

- Kelman, A.; Sofka, M.; Stewart, C.V. Keypoint Descriptors for Matching Across Multiple Image Modalities and Non-linear Intensity Variations. In Proceedings of the 2007 IEEE Conference on Computer Vision and Pattern Recognition, Minneapolis, MN, USA, 17–22 June 2007; pp. 1–7.

- Ehsan, S.; Clark, A.F.; Mcdonald-Maier, K.D. Rapid Online Analysis of Local Feature Detectors and Their Complementarity. Sensors 2013, 13, 10876–10907.

- Bansal, M.; Kumar, M. 2D object recognition: A comparative analysis of SIFT, SURF and ORB feature descriptors. Multimedia Tools Appl. 2021, 80, 18839–18857.

- Harris, C.; Stephens, M. A Combined Corner and Edge Detector. AVC 1988, 23.1–23.6.

- Zhu, Q.; Wu, B.; Wan, N. A sub-pixel location method for interest points by means of the Harris interest strength. Photogramm. Rec. 2007, 22, 321–335.

- Zhang, Z.; Lu, H.; Li, X.; Li, W.; Yuan, W. Application of Improved Harris Algorithm in Sub-Pixel Feature Point Extraction. Int. J. Comput. Electr. Eng. 2014, 6, 101–104.

- Lowe, G. SIFT—The Scale Invariant Feature Transform. Int. J. Comput. Vis. 2004, 2, 91–110.

- Ye, M.; Tang, Z. Registration of correspondent points in the stereo-pairs of Chang’E-1 lunar mission using SIFT algorithm. J. Earth Sci. 2013, 24, 371–381.

- Wang, S.; Wang, X.; Li, J. GF-2 Panchromatic and Multispectral Remote Sensing Image Registration Algorithm. IEEE Access 2020, 8, 138067–138076.

- Dong, Y.; Jiao, W.; Long, T.; He, G.; Gong, C. An Extension of Phase Correlation-Based Image Registration to Estimate Similarity Transform Using Multiple Polar Fourier Transform. Remote Sens. 2018, 10, 1719.

- Oppenheim, A.V.; Buck, J.R.; Schafer, R.W. Discrete-Time Signal Processing; Prentice Hall: Upper Saddle River, NJ, USA, 2001; Volume 2.

- Kuglin, C.D. The Phase Correlation Image Alignment Method. Proc. Int. Conf. Cybern. Soc. 1975, 4, 163–165.

- Li, X.; Hu, Y.; Shen, T.; Zhang, S.; Cao, J.; Hao, Q. A comparative study of several template matching algorithms oriented to visual navigation. In Proceedings of the Optoelectronic Imaging and Multimedia Technology VII, Virtual, 11–16 October 2020; Volume 11550, pp. 66–74.

- Arya, K.V.; Gupta, P.; Kalra, P.; Mitra, P. Image registration using robust M-estimators. Pattern Recognit. Lett. 2007, 28, 1957–1968.

- Kim, J.; Fessler, J.A. Intensity-Based Image Registration Using Robust Correlation Coefficients. IEEE Trans. Med. Imaging 2004, 23, 1430–1444.

- Georgescu, B.; Meer, P. Point matching under large image deformations and illumination changes. IEEE Trans. Pattern Anal. Mach. Intell. 2004, 26, 674–688.

- Eastman, R.D.; Le Moigne, J.; Netanyahu, N.S. Research issues in image registration for remote sensing. IEEE Conf. Comput. Vis. Pattern Recognit. 2007, 1–8.

- Konstantinidis, D.; Stathaki, T.; Argyriou, V. Phase Amplified Correlation for Improved Sub-Pixel Motion Estimation. IEEE Trans. Image Process. 2019, 28, 3089–3101.

- Xu, Q.; Chavez, A.G.; Bulow, H.; Birk, A.; Schwertfeger, S. Improved Fourier Mellin Invariant for Robust Rotation Estimation with Omni-Cameras. arXiv 2019, arXiv:1811.05306v2, 320–324.

- Leprince, S.; Ayoub, F.; Klinger, Y.; Avouac, J.-P. Co-Registration of Optically Sensed Images and Correlation (COSI-Corr): An operational methodology for ground deformation measurements. IEEE Int. Geosci. Remote Sens. Symp. 2007, 1943–1946.

- Scheffler, D.; Hollstein, A.; Diedrich, H.; Segl, K.; Hostert, P. AROSICS: An Automated and Robust Open-Source Image Co-Registration Software for Multi-Sensor Satellite Data. Remote Sens. 2017, 9, 676.

- De Castro, E.; Morandi, C. Registration of Translated and Rotated Images Using Finite Fourier Transforms. IEEE Trans. Pattern Anal. Mach. Intell. 1987, 9, 700–703.

- Chen, Q.-S.; Defrise, M.; Deconinck, F. Symmetric phase-only matched filtering of Fourier-Mellin transforms for image registration and recognition. IEEE Trans. Pattern Anal. Mach. Intell. 1994, 16, 1156–1168.

- Reddy, B.; Chatterji, B. An FFT-based technique for translation, rotation, and scale-invariant image registration. IEEE Trans. Image Process. 1996, 5, 1266–1271.

- Abdelfattah, R.; Nicolás, J.M. InSAR image co-registration using the Fourier–Mellin transform. Int. J. Remote Sens. 2005, 26, 2865–2876.

- Gottesfeld, L. A Survey of Image Registration. ACM Comput. Surv. 1992, 24, 326–376.

- Viola, P.; Jones, M. Rapid object detection using a boosted cascade of simple features. In Proceedings of the 2001 IEEE Computer Society Conference on Computer Vision and Pattern Recognition, Kauai, HI, USA, 8–14 December 2001.

- Huang, X.; Sun, Y.; Metaxas, D.; Sauer, F.; Xu, C. Hybrid Image Registration based on Configural Matching of Scale-Invariant Salient Region Features. IEEE Comput. Soc. Conf. Comput. Vis. Pattern Recognit. Work. 2005, 167.

- Mekky, N.E.; Kishk, S. Wavelet-Based Image Registration Techniques: A Study of Performance. Comput. Geosci. 2011, 11, 188–196.

- Suri, J.; Schwind, S.; Reinartz, P.; Uhl, P. Combining mutual information and scale invariant feature transform for fast and robust multisensor SAR image registration. Am. Soc. Photogramm. Remote Sens. Annu. Conf. 2009, 2, 795–806.

- Feng, R.; Du, Q.; Li, X.; Shen, H. Robust registration for remote sensing images by combining and localizing feature- and area-based methods. ISPRS J. Photogramm. Remote Sens. 2019, 151, 15–26.

- Zheng, Y.; Zheng, P. Image Matching Based on Harris-Affine Detectors and Translation Parameter Estimation by Phase Correlation. In Proceedings of the 2019 IEEE 4th International Conference on Signal and Image Processing (ICSIP), Wuxi, China, 19–21 July 2019; pp. 106–111.

- Leprince, S.; Barbot, S.; Ayoub, F.; Avouac, J.-P. Automatic and Precise Orthorectification, Coregistration, and Subpixel Correlation of Satellite Images, Application to Ground Deformation Measurements. IEEE Trans. Geosci. Remote Sens. 2007, 45, 1529–1558.

- Hrazdíra, Z.; Druckmüller, M.; Habbal, S. Iterative Phase Correlation Algorithm for High-precision Subpixel Image Registration. Astrophys. J. Suppl. Ser. 2020, 247, 8.

- Li, J.; Ma, Q. A Fast Subpixel Registration Algorithm Based on Single-Step DFT Combined with Phase Correlation Constraint in Multimodality Brain Image. Comput. Math. Methods Med. 2020, 2020, 1–10.

- Foroosh, H.; Zerubia, J.B.; Berthod, M. Extension of phase correlation to subpixel registration. IEEE Trans. Image Process. 2002, 11, 188–200.

- Ma, N.; Sun, P.-F.; Men, Y.-B.; Men, C.-G.; Li, X. A Subpixel Matching Method for Stereovision of Narrow Baseline Remotely Sensed Imagery. Math. Probl. Eng. 2017, 2017, 7901692.

- Ren, J.; Jiang, J.; Vlachos, T. High-Accuracy Sub-Pixel Motion Estimation From Noisy Images in Fourier Domain. IEEE Trans. Image Process. 2009, 19, 1379–1384.

- Argyriou, V.; Vlachos, T. On the estimation of subpixel motion using phase correlation. J. Electron. Imaging 2007, 16.

- Alba, A.; Gomez, J.-F.V.; Arce-Santana, E.R.; Aguilar-Ponce, R.M. Phase correlation with sub-pixel accuracy: A comparative study in 1D and 2D. Comput. Vis. Image Underst. 2015, 137, 76–87.

- Guizar-Sicairos, M.; Thurman, S.T.; Fienup, J.R. Efficient subpixel image registration algorithms. Opt. Lett. 2008, 33, 156–158.

- Foroosh, H.; Balci, M. Sub-pixel registration and estimation of local shifts directly in the fourier domain. In Proceedings of the 2004 International Conference on Image Processing, Singapore, 24–27 October 2004; pp. 1915–1918.

- Hoge, W.S. A subspace identification extension to the phase correlation method. IEEE Trans. Med. Imaging 2003, 22, 277–280.

- Balci, M.; Foroosh, H. Subpixel Registration Directly from the Phase Difference. EURASIP J. Adv. Signal Process. 2006, 2006, 060796.

- Van Puymbroeck, N.; Michel, R.; Binet, R.; Avouac, J.-P.; Taboury, J. Measuring earthquakes from optical satellite images. Appl. Opt. 2000, 39, 3486–3494.

- Hoge, W.S.; Westin, C.-F. Identification of translational displacements between N-dimensional data sets using the high-order SVD and phase correlation. IEEE Trans. Image Process. 2005, 14, 884–889.

- Stone, H.S.; Orchard, M.T.; Chang, E.; Martucci, S.A. A Fast Direct Fourier-Based Algorithm for Sub-pixel Registration of Images. IEEE Trans. Geosci. Remote Sens. 2011, 39, 2235–2243.

- Alba, A.; Aguilar-Ponce, R.M.; Vigueras, J.F. Phase correlation based image alignment with sub-pixel accuracy. Adv. Artif. 2013, 7629, 71–182.

- Rondao, D.; Aouf, N.; Richardson, M.A.; Dubois-Matra, O. Benchmarking of local feature detectors and descriptors for multispectral relative navigation in space. Acta Astronaut. 2020, 172, 100–122.

- Luo, T.; Shi, Z.; Wang, P. Robust and Efficient Corner Detector Using Non-Corners Exclusion. Appl. Sci. 2020, 10, 443.

- Behrens, A.; Hendrik, R. Analysis of Feature Point Distributions for Fast Image Mosaicking. Acta Polytech. 2010, 50, 12–18.

- Nelder, J.A.; Mead, R. A Simplex Method for Function Minimization. Comput. J. 1965, 7, 308–313.

- Jiang, X.; Ma, J.; Jiang, J.; Guo, X. Robust Feature Matching Using Spatial Clustering with Heavy Outliers. IEEE Trans. Image Process. 2019, 29, 736–746.

- Misra, I.; Moorthi, S.M.; Dhar, D.; Ramakrishnan, R. An automatic satellite image registration technique based on Harris corner detection and Random Sample Consensus (RANSAC) outlier rejection model. In Proceedings of the 2012 1st International Conference on Recent Advances in Information Technology (RAIT), Dhanbad, India, 15–17 March 2012; Volume 2, pp. 68–73.

- Fischler, M.A.; Bolles, R.C. Random sample consensus. Commun. ACM 1981, 24, 381–395.

- Inglada, J.; Giros, A. On the possibility of automatic multisensor image registration. IEEE Trans. Geosci. Remote Sens. 2004, 42, 2104–2120.

- Arévalo, V.; González, J. An experimental evaluation of non-rigid registration techniques on Quickbird satellite imagery. Int. J. Remote Sens. 2008, 29, 513–527.

- Fonseca, L.M.G.; Manjunath, B.S. Registration Techniques for Multisensor Remotely Sensed Imagery. Photogramm. Eng. Remote Sens. 1996, 62, 1049–1056.

- Goshtasby, A. Registration of images with geometric distortions. IEEE Trans. Geosci. Remote Sens. 1988, 26, 60–64.

- Goshtasby, A. Image Registration: Principles, Tools and Methods; Springer: London, UK, 2012.

- Liew, L.H.; Lee, B.Y.; Wang, Y.C.; Cheah, W.S. Rectification of aerial images using piecewise linear transformation. IOP Conf. Series Earth Environ. Sci. 2014, 18, 12009.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).