Augmented Virtuality Using Touch-Sensitive 3D-Printed Objects

Abstract

1. Introduction

2. Related Works

2.1. Real World Mapping in VR

2.2. Touch Sensing

2.3. 3D Printing and Cultural Heritage

3. Materials and Methods

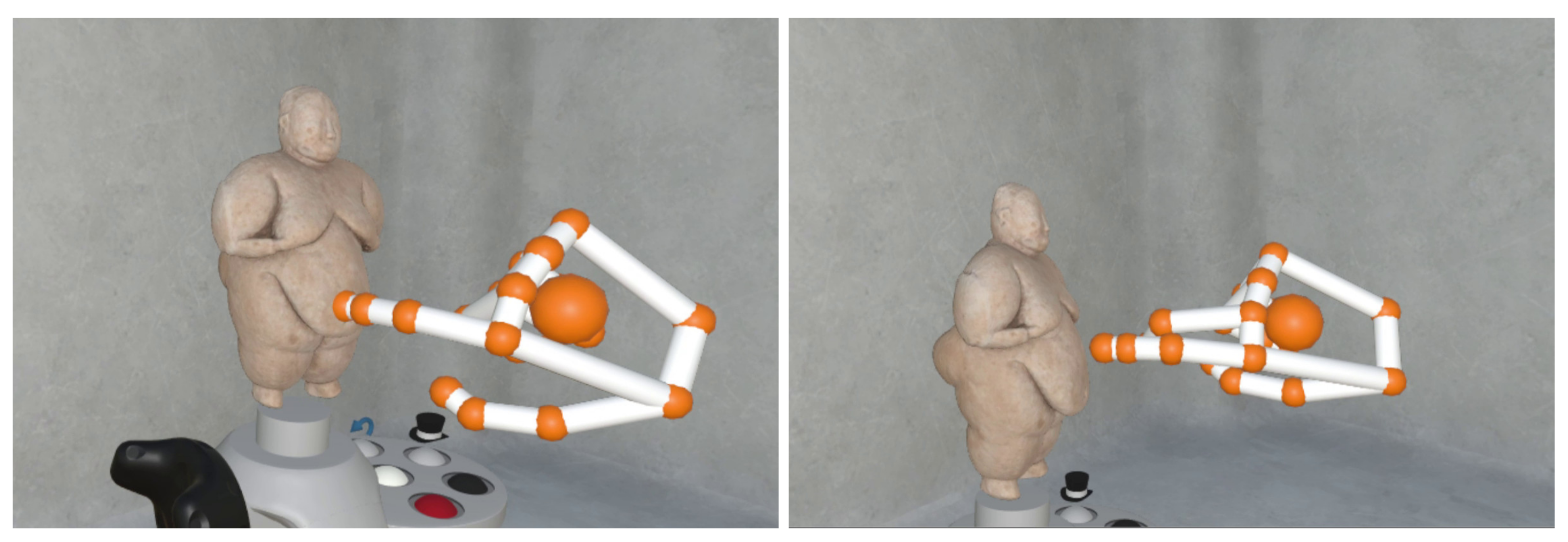

- to enhance the visual appearance of a low-cost physical copy of an artefact by using a VR device, i.e., a head-mounted display (HMD), to virtually overlay the faithful appearance of the original object on it;

- to enhance the emotional impact on the user by letting them hold and manipulate the physical replica while in the virtual environment, taking advantage of the touch feedback;

- to enhance the user’s immersion and engagement, allowing the virtual personalization of the replica by changing its virtual appearance when touching the surface, using a physical personalization palette.

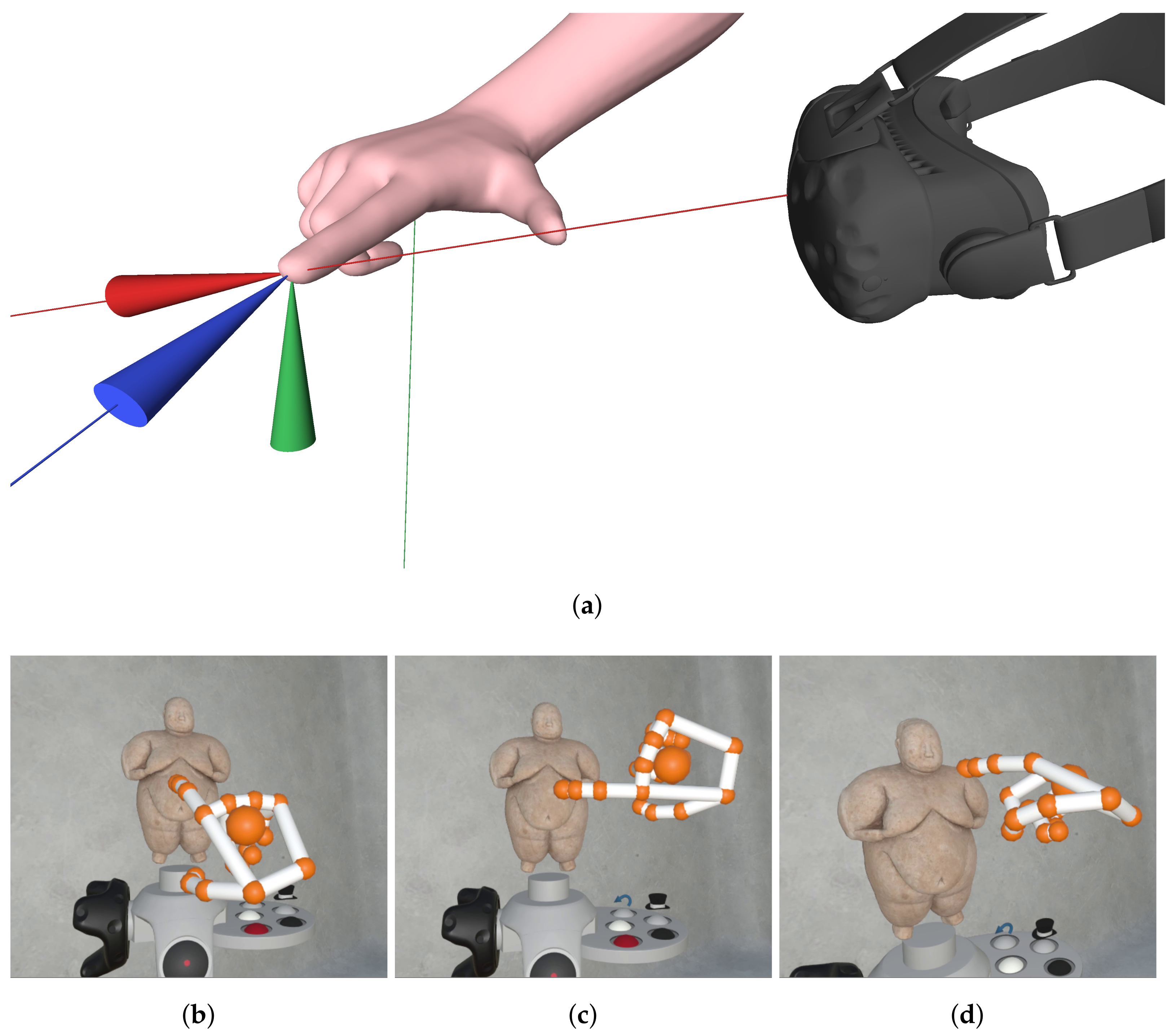

- to compute the position and orientation of the physical object in the virtual environment using a robust tracking solution to guarantee an accurate and precise overlay of the virtual 3D model of the original object over the replica;

- to show the user’s hands in the virtual environment, tracking the movement of each part of both hands to create virtual models that are as realistic as possible;

- to detect when and where the user touches the replica to modify the virtual model at the right position and time;

- to satisfy all the previous requirements in real time to guarantee a high-quality user experience.
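The overlay and touch-localisation requirements above can be illustrated with a minimal sketch. It assumes the tracking device reports a rigid pose as a 4x4 homogeneous matrix and that the replica sits at a fixed, known offset from the tracker; the helper names (`mat_mul`, `rigid_inverse`, `closest_vertex`), the offset, and the sample coordinates are hypothetical and are not taken from the described system.

```python
from typing import List, Sequence

Matrix = List[List[float]]


def mat_mul(a: Matrix, b: Matrix) -> Matrix:
    """Compose two 4x4 homogeneous transforms (apply b first, then a)."""
    return [[sum(a[i][k] * b[k][j] for k in range(4)) for j in range(4)]
            for i in range(4)]


def translation(x: float, y: float, z: float) -> Matrix:
    """Build a pure-translation transform."""
    return [[1.0, 0.0, 0.0, x],
            [0.0, 1.0, 0.0, y],
            [0.0, 0.0, 1.0, z],
            [0.0, 0.0, 0.0, 1.0]]


def rigid_inverse(m: Matrix) -> Matrix:
    """Invert a rigid transform [R | t] as [R^T | -R^T t]."""
    rt = [[m[j][i] for j in range(3)] for i in range(3)]
    t = [m[i][3] for i in range(3)]
    inv_t = [-sum(rt[i][k] * t[k] for k in range(3)) for i in range(3)]
    return [rt[0] + [inv_t[0]],
            rt[1] + [inv_t[1]],
            rt[2] + [inv_t[2]],
            [0.0, 0.0, 0.0, 1.0]]


def transform_point(m: Matrix, p: Sequence[float]) -> List[float]:
    """Apply a 4x4 transform to a 3D point."""
    return [sum(m[i][k] * p[k] for k in range(3)) + m[i][3] for i in range(3)]


def closest_vertex(vertices: Sequence[Sequence[float]], p: Sequence[float]) -> int:
    """Return the index of the mesh vertex nearest to p (brute force)."""
    return min(range(len(vertices)),
               key=lambda i: sum((vertices[i][k] - p[k]) ** 2 for k in range(3)))


# Hypothetical tracker pose: 90 degree rotation about z, translated to (1, 2, 0).
tracker_pose = [[0.0, -1.0, 0.0, 1.0],
                [1.0, 0.0, 0.0, 2.0],
                [0.0, 0.0, 1.0, 0.0],
                [0.0, 0.0, 0.0, 1.0]]
# Hypothetical fixed offset of the replica on its support, in the tracker frame.
replica_offset = translation(0.0, 0.0, 0.1)

# Pose of the virtual model in world space: tracker pose composed with the offset.
model_pose = mat_mul(tracker_pose, replica_offset)

# A tracked fingertip in world space, mapped into the model's local frame
# so that it can be compared with the mesh vertices.
fingertip_world = (1.0, 2.05, 0.1)
fingertip_local = transform_point(rigid_inverse(model_pose), fingertip_world)

mesh_vertices = [(0.0, 0.0, 0.0), (0.05, 0.0, 0.0), (0.0, 0.05, 0.0)]
print("touched vertex index:", closest_vertex(mesh_vertices, fingertip_local))
```

A brute-force nearest-vertex search keeps the sketch short; a real-time system working on a dense mesh would more plausibly use a spatial index, but that choice is outside what the text specifies.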

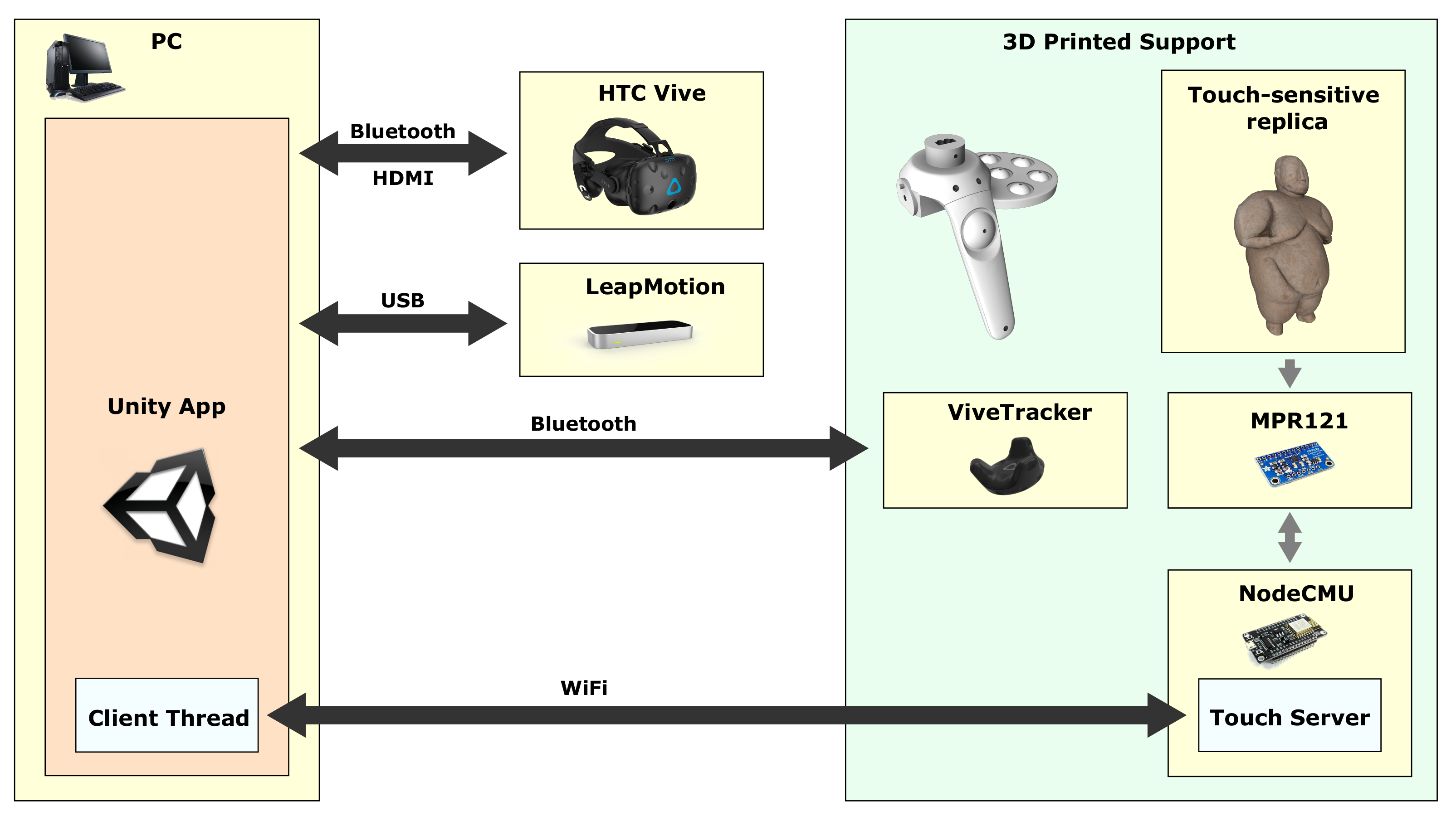

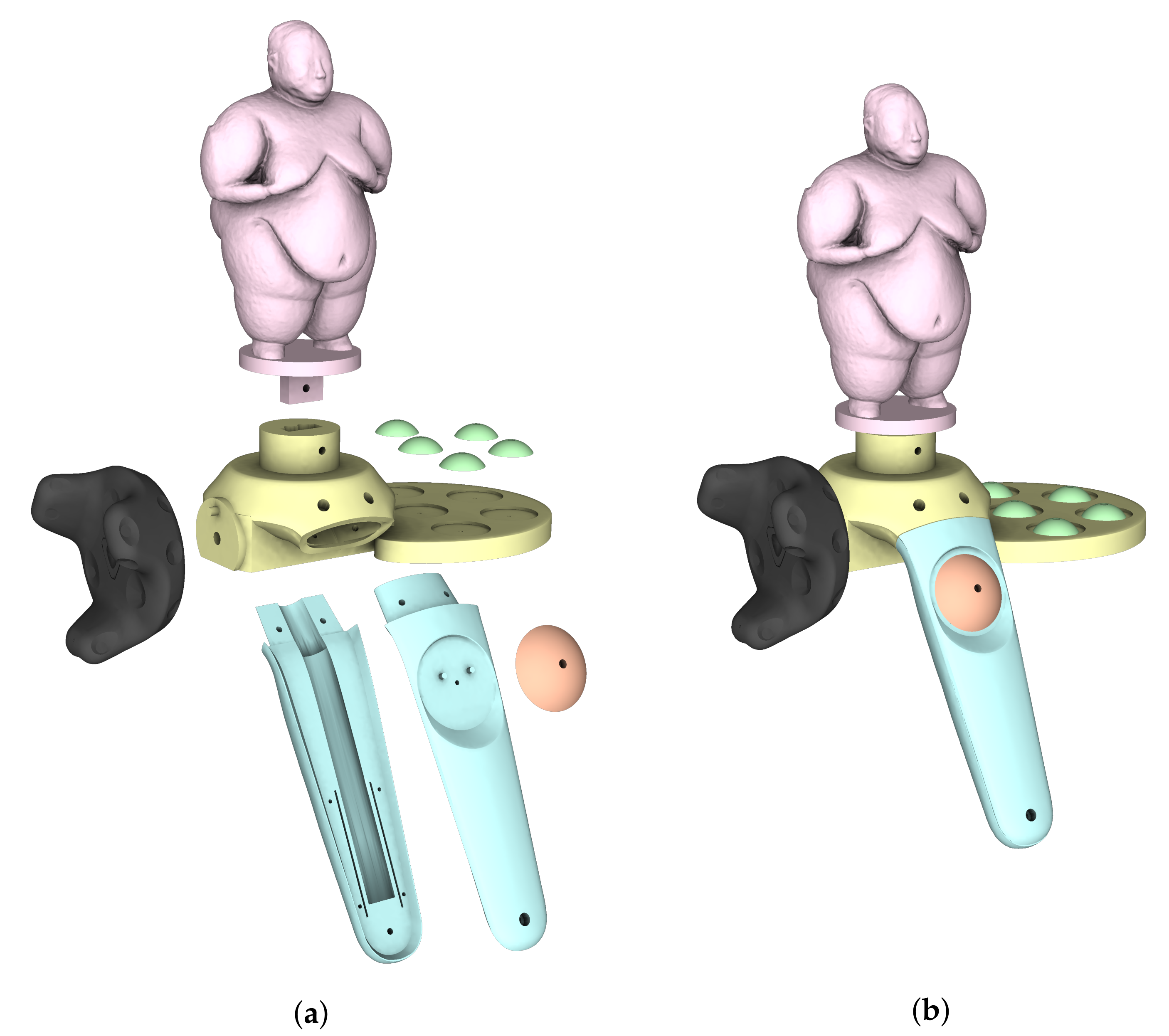

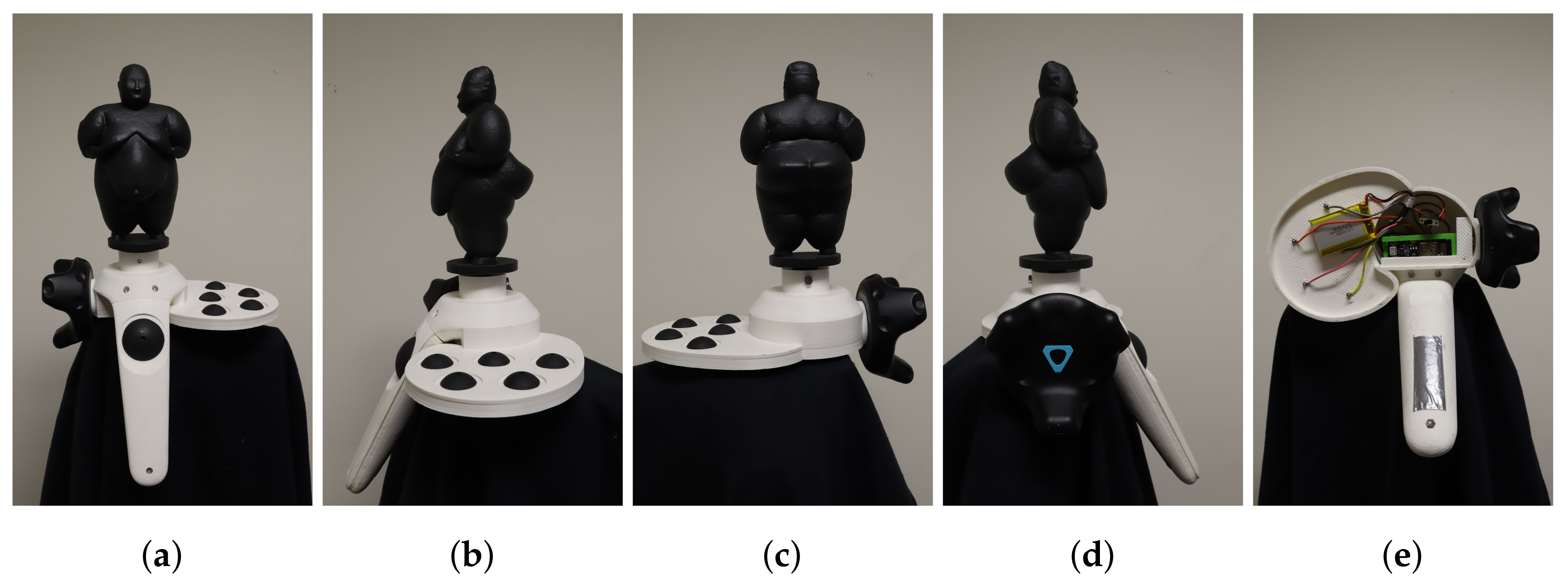

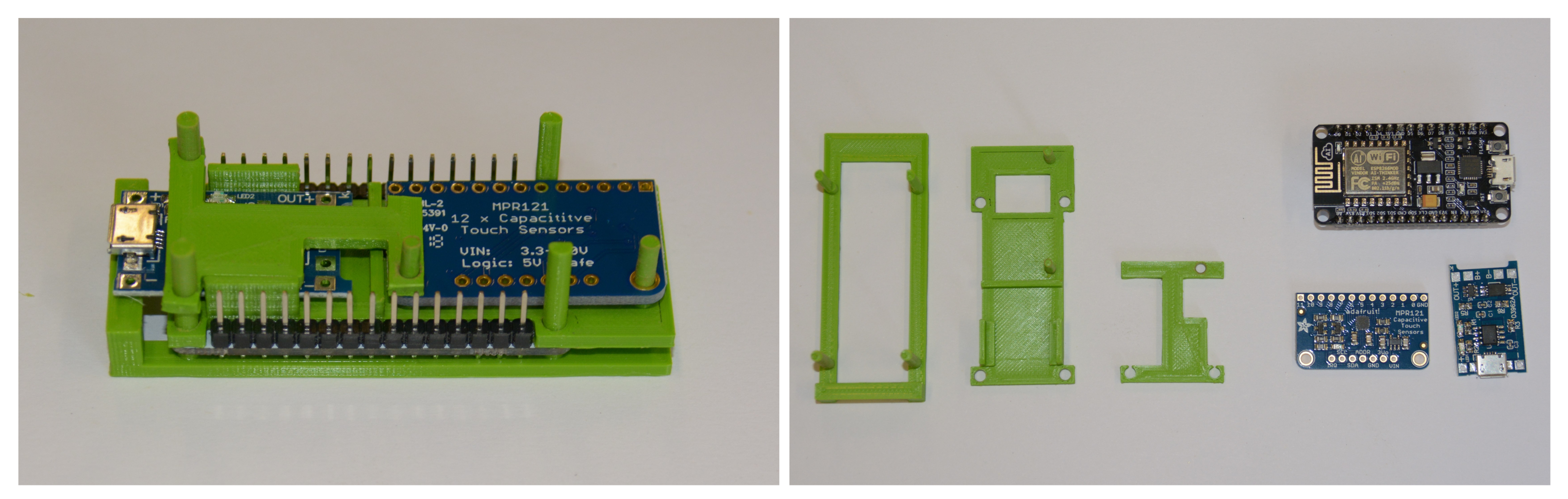

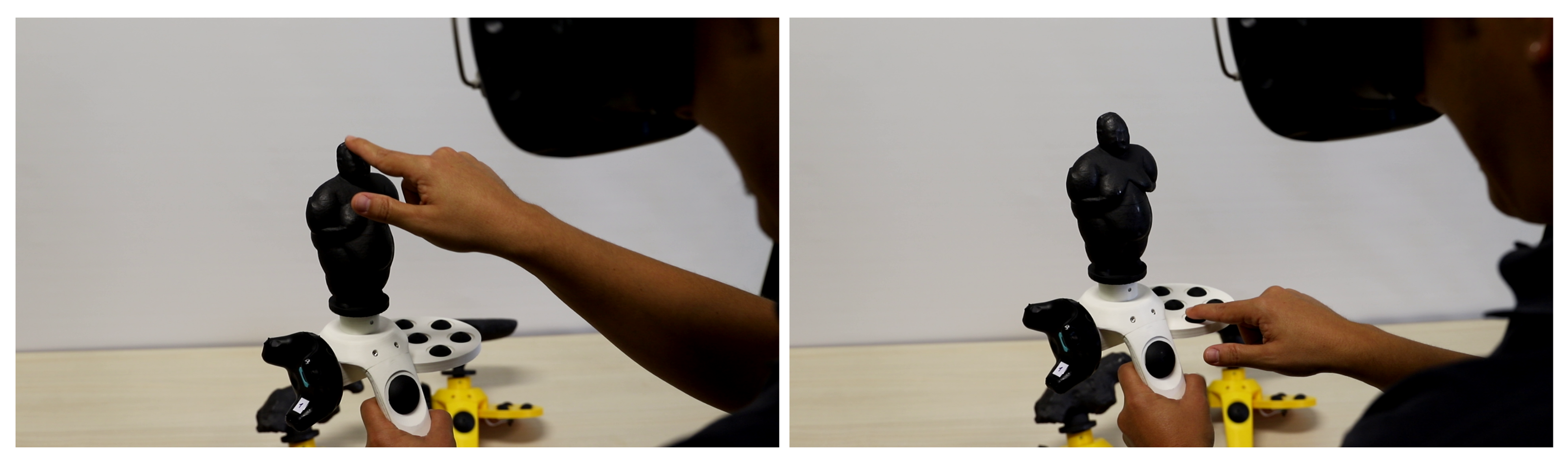

3.1. Hardware Setup

- the HMD for the visual VR experience and the tracking of the 3D-printed replica in the VR environment (in our case, the HTC Vive [59]);

- the Leap Motion [60], an active hand-tracking device;

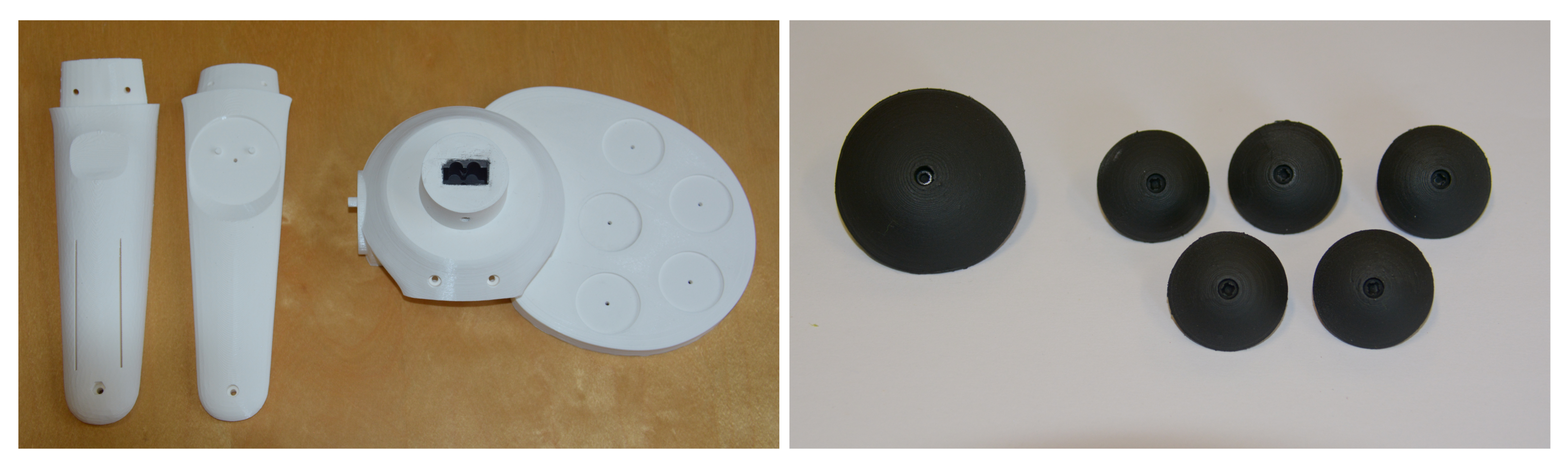

- a 3D-printed support to mount the physical replica on the HMD tracking device, the Vive Tracker [61];

- a physical palette attached to the 3D-printed support that lets the user select the type of personalization to apply to the surface of the virtual object;

- an electronic controller to detect when the user touches the replica and the personalization palette.
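As a rough illustration of how the electronic controller's output could drive the interaction, the sketch below turns successive touch-status readings into touch and release events. It assumes the controller reports one bit per sensing electrode, as 12-channel capacitive controllers such as the MPR121 listed in the references do; the electrode-to-role wiring in `ELECTRODE_ROLES`, the palette entry names, and the `InteractionState` class are hypothetical.

```python
from typing import Dict, List, Optional, Tuple

NUM_ELECTRODES = 12  # one status bit per electrode (assumed 12-channel controller)

# Hypothetical wiring: electrode 0 is the replica surface, the others are palette pads.
ELECTRODE_ROLES: Dict[int, str] = {
    0: "replica",
    1: "palette:colour_a",
    2: "palette:colour_b",
    3: "palette:eraser",
}

Event = Tuple[str, int]  # ("touch" | "release", electrode index)


def touch_events(previous: int, current: int) -> List[Event]:
    """Compare two status bitmasks and list the electrodes that changed state."""
    changed = (previous ^ current) & ((1 << NUM_ELECTRODES) - 1)
    events: List[Event] = []
    for electrode in range(NUM_ELECTRODES):
        bit = 1 << electrode
        if changed & bit:
            events.append(("touch" if current & bit else "release", electrode))
    return events


class InteractionState:
    """Tracks the selected palette entry and whether the replica is being touched."""

    def __init__(self) -> None:
        self.selected: Optional[str] = None
        self.painting: bool = False

    def apply(self, events: List[Event]) -> None:
        for kind, electrode in events:
            role = ELECTRODE_ROLES.get(electrode)
            if role is None:
                continue  # electrode not wired in this hypothetical setup
            if role == "replica":
                # Personalization is active only while the replica is touched.
                self.painting = kind == "touch"
            elif kind == "touch":
                # Touching a palette pad selects that personalization.
                self.selected = role.split(":", 1)[1]


state = InteractionState()
state.apply(touch_events(0b0000, 0b0101))  # replica and one palette pad touched
print(state.selected, state.painting)
```

Working on state changes rather than raw readings means each touch or release is handled once, however often the controller is polled.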

3.1.1. Physical Object Tracking

3.1.2. Hand Tracking

3.1.3. Touch Detection

3.2. Software Component

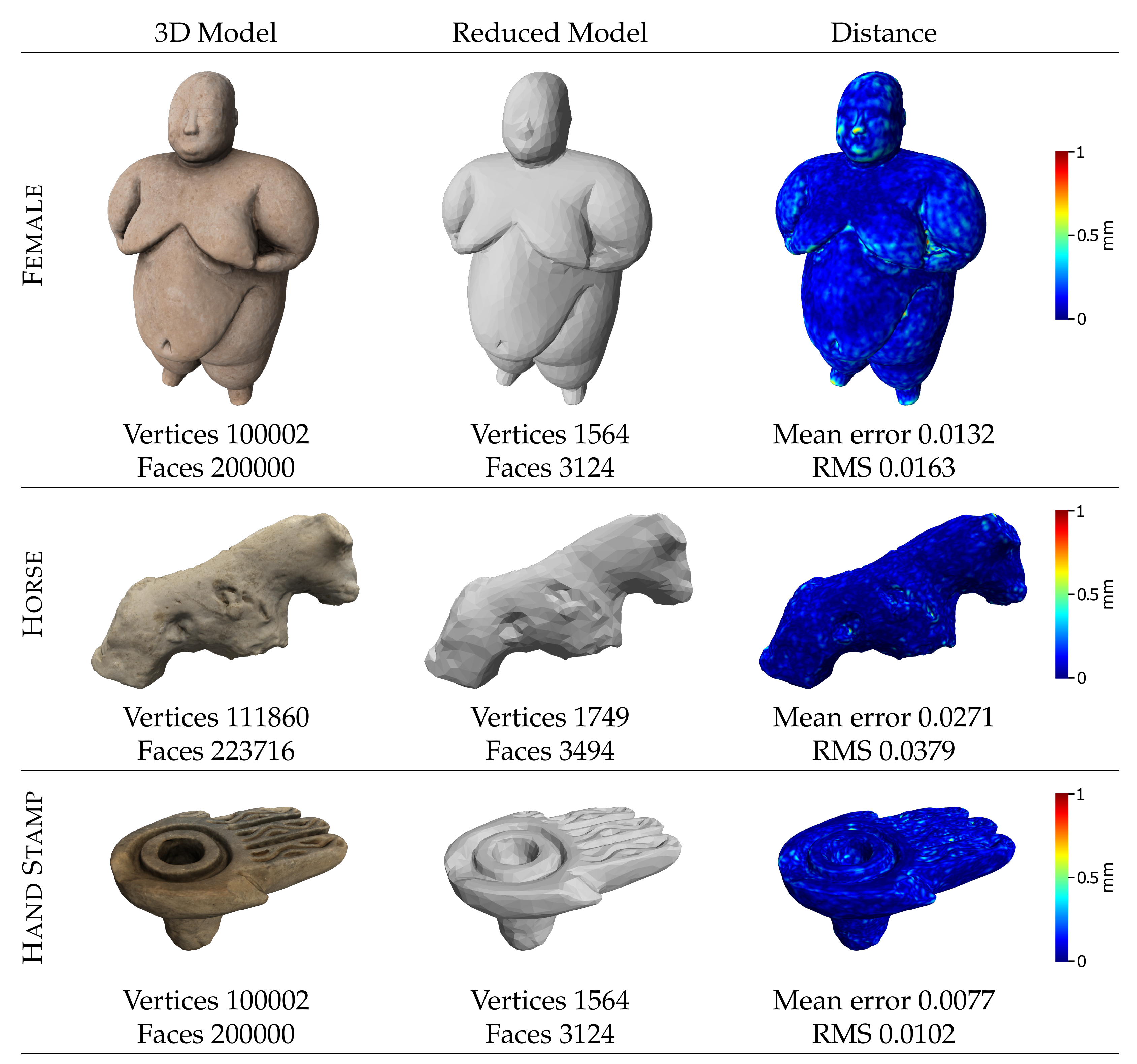

3.3. 3D Model Processing

4. Results

5. Discussion

6. Conclusions

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Heilig, M.L. Stereoscopic-Television Apparatus for Individual Use. U.S. Patent 2,955,156, 4 October 1960.

- Sutherland, I.E. A Head-Mounted Three Dimensional Display. In Proceedings of the Fall Joint Computer Conference (AFIPS ’68), San Francisco, CA, USA, 9–11 December 1968; ACM: New York, NY, USA, 1968; pp. 757–764.

- Hoffman, H.G. Physically touching virtual objects using tactile augmentation enhances the realism of virtual environments. In Proceedings of the IEEE 1998 Virtual Reality Annual International Symposium, Atlanta, GA, USA, 14–18 March 1998; pp. 59–63.

- Shapira, L.; Amores, J.; Benavides, X. TactileVR: Integrating Physical Toys into Learn and Play Virtual Reality Experiences. In Proceedings of the 2016 IEEE International Symposium on Mixed and Augmented Reality (ISMAR), Merida, Mexico, 19–23 September 2016; pp. 100–106.

- Yoshimoto, R.; Sasakura, M. Using Real Objects for Interaction in Virtual Reality. In Proceedings of the 2017 21st International Conference Information Visualisation (IV), London, UK, 11–14 July 2017; pp. 440–443.

- Milgram, P.; Kishino, F. A taxonomy of mixed reality visual displays. IEICE Trans. Inf. Syst. 1994, 77, 1321–1329.

- Liritzis, I.; Al-Otaibi, F.; Volonakis, P.; Drivaliari, A. Digital technologies and trends in cultural heritage. Mediterr. Archaeol. Archaeom. 2015, 15, 313–332.

- Scopigno, R.; Cignoni, P.; Pietroni, N.; Callieri, M.; Dellepiane, M. Digital Fabrication Techniques for Cultural Heritage: A Survey. Comput. Graph. Forum 2017, 36, 6–21.

- Gallace, A.; Spence, C. In Touch with the Future: The Sense of Touch from Cognitive Neuroscience to Virtual Reality; OUP: Oxford, UK, 2014.

- Hoffman, H.; Groen, J.; Rousseau, S.; Hollander, A.; Winn, W.; Wells, M.; Furness, T., III. Tactile augmentation: Enhancing presence in inclusive VR with tactile feedback from real objects. In Proceedings of the Meeting of the American Psychological Sciences, San Francisco, CA, USA, 29 June–2 July 1996.

- Hoffman, H.G.; Hollander, A.; Schroder, K.; Rousseau, S.; Furness, T. Physically touching and tasting virtual objects enhances the realism of virtual experiences. Virtual Real. 1998, 3, 226–234.

- Pan, M.K.X.J.; Niemeyer, G. Catching a real ball in virtual reality. In Proceedings of the 2017 IEEE Virtual Reality (VR), Los Angeles, CA, USA, 18–22 March 2017; pp. 269–270.

- Bozgeyikli, L.; Bozgeyikli, E. Tangiball: Dynamic Embodied Tangible Interaction with a Ball in Virtual Reality. In Proceedings of the Companion Publication of the 2019 on Designing Interactive Systems Conference 2019 Companion (DIS ’19 Companion), San Diego, CA, USA, 24–28 June 2019; Association for Computing Machinery: New York, NY, USA, 2019; pp. 135–140.

- Neges, M.; Adwernat, S.; Abramovici, M. Augmented Virtuality for maintenance training simulation under various stress conditions. Procedia Manuf. 2018, 19, 171–178.

- Taylor, C.; Mullany, C.; McNicholas, R.; Cosker, D. VR Props: An End-to-End Pipeline for Transporting Real Objects Into Virtual and Augmented Environments. In Proceedings of the 2019 IEEE International Symposium on Mixed and Augmented Reality (ISMAR), Beijing, China, 14–18 October 2019; IEEE Computer Society: Los Alamitos, CA, USA, 2019; pp. 83–92.

- Liritzis, I.; Volonakis, P. Cyber-Archaeometry: Novel Research and Learning Subject Overview. Educ. Sci. 2021, 11, 86.

- Simeone, A.L.; Velloso, E.; Gellersen, H. Substitutional Reality: Using the Physical Environment to Design Virtual Reality Experiences. In Proceedings of the 33rd Annual ACM Conference on Human Factors in Computing Systems (CHI ’15), Seoul, Korea, 18–23 April 2015; Association for Computing Machinery: New York, NY, USA, 2015; pp. 3307–3316.

- Azmandian, M.; Hancock, M.; Benko, H.; Ofek, E.; Wilson, A.D. Haptic Retargeting: Dynamic Repurposing of Passive Haptics for Enhanced Virtual Reality Experiences. In Proceedings of the 2016 CHI Conference on Human Factors in Computing Systems (CHI ’16), San Jose, CA, USA, 7–12 May 2016; Association for Computing Machinery: New York, NY, USA, 2016; pp. 1968–1979.

- Cheng, L.P.; Ofek, E.; Holz, C.; Benko, H.; Wilson, A.D. Sparse Haptic Proxy: Touch Feedback in Virtual Environments Using a General Passive Prop. In Proceedings of the 2017 CHI Conference on Human Factors in Computing Systems (CHI ’17), Denver, CO, USA, 6–11 May 2017; Association for Computing Machinery: New York, NY, USA, 2017; pp. 3718–3728.

- Huang, H.Y.; Ning, C.W.; Wang, P.Y.; Cheng, J.H.; Cheng, L.P. Haptic-Go-Round: A Surrounding Platform for Encounter-Type Haptics in Virtual Reality Experiences. In Proceedings of the 2020 CHI Conference on Human Factors in Computing Systems (CHI ’20), Honolulu, HI, USA, 25–30 April 2020; Association for Computing Machinery: New York, NY, USA, 2020; pp. 1–10.

- McNeely, W.A. Robotic graphics: A new approach to force feedback for virtual reality. In Proceedings of the IEEE Virtual Reality Annual International Symposium, Seattle, WA, USA, 18–22 September 1993; pp. 336–341.

- Siu, A.F.; Gonzalez, E.J.; Yuan, S.; Ginsberg, J.B.; Follmer, S. ShapeShift: 2D Spatial Manipulation and Self-Actuation of Tabletop Shape Displays for Tangible and Haptic Interaction. In Proceedings of the 2018 CHI Conference on Human Factors in Computing Systems (CHI ’18), Montreal, QC, Canada, 21–26 April 2018; Association for Computing Machinery: New York, NY, USA, 2018; pp. 1–13.

- Hoppe, M.; Knierim, P.; Kosch, T.; Funk, M.; Futami, L.; Schneegass, S.; Henze, N.; Schmidt, A.; Machulla, T. VRHapticDrones: Providing Haptics in Virtual Reality through Quadcopters. In Proceedings of the 17th International Conference on Mobile and Ubiquitous Multimedia (MUM 2018), Cairo, Egypt, 25–28 November 2018; Association for Computing Machinery: New York, NY, USA, 2018; pp. 7–18.

- Abtahi, P.; Landry, B.; Yang, J.J.; Pavone, M.; Follmer, S.; Landay, J.A. Beyond the Force: Using Quadcopters to Appropriate Objects and the Environment for Haptics in Virtual Reality. In Proceedings of the 2019 CHI Conference on Human Factors in Computing Systems (CHI ’19), Glasgow, UK, 4–9 May 2019; Association for Computing Machinery: New York, NY, USA, 2019; pp. 1–13.

- Yang, J.J.; Holz, C.; Ofek, E.; Wilson, A.D. DreamWalker: Substituting Real-World Walking Experiences with a Virtual Reality. In Proceedings of the 32nd Annual ACM Symposium on User Interface Software and Technology (UIST ’19), New Orleans, LA, USA, 20–23 October 2019; Association for Computing Machinery: New York, NY, USA, 2019; pp. 1093–1107.

- Sun, Q.; Wei, L.Y.; Kaufman, A. Mapping Virtual and Physical Reality. ACM Trans. Graph. 2016, 35.

- Dong, Z.C.; Fu, X.M.; Zhang, C.; Wu, K.; Liu, L. Smooth Assembled Mappings for Large-Scale Real Walking. ACM Trans. Graph. 2017, 36.

- Sun, Q.; Patney, A.; Wei, L.Y.; Shapira, O.; Lu, J.; Asente, P.; Zhu, S.; Mcguire, M.; Luebke, D.; Kaufman, A. Towards Virtual Reality Infinite Walking: Dynamic Saccadic Redirection. ACM Trans. Graph. 2018, 37.

- Langbehn, E.; Steinicke, F.; Lappe, M.; Welch, G.F.; Bruder, G. In the Blink of an Eye: Leveraging Blink-Induced Suppression for Imperceptible Position and Orientation Redirection in Virtual Reality. ACM Trans. Graph. 2018, 37.

- Hartmann, J.; Holz, C.; Ofek, E.; Wilson, A.D. RealityCheck: Blending Virtual Environments with Situated Physical Reality. In Proceedings of the 2019 CHI Conference on Human Factors in Computing Systems (CHI ’19), Glasgow, UK, 4–9 May 2019; Association for Computing Machinery: New York, NY, USA, 2019; pp. 1–12.

- Nilsson, N.C.; Serafin, S.; Steinicke, F.; Nordahl, R. Natural Walking in Virtual Reality: A Review. Comput. Entertain. 2018, 16.

- Murakami, T.; Person, T.; Fernando, C.L.; Minamizawa, K. Altered Touch: Miniature Haptic Display with Force, Thermal and Tactile Feedback for Augmented Haptics. In Proceedings of the ACM SIGGRAPH 2017 Emerging Technologies (SIGGRAPH ’17), Brisbane, Australia, 17–20 November 2017; Association for Computing Machinery: New York, NY, USA, 2017.

- Gabardi, M.; Leonardis, D.; Solazzi, M.; Frisoli, A. Development of a miniaturized thermal module designed for integration in a wearable haptic device. In Proceedings of the 2018 IEEE Haptics Symposium (HAPTICS), San Francisco, CA, USA, 25–28 March 2018; pp. 100–105.

- Jang, S.; Kim, L.H.; Tanner, K.; Ishii, H.; Follmer, S. Haptic Edge Display for Mobile Tactile Interaction. In Proceedings of the 2016 CHI Conference on Human Factors in Computing Systems (CHI ’16), San Jose, CA, USA, 7–12 May 2016; Association for Computing Machinery: New York, NY, USA, 2016; pp. 3706–3716.

- Stanley, A.A.; Gwilliam, J.C.; Okamura, A.M. Haptic jamming: A deformable geometry, variable stiffness tactile display using pneumatics and particle jamming. In Proceedings of the 2013 World Haptics Conference (WHC), Daejeon, Korea, 14–17 April 2013; pp. 25–30.

- Carter, T.; Seah, S.A.; Long, B.; Drinkwater, B.; Subramanian, S. UltraHaptics: Multi-Point Mid-Air Haptic Feedback for Touch Surfaces. In Proceedings of the 26th Annual ACM Symposium on User Interface Software and Technology (UIST ’13), St. Andrews, UK, 8–11 October 2013; Association for Computing Machinery: New York, NY, USA, 2013; pp. 505–514.

- Sodhi, R.; Poupyrev, I.; Glisson, M.; Israr, A. AIREAL: Interactive Tactile Experiences in Free Air. ACM Trans. Graph. 2013, 32.

- Lee, H.; Cha, H.; Park, J.; Choi, S.; Kim, H.S.; Chung, S.C. LaserStroke: Mid-Air Tactile Experiences on Contours Using Indirect Laser Radiation. In Proceedings of the 29th Annual Symposium on User Interface Software and Technology (UIST ’16 Adjunct), Tokyo, Japan, 16–19 October 2016; Association for Computing Machinery: New York, NY, USA, 2016; pp. 73–74.

- Wang, D.; Ohnishi, K.; Xu, W. Multimodal Haptic Display for Virtual Reality: A Survey. IEEE Trans. Ind. Electron. 2020, 67, 610–623.

- Harrison, C.; Tan, D.; Morris, D. Skinput: Appropriating the Body as an Input Surface. In Proceedings of the SIGCHI Conference on Human Factors in Computing Systems (CHI ’10), Atlanta, GA, USA, 10–15 April 2010; Association for Computing Machinery: New York, NY, USA, 2010; pp. 453–462.

- Zhang, Y.; Yang, C.J.; Hudson, S.E.; Harrison, C.; Sample, A. Wall++: Room-Scale Interactive and Context-Aware Sensing. In Proceedings of the 2018 CHI Conference on Human Factors in Computing Systems (CHI ’18), Montreal, QC, Canada, 21–26 April 2018; Association for Computing Machinery: New York, NY, USA, 2018; pp. 1–15.

- Schmitz, M.; Khalilbeigi, M.; Balwierz, M.; Lissermann, R.; Mühlhäuser, M.; Steimle, J. Capricate: A Fabrication Pipeline to Design and 3D Print Capacitive Touch Sensors for Interactive Objects. In Proceedings of the 28th Annual ACM Symposium on User Interface Software and Technology (UIST ’15), Charlotte, NC, USA, 8–11 November 2015; Association for Computing Machinery: New York, NY, USA, 2015; pp. 253–258.

- Kao, H.L.C.; Holz, C.; Roseway, A.; Calvo, A.; Schmandt, C. DuoSkin: Rapidly Prototyping on-Skin User Interfaces Using Skin-Friendly Materials. In Proceedings of the 2016 ACM International Symposium on Wearable Computers (ISWC ’16), Heidelberg, Germany, 12–16 September 2016; Association for Computing Machinery: New York, NY, USA, 2016; pp. 16–23.

- Nittala, A.S.; Withana, A.; Pourjafarian, N.; Steimle, J. Multi-Touch Skin: A Thin and Flexible Multi-Touch Sensor for On-Skin Input. In Proceedings of the 2018 CHI Conference on Human Factors in Computing Systems (CHI ’18), Montreal, QC, Canada, 21–26 April 2018; Association for Computing Machinery: New York, NY, USA, 2018; pp. 1–12.

- Hagan, M.; Teodorescu, H. Intelligent clothes with a network of painted sensors. In Proceedings of the 2013 E-Health and Bioengineering Conference (EHB), Iasi, Romania, 21–23 November 2013; pp. 1–4.

- Poupyrev, I.; Gong, N.W.; Fukuhara, S.; Karagozler, M.E.; Schwesig, C.; Robinson, K.E. Project Jacquard: Interactive Digital Textiles at Scale. In Proceedings of the 2016 CHI Conference on Human Factors in Computing Systems (CHI ’16), San Jose, CA, USA, 7–12 May 2016; Association for Computing Machinery: New York, NY, USA, 2016; pp. 4216–4227.

- Weigel, M.; Lu, T.; Bailly, G.; Oulasvirta, A.; Majidi, C.; Steimle, J. ISkin: Flexible, Stretchable and Visually Customizable On-Body Touch Sensors for Mobile Computing. In Proceedings of the 33rd Annual ACM Conference on Human Factors in Computing Systems (CHI ’15), Seoul, Korea, 18–23 April 2015; Association for Computing Machinery: New York, NY, USA, 2015; pp. 2991–3000.

- Kawahara, Y.; Hodges, S.; Cook, B.S.; Zhang, C.; Abowd, G.D. Instant Inkjet Circuits: Lab-Based Inkjet Printing to Support Rapid Prototyping of UbiComp Devices. In Proceedings of the 2013 ACM International Joint Conference on Pervasive and Ubiquitous Computing (UbiComp ’13), Zurich, Switzerland, 8–12 September 2013; Association for Computing Machinery: New York, NY, USA, 2013; pp. 363–372.

- Harrison, C.; Benko, H.; Wilson, A.D. OmniTouch: Wearable Multitouch Interaction Everywhere. In Proceedings of the 24th Annual ACM Symposium on User Interface Software and Technology (UIST ’11), Santa Barbara, CA, USA, 16–19 October 2011; Association for Computing Machinery: New York, NY, USA, 2011; pp. 441–450.

- Harrison, C.; Schwarz, J.; Hudson, S.E. TapSense: Enhancing Finger Interaction on Touch Surfaces. In Proceedings of the 24th Annual ACM Symposium on User Interface Software and Technology (UIST ’11), Santa Barbara, CA, USA, 16–19 October 2011; Association for Computing Machinery: New York, NY, USA, 2011; pp. 627–636.

- Zimmerman, T.G.; Smith, J.R.; Paradiso, J.A.; Allport, D.; Gershenfeld, N. Applying Electric Field Sensing to Human-Computer Interfaces. In Proceedings of the SIGCHI Conference on Human Factors in Computing Systems (CHI ’95), Denver, CO, USA, 7–11 May 1995; pp. 280–287.

- Sato, M.; Poupyrev, I.; Harrison, C. Touché: Enhancing Touch Interaction on Humans, Screens, Liquids, and Everyday Objects. In Proceedings of the SIGCHI Conference on Human Factors in Computing Systems (CHI ’12), Austin, TX, USA, 5–10 May 2012; Association for Computing Machinery: New York, NY, USA, 2012; pp. 483–492.

- Wimmer, R.; Baudisch, P. Modular and Deformable Touch-Sensitive Surfaces Based on Time Domain Reflectometry. In Proceedings of the 24th Annual ACM Symposium on User Interface Software and Technology (UIST ’11), Santa Barbara, CA, USA, 16–19 October 2011; Association for Computing Machinery: New York, NY, USA, 2011; pp. 517–526.

- Zhang, Y.; Laput, G.; Harrison, C. Electrick: Low-Cost Touch Sensing Using Electric Field Tomography. In Proceedings of the 2017 CHI Conference on Human Factors in Computing Systems (CHI ’17), Denver, CO, USA, 6–11 May 2017; Association for Computing Machinery: New York, NY, USA, 2017; pp. 1–14.

- Grosse-Puppendahl, T.; Holz, C.; Cohn, G.; Wimmer, R.; Bechtold, O.; Hodges, S.; Reynolds, M.S.; Smith, J.R. Finding Common Ground: A Survey of Capacitive Sensing in Human-Computer Interaction. In Proceedings of the 2017 CHI Conference on Human Factors in Computing Systems (CHI ’17), Denver, CO, USA, 6–11 May 2017; Association for Computing Machinery: New York, NY, USA, 2017; pp. 3293–3315.

- Di Franco, P.D.G.; Camporesi, C.; Galeazzi, F.; Kallmann, M. 3d Printing and Immersive Visualization for Improved Perception of Ancient Artifacts. Presence Teleoper. Virtual Environ. 2015, 24, 243–264.

- Wilson, P.F.; Stott, J.; Warnett, J.M.; Attridge, A.; Smith, M.P.; Williams, M.A. Evaluation of Touchable 3D-Printed Replicas in Museums. Curator Mus. J. 2017, 60, 445–465.

- Balletti, C.; Ballarin, M. An Application of Integrated 3D Technologies for Replicas in Cultural Heritage. ISPRS Int. J. Geo Inf. 2019, 8, 285.

- HTC Vive Technical Specification. Available online: https://www.vive.com/eu/product/vive (accessed on 15 March 2021).

- Leap Motion Technical Specification. Available online: https://www.ultraleap.com/product/leap-motion-controller/ (accessed on 15 March 2021).

- Vive Tracker Technical Specification. Available online: https://dl.vive.com/Tracker/Guideline/HTC_Vive_Tracker(2018)_%20Developer+Guidelines_v1.0.pdf (accessed on 15 March 2021).

- Niehorster, D.C.; Li, L.; Lappe, M. The accuracy and precision of position and orientation tracking in the HTC vive virtual reality system for scientific research. i-Perception 2017, 8, 2041669517708205.

- Weichert, F.; Bachmann, D.; Rudak, B.; Fisseler, D. Analysis of the accuracy and robustness of the leap motion controller. Sensors 2013, 13, 6380–6393.

- NodeMCU Technical Specification. Available online: https://www.nodemcu.com/index_en.html (accessed on 15 March 2021).

- MPR121 Technical Specification. Available online: https://www.nxp.com/docs/en/data-sheet/MPR121.pdf (accessed on 15 March 2021).

- Unity. Available online: https://unity.com/ (accessed on 15 March 2021).

- Garland, M.; Heckbert, P.S. Surface Simplification Using Quadric Error Metrics. In Proceedings of the 24th Annual Conference on Computer Graphics and Interactive Techniques (SIGGRAPH ’97), Los Angeles, CA, USA, 3–8 August 1997; pp. 209–216.

- Agisoft Metashape. Available online: https://www.agisoft.com/ (accessed on 15 March 2021).

- Cignoni, P.; Callieri, M.; Corsini, M.; Dellepiane, M.; Ganovelli, F.; Ranzuglia, G. MeshLab: An Open-Source Mesh Processing Tool. In Proceedings of the Eurographics Italian Chapter Conference, Salerno, Italy, 2–4 July 2008; Scarano, V., Chiara, R.D., Erra, U., Eds.; The Eurographics Association: Aire-la-Ville, Switzerland, 2008.

- Katifori, A.; Roussou, M.; Perry, S.; Cignoni, P.; Malomo, L.; Palma, G.; Dretakis, G.; Vizcay, S. The EMOTIVE project—Emotive virtual cultural experiences through personalized storytelling. In Proceedings of the CEUR Workshop Proceedings, Hotel Plejsy, Slovakia, 21–25 September 2018; Volume 2235.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Palma, G.; Perry, S.; Cignoni, P. Augmented Virtuality Using Touch-Sensitive 3D-Printed Objects. Remote Sens. 2021, 13, 2186. https://doi.org/10.3390/rs13112186
