Feasibility Analyses of Real-Time Detection of Wildlife Using UAV-Derived Thermal and RGB Images

Abstract

1. Introduction

- (1) Reduce the animal detection time

- (2) Enable detection in more environments

- (3) Use thermal and RGB images acquired from the same thermal camera

2. Study Site and Data

2.1. Study Site

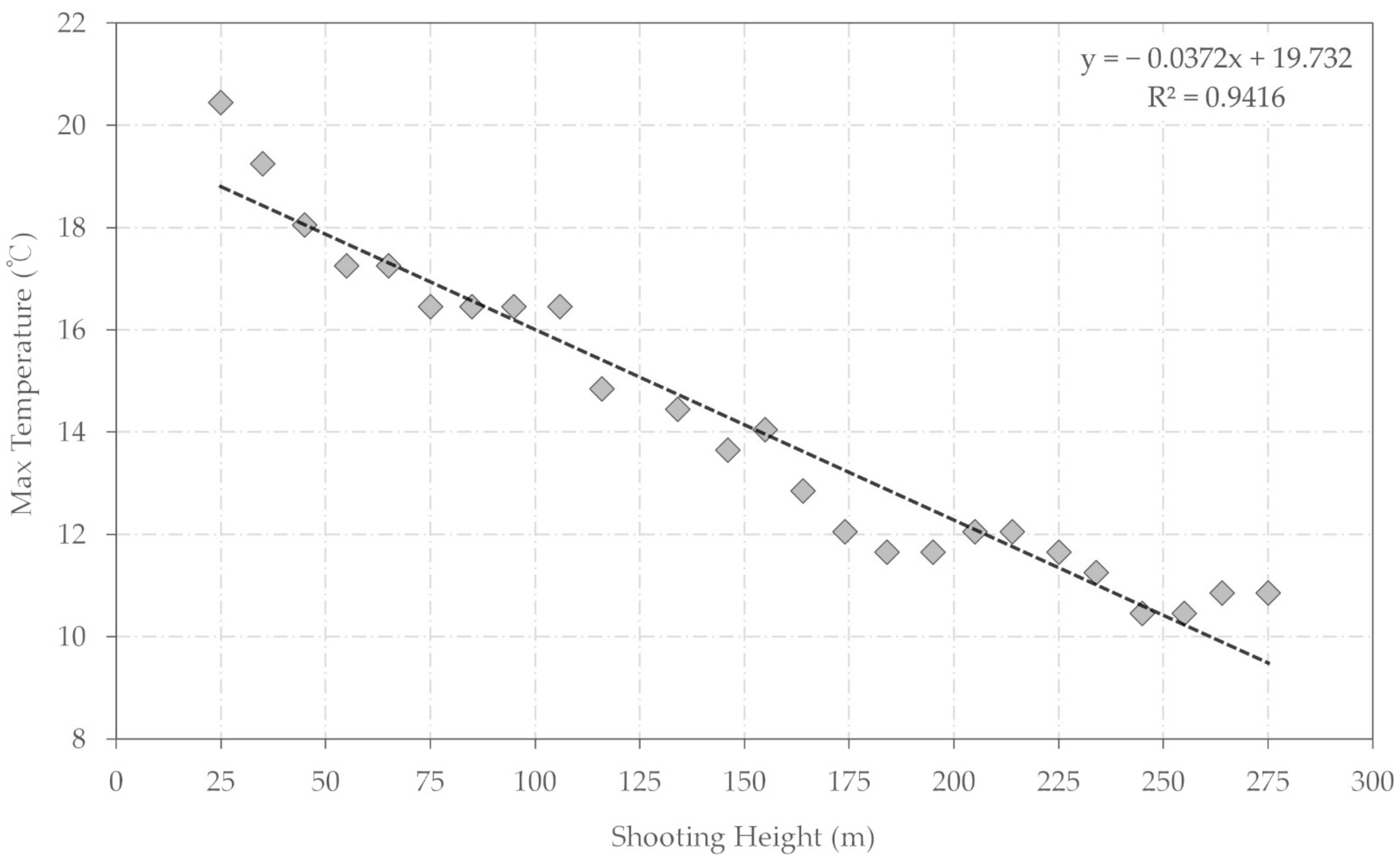

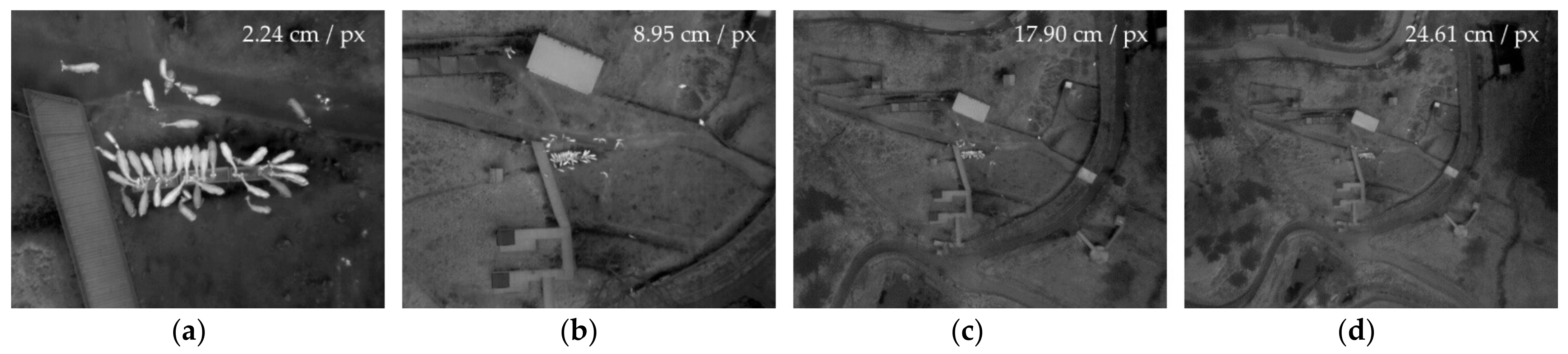

2.2. Data Acquisition

2.3. Data Preprocessing

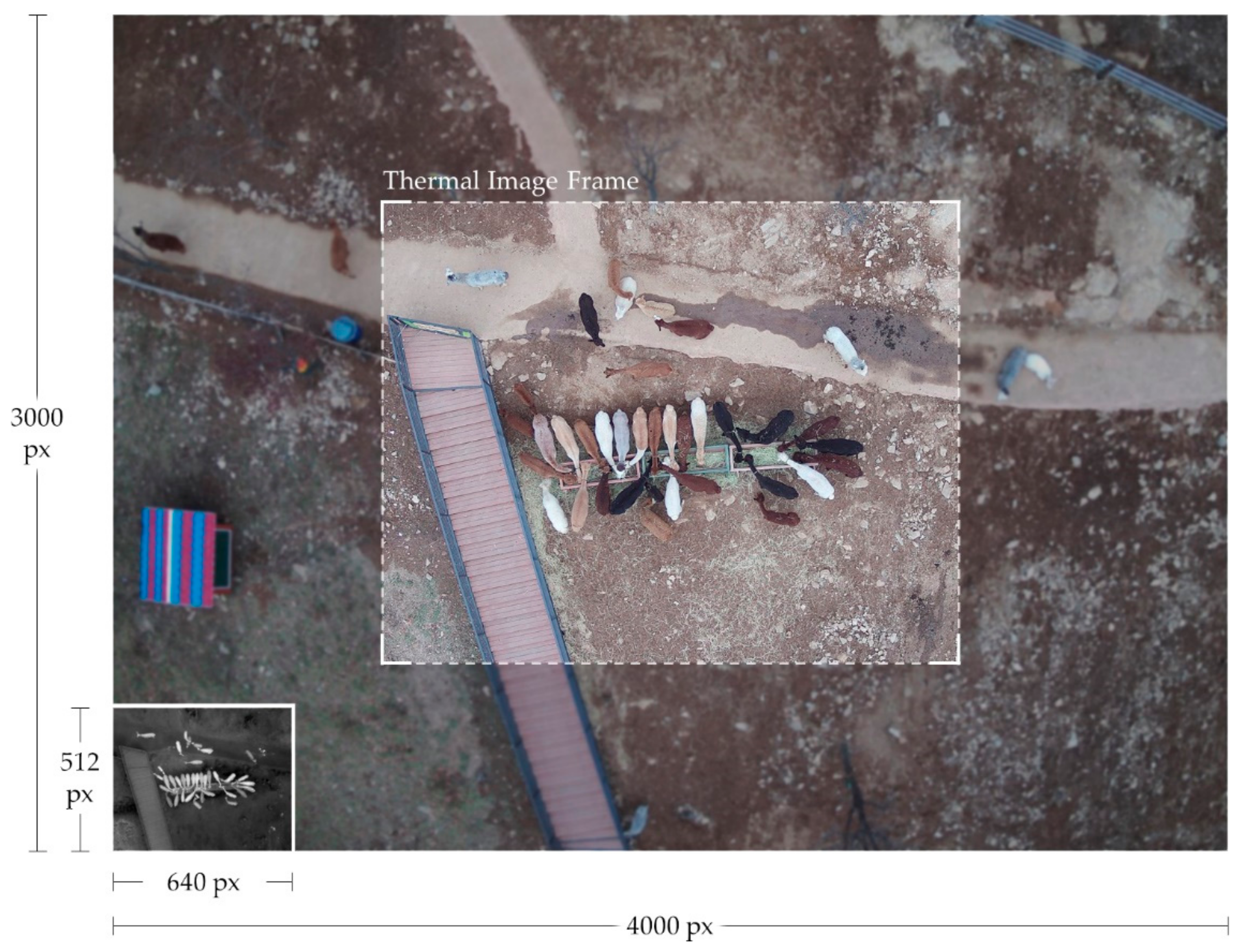

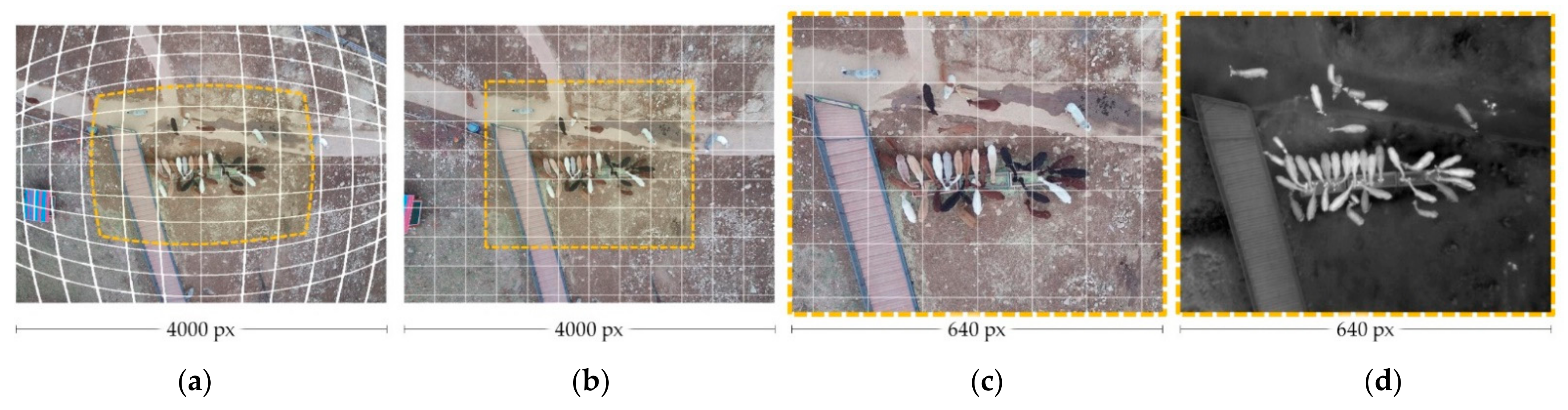

2.3.1. RGB Lens Distortion Correction and Clipping

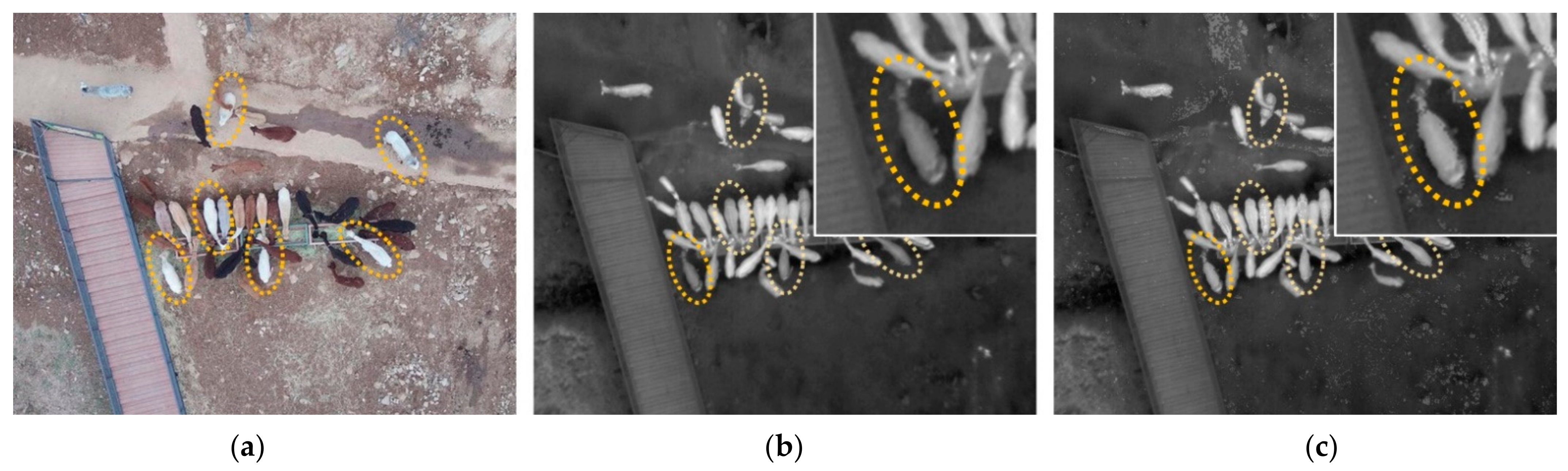

2.3.2. Thermal Image Correction by Fur Color

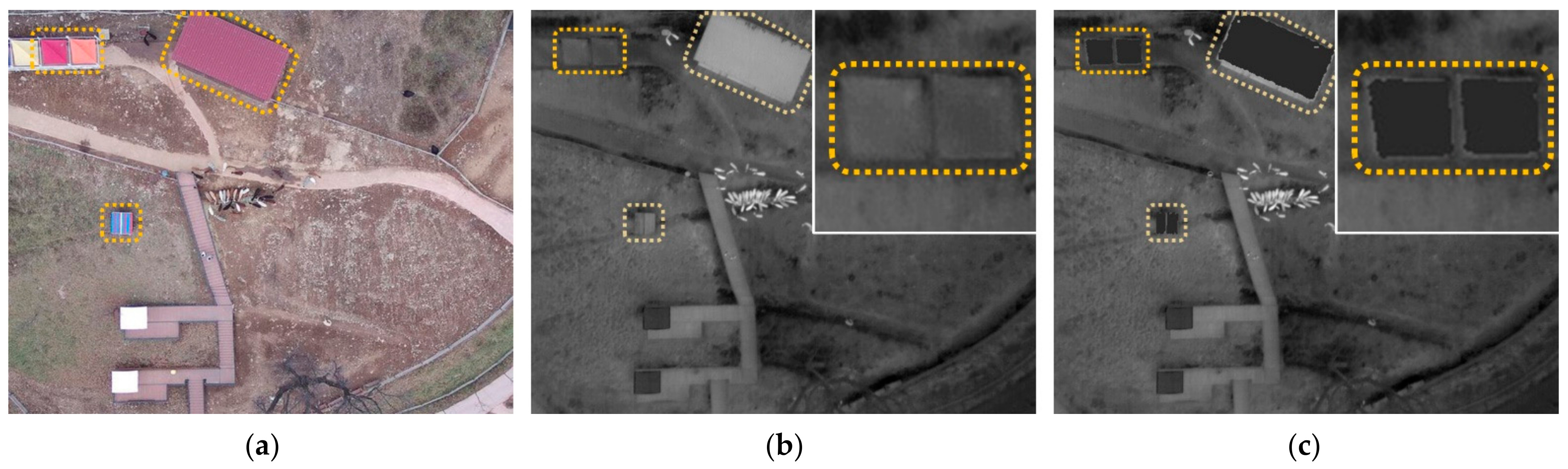

2.3.3. Unnatural Object Removal

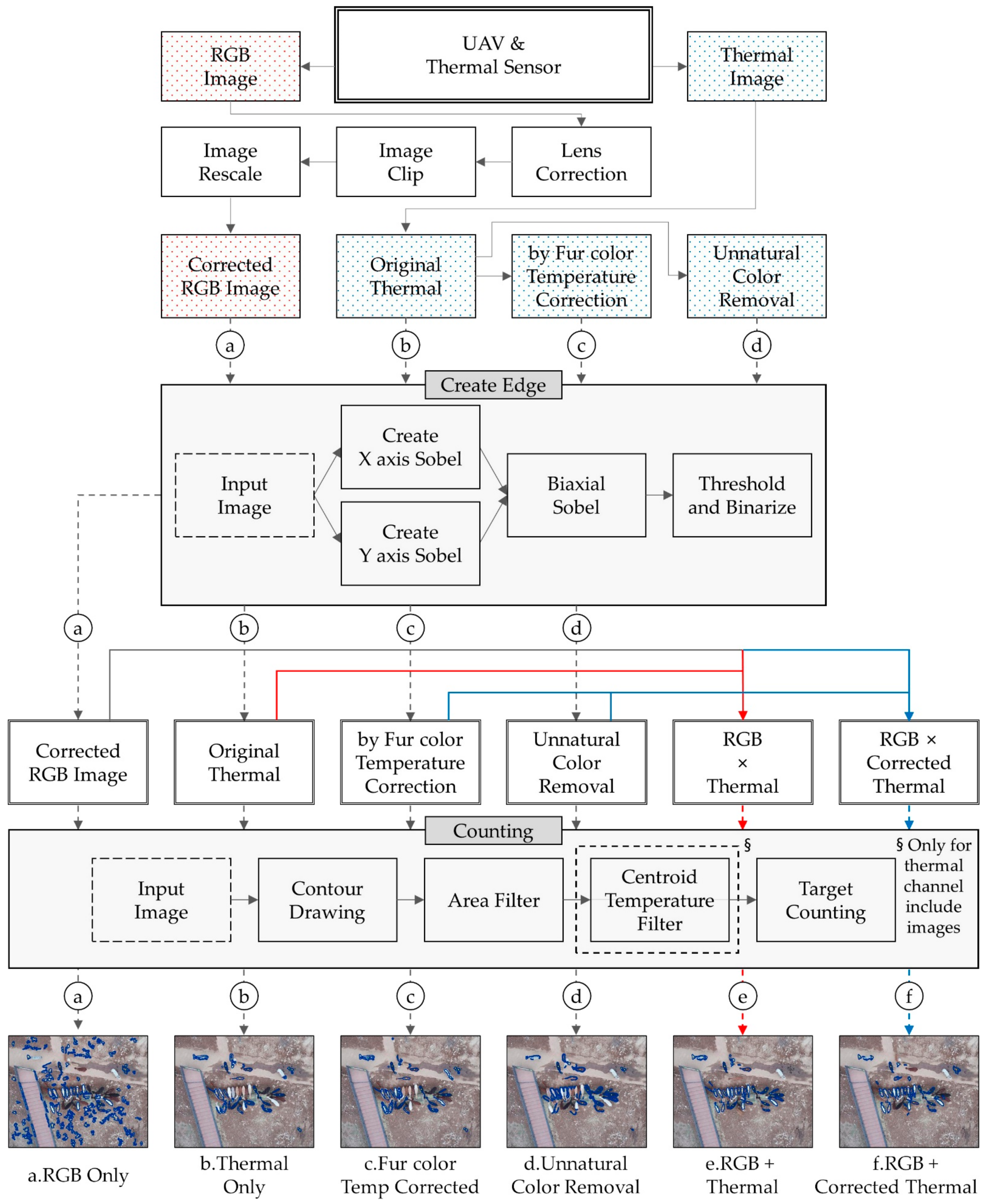

3. Methods

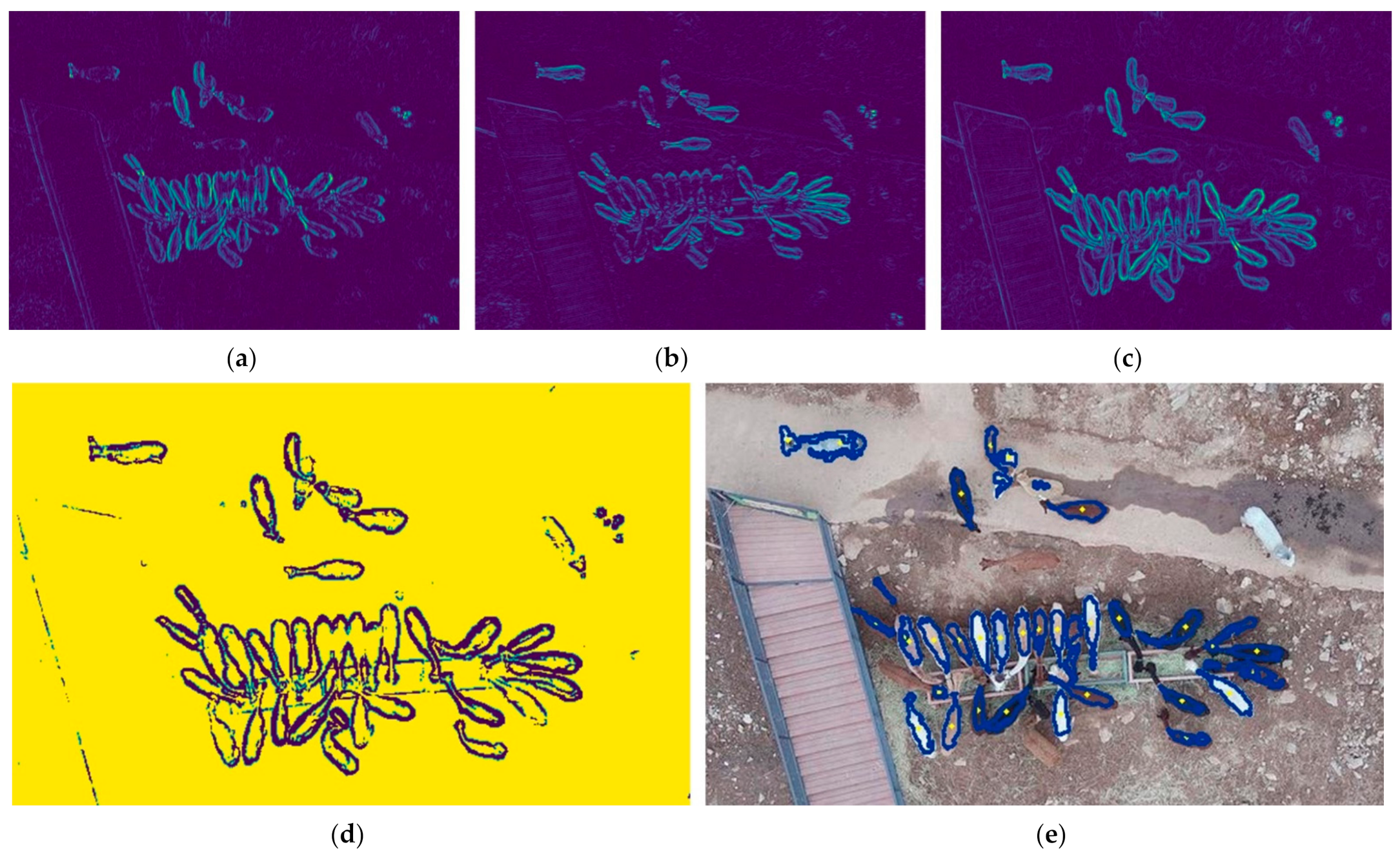

3.1. Sobel Edge Detection and Contour Drawing
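
As a hedged illustration of the Sobel step named in this heading, the sketch below computes the gradient magnitude of a small grayscale array with the standard 3 × 3 Sobel kernels and thresholds it into an edge mask. It is not the authors' implementation: the toy image, the threshold value, and the helper names `sobel_magnitude` and `edge_mask` are assumptions made for illustration, and the contour-drawing step is not covered.

```python
import math

# Standard 3x3 Sobel kernels for horizontal (KX) and vertical (KY) gradients.
KX = [[-1, 0, 1], [-2, 0, 2], [-1, 0, 1]]
KY = [[-1, -2, -1], [0, 0, 0], [1, 2, 1]]


def sobel_magnitude(img):
    """Return the Sobel gradient magnitude; border pixels are left at 0."""
    h, w = len(img), len(img[0])
    out = [[0.0] * w for _ in range(h)]
    for y in range(1, h - 1):
        for x in range(1, w - 1):
            gx = sum(KX[j][i] * img[y + j - 1][x + i - 1]
                     for j in range(3) for i in range(3))
            gy = sum(KY[j][i] * img[y + j - 1][x + i - 1]
                     for j in range(3) for i in range(3))
            out[y][x] = math.hypot(gx, gy)
    return out


def edge_mask(img, threshold):
    """Binary mask: 1 where the gradient magnitude reaches the threshold."""
    return [[1 if v >= threshold else 0 for v in row]
            for row in sobel_magnitude(img)]


# Hypothetical 6x6 "thermal" image: a warm 2x2 blob on a cool background.
img = [[10] * 6 for _ in range(6)]
for y in (2, 3):
    for x in (2, 3):
        img[y][x] = 40

# The threshold of 50 is an arbitrary value chosen for this toy image.
mask = edge_mask(img, threshold=50)
for row in mask:
    print(row)
```

A plain-Python loop keeps the example dependency-free; on full-size UAV frames a vectorized library routine would be the practical choice.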

3.2. Object Detection and Sorting

3.3. Input Images Generation

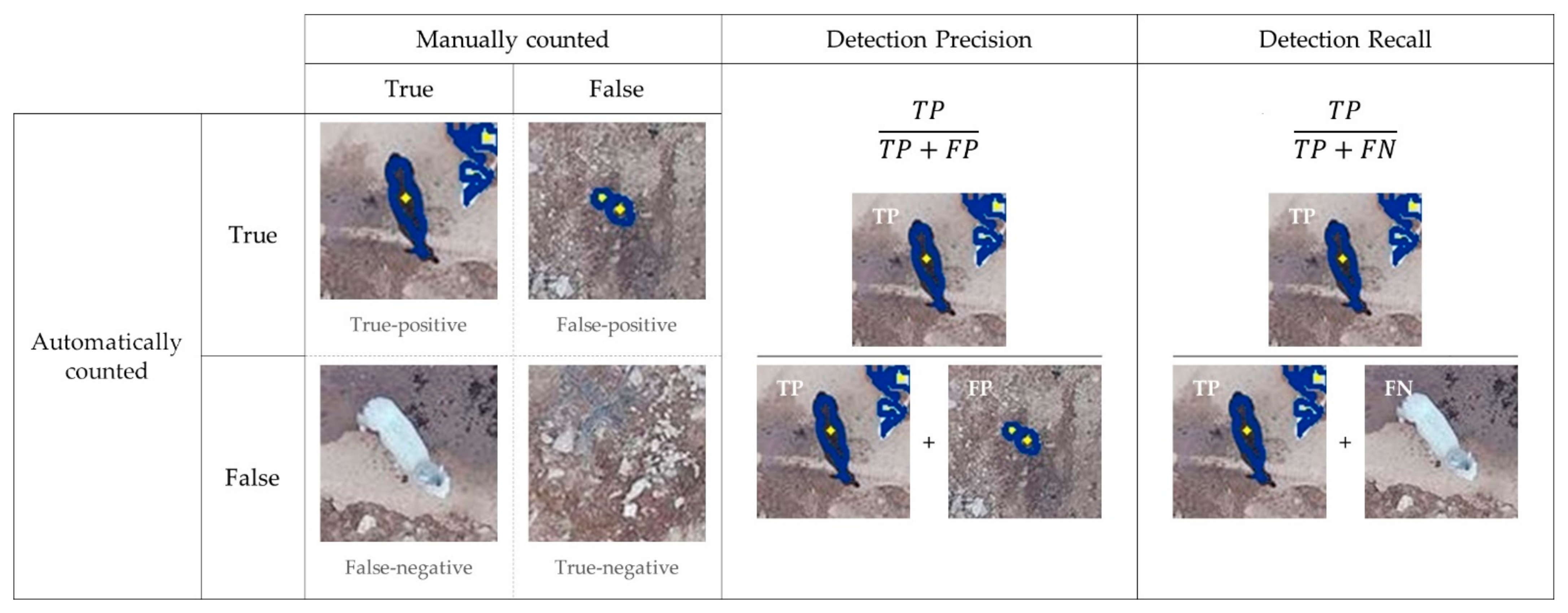

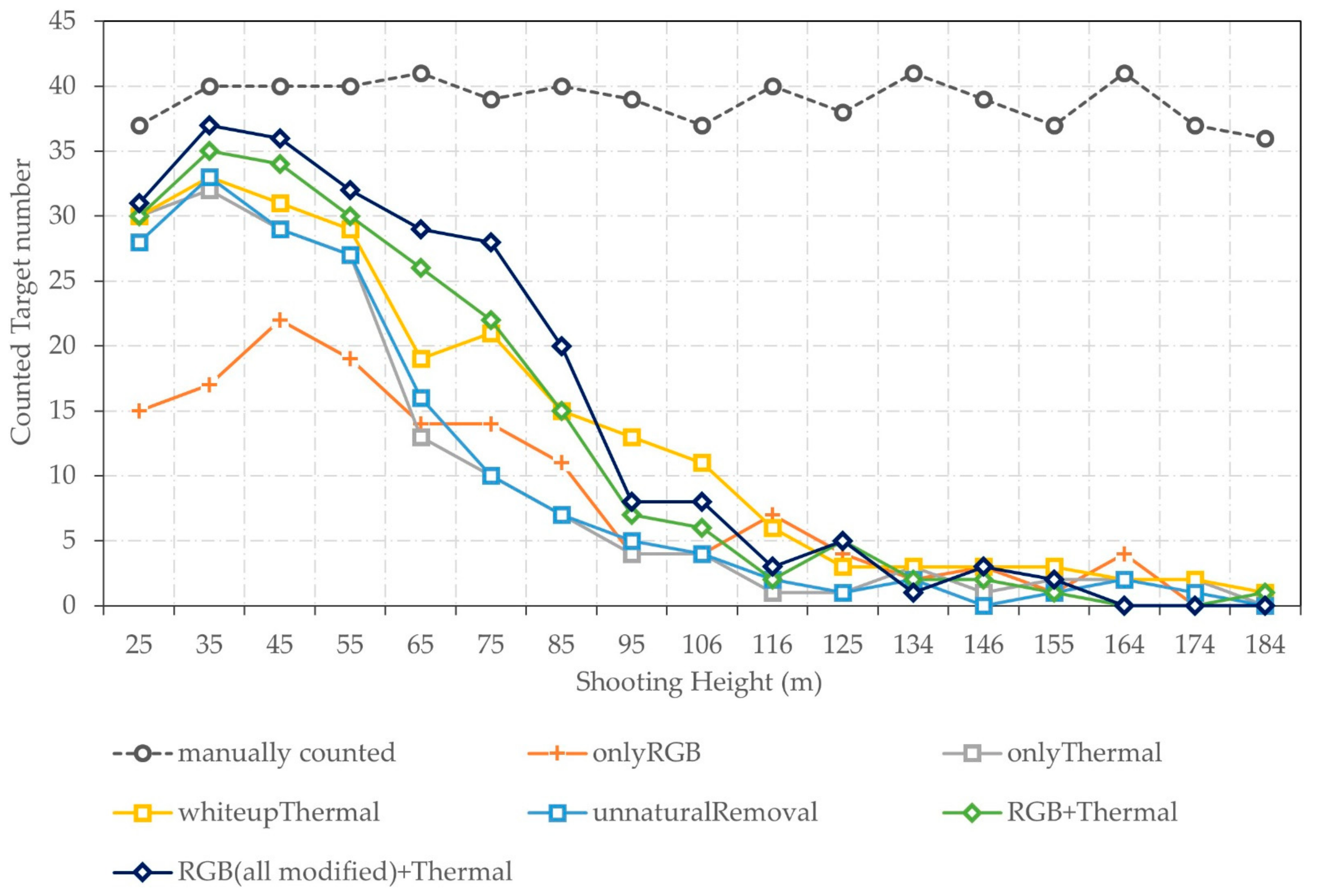

4. Results

5. Discussion

5.1. Detection Precision and Recall

5.2. Instant Detection

5.3. Using the Proposed Method to Supplement Previous Methods

5.4. Utility of Thermal Sensors

5.5. Method Overview

6. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

References

- Witmer, G.W. Wildlife population monitoring: Some practical considerations. Wildl. Res. 2005, 32, 259–263.

- Caughley, G. Analysis of Vertebrate Populations; Wiley: London, UK, 1977.

- Kellenberger, B.; Volpi, M.; Tuia, D. Fast animal detection in UAV images using convolutional neural networks. In Proceedings of the 2017 IEEE International Geoscience and Remote Sensing Symposium IGARSS, Fort Worth, TX, USA, 23–28 July 2017; pp. 866–869.

- Pollock, K.H.; Nichols, J.D.; Simons, T.R.; Farnsworth, G.L.; Bailey, L.L.; Sauer, J.R. Large scale wildlife monitoring studies: Statistical methods for design and analysis. Environmetrics 2002, 13, 105–119.

- O’Connell, A.F.; Nichols, J.D.; Karanth, K.U. Camera Traps in Animal Ecology: Methods and Analyses; Springer Science & Business Media: Berlin/Heidelberg, Germany, 2010.

- Bowman, J.L.; Kochanny, C.O.; Demarais, S.; Leopold, B.D. Evaluation of a GPS collar for white-tailed deer. Wildl. Soc. Bull. 2000, 28, 141–145.

- Bohmann, K.; Evans, A.; Gilbert, M.T.P.; Carvalho, G.R.; Creer, S.; Knapp, M.; Yu, D.W.; De Bruyn, M. Environmental DNA for wildlife biology and biodiversity monitoring. Trends Ecol. Evol. 2014, 29, 358–367.

- Hinke, J.T.; Barbosa, A.; Emmerson, L.M.; Hart, T.; Juáres, M.A.; Korczak-Abshire, M.; Milinevsky, G.; Santos, M.; Trathan, P.N.; Watters, G.M.; et al. Estimating nest-level phenology and reproductive success of colonial seabirds using time-lapse cameras. Methods Ecol. Evol. 2018, 9, 1853–1863.

- Burton, A.C.; Neilson, E.; Moreira, D.; Ladle, A.; Steenweg, R.; Fisher, J.T.; Bayne, T.; Boutin, S. Wildlife camera trapping: A review and recommendations for linking surveys to ecological processes. J. Appl. Ecol. 2015, 52, 675–685.

- Ford, A.T.; Clevenger, A.P.; Bennett, A. Comparison of methods of monitoring wildlife crossing-structures on highways. J. Wildl. Manag. 2009, 73, 1213–1222.

- Kellenberger, B.; Marcos, D.; Tuia, D. Detecting mammals in UAV images: Best practices to address a substantially imbalanced dataset with deep learning. Remote Sens. Environ. 2018, 216, 139–153.

- Candiago, S.; Remondino, F.; De Giglio, M.; Dubbini, M.; Gattelli, M. Evaluating multispectral images and vegetation indices for precision farming applications from UAV images. Remote Sens. 2015, 7, 4026–4047.

- Bayram, H.; Stefas, N.; Engin, K.S.; Isler, V. Tracking wildlife with multiple UAVs: System design, safety and field experiments. In Proceedings of the 2017 International Symposium on Multi-Robot and Multi-Agent Systems (MRS), Los Angeles, CA, USA, 4–5 December 2017; pp. 97–103.

- Caughley, G. Bias in aerial survey. J. Wildl. Manag. 1974, 38, 921–933.

- Bartmann, R.M.; Carpenter, L.H.; Garrott, R.A.; Bowden, D.C. Accuracy of helicopter counts of mule deer in pinyon-juniper woodland. Wildl. Soc. Bull. 1986, 14, 356–363.

- Mutalib, A.H.A.; Ruppert, N.; Akmar, S.; Kamaruszaman, F.F.J.; Rosely, N.F.N. Feasibility of Thermal Imaging Using Unmanned Aerial Vehicles to Detect Bornean Orangutans. J. Sustain. Sci. Manag. 2019, 14, 182–194.

- Thibbotuwawa, A.; Bocewicz, G.; Radzki, G.; Nielsen, P.; Banaszak, Z. UAV Mission planning resistant to weather uncertainty. Sensors 2020, 20, 515.

- Cesare, K.; Skeele, R.; Yoo, S.H.; Zhang, Y.; Hollinger, G. Multi-UAV exploration with limited communication and battery. In Proceedings of the 2015 IEEE International Conference on Robotics and Automation (ICRA), Seattle, WA, USA, 26–30 May 2015; pp. 2230–2235.

- Kellenberger, B.; Marcos, D.; Lobry, S.; Tuia, D. Half a percent of labels is enough: Efficient animal detection in UAV imagery using deep CNNs and active learning. IEEE Trans. Geosci. Remote Sens. 2019, 57, 9524–9533.

- Barbedo, J.G.A.; Koenigkan, L.V.; Santos, P.M.; Ribeiro, A.R.B. Counting cattle in UAV images—dealing with clustered animals and animal/background contrast changes. Sensors 2020, 20, 2126.

- Barbedo, J.G.A.; Koenigkan, L.V.; Santos, T.T.; Santos, P.M. A study on the detection of cattle in UAV images using deep learning. Sensors 2019, 19, 5436.

- Rivas, A.; Chamoso, P.; González-Briones, A.; Corchado, J.M. Detection of cattle using drones and convolutional neural networks. Sensors 2018, 18, 2048.

- Rey, N.; Volpi, M.; Joost, S.; Tuia, D. Detecting animals in African Savanna with UAVs and the crowds. Remote Sens. Environ. 2017, 200, 341–351.

- Seymour, A.C.; Dale, J.; Hammill, M.; Halpin, P.N.; Johnston, D.W. Automated detection and enumeration of marine wildlife using unmanned aircraft systems UAS and thermal imagery. Sci. Rep. 2017, 7, 45127.

- Lee, W.Y.; Park, M.; Hyun, C.-U. Detection of two Arctic birds in Greenland and an endangered bird in Korea using RGB and thermal cameras with an unmanned aerial vehicle (UAV). PLoS ONE 2019, 14, e0222088.

- Bevan, E.; Wibbels, T.; Najera, B.M.; Martinez, M.A.; Martinez, L.A.; Martinez, F.I.; Cuevas, J.M.; Anderson, T.; Bonka, A.; Hernandez, M.H.; et al. Unmanned aerial vehicles (UAVs) for monitoring sea turtles in near-shore waters. Mar. Turt. Newsl. 2015, 145, 19–22.

- Fudala, K.; Bialik, R.J. Breeding Colony Dynamics of Southern Elephant Seals at Patelnia Point, King George Island, Antarctica. Remote Sens. 2020, 12, 2964.

- Pfeifer, C.; Rümmler, M.C.; Mustafa, O. Assessing colonies of Antarctic shags by unmanned aerial vehicle (UAV) at South Shetland Islands, Antarctica. Antarct. Sci. 2021, 33, 133–149.

- Kays, R.; Sheppard, J.; Mclean, K.; Welch, C.; Paunescu, C.; Wang, V.; Kravit, G.; Crofoot, M. Hot monkey, cold reality: Surveying rainforest canopy mammals using drone-mounted thermal infrared sensors. Int. J. Remote Sens. 2019, 40, 407–419.

- Lhoest, S.; Linchant, J.; Quevauvillers, S.; Vermeulen, C.; Lejeune, P. How many hippos HOMHIP: Algorithm for automatic counts of animals with infra-red thermal imagery from UAV. Int. Arch. Photogramm. Remote Sens. Spat. Inf. Sci. 2015, XL-3/W3, 355–362.

- Oishi, Y.; Oguma, H.; Tamura, A.; Nakamura, R.; Matsunaga, T. Animal detection using thermal images and its required observation conditions. Remote Sens. 2018, 10, 1050.

- Hambrecht, L.; Brown, R.P.; Piel, A.K.; Wich, S.A. Detecting ‘poachers’ with drones: Factors influencing the probability of detection with TIR and RGB imaging in miombo woodlands, Tanzania. Biol. Conserv. 2019, 233, 109–117.

- Chabot, D. Systematic Evaluation of a Stock Unmanned Aerial Vehicle (UAV) System for Small-Scale Wildlife Survey Applications. Doctoral Dissertation, McGill University, Montreal, QC, Canada, 2009.

- Chrétien, L.-P.; Théau, J.; Ménard, P. Visible and thermal infrared remote sensing for the detection of white-tailed deer using an unmanned aerial system. Wildl. Soc. Bull. 2016, 40, 181–191.

- López, A.; Jurado, J.M.; Ogayar, C.J.; Feito, F.R. A framework for registering UAV-based imagery for crop-tracking in Precision Agriculture. Int. J. Appl. Earth Obs. Geoinf. 2021, 97, 102274.

- Heikkila, J.; Silven, O. Calibration procedure for short focal length off-the-shelf CCD cameras. In Proceedings of the 13th International Conference on Pattern Recognition, Vienna, Austria, 25–29 August 1996; Volume 1, pp. 166–170.

- Oishi, Y.; Matsunaga, T. Support system for surveying moving wild animals in the snow using aerial remote-sensing images. Int. J. Remote Sens. 2014, 35, 1374–1394.

- Kellie, K.A.; Colson, K.E.; Reynolds, J.H. Challenges to Monitoring Moose in Alaska; Alaska Department of Fish and Game, Division of Wildlife Conservation: Juneau, AK, USA, 2019.

- Třebický, V.; Fialová, J.; Kleisner, K.; Havlíček, J. Focal length affects depicted shape and perception of facial images. PLoS ONE 2016, 11, e0149313.

- Neale, W.T.; Hessel, D.; Terpstra, T. Photogrammetric Measurement Error Associated with Lens Distortion; SAE Technical Paper; SAE International: Warrendale, PA, USA, 2011.

- Hongzhi, W.; Meijing, L.; Liwei, Z. The distortion correction of large view wide-angle lens for image mosaic based on OpenCV. In Proceedings of the 2011 International Conference on Mechatronic Science, Electric Engineering and Computer (MEC), Jilin, China, 19–22 August 2011; pp. 1074–1077.

- Synnefa, A.; Santamouris, M.; Apostolakis, K. On the development, optical properties and thermal performance of cool colored coatings for the urban environment. Sol. Energy 2007, 81, 488–497.

- Griffiths, S.R.; Rowland, J.A.; Briscoe, N.J.; Lentini, P.E.; Handasyde, K.A.; Lumsden, L.F.; Robert, K.A. Surface reflectance drives nest box temperature profiles and thermal suitability for target wildlife. PLoS ONE 2017, 12, e0176951.

- Apolo-Apolo, O.E.; Pérez-Ruiz, M.; Martínez-Guanter, J.; Valente, J. A cloud-based environment for generating yield estimation maps from apple orchards using UAV imagery and a deep learning technique. Front. Plant Sci. 2020, 11, 1086.

- Sobel, I. An Isotropic 3 × 3 Gradient Operator, Machine Vision for Three–Dimensional Scenes; Academic Press: New York, NY, USA, 1990.

- Russ, J.C.; Matey, J.R.; Mallinckrodt, A.J.; McKay, S. The image processing handbook. Comput. Phys. 1994, 8, 177–178.

- Longmore, S.N.; Collins, R.P.; Pfeifer, S.; Fox, S.E.; Mulero-Pázmány, M.; Bezombes, F.; Goodwin, A.; De Juan Ovelar, M.; Knapen, J.H.; Wich, S.A. Adapting astronomical source detection software to help detect animals in thermal images obtained by unmanned aerial systems. Int. J. Remote Sens. 2017, 38, 2623–2638.

- Spaan, D.; Burke, C.; McAree, O.; Aureli, F.; Rangel-Rivera, C.E.; Hutschenreiter, A.; Longmore, S.N.; McWhirter, P.R.; Wich, S.A. Thermal infrared imaging from drones offers a major advance for spider monkey surveys. Drones 2019, 3, 34.

- Gooday, O.J.; Key, N.; Goldstien, S.; Zawar-Reza, P. An assessment of thermal-image acquisition with an unmanned aerial vehicle (UAV) for direct counts of coastal marine mammals ashore. J. Unmanned Veh. Syst. 2018, 6, 100–108.

- Luo, R.; Sener, O.; Savarese, S. Scene semantic reconstruction from egocentric rgb-d-thermal videos. In Proceedings of the 2017 International Conference on 3D Vision 3DV, Qingdao, China, 10–12 October 2017; pp. 593–602.

- Van, G.; Camiel, R.V.; Pascal, M.; Kitso, E.; Lian, P.K.; Serge, W. Nature Conservation Drones for Automatic Localization and Counting of Animals. In European Conference on Computer Vision; Springer: Cham, Switzerland, 2014; pp. 255–270.

- Gonzalez, L.F.; Montes, G.A.; Puig, E.; Johnson, S.; Mengersen, K.; Gaston, K.J. Unmanned aerial vehicles (UAVs) and artificial intelligence revolutionizing wildlife monitoring and conservation. Sensors 2016, 16, 97.

- Witczuk, J.; Pagacz, S.; Zmarz, A.; Cypel, M. Exploring the feasibility of unmanned aerial vehicles and thermal imaging for ungulate surveys in forests-preliminary results. Int. J. Remote Sens. 2018, 39, 5504–5521.

| Input Image | Isolated | Bordering | Overlapping | Partial | Detected | Error | Total Count | Detection Precision | Detection Recall |
|---|---|---|---|---|---|---|---|---|---|
| Manual count | 56 | 243 | 17 | 3 | 316 | | | | |
| Corrected RGB only | 17 | 95 | 0 | 4 | 116 | 9099 | 9215 | 0.013 | 0.367 |
| Thermal only | 32 | 120 | 0 | 0 | 152 | 40 | 192 | 0.792 | 0.481 |
| Corrected for fur thermal | 42 | 149 | 0 | 0 | 191 | 108 | 299 | 0.639 | 0.604 |
| Unnatural color removal thermal | 31 | 122 | 2 | 0 | 155 | 38 | 193 | 0.803 | 0.491 |
| Corrected RGB + Thermal | 28 | 168 | 2 | 1 | 199 | 795 | 994 | 0.200 | 0.630 |
| Corrected RGB + Corrected thermal | 34 | 183 | 3 | 1 | 221 | 54 | 275 | 0.804 | 0.699 |
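
The precision and recall columns of the detection-results table are consistent with precision = detected / total count and recall = detected / manual count (316). The sketch below recomputes them from the tabulated counts as a cross-check; the function name `precision_recall` and the dictionary layout are illustrative choices, not part of the original study.

```python
# Counts transcribed from the detection-results table.
MANUAL_COUNT = 316  # manually counted animals, used as the recall reference

# input image -> (correctly detected animals, erroneous detections)
results = {
    "Corrected RGB only": (116, 9099),
    "Thermal only": (152, 40),
    "Corrected for fur thermal": (191, 108),
    "Unnatural color removal thermal": (155, 38),
    "Corrected RGB + Thermal": (199, 795),
    "Corrected RGB + Corrected thermal": (221, 54),
}


def precision_recall(detected, errors, reference=MANUAL_COUNT):
    """Precision = detected / (detected + errors); recall = detected / reference."""
    total = detected + errors
    return detected / total, detected / reference


for name, (detected, errors) in results.items():
    p, r = precision_recall(detected, errors)
    print(f"{name}: precision={p:.3f}, recall={r:.3f}")
```

Rounded to three decimals, the printed values match the table, for example 0.804 and 0.699 for the corrected RGB plus corrected thermal input.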

CPU and RAM: Intel(R) Xeon(R) CPU @ 2.20 GHz and 12.69 GB

| Input Image | Single CPU: Time (s) | Single CPU: FPS | GPU Accelerated (Tesla T4, 16 GB): Time (s) | GPU Accelerated (Tesla T4, 16 GB): FPS | GPU Accelerated (Tesla P100, 16 GB): Time (s) | GPU Accelerated (Tesla P100, 16 GB): FPS | CPU Parallel Processing (2 Cores): Time (s) | CPU Parallel Processing (2 Cores): FPS |
|---|---|---|---|---|---|---|---|---|
| Corrected RGB only | 0.192 | 5 | 0.159 | 6 | 0.143 | 7 | 0.109 | 9 |
| Thermal only | 0.047 | 21 | 0.038 | 26 | 0.036 | 28 | 0.036 | 28 |
| Corrected for fur color and temperature | 0.063 | 16 | 0.051 | 20 | 0.047 | 21 | 0.040 | 25 |
| Unnatural color removal | 0.048 | 21 | 0.038 | 26 | 0.036 | 28 | 0.033 | 30 |
| RGB + Thermal | 0.194 | 5 | 0.151 | 7 | 0.146 | 7 | 0.109 | 9 |
| RGB + Corrected thermal | 0.197 | 5 | 0.158 | 6 | 0.145 | 7 | 0.111 | 9 |
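
The applicable FPS values in the timing table appear to be the reciprocal of the per-image detection time, rounded to the nearest integer. That relation is inferred from the tabulated numbers rather than stated in the text, so the sketch below should be read as a consistency check under that assumption; `applicable_fps` is a hypothetical helper name.

```python
# Per-image detection times (s) transcribed from the timing table:
# (single CPU, CPU parallel processing with 2 cores).
detection_time = {
    "Corrected RGB only": (0.192, 0.109),
    "Thermal only": (0.047, 0.036),
    "Corrected for fur color and temperature": (0.063, 0.040),
    "Unnatural color removal": (0.048, 0.033),
    "RGB + Thermal": (0.194, 0.109),
    "RGB + Corrected thermal": (0.197, 0.111),
}


def applicable_fps(seconds_per_image):
    """Assumed relation: frames per second = round(1 / detection time)."""
    return round(1.0 / seconds_per_image)


for name, (single_cpu, parallel_cpu) in detection_time.items():
    print(f"{name}: {applicable_fps(single_cpu)} FPS (single CPU), "
          f"{applicable_fps(parallel_cpu)} FPS (2-core parallel)")
```

Under this assumption the fastest configuration in the table, unnatural color removal at 0.033 s per image, corresponds to about 30 frames per second.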

| | | Proposed Method | Chrétien, L.P., et al., 2016 [34] | Hambrecht, L., et al., 2019 [32] | Lhoest, S., et al., 2015 [30] | Longmore, S.N., et al., 2017 [47] | Seymour, A.C., et al., 2017 [24] | Gooday, O.J., et al., 2018 [49] | Oishi, Y., et al., 2018 [31] | Spaan, D., et al., 2019 [48] |
|---|---|---|---|---|---|---|---|---|---|---|
| Used Dataset | | UAV Derived RGB and Thermal Images | UAV Derived Thermal Images | | | | | | | |
| Site | location | animal farm, Hongcheon, Republic of Korea | Falardeau Wildlife Observation and Agricultural Interpretive Centre, Canada | Issa study site, Tanzania | Garamba National Park, Democratic Republic of Congo | Arrowe Brook Farm Wirral, UK | Hay Island & Saddle Island, Canada | Kaikoura, New Zealand | Nara Park, Japan | Los Arboles Tulum, Mexico |
| | area (m²) | 120,200 | 2215 | - | - | 6500 | 160,000 | - | 5,510,000 | 40,000 |
| | numbers | 1 | 1 | 24 | 4 | 1 | 2 | 3 | 1 | 3 |
| Data Acquisition | Date | 25 11 2020 | 06 11 2011 | 03 2017 | 09 2014, 05 2015 | 14 07 2015 | 29 01 2015~02 02 | 19 02 2015~27 | 11 09 2015 | 10 06 2018~23 |
| | Time | 11:00~13:00 | 07:00~13:00 | - | - | - | 07:30, 19:00 | 07:00, 12:00, 16:00 | 19:22~20:22 | 17:30~19:00 |
| | Altitude (m) | 25~275 | 60 | 70, 100 | 39, 49, 73, 91 | 80~120 | - | 50 | 1000, 1300 | 70 |
| Target | name | alpaca | white-tailed deer | human | hippopotamus | cattle | grey seal | New Zealand fur seal | sika deer | spider monkey |
| | body length (m) | 0.8~1.0 | 1~1.9 | 0.3~0.5 | 3~5 | 2.4 | 1.0~2.5 | 1.0~2.5 | 1~1.9 | 0.7 |
| Results (best or average) | Accuracy | 0.804 | 0.650 | 0.410 | 0.860 | 0.700 | 0.750 | 0.430 | 0.753 | 0.650 |
| | Detection time (s) | 0.033 | - | - | - | - | - | - | - | - |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Lee, S.; Song, Y.; Kil, S.-H. Feasibility Analyses of Real-Time Detection of Wildlife Using UAV-Derived Thermal and RGB Images. Remote Sens. 2021, 13, 2169. https://doi.org/10.3390/rs13112169
