Oil Spill Detection Using Machine Learning and Infrared Images

Abstract

1. Introduction

- Less environmental damage, because the cleanup is faster.

- The oil can be contained sooner, which makes the cleanup process more efficient.

- Less impact on the other operations inside the port, which results in less economic damage.

- In certain situations, a clearer indication of the polluter.

2. Literature

3. Methods

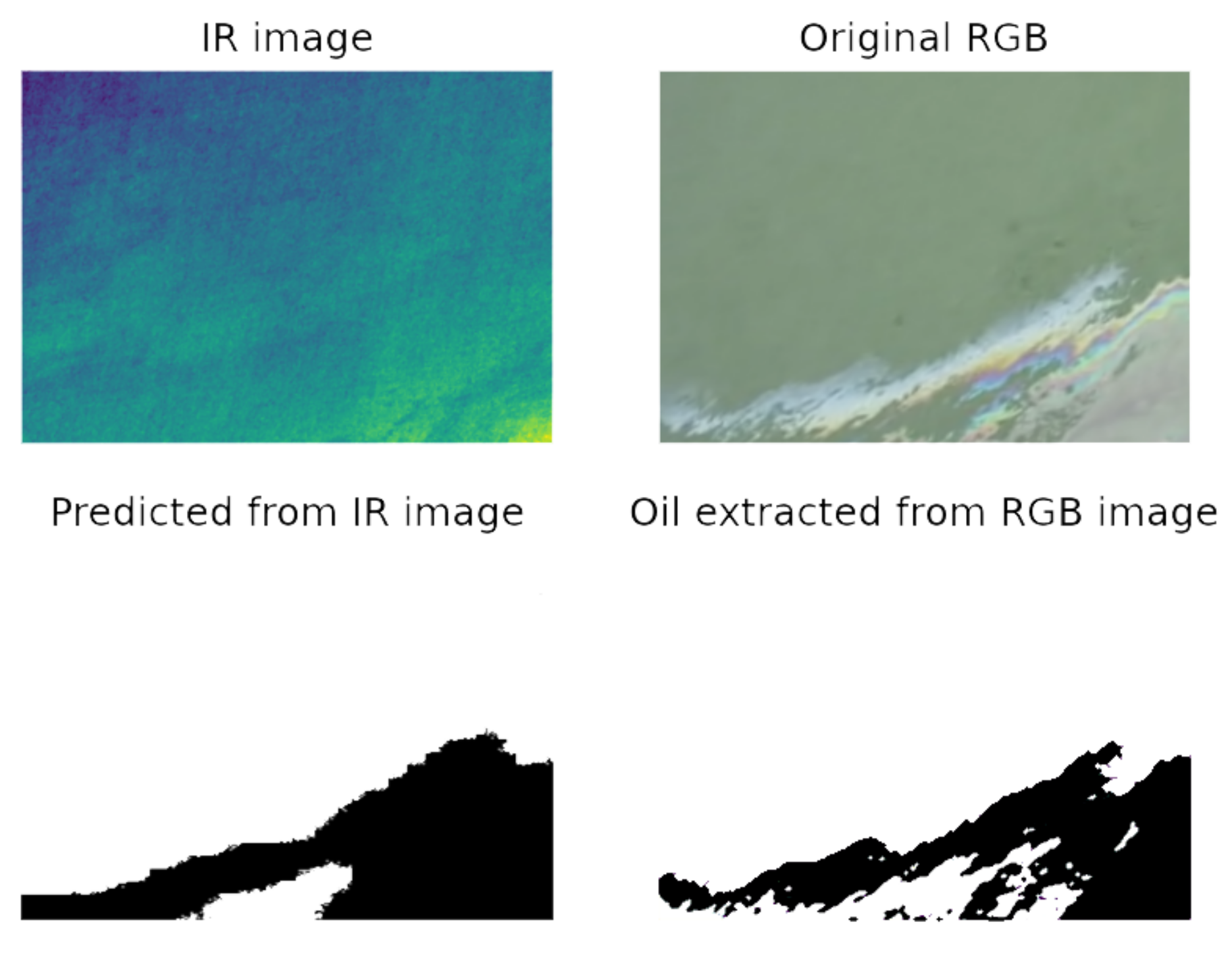

- Training process: To train the CNN, we use both the RGB images and the infrared (IR) images. The oil spill is segmented from the RGB images, which yields a mask representing the oil in each RGB image. We then feed the segmented RGB images together with the IR images to the neural network and start the training process. Figure 1 presents an overview of this process.

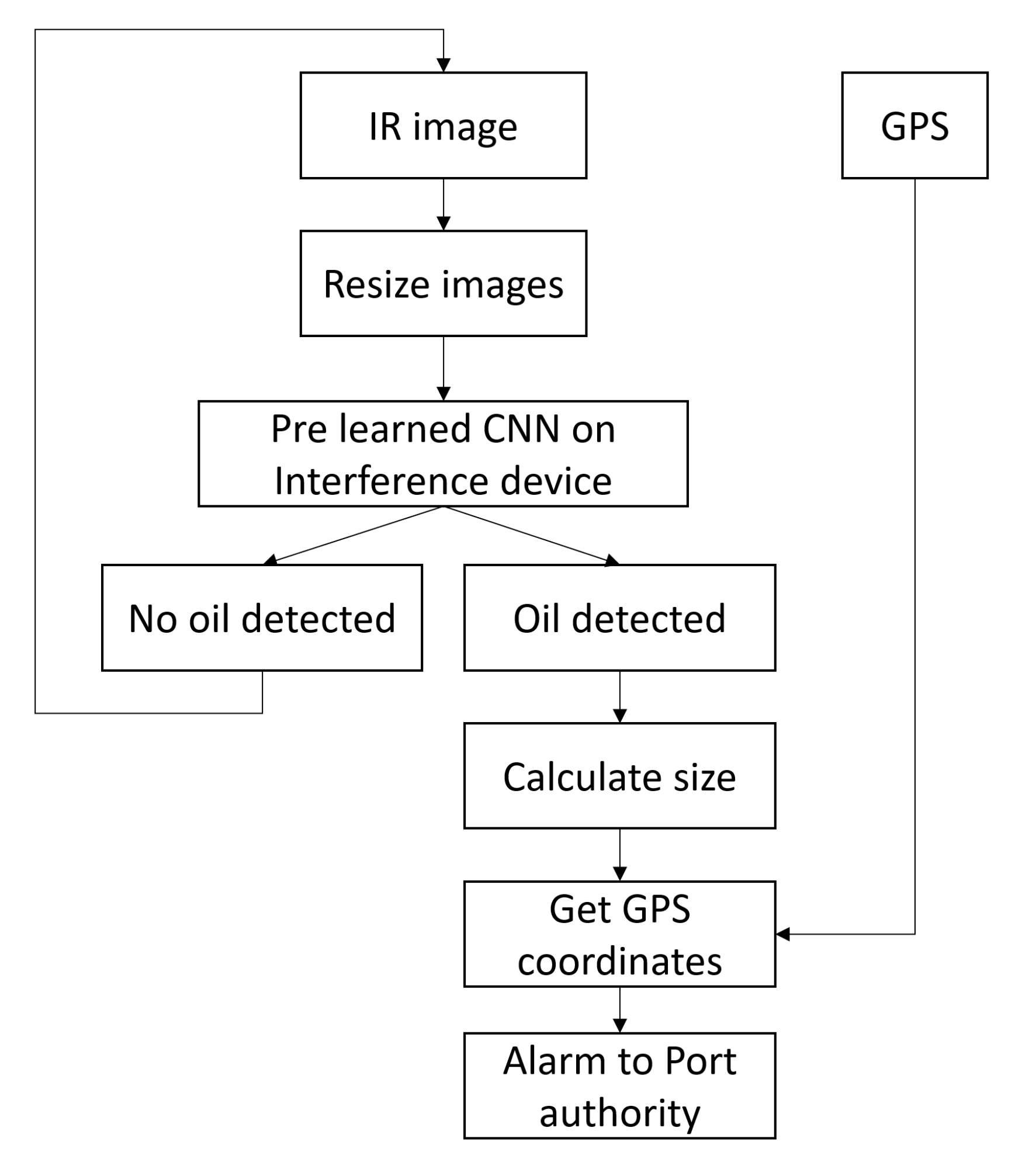

- Operational process: Once training is complete, the trained CNN can be deployed on an inference device. Inference devices are low-cost, low-power computers that are highly optimized for parallel GPU computations, which makes them well suited to CNNs. These devices make it possible to segment the images in real time. See Figure 2 for a step-by-step flowchart.
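
The pairing of IR images with their masks for training can be sketched as follows. This is a minimal illustration, not the authors' code: it assumes each mask is stored under the same file name as its IR image (as described in the preprocessing section), and the function name `pair_ir_with_masks` and the directory names `ir/` and `mask/` are hypothetical.

```python
from pathlib import Path


def pair_ir_with_masks(ir_paths, mask_paths):
    """Match each IR image to the mask that shares its file stem.

    IR images without a matching mask are skipped, so every returned
    pair can be used directly as an (input, target) training sample.
    """
    masks_by_stem = {Path(p).stem: p for p in mask_paths}
    pairs = []
    for ir in sorted(ir_paths):
        stem = Path(ir).stem
        if stem in masks_by_stem:
            pairs.append((ir, masks_by_stem[stem]))
    return pairs


# Hypothetical file names, for illustration only.
pairs = pair_ir_with_masks(
    ["ir/frame_002.png", "ir/frame_001.png", "ir/frame_003.png"],
    ["mask/frame_001.png", "mask/frame_002.png"],
)
for ir_path, mask_path in pairs:
    print(ir_path, "->", mask_path)
```

Matching on the file stem rather than on list position keeps the pairs correct even when some frames have no ground-truth mask.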

3.1. Training Process

3.2. Training a Neural Network

3.2.1. Architectures

3.2.2. Hyper-Parameters

3.3. Operational Process

3.4. Experimental Setup

3.5. Preprocessing

1. Data compression of the image. The original footage was 3840 × 2160 pixels. It was reduced to 640 × 480 pixels in order to speed up the preprocessing.

- 2.

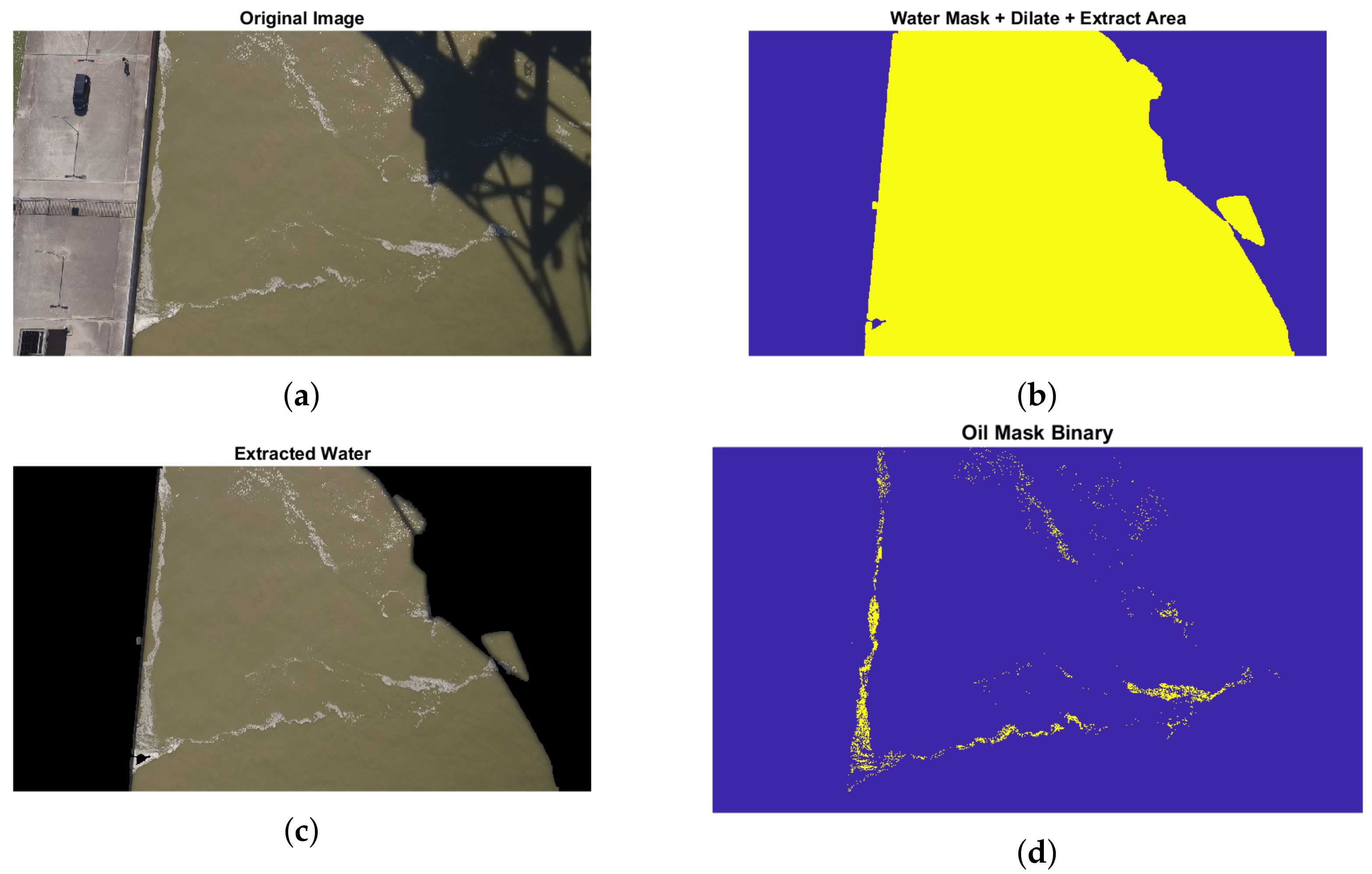

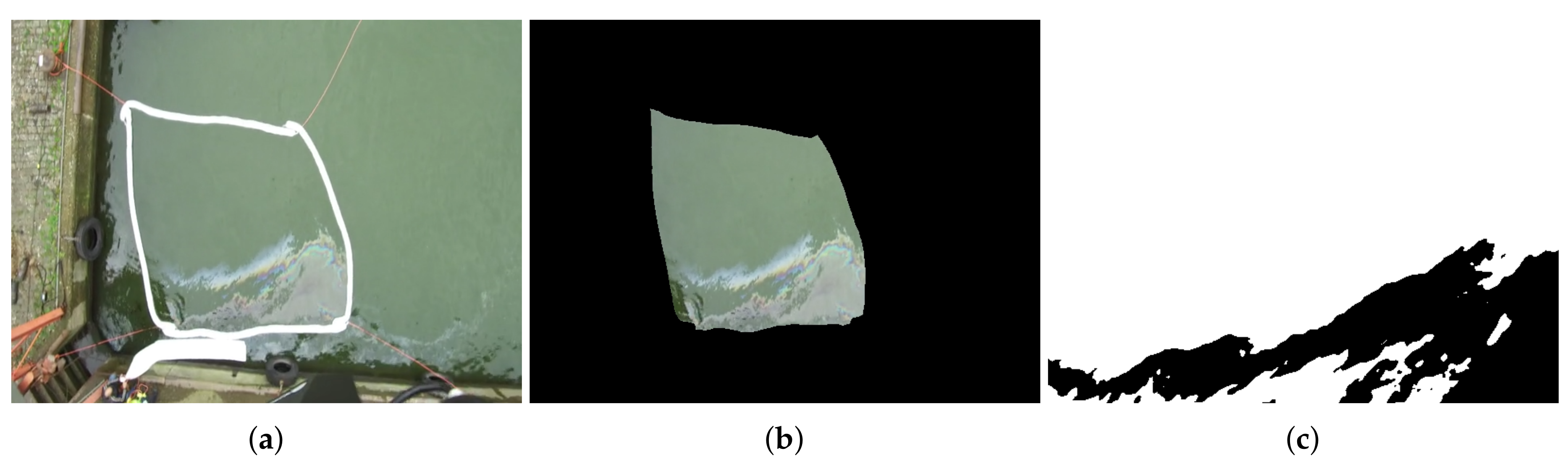

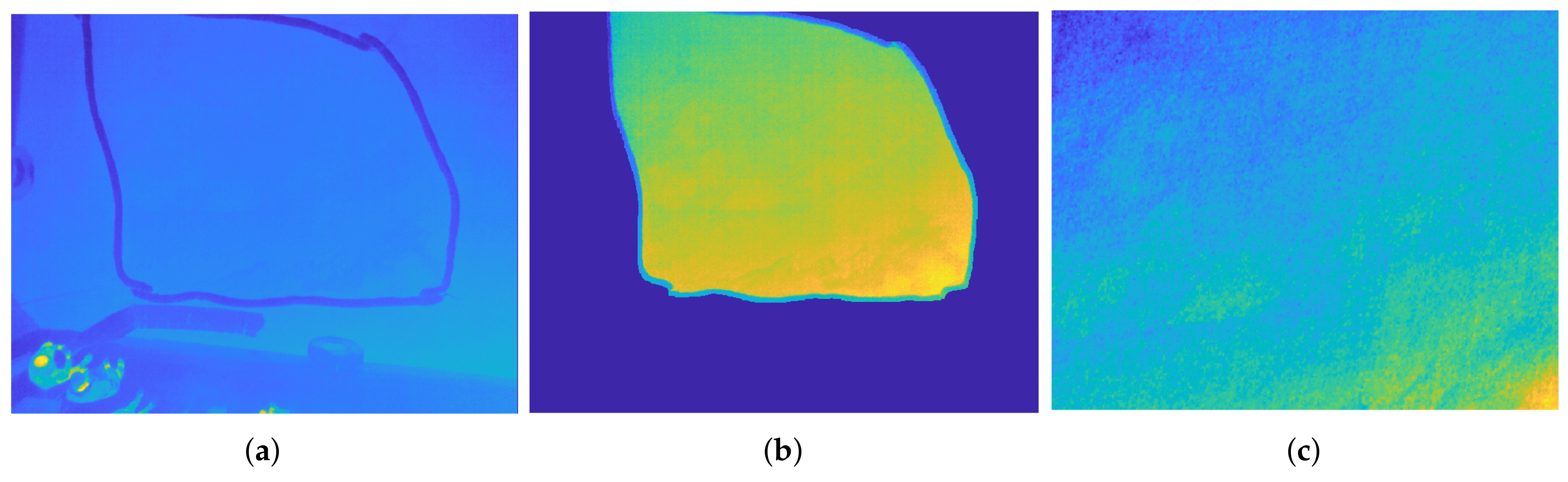

- Extraction of the image center and masking of the region outside the absorbing bands. The region of interest was the area where the oil was contained (in between the white absorbing strings). This region was identified using thresholding and edge detection image processing techniques. Figure 7b shows the result of this step.

3. Transformation of the contained region shown in Figure 7b to a rectangular region. The region inside the white absorbing bands was a deformed rectangle (because of the current). To transform the deformed region back into a rectangle, we first identified its corners and then applied a transformation matrix to the image (see Figure 7c and Figure 8c, where this transformation was applied to both the binarized RGB image and the infrared image).

4. Estimation of the amount of oil inside the area. Using a thresholding algorithm, it was possible to estimate the amount of oil inside the area (thresholding means that we classify the pixels in an image based on their intensity or color value). This could be done accurately because the conditions were optimal: there was no direct sunlight or other disturbance in the image. This estimate serves as the ground truth image. We save the resulting binary image (oil = 1, no oil = 0) with the same name as the corresponding IR image. The result of applying the thresholding algorithm can be seen in Figure 7c.
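
Steps 3 and 4 above can be sketched with NumPy alone. This is an illustrative sketch under stated assumptions, not the authors' implementation: it treats the "transformation matrix" as a projective (homography) matrix estimated from the four corners, uses nearest-neighbour resampling on a grayscale image, and assumes oil appears darker than water. The corner coordinates, the threshold value, and all function names are hypothetical.

```python
import numpy as np


def homography_from_corners(src, dst):
    """Solve for the 3x3 matrix H that maps four src (x, y) points onto dst."""
    rows, rhs = [], []
    for (x, y), (u, v) in zip(src, dst):
        rows.append([x, y, 1, 0, 0, 0, -u * x, -u * y])
        rows.append([0, 0, 0, x, y, 1, -v * x, -v * y])
        rhs.extend([u, v])
    h = np.linalg.solve(np.array(rows, dtype=float), np.array(rhs, dtype=float))
    return np.append(h, 1.0).reshape(3, 3)


def warp_to_rectangle(gray, corners, out_w, out_h):
    """Resample the quadrilateral `corners` of a 2-D image into a rectangle."""
    rect = [(0, 0), (out_w - 1, 0), (out_w - 1, out_h - 1), (0, out_h - 1)]
    # Inverse warping: map every output pixel back into the source image.
    to_source = homography_from_corners(rect, corners)
    xs, ys = np.meshgrid(np.arange(out_w), np.arange(out_h))
    pts = np.stack([xs.ravel(), ys.ravel(), np.ones(xs.size)])
    sx, sy, sw = to_source @ pts
    sx = np.clip(np.rint(sx / sw).astype(int), 0, gray.shape[1] - 1)
    sy = np.clip(np.rint(sy / sw).astype(int), 0, gray.shape[0] - 1)
    return gray[sy, sx].reshape(out_h, out_w)


def oil_mask(gray, threshold):
    """Binary ground truth: 1 where a pixel is darker than `threshold` (assumed oil)."""
    return (gray < threshold).astype(np.uint8)


# Synthetic stand-in for a grayscale frame: bright water with one dark patch.
frame = np.full((100, 100), 200, dtype=np.uint8)
frame[40:60, 40:60] = 50
# Hypothetical corner coordinates (x, y), clockwise from the top-left corner.
corners = [(12, 8), (90, 14), (85, 92), (9, 86)]
rectified = warp_to_rectangle(frame, corners, out_w=80, out_h=80)
mask = oil_mask(rectified, threshold=128)
print("estimated oil fraction:", mask.mean())
```

Applying the same `corners` to the IR frame would keep the rectified IR image and the binary mask pixel-aligned, which is what a segmentation network needs from its input and target.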

3.6. Post Processing

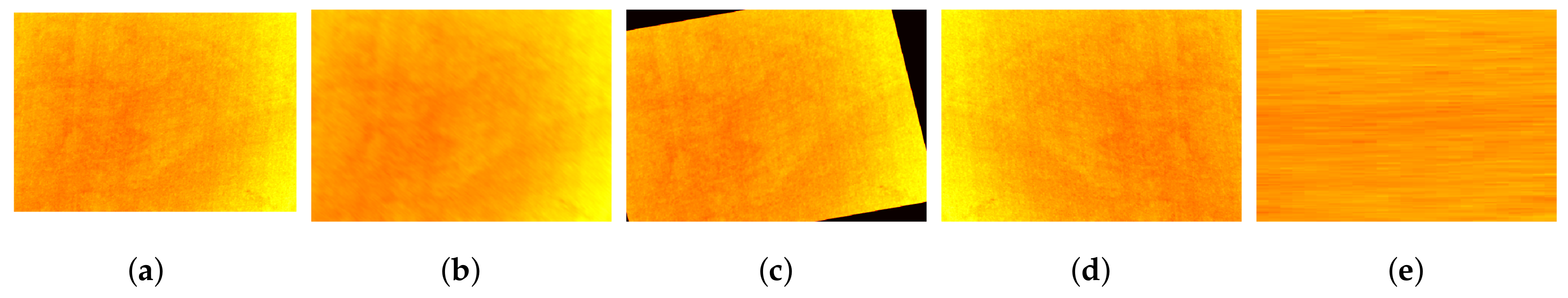

3.7. Nighttime Detection

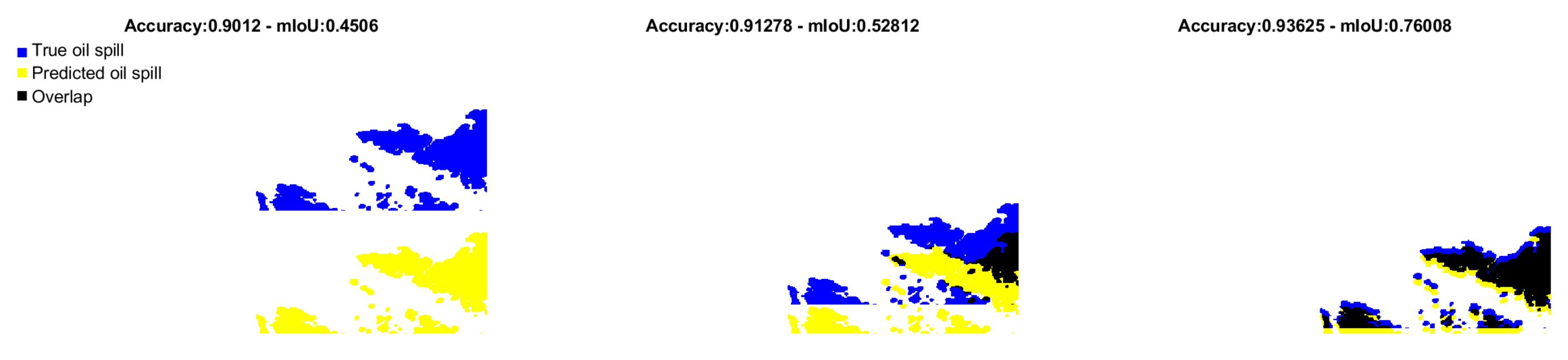

4. Results

5. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| MDPI | Multidisciplinary Digital Publishing Institute |
| DOAJ | Directory of Open Access Journals |
| UAV | Unmanned Aerial Vehicle |
| UV | Ultraviolet |
| mIoU | mean Intersection over Union |
| NIR | Near Infrared |
| SWIR | Short Wave Infrared |
| CNN | Convolutional Neural Network |


| Feature Extractor | Segmentation Architecture | Optimizer | Mean IoU | Validation Mean IoU |
|---|---|---|---|---|
| MobileNet | FCN 8 | RMSprop | 0.89 | 0.89 |
| MobileNet | FCN 32 | Adamax | 0.88 | 0.88 |
| MobileNet | Unet | RMSprop | 0.87 | 0.87 |
| MobileNet | Segnet | RMSprop | 0.83 | 0.84 |
| ResNet 50 | Segnet | Adamax | 0.79 | 0.79 |
| ResNet 50 | Pspnet | RMSprop | 0.75 | 0.75 |
| Pspnet | Pspnet | Adamax | 0.65 | 0.65 |
| VGG | FCN 32 | Adadelta | 0.64 | 0.64 |
| FCN 32 | FCN 32 | Adadelta | 0.60 | 0.60 |
| FCN 8 | FCN 8 | Adadelta | 0.59 | 0.60 |
| VGG | Segnet | Adamax | 0.60 | 0.60 |
| VGG | Pspnet | RMSprop | 0.59 | 0.59 |
| Unet | Unet-mini | RMSprop | 0.55 | 0.55 |
| ResNet 50 | Unet | Adadelta | 0.49 | 0.49 |
| VGG | Unet | RMSprop | 0.47 | 0.48 |
| VGG | FCN 8 | Adadelta | 0.44 | 0.44 |
| Unet | Unet | RMSprop | 0.41 | 0.41 |
| Segnet | Segnet | RMSprop | 0.38 | 0.38 |
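
The mean IoU values in the table can be related to the binary masks (oil = 1, no oil = 0) with a short sketch. It assumes the common definition of mIoU as the per-class intersection over union averaged over the classes present; the exact averaging used for the table is not stated here, and the function name `mean_iou` is hypothetical.

```python
import numpy as np


def mean_iou(pred, truth, num_classes=2):
    """Average the intersection-over-union of each class over all classes."""
    ious = []
    for c in range(num_classes):
        p, t = pred == c, truth == c
        union = np.logical_or(p, t).sum()
        if union == 0:
            continue  # class absent from both masks, so it is skipped
        ious.append(np.logical_and(p, t).sum() / union)
    return float(np.mean(ious))


truth = np.array([[1, 1, 0, 0],
                  [1, 1, 0, 0]])
pred = np.array([[1, 0, 0, 0],
                 [1, 1, 0, 0]])
# Oil class: 3 shared pixels / 4 in the union = 0.75
# Background class: 4 shared pixels / 5 in the union = 0.80
print(mean_iou(pred, truth))
```

Averaging over both classes means a model that misses small spills is still penalized, even when most pixels are background.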

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Share and Cite

De Kerf, T.; Gladines, J.; Sels, S.; Vanlanduit, S. Oil Spill Detection Using Machine Learning and Infrared Images. Remote Sens. 2020, 12, 4090. https://doi.org/10.3390/rs12244090
