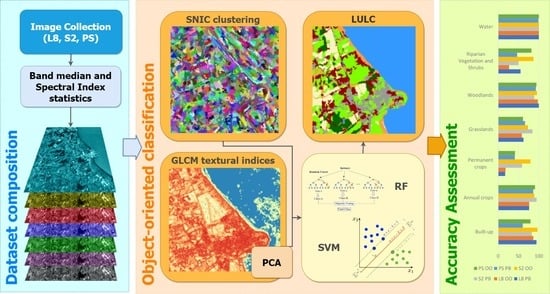

Object-Oriented LULC Classification in Google Earth Engine Combining SNIC, GLCM, and Machine Learning Algorithms

Abstract

1. Introduction

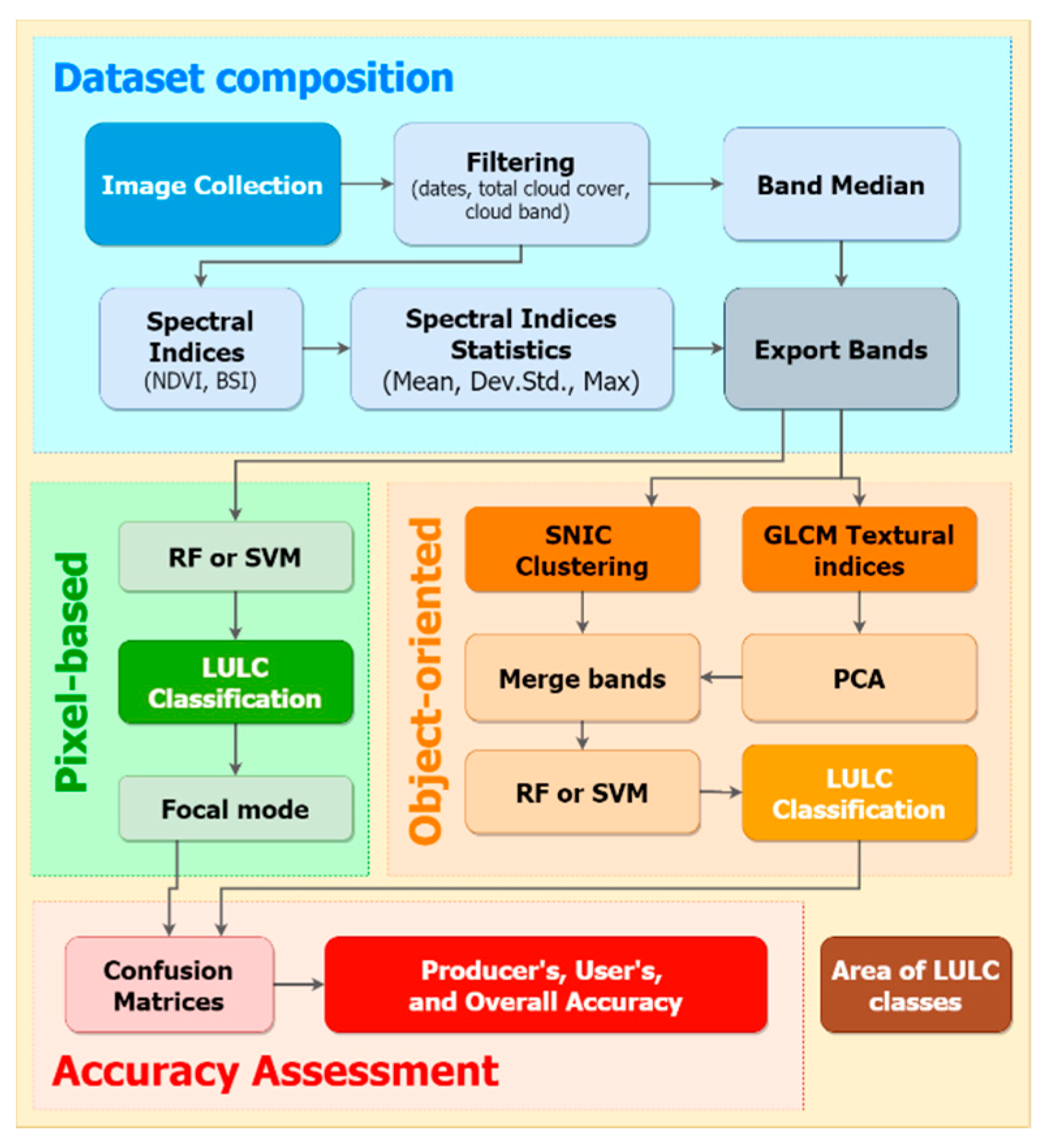

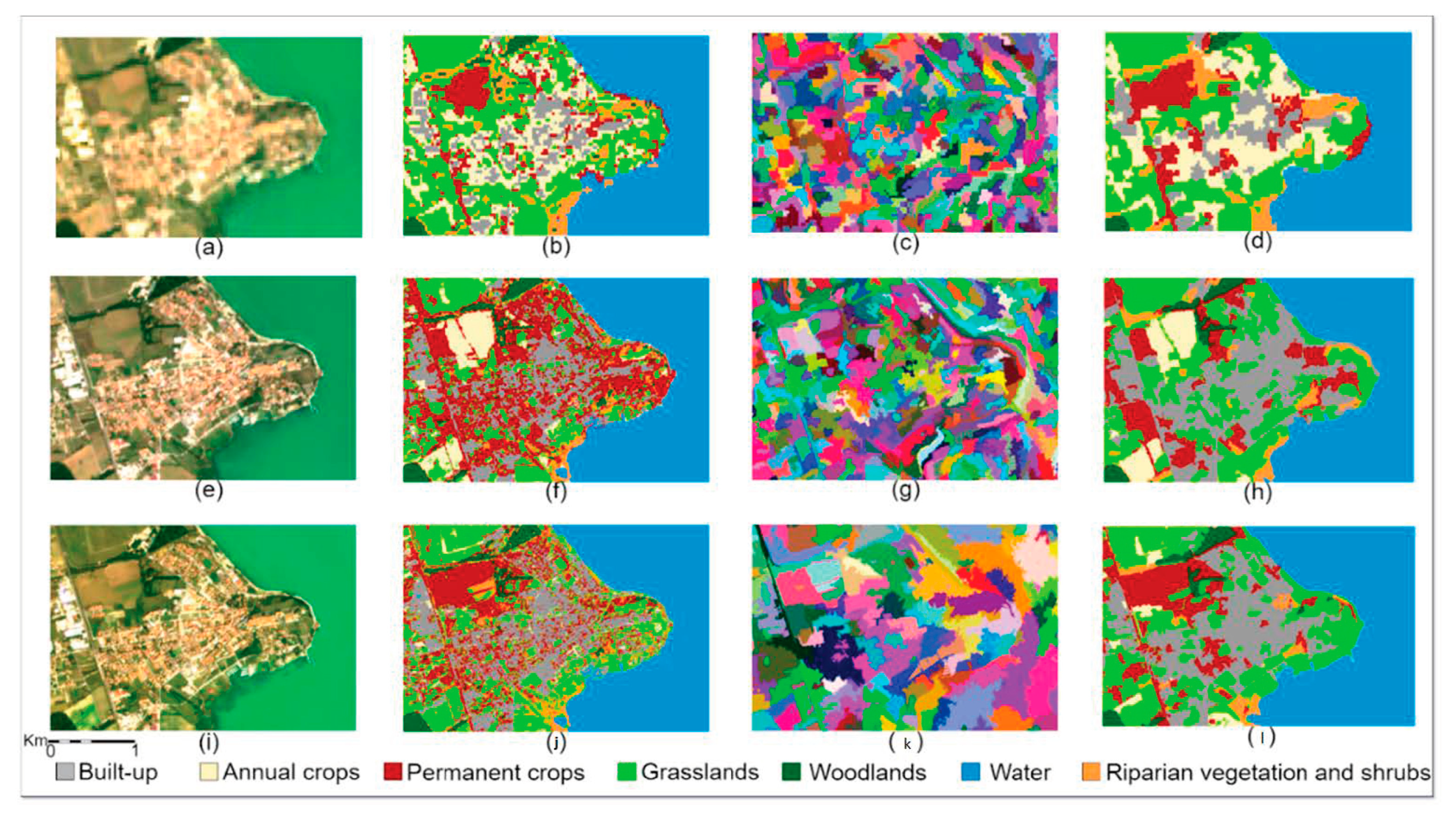

2. Materials and Methods

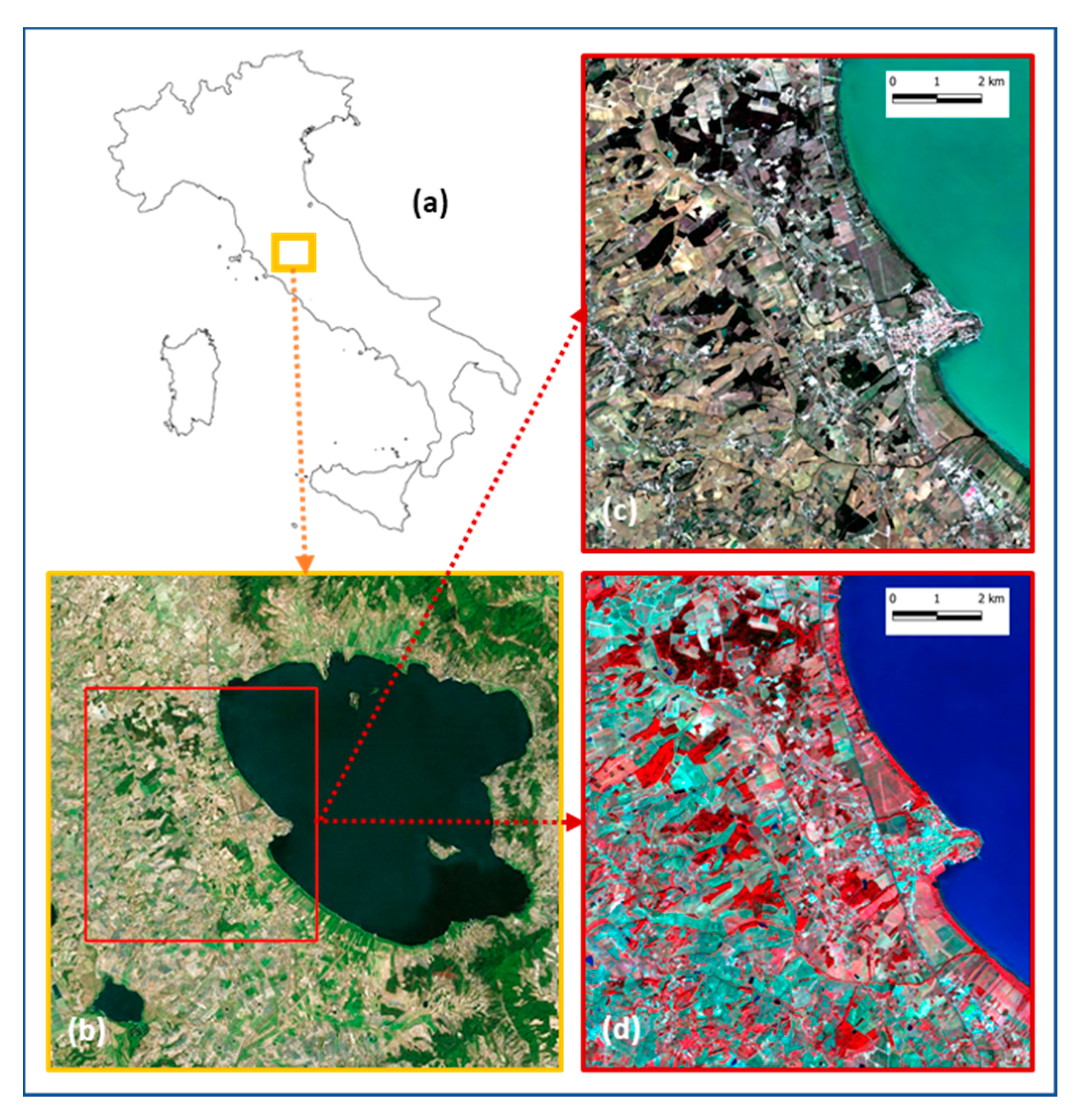

2.1. Study Area

2.2. Training and Validation Sample Data

2.3. Methodology

2.3.1. Dataset Composition

- roi (region of interest), a polygon used to delimit the study area;

- period of interest, defined by a start date and an end date (both in MM-DD-YYYY format);

- inBands, the input bands selected from the available L8 or S2 bands [45];

- outBands, the output bands of the final dataset, selected from the median of the inBands and from the mean, maximum, and standard deviation of the NDVI and BSI indices.
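
The per-pixel logic behind the outBands can be illustrated with a minimal pure-Python sketch. It assumes that a pixel's cloud-free observations are already available as dictionaries of surface reflectance keyed by hypothetical band names (`blue`, `red`, `nir`, `swir1`). It computes NDVI as (NIR - Red) / (NIR + Red) and BSI as ((SWIR1 + Red) - (NIR + Blue)) / ((SWIR1 + Red) + (NIR + Blue)), and then reduces the time series. The output names (`red_median`, `NDVI_mean`, ...) are illustrative, and the use of the population standard deviation is an assumption. In Earth Engine, the same reductions would be applied to an image collection rather than to Python lists.

```python
from statistics import mean, median, pstdev


def ndvi(nir, red):
    """Normalized Difference Vegetation Index."""
    return (nir - red) / (nir + red)


def bsi(swir1, red, nir, blue):
    """Bare Soil Index: ((SWIR1 + Red) - (NIR + Blue)) / ((SWIR1 + Red) + (NIR + Blue))."""
    soil = swir1 + red
    veg = nir + blue
    return (soil - veg) / (soil + veg)


def compose_pixel(observations, in_bands):
    """Reduce one pixel's time series to median bands plus NDVI/BSI statistics.

    observations: list of dicts mapping a (hypothetical) band name to reflectance
    in_bands: bands whose temporal median is kept
    """
    out = {f"{b}_median": median(o[b] for o in observations) for b in in_bands}
    indices = {
        "NDVI": [ndvi(o["nir"], o["red"]) for o in observations],
        "BSI": [bsi(o["swir1"], o["red"], o["nir"], o["blue"]) for o in observations],
    }
    for name, series in indices.items():
        out[f"{name}_mean"] = mean(series)
        out[f"{name}_max"] = max(series)
        out[f"{name}_stdDev"] = pstdev(series)  # population std (assumption)
    return out


# Usage: three invented observations of a single vegetated pixel.
obs = [
    {"blue": 0.05, "red": 0.04, "nir": 0.36, "swir1": 0.16},
    {"blue": 0.06, "red": 0.08, "nir": 0.32, "swir1": 0.20},
    {"blue": 0.07, "red": 0.12, "nir": 0.28, "swir1": 0.24},
]
print(compose_pixel(obs, in_bands=["red", "nir"]))
```

Keeping both central-tendency and spread statistics of the indices lets a classifier separate classes that look alike on a single date but differ in their seasonal behavior.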

2.3.2. LULC Classification

- roi: region of interest;

- newfc: a collection of features containing all training data labeled with codes corresponding to LULC classes;

- valpnts: validation points, randomly generated and manually labeled with the same LULC codes, used to assess the model’s accuracy;

- dataset: previously generated in the “Dataset composition” step.
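
The object-oriented variant differs from the pixel-based one mainly in that features are summarized per segment before classification. The pure-Python sketch below assumes a segment-label raster already exists (for example, the cluster ids produced by a SNIC segmentation) and represents rasters as nested lists. `segment_means` averages every band inside each segment, and a nearest-centroid rule then labels each segment. The nearest-centroid classifier is a deliberately simple stand-in for the RF and SVM classifiers, not the workflow's method, and all function names are hypothetical.

```python
from collections import defaultdict
from math import dist


def segment_means(labels, bands):
    """Average each band within each segment.

    labels: 2-D list of segment ids (e.g. a SNIC cluster raster)
    bands: dict of band name -> 2-D list of pixel values, same shape as labels
    Returns: dict of segment id -> mean values, ordered by sorted band name.
    """
    names = sorted(bands)
    sums = defaultdict(lambda: [0.0] * len(names))
    counts = defaultdict(int)
    for r, row in enumerate(labels):
        for c, seg in enumerate(row):
            counts[seg] += 1
            for k, name in enumerate(names):
                sums[seg][k] += bands[name][r][c]
    return {seg: [s / counts[seg] for s in sums[seg]] for seg in sums}


def train_centroids(samples):
    """samples: list of (feature vector, class code); returns class code -> centroid."""
    grouped = defaultdict(list)
    for features, code in samples:
        grouped[code].append(features)
    return {
        code: [sum(col) / len(col) for col in zip(*rows)]
        for code, rows in grouped.items()
    }


def classify_segments(seg_features, centroids):
    """Label every segment with the class of the nearest centroid (Euclidean distance)."""
    return {
        seg: min(centroids, key=lambda code: dist(feats, centroids[code]))
        for seg, feats in seg_features.items()
    }


# Usage: a 2 x 3 image split into two segments, with two hypothetical bands.
labels = [[1, 1, 2], [1, 2, 2]]
bands = {
    "nir": [[0.40, 0.42, 0.10], [0.38, 0.12, 0.08]],
    "red": [[0.05, 0.04, 0.09], [0.06, 0.10, 0.11]],
}
features = segment_means(labels, bands)
centroids = train_centroids(
    [([0.45, 0.05], "vegetation"), ([0.35, 0.07], "vegetation"), ([0.09, 0.11], "other")]
)
print(classify_segments(features, centroids))  # {1: 'vegetation', 2: 'other'}
```

Because every pixel of a segment shares one feature vector, the resulting map contains no isolated misclassified pixels inside a segment, which is the usual motivation for the object-oriented approach.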

2.3.3. Accuracy Assessment

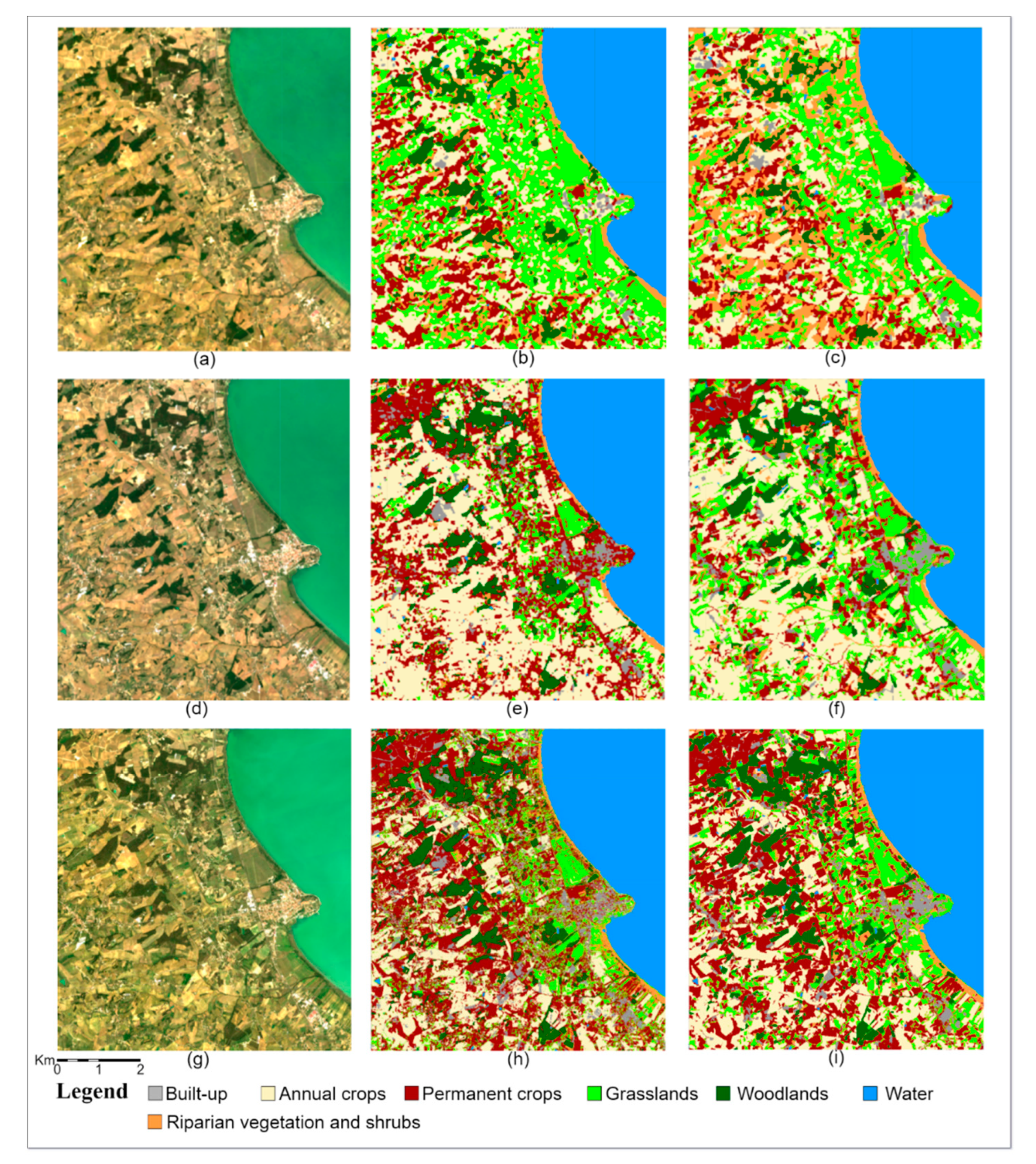

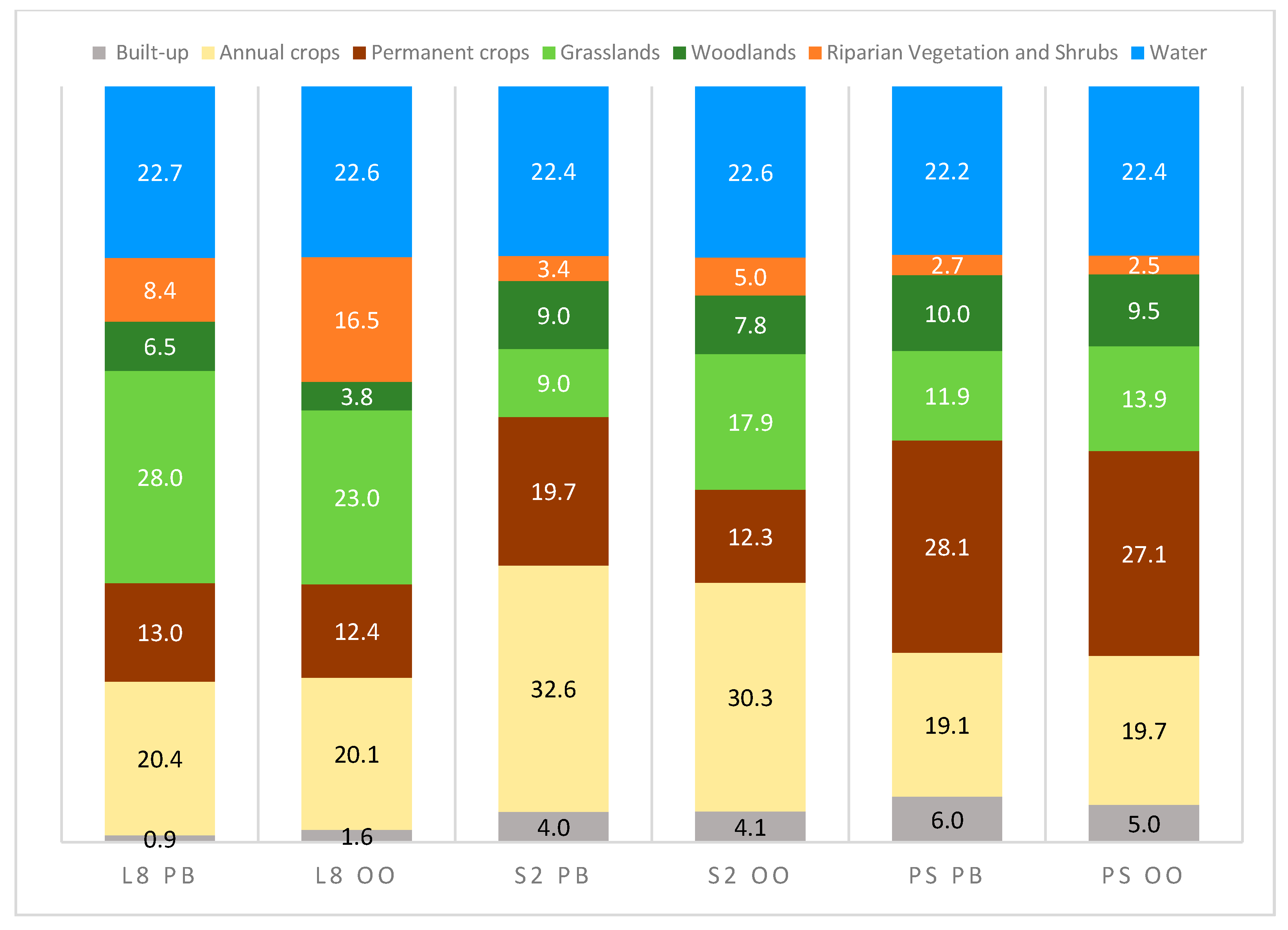

3. Results

4. Discussion

5. Conclusions

Supplementary Materials

Author Contributions

Funding

Conflicts of Interest

References

- Gorelick, N.; Hancher, M.; Dixon, M.; Ilyushchenko, S.; Thau, D.; Moore, R. Google Earth Engine: Planetary-scale geospatial analysis for everyone. Remote Sens. Environ. 2017, 202.

- Shalaby, A.; Tateishi, R. Remote sensing and GIS for mapping and monitoring land cover and land-use changes in the Northwestern coastal zone of Egypt. Appl. Geogr. 2007, 27.

- Vizzari, M.; Sigura, M. Landscape sequences along the urban–rural–natural gradient: A novel geospatial approach for identification and analysis. Landsc. Urban Plan. 2015, 140, 42–55.

- Vizzari, M.; Hilal, M.; Sigura, M.; Antognelli, S.; Joly, D. Urban-rural-natural gradient analysis with CORINE data: An application to the metropolitan France. Landsc. Urban Plan. 2018, 171.

- Griffiths, P.; van der Linden, S.; Kuemmerle, T.; Hostert, P. A Pixel-Based Landsat Compositing Algorithm for Large Area Land Cover Mapping. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2013, 6.

- Hermosilla, T.; Wulder, M.A.; White, J.C.; Coops, N.C.; Hobart, G.W. Disturbance-Informed Annual Land Cover Classification Maps of Canada’s Forested Ecosystems for a 29-Year Landsat Time Series. Can. J. Remote Sens. 2018, 67–68.

- Pfeifer, M.; Disney, M.; Quaife, T.; Marchant, R. Terrestrial ecosystems from space: A review of earth observation products for macroecology applications. Glob. Ecol. Biogeogr. 2012.

- Blaschke, T. Object based image analysis for remote sensing. ISPRS J. Photogramm. Remote Sens. 2010, 65, 2–16.

- Solano, F.; Di Fazio, S.; Modica, G. A methodology based on GEOBIA and WorldView-3 imagery to derive vegetation indices at tree crown detail in olive orchards. Int. J. Appl. Earth Obs. Geoinf. 2019, 83, 101912.

- Ren, X.; Malik, J. Learning a classification model for segmentation. In Proceedings of the IEEE International Conference on Computer Vision, Nice, France, 13–16 October 2003.

- Messina, G.; Peña, J.M.; Vizzari, M.; Modica, G. A Comparison of UAV and Satellites Multispectral Imagery in Monitoring Onion Crop. An Application in the ‘Cipolla Rossa di Tropea’ (Italy). Remote Sens. 2020, 12, 3424.

- Achanta, R.; Süsstrunk, S. Superpixels and polygons using simple non-iterative clustering. In Proceedings of the 30th IEEE Conference on Computer Vision and Pattern Recognition, CVPR 2017, Honolulu, HI, USA, 21–26 July 2017.

- Flanders, D.; Hall-Beyer, M.; Pereverzoff, J. Preliminary evaluation of eCognition object-based software for cut block delineation and feature extraction. Can. J. Remote Sens. 2003, 441–452.

- Hall-Beyer, M. Practical guidelines for choosing GLCM textures to use in landscape classification tasks over a range of moderate spatial scales. Int. J. Remote Sens. 2017, 38, 1312–1338.

- Ghimire, B.; Rogan, J.; Galiano, V.; Panday, P.; Neeti, N. An evaluation of bagging, boosting, and random forests for land-cover classification in Cape Cod, Massachusetts, USA. GIScience Remote Sens. 2012, 623–643.

- Foody, G.M. Status of land cover classification accuracy assessment. Remote Sens. Environ. 2002, 80, 185–201.

- Nery, T.; Sadler, R.; Solis-Aulestia, M.; White, B.; Polyakov, M.; Chalak, M. Comparing supervised algorithms in Land Use and Land Cover classification of a Landsat time-series. In Proceedings of the International Geoscience and Remote Sensing Symposium (IGARSS), Beijing, China, 10–15 July 2016.

- Tso, B.; Mather, P.M. Classification Methods for Remotely Sensed Data; CRC Press: Boca Raton, FL, USA, 2001.

- Gislason, P.O.; Benediktsson, J.A.; Sveinsson, J.R. Random forests for land cover classification. Pattern Recognit. Lett. 2006, 27, 294–300.

- Jin, Y.; Liu, X.; Chen, Y.; Liang, X. Land-cover mapping using Random Forest classification and incorporating NDVI time-series and texture: A case study of central Shandong. Int. J. Remote Sens. 2018, 8703–8723.

- Rodriguez-Galiano, V.F.; Chica-Rivas, M. Evaluation of different machine learning methods for land cover mapping of a Mediterranean area using multi-seasonal Landsat images and Digital Terrain Models. Int. J. Digit. Earth 2014, 492–509.

- De Luca, G.N.; Silva, J.M.; Cerasoli, S.; Araújo, J.; Campos, J.; Di Fazio, S.; Modica, G. Object-Based Land Cover Classification of Cork Oak Woodlands using UAV Imagery and Orfeo ToolBox. Remote Sens. 2019, 11, 1238.

- Mountrakis, G.; Im, J.; Ogole, C. Support vector machines in remote sensing: A review. ISPRS J. Photogramm. Remote Sens. 2011, 66, 247–259.

- Wang, Y.; Li, Z.; Zeng, C.; Xia, G.-S.; Shen, H. An Urban Water Extraction Method Combining Deep Learning and Google Earth Engine. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2020, 13, 769–782.

- Cheng, G.; Han, J.; Lu, X. Remote Sensing Image Scene Classification: Benchmark and State of the Art. Proc. IEEE 2017, 105, 1865–1883.

- Abadi, M.; Barham, P.; Chen, J.; Chen, Z.; Davis, A.; Dean, J.; Devin, M.; Ghemawat, S.; Irving, G.; Isard, M.; et al. TensorFlow: A system for large-scale machine learning. In Proceedings of the 12th USENIX Symposium on Operating Systems Design and Implementation, OSDI 2016, Savannah, GA, USA, 2–4 November 2016.

- Mahdianpari, M.; Salehi, B.; Mohammadimanesh, F.; Homayouni, S.; Gill, E. The first wetland inventory map of Newfoundland at a spatial resolution of 10 m using Sentinel-1 and Sentinel-2 data on the Google Earth Engine cloud computing platform. Remote Sens. 2019, 11, 43.

- Mahdianpari, M.; Salehi, B.; Mohammadimanesh, F.; Brisco, B.; Homayouni, S.; Gill, E.; DeLancey, E.R.; Bourgeau-Chavez, L. Big Data for a Big Country: The First Generation of Canadian Wetland Inventory Map at a Spatial Resolution of 10-m Using Sentinel-1 and Sentinel-2 Data on the Google Earth Engine Cloud Computing Platform. Can. J. Remote Sens. 2020, 46, 15–33.

- Paludo, A.; Becker, W.R.; Richetti, J.; Silva, L.C.D.A.; Johann, J.A. Mapping summer soybean and corn with remote sensing on Google Earth Engine cloud computing in Parana state–Brazil. Int. J. Digit. Earth 2020, 1–13.

- Ghorbanian, A.; Kakooei, M.; Amani, M.; Mahdavi, S.; Mohammadzadeh, A.; Hasanlou, M. Improved land cover map of Iran using Sentinel imagery within Google Earth Engine and a novel automatic workflow for land cover classification using migrated training samples. ISPRS J. Photogramm. Remote Sens. 2020, 167, 276–288.

- Djerriri, K.; Safia, A.; Adjoudj, R. Object-Based Classification of Sentinel-2 Imagery Using Compact Texture Unit Descriptors Through Google Earth Engine. In Proceedings of the 2020 Mediterranean and Middle-East Geoscience and Remote Sensing Symposium, M2GARSS 2020, Tunis, Tunisia, 9–11 March 2020.

- Firigato, J.O.N. Object Based Image Analysis on Google Earth Engine. Available online: https://medium.com/@joaootavionf007/object-based-image-analysis-on-google-earth-engine-1b80e9cb7312 (accessed on 3 July 2020).

- Stromann, O.; Nascetti, A.; Yousif, O.; Ban, Y. Dimensionality Reduction and Feature Selection for Object-Based Land Cover Classification based on Sentinel-1 and Sentinel-2 Time Series Using Google Earth Engine. Remote Sens. 2020, 12, 76.

- Xiong, J.; Thenkabail, P.S.; Tilton, J.C.; Gumma, M.K.; Teluguntla, P.; Oliphant, A.; Congalton, R.G.; Yadav, K.; Gorelick, N. Nominal 30-m cropland extent map of continental Africa by integrating pixel-based and object-based algorithms using Sentinel-2 and Landsat-8 data on Google Earth Engine. Remote Sens. 2017, 9, 1065.

- Godinho, S.; Guiomar, N.; Gil, A. Estimating tree canopy cover percentage in a Mediterranean silvopastoral systems using Sentinel-2A imagery and the stochastic gradient boosting algorithm. Int. J. Remote Sens. 2018, 4640–4662.

- Mananze, S.; Pôças, I.; Cunha, M. Mapping and assessing the dynamics of shifting agricultural landscapes using Google Earth Engine cloud computing, a case study in Mozambique. Remote Sens. 2020, 12, 1279.

- Sarzynski, T.; Giam, X.; Carrasco, L.; Lee, J.S.H. Combining Radar and Optical Imagery to Map Oil Palm Plantations in Sumatra, Indonesia, Using the Google Earth Engine. Remote Sens. 2020, 12, 1220.

- Smits, P.C.; Dellepiane, S.G.; Schowengerdt, R.A. Quality assessment of image classification algorithms for land-cover mapping: A review and a proposal for a cost-based approach. Int. J. Remote Sens. 1999, 1461–1486.

- Congalton, R.G. A review of assessing the accuracy of classifications of remotely sensed data. Remote Sens. Environ. 1991, 37.

- Richards, J.A.; Jia, X. Remote Sensing Digital Image Analysis: An Introduction; Springer: Berlin, Germany, 2006; ISBN 3540251286.

- Liu, C.; Frazier, P.; Kumar, L. Comparative assessment of the measures of thematic classification accuracy. Remote Sens. Environ. 2007, 107.

- Foody, G.M. Explaining the unsuitability of the kappa coefficient in the assessment and comparison of the accuracy of thematic maps obtained by image classification. Remote Sens. Environ. 2020, 239, 111630.

- Adam, H.E.; Csaplovics, E.; Elhaja, M.E. A comparison of pixel-based and object-based approaches for land use land cover classification in semi-arid areas, Sudan. In Proceedings of the IOP Conference Series: Earth and Environmental Science, Kuala Lumpur, Malaysia, 13–14 April 2016.

- Aggarwal, N.; Srivastava, M.; Dutta, M. Comparative Analysis of Pixel-Based and Object-Based Classification of High Resolution Remote Sensing Images—A Review. Int. J. Eng. Trends Technol. 2016, 38.

- Cecchetti, A.; Lazzerini, G. Vegetazione, Habitat di Interesse Comunitario, uso del Suolo del Parco del Lago Trasimeno. 2005. Available online: https://www.regione.umbria.it/documents/18/6593575/trasimeno_pptVAS_mag_15.pdf/698f64e0-fa49-4038-ba75-b063a0a80607?version=1.0 (accessed on 17 November 2020).

- Bodesmo, M.; Pacicco, L.; Romano, B.; Ranfa, A. The role of environmental and socio-demographic indicators in the analysis of land use changes in a protected area of the Natura 2000 Network: The case study of Lake Trasimeno, Umbria, Central Italy. Environ. Monit. Assess. 2012, 184.

- Giardino, C.; Bresciani, M.; Villa, P.; Martinelli, A. Application of Remote Sensing in Water Resource Management: The Case Study of Lake Trasimeno, Italy. Water Resour. Manag. 2010, 24.

- Hansen, M.C.; Roy, D.P.; Lindquist, E.; Adusei, B.; Justice, C.O.; Altstatt, A. A method for integrating MODIS and Landsat data for systematic monitoring of forest cover and change in the Congo Basin. Remote Sens. Environ. 2008, 112.

- Bwangoy, J.R.B.; Hansen, M.C.; Roy, D.P.; De Grandi, G.; Justice, C.O. Wetland mapping in the Congo Basin using optical and radar remotely sensed data and derived topographical indices. Remote Sens. Environ. 2010, 114.

- Lunetta, R.S.; Knight, J.F.; Ediriwickrema, J.; Lyon, J.G.; Worthy, L.D. Land-cover change detection using multi-temporal MODIS NDVI data. Remote Sens. Environ. 2006, 105.

- Woodcock, C.E.; Macomber, S.A.; Kumar, L. Vegetation mapping and monitoring. In Environmental Modelling with GIS and Remote Sensing; CRC Press: Boca Raton, FL, USA, 2010.

- Singh, R.P.; Singh, N.; Singh, S.; Mukherjee, S. Normalized Difference Vegetation Index (NDVI) Based Classification to Assess the Change in Land Use/Land Cover (LULC) in Lower Assam, India. Int. J. Adv. Remote Sens. GIS 2016, 5.

- Polykretis, C.; Grillakis, M.G.; Alexakis, D.D. Exploring the impact of various spectral indices on land cover change detection using change vector analysis: A case study of Crete Island, Greece. Remote Sens. 2020, 12, 319.

- Baetens, L.; Desjardins, C.; Hagolle, O. Validation of Copernicus Sentinel-2 cloud masks obtained from MAJA, Sen2Cor, and FMask processors using reference cloud masks generated with a supervised active learning procedure. Remote Sens. 2019, 11, 433.

- Nyland, K.E.; Gunn, G.E.; Shiklomanov, N.I.; Engstrom, R.N.; Streletskiy, D.A. Land cover change in the lower Yenisei River using dense stacking of Landsat imagery in Google Earth Engine. Remote Sens. 2018, 10, 1226.

- Xie, S.; Liu, L.; Zhang, X.; Yang, J.; Chen, X.; Gao, Y. Automatic land-cover mapping using Landsat time-series data based on Google Earth Engine. Remote Sens. 2019, 11, 3023.

- Chen, D.M.; Stow, D. The effect of training strategies on supervised classification at different spatial resolutions. Photogramm. Eng. Remote Sens. 2002, 68, 1155–1161.

- QGIS Development Team. QGIS Geographic Information System. Open Source Geospatial Foundation Project. 2020. Available online: http://qgis.osgeo.org (accessed on 16 November 2020).

- Al-Saady, Y.; Merkel, B.; Al-Tawash, B.; Al-Suhail, Q. Land use and land cover (LULC) mapping and change detection in the Little Zab River Basin (LZRB), Kurdistan Region, NE Iraq and NW Iran. FOG—Freib. Online Geosci. 2015, 43, 1–32.

- Forghani, A.; Cechet, B.; Nadimpalli, K. Object-based classification of multi-sensor optical imagery to generate terrain surface roughness information for input to wind risk simulation. In Proceedings of the International Geoscience and Remote Sensing Symposium (IGARSS), Barcelona, Spain, 23–27 July 2007.

- Ryherd, S.; Woodcock, C. Combining Spectral and Texture Data in the Segmentation of Remotely Sensed Images. Photogramm. Eng. Remote Sens. 1996, 62, 181–194.

- Mueed Choudhury, M.A.; Costanzini, S.; Despini, F.; Rossi, P.; Galli, A.; Marcheggiani, E.; Teggi, S. Photogrammetry and Remote Sensing for the identification and characterization of trees in urban areas. J. Phys. Conf. Ser. Inst. Phys. Publ. 2019, 1249, 12008.

| Class | Number of Validation Points |
|---|---|
| Built-up | 35 |
| Annual crops | 154 |
| Permanent crops | 40 |
| Grasslands | 59 |
| Woodlands | 65 |
| Riparian Vegetation or Shrubs | 17 |
| Water | 80 |
| Total | 450 |
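
Validation points such as those counted above are typically cross-tabulated against the classified map in a confusion matrix. This pure-Python sketch computes overall accuracy together with per-class producer's accuracy (correct points divided by the reference total, i.e. the row sum) and user's accuracy (correct points divided by the mapped total, i.e. the column sum). The row-is-reference, column-is-map convention and the 3 x 3 matrix in the usage lines are assumptions for illustration; the counts are invented and are not results of this study.

```python
def accuracy_report(matrix, classes):
    """Overall, producer's and user's accuracy from a confusion matrix.

    matrix[i][j] = number of validation points whose reference class is
    classes[i] and whose mapped (predicted) class is classes[j].
    """
    total = sum(sum(row) for row in matrix)
    correct = sum(matrix[i][i] for i in range(len(classes)))
    report = {"overall": correct / total}
    for i, name in enumerate(classes):
        reference_total = sum(matrix[i])              # row sum
        mapped_total = sum(row[i] for row in matrix)  # column sum
        report[name] = {
            # share of reference points of this class that were mapped correctly
            "producer": matrix[i][i] / reference_total if reference_total else float("nan"),
            # share of points mapped to this class that are actually correct
            "user": matrix[i][i] / mapped_total if mapped_total else float("nan"),
        }
    return report


# Usage with invented counts (not results of this study).
classes = ["Water", "Woodlands", "Grasslands"]
matrix = [
    [8, 2, 0],
    [1, 9, 0],
    [1, 1, 8],
]
report = accuracy_report(matrix, classes)
print(round(report["overall"], 3))  # 0.833
```

Because the validation classes are unevenly represented (e.g., 154 annual-crop points versus 17 riparian points), per-class producer's and user's accuracies are worth reporting alongside the overall figure.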

| Band | Description |
|---|---|
| Angular Second Moment (ASM) | Measures the uniformity (energy) of the gray-level distribution of the image |
| Contrast | Measures contrast based on local gray-level variation |
| Correlation | Measures the linear dependency of the gray levels of neighboring pixels |
| Entropy | Measures the degree of disorder among pixels in the image |
| Variance | Measures the dispersion of the gray-level distribution, emphasizing the visual edges of land-cover patches |
| Inverse Difference Moment (IDM) | Measures the smoothness (homogeneity) of the gray-level distribution |
| Sum Average (SAVG) | Measures the mean of the gray-level sum distribution of the image |
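
The texture bands listed above are Haralick-style statistics of a gray-level co-occurrence matrix (GLCM). Earth Engine exposes such measures through `ee.Image.glcmTexture()`, which expects integer-valued input and evaluates a neighborhood around each pixel. The pure-Python sketch below is only meant to make the definitions concrete: it builds a symmetric, normalized GLCM for a single pixel offset over a whole small image and then evaluates the seven measures in the table. Assumptions: gray levels are 0-based integers, entropy uses the natural logarithm, Variance is the sum of squares about the GLCM mean, and SAVG is therefore shifted by 2 relative to Haralick's 1-based formulation. Other implementations, including Earth Engine's, may differ in such details.

```python
from collections import Counter
from math import log


def glcm(image, levels, offset=(0, 1)):
    """Symmetric, normalized GLCM of a whole image for one pixel offset (dr, dc).

    image: 2-D list of integer gray levels in range(levels).
    """
    dr, dc = offset
    rows, cols = len(image), len(image[0])
    counts = Counter()
    for r in range(rows):
        for c in range(cols):
            r2, c2 = r + dr, c + dc
            if 0 <= r2 < rows and 0 <= c2 < cols:
                i, j = image[r][c], image[r2][c2]
                counts[(i, j)] += 1
                counts[(j, i)] += 1  # count the reverse pair too -> symmetric matrix
    total = sum(counts.values())
    return [[counts[(i, j)] / total for j in range(levels)] for i in range(levels)]


def texture_measures(p):
    """Compute the seven GLCM measures in the table from a normalized GLCM p."""
    n = len(p)
    cells = [(i, j, p[i][j]) for i in range(n) for j in range(n)]
    mu = sum(i * v for i, _, v in cells)  # GLCM mean (row and column means coincide)
    var = sum((i - mu) ** 2 * v for i, _, v in cells)
    p_sum = [0.0] * (2 * n - 1)  # distribution of i + j
    for i, j, v in cells:
        p_sum[i + j] += v
    return {
        "ASM": sum(v * v for _, _, v in cells),
        "Contrast": sum((i - j) ** 2 * v for i, j, v in cells),
        "Correlation": (sum((i - mu) * (j - mu) * v for i, j, v in cells) / var
                        if var else float("nan")),
        "Entropy": -sum(v * log(v) for _, _, v in cells if v > 0),
        "Variance": var,
        "IDM": sum(v / (1 + (i - j) ** 2) for i, j, v in cells),
        "SAVG": sum(k * pk for k, pk in enumerate(p_sum)),
    }


# Usage: a 2 x 2 checkerboard, each pixel compared with its right-hand neighbor.
print(texture_measures(glcm([[0, 1], [1, 0]], levels=2)))
```

For this checkerboard every neighboring pair differs, so Contrast reaches its maximum for two gray levels and Correlation is -1, whereas a uniform patch gives ASM = 1 and Contrast = 0.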

| Sat | Classifier | PB | OO without GLCM: 5 (35) | OO without GLCM: 10 (40) | OO without GLCM: 15 (45) | OO without GLCM: 20 (50) | OO with GLCM: 5 (35) | OO with GLCM: 10 (40) | OO with GLCM: 15 (45) | OO with GLCM: 20 (50) |
|---|---|---|---|---|---|---|---|---|---|---|
| L8 | RF | 72.7 | 68.0 | 58.9 | 48.7 | 38.7 | 64.0 | 57.3 | 54.6 | 58.4 |
| L8 | SVM | 79.1 | 78.4 | 71.3 | 61.1 | 73.1 | 70.4 | 67.6 | 61.3 | 64.4 |
| S2 | RF | 82 | 83.6 | 82.2 | 86.6 | 83.3 | 83.5 | 83.8 | 89.3 | 82.9 |
| S2 | SVM | 80.2 | 80.9 | 84.2 | 85.3 | 82.4 | 83.8 | 86.2 | 86.9 | 84 |
| PS | RF | 74.2 | 74 | 72.4 | 71.8 | 70.4 | 76.7 | 77.9 | 76.3 | 72.9 |
| PS | SVM | 74.8 | 72.4 | 73.1 | 70.9 | 70.8 | 74.9 | 73.9 | 73.6 | 71.7 |


© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Tassi, A.; Vizzari, M. Object-Oriented LULC Classification in Google Earth Engine Combining SNIC, GLCM, and Machine Learning Algorithms. Remote Sens. 2020, 12, 3776. https://doi.org/10.3390/rs12223776
