Abstract

The retrieval of aerosol and cloud properties such as their optical thickness and/or layer/top height requires the selection of a model that describes their microphysical properties. We demonstrate that, if there is not enough information for an appropriate microphysical model selection, the accuracy of the solution can be improved if the model uncertainty is taken into account and appropriately quantified. For this purpose, we design a retrieval algorithm accounting for the uncertainty in model selection. The algorithm is based on (i) the computation of each model solution using the iteratively regularized Gauss–Newton method, (ii) the linearization of the forward model around the solution, and (iii) the maximum marginal likelihood estimation and the generalized cross-validation to estimate the optimal model. The algorithm is applied to the retrieval of aerosol optical thickness and aerosol layer height from synthetic measurements corresponding to the Earth Polychromatic Imaging Camera (EPIC) instrument onboard the Deep Space Climate Observatory (DSCOVR) satellite. Our numerical simulations show that the heuristic approach based on the solution minimizing the residual, which is frequently used in the literature, yields completely unrealistic results when both the aerosol model and the surface albedo are unknown.

1. Introduction

The limited information provided by satellite measurements does not allow for a complete determination of aerosol and cloud properties, in particular of their microphysical properties. To deal with this problem, a set of models describing the microphysical properties is typically used. For example, the aerosol models implemented in the Moderate Resolution Imaging Spectroradiometer (MODIS) aerosol algorithm over land [1] consist of three spherical, fine-dominated types and one spheroidal, coarse-dominated type, while typical cloud models consist of water and ice clouds with a predefined particle shape and size. In this regard, a priori information such as the selection of a microphysical model is an essential part of the retrieval process. Standard retrieval algorithms usually ignore model uncertainty, i.e., a model is chosen from a given set of candidate models, and the retrieval is performed as if the selected model represented the true state. This approach is risky and may result in large errors in the retrieved parameters if the model does not reflect the real scenario. An efficient way to quantify the uncertainty in model selection is the Bayesian approach and, in particular, Bayesian model selection and model averaging [2]. By model selection, we mean the specific problem of choosing the most suitable model from a given set of candidate models. In general, model selection is not a trivial task because, for a given measurement, several models may fit the data equally well. Bayesian model selection and averaging were used, among others, in Refs. [3,4] to study uncertainty quantification in remote sensing of aerosols from Ozone Monitoring Instrument (OMI) data.

The key quantity in Bayesian model selection is relative evidence, which is a measure of how well the model fits the measurement. This is expressed in terms of the marginal likelihood, which is the integral over the state vector space of the a priori density times the likelihood density (see below). An accurate computation of the marginal likelihood is not a trivial task because some parameters characterizing the a priori and likelihood densities, e.g., the data error variance and the a priori state variance, are not precisely known and must be estimated. Moreover, the integral over the state vector space cannot be computed analytically because the likelihood density depends on the nonlinear forward model, for which an analytical representation is not available.

In this paper, we aim to overcome these difficulties of the Bayesian approach by using:

- an iteratively regularized Gauss–Newton method for computing the solution of each model and estimating the ratio of the data error variance and the a priori state variance;

- a linearization of the forward model around the solution for integrating the likelihood density over the state vector;

- parameter choice methods from linear regularization theory for estimating the optimal model and the data error variance.

The paper is organized as follows. In Section 2, we derive a scaled data model with white noise in order to simplify the subsequent analysis. Section 3 is devoted to a review of the Bayesian parameter estimation and model selection in order to describe the problem and clarify the nomenclature. In Section 4, we summarize the iteratively regularized Gauss–Newton method and emphasize some special features of this method, including the estimation of the ratio of the data error variance and the a priori state variance. In Section 5, we assume a linearization of the forward model around the solution and, under this assumption, extend some regularization parameter choice methods for linear problems (the maximum marginal likelihood estimation and the generalized cross-validation) for data error variance estimation and model selection. Section 6 summarizes the computational steps of the proposed retrieval algorithm. In Section 7, we apply the algorithm to the retrieval of aerosol optical thickness and aerosol layer height from the Earth Polychromatic Imaging Camera (EPIC)/Deep Space Climate Observatory (DSCOVR) measurements.

2. Data Model

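Consider $N_m$ microphysical models, and let $\mathbf{F}_m(\mathbf{x})$ be the forward model corresponding to the model $m$, for $m = 1, \ldots, N_m$. More specifically, in our analysis, $\mathbf{F}_m(\mathbf{x})$ is the vector of the logarithms of the simulated radiances $I_m(\lambda_i, \mathbf{x})$ at all wavelengths $\lambda_i$, $i = 1, \ldots, N_\lambda$, i.e., $\mathbf{F}_m(\mathbf{x}) = [\ln I_m(\lambda_1, \mathbf{x}), \ldots, \ln I_m(\lambda_{N_\lambda}, \mathbf{x})]^T$, and $\mathbf{x}$ is the state vector encapsulating the atmospheric parameters to be retrieved, e.g., aerosol optical thickness and layer height.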

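The nonlinear data model under examination consists of the equations:

$$\mathbf{y}^\delta = \mathbf{y} + \boldsymbol{\delta}_{\mathrm{mes}} \tag{1}$$

and:

$$\mathbf{y} = \mathbf{F}_m(\mathbf{x}) + \boldsymbol{\delta}_{\mathrm{mod},m}, \tag{2}$$

where $\mathbf{y}^\delta$ is the measurement vector or the noisy data vector, $\mathbf{y}$ the exact data vector, $\boldsymbol{\delta}_{\mathrm{mes}}$ the measurement error vector, and $\boldsymbol{\delta}_{\mathrm{mod},m}$ the model error vector, i.e., the error due to an inadequate model. Defining the (total) data error vector by:

$$\boldsymbol{\delta}_m = \boldsymbol{\delta}_{\mathrm{mes}} + \boldsymbol{\delta}_{\mathrm{mod},m},$$

the data model (1) and (2) becomes:

$$\mathbf{y}^\delta = \mathbf{F}_m(\mathbf{x}) + \boldsymbol{\delta}_m. \tag{3}$$

In a deterministic setting, $\boldsymbol{\delta}_m$ is characterized by the noise level $\Delta$ (defined as an upper bound for $\|\boldsymbol{\delta}_m\|$, i.e., $\|\boldsymbol{\delta}_m\| \le \Delta$), $\mathbf{x}$ is a deterministic vector, and we are faced with the solution of the nonlinear equation $\mathbf{F}_m(\mathbf{x}) = \mathbf{y}^\delta$. In a stochastic setting, $\mathbf{x}$ and $\boldsymbol{\delta}_m$ are random vectors, and the data model (3) is solved by means of a Bayesian approach.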

Using the prewhitening technique, we transform the data model (3) into a model with white noise. For this purpose, we take:

- as a Gaussian random vector with zero mean and covariance matrix:and

- as a Gaussian random vector with zero mean and covariance matrix:

If and are independent random vectors, then is a Gaussian random vector with zero mean and covariance matrix:

where:

is the variance of the data error vector and:

is the weighting factor giving the contribution of to the covariance matrix . After these preliminary constructions, we introduce the scaled data model:

where:

and:

is the scaling matrix. Taking into account that:

we see that by means of the prewhitening technique, the Gaussian random vector is transformed into the white noise . Here and in the following, the notation stands for a normal distribution with mean and covariance matrix .
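As a minimal sketch of the prewhitening step, the snippet below assumes a diagonal data-error covariance made of per-channel measurement variances plus a common model-error variance. It also normalizes the covariance by the mean of its diagonal to obtain the data error variance. That normalization is an illustrative choice, not one taken from the text. The function name `scaling_matrix` and all numerical values are hypothetical.

```python
import numpy as np


def scaling_matrix(var_mes, var_mod):
    """Prewhitening matrix for an assumed diagonal data-error covariance.

    var_mes : per-channel measurement-error variances (1-D array)
    var_mod : common model-error variance (diagonal approximation)
    Returns (P, sigma2) such that P @ C @ P.T == sigma2 * I.
    """
    c = np.asarray(var_mes, dtype=float) + var_mod  # diagonal of C_delta
    sigma2 = c.mean()                               # illustrative normalization (assumption)
    c_bar = c / sigma2                              # C_delta = sigma2 * C_bar
    P = np.diag(1.0 / np.sqrt(c_bar))               # P = C_bar^(-1/2)
    return P, sigma2


var_mes = np.array([1.0e-4, 2.0e-4, 1.5e-4, 3.0e-4])  # hypothetical values
P, sigma2 = scaling_matrix(var_mes, var_mod=5.0e-4)
C = np.diag(var_mes + 5.0e-4)
# The scaled error P @ delta has covariance sigma2 * I (white noise)
print(np.allclose(P @ C @ P.T, sigma2 * np.eye(4)))  # True
```

After this scaling, the retrieval works with the scaled data `P @ y` and the scaled forward model `P @ F(x)` as if the data error were white noise.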

The following comments can be taken into consideration:

- In [3], the covariance matrix was estimated by empirically exploring a set of residuals of model fits to the measurements. Essentially, is assumed to be in the form:where , , and l (representing the non-spectral diagonal variance, the spectral variance, and the correlation length, respectively) are computed by means of an empirical semivariogram, i.e., the variances of the residual differences are calculated for each wavelength pair with the distance , and a theoretical Gaussian variogram model is fitted to these empirical semivariogram values. As compared to Equation (6), in our analysis, we use the diagonal matrix approximation with .

- In principle, the scaling matrix depends on the model error variance through the weighting factor . However, for , we usually have for all . In this case, it follows that , together with and , are close to the identity matrix. This result does not mean that in our model, does not play any role; is included in , which is the subject of an estimation process.

3. Bayesian Approach

In this section, we review the basics of Bayesian parameter estimation and model selection by following the analysis given in Refs. [2,3].

3.1. Bayesian Parameter Estimation

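In statistical inversion theory, the state vector $\mathbf{x}$ is assumed to be a random vector, and in this regard, we take $\mathbf{x} \sim N(\mathbf{x}_a, \mathbf{C}_x)$, where $\mathbf{x}_a$ is the a priori state vector, the best beforehand estimate of the solution, and:

$$\mathbf{C}_x = \sigma_x^2\,\bar{\mathbf{C}}_x,$$

with $\sigma_x^2$ being the a priori state variance and $\bar{\mathbf{C}}_x$ the normalized a priori covariance matrix. Furthermore, the matrix $\mathbf{L}$ is defined through the Cholesky factorization:

$$\bar{\mathbf{C}}_x^{-1} = \mathbf{L}^T\mathbf{L},$$

and the parameter $\alpha$ is defined as:

$$\alpha = \frac{\sigma^2}{\sigma_x^2}.$$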

The data model (3) gives a relation between the three random vectors $\mathbf{x}$, $\boldsymbol{\delta}_m$, and $\mathbf{y}^\delta$, and therefore, their probability densities depend on each other. The following probability densities are relevant in Bayesian parameter estimation: (i) the a priori density $p(\mathbf{x}\mid m)$, which represents the conditional probability density of $\mathbf{x}$ given the model $m$ before performing the measurement $\mathbf{y}^\delta$, (ii) the likelihood density $p(\mathbf{y}^\delta\mid\mathbf{x}, m)$, which represents the conditional probability density of $\mathbf{y}^\delta$ given the state $\mathbf{x}$ and the model $m$, and (iii) the a posteriori density $p(\mathbf{x}\mid\mathbf{y}^\delta, m)$, which represents the conditional probability density of $\mathbf{x}$ given the data $\mathbf{y}^\delta$ and the model $m$.

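The Bayes theorem of inverse problems relates the a posteriori density to the likelihood density:

$$p(\mathbf{x}\mid\mathbf{y}^\delta, m) = \frac{p(\mathbf{y}^\delta\mid\mathbf{x}, m)\,p(\mathbf{x}\mid m)}{p(\mathbf{y}^\delta\mid m)}.$$

In Bayesian parameter estimation, the marginal likelihood density $p(\mathbf{y}^\delta\mid m)$, defined by:

$$p(\mathbf{y}^\delta\mid m) = \int p(\mathbf{y}^\delta\mid\mathbf{x}, m)\,p(\mathbf{x}\mid m)\,\mathrm{d}\mathbf{x}, \tag{8}$$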

plays the role of a normalization constant and is usually ignored. However, as we will see in the next section, this density is of particular importance in Bayesian model selection.

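For $\mathbf{x} \sim N(\mathbf{x}_a, \mathbf{C}_x)$ and $\boldsymbol{\delta}_m \sim N(\mathbf{0}, \sigma^2\mathbf{I})$, the probability densities $p(\mathbf{x}\mid m)$ and $p(\mathbf{y}^\delta\mid\mathbf{x}, m)$ are given by:

$$p(\mathbf{x}\mid m) = \frac{1}{\sqrt{(2\pi\sigma_x^2)^{N_x}\det\bar{\mathbf{C}}_x}}\exp\left[-\frac{1}{2\sigma_x^2}\left\|\mathbf{L}(\mathbf{x} - \mathbf{x}_a)\right\|^2\right] \tag{9}$$

and:

$$p(\mathbf{y}^\delta\mid\mathbf{x}, m) = \frac{1}{(2\pi\sigma^2)^{N_\lambda/2}}\exp\left[-\frac{1}{2\sigma^2}\left\|\mathbf{y}^\delta - \mathbf{F}_m(\mathbf{x})\right\|^2\right], \tag{10}$$

respectively, where $N_x$ is the number of components of the state vector. The Bayes formula yields the following expression for the a posteriori density:

$$p(\mathbf{x}\mid\mathbf{y}^\delta, m) \propto \exp\left[-V_m(\mathbf{x})\right], \tag{11}$$

where the a posteriori potential is defined by:

$$V_m(\mathbf{x}) = \frac{1}{2\sigma^2}\left[\left\|\mathbf{y}^\delta - \mathbf{F}_m(\mathbf{x})\right\|^2 + \alpha\left\|\mathbf{L}(\mathbf{x} - \mathbf{x}_a)\right\|^2\right]. \tag{12}$$

The maximum a posteriori estimator $\mathbf{x}_m^{\mathrm{map}}$ maximizing the conditional probability density $p(\mathbf{x}\mid\mathbf{y}^\delta, m)$ also minimizes the potential $V_m$, i.e.,

$$\mathbf{x}_m^{\mathrm{map}} = \arg\min_{\mathbf{x}} V_m(\mathbf{x}). \tag{13}$$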

3.2. Bayesian Model Selection

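The relative evidence, also known as the a posteriori probability of the model $m$ given the measurement $\mathbf{y}^\delta$, $p(m\mid\mathbf{y}^\delta)$, is used for model comparison. By the Bayes theorem, this conditional probability is given by:

$$p(m\mid\mathbf{y}^\delta) = \frac{p(\mathbf{y}^\delta\mid m)\,p(m)}{p(\mathbf{y}^\delta)}.$$

The law of total probability:

$$p(\mathbf{y}^\delta) = \sum_{m'=1}^{N_m} p(\mathbf{y}^\delta\mid m')\,p(m')$$

and the assumption that all models are equally likely, i.e., $p(m) = 1/N_m$, yield:

$$p(m\mid\mathbf{y}^\delta) = \frac{p(\mathbf{y}^\delta\mid m)}{\sum_{m'=1}^{N_m} p(\mathbf{y}^\delta\mid m')}. \tag{14}$$

Intuitively, the model with the highest evidence is the best among all the models involved, and in this regard, we define the maximum solution estimate as:

$$\hat{\mathbf{x}}_{\max} = \mathbf{x}_{m^\star}^{\mathrm{map}}, \qquad m^\star = \arg\max_m p(m\mid\mathbf{y}^\delta). \tag{15}$$

In practice, we can compare the models to see whether one of them clearly shows the highest evidence or whether several models have comparable values of evidence. When several models provide equally good fits to the measurement, we can use the Bayesian model averaging technique to combine the individual a posteriori densities weighted by their evidence. Specifically, using the relation:

$$p(\mathbf{x}, m\mid\mathbf{y}^\delta) = p(\mathbf{x}\mid\mathbf{y}^\delta, m)\,p(m\mid\mathbf{y}^\delta)$$

on the one hand, and $p(\mathbf{x}\mid\mathbf{y}^\delta) = \sum_{m=1}^{N_m} p(\mathbf{x}, m\mid\mathbf{y}^\delta)$ on the other hand, we are led to the Bayesian model averaging formula:

$$p(\mathbf{x}\mid\mathbf{y}^\delta) = \sum_{m=1}^{N_m} p(\mathbf{x}\mid\mathbf{y}^\delta, m)\,p(m\mid\mathbf{y}^\delta) \tag{16}$$

and, consequently, to the mean solution estimate:

$$\hat{\mathbf{x}}_{\mathrm{mean}} = \sum_{m=1}^{N_m} \mathbf{x}_m^{\mathrm{map}}\,p(m\mid\mathbf{y}^\delta). \tag{17}$$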
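The relative evidence and the two solution estimates can be sketched numerically as follows. The sketch assumes equal model priors and assumes that the logarithms of the marginal likelihoods and the per-model solutions are already available. It works with log-evidences and subtracts their maximum before exponentiating (a log-sum-exp normalization). This avoids underflow, because the marginal likelihoods of different models can differ by many orders of magnitude. The function `model_average` is hypothetical.

```python
import numpy as np


def model_average(log_evidence, solutions):
    """Relative evidence and solution estimates under equal model priors.

    log_evidence : log marginal likelihoods, one per model
    solutions    : per-model solutions, shape (n_models, n_state)
    """
    log_ev = np.asarray(log_evidence, dtype=float)
    w = np.exp(log_ev - log_ev.max())  # log-sum-exp trick avoids underflow
    w /= w.sum()                       # relative evidence p(m | y)
    x = np.asarray(solutions, dtype=float)
    x_max = x[np.argmax(w)]            # solution of the model with the highest evidence
    x_mean = w @ x                     # evidence-weighted average of the solutions
    return w, x_max, x_mean


# Two hypothetical models; the second is three times more likely than the first
w, x_max, x_mean = model_average([-10.0, -10.0 + np.log(3.0)],
                                 [[0.4, 2.0], [0.6, 3.0]])
```

In this example the relative evidences are 0.25 and 0.75. The maximum solution estimate is therefore the second model's solution, and the mean solution estimate is 0.25 × [0.4, 2.0] + 0.75 × [0.6, 3.0] = [0.55, 2.75].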

The above analysis shows that in Bayesian parameter estimation and model selection, we are faced with the following problems:

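- Problem 1. The computation of the maximum a posteriori estimate via Equation (13) requires the knowledge of the parameter $\alpha$, i.e., of the ratio of the data error variance and the a priori state variance, which is not known in advance.

- Problem 2. From Equations (15) and (17), we see that the solution estimates $\hat{\mathbf{x}}_{\max}$ and $\hat{\mathbf{x}}_{\mathrm{mean}}$ are expressed in terms of the relative evidence $p(m\mid\mathbf{y}^\delta)$, which, in turn, according to Equation (14), is expressed in terms of the marginal likelihood density $p(\mathbf{y}^\delta\mid m)$. In view of Equation (8), the computation of the marginal likelihood density requires the knowledge of the likelihood density $p(\mathbf{y}^\delta\mid\mathbf{x}, m)$ and therefore, in view of Equation (10), of the data error variance $\sigma^2$.

- Problem 3. The dependency of the likelihood density on the nonlinear forward model does not allow an analytical integration in Equation (8).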

4. Iteratively Regularized Gauss–Newton Method

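In the framework of Tikhonov regularization, a regularized solution to the nonlinear equation $\mathbf{F}_m(\mathbf{x}) = \mathbf{y}^\delta$ is computed as the minimizer of the Tikhonov function:

$$\mathcal{F}_\alpha(\mathbf{x}) = \left\|\mathbf{y}^\delta - \mathbf{F}_m(\mathbf{x})\right\|^2 + \alpha\left\|\mathbf{L}(\mathbf{x} - \mathbf{x}_a)\right\|^2, \tag{18}$$

that is,

$$\mathbf{x}_\alpha^\delta = \arg\min_{\mathbf{x}} \mathcal{F}_\alpha(\mathbf{x}). \tag{19}$$

In classical regularization theory, the first term on the right-hand side of Equation (18) is the squared residual, the second one is the penalty term, while $\alpha$ and $\mathbf{L}$ are the regularization parameter and the regularization matrix, respectively. From Equations (12) and (18), we see that $V_m(\mathbf{x}) = \mathcal{F}_\alpha(\mathbf{x})/(2\sigma^2)$, and from Equations (13) and (19), we deduce that $\mathbf{x}_m^{\mathrm{map}} = \mathbf{x}_\alpha^\delta$. Thus, the maximum a posteriori estimate coincides with the Tikhonov solution, and therefore, the Bayesian parameter estimation can be regarded as a stochastic version of the method of Tikhonov regularization with an a priori chosen regularization parameter $\alpha$.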
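For a linear forward model, the equivalence between the maximum a posteriori estimate and the Tikhonov solution can be checked numerically. Here the regularization parameter is taken as the ratio of the data error variance and the a priori state variance. In the linear Gaussian case the posterior is Gaussian, so its maximum coincides with its mean. The sketch below therefore compares the Tikhonov solution with the posterior mean written in the usual gain form. The matrices, variances, and a priori values are random or hypothetical.

```python
import numpy as np

rng = np.random.default_rng(3)
n, n_x = 6, 2
K = rng.standard_normal((n, n_x))                 # hypothetical linear forward model
x_a = np.array([0.5, 2.0])                        # a priori state
C_bar = np.array([[1.0, 0.3], [0.3, 2.0]])        # normalized a priori covariance
sigma2, sigma2_x = 0.01, 0.25                     # data error / a priori state variance
y = rng.standard_normal(n)                        # hypothetical measurement

# Tikhonov solution with L^T L = inv(C_bar) and alpha = sigma2 / sigma2_x
L = np.linalg.cholesky(np.linalg.inv(C_bar)).T    # upper factor, L.T @ L = inv(C_bar)
alpha = sigma2 / sigma2_x
x_tik = x_a + np.linalg.solve(K.T @ K + alpha * L.T @ L, K.T @ (y - K @ x_a))

# Gaussian posterior mean (equal to the MAP estimate) in gain form
C_x = sigma2_x * C_bar
gain = C_x @ K.T @ np.linalg.inv(K @ C_x @ K.T + sigma2 * np.eye(n))
x_post = x_a + gain @ (y - K @ x_a)

print(np.allclose(x_tik, x_post))                 # True
```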

In the framework of Tikhonov regularization, the computation of the optimal regularization parameter is a crucial issue. With too little regularization, reconstructions deviate significantly from the a priori, and the solution is said to be under-regularized. With too much regularization, the reconstructions are too close to the a priori, and the solution is said to be over-regularized. In the Bayesian framework, the optimal regularization parameter is identified as the true ratio of the data error variance and the a priori state variance.

Several regularization parameter choice methods were discussed in [5]. These include methods with constant regularization parameters, e.g., the maximum likelihood estimation, the generalized cross-validation, and the nonlinear L-curve method. Unfortunately, at present, there is no fail-safe regularization parameter choice method that guarantees small solution errors in all circumstances, that is, for any errors in the data vector.

The problems associated with regularization parameter selection are alleviated by the so-called iterative regularization methods. These approaches are less sensitive to overestimates of the regularization parameter, but require more iteration steps to achieve convergence. A representative iterative approach is the iteratively regularized Gauss–Newton method.

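At iteration step $k$ of the iteratively regularized Gauss–Newton method, the forward model is linearized around the current iterate $\mathbf{x}_k^\delta$,

$$\mathbf{F}_m(\mathbf{x}) \approx \mathbf{F}_m(\mathbf{x}_k^\delta) + \mathbf{K}_k\left(\mathbf{x} - \mathbf{x}_k^\delta\right),$$

where:

$$\mathbf{K}_k = \frac{\partial\mathbf{F}_m}{\partial\mathbf{x}}\left(\mathbf{x}_k^\delta\right)$$

is the Jacobian matrix at $\mathbf{x}_k^\delta$, and the nonlinear equation $\mathbf{F}_m(\mathbf{x}) = \mathbf{y}^\delta$ is replaced by its linearization:

$$\mathbf{K}_k\mathbf{p} = \mathbf{y}_k^\delta, \tag{20}$$

where:

$$\mathbf{p} = \mathbf{x} - \mathbf{x}_a$$

is the step vector with respect to the a priori and:

$$\mathbf{y}_k^\delta = \mathbf{y}^\delta - \mathbf{F}_m(\mathbf{x}_k^\delta) + \mathbf{K}_k\left(\mathbf{x}_k^\delta - \mathbf{x}_a\right)$$

is the linearized data vector at iteration step $k$.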

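Since the nonlinear problem is ill-posed, its linearization is also ill-posed. Therefore, the linearized Equation (20) is solved by means of Tikhonov regularization with the penalty term $\alpha_k\|\mathbf{L}\mathbf{p}\|^2$, where the regularization parameters are the terms of a decreasing (geometric) sequence, i.e., $\alpha_k = q\,\alpha_{k-1}$ with $0 < q < 1$. Note that in the method of Tikhonov regularization, the regularization parameter is kept constant during the iterative process. The Tikhonov function for the linearized equation takes the form:

$$\mathcal{F}_k^{\mathrm{lin}}(\mathbf{p}) = \left\|\mathbf{y}_k^\delta - \mathbf{K}_k\mathbf{p}\right\|^2 + \alpha_k\left\|\mathbf{L}\mathbf{p}\right\|^2,$$

and its minimizer is given by:

$$\mathbf{p}_k^\delta = \mathbf{K}_k^\dagger\mathbf{y}_k^\delta,$$

where:

$$\mathbf{K}_k^\dagger = \left(\mathbf{K}_k^T\mathbf{K}_k + \alpha_k\mathbf{L}^T\mathbf{L}\right)^{-1}\mathbf{K}_k^T$$

is the regularized generalized inverse at $\mathbf{x}_k^\delta$. The new iterate is computed as:

$$\mathbf{x}_{k+1}^\delta = \mathbf{x}_a + \mathbf{p}_k^\delta.$$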

For iterative regularization methods, the number of iteration steps $k$ plays the role of the regularization parameter, and the iterative process is stopped after an appropriate number of steps in order to avoid an uncontrolled amplification of the data errors. In fact, a mere minimization of the residual $\|\mathbf{r}_k^\delta\|$, where:

$$\mathbf{r}_k^\delta = \mathbf{y}^\delta - \mathbf{F}_m(\mathbf{x}_k^\delta)$$

is the residual vector at $\mathbf{x}_k^\delta$, leads to a semi-convergent behavior of the iterated solution: while the residual decreases as the number of iteration steps increases, the error in the solution starts to increase after an initial decay. A widely used a posteriori choice for the stopping index $k^\star$ is the discrepancy principle. According to this principle, the iterative process is terminated after $k^\star$ steps such that:

$$\left\|\mathbf{r}_{k^\star}^\delta\right\|^2 \le \eta\,\Delta^2 < \left\|\mathbf{r}_k^\delta\right\|^2 \quad \text{for all } k < k^\star,$$

with $\eta > 1$; hence, the regularized solution is $\mathbf{x}_{k^\star}^\delta$. Since the noise level $\Delta$ cannot be estimated a priori for many practical problems arising in atmospheric remote sensing, we adopt a practical approach. This is based on the observation that the squared residual $\|\mathbf{r}_k^\delta\|^2$ decreases during the iterative process and attains a plateau at approximately $\Delta^2$. Thus, if the nonlinear residuals converge to within a prescribed tolerance, we use the estimate:

$$\Delta^2 \approx \left\|\mathbf{r}_k^\delta\right\|^2,$$

where $k$ is the iteration step at which the convergence test is satisfied.

The above heuristic stopping rule does not have any mathematical justification, but works sufficiently well in practice.
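The following is a minimal sketch of the iteratively regularized Gauss–Newton iteration with a geometric sequence of regularization parameters and the plateau-based stopping rule described above. It assumes a small synthetic exponential-decay forward model, dense NumPy linear algebra, and hypothetical control parameters (`q`, `tol`, `eta`). It omits the step-length control of the full algorithm. The function `irgn` and the test problem are illustrative, not the authors' implementation.

```python
import numpy as np


def irgn(F, jac, y, x_a, L, alpha1, q=0.8, tol=1e-3, eta=1.05, max_iter=100):
    """Iteratively regularized Gauss-Newton (sketch).

    alpha_k decreases geometrically. The loop stops once the squared residual
    reaches a plateau, which is used as the squared noise level; the returned
    iterate is the first one passing the discrepancy-type test.
    """
    LtL = L.T @ L
    x, alpha = x_a.copy(), alpha1
    iterates, alphas, res2 = [], [], []
    for k in range(max_iter):
        K = jac(x)                                     # Jacobian at the current iterate
        yk = y - F(x) + K @ (x - x_a)                  # linearized data vector
        p = np.linalg.solve(K.T @ K + alpha * LtL, K.T @ yk)
        x = x_a + p                                    # new iterate
        r = y - F(x)                                   # nonlinear residual
        iterates.append(x.copy())
        alphas.append(alpha)
        res2.append(r @ r)
        if k > 0 and (res2[-2] - res2[-1]) / res2[-2] < tol:
            break                                      # residual plateau reached
        alpha *= q                                     # alpha_{k+1} = q * alpha_k
    delta2 = res2[-1]                                  # plateau ~ squared noise level
    k_star = next(k for k, rr in enumerate(res2) if rr <= eta * delta2)
    return iterates[k_star], alphas[k_star], delta2


# Hypothetical test problem: y(t) = a * exp(-b t) with state x = [a, b]
t = np.linspace(0.0, 4.0, 30)


def forward(x):
    return x[0] * np.exp(-x[1] * t)


def jacobian(x):
    e = np.exp(-x[1] * t)
    return np.column_stack([e, -x[0] * t * e])


rng = np.random.default_rng(1)
y = forward(np.array([2.0, 0.7])) + 0.01 * rng.standard_normal(t.size)
x_hat, alpha_hat, delta2 = irgn(forward, jacobian, y, x_a=np.array([1.0, 0.5]),
                                L=np.eye(2), alpha1=1.0)
```

With noise of standard deviation 0.01 on 30 samples, the plateau value `delta2` should be of the order of 30 × 0.01², and `x_hat` should be close to the true state [2.0, 0.7].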

Since the amount of regularization is gradually decreased during the iterative process, the iteratively regularized Gauss–Newton method can also handle problems that require little regularization.

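The numerical experiments performed in [5] showed that at the solution $\mathbf{x}_{k^\star}^\delta$, (i) $\alpha_{k^\star}$ is close to the optimal regularization parameter, and (ii) $\mathbf{x}_{k^\star}^\delta$ is close to the Tikhonov solution corresponding to the optimal regularization parameter. In this regard, we make the following assumptions:

- $\alpha_{k^\star}$ is an estimate for the optimal regularization parameter, and

- $\mathbf{x}_{k^\star}^\delta$ is the minimizer of the Tikhonov function with regularization parameter $\alpha_{k^\star}$, i.e., $\mathbf{x}_{k^\star}^\delta = \arg\min_{\mathbf{x}}\mathcal{F}_{\alpha_{k^\star}}(\mathbf{x})$.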

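Some consequences of the second assumption are the following. The optimality condition $\nabla\mathcal{F}_{\alpha_{k^\star}}(\mathbf{x}_{k^\star}^\delta) = \mathbf{0}$ yields:

$$\mathbf{K}_{k^\star}^T\left[\mathbf{y}^\delta - \mathbf{F}_m(\mathbf{x}_{k^\star}^\delta)\right] = \alpha_{k^\star}\mathbf{L}^T\mathbf{L}\left(\mathbf{x}_{k^\star}^\delta - \mathbf{x}_a\right)$$

and further,

$$\mathbf{x}_{k^\star}^\delta - \mathbf{x}_a = \mathbf{K}_{k^\star}^\dagger\mathbf{y}_{k^\star}^\delta,$$

where $\mathbf{K}_{k^\star}$ is the Jacobian matrix at $\mathbf{x}_{k^\star}^\delta$,

$$\mathbf{K}_{k^\star}^\dagger = \left(\mathbf{K}_{k^\star}^T\mathbf{K}_{k^\star} + \alpha_{k^\star}\mathbf{L}^T\mathbf{L}\right)^{-1}\mathbf{K}_{k^\star}^T,$$

and:

$$\mathbf{y}_{k^\star}^\delta = \mathbf{y}^\delta - \mathbf{F}_m(\mathbf{x}_{k^\star}^\delta) + \mathbf{K}_{k^\star}\left(\mathbf{x}_{k^\star}^\delta - \mathbf{x}_a\right).$$

Consequently, we see that:

$$\mathbf{p} = \mathbf{x}_{k^\star}^\delta - \mathbf{x}_a$$

is the minimizer of the Tikhonov function:

$$\mathcal{F}_{k^\star}^{\mathrm{lin}}(\mathbf{p}) = \left\|\mathbf{y}_{k^\star}^\delta - \mathbf{K}_{k^\star}\mathbf{p}\right\|^2 + \alpha_{k^\star}\left\|\mathbf{L}\mathbf{p}\right\|^2,$$

and that the residual vector of the linear equation $\mathbf{K}_{k^\star}\mathbf{p} = \mathbf{y}_{k^\star}^\delta$ is equal to the nonlinear residual vector at the solution, i.e.,

$$\mathbf{y}_{k^\star}^\delta - \mathbf{K}_{k^\star}\left(\mathbf{x}_{k^\star}^\delta - \mathbf{x}_a\right) = \mathbf{y}^\delta - \mathbf{F}_m(\mathbf{x}_{k^\star}^\delta) = \mathbf{r}_{k^\star}^\delta.$$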

In summary, in the iteratively regularized Gauss–Newton method:

- the computation of the regularized solution depends only on the initial value $\alpha_1$ and the ratio $q$ of the geometric sequence, which determine the rate of convergence, and

- the regularization parameter $\alpha_{k^\star}$ at the solution is an estimate for the optimal regularization parameter and, hence, for the ratio of the data error variance and the a priori state variance.

Taking these results into account, we may conclude that the iteratively regularized Gauss–Newton method gives a solution to Problem 1 of the Bayesian parameter estimation.

5. Parameter Estimation and Model Selection

In this section, we address Problems 2 and 3 related to Bayesian model selection.

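To solve Problem 3, we suppose that the forward model can be linearized around the solution [6]. In other words, in the first-order Taylor expansion:

$$\mathbf{F}_m(\mathbf{x}) = \mathbf{F}_m(\mathbf{x}_{k^\star}^\delta) + \mathbf{K}_{k^\star}\left(\mathbf{x} - \mathbf{x}_{k^\star}^\delta\right) + \mathcal{O}\left(\left\|\mathbf{x} - \mathbf{x}_{k^\star}^\delta\right\|^2\right),$$

the remainder term can be neglected. As a result, the nonlinear data model becomes the linear model:

$$\mathbf{y}_{k^\star}^\delta = \mathbf{K}_{k^\star}\mathbf{p} + \boldsymbol{\delta}_m, \tag{26}$$

where $\mathbf{y}_{k^\star}^\delta$ is the linearized data vector at the solution (cf. Equation (21)), and $\mathbf{p} = \mathbf{x} - \mathbf{x}_a$. The direct consequences of dealing with the linear model (26) are the following results: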

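- the a posteriori density can be expressed as a Gaussian distribution:

$$\mathbf{x}\mid\mathbf{y}^\delta, m \sim N\left(\mathbf{x}_{k^\star}^\delta, \hat{\mathbf{C}}_x\right),$$

where:

$$\hat{\mathbf{C}}_x = \sigma^2\left(\mathbf{K}_{k^\star}^T\mathbf{K}_{k^\star} + \alpha_{k^\star}\mathbf{L}^T\mathbf{L}\right)^{-1}$$

is the a posteriori covariance matrix, and

- as shown in Appendix A, the marginal likelihood density can be computed analytically; the result is:

$$p(\mathbf{y}^\delta\mid m) = \frac{\sqrt{\det\left(\mathbf{I} - \mathbf{A}_{k^\star}\right)}}{\left(2\pi\sigma^2\right)^{N_\lambda/2}}\exp\left[-\frac{1}{2\sigma^2}\,\mathbf{y}_{k^\star}^{\delta T}\left(\mathbf{I} - \mathbf{A}_{k^\star}\right)\mathbf{y}_{k^\star}^\delta\right], \tag{29}$$

where $\mathbf{A}_{k^\star} = \mathbf{K}_{k^\star}\mathbf{K}_{k^\star}^\dagger$ is the influence matrix at the solution $\mathbf{x}_{k^\star}^\delta$ and $N_\lambda$ is the number of measurements.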
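Under the linearization, the linearized data vector is Gaussian with zero mean and covariance $\sigma^2(\mathbf{I} + \mathbf{K}(\mathbf{L}^T\mathbf{L})^{-1}\mathbf{K}^T/\alpha)$. By the Woodbury identity, the inverse of this covariance is $(\mathbf{I} - \mathbf{A})/\sigma^2$, which is why the marginal likelihood can be written with the influence matrix. The sketch below evaluates the log marginal likelihood in the influence-matrix form and checks it against a direct Gaussian evaluation. The matrices and variances are random or hypothetical, and the function names are illustrative.

```python
import numpy as np


def log_marginal_likelihood(K, L, y, alpha, sigma2):
    """Log marginal likelihood of y = K p + delta, written with the
    influence matrix A = K (K^T K + alpha L^T L)^{-1} K^T."""
    n = K.shape[0]
    A = K @ np.linalg.solve(K.T @ K + alpha * L.T @ L, K.T)
    I_A = np.eye(n) - A
    _, logdet = np.linalg.slogdet(I_A)
    return (-0.5 * n * np.log(2.0 * np.pi * sigma2) + 0.5 * logdet
            - (y @ I_A @ y) / (2.0 * sigma2))


def log_gauss_direct(K, L, y, alpha, sigma2):
    """Same quantity from y ~ N(0, sigma2 * (I + K (L^T L)^{-1} K^T / alpha))."""
    n = K.shape[0]
    S = sigma2 * (np.eye(n) + K @ np.linalg.solve(L.T @ L, K.T) / alpha)
    _, logdet = np.linalg.slogdet(S)
    return -0.5 * (n * np.log(2.0 * np.pi) + logdet + y @ np.linalg.solve(S, y))


rng = np.random.default_rng(7)
K = rng.standard_normal((8, 3))           # hypothetical Jacobian at the solution
L = np.diag([1.0, 2.0, 0.5])              # hypothetical regularization matrix
y = rng.standard_normal(8)                # hypothetical linearized data vector
print(np.isclose(log_marginal_likelihood(K, L, y, 0.1, 0.05),
                 log_gauss_direct(K, L, y, 0.1, 0.05)))   # True
```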

The replacement of the nonlinear data model by a linear data model will also enable us to deal with Problem 2. More specifically, for this purpose, we will adapt some regularization parameter choice methods for linear problems, i.e., maximum marginal likelihood estimation [7,8,9] and generalized cross-validation [10,11], to model selection. Furthermore, estimates for the data error variance delivered by these methods will be used to compute the marginal likelihood density and, therefore, the relative evidence.

In Appendix B, it is shown that:

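- in the framework of maximum marginal likelihood estimation, the data error variance can be estimated by:

$$\sigma_L^2 = \frac{1}{N_\lambda}\,\mathbf{y}_{k^\star}^{\delta T}\left(\mathbf{I} - \mathbf{A}_{k^\star}\right)\mathbf{y}_{k^\star}^\delta,$$

and the model with the smallest value of the marginal likelihood function, defined by:

$$\Lambda_L(m) = \frac{\mathbf{y}_{k^\star}^{\delta T}\left(\mathbf{I} - \mathbf{A}_{k^\star}\right)\mathbf{y}_{k^\star}^\delta}{\left[\det\left(\mathbf{I} - \mathbf{A}_{k^\star}\right)\right]^{1/N_\lambda}}, \tag{30}$$

is optimal.

- in the framework of generalized cross-validation, the data error variance can be estimated by:

$$\sigma_G^2 = \frac{\left\|\mathbf{r}_{k^\star}^\delta\right\|^2}{\mathrm{trace}\left(\mathbf{I} - \mathbf{A}_{k^\star}\right)},$$

and the model with the smallest value of the generalized cross-validation function, defined by:

$$\Lambda_G(m) = \frac{\left\|\mathbf{r}_{k^\star}^\delta\right\|^2}{\left[\mathrm{trace}\left(\mathbf{I} - \mathbf{A}_{k^\star}\right)\right]^2}, \tag{32}$$

is optimal.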
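Both selection criteria can be evaluated cheaply from one singular value decomposition of the quotient matrix $\mathbf{K}\mathbf{L}^{-1}$. In that basis, $\mathbf{I} - \mathbf{A}$ has eigenvalues $\alpha/(s_i^2 + \alpha)$ on the range of the quotient matrix and 1 elsewhere. The sketch below uses standard forms from linear regularization theory. The marginal-likelihood variance is the maximizer of the Gaussian marginal likelihood over $\sigma^2$, and the generalized cross-validation variance is the squared residual divided by $\mathrm{trace}(\mathbf{I} - \mathbf{A})$. The exact expressions derived in Appendix B are not reproduced here and may differ in normalization. The function `mmle_gcv` and the test matrices are hypothetical.

```python
import numpy as np


def mmle_gcv(K, L, y, alpha):
    """Variance estimates and model-selection functions (standard forms).

    Returns (sigma2_L, crit_L, sigma2_G, crit_G) computed from the SVD of the
    quotient matrix K L^{-1}; smaller crit values indicate a better model.
    """
    n = K.shape[0]
    U, s, _ = np.linalg.svd(K @ np.linalg.inv(L), full_matrices=True)
    yh = U.T @ y
    f = alpha / (s**2 + alpha)              # eigenvalues of I - A on the range
    tail = yh[s.size:] @ yh[s.size:]        # part of y outside the range of K
    quad = f @ yh[:s.size] ** 2 + tail      # y^T (I - A) y
    res2 = f**2 @ yh[:s.size] ** 2 + tail   # ||(I - A) y||^2, the squared residual
    trace = f.sum() + (n - s.size)          # trace(I - A)
    logdet = np.log(f).sum()                # log det(I - A)
    sigma2_L = quad / n                     # maximizer of the marginal likelihood
    crit_L = quad / np.exp(logdet / n)      # marginal likelihood function
    sigma2_G = res2 / trace
    crit_G = res2 / trace**2                # generalized cross-validation function
    return sigma2_L, crit_L, sigma2_G, crit_G


rng = np.random.default_rng(11)
K = rng.standard_normal((10, 3))                          # hypothetical Jacobian
L = np.array([[2.0, 0.5, 0.0], [0.0, 1.0, 0.3], [0.0, 0.0, 1.5]])
y = rng.standard_normal(10)                               # hypothetical data
sigma2_L, crit_L, sigma2_G, crit_G = mmle_gcv(K, L, y, alpha=0.2)
```

In a model-selection loop, `mmle_gcv` would be called once per candidate model with that model's Jacobian, linearized data vector, and regularization parameter at the solution. The model with the smallest `crit_L` or `crit_G` would then be retained.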

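Denoting by $p_L(\mathbf{y}^\delta\mid m)$ and $p_G(\mathbf{y}^\delta\mid m)$ the marginal likelihoods evaluated with the data error variance estimates delivered by maximum marginal likelihood estimation and generalized cross-validation, respectively, we define:

- the relative evidence of an approach based on the marginal likelihood and the computation of the data error variance in the framework of the Maximum Marginal Likelihood Estimation (MLMMLE) by:

$$p_{\mathrm{MLMMLE}}(m\mid\mathbf{y}^\delta) = \frac{p_L(\mathbf{y}^\delta\mid m)}{\sum_{m'=1}^{N_m} p_L(\mathbf{y}^\delta\mid m')},$$

- the relative evidence of an approach based on the marginal likelihood and the computation of the data error variance in the framework of the Generalized Cross-Validation (MLGCV) by:

$$p_{\mathrm{MLGCV}}(m\mid\mathbf{y}^\delta) = \frac{p_G(\mathbf{y}^\delta\mid m)}{\sum_{m'=1}^{N_m} p_G(\mathbf{y}^\delta\mid m')}.$$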

On the other hand, the statements (30) and (32) are equivalent to (cf. Equation (15)):

and:

respectively. Deviating from a stochastic interpretation of the relative evidence and regarding this quantity in a deterministic setting merely as a merit function characterizing the solution , we define:

- the relative evidence of an approach based on Maximum Marginal Likelihood Estimation (MMLE) by:

- the relative evidence of an approach based on Generalized Cross-Validation (GCV) by:

Note that and do not depend on the data error variance . Note also that is defined in terms of the square residual:

while, according to Equation (29), is defined in terms of the quantity . The same difference exists between the numerators of the cross-validation function and the marginal likelihood function (see Equations (32) and (30)).

6. Algorithm Description

An algorithmic implementation of the iteratively regularized Gauss–Newton method in connection with model selection is as follows:

- compute the scaling matrix by means of Equation (5) and the scaled data vector ;

- given the current iterate at step k, compute the forward model , the Jacobian matrix , and the scaled quantities and ;

- compute the linearized data vector:

- compute the singular value decomposition of the quotient matrix , where with for is a diagonal matrix containing the singular values in decreasing order and and are orthogonal matrices containing the left and right singular column vectors and , respectively;

- if , choose , where and are the largest and the smallest singular values, respectively; otherwise, set ;

- compute the minimizer of the Tikhonov function for the linearized equation,and the new iterate, ;

- compute the nonlinear residual vector at ,and the residual ;

- compute the condition number , the scalar quantities:and the normalized covariance matrix:where ;

- if , recompute by means of a step-length algorithm such that ; if the residual cannot be reduced, set . and go to Step 12;

- compute the relative decrease in the residual ;

- if , go to Step 1; otherwise set , and go to Step 12;

- determine such that , for all ;

- since , , , and , compute the estimates:the marginal likelihood:where X stands for the character strings MLMMLE and MLGCV when the character variable Y takes the values L and G, respectively, and the covariance matrix,
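The singular value decomposition and Tikhonov-step parts of the algorithm can be sketched as follows, assuming an invertible regularization matrix $\mathbf{L}$. With $\mathbf{K}\mathbf{L}^{-1} = \mathbf{U}\boldsymbol{\Sigma}\mathbf{V}^T$, the minimizer of the Tikhonov function for the linearized equation is $\mathbf{L}^{-1}\mathbf{V}\,\mathrm{diag}\left(s_i/(s_i^2 + \alpha)\right)\mathbf{U}^T\mathbf{y}$. The sketch checks this against the normal equations. It does not reproduce the rule for the first regularization parameter or the step-length control. The function name and test matrices are hypothetical.

```python
import numpy as np


def tikhonov_step_svd(K, L, yk, alpha):
    """Minimizer of ||yk - K p||^2 + alpha ||L p||^2 via the SVD of K L^{-1}.

    Also returns the singular values (in decreasing order), from which, e.g.,
    the condition number s[0] / s[-1] of the quotient matrix follows.
    """
    L_inv = np.linalg.inv(L)
    U, s, Vt = np.linalg.svd(K @ L_inv, full_matrices=False)
    filt = s / (s**2 + alpha)                     # filter factors divided by s_i
    p = L_inv @ (Vt.T @ (filt * (U.T @ yk)))      # map back with L^{-1}
    return p, s


rng = np.random.default_rng(5)
K = rng.standard_normal((4, 3))                   # hypothetical Jacobian
L = np.array([[2.0, 0.5, 0.0], [0.0, 1.0, 0.3], [0.0, 0.0, 1.5]])
yk = rng.standard_normal(4)                       # hypothetical linearized data vector
p, s = tikhonov_step_svd(K, L, yk, alpha=0.3)
p_ref = np.linalg.solve(K.T @ K + 0.3 * L.T @ L, K.T @ yk)   # normal equations
print(np.allclose(p, p_ref), s[0] / s[-1])
```

One SVD per iteration serves both the step computation and the traces and determinants needed later for model selection, so it is a natural choice for small state vectors such as the two- or three-component vectors used below.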

The control parameters of the algorithm are: (i) the ratio q of the geometric sequence of regularization parameters, (ii) the minimum acceptable value of the regularization parameter , (iii) the tolerance of the residual convergence test, and (iv) the tolerance of the discrepancy principle stopping rule.

We conclude our theoretical analysis with some comments:

- Another estimate for the data error variance is derived in Appendix C; this is given by:

- If a model is far from reality, it is natural to assume that the model parameter errors are large or, equivalently, that the data error variance is large. This observation suggests that, in a deterministic setting, we may define the relative evidence as:where the character variable Y takes the values R, L, or G. In this case, for , the model with the smallest value of the squared residual is considered to be optimal.

7. Application to the EPIC Instrument

The Deep Space Climate Observatory (DSCOVR) flies in a Lissajous orbit about the first Earth-Sun Lagrange point (L1), which is 1.5 million kilometers from the Earth towards the Sun. The Earth Polychromatic Imaging Camera (EPIC) is one of the two Earth-observing instruments on board DSCOVR. This instrument views the entire sunlit side of the Earth from sunrise to sunset in the backscattering direction (scattering angles between 168.5° and 175.5°) with 10 narrowband filters, ranging from 317.5 to 779.5 nm [12]. This observing geometry is unique, since instruments in Sun-synchronous orbits rarely view the Earth at such large scattering angles [13]. It makes EPIC a suitable candidate for several climate science applications, including the measurement of cloud reflectivity and of aerosol optical thickness and layer height [14].

We apply the algorithm proposed in Section 6 to the retrieval of aerosol optical thickness and aerosol layer height by generating synthetic measurements corresponding to the EPIC instrument. Specifically, Channels 7 and 8 in the Oxygen B-band at 680 and 687.75 nm, respectively, and Channels 9 and 10 in the Oxygen A-band at 764 and 779.5 nm, respectively, are used in the retrieval. The linearized radiative transfer model is based on the discrete ordinates method with matrix exponential and uses the correlated k-distribution method in conjunction with the principal component analysis technique to speed up the computations [15,16].

We consider the aerosol models implemented in the Moderate Resolution Imaging Spectroradiometer (MODIS) aerosol retrieval algorithm over land [1]. There are three spherical, fine-dominated model types (non-absorbing, moderately absorbing, and absorbing) and one spheroidal, coarse-dominated model type (dust). These aerosol models, which depend on location and season, are the result of a cluster analysis of the climatology of almucantar retrievals from global AERONET (AErosol RObotic NETwork) measurements [17]. Each model consists of two log-normal modes (accumulated and coarse). A single log-normal mode is described by the number size distribution:

$$n(r) = \frac{N}{\sqrt{2\pi}\,\sigma\,r}\exp\left[-\frac{(\ln r - \ln r_n)^2}{2\sigma^2}\right],$$

where $r_n$ is the modal or median radius of the number size distribution, $\sigma$ is the standard deviation of $\ln r$, and:

$$N = \int_0^\infty n(r)\,\mathrm{d}r$$

is the total number of particles per cross-section of the atmospheric column. Table 1 displays the log-normal size parameters and the complex refractive indices for the four aerosol models, where, for each log-normal mode,

$$r_v = r_n\exp\left(3\sigma^2\right)$$

is the median radius of the volume size distribution:

$$v(r) = \frac{4\pi}{3}r^3 n(r) = \frac{V}{\sqrt{2\pi}\,\sigma\,r}\exp\left[-\frac{(\ln r - \ln r_v)^2}{2\sigma^2}\right],$$

and:

$$V = \int_0^\infty v(r)\,\mathrm{d}r = \frac{4\pi}{3}N r_n^3\exp\left(\frac{9}{2}\sigma^2\right)$$

is the volume of particles per cross-section of the atmospheric column.
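The relations between the number and volume size distributions of a single log-normal mode can be verified numerically. The sketch below assumes that $\sigma$ denotes the standard deviation of $\ln r$, so that $r_v = r_n\exp(3\sigma^2)$ and $V = \frac{4\pi}{3}N r_n^3\exp(\frac{9}{2}\sigma^2)$. It uses hypothetical mode parameters, not the values of Table 1, and integrates on a uniform grid in $\ln r$, where both distributions are Gaussian.

```python
import numpy as np


def trapezoid(f, x):
    """Composite trapezoidal rule (written out to avoid NumPy-version differences)."""
    return 0.5 * np.sum((f[1:] + f[:-1]) * np.diff(x))


def volume_params(N, r_n, sigma):
    """Volume median radius and total volume of one log-normal mode.

    Assumes sigma is the standard deviation of ln r.
    """
    r_v = r_n * np.exp(3.0 * sigma**2)
    V = 4.0 / 3.0 * np.pi * N * r_n**3 * np.exp(4.5 * sigma**2)
    return r_v, V


N, r_n, sigma = 1.0, 0.1, 0.5                        # hypothetical mode (r in micrometers)
u = np.linspace(np.log(r_n) - 10 * sigma,
                np.log(r_n) + 3 * sigma**2 + 10 * sigma, 20001)   # u = ln r
r = np.exp(u)
dN_du = N / (np.sqrt(2 * np.pi) * sigma) * np.exp(-(u - np.log(r_n))**2 / (2 * sigma**2))  # r n(r)
dV_du = 4.0 / 3.0 * np.pi * r**3 * dN_du             # r v(r)
r_v, V = volume_params(N, r_n, sigma)

total_N = trapezoid(dN_du, u)                        # close to N
total_V = trapezoid(dV_du, u)                        # close to V
below = u <= np.log(r_v)
half_V = trapezoid(dV_du[below], u[below])           # close to V / 2 (r_v is the volume median)
print(total_N, total_V / V, half_V / V)
```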

Table 1.

Optical properties of the aerosol models. For each model, the first and second lines are related to the accumulated and coarse modes, respectively. The complex refractive index of dust corresponds to the wavelengths 470 (1), 550 (2), 660 (3), and 2100 (4) nm. In the simulations, the values of the refractive index at nm are assumed.

In our numerical analysis, the state vector is $\mathbf{x} = [\tau, H]^T$, where $\tau$ is the aerosol optical thickness and $H$ the aerosol layer height. The true aerosol optical thicknesses to be retrieved are , 0.50, 0.75, 1.0, 1.25, and 1.5, while for the true aerosol layer height, we take , 1.5, 2.0, 2.5, and 3.0 km. The a priori values, which coincide with the initial guesses, are and km. A Lambertian surface is assumed, and if not stated otherwise, the surface albedo is . We generate synthetic measurements by choosing the moderately absorbing aerosol model (Model 2) as the true or exact model. Specifically, we:

- compute the radiances for the moderately absorbing aerosol model and the true values and , ;

- add the measurement noise ,where , , and:which is the maximum signal-to-noise ratio according to the EPIC camera specifications and

- in view of the approximation:identify , , and:implying:
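The logarithmic noise model can be illustrated with a short Monte Carlo experiment. Assume the noise standard deviation in each channel is the radiance divided by a signal-to-noise ratio (SNR). Then the first-order approximation $\ln(I + \delta) \approx \ln I + \delta/I$ implies a log-radiance noise standard deviation of about $1/\mathrm{SNR}$ in every channel, independently of the radiance level. The SNR value and radiances below are hypothetical placeholders, not EPIC specifications.

```python
import numpy as np

rng = np.random.default_rng(42)
snr = 100.0                                # hypothetical SNR (placeholder, not the EPIC value)
I = np.array([0.12, 0.05, 0.20, 0.08])     # hypothetical radiances in four channels
# Additive noise with per-channel standard deviation I_i / SNR (assumption)
noise = (I / snr) * rng.standard_normal((200_000, I.size))
dlog = np.log(I + noise) - np.log(I)       # induced perturbation of ln I
print(dlog.std(axis=0) * snr)              # each entry close to 1, i.e. std ~ 1/SNR
```

Because the log-radiance noise has the same standard deviation in all channels, its covariance is a multiple of the identity. This is consistent with the white-noise form assumed in the data model.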

The above scheme yields and , showing that the regularized solution does not depend on the scaling matrix. Coming to the regularization matrix, we first note that the choice for some gives:

However, to have a better control on the amount of regularization that is applied to each component of the state vector, we introduce the weight of component , and take:

If , the component is close to the a priori, while for , the component is practically unconstrained. In our simulations, we used . What is left is the specification of the control parameters of the algorithm; these are , , where is the smallest singular value of the quotient matrix at (the first iteration step), , and .

To analyze the accuracy of the aerosol retrieval, we consider several test examples.

- Test Example 1

In the first example, we analyze the efficiency of the aerosol model selection by considering all models, i.e., we take m = 1, 2, 3, 4. Thus, the algorithm has the chance to identify the correct aerosol model. The solution accuracy is characterized by the relative errors:

corresponding to (cf. Equation (17)) , and:

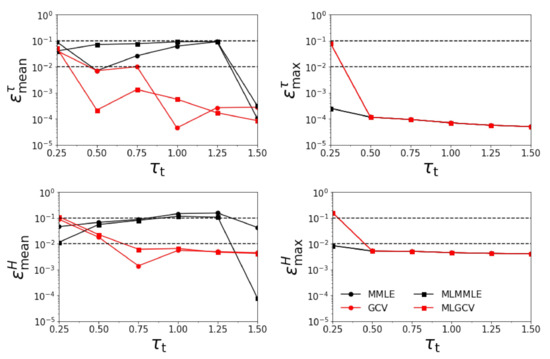

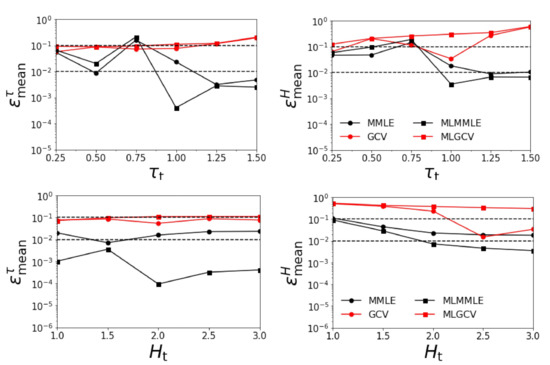

corresponding to (cf. Equation (15)) . In Figure 1 and Figure 2, we illustrate the variations of the relative errors with respect to and , respectively, while in Table 2, we show the average relative errors:

over for , and:

over for . The following conclusions can be drawn:

Figure 1.

Relative errors , , , and versus $\tau$ for $H = 3.0$ km. All four aerosol models are involved in the retrieval.

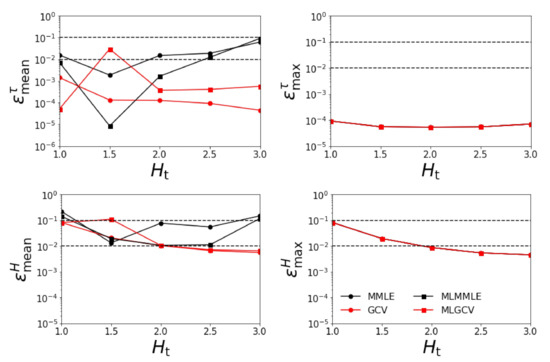

Figure 2.

Relative errors , , , and versus $H$ for $\tau = 1.0$. All four aerosol models are involved in the retrieval.

- the relative errors and corresponding to the generalized cross-validation (GCV and MLGCV) are in general smaller than those corresponding to the maximum marginal likelihood estimation (MMLE and MLMMLE).

- the relative errors and are very small, and so, we deduce that the algorithm recognizes the exact aerosol model;

- the best method is GCV, in which case the average relative errors are , , , and ;

- the aerosol optical thickness is better retrieved than the aerosol layer height.

- Test Example 2

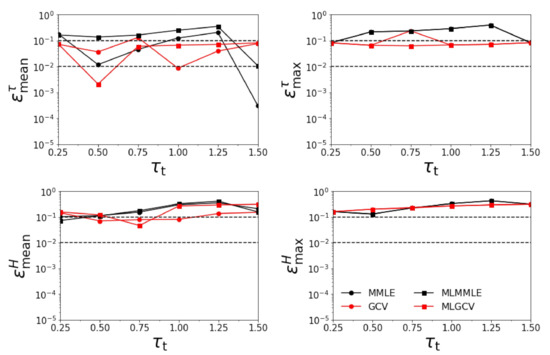

In the second example, we consider all aerosol models $m$ except the exact one, i.e., we take $m = 1, 3, 4$. In this more realistic scenario, the algorithm tries to find an aerosol model that is as close as possible to the exact one. The variations of the relative errors with respect to $\tau$ and $H$ are illustrated in Figure 3 and Figure 4, respectively, while the average relative errors are given in Table 3. The following conclusions can be drawn:

Figure 3.

Relative errors , , , and versus for km. All aerosol models except the exact one are involved in the retrieval.

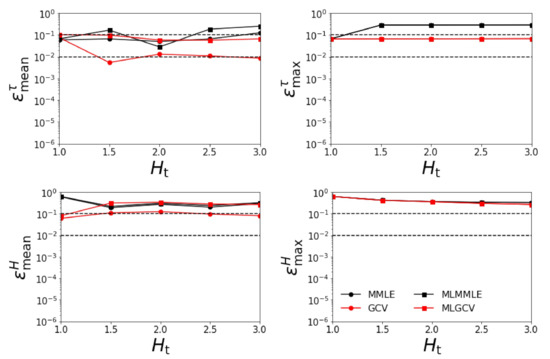

Figure 4.

Relative errors , , , and versus for . All aerosol models except the exact one are involved in the retrieval.

- the relative errors and corresponding to the generalized cross-validation (GCV and MLGCV) are still smaller than those corresponding to the maximum marginal likelihood estimation (MMLE and MLMMLE).

- the relative errors and are extremely large, and so, we infer that the maximum solution estimate is completely unrealistic;

- as before, the best method is GCV characterized by the average relative errors , , , and ;

- the relative errors are significantly larger than those corresponding to the case when all four aerosol models are taken into account.

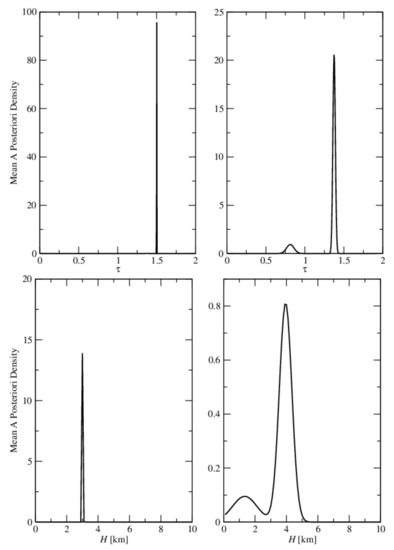

In Figure 5, we show the mean a posteriori density of $\tau$ and $H$ computed by GCV for Test Examples 1 and 2. In Test Example 1, the a posteriori density is sharply peaked, indicating small retrieval errors, while in Test Example 2, it is wide and spread over all the aerosol models.

Figure 5.

Upper panels: mean a posteriori density of $\tau$ computed by GCV for Test Examples 1 (left) and 2 (right). Lower panels: mean a posteriori density of $H$ computed by GCV for Test Examples 1 (left) and 2 (right). The true values are $\tau = 1.5$ and $H = 3.0$ km.

- Test Example 3

In the third series of our numerical experiments, we include the surface albedo in the retrieval. In fact, the surface albedo regarded as a model parameter can be (i) assumed to be known, (ii) included in the retrieval, or (iii) treated as a model uncertainty. The second situation, which leads to more accurate results, is considered here. In this case, however, the aerosol optical thickness and the surface albedo are strongly correlated (the condition number of at k = 1 is – times larger than for the case in which the surface albedo is not part of the retrieval). Since we are not interested in an accurate surface albedo retrieval, we chose the weight controlling the constraint of the surface albedo in the regularization matrix as . As , this means that the surface albedo is tightly constrained to the a priori. We use the a priori and true values and , respectively; thus, the uncertainty in the surface albedo with respect to the a priori is 5%. The synthetic measurements are generated with the true surface albedo of 0.063.

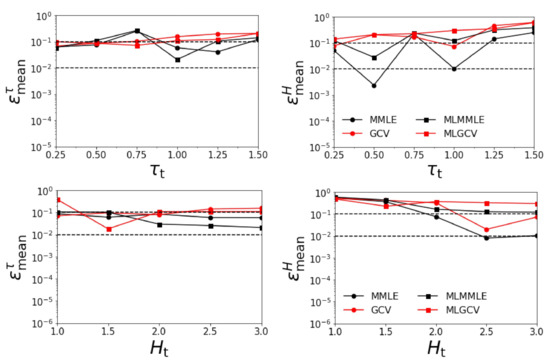

In Figure 6, we illustrate the variations of the relative errors and with respect to and , when all four aerosol models are taken into account. The average relative errors are given in Table 4. The results show that:

Figure 6.

Relative errors and versus for km (upper panels) and for (lower panels). The surface albedo is included in the retrieval and all four aerosol models are considered.

Table 4.

Average relative errors for the results plotted in Figure 6.

- the relative errors and corresponding to generalized cross-validation (GCV and MLGCV) are in general larger than those corresponding to maximum marginal likelihood estimation (MMLE and MLMMLE);

- the best methods are MMLE and MLMMLE; the average relative errors given by MLMMLE are , , , and ;

- the relative errors are significantly larger than those obtained in the first two test examples (when the surface albedo is known exactly).

Figure 7 illustrates the variations of the relative errors and with respect to and , when all aerosol models except the exact one are considered. The corresponding average relative errors are given in Table 5. The results show that:

Figure 7.

Relative errors and versus for km (upper panels) and for (lower panels). The surface albedo is included in the retrieval and all aerosol models except the exact one are considered.

Table 5.

Average relative errors for the results plotted in Figure 7.

- as in the previous scenario, the relative errors and corresponding to generalized cross-validation (GCV and MLGCV) are in general larger than those corresponding to maximum marginal likelihood estimation (MMLE and MLMMLE);

- the best methods are MMLE and MLMMLE; the average relative errors delivered by MMLE are = 0.101, = 0.113, = 0.070, and = 0.204;

- the relative errors are the largest among all the test examples.

8. Conclusions

We designed a retrieval algorithm that takes into account uncertainty in model selection. The solution corresponding to a specific model is characterized by a metric called relative evidence, which is a measure of the fit between the model and the measurement. Based on this metric, the maximum solution estimate, corresponding to the model with the highest evidence, and the mean solution estimate, representing a linear combination of solutions weighted by their evidence, are introduced.
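The two estimates can be written in a few lines. The sketch below assumes that each candidate model has already produced a retrieved state and a (log) relative evidence, and that the mean solution estimate uses the evidences, normalized to sum to one, as weights; the function name and the numbers in the example are hypothetical.

```python
import numpy as np

def combine_model_solutions(solutions, log_evidence):
    """Maximum and mean solution estimates from per-model solutions.

    solutions    : array (n_models, n_params), one retrieved state per model
    log_evidence : array (n_models,), log of each model's relative evidence
    """
    solutions = np.asarray(solutions, dtype=float)
    log_evidence = np.asarray(log_evidence, dtype=float)
    # Normalize the evidences to weights summing to one (log-sum-exp for stability).
    w = np.exp(log_evidence - log_evidence.max())
    w /= w.sum()
    x_max = solutions[np.argmax(log_evidence)]   # model with the highest evidence
    x_mean = w @ solutions                        # evidence-weighted average
    return x_max, x_mean, w

# Hypothetical retrievals (optical thickness, layer height in km) for four models.
sols = [[1.4, 2.5], [1.6, 3.2], [1.5, 3.0], [1.9, 4.0]]
logE = np.log([0.1, 0.3, 0.5, 0.1])
x_max, x_mean, w = combine_model_solutions(sols, logE)
```

Here the maximum solution estimate is the state of the third model, (1.5, 3.0), while the mean solution estimate is approximately (1.56, 3.11).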

The retrieval algorithm is based on:

- an application of the prewhitening technique in order to transform the data model into a scaled model with white noise;

- a deterministic regularization method, i.e., the iteratively regularized Gauss–Newton method, in order to compute the regularized solution (equivalent to the maximum a posteriori estimate of the solution in a Bayesian framework) and to determine the optimal value of the regularization parameter (equivalent to the ratio of the data error and a priori state variances in a Bayesian framework);

- a linearization of the forward model around the solution in order to transform the nonlinear data model into a linear model and, in turn, facilitate an analytical integration of the likelihood density over the state vector;

- an extension of maximum marginal likelihood estimation and generalized cross-validation to model selection and data error variance estimation.
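A minimal sketch of the first two steps is given below for a toy two-parameter forward model F(x) = x_1 exp(-x_2 t), which stands in for the radiative transfer model. It assumes a Gaussian data error with a known covariance matrix, prewhitens the data and the Jacobian with the Cholesky factor of that matrix, and performs iteratively regularized Gauss–Newton steps x_{k+1} = x_a + (K_k^T K_k + α_k L^T L)^{-1} K_k^T [y − F(x_k) + K_k (x_k − x_a)] with a geometrically decreasing regularization parameter α_k. The fixed number of iterations, the ratio `q` and the starting value `alpha0` are illustrative choices; the paper's stopping rule and parameter choice are not reproduced here.

```python
import numpy as np

def prewhiten(y, C_delta):
    """Cholesky factor S of the data error covariance (C_delta = S S^T) and S^{-1} y."""
    S = np.linalg.cholesky(C_delta)
    return S, np.linalg.solve(S, y)

def irgn(F, jac, y, C_delta, x_a, L, alpha0=1.0, q=0.5, n_iter=20):
    """Iteratively regularized Gauss-Newton iterations on the prewhitened problem."""
    S, y_w = prewhiten(y, C_delta)
    x = np.array(x_a, dtype=float)
    alpha = alpha0
    for _ in range(n_iter):
        F_w = np.linalg.solve(S, F(x))      # prewhitened forward model
        K_w = np.linalg.solve(S, jac(x))    # prewhitened Jacobian
        lhs = K_w.T @ K_w + alpha * (L.T @ L)
        rhs = K_w.T @ (y_w - F_w + K_w @ (x - x_a))
        x = x_a + np.linalg.solve(lhs, rhs)
        alpha *= q                          # decrease the regularization parameter
    return x

# Toy forward model standing in for the radiative transfer model.
t = np.linspace(0.0, 2.0, 20)

def F(x):
    return x[0] * np.exp(-x[1] * t)

def jac(x):
    e = np.exp(-x[1] * t)
    return np.column_stack([e, -x[0] * t * e])

x_true = np.array([2.0, 0.7])
x_a = np.array([1.5, 0.5])                            # hypothetical a priori
C_delta = np.diag(np.linspace(1e-4, 4e-4, t.size))    # hypothetical noise covariance
y = F(x_true)                                         # noise-free for illustration
x_hat = irgn(F, jac, y, C_delta, x_a, L=np.eye(2))
print(x_hat)
```

For these noise-free synthetic data the iterates approach the true state (2.0, 0.7); with noisy data, the iteration would normally be stopped by a rule such as the discrepancy principle.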

Essentially, the algorithm includes four model selection methods, obtained by combining:

- the two parameter choice methods used (maximum marginal likelihood estimation and generalized cross-validation) and

- the two settings in which the relative evidence is treated (stochastic and deterministic).

The algorithm is applied to the retrieval of aerosol optical thickness and aerosol layer height from synthetic measurements corresponding to the EPIC instrument. In the simulations, the aerosol models implemented in the MODIS aerosol algorithm over land are considered, and the surface albedo is either assumed to be known or included in the retrieval. The following conclusions are drawn:

- The differences between the results corresponding to the stochastic and deterministic interpretations of the relative evidence are not significant.

- If the surface albedo is assumed to be known, generalized cross-validation is superior to maximum marginal likelihood estimation; if the surface albedo is included in the retrieval, the contrary is true.

- The errors in the aerosol optical thickness retrieval are smaller than those in the aerosol layer height retrieval. In the most realistic situation, when the exact aerosol model and surface albedo are unknown, the average relative errors in the retrieved aerosol optical thickness are about 10%, while the corresponding errors in the aerosol layer height are about 20%.

- The maximum solution estimate is completely unrealistic when both the aerosol model and surface albedo are unknown.

Author Contributions

Conceptualization: V.N. and A.D.; Data curation: S.S.; Formal analysis: S.S. and V.M.G.; Funding acquisition: V.N., D.L. and A.D.; Investigation: S.S.; Methodology: V.N. and A.D.; Project administration: D.L. and A.D.; Supervision: V.N., D.S.E. and A.D.; Validation: V.N. and A.D.; Writing—original draft: S.S.; Writing—review & editing: S.S., V.N., D.S.E., D.L. and A.D. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the German Aerospace Center (DLR) and the German Academic Exchange Service (DAAD) through the program DLR/DAAD Research Fellowships 2015 (57186656), with Reference Numbers 91613528 and 91627488.

Acknowledgments

A portion of this research was carried out at the Jet Propulsion Laboratory, California Institute of Technology, under a contract with the National Aeronautics and Space Administration (80NM0018D0004). V.N. acknowledges support from the NASA Earth Science US Participating Investigator program (Solicitation NNH16ZDA001N-ESUSPI).

Conflicts of Interest

The authors declare no conflict of interest.

Appendix A

In this Appendix, we compute the marginal likelihood for the linear data model (26) with and . In this case, the a priori and likelihood densities and are given by:

and:

respectively. Using the identity:

where (cf. Equation (23)) and , we express the integrand in Equation (8) as:

The normalization condition:

the identities and:

where is the averaging kernel matrix, and the relation:

yield the following expression for the marginal likelihood density:
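For a linear data model with Gaussian a priori and noise densities, the marginal likelihood has a standard closed form: if y = K x + δ with x ~ N(x_a, C_x) and δ ~ N(0, C_δ), integrating the likelihood over x gives p(y) = N(y; K x_a, C_δ + K C_x K^T). The sketch below evaluates its logarithm through a Cholesky factorization. The generic symbols K, x_a, C_x and C_δ are assumptions for this illustration and are not necessarily the notation of Equations (8), (23) and (26); for white noise after prewhitening one would set C_δ = σ² I.

```python
import numpy as np

def log_marginal_likelihood(y, K, x_a, C_x, C_delta):
    """log p(y) for y = K x + delta, x ~ N(x_a, C_x), delta ~ N(0, C_delta)."""
    m = y.size
    C_y = C_delta + K @ C_x @ K.T        # data covariance after integrating out x
    r = y - K @ x_a                      # data minus the a priori prediction
    Lc = np.linalg.cholesky(C_y)
    z = np.linalg.solve(Lc, r)           # z^T z = r^T C_y^{-1} r
    log_det = 2.0 * np.sum(np.log(np.diag(Lc)))
    return -0.5 * (m * np.log(2.0 * np.pi) + log_det + z @ z)

K = np.array([[1.0], [0.5], [2.0]])      # hypothetical one-parameter example
x_a = np.array([0.3])
C_x = np.array([[0.8]])
C_delta = 0.1 * np.eye(3)
y = np.array([0.9, 0.2, 1.5])
print(log_marginal_likelihood(y, K, x_a, C_x, C_delta))
```

A one-dimensional numerical integration of the product of the a priori and likelihood densities over x reproduces the closed form, which is a convenient check of such an implementation.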

Appendix B

In this Appendix, we present a general tool for estimating the data error variance and the optimal model. Consider the linear model (26), i.e., , and a singular value decomposition of the quotient matrix , where with the convention for , , and . The covariance matrix of the data can be computed as:

where:

Define the scaled data:

and note that the covariance matrix of is the diagonal matrix , i.e.,

If is the correct data error variance, we must have (cf. Equation (A2)):

where . If is unknown, we can find the estimate from the equations:

However, since only one realization of the random vector is known, the calculation of from Equation (A3) may lead to erroneous results. Therefore, we look for another selection criterion.

Set:

and define the function:

with being a strictly concave function, i.e., . The expected value of is given by:

hence, defining the estimate through the relation (cf. Equations (A3) and (A4)):

we express as:

Then, we obtain:

Considering the second-order Taylor expansion around ,

for some between and and taking into account the fact that is strictly concave, we deduce that each term in the sum (A6) is non-negative and vanishes only for Thus, we have:

for all . In conclusion, , defined through Equation (A5), is the unique global minimizer of , i.e.,

Coming to the optimal model , it seems that a natural choice of is:

However, instead of Equation (A8), we use a more general selection criterion, namely the optimal model defined as:

where F is a monotonically increasing function of .

The generalized cross-validation [10,11] and maximum marginal likelihood estimation [7,8,9] can be obtained by a particular choice of the function .

- Generalized cross-validation

For the choice:

we obtain:

Since is the unique global minimizer of , the condition:

yields:

The above equation, together with the relation:

provides the following estimate for the data error variance:

On the other hand, from Equations (A10) and (A12), we obtain:

and we define the function by:

In practice, the expectation cannot be computed since only one realization is known. Therefore, we approximate and consider the so-called generalized cross-validation function:

Finally, using the representations:

we express the estimate of the data error variance as:

and the generalized cross-validation function as:

- Maximum marginal likelihood estimation

For the choice:

we obtain:

Furthermore, using the result:

we express as:

Considering the approximation , we define the maximum likelihood function by:

Using the results:

we express the estimate for the data error variance as:

and the maximum likelihood function as:

Appendix C

In this Appendix, we derive an estimate for the data error variance by analyzing the residual of the linear equation . In terms of a singular value decomposition of the quotient matrix with , for , , and , the squared norm of the residual of the linear equation is:

In the data model (cf. Equation (26)) , we set and rewrite this equation as:

Using the relation:

where is the Kronecker delta function, we get:

so that from Equations (A29) and (A30), we obtain:

Since belongs to the range of the matrix operator , which, in turn, is spanned by the vectors , we have for , and further (cf. Equation (A31)):

Now, if for all , we approximate:

and deduce that an estimate for the data error variance is:
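The key step of this appendix, namely that the noise-free signal lies in the range of the matrix and therefore has no component along the left singular vectors with index larger than n, translates directly into code: the data components along those vectors contain only noise. The sketch below uses this to estimate the variance as the mean square of those components, which equals the least-squares residual divided by m − n. It assumes an unregularized problem with a tall matrix of full column rank, a simplification that may differ from the setting above.

```python
import numpy as np

def residual_variance_estimate(K, y, rtol=1e-12):
    """Estimate sigma^2 from the data components outside the range of K."""
    m = y.size
    U, gamma, _ = np.linalg.svd(K, full_matrices=True)
    b = U.T @ y
    rank = int(np.sum(gamma > rtol * gamma[0]))   # numerical rank of K
    # K x has no component along u_i for i > rank, so these b_i are pure noise.
    return np.sum(b[rank:] ** 2) / (m - rank)
```

For a full-rank K, the returned value multiplied by m − n coincides with the residual sum of squares returned by `np.linalg.lstsq`.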

References

- Levy, R.C.; Remer, L.A.; Dubovik, O. Global aerosol optical properties and application to Moderate Resolution Imaging Spectroradiometer aerosol retrieval over land. J. Geophys. Res. Atmos. 2007, 112.

- Hoeting, J.A.; Madigan, D.; Raftery, A.E.; Volinsky, C.T. Bayesian Model averaging: A tutorial. Stat. Sci. 1999, 14, 382–401.

- Määttä, A.; Laine, M.; Tamminen, J.; Veefkind, J. Quantification of uncertainty in aerosol optical thickness retrieval arising from aerosol microphysical model and other sources, applied to Ozone Monitoring Instrument (OMI) measurements. Atmos. Meas. Tech. 2014, 7, 1185–1199.

- Kauppi, A.; Kolmonen, P.; Laine, M.; Tamminen, J. Aerosol-type retrieval and uncertainty quantification from OMI data. Atmos. Meas. Tech. 2017, 10, 4079–4098.

- Doicu, A.; Trautmann, T.; Schreier, F. Numerical Regularization for Atmospheric Inverse Problems; Springer: Berlin/Heidelberg, Germany, 2010.

- Tarantola, A. Inverse Problem Theory and Methods for Model Parameter Estimation; Society for Industrial and Applied Mathematics (SIAM): Philadelphia, PA, USA, 2005.

- Patterson, H.; Thompson, R. Recovery of inter-block information when block sizes are unequal. Biometrika 1971, 58, 545–554.

- Smyth, G.K.; Verbyla, A.P. A conditional likelihood approach to residual maximum likelihood estimation in generalized linear models. J. R. Stat. Soc. Ser. B Methodol. 1996, 58, 565–572.

- Stuart, A.; Ord, J.; Arnold, S. Kendall’s Advanced Theory of Statistics. Volume 2A: Classical Inference and the Linear Model; Oxford University Press Inc.: Oxford, UK, 1999.

- Wahba, G. Practical approximate solutions to linear operator equations when the data are noisy. SIAM J. Numer. Anal. 1977, 14, 651–667.

- Wahba, G. Spline Models for Observational Data; Society for Industrial and Applied Mathematics (SIAM): Philadelphia, PA, USA, 1990.

- Marshak, A.; Herman, J.; Szabo, A.; Blank, K.; Carn, S.; Cede, A.; Geogdzhayev, I.; Huang, D.; Huang, L.K.; Knyazikhin, Y.; et al. Earth Observations from DSCOVR EPIC Instrument. Bull. Am. Meteorol. Soc. 2018, 99, 1829–1850.

- Geogdzhayev, I.V.; Marshak, A. Calibration of the DSCOVR EPIC visible and NIR channels using MODIS Terra and Aqua data and EPIC lunar observations. Atmos. Meas. Tech. 2018, 11, 359–368.

- Xu, X.; Wang, J.; Wang, Y.; Zeng, J.; Torres, O.; Reid, J.; Miller, S.; Martins, J.; Remer, L. Detecting layer height of smoke aerosols over vegetated land and water surfaces via oxygen absorption bands: Hourly results from EPIC/DSCOVR in deep space. Atmos. Meas. Tech. 2019, 12, 3269–3288.

- García, V.M.; Sasi, S.; Efremenko, D.S.; Doicu, A.; Loyola, D. Radiative transfer models for retrieval of cloud parameters from EPIC/DSCOVR measurements. J. Quant. Spectrosc. Radiat. Transf. 2018, 213, 228–240.

- García, V.M.; Sasi, S.; Efremenko, D.S.; Doicu, A.; Loyola, D. Linearized radiative transfer models for retrieval of cloud parameters from EPIC/DSCOVR measurements. J. Quant. Spectrosc. Radiat. Transf. 2018, 213, 241–251.

- Holben, B.; Eck, T.; Slutsker, I.; Tanré, D.; Buis, J.; Setzer, A.; Vermote, E.; Reagan, J.; Kaufman, Y.; Nakajima, T.; et al. AERONET—A federated instrument network and data archive for aerosol characterization. Remote Sens. Environ. 1998, 66, 1–16.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).