Improving Unmanned Aerial Vehicle Remote Sensing-Based Rice Nitrogen Nutrition Index Prediction with Machine Learning

Abstract

1. Introduction

2. Materials and Methods

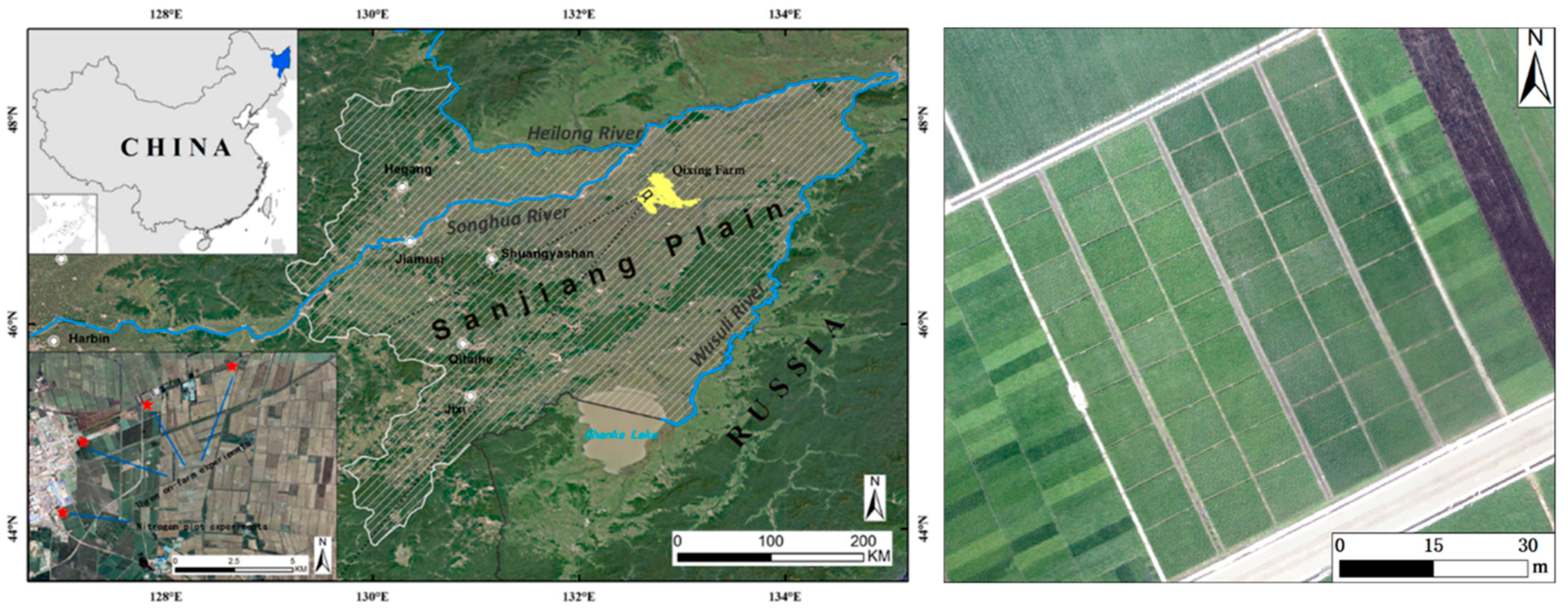

2.1. Study Site

2.2. Experimental Setup

2.3. Field Data Collection and NNI Parametrization

2.4. UAV Image Acquisition and Preprocessing

2.5. Data Analysis

3. Results

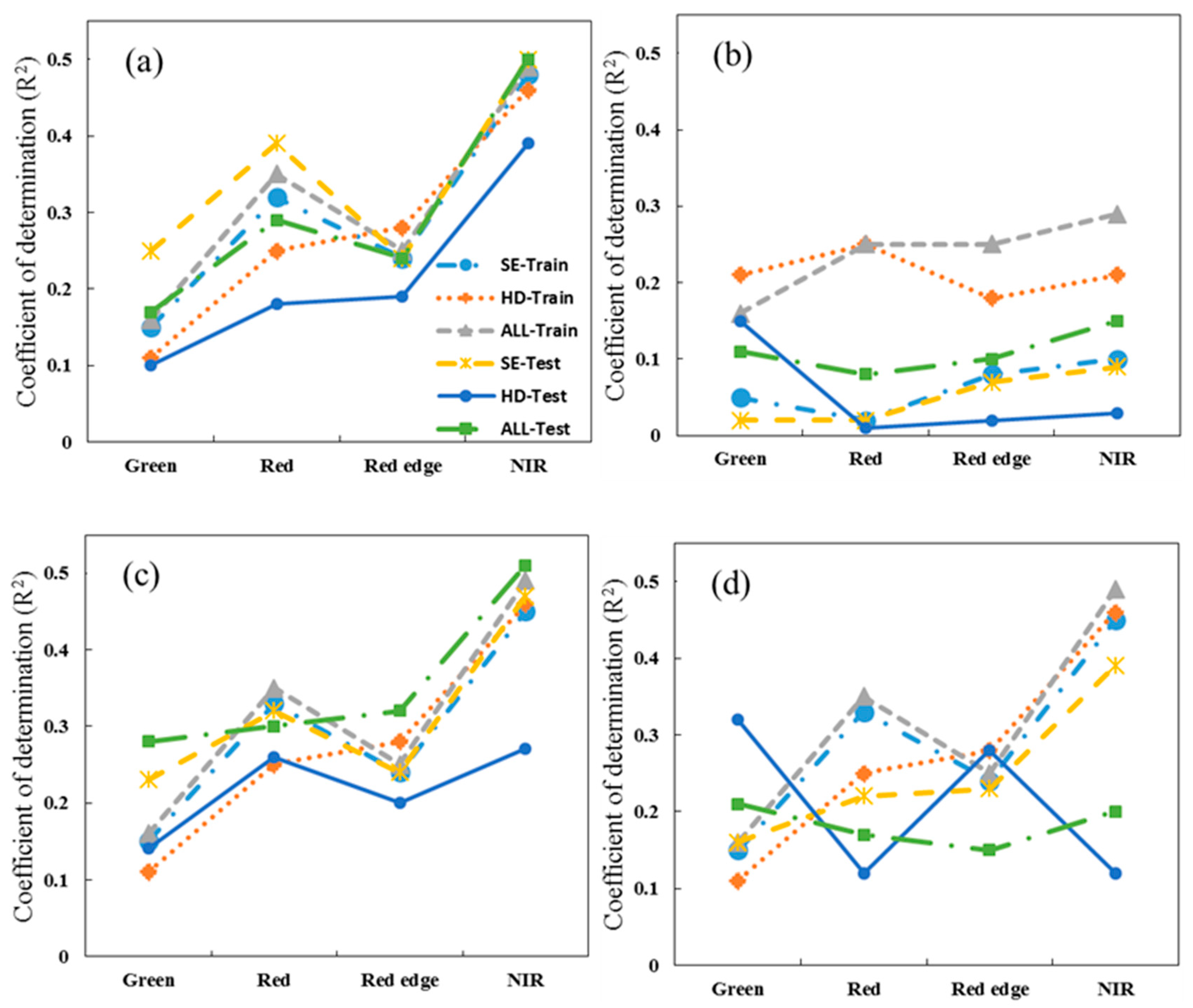

3.1. Single Spectral Band Analysis

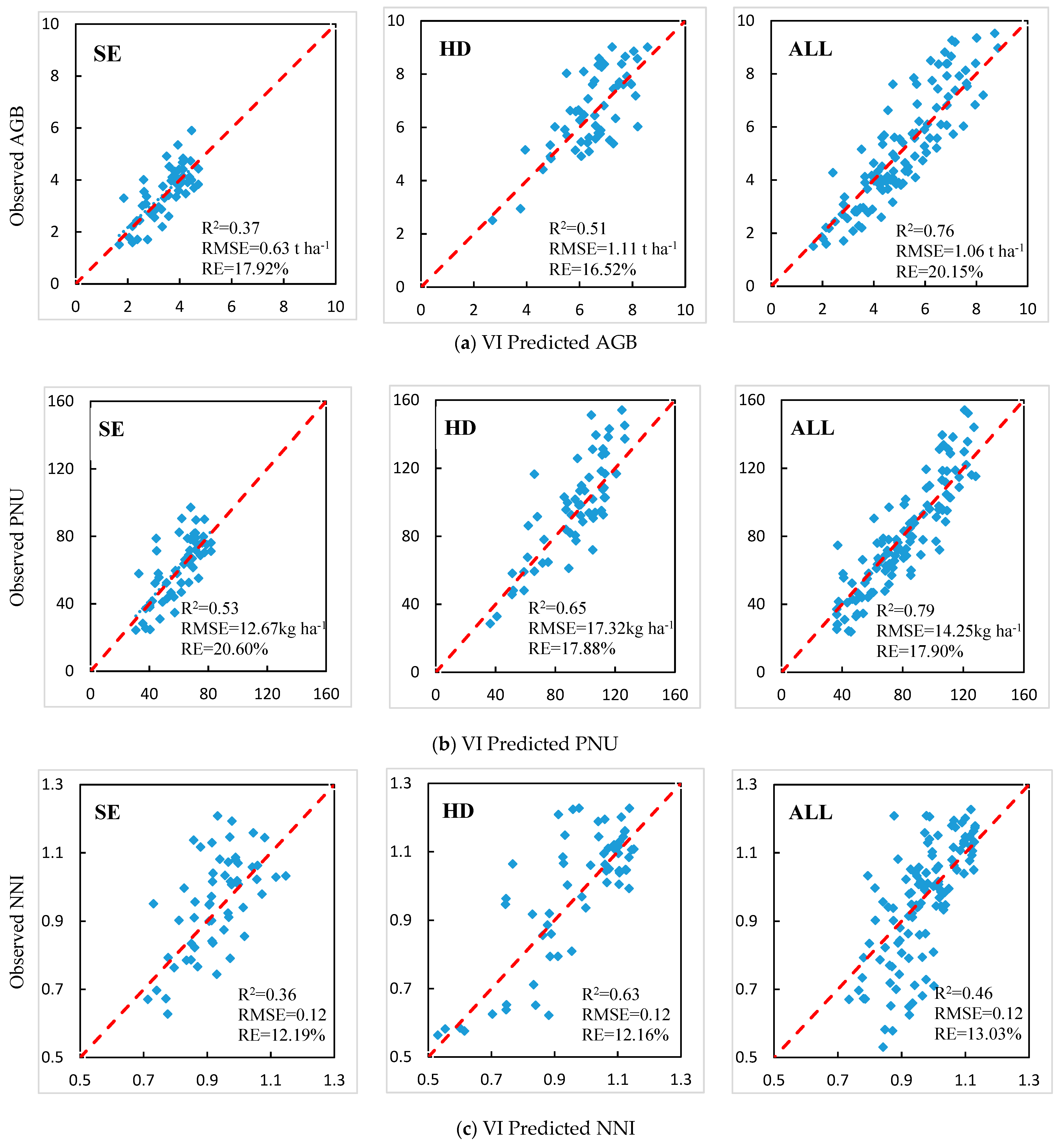

3.2. Vegetation Index Analysis

3.3. Stepwise Multiple Linear Regression (SMLR) Analysis

3.4. Performance of Machine Learning Models

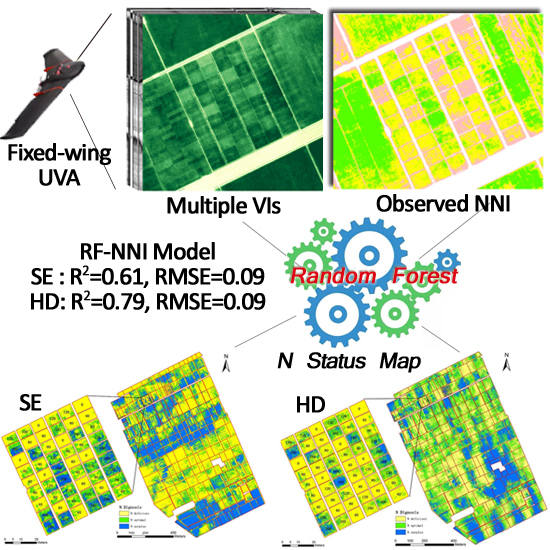

3.5. Random Forest Models Based on Selected Vegetation Indices

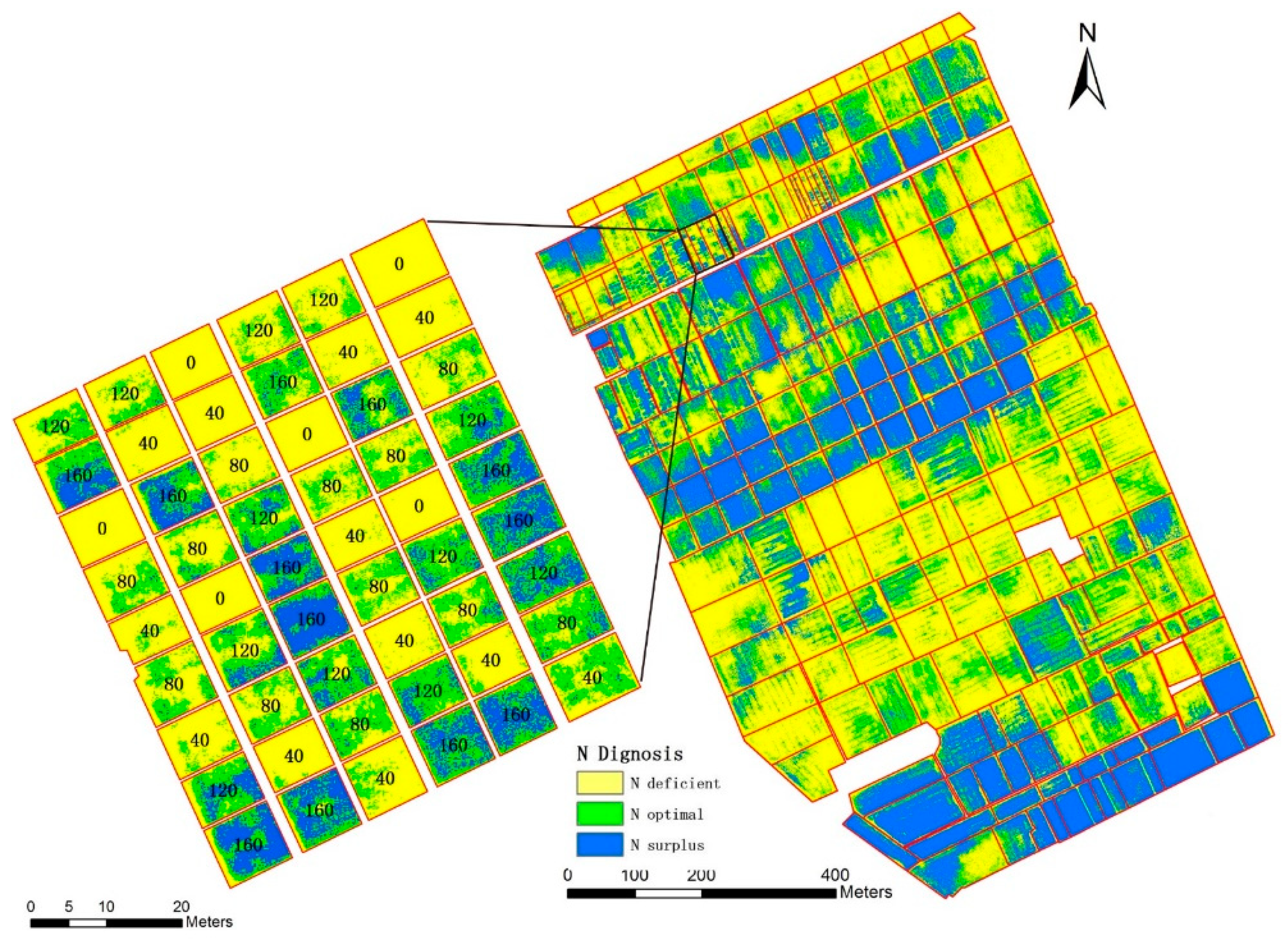

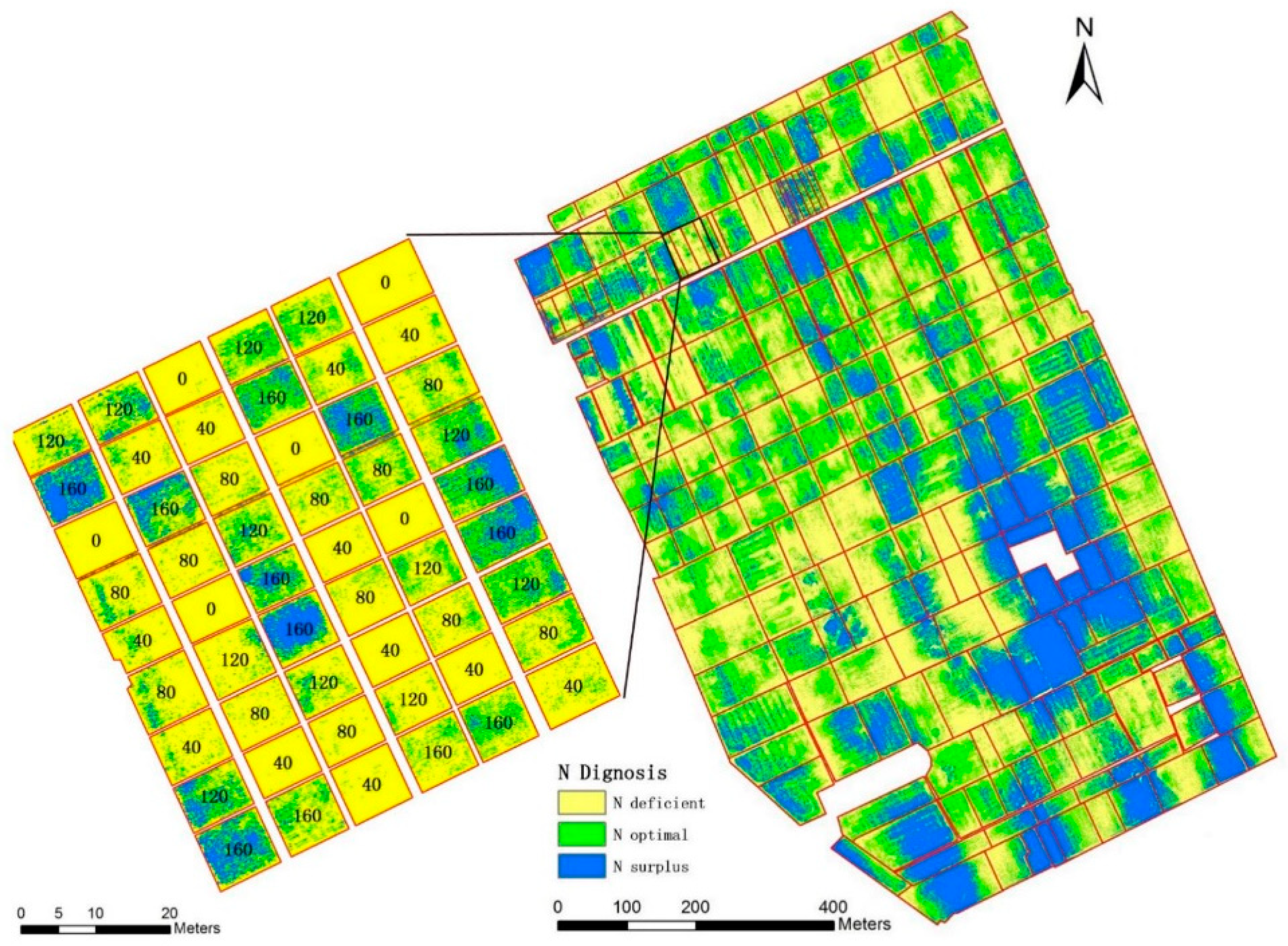

3.6. Nitrogen Status Diagnosis at the Farm Scale

4. Discussion

4.1. Estimating Rice N Status Indicators Using Single Vegetation Index

4.2. The Performance of Different Machine Learning Modeling Methods

4.3. Challenges and Future Research Needs

5. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

Appendix A

| Index | Formula | Reference |

|---|---|---|

| Green Ratio Vegetation Index (GRVI) | NIR/G | [67] |

| Green Difference Vegetation Index (GDVI) | NIR − G | [68] |

| Green Normalized Difference Vegetation Index (GNDVI) | (NIR − G)/(NIR + G) | [69] |

| Green Wide Dynamic Range Vegetation Index (GWDRVI) | (a*NIR − G)/(a*NIR + G) (a = 0.12) | [46] |

| Green Chlorophyll Index (CIg) | NIR/G − 1 | [70] |

| Modified Green Simple Ratio (MSR_G) | (NIR/G − 1)/SQRT(NIR/G + 1) | [46] |

| Green Soil Adjusted Vegetation Index (GSAVI) | 1.5*[(NIR − G)/(NIR + G + 0.5)] | [71] |

| Modified Green Soil Adjusted Vegetation Index (MGSAVI) | 0.5*[2*NIR + 1 − SQRT((2*NIR + 1)^2 − 8*(NIR − G))] | [72] |

| Green Optimal Soil Adjusted Vegetation Index (GOSAVI) | (1 + 0.16)(NIR − G)/(NIR + G + 0.16) | [73] |

| Green Re-normalized Difference Vegetation Index (GRDVI) | (NIR − G)/SQRT(NIR + G) | [46] |

| Normalized Green Index (NGI) | G/(NIR + RE + G) | [71] |

| Normalized Red Edge Index (NREI) | RE/(NIR + RE + G) | [46] |

| Normalized Red Index (NRI) | R/(NIR + RE + R) | [14] |

| Normalized NIR Index (NNIR) | NIR/(NIR + RE + G) | [71] |

| Modified Double Difference Index (MDD) | (NIR − RE) − (RE − G) | [14] |

| Modified Normalized Difference Index (MNDI) | (NIR − RE)/(NIR − G) | [46] |

| Modified Enhanced Vegetation Index (MEVI) | 2.5*(NIR − RE)/(NIR + 6*RE − 7.5*G + 1) | [46] |

| Modified Normalized Difference Red Edge (MNDRE) | [NIR − (RE − 2*G)]/[NIR + (RE − 2*G)] | [46] |

| Modified Chlorophyll Absorption In Reflectance Index1 (MCARI1) | [(NIR − RE) − 0.2*(NIR − R)](NIR/RE) | [46] |

| Modified Chlorophyll Absorption In Reflectance Index 2 (MCARI2) | | [14] |

| Normalized Difference Vegetation Index (NDVI) | (NIR − R)/(NIR + R) | [74] |

| Ratio Vegetation Index (RVI) | NIR/R | [75] |

| Difference Vegetation Index (DVI) | NIR − R | [68] |

| Renormalized Difference Vegetation Index (RDVI) | (NIR − R)/SQRT(NIR + R) | [76] |

| Wide Dynamic Range Vegetation Index (WDRVI) | (a*NIR − R)/(a*NIR + R) (a = 0.12) | [77] |

| Soil-Adjusted Vegetation Index (SAVI) | 1.5*(NIR − R)/(NIR + R + 0.5) | [78] |

| Optimized SAVI (OSAVI) | (1 + 0.16)*(NIR − R)/(NIR + R + 0.16) | [73] |

| Modified Soil-adjusted Vegetation Index (MSAVI) | 0.5*[2*NIR + 1 − SQRT((2*NIR + 1)^2 − 8*(NIR − R))] | [72] |

| Transformed Normalized Vegetation Index (TNDVI) | SQRT((NIR − R)/(NIR + R) + 0.5) | [79] |

| Modified Simple Ratio (MSR) | (NIR/R − 1)/SQRT(NIR/R + 1) | [80] |

| Optimal Vegetation Index (VIopt) | 1.45*((NIR^2 + 1)/(R + 0.45)) | [81] |

| MERIS Terrestrial Chlorophyll Index (MTCI) | (NIR − RE)/(RE − R) | [82] |

| Nonlinear Index (NLI) | (NIR^2 − R)/(NIR^2 + R) | [83] |

| Modified Nonlinear Index (MNLI) | 1.5*(NIR^2 − R)/(NIR^2 + R + 0.5) | [84] |

| NDVI*RVI | (NIR^2 − R)/(NIR + R^2) | [84] |

| SAVI*SR | (NIR^2 − R)/[(NIR + R + 0.5)*R] | [84] |

| Normalized Difference Red Edge (NDRE) | (NIR − RE)/(NIR + RE) | [85] |

| Red Edge Ratio Vegetation Index (RERVI) | NIR/RE | [86] |

| Red Edge Difference Vegetation Index (REDVI) | NIR − RE | [46] |

| Red Edge Re-normalized Difference Vegetation Index (RERDVI) | (NIR − RE)/SQRT(NIR + RE) | [46] |

| Red Edge Wide Dynamic Range Vegetation Index (REWDRVI) | (a*NIR − RE)/(a*NIR + RE) (a = 0.12) | [46] |

| Red Edge Soil Adjusted Vegetation Index (RESAVI) | 1.5*[(NIR − RE)/(NIR + RE + 0.5)] | [46] |

| Red Edge Optimal Soil Adjusted Vegetation Index (REOSAVI) | (1 + 0.16)(NIR − RE)/(NIR + RE + 0.16) | [46] |

| Modified Red Edge Soil Adjusted Vegetation Index (MRESAVI) | 0.5*[2*NIR + 1 − SQRT((2*NIR + 1)^2 − 8*(NIR − RE))] | [46] |

| Optimized Red Edge Vegetation Index (REVIopt) | 100*(lnNIR − lnRE) | [87] |

| Red Edge Chlorophyll Index (CIre) | NIR/RE − 1 | [88] |

| Modified Red Edge Simple Ratio (MSR_RE) | (NIR/RE − 1)/SQRT(NIR/RE + 1) | [14] |

| Red Edge Normalized Difference Vegetation Index (RENDVI) | (RE − R)/(RE + R) | [89] |

| Red Edge Simple Ratio (RESR) | RE/R | [90] |

| Modified Red Edge Difference Vegetation Index (MREDVI) | RE − R | [46] |

| MERIS Terrestrial Chlorophyll Index (MTCI) | (NIR − RE)/(RE − R) | [82] |

| DATT Index (DATT) | (NIR − RE)/(NIR − R) | [91] |

| Normalized Near Infrared Index (NNIRI) | NIR/(NIR + RE + R) | [14] |

| Normalized Red Edge Index (NREI) | RE/(NIR + RE + R) | [14] |

| Normalized Red Index (NRI) | R/(NIR + RE + R) | [14] |

| Modified Double Difference Index (MDD) | (NIR − RE) − (RE − R) | [14] |

| Modified Red Edge Simple Ratio (MRESR) | (NIR − R)/(RE − R) | [14] |

| Modified Normalized Difference Index (MNDI) | (NIR − RE)/(NIR + RE − 2R) | [14] |

| Modified Enhanced Vegetation Index (MEVI) | 2.5*(NIR − R)/(NIR + 6*R − 7.5*RE + 1) | [14] |

| Modified Normalized Difference Red Edge (MNDRE2) | (NIR − RE + 2*R)/(NIR + RE − 2*R) | [14] |

| Red Edge Transformed Vegetation Index (RETVI) | 0.5*[120*(NIR − R) − 200*(RE − R)] | [14] |

| Modified Chlorophyll Absorption In Reflectance Index 3 (MCARI3) | [(NIR − RE) − 0.2*(NIR − R)](NIR/RE) | [14] |

| Modified Chlorophyll Absorption In Reflectance Index 4 (MCARI4) | | [14] |

| Modified Transformed Chlorophyll Absorption In Reflectance Index (MTCARI) | 3*[(NIR − RE) − 0.2*(NIR − R)(NIR/RE)] | [14] |

| Modified Red Edge Transformed Vegetation Index (MRETVI) | 1.2*[1.2*(NIR − R) − 2.5*(RE − R)] | [14] |

| Modified Canopy Chlorophyll Content Index (MCCCI) | NDRE/NDVI | [92] |

| MCARI1/OSAVI | MCARI1/OSAVI | [14] |

| MCARI2/OSAVI | MCARI2/OSAVI | [14] |

| MTCARI/OSAVI | MTCARI/OSAVI | [14] |

| MCARI1/MRETVI | MCARI1/MRETVI | [14] |

| MTCARI/MRETVI | MTCARI/MRETVI | [14] |
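
As a minimal sketch of how the Appendix A formulas translate into computation, the following Python function evaluates a subset of the listed indices from the four band reflectances (G, R, RE, NIR). The function name `vegetation_indices` and the reflectance values in the usage line are hypothetical and serve only as an illustration; they are not measurements from this study.

```python
import math


def vegetation_indices(g, r, re, nir, a=0.12):
    """Compute a few Appendix A indices from band reflectances (0-1 scale).

    g, r, re, nir: green, red, red-edge and near-infrared reflectance.
    a: weighting coefficient of the wide dynamic range indices (0.12 in Appendix A).
    """
    ndvi = (nir - r) / (nir + r)
    ndre = (nir - re) / (nir + re)
    return {
        "GNDVI": (nir - g) / (nir + g),
        "GOSAVI": (1 + 0.16) * (nir - g) / (nir + g + 0.16),
        "GRDVI": (nir - g) / math.sqrt(nir + g),
        "GWDRVI": (a * nir - g) / (a * nir + g),
        "CIg": nir / g - 1,
        "NDVI": ndvi,
        "NDRE": ndre,
        "REDVI": nir - re,
        "NNIR": nir / (nir + re + g),
        "MSAVI": 0.5 * (2 * nir + 1 - math.sqrt((2 * nir + 1) ** 2 - 8 * (nir - r))),
        "MCCCI": ndre / ndvi,
    }


# Hypothetical reflectances for a dense green canopy (illustrative only).
vi = vegetation_indices(g=0.08, r=0.05, re=0.20, nir=0.45)
for name, value in vi.items():
    print(f"{name}: {value:.3f}")
```

Ratio-type indices such as CIg or MCCCI are undefined when the denominator band is zero, so a real processing chain would likely mask such pixels before applying the formulas.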

References

- Miao, Y.; Stewart, B.A.; Zhang, F. Long-term experiments for sustainable nutrient management in China. A review. Agron. Sustain. Dev. 2011, 31, 397–414. [Google Scholar] [CrossRef]

- Cao, Q.; Miao, Y.; Feng, G.; Gao, X.; Liu, B.; Liu, Y.; Li, F.; Khosla, R.; Mulla, D.J.; Zhang, F. Improving nitrogen use efficiency with minimal environmental risks using an active canopy sensor in a wheat-maize cropping system. Field Crop Res. 2017, 214, 365–372. [Google Scholar] [CrossRef]

- Havlin, J.L.; Tisdale, S.L.; Nelson, W.L.; Beaton, J.D. Soil Fertility and Fertilizers: An Introduction to Nutrient Management, 8th ed.; Pearson, Inc.: Upper Saddle River, NJ, USA, 2014; pp. 117–184. [Google Scholar]

- Huang, S.; Miao, Y.; Cao, Q.; Yao, Y.; Zhao, G.; Yu, W.; Shen, J.; Yu, K.; Bareth, G. A new critical nitrogen dilution curve for rice nitrogen status diagnosis in Northeast China. Pedosphere 2018, 28, 814–822. [Google Scholar] [CrossRef]

- Greenwood, D.; Gastal, F.; Lemaire, G.; Draycott, A.; Millard, P.; Neeteson, J. Growth rate and % N of field grown crops: Theory and experiments. Ann. Bot. 1991, 67, 181–190. [Google Scholar] [CrossRef]

- Lemaire, G.; Jeuffroy, M.; Gastal, F. Diagnosis tool for plant and crop N status in vegetative stage. Eur. J. Agron. 2008, 28, 614–624. [Google Scholar] [CrossRef]

- Inoue, Y.; Sakaiya, E.; Zhu, Y.; Takahashi, W. Diagnostic mapping of canopy nitrogen content in rice based on hyperspectral measurements. Remote Sens. Environ. 2012, 126, 210–221. [Google Scholar] [CrossRef]

- Filella, I.; Serrano, L.; Serra, J.; Penuelas, J. Evaluating wheat nitrogen status with canopy reflectance indices and discriminant analysis. Crop Sci. 1995, 35, 1400–1405. [Google Scholar] [CrossRef]

- Caturegli, L.; Corniglia, M.; Gaetani, M.; Grossi, N.; Magni, S.; Migliazzi, M.; Angelini, L.; Mazzoncini, M.; Silvestri, N.; Fontanelli, M.; et al. Unmanned aerial vehicle to estimate nitrogen status of turfgrasses. PLoS ONE 2016, 11, e0158268. [Google Scholar] [CrossRef]

- Jay, S.; Maupas, F.; Bendoula, R.; Gorretta, N. Retrieving LAI, chlorophyll and nitrogen contents in sugar beet crops from multi-angular optical remote sensing: Comparison of vegetation indices and PROSAIL inversion for field phenotyping. Field Crop. Res. 2017, 210, 33–46. [Google Scholar] [CrossRef]

- Clevers, J.G.P.W.; Gitelson, A.A. Remote estimation of crop and grass chlorophyll and nitrogen content using red-edge bands on Sentinel-2 and -3. Int. J. Appl. Earth Obs. 2013, 23, 344–351. [Google Scholar] [CrossRef]

- Cao, Q.; Miao, Y.; Feng, G.; Gao, X.; Li, F.; Liu, B.; Yue, S.; Cheng, S.; Ustin, S.L.; Khosla, R. Active canopy sensing of winter wheat nitrogen status: An evaluation of two sensor systems. Comput. Electron. Agric. 2015, 112, 54–67. [Google Scholar] [CrossRef]

- Padilla, F.M.; Gallardo, M.; Pena-Fleitas, M.T.; Souza, R.D.; Thompson, R.B. Proximal optical sensors for nitrogen management of vegetable crops: A review. Sensors 2018, 18, 2083. [Google Scholar] [CrossRef]

- Lu, J.; Miao, Y.; Shi, W.; Li, J.; Yuan, F. Evaluating different approaches to non-destructive nitrogen status diagnosis of rice using portable RapidSCAN active canopy sensor. Sci. Rep. 2017, 7, 14073. [Google Scholar] [CrossRef]

- Morier, T.; Cambouris, A.N.; Chokmani, K. In-season nitrogen status assessment and yield estimation using hyperspectral vegetation indices in a potato crop. Agron. J. 2015, 107, 1295–1309. [Google Scholar] [CrossRef]

- Xia, T.; Miao, Y.; Wu, D.; Shao, H.; Khosla, R.; Mi, G. Active optical sensing of spring maize for in-season diagnosis of nitrogen status based on nitrogen nutrition index. Remote Sens. 2016, 8, 605. [Google Scholar] [CrossRef]

- Padilla, F.M.; Pena-Fleitas, M.T.; Gallardo, M.; Thompson, R.B. Evaluation of optical sensor measurements of canopy reflectance and of leaf flavonols and chlorophyll contents to assess crop nitrogen status of muskmelon. Eur. J. Agron. 2014, 58, 39–52. [Google Scholar] [CrossRef]

- Huang, S.; Miao, Y.; Yuan, F.; Gnyp, M.; Yao, Y.; Cao, Q.; Wang, H.; Lenz-Wiedemann, V.; Bareth, G. Potential of RapidEye and WorldView-2 satellite data for improving rice nitrogen status monitoring at different growth stages. Remote Sens. 2017, 9, 227. [Google Scholar] [CrossRef]

- Ham, Y.; Han, K.K.; Lin, J.J.; Golparvar-Fard, M. Visual monitoring of civil infrastructure systems via camera-equipped Unmanned Aerial Vehicles (UAVs): A review of related works. Vis. Eng. 2016, 4, 1. [Google Scholar] [CrossRef]

- Yang, G.; Liu, J.; Zhao, C.; Li, Z.; Huang, Y.; Yu, H.; Xu, B.; Yang, X.; Zhu, D.; Zhang, X. Unmanned aerial vehicle remote sensing for field-based crop phenotyping: Current status and perspectives. Front. Plant Sci. 2017, 8, 1111. [Google Scholar] [CrossRef]

- Yao, H.; Qin, R.; Chen, X. Unmanned aerial vehicle for remote sensing applications—A review. Remote Sens. 2019, 11, 1443. [Google Scholar] [CrossRef]

- Barbedo, J.G.A. A review on the use of unmanned aerial vehicles and imaging sensors for monitoring and assessing plant stresses. Drones 2019, 3, 40. [Google Scholar] [CrossRef]

- Deng, L.; Mao, Z.; Li, X.; Hu, Z.; Duan, F.; Yan, Y. UAV-based multispectral remote sensing for precision agriculture: A comparison between different cameras. ISPRS J. Photogramm. 2018, 146, 124–136. [Google Scholar] [CrossRef]

- Pajares, G. Overview and current status of remote sensing applications based on Unmanned Aerial Vehicles (UAVs). Photogramm. Eng. Remote Sens. 2015, 81, 281–330. [Google Scholar] [CrossRef]

- Severtson, D.; Callow, N.; Flower, K.; Neuhaus, A.; Olejnik, M.; Nansen, C. Unmanned aerial vehicle canopy reflectance data detects potassium deficiency and green peach aphid susceptibility in canola. Precis. Agric. 2016, 17, 659–677. [Google Scholar] [CrossRef]

- Vega, F.A.; Ramírez, F.C.; Saiz, M.P.; Rosúa, F.O. Multi-temporal imaging using an unmanned aerial vehicle for monitoring a sunflower crop. Biosyst. Eng. 2015, 132, 19–27. [Google Scholar] [CrossRef]

- Wang, H.; Mortensen, A.K.; Mao, P.; Boelt, B.; Gislum, R. Estimating the nitrogen nutrition index in grass seed crops using a UAV-mounted multispectral camera. Int. J. Remote Sens. 2019, 40, 2467–2482. [Google Scholar] [CrossRef]

- Qiu, J.; Wu, Q.; Ding, G.; Xu, Y.; Feng, S. A survey of machine learning for big data processing. EURASIP J. Adv. Signal Process. 2016, 2016, 67. [Google Scholar] [CrossRef]

- Chlingaryan, A.; Sukkarieh, S.; Whelan, B. Machine learning approaches for crop yield prediction and nitrogen status estimation in precision agriculture: A review. Comput. Electron. Agric. 2018, 151, 61–69. [Google Scholar] [CrossRef]

- Liakos, K.; Busato, P.; Moshou, D.; Pearson, S.; Bochtis, D. Machine learning in agriculture: A review. Sensors 2018, 18, 2674. [Google Scholar] [CrossRef]

- Ali, I.; Greifeneder, F.; Stamenkovic, J.; Neumann, M.; Notarnicola, C. Review of machine learning approaches for biomass and soil moisture retrievals from remote sensing data. Remote Sens. 2015, 7, 16398–16421. [Google Scholar] [CrossRef]

- Han, L.; Yang, G.; Dai, H.; Xu, B.; Yang, H.; Feng, H.; Li, Z.; Yang, X. Modeling maize above-ground biomass based on machine learning approaches using UAV remote-sensing data. Plant Methods 2019, 15, 10. [Google Scholar] [CrossRef] [PubMed]

- Ali, I.; Cawkwell, F.; Dwyer, E.; Green, S. Modeling managed grassland biomass estimation by using multitemporal remote sensing data—A machine learning approach. IEEE J. Sel. Top. Earth Obs. Remote Sens. 2017, 10, 3254–3264. [Google Scholar] [CrossRef]

- Pantazi, X.E.; Moshou, D.; Alexandridis, T.; Whetton, R.L.; Mouazen, A.M. Wheat yield prediction using machine learning and advanced sensing techniques. Comput. Electron. Agric. 2016, 121, 57–65. [Google Scholar] [CrossRef]

- Liu, H.; Zhu, H.; Wang, P. Quantitative modelling for leaf nitrogen content of winter wheat using UAV-based hyperspectral data. Int. J. Remote Sens. 2017, 38, 2117–2134. [Google Scholar] [CrossRef]

- Zheng, H.; Li, W.; Jiang, J.; Liu, Y.; Cheng, T.; Tian, Y.; Zhu, Y.; Cao, W.; Zhang, Y.; Yao, X. A comparative assessment of different modeling algorithms for estimating leaf nitrogen content in winter wheat using multispectral images from an unmanned aerial vehicle. Remote Sens. 2018, 10, 2026. [Google Scholar] [CrossRef]

- Xing, B.; Dudas, M.J.; Zhang, Z.; Xu, Q. Pedogenetic characteristics of albic soils in the three river plain, Heilongjiang Province. Acta Pedol. Sin. 1994, 31, 95–104. [Google Scholar]

- Lv, W.; Ge, Y.; Wu, J.; Chang, J. Study on the method for the determination of nitric nitrogen, ammoniacal nitrogen and total nitrogen in plant. Spectrosc. Spect. Anal. 2004, 24, 204–206. [Google Scholar]

- Chen, Z.; Miao, Y.; Lu, J.; Zhou, L.; Li, Y.; Zhang, H.; Lou, W.; Zhang, Z.; Kusnierek, K.; Liu, C. In-season diagnosis of winter wheat nitrogen status in smallholder farmer fields across a village using unmanned aerial vehicle-based remote sensing. Agronomy 2019, 9, 619. [Google Scholar] [CrossRef]

- Pedregosa, F.; Varoquaux, G.; Gramfort, A.; Michel, V.; Thirion, B.; Grisel, O.; Blondel, M.; Prettenhofer, P.; Weiss, R.; Dubourg, V. Scikit-learn: Machine learning in Python. J. Mach. Learn. Res. 2011, 12, 2825–2830. [Google Scholar]

- Abraham, A.; Pedregosa, F.; Eickenberg, M.; Gervais, P.; Mueller, A.; Kossaifi, J.; Gramfort, A.; Thirion, B.; Varoquaux, G. Machine learning for neuroimaging with scikit-learn. Front. Neuroinform. 2014, 8, 14. [Google Scholar] [CrossRef]

- Xue, J.; Su, B. Significant remote sensing vegetation indices: A review of developments and applications. J. Sens. 2017, 2017, 1353691. [Google Scholar] [CrossRef]

- Hatfield, J.L.; Prueger, J.H. Value of using different vegetative indices to quantify agricultural crop characteristics at different growth stages under varying management practices. Remote Sens. 2010, 2, 562–578. [Google Scholar] [CrossRef]

- Gnyp, M.L.; Miao, Y.; Yuan, F.; Ustin, S.L.; Yu, K.; Yao, Y.; Huang, S.; Bareth, G. Hyperspectral canopy sensing of paddy rice aboveground biomass at different growth stages. Field Crop Res. 2014, 155, 42–55. [Google Scholar] [CrossRef]

- Tang, Y.; Huang, J.; Wang, R. Change law of hyperspectral data in related with chlorophyll and carotenoid in rice at different developmental stages. Rice Sci. 2004, 11, 274–282. [Google Scholar]

- Cao, Q.; Miao, Y.; Wang, H.; Huang, S.; Cheng, S.; Khosla, R.; Jiang, R. Non-destructive estimation of rice plant nitrogen status with Crop Circle multispectral active canopy sensor. Field Crop. Res. 2013, 154, 133–144. [Google Scholar] [CrossRef]

- Jin, X.; Yang, G.; Xu, X.; Yang, H.; Feng, H.; Li, Z.; Shen, J.; Lan, Y.; Zhao, C. Combined multi-temporal optical and radar parameters for estimating LAI and biomass in winter wheat using HJ and RADARSAR-2 Data. Remote Sens. 2015, 7, 13251–13272. [Google Scholar] [CrossRef]

- Miao, Y.; Mulla, D.J.; Randall, G.W.; Vetsch, J.A.; Vintila, R. Combining chlorophyll meter readings and high spatial resolution remote sensing images for in-season site-specific nitrogen management of corn. Precis. Agric. 2009, 10, 45–62. [Google Scholar] [CrossRef]

- Fourty, T.; Baret, F. Vegetation water and dry matter contents estimated from top-of-the-atmosphere reflectance data: A simulation study. Remote Sens. Environ. 1997, 61, 34–45. [Google Scholar] [CrossRef]

- Chen, W.; Zhao, J.; Cao, C.; Tian, H. Shrub biomass estimation in semi-arid sandland ecosystem based on remote sensing technology. Glob. Ecol. Conserv. 2018, 16, e00479. [Google Scholar] [CrossRef]

- Qin, H.; Wang, C.; Xi, X.; Tian, J.; Zhou, G. Estimation of coniferous forest aboveground biomass with aggregated airborne small-footprint LiDAR full-waveforms. Opt. Express 2017, 25, A851–A869. [Google Scholar] [CrossRef]

- Forkuor, G.; Hounkpatin, O.K.L.; Welp, G.; Thiel, M. High Resolution Mapping of Soil Properties Using Remote Sensing Variables in South-Western Burkina Faso: A comparison of machine learning and multiple linear regression models. PLoS ONE 2017, 12, e0170478. [Google Scholar] [CrossRef] [PubMed]

- Breiman, L.; Friedman, J.; Olshen, R.; Stone, C. Classification and Regression Trees (The Wadsworth Statistics/Probability Series); Chapman and Hall/CRC: New York, NY, USA, 1984; pp. 1–358. [Google Scholar]

- Rodriguez-Galiano, V.; Mendes, M.P.; Garcia-Soldado, M.J.; Chica-Olmo, M.; Ribeiro, L. Predictive modeling of groundwater nitrate pollution using Random Forest and multisource variables related to intrinsic and specific vulnerability: A case study in an agricultural setting (Southern Spain). Sci. Total Environ. 2014, 476, 189–206. [Google Scholar] [CrossRef] [PubMed]

- Wang, L.; Zhou, X.; Zhu, X.; Dong, Z.; Guo, W. Estimation of biomass in wheat using random forest regression algorithm and remote sensing data. Crop J. 2016, 4, 212–219. [Google Scholar] [CrossRef]

- Durbha, S.S.; King, R.L.; Younan, N.H. Support vector machines regression for retrieval of leaf area index from multiangle imaging spectroradiometer. Remote Sens. Environ. 2007, 107, 348–361. [Google Scholar] [CrossRef]

- He, Q. Neural Network and its Application in IR. In Graduate School of Library and Information Science, University of Illinois at Urbana-Champaign; Spring: Champaign, IL, USA, 1999. [Google Scholar]

- Yuan, H.; Yang, G.; Li, C.; Wang, Y.; Liu, J.; Yu, H.; Feng, H.; Xu, B.; Zhao, X.; Yang, X. Retrieving soybean leaf area index from unmanned aerial vehicle hyperspectral remote sensing: Analysis of RF, ANN, and SVM Regression Models. Remote Sens. 2017, 9, 309. [Google Scholar] [CrossRef]

- Yao, X.; Huang, Y.; Shang, G.; Zhou, C.; Cheng, T.; Tian, Y.; Cao, W.; Zhu, Y. Evaluation of six algorithms to monitor wheat leaf nitrogen concentration. Remote Sens. 2015, 7, 14939–14966. [Google Scholar] [CrossRef]

- Yue, J.; Yang, G.; Li, C.; Li, Z.; Wang, Y.; Feng, H.; Xu, B. Estimation of winter wheat above-ground biomass using unmanned aerial vehicle-based snapshot hyperspectral sensor and crop height improved models. Remote Sens. 2017, 9, 708. [Google Scholar] [CrossRef]

- Tsouros, D.C.; Bibi, S.; Sarigiannidis, P.G. A review on UAV-based applications for precision agriculture. Information 2019, 10, 349. [Google Scholar] [CrossRef]

- Hunt, E.R., Jr.; Daughtry, C.S.T. What good are unmanned aircraft systems for agricultural remote sensing and precision agriculture? Int. J. Remote Sens. 2018, 39, 5345–5376. [Google Scholar] [CrossRef]

- Li, S.; Ding, X.; Kuang, Q.; Ata-Ul-Karim, S.T.; Cheng, T.; Liu, X.; Tian, Y.; Zhu, Y.; Cao, W.; Cao, Q. Potential of UAV-based active sensing for monitoring rice leaf nitrogen status. Front. Plant Sci. 2018, 9, 1834. [Google Scholar] [CrossRef]

- Herrmann, I.; Karnieli, A.; Bonfil, D.J.; Cohen, Y.; Alchanatis, V. SWIR-based spectral indices for assessing nitrogen content in potato fields. Int. J. Remote Sens. 2010, 31, 5127–5143. [Google Scholar] [CrossRef]

- Elarab, M.; Ticlavilca, A.M.; Torres-Rua, A.F.; Maslova, I.; McKee, M. Estimating chlorophyll with thermal and broadband multispectral high resolution imagery from an unmanned aerial system using relevance vector machines for precision agriculture. Int. J. Appl. Earth Observ. Geoinf. 2015, 43, 32–42. [Google Scholar] [CrossRef]

- Pullanagari, R.R.; Kereszturi, G.; Yule, I. Integrating airborne hyperspectral, topographic, and soil data for estimating pasture quality using recursive feature elimination with random forest regression. Remote Sens. 2018, 10, 1117. [Google Scholar] [CrossRef]

- Buschmann, C.; Nagel, E. In vivo spectroscopy and internal optics of leaves as basis for remote sensing of vegetation. Int. J. Remote Sens. 1993, 14, 711–722. [Google Scholar] [CrossRef]

- Tucker, C.J. Red and photographic infrared linear combinations for monitoring vegetation. Remote Sens. Environ. 1979, 8, 127–150. [Google Scholar] [CrossRef]

- Gitelson, A.A.; Kaufman, Y.J.; Merzlyak, M.N. Use of a green channel in remote sensing of global vegetation from EOS-MODIS. Remote Sens. Environ. 1996, 58, 289–298. [Google Scholar] [CrossRef]

- Gitelson, A.A. Remote estimation of canopy chlorophyll content in crops. Geophys. Res. Lett. 2005, 32, L08403. [Google Scholar] [CrossRef]

- Sripada, R.P.; Heiniger, R.W.; White, J.G.; Meijer, A.D. Aerial color infrared photography for determining early in-season nitrogen requirements in corn. Agron. J. 2006, 98, 968. [Google Scholar] [CrossRef]

- Qi, J.; Chehbouni, A.; Huete, A.R.; Kerr, Y.H.; Sorooshian, S. A modified soil adjusted vegetation index. Remote Sens. Environ. 1994, 48, 119–126. [Google Scholar] [CrossRef]

- Rondeaux, G.; Steven, M.; Baret, F. Optimization of soil-adjusted vegetation indices. Remote Sens. Environ. 1996, 55, 95–107. [Google Scholar] [CrossRef]

- Rouse, J.W., Jr.; Haas, R.H.; Schell, J.A.; Deering, D.W. Monitoring vegetation systems in the Great Plains with ERTS. In NASA. Goddard Space Flight Center 3d ERTS-1 Symposium; NASA: Washington DC, USA, 1974; pp. 309–317. [Google Scholar]

- Jordan, C.F. Derivation of leaf-area index from quality of light on the forest floor. Ecology 1969, 50, 663–666. [Google Scholar] [CrossRef]

- Roujean, J.; Breon, F. Estimating PAR absorbed by vegetation from bidirectional reflectance measurements. Remote Sens. Environ. 1995, 51, 375–384. [Google Scholar] [CrossRef]

- Gitelson, A.A. Wide dynamic range vegetation index for remote quantification of biophysical characteristics of vegetation. J. Plant Physiol. 2004, 161, 165–173. [Google Scholar] [CrossRef] [PubMed]

- Huete, A.R. A soil-adjusted vegetation index (SAVI). Remote Sens. Environ. 1988, 25, 295–309. [Google Scholar] [CrossRef]

- Sandham, L. Surface temperature measurement from space: A case study in the south western cape of south Africa. S. Afr. J. Enol. Vitic. 1997, 18, 25–30. [Google Scholar] [CrossRef]

- Chen, J.M. Evaluation of vegetation indices and a modified simple ratio for boreal applications. Can. J. Remote Sens. 1996, 22, 229–242. [Google Scholar] [CrossRef]

- Reyniers, M.; Walvoort, D.J.; De Baardemaaker, J. A linear model to predict with a multi-spectral radiometer the amount of nitrogen in winter wheat. Int. J. Remote Sens. 2006, 27, 4159–4179. [Google Scholar] [CrossRef]

- Dash, J.; Curran, P. The MERIS terrestrial chlorophyll index. Int. J. Remote Sens. 2004, 25, 5403–5413. [Google Scholar] [CrossRef]

- Goel, N.S.; Qin, W. Influences of canopy architecture on relationships between various vegetation indices and LAI and FPAR: A computer simulation. Remote Sens. Rev. 1994, 10, 309–347. [Google Scholar] [CrossRef]

- Gong, P.; Pu, R.; Biging, G.S.; Larrieu, M.R. Estimation of forest leaf area index using vegetation indices derived from Hyperion hyperspectral data. IEEE Trans. Geosci. Remote Sens. 2003, 41, 1355–1362. [Google Scholar] [CrossRef]

- Barnes, E.; Clarke, T.; Richards, S.; Colaizzi, P.; Haberland, J.; Kostrzewski, M.; Waller, P.; Choi, C.; Riley, E.; Thompson, T.; et al. Coincident detection of crop water stress, nitrogen status and canopy density using ground based multispectral data. In Proceedings of the Fifth International Conference on Precision Agriculture, Bloomington, MN, USA, 16–19 July 2000. [Google Scholar]

- Gitelson, A.A.; Merzlyak, M.N.; Lichtenthaler, H.K. Detection of red edge position and chlorophyll content by reflectance measurements near 700 nm. J. Plant Physiol. 1996, 148, 501–508. [Google Scholar] [CrossRef]

- Jasper, J.; Reusch, S.; Link, A. Active sensing of the N status of wheat using optimized wavelength combination: Impact of seed rate, variety and growth stage. Precis. Agric. 2009, 9, 23–30. [Google Scholar]

- Gitelson, A.A.; Gritz, Y.; Merzlyak, M.N. Relationships between leaf chlorophyll content and spectral reflectance and algorithms for non-destructive chlorophyll assessment in higher plant leaves. J. Plant Physiol. 2003, 160, 271–282. [Google Scholar] [CrossRef] [PubMed]

- Elsayed, S.; Rischbeck, P.; Schmidhalter, U. Comparing the performance of active and passive reflectance sensors to assess the normalized relative canopy temperature and grain yield of drought-stressed barley cultivars. Field Crop. Res. 2015, 177, 148–160. [Google Scholar] [CrossRef]

- Erdle, K.; Mistele, B.; Schmidhalter, U. Comparison of active and passive spectral sensors in discriminating biomass parameters and nitrogen status in wheat cultivars. Field Crop. Res. 2011, 124, 74–84. [Google Scholar] [CrossRef]

- Datt, B. Visible/near infrared reflectance and chlorophyll content in Eucalyptus leaves. Int. J. Remote Sens. 1999, 20, 2741–2759. [Google Scholar] [CrossRef]

- Long, D.S.; Eitel, J.U.; Huggins, D.R. Assessing nitrogen status of dryland wheat using the canopy chlorophyll content index. Crop Manag. 2009, 8. [Google Scholar] [CrossRef]

| Treatment * | Planting Density (plants m−2) | Total N Rate (kg ha−1) | Base N (kg ha−1) | Tiller N (kg ha−1) | Panicle N (kg ha−1) |

|---|---|---|---|---|---|

| FP | 24 | 120 | 79 | 21 | 20 |

| ROM | 27 | 120 | 79 | 21 | 20 |

| PRM1 | 27 | ? | 71 | 21 | ? |

| PRM2 | 27 | ? | 80 | - | ? |

| PRM3 | 27 | ? | 80 | - | ? |

| | Minimum | Maximum | Mean | SD | CV (%) |

|---|---|---|---|---|---|

| Training dataset (n = 266) | |||||

| AGB (t ha−1) | 0.98 | 10.86 | 5.28 | 1.98 | 37.54 |

| PNC (g kg−1) | 8.75 | 20.99 | 15.65 | 2.51 | 16.03 |

| PNU (kg ha−1) | 15.73 | 154.10 | 80.60 | 27.74 | 34.41 |

| NNI | 0.57 | 1.28 | 0.97 | 0.16 | 16.42 |

| Test dataset (n = 115) | |||||

| AGB (t ha−1) | 1.51 | 10.45 | 5.25 | 2.22 | 42.37 |

| PNC (g kg−1) | 9.36 | 20.04 | 15.53 | 2.40 | 15.47 |

| PNU (kg ha−1) | 23.83 | 154.09 | 79.62 | 31.53 | 39.59 |

| NNI | 0.58 | 1.21 | 0.95 | 0.17 | 17.86 |
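
The CV (%) column in the table above can be reproduced approximately from the SD and mean columns. The short sketch below assumes the usual definition, CV = SD / mean × 100; the helper name `coefficient_of_variation` is hypothetical. For the training AGB row it gives a value close to the tabulated 37.54, and the small difference is presumably due to rounding of the reported SD and mean.

```python
def coefficient_of_variation(sd, mean):
    """Coefficient of variation in percent, assuming CV = SD / mean * 100."""
    return sd / mean * 100.0


# Training dataset, AGB row of the table above: SD = 1.98, mean = 5.28 (t/ha).
cv_agb = coefficient_of_variation(sd=1.98, mean=5.28)
print(f"CV of AGB: {cv_agb:.1f}%")
```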

| AGB (t ha−1) | PNU (kg ha−1) | NNI | ||||||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| Index | Model | R2 | RMSE | RE (%) | Index | Model | R2 | RMSE | RE (%) | Index | Model | R2 | RMSE | RE (%) |

| Stem elongation stage | ||||||||||||||

| GOSAVI | E | 0.65 | 0.58 | 16 | GOSAVI | E | 0.61 | 11.40 | 18 | NNIR | Q | 0.43 | 0.10 | 11 |

| GRDVI | P | 0.64 | 0.58 | 16 | GRDVI | P | 0.60 | 11.63 | 18 | GOSAVI | E | 0.42 | 0.10 | 11 |

| GSAVI | E | 0.63 | 0.59 | 16 | NNIR | E | 0.60 | 12.38 | 20 | GRDVI | P | 0.42 | 0.10 | 11 |

| Heading stage | ||||||||||||||

| NLI | P | 0.65 | 1.01 | 15 | GOSAVI | P | 0.69 | 15.84 | 16 | GNDVI | P | 0.63 | 0.11 | 12 |

| WDRVI | P | 0.61 | 1.08 | 16 | NDVI | P | 0.61 | 17.54 | 18 | CIg | Q | 0.63 | 0.11 | 11 |

| GSAVI | P | 0.59 | 1.03 | 15 | WDRVI | P | 0.60 | 17.57 | 18 | GRVI | Q | 0.63 | 0.11 | 11 |

| Across growth stages | ||||||||||||||

| MGSAVI | E | 0.74 | 1.10 | 21 | GOSAVI | Q | 0.73 | 14.95 | 19 | CIg | Q | 0.39 | 0.13 | 13 |

| GRDVI | E | 0.73 | 1.12 | 21 | MGSAVI | E | 0.69 | 16.30 | 20 | GRVI | Q | 0.38 | 0.13 | 13 |

| GSAVI | E | 0.73 | 1.13 | 21 | GRDVI | E | 0.69 | 16.48 | 21 | GWDRVI | Q | 0.38 | 0.13 | 13 |

| Stage | Regression Equation | R2 | RMSE | RE (%) |

|---|---|---|---|---|

| AGB (t ha−1) | ||||

| SE | −4.053 + 4.384*GNDVI + 0.211*RESR + 16.482*MTCARI/OSAVI | 0.69 | 0.51 | 14 |

| HD | −5.475 + 8.159*MCARI3 + 1.106*MSR | 0.62 | 0.97 | 14 |

| All | 7.906 + 81.541*MGSAVI − 90.222*GSAVI − 3.516*MCARI2*OSAVI | 0.68 | 1.11 | 21 |

| PNU (kg ha−1) | ||||

| SE | −198.601 + 353.387*GOSAVI + 132.397*MNDRE2 − 91.552*MCARI1 | 0.63 | 10.32 | 16 |

| HD | −267.115 + 579.684*GOSAVI − 206.772*RE | 0.69 | 15.18 | 16 |

| All | 6.614 + 613.62*MGSAVI − 1711.01*SAVI + 248.331*REDVI + 1237.866*RDVI | 0.73 | 14.38 | 18 |

| NNI | ||||

| SE | −7.976 + 32.438*NNIR − 15.718*NNIRI + 16.493*RE − 7.852*MGSAVI + 0.038*SAVI*SR | 0.54 | 0.09 | 9 |

| HD | −36.417 + 39.501*GNDVI + 103.241*NGI − 2.601*MNDI | 0.75 | 0.09 | 9 |

| All | 0.983 + 0.776*MNDRE2 − 7.632*NGI + 7.384*R | 0.40 | 0.13 | 13 |
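
Each SMLR model in the table above is a linear combination of vegetation indices, so applying one reduces to a weighted sum. The sketch below evaluates the heading-stage (HD) AGB equation, −5.475 + 8.159*MCARI3 + 1.106*MSR. The helper `apply_linear_model` and the index values passed to it are hypothetical and only illustrate the arithmetic; they are not observations from the experiment.

```python
def apply_linear_model(intercept, coefficients, indices):
    """Evaluate intercept + sum(coefficient * index value) for one plot or pixel."""
    return intercept + sum(coef * indices[name] for name, coef in coefficients.items())


# Coefficients of the HD-stage AGB model from the SMLR table.
hd_agb_coefficients = {"MCARI3": 8.159, "MSR": 1.106}

# Hypothetical index values for a single plot (illustrative only).
plot_indices = {"MCARI3": 0.9, "MSR": 3.0}

agb_estimate = apply_linear_model(-5.475, hd_agb_coefficients, plot_indices)
print(f"Estimated AGB: {agb_estimate:.2f} t/ha")
```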

| Parameter | SE | HD | ALL | ||||||

|---|---|---|---|---|---|---|---|---|---|

| R2 | RMSE | RE (%) | R2 | RMSE | RE (%) | R2 | RMSE | RE (%) | |

| AGB (t ha−1) | 0.61 | 0.51 | 14 | 0.52 | 1.09 | 16 | 0.77 | 1.05 | 20 |

| PNU (kg ha−1) | 0.60 | 11.60 | 19 | 0.65 | 16.96 | 18 | 0.80 | 13.76 | 17 |

| NNI | 0.52 | 0.10 | 10 | 0.74 | 0.09 | 10 | 0.53 | 0.11 | 12 |

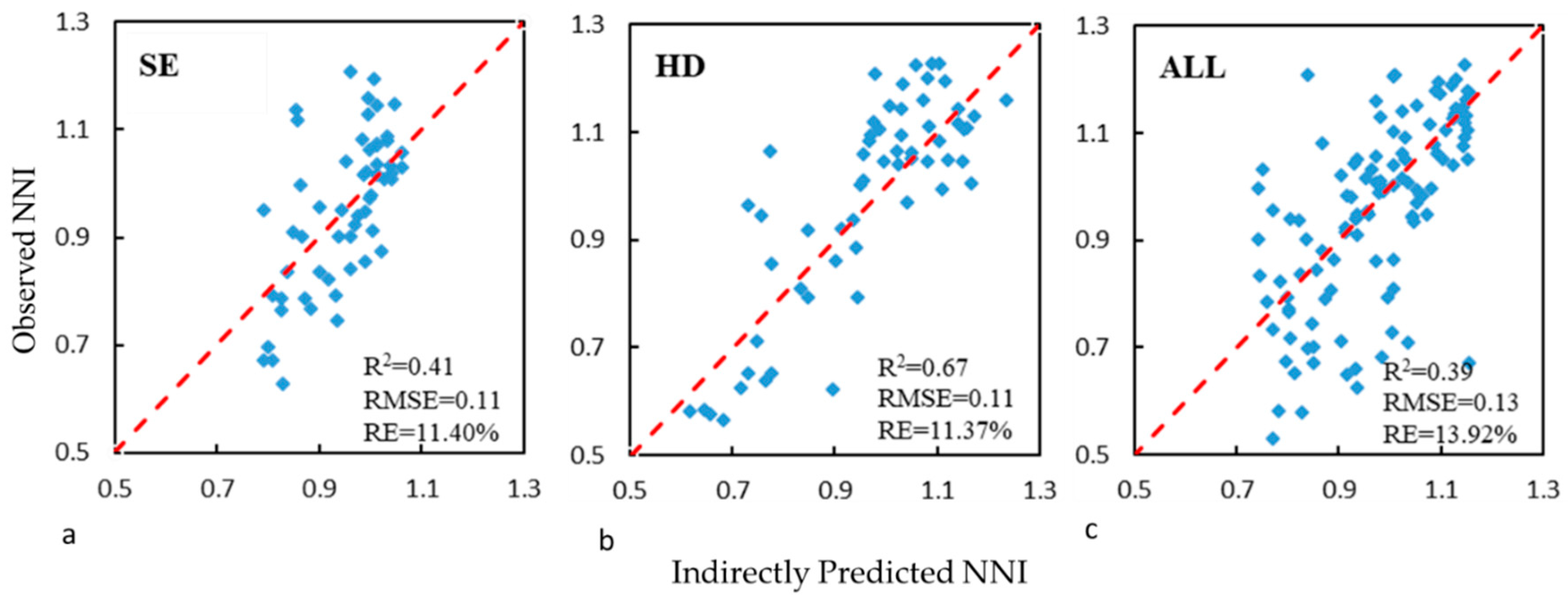

| NNI_Indirect | 0.51 | 0.10 | 11 | 0.74 | 0.10 | 10 | 0.49 | 0.11 | 12 |

| Parameter | SE | HD | ALL | |||||||

|---|---|---|---|---|---|---|---|---|---|---|

| R2 | RMSE | RE (%) | R2 | RMSE | RE (%) | R2 | RMSE | RE (%) | ||

| AGB (t ha−1) | RF | 0.87 | 0.33 | 9 | 0.85 | 0.6 | 9 | 0.92 | 0.54 | 10 |

| SVM | 0.74 | 0.47 | 13 | 0.62 | 0.79 | 11 | 0.88 | 0.69 | 17 | |

| ANN | 0.88 | 0.32 | 9 | 0.77 | 0.74 | 11 | 0.97 | 0.31 | 19 | |

| PNU (kg ha−1) | RF | 0.93 | 4.59 | 7 | 0.93 | 7.05 | 7 | 0.90 | 8.59 | 16 |

| SVM | 0.65 | 10.05 | 16 | 0.70 | 15.07 | 15 | 0.73 | 14.38 | 18 | |

| ANN | 0.71 | 9.1 | 14 | 0.73 | 13.53 | 14 | 0.95 | 6.47 | 8 | |

| NNI | RF | 0.94 | 0.03 | 3 | 0.96 | 0.03 | 3 | 0.93 | 0.04 | 4 |

| SVM | 0.65 | 0.08 | 8 | 0.79 | 0.08 | 8.52 | 0.75 | 0.08 | 8.08 | |

| ANN | 0.73 | 0.07 | 7 | 0.81 | 0.08 | 8.66 | 0.55 | 0.11 | 10.61 | |
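
The three statistics reported throughout these tables (R2, RMSE and RE) can be computed from paired observed and predicted values. The sketch below is written under two assumptions that this text does not state explicitly: R2 is taken as the coefficient of determination, 1 − SS_res/SS_tot, and RE is taken as RMSE divided by the mean observed value, in percent. The function name `evaluate` and the sample NNI values are hypothetical.

```python
import math


def evaluate(observed, predicted):
    """Return R2, RMSE and RE (%) for paired observed and predicted values.

    Assumed definitions (not confirmed by the text):
      R2 = 1 - SS_res / SS_tot
      RE = RMSE / mean(observed) * 100
    """
    n = len(observed)
    mean_obs = sum(observed) / n
    ss_res = sum((o - p) ** 2 for o, p in zip(observed, predicted))
    ss_tot = sum((o - mean_obs) ** 2 for o in observed)
    rmse = math.sqrt(ss_res / n)
    return {"R2": 1 - ss_res / ss_tot, "RMSE": rmse, "RE": rmse / mean_obs * 100}


# Hypothetical NNI values for three plots (illustrative only).
metrics = evaluate(observed=[0.8, 1.0, 1.2], predicted=[0.9, 1.0, 1.1])
print({name: round(value, 3) for name, value in metrics.items()})
```

If R2 was instead computed as the squared Pearson correlation between observed and predicted values, the two definitions can differ for biased models, so the choice should be checked against the Data Analysis section.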

| Parameter | SE | HD | ALL | |||||||

|---|---|---|---|---|---|---|---|---|---|---|

| R2 | RMSE | RE (%) | R2 | RMSE | RE (%) | R2 | RMSE | RE (%) | ||

| AGB (t ha−1) | RF | 0.64 | 0.58 | 16 | 0.61 | 1.00 | 15 | 0.83 | 0.58 | 16 |

| SVM | 0.38 | 0.76 | 22 | 0.59 | 1.01 | 15 | 0.81 | 0.95 | 18 | |

| ANN | 0.60 | 0.62 | 17 | 0.39 | 1.24 | 18 | 0.65 | 1.31 | 25 | |

| PNU (kg ha−1) | RF | 0.62 | 11.52 | 19 | 0.69 | 16.45 | 17 | 0.83 | 12.81 | 16 |

| SVM | 0.55 | 11.92 | 19 | 0.49 | 20.98 | 22 | 0.79 | 14.16 | 18 | |

| ANN | 0.57 | 12.13 | 9 | 0.63 | 17.89 | 18 | 0.74 | 15.88 | 20 | |

| NNI | RF | 0.58 | 0.09 | 10 | 0.79 | 0.09 | 9 | 0.72 | 0.09 | 9.34 |

| SVM | 0.46 | 0.10 | 11 | 0.70 | 0.11 | 11 | 0.62 | 0.11 | 11 | |

| ANN | 0.56 | 0.10 | 10 | 0.79 | 0.09 | 9 | 0.61 | 0.11 | 11 | |

| NNI_Indirect | RF | 0.54 | 0.10 | 10 | 0.64 | 0.10 | 10 | 0.64 | 0.10 | 11 |

| SVM | 0.37 | 0.11 | 12 | 0.49 | 0.15 | 16 | 0.50 | 0.12 | 13 | |

| ANN | 0.34 | 0.17 | 18 | 0.58 | 0.14 | 15 | 0.46 | 0.15 | 16 | |

| Parameter | SE | HD | ALL | |||||||

|---|---|---|---|---|---|---|---|---|---|---|

| R2 | RMSE | RE (%) | R2 | RMSE | RE (%) | R2 | RMSE | RE (%) | ||

| AGB (t ha−1) | Calibration | 0.91 | 0.28 | 7.66 | 0.95 | 0.35 | 5.06 | 0.97 | 0.36 | 6.90 |

| Validation | 0.66 | 0.58 | 16.45 | 0.69 | 0.88 | 13.15 | 0.83 | 0.92 | 17.54 | |

| PNU (kg ha−1) | Calibration | 0.94 | 4.11 | 6.52 | 0.96 | 5.32 | 5.46 | 0.94 | 7.35 | 9.12 |

| Validation | 0.66 | 11.13 | 18.10 | 0.69 | 16.39 | 16.94 | 0.85 | 12.37 | 15.55 | |

| NNI_direct | Calibration | 0.94 | 0.03 | 3.33 | 0.96 | 0.04 | 3.65 | 0.93 | 0.04 | 4.45 |

| Validation | 0.61 | 0.09 | 9.98 | 0.79 | 0.09 | 9.06 | 0.74 | 0.09 | 8.72 | |

| NNI_indirect | Validation | 0.53 | 0.10 | 10.77 | 0.72 | 0.10 | 10.60 | 0.67 | 0.10 | 10.13 |
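
The distinction between the NNI_direct and NNI_indirect rows can be illustrated with a short sketch. It assumes the common formulation NNI = PNC / Nc with a critical N dilution curve of the form Nc = a * AGB^(−b), and it assumes that the indirect route combines predicted AGB and PNU as NNI = PNU / (Nc * AGB). The coefficients `a` and `b` below are placeholders chosen for illustration, not values reported in this text, and the function names are hypothetical.

```python
def critical_n_concentration(agb, a, b):
    """Critical plant N concentration (g/kg) from AGB (t/ha), assuming Nc = a * AGB**(-b)."""
    return a * agb ** (-b)


def nni_from_pnc(pnc, agb, a, b):
    """NNI as measured plant N concentration divided by the critical concentration."""
    return pnc / critical_n_concentration(agb, a, b)


def nni_indirect(pnu, agb, a, b):
    """NNI from AGB and PNU: PNU (kg/ha) over critical PNU (Nc in g/kg times AGB in t/ha)."""
    critical_pnu = critical_n_concentration(agb, a, b) * agb
    return pnu / critical_pnu


# Placeholder curve coefficients (assumptions for illustration only).
A, B = 27.7, 0.34

# Mean AGB and PNU of the training dataset table, used here only as sample inputs.
nni = nni_indirect(pnu=80.60, agb=5.28, a=A, b=B)
print(f"Indirect NNI: {nni:.2f}")
```

Because PNU equals PNC times AGB when the units are g/kg and t/ha, both functions return the same value for consistent inputs. In the indirect approach, errors in the AGB and PNU predictions both carry over into the NNI estimate, which may be one reason the NNI_indirect rows show slightly lower accuracy than direct prediction.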

| AGB (t ha−1) | PNU (kg ha−1) | NNI | |||

|---|---|---|---|---|---|

| SE | N = 21 | N = 21 | N = 22 | ||

| NNIR | 0.09 | NNIR | 0.22 | REDVI | 0.21 |

| REDVI | 0.09 | REDVI | 0.20 | NNIR | 0.13 |

| MSR_G | 0.0 | GOSAVI | 0.12 | MERIS | 0.06 |

| GOSAVI | 0.07 | NLI | 0.05 | MTCARI/OSAVI | 0.05 |

| CIg | 0.06 | REOSAVI | 0.04 | GOSAVI | 0.05 |

| HD | N = 17 | N = 20 | N = 23 | ||

| OSAVI | 0.30 | GOSAVI | 0.49 | GNDVI | 0.53 |

| MCARI3 | 0.23 | GWDRVI | 0.18 | NNIR | 0.09 |

| VIopt | 0.10 | NRI2 | 0.04 | GOSAVI | 0.09 |

| GOSAVI | 0.07 | NRI | 0.04 | NGI | 0.04 |

| MCARI1/MRETVI | 0.05 | Green | 0.03 | REDVI | 0.02 |

| ALL | N = 19 | N = 23 | N = 23 | ||

| GRDVI | 0.37 | GRDVI | 0.49 | CIg | 0.24 |

| GOSAVI | 0.30 | GRVI | 0.14 | GOSAVI | 0.10 |

| NLI | 0.06 | NNIR | 0.05 | Red | 0.06 |

| MNDRE | 0.04 | SAVI*SR | 0.05 | RETVI | 0.05 |

| OSAVI | 0.04 | GSAVI | 0.04 | MDD | 0.04 |

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Zha, H.; Miao, Y.; Wang, T.; Li, Y.; Zhang, J.; Sun, W.; Feng, Z.; Kusnierek, K. Improving Unmanned Aerial Vehicle Remote Sensing-Based Rice Nitrogen Nutrition Index Prediction with Machine Learning. Remote Sens. 2020, 12, 215. https://doi.org/10.3390/rs12020215
