IM2ELEVATION: Building Height Estimation from Single-View Aerial Imagery

Abstract

1. Introduction

2. Related Works

2.1. Fusion of Heterogeneous Data Streams

2.2. Mapping of Buildings and Rooftops

2.3. Monocular Depth Estimation from Images

2.4. Aerial Image Height Estimation

2.5. Point Cloud and Aerial Imagery Registration

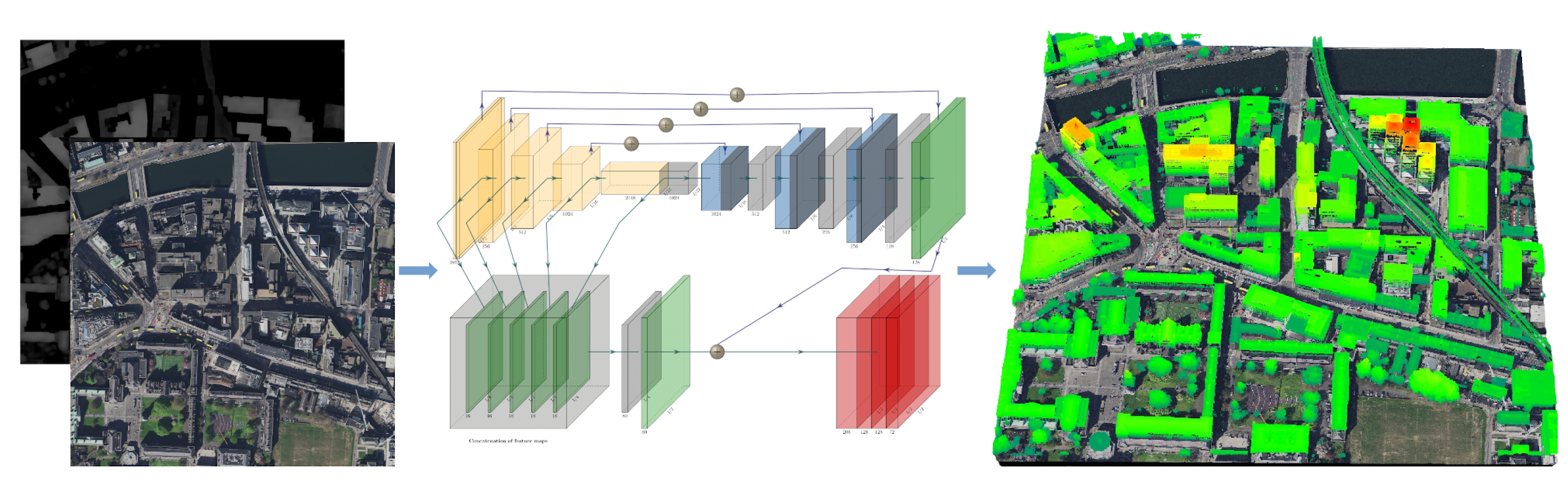

3. Data Preprocessing and Registration

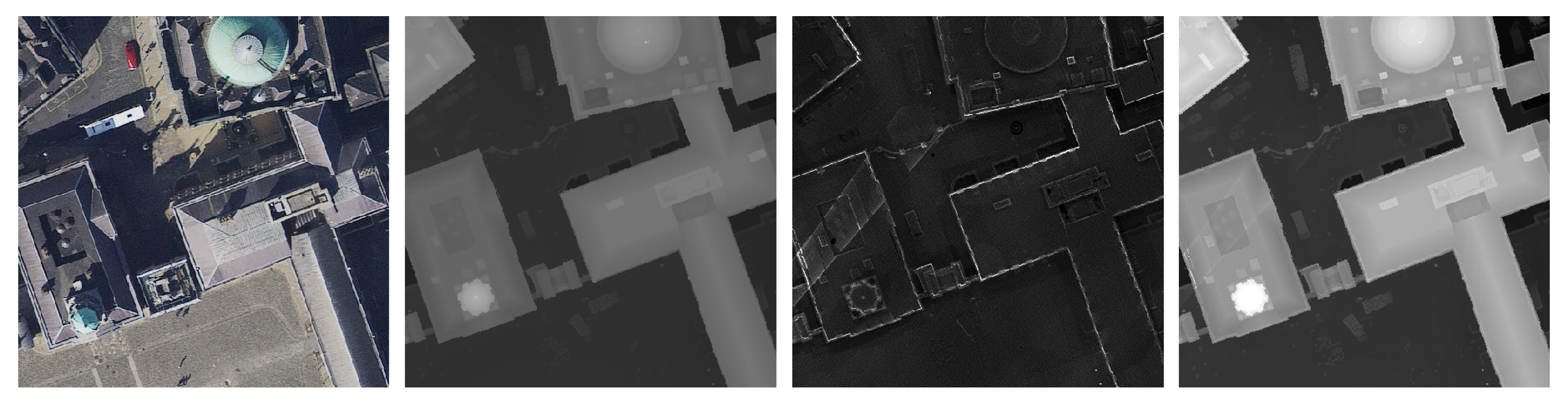

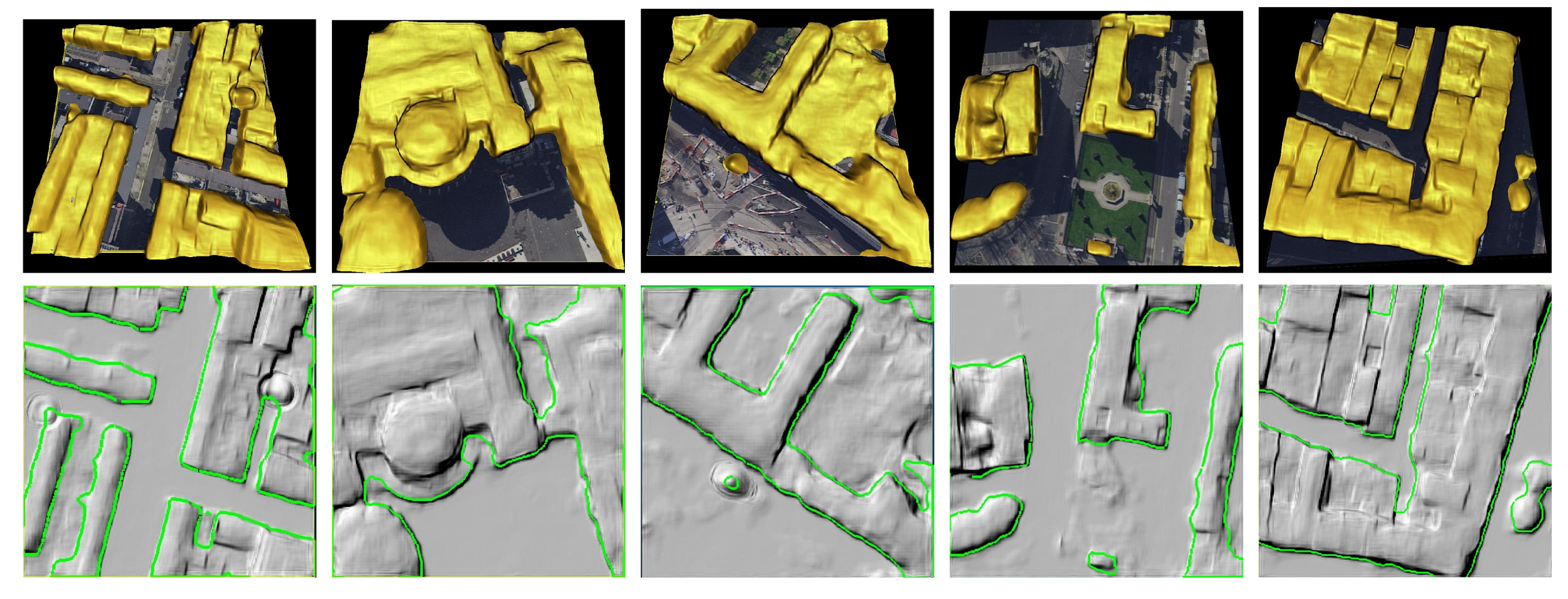

3.1. Preprocessing of Lidar Data

3.2. Preprocessing of Aerial Orthorectified Imagery

3.3. Registration with Mutual Information

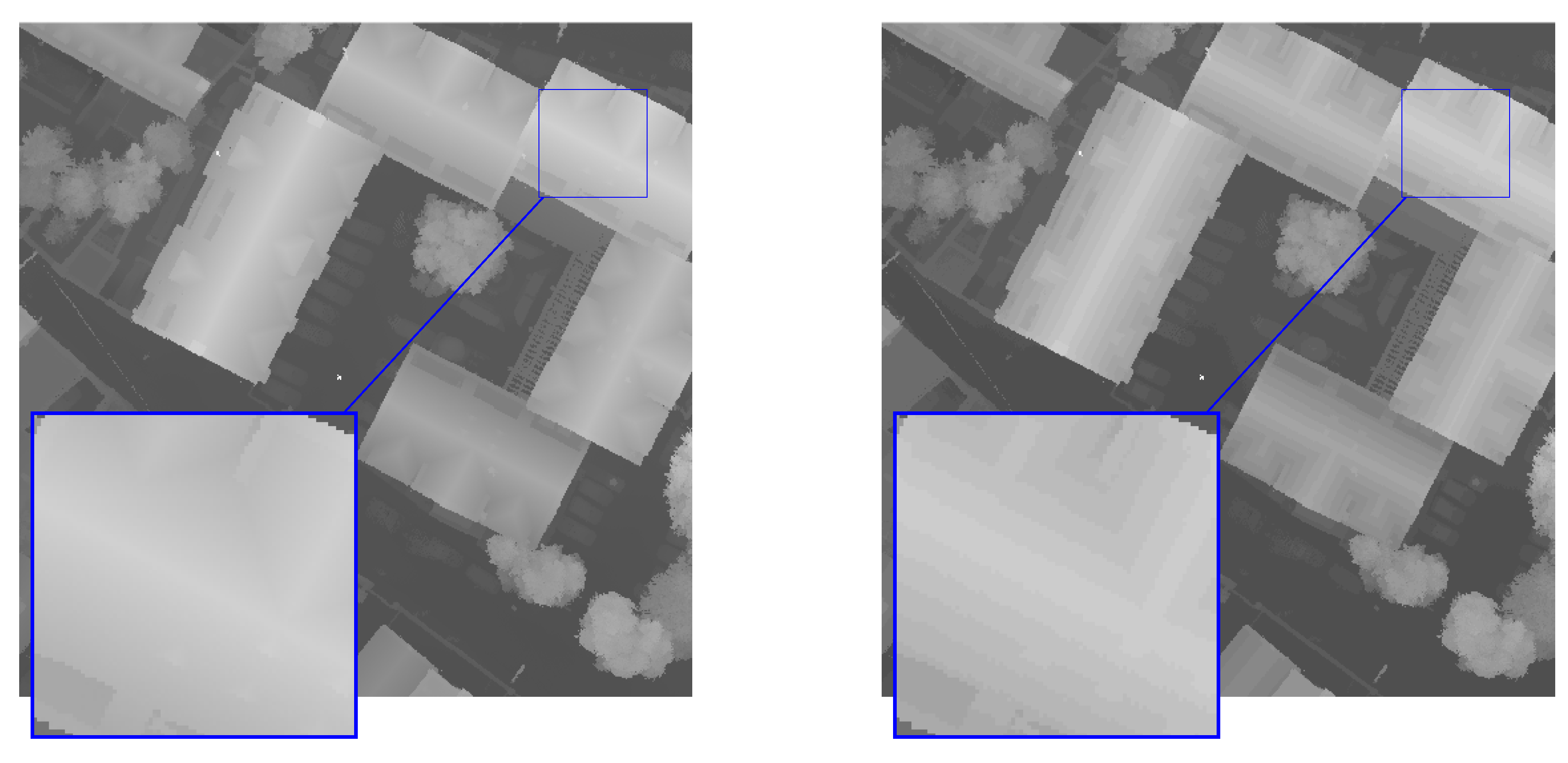

3.4. Patch Adjustment via Hough Lines Validation

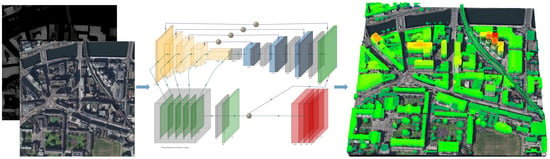

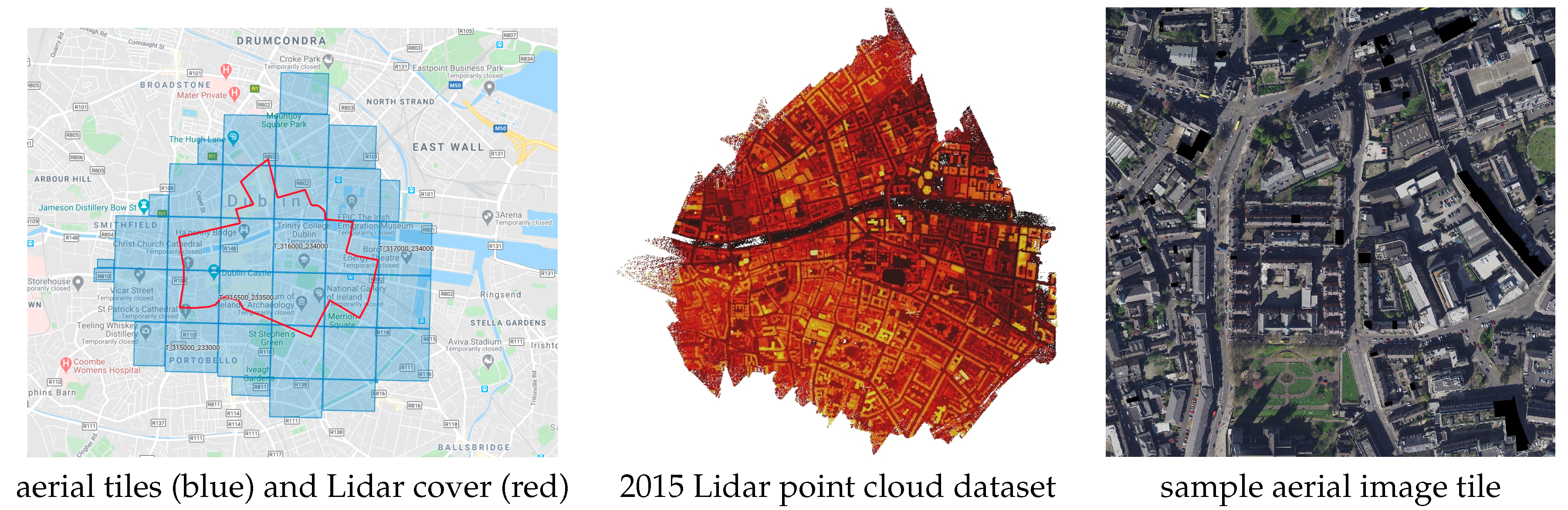

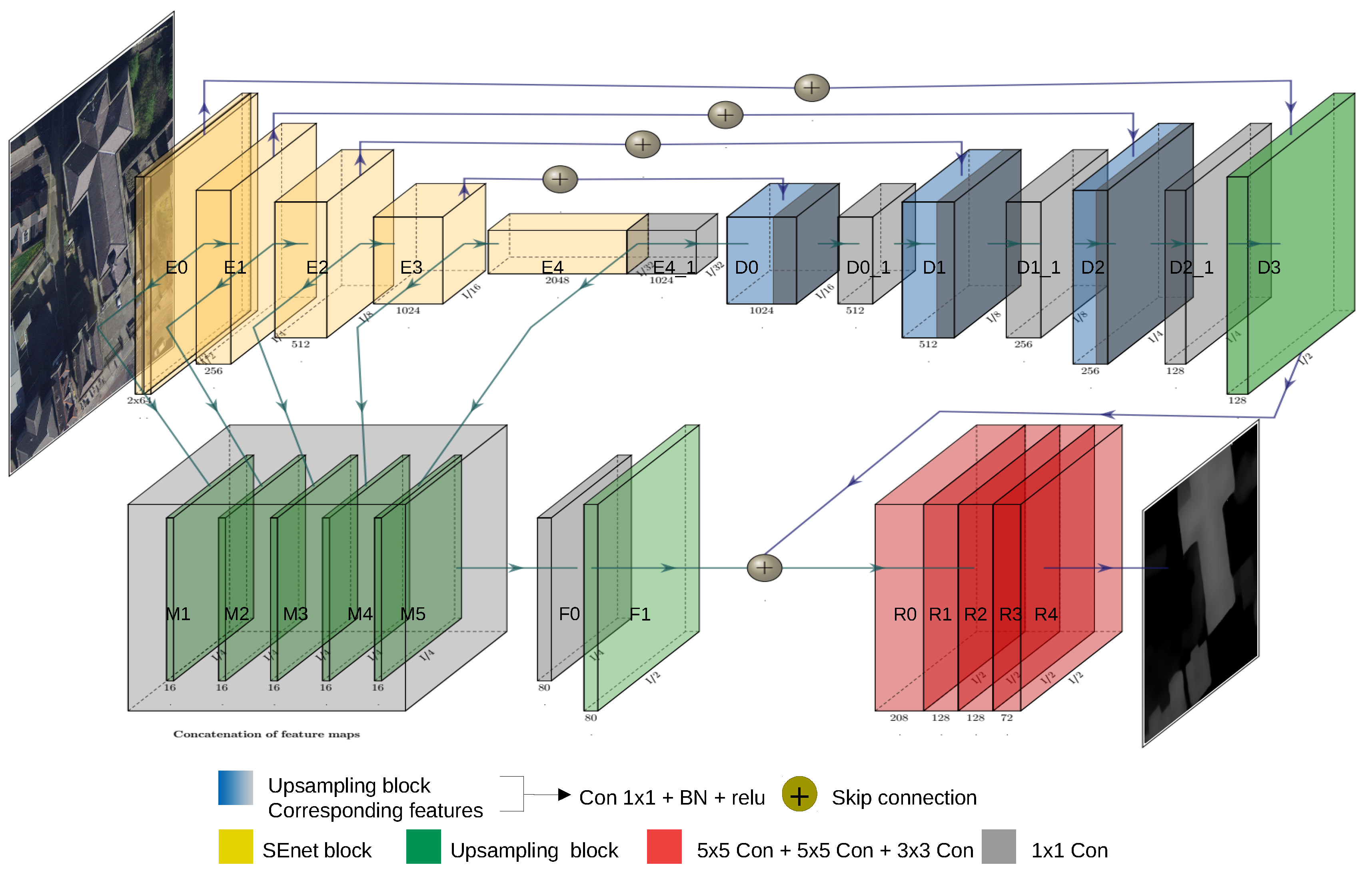

4. CNN Network Design

5. Results

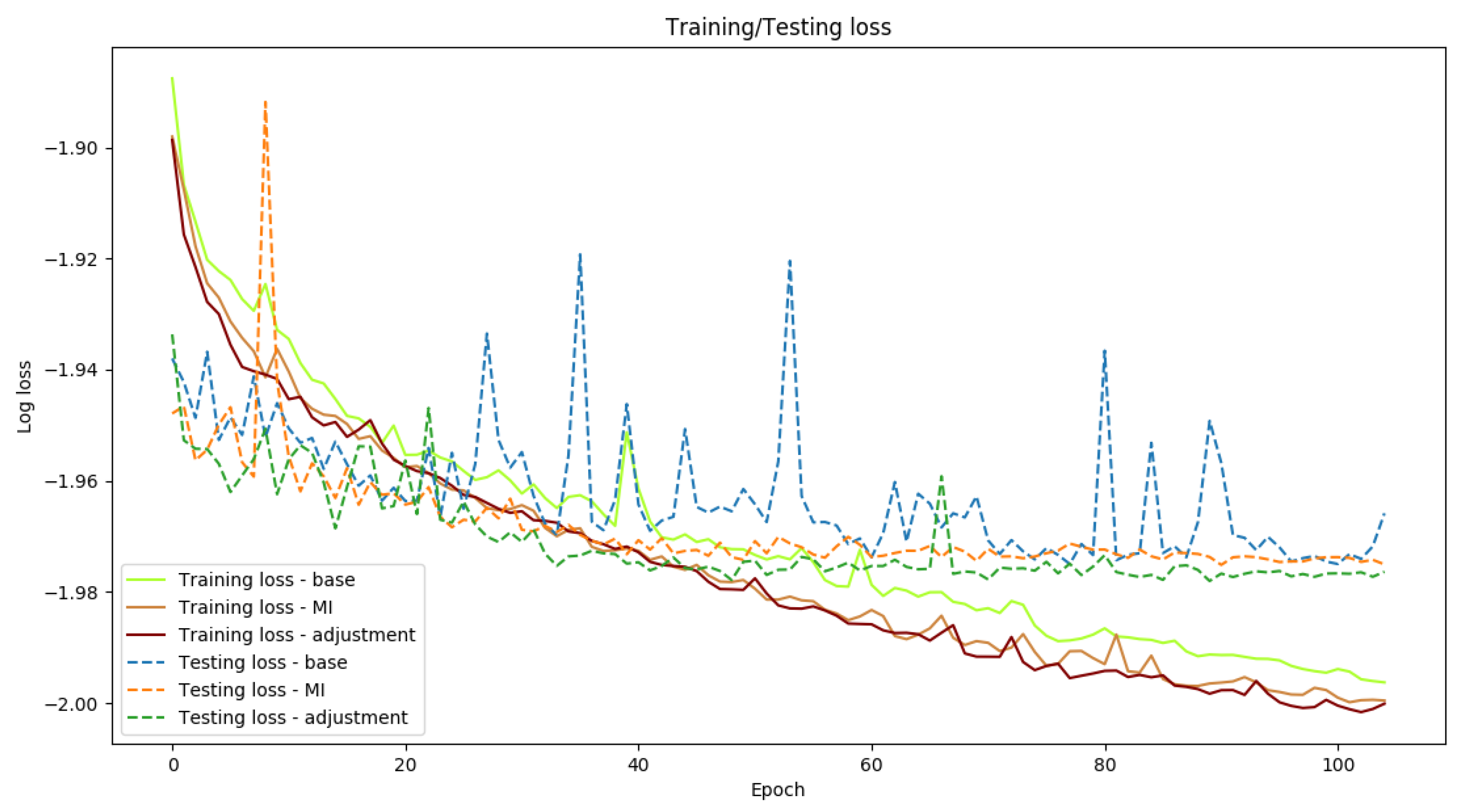

5.1. MI Registration and Hough Line Validation

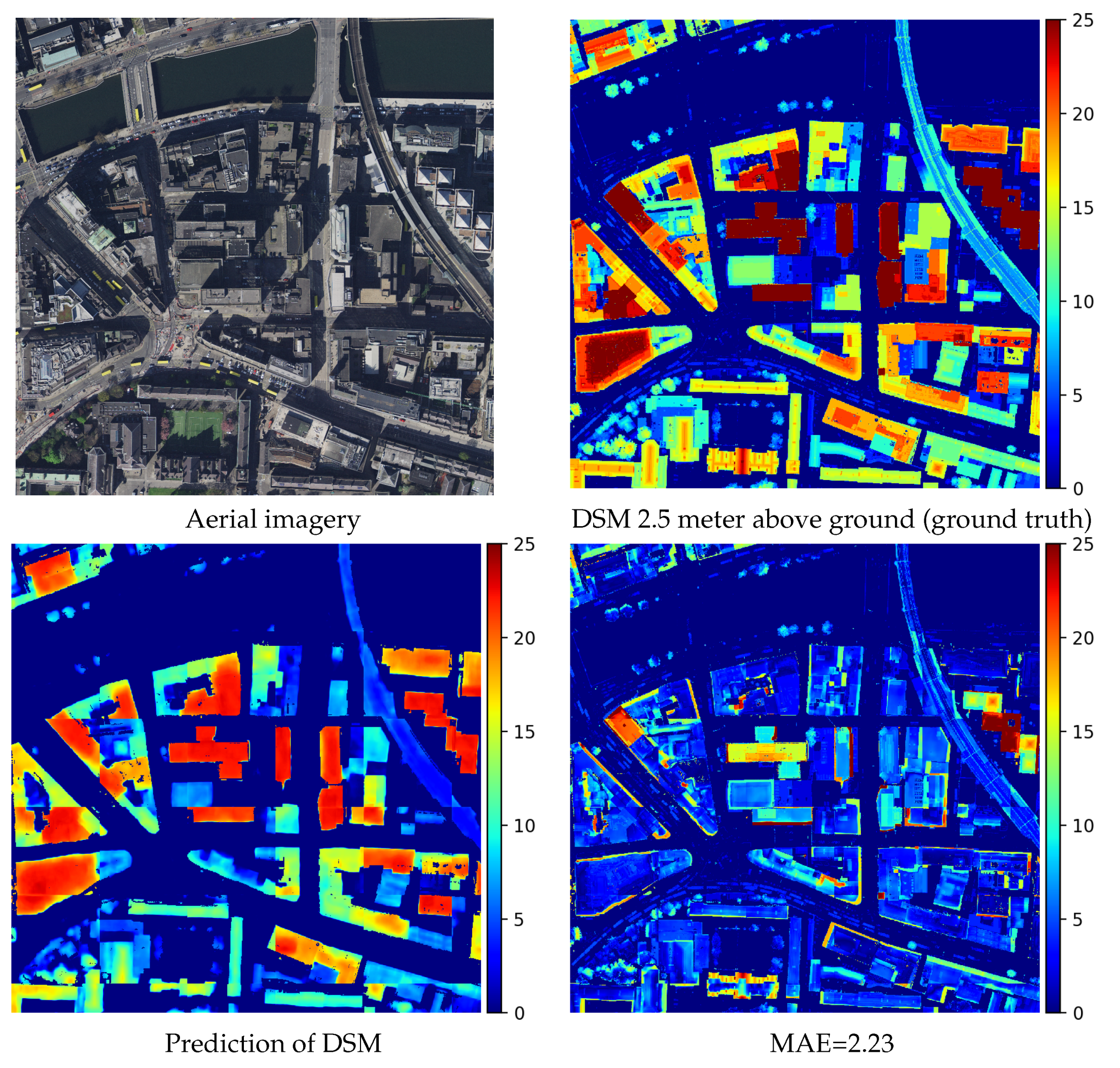

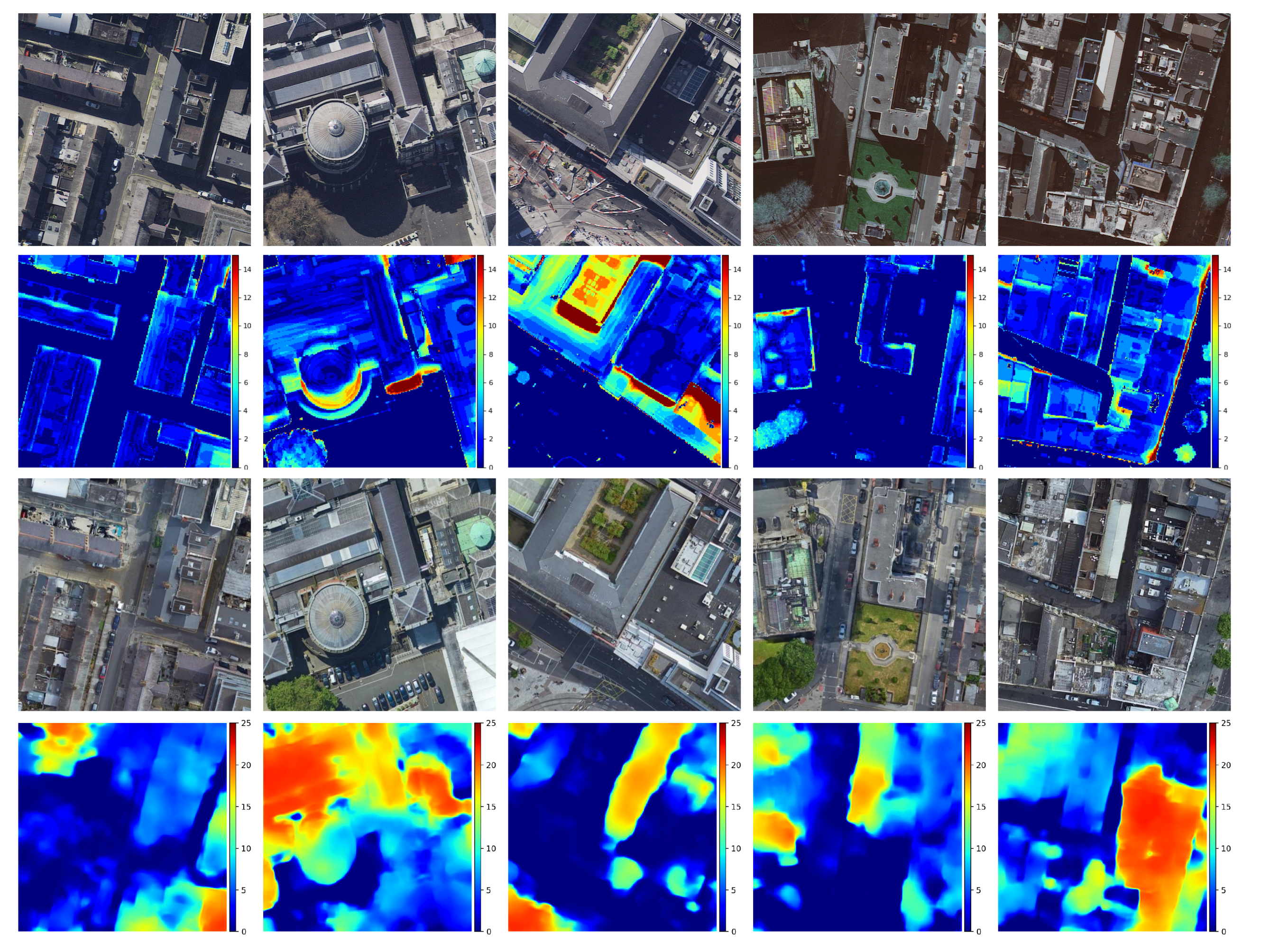

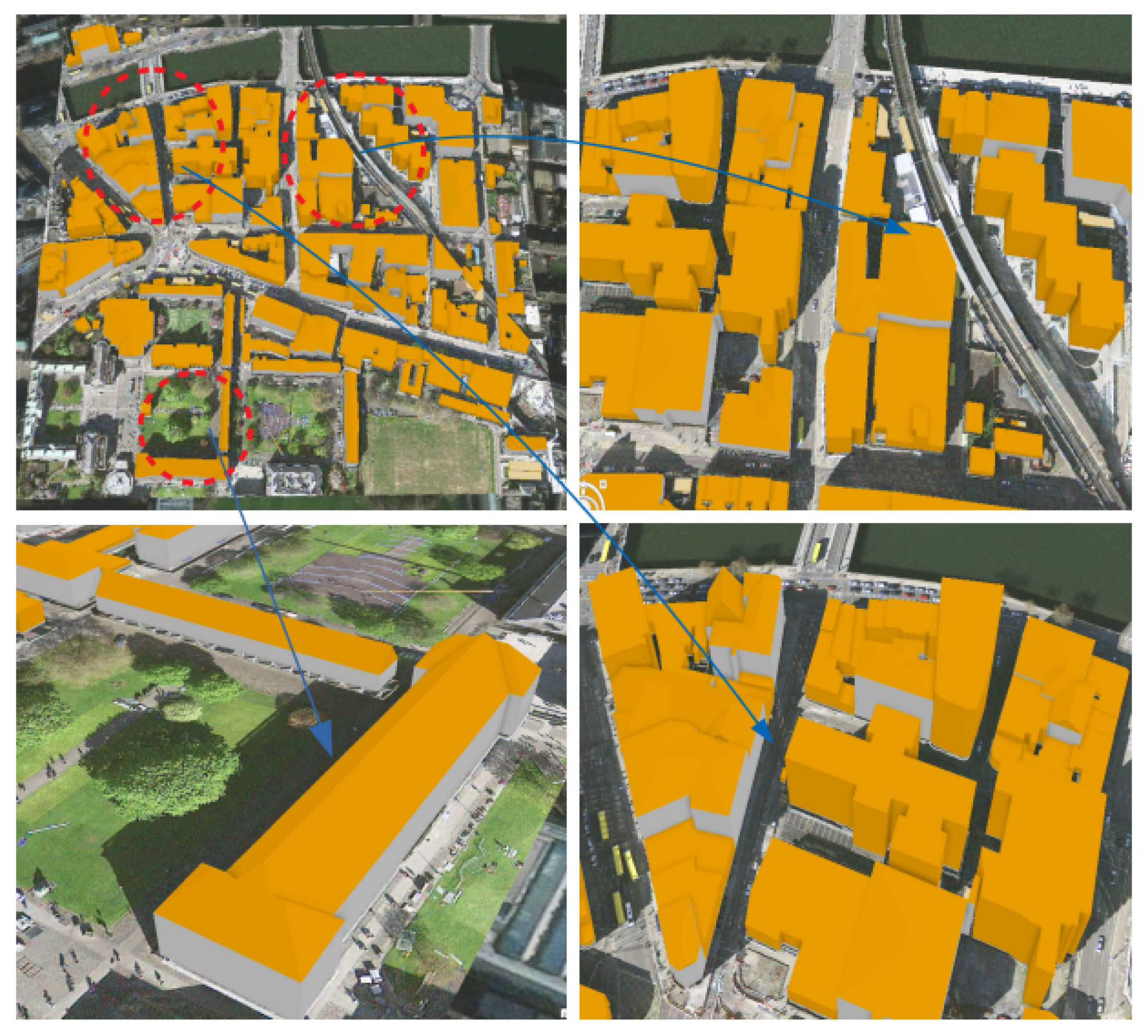

5.2. Height Inference with CNN

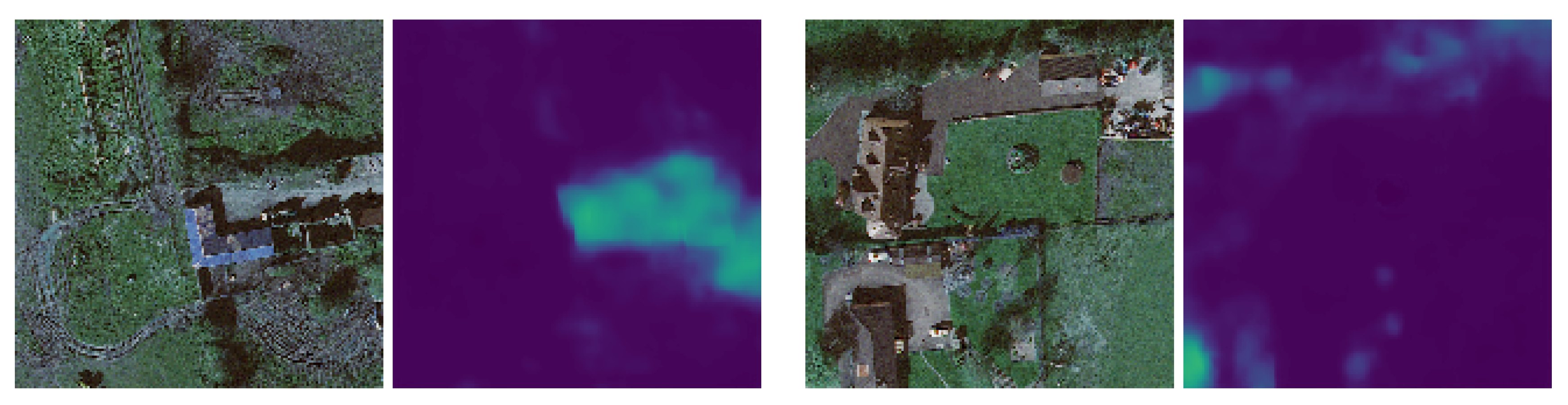

5.3. Height Inference from Stereoscopic Imagery

6. Discussion

7. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

References

1. Bosch, M.; Foster, K.; Christie, G.; Wang, S.; Hager, G.D.; Brown, M. Semantic stereo for incidental satellite images. In Proceedings of the 2019 IEEE Winter Conference on Applications of Computer Vision (WACV), Waikoloa Village, HI, USA, 7–11 January 2019; pp. 1524–1532.

2. Schönberger, J.L.; Frahm, J.M. Structure-from-Motion Revisited. In Proceedings of the Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 26 June–1 July 2016.

3. Hu, J.; Shen, L.; Sun, G. Squeeze-and-excitation networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 7132–7141.

4. Hu, J.; Ozay, M.; Zhang, Y.; Okatani, T. Revisiting single image depth estimation: Toward higher resolution maps with accurate object boundaries. In Proceedings of the 2019 IEEE Winter Conference on Applications of Computer Vision (WACV), Waikoloa Village, HI, USA, 7–11 January 2019; pp. 1043–1051.

5. Laefer, D.F.; Saleh Abuwarda, A.V.V.; Truong-Hong, L.; Gharibi, H. 2015 Aerial Laser and Photogrammetry Survey of Dublin City Collection Record. 2015. Available online: https://geo.nyu.edu/catalog/nyu_2451_38684 (accessed on 21 August 2020).

6. LIDAR Point Cloud UK. Available online: https://data.gov.uk/dataset/977a4ca4-1759-4f26-baa7-b566bd7ca7bf/lidar-point-cloud (accessed on 21 August 2020).

7. Malof, J.M.; Bradbury, K.; Collins, L.M.; Newell, R.G. Automatic detection of solar photovoltaic arrays in high resolution aerial imagery. Appl. Energy 2016, 183, 229–240.

8. HERE Geodata Models offer global precise 3D dataset for 5G deployment. Geo Week News. 2 April 2020. Available online: https://www.geo-week.com/here-geodata-models-offer-global-precise-3d-dataset-for-deploying-5g/ (accessed on 21 August 2020).

9. Ahmad, K.; Pogorelov, K.; Riegler, M.; Ostroukhova, O.; Halvorsen, P.; Conci, N.; Dahyot, R. Automatic detection of passable roads after floods in remote sensed and social media data. Signal Process. Image Commun. 2019, 74, 110–118.

10. Bulbul, A.; Dahyot, R. Social media based 3D visual popularity. Comput. Graph. 2017, 63, 28–36.

11. Micusik, B.; Kosecka, J. Piecewise planar city 3D modeling from street view panoramic sequences. In Proceedings of the 2009 IEEE Conference on Computer Vision and Pattern Recognition, Miami, FL, USA, 20–25 June 2009; pp. 2906–2912.

12. Krylov, V.A.; Kenny, E.; Dahyot, R. Automatic Discovery and Geotagging of Objects from Street View Imagery. Remote Sens. 2018, 10, 661.

13. Laumer, D.; Lang, N.; van Doorn, N.; Aodha, O.M.; Perona, P.; Wegner, J.D. Geocoding of trees from street addresses and street-level images. ISPRS J. Photogramm. Remote Sens. 2020, 162, 125–136.

14. Liu, C.J.; Krylov, V.; Dahyot, R. 3D point cloud segmentation using GIS. In Proceedings of the 20th Irish Machine Vision and Image Processing Conference, Belfast, UK, 29–31 August 2018; pp. 41–48.

15. Byrne, J.; Connelly, J.; Su, J.; Krylov, V.; Bourke, M.; Moloney, D.; Dahyot, R. Trinity College Dublin Drone Survey Dataset. (Imagery, Mesh and Report), Trinity College Dublin. 2017. Available online: http://hdl.handle.net/2262/81836 (accessed on 21 August 2020).

16. Benedek, C.; Descombes, X.; Zerubia, J. Building Development Monitoring in Multitemporal Remotely Sensed Image Pairs with Stochastic Birth-Death Dynamics. IEEE Trans. Pattern Anal. Mach. Intell. 2012, 34, 33–50.

17. Lafarge, F.; Descombes, X.; Zerubia, J.; Pierrot-Deseilligny, M. Structural Approach for Building Reconstruction from a Single DSM. IEEE Trans. Pattern Anal. Mach. Intell. 2010, 32, 135–147.

18. Maggiori, E.; Tarabalka, Y.; Charpiat, G.; Alliez, P. Can Semantic Labeling Methods Generalize to Any City? The Inria Aerial Image Labeling Benchmark. In Proceedings of the IEEE International Geoscience and Remote Sensing Symposium (IGARSS), Fort Worth, TX, USA, 23–28 July 2017.

19. Palmer, D.; Koumpli, E.; Cole, I.; Gottschalg, R.; Betts, T.A. GIS-Based Method for Identification of Wide Area Rooftop Suitability for Minimum Size PV Systems Using LiDAR Data and Photogrammetry. Energies 2018, 11, 3506.

20. Song, X.; Huang, Y.; Zhao, C.; Liu, Y.; Lu, Y.; Chang, Y.; Yang, J. An Approach for Estimating Solar Photovoltaic Potential Based on Rooftop Retrieval from Remote Sensing Images. Energies 2018, 11, 3172.

21. Saxena, A.; Sun, M.; Ng, A.Y. Make3D: Depth Perception from a Single Still Image. AAAI 2008, 3, 1571–1576.

22. Saxena, A.; Chung, S.H.; Ng, A.Y. Learning depth from single monocular images. In Proceedings of the Advances in Neural Information Processing Systems, Vancouver, BC, Canada, 4–7 December 2006; pp. 1161–1168.

23. Zhuo, W.; Salzmann, M.; He, X.; Liu, M. Indoor scene structure analysis for single image depth estimation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 8–10 June 2015; pp. 614–622.

24. Eigen, D.; Fergus, R. Predicting depth, surface normals and semantic labels with a common multi-scale convolutional architecture. In Proceedings of the IEEE International Conference on Computer Vision, Santiago, Chile, 13–16 December 2015; pp. 2650–2658.

25. Liu, F.; Shen, C.; Lin, G. Deep convolutional neural fields for depth estimation from a single image. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 8–10 June 2015; pp. 5162–5170.

26. Xu, D.; Ricci, E.; Ouyang, W.; Wang, X.; Sebe, N. Multi-scale continuous crfs as sequential deep networks for monocular depth estimation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 5354–5362.

27. Long, J.; Shelhamer, E.; Darrell, T. Fully convolutional networks for semantic segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 8–10 June 2015; pp. 3431–3440.

28. Laina, I.; Rupprecht, C.; Belagiannis, V.; Tombari, F.; Navab, N. Deeper depth prediction with fully convolutional residual networks. In Proceedings of the 2016 Fourth International Conference on 3D Vision (3DV), Stanford, CA, USA, 25–28 October 2016; pp. 239–248.

29. Mal, F.; Karaman, S. Sparse-to-dense: Depth prediction from sparse depth samples and a single image. In Proceedings of the 2018 IEEE International Conference on Robotics and Automation (ICRA), Brisbane, QLD, Australia, 21–25 May 2018; pp. 1–8.

30. Eigen, D.; Puhrsch, C.; Fergus, R. Depth map prediction from a single image using a multi-scale deep network. In Proceedings of the Advances in Neural Information Processing Systems, Montreal, QC, Canada, 8–13 December 2014; pp. 2366–2374.

31. Krizhevsky, A.; Sutskever, I.; Hinton, G.E. Imagenet classification with deep convolutional neural networks. In Proceedings of the Advances in Neural Information Processing Systems, Lake Tahoe, CA, USA, 3–8 December 2012; pp. 1097–1105.

32. He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 26 June–1 July 2016; pp. 770–778.

33. Huang, G.; Liu, Z.; Van Der Maaten, L.; Weinberger, K.Q. Densely connected convolutional networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 4700–4708.

34. Drozdzal, M.; Vorontsov, E.; Chartrand, G.; Kadoury, S.; Pal, C. The importance of skip connections in biomedical image segmentation. In Deep Learning and Data Labeling for Medical Applications; Springer: Berlin/Heidelberg, Germany, 2016; pp. 179–187.

35. Alidoost, F.; Arefi, H.; Tombari, F. 2D Image-To-3D Model: Knowledge-Based 3D Building Reconstruction (3DBR) Using Single Aerial Images and Convolutional Neural Networks (CNNs). Remote Sens. 2019, 11, 2219.

36. Mou, L.; Zhu, X.X. IM2HEIGHT: Height estimation from single monocular imagery via fully residual convolutional-deconvolutional network. arXiv 2018, arXiv:1802.10249.

37. Amirkolaee, H.A.; Arefi, H. Height estimation from single aerial images using a deep convolutional encoder-decoder network. ISPRS J. Photogramm. Remote Sens. 2019, 149, 50–66.

38. Srivastava, S.; Volpi, M.; Tuia, D. Joint height estimation and semantic labeling of monocular aerial images with CNNs. In Proceedings of the 2017 IEEE International Geoscience and Remote Sensing Symposium (IGARSS), Fort Worth, TX, USA, 23–28 July 2017; pp. 5173–5176.

39. Carvalho, M.; Le Saux, B.; Trouvé-Peloux, P.; Champagnat, F.; Almansa, A. Multitask Learning of Height and Semantics From Aerial Images. IEEE Geosci. Remote Sens. Lett. 2019, 17, 1391–1395.

40. Ghamisi, P.; Yokoya, N. Img2dsm: Height simulation from single imagery using conditional generative adversarial net. IEEE Geosci. Remote Sens. Lett. 2018, 15, 794–798.

41. Bittner, K.; d’Angelo, P.; Körner, M.; Reinartz, P. Dsm-to-lod2: Spaceborne stereo digital surface model refinement. Remote Sens. 2018, 10, 1926.

42. Habib, A.; Ghanma, M.; Morgan, M.; Al-Ruzouq, R. Photogrammetric and LiDAR data registration using linear features. Photogramm. Eng. Remote Sens. 2005, 71, 699–707.

43. Kwak, T.S.; Kim, Y.I.; Yu, K.Y.; Lee, B.K. Registration of aerial imagery and aerial LiDAR data using centroids of plane roof surfaces as control information. KSCE J. Civ. Eng. 2006, 10, 365–370.

44. Peng, S.; Ma, H.; Zhang, L. Automatic Registration of Optical Images with Airborne LiDAR Point Cloud in Urban Scenes Based on Line-Point Similarity Invariant and Extended Collinearity Equations. Sensors 2019, 19, 1086.

45. Zhang, W.; Zhao, J.; Chen, M.; Chen, Y.; Yan, K.; Li, L.; Qi, J.; Wang, X.; Luo, J.; Chu, Q. Registration of optical imagery and LiDAR data using an inherent geometrical constraint. Opt. Express 2015, 23, 7694–7702.

46. Chen, H.; Xie, W.; Vedaldi, A.; Zisserman, A. AutoCorrect: Deep Inductive Alignment of Noisy Geometric Annotations. arXiv 2019, arXiv:1908.05263.

47. Viola, P.; Wells, W.M., III. Alignment by maximization of mutual information. Int. J. Comput. Vis. 1997, 24, 137–154.

48. Mastin, A.; Kepner, J.; Fisher, J. Automatic registration of LIDAR and optical images of urban scenes. In Proceedings of the 2009 IEEE Conference on Computer Vision and Pattern Recognition, Miami, FL, USA, 20–25 June 2009; pp. 2639–2646.

49. Parmehr, E.G.; Fraser, C.S.; Zhang, C.; Leach, J. Automatic registration of optical imagery with 3D LiDAR data using statistical similarity. ISPRS J. Photogramm. Remote Sens. 2014, 88, 28–40.

50. Shannon, C.E. A mathematical theory of communication. Bell Syst. Tech. J. 1948, 27, 379–423.

51. Styner, M.; Brechbühler, C.; Székely, G.; Gerig, G. Parametric estimate of intensity inhomogeneities applied to MRI. IEEE Trans. Med. Imaging 2000, 19, 153–165.

52. 2018 IEEE GRSS Data Fusion Contest. Available online: http://www.grss-ieee.org/community/technical-committees/data-fusion (accessed on 21 August 2020).

53. Xu, Y.; Du, B.; Zhang, L.; Cerra, D.; Pato, M.; Carmona, E.; Prasad, S.; Yokoya, N.; Hänsch, R.; Le Saux, B. Advanced multi-sensor optical remote sensing for urban land use and land cover classification: Outcome of the 2018 IEEE GRSS Data Fusion Contest. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2019, 12, 1709–1724.

54. ISPRS Potsdam 2D Semantic Labeling Contest. Available online: http://www2.isprs.org/commissions/comm3/wg4/2d-sem-label-potsdam.html (accessed on 21 August 2020).

55. ISPRS Vaihingen 2D Semantic Labeling Dataset. Available online: http://www2.isprs.org/commissions/comm3/wg4/2d-sem-label-vaihingen.html (accessed on 21 August 2020).

56. Facciolo, G.; De Franchis, C.; Meinhardt-Llopis, E. Automatic 3D reconstruction from multi-date satellite images. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition Workshops, Honolulu, HI, USA, 21–26 July 2017; pp. 57–66.

57. Fortune, S. A sweepline algorithm for Voronoi diagrams. Algorithmica 1987, 2, 153.

58. Boucheny, C. Visualisation Scientifique de Grands Volumes de Données: Pour une Approche Perceptive. Ph.D. Thesis, Joseph Fourier University, Grenoble, France, 2009.

59. Gröger, G.; Plümer, L. CityGML–Interoperable semantic 3D city models. ISPRS J. Photogramm. Remote Sens. 2012, 71, 12–33.

| Layer | Output Size | Input/C | Output/C |
|---|---|---|---|
| E0 | 440 × 440 | 3 | 64 |
| E0_1 | 220 × 220 | 64 | 128 |
| E1 | 110 × 110 | 128 | 256 |
| E2 | 55 × 55 | 256 | 512 |
| E3 | 28 × 28 | 512 | 1024 |
| E4 | 14 × 14 | 1024 | 2048 |
| E4_1 | 14 × 14 | 2048 | 1024 |
| M0 | 110 × 110 | 64 | 16 |
| M1 | 110 × 110 | 256 | 16 |
| M2 | 110 × 110 | 512 | 16 |
| M3 | 110 × 110 | 1024 | 16 |
| M4 | 110 × 110 | 2048 | 16 |
| F0 | 110 × 110 | 80 | 80 |
| F1 | 220 × 220 | 80 | 80 |
| D0 | 28 × 28 | 2048 | 1024 |
| D0_1 | 28 × 28 | 1024 | 512 |
| D1 | 55 × 55 | 1024 | 512 |
| D1_1 | 55 × 55 | 512 | 256 |
| D2 | 110 × 110 | 512 | 256 |
| D2_1 | 110 × 110 | 256 | 128 |
| D3 | 220 × 220 | 128 | 208 |
| R1 | 220 × 220 | 208 | 128 |
| R2 | 220 × 220 | 128 | 128 |
| R3 | 220 × 220 | 128 | 128 |
| R4 | 220 × 220 | 72 | 1 |
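
The sizes in the layer table can be checked with a little arithmetic. The sketch below is not the authors' code: it assumes that each encoder stage halves the feature map with rounding up (which is what a stride-2 operation with padding would give), and it assumes that F0 receives the concatenation of the five M outputs and that the 208 channels listed for D3 are the 128 decoder channels joined with the 80 fused channels. The names `TABLE_SIZES`, `halve` and `encoder_sizes` are hypothetical.

```python
import math

# Encoder output sizes copied from the layer table (square feature maps).
TABLE_SIZES = {"E0": 440, "E0_1": 220, "E1": 110, "E2": 55, "E3": 28, "E4": 14}


def halve(size):
    """Assumed downsampling rule: stride 2 with padding, i.e. ceil(size / 2)."""
    return math.ceil(size / 2)


def encoder_sizes(first, stages):
    """Return the side length after each of `stages` stages, starting at `first`."""
    sizes = [first]
    for _ in range(stages - 1):
        sizes.append(halve(sizes[-1]))
    return sizes


# Channel bookkeeping (assumption: M0..M4 are concatenated before F0).
M_OUTPUT_CHANNELS = 16
NUM_M_BRANCHES = 5
fused_channels = M_OUTPUT_CHANNELS * NUM_M_BRANCHES

# Assumption: D3's listed output is decoder features joined with fused features.
D2_1_OUTPUT_CHANNELS = 128
d3_channels = D2_1_OUTPUT_CHANNELS + fused_channels

if __name__ == "__main__":
    print(encoder_sizes(440, len(TABLE_SIZES)))
    print(fused_channels, d3_channels)
```

Under these assumptions the computed sizes agree with the E0 to E4 rows, including the odd step from 55 to 28, and the channel counts agree with the F0 and D3 rows.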

| Methods | MI Registration | Data Adjustment (Hough Validation) | MI↑ | Intersection↑ |
|---|---|---|---|---|
| I | ✗ | ✗ | 0.7650 | 0.8912 |
| II | ✓ | ✗ | 0.9235 | 0.9880 |
| III | ✓ | ✓ | 0.9193 | 0.9926 |
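
For readers unfamiliar with the MI score reported above, the following is a minimal sketch of mutual information computed from a joint intensity histogram. It is an illustration only: the bin count, the intensity range, the use of bits as the unit, and the function name `mutual_information` are assumptions, and the normalization behind the values in the table is not stated here.

```python
import math
from collections import Counter


def mutual_information(a, b, bins=8, max_value=255):
    """Mutual information in bits between two equally long intensity sequences.

    `a` and `b` are flat sequences of pixel intensities in [0, max_value],
    for example an aerial image patch and a rasterized Lidar patch.
    """
    if len(a) != len(b) or len(a) == 0:
        raise ValueError("inputs must be non-empty and of equal length")

    def quantize(value):
        # Map an intensity to one of `bins` histogram bins.
        return min(int(value * bins / (max_value + 1)), bins - 1)

    qa = [quantize(v) for v in a]
    qb = [quantize(v) for v in b]
    n = len(qa)
    joint = Counter(zip(qa, qb))   # joint histogram counts
    marg_a = Counter(qa)           # marginal counts for a
    marg_b = Counter(qb)           # marginal counts for b

    mi = 0.0
    for (i, j), count in joint.items():
        # p(i, j) * log2( p(i, j) / (p(i) * p(j)) ), written with raw counts
        mi += (count / n) * math.log2(count * n / (marg_a[i] * marg_b[j]))
    return mi


if __name__ == "__main__":
    patch = [0, 0, 255, 255]
    print(mutual_information(patch, patch))              # identical patches
    print(mutual_information(patch, [0, 255, 0, 255]))   # unrelated patches
```

A registration step built on this score would evaluate it for a set of candidate offsets between the two modalities and keep the offset with the highest value.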

| Method | Preprocessing | GSD | MAE (m)↓ | RMSE (m)↓ |
|---|---|---|---|---|
| Hu et al. [4] | none (I) | 15 cm/pixel | 1.99 | 5.04 |
| Hu et al. [4] | MI registration (II) | 15 cm/pixel | 2.08 | 4.12 |
| Hu et al. [4] | MI, patch adjustment (III) | 15 cm/pixel | 1.93 | 3.96 |
| Hu et al. [4] + skip connection | MI, patch adjustment (III) | 15 cm/pixel | 2.40 | 4.59 |
| IM2ELEVATION | MI, patch adjustment (III) | 15 cm/pixel | 1.46 | 3.05 |
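
The two error columns follow the standard definitions of mean absolute error and root mean square error. A minimal sketch, assuming predicted and reference heights are given in metres as flat sequences over the same pixels (the function names and the toy values are illustrative, not taken from the paper):

```python
import math


def mae(predicted, reference):
    """Mean absolute error between two equally long sequences of heights."""
    if len(predicted) != len(reference) or len(predicted) == 0:
        raise ValueError("inputs must be non-empty and of equal length")
    return sum(abs(p - r) for p, r in zip(predicted, reference)) / len(predicted)


def rmse(predicted, reference):
    """Root mean square error between two equally long sequences of heights."""
    if len(predicted) != len(reference) or len(predicted) == 0:
        raise ValueError("inputs must be non-empty and of equal length")
    squared = sum((p - r) ** 2 for p, r in zip(predicted, reference))
    return math.sqrt(squared / len(predicted))


if __name__ == "__main__":
    # Toy values: three small errors and one 10 m outlier.
    predicted = [10.0, 12.0, 8.0, 20.0]
    reference = [10.0, 10.0, 10.0, 10.0]
    print(mae(predicted, reference), rmse(predicted, reference))
```

RMSE is never smaller than MAE and grows faster when a few pixels have large errors, which is one way to read rows where the two columns move in different directions.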

| Methods | Training Input | GSD | MAE (m)↓ | RMSE (m)↓ |
|---|---|---|---|---|
| **IEEE DFC2018 dataset** | | | | |
| Carvalho et al. [39] | DSM | 5 cm/pixel | 1.47 | 3.05 |
| Carvalho et al. [39] * | DSM + semantic | 5 cm/pixel | 1.26 | 2.60 |
| IM2ELEVATION | DSM | 5 cm/pixel | 1.19 | 2.88 |
| **ISPRS Vaihingen dataset** | | | | |
| Amirkolaee et al. [37] with ZCA whitening ** | DSM | 8 cm/pixel | - | 2.87 |
| IM2ELEVATION | DSM | 8 cm/pixel | 2.96 | 4.66 |
| **ISPRS Potsdam dataset (nDSM)** | | | | |
| Amirkolaee et al. [37] with ZCA whitening ** | DSM | 8 cm/pixel | - | 3.46 |
| Alidoost et al. [35] | DSM | 8 cm/pixel | - | 3.57 |
| Ghamisi et al. [40] | DSM | 8 cm/pixel | - | 3.89 |
| IM2ELEVATION | DSM | 8 cm/pixel | 1.52 | 2.64 |

| DSM Method | GSD | MAE (m)↓ | RMSE (m)↓ |
|---|---|---|---|
| OSI stereo | 15 cm/pixel | 2.36 | 4.90 |
| IM2ELEVATION (with MI, patch adjustment) | 15 cm/pixel | 1.46 | 3.05 |
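
To put the two rows above on a common scale, the relative error reduction can be worked out directly from the tabulated values. This is plain arithmetic on the numbers in the table; the helper name `relative_reduction` is hypothetical.

```python
def relative_reduction(baseline, improved):
    """Fraction by which `improved` is lower than `baseline`."""
    if baseline <= 0:
        raise ValueError("baseline must be positive")
    return (baseline - improved) / baseline


# Values copied from the table (metres).
STEREO = {"mae": 2.36, "rmse": 4.90}
IM2ELEVATION = {"mae": 1.46, "rmse": 3.05}

if __name__ == "__main__":
    for metric in ("mae", "rmse"):
        gain = relative_reduction(STEREO[metric], IM2ELEVATION[metric])
        print(f"{metric}: {gain * 100:.1f}% lower than OSI stereo")
```

On these figures the single-view estimate has roughly 38% lower MAE and RMSE than the stereo-derived DSM.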

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Liu, C.-J.; Krylov, V.A.; Kane, P.; Kavanagh, G.; Dahyot, R. IM2ELEVATION: Building Height Estimation from Single-View Aerial Imagery. Remote Sens. 2020, 12, 2719. https://doi.org/10.3390/rs12172719
