Design, Calibration, and Evaluation of a Backpack Indoor Mobile Mapping System

Abstract

1. Introduction

2. Related Works

2.1. Hand-Held Systems

2.2. Backpack Mapping Systems

2.3. Evaluation Methods

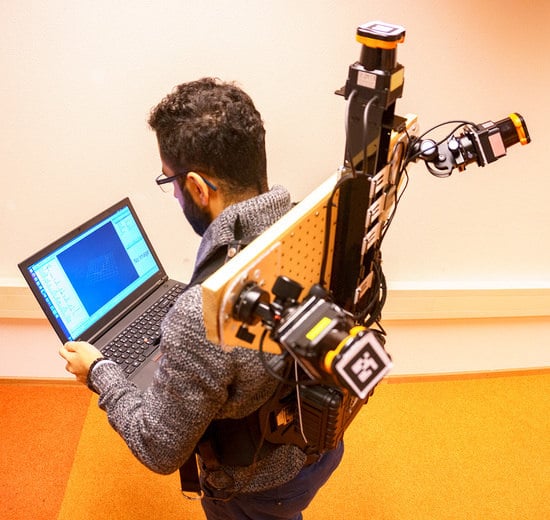

3. Backpack System ITC-IMMS

3.1. System Description

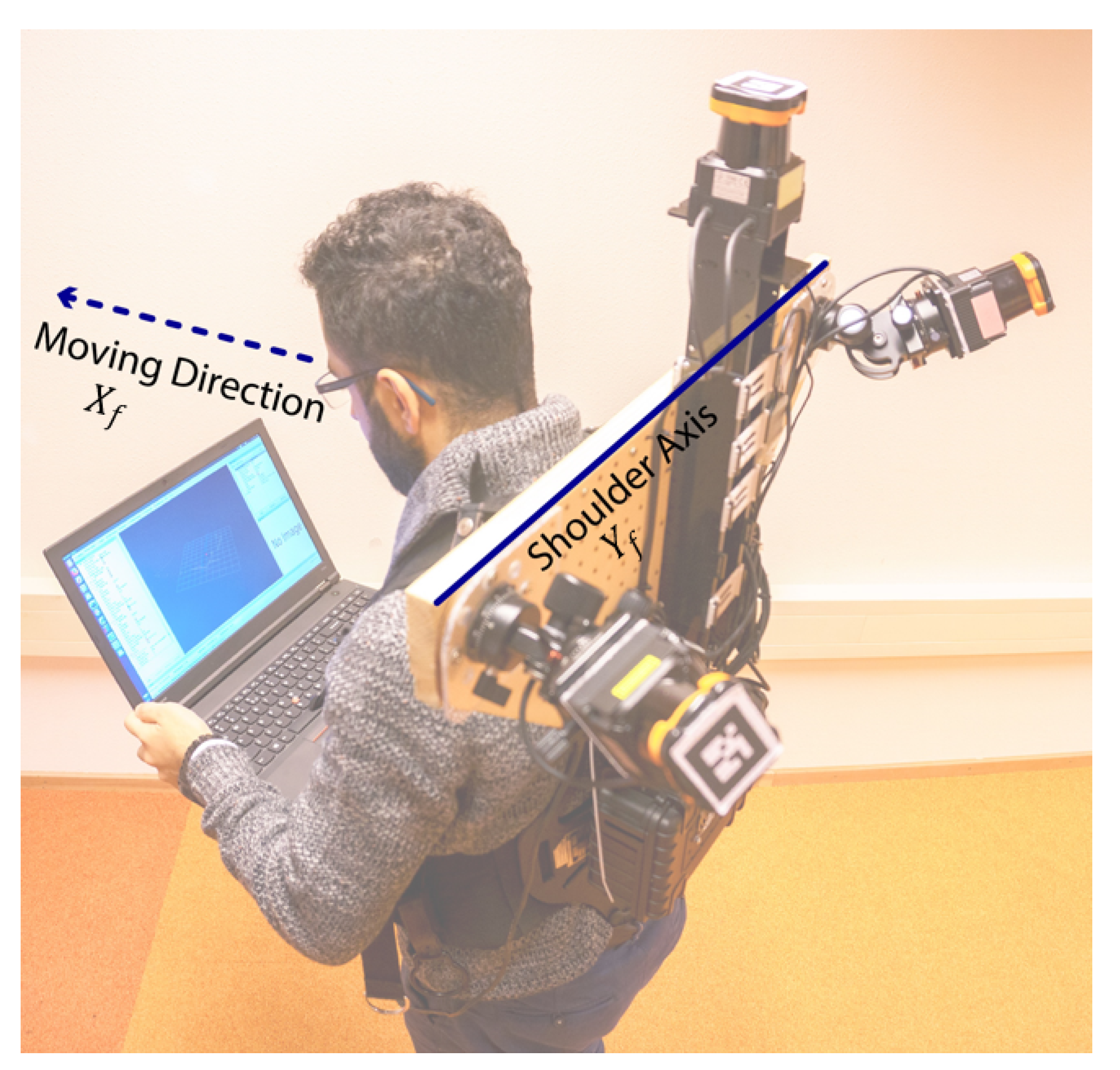

3.2. Coordinate Systems

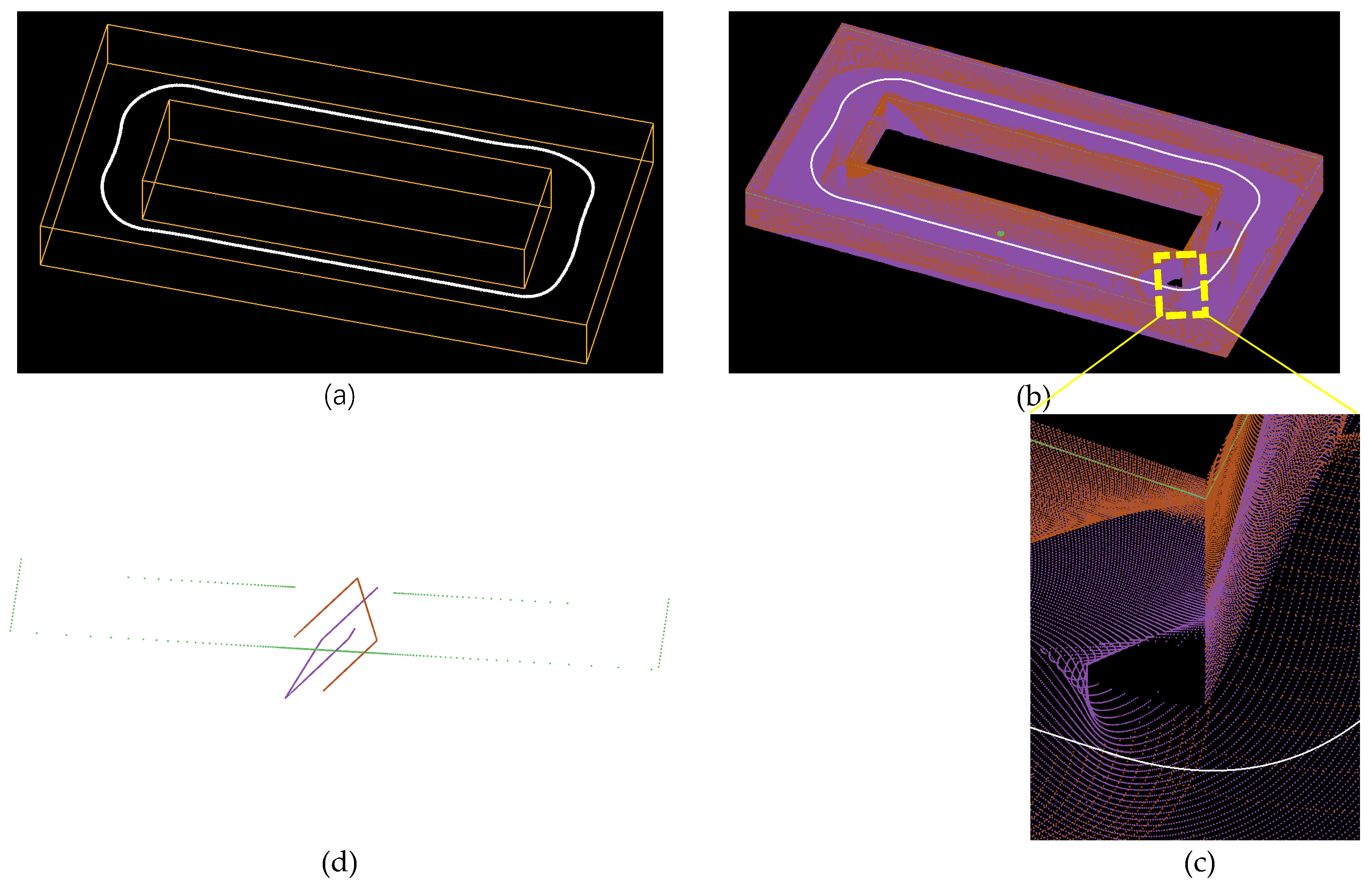

3.3. 6DOF SLAM

4. Calibration Process

4.1. Calibration Facility

4.2. Calibration

4.3. Self-Calibration

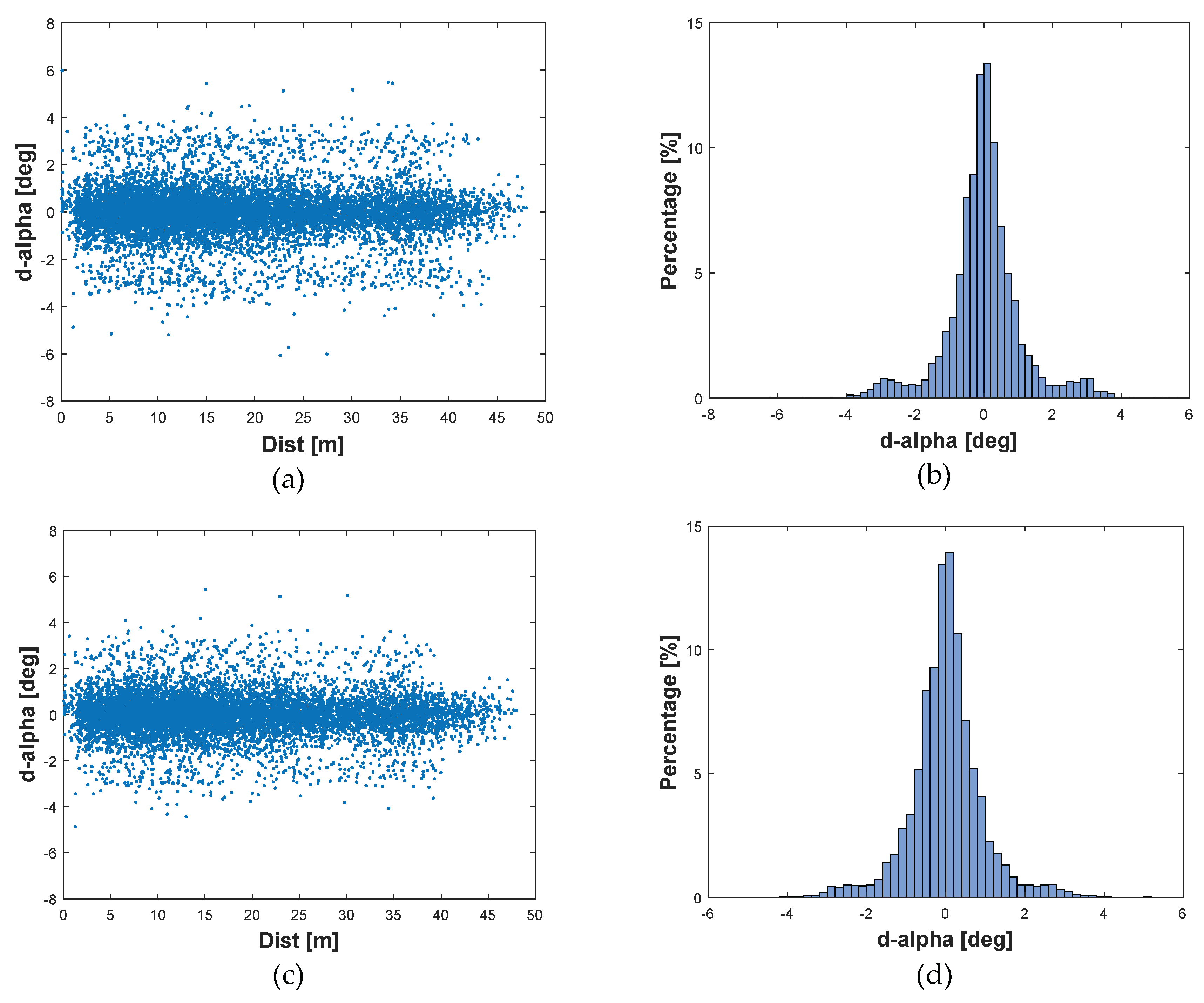

5. Relative Sensor Registration

5.1. Initial Registration

5.2. Fine Registration

5.3. Self-Registration

6. SLAM Performance Measurements and Results

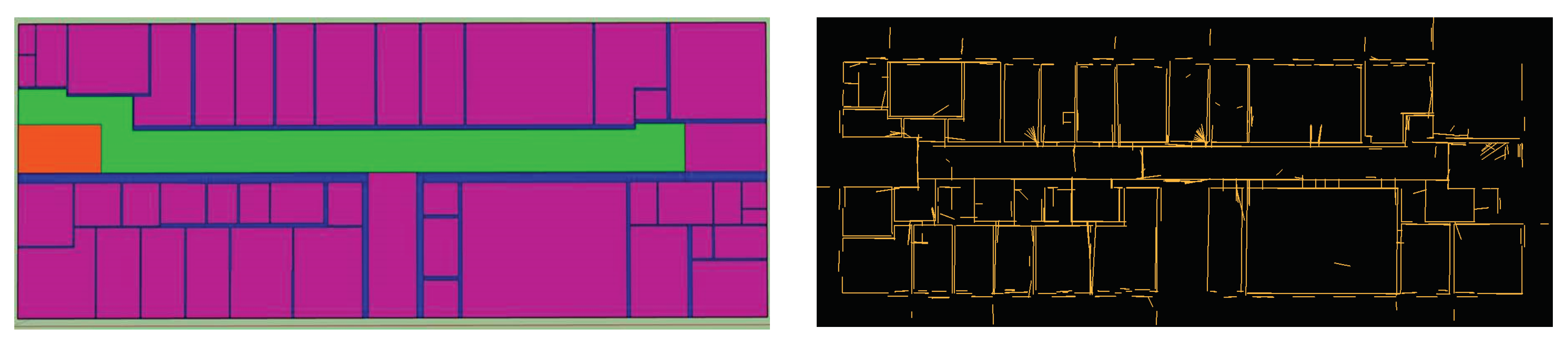

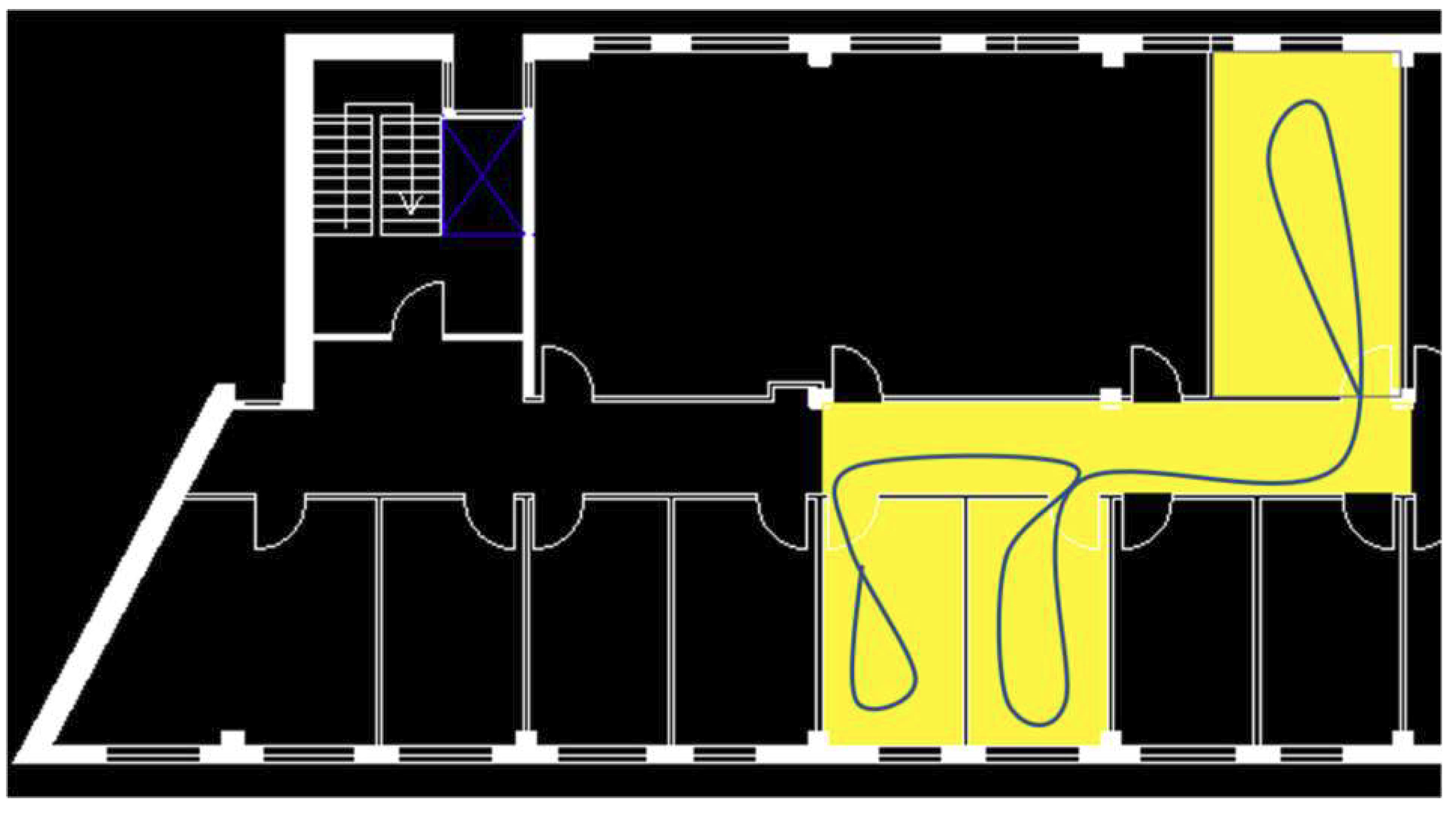

6.1. Dataset

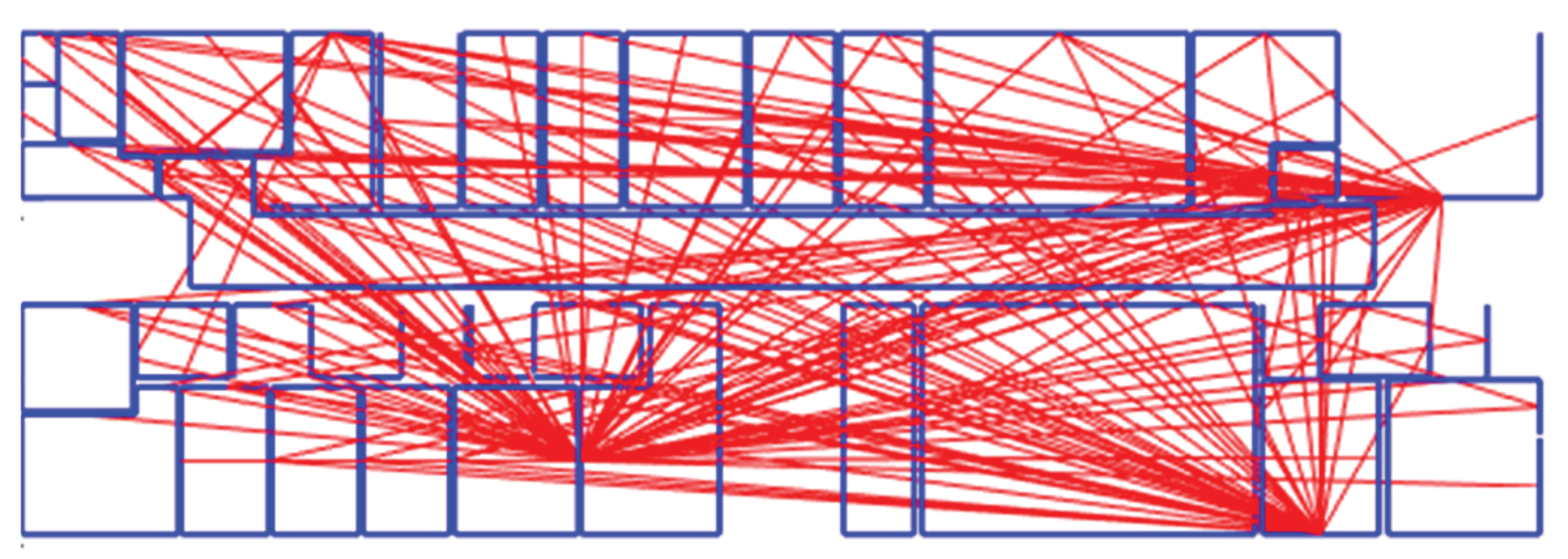

Point to Plane Association (Data Association)

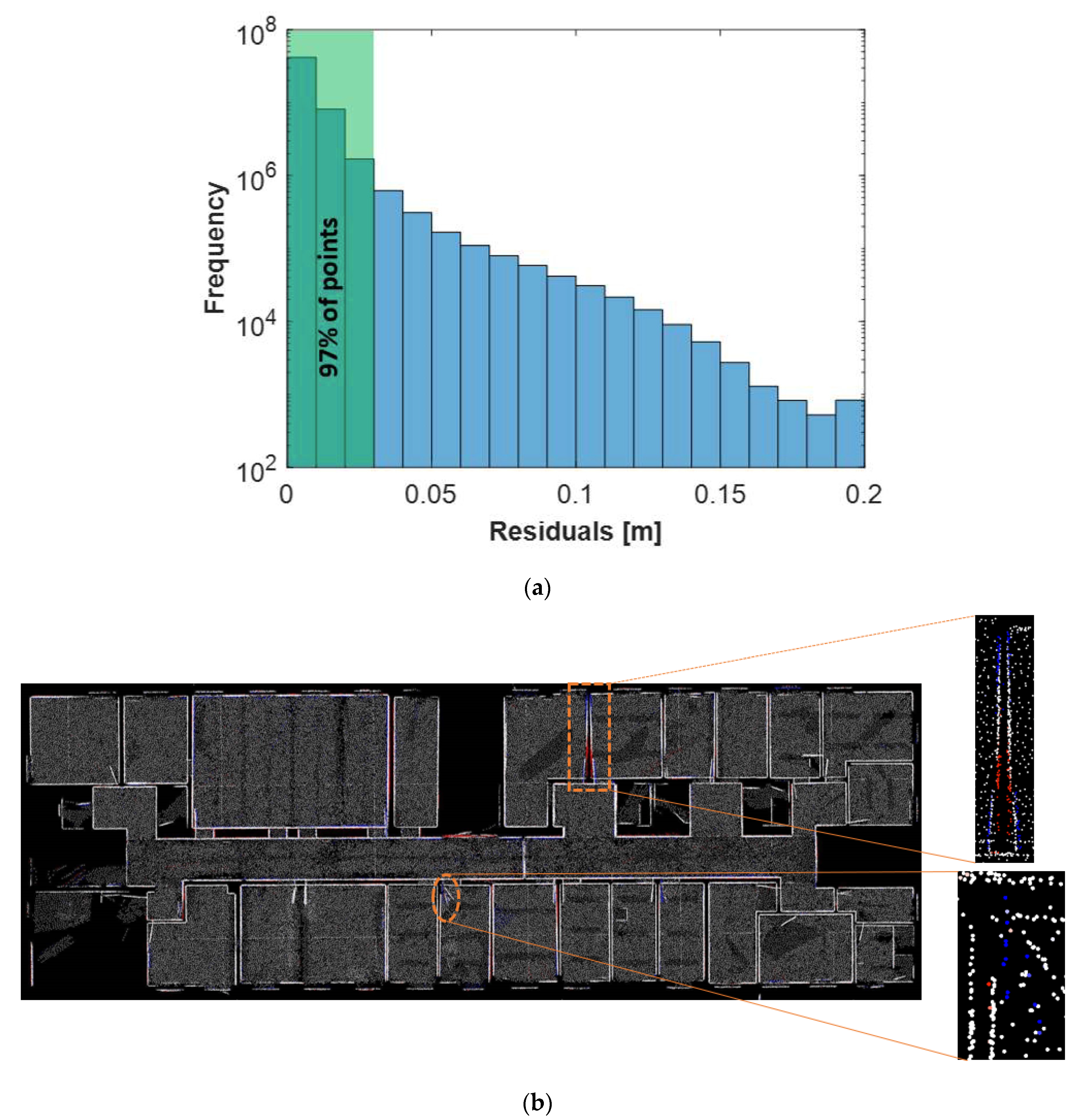

6.2. Evaluation Techniques

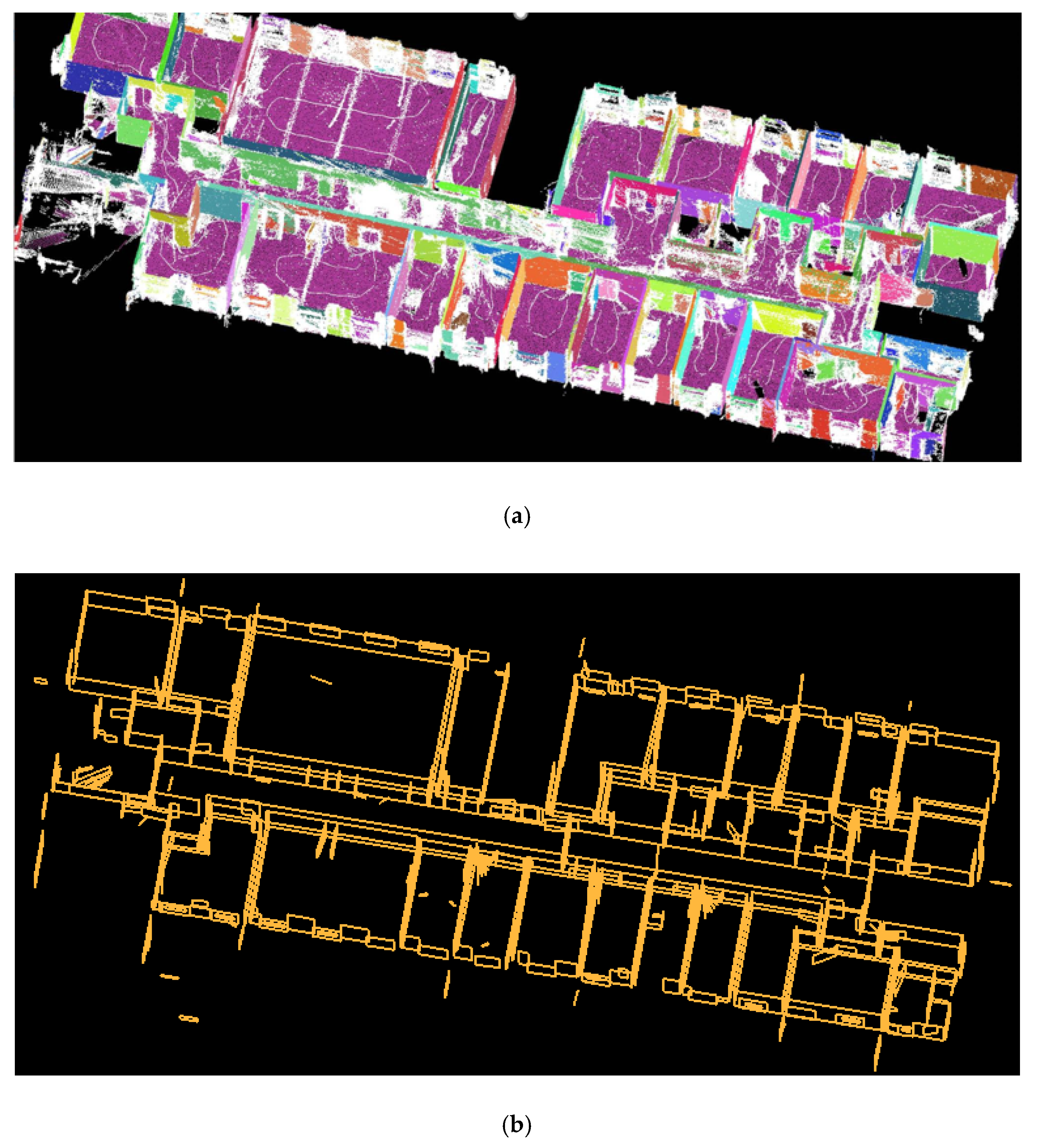

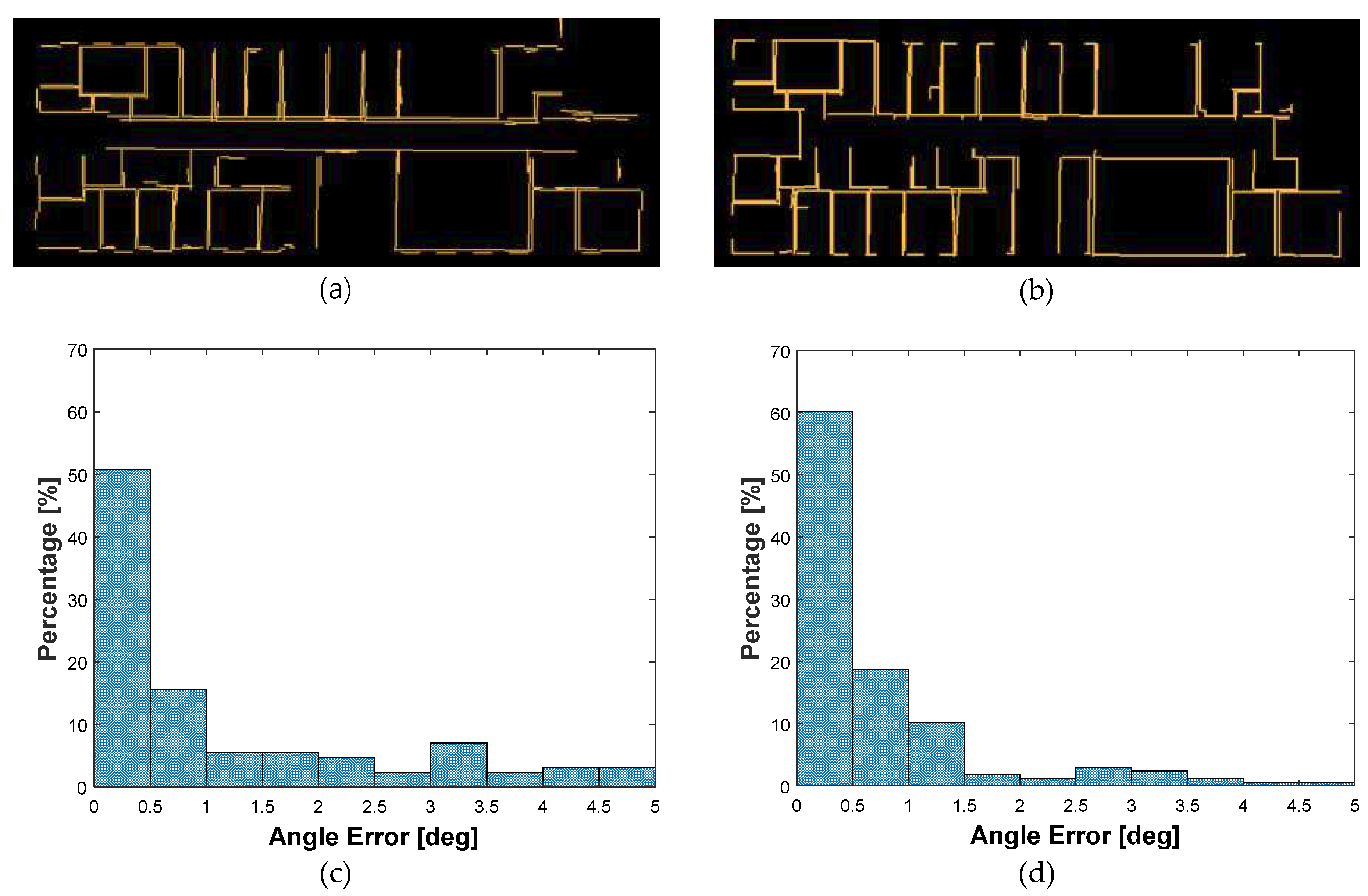

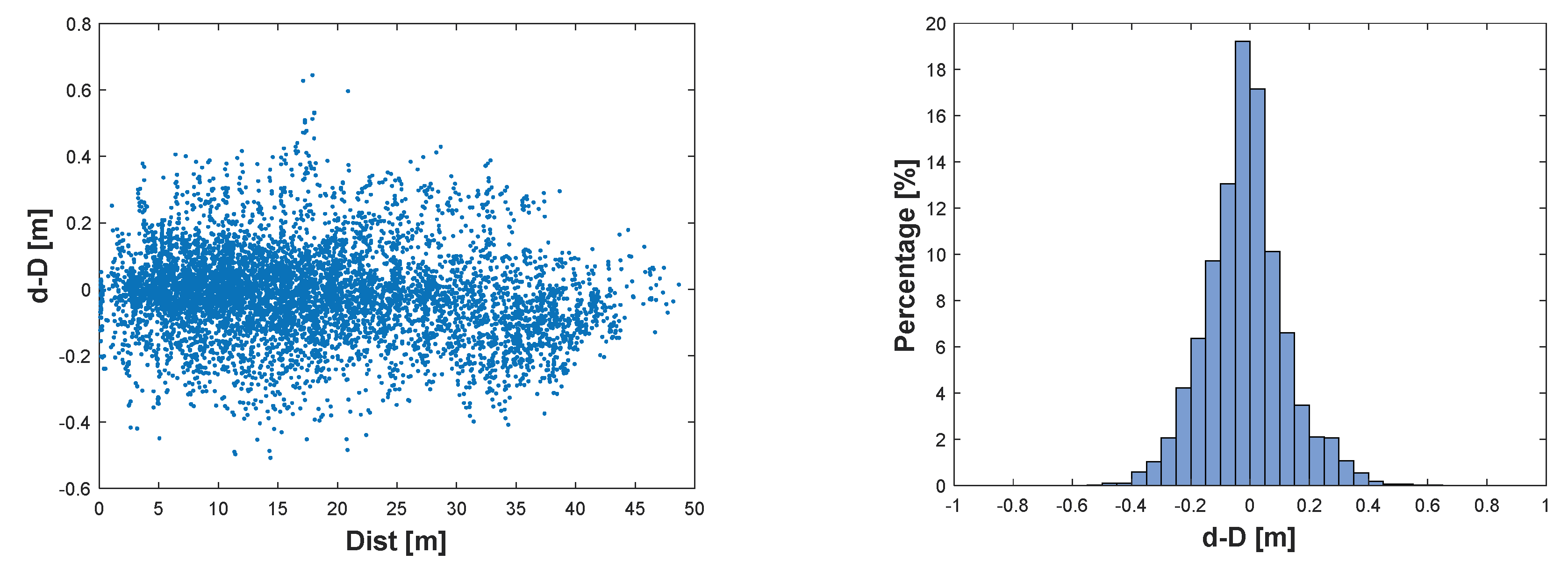

6.2.1. Evaluation Using Architectural Constraints

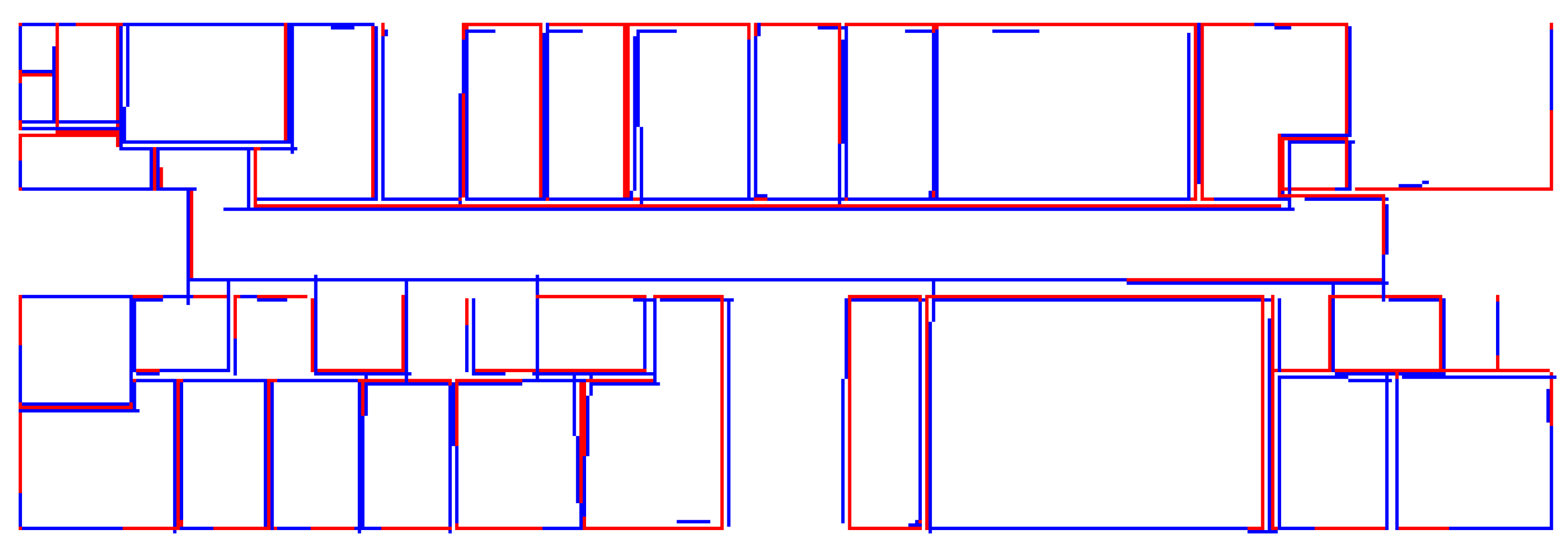

6.2.2. Evaluation Using a Floor Plan

7. Determining Optimal Configuration

7.1. Studied Configurations

7.2. Experimental Comparison of Configurations

7.2.1. Accuracy

7.2.2. Completeness of Data Capturing

7.3. Discussion of Configuration Experiments

8. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

| | Before | After |
|---|---|---|
| Mean | 0.01° | 0.00° |
| Std Dev. | 1.14° | 0.85° |
| Number of edge pairs | 10296 | 9591 |

| Configuration | Orientation Configuration of LRFs | | | | Arch. Constraints: RMSE [deg] | Arch. Constraints: λ % | Floor Plan: RMSE [deg] | Floor Plan: λ % | Gap Size |
|---|---|---|---|---|---|---|---|---|---|
| 1 | 20¹ | 20¹ | 20² | 20² | 1.57 | 70 | 0.79 | 67 | Small |
| 2 | 30 | 30 | 30 | 30 | 0.81 | 85 | 0.40 | 85 | No |
| 3 | 40 | 40 | 40 | 40 | 0.94 | 83 | 0.56 | 76 | No |
| 4 | 15 | 20 | 30 | 30 | 0.94 | 84 | 0.57 | 73 | Small |
| 5 | 30 | 30 | 20 | 20 | 0.76 | 90 | 0.51 | 79 | No |
| 6 | 20 | 20 | 40 | 40 | 0.54 | 95 | 0.71 | 83 | Small |
| 7 | 40 | 40 | 20 | 20 | 0.77 | 86 | 0.57 | 74 | No |
| 8 | 20 | 20 | 30 | 30 | 1.38 | 83 | 0.82 | 72 | Small |
| 9 | 0 | 20 | 20 | 90 | 1.25 | 81 | 0.76 | 70 | Large |
| 10 | 0 | 30 | 30 | 90 | 0.65 | 99 | 0.54 | 87 | Large |
| 11 | 0 | 40 | 40 | 90 | 0.73 | 91 | 0.52 | 80 | Large |

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Karam, S.; Vosselman, G.; Peter, M.; Hosseinyalamdary, S.; Lehtola, V. Design, Calibration, and Evaluation of a Backpack Indoor Mobile Mapping System. Remote Sens. 2019, 11, 905. https://doi.org/10.3390/rs11080905