Building Damage Assessment Based on the Fusion of Multiple Texture Features Using a Single Post-Earthquake PolSAR Image

Abstract

1. Introduction

2. Materials and Methods

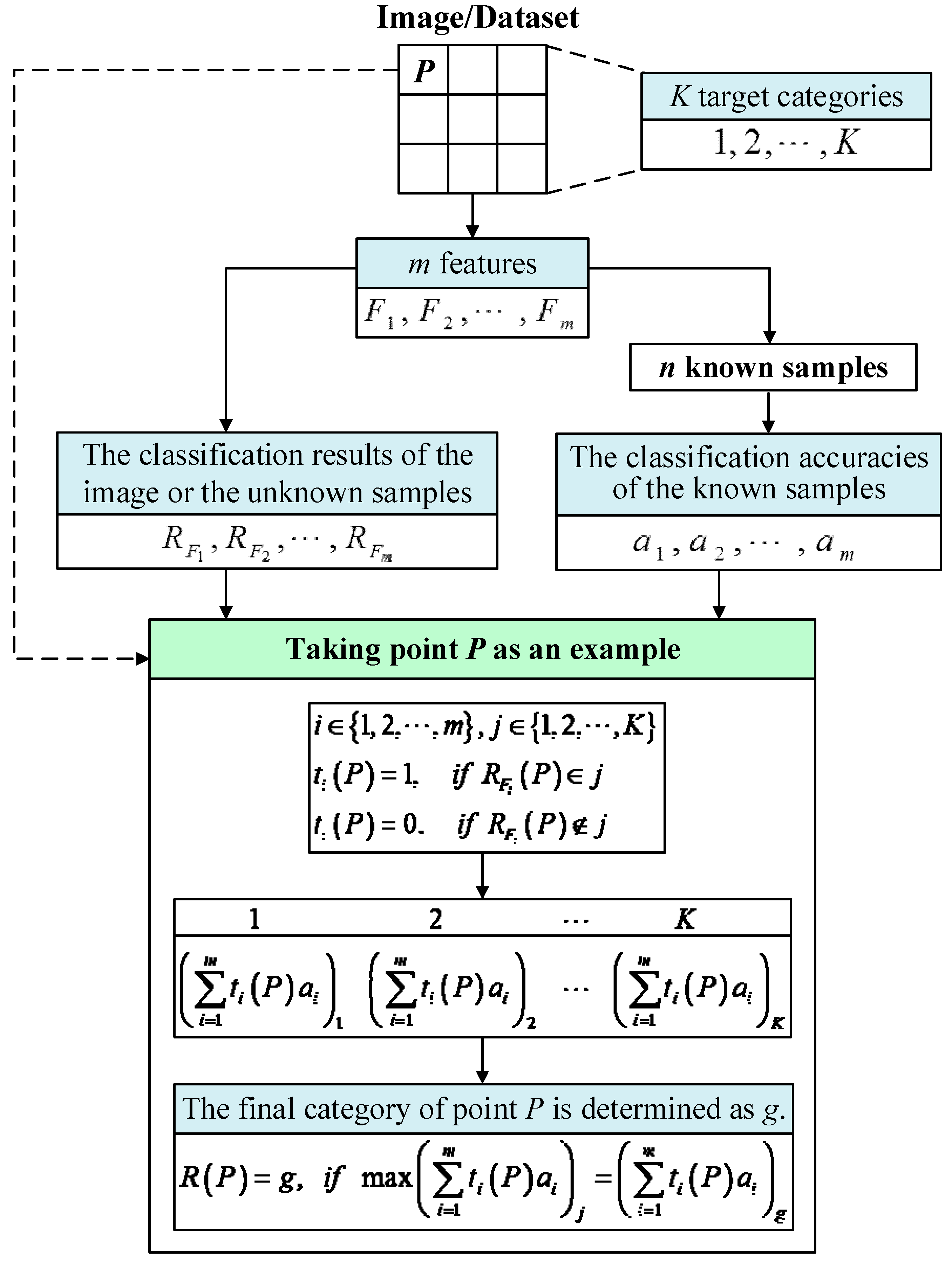

2.1. Multifeature Fusion Algorithm

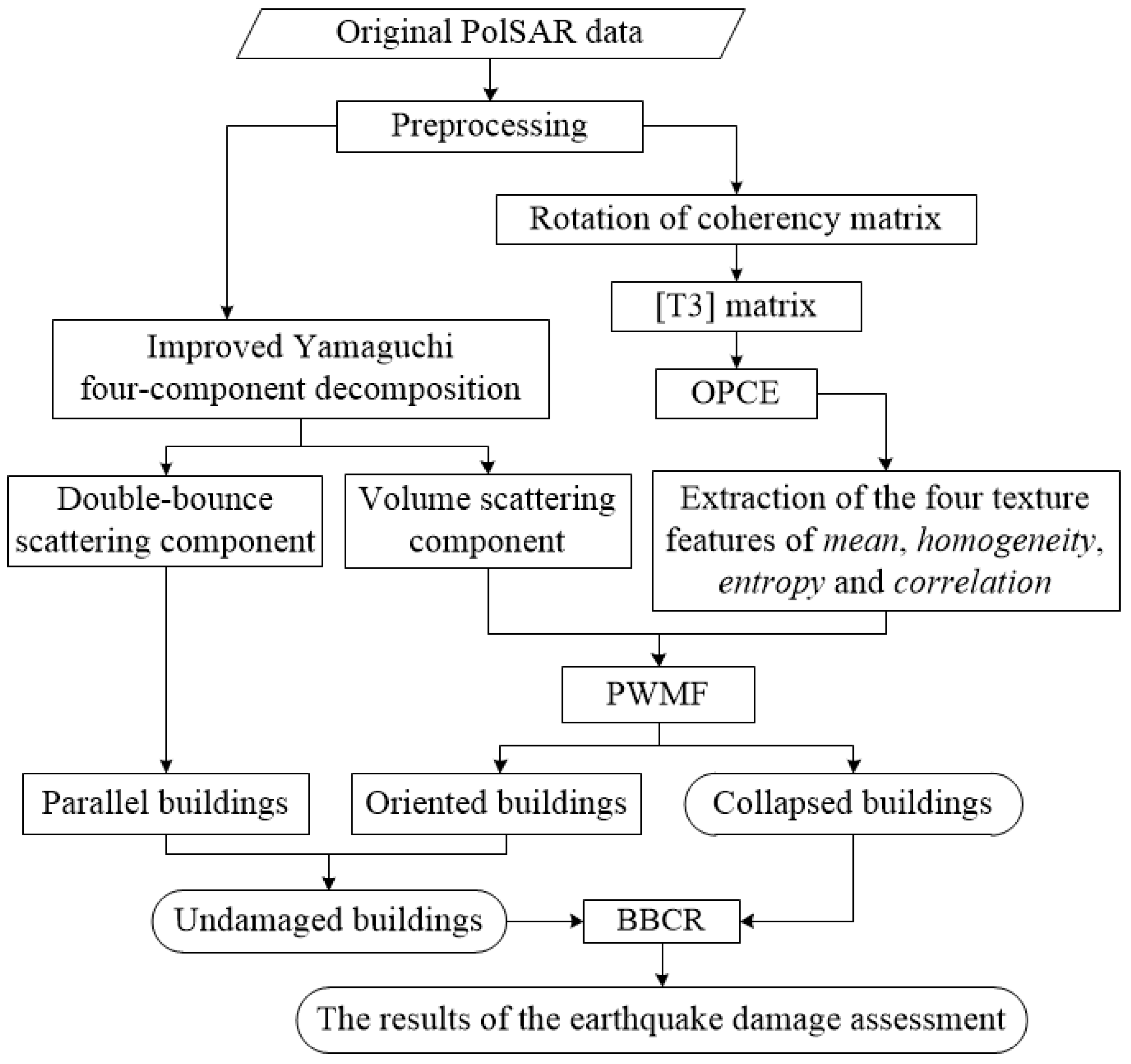

2.2. Methodology Development for the Building Damage Assessment

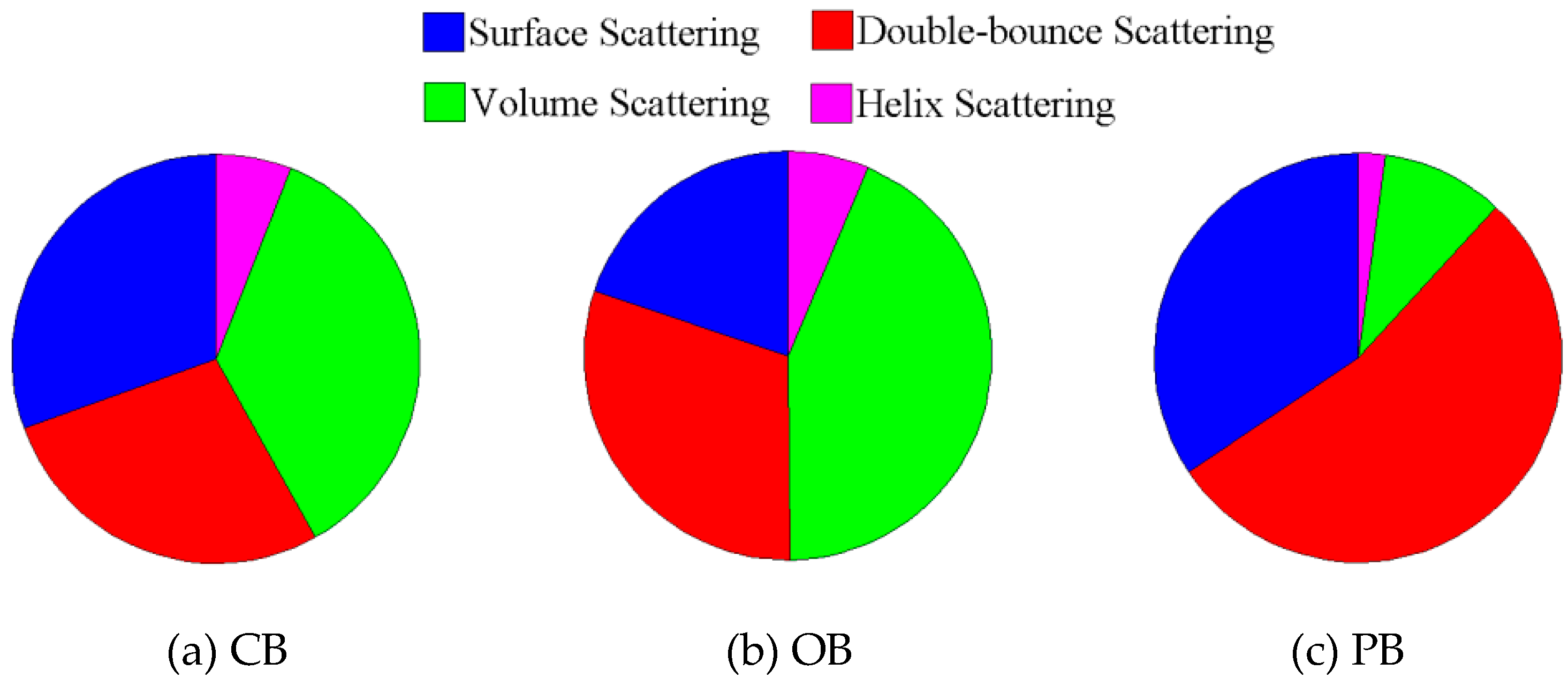

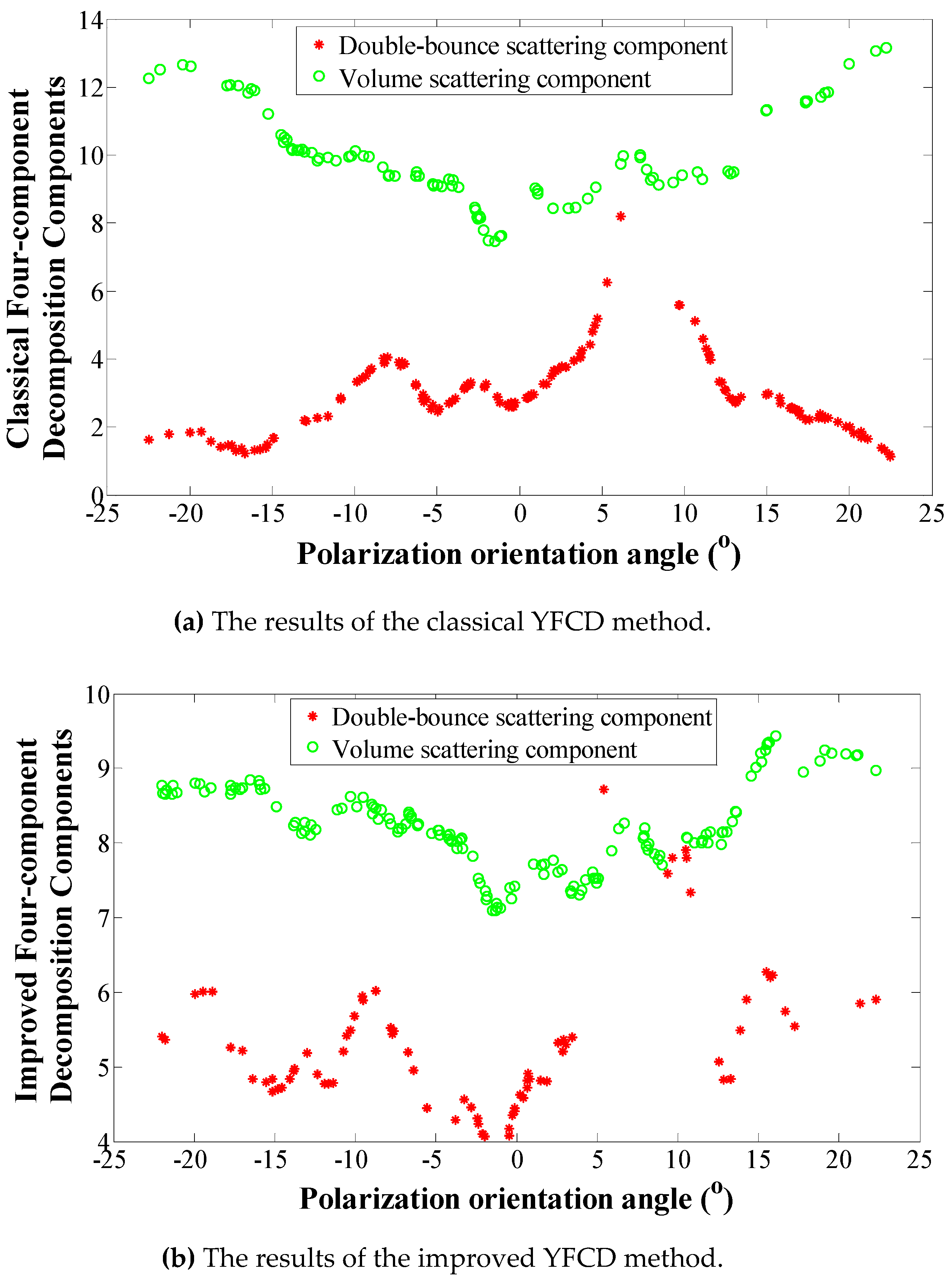

2.2.1. Polarimetric Decomposition

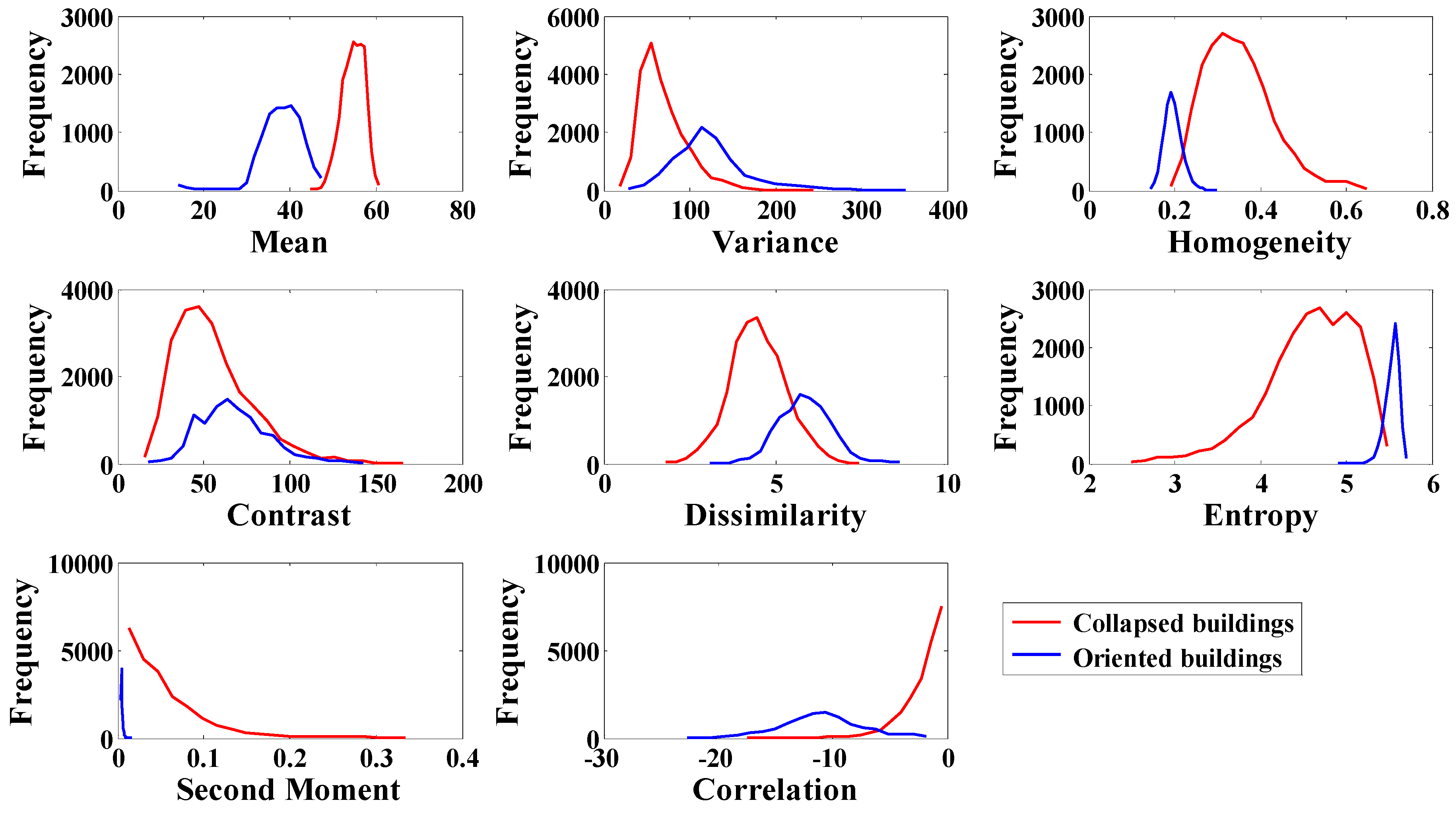

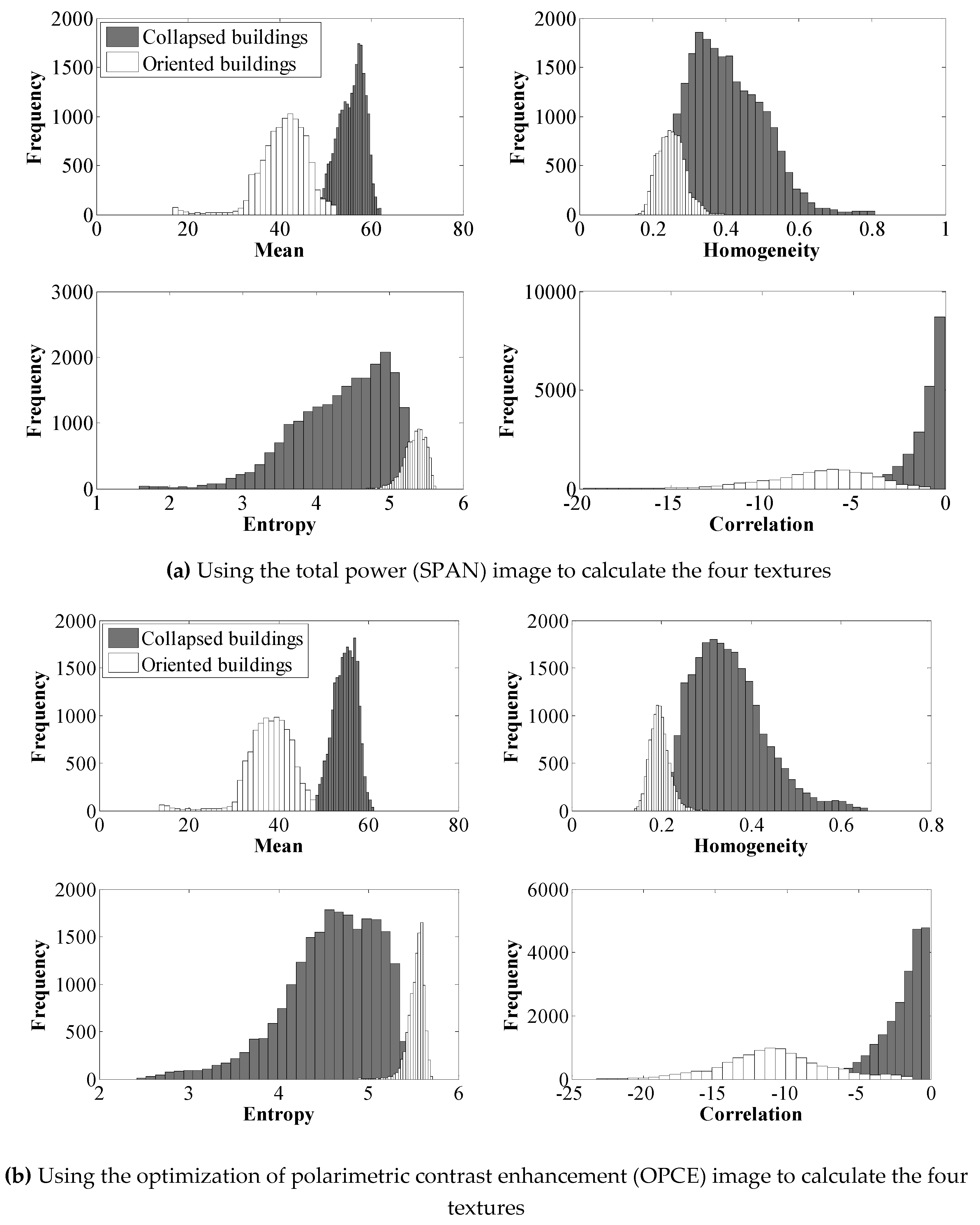

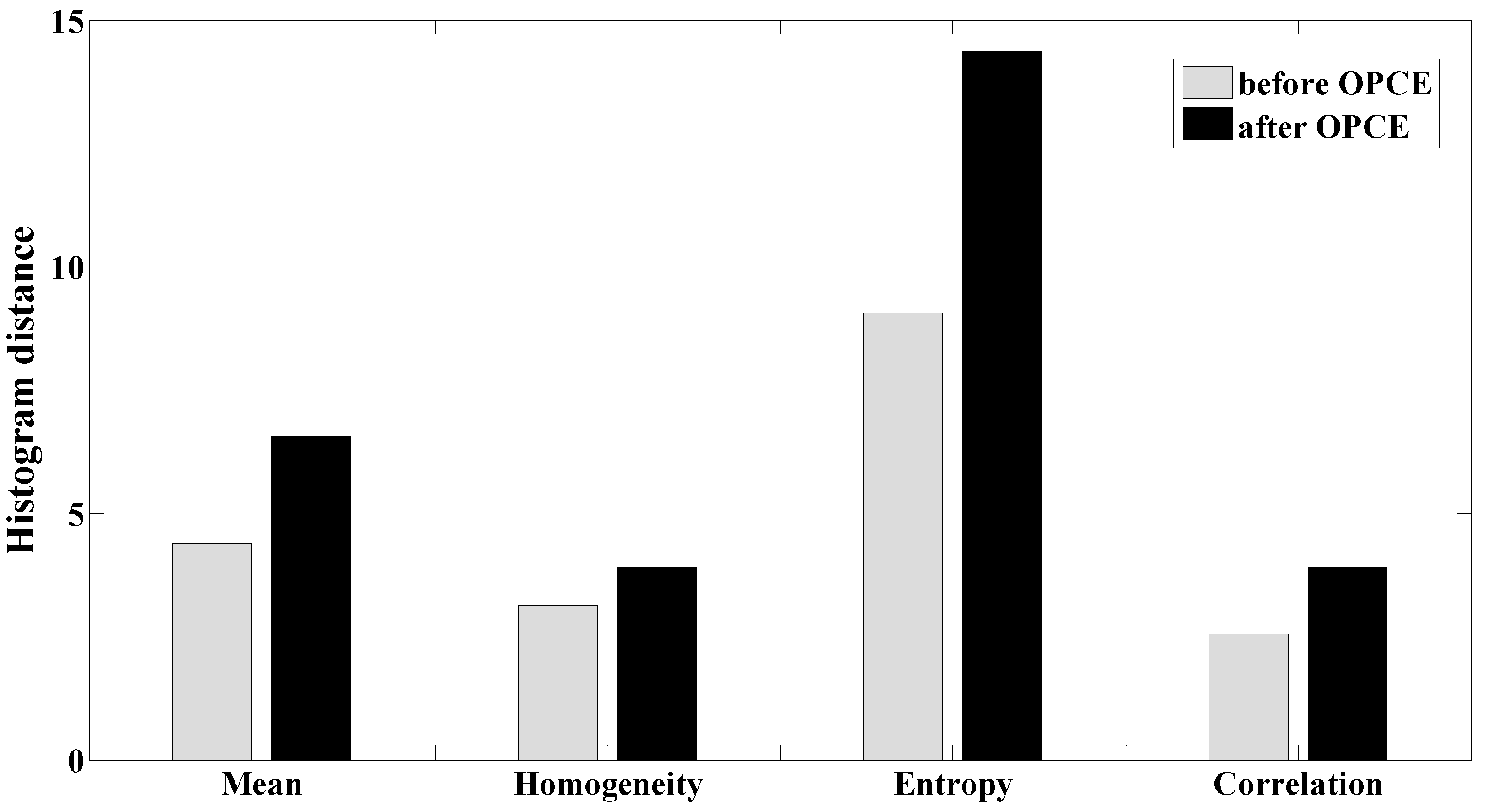

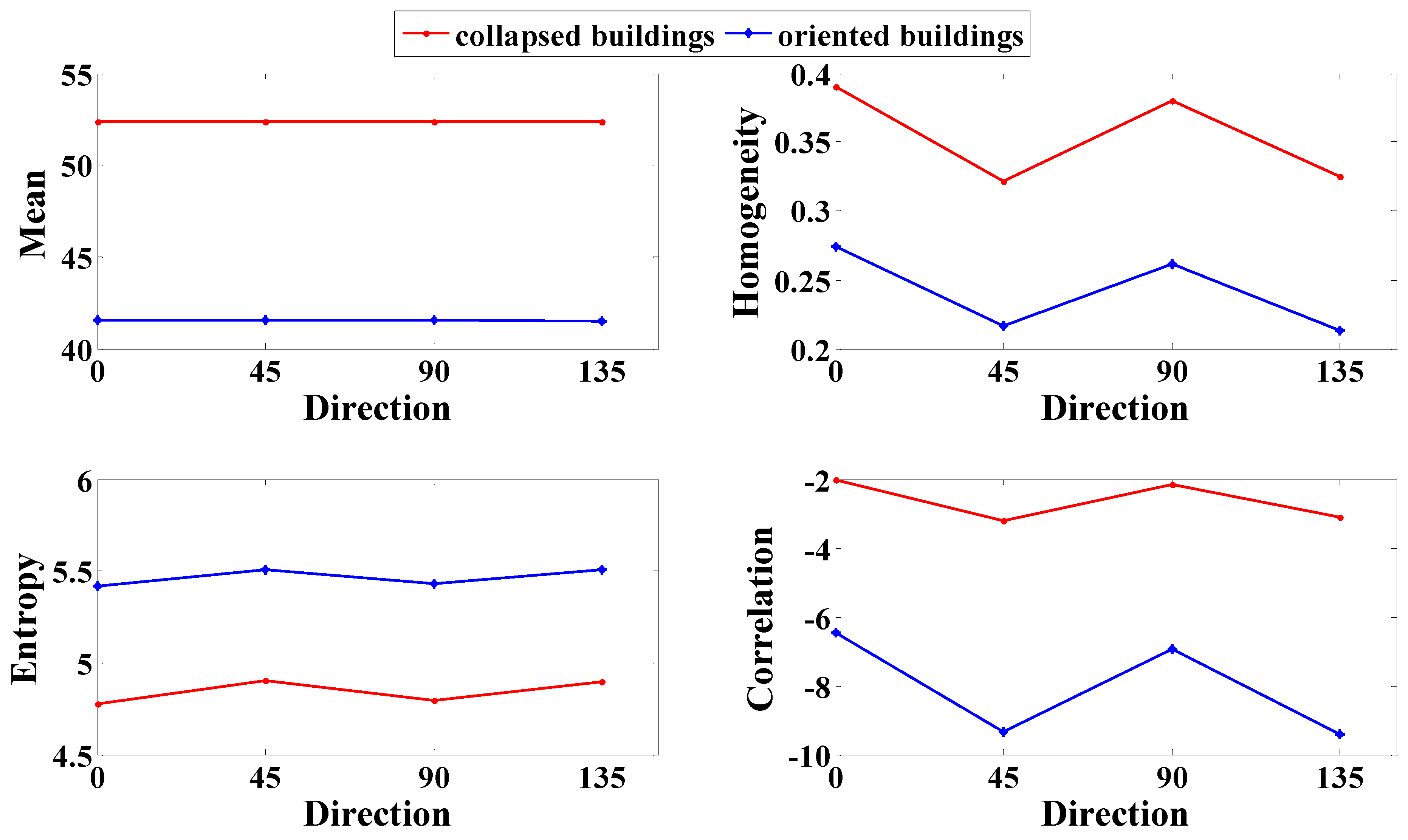

2.2.2. Texture Feature Extraction

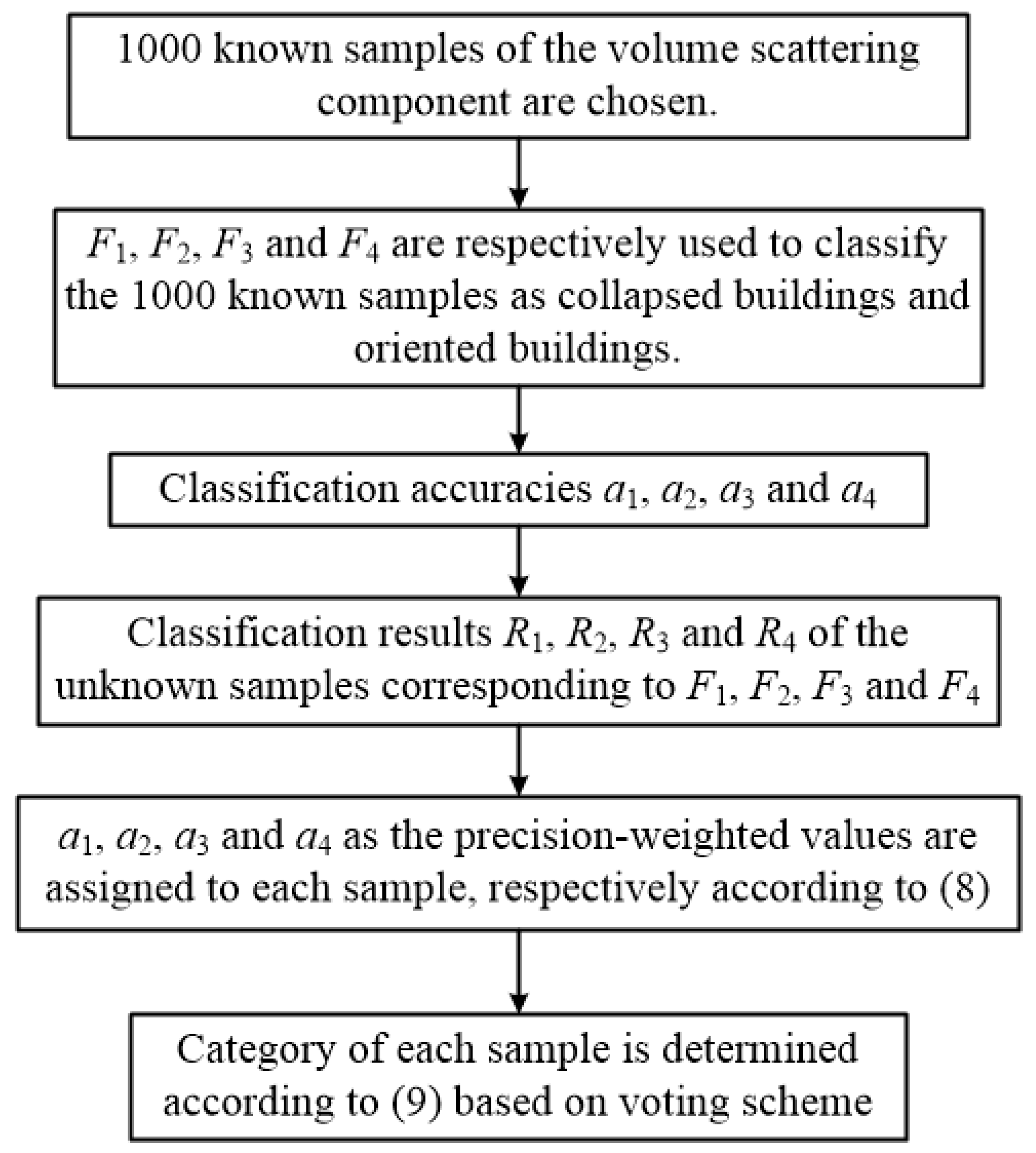

2.2.3. Fusion of the Multiple Texture Features Using the PWMF Method

2.2.4. Building Collapse Rate Calculation

2.2.5. Building Damage Assessment Framework

3. Results

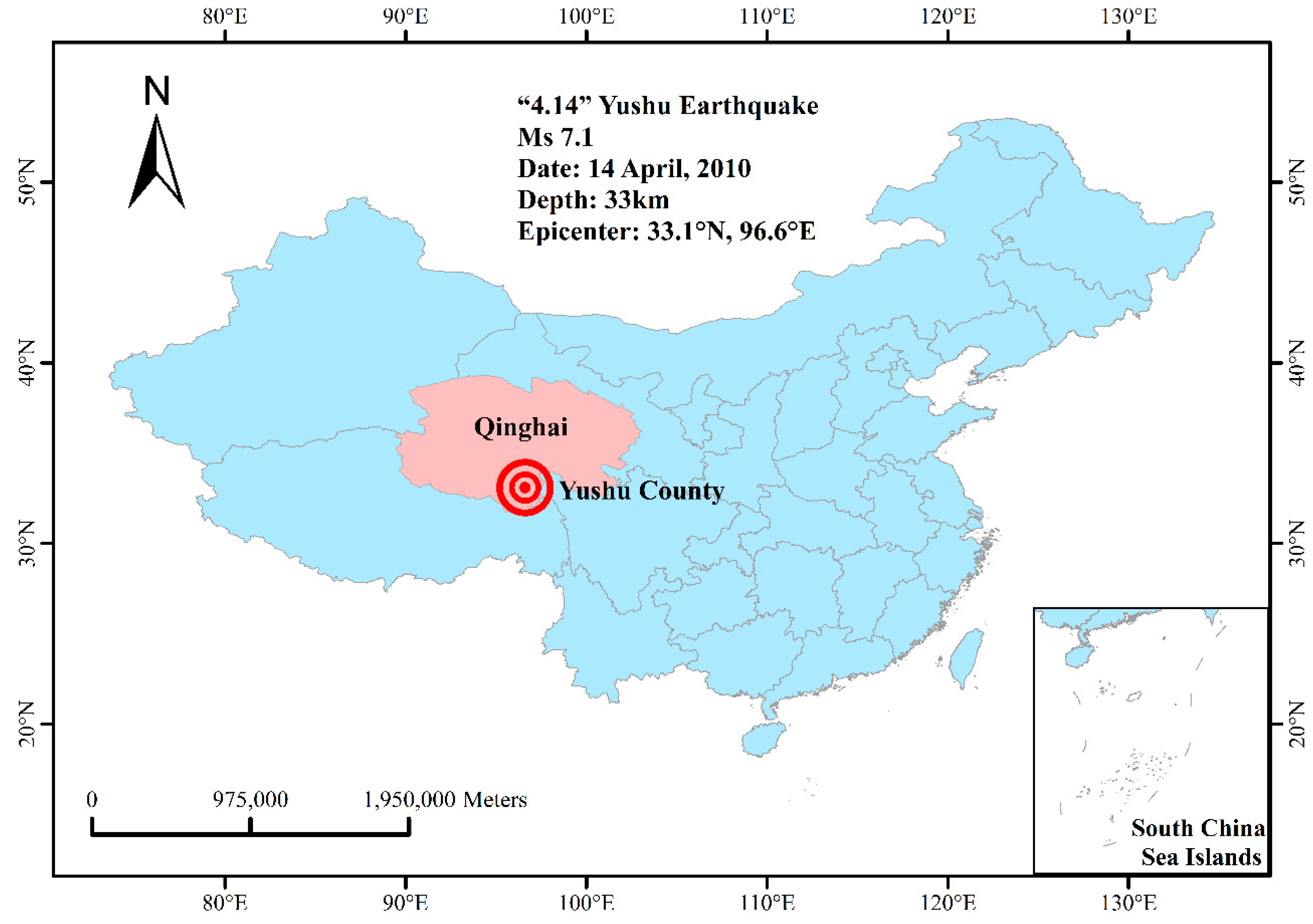

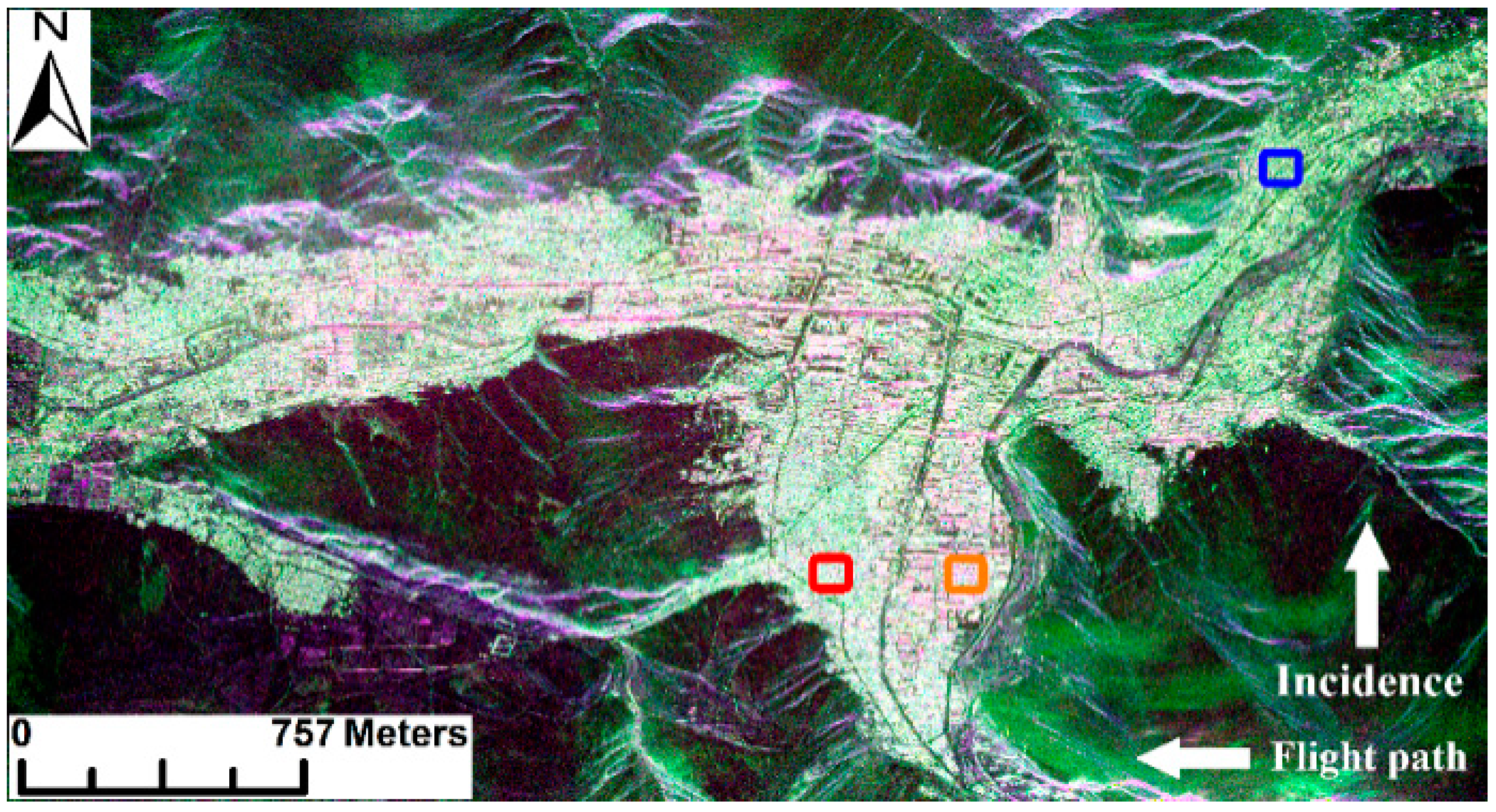

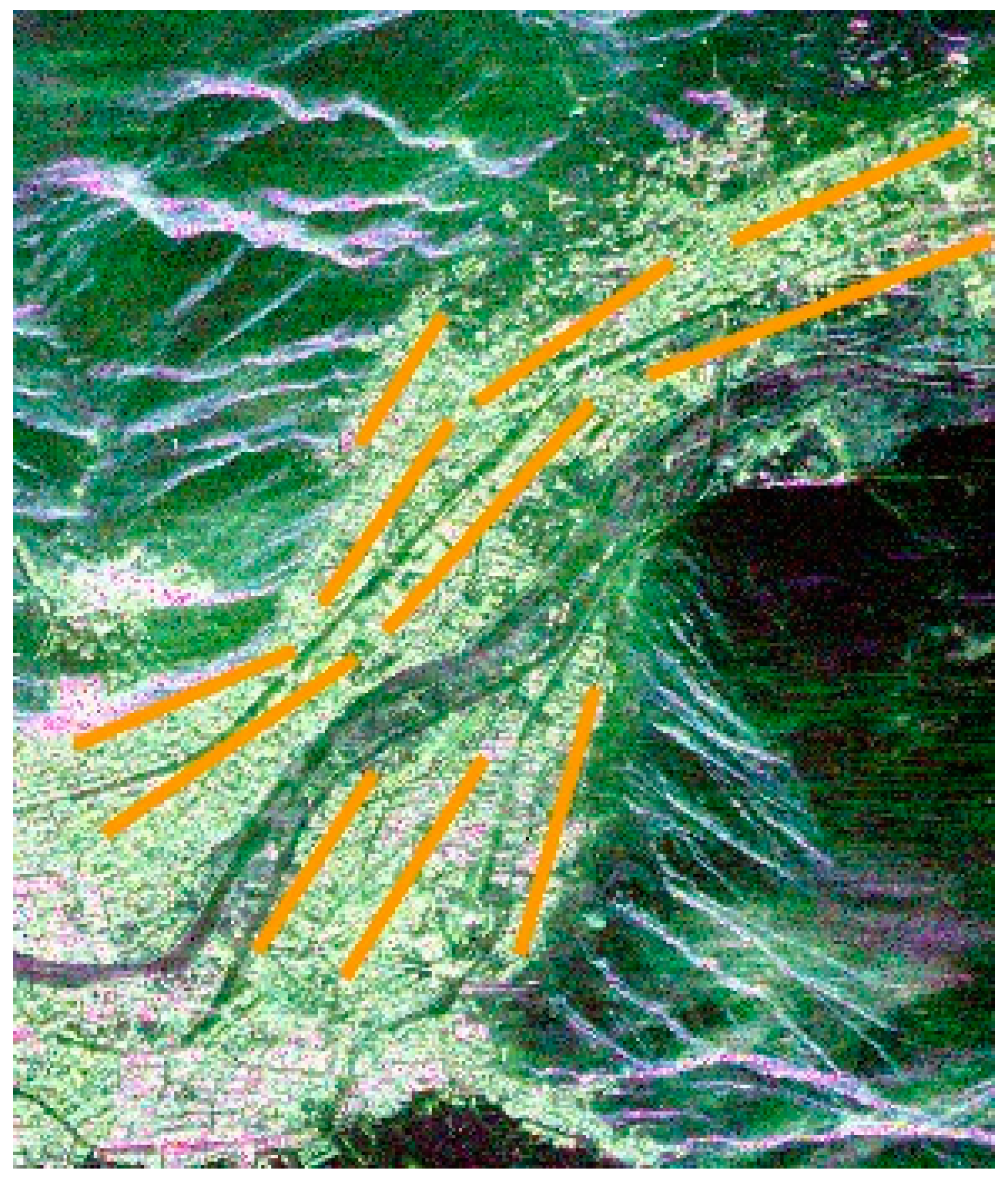

3.1. Study Area Description and Data Preparation

3.2. Experiment Demonstration

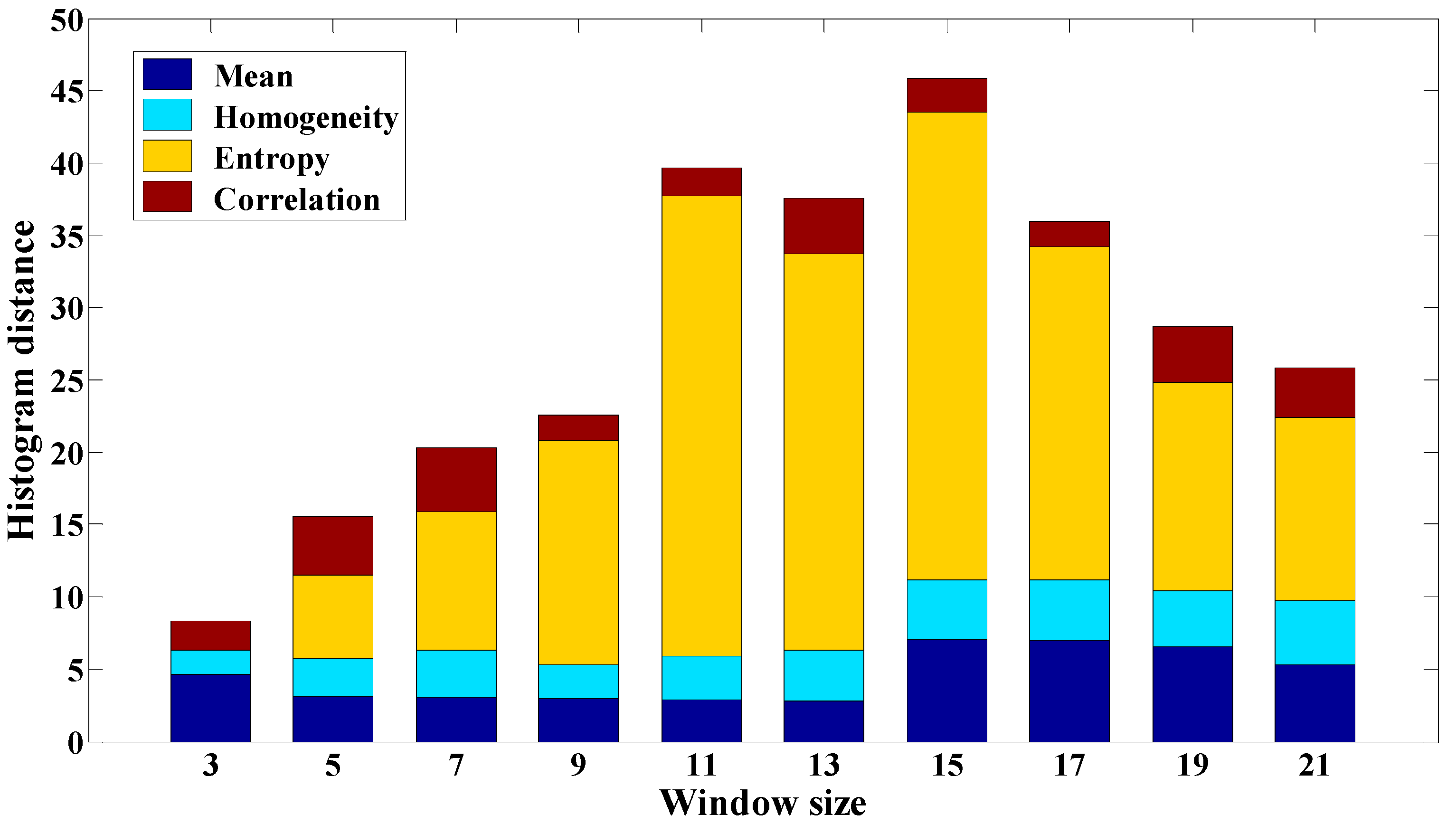

3.2.1. Texture Feature Extraction and Fusion Using OPCE Image

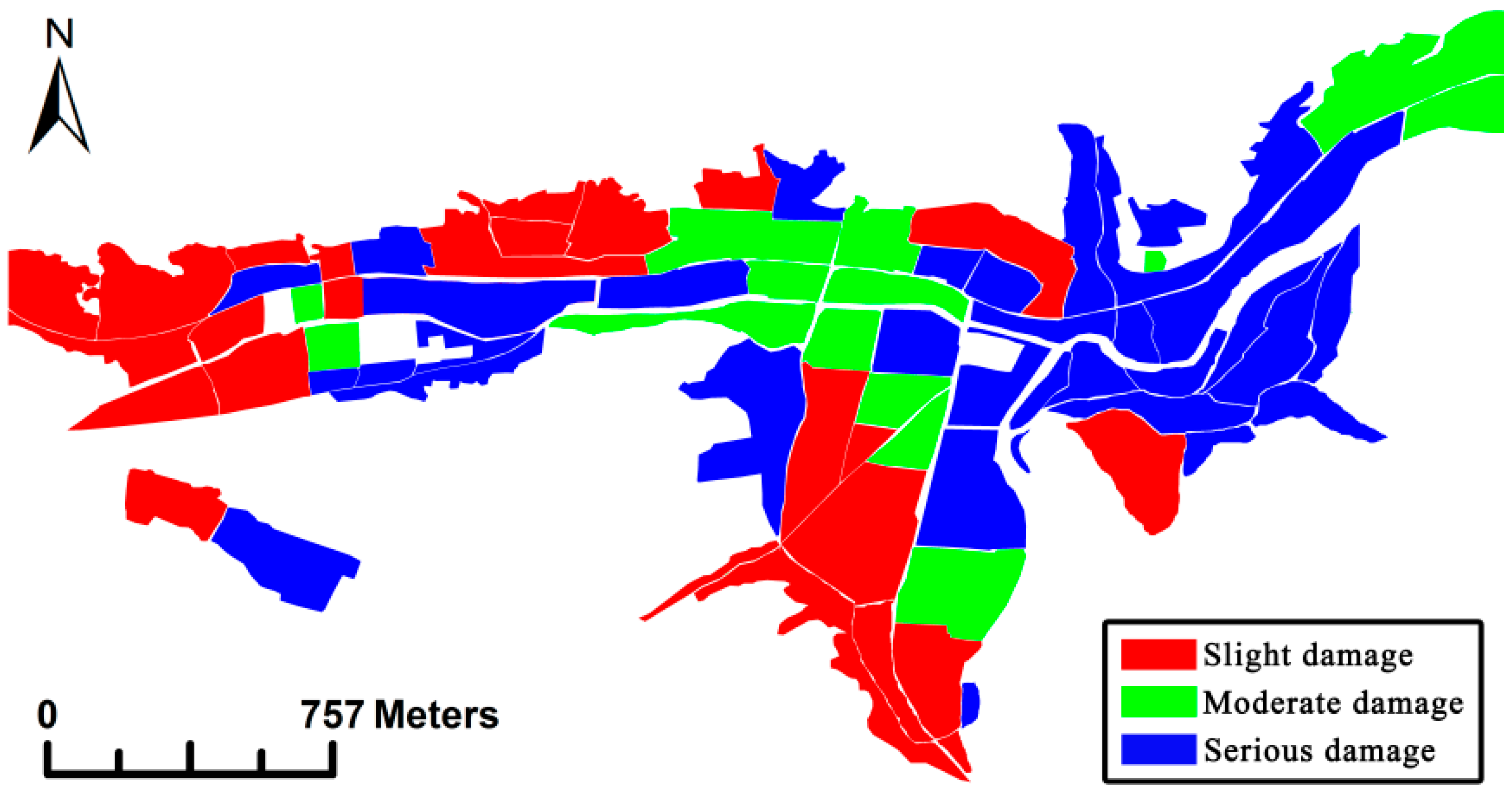

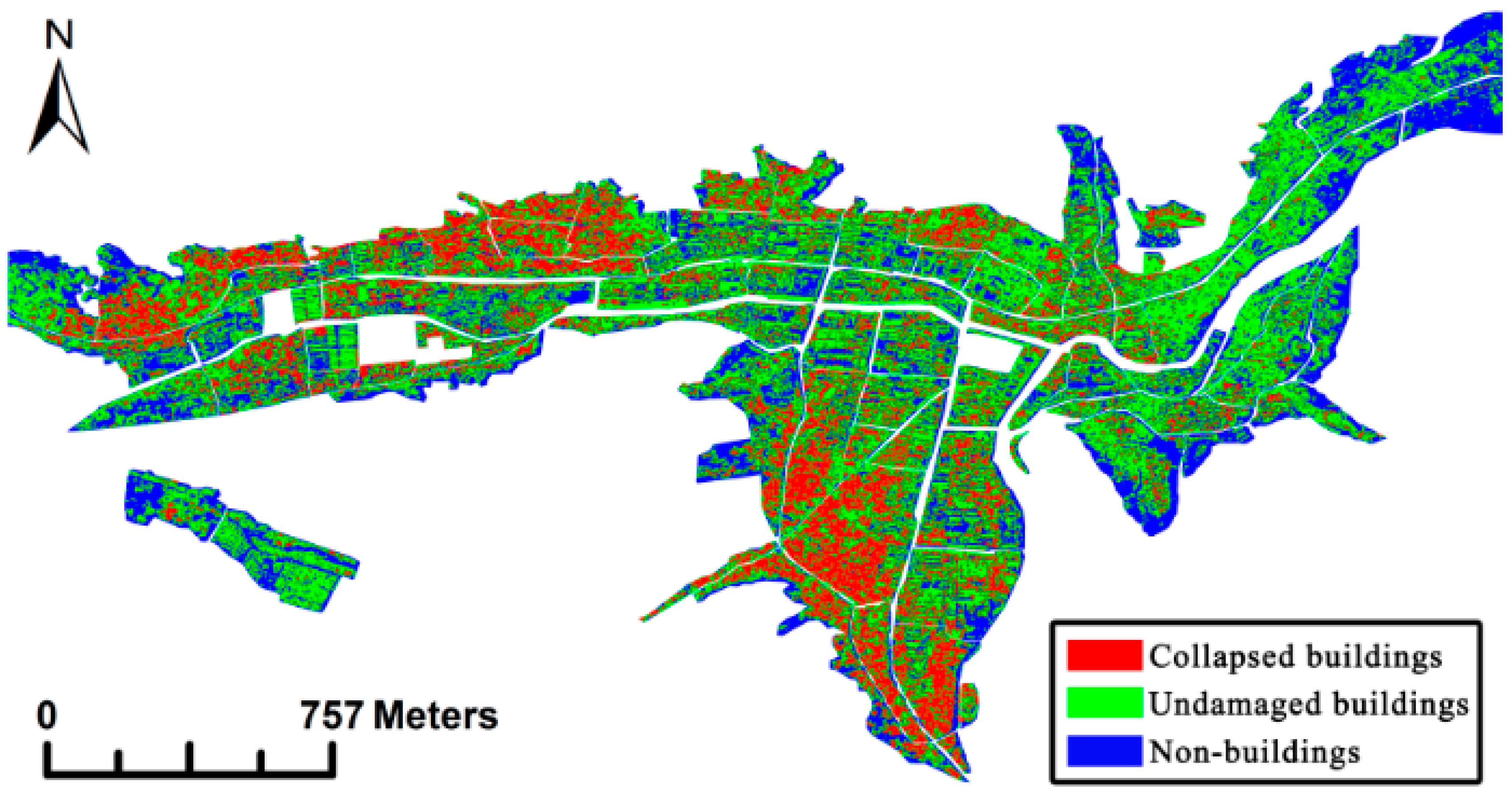

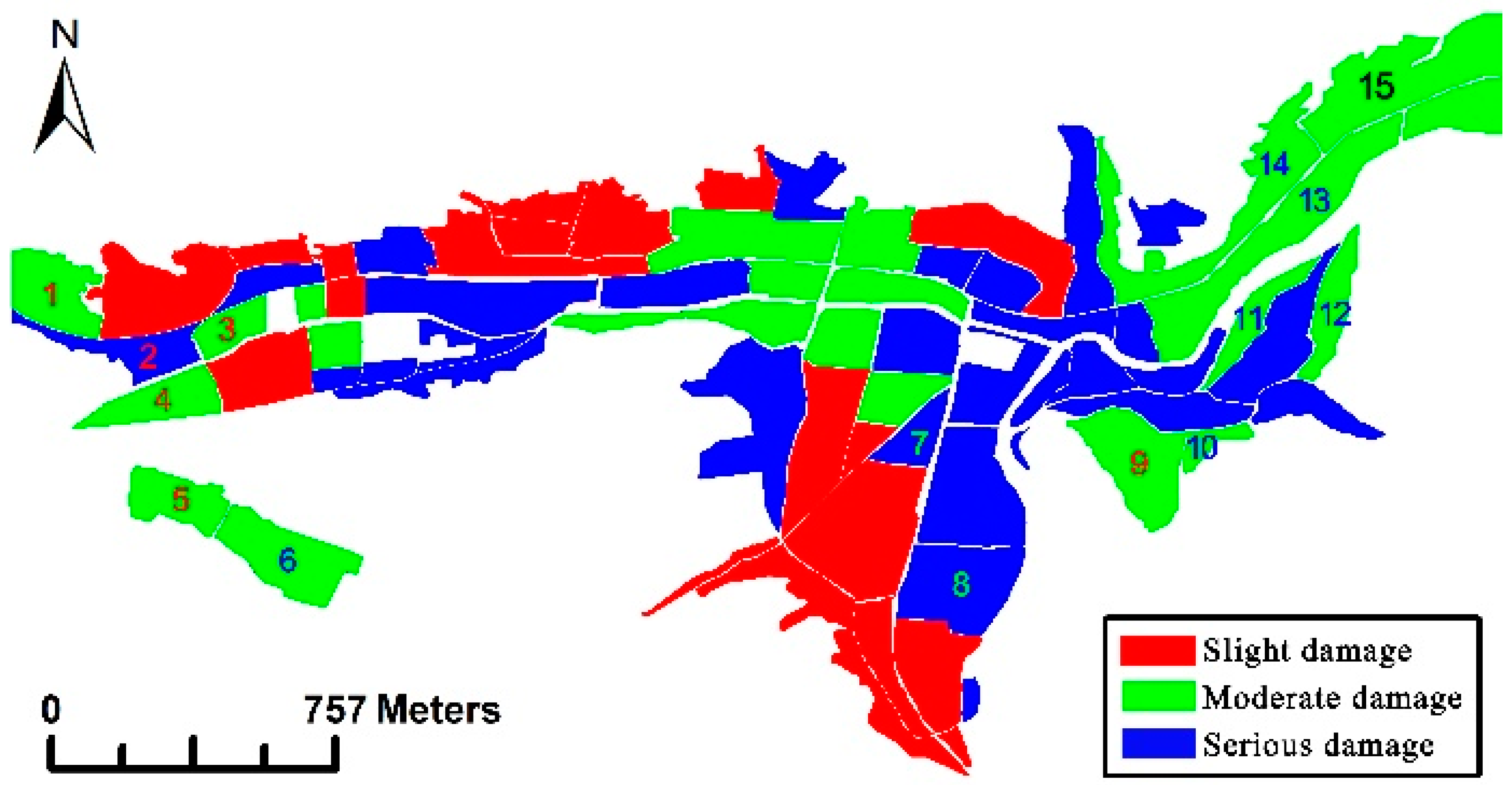

3.2.2. Building Damage Assessment Results

4. Discussion

5. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

| Reference (No. of Blocks) | Proposed Method (Experiment): Slight | Proposed Method (Experiment): Moderate | Proposed Method (Experiment): Serious | IYFD (Experiment): Slight | IYFD (Experiment): Moderate | IYFD (Experiment): Serious |
|---|---|---|---|---|---|---|
| Slight | 12 | 2 | 0 | 9 | 4 | 1 |
| Moderate | 6 | 27 | 0 | 2 | 21 | 10 |
| Serious | 5 | 1 | 19 | 1 | 2 | 22 |
| Overall accuracy | 80.56% | | | 72.22% | | |
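
The overall accuracies reported in the table can be checked directly from the block counts. The sketch below is illustrative only: it assumes that rows hold the reference damage level and columns hold the assessed (experiment) level, in the order slight, moderate, serious, and the names `proposed`, `iyfd`, and `overall_accuracy` are hypothetical helpers rather than anything defined by the authors.

```python
# Block counts copied from the table.
# Assumption: rows = reference level, columns = assessed (experiment) level,
# both ordered slight, moderate, serious.
proposed = [
    [12, 2, 0],
    [6, 27, 0],
    [5, 1, 19],
]
iyfd = [
    [9, 4, 1],
    [2, 21, 10],
    [1, 2, 22],
]


def overall_accuracy(confusion):
    """Share of blocks whose assessed level equals the reference level."""
    correct = sum(confusion[i][i] for i in range(len(confusion)))
    total = sum(sum(row) for row in confusion)
    return correct / total


for name, matrix in (("Proposed method", proposed), ("IYFD", iyfd)):
    total_blocks = sum(sum(row) for row in matrix)
    print(f"{name}: {overall_accuracy(matrix):.2%} of {total_blocks} blocks")
```

Under this reading, both methods are scored against the same 72 reference blocks (14 slight, 33 moderate, 25 serious), and the diagonal sums of 58 and 52 blocks give the tabulated 80.56% and 72.22%.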

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Zhai, W.; Huang, C.; Pei, W. Building Damage Assessment Based on the Fusion of Multiple Texture Features Using a Single Post-Earthquake PolSAR Image. Remote Sens. 2019, 11, 897. https://doi.org/10.3390/rs11080897