Robot-Assisted Floor Surface Profiling Using Low-Cost Sensors

Abstract

1. Introduction

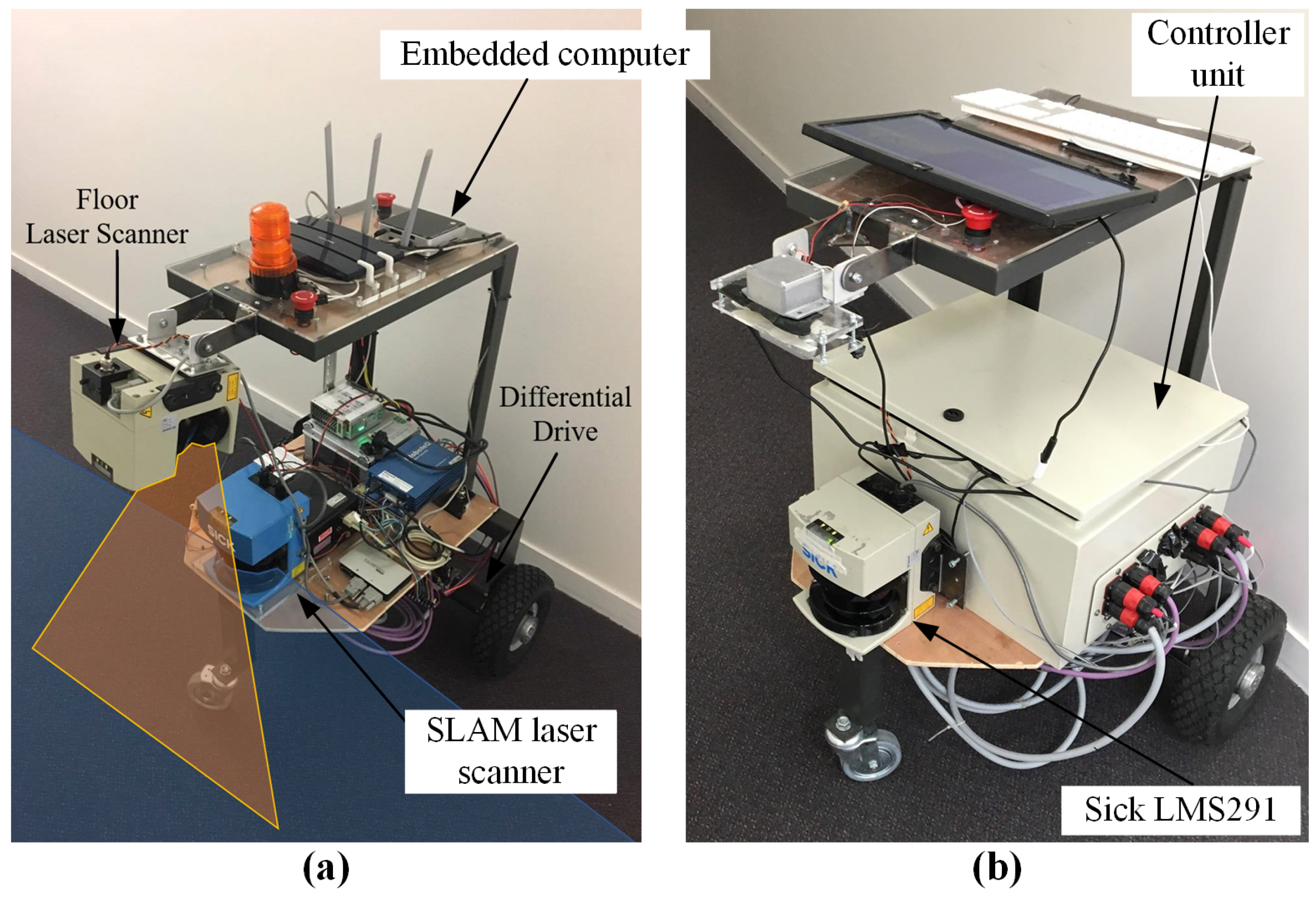

2. Robotic Platform Development

2.1. System Requirements

2.2. Mechanical System

2.3. Electrical System

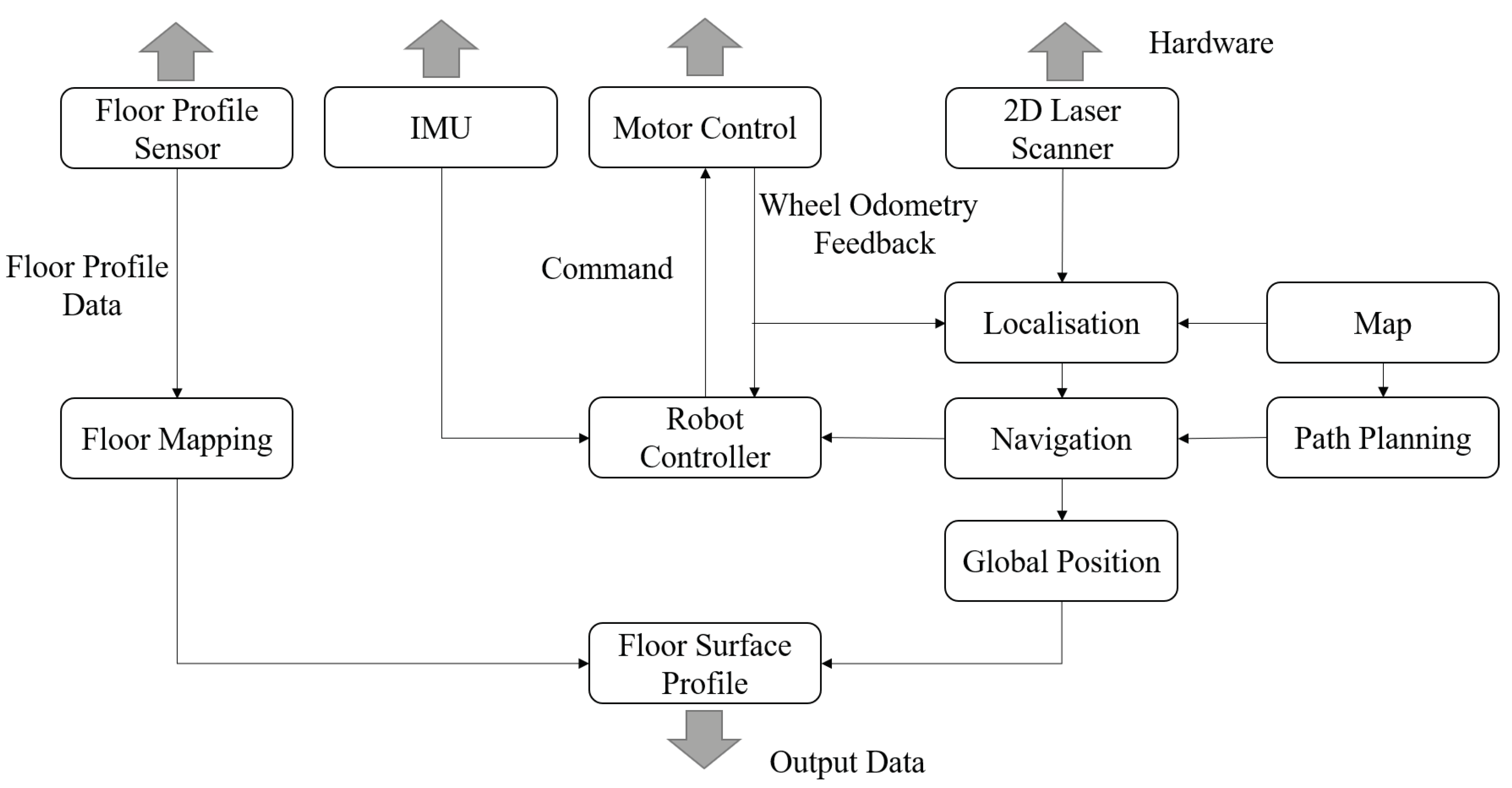

2.4. Software System

2.5. Floor Profile Creation

3. Localization and Floor Scanning Sensor Selection

3.1. Localization Sensor

3.2. Floor Scanning Sensor

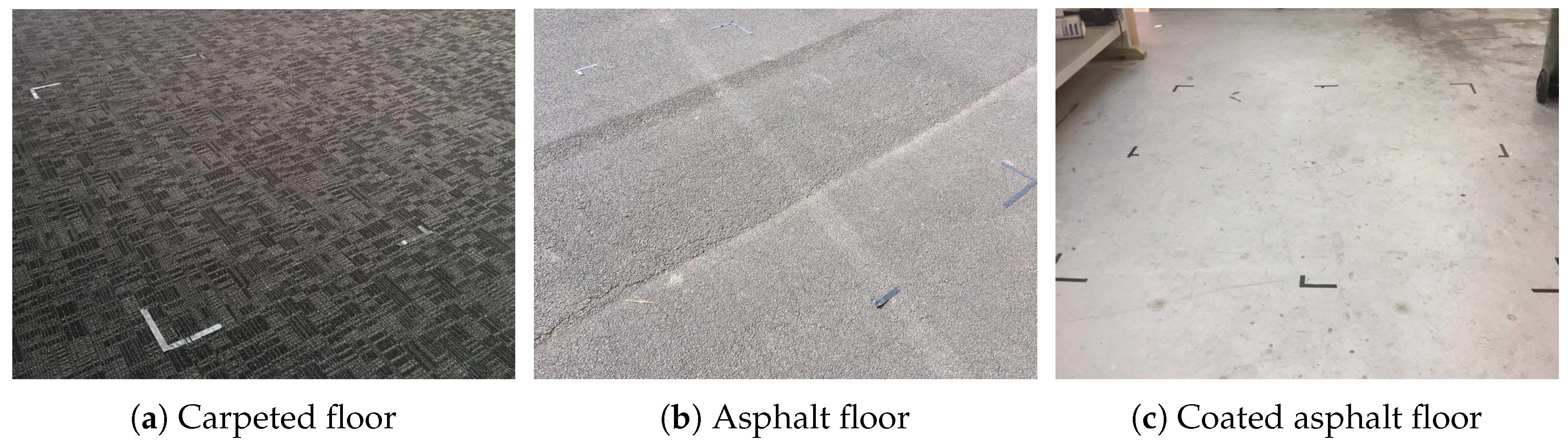

4. Initial Testing

4.1. Experiment Methodology

4.2. Measurement Methods

4.3. Initial Floor Capture Results

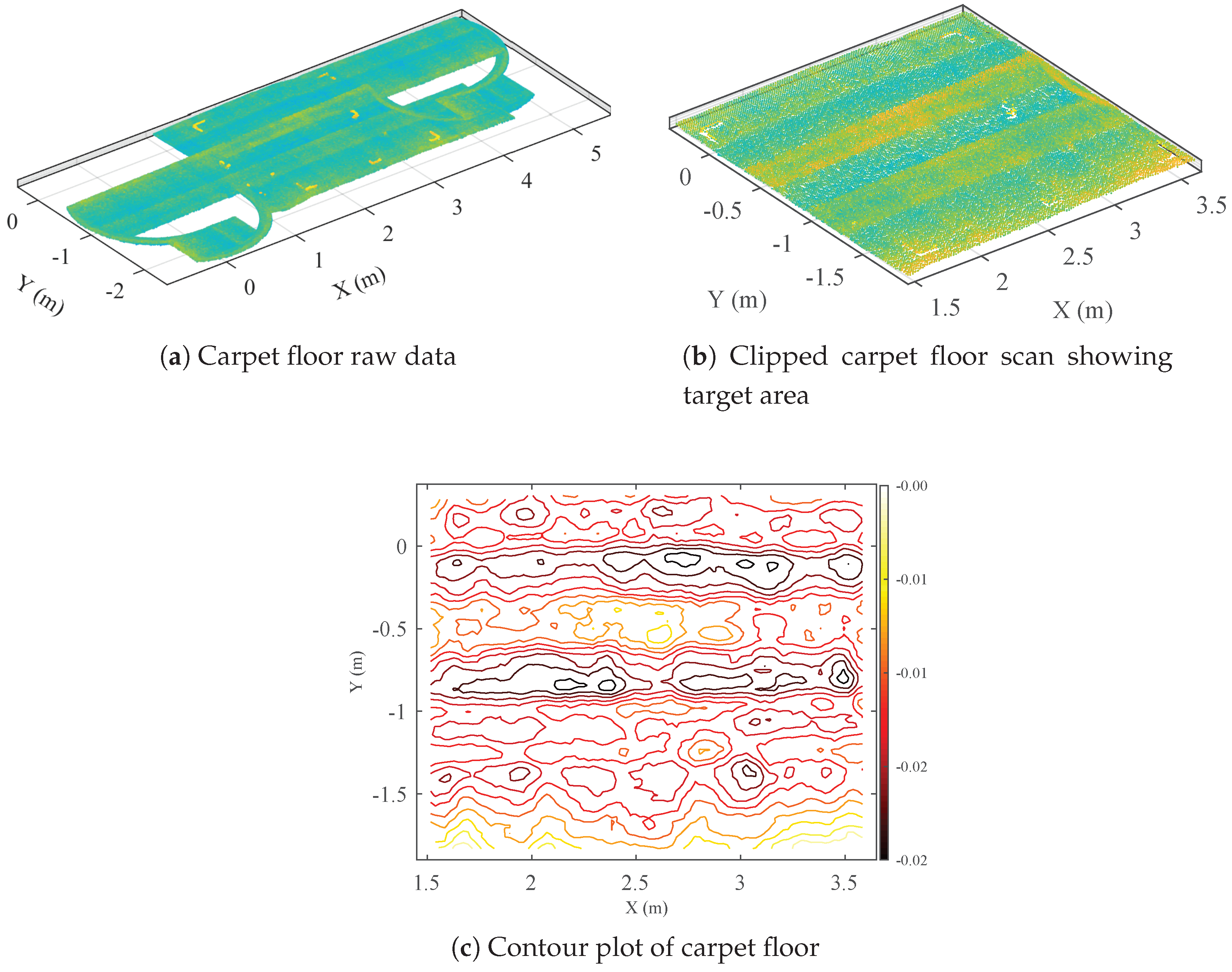

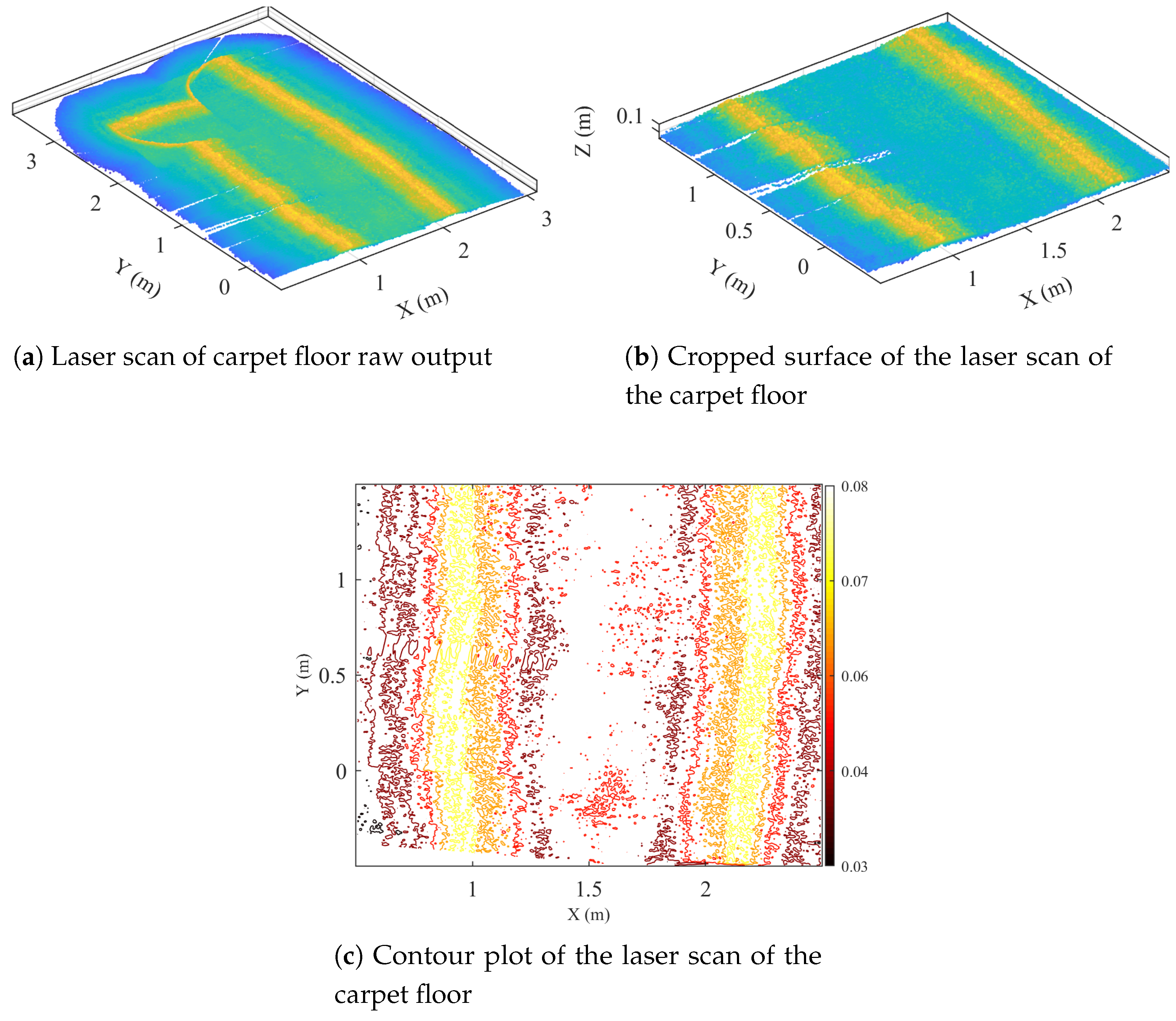

4.3.1. Carpeted Floor

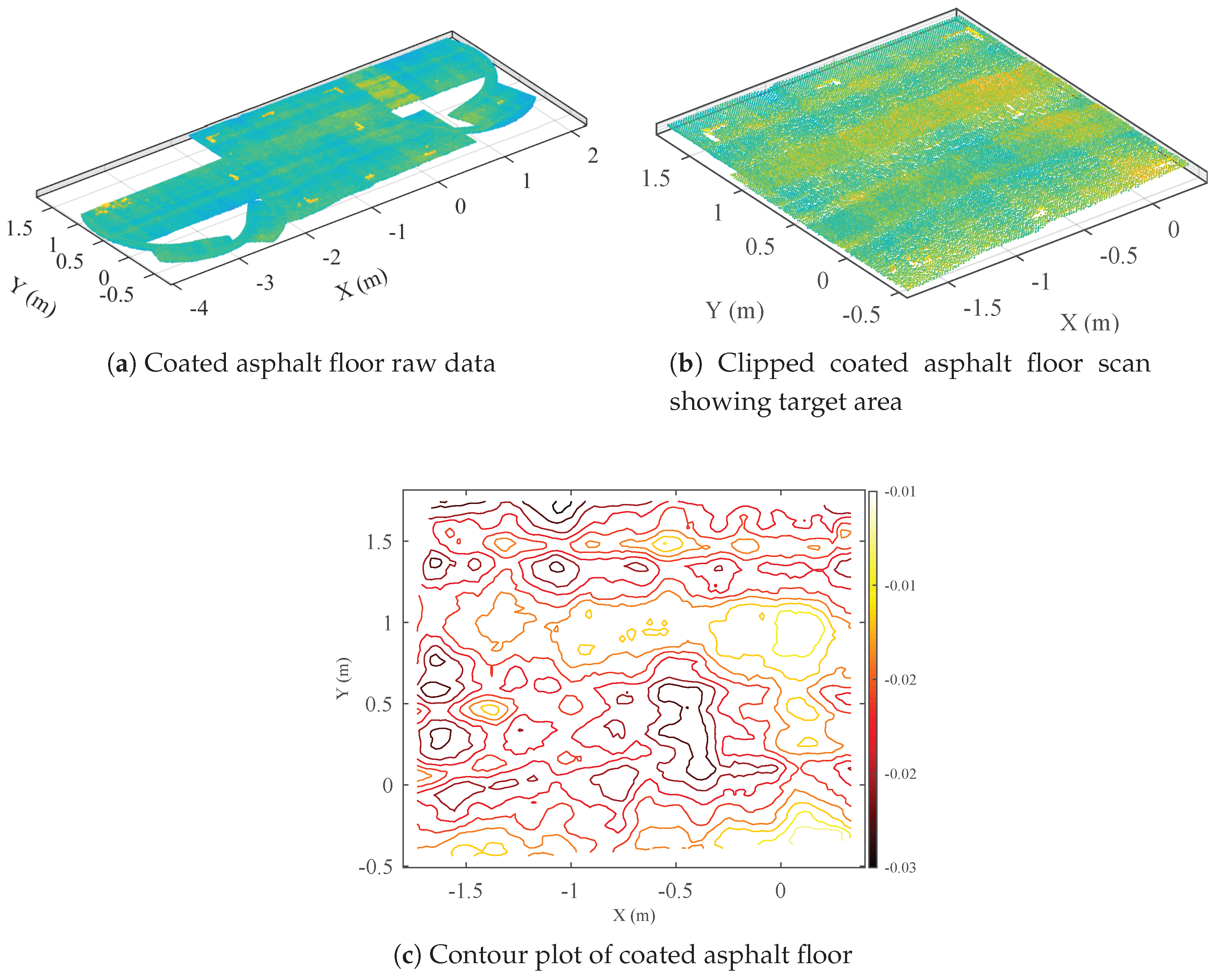

4.3.2. Workshop Floor

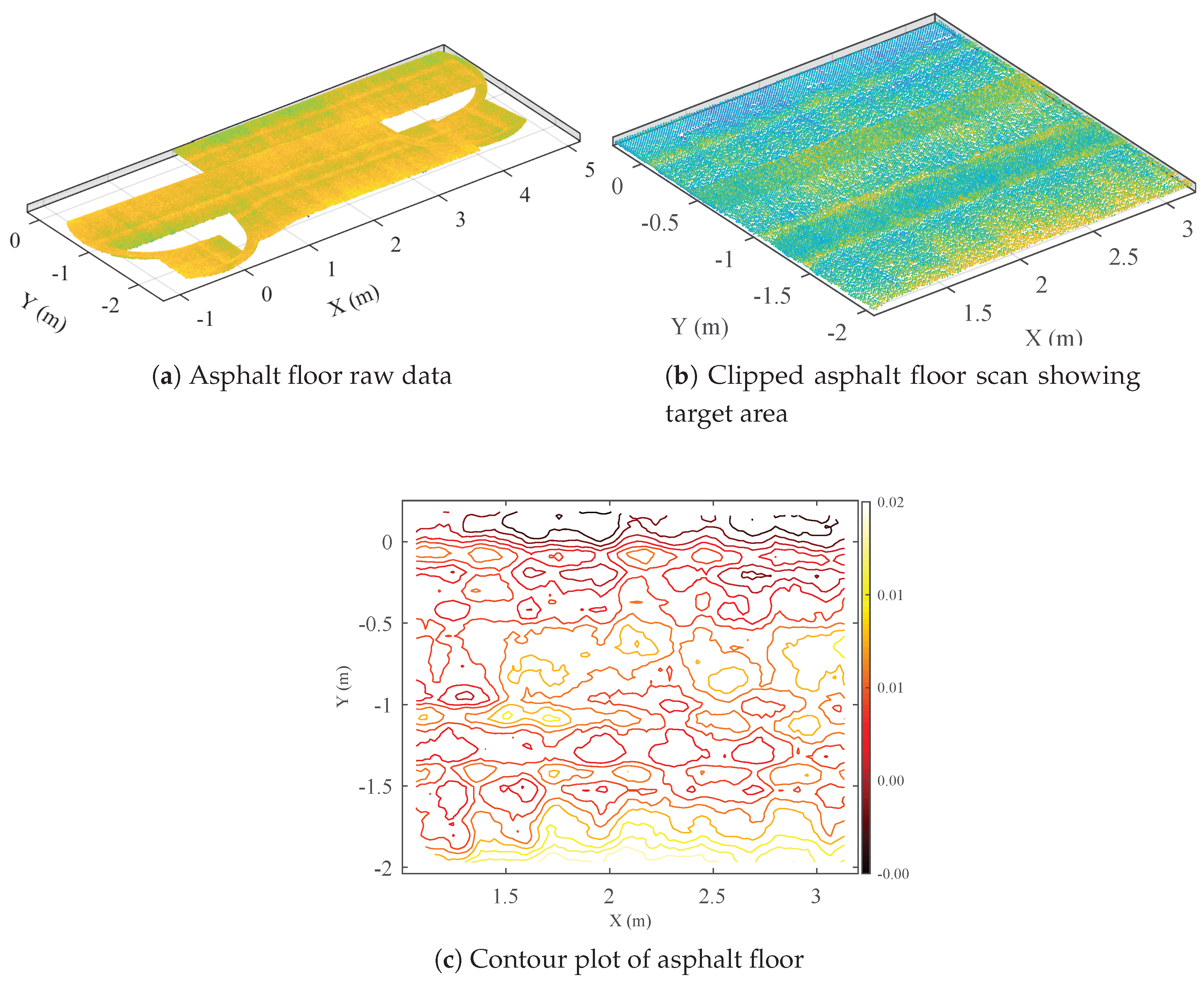

4.3.3. Asphalt

5. Initial Challenges

5.1. Localization

5.2. 2D Limitations

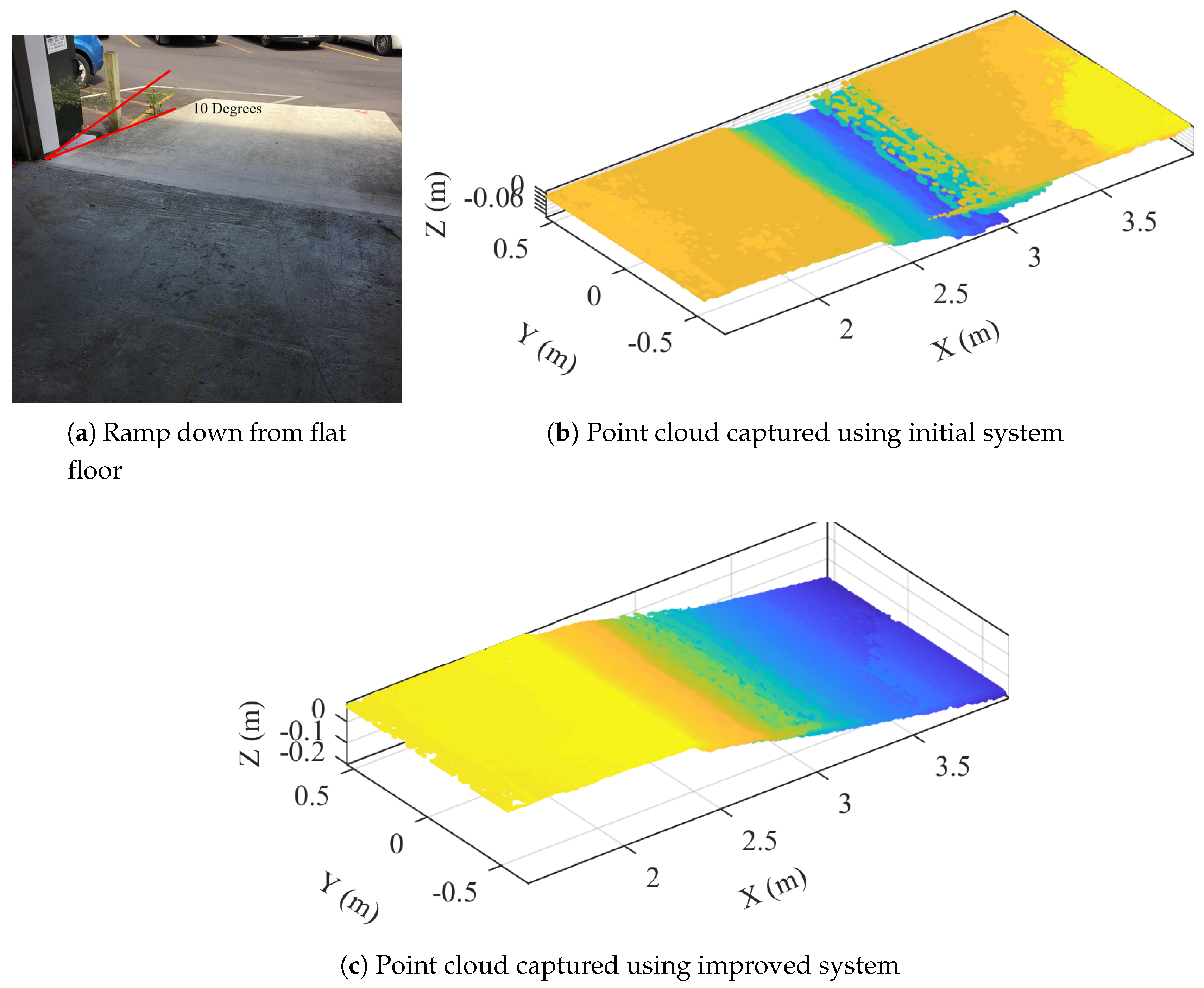

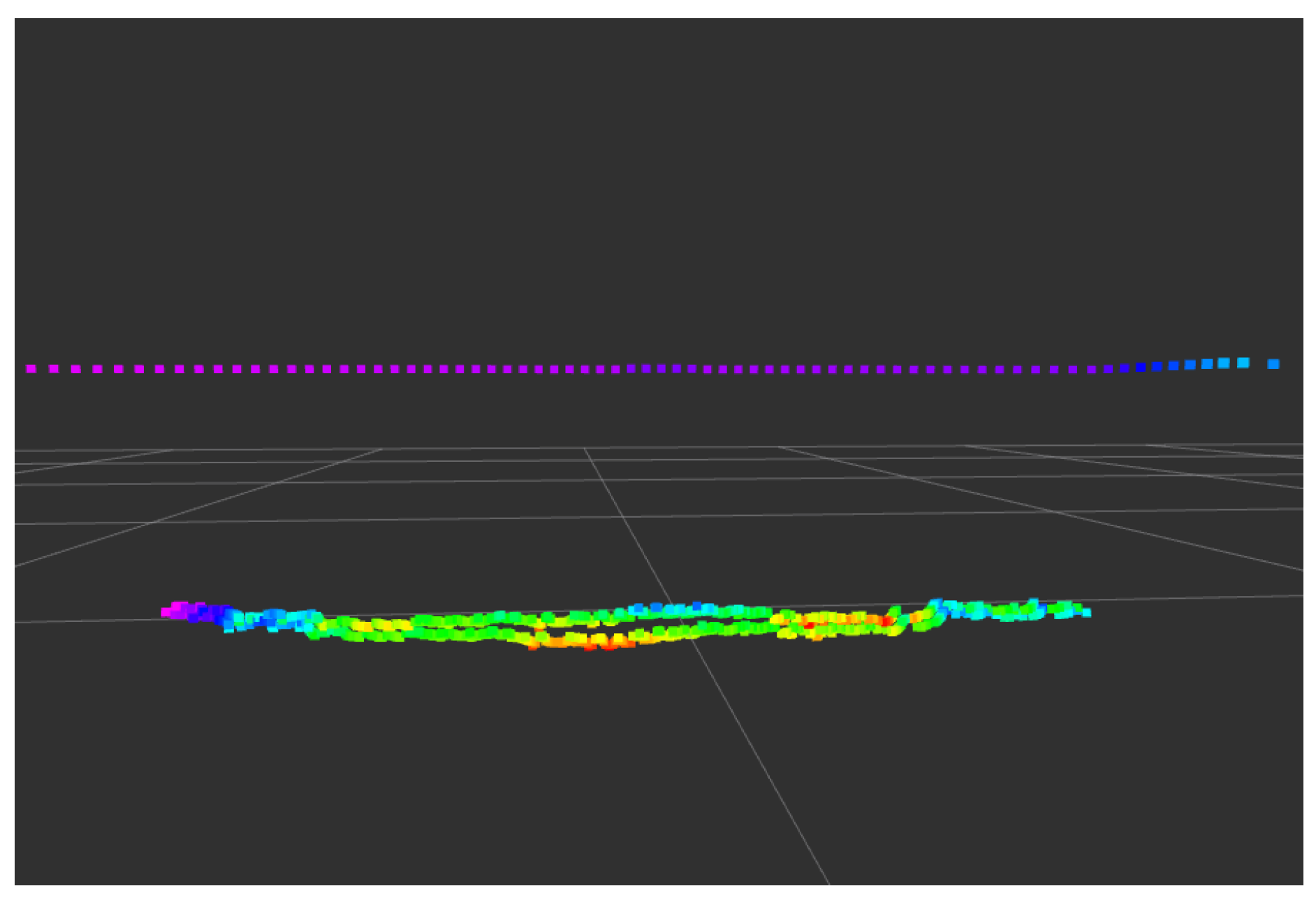

6. Further Development

6.1. Sensor Accuracy

6.2. Map Creation

6.3. 2D to 3D Extrapolation

6.4. RealSense Camera Testing

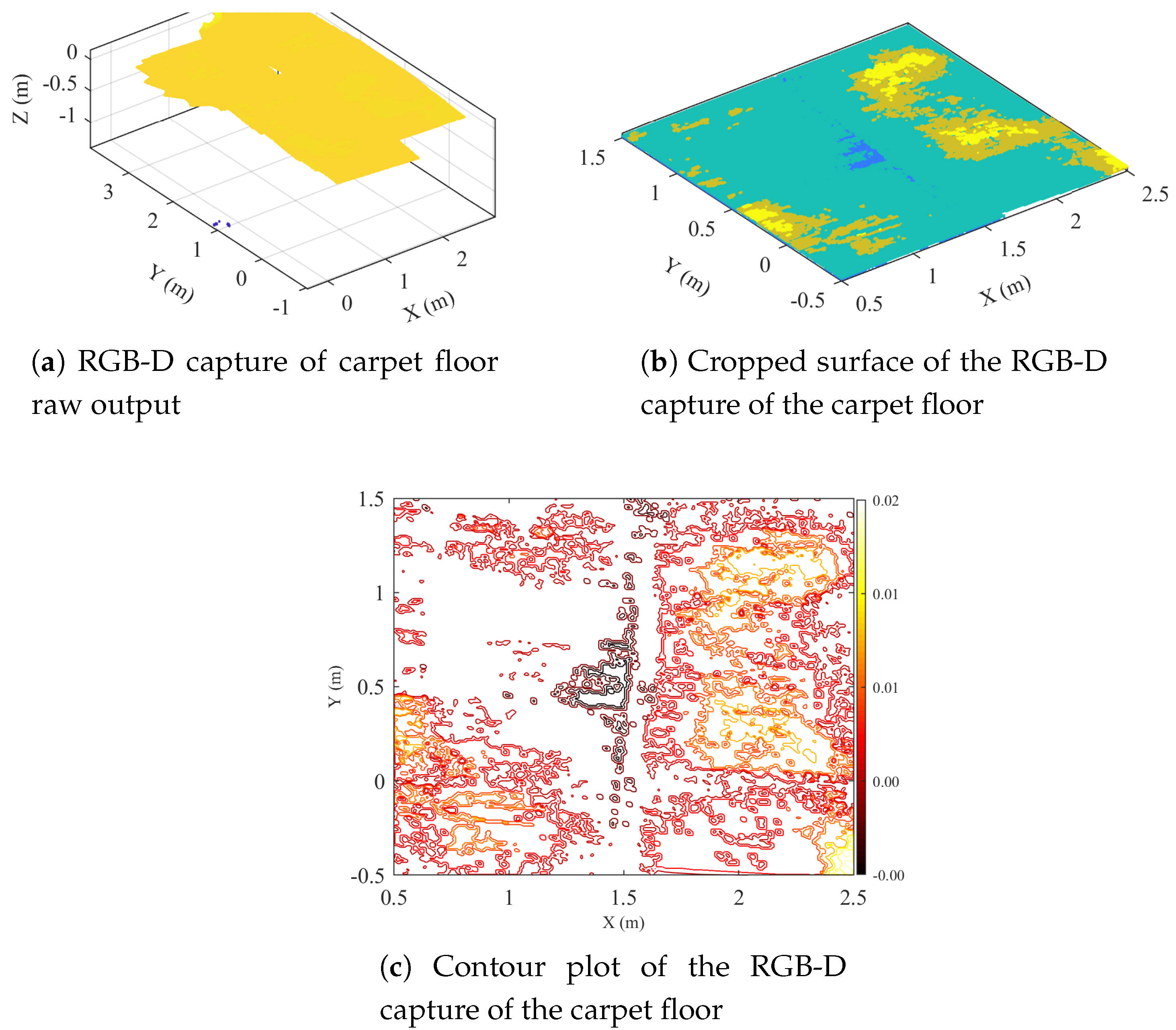

6.5. Floor Profile Creation with RGB-D Camera

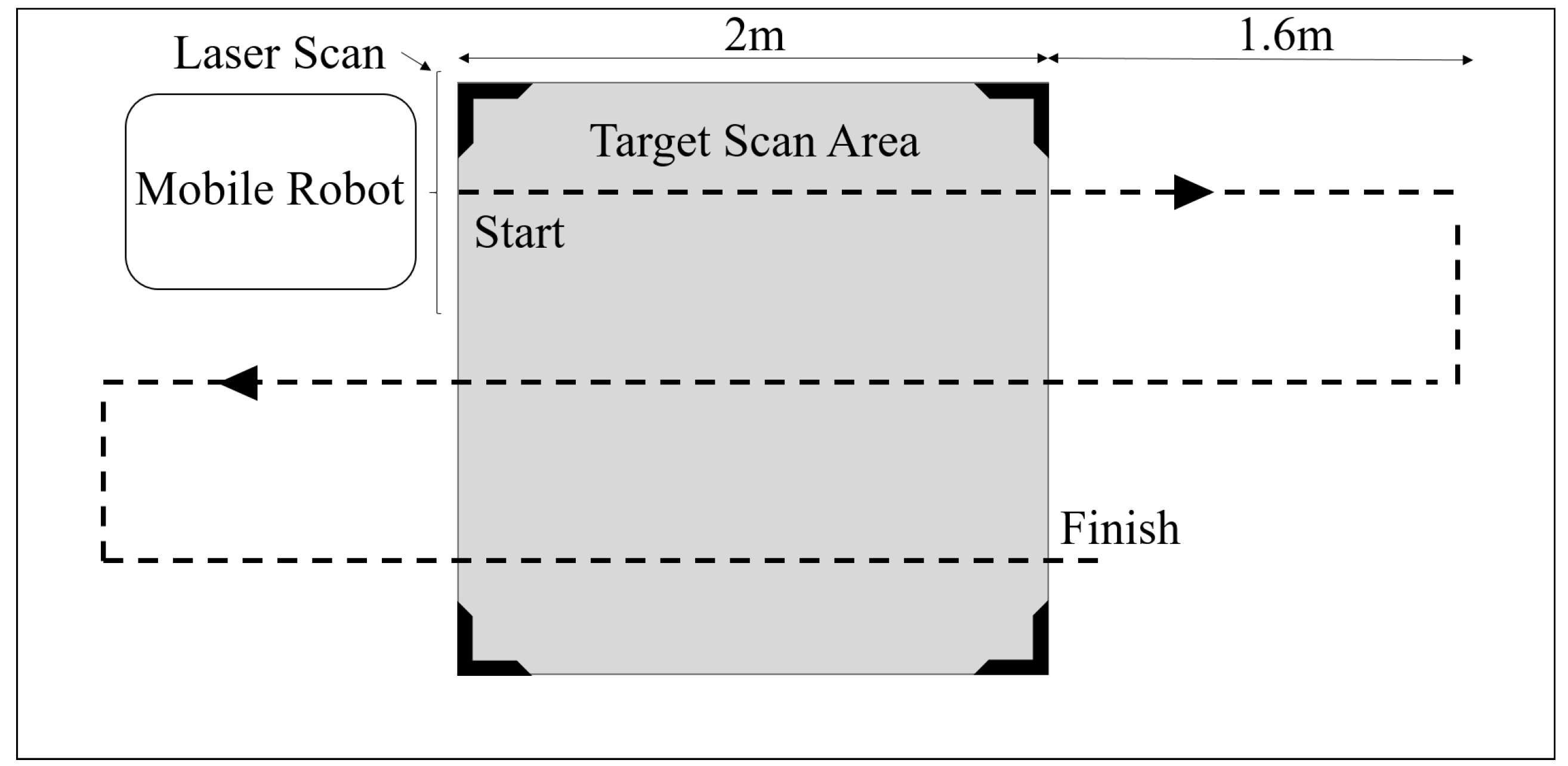

7. Improved Experiment Methodology

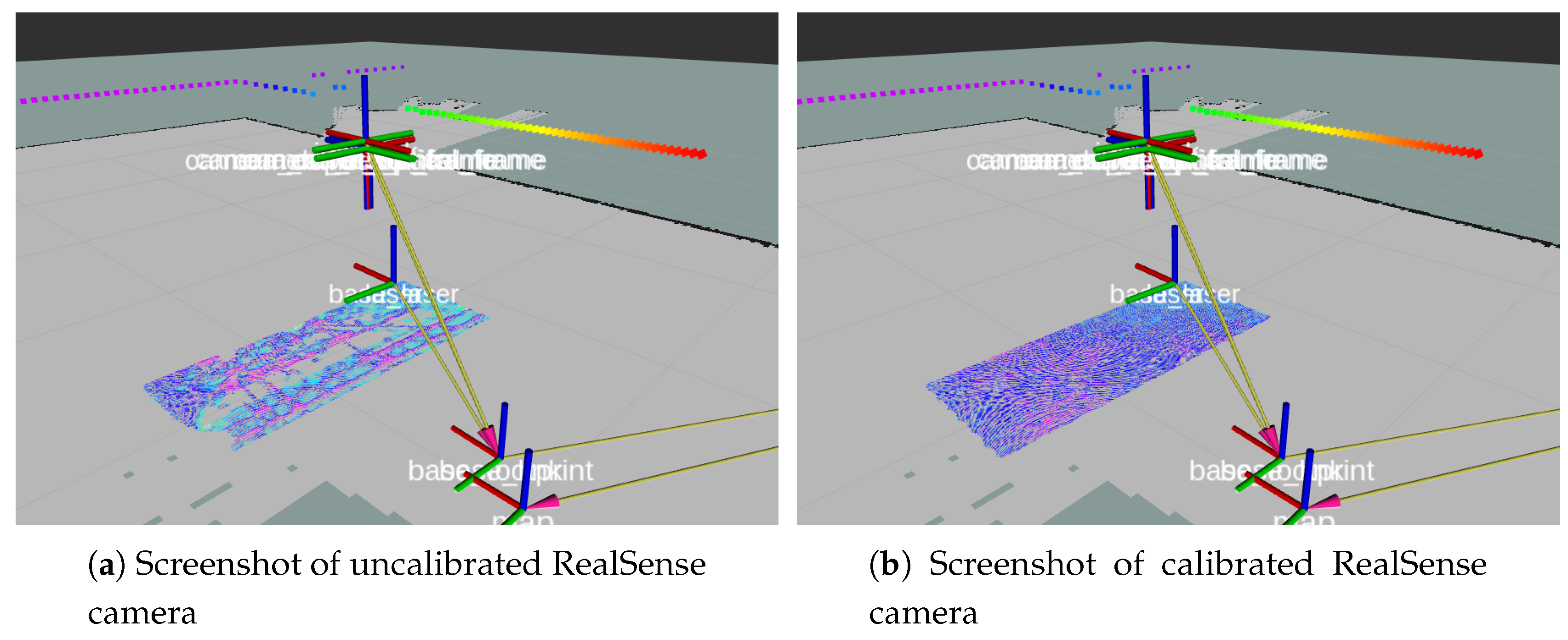

7.1. Sensor Calibration

7.2. Measurement Methods

8. Testing of Improved Floor Surface Capture System

8.1. Experiment Methodology

8.2. Improved Floor Capture Results

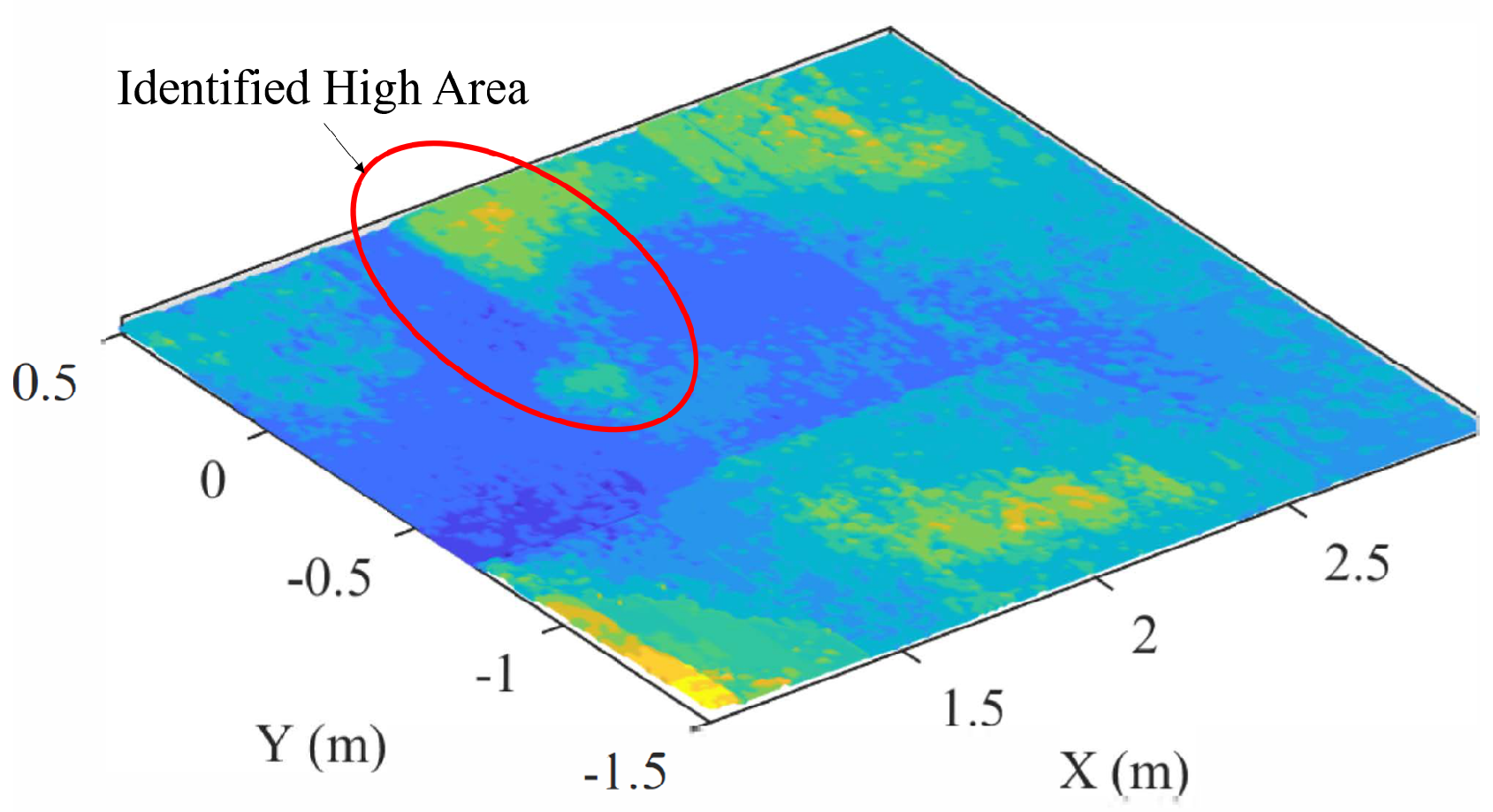

8.2.1. Capture of Carpeted Floor

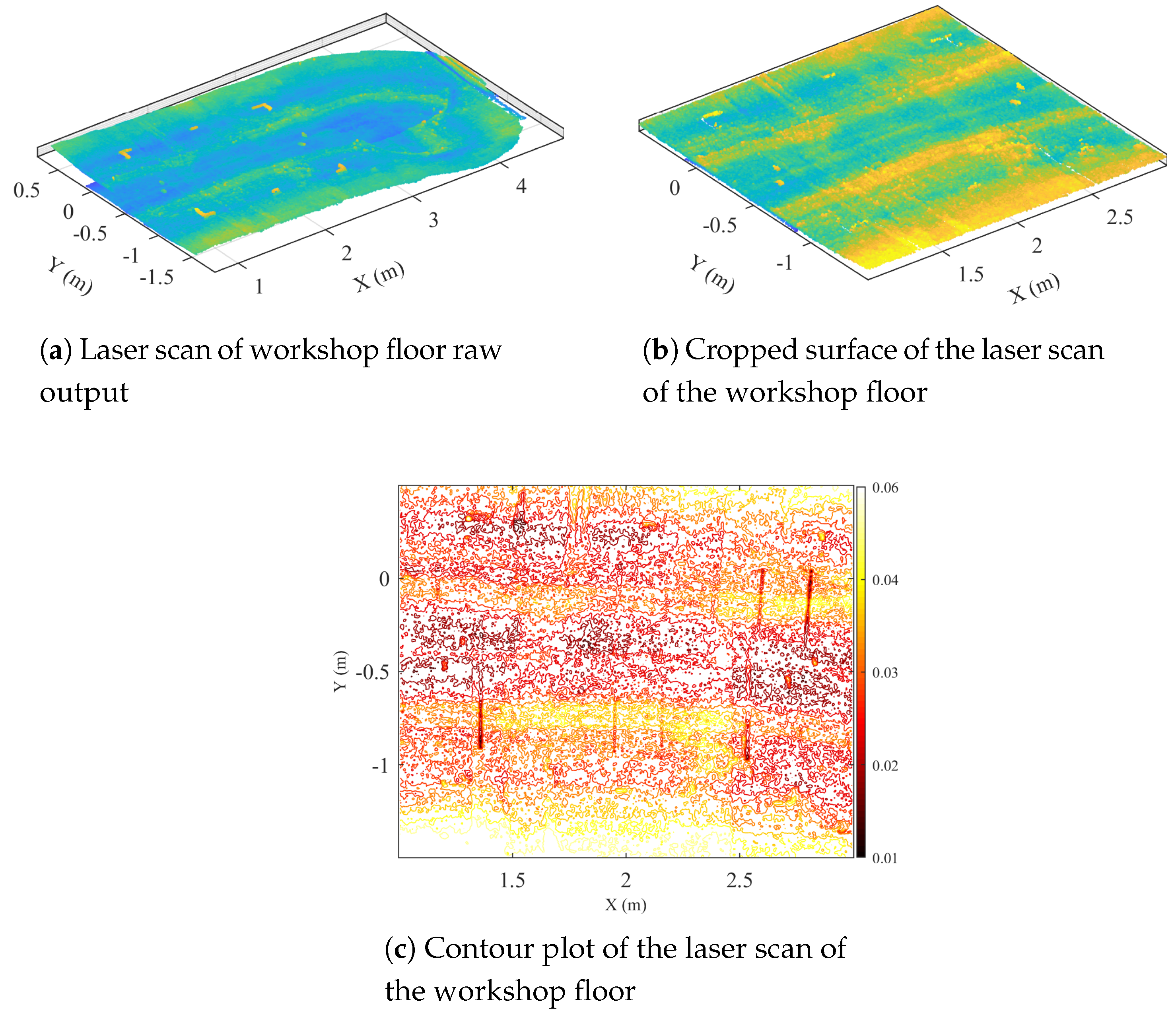

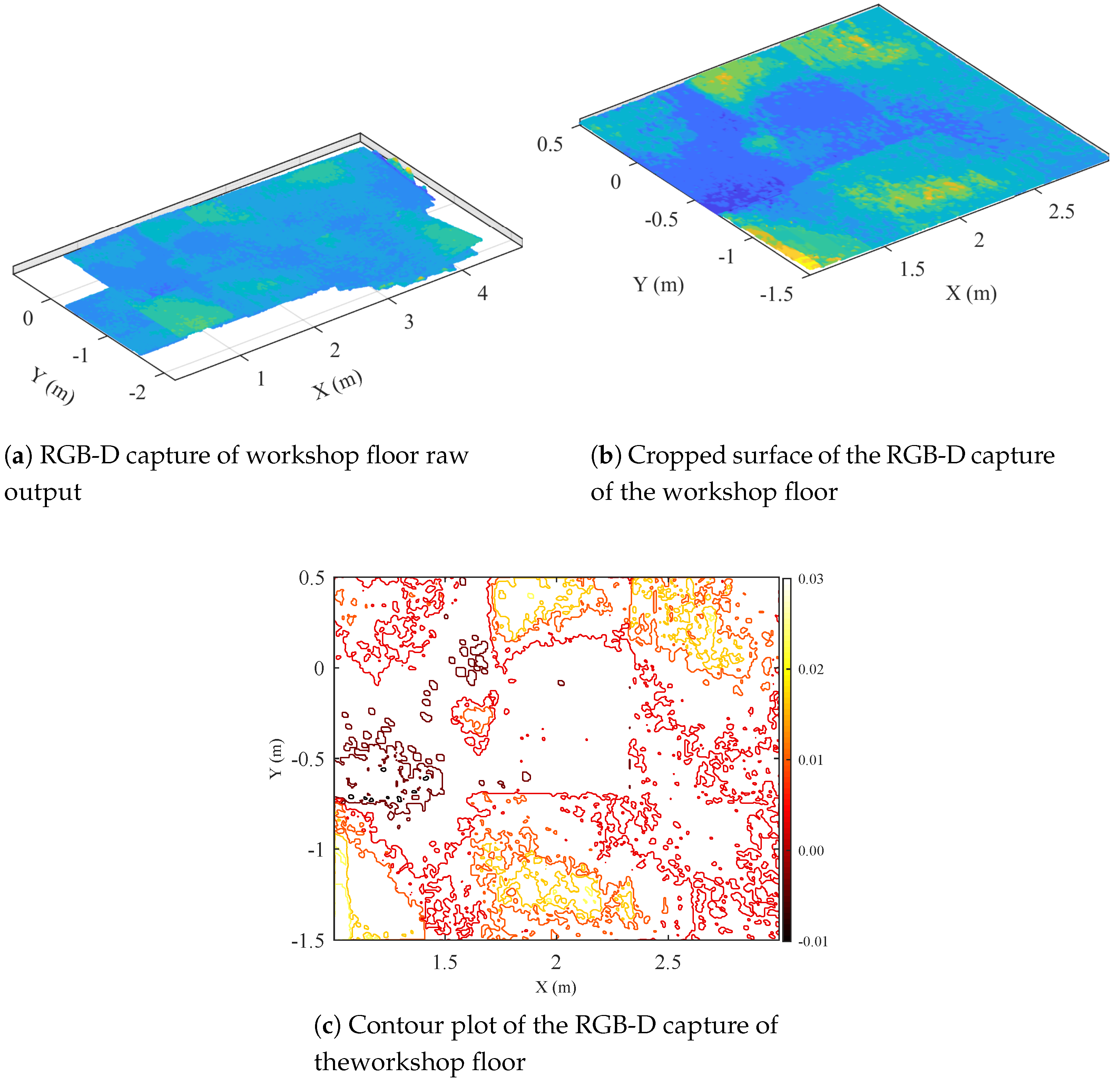

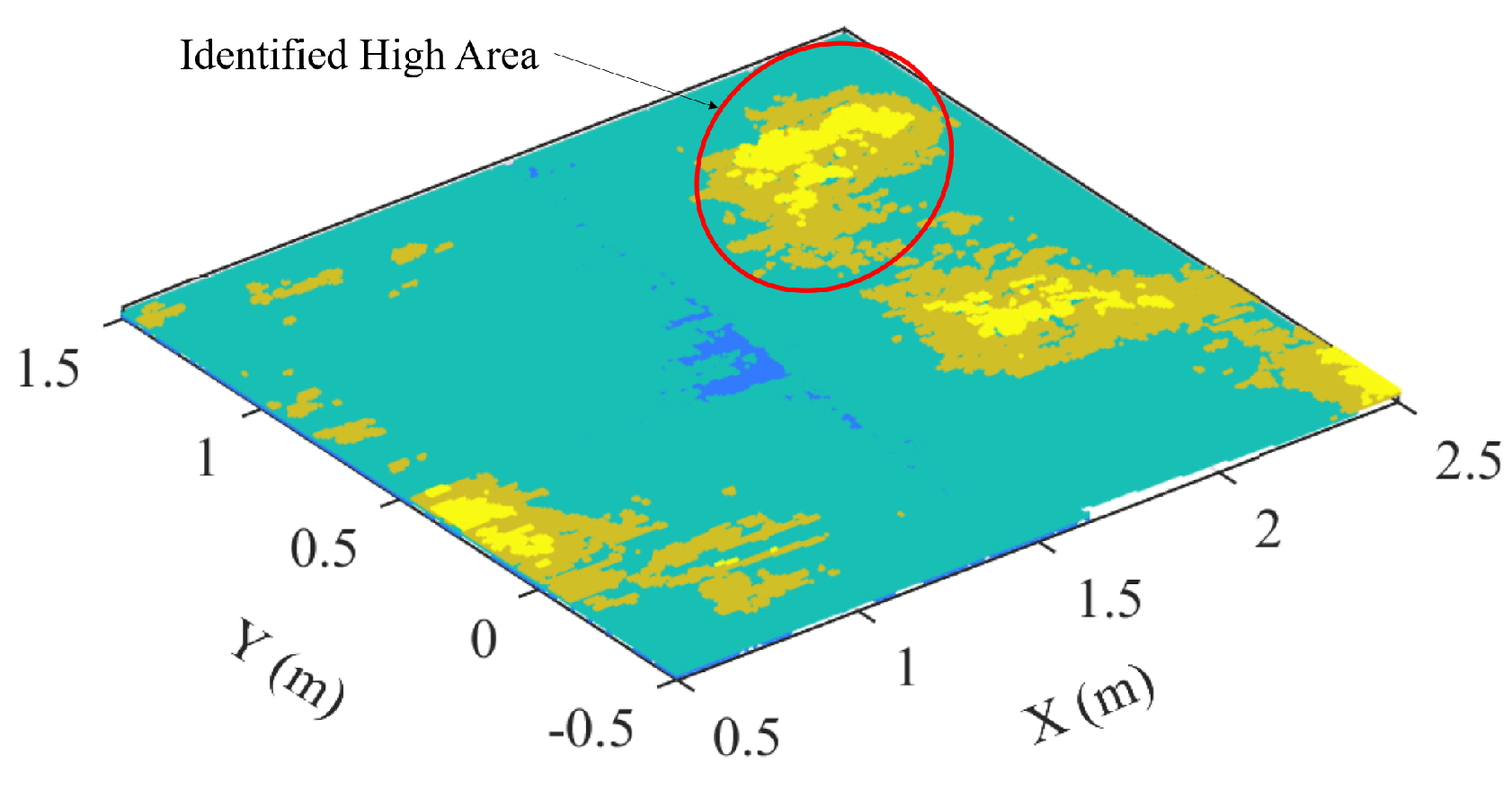

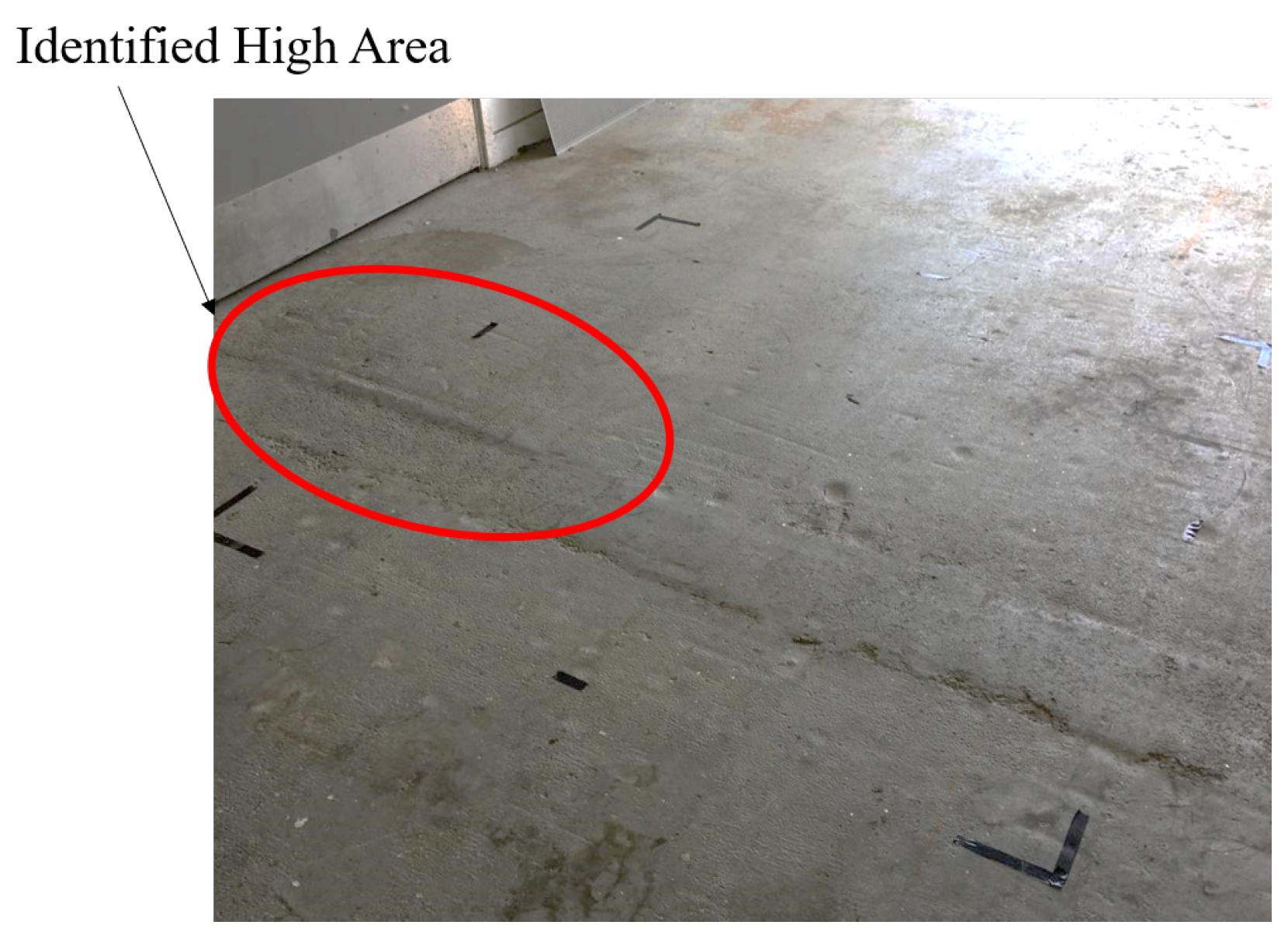

8.2.2. Capture of Workshop Floor

9. Discussion

9.1. Floor Surface Reflectivity

9.2. Sources of Error

9.3. Surface Thickness

9.4. System Improvements

9.5. Sensor Comparison

9.6. Floor Capture Capability

9.7. Surface Thickness

9.8. Sources of Error

9.9. Sensor Selection and Limitations

9.9.1. Material Reflection and Laser Scanner

9.9.2. Light Interference and RGB-D Sensor

9.10. Justification for Improvements

9.10.1. 2D Extrapolation Limitations

9.10.2. Localization

10. Conclusions

Supplementary Materials

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

| Sensor | Range | Accuracy | Resolution | Price |
|---|---|---|---|---|
| SICK LMS291 | 8 m or up to 80 m | ±35 mm and 50 mm | 0.25 degrees | US$6000 |
| Hokuyo URG | 20 mm to 5600 mm | ±30 mm | 0.36 degrees | US$1080 |
| Intel D435 | 10 m | not stated | 640 × 480 pixels | US$180 |
| NextEngine 3D | 200 mm | ±0.30 mm | 3.50 | US$2995 |

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Wilson, S.; Potgieter, J.; Arif, K.M. Robot-Assisted Floor Surface Profiling Using Low-Cost Sensors. Remote Sens. 2019, 11, 2626. https://doi.org/10.3390/rs11222626
