Comparing Human Versus Machine-Driven Cadastral Boundary Feature Extraction

Abstract

1. Introduction

1.1. Cadastral Intelligence

1.2. The Quest of Automation in Cadastral Mapping

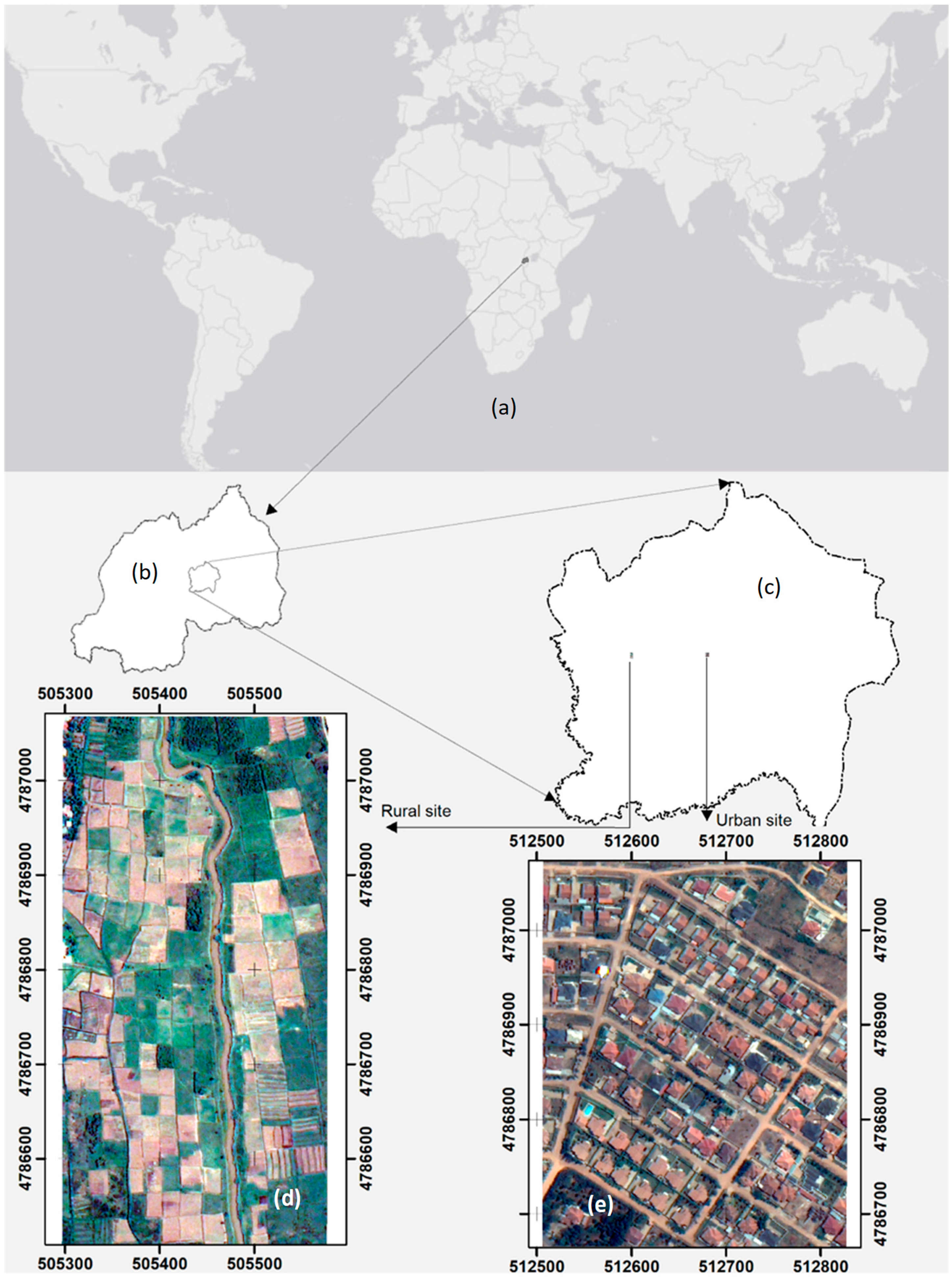

2. Materials and Methods

2.1. Pre-Processing

2.2. Parcels and Building Outline Extraction

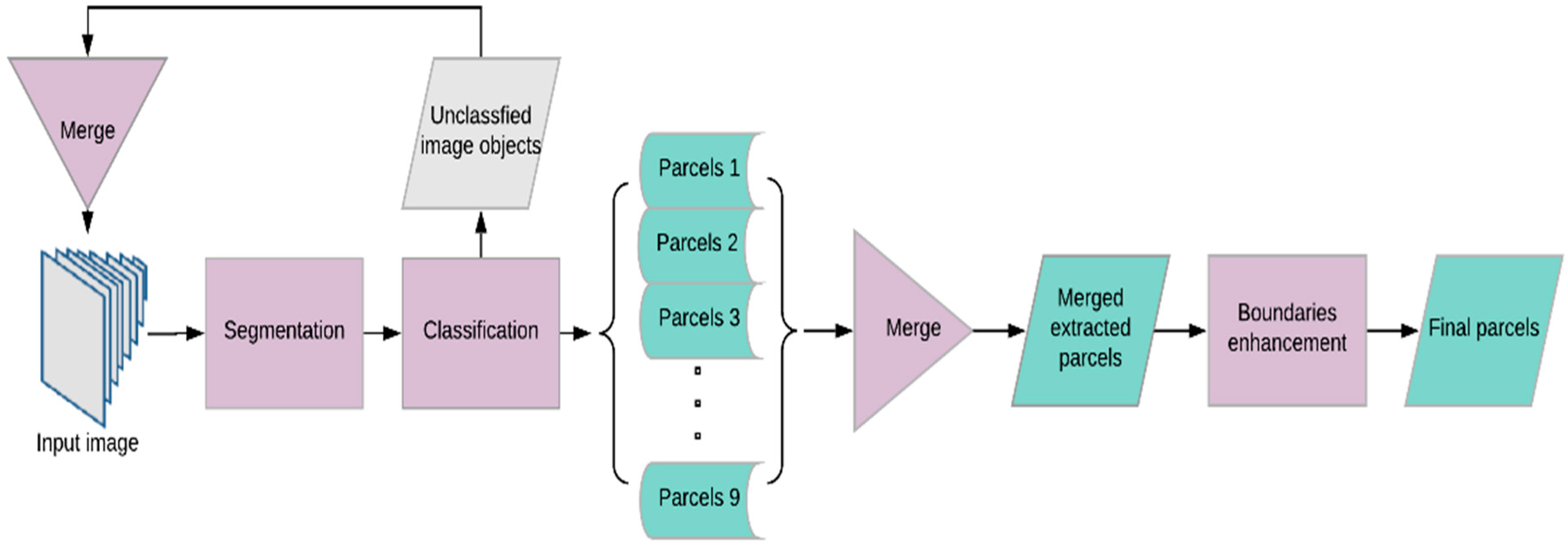

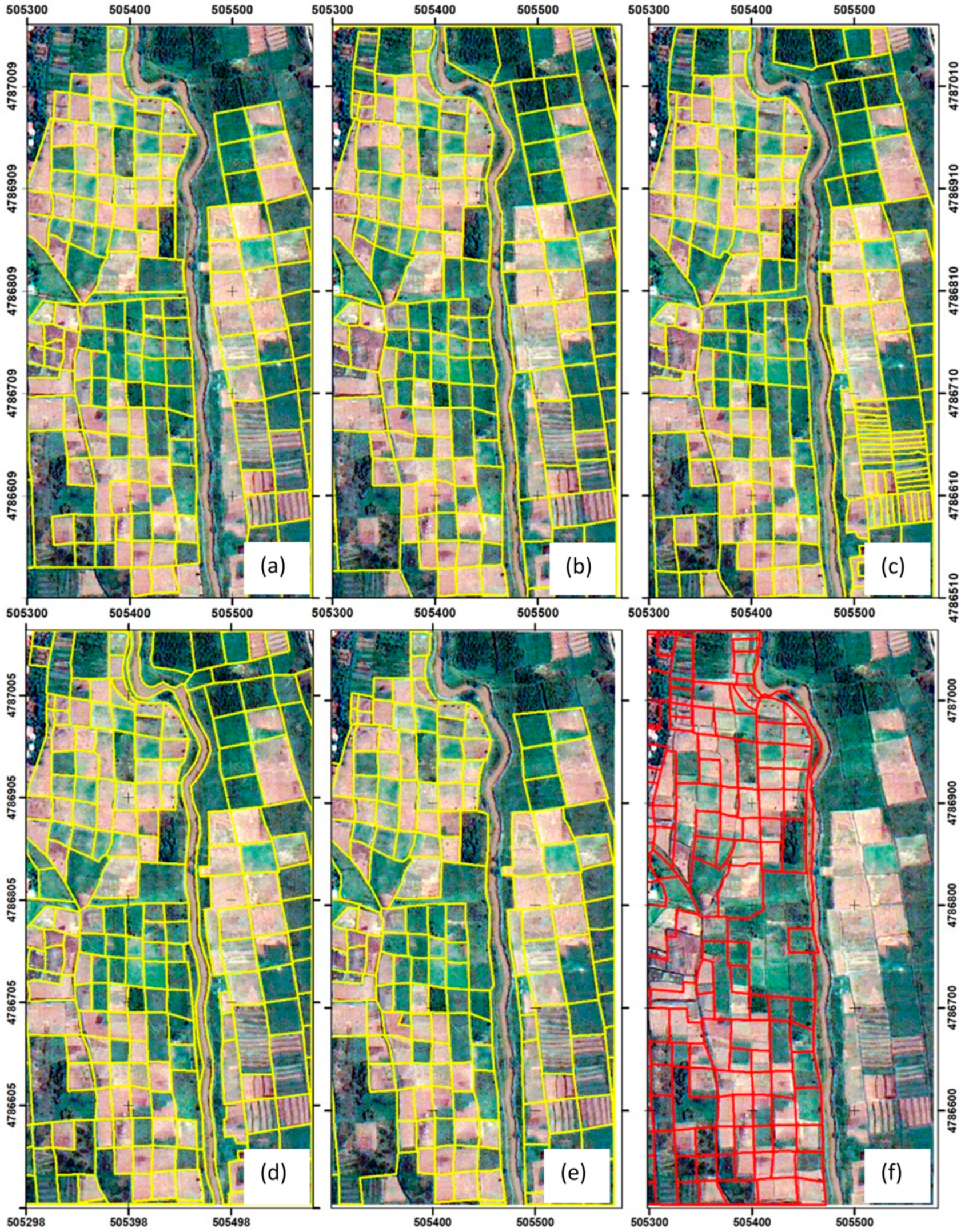

2.2.1. Automatic Process

Fully Automated Parameterisation

Expert Knowledge for Parameterisation

2.2.2. Manual Digitisation

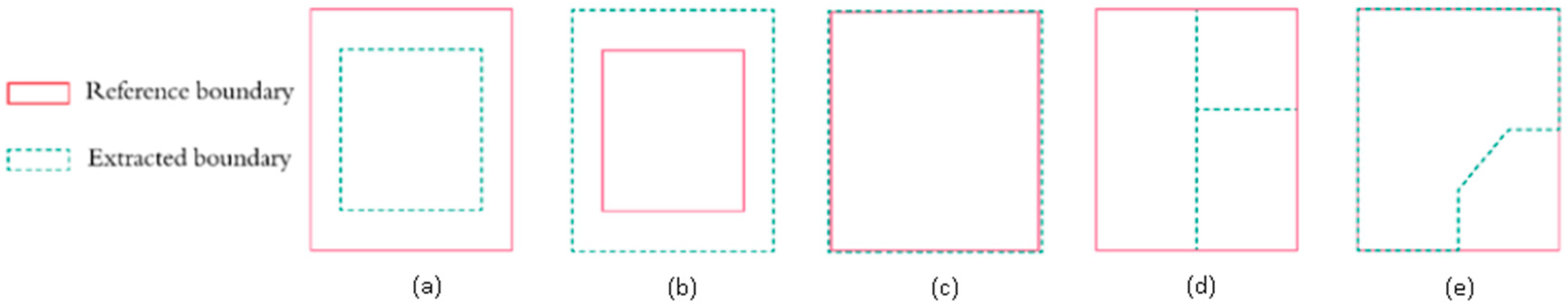

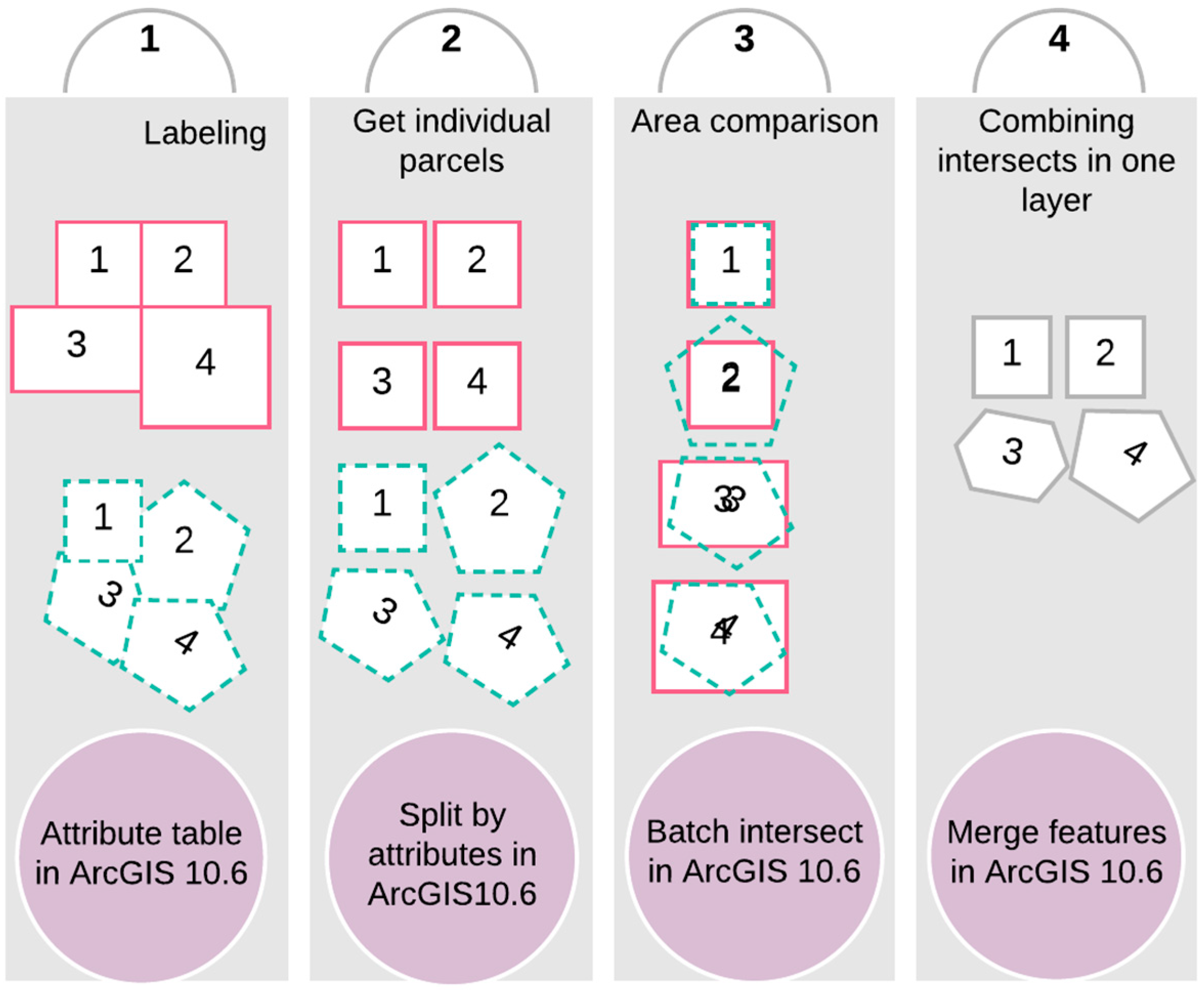

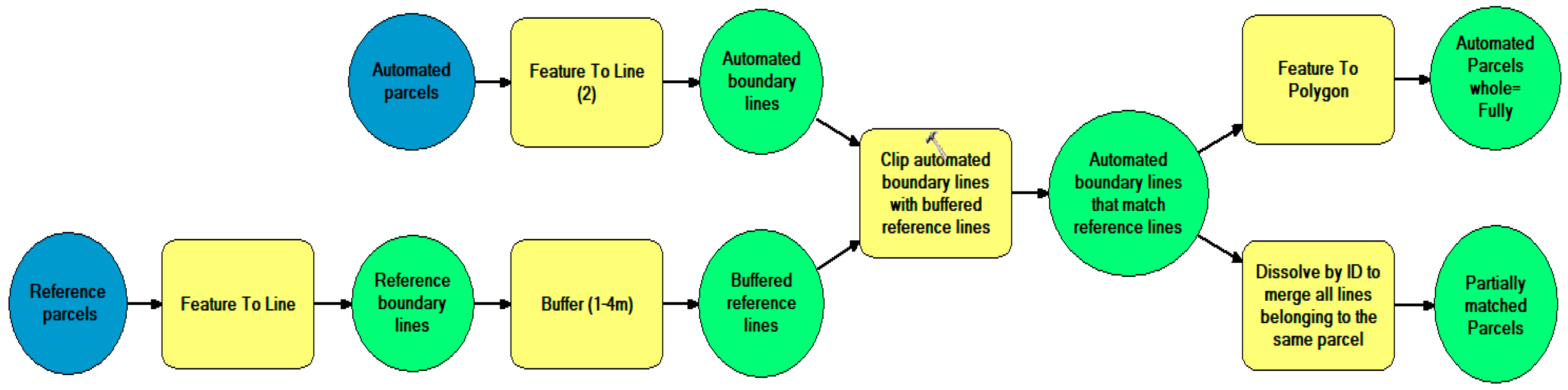

2.2.3. Geometric Comparison of Automation versus Humans

3. Experimental Results

3.1. Extraction of Parcel Boundaries in Rural Area

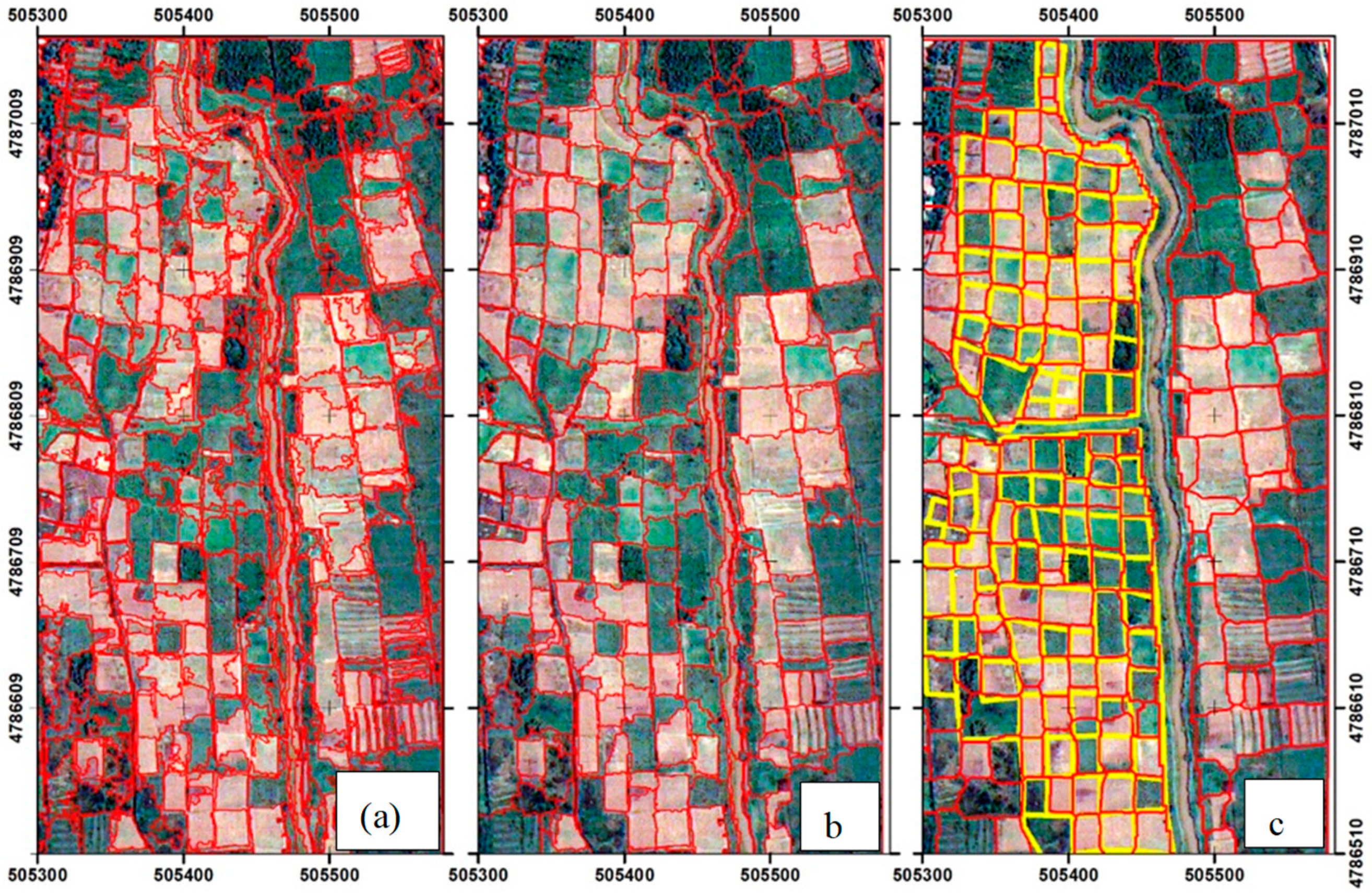

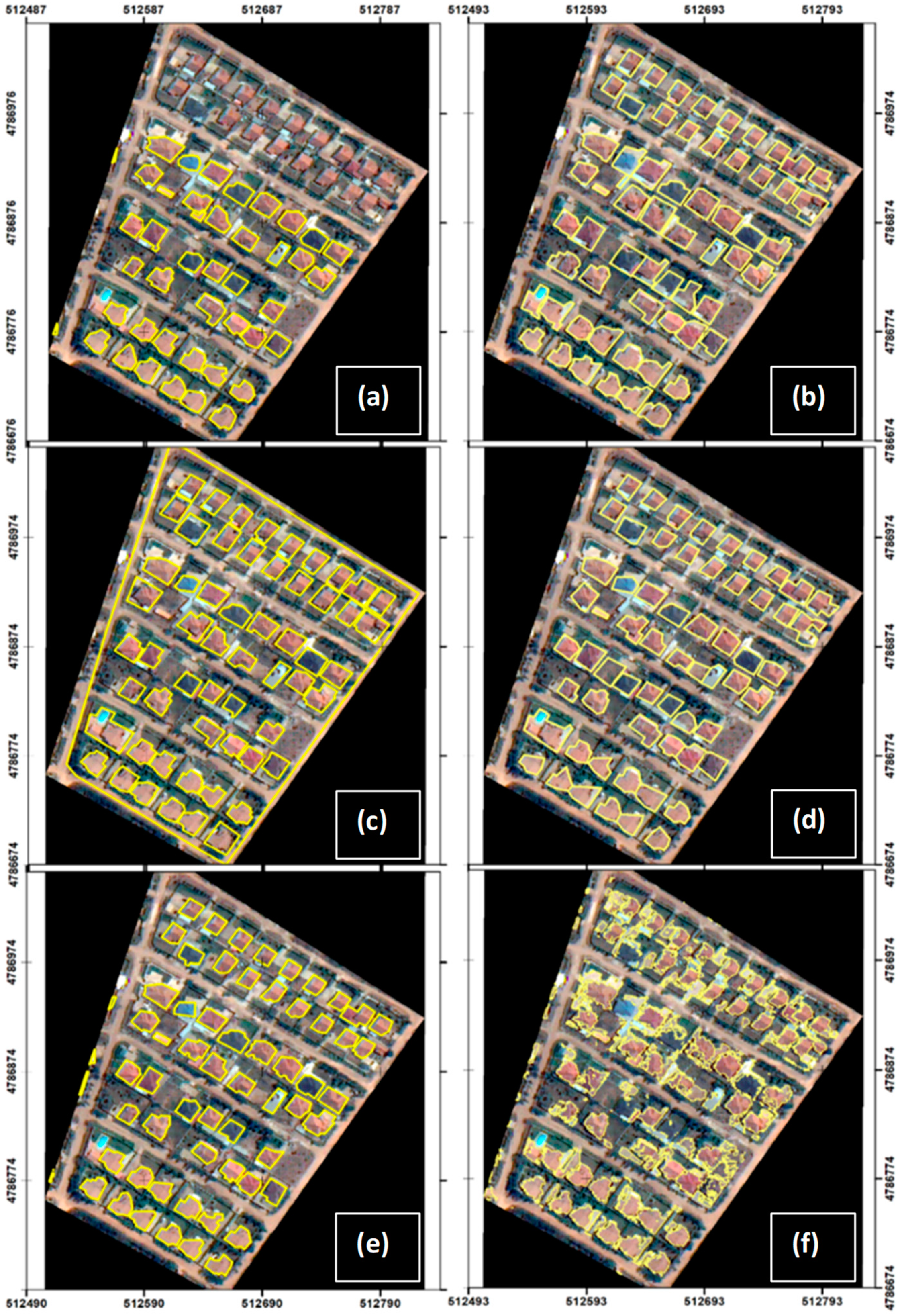

3.2. Extraction of Parcels in Urban Areas and Building Outlines

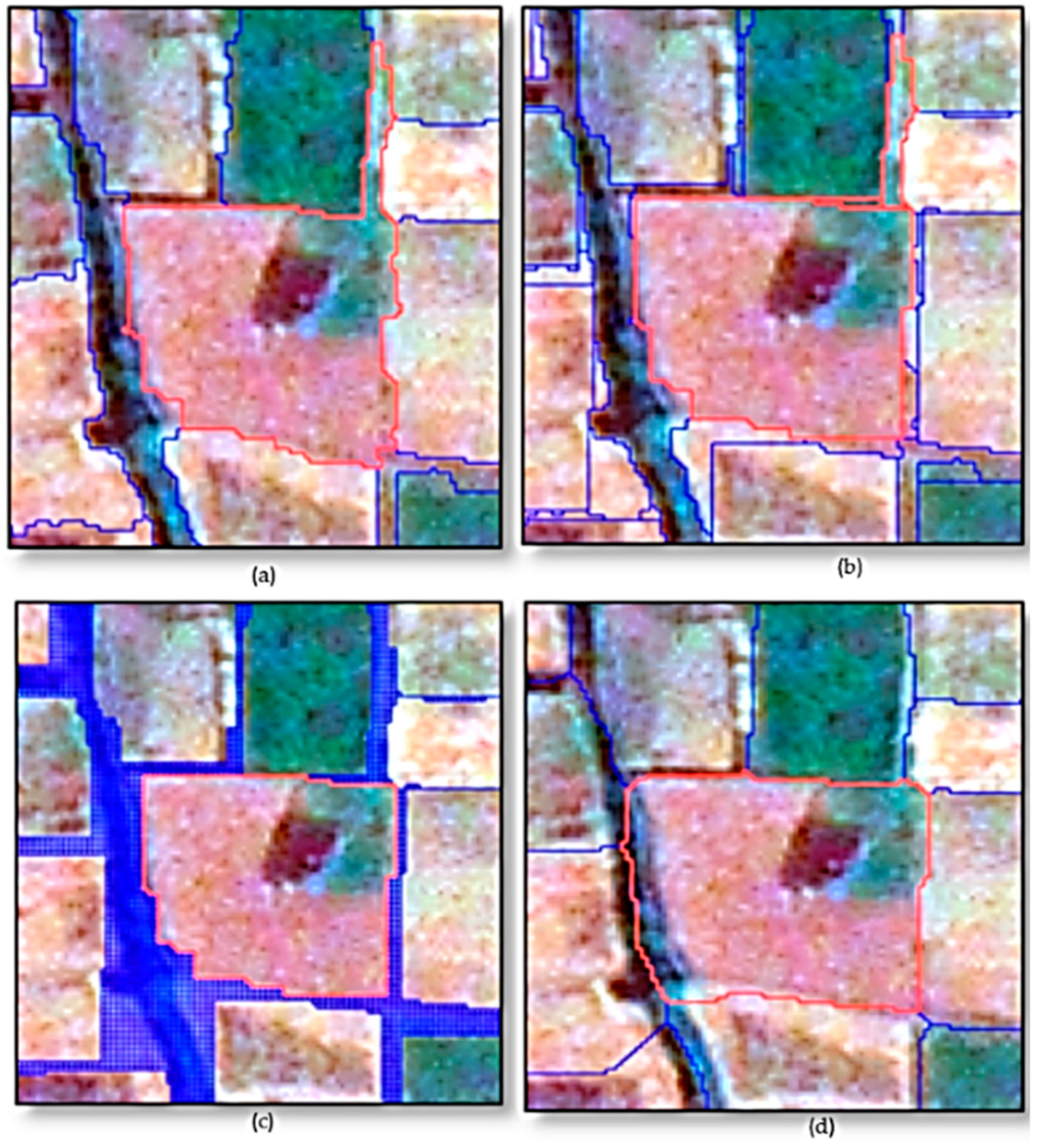

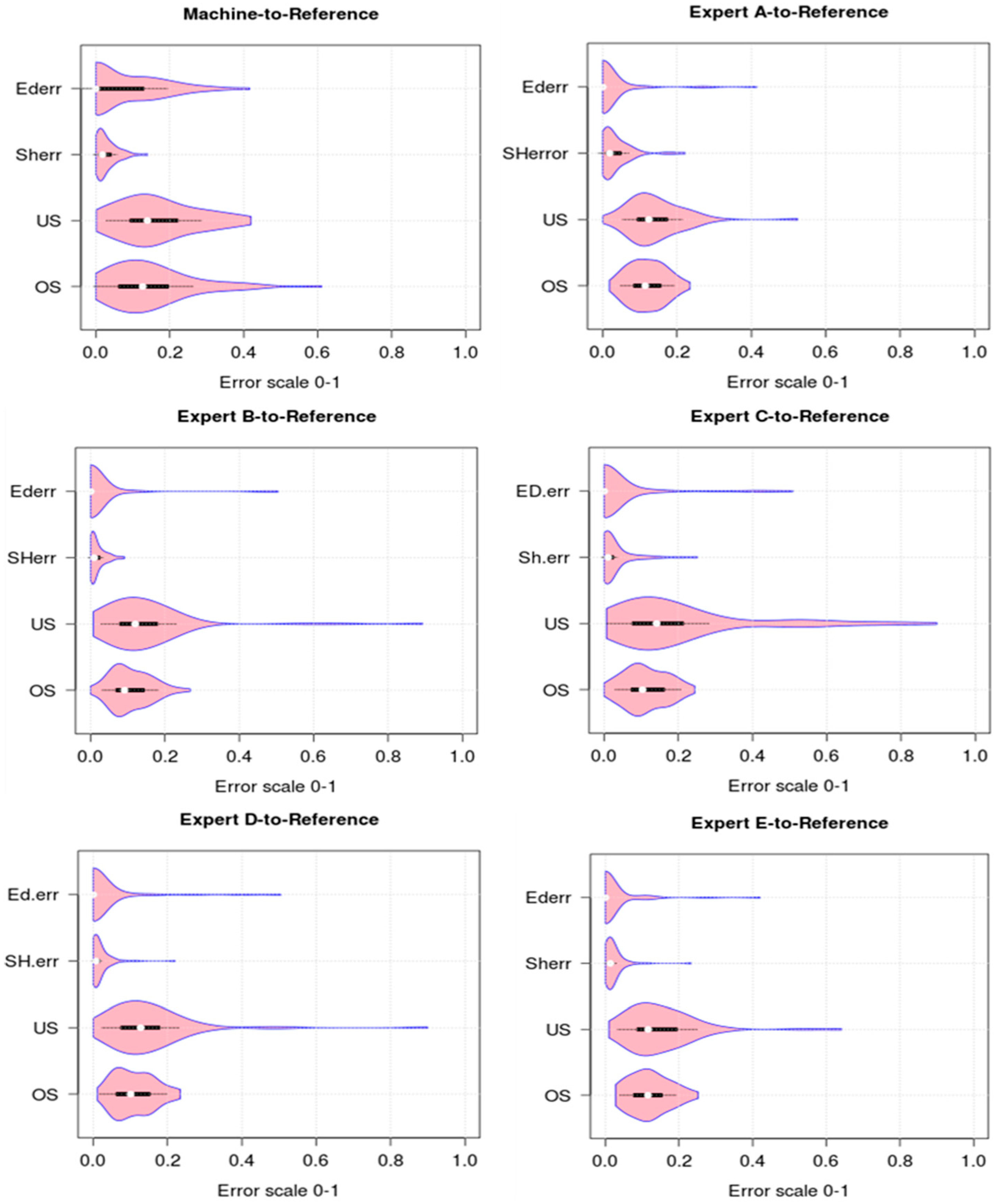

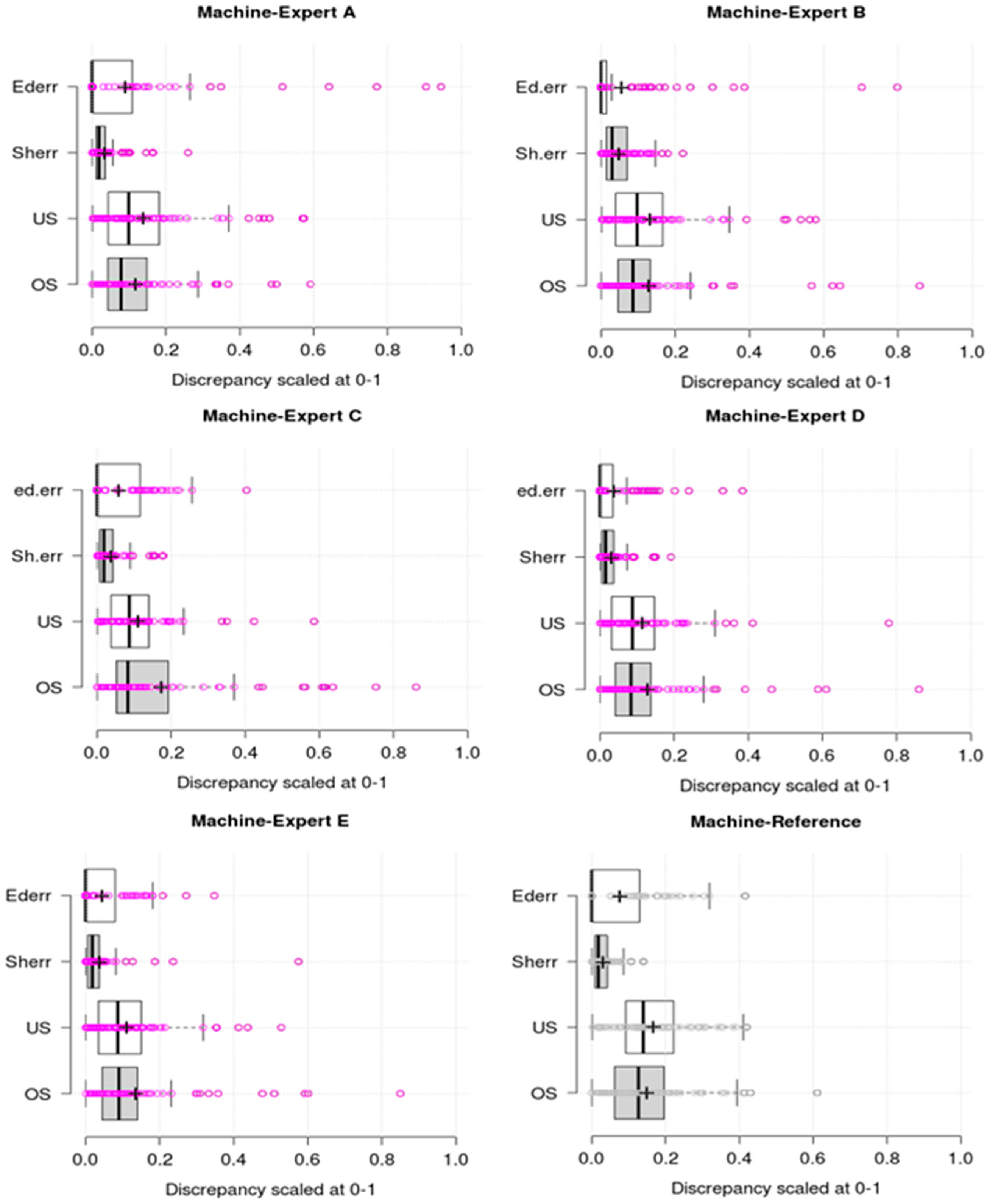

3.3. Geometric Comparison of Automated Against Manually Digitised Boundaries

4. Discussion

4.1. Manual Extraction Creates Quality Issues

4.2. Semi-Automated Is More Feasible Than Fully-Automated

4.3. Invisible Social Boundaries: A Challenge to Both Machines and Humans

4.3.1. Rural Areas Offer Promise, but Inconsistency Is Evident

4.3.2. Urban Areas Surprisingly More Challenging

4.4. Still Areas of Strength and Weakness for Both Humans and Machines

4.5. Corroboration with Previous Studies

4.6. Implications for Practice and Research

5. Conclusions and Recommendation

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

| Stage | Iteration | Operation | Parameters |
|---|---|---|---|
| **Rural** | | | |
| Removing non-parcel features | | Chessboard segmentation | Size = 5 pixels |
| | | Contextual information | Distance to river = 11 pixels; distance to drainage = 5 pixels |
| | | MRS segmentation | Scale = 10; shape = 0.1; compactness = 0.5 |
| | | GLCM entropy features | (Quick 8/11) R, (all directions) |
| | | MRS segmentation | Scale = 20; shape = 0.4; compactness = 0.8 |
| | | Classification | Ditches: elliptic fit = 0; asymmetry = 0.92 |
| Parcel extraction | Iteration 1 | MRS segmentation | Scale = 70; shape = 0.5; compactness = 0.9 |
| | | Classification | Parcels-1: shape index < 1.2 and rectangular fit ≥ 0.88 and area > 300 m² |
| | Iteration 2 | MRS segmentation | Scale = 70; shape = 0.6; compactness = 0.8 |
| | | Classification | Parcels-2: shape index < 1.3 and rectangular fit ≥ 0.9 and area ≥ 200 m² |
| | Iteration 3 | MRS segmentation | Scale = 35; shape = 0.4; compactness = 0.8 |
| | | Classification | Parcels-3: rectangular fit ≥ 0.93 |
| | | Classification | Parcels-4: shape index = 1.345 and rectangular fit ≥ 0.88 and area ≥ 425 m² |
| | Iteration 4 | MRS segmentation | Scale = 35; shape = 0.5; compactness = 0.8 |
| | | Classification | Parcels-5: shape index ≤ 1.35 and rectangular fit > 0.9 and area ≥ 400 m² |
| | Iteration 5 | MRS segmentation | Scale = 70; shape = 0.5; compactness = 0.9 |
| | | Classification | Parcels-6: shape index ≤ 1.4 and area ≥ 360 m² |
| | Iteration 6 | MRS segmentation | Scale = 60; shape = 0.5; compactness = 0.8 |
| | | Classification | Parcels-7: shape index ≤ 1.4 and rectangular fit > 0.9 and area > 400 m² |
| | Iteration 7 | MRS segmentation | Scale = 70; shape = 0.6; compactness = 0.9 |
| | | Classification | Parcels-8: shape index ≤ 1.4 and rectangular fit > 0.85 |
| | Iteration 8 | MRS segmentation | Scale = 90; shape = 0.5; compactness = 0.8 |
| | | Classification | Parcels-9: density ≥ 1.6 |
| Enhancement | | Opening operator | |
| | | Chessboard | Size = 1 × 1 pixel |
| | | Growing region | Loop: parcels `<unclassified>` = 0 |
| **Urban: buildings extraction** | | | |
| Removing road strips | | Chessboard segmentation | Size = 1 × 1 pixel |
| | | Contextual information | Distance to OSM road set = 7 m |
| Removing vegetation | | Classification | NDVI > 0.73; maximum difference < 2.05 |
| Buildings | | MRS segmentation | Scale = 70; shape = 0.8; compactness = 0.9 |
| | | Classification | Area > 150 m² |
| **Urban: fences/parcels extraction** | | | |
| | | Contrast segmentation/edge ratio splitting on blue band | Chessboard tile = 30; minimum threshold = 0; maximum threshold = 250; step size = 50 |
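The classification rows in the rule set above are threshold tests on per-object features. The following is a minimal Python sketch of two of them (the Parcels-1 rule and the NDVI vegetation mask), not the authors' eCognition rule set. It assumes that the shape index follows the usual border-length over 4·√area definition and that rectangular fit is already computed by the segmentation software. The `ImageObject` class and all function names are hypothetical, and the maximum-difference condition of the vegetation rule is left out.

```python
import math
from dataclasses import dataclass


@dataclass
class ImageObject:
    """Hypothetical container for features of one segmented image object."""
    area_m2: float           # object area in square metres
    border_length_m: float   # object perimeter in metres
    rectangular_fit: float   # 0..1, assumed precomputed by the segmentation tool


def shape_index(obj: ImageObject) -> float:
    """Border length divided by 4 * sqrt(area); equals 1.0 for a perfect square."""
    return obj.border_length_m / (4.0 * math.sqrt(obj.area_m2))


def is_parcels_1(obj: ImageObject) -> bool:
    """Parcels-1 thresholds from the rural rule set (iteration 1)."""
    return (
        shape_index(obj) < 1.2
        and obj.rectangular_fit >= 0.88
        and obj.area_m2 > 300.0
    )


def ndvi(nir: float, red: float) -> float:
    """Normalised difference vegetation index from NIR and red reflectance."""
    total = nir + red
    return 0.0 if total == 0 else (nir - red) / total


def is_vegetation(nir: float, red: float) -> bool:
    """NDVI part of the urban vegetation-removal rule (NDVI > 0.73)."""
    return ndvi(nir, red) > 0.73


# Usage: a 20 m x 20 m square field passes the Parcels-1 test.
square_field = ImageObject(area_m2=400.0, border_length_m=80.0, rectangular_fit=0.95)
print(is_parcels_1(square_field))
```

Expressing each rule as a small predicate keeps the thresholds from the table in one place, which makes it easier to vary them per iteration in the way the rule set does.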

| Expert ID | Qualification | Professional Body | Experience |
|---|---|---|---|
| A | Master in Geoinformation and Earth Observation | National cadastre | 8 years |
| B | Bachelor of Science in Geography | National cadastre | 8 years |
| C | Bachelor of Science in Geography | National cadastre | 8 years |
| D | Bachelor of Science in Land Surveying | Organisation of surveyor | 5 years |
| E | Bachelor of Science in Geography | National cadastre | 5 years |

| Metric | Machine | Expert A | Expert B | Expert C | Expert D | Expert E |
|---|---|---|---|---|---|---|
| OS | 0.15 | 0.12 | 0.13 | 0.11 | 0.11 | 0.12 |
| US | 0.17 | 0.14 | 0.13 | 0.20 | 0.15 | 0.15 |
| SH.err | 0.03 | 0.02 | 0.05 | 0.03 | 0.03 | 0.02 |
| ED.err (buffer = 4 m) | 0.07 | 0.02 | 0.06 | 0.03 | 0.03 | 0.02 |
| NSR | 0.063 | 0.049 | 0.069 | 0.108 | 0.020 | 0.059 |
| FP | 14.74% | 12.5% | 9.57% | 12.22% | 12.12% | 13.68% |
| FN | 14.85% | 15.84% | 16.83% | 25.74% | 13.86% | 19.80% |
| Correctness | 47.4% | 76% | 67% | 77.8% | 77.8% | 72.6% |
| Completeness | 45% | 73% | 63% | 70% | 77% | 69% |
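For orientation, the sketch below shows how measures with the names used in the table above are commonly computed in object-based accuracy assessment. It is an illustration under stated assumptions, not the authors' procedure. It assumes correctness = TP / (TP + FP), completeness = TP / (TP + FN), NSR = |reference count − extracted count| / reference count, and area-based over- and under-segmentation (OS, US) for one matched pair of reference and extracted polygons. The paper's own definitions (for example, buffer-based matching) may differ, so the table values will not necessarily reproduce from these formulas. All function names are hypothetical.

```python
def correctness(tp: int, fp: int) -> float:
    """Share of extracted boundaries that match the reference."""
    return tp / (tp + fp)


def completeness(tp: int, fn: int) -> float:
    """Share of reference boundaries that were extracted."""
    return tp / (tp + fn)


def number_of_segments_ratio(n_reference: int, n_extracted: int) -> float:
    """NSR: absolute difference in object counts, relative to the reference."""
    return abs(n_reference - n_extracted) / n_reference


def over_segmentation(reference_area: float, overlap_area: float) -> float:
    """OS: fraction of the reference polygon not covered by the matched object."""
    return 1.0 - overlap_area / reference_area


def under_segmentation(extracted_area: float, overlap_area: float) -> float:
    """US: fraction of the extracted object lying outside the reference polygon."""
    return 1.0 - overlap_area / extracted_area


# Usage with made-up counts and areas (not data from the study).
print(correctness(tp=80, fp=20))
print(completeness(tp=80, fn=10))
print(number_of_segments_ratio(n_reference=100, n_extracted=94))
print(over_segmentation(reference_area=500.0, overlap_area=450.0))
print(under_segmentation(extracted_area=600.0, overlap_area=450.0))
```

All five measures are ratios, so the same functions can be applied to the automated output and to each expert's digitisation against a shared reference.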

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Nyandwi, E.; Koeva, M.; Kohli, D.; Bennett, R. Comparing Human Versus Machine-Driven Cadastral Boundary Feature Extraction. Remote Sens. 2019, 11, 1662. https://doi.org/10.3390/rs11141662
