A Deep Convolutional Generative Adversarial Networks (DCGANs)-Based Semi-Supervised Method for Object Recognition in Synthetic Aperture Radar (SAR) Images

Abstract

1. Introduction

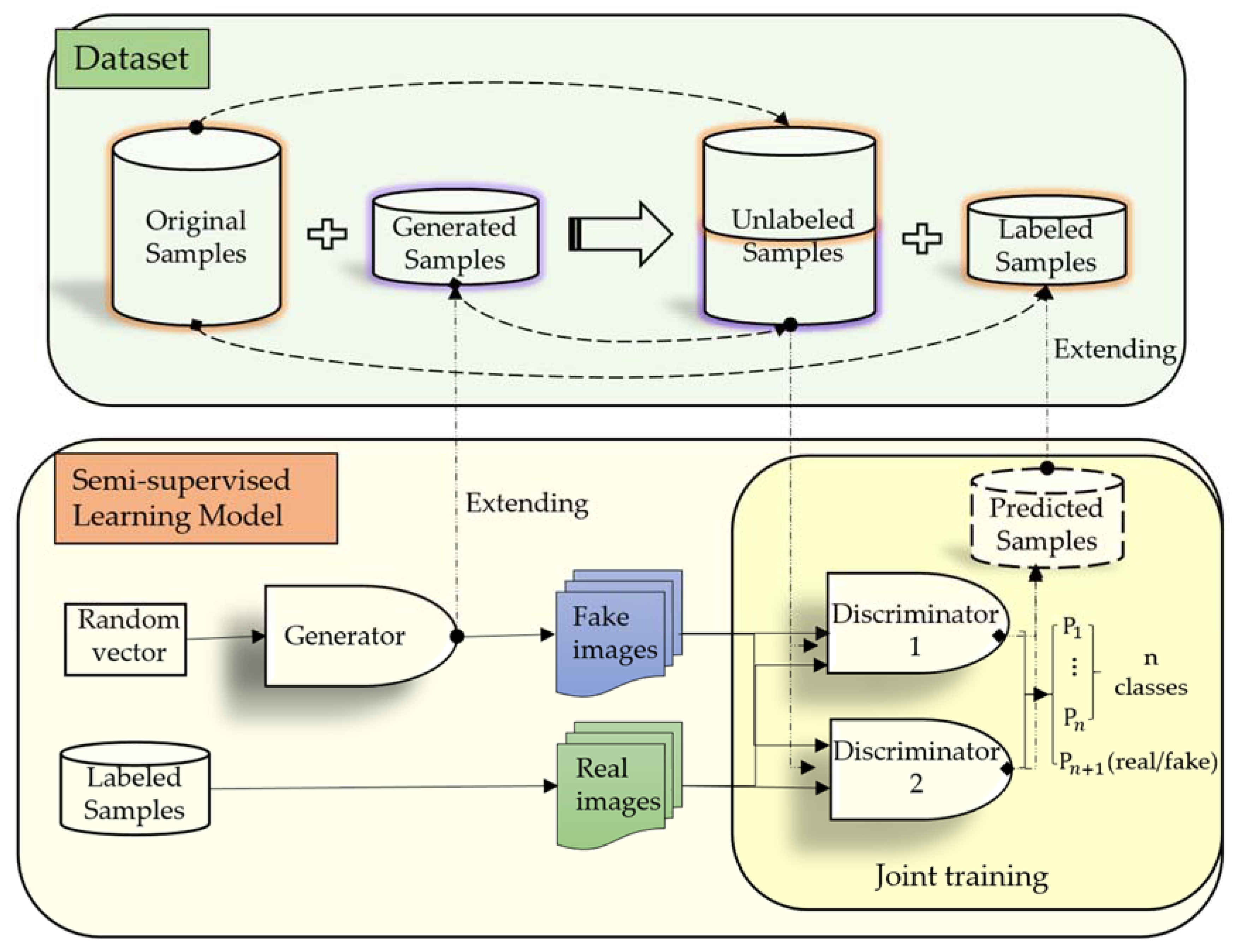

2. DCGANs-Based Semi-Supervised Learning

2.1. Framework

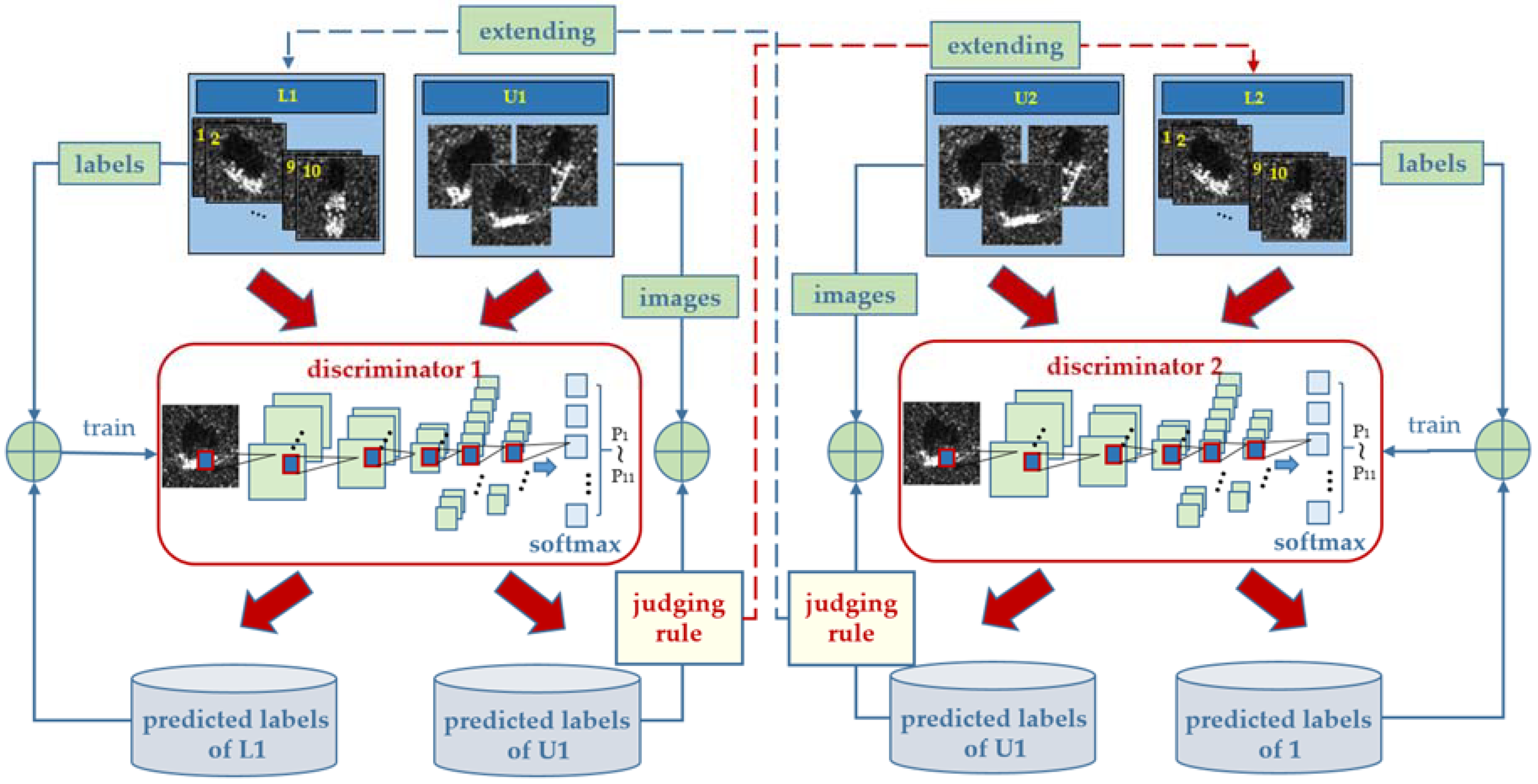

2.2. MO-DCGANs

2.3. Semi-Supervised Learning

2.3.1. Joint Training

- (1) utilize () to train ();

- (2) use () to predict the labels of the samples in; and,

- (3) () selects positive samples from the newly labeled samples according to certain criteria and adds them to () for the next round of training.
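The three steps above can be sketched in a few lines. This is a minimal illustration only, not the authors' implementation: it uses a single toy nearest-centroid classifier on 1-D data instead of the two DCGAN discriminators, and the selection criterion (a confidence threshold of 0.9) is an assumption, since the source only says "certain criteria". All function names here are hypothetical.

```python
import math
from typing import Dict, List, Tuple

Labeled = List[Tuple[float, int]]


def fit_centroids(labeled: Labeled) -> Dict[int, float]:
    """Step (1): train a toy classifier (one mean per class) on the labeled set."""
    sums: Dict[int, float] = {}
    counts: Dict[int, int] = {}
    for x, y in labeled:
        sums[y] = sums.get(y, 0.0) + x
        counts[y] = counts.get(y, 0) + 1
    return {y: sums[y] / counts[y] for y in sums}


def predict(centroids: Dict[int, float], x: float) -> Tuple[int, float]:
    """Step (2): return (predicted label, confidence) for one unlabeled sample."""
    dists = {y: abs(x - c) for y, c in centroids.items()}
    label = min(dists, key=dists.get)
    # Toy confidence: softmax over negative distances (an illustrative choice).
    weights = {y: math.exp(-d) for y, d in dists.items()}
    return label, weights[label] / sum(weights.values())


def self_training_round(
    labeled: Labeled, unlabeled: List[float], threshold: float = 0.9
) -> Tuple[Labeled, List[float]]:
    """One round: train, pseudo-label, then move confident samples to the labeled set."""
    centroids = fit_centroids(labeled)
    new_labeled, remaining = list(labeled), []
    for x in unlabeled:
        y, conf = predict(centroids, x)
        if conf >= threshold:  # Step (3): assumed selection criterion
            new_labeled.append((x, y))
        else:
            remaining.append(x)
    return new_labeled, remaining


labeled = [(0.0, 0), (0.2, 0), (5.0, 1), (5.2, 1)]
unlabeled = [0.1, 4.9, 2.6]
labeled, unlabeled = self_training_round(labeled, unlabeled)
print(len(labeled), unlabeled)
```

In this toy run the two samples near a class centroid are pseudo-labeled and added to the labeled set, while the ambiguous sample halfway between the classes stays unlabeled for a later round.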

2.3.2. Noisy Data Learning

| Algorithm 1. Semi-supervised learning based on multi-output DCGANs. |
|---|
| Inputs: Original labeled training sets and, original unlabeled training sets and, the prediction sample sets and, the discriminators D1 and D2, the error rates and, the update flags of the classifiers and. |
| Outputs: Two vectors of class probabilities and. |

3. Experiments and Discussions

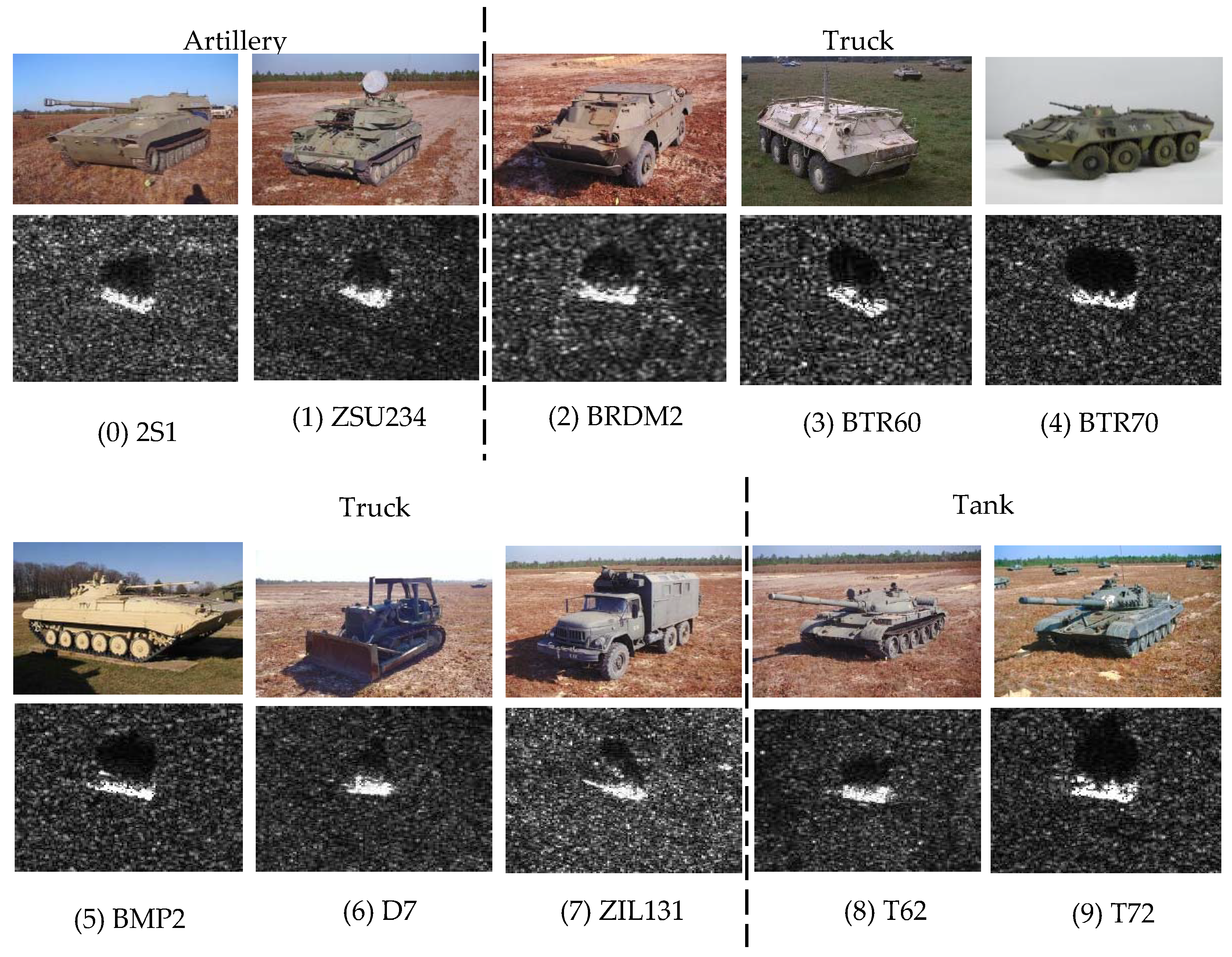

3.1. MSTAR Dataset

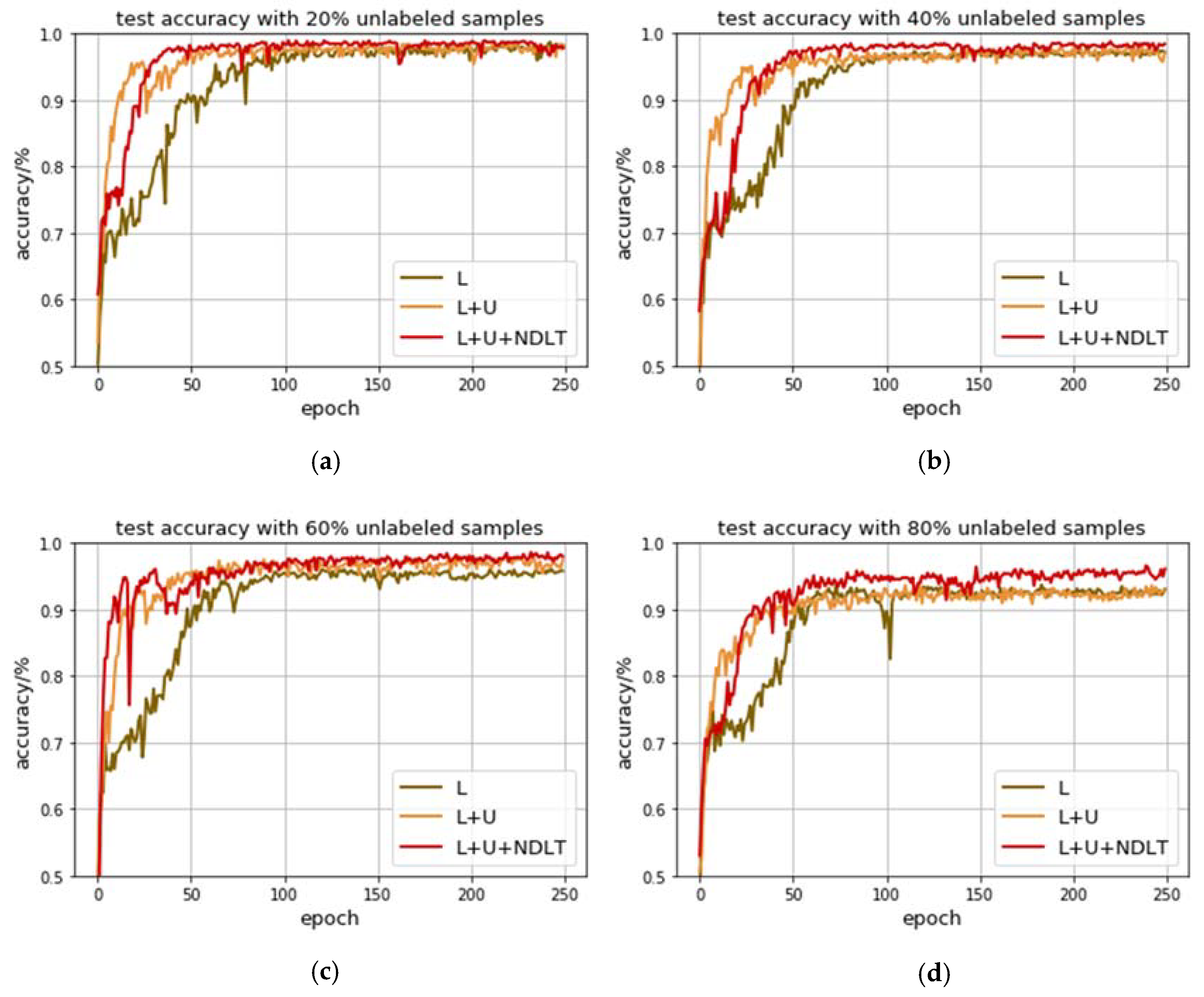

3.2. Experiments with Original Training Set under Different Unlabeled Rates

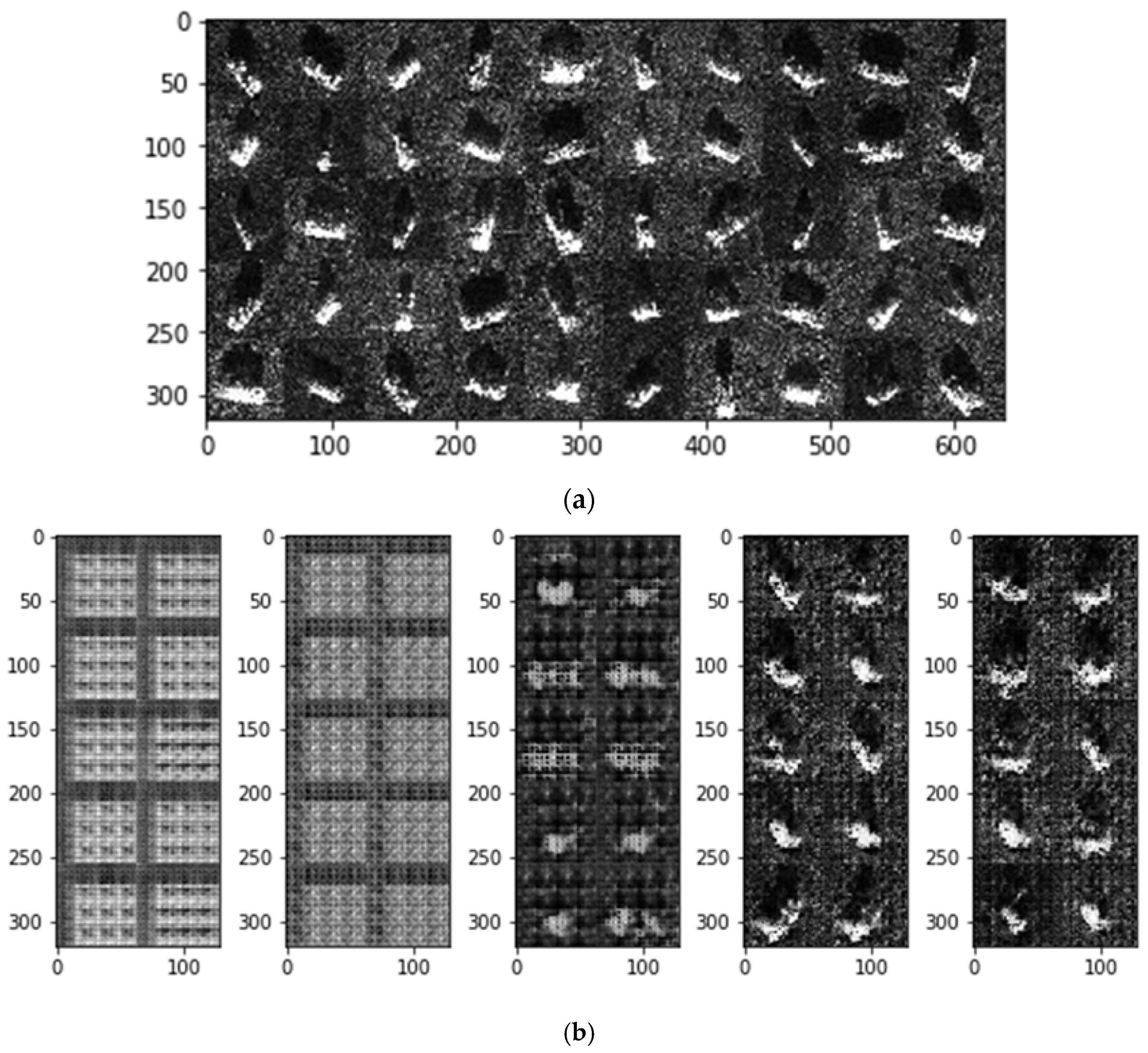

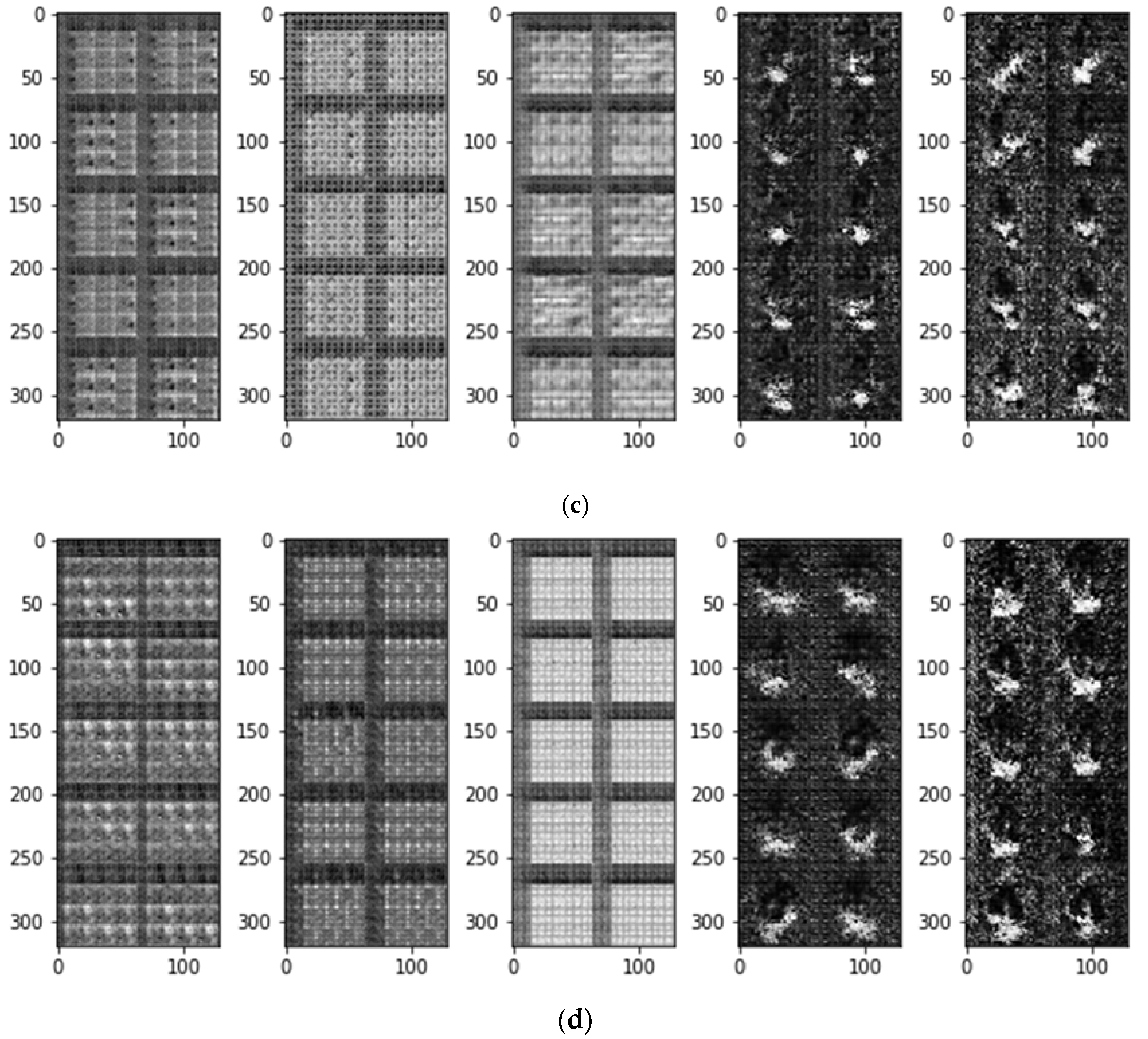

3.3. Quality Evaluation of Generated Samples

3.4. Experiments with Unlabeled Generated Samples under Different Unlabeled Rates

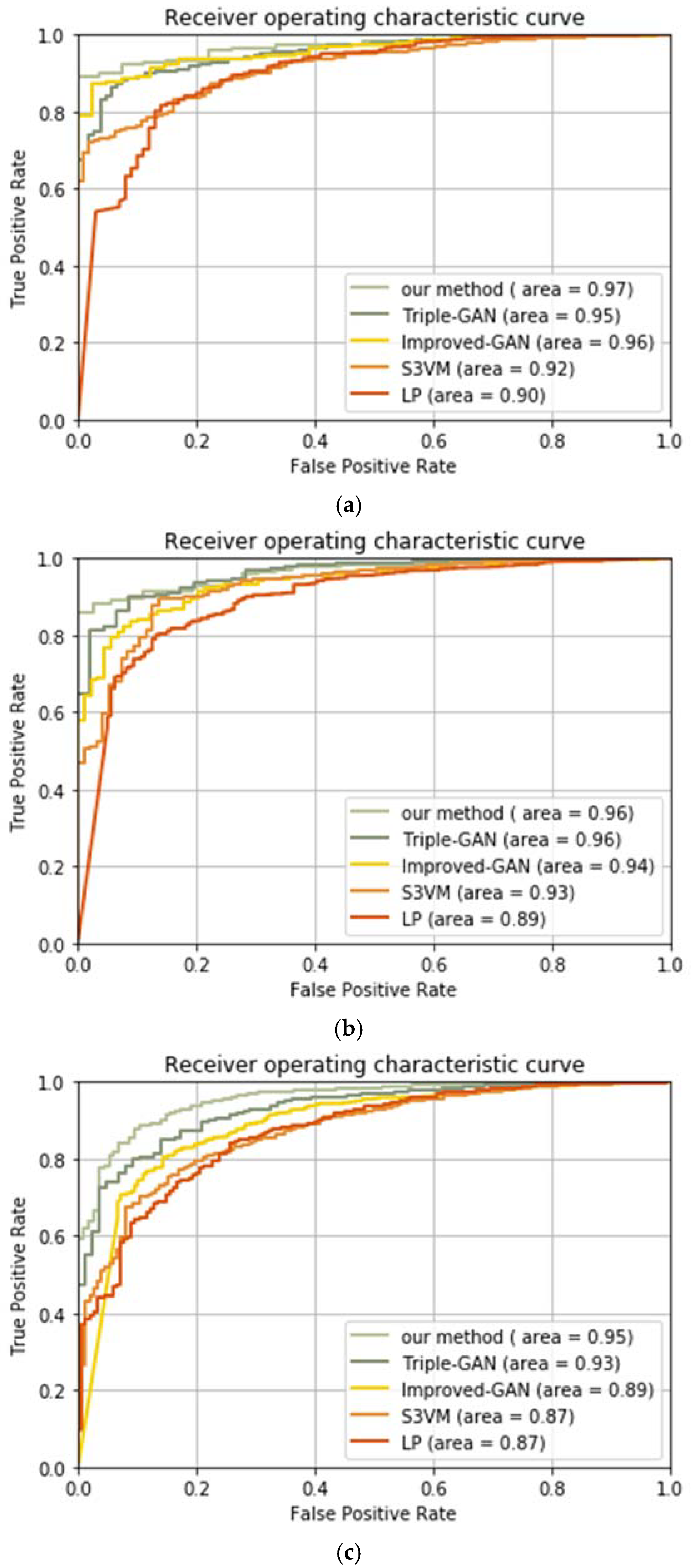

3.5. Comparison Experiment with Other Methods

4. Discussion

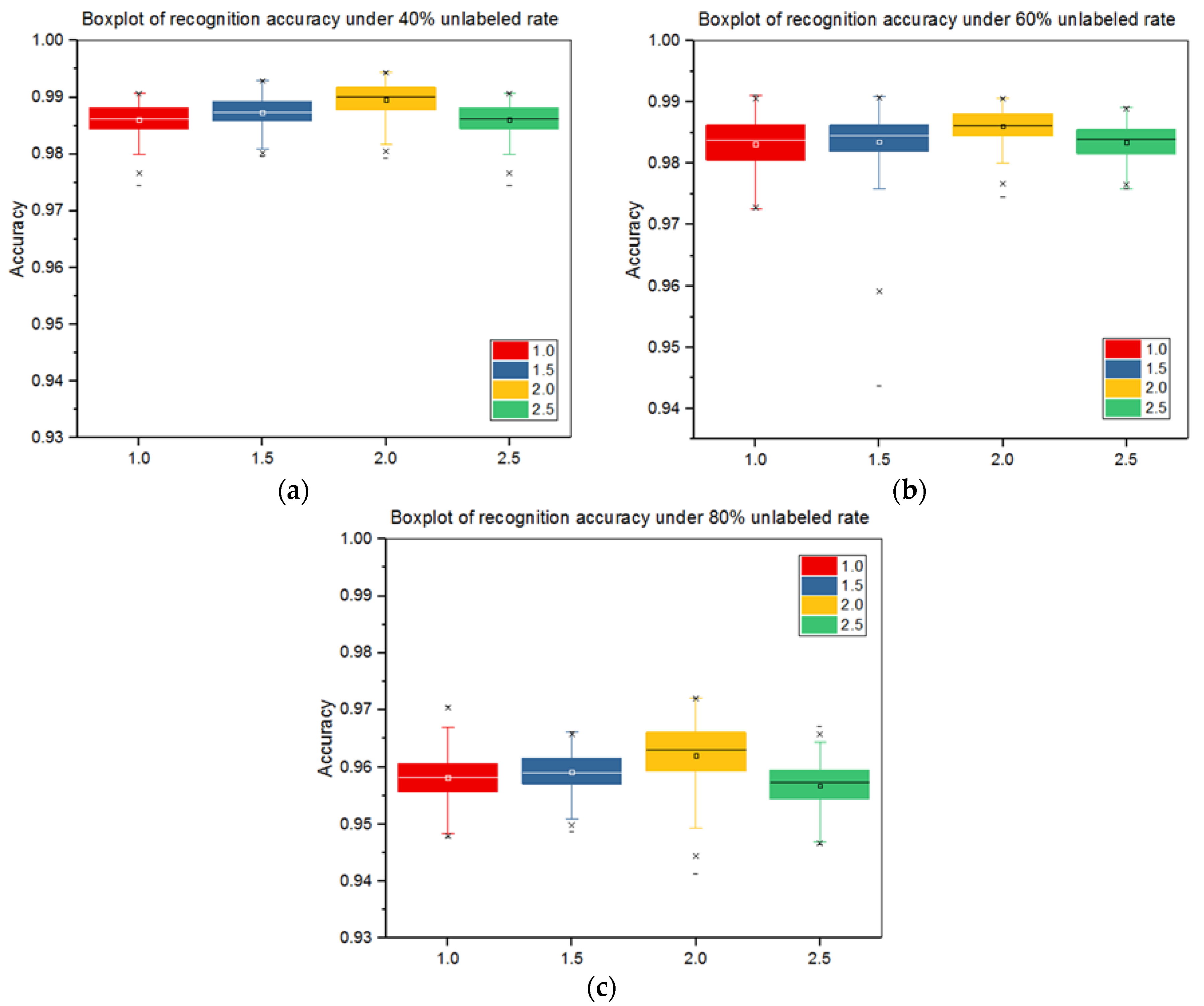

4.1. Choice of Parameter

4.2. Performance Evaluation

4.2.1. ROC Curve

4.2.2. Training Time

5. Conclusions

- Introducing noisy data learning theory into our method reduces the adverse effect of wrongly labeled samples on the network and significantly improves recognition accuracy.

- Our method achieves high recognition accuracy on the MSTAR dataset and performs especially well when there are few labeled samples and many unlabeled samples. When the unlabeled rate increases from 20% to 80%, the overall accuracy improvement rises from about 0 to 5%, and the overall recognition accuracy remains above 95%.

- The experimental results confirm that when the number of labeled samples is small, our model performs better after using the high-quality generated images for network training. The fewer the labeled samples, the greater the accuracy improvement. However, when there are fewer than 500 labeled samples, too few high-quality samples are generated for the system to work.

Author Contributions

Funding

Conflicts of Interest


| Tops | Class | Serial No. | Size (Pixels) | Training Set: Depression | Training Set: No. Images | Testing Set: Depression | Testing Set: No. Images |
|---|---|---|---|---|---|---|---|
| Artillery | 2S1 | B_01 | | | 299 | | 274 |
| | ZSU234 | D_08 | | | 299 | | 274 |
| Truck | BRDM2 | E_71 | | | 298 | | 274 |
| | BTR60 | K10YT_7532 | | | 256 | | 195 |
| | BMP2 | SN_9563 | | | 233 | | 195 |
| | BTR70 | C_71 | | | 233 | | 196 |
| | D7 | 92V_13015 | | | 299 | | 274 |
| | ZIL131 | E_12 | | | 299 | | 274 |
| Tank | T62 | A_51 | | | 299 | | 273 |
| | T72 | #A64 | | | 232 | | 196 |
| Sum | - | - | - | - | 2747 | - | 2425 |

| Unlabeled Rate | L | U | Total |
|---|---|---|---|
| 20% | 2197 | 550 | 2747 |
| 40% | 1648 | 1099 | 2747 |
| 60% | 1099 | 1648 | 2747 |
| 80% | 550 | 2197 | 2747 |

| Objects | 20%, L: SRA | 20%, L+U: SSRA | 20%, L+U: imp | 20%, L+U+NDLT: SSRA | 20%, L+U+NDLT: imp | 40%, L: SRA | 40%, L+U: SSRA | 40%, L+U: imp | 40%, L+U+NDLT: SSRA | 40%, L+U+NDLT: imp |
|---|---|---|---|---|---|---|---|---|---|---|
| 2S1 | 99.74 | 99.76 | 0.02 | 99.56 | −0.18 | 99.71 | 99.77 | 0.07 | 99.75 | 0.04 |
| BMP2 | 97.75 | 96.62 | −1.16 | 98.07 | 0.33 | 97.59 | 96.65 | −0.97 | 98.36 | 0.79 |
| BRDM2 | 96.32 | 96.04 | −0.29 | 97.13 | 0.84 | 94.94 | 93.04 | −2.00 | 98.61 | 3.87 |
| BTR60 | 99.07 | 98.88 | −0.19 | 98.88 | −0.19 | 98.58 | 98.70 | 0.13 | 99.02 | 0.45 |
| BTR70 | 96.31 | 96.45 | 0.13 | 96.40 | 0.08 | 94.28 | 95.27 | 1.05 | 97.05 | 2.93 |
| D7 | 99.28 | 98.15 | −1.14 | 99.38 | 0.10 | 98.88 | 98.48 | −0.40 | 99.68 | 0.81 |
| T62 | 98.90 | 99.46 | 0.57 | 98.79 | −0.11 | 98.93 | 99.27 | 0.34 | 99.11 | 0.17 |
| T72 | 98.53 | 99.06 | 0.54 | 98.93 | 0.41 | 97.95 | 98.40 | 0.45 | 99.28 | 1.35 |
| ZIL131 | 98.86 | 97.10 | −1.78 | 98.29 | −0.57 | 97.62 | 97.20 | −0.43 | 98.84 | 1.25 |
| ZSU234 | 99.15 | 98.92 | −0.23 | 99.45 | 0.30 | 98.60 | 99.49 | 0.90 | 99.70 | 1.11 |
| Average | 98.39 | 98.04 | −0.35 | 98.49 | 0.10 | 97.71 | 97.63 | −0.08 | 98.94 | 1.26 |

| Objects | 60%, L: SRA | 60%, L+U: SSRA | 60%, L+U: imp | 60%, L+U+NDLT: SSRA | 60%, L+U+NDLT: imp | 80%, L: SRA | 80%, L+U: SSRA | 80%, L+U: imp | 80%, L+U+NDLT: SSRA | 80%, L+U+NDLT: imp |
|---|---|---|---|---|---|---|---|---|---|---|
| 2S1 | 99.36 | 99.69 | 0.33 | 99.83 | 0.47 | 99.23 | 99.82 | 0.59 | 99.85 | 0.62 |
| BMP2 | 95.80 | 96.18 | 0.40 | 97.58 | 1.85 | 92.48 | 95.64 | 3.42 | 97.80 | 5.75 |
| BRDM2 | 89.01 | 92.54 | 3.97 | 98.40 | 10.55 | 75.02 | 77.26 | 2.98 | 83.09 | 10.76 |
| BTR60 | 98.67 | 98.89 | 0.21 | 99.20 | 0.54 | 95.48 | 98.02 | 2.66 | 98.94 | 3.62 |
| BTR70 | 91.27 | 87.91 | −3.69 | 94.57 | 3.61 | 84.94 | 87.51 | 3.02 | 96.78 | 13.94 |
| D7 | 97.57 | 99.27 | 1.74 | 99.78 | 0.26 | 90.85 | 90.18 | −0.74 | 98.83 | 8.79 |
| T62 | 98.60 | 99.07 | 0.48 | 99.20 | 0.61 | 98.16 | 99.08 | 0.93 | 99.07 | 0.93 |
| T72 | 95.88 | 98.84 | 3.08 | 99.27 | 3.53 | 91.76 | 94.79 | 3.30 | 98.95 | 7.84 |
| ZIL131 | 92.75 | 96.94 | 4.52 | 97.96 | 5.62 | 86.93 | 82.41 | −5.21 | 83.78 | −3.63 |
| ZSU234 | 98.63 | 99.64 | 1.03 | 99.67 | 1.06 | 97.23 | 99.72 | 2.55 | 99.69 | 2.53 |
| Average | 95.75 | 96.90 | 1.19 | 98.55 | 2.92 | 91.21 | 92.44 | 1.35 | 95.68 | 4.90 |
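The surviving text does not define the imp columns in the accuracy tables above. The values appear consistent with a relative improvement of SSRA over SRA, expressed in percent; this reading is an inference from the numbers, not a definition taken from the source. Under that assumption, a few entries can be re-derived (the function name is hypothetical):

```python
def relative_improvement(sra: float, ssra: float) -> float:
    """Relative change of SSRA over SRA in percent, rounded to two decimals.

    Assumed formula: imp = (SSRA - SRA) / SRA * 100.
    """
    return round((ssra - sra) / sra * 100.0, 2)


# BMP2, 20% unlabeled rate, L+U (table lists -1.16)
print(relative_improvement(97.75, 96.62))
# BRDM2, 60% unlabeled rate, L+U+NDLT (table lists 10.55)
print(relative_improvement(89.01, 98.40))
# Average, 80% unlabeled rate, L+U+NDLT (table lists 4.90)
print(relative_improvement(91.21, 95.68))
```

Not every entry reproduces exactly under this reading (for example D7 at 60%, L+U+NDLT), so the formula should be treated as a plausible interpretation rather than the authors' stated definition.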

| Epoch | 1099 labeled samples | 824 labeled samples | 550 labeled samples |
|---|---|---|---|
| 50 | 0 | 0 | 0 |
| 150 | 0 | 0 | 0 |
| 250 | 23 | 0 | 0 |
| 350 | 945 | 44 | 76 |
| 450 | 969 | 874 | 551 |

| Objects | 1099 original labeled samples: SRA | 1099: SSRA | 1099: imp | 824 original labeled samples: SRA | 824: SSRA | 824: imp | 550 original labeled samples: SRA | 550: SSRA | 550: imp |
|---|---|---|---|---|---|---|---|---|---|
| 2S1 | 99.54 | 99.94 | 0.19 | 99.31 | 99.71 | 0.40 | 99.06 | 99.82 | 0.76 |
| BMP2 | 94.60 | 95.14 | 0.58 | 95.36 | 96.78 | 1.49 | 92.57 | 90.35 | −2.39 |
| BRDM2 | 93.07 | 88.67 | −4.72 | 87.67 | 91.17 | 4.00 | 74.66 | 85.51 | 14.52 |
| BTR60 | 99.11 | 98.57 | −0.55 | 98.19 | 98.11 | −0.07 | 95.95 | 97.16 | 1.26 |
| BTR70 | 91.95 | 95.84 | 4.23 | 88.57 | 91.25 | 3.02 | 85.48 | 86.67 | 1.39 |
| D7 | 99.18 | 99.78 | 0.60 | 96.36 | 98.72 | 2.45 | 91.68 | 96.48 | 5.23 |
| T62 | 98.53 | 99.00 | 0.48 | 99.14 | 99.21 | 0.07 | 98.64 | 98.26 | −0.39 |
| T72 | 97.61 | 98.28 | 0.69 | 95.73 | 95.51 | −0.23 | 90.67 | 94.33 | 4.05 |
| ZIL131 | 95.43 | 94.04 | −1.46 | 91.23 | 93.68 | 2.68 | 87.62 | 85.21 | −2.74 |
| ZSU234 | 98.71 | 98.53 | −0.18 | 98.65 | 98.65 | 0.00 | 97.25 | 98.21 | 0.99 |
| Average | 96.77 | 96.76 | 0.00 | 95.02 | 96.28 | 1.33 | 91.36 | 93.20 | 2.01 |

| Method | 20% unlabeled rate | 40% unlabeled rate | 60% unlabeled rate | 80% unlabeled rate |
|---|---|---|---|---|
| LP | 96.05 | 95.97 | 94.11 | 92.04 |
| PVM-D | 96.11 | 96.02 | 95.67 | 95.01 |
| Triple-GAN | 96.46 | 96.13 | 95.97 | 95.70 |
| Improved-GAN | 98.07 | 97.26 | 95.02 | 87.52 |
| Our Method | 98.14 | 97.97 | 97.22 | 95.72 |

| Unlabeled Rate | Source | SS | df | MS | F | Prob > F |
|---|---|---|---|---|---|---|
| 40% | Intergroup | 0.00081 | 3 | 0.00027 | 33.62 | |
| | Intragroup | 0.00316 | 396 | 0.00001 | - | - |
| | Total | 0.00397 | 399 | - | - | - |
| 60% | Intergroup | 0.00055 | 3 | 0.00018 | 11.80 | |
| | Intragroup | 0.00619 | 396 | 0.00002 | - | - |
| | Total | 0.00674 | 399 | - | - | - |
| 80% | Intergroup | 0.00149 | 3 | 0.00050 | 27.11 | |
| | Intragroup | 0.00726 | 396 | 0.00002 | - | - |
| | Total | 0.00875 | 399 | - | - | - |
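The F values in the ANOVA table can be cross-checked from the SS and df columns using the standard one-way ANOVA relation F = MS_between / MS_within, where each MS is SS divided by its df. The sketch below assumes "Intergroup" and "Intragroup" correspond to between-group and within-group sources; because the tabulated SS values are rounded to five decimals, the recomputed F values are only expected to match approximately. The Prob > F column did not survive extraction and is not reconstructed here.

```python
def f_statistic(ss_between: float, df_between: int,
                ss_within: float, df_within: int) -> float:
    """One-way ANOVA F statistic from sums of squares and degrees of freedom."""
    ms_between = ss_between / df_between
    ms_within = ss_within / df_within
    return ms_between / ms_within


# (SS_intergroup, df_intergroup, SS_intragroup, df_intragroup, reported F)
anova_rows = {
    "40%": (0.00081, 3, 0.00316, 396, 33.62),
    "60%": (0.00055, 3, 0.00619, 396, 11.80),
    "80%": (0.00149, 3, 0.00726, 396, 27.11),
}

recomputed = {}
for rate, (ssb, dfb, ssw, dfw, reported) in anova_rows.items():
    recomputed[rate] = f_statistic(ssb, dfb, ssw, dfw)
    print(f"{rate}: recomputed F = {recomputed[rate]:.2f}, reported F = {reported}")
```

Computing F from the SS columns rather than from the printed MS values avoids the larger rounding error in MS (for instance, 0.00001 for the 40% intragroup row).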

| Unlabeled Rate | Training Time (Sec/Epoch) | Total Epochs |
|---|---|---|
| 20% | 40.71 | 200 |
| 40% | 40.21 | 200 |
| 60% | 39.79 | 200 |
| 80% | 38.80 | 200 |
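As a rough guide, the per-epoch times in the table translate into total training times of a little over two hours per setting. The short calculation below assumes every epoch takes the listed average time and that the 200 epochs cover the whole training run; neither assumption is stated in the surviving text.

```python
# Seconds per epoch, copied from the training-time table.
seconds_per_epoch = {"20%": 40.71, "40%": 40.21, "60%": 39.79, "80%": 38.80}
TOTAL_EPOCHS = 200

# Total training time in hours for each unlabeled rate.
total_hours = {
    rate: sec * TOTAL_EPOCHS / 3600.0
    for rate, sec in seconds_per_epoch.items()
}

for rate, hours in total_hours.items():
    print(f"{rate}: {hours:.2f} h")
```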

© 2018 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Share and Cite

Gao, F.; Yang, Y.; Wang, J.; Sun, J.; Yang, E.; Zhou, H. A Deep Convolutional Generative Adversarial Networks (DCGANs)-Based Semi-Supervised Method for Object Recognition in Synthetic Aperture Radar (SAR) Images. Remote Sens. 2018, 10, 846. https://doi.org/10.3390/rs10060846
