Estimating Tree Position, Diameter at Breast Height, and Tree Height in Real-Time Using a Mobile Phone with RGB-D SLAM

Abstract

1. Introduction

2. Theory and Technology

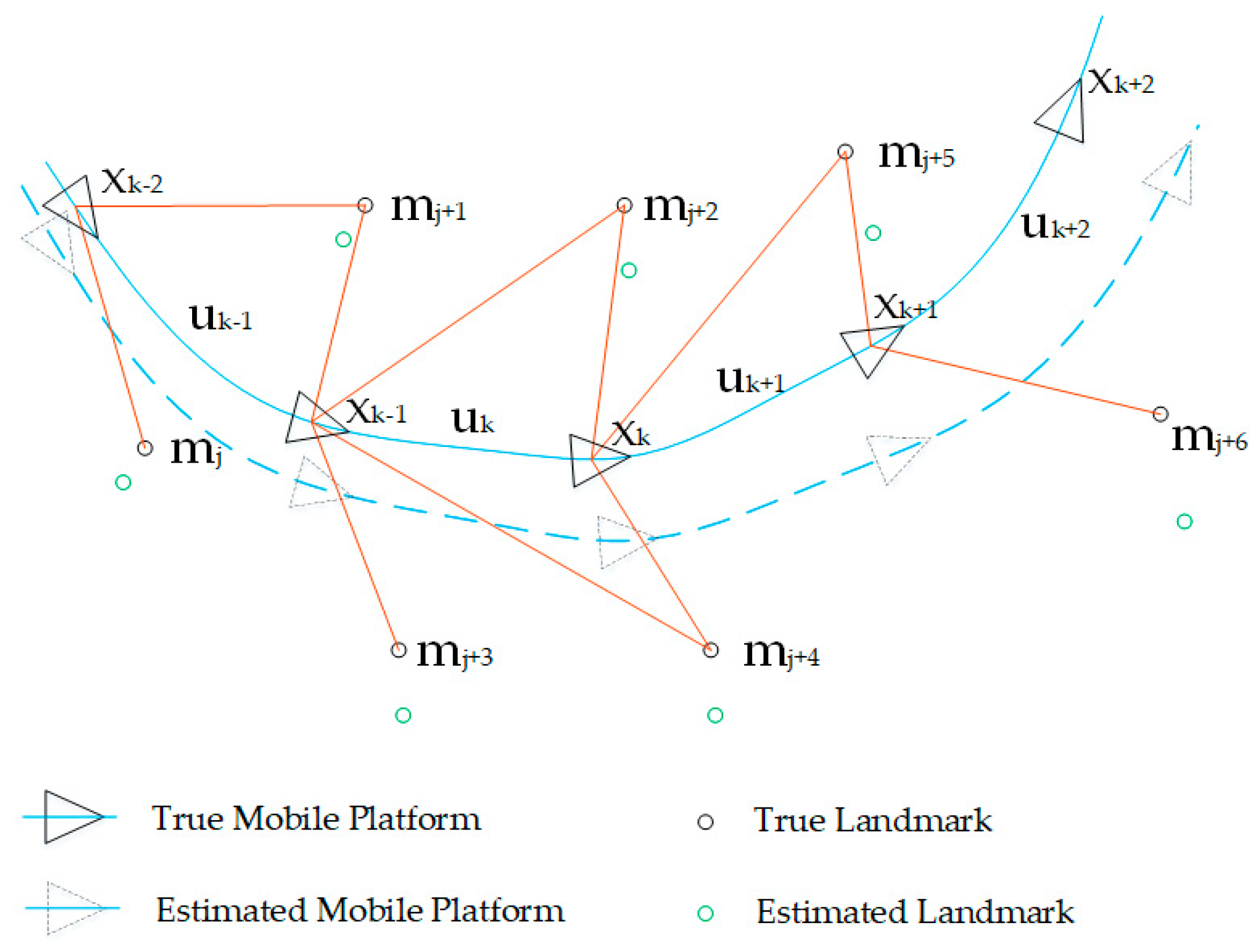

2.1. SLAM

2.2. The Technology of a Portable Graph-SLAM Device

3. Materials and Methods

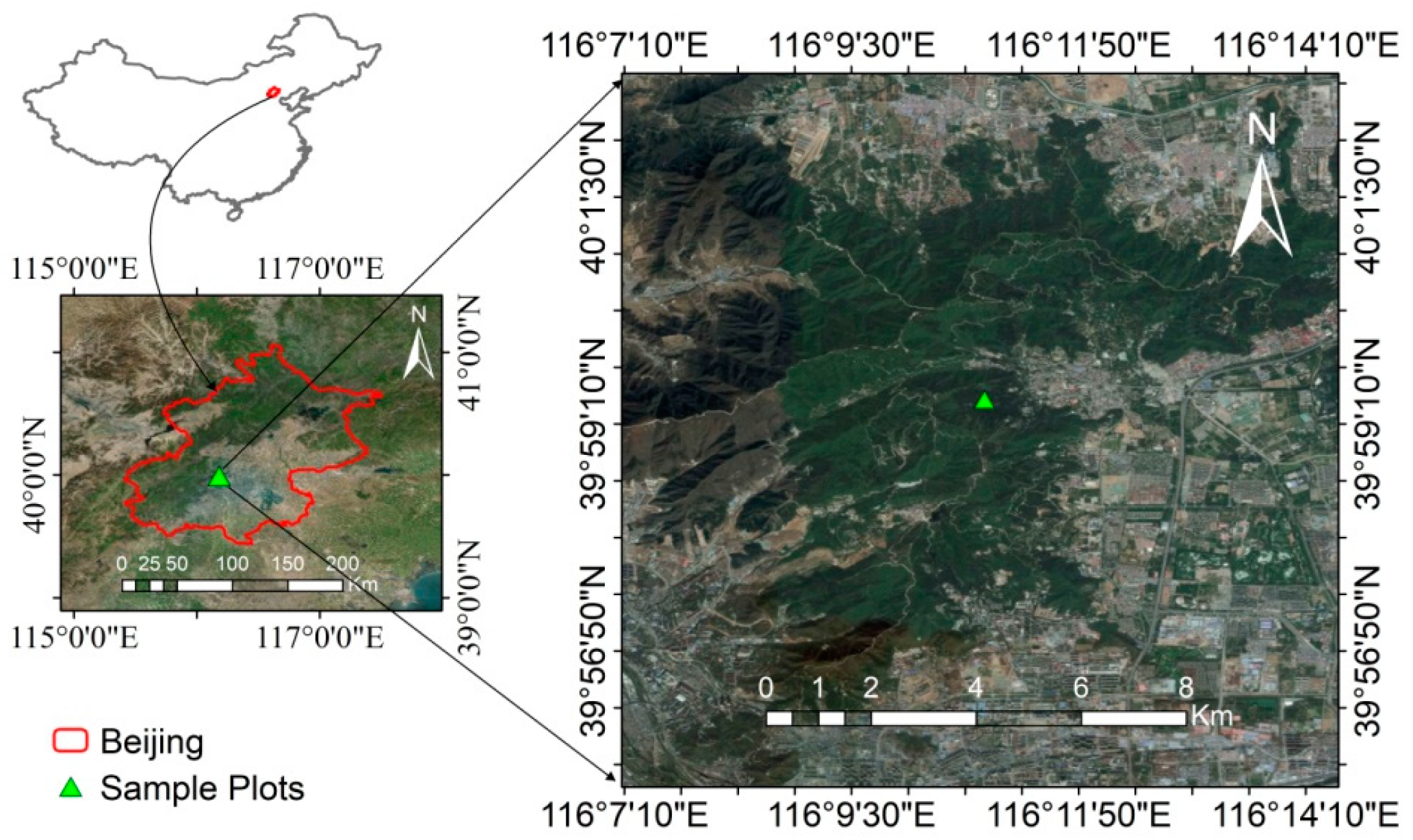

3.1. Study Area

3.2. Methods

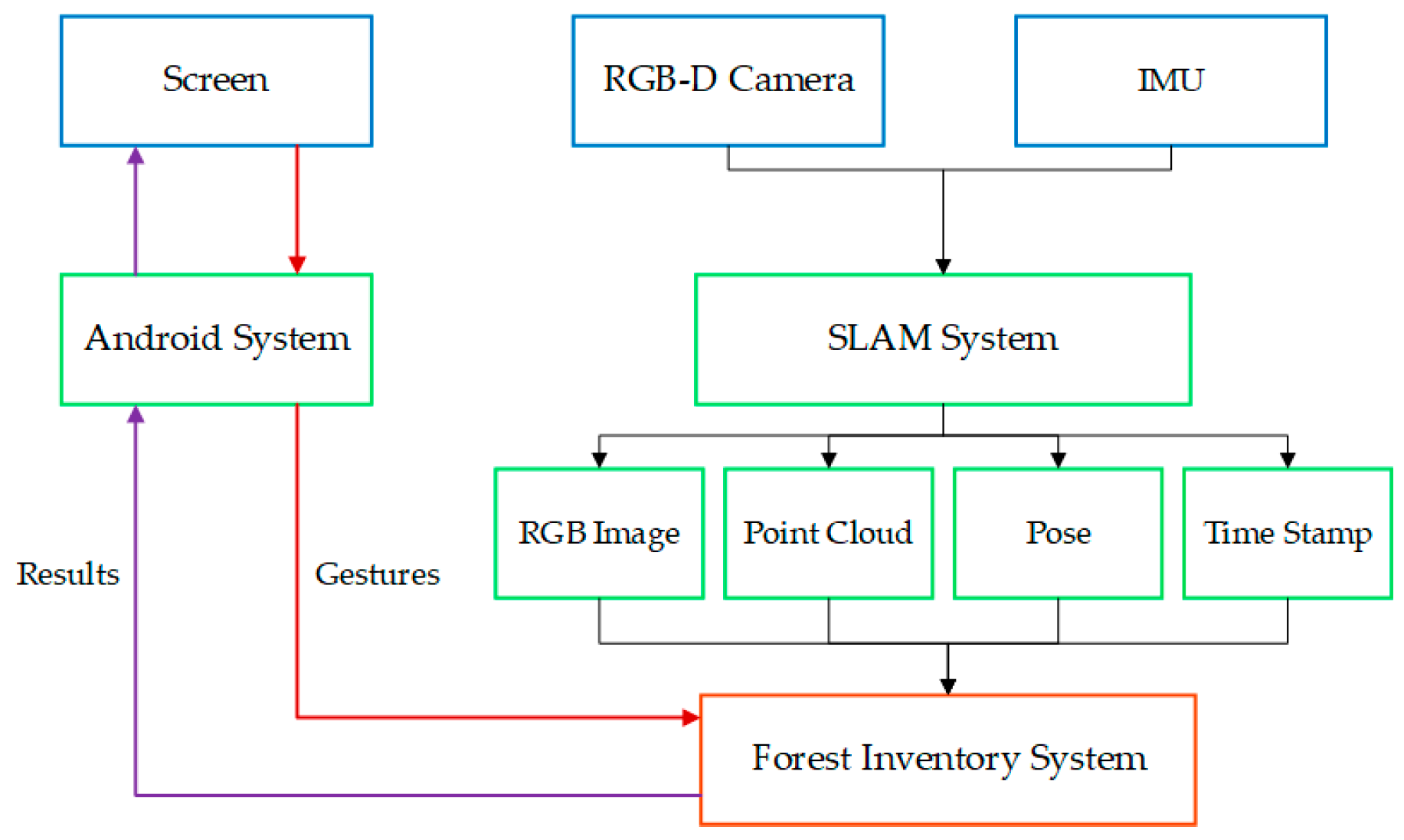

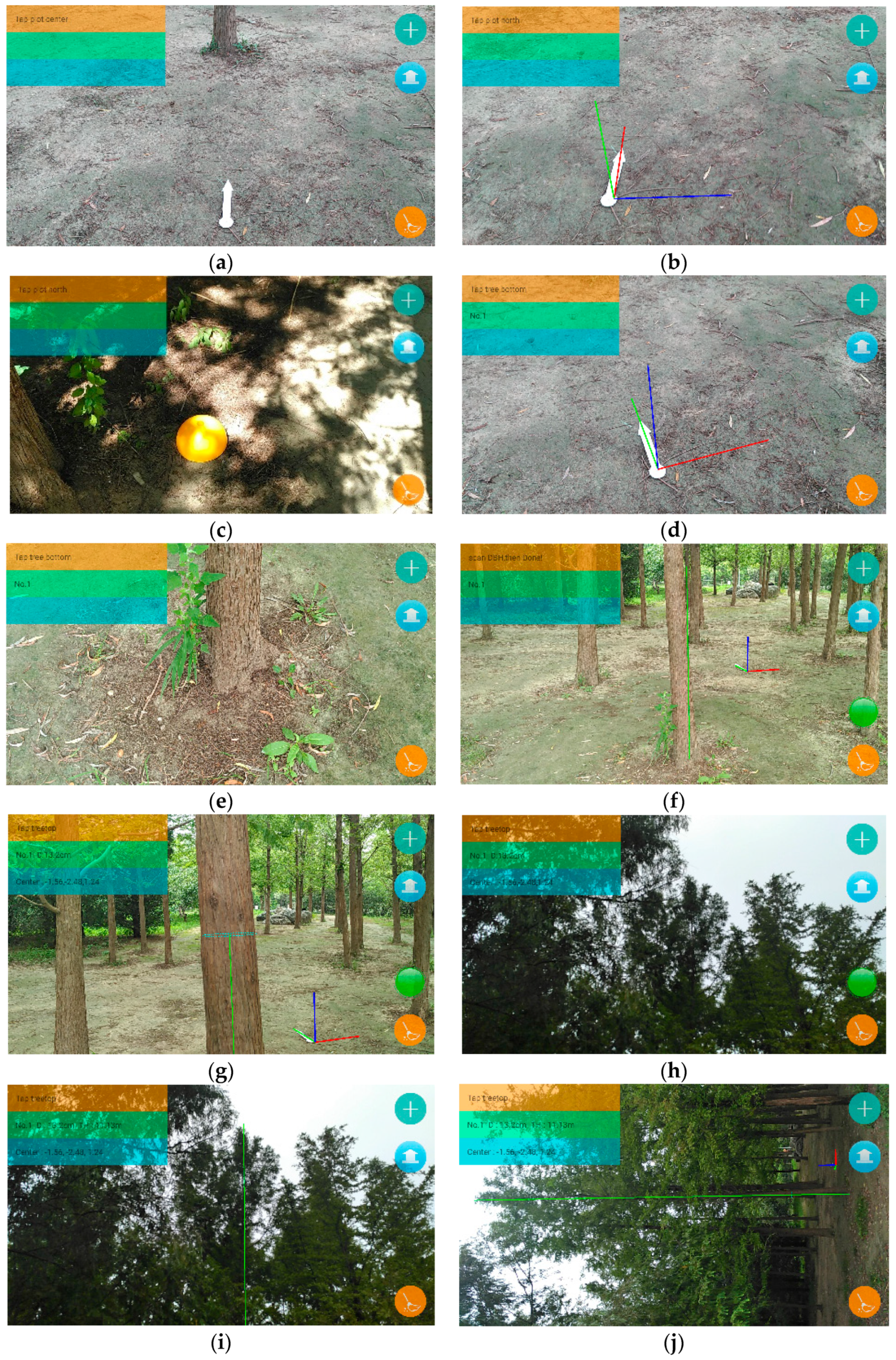

3.2.1. The System Workflow

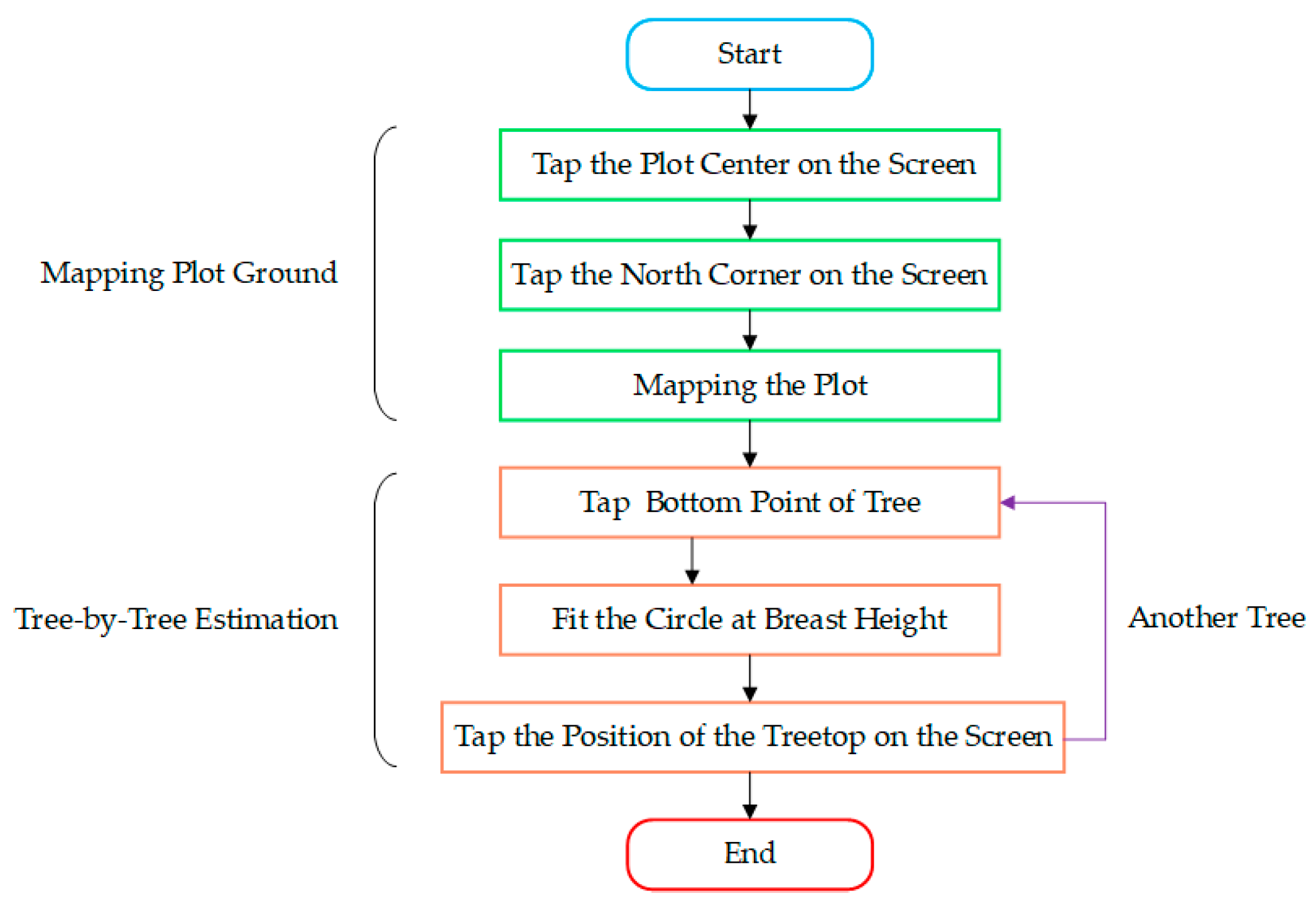

3.2.2. Mapping of the Plot Ground

Building the Plot Coordinate System

3.2.3. Estimation of the Stem Position, DBH, and Tree Height

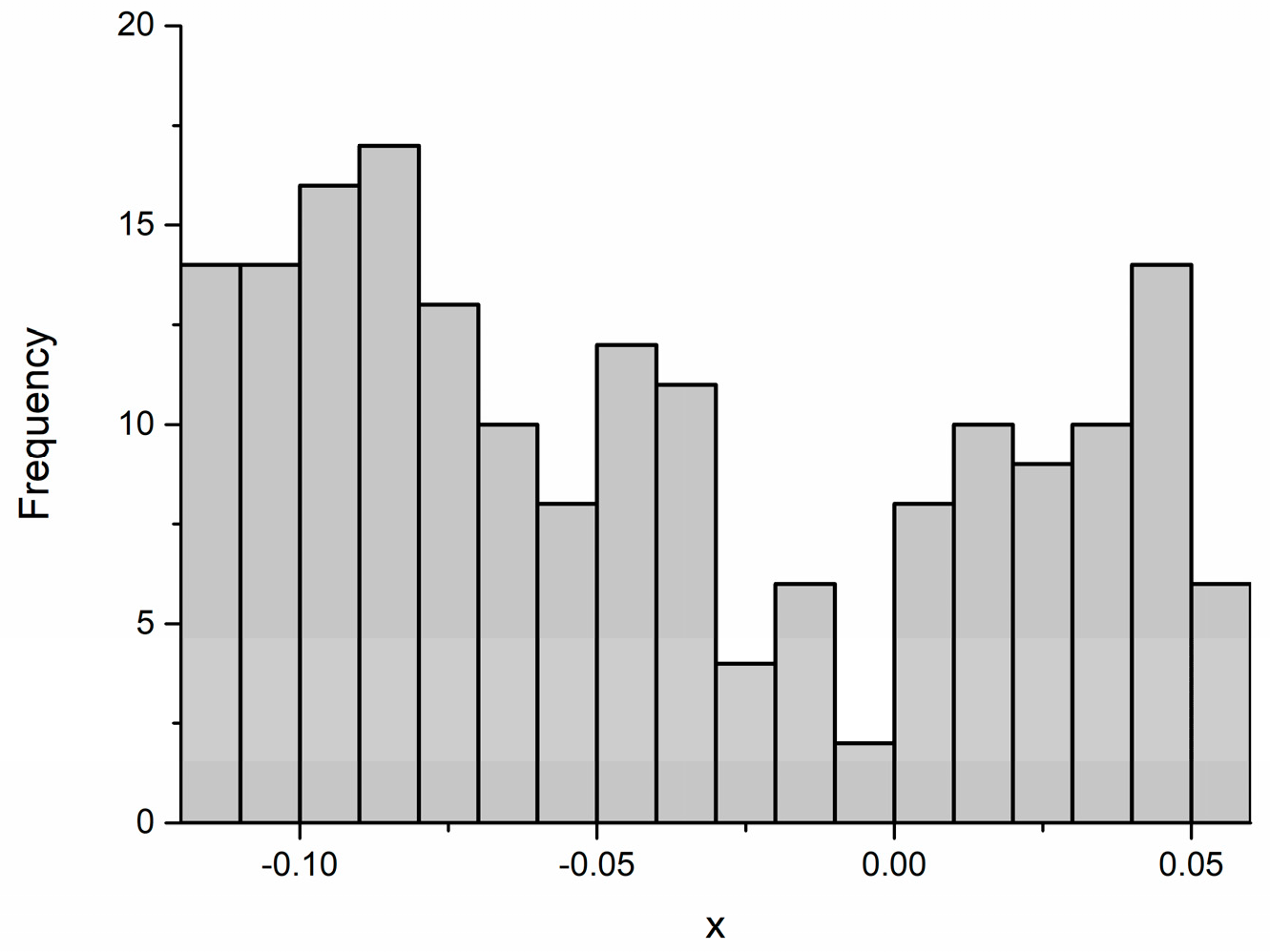

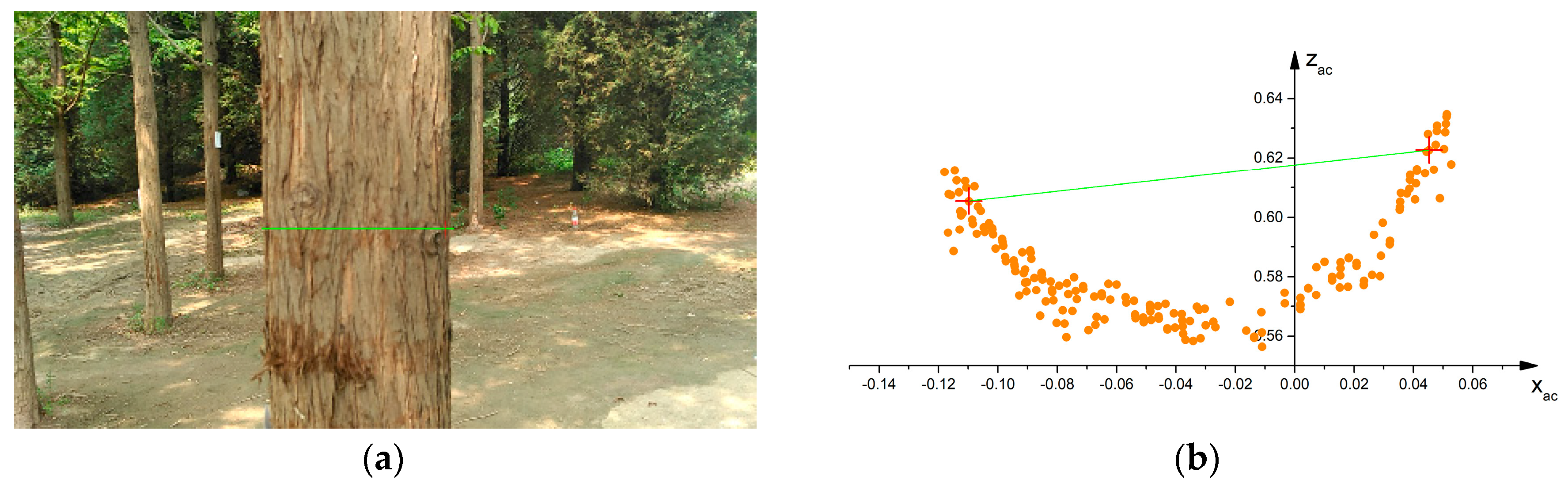

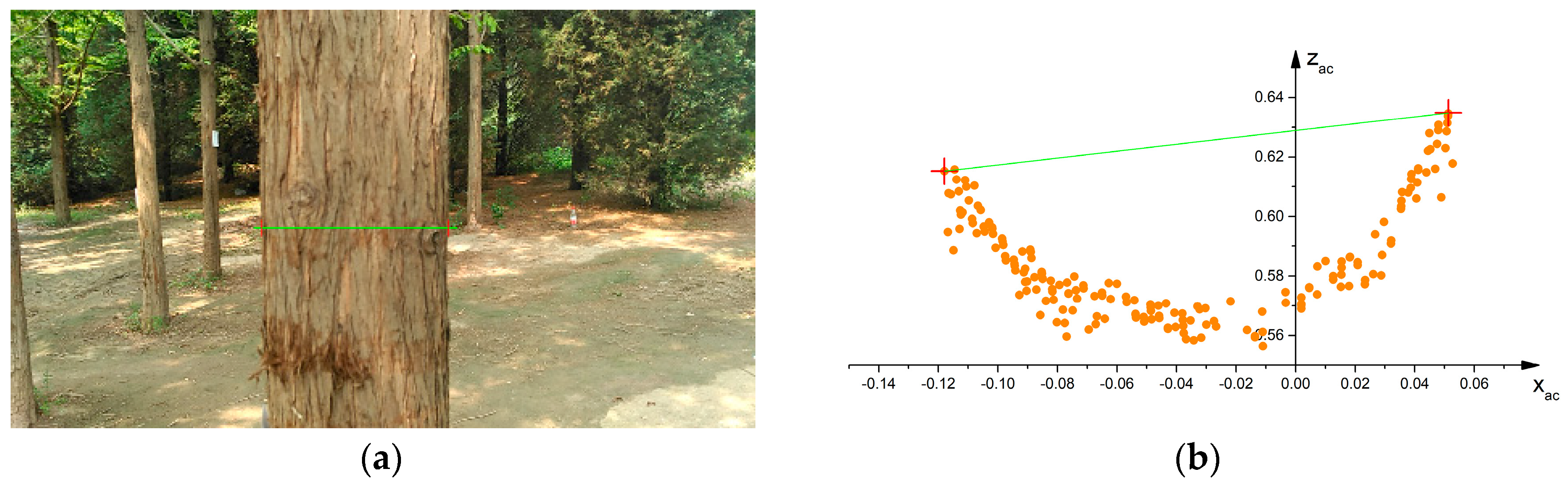

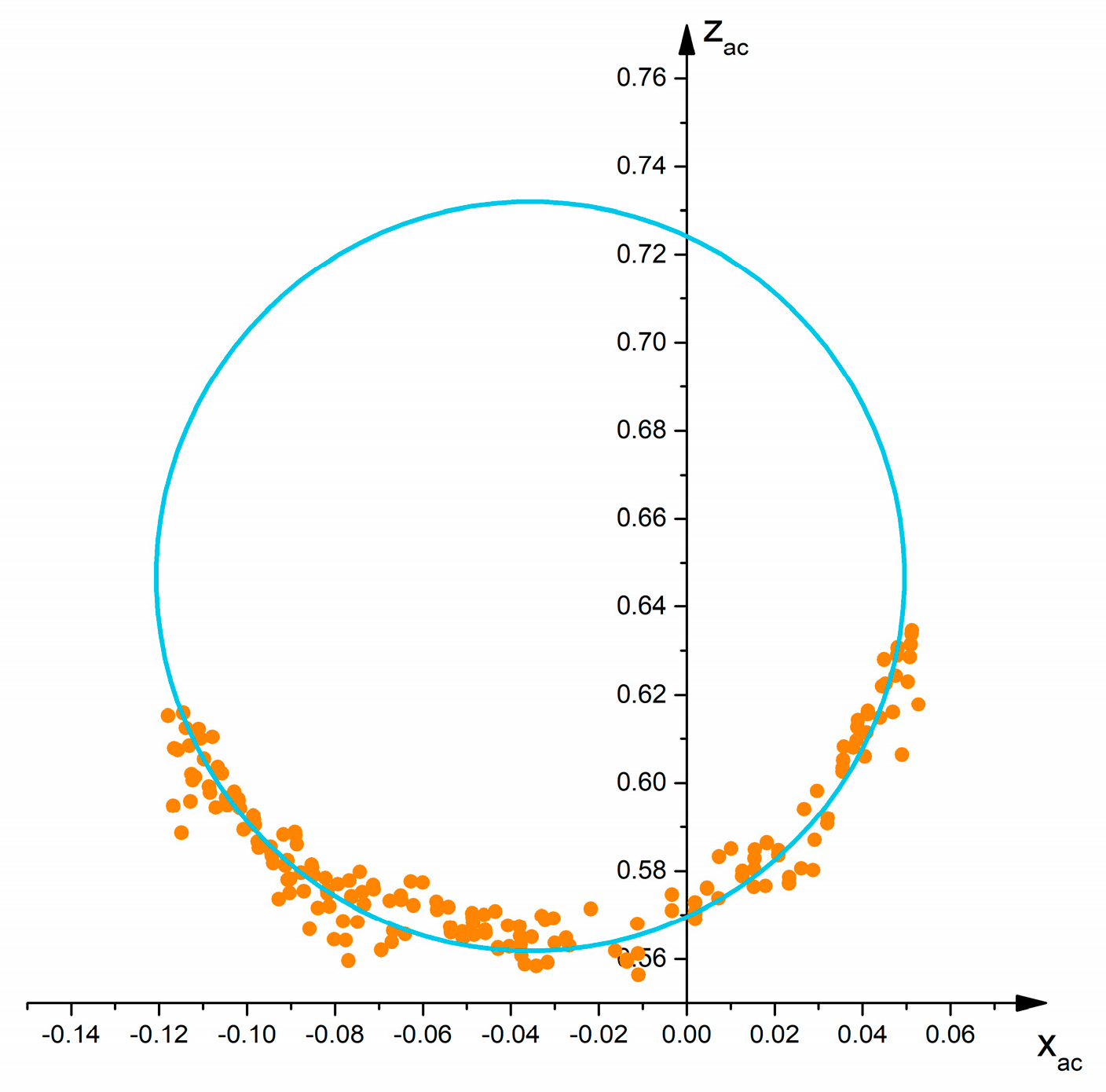

Estimation of the stem position and DBH

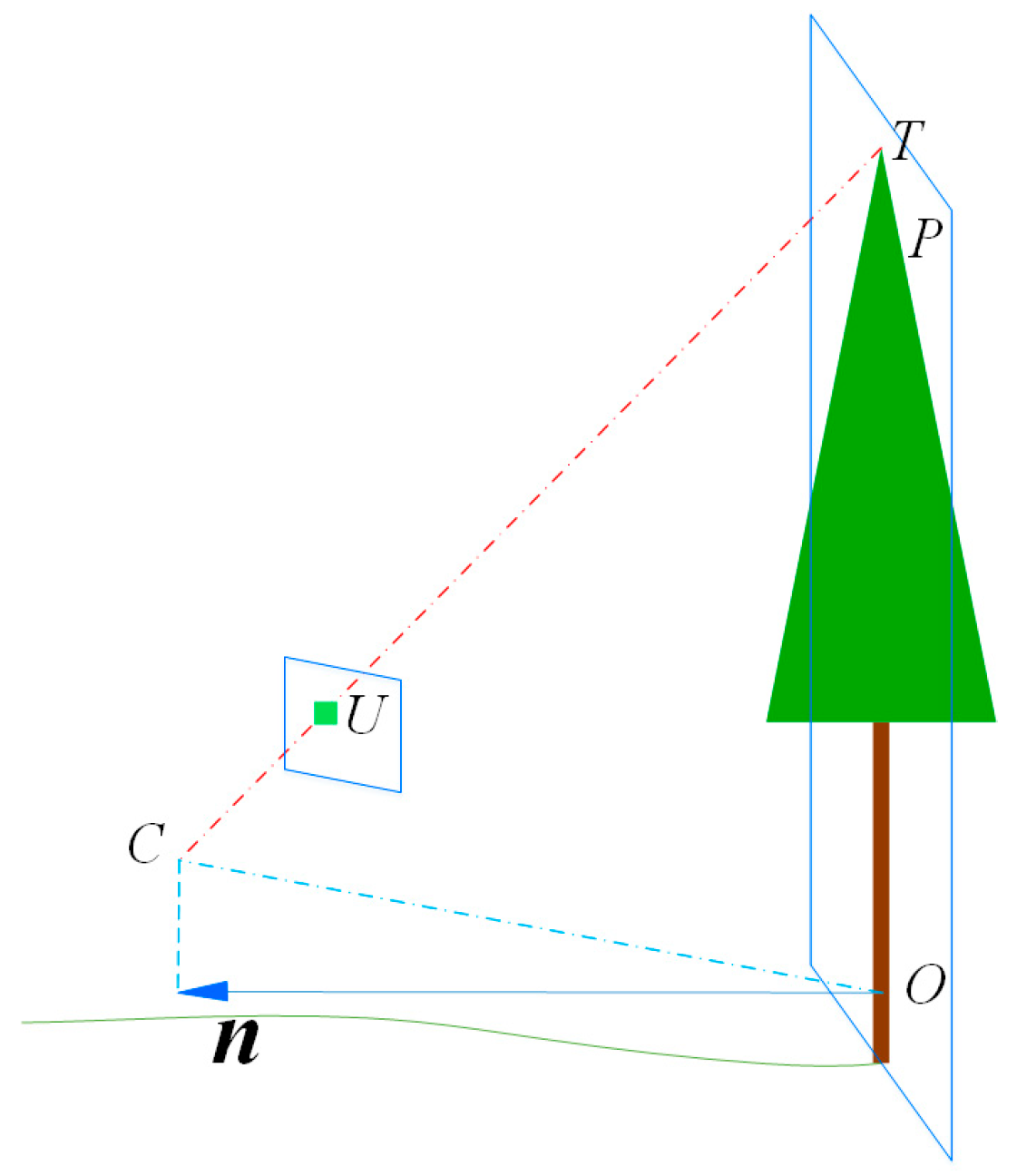

Estimation of the tree height

3.2.4. Evaluation of the Accuracy of the Stem Position, DBH and Tree Height Measurements

4. Results

4.1. Evaluation of Tree Position

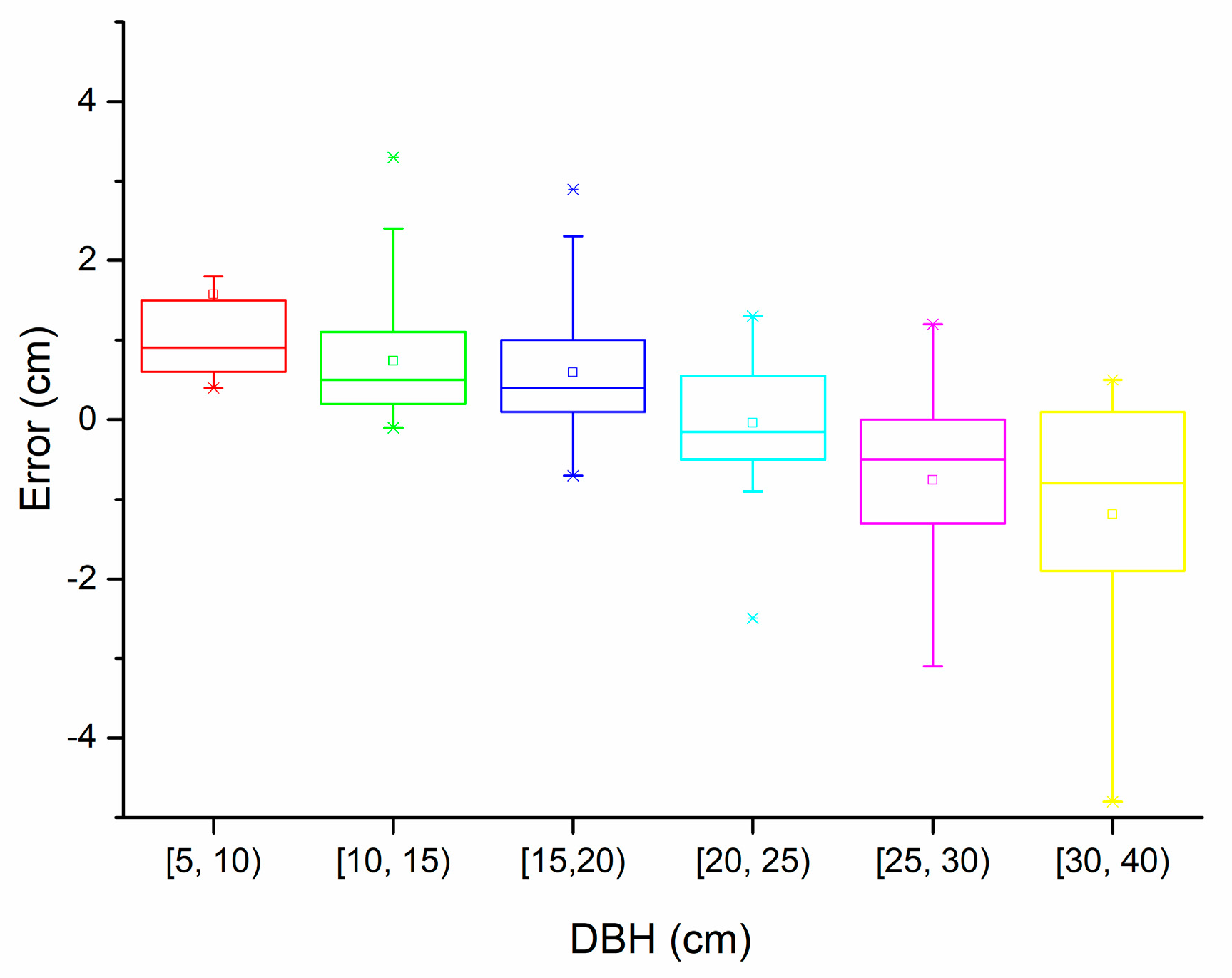

4.2. Evaluation of DBH

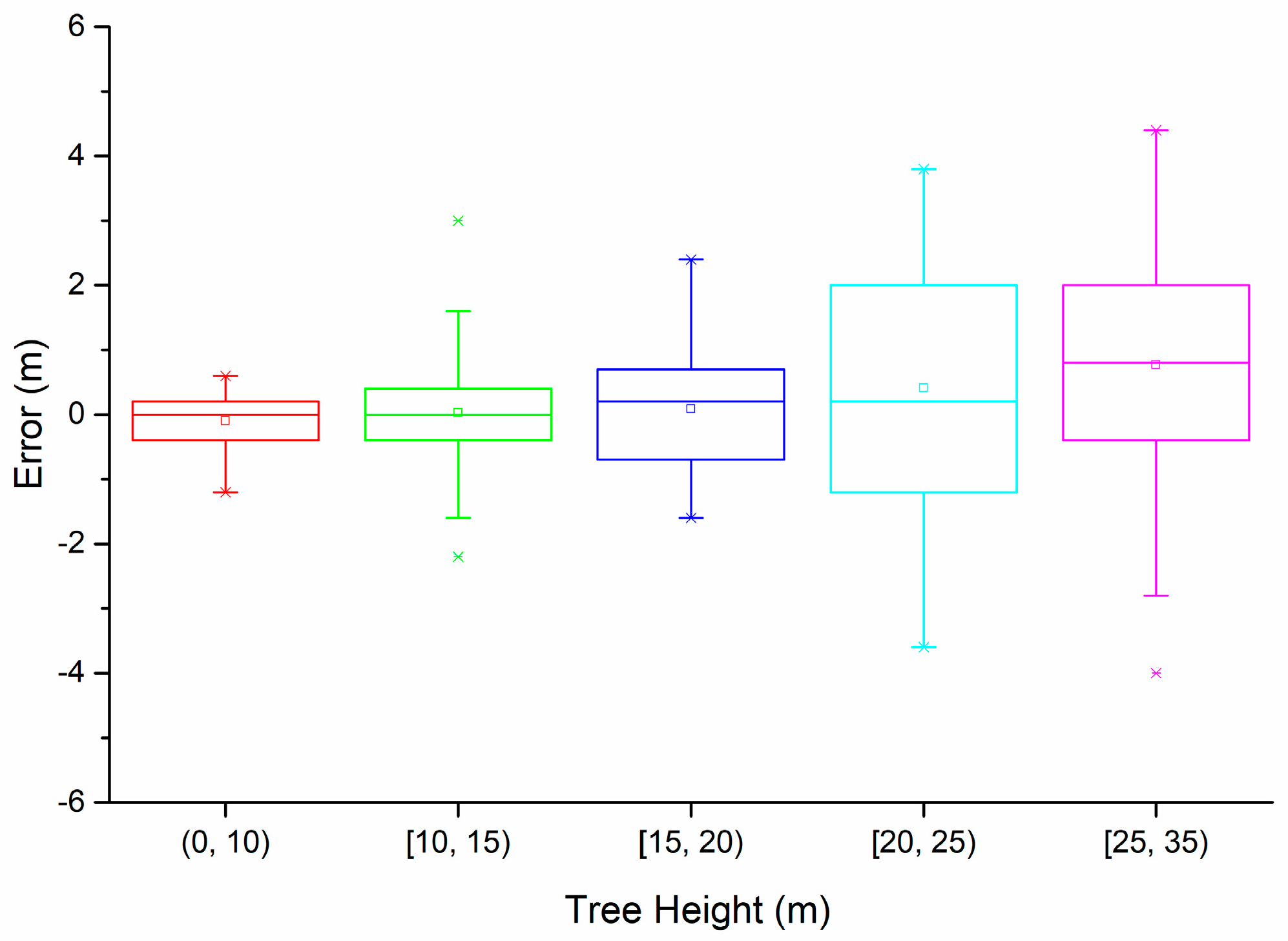

4.3. Evaluation of Tree Height

5. Discussion

6. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest


| Plot | Number of Trees | Dominant Species | DBH Mean (cm) | DBH SD (cm) | DBH Min (cm) | DBH Max (cm) | Tree Height Mean (m) | Tree Height SD (m) | Tree Height Min (m) | Tree Height Max (m) |
|---|---|---|---|---|---|---|---|---|---|---|
| 1 | 20 | Metasequoia glyptostroboides | 14.1 | 2.2 | 8.5 | 17.5 | 11.1 | 3.1 | 3.8 | 17.8 |
| 2 | 26 | Ulmus spp. | 16.3 | 6.7 | 6.4 | 31.3 | 12.2 | 4.3 | 4.9 | 21.7 |
| 3 | 22 | Fraxinus chinensis & Ulmus spp. | 20.7 | 6.9 | 12.4 | 34.5 | 9.5 | 3.4 | 5.4 | 15.9 |
| 4 | 28 | Ginkgo biloba | 16.5 | 1.6 | 13.1 | 19.6 | 11.3 | 1.0 | 9.6 | 13.9 |
| 5 | 19 | Populus spp. | 26.1 | 2.3 | 22.0 | 30.2 | 25.1 | 4.5 | 14.9 | 37.3 |
| 6 | 17 | Populus spp. | 27.8 | 2.6 | 23.2 | 32.0 | 25.4 | 2.6 | 19.1 | 29.5 |
| 7 | 21 | Styphnolobium japonicum | 12.9 | 4.2 | 6.1 | 21.5 | 11.7 | 3.1 | 4.6 | 18.1 |
| 8 | 20 | Fraxinus chinensis | 16.1 | 4.0 | 10.2 | 23.5 | 10.8 | 3.7 | 1.2 | 16.4 |
| 9 | 20 | Ginkgo biloba | 19.6 | 2.4 | 15.6 | 23.7 | 12.8 | 1.8 | 10.4 | 17.5 |

| Plot | (m) | (m) | (m) | (m) | (m) | (m) | (m) | (m) |
|---|---|---|---|---|---|---|---|---|
| 1 | 0.08 | −0.12 | 0.08 | 0.13 | 0.20 | 0.13 | 0.11 | 0.17 |
| 2 | 0.00 | 0.08 | 0.09 | 0.05 | 0.09 | 0.09 | 0.09 | 0.10 |
| 3 | 0.05 | −0.01 | 0.09 | 0.10 | 0.12 | 0.10 | 0.11 | 0.10 |
| 4 | −0.08 | −0.06 | 0.07 | 0.11 | 0.05 | 0.10 | 0.11 | 0.12 |
| 5 | 0.08 | −0.04 | 0.06 | 0.10 | −0.04 | 0.10 | 0.10 | 0.11 |
| 6 | −0.10 | −0.08 | 0.05 | 0.08 | −0.08 | 0.08 | 0.12 | 0.12 |
| 7 | 0.00 | −0.01 | 0.12 | 0.07 | 0.12 | 0.12 | 0.12 | 0.07 |
| 8 | 0.13 | 0.06 | 0.11 | 0.11 | 0.01 | 0.11 | 0.17 | 0.13 |
| 9 | −0.01 | 0.05 | 0.16 | 0.08 | 0.35 | 0.16 | 0.16 | 0.09 |
| Total | 0.01 | −0.01 | 0.10 | 0.09 | 0.10 | 0.10 | 0.12 | 0.12 |

| Plot | RMSE (cm) | relRMSE (%) | BIAS (cm) | relBIAS (%) |
|---|---|---|---|---|
| 1 | 0.73 | 5.21% | 0.41 | 2.91% |
| 2 | 1.80 | 11.09% | 1.26 | 7.73% |
| 3 | 1.50 | 7.27% | 0.75 | 3.63% |
| 4 | 1.05 | 6.40% | 0.82 | 4.99% |
| 5 | 2.22 | 8.39% | −1.64 | −6.21% |
| 6 | 0.89 | 3.19% | −0.32 | −1.16% |
| 7 | 0.51 | 3.97% | 0.40 | 3.06% |
| 8 | 0.79 | 4.93% | 0.49 | 3.04% |
| 9 | 0.39 | 1.99% | −0.15 | −0.74% |
| Total | 1.26 | 6.39% | 0.33 | 1.78% |

| Plot | RMSE (m) | relRMSE (%) | BIAS (m) | relBIAS (%) |
|---|---|---|---|---|
| 1 | 0.54 | 4.91% | −0.08 | −0.72% |
| 2 | 0.90 | 7.35% | 0.12 | 1.01% |
| 3 | 0.54 | 5.70% | −0.13 | −1.34% |
| 4 | 0.56 | 4.94% | 0.05 | 0.44% |
| 5 | 1.88 | 7.47% | −0.83 | −3.31% |
| 6 | 2.44 | 9.58% | 2.08 | 8.18% |
| 7 | 0.76 | 6.53% | 0.41 | 3.50% |
| 8 | 0.46 | 4.22% | −0.18 | −1.67% |
| 9 | 0.75 | 5.90% | −0.16 | −1.25% |
| Total | 1.11 | 7.43% | 0.15 | 1.08% |
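The DBH and tree-height accuracy tables report RMSE, relRMSE, BIAS and relBIAS per plot, but this section does not define them. The Python sketch below computes the four metrics under common conventions. Each convention is an assumption, not something the source states. The error is taken as estimated value minus reference value. RMSE is the square root of the mean squared error and BIAS is the mean error. Both relative metrics divide by the mean reference value and are expressed as a percentage. The function name `accuracy_metrics` is hypothetical and does not come from the authors' software.

```python
import math
from typing import Sequence


def accuracy_metrics(estimated: Sequence[float], reference: Sequence[float]) -> dict:
    """Return RMSE, relRMSE (%), BIAS and relBIAS (%) for paired measurements.

    Assumed conventions (not confirmed by the source):
      error   = estimated - reference
      RMSE    = sqrt(mean(error^2))
      BIAS    = mean(error)
      rel*    = 100 * metric / mean(reference)
    """
    if len(estimated) != len(reference) or len(reference) == 0:
        raise ValueError("need two non-empty sequences of equal length")
    n = len(reference)
    # Per-tree errors, e.g. estimated DBH minus tape-measured DBH.
    errors = [e - r for e, r in zip(estimated, reference)]
    rmse = math.sqrt(sum(d * d for d in errors) / n)
    bias = sum(errors) / n
    mean_ref = sum(reference) / n
    return {
        "RMSE": rmse,
        "relRMSE": 100.0 * rmse / mean_ref,
        "BIAS": bias,
        "relBIAS": 100.0 * bias / mean_ref,
    }


# Illustrative values only (hypothetical, not data from the study).
metrics = accuracy_metrics([11.0, 19.0, 31.0], [10.0, 20.0, 30.0])
print(metrics)
```

As a rough consistency check, divide plot 1's DBH RMSE (0.73 cm) by the mean DBH listed for plot 1 in the per-plot summary table (14.1 cm). The result is about 5.2%, which matches the reported 5.21% within rounding. This is compatible with normalising by the mean reference value, but it does not confirm the authors' exact formula or sign convention.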

© 2018 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Fan, Y.; Feng, Z.; Mannan, A.; Khan, T.U.; Shen, C.; Saeed, S. Estimating Tree Position, Diameter at Breast Height, and Tree Height in Real-Time Using a Mobile Phone with RGB-D SLAM. Remote Sens. 2018, 10, 1845. https://doi.org/10.3390/rs10111845
