A Novel Hybrid Deep Learning for Attitude Prediction in Sustainable Application of Shield Machine

Abstract

1. Introduction

1.1. Research Background

1.2. Research Gaps and Objectives

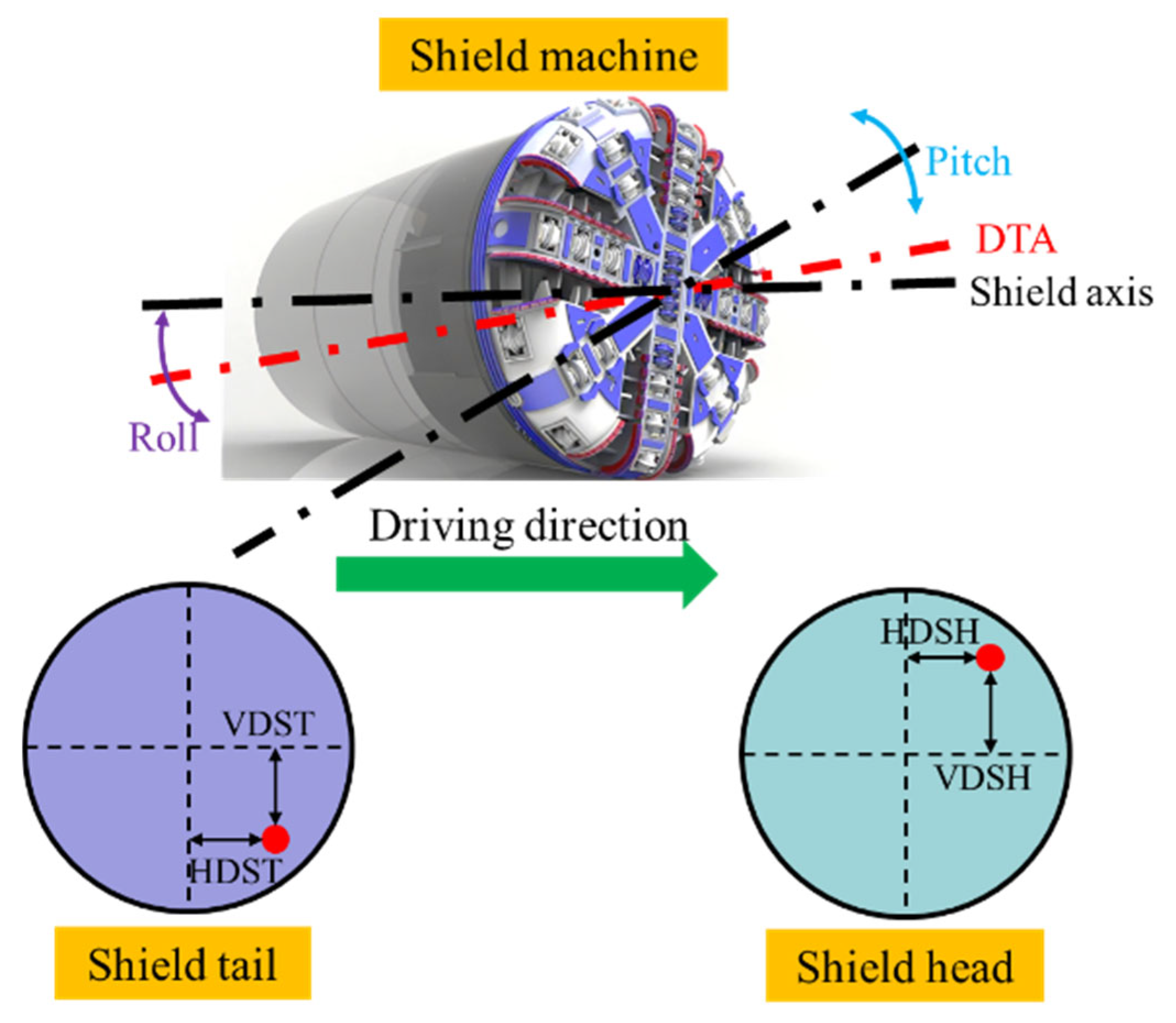

2. Problem Definition

3. Method

3.1. Framework

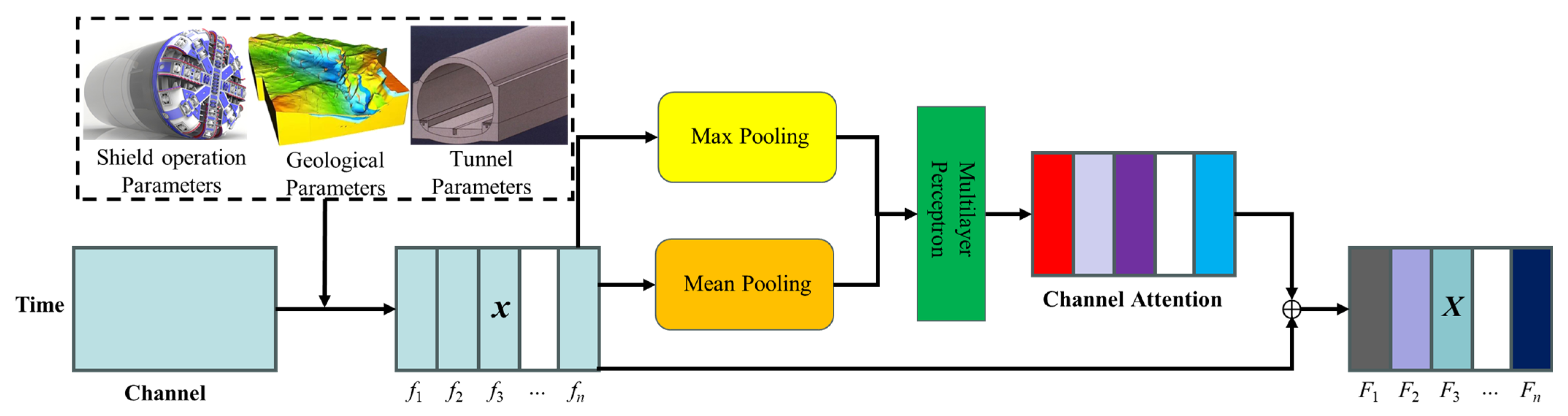

3.2. CNN-Attention Model

3.3. Transformer-BiLSTM Model

4. Case Study

4.1. Project Overview

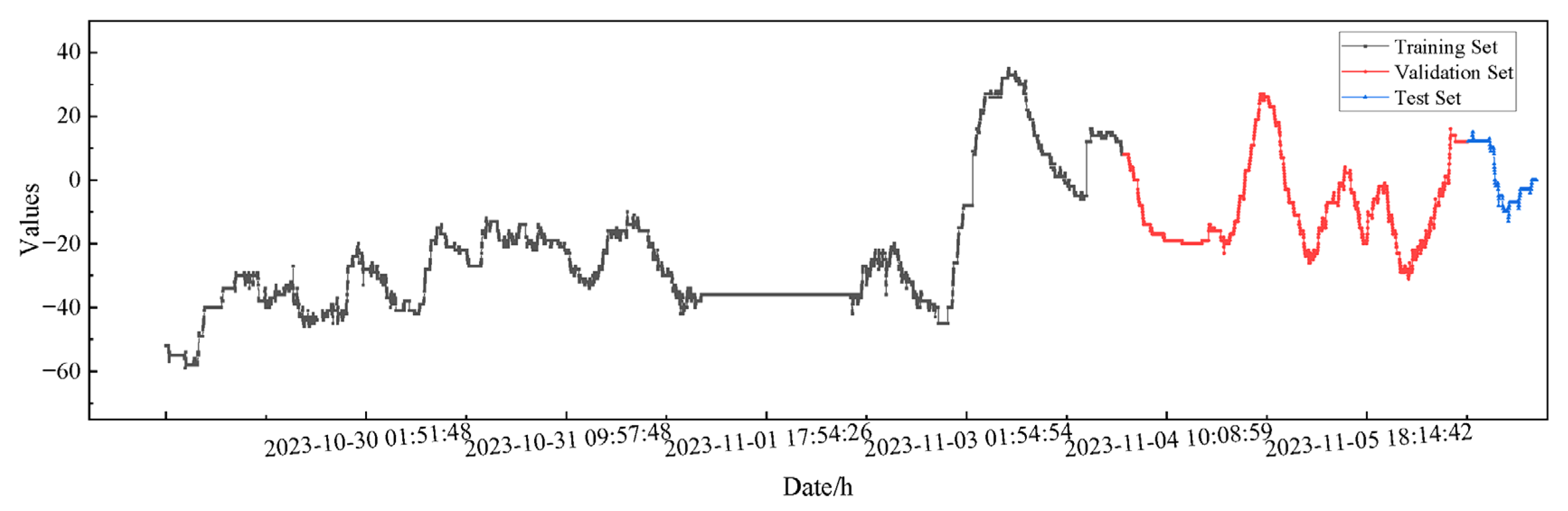

4.2. Parameter Selection

4.3. Baseline Models and Evaluation Metrics

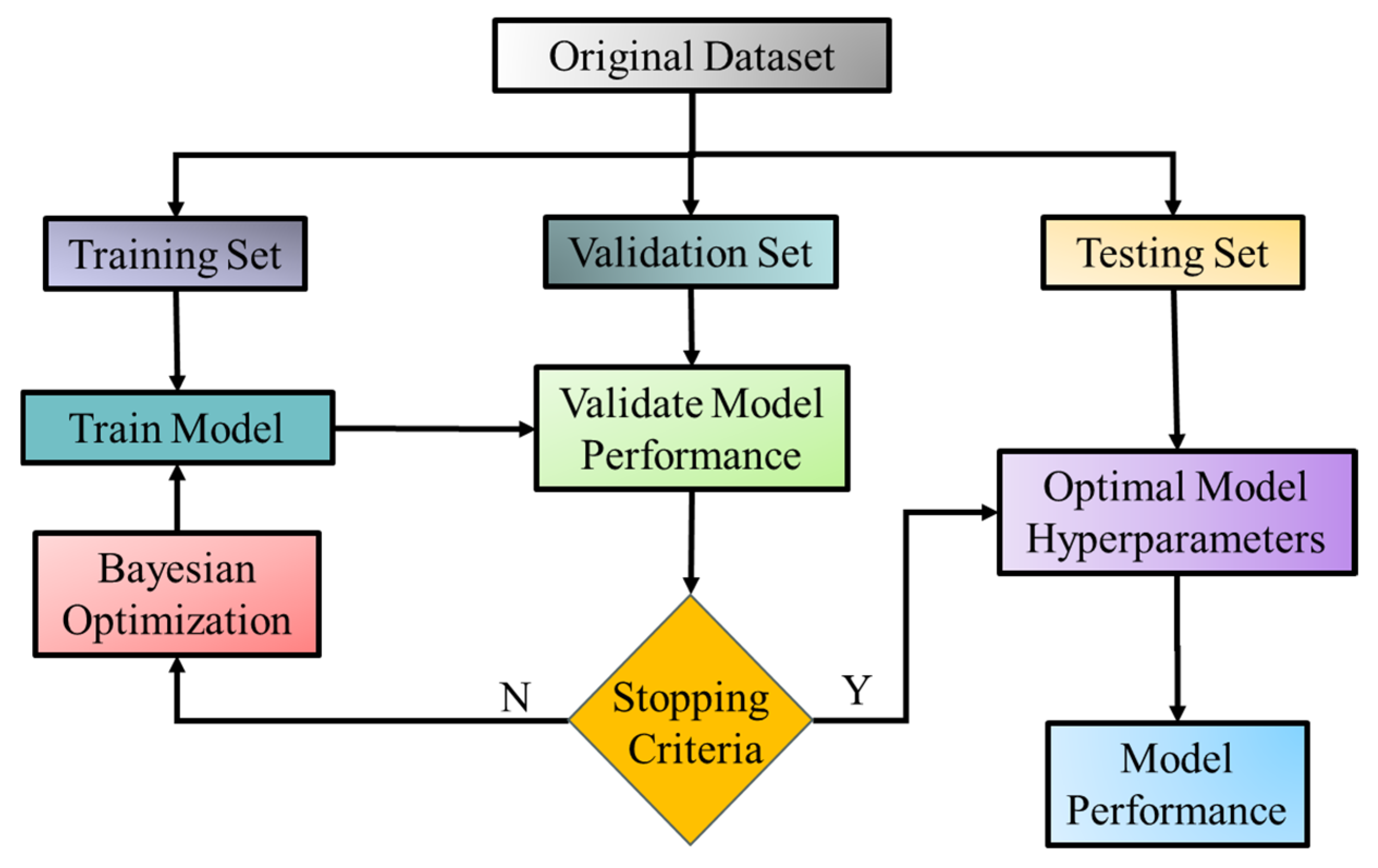

4.4. Prediction Flowchart

5. Results and Discussions

5.1. Loss Values

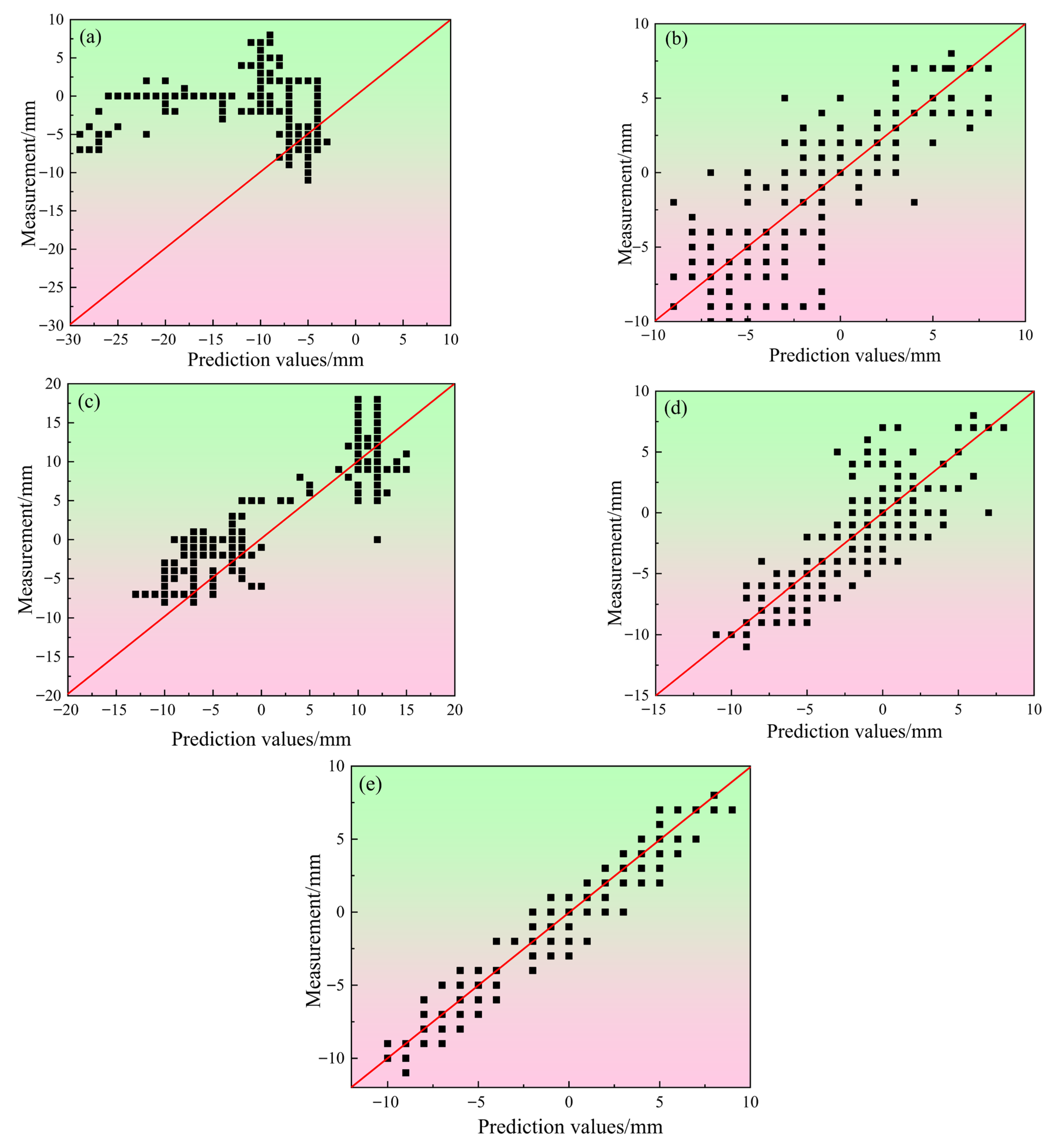

5.2. Comparison with Other Models

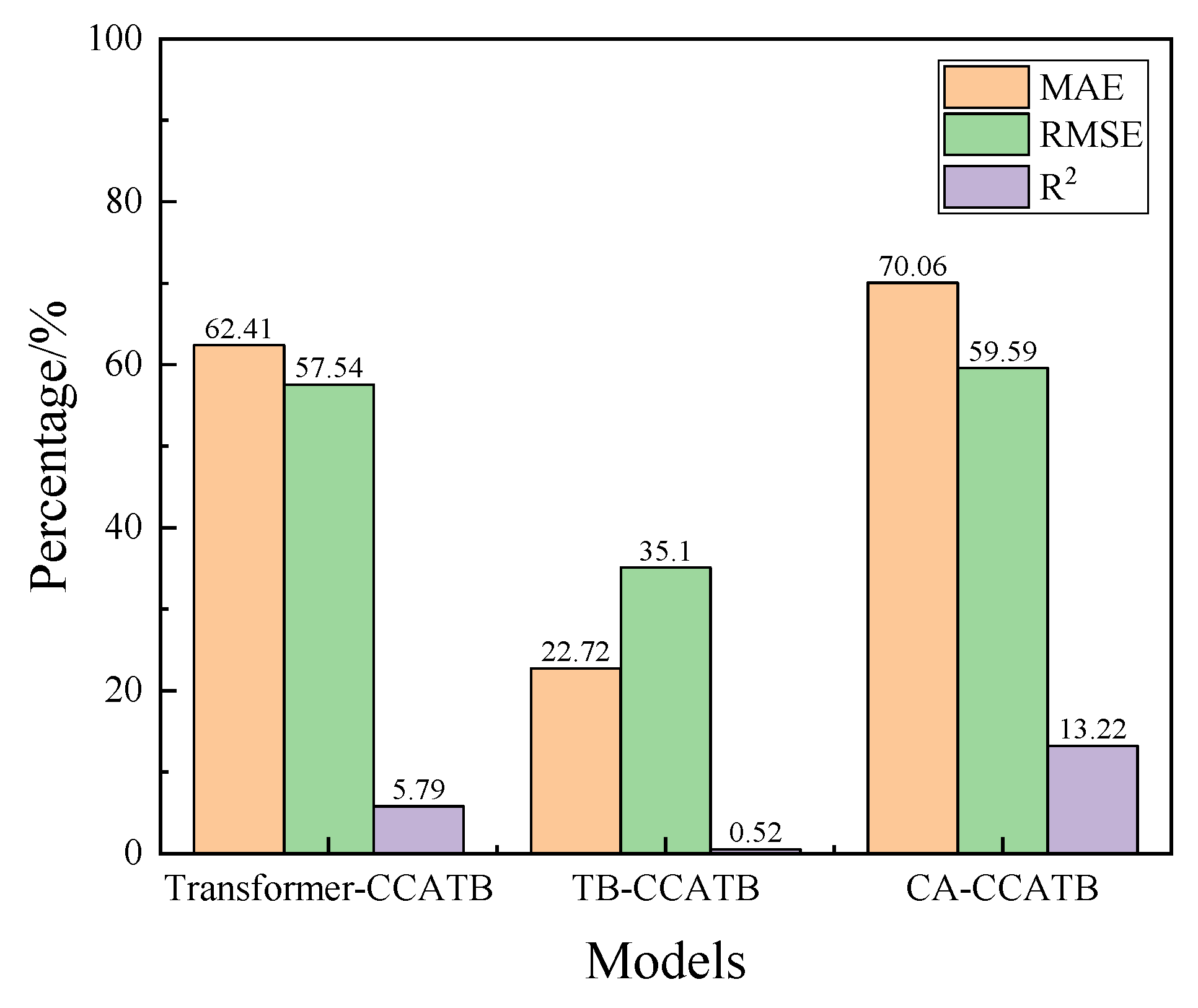

5.3. Ablation Experiments

5.4. Analysis of Generalization Ability

5.5. Engineering Application

5.6. Parameter Correlation Analysis

5.7. Further Discussion

6. Conclusions

- (1) Compared with existing shield attitude prediction models, the CCATB model not only learns the relative importance of the parameters that affect shield attitude but also captures both the global and the local features of the attitude data.

- (2) Comparison and ablation experiments showed that the CCATB model achieves lower MAE and RMSE values and a higher R² value (MAE = 0.625, RMSE = 0.939, R² = 0.988) than both the baseline models and its own sub-models, indicating superior prediction accuracy.

- (3) Experimental verification on monitoring data from another tunneling interval confirmed that the CCATB model has strong generalization ability and reliability, with good prediction results across different construction segments.

- (4) Applying the CCATB model to subsequent shield tunneling and integrating it with on-site engineering measures can mitigate the lag effect of field adjustments and reduce shield alignment deviations during tunneling. The model is therefore of practical value for alignment control in shield tunnel construction.

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Parameters | Unit | Max | Min | Average |
|---|---|---|---|---|
| Cutter rotational speed | r/min | 1.56 | 0.00 | 0.78 |
| Driving speed | mm/min | 96.00 | 0.00 | 48.00 |
| Penetration depth | mm | 82.31 | 0.00 | 41.155 |
| Cutter torque | kN·m | 9080.00 | 0.00 | 4540.00 |
| Thrust | kN | 14,400.00 | 0.00 | 7200.00 |
| Grouting volume | L | 2.16 | 0.00 | 1.08 |
| Chamber earth pressure | MPa | 1.54 | 0.12 | 0.83 |
| Cohesion | kPa | 6.70 | 1.74 | 4.22 |
| Friction angle | ° | 36.51 | 28.97 | 32.74 |
| Articulation stroke [upper right] | mm | 82.00 | 29.00 | 55.50 |
| Articulation stroke [lower right] | mm | 95.00 | 36.00 | 65.50 |
| Articulation stroke [upper left] | mm | 96.00 | 36.00 | 66.00 |
| Articulation stroke [lower left] | mm | 85.00 | 32.00 | 58.50 |
| Vertical deviations of the middle shield (absolute value) | mm | 58.00 | 0.00 | 29.00 |

| Models | Optimal Hyperparameters |
|---|---|
| RF | Maximum number of features = 4, Maximum depth = 5, Number of trees = 20, Minimum number of samples required for a leaf node = 6 |
| LSTM | LSTM layers = 3, Number of LSTM units = 64, Batch size = 32, Number of iterations = 100, Number of fully connected layers = 1, Dropout rate = 0.5, Learning rate = 0.0001, Optimizer = Adam, Loss function = MSE, Activation function = ReLU |
| CNN-BiLSTM | Filter size = 32, Kernel = 6, Number of convolution layers = 2, BiLSTM layers = 3, Number of BiLSTM units = 32, Batch size = 64, Number of iterations = 200, Max pooling = 2, Dense = 1, Learning rate = 0.0001, Dropout rate = 0.5, Optimizer = Adam, Loss function = MSE, Activation function = ReLU |
| LSTM-Attention | Number of hidden LSTM layer units = 10, LSTM layers = 2, Batch size = 20, Number of iterations = 200, Max pooling = 2, Learning rate = 0.01, Dense = 1, Dropout rate = 0.2, Optimizer = Adam, Loss function = MSE, Activation function = ReLU |
| CCATB | Filter size = 64, Kernel = 3, Number of convolution layers = 2, BiLSTM layers = 2, Number of BiLSTM units = 64, Embedding dimension = 256, Hidden layer dimension = 256, Feed-forward network dimension = 2048, Number of attention heads = 8, Number of Transformer layers = 6, Batch size = 128, Number of iterations = 220, Max pooling = 4, Dense = 1, Dropout rate = 0.5, Optimizer = Adam, Loss function = MSE, Activation function = ReLU |
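
The CCATB row above can be collected into a single configuration object, which makes it easy to check the settings for internal consistency before training. The following is a minimal sketch, not the authors' implementation: the class name `CCATBConfig`, the field names, and the `head_dim` helper are hypothetical, and reading "Filter size" as the number of convolution filters is an assumption. The divisibility check reflects the usual multi-head attention convention that the embedding dimension is split evenly across heads.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class CCATBConfig:
    """Hypothetical container for the CCATB hyperparameters in the table."""

    # CNN part ("Filter size" is assumed to mean the number of filters).
    conv_filters: int = 64
    conv_kernel_size: int = 3
    num_conv_layers: int = 2
    max_pool_size: int = 4
    # BiLSTM part.
    num_bilstm_layers: int = 2
    bilstm_units: int = 64
    # Transformer part.
    embedding_dim: int = 256
    hidden_dim: int = 256
    ffn_dim: int = 2048
    num_attention_heads: int = 8
    num_transformer_layers: int = 6
    # Training settings.
    batch_size: int = 128
    iterations: int = 220
    dense_layers: int = 1
    dropout_rate: float = 0.5
    optimizer: str = "Adam"
    loss: str = "MSE"
    activation: str = "ReLU"

    def head_dim(self) -> int:
        """Per-head width, assuming the embedding is split evenly across heads."""
        if self.embedding_dim % self.num_attention_heads != 0:
            raise ValueError("embedding_dim must be divisible by num_attention_heads")
        return self.embedding_dim // self.num_attention_heads


config = CCATBConfig()
print(config.head_dim())
```

With the tabulated values, each of the 8 attention heads works on a 32-dimensional slice of the 256-dimensional embedding.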

| Models | MAE | RMSE | R² |
|---|---|---|---|
| RF | 3.362 | 4.107 | 0.765 |
| LSTM | 3.316 | 3.961 | 0.781 |
| CNN-BiLSTM | 1.543 | 1.959 | 0.946 |
| LSTM-Attention | 2.033 | 2.578 | 0.907 |
| CCATB | 0.625 | 0.939 | 0.988 |
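
For readers who want to reproduce this kind of comparison on their own data, the three metrics in the table can be computed as follows. This is a minimal sketch using the standard definitions of MAE, RMSE and the coefficient of determination; the function names and the sample values are hypothetical and are not taken from the project data.

```python
import math


def mae(y_true, y_pred):
    """Mean absolute error."""
    return sum(abs(t - p) for t, p in zip(y_true, y_pred)) / len(y_true)


def rmse(y_true, y_pred):
    """Root mean squared error."""
    return math.sqrt(sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true))


def r2(y_true, y_pred):
    """Coefficient of determination: 1 - SS_res / SS_tot."""
    mean_true = sum(y_true) / len(y_true)
    ss_res = sum((t - p) ** 2 for t, p in zip(y_true, y_pred))
    ss_tot = sum((t - mean_true) ** 2 for t in y_true)
    return 1.0 - ss_res / ss_tot


# Hypothetical measured and predicted deviations (illustrative values only).
measured = [1.0, 2.0, 3.0, 4.0]
predicted = [1.5, 2.0, 2.5, 4.0]

print(mae(measured, predicted), rmse(measured, predicted), r2(measured, predicted))
```

Lower MAE and RMSE and an R² closer to 1 indicate a better fit, which is the sense in which the models in the table are ranked.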

| Models | MAE | RMSE | R² |
|---|---|---|---|
| Transformer | 1.982 | 2.334 | 0.915 |
| Transformer-BiLSTM | 0.964 | 1.527 | 0.963 |
| CNN-Attention | 2.488 | 3.259 | 0.855 |
| CCATB | 0.625 | 0.939 | 0.988 |
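
The gap between CCATB and each sub-model is easier to judge as a relative error reduction. The sketch below derives those percentages from the MAE and RMSE values in the table above; the helper `relative_reduction` and the variable names are hypothetical, and the percentages are simple arithmetic on the tabulated values rather than figures reported by the authors.

```python
# (MAE, RMSE) pairs copied from the sub-model table above.
sub_models = {
    "Transformer": (1.982, 2.334),
    "Transformer-BiLSTM": (0.964, 1.527),
    "CNN-Attention": (2.488, 3.259),
}
ccatb_mae, ccatb_rmse = 0.625, 0.939


def relative_reduction(reference, value):
    """Percentage by which `value` is lower than `reference`."""
    return (reference - value) / reference * 100.0


reductions = {
    name: (
        relative_reduction(ref_mae, ccatb_mae),
        relative_reduction(ref_rmse, ccatb_rmse),
    )
    for name, (ref_mae, ref_rmse) in sub_models.items()
}

for name, (mae_cut, rmse_cut) in reductions.items():
    print(f"{name}: MAE -{mae_cut:.1f}%, RMSE -{rmse_cut:.1f}%")
```

Even against the strongest sub-model, Transformer-BiLSTM, the full model lowers MAE by roughly 35% and RMSE by roughly 39%.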

| Parameters | Tunnel Section 1 | Tunnel Section 2 |
|---|---|---|
| Tunnel buried depth/m | 15.8 | 16.4 |
| Maximum thrust/kN | 14,400 | 15,230 |
| Maximum torque/kN·m | 9080 | 9521 |
| Stratigraphic conditions | ④4 gravelly sand and ⑤3 medium-coarse sand layers | ⑤3 medium-coarse sand layers |
| Recording frequency of shield tunneling parameters (per min) | 1 | 1 |

| Models | MAE | RMSE | R² |
|---|---|---|---|
| RF | 4.268 | 4.897 | 0.712 |
| LSTM | 3.389 | 3.994 | 0.768 |
| CNN-BiLSTM | 1.368 | 1.878 | 0.950 |
| LSTM-Attention | 2.154 | 2.602 | 0.895 |
| CCATB | 0.745 | 0.991 | 0.968 |

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Dong, M.; Chen, C.; Zhong, F.; Jia, P. A Novel Hybrid Deep Learning for Attitude Prediction in Sustainable Application of Shield Machine. Sustainability 2025, 17, 10604. https://doi.org/10.3390/su172310604

Dong M, Chen C, Zhong F, Jia P. A Novel Hybrid Deep Learning for Attitude Prediction in Sustainable Application of Shield Machine. Sustainability. 2025; 17(23):10604. https://doi.org/10.3390/su172310604

Chicago/Turabian StyleDong, Manman, Cheng Chen, Fanwei Zhong, and Pengjiao Jia. 2025. "A Novel Hybrid Deep Learning for Attitude Prediction in Sustainable Application of Shield Machine" Sustainability 17, no. 23: 10604. https://doi.org/10.3390/su172310604

APA StyleDong, M., Chen, C., Zhong, F., & Jia, P. (2025). A Novel Hybrid Deep Learning for Attitude Prediction in Sustainable Application of Shield Machine. Sustainability, 17(23), 10604. https://doi.org/10.3390/su172310604