Diffusion-Driven Time-Series Forecasting to Support Sustainable River Ecosystems and SDG-Aligned Water-Resource Governance in Thailand

Abstract

1. Introduction

- (1) A multi-scale diffusion architecture that jointly models coarse and fine temporal structures for improved representational fidelity.

- (2) A progressive denoising mechanism guided by coarse-level trends, which improves stability against noise.

- (3) A comprehensive empirical evaluation comparing the Multi-Scale Diffusion Forecaster (MDF) with both deterministic and diffusion-based baselines.

- (4) A fully reproducible, open-source implementation covering multi-scale trend extraction, forward/reverse diffusion, conditioning networks, and inference modules.

2. Preliminaries

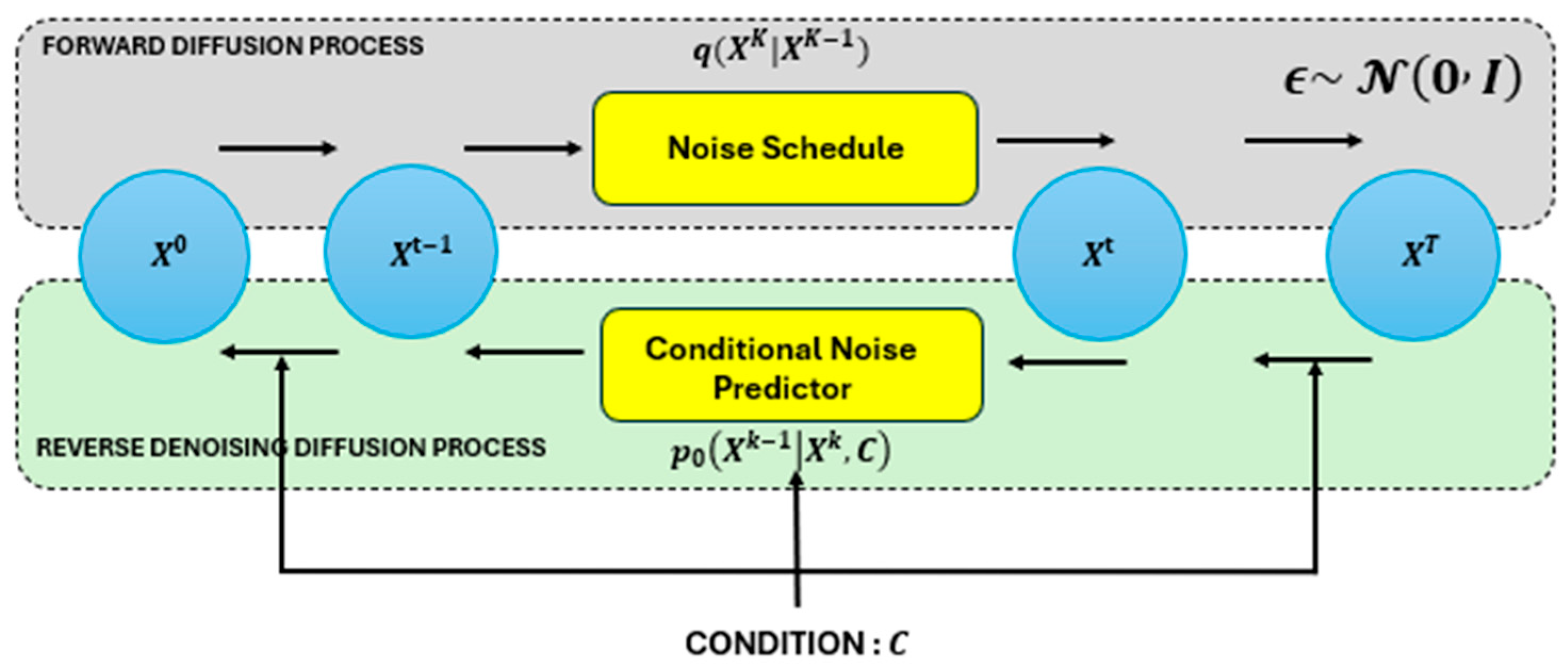

Diffusion Probabilistic Models

3. Related Works

4. Proposed Methods

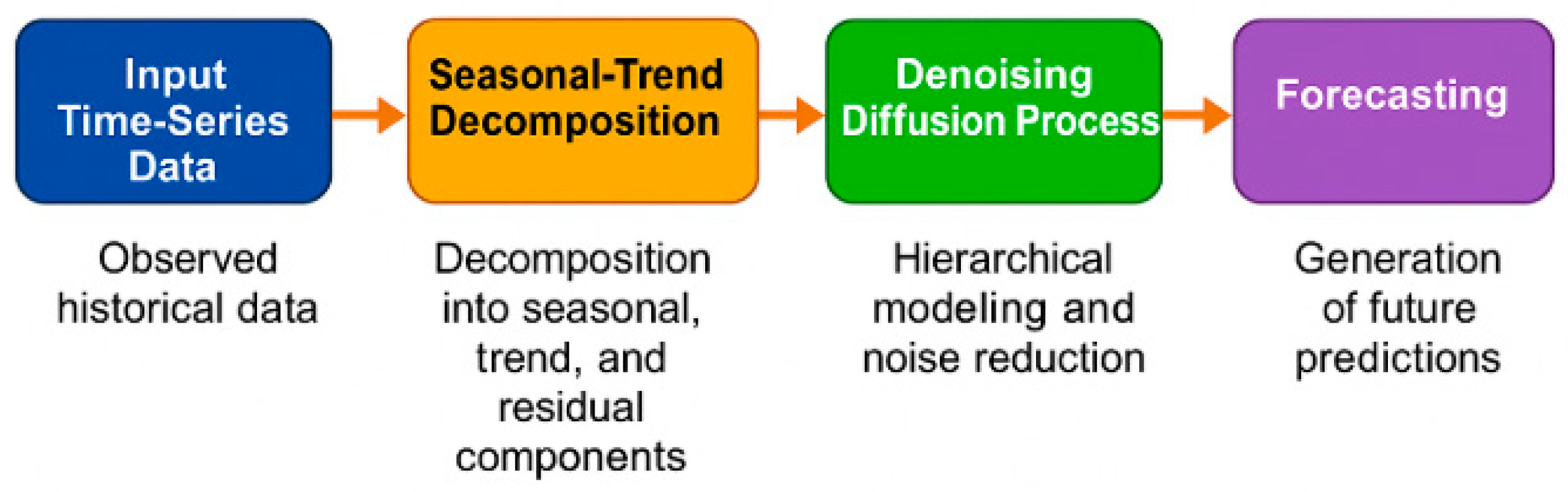

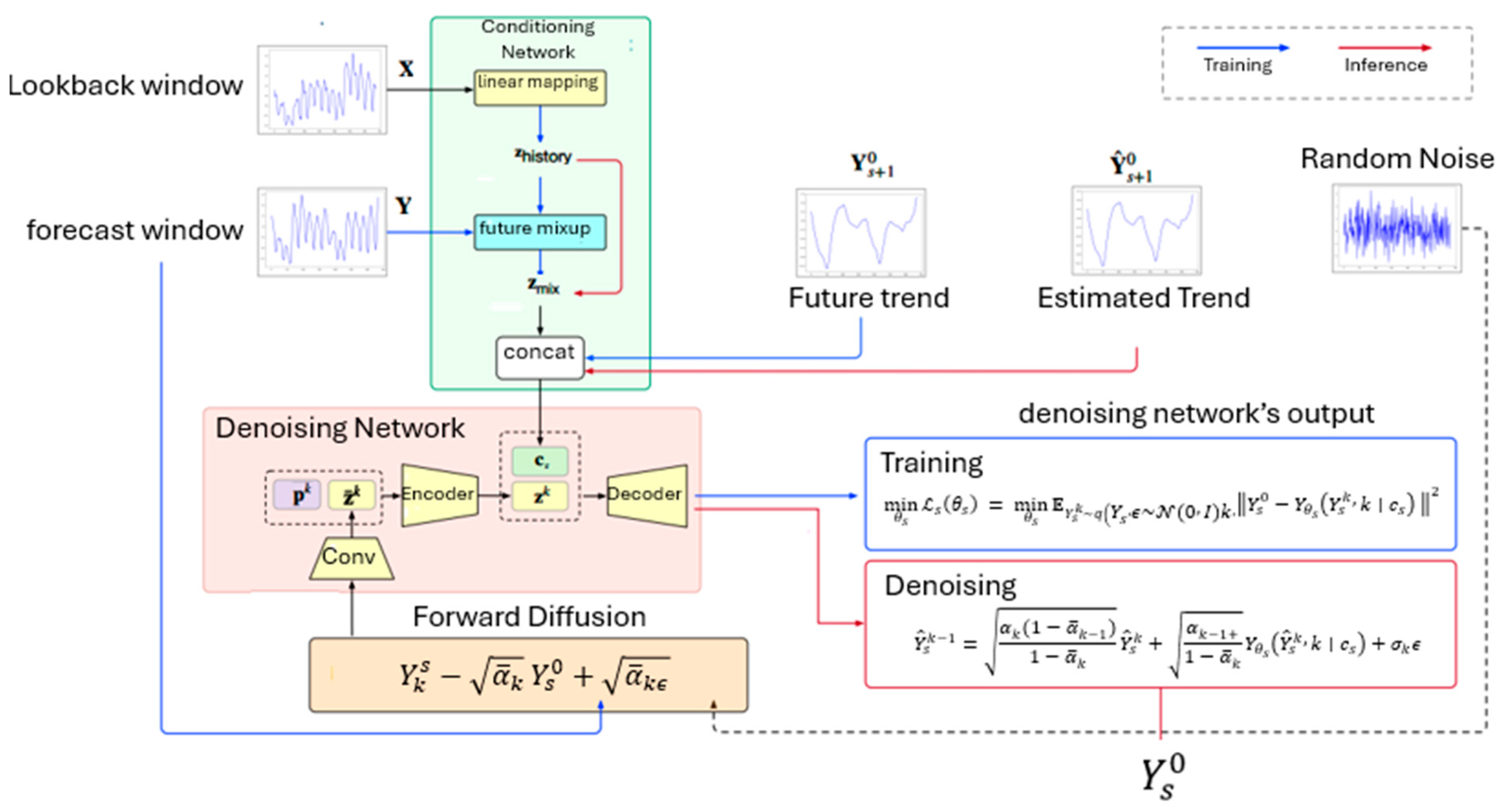

4.1. Multi-Scale Diffusion Forecaster (MDF)

4.2. Trends Extraction Module
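
As a rough illustration of what a multi-scale trend extraction step can look like, the sketch below smooths a series repeatedly with moving averages of increasing width, so that each stage is a coarser view of the previous one. The moving-average choice, the edge padding, the kernel widths, and the function names `moving_average` and `extract_trends` are all assumptions made for illustration; they are not taken from the paper.

```python
import numpy as np

def moving_average(x: np.ndarray, kernel: int) -> np.ndarray:
    """Smooth a 1-D series with an edge-padded moving average of odd width."""
    if kernel < 1 or kernel % 2 == 0:
        raise ValueError("kernel must be a positive odd integer")
    pad = kernel // 2
    padded = np.pad(x, pad, mode="edge")      # repeat boundary values
    window = np.ones(kernel) / kernel
    return np.convolve(padded, window, mode="valid")  # same length as x

def extract_trends(x, kernels=(5, 25)):
    """Return [finest, ..., coarsest]; each stage smooths the previous one.

    The kernel widths are hypothetical defaults, not values from the paper.
    """
    trends = [np.asarray(x, dtype=float)]
    for k in kernels:
        trends.append(moving_average(trends[-1], k))
    return trends

# Example: a noisy sine wave and its two coarser trends.
rng = np.random.default_rng(0)
t = np.arange(200)
series = np.sin(2 * np.pi * t / 50) + 0.3 * rng.standard_normal(200)
stages = extract_trends(series)
```

Every stage keeps the original length, which makes it straightforward to condition a finer stage on a coarser one.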

4.3. Modified Reverse Denoising Process

4.4. Conditional and Denoising Network

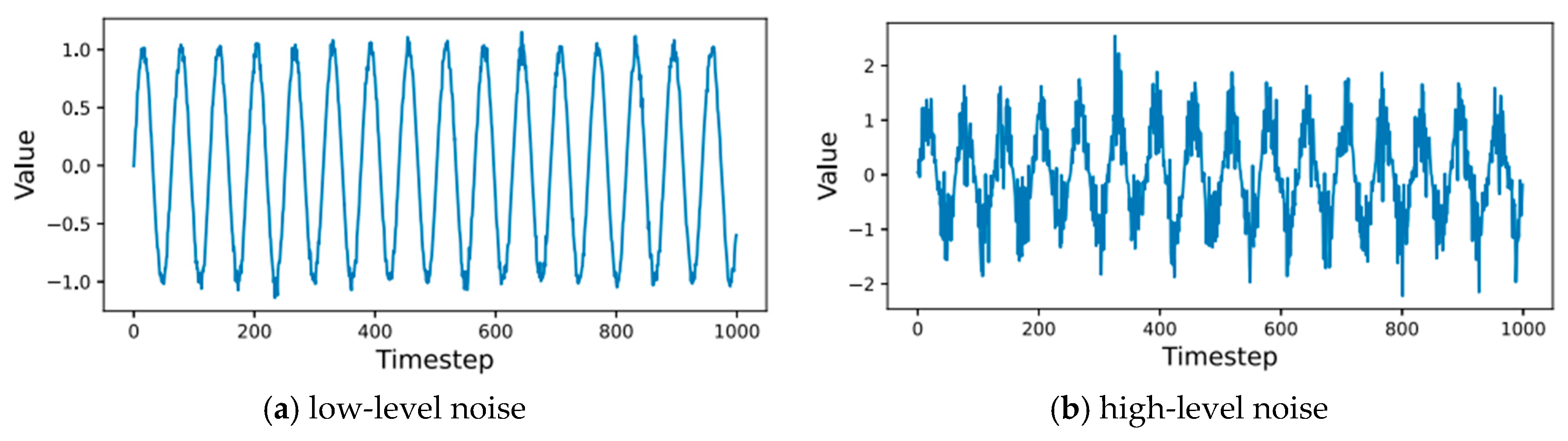

4.5. Synthetic Dataset Configuration

- Laplace noise series: scale b = 0.5, μ = 0, representing heavy-tailed noise.

- Heteroskedastic series: variance $\sigma_t^2 = 0.1 + 0.05\,\sin(2\pi t/500)$; mapping $f(x_{t-1}) = 0.7\,x_{t-1} + 0.2\,x_{t-1}^{3} + \varepsilon_t$.
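
The two synthetic configurations above can be sketched roughly as follows. The sketch assumes the garbled mapping reads as a cubic term in the previous value, and that the noise term is Gaussian with the stated time-varying variance. Because the recursion x = 0.7x + 0.2x³ has unstable fixed points at |x| ≈ 1.22, an unclipped simulation can diverge, so the code clips the state to a hypothetical bound `clip`. That safeguard, the seeds, and the function names are assumptions, not part of the source.

```python
import numpy as np

def laplace_noise(n, scale=0.5, loc=0.0, seed=0):
    """Heavy-tailed Laplace noise with location mu = loc and scale b = scale."""
    rng = np.random.default_rng(seed)
    return rng.laplace(loc=loc, scale=scale, size=n)

def heteroskedastic_series(n, clip=1.0, seed=0):
    """Nonlinear autoregression with sinusoidally varying noise variance.

    `clip` is a hypothetical safeguard that keeps the cubic recursion bounded.
    """
    rng = np.random.default_rng(seed)
    t = np.arange(n)
    var = 0.1 + 0.05 * np.sin(2 * np.pi * t / 500)   # sigma_t^2
    eps = rng.standard_normal(n) * np.sqrt(var)       # eps_t ~ N(0, sigma_t^2)
    x = np.zeros(n)
    for i in range(1, n):
        nxt = 0.7 * x[i - 1] + 0.2 * x[i - 1] ** 3 + eps[i]
        x[i] = np.clip(nxt, -clip, clip)
    return x, var

noise = laplace_noise(20000)
x, var = heteroskedastic_series(2000)
```

The variance stays between 0.05 and 0.15, so the noise standard deviation oscillates slowly over a 500-step period.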

4.6. Ablation Study

- (i) Number of stages S ∈ {2, 3, 4};

- (ii) Embedding dimension d ∈ {64, 128, 256};

- (iii) Forecast horizon H ∈ {12, 24, 48}.
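
One way to enumerate these ablation settings is a full factorial grid, sketched below. Whether the study crossed all three factors or varied one at a time is not stated here, so the full grid is an assumption, as are the dictionary keys.

```python
from itertools import product

STAGES = (2, 3, 4)          # S
EMBED_DIMS = (64, 128, 256) # d
HORIZONS = (12, 24, 48)     # H

def ablation_grid():
    """Return every (S, d, H) combination as a config dictionary."""
    return [
        {"stages": s, "embed_dim": d, "horizon": h}
        for s, d, h in product(STAGES, EMBED_DIMS, HORIZONS)
    ]

grid = ablation_grid()
print(len(grid))  # 27 configurations
```

A one-factor-at-a-time design would instead need only seven runs around a fixed baseline, at the cost of missing interactions between factors.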

5. Dataset

5.1. Synthetic Datasets

5.2. Real-World Datasets

6. Evaluation Setting

6.1. LSTM Baseline for Comparison

6.2. Evaluation Metrics

7. Experimental Results

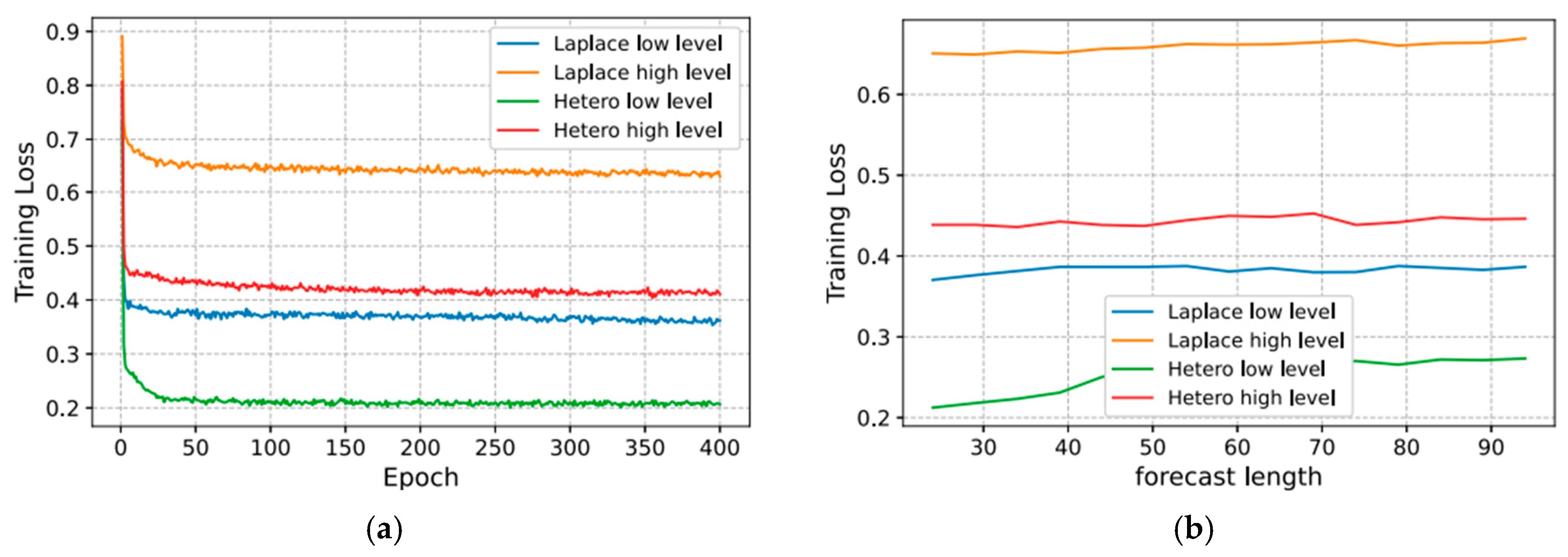

7.1. Influence of Noise Level and Forecast Length on Loss in the Diffusion Model

7.2. Forecasting Evaluation on Synthetic Datasets

7.3. Forecasting Evaluation on Real-World Datasets

8. Discussion

9. Conclusions

- Anticipate contamination events and deploy mitigation resources early;

- Protect public health and freshwater ecosystems;

- Enhance resilience of river systems against climate-induced variability and inform evidence-based environmental policy and planning.

9.1. Sustainability and Policy Implications

9.2. Limitations

- (1) Computational cost increases with the number of diffusion steps and scales.

- (2) Incomplete seasonal modeling may limit accuracy for highly periodic data.

- (3) Underperformance at short horizons suggests that diffusion's iterative reconstruction may introduce temporal lag.

- (4) The present study evaluates single-station training; cross-station and multi-horizon generalization require further exploration.

9.3. Future Work

- (1) Adaptive noise scheduling conditioned on signal variance;

- (2) Hybrid MDF-transformer architectures for long-term dependencies;

- (3) Explicit disentanglement of seasonal-trend residuals;

- (4) Multi-task learning to unify imputation and forecasting.

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Appendix A

Algorithm A1. Multi-scale denoising process

Input: time series segments; number of diffusion steps; number of scales.

Procedure:

Initialization: extract multi-scale trend components.

Training loop (repeat until convergence):

- 2.1. Sample the diffusion step index k.
- 2.2. Sample Gaussian noise ϵ ∼ N(0, I).
- 2.3. Generate the diffused sample.
- 2.4. Obtain the diffusion embedding (Equation (6)).
- 2.5. Randomly generate the matrix (Equation (9)).
- 2.6. Compute the historical mapping.
- 2.7. Compute the mixed representation.
- 2.8. Form the condition vector (two cases, If/Else).
- 2.9. Compute the loss at step k (Equation (14)).
- 2.10. Update the parameters.

Convergence: continue until training stabilizes.
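
Steps 2.1 to 2.3 of Algorithm A1 can be illustrated with the standard DDPM forward process, in which the diffused sample is a weighted mix of the clean target and Gaussian noise. The sketch below assumes that standard formulation with a linear noise schedule. The schedule endpoints, the number of steps, and the function names are hypothetical, and the conditioning and loss steps (2.4 to 2.10) are left out because their equations are not reproduced in this text.

```python
import numpy as np

def make_schedule(num_steps, beta_start=1e-4, beta_end=0.1):
    """Linear beta schedule (an assumed choice) and cumulative alpha products."""
    betas = np.linspace(beta_start, beta_end, num_steps)
    alpha_bar = np.cumprod(1.0 - betas)
    return betas, alpha_bar

def diffuse(x0, k, alpha_bar, rng):
    """Steps 2.2-2.3: draw eps ~ N(0, I) and return (x_k, eps); k is 1-indexed."""
    eps = rng.standard_normal(x0.shape)
    a = alpha_bar[k - 1]
    xk = np.sqrt(a) * x0 + np.sqrt(1.0 - a) * eps
    return xk, eps

rng = np.random.default_rng(0)
betas, alpha_bar = make_schedule(100)
x0 = np.sin(np.linspace(0, 4 * np.pi, 48))   # toy forecast target
k = int(rng.integers(1, 101))                 # step 2.1: k uniform in 1..100
xk, eps = diffuse(x0, k, alpha_bar, rng)
```

Because the cumulative product decreases with k, larger step indices yield samples that are closer to pure noise.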

Algorithm A2. Multi-scale inference process

Input: lookback sequence.

Output: final reconstructed sequence.

Extract trend components.

Outer While loop:

- 2.1. Compute the historical embedding.
- 2.2. Form the condition (two cases, If/Else).
- 2.3. Initialize the diffusion variable.
- 2.4. Inner While loop: compute the step embedding using Equation (8), then update the denoised output by Equation (13).

Return the final reconstructed sequence.
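
The nested loop structure of Algorithm A2 can be sketched as a coarse-to-fine procedure in which each stage starts from Gaussian noise, is denoised step by step, and passes its result to the next finer stage as extra conditioning. The code below is only a structural sketch: it assumes the standard DDPM posterior update in place of Equation (13), and `toy_denoiser` is a hypothetical stand-in for the trained network that simply repeats the last observed value.

```python
import numpy as np

def reverse_step(xk, x0_hat, k, betas, alpha_bar, rng):
    """One DDPM posterior step x_k -> x_{k-1} given an estimate of x_0."""
    beta_k, ab_k = betas[k - 1], alpha_bar[k - 1]
    ab_prev = alpha_bar[k - 2] if k > 1 else 1.0
    coef_x0 = np.sqrt(ab_prev) * beta_k / (1.0 - ab_k)
    coef_xk = np.sqrt(1.0 - beta_k) * (1.0 - ab_prev) / (1.0 - ab_k)
    var = (1.0 - ab_prev) / (1.0 - ab_k) * beta_k
    noise = rng.standard_normal(xk.shape) if k > 1 else 0.0  # no noise at k = 1
    return coef_x0 * x0_hat + coef_xk * xk + np.sqrt(var) * noise

def toy_denoiser(xk, k, history, coarser):
    """Hypothetical stand-in for the trained network; ignores xk and k."""
    base = np.full(xk.shape, history[-1])        # persistence forecast
    return base if coarser is None else 0.5 * (base + coarser)

def multi_scale_inference(histories, horizon, num_steps=50, seed=0):
    """histories[0] is the finest lookback window, histories[-1] the coarsest."""
    rng = np.random.default_rng(seed)
    betas = np.linspace(1e-4, 0.1, num_steps)    # assumed linear schedule
    alpha_bar = np.cumprod(1.0 - betas)
    forecast = None
    for s in reversed(range(len(histories))):    # outer loop: coarse to fine
        x = rng.standard_normal(horizon)         # initialize from N(0, I)
        for k in range(num_steps, 0, -1):        # inner loop: reverse diffusion
            x0_hat = toy_denoiser(x, k, histories[s], forecast)
            x = reverse_step(x, x0_hat, k, betas, alpha_bar, rng)
        forecast = x                             # condition the next finer stage
    return forecast

fine = np.array([1.0, 2.0, 3.0])
coarse = np.array([1.5, 2.0, 2.5])
out = multi_scale_inference([fine, coarse], horizon=12)
```

The total number of denoiser calls is the number of stages times the number of diffusion steps, which is why inference cost grows with both.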


| Dataset Name | Methods | MSE | MAE | MAPE | sMAPE | CRPS |
|---|---|---|---|---|---|---|
| Laplace Low-level | LSTM | 0.0893 | 0.21185 | 410.1492 | 48.22200 | 0.3629 |
| Laplace Low-level | MDF | 0.0988 | 0.25460 | 438.0631 | 64.47935 | 0.2546 |
| Laplace High-level | LSTM | 0.52440 | 0.51110 | 562.457 | 274.2802 | 0.84835 |
| Laplace High-level | MDF | 0.78375 | 0.70015 | 486.609 | 119.4255 | 0.70015 |
| Hetero Low-level | LSTM | 0.02280 | 0.11115 | 3432.831 | 24.90425 | 0.17670 |
| Hetero Low-level | MDF | 0.01995 | 0.11020 | 125.2680 | 30.82560 | 0.11020 |
| Hetero High-level | LSTM | 0.13015 | 0.26030 | 306.1784 | 71.0752 | 0.40470 |
| Hetero High-level | MDF | 0.14820 | 0.31255 | 166.5322 | 47.6007 | 0.32395 |

| Dataset Name | Methods | MSE | MAE | MAPE | sMAPE | CRPS |
|---|---|---|---|---|---|---|
| PA (TEMP) | LSTM | 1.55465 | 0.95115 | 3.09910 | 3.17050 | 0.91545 |
| PA (TEMP) | MDF | 2.04000 | 2.80075 | 3.00305 | 2.75995 | 0.90695 |
| PA (TSD) | LSTM | 34,992.15 | 158.0439 | 51.12240 | 74.46170 | 151.5729 |
| PA (TSD) | MDF | 37,029.26 | 207.3295 | 58.61005 | 81.95105 | 191.7974 |
| MK (TEMP) | LSTM | 1.39485 | 0.91205 | 2.9512 | 3.0209 | 0.90950 |
| MK (TEMP) | MDF | 2.12160 | 2.42420 | 4.3673 | 2.6452 | 1.41695 |
| MK (TSD) | LSTM | 10,449.03 | 86.25970 | 42.52635 | 57.26110 | 80.68965 |
| MK (TSD) | MDF | 24,054.05 | 217.9681 | 20.17900 | 33.90055 | 47.92640 |
| KN (TEMP) | LSTM | 1.66515 | 0.90865 | 2.85940 | 2.94100 | 0.95965 |
| KN (TEMP) | MDF | 3.76720 | 7.93475 | 4.54495 | 4.99035 | 1.66515 |
| KN (TSD) | LSTM | 14,337.38 | 110.1677 | 52.7476 | 76.52380 | 105.5513 |
| KN (TSD) | MDF | 34,243.71 | 388.0956 | 46.8401 | 28.48775 | 76.93605 |
| KY (TEMP) | LSTM | 2.02555 | 1.1322 | 3.60995 | 3.71535 | 1.00300 |
| KY (TEMP) | MDF | 1.75950 | 1.4161 | 2.98095 | 2.87810 | 0.96305 |
| KY (TSD) | LSTM | 3305.91 | 52.67365 | 30.78870 | 37.66520 | 46.22640 |
| KY (TSD) | MDF | 16,599.96 | 80.93275 | 30.34245 | 18.78415 | 43.63305 |
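
For readers who want to compute the five metrics reported in the tables above, the sketch below gives common textbook definitions. Definitions of MAPE and sMAPE vary between papers, and the exact variants used for these tables are not shown here, so the formulas, the `eps` guard against division by zero, and the sample-based CRPS estimator are assumptions rather than the authors' evaluation code.

```python
import numpy as np

def _arr(a):
    return np.asarray(a, dtype=float)

def mse(y, yhat):
    return float(np.mean((_arr(y) - _arr(yhat)) ** 2))

def mae(y, yhat):
    return float(np.mean(np.abs(_arr(y) - _arr(yhat))))

def mape(y, yhat, eps=1e-8):
    """Mean absolute percentage error, in percent."""
    y, yhat = _arr(y), _arr(yhat)
    return float(100.0 * np.mean(np.abs(y - yhat) / np.maximum(np.abs(y), eps)))

def smape(y, yhat, eps=1e-8):
    """Symmetric MAPE in percent (variant bounded by 200)."""
    y, yhat = _arr(y), _arr(yhat)
    denom = np.maximum(np.abs(y) + np.abs(yhat), eps)
    return float(100.0 * np.mean(2.0 * np.abs(y - yhat) / denom))

def crps_from_samples(y, samples):
    """Sample-based CRPS: E|X - y| - 0.5 * E|X - X'|, averaged over time.

    `samples` has shape (num_samples, num_timesteps).
    """
    y, samples = _arr(y), _arr(samples)
    term1 = np.abs(samples - y).mean(axis=0)
    pairwise = np.abs(samples[:, None, :] - samples[None, :, :])
    term2 = 0.5 * pairwise.mean(axis=(0, 1))
    return float(np.mean(term1 - term2))

y_true = [1.0, 2.0, 4.0]
y_pred = [1.0, 3.0, 2.0]
print(mse(y_true, y_pred), mae(y_true, y_pred))
```

With a single forecast sample the pairwise term vanishes, so this CRPS estimate reduces to the MAE. Percentage metrics become very large when the true values are close to zero.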

| Sustainability Domain | Contribution of Proposed MDF Model |
|---|---|
| Public health and safe water | Early detection of pollution risk for communities |
| Ecosystem preservation | Protects biodiversity through proactive water-quality surveillance |
| Climate adaptation | Supports resilience planning under hydrological uncertainty |
| Sustainable development policy | Aligns with SDGs 6, 13, and 14 for clean water and ecosystem protection |
| Resource optimization | Supports cost-efficient monitoring and intervention scheduling |


© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Rattanatheerawon, W.; Fooprateepsiri, R. Diffusion-Driven Time-Series Forecasting to Support Sustainable River Ecosystems and SDG-Aligned Water-Resource Governance in Thailand. Sustainability 2025, 17, 10295. https://doi.org/10.3390/su172210295
