Impact of a Contextualized AI and Entrepreneurship-Based Training Program on Teacher Learning in the Ecuadorian Amazon

Abstract

1. Introduction

2. Materials and Methods

2.1. Research Design

2.2. Participants and Context

2.3. Intervention Program

2.4. Instruments

2.5. Procedure

- Pretest: The 22-item questionnaire was administered to the 39 participating teachers to establish a baseline. Additionally, three open-ended diagnostic questions invited reflections on preparation, challenges, and expectations related to entrepreneurship and AI.

- Implementation of the training program: The three structured modules described in Section 2.3 were delivered over seven sessions (40 h in total).

- Post-test: The questionnaire was administered again under equivalent conditions. Five additional open-ended questions were included, focusing on teachers’ perceptions of the training, their preferred uses of AI, perceived barriers, the supportive roles of AI, and their preferred applications of AI tools.
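Because the same questionnaire is administered twice, the paired analyses reported later require each teacher's pretest and post-test responses to be matched. A minimal Python sketch of that bookkeeping follows. The dictionary layout, the teacher IDs (`"T01"`, `"T02"`, `"T03"`), and the helper names `pair_records` and `unmatched` are hypothetical and are not taken from the study's dataset.

```python
from typing import Dict, List, Tuple

N_ITEMS = 22  # length of the questionnaire described above

Responses = Dict[str, List[int]]  # hypothetical layout: teacher ID -> item scores


def pair_records(pre: Responses, post: Responses) -> List[Tuple[str, List[int], List[int]]]:
    """Return (teacher_id, pre_scores, post_scores) for teachers present in both waves."""
    paired = []
    for teacher_id in sorted(pre.keys() & post.keys()):
        pre_scores, post_scores = pre[teacher_id], post[teacher_id]
        if len(pre_scores) != N_ITEMS or len(post_scores) != N_ITEMS:
            raise ValueError(f"{teacher_id}: expected {N_ITEMS} item scores per wave")
        paired.append((teacher_id, pre_scores, post_scores))
    return paired


def unmatched(pre: Responses, post: Responses) -> List[str]:
    """Teacher IDs found in only one wave; these cannot enter a paired test."""
    return sorted(pre.keys() ^ post.keys())


# Hypothetical data: T02 has no post-test record.
pre = {"T01": [3] * N_ITEMS, "T02": [2] * N_ITEMS, "T03": [4] * N_ITEMS}
post = {"T01": [4] * N_ITEMS, "T03": [5] * N_ITEMS}
pairs = pair_records(pre, post)
print([teacher_id for teacher_id, _, _ in pairs])  # ['T01', 'T03']
print(unmatched(pre, post))  # ['T02']
```

Keeping unmatched IDs visible, rather than silently dropping them, makes it easy to report how many of the participating teachers actually contribute to each paired comparison.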

2.6. Data Analysis

3. Results and Discussion

3.1. Validation of the Quantitative Data Collection Instrument

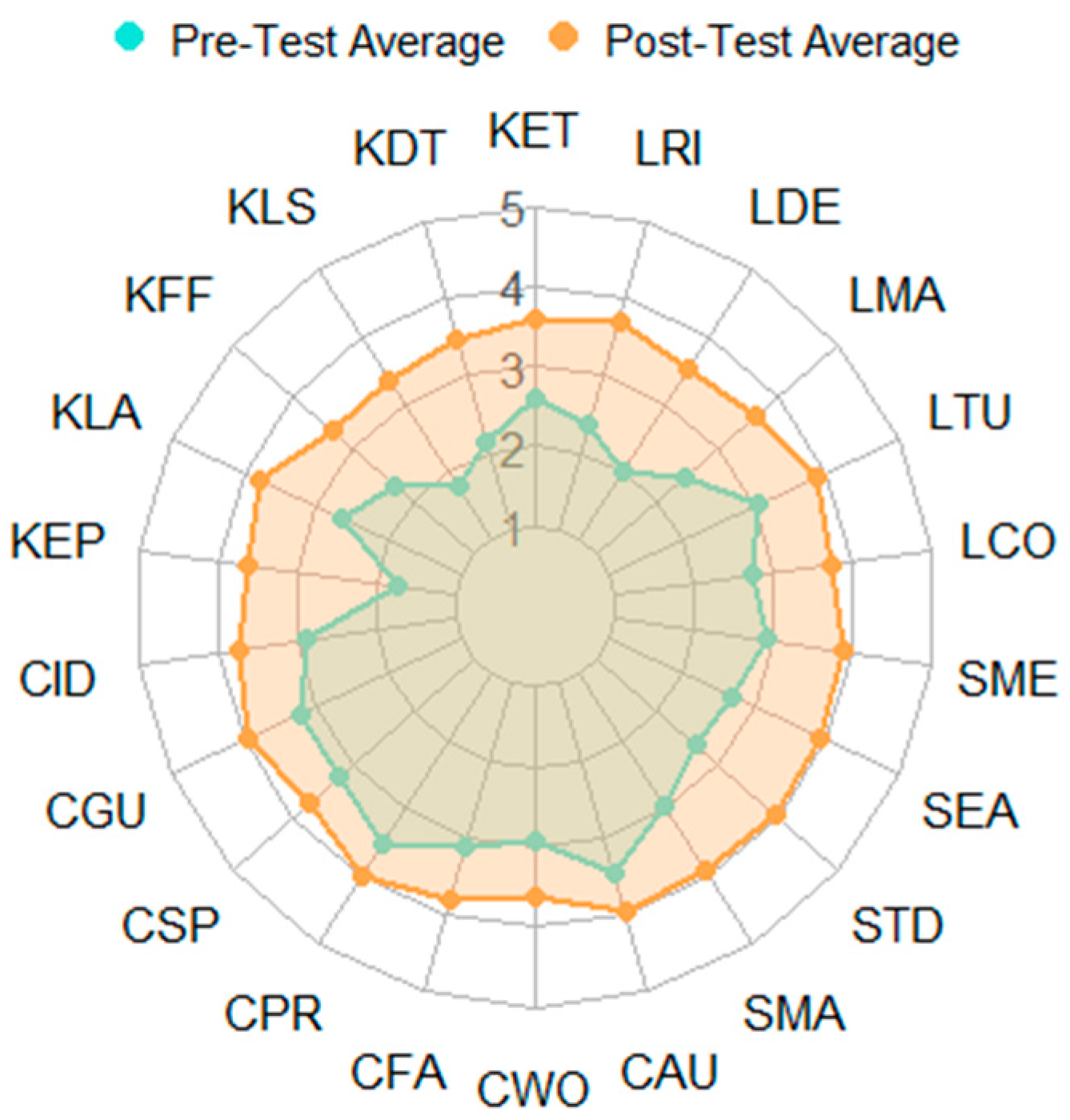

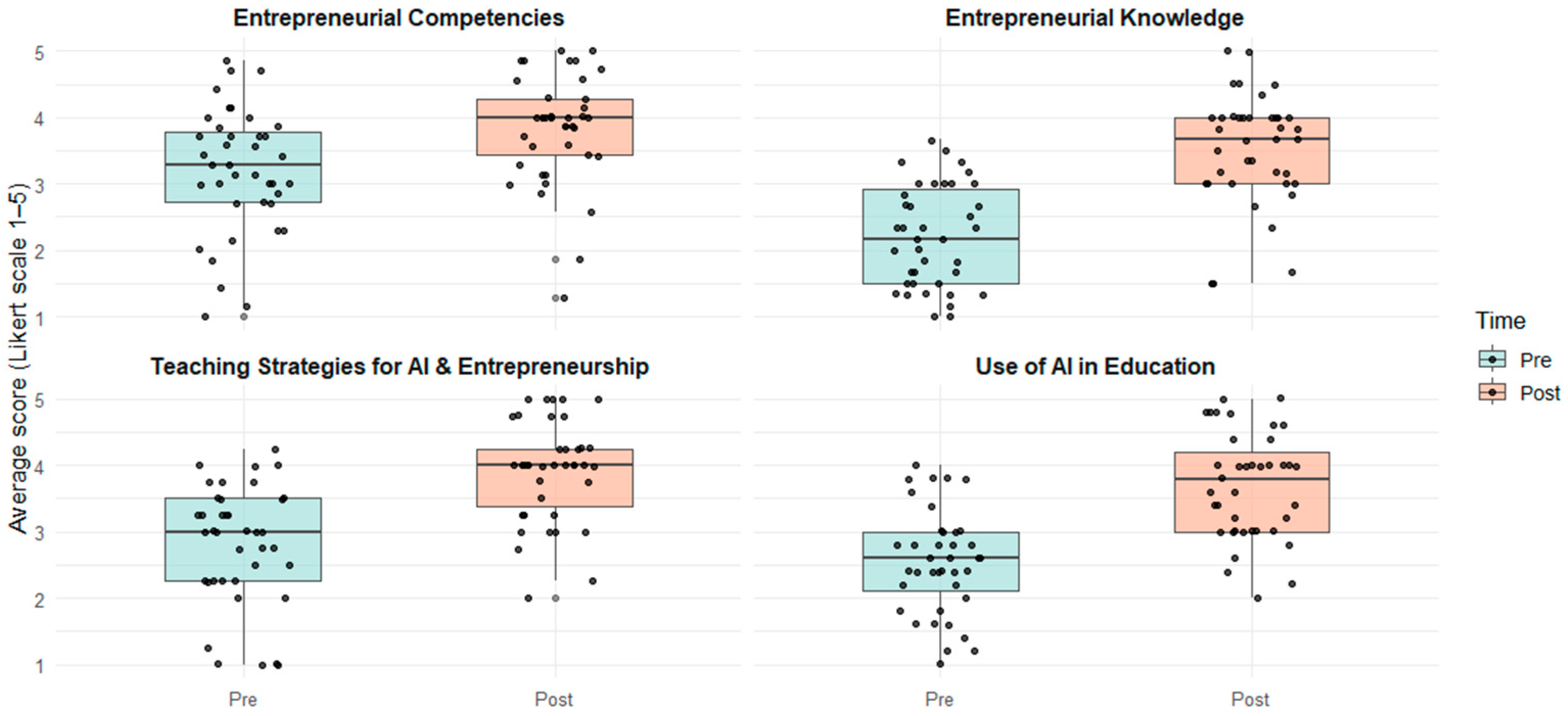

3.2. Quantitative Effects Analysis

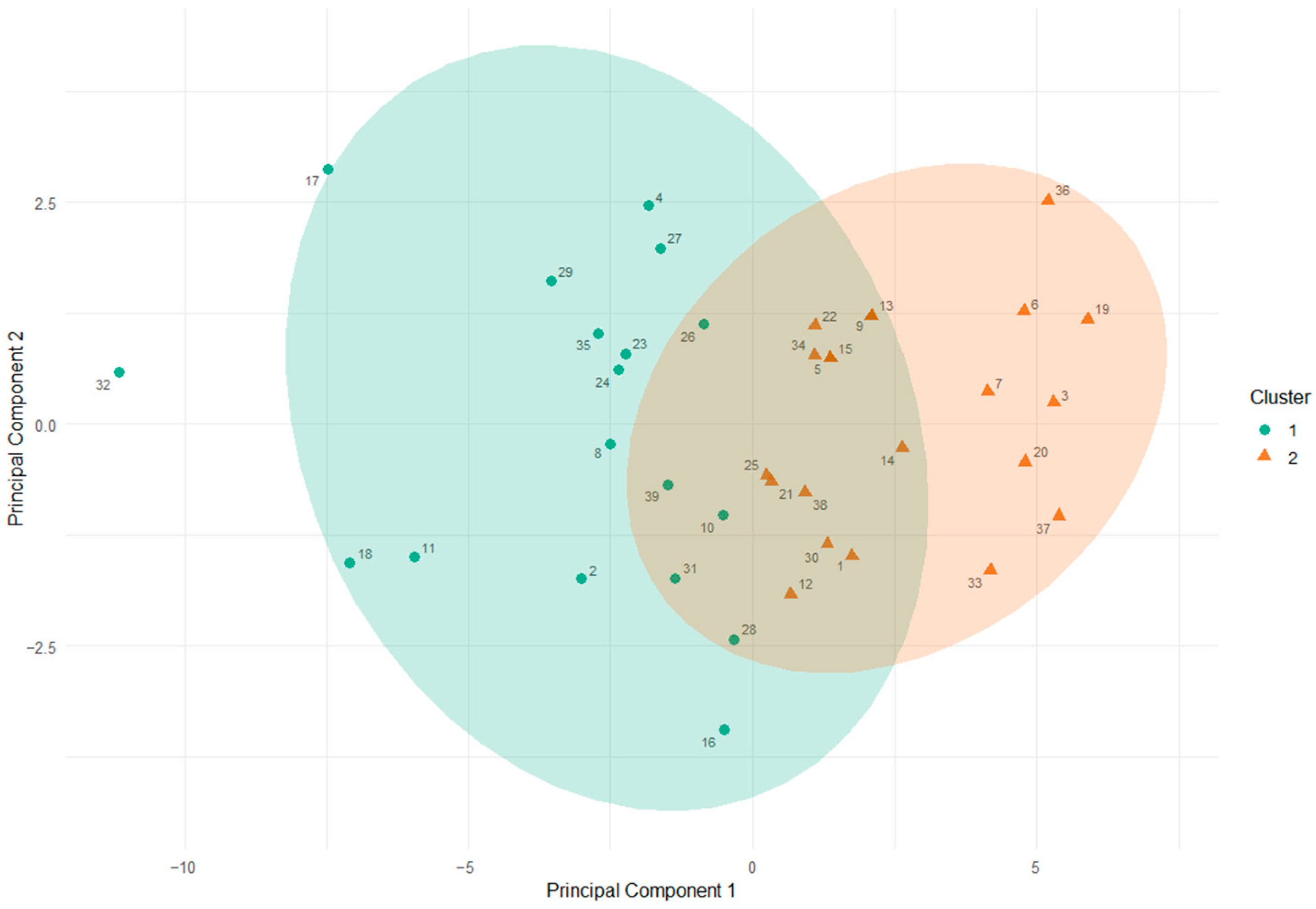

3.3. Multivariate Analysis: Teacher Profiles and Latent Patterns

3.3.1. Emerging Teacher Profiles (K-Means Clustering)

- Group 1 (green circle) is composed of teachers who demonstrate greater development in entrepreneurial skills and pedagogical strategies, but are only just beginning to utilize AI tools.

- Group 2 (orange triangle) exhibits a greater affinity with the technological dimension, particularly in the use of generative AI for tutoring, market analysis, and prototype design.
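The two profiles above come from K-Means clustering. As an illustration only, the sketch below implements Lloyd's algorithm with k = 2 on per-teacher dimension means (competencies, knowledge, strategies, AI integration). The choice of input features, the farthest-first initialization (a deterministic stand-in for the random or k-means++ seeding that most libraries use), and the example scores are assumptions, since these settings are not specified in the text.

```python
from typing import List, Sequence, Tuple

Point = Sequence[float]


def _dist2(a: Point, b: Point) -> float:
    """Squared Euclidean distance."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def kmeans(points: List[Point], k: int = 2, max_iter: int = 100) -> Tuple[List[int], List[List[float]]]:
    """Lloyd's K-Means with deterministic farthest-first initialization."""
    # Initialization: first point, then repeatedly the point farthest from
    # every centroid chosen so far (a simple stand-in for k-means++).
    centroids = [list(points[0])]
    while len(centroids) < k:
        far = max(points, key=lambda p: min(_dist2(p, c) for c in centroids))
        centroids.append(list(far))
    labels: List[int] = []
    for _ in range(max_iter):
        # Assignment step: each teacher goes to the nearest centroid.
        new_labels = [min(range(k), key=lambda j: _dist2(p, centroids[j])) for p in points]
        if new_labels == labels:
            break  # converged: no assignment changed
        labels = new_labels
        # Update step: move each centroid to the mean of its members.
        for j in range(k):
            members = [p for p, lab in zip(points, labels) if lab == j]
            if members:  # keep the previous centroid if a cluster is empty
                centroids[j] = [sum(col) / len(members) for col in zip(*members)]
    return labels, centroids


# Hypothetical dimension means: [competencies, knowledge, strategies, AI integration].
teachers = {
    "A": [4.2, 4.0, 3.9, 2.1],
    "B": [4.0, 4.1, 3.8, 2.3],
    "C": [3.2, 3.3, 3.5, 4.4],
    "D": [3.0, 3.1, 3.4, 4.6],
}
labels, centroids = kmeans(list(teachers.values()), k=2)
print(dict(zip(teachers, labels)))  # {'A': 0, 'B': 0, 'C': 1, 'D': 1}
```

Cluster numbers are arbitrary labels. Because all four dimensions share the same response scale, the sketch does not standardize them; standardization would be advisable if features on different scales were combined.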

3.3.2. Extreme Cases: Atypical Trajectories

- Low appropriation cases (T17, T18, T32): positioned in regions of overall low performance, possibly linked to prior gaps in continuing education, limited digital literacy, or resistance to change.

- High appropriation cases (T3, T19, T37): stand out for their high scores in AI integration, active strategies, and project leadership.
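The text does not detail how atypical cases were identified. One simple, hypothetical screening rule is to standardize each teacher's overall score and flag those beyond a z-score cutoff. The use of a single overall score, the `z_cut = 1.5` threshold, and the example values below are assumptions for illustration, not the study's criterion.

```python
from statistics import mean, stdev
from typing import Dict, List, Tuple


def flag_extremes(overall: Dict[str, float], z_cut: float = 1.5) -> Tuple[List[str], List[str]]:
    """Return (low, high) teacher IDs whose standardized score lies beyond +/- z_cut.

    Hypothetical screening rule: the cutoff and the use of a single overall
    score are illustrative assumptions.
    """
    values = list(overall.values())
    m, s = mean(values), stdev(values)
    z = {t: (x - m) / s for t, x in overall.items()}
    low = sorted(t for t, score in z.items() if score <= -z_cut)
    high = sorted(t for t, score in z.items() if score >= z_cut)
    return low, high


# Hypothetical overall post-test means for eight teachers.
overall = {"A": 2.0, "B": 3.5, "C": 3.6, "D": 3.7,
           "E": 3.5, "F": 3.6, "G": 3.4, "H": 5.0}
low, high = flag_extremes(overall)
print(low, high)  # ['A'] ['H']
```

With small samples a single extreme value inflates the standard deviation, so a flagged list like this is best treated as a prompt for closer qualitative inspection rather than a definitive classification.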

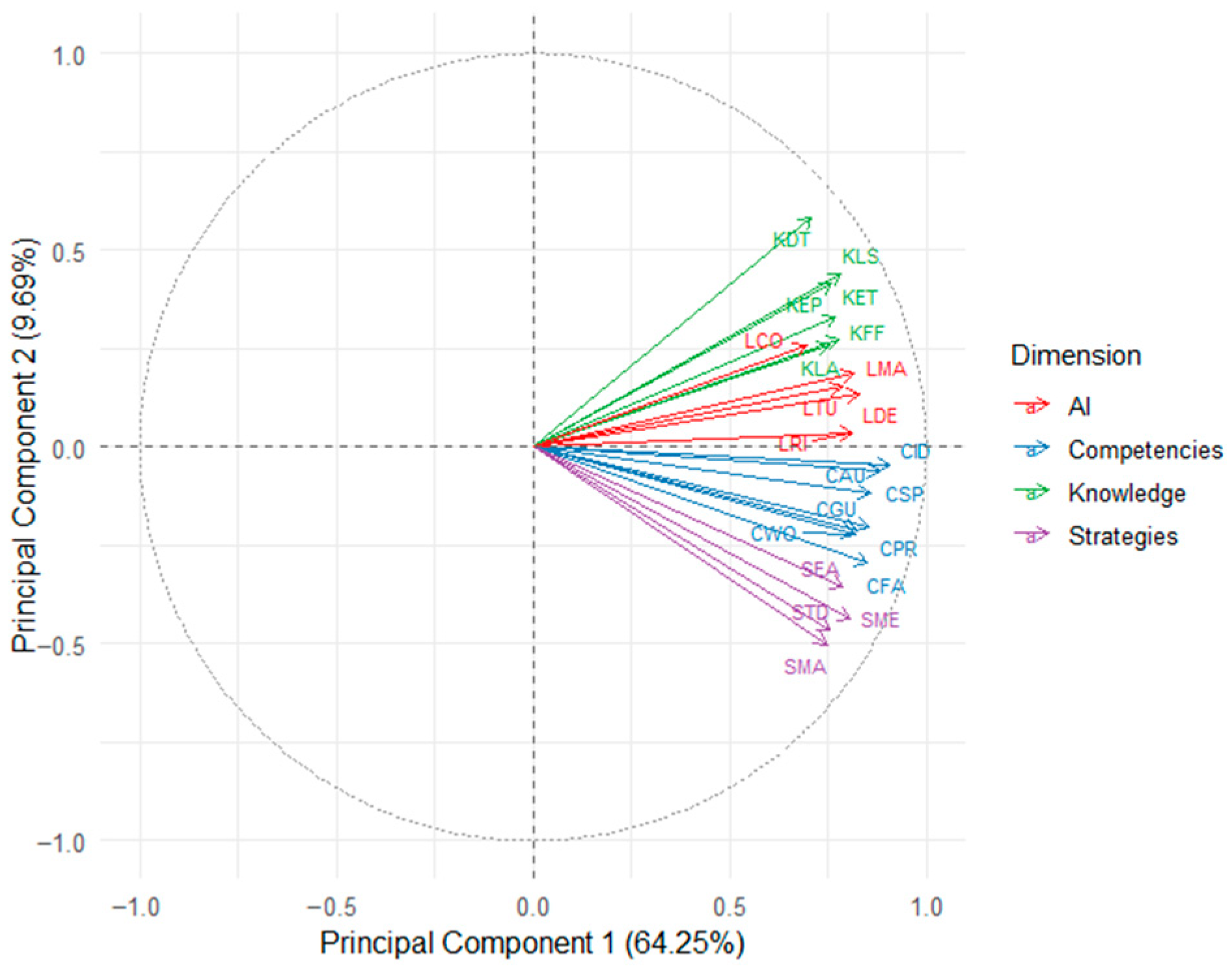

3.3.3. Factorial Structure of the Instrument (Item PCA)

- Competencies (blue) and entrepreneurial knowledge (green) items tend to align with PC1 and PC2 in a complementary manner.

- AI items (red) load strongly on PC1, reflecting their conceptual independence from traditional pedagogical dimensions.

- Methodological strategies (purple) appear more dispersed but still maintain internal coherence, indicating their multidimensional nature.
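An item PCA summarizes the item correlation matrix by its leading eigenvectors; an item's loading on a component is its eigenvector entry scaled by the square root of that component's eigenvalue. The dependency-free sketch below computes the first two components by power iteration with deflation. The function names and the small example data are hypothetical, and a library eigen-solver would normally be used in practice.

```python
import math
import random
from typing import List, Tuple

Matrix = List[List[float]]


def correlation_matrix(data: Matrix) -> Matrix:
    """Pearson correlations between items (columns) across respondents (rows)."""
    n = len(data)
    cols = list(zip(*data))
    means = [sum(c) / n for c in cols]
    sds = [math.sqrt(sum((x - m) ** 2 for x in c) / (n - 1)) for c, m in zip(cols, means)]
    z = [[(x - m) / s for x, m, s in zip(row, means, sds)] for row in data]
    p = len(cols)
    return [[sum(r[a] * r[b] for r in z) / (n - 1) for b in range(p)] for a in range(p)]


def leading_components(r: Matrix, n_comp: int = 2, iters: int = 1000,
                       seed: int = 0) -> Tuple[List[float], Matrix]:
    """Top eigenpairs of a symmetric PSD matrix via power iteration plus deflation.

    Returns (eigenvalues, loadings) with loadings[item][component]
    = eigenvector entry * sqrt(eigenvalue). Component signs are arbitrary.
    """
    p = len(r)
    m = [row[:] for row in r]  # working copy, deflated after each component
    rng = random.Random(seed)
    eigvals: List[float] = []
    eigvecs: Matrix = []
    for _ in range(n_comp):
        v = [rng.uniform(-1.0, 1.0) for _ in range(p)]
        for _ in range(iters):
            w = [sum(m[i][j] * v[j] for j in range(p)) for i in range(p)]
            norm = math.sqrt(sum(x * x for x in w))
            if norm == 0.0:
                break
            v = [x / norm for x in w]
        lam = sum(v[i] * sum(m[i][j] * v[j] for j in range(p)) for i in range(p))
        eigvals.append(lam)
        eigvecs.append(v)
        # Deflation: subtract lam * v v^T so the next pass finds the next component.
        m = [[m[i][j] - lam * v[i] * v[j] for j in range(p)] for i in range(p)]
    loadings = [[eigvecs[k][i] * math.sqrt(max(eigvals[k], 0.0)) for k in range(n_comp)]
                for i in range(p)]
    return eigvals, loadings


# Hypothetical responses: 5 respondents x 4 items.
data = [
    [1, 2, 1, 5],
    [2, 3, 2, 3],
    [3, 3, 4, 4],
    [4, 5, 4, 1],
    [5, 5, 5, 2],
]
R = correlation_matrix(data)
eigvals, loadings = leading_components(R, n_comp=2)
```

Plotting each item's (PC1, PC2) loading pair, colored by dimension, gives the kind of item map described above; only the relative positions matter, since either component can be flipped in sign.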

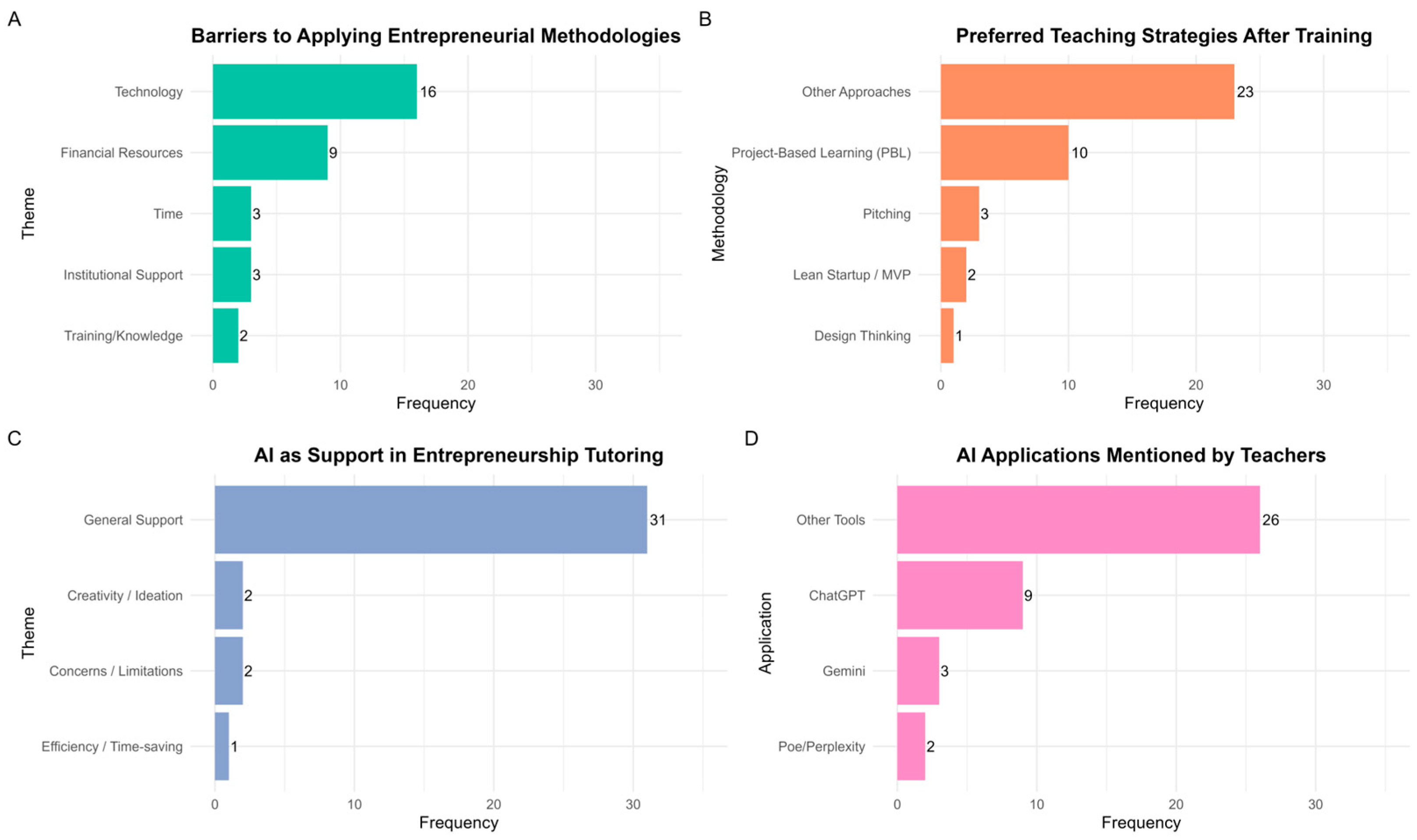

3.4. Qualitative Analysis: Perceptions, Barriers, and Appropriations

“Lack of internet, lack of access to technological devices and connectivity in students”.

“I would like to apply to the Simulation of an Entrepreneurship with Lean Startup”.

“Well, the use of AIs is a great help, and adapting to them is a great help for tutoring”.

“AI helps to a large extent to generate innovative ideas and correctly use resources and tools”.

4. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Montoya, G.; Valencia, L.; Vargas, L.; García, J.; Franco, J.; Calderón, H. Ruralidad, educación rural e identidad profesional de maestras y maestros rurales. Prax. Saber 2022, 13, e13323.

- Guárdia Ortiz, L.; Bekerman, Z.; Zapata Ros, M. Presentación del número especial “IA generativa, ChatGPT y Educación. Consecuencias para el Aprendizaje Inteligente y la Evaluación Educativa”. Rev. Educ. Distancia 2024, 5, 12–44.

- Carranza Alcántar, M. del R. Use of Active Methodologies with ICT in Teacher Training. Salud Cienc. Tecnol.-Ser. Conf. 2024, 3, 702.

- Bardales-Cárdenas, M.; Cervantes-Ramón, E.F.; Gonzales-Figueroa, I.K.; Farro-Ruiz, L.M. Entrepreneurship skills in university students to improve local economic development. J. Innov. Entrep. 2024, 13, 55.

- Ávalos, C.; Pérez-Escoda, A.; Monge, L. Lean Startup as a Learning Methodology for Developing Digital and Research Competencies. J. New Approaches Educ. Res. 2019, 8, 227–242.

- Ibrahim, U. Integration of Emerging Technologies in Teacher Education for Global Competitiveness. Int. J. Educ. Life Sci. 2024, 2, 127–138.

- Sandirasegarane, S.; Sutermaster, S.; Gill, A.; Volz, J.; Mehta, K. Context-Driven Entrepreneurial Education in Vocational Schools. Int. J. Res. Vocat. Educ. Train. 2016, 3, 106–126.

- Uchima-Marin, C.; Murillo, J.; Salvador-Acosta, L.; Acosta-Vargas, P. Integration of Technological Tools in Teaching Statistics: Innovations in Educational Technology for Sustainable Education. Sustainability 2024, 16, 8344.

- Fissore, C.; Floris, F.; Conte, M.M.; Sacchet, M. Teacher training on artificial intelligence in education. In Proceedings of the Smart Learning Environments in the Post Pandemic Era: Selected Papers from the CELDA 2022 Conference, Lisbon, Portugal, 8–10 November 2022; Springer: Berlin/Heidelberg, Germany, 2024; pp. 227–244.

- Bell, R.; Bell, H. Entrepreneurship education in the era of generative artificial intelligence. Entrep. Educ. 2023, 6, 229–244.

- Wipulanusat, W.; Panuwatwanich, K.; Stewart, R.A.; Sunkpho, J. Applying Mixed Methods Sequential Explanatory Design to Innovation Management. In Proceedings of the 10th International Conference on Engineering, Project, and Production Management, Berlin, Germany, 2–4 September 2019; Panuwatwanich, K., Ko, C.-H., Eds.; Springer: Singapore, 2020; pp. 485–495.

- Prashar, A.; Gupta, P.; Dwivedi, Y.K. Plagiarism awareness efforts, students’ ethical judgment and behaviors: A longitudinal experiment study on ethical nuances of plagiarism in higher education. Stud. High. Educ. 2023, 49, 929–955.

- Campbell, D.T.; Stanley, J.C. Experimental and Quasi-Experimental Designs for Research. In Handbook of Research on Teaching; Houghton Mifflin Company: Boston, MA, USA, 1963.

- Kefalis, C.; Skordoulis, C.; Drigas, A. Digital Simulations in STEM Education: Insights from Recent Empirical Studies, a Systematic Review. Encyclopedia 2025, 5, 10.

- Reid, J.-A. The Politics of Ethics in Rural Social Research: A Cautionary Tale. In Ruraling Education Research: Connections Between Rurality and the Disciplines of Educational Research; Roberts, P., Fuqua, M., Eds.; Springer: Singapore, 2021; pp. 247–263. ISBN 978-981-16-0131-6.

- Troyer, M. The gold standard for whom? Schools’ experiences participating in a randomised controlled trial. J. Res. Read. 2022, 45, 406–424.

- Kim, H.; Clasing-Manquian, P. Quasi-Experimental Methods: Principles and Application in Higher Education Research. In Theory and Method in Higher Education Research; Emerald Publishing: Leeds, UK, 2023; Volume 9.

- Denny, M.; Denieffe, S.; O’Sullivan, K. Non-equivalent Control Group Pretest–Posttest Design in Social and Behavioral Research. In The Cambridge Handbook of Research Methods and Statistics for the Social and Behavioral Sciences: Volume 1: Building a Program of Research; Nichols, A.L., Edlund, J., Eds.; Cambridge Handbooks in Psychology; Cambridge University Press: Cambridge, UK, 2023; pp. 314–332. ISBN 9781316518526.

- Slocum, T.A.; Pinkelman, S.E.; Joslyn, P.R.; Nichols, B. Threats to Internal Validity in Multiple-Baseline Design Variations. Perspect. Behav. Sci. 2022, 45, 619–638.

- De Santis, A.; Sannicandro, K.; Bellini, C.; Minerva, T. Trends in the use of Multivariate Analysis in Educational Research: A review of methods and applications in 2018–2022. J. E-Learn. Knowl. Soc. 2024, 20, 47–55.

- Downes, N.; Marsh, J.; Roberts, P.; Reid, J.-A.; Fuqua, M.; Guenther, J. Valuing the Rural: Using an Ethical Lens to Explore the Impact of Defining, Doing and Disseminating Rural Education Research. In Ruraling Education Research: Connections Between Rurality and the Disciplines of Educational Research; Roberts, P., Fuqua, M., Eds.; Springer: Singapore, 2021; pp. 265–285. ISBN 978-981-16-0131-6.

- Seikkula-Leino, J.; Salomaa, M.; Jónsdóttir, S.R.; McCallum, E.; Israel, H. EU Policies Driving Entrepreneurial Competences—Reflections from the Case of EntreComp. Sustainability 2021, 13, 8178.

- Kusmaryono, I.; Wijayanti, D.; Risqi, H. Number of Response Options, Reliability, Validity, and Potential Bias in the Use of the Likert Scale in Education and Social Science Research: A Literature Review. Int. J. Educ. Methodol. 2022, 8, 625–637.

- Quishpe, Q.; Miguel, L.; Irene, A.-V.; Lorena, R.-R.; Jessica, M.-A.; Daniel, C.-N.; Roldan, T.-G.; Roldan, T.-G. Dataset AI and Entrepreneurship in Amazon Teacher Training 2025. Mendeley Data 2025, V1. Available online: https://data.mendeley.com/datasets/9rmdx6z785/2 (accessed on 7 August 2025).

- Gehlbach, H.; Brinkworth, M.E. Measure Twice, Cut down Error: A Process for Enhancing the Validity of Survey Scales. Rev. Gen. Psychol. 2011, 15, 380–387.

- Wang, D.; Dong, X.; Zhong, J. Enhance College AI Course Learning Experience with Constructivism-Based Blog Assignments. Educ. Sci. 2025, 15, 217.

- Teemant, A.; Sherman, B.; Dagli, C. A Mixed Method Study of a Critical Sociocultural Coaching Intervention. In Handbook of Critical Coaching Research; Routledge: London, UK, 2024; pp. 145–162.

- Abulibdeh, A. A systematic and bibliometric review of artificial intelligence in sustainable education: Current trends and future research directions. Sustain. Futures 2025, 10, 101033.

- Ulferts, H.; Wolf, K.M.; Anders, Y. Impact of Process Quality in Early Childhood Education and Care on Academic Outcomes: Longitudinal Meta-Analysis. Child. Dev. 2019, 90, 1474–1489.

- Braun, V.; Clarke, V. Using thematic analysis in psychology. Qual. Res. Psychol. 2006, 3, 77–101.

- Borges, E.M. Hypothesis Tests and Exploratory Analysis Using R Commander and Factoshiny. J. Chem. Educ. 2023, 100, 267–278.

- Hussey, I.; Alsalti, T.; Bosco, F.; Elson, M.; Arslan, R. An aberrant abundance of Cronbach’s alpha values at .70. Adv. Methods Pract. Psychol. Sci. 2025, 8, 25152459241287124.

- Govindasamy, P.; Cumming, T.M.; Abdullah, N. Validity and reliability of a needs analysis questionnaire for the development of a creativity module. J. Res. Spec. Educ. Needs 2024, 24, 637–652.

- Collin, S.; Brotcorne, P. Capturing digital (in)equity in teaching and learning: A sociocritical approach. Int. J. Inf. Learn. Technol. 2019, 36, 169–180.

- Segal, A. Rethinking Collective Reflection in Teacher Professional Development. J. Teach. Educ. 2023, 75, 155–167.

- Rodrigues, A.L. Entrepreneurship Education Pedagogical Approaches in Higher Education. Educ. Sci. 2023, 13, 940.

- Mohammad Nezhad, P.; Stolz, S.A. Unveiling teachers’ professional agency and decision-making in professional learning: The illusion of choice. Prof. Dev. Educ. 2024, 1–21.

- Franco D’Souza, R.; Mathew, M.; Mishra, V.; Surapaneni, K.M. Twelve tips for addressing ethical concerns in the implementation of artificial intelligence in medical education. Med. Educ. Online 2024, 29, 2330250.

- Ruiz-Rojas, L.I.; Salvador-Ullauri, L.; Acosta-Vargas, P. Collaborative Working and Critical Thinking: Adoption of Generative Artificial Intelligence Tools in Higher Education. Sustainability 2024, 16, 5367.

- Cáceres-Nakiche, K.; Carcausto-Calla, W.; Yabar Arrieta, S.R.; Lino Tupiño, R.M. The SAMR Model in Education Classrooms: Effects on Teaching Practice, Facilities, and Challenges. J. High. Educ. Theory Pract. 2024, 24, 160–172.

- Mishra, P.; Koehler, M.J. Technological Pedagogical Content Knowledge: A Framework for Teacher Knowledge. Teach. Coll. Rec. 2006, 108, 1017–1054.

- Petko, D.; Mishra, P.; Koehler, M.J. TPACK in context: An updated model. Comput. Educ. Open 2025, 8, 100244.

- Alkan, A. Artificial Intelligence: Its Role and Potential in Education [Yapay Zekâ: Eğitimdeki Rolü ve Potansiyeli]. İnsan Ve Toplum. Bilim. Araştırmaları Derg. 2024, 13, 483–497.

- Korthagen, F.A.J. Situated learning theory and the pedagogy of teacher education: Towards an integrative view of teacher behavior and teacher learning. Teach. Teach. Educ. 2010, 26, 98–106.

- Gupta, N.; Khatri, K.; Malik, Y.; Lakhani, A.; Kanwal, A.; Aggarwal, S.; Dahuja, A. Exploring prospects, hurdles, and road ahead for generative artificial intelligence in orthopedic education and training. BMC Med. Educ. 2024, 24, 1544.

| Module | Sessions/Hours | Learning Objectives | Pedagogical Strategies and Tasks | Digital/Material Resources | Assessment Criteria |
|---|---|---|---|---|---|
| 1. Entrepreneurial Competencies | 2 sessions (12 h) | Introduce the EntreComp framework to promote an entrepreneurial mindset, leadership, and reflective practice. | Self-assessment activities; case analysis; structured debates on innovation challenges | EntreCompEdu platform; curated case studies; reflection guides | Completion of self-assessment matrix; quality of contributions in debates; reflective notes |
| 2. Active Methodologies | 2 sessions (14 h) | Apply Lean Startup and Design Thinking in school contexts; strengthen project-based teaching competencies. | Project Canvas design workshop; empathy-mapping exercise; collaborative problem-solving tasks | Project Canvas template; empathy maps; PBL scenarios | Submission of project drafts, peer feedback reports, and instructor evaluation of methodological alignment |
| 3. AI in Education | 3 sessions (14 h) | Develop pedagogical use of generative AI (ChatGPT, DALL·E) for ideation, prototyping, and formative feedback. | AI-assisted lesson design; guided creation of prototypes; role-play simulations for formative assessment | Generative AI platforms (ChatGPT, DALL·E); guided assignments; evaluation rubrics | Presentation of AI-supported lesson prototype; reflective journal on ethical/critical use of AI |

| Short Code | Item | Dimension |
|---|---|---|
| KET | What is your level of knowledge about key entrepreneurship concepts? | Knowledge |
| KDT | Knowledge about Design Thinking as a methodology for innovation projects? | Knowledge |
| KLS | Knowledge about Lean Startup and its application in student projects? | Knowledge |
| KFF | Knowledge about funding sources for innovative projects? | Knowledge |
| KLA | Knowledge about active learning methodologies for entrepreneurship (e.g., PBL)? | Knowledge |
| KEP | Knowledge about structuring a compelling Elevator Pitch? | Knowledge |
| CID | Competence in identifying innovation opportunities in your educational context? | Competence |
| CGU | Competence in guiding students in generating innovative ideas? | Competence |
| CSP | Competence in helping students mobilize resources for their projects? | Competence |
| CPR | Competence in fostering perseverance and motivation in work teams? | Competence |
| CFA | Competence in facilitating the management of school entrepreneurship projects? | Competence |
| CWO | Competence in working with other teachers or external actors to support projects? | Competence |
| CAU | Competence in fostering autonomy and decision-making in students? | Competence |
| SMA | Application of active methodologies (e.g., PBL, gamification) in tutoring? | Strategies |
| STD | Use of digital tools for prototyping and validation? | Strategies |
| SEA | Evaluation of projects based on impact and feasibility criteria? | Strategies |
| SME | Use of mentoring and effective feedback in student projects? | Strategies |
| LCO | Knowledge and use of AI concepts in education? | AI Integration |
| LTU | Use of ChatGPT or other generative AI for project tutoring? | AI Integration |
| LMA | Use of AI tools for market analysis and idea validation? | AI Integration |
| LDE | Use of AI for prototyping and product development (e.g., DALL·E)? | AI Integration |
| LRI | Critical reflection on risks and ethics of AI in education? | AI Integration |
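Dimension-level scores, such as those compared before and after the program, can be obtained by averaging each teacher's responses over the items of a dimension. The sketch below encodes the item-to-dimension mapping from the table above. Treating a dimension score as an unweighted item mean, the helper name `dimension_scores`, and the example responses are assumptions.

```python
from statistics import mean
from typing import Dict, List

# Item short codes per dimension, copied from the item table above.
DIMENSIONS: Dict[str, List[str]] = {
    "Knowledge": ["KET", "KDT", "KLS", "KFF", "KLA", "KEP"],
    "Competence": ["CID", "CGU", "CSP", "CPR", "CFA", "CWO", "CAU"],
    "Strategies": ["SMA", "STD", "SEA", "SME"],
    "AI Integration": ["LCO", "LTU", "LMA", "LDE", "LRI"],
}


def dimension_scores(responses: Dict[str, float]) -> Dict[str, float]:
    """Unweighted mean of one teacher's item responses within each dimension.

    Assumption: a dimension score is the simple average of its items.
    """
    missing = [code for codes in DIMENSIONS.values() for code in codes if code not in responses]
    if missing:
        raise KeyError(f"missing item responses: {missing}")
    return {dim: mean(responses[code] for code in codes) for dim, codes in DIMENSIONS.items()}


# Hypothetical teacher: 3 on every item except the AI items, which are 2.
responses = {code: 3 for codes in DIMENSIONS.values() for code in codes}
responses.update({code: 2 for code in DIMENSIONS["AI Integration"]})
scores = dimension_scores(responses)
print(scores)
```

Raising an error on missing items, rather than averaging whatever is present, keeps dimension scores comparable across teachers and between the two waves.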

| Dimension | Cronbach’s α (Pre-Test) | Cronbach’s α (Post-Test) |
|---|---|---|
| Entrepreneurial Competencies | 0.946 | 0.962 |
| Entrepreneurial Knowledge | 0.896 | 0.943 |
| Teaching Strategies for AI & Entrepreneurship | 0.896 | 0.944 |
| Use of AI in Education | 0.838 | 0.948 |
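The reliability coefficients above are Cronbach's α values, defined for k items as α = k/(k − 1) · (1 − Σσᵢ² / σ²_total), where σᵢ² is the variance of item i and σ²_total is the variance of respondents' summed scores. A minimal sketch follows; the function name and the example data are hypothetical.

```python
from statistics import variance
from typing import Sequence


def cronbach_alpha(rows: Sequence[Sequence[float]]) -> float:
    """Cronbach's alpha for a respondents x items matrix (one row per teacher).

    alpha = k / (k - 1) * (1 - sum of item variances / variance of total scores)
    Sample variances are used throughout; the ratio is unchanged with
    population variances as long as the choice is consistent.
    """
    k = len(rows[0])
    if k < 2:
        raise ValueError("alpha requires at least two items")
    item_vars = [variance(col) for col in zip(*rows)]
    total_var = variance([sum(row) for row in rows])
    return k / (k - 1) * (1 - sum(item_vars) / total_var)


# Hypothetical two-item example with four respondents.
example = [[1, 2], [2, 2], [3, 4], [4, 4]]
print(round(cronbach_alpha(example), 4))  # 0.9412
```

To obtain a table like the one above, the function would be applied to each dimension's item columns separately, once for the pretest responses and once for the post-test responses.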

| Dimension | Pre-Test (M ± SD) | Post-Test (M ± SD) | p-Value (Wilcoxon signed-rank) | p-adj (Holm-Bonferroni) | Cohen’s d |
|---|---|---|---|---|---|
| Entrepreneurial Competencies | 2.86 ± 0.70 | 3.91 ± 0.52 | <0.001 | <0.001 | 0.87 (large) |
| Entrepreneurial Knowledge | 2.91 ± 0.61 | 3.84 ± 0.47 | <0.001 | <0.001 | 0.75 (moderate) |
| Teaching Strategies for AI & Entrepreneurship | 2.73 ± 0.65 | 3.76 ± 0.58 | <0.001 | <0.001 | 0.76 (moderate) |
| Use of AI in Education | 2.41 ± 0.71 | 3.59 ± 0.66 | <0.001 | <0.001 | 0.64 (moderate) |
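The effects table combines three steps: a Wilcoxon signed-rank test per dimension, a Holm-Bonferroni adjustment across the four dimensions, and Cohen's d. The standard-library sketch below illustrates each step under stated assumptions. The Wilcoxon test uses the large-sample normal approximation with tie and continuity corrections (statistical software may use exact distributions for small samples), and d is computed as d_z, the mean paired difference divided by its standard deviation, which is only one of several d variants for paired data and may not be the one used in the study. Function names and example data are hypothetical.

```python
import math
from statistics import mean, stdev
from typing import List, Sequence, Tuple


def wilcoxon_signed_rank(pre: Sequence[float], post: Sequence[float]) -> Tuple[float, float]:
    """Two-sided Wilcoxon signed-rank test using the normal approximation.

    Zero differences are dropped, tied |differences| receive average ranks,
    the variance includes a tie correction, and a 0.5 continuity correction
    is applied. Returns (W_plus, p_value).
    """
    d = [b - a for a, b in zip(pre, post) if b != a]
    n = len(d)
    if n == 0:
        return 0.0, 1.0
    order = sorted(range(n), key=lambda i: abs(d[i]))
    ranks = [0.0] * n
    tie_term = 0.0
    i = 0
    while i < n:
        # Find the run of equal |differences| starting at sorted position i.
        j = i
        while j + 1 < n and abs(d[order[j + 1]]) == abs(d[order[i]]):
            j += 1
        for idx in order[i : j + 1]:
            ranks[idx] = (i + j) / 2 + 1  # average of ranks i+1 .. j+1
        t = j - i + 1
        tie_term += t**3 - t
        i = j + 1
    w_plus = sum(r for r, x in zip(ranks, d) if x > 0)
    mu = n * (n + 1) / 4
    sigma = math.sqrt(n * (n + 1) * (2 * n + 1) / 24 - tie_term / 48)
    z = max(abs(w_plus - mu) - 0.5, 0.0) / sigma
    p = math.erfc(z / math.sqrt(2))  # equals 2 * (1 - Phi(z))
    return w_plus, p


def holm(pvals: Sequence[float]) -> List[float]:
    """Holm-Bonferroni adjusted p-values (step-down, kept monotone)."""
    m = len(pvals)
    order = sorted(range(m), key=lambda i: pvals[i])
    adjusted = [0.0] * m
    running = 0.0
    for rank, i in enumerate(order):
        running = max(running, (m - rank) * pvals[i])
        adjusted[i] = min(running, 1.0)
    return adjusted


def cohens_dz(pre: Sequence[float], post: Sequence[float]) -> float:
    """Paired effect size d_z: mean of differences / SD of differences."""
    diffs = [b - a for a, b in zip(pre, post)]
    return mean(diffs) / stdev(diffs)


def cohen_label(d: float) -> str:
    """Cohen's conventional benchmarks: 0.2 small, 0.5 medium, 0.8 large."""
    d = abs(d)
    if d < 0.2:
        return "negligible"
    if d < 0.5:
        return "small"
    if d < 0.8:
        return "medium"
    return "large"
```

For example, `holm([0.0004, 0.0001, 0.0008, 0.0002])` adjusts four hypothetical dimension-level p-values jointly, and `cohen_label(0.76)` returns `"medium"`, a category that is also commonly reported as moderate.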

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Quishpe-Quishpe, L.; Acosta-Vargas, I.; Rodríguez-Rojas, L.; Medina-Arias, J.; Coronel-Navarro, D.A.; Torres-Gutiérrez, R.; Acosta-Vargas, P. Impact of a Contextualized AI and Entrepreneurship-Based Training Program on Teacher Learning in the Ecuadorian Amazon. Sustainability 2025, 17, 8850. https://doi.org/10.3390/su17198850

Quishpe-Quishpe L, Acosta-Vargas I, Rodríguez-Rojas L, Medina-Arias J, Coronel-Navarro DA, Torres-Gutiérrez R, Acosta-Vargas P. Impact of a Contextualized AI and Entrepreneurship-Based Training Program on Teacher Learning in the Ecuadorian Amazon. Sustainability. 2025; 17(19):8850. https://doi.org/10.3390/su17198850

Chicago/Turabian Style: Quishpe-Quishpe, Luis, Irene Acosta-Vargas, Lorena Rodríguez-Rojas, Jessica Medina-Arias, Daniel Antonio Coronel-Navarro, Roldán Torres-Gutiérrez, and Patricia Acosta-Vargas. 2025. "Impact of a Contextualized AI and Entrepreneurship-Based Training Program on Teacher Learning in the Ecuadorian Amazon" Sustainability 17, no. 19: 8850. https://doi.org/10.3390/su17198850

APA Style: Quishpe-Quishpe, L., Acosta-Vargas, I., Rodríguez-Rojas, L., Medina-Arias, J., Coronel-Navarro, D. A., Torres-Gutiérrez, R., & Acosta-Vargas, P. (2025). Impact of a Contextualized AI and Entrepreneurship-Based Training Program on Teacher Learning in the Ecuadorian Amazon. Sustainability, 17(19), 8850. https://doi.org/10.3390/su17198850