Abstract

In the context of the rapid digitalization of higher education, proactive artificial intelligence (AI) agents embedded within multi-agent systems (MAS) offer new opportunities for personalized learning, improved quality of education, and alignment with sustainable development goals. This study aims to analyze how such AI solutions are perceived by students at Narxoz University (Kazakhstan) prior to their practical implementation. The research focuses on four key aspects: the level of student trust in AI agents, perceived educational value, concerns related to privacy and autonomy, and individual readiness to use MAS tools. The article also explores how these solutions align with the Sustainable Development Goals—specifically SDG 4 (“Quality Education”) and SDG 8 (“Decent Work and Economic Growth”)—through the development of digital competencies and more equitable access to education. Methodologically, the study combines a bibliometric literature analysis, a theoretical review of pedagogical and technological MAS concepts, and a quantitative survey (n = 150) of students. The results reveal a high level of student interest in AI agents and a general readiness to use them, although this is tempered by moderate trust and significant ethical concerns. The findings suggest that the successful integration of AI into educational environments requires a strategic approach from university leadership, including change management, trust-building, and staff development. Thus, MAS technologies are viewed not only as technical innovations but also as managerial advancements that contribute to the creation of a sustainable, human-centered digital pedagogy.

1. Introduction

Artificial intelligence is increasingly recognized as a transformative force in education, capable of personalizing learning and improving student outcomes. Recent analyses and reports highlight the potential of AI-driven educational technology to enhance teaching and learning processes [1,2]. In particular, proactive AI agents represent a paradigm shift from traditional reactive systems. Rather than waiting for explicit prompts, these agents anticipate user needs and take the initiative to support learning, guiding students on the basis of predictive data analysis and contextual insights. Examples include adaptive feedback systems in the Canvas LMS and AI-driven tutoring, which are increasingly used to personalize learning. Recent implementations illustrate both the opportunities and the challenges of AI in education. AI tutors have produced significantly larger learning gains than in-class active learning [3]. Early-warning analytics predict at-risk students and trigger timely support, yet raise concerns about fairness and false positives [4]. Mainstream LMSs (e.g., Canvas) are embedding AI assistants that proactively nudge students and help instructors, which raises issues of privacy, vendor lock-in, and governance [5,6]. Multi-agent simulation environments enable scalable, scenario-based practice but require transparency and careful pedagogical design [7,8]. At the same time, adoption is already widespread among students: around four in five undergraduates report using GenAI for study support, although accuracy and misuse remain salient concerns [9]. While AI thus offers new opportunities for equitable access to quality education, it also raises critical concerns regarding fairness, bias, and data privacy. Addressing these challenges is crucial for building sustainable digital pedagogies aligned with SDG 4 and SDG 8.
When multiple such agents collaborate, they form a multi-agent system (MAS) that can manage complex tasks and interactions in a digital learning environment. MAS architectures, long studied in computer science, are now being applied to online education to create intelligent tutoring systems, virtual peers, and automated support agents working in concert [10,11]. Notably, research on pedagogical agents (virtual characters that assist learners) dates back to the 1970s, and such agents are characterized by attributes like autonomy, adaptability, and proactivity.

Interest in AI for education has surged dramatically in the last decade. A recent bibliometric review shows that the volume of publications on AI in education grew from only a few hundred before 2010 to thousands per year in the 2020s. This rise, accelerated around 2020 by the pivot to online learning during COVID-19, reflects a broadening research agenda encompassing intelligent tutoring systems, adaptive learning, learning analytics, and more [12,13]. Amid this growth, proactive AI agents have gained attention for their ability to actively support learners. Instead of merely responding to student queries, proactive agents can, for example, monitor a student’s progress and intervene with hints, resources, or reminders at the right moments. Barange et al. [14] demonstrated that embedding proactive pedagogical agents in a virtual team-based learning scenario significantly improved student engagement and task completion rates. Similarly, multi-agent system approaches have been used to tackle challenges in large-scale online courses; by delegating roles to different agents (e.g., a tutor agent, a grader agent, a motivator agent), educators can ensure timely feedback and personalized assistance for many students in parallel [10,15]. Bassi et al. [15] described how software agents in a MOOC environment can detect learning difficulties in real time and alert instructors or automatically provide support, thereby improving both efficiency and educational quality.

Trust in digital technologies is increasingly viewed as a cornerstone of sustainable educational governance. In rapidly digitizing learning environments, trust is not an assumed default but a dynamic, cultivatable asset. Especially in the era of AI tools like ChatGPT (OpenAI, GPT-4), students’ trust represents a form of digital capital that institutions must deliberately nurture through transparent design, ethical implementation, and inclusive dialogue. From a managerial perspective, trust in AI systems is closely tied to governance, institutional reputation, and student satisfaction. Leaders must consider not only technological readiness but also socio-psychological readiness—how students understand, evaluate, and feel about intelligent systems that support or guide their learning. The successful implementation of AI tools thus requires strategic planning that aligns with both pedagogical goals and human factors.

While the technological capabilities of proactive AI agents are advancing, understanding student perceptions of these tools is crucial for successful implementation. Research on technology acceptance has long established that users’ beliefs about a system, such as its perceived usefulness and ease of use, strongly influence adoption [16]. In educational contexts, additional factors come into play: students must trust the AI-driven system to provide reliable and fair assistance, and they may harbor concerns (e.g., about privacy, academic integrity, or the replacement of human teachers). Indeed, trust in automation is seen as a key factor that moderates how much users rely on AI recommendations [17]. Recent global survey data indicate that 80% of undergraduates have used GenAI to support their studies, yet accuracy and misuse remain salient concerns [9]. This gap between widespread use and limited trust underscores the importance of proactively addressing students’ concerns and ensuring transparency in AI’s decisions. It also suggests that even if proactive agents are technically effective, students may not fully embrace them unless they are confident in the agents’ credibility and alignment with ethical norms.

Narxoz University, a leading institution in Kazakhstan, provides a relevant context for studying these issues. With a new emphasis on digital transformation and AI integration in its curriculum (e.g., programs in digital engineering and data science), Narxoz is representative of universities aiming to leverage AI to enhance learning. Yet, as at many institutions in developing and emerging economies, the adoption of AI in day-to-day teaching remains nascent, and there is a lack of empirical data on how Narxoz students perceive AI-driven educational technology. To bridge this gap, our study targets the specific population of Narxoz undergraduates (18–20 years old) to gauge their readiness for and attitudes toward proactive AI agents embedded in an educational MAS. This focus also allows us to consider cultural or regional nuances in technology acceptance, which is valuable given that most AI-in-education studies to date have been conducted in North America, Europe, or East Asia [13].

Moreover, the implementation of tools based on artificial intelligence is not only a technological initiative but also a managerial challenge at the institutional level. At Narxoz University, decision-making in the field of digital transformation requires coordination between academic leadership, IT management structures, and faculty professional development departments—all of which play a key role in ensuring that the adopted solutions align with pedagogical objectives and ethical standards.

The purpose of this paper is threefold: (1) to review relevant literature on proactive AI agents and multi-agent systems in education, establishing theoretical baselines and trends; (2) to develop a comprehensive survey instrument for assessing student perceptions (usefulness, trust, impact, concerns, readiness) regarding proactive AI in a digital learning environment; and (3) to analyze student responses, providing a descriptive outlook on how students might accept or challenge such technology. Although no actual MAS has been implemented on the Canvas learning management system (LMS) yet, the study lays important groundwork for future implementation. By situating the findings within the broader context of Sustainable Development Goals (notably SDG 4 on Quality Education and SDG 8 on Decent Work and Economic Growth), we aim to derive implications that extend beyond Narxoz University. The underlying hypothesis is that if proactive AI agents are well-received by students and thoughtfully integrated, they could enhance learning experiences (contributing to SDG 4) and simultaneously build students’ competencies with advanced technologies (contributing to SDG 8).

Research Question: How do university students perceive the trustworthiness, value, and ethical concerns of proactive AI agents in education, and what factors influence their readiness to use such systems?

Hypothesis: If proactive AI agents are perceived as useful, trustworthy, and non-threatening, students will be more willing to adopt MAS-based tools, which in turn may enhance learning quality and personalization.

The theoretical contribution of this study lies in its focus on student perceptions of proactive AI agents prior to their deployment, in contrast to most existing research that evaluates such technologies only after implementation. This preemptive perspective provides a valuable baseline for understanding expectations and offers actionable design insights for future rollouts. In terms of practical relevance, the findings support the development of pilot strategies for implementing multi-agent systems at Narxoz University and similar institutions in developing contexts, presenting a student-centered roadmap for the sustainable integration of AI in education.

The following sections detail the literature foundations, the methodology of our survey approach, the results with visual analyses, and a discussion of the theoretical and practical significance of the findings.

2. Literature Review

The field of Artificial Intelligence in Education (AIED) has evolved from niche experiments in the 1980s and 1990s to a major research domain today. Early efforts, such as rule-based tutoring systems and simple expert systems for teaching, laid the groundwork for concepts like intelligent tutoring systems and learner modeling [1,13]. Over time, the scope of AIED expanded to include data-driven adaptive learning platforms, learning analytics dashboards, and conversational tutors, among others. A recent bibliometric study by Kavitha and Joshith [12] quantitatively confirmed this expansion. Analyzing two decades of publications, they found exponential growth in AI-in-education research output—for example, the annual number of Scopus-indexed papers on AIED jumped from under 300 in 2010 to over 6000 in 2023. This surge reflects not only advances in AI techniques (e.g., machine learning, natural language processing) but also a pressing demand for innovative educational technologies, especially highlighted during the COVID-19 pandemic’s shift to online learning. Common themes in recent literature include personalized learning through AI, automated assessment and feedback, intelligent tutoring across various subjects, and, more recently, the exploration of generative AI (like GPT-based tutors) in classrooms. Reviews of AIED research note that while technical development has been vigorous, more work is needed on pedagogical integration and on understanding human factors (like teacher and student attitudes) in adoption [13]. This aligns with our study’s focus on student perceptions as a crucial element in the success of AI interventions.
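The publication figures quoted above can be translated into an average annual growth rate. The short Python sketch below is our own back-of-envelope calculation, not part of Kavitha and Joshith’s analysis. Because the 2010 count is given as “under 300” and the 2023 count as “over 6000”, the sketch treats 300 and 6000 as bounds, so the computed rate is a lower bound and the doubling time an upper bound.

```python
import math


def cagr(start: float, end: float, years: int) -> float:
    """Average compound growth rate per year between two counts."""
    if start <= 0 or years <= 0:
        raise ValueError("start and years must be positive")
    return (end / start) ** (1 / years) - 1


START_2010 = 300   # "under 300" papers in 2010, so a ceiling on the true start value
END_2023 = 6000    # "over 6000" papers in 2023, so a floor on the true end value
YEARS = 2023 - 2010

rate = cagr(START_2010, END_2023, YEARS)      # lower bound on the true rate
doubling = math.log(2) / math.log(1 + rate)   # upper bound on the doubling time

print(f"Growth factor: at least {END_2023 / START_2010:.0f}x")
print(f"Implied growth rate: at least {rate:.1%} per year")
print(f"Doubling time: at most {doubling:.1f} years")
```

With these bounds the script reports a growth factor of at least 20x, an average growth rate of at least 25.9% per year, and a doubling time of at most 3.0 years, which is consistent with the description of the growth as exponential.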

Traditional educational software, and even many AI tutors, operate primarily in a reactive mode—responding to student actions or direct inquiries. By contrast, proactive AI agents are designed to anticipate needs and act on their own initiative. In the context of a learning environment, a proactive agent might, for example, notice that a student has been stuck on a particular concept and then volunteer hints or recommend supplementary materials, even if the student has not explicitly asked for help. Key to this behavior is the agent’s ability to monitor environmental cues (such as student performance data or time on task), predict potential issues, and execute an intervention that aligns with pedagogical goals. The concept of software agents with such capabilities has been around for decades in computer science. Jennings and Wooldridge (1995) famously characterized an intelligent agent as an autonomous entity that not only reacts to the environment but also exhibits pro-activeness and goal-directedness in its actions [10]. Similarly, the MIT Media Lab’s Software Agents Group defined agents as computer systems to which tasks can be delegated, noting that agents “differ from conventional software in that they are long-lived, semi-autonomous, proactive, and adaptive”. In educational research, proactive agent behaviors have been explored under various guises, such as metacognitive tutors that prompt students to reflect on their learning process, or motivational agents that pop up to encourage or challenge learners at opportune moments. Barange et al. [14] introduced an agent architecture (termed PC2 BDI) that integrates proactive task-oriented behavior with pedagogical strategies. In their system, one agent in a team training simulation took on a proactive role, frequently offering next steps and information unprompted, which led to learners feeling more supported and staying more engaged. 
These findings suggest that proactivity can amplify an agent’s educational impact—however, they also raise questions about balance (too many unsolicited interventions might frustrate users). Thus, designing proactive agents requires careful consideration of when and how an agent should act without prompt, so that its autonomy is helpful rather than obtrusive.
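The balance described above, acting on the agent’s own initiative without becoming obtrusive, can be illustrated with a small sketch. The Python below is written under our own assumptions and is not the PC2 BDI architecture of Barange et al. [14]. The learner signals (`minutes_on_concept`, `failed_attempts`), the thresholds, the cooldown, and the per-session cap are all hypothetical values chosen for demonstration.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class LearnerState:
    """Hypothetical snapshot of signals an LMS might expose for one learner."""
    concept: str
    minutes_on_concept: float
    failed_attempts: int


@dataclass
class ProactiveHintAgent:
    """Illustrative proactive agent: volunteers a hint when a learner seems
    stuck, but rate-limits itself so that it does not become obtrusive.
    All thresholds are assumptions, not values from the study."""
    stuck_minutes: float = 15.0     # time-on-task threshold (assumed)
    stuck_failures: int = 3         # failed-attempt threshold (assumed)
    cooldown_minutes: float = 20.0  # minimum gap between unsolicited hints
    max_per_session: int = 3        # hard cap on unsolicited hints per session
    _last_hint_at: Optional[float] = field(default=None, init=False)
    _hints_given: int = field(default=0, init=False)

    def looks_stuck(self, s: LearnerState) -> bool:
        # Simple predictive rule: long time on the concept OR repeated failures.
        return (s.minutes_on_concept >= self.stuck_minutes
                or s.failed_attempts >= self.stuck_failures)

    def may_intervene(self, now: float) -> bool:
        # Respect both the per-session cap and the cooldown window.
        if self._hints_given >= self.max_per_session:
            return False
        return self._last_hint_at is None or now - self._last_hint_at >= self.cooldown_minutes

    def step(self, s: LearnerState, now: float) -> Optional[str]:
        """Return an unsolicited hint message, or None to stay silent."""
        if self.looks_stuck(s) and self.may_intervene(now):
            self._last_hint_at = now
            self._hints_given += 1
            return f"'{s.concept}' seems to be taking a while. Would a hint or a short review help?"
        return None


agent = ProactiveHintAgent()
print(agent.step(LearnerState("present value", 18, 1), now=0))   # prints a hint
print(agent.step(LearnerState("present value", 25, 4), now=5))   # prints None: still in cooldown
print(agent.step(LearnerState("present value", 40, 5), now=30))  # prints a hint again
```

The cooldown and the cap are one simple way to encode “helpful rather than obtrusive”. A real system would more likely tune such parameters from learner feedback than fix them by hand.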

Multi-agent systems consist of multiple interacting agents, each possibly with specialized roles, that work together within an environment. In a digital educational context, an MAS could orchestrate different AI components: For example, one agent might handle domain content tutoring, another monitors the learner’s affect or motivation, and another manages scheduling or group collaboration dynamics. The MAS paradigm is beneficial in complex environments like learning management systems (LMS) where numerous tasks (tutoring, grading, feedback, forum moderation, etc.) occur simultaneously. By delegating these tasks to different agents that communicate with each other, the system can, in theory, scale to many students and tasks more effectively than a monolithic system. Researchers have argued that MAS provide a natural way to model the distributed nature of learning settings (with many learners, resources, and activities) [11,15]. For instance, in a large online course, one agent can proactively identify students at risk of falling behind by analyzing quiz results and then trigger another agent to send personalized encouragement or study tips to those students. This kind of “digital teamwork” among AI agents was demonstrated by Bassi et al. [15] in the context of MOOCs. Their approach used agents for course design improvement, content recommendation, and student assessment, operating concurrently to reduce instructors’ workload and enhance student support. Importantly, multi-agent approaches also enable modularity—new agents (e.g., a plagiarism-detection agent or a social learning peer agent) can be added to the system without overhauling the entire architecture, as long as they adhere to communication protocols with other agents. Several studies have reported positive outcomes from MAS-based e-learning systems: increased timeliness of interventions, more tailored learning paths, and better management of class-wide data [11,15,17]. 
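The at-risk example above, in which one agent analyzes quiz results and triggers another to send encouragement, can be sketched as agents exchanging messages over a shared publish/subscribe bus. This minimal, in-process Python illustration uses hypothetical names: `MessageBus`, `MonitorAgent`, `MotivatorAgent`, the `at_risk` topic, and the 0.6 threshold are assumptions. It is not the architecture of Bassi et al. [15], and it omits persistence, concurrency, and real LMS integration. The shared log records which agent did what, a simple aid to transparency.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Callable, Dict, List


@dataclass
class Message:
    topic: str
    sender: str
    payload: dict


class MessageBus:
    """Minimal publish/subscribe bus: agents talk only through topics, so a
    new agent can be added without modifying the existing ones."""

    def __init__(self) -> None:
        self._subscribers: Dict[str, List[Callable[[Message], None]]] = defaultdict(list)
        self.log: List[str] = []  # records which agent did what

    def subscribe(self, topic: str, handler: Callable[[Message], None]) -> None:
        self._subscribers[topic].append(handler)

    def publish(self, msg: Message) -> None:
        self.log.append(f"{msg.sender} -> {msg.topic}: {msg.payload}")
        for handler in self._subscribers[msg.topic]:
            handler(msg)


class MonitorAgent:
    """Flags students whose mean quiz score is below a threshold (assumed 0.6)."""

    def __init__(self, bus: MessageBus, threshold: float = 0.6) -> None:
        self.bus = bus
        self.threshold = threshold

    def review_quizzes(self, scores: Dict[str, List[float]]) -> None:
        for student, marks in scores.items():
            avg = sum(marks) / len(marks)
            if avg < self.threshold:
                self.bus.publish(Message("at_risk", "MonitorAgent",
                                         {"student": student, "avg": round(avg, 2)}))


class MotivatorAgent:
    """Responds to at-risk alerts with personalized encouragement."""

    def __init__(self, bus: MessageBus) -> None:
        self.bus = bus
        self.outbox: List[str] = []
        bus.subscribe("at_risk", self.on_at_risk)

    def on_at_risk(self, msg: Message) -> None:
        student = msg.payload["student"]
        self.outbox.append(f"To {student}: you're close! Try this week's practice set.")
        self.bus.log.append(f"MotivatorAgent -> {student}: encouragement sent")


bus = MessageBus()
motivator = MotivatorAgent(bus)
# Modularity: another component joins just by subscribing to the same topic.
bus.subscribe("at_risk", lambda m: bus.log.append(f"InstructorDashboard <- {m.payload['student']}"))
monitor = MonitorAgent(bus)
monitor.review_quizzes({"s01": [0.9, 0.8], "s02": [0.4, 0.5], "s03": [0.7, 0.65]})
print("\n".join(bus.log))
```

Only `s02` falls below the assumed threshold, so only that student triggers the motivator and the dashboard entry. Adding the dashboard required no change to either existing agent, which is the modularity property described above.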
However, MAS are not without challenges. Coordination among agents is complex and requires robust design (often using frameworks like the Belief-Desire-Intention model or reinforcement learning for agent policies). There is also the issue of user transparency—when multiple agents are acting, students and teachers might not easily understand which agent is doing what, potentially causing confusion or reducing trust if not properly handled.
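To make the Belief-Desire-Intention idea more concrete, the following Python sketch shows a deliberately simplified deliberation cycle. The agent first updates its beliefs from observations. It then treats every desire whose context condition holds as an option, commits to the highest-priority option as its intention, and executes the associated plan. The desires, priorities, and action names are hypothetical. Full BDI frameworks maintain a commitment strategy, whereas this sketch reconsiders its intention on every cycle.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Optional

Beliefs = Dict[str, float]


@dataclass
class Desire:
    """A goal the agent may adopt when its context condition holds."""
    name: str
    priority: int
    context: Callable[[Beliefs], bool]
    plan: List[str]  # actions to execute if adopted as the intention


class TutorBDIAgent:
    """Highly simplified belief-desire-intention cycle (illustrative only)."""

    def __init__(self, desires: List[Desire]) -> None:
        self.beliefs: Beliefs = {}
        self.desires = desires
        self.intention: Optional[Desire] = None

    def perceive(self, observations: Beliefs) -> None:
        self.beliefs.update(observations)  # belief revision: newest value wins

    def deliberate(self) -> None:
        # Options = desires whose context holds; commit to the highest priority.
        options = [d for d in self.desires if d.context(self.beliefs)]
        self.intention = max(options, key=lambda d: d.priority, default=None)

    def act(self) -> List[str]:
        return list(self.intention.plan) if self.intention else []

    def cycle(self, observations: Beliefs) -> List[str]:
        self.perceive(observations)
        self.deliberate()
        return self.act()


agent = TutorBDIAgent([
    Desire("remediate", 2, lambda b: b.get("quiz_score", 1.0) < 0.6,
           ["recommend_review_video", "offer_practice_set"]),
    Desire("remind_deadline", 1, lambda b: b.get("hours_to_deadline", 99) < 24,
           ["send_deadline_reminder"]),
])
first = agent.cycle({"quiz_score": 0.8, "hours_to_deadline": 12})
second = agent.cycle({"quiz_score": 0.5})  # deadline belief persists from before
print(first)   # ['send_deadline_reminder']
print(second)  # ['recommend_review_video', 'offer_practice_set']
```

In the second cycle both desires apply, and the higher-priority remediation goal wins. Surfacing such choices to students and teachers is part of the transparency challenge noted above.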

A significant body of literature exists on pedagogical agents—typically animated or avatar-based agents in educational software that interact with learners in a human-like manner (e.g., a cartoon tutor that speaks and gestures). These agents are a subset of AI agents focused on the user interface and social aspects of learning. Prior research has found that pedagogical agents can increase learner motivation and provide social presence, which is particularly useful in online learning, where human teacher presence is limited [18]. For example, studies by Johnson, Lester, Baylor, and others in the 2000s showed that engaging agent personas can make learning more enjoyable and keep students on task. Pedagogical agents often embody roles such as a mentor, a peer learner, or a teachable student to facilitate different pedagogical strategies. In adaptive learning systems, an agent might personalize its feedback tone or the difficulty of hints based on the learner’s performance. A systematic review by Veletsianos and Russell [18] surveyed pedagogical agent literature up to 2011 and noted many claimed benefits (adaptivity, improved engagement, etc.) but also found that empirical evidence was sometimes mixed or context-dependent. They found that while agents were often credited with improving learners’ motivation or understanding, rigorous comparisons showed that not all agent enhancements yielded significant learning gains, and they recommended more longitudinal studies in authentic settings. This indicates that simply adding an agent is not a guarantee of success—it must be well-designed and aligned with sound pedagogy. Nonetheless, the concept of proactive pedagogical agents builds on this lineage: The agents in our study’s vision are essentially pedagogical agents with the added dimension of acting autonomously in anticipation of student needs.

With the increasing sophistication of AI in education, researchers have turned their attention to the human factors influencing adoption. Two critical factors are trust and user concerns. Trust in an AI system reflects the user’s willingness to rely on it and the belief that it will behave as intended, safely, and accurately [17]. In an educational scenario, a student’s trust in a proactive agent would determine whether they follow the agent’s advice and integrate its feedback into their learning. If trust is low, students might ignore or bypass the agent, nullifying its benefits. Lee and See [17] emphasize designing automation (and, by extension, AI agents) in ways that foster appropriate trust—neither distrust (which leads to disuse) nor blind over-trust (which can lead to misuse or complacency). For AI tutors, transparency of the agent’s reasoning and a history of reliable performance can build trust. Conversely, unexplained or erroneous agent actions can quickly degrade trust. Alongside trust are broader concerns that students may have. A prominent concern is whether AI tools might undermine academic integrity or learning itself (for instance, if students rely on AI hints too much, do they still learn the underlying material?). Others include privacy (what data is the agent collecting about me?), fairness (is the AI biased or favoring some students?), and even job-related anxieties (will AI replace teachers or make some skills obsolete?). A recent U.S. Department of Education report (2023) noted that many students and educators worry about AI’s accuracy and the potential for bias or misinformation in educational content [19]. The Chegg.org Global Student Survey 2025 further highlighted that while students are eager to use AI for studying, they desire better tools with educational alignment and express reservations about trusting AI outputs. 
These findings resonate with the context at Narxoz University as well. Because AI in education is still an emerging concept in the region, students are likely to approach new AI tools with a mix of excitement and skepticism. Addressing these trust and concern issues is crucial. Prior studies have suggested several strategies: involving students in the AI design process (so that they can voice concerns early), providing opt-out or control options over agent assistance, ensuring that ethical guidelines (like the UNESCO AI in Education recommendations) are followed, and training students in AI literacy so that they understand the AI’s capabilities and limits [2,20].
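Lee and See’s notion of appropriate trust [17] can be illustrated by comparing how often a student follows an agent’s suggestions with how often those suggestions are actually correct. The Python sketch below is our own simplified operationalization. It is neither a measure used in this study nor one defined in [17]. The interaction log is hypothetical, and the 0.1 tolerance and three-way verdict are arbitrary assumptions.

```python
from dataclasses import dataclass
from typing import Sequence


@dataclass
class CalibrationReport:
    reliance: float      # share of agent suggestions the student followed
    reliability: float   # share of agent suggestions that were actually correct
    appropriate: float   # share of cases where following matched correctness
    verdict: str


def assess_trust_calibration(followed: Sequence[bool], correct: Sequence[bool],
                             tolerance: float = 0.1) -> CalibrationReport:
    """Compare how much a student relies on an agent with how reliable it is.
    The tolerance and the three-way verdict are illustrative assumptions."""
    if len(followed) != len(correct) or not followed:
        raise ValueError("need two non-empty sequences of equal length")
    n = len(followed)
    reliance = sum(followed) / n
    reliability = sum(correct) / n
    # Appropriate reliance: followed a correct suggestion or rejected a wrong one.
    appropriate = sum(f == c for f, c in zip(followed, correct)) / n
    if reliance > reliability + tolerance:
        verdict = "over-trust (risk of misuse)"
    elif reliance < reliability - tolerance:
        verdict = "under-trust (risk of disuse)"
    else:
        verdict = "roughly calibrated"
    return CalibrationReport(round(reliance, 2), round(reliability, 2),
                             round(appropriate, 2), verdict)


# Hypothetical log: the agent was right 7 times out of 10,
# but the student followed every suggestion.
followed = [True] * 10
correct = [True] * 7 + [False] * 3
report = assess_trust_calibration(followed, correct)
print(report)
```

Here the student relies on the agent more than its track record justifies, which corresponds to the over-trust case that Lee and See associate with misuse. A student who ignored a mostly correct agent would land in the under-trust case, which corresponds to disuse.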

At a macro level, the implementation of AI and MAS in education ties into global objectives like the Sustainable Development Goals. SDG 4, Quality Education, calls for inclusive and equitable quality education and the promotion of lifelong learning opportunities. Proactive AI agents could contribute to SDG 4 by enabling more personalized learning support, thus helping diverse learners succeed. For example, AI tutors can scale individualized attention (something traditionally limited by teacher–student ratios) to large classes, potentially reducing inequities in learning outcomes. Moreover, AI can help identify students with learning difficulties or gaps early on, facilitating timely remedial actions. These improvements target the quality and equity aspects of SDG 4. As Arruda and Arruda [21] discuss, appropriately deployed AI in education may mitigate teacher shortages and augment teaching practices, thereby improving educational quality and helping address inclusion by tailoring education to each student’s context. On the other hand, SDG 8, Decent Work and Economic Growth, is indirectly connected to our topic through the development of digital skills and preparation of students for the future workforce. The integration of AI into education helps cultivate AI literacy and experience with intelligent systems among students. This aligns with labor market trends where AI competencies are increasingly valuable. The World Economic Forum’s Future of Jobs reports consistently emphasize the rising demand for AI-related skills and the need for education systems to adapt accordingly [20]. By learning with AI agents, students not only master academic content but also learn how to interact with AI—a meta-skill likely relevant to many future jobs. Additionally, AI can foster decent work by creating new educational roles (e.g., AI curators, data analysts in education) and by ensuring students are not left behind in the digital economy. 
According to the International Labour Organization, AI’s net impact on jobs can be positive if humans are equipped with the right skills and AI is used to complement human work rather than solely to automate it. In this sense, proactive AI agents in the classroom can be seen as both a means to improve immediate educational outcomes and a long-term investment in human capital for the knowledge economy. Of course, maximizing these benefits requires careful implementation—issues of teacher training, curriculum integration, and policy support must be addressed. Our study’s findings, although based on a hypothetical scenario, aim to shed light on how ready students are to embrace these tools, which is one piece of the larger puzzle in aligning educational AI deployments with sustainable development objectives.

In summary, the literature suggests that proactive AI agents within MAS hold significant potential to transform digital educational environments. They leverage AI to provide timely, tailored support and can manage the scale of online learning through distributed agent collaboration. Recent studies highlight real-world applications of proactive AI agents in higher education. For example, Ref. [22] discusses AI-powered educational agents, while Ref. [23] examines predictive student support systems in European contexts. These examples illustrate both the potential and the implementation challenges of AI in diverse educational settings. However, technical optimism must be balanced with human-centered considerations: Student trust, perceived value, and comfort with AI are pivotal for success. Current research also reveals ongoing debates on trust, autonomy, and transparency in AI-driven education, emphasizing the need for frameworks that ensure ethical and human-centered learning experiences. Building on these insights, we next describe our methodological approach to investigating Narxoz University students’ perspectives on proactive AI agents in their learning environment.

3. Methods

3.1. Survey Instrument Design

To explore student perceptions of proactive AI agents in a MAS-based digital learning environment, we developed a structured survey instrument. The survey was informed by technology acceptance theories and prior educational technology studies to ensure it covered relevant constructs. In particular, Davis’s Technology Acceptance Model (TAM) provided guidance for including Perceived Usefulness (the degree to which a student believes the AI agent would enhance their learning) [16], and we also considered aspects of the Unified Theory of Acceptance and Use of Technology (UTAUT) such as facilitating conditions and social influence [24] in the broader survey design. Additionally, drawing on the concept of technology readiness (Parasuraman, 2000) [25], we included a measure of Willingness (Readiness) to capture students’ general propensity to embrace new technologies like MAS-based tools.

After reviewing literature and consulting with two faculty experts in educational technology, we identified five key constructs for the survey: Perceived Usefulness, Trust, Expected Educational Impact, Concerns about AI, and Willingness (Readiness) for MAS-based tools. Each construct was operationalized through multiple Likert-scale items (statements) to increase reliability. Table 1 summarizes the constructs and example items.
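Multi-item constructs are typically checked for internal consistency with Cronbach’s α, computed as α = k/(k − 1) · (1 − Σσᵢ² / σₜ²). Here k is the number of items, σᵢ² is the variance of item i, and σₜ² is the variance of respondents’ total scores. The Python sketch below implements this standard formula with sample variances. The item responses in the example are invented for illustration, and the sketch is not necessarily the procedure or software behind the α values reported in Section 3.3.

```python
from statistics import variance
from typing import Sequence


def cronbach_alpha(items: Sequence[Sequence[float]]) -> float:
    """Cronbach's alpha for one construct.

    items[i][r] is respondent r's (already reverse-coded) score on item i.
    alpha = k / (k - 1) * (1 - sum of item variances / variance of total scores)
    """
    k = len(items)
    if k < 2:
        raise ValueError("alpha needs at least two items")
    n = len(items[0])
    if n < 2 or any(len(item) != n for item in items):
        raise ValueError("items need the same number (>= 2) of respondents")
    totals = [sum(item[r] for item in items) for r in range(n)]
    item_var_sum = sum(variance(item) for item in items)
    return k / (k - 1) * (1 - item_var_sum / variance(totals))


# Hypothetical answers of six respondents to three Trust items (1-5 scale).
trust_items = [
    [4, 5, 3, 4, 2, 5],
    [4, 4, 3, 5, 2, 4],
    [5, 4, 2, 4, 3, 5],
]
alpha = cronbach_alpha(trust_items)
print(f"Cronbach's alpha (toy data): {alpha:.2f}")
```

Perfectly correlated items yield α = 1. Values around 0.7 or above are conventionally read as acceptable internal consistency for attitude scales.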

Table 1.

Constructs and sample items used in the survey instrument.

Each item was phrased as a statement with a 5-point Likert response scale: 1 = Strongly Disagree (SD), 2 = Disagree (D), 3 = Neutral (N), 4 = Agree (A), 5 = Strongly Agree (SA). Higher numerical values generally correspond to a more favorable perception (except negatively worded concern items, which were reverse-coded for analysis). The survey instrument was prepared in English, which is an instructional language at Narxoz University, and reviewed by a bilingual expert to ensure clarity for any non-native English speakers in the sample. We conducted a pilot test of the questions with a small group of 5 students for feedback on wording and adjusted a few items for clarity accordingly. For instance, the term “proactive AI agent” was explained in the introduction to the survey to avoid confusion (described as “an AI-based digital assistant that can initiate helpful actions on its own, within your online course platform”).
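The scoring rules described above can be sketched in a few lines of Python: 1 to 5 coding, reverse-coding of negatively worded concern items, per-respondent construct means, and the per-category percentages used for the descriptive analysis. The item IDs (`U1` to `U3`, `C1`, `C2`), the choice of `C2` as a negatively worded item, and the three respondents are hypothetical. The direction convention follows Section 3.3, where a higher concern score indicates greater concern.

```python
from collections import Counter
from statistics import mean, stdev
from typing import Dict, Sequence

LABELS = {1: "SD", 2: "D", 3: "N", 4: "A", 5: "SA"}


def reverse_code(score: int, scale_max: int = 5) -> int:
    """Map 1<->5, 2<->4 and keep 3 on a 5-point Likert scale."""
    if not 1 <= score <= scale_max:
        raise ValueError(f"score out of range: {score}")
    return scale_max + 1 - score


def construct_score(responses: Dict[str, int], items: Sequence[str],
                    reversed_items: Sequence[str] = ()) -> float:
    """Mean of one respondent's item scores for a construct,
    reverse-coding the listed negatively worded items first."""
    vals = [reverse_code(responses[i]) if i in reversed_items else responses[i]
            for i in items]
    return mean(vals)


def frequency_percent(scores: Sequence[int]) -> Dict[str, float]:
    """Percentage of respondents choosing each Likert category."""
    counts = Counter(scores)
    n = len(scores)
    return {LABELS[k]: round(100 * counts.get(k, 0) / n, 1) for k in LABELS}


# Three hypothetical respondents; item IDs are illustrative, not the study's.
respondents = [
    {"U1": 4, "U2": 5, "U3": 4, "C1": 4, "C2": 2},
    {"U1": 3, "U2": 4, "U3": 4, "C1": 5, "C2": 1},
    {"U1": 5, "U2": 5, "U3": 4, "C1": 3, "C2": 3},
]
usefulness = [construct_score(r, ["U1", "U2", "U3"]) for r in respondents]
# C2 is assumed to be negatively worded (e.g. "I am not worried about ..."),
# so it is reverse-coded to keep "higher = more concern" for the construct.
concerns = [construct_score(r, ["C1", "C2"], reversed_items=["C2"]) for r in respondents]
print(f"Usefulness: M = {mean(usefulness):.2f}, SD = {stdev(usefulness):.2f}")
print(f"Concerns:   M = {mean(concerns):.2f}, SD = {stdev(concerns):.2f}")
print(frequency_percent([r["U2"] for r in respondents]))
```

Applied to the real data, the same functions would produce the construct means, standard deviations, and SD-to-SA percentage distributions that the descriptive analysis relies on.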

While developing the instrument, we also kept in mind its alignment with our research goals concerning the SDGs. Table 2 shows that “Expected Impact” items touched on the quality of learning (relevant to SDG 4) and that “Willingness (Readiness)” implicitly relates to being prepared for a technology-driven work environment (relevant to SDG 8). Although students might not think directly in terms of SDGs, these connections guided the conceptual framing of some questions.

Table 2.

Descriptive statistics for the survey constructs.

3.2. Participants and Data Collection

The target population for this study was undergraduate students at Narxoz University in Almaty, Kazakhstan, specifically those in the typical college-age range of 18–20. We focused on this group because they are digital natives likely to be the first to experience AI-agent-augmented education at the university. The gender distribution in our target population (and consequently our sample) is approximately 60% male and 40% female, reflecting the demographics of certain programs such as economics and technology, where male enrollment has been higher. We aimed to ensure representation of both genders to examine if perceptions differ (though analyzing gender differences was outside the primary scope, it is noted as a contextual factor).

Because this research is theoretical, no MAS was actually deployed in Narxoz’s Canvas LMS. Instead, we collected survey responses under a hypothetical scenario: Students were asked to imagine that their LMS (e.g., Canvas) included AI-driven virtual assistants that could proactively help them with coursework. To help them picture this, the survey included a short description and a few examples of what such proactive agents could do (for example, sending a reminder before deadlines, suggesting extra practice problems if a quiz score is low, or forming study groups by matching students after an assignment). This scenario-based approach elicits user acceptance and expectations prior to implementation, akin to the technology acceptance surveys used in concept testing.

We distributed the survey using Google Forms (a platform familiar to students) and collected responses over two weeks. Students were informed that the survey was part of a research study on new educational technologies and that participation was voluntary and anonymous. The final dataset comprised responses from 150 students.

The study posed minimal ethical risk, but we still followed standard protocols: The introduction to the survey assured participants of anonymity and stated that results would be reported only in aggregate.

3.3. Data Analysis

Since no experimental intervention was conducted and we did not implement an actual MAS, our analysis is purely descriptive. The goal is to summarize the students’ perceptions for each survey dimension using appropriate statistics and visualizations, rather than to test hypotheses about differences or predict behavior (which could be future work). The following steps were taken in the analysis:

- Data Preparation: We coded the Likert responses from 1 to 5 for each item, with higher values indicating more positive attitudes (except for items measuring concerns, which we reverse-coded so that a higher score indicates greater concern). We then aggregated the items for each construct by computing each respondent’s mean score on that construct. For simplicity and clarity of presentation, however, we often focus on the distribution of responses to a key representative item for each construct, given that each construct showed high internal consistency. Cronbach’s α values were as follows: Trust (0.79), Perceived Usefulness (0.82), Expected Educational Impact, i.e., perceived value (0.77), Concerns about AI, including privacy (0.81), and Willingness (Readiness) (0.83), indicating acceptable to good reliability for all survey dimensions (all α ≥ 0.77).

- Descriptive Statistics: We calculated the mean and standard deviation of each construct score across the 150 students. We also computed the percentage frequency distribution of responses across the Likert categories (SD, D, N, A, SA) for each key item. These distributions show how consensual or polarized opinions are. For example, a construct on which 70% answer “Agree” or “Strongly Agree” indicates a strong positive leaning, whereas responses spread more evenly suggest divergent views or uncertainty.

- Visualizations: To fulfill the objective of including diverse visual representations, we generated separate charts for each of the five main survey dimensions:

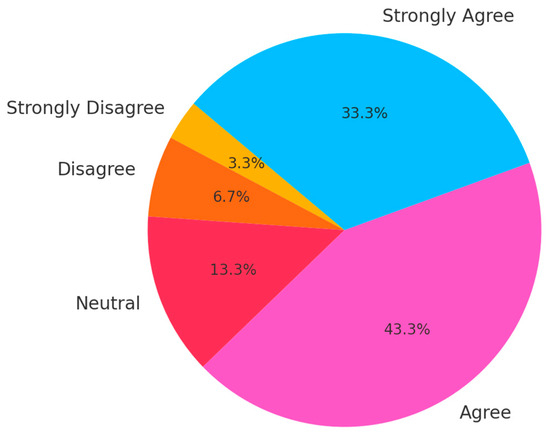

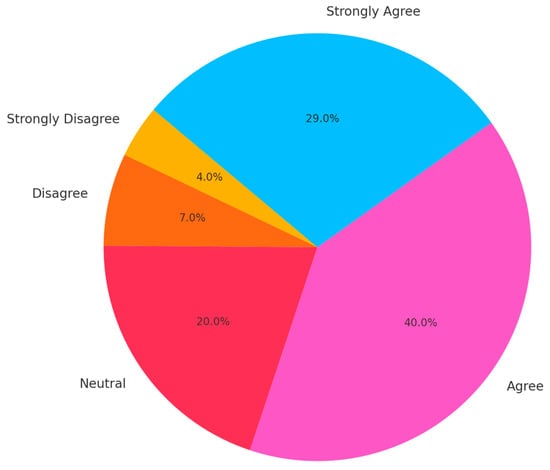

- Perceived Usefulness: Displayed with a pie chart showing the proportion of students selecting each response option from Strongly Disagree to Strongly Agree. This visualization (Figure 1) provides a quick snapshot of overall sentiment about usefulness.

Figure 1. Perceived usefulness of proactive AI Agents—distribution of student responses (in percent). A large majority agreed that proactive AI agents would be useful for their learning.

Figure 1. Perceived usefulness of proactive AI Agents—distribution of student responses (in percent). A large majority agreed that proactive AI agents would be useful for their learning. - Trust: Displayed with a chart (Figure 2) where the y-axis is the number of students and the x-axis lists the five response categories (SD through SA). This shows the count of students at each trust level.

Figure 2. Trust in proactive AI agents—chart of responses. While a majority would trust AI agent recommendations (~60% agree at some level), a substantial proportion of students are neutral or skeptical about trusting the agent fully.

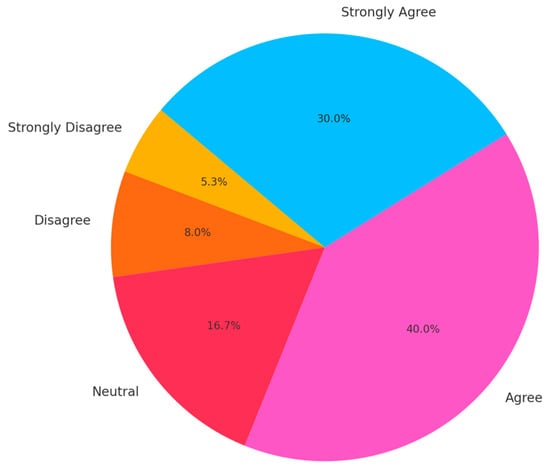

Figure 2. Trust in proactive AI agents—chart of responses. While a majority would trust AI agent recommendations (~60% agree at some level), a substantial proportion of students are neutral or skeptical about trusting the agent fully. - Expected Impact: Displayed with a chart (a pie chart) in Figure 3, illustrating the percentage breakdown of agreement levels on expected impact.

Figure 3. Expected educational impact—chart showing percentage of students by level of agreement that proactive AI agents will improve learning outcomes. There is strong optimism, with about 74% expressing agreement that such AI would make learning more effective overall.

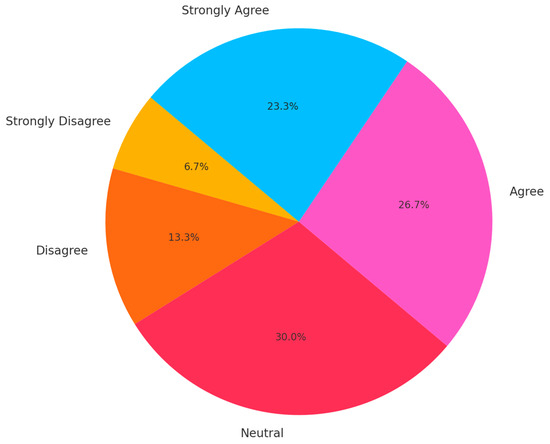

Figure 3. Expected educational impact—chart showing percentage of students by level of agreement that proactive AI agents will improve learning outcomes. There is strong optimism, with about 74% expressing agreement that such AI would make learning more effective overall. - Concerns about AI: Displayed with a chart in Figure 4. Here, the number of students and the response categories listed are from “Not Concerned” (Strongly Disagree with concern statements) to “Highly Concerned” (Strongly Agree). Inverting the y-axis (so high concern is at the top) allows us to emphasize the top bars as those with the most concern.

Figure 4. Concerns about AI—chart of student agreement with concern statements (e.g., worries about privacy, accuracy, dependence on AI). Over half of respondents (55%) indicated they are concerned or highly concerned about potential negative aspects of AI in education, while only about 20% expressed little to no concern.

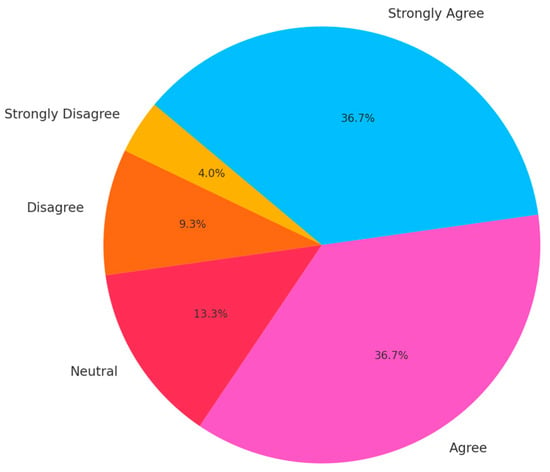

Figure 4. Concerns about AI—chart of student agreement with concern statements (e.g., worries about privacy, accuracy, dependence on AI). Over half of respondents (55%) indicated they are concerned or highly concerned about potential negative aspects of AI in education, while only about 20% expressed little to no concern. - Willingness (Readiness): Displayed with a 100% stacked chart in Figure 5. This pie chart is segmented into colored portions representing the percentage of students in each category of readiness (from strongly disagree to strongly agree). It effectively shows the proportion of students not ready, neutral, and ready on a continuum of 100%. A legend indicates which color corresponds to which Likert category.

Figure 5. Willingness (Readiness) for MAS-based educational tools—100% stacked bar showing the proportion of students indicating each level of readiness.

Figure 5. Willingness (Readiness) for MAS-based educational tools—100% stacked bar showing the proportion of students indicating each level of readiness.
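The data preparation and descriptive steps listed above can be sketched with pandas, one of the libraries used for the analysis. This is a minimal illustration, not the authors’ analysis script: the item names, the SD–SA label mapping, and which items are reverse-keyed are assumptions, and reverse-coding is shown as the usual transformation x → 6 − x on a 1–5 scale. Cronbach’s α follows the standard formula α = k/(k − 1) · (1 − sum of item variances / variance of the total score).

```python
import pandas as pd

LIKERT = {"SD": 1, "D": 2, "N": 3, "A": 4, "SA": 5}
CATEGORIES = list(LIKERT)  # scale order: SD, D, N, A, SA


def code_items(raw: pd.DataFrame, reverse_items=()) -> pd.DataFrame:
    """Map Likert labels to 1-5 and reverse-code the listed items (x -> 6 - x)."""
    coded = raw.apply(lambda col: col.map(LIKERT))
    for item in reverse_items:
        coded[item] = 6 - coded[item]
    return coded


def construct_scores(coded: pd.DataFrame, constructs: dict) -> pd.DataFrame:
    """Per-respondent construct score: mean of that construct's items."""
    return pd.DataFrame({name: coded[items].mean(axis=1)
                         for name, items in constructs.items()})


def cronbach_alpha(items: pd.DataFrame) -> float:
    """alpha = k/(k-1) * (1 - sum of item variances / variance of total score)."""
    k = items.shape[1]
    item_var = items.var(axis=0, ddof=1).sum()
    total_var = items.sum(axis=1).var(ddof=1)
    return k / (k - 1) * (1 - item_var / total_var)


def describe_constructs(scores: pd.DataFrame) -> pd.DataFrame:
    """Mean and sample standard deviation of each construct across respondents."""
    return scores.agg(["mean", "std"]).T.round(2)


def frequency_table(responses: pd.Series) -> pd.Series:
    """Percentage of respondents per Likert category; empty categories show 0."""
    pct = responses.value_counts(normalize=True).reindex(CATEGORIES, fill_value=0) * 100
    return pct.round(1)
```

Reindexing the frequency table keeps all five categories in scale order, so an option nobody chose still appears as 0% in the chart rather than silently disappearing. For two identical items, α evaluates to 1, its maximum; real items yield lower values such as those reported above.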

Each figure is labeled, cited in the text (as Figure 1, Figure 2, etc.), and briefly described in the Results section. Data analysis was conducted in Python 3.11 using pandas 2.2.2, seaborn 0.13.2, and matplotlib 3.8.4 to support transparency and reproducibility.
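As an illustration of the chart types described above, the sketch below draws a pie chart (as in Figures 1 and 3) and a single 100% stacked bar (as in Figure 5) using matplotlib alone. It does not reproduce the authors’ exact styling; the figure sizes, default colors, and legend placement are arbitrary choices. The example input is the readiness distribution reported in the Results section (3%, 7%, 15%, 40%, and 35% from SD to SA).

```python
import matplotlib
matplotlib.use("Agg")  # off-screen rendering, suitable for saving figures to files
import matplotlib.pyplot as plt

CATEGORIES = ["SD", "D", "N", "A", "SA"]


def pie_chart(percentages, title):
    """Pie chart of the share of respondents in each Likert category."""
    fig, ax = plt.subplots(figsize=(5, 5))
    ax.pie(percentages, labels=CATEGORIES, autopct="%1.1f%%", startangle=90)
    ax.set_title(title)
    return fig


def stacked_bar_100(percentages, title):
    """One horizontal bar split into Likert segments that sum to 100%."""
    fig, ax = plt.subplots(figsize=(7, 2))
    left = 0.0
    for label, value in zip(CATEGORIES, percentages):
        # Each segment starts where the previous one ended.
        ax.barh([0], [value], left=left, label=label)
        left += value
    ax.set_xlim(0, 100)
    ax.set_yticks([])
    ax.set_xlabel("Percentage of students")
    ax.set_title(title)
    ax.legend(ncol=len(CATEGORIES), loc="upper center",
              bbox_to_anchor=(0.5, -0.45), frameon=False)
    return fig
```

For example, `stacked_bar_100([3, 7, 15, 40, 35], "Willingness (Readiness)").savefig("figure5.png", dpi=300, bbox_inches="tight")` writes a Figure 5-style chart to disk; `bbox_inches="tight"` keeps the legend below the bar from being cropped.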

Ethical Statement: Informed consent was obtained from all subjects involved in the study. Participation was voluntary and anonymous.

4. Results

Sample Demographics. A total of 150 student responses were analyzed. The mean age was 18.9 years (SD = 0.8), reflecting that most respondents were first- or second-year undergraduates. In terms of gender, 60% of respondents identified as male (n = 90) and 40% as female (n = 60), matching the intended stratification. Students came from a variety of majors, including economics, digital technology, and business administration, giving a reasonably broad representation of the Narxoz student body in terms of academic background. All participants had used the university’s Canvas LMS for at least one semester, and about 75% reported using it daily or weekly for course-related activities. This familiarity matters because it grounds their responses about AI agents in a platform they already know. None of the students had formally used an AI tutoring system in their classes, although about 30% had tried general AI tools (such as ChatGPT or AI-based language learning apps) on their own, indicating a baseline awareness of AI among a substantial subset of the sample.

Instrument reliability. The instrument demonstrated good internal consistency: α = 0.79 for Trust, 0.82 for Usefulness, 0.77 for Value, 0.81 for Confidentiality (Privacy concerns), and 0.83 for Willingness (Readiness).

Perceived Usefulness. Students overwhelmingly believed that proactive AI agents would benefit their learning. The average usefulness score (on a 1–5 scale) was 4.2, between “Agree” and “Strongly Agree” on the usefulness statements. Figure 1 shows the distribution of responses to a representative usefulness item (“AI agents would help me learn faster or more effectively”): 43.3% Agree and 33.3% Strongly Agree, so 76.6% in total expressed a favorable view of the agents’ usefulness. About 13.3% were Neutral, and only a small minority (~6.7%) disagreed. This skew toward the agree end of the spectrum suggests that students recognize, or at least believe in, the potential of AI assistance. It is consistent with general student enthusiasm for technology: many are accustomed to using smart apps and services in daily life and expect similar efficiency gains in academics. The high perceived usefulness also matches findings in other contexts that young learners often see personalized technology as helpful for learning [1]. Our scenario description gave examples of agents providing timely reminders or study tips, which likely resonated with students who could immediately see how forgetting less or receiving targeted advice might improve their outcomes. This strong positive sentiment provides a foundation for acceptance: according to TAM, if a system is seen as useful, students will have a motive to use it [16]. Notably, however, a non-trivial fraction (around one-fifth) did not actively agree, indicating that while few students reject the idea outright, some are uncertain about the benefit. These neutral students may be adopting a “wait and see” attitude, thinking that AI agents might help but unsure until they experience them. Pilot implementations that showcase evidence of effectiveness could convert this neutral group into adopters.

Trust levels were moderately positive but noticeably lower than perceived usefulness, with a mean trust score of approximately 3.6 on the 5-point scale. Figure 2 presents responses to a representative trust item: “I would trust the AI agent’s suggestions for my studies.” As shown, 60 students (40%) agreed and 45 students (30%) strongly agreed, so 70% of respondents expressed at least some trust in the proactive AI agent. Another 25 students (17%) remained neutral, indicating caution or indecision, while 13% expressed some level of distrust (8% disagreed, 5% strongly disagreed). This distribution reflects a generally favorable but qualified orientation toward trusting AI: A clear majority are inclined to rely on the agent’s recommendations, but the neutrality and mild skepticism indicate that trust is still forming at this conceptual stage. This is understandable, since trust is typically shaped by experience and interaction. Before using the system, many students may be thinking, “It seems promising, but I’d need to see how it actually performs before fully trusting it,” which is consistent with the broader AI adoption literature, where users express openness alongside a healthy degree of caution toward automated systems. Interestingly, no marked gender difference in trust was apparent: Male and female students had similar trust averages (3.7 vs. 3.5), a small difference that we did not test inferentially, in keeping with the descriptive design of this study. This suggests that trust in AI may depend more on individual experience with technology or on personality factors than on gender, at least in this sample.
The neutral group of students (17%) likely represents those who simply do not know whether the AI will be trustworthy. They may be imagining scenarios in which the AI is wrong or fails to understand them as a human would, reflecting a healthy skepticism. By contrast, the majority with positive trust probably assume that if the university implements such a system, it will have been tested and will be accurate. Some may also be projecting positive experiences with AI in other domains (e.g., navigation apps or autocorrect) onto this context.

From a design perspective, the fact that trust is not as high as perceived usefulness implies that system designers and educators should take active steps to build trust—for example, by providing explanations for the AI’s suggestions (increasing transparency) or by demonstrating the AI’s track record of correctness early on. According to Lee and See’s framework [17], trust will calibrate over time with use, but initial communication about what the AI can and cannot do will help set appropriate trust levels. Our results reinforce that trust needs to be earned; even though students are tech-savvy, they will not blindly trust a new AI tutor without some proof of its credibility.

Students generally expect proactive AI agents to have a positive influence on education. This construct received one of the highest average scores (mean ≈ 4.3), reflecting strong optimism about the role of AI in enhancing academic performance and learning efficiency. As seen in Figure 3, 36.7% of students agreed and an equal 36.7% strongly agreed with the statement, “Proactive AI tutors will help students learn more effectively in the long run.” This means that nearly 74% of respondents expressed positive expectations. A further 13.3% remained neutral, possibly indicating curiosity or a wait-and-see approach, while only 9.3% disagreed and 4% strongly disagreed—together totaling just over 13% who were skeptical. This distribution reinforces the interpretation that students are generally optimistic not only about how AI might assist them individually, but also about its broader educational impact at the institutional level.

This overwhelming positivity may reflect a broader cultural belief that technology drives progress. Many of today’s students have seen how digital tools (from search engines to educational apps) open new learning opportunities, so the idea that a more advanced AI could further improve education seems plausible to them. Some students may also be reasoning that if one-on-one tutoring is the gold standard (as in Bloom’s “2 sigma” finding) and AI can provide quasi-one-on-one tutoring, then overall learning in a class could improve significantly. Their high expectations align with optimistic voices in the literature that view AI as a means to augment human teaching capacity and personalize learning at scale [1,2].
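Bloom’s finding is usually stated as an effect size of about two standard deviations. Assuming normally distributed achievement with equal spread in both groups (an idealization), this places the average tutored student at roughly the 98th percentile of the conventionally taught class, which is why the result serves as a benchmark for tutoring technologies:

```latex
d = \frac{\mu_{\text{tutored}} - \mu_{\text{class}}}{\sigma} \approx 2,
\qquad
\Pr\left(X_{\text{class}} < \mu_{\text{tutored}}\right) = \Phi(d) \approx \Phi(2) \approx 0.977
```

Here \(\Phi\) is the standard normal cumulative distribution function and \(X_{\text{class}} \sim \mathcal{N}(\mu_{\text{class}}, \sigma^2)\) is the score of a randomly chosen conventionally taught student.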

It is worth noting that the expected-impact items were framed in positive terms; we did not explicitly ask about potential negative impacts in this construct (those aspects fell under concerns). This result should therefore be interpreted as the proportion of students who see clear benefits, not as a claim that there are no downsides. Indeed, some of the same students who “Strongly Agree” that AI will improve learning might also “Agree” that they have concerns about AI; people often hold hopeful and cautious views about technology simultaneously.

In summary, Narxoz students appear highly optimistic that proactive AI MAS could enhance educational quality. This bodes well for initiatives aiming to leverage AI for improving instruction: Student buy-in, in terms of believing it can help, is largely present. Managing these expectations will be important; if implementation occurs and the AI agents under-deliver relative to the high hopes, there could be disappointment or backlash. Therefore, while it is good to see enthusiasm, educators and AI developers should be transparent about what to realistically expect (e.g., “The AI can help with certain tasks but is not a substitute for your effort or instructor guidance”).

The Concerns about AI dimension reflects the more cautious side of students’ attitudes. Although students generally recognize the benefits of AI agents, many still harbor reservations. The average score for concern-related items (reverse-coded so that a higher score indicates more concern) was approximately 3.4, suggesting a moderate level of concern overall. Figure 4 summarizes responses to a representative concern item, such as “I worry that using AI agents could have negative side effects.” Of the 150 respondents, 40 (26.7%) agreed and 35 (23.3%) strongly agreed, so 50% expressed a significant level of concern. Another 45 students (30%) responded neutrally, indicating uncertainty or ambivalence. At the other end, 20 students (13.3%) disagreed and 10 (6.7%) strongly disagreed, indicating that they were relatively unconcerned. This spread suggests that concern, while not held by a clear majority, is substantial and far from negligible. Institutions planning to implement AI-driven MAS should take this sentiment seriously by emphasizing privacy protection and transparency and by giving users agency over how AI is used in their learning environments.
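The aggregation used in this paragraph, collapsing SD/D and A/SA into disagree and agree shares, can be reproduced from the reported counts. The helper below is a hypothetical sketch; applied to the counts for the representative concern item (10, 20, 45, 40, and 35 from SD to SA), it returns 20% disagree, 30% neutral, and 50% agree.

```python
def collapse_likert(counts: dict) -> dict:
    """Collapse SD/D/N/A/SA counts into disagree, neutral, and agree percentages."""
    total = sum(counts.values())

    def share(*keys: str) -> float:
        # Percentage of all respondents falling into the given categories.
        return round(100 * sum(counts[k] for k in keys) / total, 1)

    return {"disagree": share("SD", "D"), "neutral": share("N"), "agree": share("A", "SA")}


concern_item = {"SD": 10, "D": 20, "N": 45, "A": 40, "SA": 35}
print(collapse_likert(concern_item))
```

Summing the counts before dividing, rather than adding already-rounded percentages, avoids rounding drift when the collapsed shares are reported.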

The types of concerns voiced across multiple items include the following:

- Confidentiality (Privacy concerns): Many students were uncertain about what data an AI agent might collect about them and who would have access to it, which is reasonable given growing awareness of data privacy issues. In an educational MAS, data such as student performance, study habits, and possibly even chat interactions could be tracked by AI, so students want assurance that these data are secure and used ethically.

- Misinformation/Accuracy: Several students were concerned that an AI might give incorrect answers or misleading guidance. This ties directly to trust: If the agent is occasionally wrong, students worry it could hurt their learning or grades. The concern likely stems from experience with AI outside class (e.g., knowing that ChatGPT can sometimes state incorrect answers confidently).

- Over-reliance: Some respondents feared that easy AI help might make them dependent and reduce their own learning effort or critical thinking. For example, a sizable number agreed with the item “I’m concerned that if I rely on the AI agent, I won’t develop my own problem-solving skills.” This echoes a common refrain in debates about AI in education: Tools should not become crutches that impair skill development.

- Fairness and Bias: A few items addressed whether the AI agent could be biased or favor certain students. While not the top concern for most, students aware of algorithmic bias did register this issue. In our sample, students in the digital technology program were slightly more likely to agree with bias-related concerns, perhaps because their computer science coursework has made them aware of such issues.

- Role of Teachers: In an open feedback option, some respondents commented that increasing AI use might reduce interaction with human instructors or even threaten teaching jobs. Although the survey did not ask about this directly, it is a plausible concern in the back of students’ minds: Will learning become too automated or impersonal?

Given that half of the students report significant concerns, these cannot be ignored. The coexistence of high perceived usefulness and substantial concern underlines a point often made in the technology adoption literature: Users can be enthusiastic about benefits and anxious about risks at the same time. Effective change management would address these concerns through both communication and system design. For instance, clear privacy policies and student control over certain data sharing would help assuage privacy worries. To handle accuracy concerns, the AI’s scope could initially be limited to low-stakes advice, or the AI could provide supporting explanations and sources so that students can verify information. Over-reliance could be mitigated by designing the agent to encourage student reasoning (for example, by asking Socratic questions instead of giving answers outright).
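Two of these safeguards, student control over data sharing and limiting the agent to low-stakes actions, can be enforced in code before an agent sees any data or acts. The sketch below is hypothetical: the data categories, the privacy-by-default choice of sharing only deadlines, and the list of permitted actions are assumptions for illustration, not a Canvas feature or a design used in this study.

```python
from dataclasses import dataclass, field

# Hypothetical whitelist of low-stakes actions the agent may take on its own.
LOW_STAKES_ACTIONS = {"remind", "suggest_practice", "ask_guiding_question"}


@dataclass
class ConsentSettings:
    """Data categories a student has opted in to share with AI agents."""
    # Privacy by default: only deadline data is shared unless the student opts in.
    allowed: set[str] = field(default_factory=lambda: {"deadlines"})


def visible_data(record: dict, consent: ConsentSettings) -> dict:
    """Return only the fields of a student record covered by the student's consent."""
    return {key: value for key, value in record.items() if key in consent.allowed}


def permitted(action: str) -> bool:
    """Check that a proposed agent action stays within the low-stakes scope."""
    return action in LOW_STAKES_ACTIONS
```

Filtering the record before it reaches the agent, rather than trusting the agent to ignore fields, means a student who never opts in to sharing chat logs or quiz scores cannot have them used, whatever the agent’s logic.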

Interestingly, the neutral group may include students who have not yet reflected deeply on the risks, or who feel they lack enough information. Experience with an actual AI agent might shift their views—toward concern if they encounter issues, or toward acceptance if the experience is smooth and beneficial. The less concerned segment may simply trust the institution or developers to handle risks, or they may be inherently more optimistic or less critical of emerging technology.

In any case, the takeaway is clear: Students do have real concerns, and if left unaddressed, these concerns could hinder the acceptance and effectiveness of proactive AI agents in educational environments. Therefore, any implementation plan should include clear communication, user education, and opportunities for dialogue about potential issues. It should also include technical safeguards for privacy, fairness, and reliability. As one study noted, “Identifying and managing users’ concerns is essential for sustained technology adoption in education” [26].

The Willingness (Readiness) construct captures students’ self-assessed preparedness and openness to using multi-agent AI tools in their educational experience. The average score for this dimension was approximately 3.8, reflecting a generally favorable disposition toward integrating such technologies.

As illustrated by the 100% stacked bar in Figure 5, approximately 35% of students strongly agreed and 40% agreed that they feel ready to use AI-driven learning agents, for a total of 75% expressing readiness. About 15% remained neutral, indicating some uncertainty, while 7% disagreed and 3% strongly disagreed, representing a small group who currently do not feel prepared.

This distribution is encouraging: Three-quarters of students already see themselves as ready to engage with these tools. Readiness here implies not only technical skills (e.g., navigating platforms like Canvas), but also psychological readiness—openness to new forms of instruction, adaptability, and confidence. This high level of readiness likely reflects the digital fluency of today’s university students, many of whom have grown up with internet-connected devices and online tools. At Narxoz University, ongoing investments in digital learning infrastructure and digital literacy may also play a role in cultivating this confidence.

The minority of students who indicated they are not ready may include those less familiar with advanced technologies or who prefer conventional learning environments. For these students, support mechanisms such as onboarding tutorials, low-stakes practice sessions, or optional training could be beneficial.

It is also worth noting that the 15% who selected “Neutral” might not be opposed, but simply unsure about what using AI agents entails. As with any innovation, hands-on exposure is likely to shift these perceptions, potentially increasing readiness once students realize the tools are intuitive and helpful. That said, some students may overestimate their own readiness, especially without understanding the complexity or learning curve involved. Follow-up research after actual implementation would be valuable in tracking these shifts.

Strategically, with three out of four students already reporting readiness, there is a strong foundation for the successful rollout of AI-enhanced MAS platforms. This majority can act as peer influencers, normalizing the use of AI tools and encouraging hesitant students to engage, a mechanism captured by the “social influence” construct in the Unified Theory of Acceptance and Use of Technology (UTAUT) [24].

Approximately 69% of students agreed or strongly agreed that they feel prepared to use proactive AI agents in their studies, 20% were neutral, and about 11% did not feel ready for such tools.

The results obtained are of significant importance for educational management. In order for proactive AI-based agents to be effectively accepted and utilized, university leadership should regard digital trust and student readiness as key elements of the digital transformation strategy. This includes change management, clear communication of the value of the implemented solutions, and support for both students and faculty during the adaptation process.

5. Discussion

This study set out to theoretically examine how undergraduate students at Narxoz University might perceive the integration of proactive AI agents within a multi-agent system in their digital learning environment. The patterns observed offer valuable insights when considered in light of existing research and the current educational context. In this section, we discuss the implications of the key findings—high perceived usefulness and expected impact, moderate trust and significant concerns, and overall readiness—and how these align with or diverge from prior studies. We also interpret these findings through the lens of Sustainable Development Goals 4 and 8, exploring how proactive AI in education could play a role in advancing these goals.

Trust in AI systems is not static—it evolves through direct experience, contextual feedback, and institutional cues. A student’s initial trust level may increase or decrease based on system behavior, clarity of explanations, and instructor endorsement. For example, pilot implementations often recalibrate trust: Students may shift from skeptical curiosity to confident use when they observe reliable outcomes and transparent AI functioning. Institutions can shape this dynamic by providing scaffolded experiences and consistently reinforcing the human–AI partnership model.
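One simple way to picture this recalibration is to treat a student’s trust as a belief about how often the agent’s suggestions turn out to be helpful, updated after each interaction. The Beta–Bernoulli update below is an illustrative modeling choice that this study did not estimate; the priors chosen to represent a skeptical or a confident student are assumptions.

```python
from dataclasses import dataclass


@dataclass
class TrustEstimate:
    """Beta(a, b) belief about the share of the agent's suggestions that prove helpful."""
    a: float = 1.0  # prior pseudo-count of helpful suggestions
    b: float = 1.0  # prior pseudo-count of unhelpful suggestions

    def update(self, helpful: bool) -> None:
        """Bayesian update after observing one suggestion's outcome."""
        if helpful:
            self.a += 1
        else:
            self.b += 1

    @property
    def mean(self) -> float:
        """Expected reliability under the current belief."""
        return self.a / (self.a + self.b)
```

Starting from Beta(1, 3) (expected reliability 0.25) and Beta(3, 1) (0.75), twenty helpful suggestions move both estimates above 0.85 (0.875 and about 0.958), so initially divergent beliefs converge as shared evidence accumulates. Conversely, a run of unhelpful suggestions would lower trust, which is the calibration the paragraph describes.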

Student Acceptance and Technology Adoption: The strong positive response on perceived usefulness signals that students are primed to see value in AI assistance. This mirrors findings from technology acceptance research, where perceived usefulness is often the single most influential factor in a user’s decision to adopt a new system [16]. Our result is consistent with recent surveys indicating that students generally believe that digital tools (and, by extension, AI) can make learning more efficient [19]. It is worth noting that Narxoz students, similar to their global peers, juggle coursework, part-time jobs, and other responsibilities; therefore, an AI that could save them time or help improve grades is naturally attractive. The theoretical implication is that interventions to introduce proactive agents should highlight these usefulness aspects; for instance, emphasizing that the agent can help clarify difficult concepts, keep you on schedule, and provide instant feedback, all tangible benefits aligned with student needs.

However, the adoption will not hinge on usefulness alone. The relatively moderate levels of trust observed suggest that students will need reassurance and evidence of the system’s reliability. In practice, this means that any pilot implementation should perhaps start small, allowing students to test the agent’s recommendations in low-stakes scenarios to build trust. Trust in educational AI is a growing area of research; our findings echo Nazaretsky et al. (2023) [20], who developed a trust measurement for AI in education and found that transparency and student understanding of AI significantly improve trust. An actionable takeaway is that developers should incorporate explainable AI techniques; for example, if an agent suggests a study resource, it could briefly explain why it chose that resource (“You scored low on last week’s quiz on Topic X, so I suggest reviewing this chapter.”). Such transparency can turn the black-box nature of AI into a more open dialog, thereby increasing student comfort in trusting the agent. Moreover, involving instructors in mediating the AI’s role can help; if a trusted teacher endorses or validates some of the AI agent’s suggestions initially, students are likely to transfer some of that trust (a phenomenon akin to borrowing credibility) [27].
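The explanation example above can be sketched as a recommendation that always carries its reason. Everything here is hypothetical (the `Suggestion` type, the 0.6 threshold, the topic-to-chapter mapping); the point is only that the rationale is generated from the same data that triggered the suggestion, so the explanation cannot drift from the actual decision.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Suggestion:
    resource: str
    rationale: str  # plain-language reason shown to the student with the suggestion


def suggest_review(topic_scores: dict, chapters: dict,
                   threshold: float = 0.6) -> Optional[Suggestion]:
    """Recommend review material for the weakest topic below threshold, with its reason."""
    weak = {topic: score for topic, score in topic_scores.items() if score < threshold}
    if not weak:
        return None  # nothing to flag, so the agent stays silent
    topic = min(weak, key=weak.get)
    return Suggestion(
        resource=chapters[topic],
        rationale=(f"You scored {weak[topic]:.0%} on last week's quiz on {topic}, "
                   f"so I suggest reviewing {chapters[topic]}."),
    )
```

For example, with scores of 0.45 on Topic X and 0.80 on Topic Y, the function recommends the chapter mapped to Topic X and attaches the rationale “You scored 45% on last week's quiz on Topic X, so I suggest reviewing Chapter 3.” when Topic X maps to Chapter 3.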

The finding that concerns are widely held (by half of the students) is significant. It underscores that any rollout of AI in education must proactively address ethical and practical issues, not as an afterthought but as a core component of the implementation strategy. Students’ top concerns in our survey (privacy, accuracy, over-reliance) align with the literature on challenges of AI in education [2,19]. For instance, the UNESCO 2023 report on Technology in Education warns that without proper governance, EdTech (including AI) can exacerbate inequalities or pose risks to student data privacy. In the context of Narxoz University or similar institutions, this means that administrators and technology providers should craft clear policies: Who owns the data collected by AI agents? How are they stored and used? Can students opt out of certain data collection? Addressing these questions transparently can mitigate privacy fears. On the accuracy front, setting the correct expectations is key. Students should be informed that the AI agent is a support tool, not an oracle; its suggestions are helpful aids but not guarantees of correctness. In the same vein, training sessions or guides on “How to use the AI assistant effectively” could teach students to critically evaluate the AI’s help (for example, cross-checking an AI-provided solution or using it as a hint rather than a final answer). This approach fosters what one might call mindful use of AI, reducing the risk of over-reliance or blind acceptance.

It is encouraging that readiness is quite high among the student body. This suggests that once trust is earned and concerns are addressed, there is little attitudinal barrier to students actually using the system. Many are ready, and perhaps even eager, to try it; they see themselves as capable of handling new tech. This readiness is an asset; it means an implementation could leverage student ambassadors or early adopters to get things going. One could envision a phased introduction, starting with a subset of courses or a voluntary pilot where keen students sign up to use the proactive agent. Their experiences (hopefully positive) and word-of-mouth would then help bring others on board. This peer influence strategy has worked in other EdTech deployments, effectively creating a culture where using the new tool is seen as the norm or even as an advantage in studying.

The positive student outlook on the usefulness and impact of AI agents provides theoretical support for the idea that MAS in education can indeed enhance learning processes (validating, at least in perception, the claims made in earlier MAS studies [11,15]). If students expect better outcomes with AI, they may be more engaged when such systems are used, which can become a self-fulfilling effect; higher engagement often leads to better learning. Trust and concerns, however, introduce important moderating variables in the theoretical model of MAS adoption. A conceptual framework emerging from this could integrate elements of TAM/UTAUT with trust and risk factors specifically for AI. For example, one might extend UTAUT by adding Perceived Risk (which could be influenced by privacy and accuracy concerns) as a factor that negatively affects Behavioral Intention to use the AI, and Trust as a factor that positively affects it. Our study provides empirical data points for these factors, showing that while intention (readiness) is high, risk and trust are meaningful considerations that can temper actual use. Researchers could build on this by conducting real experiments to quantify how interventions (like providing explanations or data privacy guarantees) shift trust and usage metrics.
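Written as a linear structural equation, the proposed extension might look as follows. The notation is illustrative and was not estimated in this study: BI is behavioral intention; PE, EE, and SI are the UTAUT determinants performance expectancy (the counterpart of perceived usefulness), effort expectancy, and social influence; T is trust; PR is perceived risk; and the sign constraints encode the hypothesized directions described above, not results.

```latex
\mathrm{BI} = \beta_0 + \beta_1\,\mathrm{PE} + \beta_2\,\mathrm{EE} + \beta_3\,\mathrm{SI}
            + \beta_4\,\mathrm{T} + \beta_5\,\mathrm{PR} + \varepsilon,
\qquad \beta_4 > 0, \quad \beta_5 < 0
```

An experiment of the kind suggested above would then test whether interventions such as explanations or privacy guarantees raise T or lower PR, and whether BI and actual usage shift accordingly.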

Another interesting theoretical angle is the role of proactivity itself. Traditional intelligent tutoring systems often wait for student input; a proactive system changes the dynamic. Some learning theories might caution that too much unsolicited help can impede productive struggle, a necessary part of learning. The fact that students largely welcomed proactive help (with the caveat of not wanting to become dependent) suggests a sweet spot might exist in agent proactivity. The MAS could be designed to offer help proactively but in a way that still prompts student thinking (for instance, by asking guiding questions rather than giving answers outright). This aligns with Vygotsky’s Zone of Proximal Development: The agent should step in when the student is at the edge of their independent capability, not too early and not too late. The practical challenge is tuning the agent’s triggers and sensitivity to achieve this balance, something that can be informed by both educational theory and iterative user testing. Our findings imply that students are open to these agents acting on their own (since usefulness and impact are rated high), but to maintain their agency in learning, the AI’s actions should be supportive rather than controlling. A theoretical model of “assistance versus autonomy” could be worth exploring: How do we quantitatively or qualitatively determine the optimal level of agent autonomy that maximizes learning? Our student feedback that they remain concerned about over-reliance indicates that preserving student autonomy (perhaps by letting them choose when to accept or ignore an agent’s suggestion) is important.
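The balance between assistance and autonomy can be made concrete as a trigger rule. In the hypothetical sketch below, the agent stays silent until a minimum amount of productive struggle has occurred, then escalates from a Socratic guiding question to progressively more directive help, and never intervenes for a student who has declined proactive help. The thresholds (two failed attempts, five minutes) and the three-step ladder are placeholders that would need tuning through the iterative user testing the paragraph calls for.

```python
from typing import Optional

# Hypothetical escalation ladder, from least to most directive help.
HINT_LADDER = [
    "guiding_question",  # Socratic prompt, e.g. "What is the first step?"
    "conceptual_hint",   # point to the relevant concept
    "worked_example",    # show a similar solved problem
]


def next_help(failed_attempts: int, minutes_stuck: float, declined: bool,
              min_attempts: int = 2, min_minutes: float = 5.0) -> Optional[str]:
    """Offer help only after some productive struggle, escalating with further failures."""
    if declined:
        return None  # the student has chosen to work without proactive help
    if failed_attempts < min_attempts or minutes_stuck < min_minutes:
        return None  # too early: let the student keep trying
    # One step up the ladder per failed attempt beyond the minimum, capped at the top.
    level = min(failed_attempts - min_attempts, len(HINT_LADDER) - 1)
    return HINT_LADDER[level]
```

Starting with a question rather than an answer reflects students’ stated worry about over-reliance, while the `declined` flag keeps the final say with the student.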

Interpreting our results in the context of SDG 4 is instructive. SDG 4 emphasizes inclusive and equitable quality education. As our survey suggests, proactive AI agents are perceived as having the potential to improve quality by providing personalized support to each learner. If 86% of students think AI can make learning more effective, they are essentially recognizing that these tools could better meet their individual learning needs, a cornerstone of quality education. Moreover, because MAS can be scaled, such personalized support could, in principle, reach every student in a class, including those who are too shy to ask questions or who would otherwise receive little individual attention. This speaks to inclusivity: An AI agent does not tire and, if well designed, need not be biased in whom it helps, so every student can benefit from some level of tailored assistance. In practice, this could help weaker students catch up (the agent can devote extra time and effort to them, which a single teacher with 100 students may find hard) while challenging advanced students with extension material, potentially narrowing the achievement gap. Of course, AI content must be accessible (in terms of language, disability accommodations, etc.) to truly serve all students, but these are implementation requirements that can be addressed. Our sample did not specifically include students with disabilities, but proactive agents could be even more transformative for them (e.g., an agent could proactively provide captions or translations, or suggest alternative learning modalities suited to a student’s needs).

Students’ concerns, if properly addressed, also bear on quality. For instance, ensuring data privacy and security is part of providing a safe learning environment, an element of quality education that may not be explicitly stated in SDG 4 but is inherent in the idea of a positive learning environment. If students do not have to worry about how their data is used, they can engage more freely with AI tools.

One caution is that quality is not just about outcomes (test scores) but also about the learning experience. Proactive AI should enrich the learning experience rather than make it mechanized or detached. Students’ fears of over-reliance and reduced self-efficacy suggest that they value the learning process itself (they do not want AI simply to spoon-feed answers). Quality education with AI will therefore require agents designed to encourage active learning. For example, instead of simply giving answers, a proactive agent might guide a student through a step-by-step solution, prompting them to attempt each next step with targeted nudges, so that the student still does the cognitive work. Achieving SDG 4 through AI is not automatic; it requires mindful integration of AI where it amplifies good pedagogical practice. Our research supports this by showing that students are positive but also mindful of pitfalls, giving a nuanced mandate to educators and technologists: “We welcome these tools, but implement them in a way that truly enhances learning and doesn’t undermine our development.”
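A minimal sketch of this kind of step-by-step nudging, assuming each problem step comes with a hand-authored list of hints ordered from least to most directive. `HintLadder` and `PROMPT_TO_ATTEMPT` are hypothetical names; the design choice illustrated is that the agent asks for an attempt before escalating and stops short of revealing the full answer, handing over to the instructor instead.

```python
from __future__ import annotations

from dataclasses import dataclass

PROMPT_TO_ATTEMPT = "What have you tried so far? Have a go at the next step first."


@dataclass
class HintLadder:
    """Escalating scaffolds for one problem step, least to most directive (hypothetical)."""
    hints: list[str]
    level: int = 0

    def next_hint(self, student_attempted: bool) -> str | None:
        # Ask for an attempt before escalating, so the student does the cognitive work.
        if not student_attempted:
            return PROMPT_TO_ATTEMPT
        # Ladder exhausted: hand over to the instructor rather than reveal the answer.
        if self.level >= len(self.hints):
            return None
        hint = self.hints[self.level]
        self.level += 1
        return hint


ladder = HintLadder([
    "What quantity is the question asking you to find?",
    "Which formula from this week's lecture relates those quantities?",
    "Substitute the given values and solve for the unknown.",
])
```

A prompt without an attempt does not consume a hint level, so a student cannot skip to the most directive hint simply by asking repeatedly.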

Our findings relate to SDG 8 in two ways. First, education that uses AI tools is itself preparation for the future workforce. The readiness and optimism expressed by the majority indicate that students are likely to engage with AI tools, which will build their digital skills and contribute directly to their employability in an AI-rich job market. If about 80% already feel ready before even using the tools, it is plausible that this share, along with their skill level, would rise further after a few semesters of working alongside AI agents. Students would gain experience in critically evaluating AI outputs, collaborating with AI (almost as a team member in projects), and managing their own learning with AI support, all of which are emerging competencies in many professions. For instance, employers in finance, marketing, and IT now often expect new hires to be comfortable using AI-driven analytics or decision support systems. By introducing AI into education, we give students an approximation of workplaces where AI assistants are commonplace. This can ease the school-to-work transition, supporting SDG 8’s aim of full and productive employment for youth.

Second, from a broader economic perspective, if AI helps students learn more effectively (as ~80% expect), and possibly faster, it could produce a more skilled workforce in less time. Improved educational outcomes can translate into higher productivity growth, a link recognized in human capital theory and implicitly in SDG 8. Additionally, as our students’ responses hinted, AI-augmented education might free human teachers to focus on more complex or creative aspects of teaching (since AI can handle repetitive queries or routine feedback). This could improve teachers’ working conditions, in line with the “decent work” component. If teaching involves less repetitive work, such as writing the same feedback on 100 assignments, and more high-level mentoring (with AI handling first-pass feedback), teacher job satisfaction could improve. A more satisfied and effective teaching workforce could contribute indirectly to economic growth by nurturing better learners and reducing teacher turnover.

Nonetheless, some concerns point to challenges for SDG 8. One is the fear of automation replacing jobs (e.g., could AI agents reduce the need for some teaching or tutoring positions?). Although our study took the student perspective, this angle is important. Sustainable economic growth (SDG 8) is about creating decent jobs, and if AI is not managed, certain educational support roles might diminish. The key, as the International Labour Organization suggests, is that AI should augment rather than replace human work, and new roles (such as AI curriculum specialists and learning data analysts) may emerge [28]. Our students did not strongly voice the view that “AI will replace teachers” (though a few were concerned about reduced interaction with instructors), which may reflect a belief that human roles remain vital. This belief can be reinforced by how AI is implemented: Positioning the agent as an assistant to both student and teacher rather than as an independent teacher. In communications, one might emphasize, “The AI helps your professor help you; it doesn’t replace your professor.” Such messaging signals that human oversight remains, consistent with the complementarity between AI and human work that is often cited as essential for positive outcomes of AI adoption in workplaces.

Integrating AI Agents in Canvas LMS: Practical Outlook. Although this is not the core of our paper, our results have implications for how a system like Canvas could incorporate these ideas. As Instructure’s announcements indicate, LMS platforms are beginning to open up to AI integrations [29]. Because Narxoz uses Canvas, a proactive MAS would likely be delivered through that platform. Students’ readiness and positive stance suggest that if Canvas offered, say, an AI tutor button or a proactive alert system, students would likely use it (provided it is well designed). Canvas could leverage MAS through multiple agents: One agent monitors assignment submissions and proactively sends reminders, or even asks “Would you like an extension?” when a deadline is near and a student has not started (subject to institutional policy); another agent analyzes discussion forum participation and nudges less active students (“There’s an interesting thread on this week’s topic you might join”). Our usefulness ratings suggest that students would find such actions useful. However, they would also need transparency; for example, Canvas might include a “Why am I seeing this suggestion?” feature to address trust.
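The multi-agent arrangement described above can be sketched as independent agents that each inspect a student's context and may propose one suggestion, with every suggestion carrying a human-readable reason for a "Why am I seeing this suggestion?" panel. This is not Canvas code: `StudentContext`, the agent classes, the 48-hour window, and the `extensions_allowed` policy flag are hypothetical, and retrieving real data would go through whatever integration the LMS actually provides.

```python
from __future__ import annotations

from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import Optional, Protocol


@dataclass
class StudentContext:
    """Hypothetical snapshot of LMS data; field names are illustrative, not Canvas API fields."""
    now: datetime
    next_due: Optional[datetime]
    assignment_started: bool
    forum_posts_this_week: int


@dataclass
class Suggestion:
    agent: str
    message: str
    reason: str  # text for a "Why am I seeing this suggestion?" panel


class Agent(Protocol):
    name: str

    def propose(self, ctx: StudentContext) -> Optional[Suggestion]: ...


class DeadlineAgent:
    name = "deadline"

    def __init__(self, window: timedelta = timedelta(hours=48),
                 extensions_allowed: bool = False) -> None:
        self.window = window
        self.extensions_allowed = extensions_allowed  # set by institutional policy

    def propose(self, ctx: StudentContext) -> Optional[Suggestion]:
        if ctx.next_due is None or ctx.assignment_started:
            return None
        remaining = ctx.next_due - ctx.now
        if not timedelta(0) < remaining <= self.window:
            return None
        hours = int(remaining.total_seconds() // 3600)
        question = ("Would you like an extension?" if self.extensions_allowed
                    else "Would you like help planning it?")
        return Suggestion(self.name, f"Your next assignment is due soon. {question}",
                          f"Due in about {hours} h and not yet started.")


class ForumAgent:
    name = "forum"

    def __init__(self, min_posts: int = 1) -> None:
        self.min_posts = min_posts

    def propose(self, ctx: StudentContext) -> Optional[Suggestion]:
        if ctx.forum_posts_this_week >= self.min_posts:
            return None
        return Suggestion(self.name,
                          "There's an interesting thread on this week's topic you might join.",
                          f"You have posted {ctx.forum_posts_this_week} time(s) this week.")


def collect(agents: list[Agent], ctx: StudentContext) -> list[Suggestion]:
    """Coordinator: gather each agent's proposal, skipping agents with nothing to say."""
    return [s for a in agents if (s := a.propose(ctx)) is not None]
```

Attaching the reason at the moment a suggestion is created means the explanation shown to the student is the rule that actually fired, rather than a post hoc description.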

One of our findings is that 55% of students are concerned about issues such as privacy. Canvas and similar platforms will need to be very clear about data usage. They might include an AI privacy notice and student controls, such as the ability to turn off the AI’s proactive suggestions (though few students may do so if they find the suggestions useful). Offering that agency could actually build trust, since knowing that one can opt out often makes users more comfortable opting in [30].

Because a large fraction of Narxoz courses are now hybrid or online (especially after pandemic-era adaptations), proactive agents in Canvas could significantly help students who lack face-to-face access to instructors, in effect giving instructors an “extra hand.” For example, Canvas could integrate an agent that identifies common questions students ask in emails and proactively populates a course FAQ or hints section, reducing repetitive Q&A for teachers. Given their usefulness ratings, students in our study would likely appreciate such a feature, which would also save them waiting time.
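The FAQ agent could start from something as simple as the sketch below, which normalizes question text and ranks questions by the number of distinct students who asked them. Exact matching after normalization is a deliberate simplification (a real system would need to group paraphrases), and the input format and `min_students` threshold are assumptions; any such processing of student emails would also fall under the data-usage transparency discussed above.

```python
from __future__ import annotations

import re
from collections import defaultdict


def normalize(question: str) -> str:
    """Lowercase, replace punctuation with spaces, and collapse whitespace."""
    question = re.sub(r"[^\w\s]", " ", question.lower())
    return " ".join(question.split())


def faq_candidates(messages: list[tuple[str, str]],
                   min_students: int = 3) -> list[tuple[str, int]]:
    """Rank questions by how many distinct students asked them.

    messages: (student_id, question_text) pairs (hypothetical input format).
    Returns (normalized question, distinct askers), most frequent first.
    """
    askers: defaultdict[str, set[str]] = defaultdict(set)
    for student_id, text in messages:
        askers[normalize(text)].add(student_id)  # count each student once per question
    ranked = [(q, len(ids)) for q, ids in askers.items() if len(ids) >= min_students]
    ranked.sort(key=lambda item: (-item[1], item[0]))
    return ranked
```

Counting distinct students rather than raw messages keeps one persistent sender from pushing a question into the FAQ on their own.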

Our survey points to one potential friction point: The AI must not overwhelm or spam students. Those who were neutral or skeptical about trusting AI might be strongly put off if an agent bombards them with notifications. MAS design should therefore implement frequency and relevance filters (e.g., sending no more than X unsolicited messages per week and ensuring that each proactive action is highly relevant to the student’s current context) [31]. Fine-tuning these filters will probably require pilot testing and user feedback, which, ironically, an agent could also gather proactively by asking students, “Was this suggestion helpful?”
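The frequency and relevance filters, together with an opt-out control and the "Was this suggestion helpful?" prompt, can be combined in a small per-student gate. In this sketch `ProactiveGate`, the relevance score supplied by the calling agent, the rolling seven-day window, and the default cap standing in for X are all assumptions; it also assumes that messages are checked in chronological order.

```python
from __future__ import annotations

from collections import deque
from datetime import datetime, timedelta


class ProactiveGate:
    """Hypothetical per-student filter applied before any unsolicited agent message."""

    def __init__(self, max_per_week: int = 3, min_relevance: float = 0.7) -> None:
        self.max_per_week = max_per_week      # stands in for the "X messages per week" cap
        self.min_relevance = min_relevance    # relevance score threshold in [0, 1]
        self.sent: deque[datetime] = deque()  # delivery times within the rolling window
        self.opted_out = False
        self.feedback: list[bool] = []        # answers to "Was this suggestion helpful?"

    def opt_out(self) -> None:
        self.opted_out = True

    def allow(self, now: datetime, relevance: float) -> bool:
        """Return True (and record the delivery) if the message may be sent now."""
        if self.opted_out or relevance < self.min_relevance:
            return False
        # Forget deliveries that fall outside the rolling 7-day window.
        while self.sent and now - self.sent[0] >= timedelta(days=7):
            self.sent.popleft()
        if len(self.sent) >= self.max_per_week:
            return False
        self.sent.append(now)
        return True

    def record_feedback(self, helpful: bool) -> None:
        self.feedback.append(helpful)

    def helpful_rate(self) -> float | None:
        """Share of rated suggestions marked helpful, or None if none were rated."""
        return sum(self.feedback) / len(self.feedback) if self.feedback else None
```

The helpfulness rate gives pilot teams a simple signal for retuning `max_per_week` and `min_relevance`.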

To ensure successful adoption of MAS-based AI, universities should consider phased pilot implementations, coupled with comprehensive AI-literacy programs for both students and faculty. Transparent communication on AI decision-making, data privacy policies, and algorithmic accountability is essential for fostering trust and readiness.

Theoretical integration. Our findings align with recent evidence that AI tutors can accelerate learning [3] while complementing—not replacing—human instruction. At the same time, predictive systems require algorithmic audits to mitigate bias and false positives [4].
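One concrete component of such an audit is comparing the false positive rates of an early-warning flag across student groups, that is, how often students who were not actually at risk were flagged anyway. The sketch below computes this per group; the record format and the grouping variable are assumptions, and equal false positive rates are only one of several fairness criteria an audit would examine.

```python
from __future__ import annotations

from collections import defaultdict
from collections.abc import Hashable, Iterable


def false_positive_rates(
        records: Iterable[tuple[Hashable, bool, bool]]) -> dict[Hashable, float]:
    """Per-group false positive rate of an early-warning flag.

    Each record is (group, flagged, actually_at_risk). FPR = FP / (FP + TN),
    i.e. the share of students who were NOT at risk but were flagged anyway.
    """
    false_pos: defaultdict[Hashable, int] = defaultdict(int)
    negatives: defaultdict[Hashable, int] = defaultdict(int)
    for group, flagged, at_risk in records:
        if not at_risk:
            negatives[group] += 1
            if flagged:
                false_pos[group] += 1
    return {g: false_pos[g] / n for g, n in negatives.items()}


def max_fpr_gap(rates: dict[Hashable, float]) -> float:
    """Largest between-group difference in FPR (0.0 means parity on this metric)."""
    return max(rates.values()) - min(rates.values()) if rates else 0.0
```

A large gap would indicate that one group bears a disproportionate share of unnecessary interventions, which is the kind of false-positive burden the audit is meant to surface.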

Managerial implications for educational management. Universities should treat trust as a managed asset: disclose model capabilities/limits, provide opt-out controls, and institute human-in-the-loop review for sensitive actions (grading and risk flags) [19].
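Human-in-the-loop review can be sketched as a dispatcher that executes routine agent actions directly but holds sensitive ones until a named reviewer approves them. `SENSITIVE_ACTIONS` and the action labels are hypothetical; which actions count as sensitive is an institutional policy decision, and the sketch only shows that such a policy can be enforced in code while leaving an audit trail.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from enum import Enum
from typing import Optional


class Route(Enum):
    AUTO = "auto"
    HUMAN_REVIEW = "human_review"


# Hypothetical labels for actions the institution deems sensitive.
SENSITIVE_ACTIONS = frozenset({"grade", "risk_flag"})


@dataclass(frozen=True)
class AgentAction:
    kind: str
    student_id: str
    payload: str


@dataclass
class Dispatcher:
    """Routes agent actions: sensitive ones wait for a named human reviewer."""
    review_queue: list[AgentAction] = field(default_factory=list)
    # Audit log of executed actions with the approving reviewer (None = automatic).
    executed: list[tuple[AgentAction, Optional[str]]] = field(default_factory=list)

    def submit(self, action: AgentAction) -> Route:
        if action.kind in SENSITIVE_ACTIONS:
            self.review_queue.append(action)
            return Route.HUMAN_REVIEW
        self.executed.append((action, None))
        return Route.AUTO

    def approve(self, action: AgentAction, reviewer: str) -> None:
        self.review_queue.remove(action)  # raises ValueError if not pending
        self.executed.append((action, reviewer))

    def reject(self, action: AgentAction) -> None:
        self.review_queue.remove(action)  # discarded; nothing reaches the student
```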

Sustainability lens (SDGs 4 and 8). Skill-building around AI literacy and staff development links educational quality with employability, consistent with SDGs 4 and 8; our results support phased pilots with evaluation plans in LMS environments [5,6].

6. Conclusions

This theoretical study explored students’ perceptions of proactive AI agents in a future Multi-Agent System (MAS) for digital education at Narxoz University. Based on responses from 150 bachelor’s students, we analyzed five core constructs: perceived usefulness, trust, expected educational impact, concerns about AI, and readiness to adopt MAS-based tools. The results, while purely descriptive, offer important insights for shaping the direction of AI-enhanced learning environments. Students generally responded positively to the idea of AI agents enhancing their learning. Perceived usefulness received strong support, indicating that students believe such tools can directly improve their academic performance. Similarly, the majority expect a broader educational benefit from AI integration. Readiness levels were encouraging, with three-quarters of students indicating that they feel prepared to engage with these technologies, which likely reflects the digital fluency of the current student body.

Trust in AI emerged as a pivotal theme, not only as a determinant of student acceptance but also as a critical lever for building resilient educational systems. Institutions that foster trust through transparency, ethical standards, and mediated AI deployment strategies are more likely to sustain student engagement with intelligent systems. In this regard, trust is an asset to be cultivated, not simply assumed. From a sustainability standpoint, students who learn to trust and critically engage with AI in academic settings are better prepared to collaborate with AI in future work environments. This dual exposure, cognitive and ethical, offers a model for building a future-ready, AI-literate workforce and reinforces the connection between digital education and sustainable labor markets, advancing SDGs 4 and 8 [32].

At the same time, trust in AI agents, while moderately high, lagged behind perceptions of usefulness and impact, suggesting that real-life exposure and transparency in AI functionality will be important for building deeper confidence. Concerns about AI were also evident, with about half of the students expressing reservations related to privacy, fairness, and over-reliance on automation. These findings underscore that successful implementation of proactive AI systems in education will depend not only on technical development but also on institutional strategies for fostering understanding, managing expectations, and providing support. As this research is in its first year and no MAS has yet been implemented, these findings serve as a theoretical and empirical foundation for future work. Further research will involve pilot testing actual AI agents integrated with platforms such as Canvas LMS, followed by evaluation of student outcomes, behavior, and satisfaction. Ultimately, aligning technological innovation with student readiness and concerns will be key to advancing sustainable, inclusive, and effective digital education systems.

Limitations and Future Work

The study is limited to a single university context with a sample of 150 students, which may affect the generalizability of the findings; future research should replicate the survey across diverse institutions and cultural contexts. Future research should also implement a prototype proactive MAS in a controlled setting to test whether students respond as they anticipated. It would be particularly valuable to measure changes in trust and perceived usefulness after students have used the system for some time. Trust may be low initially and rise once students see the AI working correctly; conversely, overly optimistic expectations may be tempered if the AI proves less capable than imagined.
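The pre/post comparison of trust and perceived usefulness could be analyzed as in the minimal sketch below, which computes each student's change in a construct, the mean change, and the paired t statistic. It assumes responses can be linked per student (for example, via a pseudonymous code) and uses only the Python standard library; a p-value could be obtained with `scipy.stats.ttest_rel`, and because Likert items are ordinal, `scipy.stats.wilcoxon` is a common nonparametric alternative.

```python
from __future__ import annotations

import math
from statistics import mean, stdev


def paired_change(pre: list[float], post: list[float]) -> tuple[float, float]:
    """Mean within-student change (post - pre) and the paired t statistic.

    pre[i] and post[i] must belong to the same student. Returns
    (mean_difference, t); the t statistic has n - 1 degrees of freedom.
    """
    if len(pre) != len(post) or len(pre) < 2:
        raise ValueError("need two equal-length samples with at least 2 pairs")
    diffs = [b - a for a, b in zip(pre, post)]
    d_bar = mean(diffs)
    sd = stdev(diffs)  # sample standard deviation of the differences
    if sd == 0:
        raise ValueError("differences have no variance; t is undefined")
    t = d_bar / (sd / math.sqrt(len(diffs)))
    return d_bar, t
```

Working with within-student differences, rather than comparing two independent group means, is what allows the design to detect whether the same students' trust rises or falls after exposure to the system.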