1. Introduction

Solar irradiance forecasting plays a key role in optimizing energy systems, supporting agricultural planning, and improving weather prediction accuracy [1,2,3]. In energy management, integrating intermittent and stochastic renewable sources such as photovoltaic and wind power makes it significantly harder to maintain the real-time supply–demand balance across all time scales. Anticipating energy production through accurate solar and wind forecasts is therefore essential for grid stability (at very short time steps) and efficient electricity dispatch (at short time steps) [4,5,6,7]. Without anticipating production (and consumption), it is difficult, if not impossible, to pursue the energy transition towards a more sustainable model that is less dependent on fossil reserves [4]: to ensure the security of the electricity supply, the share of intermittent renewable energies would otherwise have to be limited. These forecasts also provide clear economic benefits, both for electricity market operations and for broader system reliability at longer time steps and horizons [8,9].

Numerous methods exist for predicting solar irradiance or irradiation across various time horizons. A full overview of these methods would be redundant and add few new insights compared with the many literature reviews published in recent years. Recent bibliographic studies have covered the available solar forecasting methods, such as numerical weather prediction (NWP) models, satellite imagery, sky imagers, machine learning, statistical models, and hybrid models [10,11]. In this study, only so-called time series models are considered. These models are typically applied to short-term solar radiation forecasting, from a few minutes to several hours ahead, and combine statistical techniques with artificial intelligence methods. Recent years have seen a growing use of machine learning and deep learning approaches [12,13,14,15]. Studies [13,14,15] highlight the importance of solar forecasting and the potential of machine learning and deep learning to overcome the limitations of traditional methods. Machine learning-based forecasting is also increasingly applied to complex systems under uncertainty, including supply-chain disruptions [16] and renewable energy system design [17], illustrating the versatility of such techniques in predictive modeling.

Given the impressive capabilities of machine learning methods and the rapid growth in computing power, researchers tend to multiply the amount of data fed to models as inputs, often overlooking that these data are not always available and that the interpretability of the results may be compromised [18]. It therefore appears necessary, before developing any prediction model, to carefully select the input data, whether endogenous or exogenous. The question that follows is how to select the useful variables. This is the question we will try to answer, after first examining what has been achieved in the literature.

A survey of Feature Selection Procedures (FSPs) in various machine learning (ML) fields [19] showed why FSPs are necessary and how they should be implemented. Three types of feature selection (FS) models were tested: filter models, the fastest; wrapper models, which offer high accuracy; and embedded models, which appear to be a good compromise.
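To make the three families concrete, the sketch below keeps four inputs out of ten with one representative of each, using scikit-learn: a univariate F-test (`SelectKBest`) as the filter, recursive feature elimination wrapped around a gradient boosting regressor (`RFE`) as the wrapper, and an L1-penalized LASSO (`SelectFromModel`) as the embedded method. The synthetic data and the chosen estimators are assumptions for illustration, not the procedures evaluated in [19].

```python
import numpy as np
from sklearn.datasets import make_regression
from sklearn.ensemble import GradientBoostingRegressor
from sklearn.feature_selection import RFE, SelectFromModel, SelectKBest, f_regression
from sklearn.linear_model import Lasso
from sklearn.preprocessing import StandardScaler

# Synthetic stand-in for a forecasting data set: 10 candidate inputs, 4 of them informative.
X, y = make_regression(n_samples=500, n_features=10, n_informative=4,
                       noise=5.0, random_state=0)
X = StandardScaler().fit_transform(X)
K = 4  # number of inputs to keep

# Filter: each input is scored independently of any forecasting model (univariate F-test).
filt = SelectKBest(score_func=f_regression, k=K).fit(X, y)

# Wrapper: a model is refitted repeatedly and the least important input is dropped each time.
wrap = RFE(GradientBoostingRegressor(random_state=0), n_features_to_select=K).fit(X, y)

# Embedded: selection happens during training, through the L1 penalty of LASSO;
# threshold=-np.inf makes SelectFromModel keep exactly the K largest |coefficients|.
emb = SelectFromModel(Lasso(alpha=1.0), max_features=K, threshold=-np.inf).fit(X, y)

for name, selector in [("filter", filt), ("wrapper", wrap), ("embedded", emb)]:
    print(name, np.flatnonzero(selector.get_support()))
```

The filter is cheapest because it never trains a forecaster; the wrapper trains one model per elimination step, which is what makes it slower but model-aware.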

A recent review aimed to guide researchers towards the most suitable FSP algorithm given the characteristics of their datasets, and concluded that no single feature selection approach works well for diverse objective functions across multiple domains [20]. This supports the need for the study we propose on solar radiation prediction.

In the energy domain, Xie et al. [21], after reviewing 46 papers, emphasize the importance of FSPs because they facilitate and improve the application of ML, particularly for the topic they studied: gas turbines. Salcedo-Sanz et al. [22] reviewed the applications of FSPs in renewable energy prediction problems (wind, solar, and marine) and showed that wrapper FSP approaches are the most widely used because of their higher performance.

In solar radiation forecasting, one proposed model incorporates feature extraction to identify the minimum set of features required to accurately forecast solar irradiance using deep reinforcement learning (DRL) [23]. This method significantly reduces the volume of data required for accurate irradiance forecasting under different weather patterns.

In ref. [24], a combined fuzzy strategy is used to fuzzify the data, and an improved multi-objective optimization algorithm is proposed to search for the optimal parameters; the results show that using an effective FSP to determine the optimal input variables consistently improves solar forecasting performance and model efficiency.

Often, only past endogenous data are used as inputs to forecasting models. For instance, to predict the global horizontal irradiation (GHI) at a future time horizon $t + n\Delta t$, denoted $\mathrm{GHI}(t+n)$, only the values $\mathrm{GHI}(t-i)$ with $i = 1, \ldots, m$ are used ($i$ indexing previous time steps). In this case, variable selection reduces to determining $m$, i.e., the number of necessary or optimal past GHI values needed to calculate the future GHI value, bearing in mind that $m$ can differ for each time horizon $n$. Such a study was carried out to determine the number of past observations (lags) at a one-hour time step to estimate particle number concentrations in Jordan [25]. Three methods were compared: the statistical autocorrelation function; a hybrid method (Long Short-Term Memory (LSTM) + genetic algorithm); and a parallel dynamic selection based on LSTM. The LSTM model with a heuristic algorithm gave the best results.

Otherwise, input selection is most often performed either with Pearson's correlation coefficient, probably the most widely used metric for assessing the relationship between variables (among the inputs themselves or between inputs and outputs), or by trying various combinations of inputs and retaining the combination that leads to the lowest error [26,27,28,29,30]. The Spearman coefficient is sometimes preferred because it measures the degree of monotonic relationship (whether linear or not) between two variables [31,32]. Castangia et al. [33] estimated the improvement brought by adding exogenous inputs to short-term GHI forecasting. They first identified the subset of relevant input variables for predicting GHI using different feature selection techniques: correlation, information, sequential forward selection, sequential backward selection, LASSO (least absolute shrinkage and selection operator) regression, and Random Forest. Exogenous inputs improve model performance from the 15-min horizon onwards, with an improvement of more than 22% for a 4-h forecast. The limited usefulness of exogenous variables at short prediction horizons was also shown by Rana et al. [34]. Moreover, some authors consider Pearson's coefficient to be of limited use for ML applications because it can only identify linear relationships between variables. Others [35,36] prefer Mutual Information (MI) criteria, which can detect both linear and non-linear relationships. A new feature selection method, improved forward feature selection (IFFS), was proposed by Tao et al. [37]; compared with LightGBM, IFFS improves prediction accuracy by 0.67% and computational efficiency by 20%. Solano et al. [26] performed an ensemble feature selection based on Pearson's coefficient, Random Forest, Mutual Information, and Relief for several ML forecasting techniques at three time horizons (1 h to 3 h), applied to solar radiation data measured in Salvador, Brazil; the proposed ensemble feature selection approach improves forecasting accuracy. Niu et al. [38] used a feature selection method called RReliefF and compared it with more conventional ones (LASSO, NCA (Necessary Condition Analysis), and MI); RReliefF appeared to outperform the three other selection techniques.
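The difference between the relevance measures discussed above shows up clearly on two synthetic relations: a monotonic but non-linear one, where Spearman's coefficient equals 1 while Pearson's does not, and a non-monotonic one (a parabola), where both correlation coefficients are close to zero while Mutual Information stays clearly positive. The sketch uses SciPy's `pearsonr` and `spearmanr` and scikit-learn's `mutual_info_regression` (a k-nearest-neighbour estimator, reported in nats); the synthetic data are an assumption for illustration only.

```python
import numpy as np
from scipy.stats import pearsonr, spearmanr
from sklearn.feature_selection import mutual_info_regression

rng = np.random.default_rng(0)
x = rng.uniform(-1.0, 1.0, 2000)  # candidate input, symmetric around zero

relations = {
    "monotonic, non-linear": np.exp(3.0 * x),  # always increasing, but curved
    "non-monotonic": x ** 2,                   # decreases then increases
}

scores = {}
for name, y in relations.items():
    r_p = pearsonr(x, y)[0]                     # linear association only
    r_s = spearmanr(x, y)[0]                    # monotonic association (rank-based)
    mi = mutual_info_regression(x.reshape(-1, 1), y, random_state=0)[0]  # any dependence
    scores[name] = (r_p, r_s, mi)
    print(f"{name:22s} Pearson={r_p:+.3f} Spearman={r_s:+.3f} MI={mi:.3f}")
```

A filter ranking inputs by Pearson or Spearman alone would therefore discard an input that drives the target through a non-monotonic relation, which is the limitation that motivates the use of MI in [35,36].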

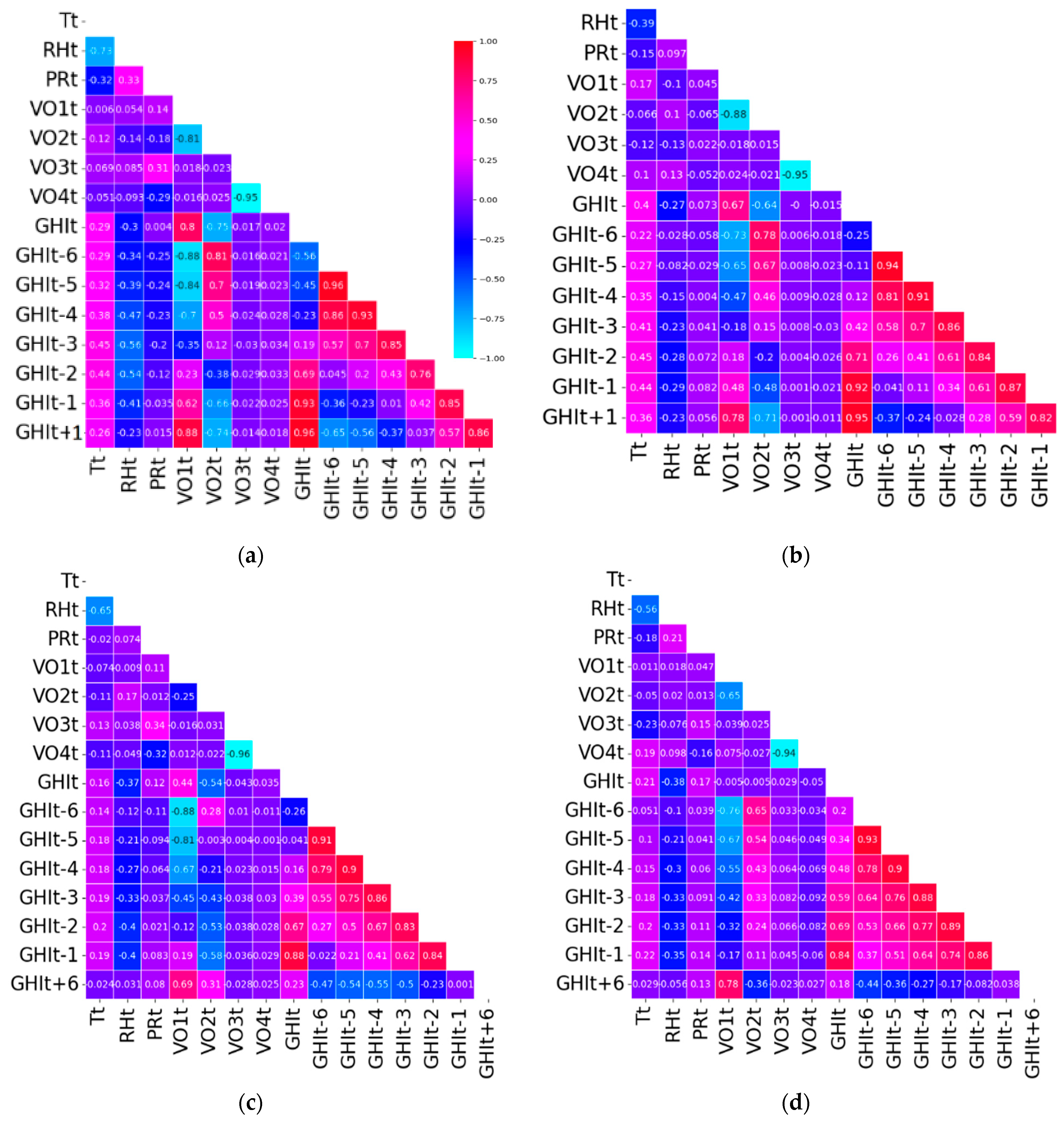

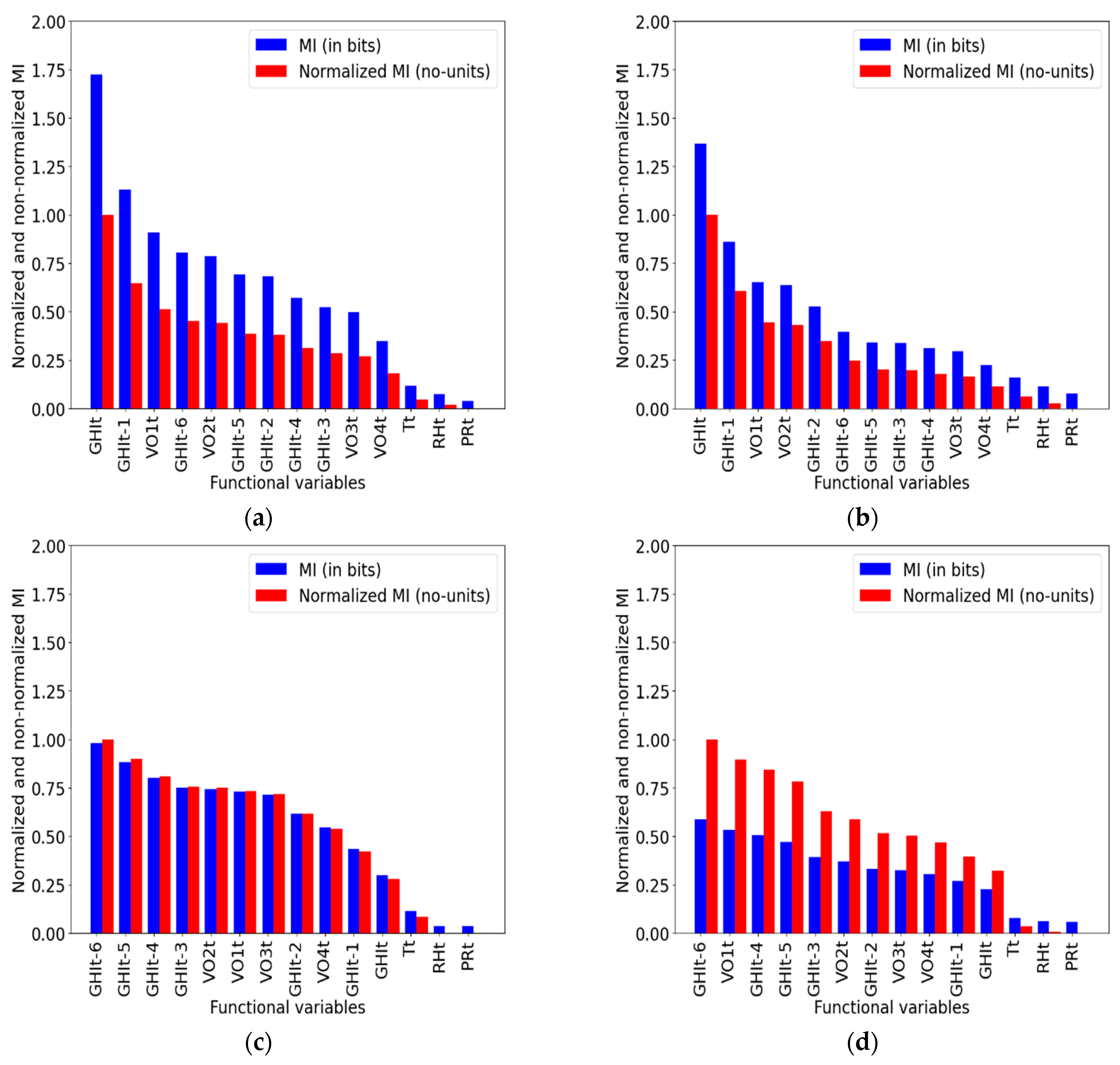

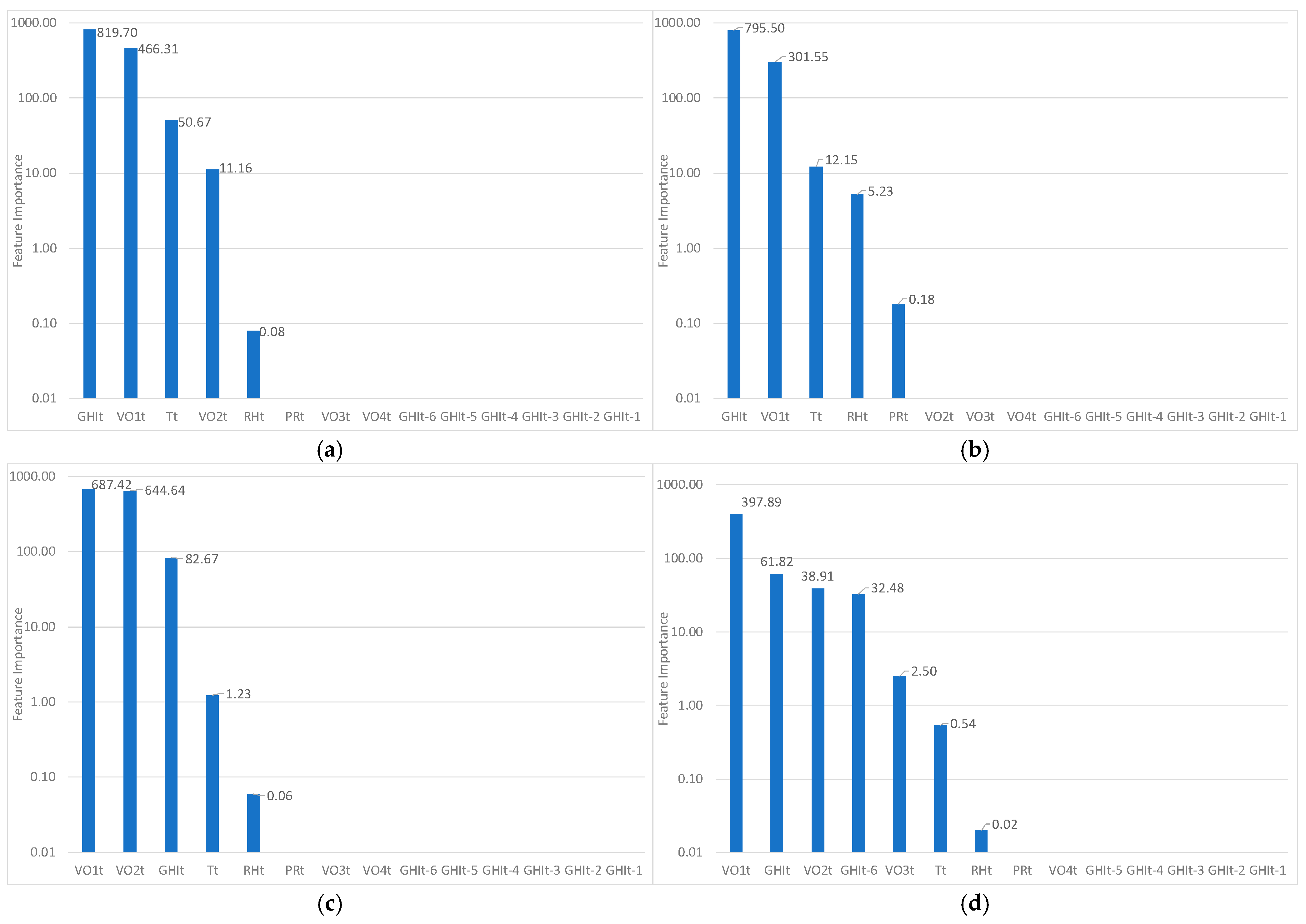

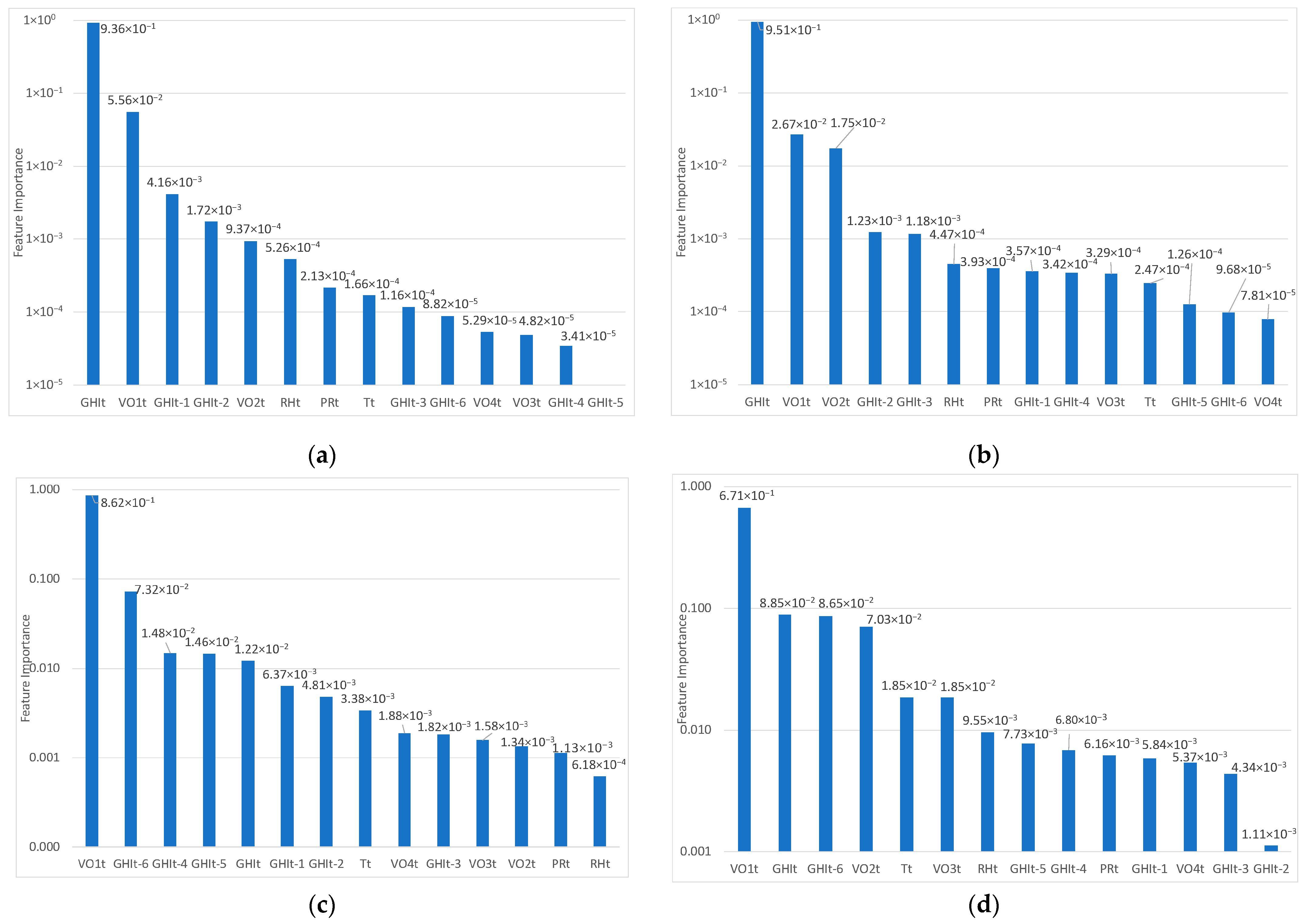

Table 1 compares representative works in the literature, highlighting their methodological choices (feature selection (FS) techniques and ML models), scope (climatic context and forecasting horizon), and evaluation strategies. This overview positions the present study within this landscape, underscoring its dual-site comparative approach and its integration of multiple FS methods across several horizons.

This study focuses on feature selection for solar irradiation forecasting, a critical step in building accurate and efficient predictive models. Feature selection is particularly important for balancing simplicity and performance, given the complexity of solar irradiance, which is influenced by climatic variability, periodicity, and local environmental factors [2,33].

This study differs from previous studies in the following respects:

Eight different statistical feature selection techniques are used, a relatively large number of methods; our aim is to test well-established methods that are relatively easy to use, which is why more complex selection methods are not implemented;

These methods are applied on two Algerian sites with different climatic conditions: Ghardaïa (arid) and Algiers (Mediterranean);

The FS procedures are applied for six forecasting time horizons, which is rarely the case in the literature; the differences obtained for each horizon will be highlighted;

An input data set of hourly data is used, including Global Horizontal Irradiation (GHI), meteorological factors (i.e., temperature, humidity, pressure), and periodic components (e.g., seasonal and diurnal patterns);

The periodic nature of solar irradiation is captured using ordinal variables, first tested in [42] and rarely, if ever, included in an input data set for such an application; their contribution will be assessed.
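An input table of this kind can be assembled from an hourly GHI series by shifting it in time. In the minimal sketch below, which is an assumption rather than the study's code, the candidate inputs are GHI(t) and the lags GHI(t−1) to GHI(t−m), plus hour of day and day of year as hypothetical stand-ins for the periodic ordinal variables of [42], whose exact definition is not reproduced here; the target is GHI(t+n). Meteorological columns would be joined on the same time index.

```python
import numpy as np
import pandas as pd


def build_inputs(ghi: pd.Series, m: int, n: int) -> pd.DataFrame:
    """Candidate inputs and target GHI(t+n) for one forecast horizon n (hypothetical helper)."""
    df = pd.DataFrame({"GHI_t": ghi})            # value at forecast launch
    for i in range(1, m + 1):
        df[f"GHI_t-{i}"] = ghi.shift(i)          # past values GHI(t - i)
    df["hour"] = ghi.index.hour                  # diurnal ordinal (assumed stand-in)
    df["doy"] = ghi.index.dayofyear              # seasonal ordinal (assumed stand-in)
    df[f"target_t+{n}"] = ghi.shift(-n)          # value to forecast, GHI(t + n)
    return df.dropna()                           # drop rows with incomplete lags or target


# Usage on two days of synthetic hourly data (clipped sine as a crude daily cycle).
idx = pd.date_range("2020-01-01", periods=48, freq="h")
hours = idx.hour.to_numpy()
ghi = pd.Series(np.clip(800.0 * np.sin(np.pi * (hours - 6) / 12), 0.0, None), index=idx)
table = build_inputs(ghi, m=6, n=3)
print(table.shape)
```

Each selection method then only has to rank the columns of such a table, separately for each horizon n, since the target column changes with n.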

After variables have been selected with each method for each forecast horizon, a forecasting algorithm based on the Gradient Boosting method will be developed for each time horizon and implemented with the corresponding set of inputs. Performance will be evaluated using the nMAE and nRMSE metrics, which will identify the most efficient selection method. The focus is not on the forecasting model's performance but on the effectiveness of the feature selection methods. While numerous studies have explored solar irradiation forecasting using machine learning techniques, fewer have systematically compared feature selection strategies across different climatic regions and time horizons.

In Section 2, the methodology will be presented, first setting out the key characteristics and the available data of the two meteorological sites, Ghardaïa and Algiers, in Algeria. The feature selection methods will then be briefly presented, followed by a description of the Gradient Boosting method used as the forecasting tool. In Section 3, the results obtained by the eight selection methods for six forecasting horizons will be shown, discussed, and compared. In Section 4, the forecasting algorithm will be applied for each time horizon and each site, using 96 input sets (8 methods × 6 horizons × 2 sites), each made up of the first four features retained by a selection method. Finally, conclusions will be drawn and some research perspectives outlined.

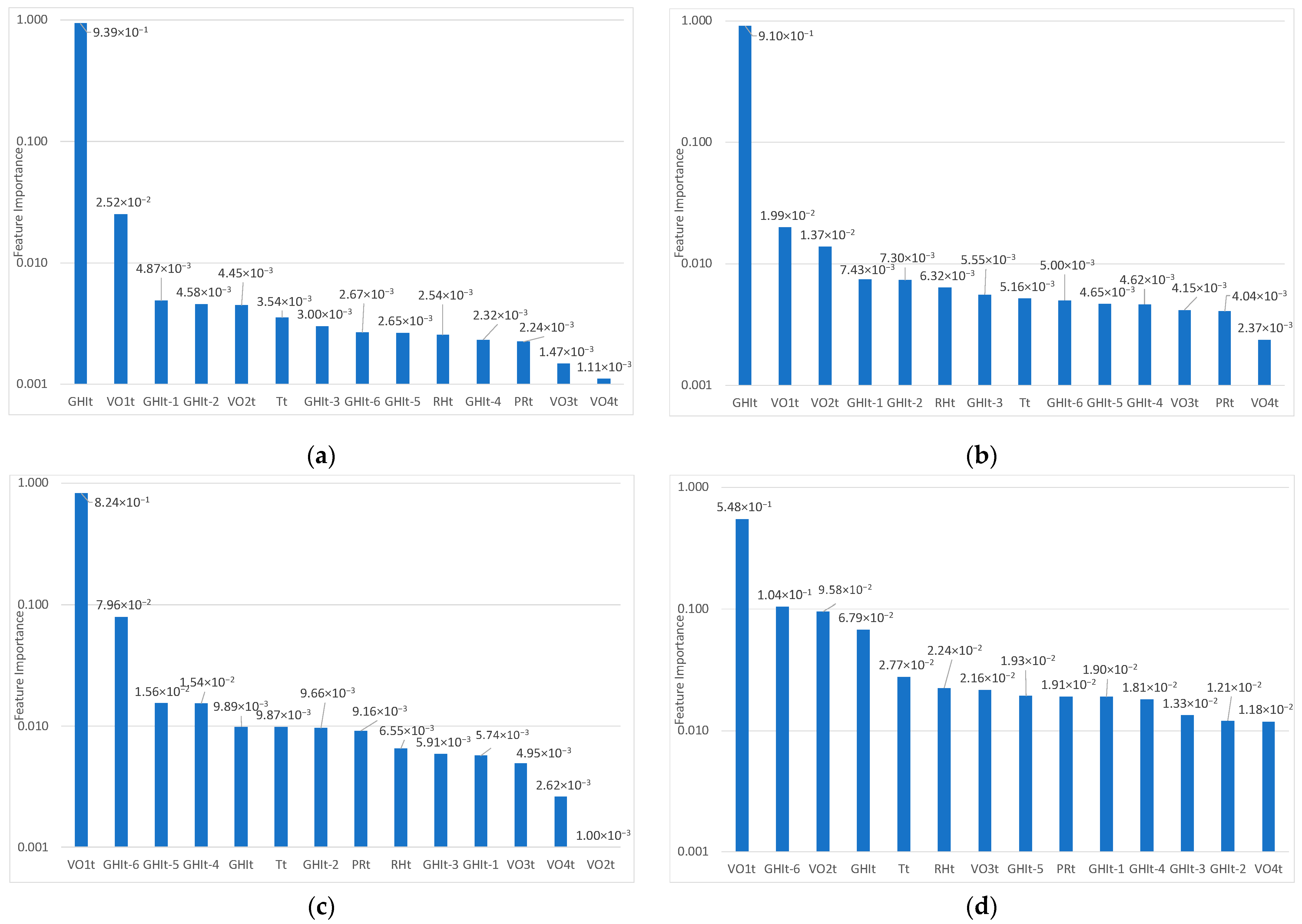

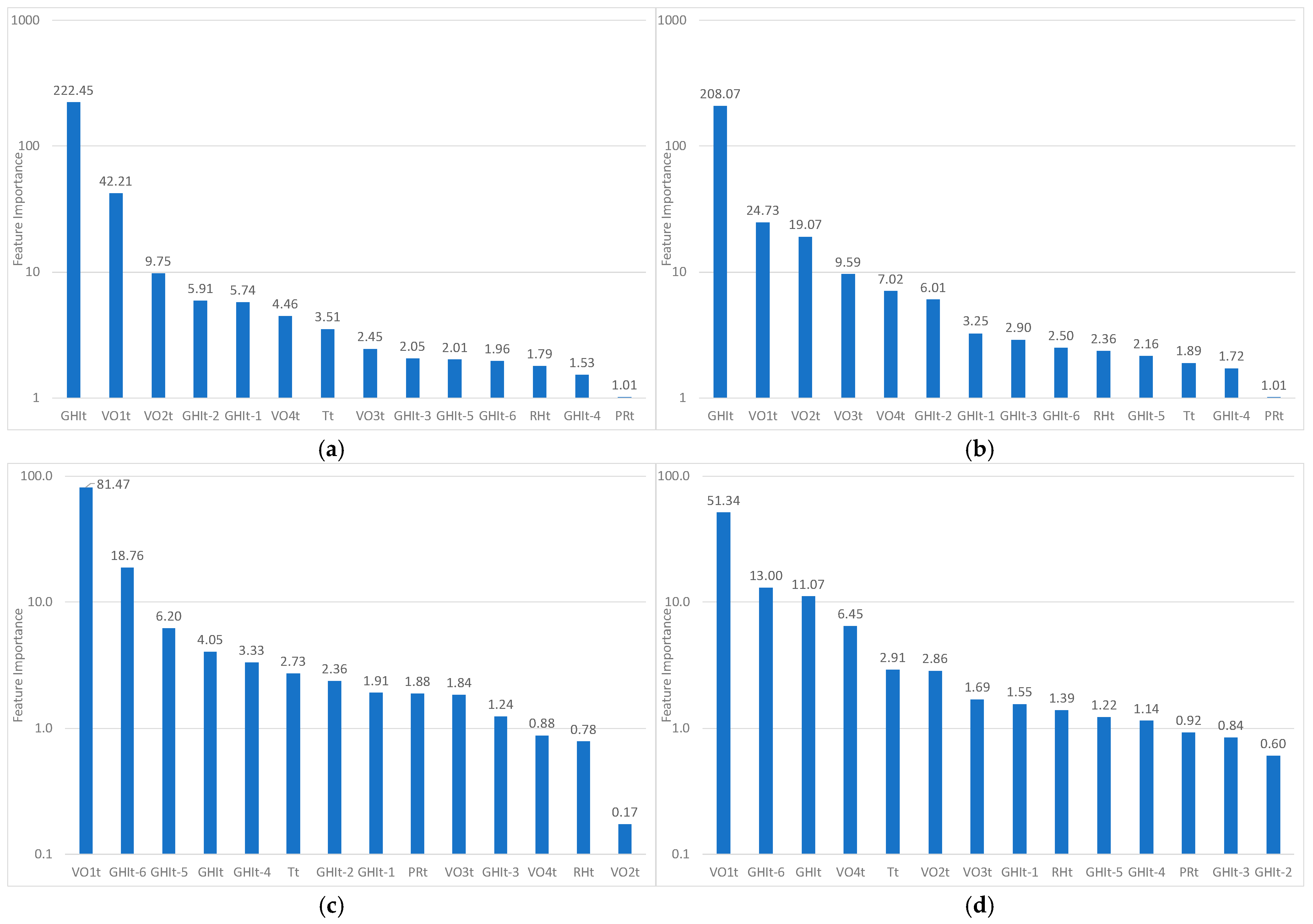

4. Forecasting Algorithm Application and Comparison of Performance of Selection Methods

To assess the relevance of each feature selection method, the four input variables retained by each method (Table 11) were used in a Gradient Boosting (GB) model, chosen for its robustness and proven performance in solar forecasting tasks. Performance was assessed using the normalized Mean Absolute Error (nMAE) and the normalized Root Mean Square Error (nRMSE). Note that the exclusion of meteorological variables (e.g., temperature, humidity, pressure) from most selection outcomes results from data-driven procedures, not from manual judgment. While these features showed limited relevance under the specific climatic conditions of Algiers and Ghardaïa, they may be more informative in other geographical contexts or under seasonal extremes. Feature selection results should therefore be interpreted with respect to the local environment and forecasting objectives.
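The evaluation step described above can be sketched as a chronological train/test split, one GB model per horizon and per four-input set, and nMAE on the held-out part. This is a minimal sketch under explicit assumptions: the synthetic hourly series, the 80/20 split, the default hyperparameters of scikit-learn's `GradientBoostingRegressor`, and the two hypothetical input sets (`method_A`, `method_B`) are illustrative and are not the study's data, settings, or Table 11 selections.

```python
import numpy as np
import pandas as pd
from sklearn.ensemble import GradientBoostingRegressor


def nmae(y_true, y_pred):
    """Mean absolute error normalised by the mean observed value, in percent."""
    return 100.0 * np.mean(np.abs(y_pred - y_true)) / np.mean(y_true)


def evaluate_inputs(df, inputs, target, train_frac=0.8, seed=0):
    """Fit GB on the first part of the record and score nMAE on the rest (no shuffling)."""
    split = int(len(df) * train_frac)
    train, test = df.iloc[:split], df.iloc[split:]
    model = GradientBoostingRegressor(random_state=seed)
    model.fit(train[inputs], train[target])
    return nmae(test[target].to_numpy(), model.predict(test[inputs]))


# Synthetic hourly GHI: idealised daily cycle attenuated by random "clouds" (assumption).
rng = np.random.default_rng(0)
idx = pd.date_range("2021-01-01", periods=24 * 60, freq="h")
hour = idx.hour.to_numpy()
clear = np.clip(900.0 * np.sin(np.pi * (hour - 6) / 12), 0.0, None)
ghi = pd.Series(clear * rng.uniform(0.6, 1.0, len(idx)), index=idx)

features = pd.DataFrame({
    "GHI_t": ghi,
    "GHI_t-1": ghi.shift(1),
    "GHI_t-24": ghi.shift(24),
    "hour": hour,
    "noise": rng.normal(size=len(idx)),  # deliberately irrelevant input
}, index=idx)

# Hypothetical four-input sets, one per selection method (not the study's Table 11).
candidate_sets = {
    "method_A": ["GHI_t", "GHI_t-1", "GHI_t-24", "hour"],
    "method_B": ["GHI_t", "GHI_t-1", "GHI_t-24", "noise"],
}

results = {}
for n in (1, 3):  # forecast horizons t+1 and t+3
    data = features.assign(target=ghi.shift(-n)).dropna()
    for name, inputs in candidate_sets.items():
        results[(name, n)] = evaluate_inputs(data, inputs, "target")
        print(f"t+{n} {name}: nMAE = {results[(name, n)]:.2f}%")
```

Keeping the model and its settings fixed while only the input set changes is what lets differences in nMAE be attributed to the selection method rather than to the forecaster.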

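Table 12 presents error values for each method across the six forecasting horizons. The error metrics are computed as follows:

$$\mathrm{nMAE} = \frac{100\%}{\bar{y}} \cdot \frac{1}{N}\sum_{i=1}^{N} \left| \hat{y}_i - y_i \right|, \qquad \mathrm{nRMSE} = \frac{100\%}{\bar{y}} \sqrt{\frac{1}{N}\sum_{i=1}^{N} \left( \hat{y}_i - y_i \right)^2},$$

where $\hat{y}_i$ is the predicted GHI, $y_i$ the observed GHI, and $\bar{y}$ the mean of observed GHI values over the test set of size $N$. As an example, for Ghardaïa at horizon t+1 with Mutual Information (Method 3), an nMAE of 6.44% means that the average absolute error represents 6.44% of the average measured GHI over the evaluation period.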
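Both metrics can be computed directly from their definitions; the small example below, with three made-up observations and predictions, only checks the arithmetic. Because the root mean square of the errors is never smaller than their mean absolute value, nRMSE is always at least as large as nMAE on the same data.

```python
import numpy as np


def nmae(y_obs, y_pred):
    """Mean absolute error divided by the mean observed GHI, in percent."""
    y_obs, y_pred = np.asarray(y_obs, dtype=float), np.asarray(y_pred, dtype=float)
    return 100.0 * np.mean(np.abs(y_pred - y_obs)) / np.mean(y_obs)


def nrmse(y_obs, y_pred):
    """Root mean square error divided by the mean observed GHI, in percent."""
    y_obs, y_pred = np.asarray(y_obs, dtype=float), np.asarray(y_pred, dtype=float)
    return 100.0 * np.sqrt(np.mean((y_pred - y_obs) ** 2)) / np.mean(y_obs)


obs = [100.0, 200.0, 300.0]   # illustrative observed GHI (mean = 200)
pred = [110.0, 190.0, 330.0]  # illustrative predictions (errors 10, 10, 30)
print(round(nmae(obs, pred), 2), round(nrmse(obs, pred), 2))  # 8.33 9.57
```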

Table 13 summarizes the best-performing feature selection method for each forecast horizon and meteorological site, based on the lowest nMAE values. For Ghardaïa, Mutual Information and SHAP-based approaches dominate, particularly at short and long horizons, respectively. In Algiers, LASSO yields consistent performance up to t+4, while RFE-GB outperforms others at longer horizons. This summary may serve as a practical reference for practitioners tailoring forecasting models to specific climatic contexts and timeframes.
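Selecting the best method for each horizon amounts to taking, in each horizon column, the row with the lowest nMAE. A minimal pandas sketch; the method names and values are hypothetical placeholders, not those of Table 12 or Table 13.

```python
import pandas as pd

# Hypothetical nMAE values (%): rows are selection methods, columns are horizons.
nmae_table = pd.DataFrame(
    {"t+1": [6.5, 6.6, 6.7], "t+3": [10.2, 9.8, 10.5], "t+6": [18.0, 17.5, 17.2]},
    index=["method_A", "method_B", "method_C"],
)

# For each horizon column, the index label (method) with the smallest nMAE.
best = nmae_table.idxmin(axis=0)
print(best.to_dict())  # {'t+1': 'method_A', 't+3': 'method_B', 't+6': 'method_C'}
```

Note that `idxmin` returns only the first minimum, so exact ties between methods (such as two methods sharing the same nMAE at a horizon) would need to be reported separately.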

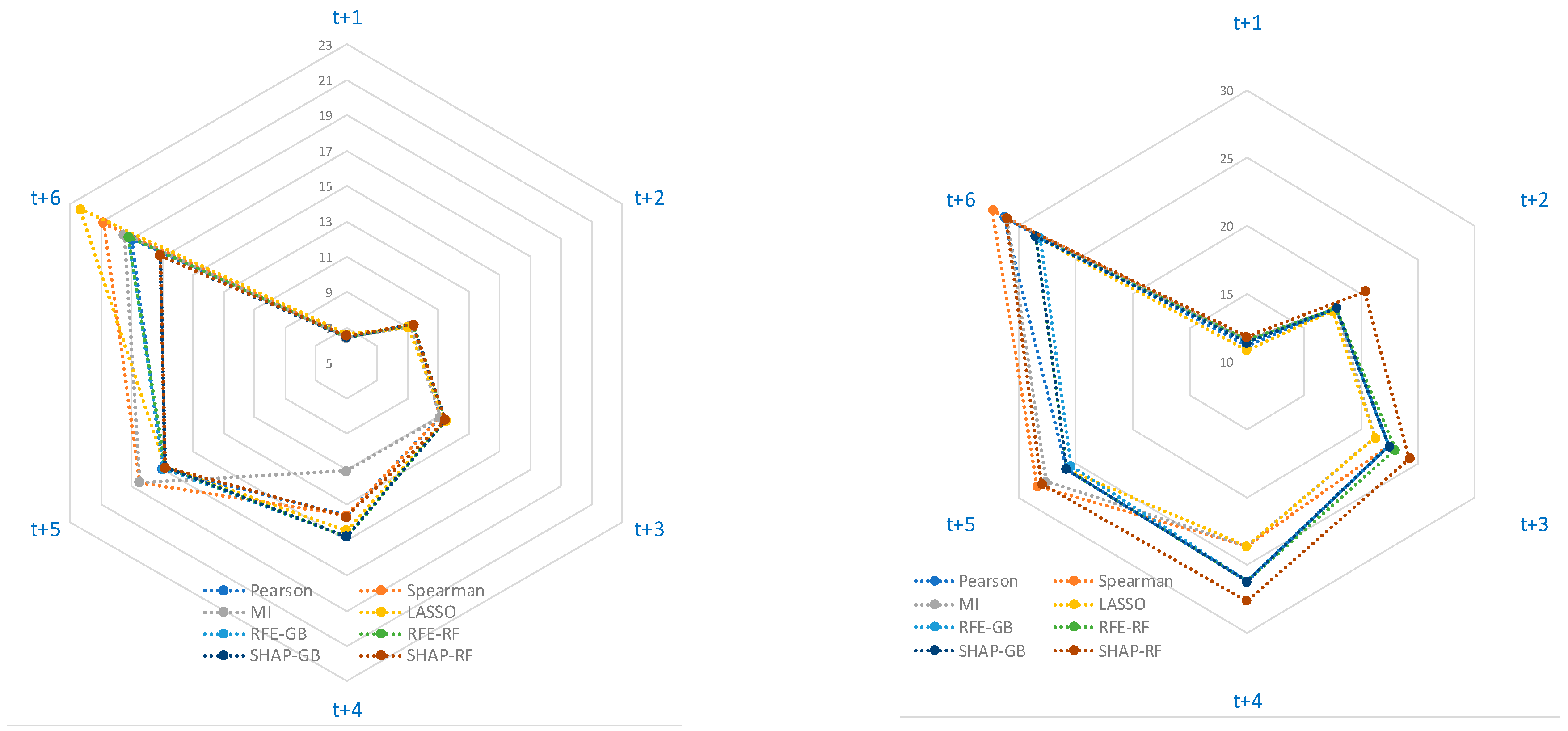

Figure 9 shows radar plots of nMAE for Ghardaïa and Algiers (nRMSE plots are omitted because they yield similar rankings of the methods). The results indicate consistently lower errors in Ghardaïa, reflecting its more stable arid climate.

At short forecast horizons (e.g., t+1), most methods perform similarly, with only small differences in nMAE (e.g., 6.44% vs. 6.52% in Ghardaïa). However, even such small differences can be meaningful in operational contexts such as energy dispatch. As the forecast horizon increases, performance gaps between methods become more pronounced, with relative improvements reaching 10–30%, highlighting the growing impact of feature selection. The overall increase in nMAE and nRMSE with the horizon also reflects the decreasing accuracy of predictions at extended horizons. Prediction errors are consistently higher for Algiers than for Ghardaïa, likely because of greater solar radiation variability, which makes forecasting more complex. In Ghardaïa, the Mutual Information method produced the most accurate forecasts at short horizons, with the lowest nMAE values at t+1 (6.44%) and t+4 (11.12%). In Algiers, LASSO performed well across all horizons, achieving the best nMAE at t+1 (10.82%) and maintaining strong results up to t+6. Conversely, LASSO was less effective in Ghardaïa. SHAP- and RFE-based methods showed more mixed results: while they outperformed other approaches at longer horizons in Ghardaïa (particularly at t+6, where SHAP-GB and SHAP-RF both reached nMAE = 17.17%), they were less competitive for short-term forecasts.

It is worth examining whether the choice of input variables substantially influences forecast accuracy. This influence becomes more evident at longer horizons. In Ghardaïa, its impact is limited at t+1, with a gain of 0.18 percentage points (a 2.79% relative improvement in nMAE), but grows substantially by t+6, reaching 5.2 percentage points (a 30.28% relative improvement). In Algiers, the impact of feature selection is also evident at horizon t+6: the best performance is achieved with RFE-GB (nMAE = 28.13%) and the worst with Spearman-based selection (nMAE = 32.28%), a difference of 4.15 percentage points, or 12.86% in relative terms. To statistically assess the influence of input selection methods on forecast accuracy, we applied the Wilcoxon signed-rank test to compare the best- and worst-performing methods across all horizons. In Ghardaïa, the differences in performance are not statistically significant (p > 0.2), which may be due to the lower variability of solar radiation there. In Algiers, by contrast, the difference is significant for nMAE (p = 0.031) and near-significant for nRMSE (p = 0.063), suggesting that input selection plays a more critical role under more variable conditions.
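With six horizons, the test compares six paired values, and its exact null distribution is then very coarse: if all six differences have the same sign, the two-sided p-value is 2/2^6 = 0.03125, the smallest value attainable, and if only the smallest difference has the opposite sign, it is 4/2^6 = 0.0625. These two values match the reported p = 0.031 and p = 0.063, which is consistent with an exact test on six paired horizons (an interpretation, not something stated in the text). The sketch below reproduces both cases with SciPy's `wilcoxon` on hypothetical nMAE values that are not the study's results.

```python
from scipy.stats import wilcoxon

# Hypothetical nMAE values (%) of a best and a worst method at six horizons, t+1 to t+6.
best_nmae = [10.8, 14.9, 18.7, 21.9, 25.0, 28.1]
worst_nmae = [11.2, 15.6, 19.9, 23.6, 27.3, 32.3]

# Paired two-sided test; with n = 6, no ties and no zero differences, SciPy uses the
# exact null distribution. All six differences are negative here.
all_same_sign = wilcoxon(best_nmae, worst_nmae)
print(all_same_sign.pvalue)  # 0.03125

# One-sample form on differences: the smallest difference now has the opposite sign.
diffs = [0.1, -0.7, -1.2, -1.7, -2.3, -4.2]
one_flipped = wilcoxon(diffs)
print(one_flipped.pvalue)  # 0.0625
```

Because no p-value between 0.03125 and 0.0625 can occur with six pairs, such results are best read alongside the effect sizes (percentage-point and relative gains) rather than as a fine-grained measure of evidence.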

In the radar plots, lower values indicate better performance. For Ghardaïa, methods converge at short horizons but diverge at longer ones, with SHAP-based approaches showing the lowest errors at t+6. In Algiers, differences are less marked, though LASSO and RFE-GB perform slightly better overall. Overall, although feature selection has a growing impact at extended horizons, the performance differences between methods remain moderate. This indicates that simpler selection techniques may still yield competitive results, depending on the forecasting context and local climate conditions.

Summary of Forecasting Results: Forecasting accuracy degrades as the prediction horizon increases, with both nMAE and nRMSE rising accordingly. At short horizons (e.g., t+1), all feature selection methods perform comparably. However, performance gaps widen markedly from t+4 onward, particularly in Ghardaïa, where SHAP- and RFE-based methods outperform the others at t+6. Mutual Information leads at t+1, reflecting its ability to capture short-term dependencies. In Algiers, the more variable site, LASSO proves the most consistent across all horizons. To assess whether these differences are statistically meaningful, Wilcoxon signed-rank tests were applied. The differences in nMAE are statistically significant in Algiers (p = 0.031) but not in Ghardaïa, and the differences in nRMSE are not significant at either site. This suggests that feature selection influences average forecast accuracy (nMAE) most in climatically unstable environments, while nRMSE, which weights large errors more heavily, remains less sensitive to the method used. For solar engineers, these results imply that advanced feature selection is especially beneficial:

At longer horizons, where predictive complexity increases;

In sites with high solar variability, where precision is more difficult to achieve;

When the priority is to minimize the average absolute error (nMAE), which is relevant for energy dispatch and storage decisions.

These findings support the use of feature selection strategies depending on the forecast horizon, site characteristics, and operational goals.

5. Conclusions

This study investigated feature selection for solar irradiance forecasting in two contrasting Algerian climates: Ghardaïa (arid) and Algiers (Mediterranean). The results show that the relevance of input variables (and the most effective selection method) depends strongly on both the forecast horizon and the local climate. The analysis covered a range of techniques, including correlation-based approaches (Pearson and Spearman), Mutual Information, LASSO, Recursive Feature Elimination (RFE), and SHAP. While all the methods yielded similar performance at short horizons, their effectiveness varied with the time horizon and site, and no single method consistently outperformed the others. In terms of variable importance, a clearer picture emerges. Most methods agree on the central role of GHIt (irradiance at forecast launch) for short-term horizons and on the growing importance of periodic variables such as VO1t and VO2t for longer horizons. Some lagged variables, such as GHIt−6, also become relevant as the horizon extends. Interestingly, the ordinal variable VO1t (rarely used in the forecasting literature) proved consistently useful across stations and methods, though its generalizability should be tested further in locations with greater solar variability. The added value of feature selection becomes more pronounced as the forecast horizon increases. For instance, while the relative gain in nMAE from optimal feature selection is limited to +2.79% at t+1 in Ghardaïa, it reaches +30.28% at t+6. In Algiers, the relative improvement ranges from +9.43% to +12.86% across the same horizons. Despite this, differences between selection methods remain moderate, indicating that simpler techniques such as Mutual Information or LASSO can be sufficient when appropriately tailored to the forecasting context. As an outlook, a deseasonalized formulation based on the clear-sky index could reduce the dominance of periodic variables such as VO1 and VO2.

However, this approach introduces challenges related to timestamp accuracy and the reliability of the clear-sky model itself, which may affect forecast consistency and generalizability. Moreover, this study is limited to two Algerian sites, chosen for their distinct climatic conditions. Extending the analysis to only a few more locations would increase the complexity without providing statistically meaningful generalization. A more robust validation would require access to consistent data from a larger network (e.g., 15–20 sites), which is not currently available in the region. In addition, the forecasting model used in this study (Gradient Boosting) was chosen for its balance between predictive performance, computational simplicity, and interpretability, particularly its compatibility with feature selection techniques such as SHAP or RFE. While deep learning methods (e.g., attention-based models) offer alternative strategies, a comprehensive and fair benchmarking of such architectures lies beyond the scope of this study. Finally, while the accuracy of forecasts is evaluated in statistical terms (nMAE, nRMSE), their downstream impact (such as economic benefits for energy dispatch, storage optimization, or grid management) has not been quantified here. Future research should address these limitations by expanding the geographical scope, comparing forecasting architectures, and integrating prediction outputs into operational or economic decision-making models.