1. Introduction

With the vigorous development of the technology industry, the shortage of human resources has grown significantly, and attractive salaries have increased many people’s willingness to switch careers. However, the technology industry is diverse, and each field has specialized knowledge that must be learned. Therefore, whether an undergraduate student wants to change fields or a non-undergraduate wants to change jobs across industries, screening and reading relevant documents to acquire this knowledge takes a lot of time. Although articles, multimedia videos, and other related resources on the Internet are easy for ordinary people to obtain, search results are often mixed with irrelevant information and advertisements. Beginners and students need considerable time and experience to judge whether the information in these results matches their level and needs. This may reduce learners’ willingness and motivation to learn, thereby affecting their performance and satisfaction in the educational environment [1]. Ref. [2] suggests that learning through reading and grading is ineffective. Therefore, many methods exist to improve learning, such as flipped classroom teaching. The authors of [3] believe that the flipped classroom has excellent potential. By carefully preparing classroom activities and improving students’ learning skills and attitudes, it can become an effective teaching tool for students at any level. Student participation in learning can improve their attention and concentration, train them to have better critical thinking abilities, and deepen their learning experience [4].

Although many studies have shown that flipped teaching can help improve grades and learning motivation, it still faces many challenges [5]. Learning is a gradual process, and one must consolidate foundational knowledge, like building blocks, before tackling more complex problems. Schools typically design a suitable “curriculum learning map” so that students can learn and develop their future employability gradually. Learning pathways are the unique developmental trajectories or routes that individuals may take toward achieving their learning goals. While different learners may end up with similar learning achievements or performances, their experiences along the way can vary. This emphasizes the importance of conducting a person-centered analysis to understand the diverse pathways individuals may follow in their learning journeys [6]. Creating a clear and appropriate map can reduce confusion in learning and allow students to focus on “career-oriented” and “skill-oriented” learning to build their research plans. It also fosters the ability for “self-directed learning”. However, search engines do not provide information on article difficulty or discussion topics, so users spend a lot of time filtering and categorizing search results to find articles that match their needs and levels. Therefore, this study aims to develop a system that constructs learning pathways automatically. It provides a variety of educational articles, automatically identifies the keywords that represent each article, and analyzes their difficulty. Learners can use this additional information to quickly find articles and materials that meet their needs. In addition, the system analyzes each user’s search and viewing logs, adjusts the automatically generated learning path, improves the accuracy of recommendation and search results, and gradually guides learners toward the correct knowledge and concepts along the correct learning pathway.

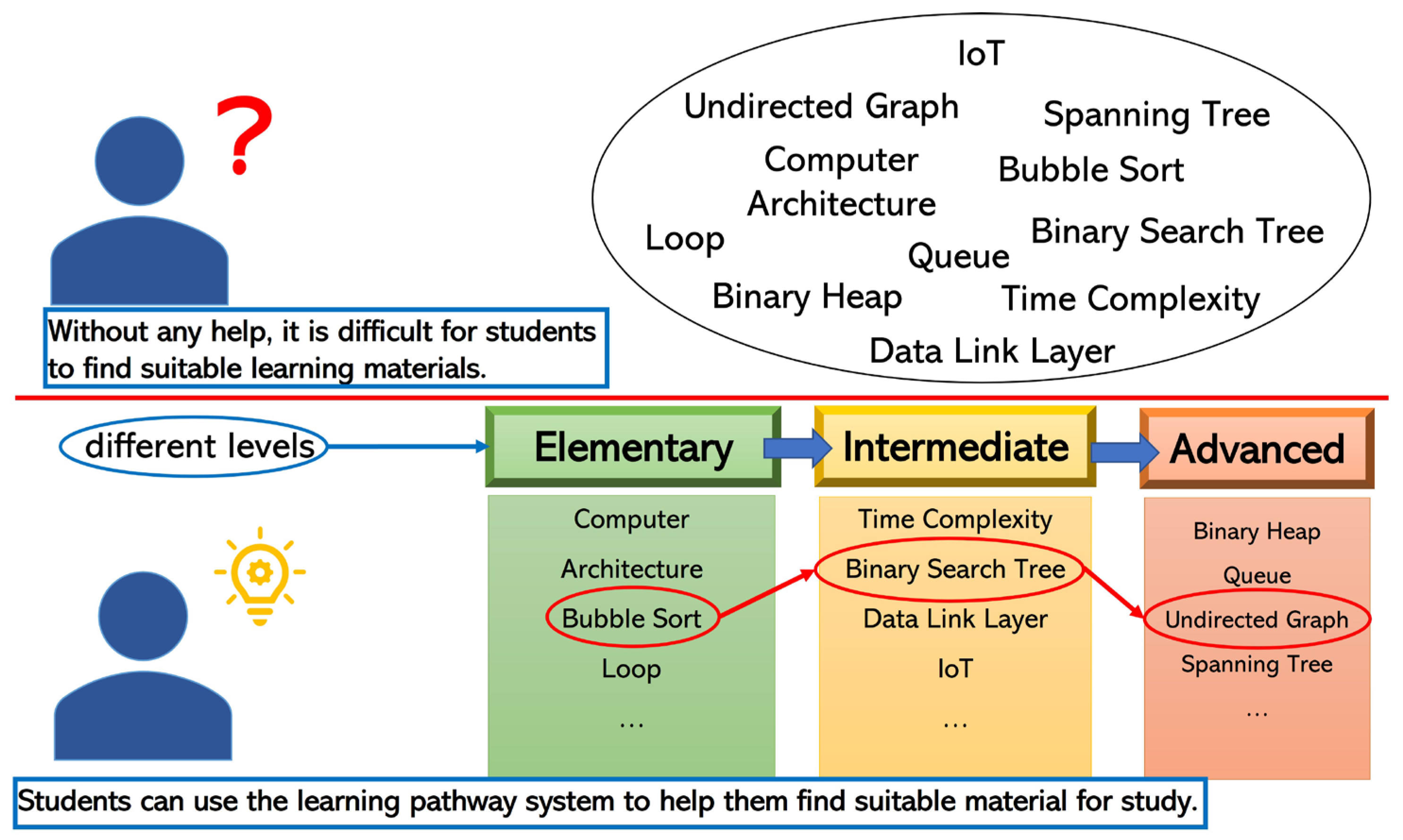

The system developed in this study includes automatic analysis of article difficulty, which is a key technology for constructing learning paths. The purpose is to allow users to choose learning materials according to their own level and thus achieve personalized learning. The system allows learners to quickly and conveniently obtain the required information at any time, as shown in Figure 1. The system divides keywords into elementary, intermediate, and advanced levels and divides the difficulty of articles into simple, medium, and challenging. All articles are marked with their difficulty, which is convenient for learners.

2. Literature Review

2.1. Content-Based Recommendation System

To provide users with practical and easily interpretable articles for information retrieval, a content-based recommendation system is considered essential as it enables users to search for diverse sources of information [7,8]. This type of system, which is derived from the field of information retrieval, primarily uses text data as an information source and predicts recommendations based on factors that influence users, such as keywords and similarity. To transform documents into vectors in a multi-dimensional space for analysis, it employs techniques such as term frequency-inverse document frequency (TF-IDF), the vector space model, and latent semantic indexing [9]. Algorithms such as support vector machine (SVM), k-means, k-nearest neighbor, and decision tree are then used to establish a recognition model for media features, among others. The system assigns different scores to items based on their attributes, features, and weights in the training samples and generates recommendation results. Finally, it further analyzes these items.

2.2. Learning Pathway

A learning pathway, also known as a developmental trajectory, is the process leading to a learning goal [10]. Different learners may have the same learning goal, but their experiences in the learning process may vary. Thus, understanding a student’s learning pathway requires a student-centered analysis. Studies have shown that using students’ learning pathways to provide adaptive support can improve their learning [11]. In recent years, there have been many studies on the recommendation, classification, and construction of learning pathways. Some studies compare learning pathways to examine their impact on emotions and grades [6]. Others use variables such as learning behavior and motivation to predict learners’ grades [12], while some argue that designing adaptive, customized learning pathways is crucial in the design of learning environments [13].

Learning pathways help learners stay organized and oriented during the learning process, providing a clear idea of their progress. Additionally, they enable educators to design and manage courses better, offering a more personalized and targeted learning experience. Combining learning pathway maps with other educational technology tools and technologies, such as online learning platforms, adaptive learning systems, and learning analytics and data mining technologies, can provide more support and feedback to both learners and educators.

2.3. Text Mining

Text mining is an extension of data mining. Its purpose is to extract useful information from unstructured text in diverse languages, which is a difficult task. The process involves natural language processing, and any processing of text that yields valuable information is considered part of text mining. For example, the TF-IDF method extracts keywords from the text for classification or other applications [14]. TF-IDF is a numerical measure used in information retrieval and text mining to determine the importance of a term within a document or article.

In research related to the information field, there is generally a certain degree of correlation between words, which can usually be obtained through association analysis algorithms. The best-known association analysis algorithm, the Apriori algorithm, was first proposed by Agrawal and Srikant in 1994. The idea of the Apriori algorithm is to find regularities and correlations among combinations of two or more items in large data sets. It calculates how frequently the combinations occur in the data and establishes association rules based on support, confidence, and lift. The following example illustrates these measures using algorithm-related articles A and keywords K.

- (1) Support:

Support is the proportion of all articles A in which a particular combination of keywords K (X, Y) appears. For example, if there are a total of 600 articles in A and the keyword pair (machine learning, deep learning) appears in 150 of them, then the support of the keyword pair (machine learning, deep learning) is 150/600 = 0.25 (25%). Equation (1) shows the formula for support.

$$\mathrm{Support}(X, Y) = \frac{\text{number of articles in } A \text{ containing both } X \text{ and } Y}{\text{total number of articles in } A} \tag{1}$$

- (2) Confidence:

The confidence level represents the probability that the keyword K (Y) occurs when the keyword K (X) appears. For example, if the keyword K (machine learning) appears in a total of 200 articles, and among those 200 articles, the keyword K (deep learning) appears in 100 of them, the confidence level can be calculated as 100/200 = 0.5 (50%). Equation (2) shows the formula for confidence.

$$\mathrm{Confidence}(X \Rightarrow Y) = \frac{\mathrm{Support}(X, Y)}{\mathrm{Support}(X)} \tag{2}$$

- (3) Lift:

Lift is the ratio of the confidence that K (Y) occurs when K (X) appears to the support of K (Y), and it represents the correlation between the keywords. For example, the lift of K (machine learning, deep learning) is calculated by dividing the confidence level of K (machine learning, deep learning) by the support level of K (deep learning). If the lift is greater than 1, it indicates that when K (machine learning) appears, K (deep learning) is also likely to appear. If the lift is less than 1, it indicates that when K (machine learning) appears, K (deep learning) is less likely to appear. If the lift is equal to 1, it indicates that there is no correlation between K (machine learning) and K (deep learning). Equation (3) shows the formula for lift.

$$\mathrm{Lift}(X \Rightarrow Y) = \frac{\mathrm{Confidence}(X \Rightarrow Y)}{\mathrm{Support}(Y)} \tag{3}$$
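The three measures can be checked with a few lines of Python. The following is a minimal sketch, not the system's implementation: the function names and the toy corpus are assumptions made for illustration. The corpus is built so that "machine learning" appears in 200 of 600 articles and "deep learning" in 100 of those, matching the confidence example.

```python
def support(articles, keywords):
    """Fraction of articles that contain every keyword in `keywords`."""
    keywords = set(keywords)
    return sum(keywords <= article for article in articles) / len(articles)


def confidence(articles, antecedent, consequent):
    """Support of both keyword sets together divided by support of the antecedent."""
    both = set(antecedent) | set(consequent)
    return support(articles, both) / support(articles, antecedent)


def lift(articles, antecedent, consequent):
    """Confidence of the rule divided by the support of the consequent."""
    return confidence(articles, antecedent, consequent) / support(articles, consequent)


# Hypothetical corpus of 600 articles, each reduced to its keyword set.
ML, DL = "machine learning", "deep learning"
articles = [{ML, DL}] * 100 + [{ML}] * 100 + [{DL}] * 50 + [set()] * 350

conf_ml_dl = confidence(articles, [ML], [DL])  # 100 of the 200 ML articles
lift_ml_dl = lift(articles, [ML], [DL])        # confidence / support(DL)
```

In this toy corpus the lift is greater than 1, so the two keywords co-occur more often than they would if they were unrelated.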

The main concept of the Apriori algorithm is that when a set of items appears frequently in the data set, every subset of that set must also appear frequently. Conversely, if a subset is infrequent, every item set that contains it must also be infrequent. On the basis of this concept, the algorithm applies the given minimum support and minimum confidence to screen the data and generate association rules between items.

The execution flow of the Apriori algorithm is as follows:

- (1) Scan the dataset to find all candidate subsets;
- (2) Count the occurrences and frequency of all candidate subsets in the dataset;
- (3) Set minimum support and minimum confidence levels to filter the data;
- (4) Generate candidate subsets of length K + 1 from subsets of length K;
- (5) Repeat steps 2–4 until the generated candidate subsets reach the maximum length.
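The execution flow above can be sketched in Python as follows. This is an illustrative sketch under stated assumptions: it covers only frequent item set generation with a minimum support (rule generation with a minimum confidence is omitted), and the function name, the threshold, and the sample keyword sets are hypothetical.

```python
from itertools import combinations


def apriori(transactions, min_support):
    """Return {frozenset(itemset): support} for every frequent item set."""
    transactions = [frozenset(t) for t in transactions]
    n = len(transactions)

    def frequent(candidates):
        # Count each candidate and keep those that reach the minimum support.
        counts = {c: sum(c <= t for t in transactions) for c in candidates}
        return {c: k / n for c, k in counts.items() if k / n >= min_support}

    # Start with candidate item sets of length 1.
    current = frequent({frozenset([i]) for t in transactions for i in t})
    result = dict(current)
    k = 1
    while current:
        k += 1
        # Join step: build length-k candidates from frequent sets of length k - 1.
        candidates = {a | b for a in current for b in current if len(a | b) == k}
        # Prune step: every (k - 1)-subset of a candidate must itself be frequent.
        candidates = {
            c for c in candidates
            if all(frozenset(s) in current for s in combinations(c, k - 1))
        }
        current = frequent(candidates)
        result.update(current)
    return result


# Hypothetical keyword sets extracted from five articles.
keyword_sets = [
    {"sorting", "recursion", "graph"},
    {"sorting", "recursion"},
    {"sorting", "graph"},
    {"recursion", "graph"},
    {"sorting", "recursion", "graph"},
]
frequent_sets = apriori(keyword_sets, min_support=0.5)
```

With a minimum support of 0.5, all single keywords and all pairs are kept, while the three-keyword set (support 0.4) is discarded.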

2.4. Text Classification

Text classification is the task of categorizing text into multiple classes according to given rules. The basis of text classification can be valuable information extracted from the text content through text mining methods. However, helpful information is not only present in the text content. In [15], both text features and non-text features were extracted and used to evaluate app reviews on the Apple App Store and Google Play Store. The study found that incorporating non-text elements can improve the accuracy of classification tasks.

There are many algorithms used for text classification. They can be divided into traditional machine learning methods and deep-learning-based methods. Traditional machine learning methods such as Naive Bayes, Support Vector Machine (SVM), and Random Forest are commonly used [16]. Ref. [17] used Random Forest on short medical diagnosis notes and achieved an accuracy rate of 91% in a binary classification task. Deep-learning-based methods such as Recurrent Neural Networks (RNN), Convolutional Neural Networks (CNN), and Attention Mechanism are also popular [18].

Currently, deep-learning-based methods have achieved outstanding results in text classification tasks. The authors of [19] fully utilized the context of the text content. They combined a BiLSTM model with a self-attention mechanism and achieved good results in fine-grained sentiment polarity classification tasks for short text. They also demonstrated that using repeated data to fill in imbalanced data can improve model training efficiency. Some studies have tried to combine several different models to achieve a compact architecture or better results. For example, [20] combined CNN and RNN for sentiment classification on the well-known IMDB dataset and achieved an accuracy rate of 93.2%. The authors of [21] not only used the architecture of CNN and RNN but also fused the time domain and space domain to classify Chinese financial news, achieving a classification accuracy of 96.45%.

Although many research results have shown that deep-learning-based methods generally have higher accuracy than traditional machine learning methods, the prerequisite is sufficient training data. If the text classification task data is difficult to collect or annotate, traditional machine learning methods are more advantageous.

Because well-labeled training data for text classification tasks is costly to obtain while large amounts of unlabeled text are easy to obtain, [22] used a large body of unlabeled text to augment a small quantity of labeled text, thereby improving the accuracy of the text classifier. At the same time, [23] pointed out that the number of samples in each training data category also affects the accuracy of the text classifier.

The following is a discussion and analysis of relevant literature on the “association analysis method”.

2.5. Word Segmentation

Segmenting Chinese sentences is more difficult than segmenting English sentences, as English sentences can be easily segmented using spaces. Common methods for Chinese word segmentation include lexicon segmentation, N-Gram, and hybrid segmentation.

Lexicon segmentation: Lexicon-based segmentation involves building a keyword database using commonly used keywords in a specific field, such as “artificial intelligence” and “deep learning.” It extracts keywords through string matching. The most well-known Chinese word segmentation package is jieba, which has a more comprehensive lexicon for simplified and traditional Chinese. Therefore, this project adopts jieba as the main word segmentation method for analyzing difficulty, and experts manually establish the keyword database. Lexicon-based segmentation is also used for expanding the keyword database for information domains.

N-Gram: N-Gram segmentation is commonly used for preprocessing Chinese, Japanese, and Korean text, as shown in Equation (4):

N in N-Gram represents the length of the segmented characters. For example, when N = 1, each character is regarded as a single entity. The results show that when N = 3, it performs best for precision, recall, and F-measure.
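As a brief illustration of character-level N-Gram segmentation, the sketch below slides a window of length N over a string. The function name and the sample string are assumptions made for this example, not part of the described system.

```python
def char_ngrams(text, n):
    """Split `text` into overlapping character sequences of length n."""
    return [text[i:i + n] for i in range(len(text) - n + 1)]


sample = "深度學習"                   # "deep learning", four characters
unigrams = char_ngrams(sample, 1)    # each character is a single entity
bigrams = char_ngrams(sample, 2)
trigrams = char_ngrams(sample, 3)
```

For a string of four characters, N = 1 yields four segments, N = 2 yields three, and N = 3 yields two.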

2.6. Sustainable Development Goals

As a specialized UN agency, UNESCO promotes the development of experience-based higher education policies [24]. It provides technical assistance to member states in strategic and policy reviews to ensure access to quality education, academic mobility, and accountability for all [25,26]. The United Nations has identified 17 Sustainable Development Goals (SDGs) for the survival and development of humans and other species, with SDG 4 addressing the social dimension. By 2030, it aims to “ensure inclusive and equitable quality education and promote lifelong learning opportunities for all, including university education, for both men and women”. Education is the foundation for improving quality of life and achieving global sustainable development [27,28]. SDG 4 aims for inclusive, equitable, quality, and lifelong education. While it is only 1 of the 17 SDGs, SDG 4 is the foundation for the other goals [29].

3. Methods

3.1. System Architecture

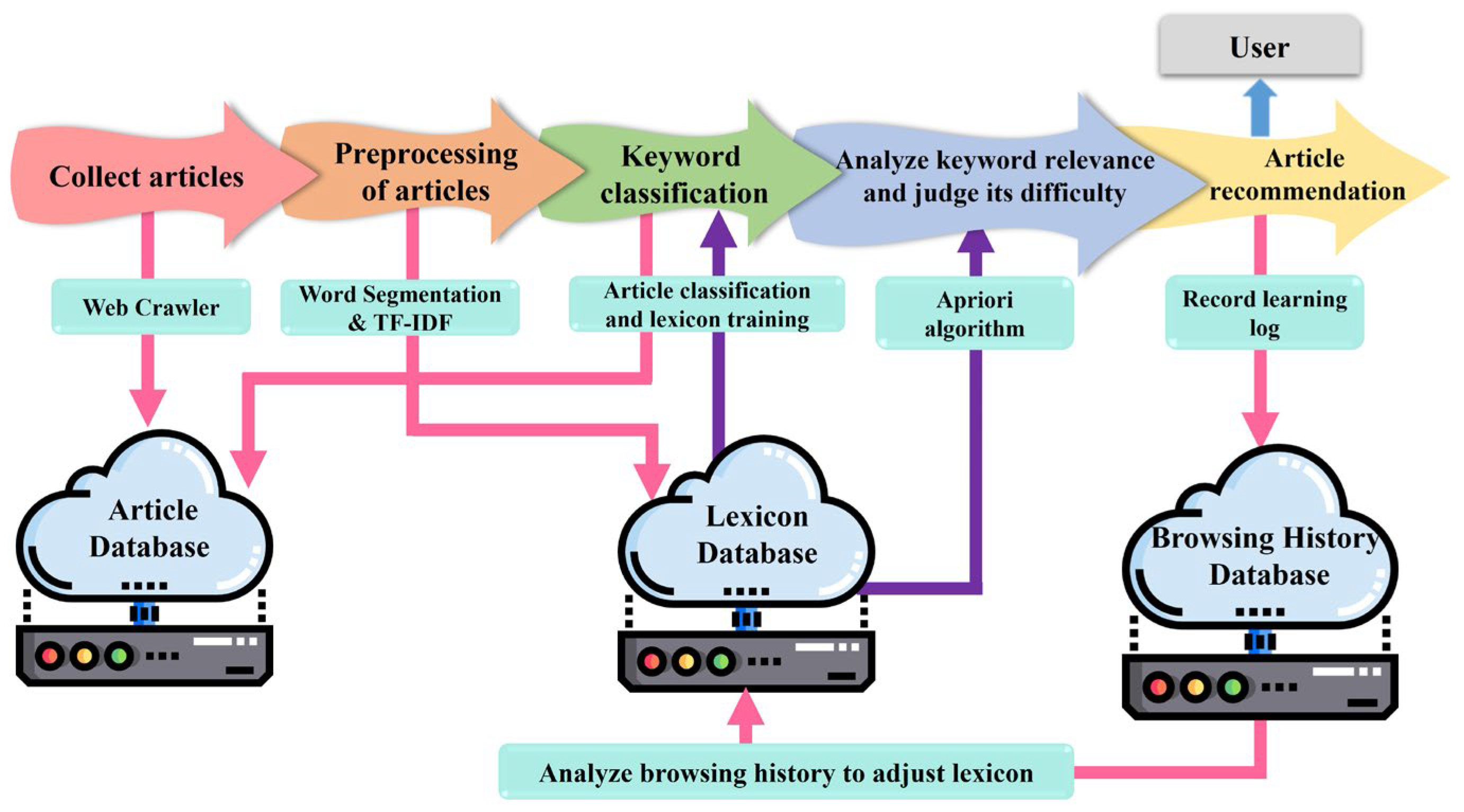

In this study, web articles and pictures were obtained from the Internet using web crawler technology. A total of 133,962 articles were collected, and 56,676 articles in the computer science category were automatically selected by text mining techniques. The collected data are first preprocessed. The preprocessing steps include removing punctuation marks, stop words, and English text, N-gram segmentation, and long and short word processing. The Term Frequency-Inverse Document Frequency (TF-IDF) algorithm is then used to calculate the keywords that can represent each article. Whether an article is computer-science-related depends on whether these keywords are computer-related. At the same time, words produced by N-gram segmentation that have a high TF-IDF value can also be used as the basis for automatic lexicon expansion. After non-computer-science-related articles are filtered out, the remaining articles are segmented with jieba. The difficulty level of each keyword is then calculated, and the difficulty of each article is estimated from the difficulty of its keywords. Finally, the calculated difficulty level of each article is integrated into the learning pathway system, which combines difficulty levels and article keywords. Users can enter keywords to query, and related articles are then recommended. The difficulty level of the articles is also displayed in the search result list for users’ reference. In this study, the system was provided to the students in an algorithm course. The continuously growing body of student feedback is used as the verification standard for classifying article difficulty. The system architecture of this study is shown in Figure 2.

To build its article collection, the system first collects articles through web crawlers and then performs the first preprocessing step, word segmentation. The system uses the N-Gram method for word segmentation. When N is 1, the segmentation length is 1, and the text is divided into single characters. When N is 3, the text is split into units of three characters. N-Gram can adjust the segmentation length according to the needs of the system, such as 2-Gram or 3-Gram.

The weight calculation method used in this study is TF-IDF. TF represents how frequently a word occurs in a single article, while IDF reflects how rare the word is across all articles. Therefore, the method can extract the terms that best represent each article.

In TF-IDF, TF represents the frequency of a word in a specific article. The TF formula is shown in Formula (5).

$$tf_{i,j} = \frac{n_{i,j}}{\sum_{k} n_{k,j}} \tag{5}$$

Here, $tf_{i,j}$ represents the frequency of word $t_i$ in document $d_j$, $n_{i,j}$ indicates the number of times word $t_i$ appears in document $d_j$, and $\sum_{k} n_{k,j}$ represents the total number of words in document $d_j$.

IDF is the Inverse Document Frequency. When a word appears in only a few articles, it has higher importance. Conversely, if the word appears in many articles, it is less important. The IDF formula is shown in Formula (6).

$$idf_{i} = \log \frac{|D|}{|\{j : t_i \in d_j\}|} \tag{6}$$

Here, $|D|$ represents the total number of documents, and $|\{j : t_i \in d_j\}|$ represents the number of documents in which the word $t_i$ occurs. Multiplying TF and IDF gives the weight of word $t_i$ in document $d_j$. The TF-IDF formula is shown in Formula (7).

$$tfidf_{i,j} = tf_{i,j} \times idf_{i} \tag{7}$$
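The TF, IDF, and TF-IDF definitions above can be computed directly. The sketch below is a minimal illustration that assumes documents are already segmented into token lists and uses the natural logarithm; the function name and the sample documents are hypothetical.

```python
import math
from collections import Counter


def tf_idf(documents):
    """documents: list of token lists. Returns one {term: weight} dict per document."""
    n_docs = len(documents)
    # Document frequency: number of documents in which each term occurs.
    df = Counter(term for doc in documents for term in set(doc))
    weights = []
    for doc in documents:
        counts = Counter(doc)
        total = len(doc)  # total number of words in this document
        weights.append({
            term: (count / total) * math.log(n_docs / df[term])
            for term, count in counts.items()
        })
    return weights


# Hypothetical, already segmented documents.
docs = [
    ["sort", "sort", "graph"],
    ["graph", "tree"],
    ["graph", "heap"],
]
weights = tf_idf(docs)
top_term = max(weights[0], key=weights[0].get)
```

Because "graph" occurs in every document, its IDF and therefore its weight is zero, while "sort" becomes the representative term of the first document.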

When N-gram word segmentation is used to process an article, new keywords may be found that expand the lexicon. Long and short word processing compares candidate keywords that meet a given threshold against a dictionary, and words that appear in the dictionary are preserved. Next, the web crawler collects new articles for the newly added keywords, and text preprocessing and long and short word processing are applied to the collected articles. By repeating these processes, the keyword lexicon becomes more extensive, and the long and short words selected become more accurate. After the web crawler obtains web page data, the system judges whether each article is computer-science-related. This study calculated the TF-IDF value of keywords and the proportion of keywords in the articles. A higher proportion of topic-related keywords indicates that the article is computer-science-related. A total of 133,962 articles were retrieved using web crawlers for this study, and 56,676 articles remained after the filtering.

Figure 3 illustrates the process of obtaining algorithm-related articles using TF-IDF.
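The relevance judgment described above can be illustrated with a small sketch. The source does not state how the proportion is thresholded, so the function name, the lexicon contents, and the `min_ratio` value of 0.5 are all assumptions chosen for illustration.

```python
def is_topic_related(top_keywords, lexicon, min_ratio=0.5):
    """Judge an article by the share of its top keywords found in the lexicon.

    `min_ratio` is a hypothetical threshold, not a value from the study.
    """
    if not top_keywords:
        return False
    hits = sum(1 for keyword in top_keywords if keyword in lexicon)
    return hits / len(top_keywords) >= min_ratio


# Hypothetical lexicon and top TF-IDF keywords of two articles.
lexicon = {"algorithm", "sorting", "graph"}
related = is_topic_related(["algorithm", "sorting", "recipe"], lexicon)
unrelated = is_topic_related(["recipe", "travel", "graph"], lexicon)
```

Here the first article has two of three keywords in the lexicon and is kept, while the second has only one and is filtered out.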

The system collects technical terms during word selection and builds an initial lexicon. As the number and variety of articles extracted by web mining increase, the coverage and correctness of the initial lexicon are put to the test. Therefore, this study revises and expands the initial lexicon. If an article is classified as computer-science-related but its keywords are not in the lexicon, the lexicon is expanded with them. Revising the lexicon inevitably removes some words from the system. Therefore, the system also calculates word weights with the TF-IDF method in correctly classified documents. If a word meets the threshold criteria, it is captured and added to the lexicon with an initial weight. Continuous revision significantly reduces the misjudgment of documents. In previous work on keyword relevance, the data set was usually fed directly into the Apriori algorithm, which finds the most frequent words and lists their combinations. The method used in this study is different. Before the Apriori algorithm is applied, TF and IDF are computed on the data set to obtain the weight of each keyword, and these weights are then used in the Apriori computation to find the related sets of keywords.

After using TF-IDF to obtain the keywords of each article, the next step is to calculate the difficulty level of keywords and articles. First, we calculate the difficulty level of the keyword, and then we use the calculated difficulty level of the keyword to determine the difficulty level of the article.

The system has received a total of 515 feedback responses, and a statistical analysis was carried out on the difficulty distribution of keywords. Of the 515 responses, 169 were rated Level 1, 226 Level 2, and 120 Level 3. The difficulty level of each keyword is multiplied by the corresponding TF-IDF value, and the products are summed to obtain the difficulty level of the article. The calculation formula of article difficulty is shown in Formula (8), where $L_i$ is the difficulty level of keyword $t_i$ and $tfidf_{i,j}$ is its TF-IDF weight in article $d_j$.

$$\mathrm{Difficulty}_{j} = \sum_{i} L_{i} \times tfidf_{i,j} \tag{8}$$
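The article difficulty calculation described above, a sum of keyword difficulty levels weighted by TF-IDF, can be sketched as follows. The function name, the keyword levels, and the weights are hypothetical values for illustration, and keywords without a rated level are simply skipped, which is an assumption of this sketch.

```python
def article_difficulty(tfidf_weights, keyword_levels):
    """Sum of (keyword difficulty level x TF-IDF weight) over rated keywords."""
    return sum(
        keyword_levels[keyword] * weight
        for keyword, weight in tfidf_weights.items()
        if keyword in keyword_levels  # assumption: unrated keywords are ignored
    )


# Hypothetical keyword levels (1 = elementary, 3 = advanced) and TF-IDF weights.
levels = {"array": 1, "dynamic programming": 3}
article_weights = {"array": 0.5, "dynamic programming": 0.25, "blog": 0.1}
score = article_difficulty(article_weights, levels)  # 1 * 0.5 + 3 * 0.25
```

An article dominated by advanced keywords with high TF-IDF weights receives a higher score than one dominated by elementary keywords.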

3.2. Participants and Experiment Process

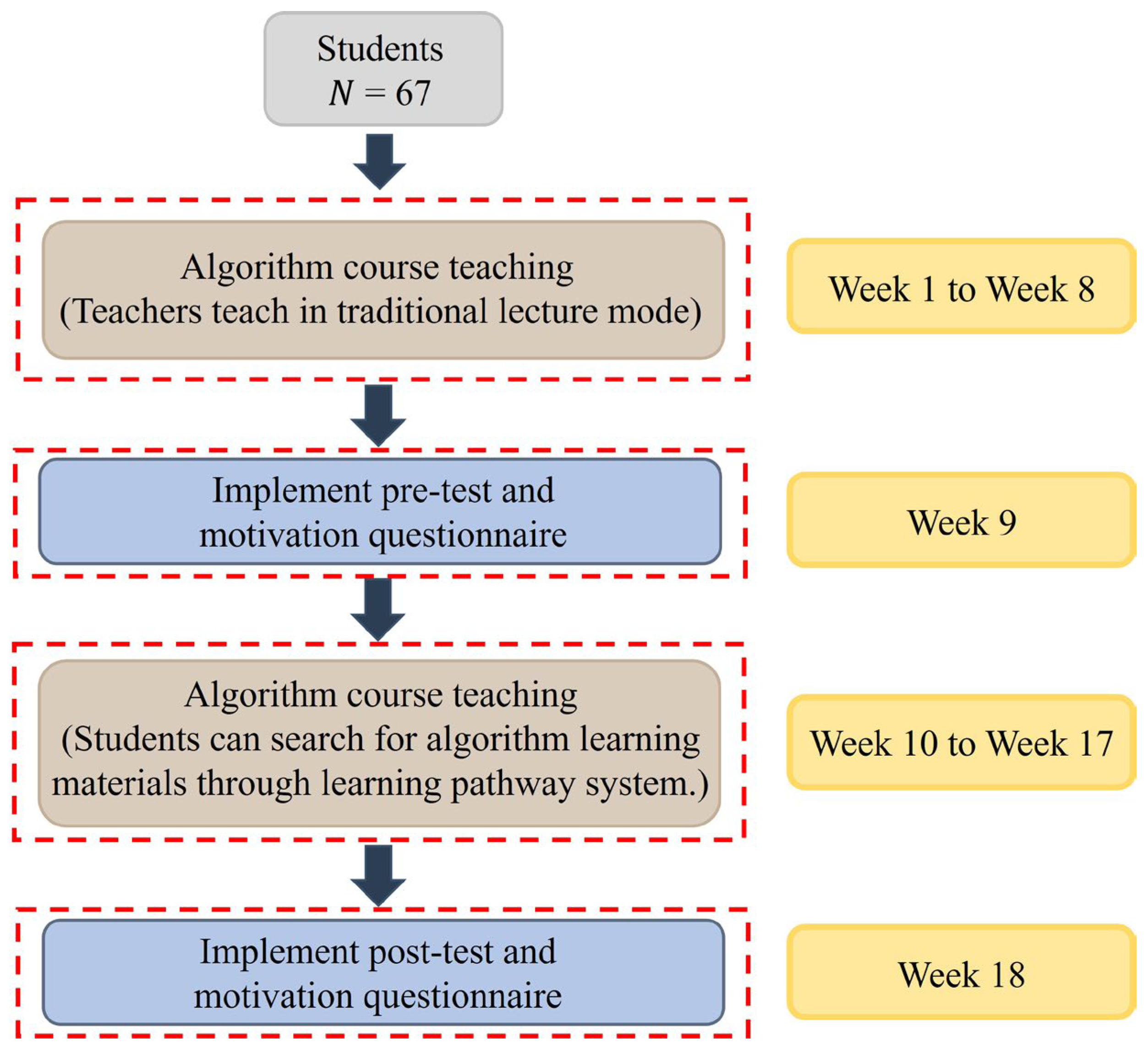

In this study, 67 junior students in the Department of Computer Science and Information Engineering at a university in Taiwan participated in a 9-week experimental activity. This study did not apply any inclusion or exclusion criteria.

Figure 4 shows the experimental process of this study. Before the start of the experiment (from the first week to the eighth week), the teacher taught the algorithm course through traditional classroom teaching. In week 9, a pre-test and a learning motivation questionnaire were administered to all students. From weeks 10 to 17, all students could use the learning pathway system to search for algorithm-related articles after class. In week 18, all students completed a post-test and a learning motivation questionnaire to examine the impact on student performance and motivation. The midterm exam served as the pre-test, and the final exam served as the post-test.

3.3. Data Collection and Analysis

This study conducted an experiment in an algorithm course to explore whether introducing a learning pathway system can help improve students’ learning performance and learning motivation. A total of 67 students were recruited, and the learning motivation questionnaires were distributed and collected in the 9th and 18th weeks, yielding 67 valid questionnaires.

This study referred to the Motivated Strategies for Learning Questionnaire (MSLQ) scale proposed by Pintrich and De Groot (1991) and edited its items to meet the requirements of this experiment. Examples include “in the algorithms course, I prefer materials that are challenging so that I can learn new content”; “in the algorithms course, I prefer materials that can arouse my curiosity, even if the content is difficult”; and “for me, the greatest satisfaction in the algorithm course came from trying to understand what I learned”. The questionnaire comprises eight items in two dimensions: four items on intrinsic motivation and four items on extrinsic motivation. This study used reliability analysis to test the internal consistency of the learning motivation questionnaire. The Cronbach’s Alpha value is 0.817, which indicates good reliability. In addition, this study used the dependent sample t-test to analyze the learning performance and motivation of students using the learning pathway system.
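For readers unfamiliar with the reliability measure, Cronbach's Alpha for a questionnaire with k items is k/(k − 1) multiplied by one minus the ratio of the summed item variances to the variance of the total scores. The sketch below illustrates this standard formula; the function name and the response data are hypothetical and are not the study's data.

```python
from statistics import variance


def cronbach_alpha(responses):
    """responses: one row per respondent, one column per questionnaire item."""
    k = len(responses[0])
    # Sample variance of each item (column) across respondents.
    item_variances = [variance(column) for column in zip(*responses)]
    # Sample variance of each respondent's total score.
    total_variance = variance([sum(row) for row in responses])
    return k / (k - 1) * (1 - sum(item_variances) / total_variance)


# Hypothetical responses: every respondent answers all three items identically,
# so the items are perfectly consistent.
responses = [[1, 1, 1], [2, 2, 2], [3, 3, 3], [4, 4, 4]]
alpha = cronbach_alpha(responses)
```

Perfectly consistent items give the maximum value of the coefficient; less consistent answers lower it.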

4. Results

Table 1 shows the results of the dependent t-test analysis of learning performance for all students. The mean and standard deviation of the pre-test were 56.4 and 22.42. The mean and standard deviation of the post-test were 68.13 and 19.36, and the t-value was 4.612 (p < 0.001), which means that there is a significant difference in learning performance between the pre-test and post-test. In addition, this study divided all students into high-achieving and low-achieving groups according to the pre-test results in order to analyze the differences in learning performance between students with different achievements. There were 34 high-achieving students and 33 low-achieving students.

Table 2 shows the results of the dependent t-test analysis of learning performance for high- and low-achieving students. High-achieving students are those with a percentile rank (PR) of 50 or above, and low-achieving students are those below PR50. The mean and standard deviation of the pre-test for high-achieving students were 74.68 and 10.99. The mean and standard deviation of the post-test for high-achieving students were 82.75 and 8.47, and the t-value was 3.394 (p < 0.01), which means that there is a significant difference in learning performance between the pre-test and post-test. The mean and standard deviation of the pre-test for low-achieving students were 37.58 and 13.84. The mean and standard deviation of the post-test for low-achieving students were 53.06 and 15.4, and the t-value was 4.466 (p < 0.001), which means that there is a significant difference in learning performance between the pre-test and post-test. According to Table 1 and Table 2, students using the learning pathway system in the algorithm course can significantly improve their learning performance.
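The dependent (paired) sample t-test compares each student's post-test score with the same student's pre-test score. Its statistic is the mean of the score differences divided by their standard error. The sketch below illustrates the calculation with hypothetical scores for four students; it is not the study's data or analysis procedure.

```python
from math import sqrt
from statistics import mean, stdev


def paired_t(pre, post):
    """t statistic of a dependent sample t-test on paired scores."""
    differences = [after - before for before, after in zip(pre, post)]
    standard_error = stdev(differences) / sqrt(len(differences))
    return mean(differences) / standard_error


# Hypothetical pre-test and post-test scores of four students.
pre_scores = [50, 60, 70, 80]
post_scores = [55, 70, 72, 89]
t_value = paired_t(pre_scores, post_scores)
```

The resulting t-value is compared with the t distribution with n − 1 degrees of freedom to obtain the p-value.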

This study also analyzed learning motivation and its two dimensions, namely intrinsic motivation and extrinsic motivation. Table 3 shows the results of the dependent t-test analysis of learning motivation, intrinsic motivation, and extrinsic motivation for all students. In learning motivation, the mean and standard deviation of the pre-test were 3.74 and 0.54. The mean and standard deviation of the post-test were 3.76 and 0.59, and the t-value was 0.333 (p > 0.05). When analyzing learning motivation, there is no significant difference between the pre-test and post-test. In intrinsic motivation, the mean and standard deviation of the pre-test were 3.78 and 0.61. The mean and standard deviation of the post-test were 3.83 and 0.63, and the t-value was 0.815 (p > 0.05). When analyzing intrinsic motivation, there is no significant difference between the pre-test and post-test. In extrinsic motivation, the mean and standard deviation of the pre-test were 3.69 and 0.62. The mean and standard deviation of the post-test were 3.67 and 0.66, and the t-value was −0.133 (p > 0.05). When analyzing extrinsic motivation, there is no significant difference between the pre-test and post-test.

Although students did not significantly improve their learning motivation after using the system, Table 3 and Table 4 show that students’ learning and intrinsic motivation in the post-test are higher than in the pre-test. This means that students can still search for auxiliary materials for algorithms in their spare time through the learning pathway provided by this system. In addition, it can also be inferred from Table 4 that introducing this system in the algorithm course can enhance students’ intrinsic motivation, enable students to learn more autonomously and spontaneously, and thus achieve the benefits of students’ autonomous learning. This result also echoes previous research, some of which has indicated that improving intrinsic motivation in students can promote more spontaneous and autonomous learning [30,31].

In addition, this study analyzed the differences in learning motivation and its two dimensions among students with different achievements. Table 4 shows the results of the dependent t-test analysis for high- and low-achieving students’ learning motivation, intrinsic motivation, and extrinsic motivation. In learning motivation, the mean and standard deviation of the pre-test for high-achieving students were 3.91 and 0.48. The mean and standard deviation of the post-test for high-achieving students were 3.86 and 0.54, and the t-value was −0.4 (p > 0.05). When analyzing learning motivation, there is no significant difference between the pre-test and post-test. The mean and standard deviation of the pre-test for low-achieving students were 3.55 and 0.55. The mean and standard deviation of the post-test for low-achieving students were 3.65 and 0.63, and the t-value was 0.691 (p > 0.05). When analyzing learning motivation, there is no significant difference between the pre-test and post-test.

In intrinsic motivation, the mean and standard deviation of the pre-test for high-achieving students were 3.89 and 0.58. The mean and standard deviation of the post-test for high-achieving students were 3.95 and 0.62, and the t-value was 0.469 (p > 0.05). When analyzing intrinsic motivation, there is no significant difference between the pre-test and post-test. The mean and standard deviation of the pre-test for low-achieving students were 3.67 and 0.64. The mean and standard deviation of the post-test for low-achieving students were 3.72 and 0.64, and the t-value was 0.366 (p > 0.05). When analyzing intrinsic motivation, there is no significant difference between the pre-test and post-test.

In the extrinsic motivation part, the mean and standard deviation of the pre-test for high-achieving students were 3.93 and 0.55. The mean and standard deviation of the post-test for high-achieving students were 3.78 and 0.62, and the t-value was −1.096 (p > 0.05). When analyzing extrinsic motivation, there is no significant difference between the pre-test and post-test. The mean and standard deviation of the pre-test for low-achieving students were 3.43 and 0.58. The mean and standard deviation of the post-test for low-achieving students were 3.57 and 0.69, and the t-value was 0.9 (p > 0.05). When analyzing extrinsic motivation, there is no significant difference between the pre-test and post-test.

In summary, this method significantly improves students’ learning performance, and the learning pathway system can improve students’ learning motivation, although not significantly. The learning motivation of low-achieving students in the post-test is higher than in the pre-test, and so are their intrinsic and extrinsic motivation. This means that the learning pathway provided by this study is more helpful for low-achieving students in improving their learning motivation, and using this system in the algorithm course can assist low-achieving students in searching for more appropriate supplementary materials on algorithms. It can reduce information overload for low-achieving students, help them obtain more suitable resources for autonomous learning, and enhance their motivation to learn algorithms autonomously. Even though the learning motivation and extrinsic motivation of high-achieving students in the post-test are lower than in the pre-test, their intrinsic motivation still improved. This study infers that high-achieving students can actively study more auxiliary materials for algorithms through the learning pathway of this system, thereby increasing their familiarity with and understanding of the algorithm course.

6. Conclusions

This study introduced a learning pathway and conducted experimental activities in an algorithm course. A total of 67 valid pre-tests, post-tests, and learning motivation questionnaires were collected, and a dependent sample t-test was carried out using SPSS Statistics software. In addition, students were divided into high-achieving and low-achieving groups according to the pre-test. The results showed that the post-test was significantly higher than the pre-test for all students. In addition, the intrinsic motivation of high-achieving students improved, while both the intrinsic and extrinsic motivation of low-achieving students improved, although these motivation changes were not statistically significant.

According to the results, this method significantly improves students’ learning performance. It helps students avoid reading materials that are too difficult due to limited prior knowledge or inadequate preliminary learning, which could affect learning quality and decrease students’ motivation. In addition, this study provides keywords for learners’ reference, allowing them to quickly browse the contents of articles and select materials before reading. On the other hand, the results show that the learning pathway system can improve students’ learning motivation, although not significantly. This echoes the findings of other studies [35,36]. Through a novel approach of analyzing difficulty levels and providing keywords, this project effectively reduces the challenges in the learning process and promotes students’ motivation, ultimately achieving the goal of tailored instruction.