Exploring Artificial Intelligence in Smart Education: Real-Time Classroom Behavior Analysis with Embedded Devices

Abstract

1. Introduction

1.1. Classroom Behavior Analysis

1.2. Requirement

1.3. Challenges

1.4. Research Content

2. Literature Review

2.1. AI Technology in Education

2.2. Computer Vision Technology Applications

3. Materials and Methods

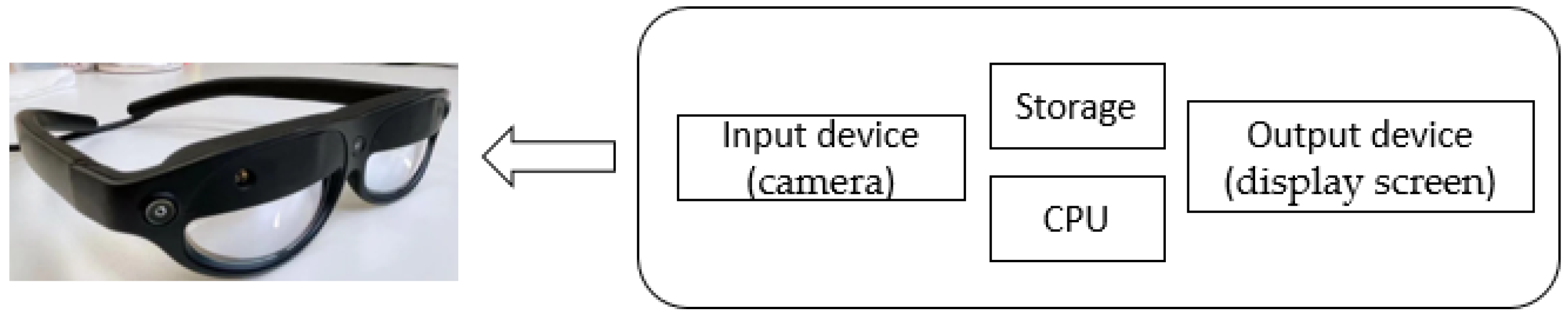

3.1. Solution Structure

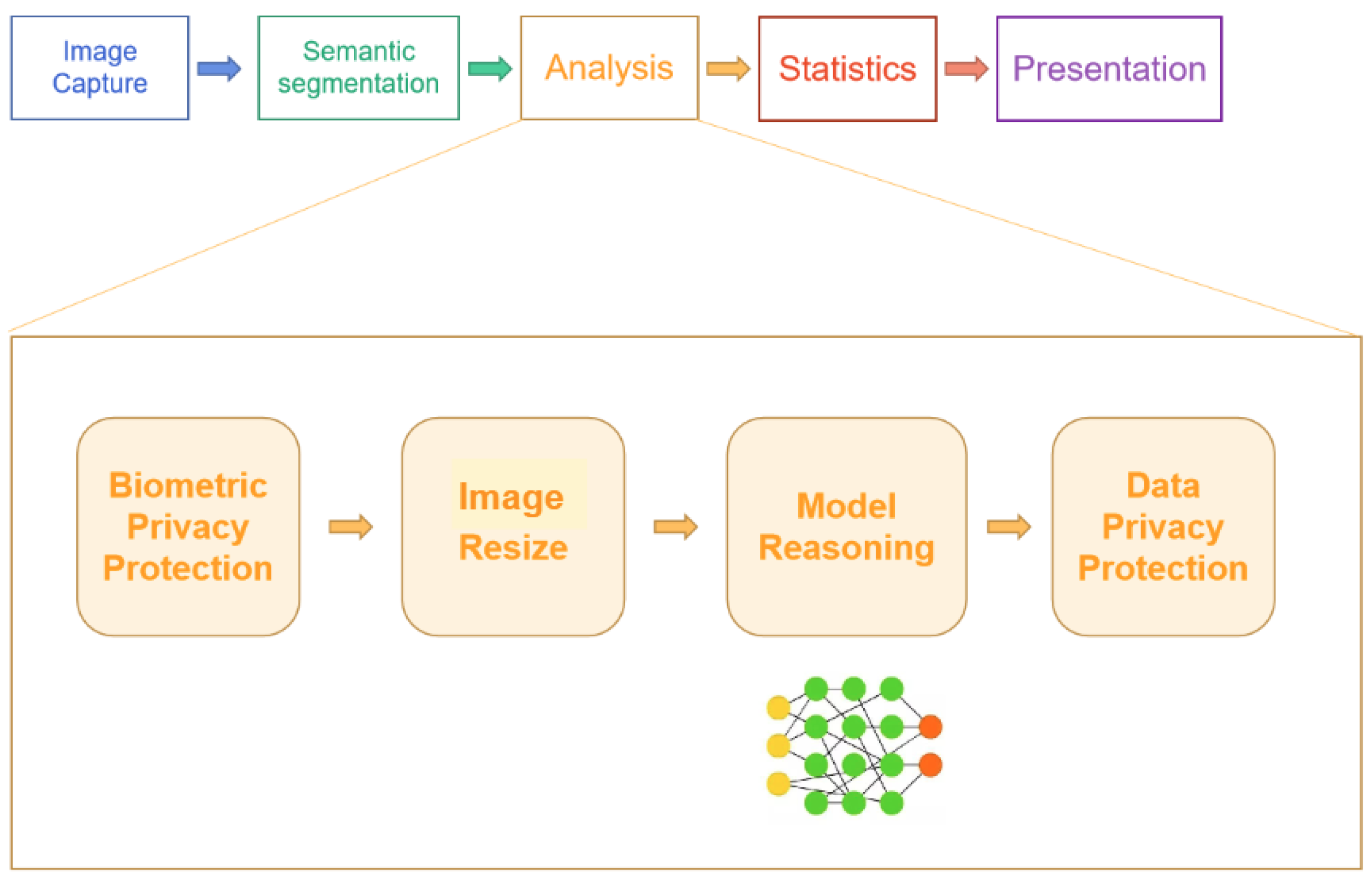

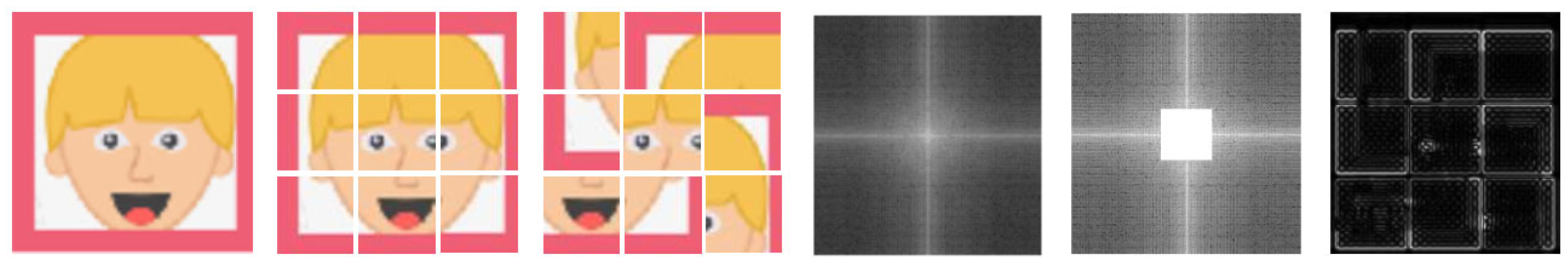

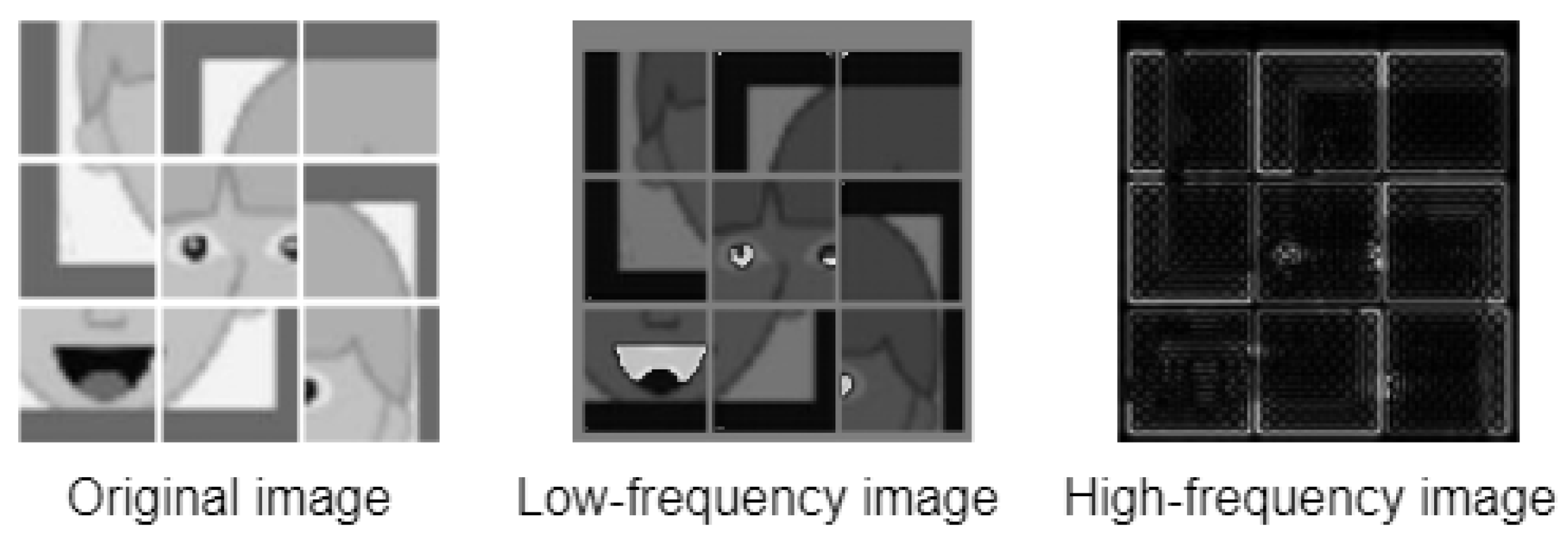

- Biometric privacy protection. A Fourier transform removes the low-frequency components of each image, leaving only the high-frequency content. The processed images are not recognizable to the naked eye, yet they can still be used to train the AI model with little effect on its accuracy.

- Uniform image size. The images produced by semantic segmentation vary in size. To simplify model training, we resize all images to 224 × 224 × 3.

- Visual model analysis. Vision models must run on embedded devices, so they need to be small, fast, and accurate. We propose PIDM (Portable Intelligent Device Model), which is only 76% of the size of MobileNet (3.2 million versus 4.2 million parameters), with comparable accuracy and a shorter response time.

- Behavior privacy protection. The results of the visual model analysis are not linked to individual students, which protects the privacy of student behavior.
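
The Fourier-based privacy step in the first item above can be pictured as a high-pass filter in the frequency domain. The sketch below is a minimal illustration under stated assumptions, not the authors' implementation: the function names (`dft2`, `idft2`, `high_pass`), the circular cutoff, and the naive pure-Python transform are all hypothetical choices made for readability. The source does not state the filter shape or cutoff, and a real pipeline would likely use an optimized FFT routine.

```python
import cmath


def dft2(img):
    """Naive 2-D discrete Fourier transform of a list-of-lists grayscale image."""
    h, w = len(img), len(img[0])
    return [[sum(img[y][x] * cmath.exp(-2j * cmath.pi * (u * y / h + v * x / w))
                 for y in range(h) for x in range(w))
             for v in range(w)]
            for u in range(h)]


def idft2(spec):
    """Inverse of dft2; returns the real part of the reconstructed image."""
    h, w = len(spec), len(spec[0])
    return [[sum(spec[u][v] * cmath.exp(2j * cmath.pi * (u * y / h + v * x / w))
                 for u in range(h) for v in range(w)).real / (h * w)
             for x in range(w)]
            for y in range(h)]


def high_pass(img, cutoff):
    """Zero every frequency within `cutoff` of the DC term (assumed circular mask)."""
    h, w = len(img), len(img[0])
    spec = dft2(img)
    for u in range(h):
        for v in range(w):
            fu = min(u, h - u)  # wrapped vertical frequency index
            fv = min(v, w - v)  # wrapped horizontal frequency index
            if (fu * fu + fv * fv) ** 0.5 <= cutoff:
                spec[u][v] = 0
    return idft2(spec)


# A flat image contains only low-frequency content, so the filter suppresses it.
flat = [[7.0] * 4 for _ in range(4)]
filtered = high_pass(flat, cutoff=1.0)
```

Smooth regions such as skin tone and overall brightness live in the low frequencies, so removing them hides appearance while edges and fine texture survive. The naive transform here is only practical for very small images.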

3.2. Function Module Description

3.2.1. Privacy Protection Module

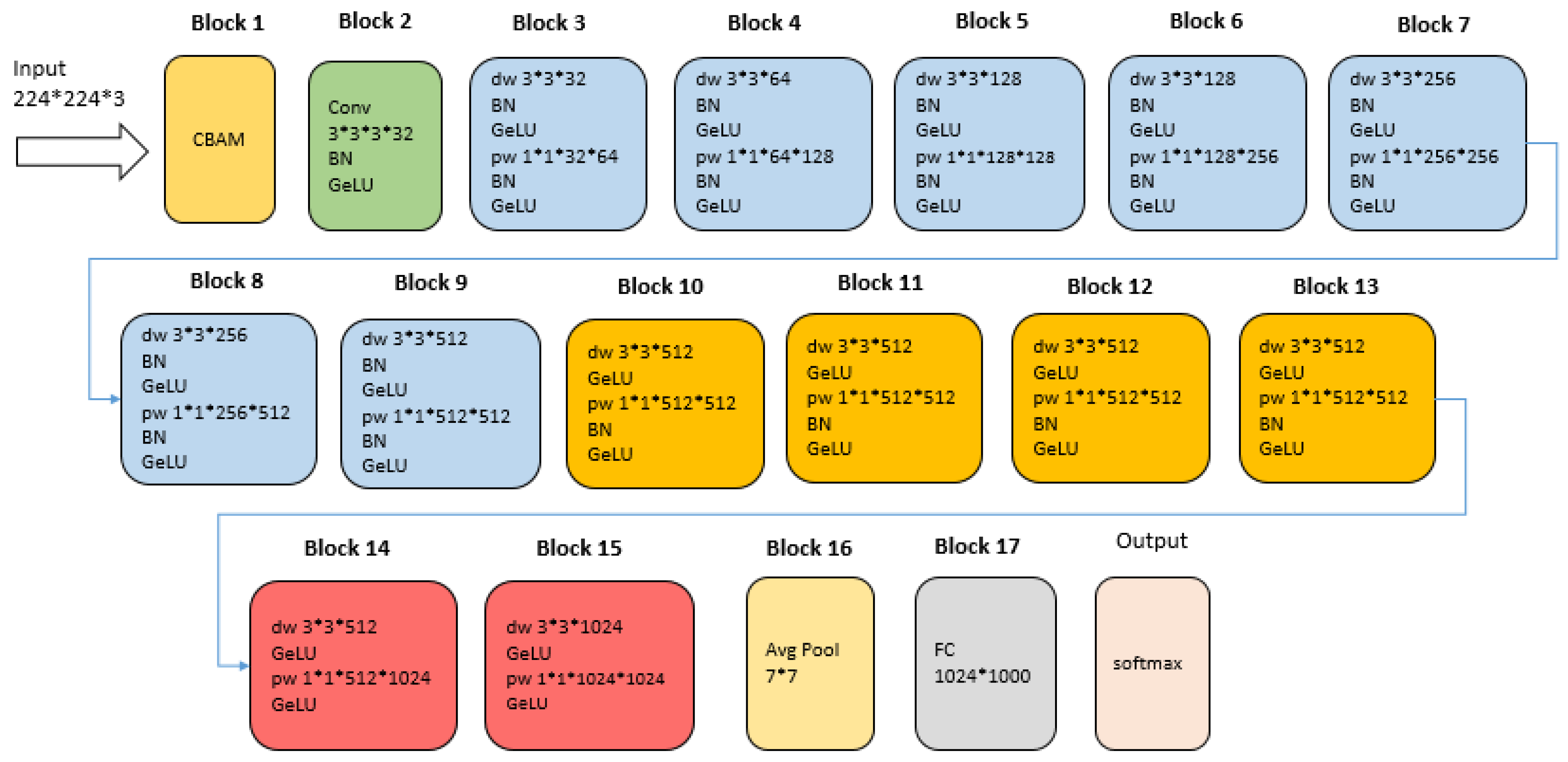

3.2.2. Lightweight Model

1. Input

2. Block 1

3. Block 2

4. Block 3–Block 9

5. Block 10–Block 13

6. Block 14 and Block 15

7. Block 16

8. Block 17

9. Output

4. Experiment

4.1. Experimental Environment

4.1.1. Hardware and Software

4.1.2. Dataset

4.2. Purpose of the Experiment

5. Results

5.1. Running in an Embedded Device Environment

5.2. Better Performance

5.3. Impact of Privacy Protection on Model Accuracy

6. Discussion

6.1. Analysis of Technology

6.1.1. Information Hiding Based on Fourier Transform

6.1.2. Ablation Experiments

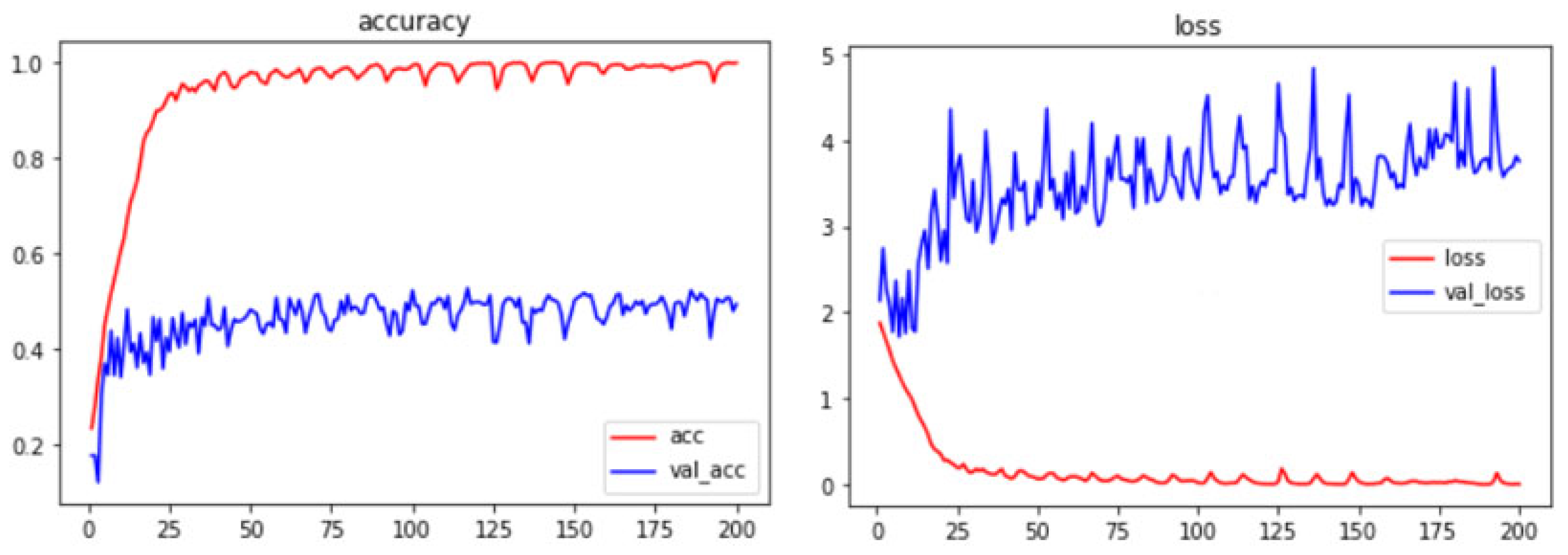

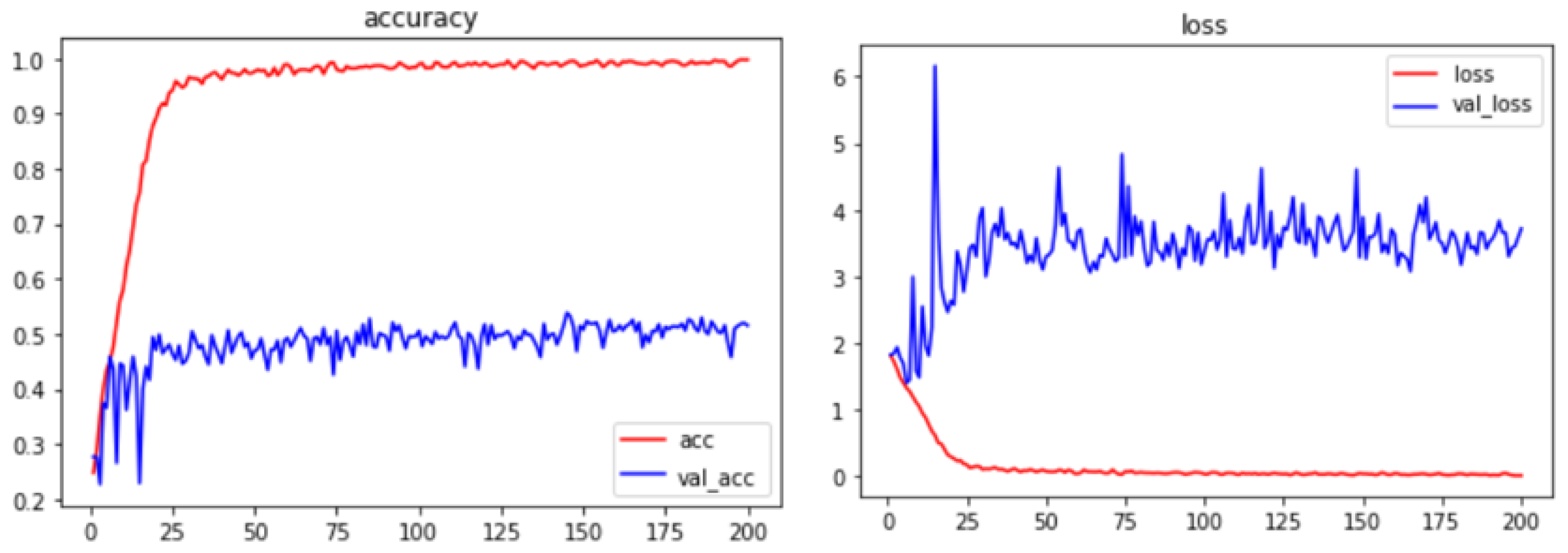

- Figure 12 shows that after pruning one DSC layer (Model_1), the model accuracy falls below 50%, which is unacceptable.

- Figure 13 shows that after reducing the number of channels in one DSC layer (Model_2), the model accuracy also falls below 50%.

- Figure 14 shows that the model collapses after all BN layers are pruned (Model_3). BN layers therefore play a critical role in the model and cannot be removed completely.

- Figures 15 and 16 compare Model_4, which prunes BN in the early layers, with Model_5, which prunes BN in the final layers. Model_5 performs markedly better (81.92% versus 50.68% on FERPlus). BN layers matter a great deal at the beginning of the model but much less in the last few layers, so BN pruning should focus on the final part of the model.

- Model_6 prunes all BN layers in the last three DSC layers, and its accuracy drops significantly, as shown in Figure 17. Pruning BN too aggressively in the final part of the model is therefore not feasible.

- PIDM follows our idea of gradually reducing the BN layers in the second half of the network. In addition, it uses no BN layer before the softmax layer. As shown in Figure 18, PIDM achieves acceptable results.
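
To see why pruning BN layers changes the parameter count only slightly while pruning a whole DSC layer changes it more, a rough count helps. The sketch below assumes that DSC denotes a depthwise separable convolution with a 3 × 3 depthwise kernel, no bias terms, and four stored values per BN channel (scale, shift, running mean, running variance). The helper names and the 512-channel layer shape are illustrative assumptions, not the published PIDM configuration.

```python
def conv_params(kernel, c_in, c_out):
    """Weights of a standard convolution (bias omitted by assumption)."""
    return kernel * kernel * c_in * c_out


def dsc_params(kernel, c_in, c_out, bn_after_dw=True, bn_after_pw=True):
    """Weights of a depthwise separable convolution with optional BN layers."""
    depthwise = kernel * kernel * c_in  # one k x k filter per input channel
    pointwise = c_in * c_out            # 1 x 1 convolution that mixes channels
    bn = 0
    if bn_after_dw:
        bn += 4 * c_in                  # assumed 4 stored values per channel
    if bn_after_pw:
        bn += 4 * c_out
    return depthwise + pointwise + bn


# Hypothetical 512 -> 512 channel block.
standard = conv_params(3, 512, 512)
with_bn = dsc_params(3, 512, 512)
without_dw_bn = dsc_params(3, 512, 512, bn_after_dw=False)
print(standard, with_bn, without_dw_bn)
```

Under these assumptions, dropping one BN layer saves only a few thousand values out of roughly 270 thousand in the block, which is in line with the small size differences among the BN-pruned variants in the ablation table.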

6.2. Analysis of Teaching Applications

6.2.1. Analysis of Teaching Effect

6.2.2. Analysis of Teacher Questionnaire

6.2.3. Analysis of Student Questionnaire

6.3. Contribution

- Accuracy

- Efficiency

- Ease of use

- Real-time display of analysis data

- Localization services

6.4. Deficiency and Improvement Direction

6.4.1. Technology

6.4.2. Applications in Teaching

7. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| | Model Training Environment | Amlogic T962 Embedded Environment | Raspberry Pi 3B Embedded Environment |
|---|---|---|---|
| CPU | Kaggle kernels | Cortex-A53 1.5 GHz | Cortex-A53 1.2 GHz |
| RAM | 13 GB | 2 GB | 1 GB |
| GPU | P100 16 GB | Mali 450 MP | Broadcom VideoCore IV |
| System | Kaggle platform | Android 9.0 | Windows NT 6.1 |

| Model | Hardware: Amlogic T962, System: Android 9.0 | Hardware: Raspberry Pi 3B, System: Windows NT 6.1 |
|---|---|---|
| PIDM | √ | √ |

| Model | FERPlus Accuracy | ExpW Accuracy | AffectNet Accuracy | Parameters (millions) | Response time/k |
|---|---|---|---|---|---|
| VGG16 | 83.76% | 81.52% | 78.25% | 138 | 3.5387 s |
| GoogLeNet InceptionV3 | 78.67% | 76.75% | 74.87% | 23 | 3.4376 s |
| MobileNet | 81.25% | 80.46% | 76.32% | 4.2 | 2.5783 s |
| PIDM | 82.43% | 80.65% | 76.24% | 3.2 | 1.8703 s |
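
The size and speed relationship between PIDM and MobileNet can be checked directly from the table above. The short calculation below copies the two rows as given; the dictionary layout and the `ratio` helper are illustrative names only.

```python
# Values copied from the MobileNet and PIDM rows of the comparison table.
MODELS = {
    "MobileNet": {"params_millions": 4.2, "response_time_s": 2.5783},
    "PIDM": {"params_millions": 3.2, "response_time_s": 1.8703},
}


def ratio(metric, model="PIDM", baseline="MobileNet"):
    """Return the model's value for `metric` as a fraction of the baseline's."""
    return MODELS[model][metric] / MODELS[baseline][metric]


size_ratio = ratio("params_millions")
time_ratio = ratio("response_time_s")
print(f"size: {size_ratio:.1%} of MobileNet, response time: {time_ratio:.1%} of MobileNet")
```

The parameter ratio comes out at about 76%, matching the figure quoted for PIDM, and the response time is roughly 73% of MobileNet's.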

| | Amlogic T962 (Android 9.0): Protected | Amlogic T962 (Android 9.0): Unprotected | Raspberry Pi 3B (Windows NT 6.1): Protected | Raspberry Pi 3B (Windows NT 6.1): Unprotected |
|---|---|---|---|---|
| 1 | 82.43% | 82.58% | 81.25% | 81.16% |
| 2 | 82.35% | 82.26% | 81.65% | 80.87% |
| 3 | 81.82% | 82.47% | 80.76% | 81.38% |
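
One way to read the protected versus unprotected figures above is to average the three numbered rows per device and compare the means. The sketch below does only that. It treats the rows as repeated measurements, which is an assumption, and the names `RUNS` and `mean_gap` are illustrative.

```python
from statistics import mean

# Accuracy values (percent) copied from the table above.
RUNS = {
    "Amlogic T962": {
        "protected": [82.43, 82.35, 81.82],
        "unprotected": [82.58, 82.26, 82.47],
    },
    "Raspberry Pi 3B": {
        "protected": [81.25, 81.65, 80.76],
        "unprotected": [81.16, 80.87, 81.38],
    },
}


def mean_gap(device):
    """Mean unprotected accuracy minus mean protected accuracy, in percentage points."""
    data = RUNS[device]
    return mean(data["unprotected"]) - mean(data["protected"])


for device in RUNS:
    print(f"{device}: gap = {mean_gap(device):+.2f} percentage points")
```

On these numbers the gap stays well under half a percentage point on both devices and changes sign between them, which is consistent with privacy protection having little effect on accuracy.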

| Name | Description |
|---|---|
| Model_1 | Remove a DSC layer, Block 9. |
| Model_2 | Modify the channel of pw convolution in Block 12 from 512 to 256. |
| Model_3 | Remove all BN layers. |
| Model_4 | Remove all BN layers of Block 3 and Block 4. |
| Model_5 | Remove all BN layers of Block 14 and Block 15. |
| Model_6 | Remove all BN layers of Block 13, Block 14, and Block 15. |
| PIDM | 1. Remove all BN layers of Block 14 and Block 15. 2. Remove BN layers after dw in Block 10, Block 11, Block 12, and Block 13. |

| Name | Parameter Size | FERPlus Accuracy |
|---|---|---|
| Model_1 | 2.96 M | 46.32% |
| Model_2 | 2.98 M | 43.21% |
| Model_3 | 3.02 M | 26.00% |
| Model_4 | 3.26 M | 50.68% |
| Model_5 | 3.26 M | 81.92% |
| Model_6 | 3.24 M | 71.46% |
| PIDM | 3.22 M | 82.46% |

| Teacher | Student | 2 Class Hours | 8 Class Hours | 16 Class Hours |
|---|---|---|---|---|
| Teacher A | Team A | 79.23 | 85.67 | 84.12 |
| Teacher A | Team B | 76.41 | 73.53 | 77.49 |
| Teacher B | Team A | 82.19 | 80.67 | 83.27 |
| Teacher B | Team B | 74.16 | 76.57 | 74.28 |

| | 2 Class Hours | 8 Class Hours | 16 Class Hours | Overall Average |
|---|---|---|---|---|
| Team A | 80.71 | 83.17 | 83.70 | 82.53 |
| Team B | 75.28 | 75.05 | 75.89 | 75.41 |

| | Convenience Rating (Average) | Teaching Effectiveness Rating (Average) |
|---|---|---|
| Group A | 4.33 | 3.53 |
| Group B | 4.57 | 4.31 |
| Total | 4.45 | 3.92 |

| | With Smart Devices | Without Smart Devices |
|---|---|---|
| Liberal arts students | 4.03 | 4.14 |
| Science students | 3.62 | 4.18 |
| Sample variance | 0.83 | 0.75 |


© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Li, L.; Chen, C.P.; Wang, L.; Liang, K.; Bao, W. Exploring Artificial Intelligence in Smart Education: Real-Time Classroom Behavior Analysis with Embedded Devices. Sustainability 2023, 15, 7940. https://doi.org/10.3390/su15107940