1. Introduction

Transportation carbon emissions are characterized by a large share, fast growth and a slow approach to their peak [1]. Traffic congestion increases carbon emissions [2].

A high proportion of trucks in the traffic flow reduces highway capacity, which in turn causes traffic congestion. Traffic accidents, especially those in which vehicles hit the W-Beam barrier, can also cause congestion. To reduce the congestion arising from these two situations, innovation and new practices in accident detection technology are encouraged [3]. In 2019 we developed a W-Beam barrier vibration detector based on inclination sensors. In use, we found that the sensor data contained a large amount of natural noise, making it impossible to tell whether a segment of anomalous data was caused by noise or by a passing truck. In addition, setting thresholds empirically led to a high false alarm rate when identifying barrier impact events.

Therefore, this paper applies several data-driven methods. First, noise reduction is applied to the sensor data, and the number of trucks passing in a given period is identified and counted. Online monitoring is then conducted to identify any barrier collision incidents. The results are shared with the existing highway operation management system.

When no accident occurs, the truck ratio is one source of traffic congestion. As the truck ratio increases, the moving-bottleneck effect caused by trucks intensifies sharply: the traffic flow forms gathering and dissipating waves, the average speed drops rapidly and the average delay increases, which in serious cases can lead to traffic breakdown [4]. At that point, traffic management strategies need to be adjusted in real time, for example by prohibiting trucks from overtaking, applying active speed limits or temporarily opening the shoulders [5]. The trigger conditions of existing highway management policies are set by managers based on subjective judgment. The method proposed in this paper can serve as one of the automatic trigger conditions for these daily management policies.

The barrier vibration monitoring device has now been tested on more than 100 km of highway in China in total. The device consists of a three-axis inclination sensor (X-axis along the driving direction, Y-axis perpendicular to the ground, and Z-axis pointing toward the outside of the road), LOT, and a 5 V battery. The sensors are fixed to the steel posts of the W-Beam barrier, with 2 sets deployed every 200 m (one set each on the inner and outer barriers, deployed symmetrically). A control machine is deployed every 1.5 km and communicates with each detector via LOT. We recommend that operators co-locate the control machine with road cameras so that they can conveniently share power and the fiber-optic communication system. At installation, the starting position is recorded as the initial value, and the collected raw data are the offsets of each axis from that initial value.

Field data collected in the non-accident state show that the Y-axis reading changes slightly when a truck passes the W-Beam barrier vibration sensor, and that the frequency of Y-axis offsets increases with the number of nearby trucks. Using this characteristic, we aim to estimate the approximate proportion of trucks passing each sensor from the barrier monitoring data.

Barrier crash data are not easily obtained in practice, so data from experiments with human-simulated crashes are used to verify the recognition accuracy. Barrier crash events are reflected in all axes: serious crashes produce large offsets in the Y and Z axes that do not return to the initial value, while less serious events do not deform the barrier extensively but are still reflected in the Y-axis at the moment of the crash.

In China, there are legal restrictions on active speed limits and shoulder opening, so not all highways can implement these strategies [6]. No-truck-overtaking management, however, is not subject to this restriction [7]. The device determines when to activate the no-truck-overtaking policy downstream based on the percentage of large vehicles upstream. The condition for activating the ban is that 5 consecutive upstream sensors (covering 1 km) detect a passing truck at least every 10 s and this situation persists for more than 15 min, indicating that at least 100 trucks have passed through the 1 km section during this period.
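A minimal sketch of the activation condition above, assuming the truck detections of the five sensors have already been merged into one ascending list of timestamps in seconds. The merging step, the function name and its parameters are hypothetical and not part of the deployed system; the sketch only checks whether every gap between consecutive detections stayed within 10 s over the last 15 min.

```python
from typing import Sequence


def ban_should_open(detections: Sequence[float], now: float,
                    max_gap: float = 10.0, min_duration: float = 900.0) -> bool:
    """Return True if trucks were detected at least every `max_gap` seconds,
    without interruption, for at least `min_duration` seconds up to `now`.

    `detections`: truck-detection timestamps (s) for the 1 km section,
    assumed merged from its five sensors and sorted in ascending order.
    """
    if not detections or now - detections[-1] > max_gap:
        return False  # no recent truck, so the sustained run is already broken
    run_start = detections[-1]
    # Walk backwards over consecutive pairs while the gaps stay within max_gap.
    for earlier, later in zip(reversed(detections[:-1]), reversed(detections[1:])):
        if later - earlier > max_gap:
            break
        run_start = earlier
    return now - run_start >= min_duration


# A truck every 8 s from t = 0 to t = 960 s satisfies the rule at t = 960 s.
print(ban_should_open([8.0 * i for i in range(121)], now=960.0))
```

Scanning backwards from the newest detection keeps the check cheap when it is re-evaluated every second, since only the current uninterrupted run is inspected.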

In this case, we recommend that the highway operation management system post a message on the variable message sign (VMS) at the upstream interchange of this section, alerting truck drivers that truck overtaking is prohibited on the road ahead. The VMS message is cancelled automatically when the barrier detection data register fewer than 100 trucks in a 15 min period. The field data come from a two-way, four-lane highway in Guangdong, China, with a speed limit of 120 km/h. The truck ratio on this section stays at 10–20% all year round. The maximum measured capacity is 1800 pcu/h/ln, well below the design capacity of 2200 pcu/h/ln. The pollution caused by congestion is calculated with the indicators used by Wang [2]. Comparing one month of data with the device and one month without it showed that highway capacity increased by around 12.7% and the congestion-related environmental pollution index decreased significantly. There were no barrier impact accidents during the experiment period.

Therefore, this paper aims to improve congestion management in daily highway operations and after barrier collisions. The approach is to decide whether to activate the no-overtaking strategy for trucks by estimating the proportion of large vehicles upstream, and to support rapid highway decongestion by accurately identifying barrier collisions. Methodologically, this paper uses Chang's wavelet multiscale algorithm to filter noise from the raw data [8]. By comparing Gertler X's PCA [9], Lee Y's PLS [10] and Gharavian Z's FDA [11] algorithms, we found that FDA is more suitable for monitoring collision events, while PCA is more suitable for estimating the approximate proportion of trucks. Therefore, we use two sets of algorithms to estimate the approximate proportion of large trucks and to identify crash events, respectively.

This paper is a step toward carbon neutrality in transportation in China. Besides significantly reducing congestion-related pollution, the approach can serve as an alternative to solutions based on video and radar technology, reducing the cost of highway electromechanical equipment and contributing to the sustainable development of China's highway network.

3. Methods

In this paper, we first use wavelet thresholding to remove noise at multiple scales. Second, we compare the principles of the candidate algorithms, select a suitable algorithm for estimating the proportion of trucks, and verify its accuracy by comparing its results on non-accident data with video. Finally, using the simulated impact data, we compare the accuracy of each fault diagnosis algorithm and select the most suitable one to combine with the wavelet thresholding algorithm, forming the core algorithm for W-Beam barrier collision identification.

3.1. Sensor Data Analysis

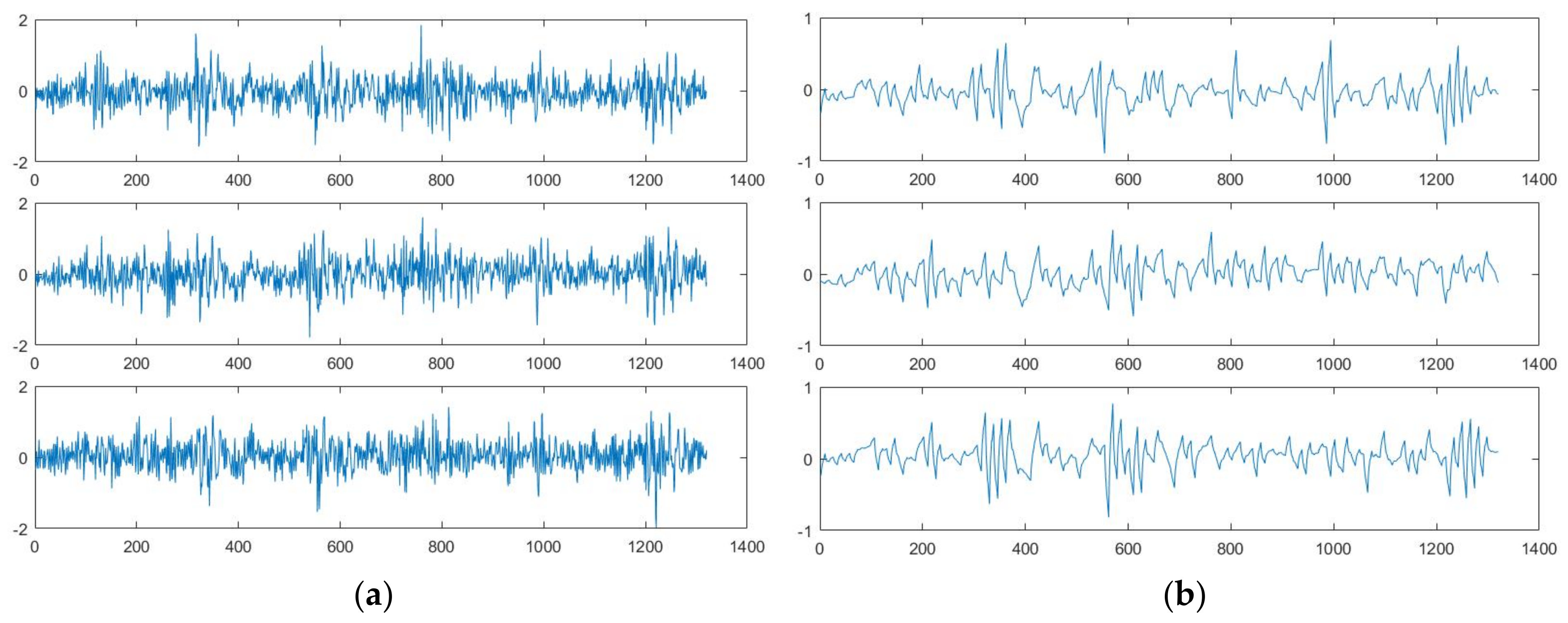

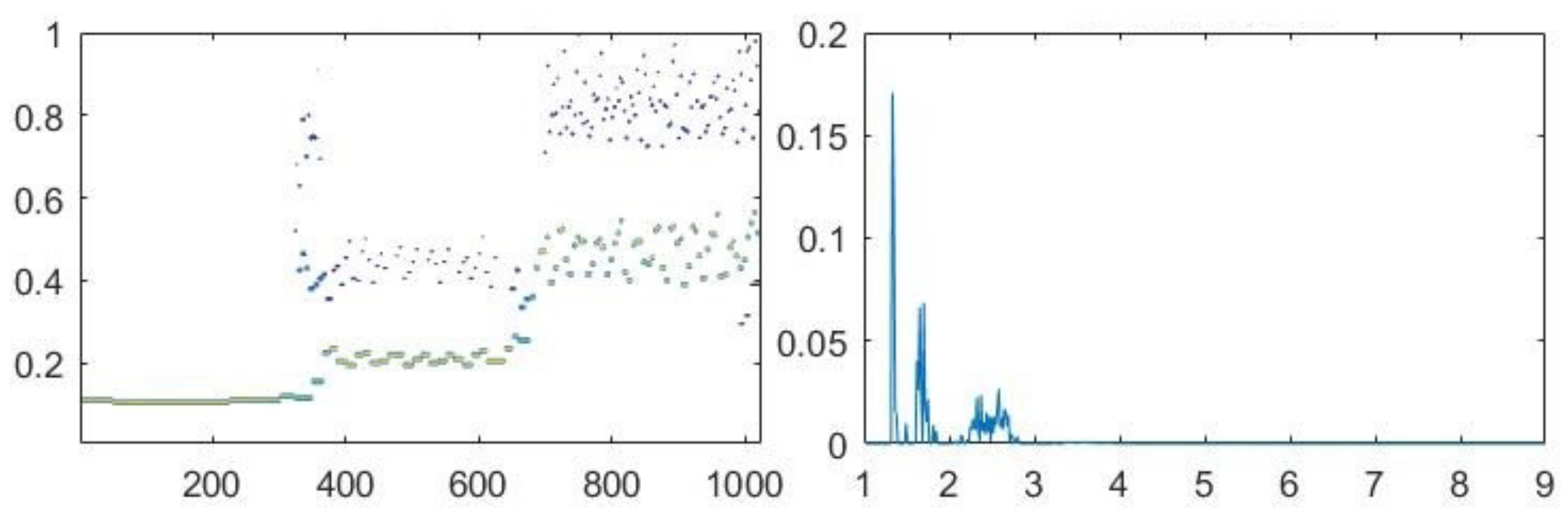

We placed 20 monitoring points at 200 m intervals on a freeway in Guangdong Province, China, and collected one set of data per second. Data were collected for about 1 h during the off-peak period. No crashes occurred during data collection, and the ratio of trucks exceeded 15% for about 15 min. In Figure 1, about 1350 sets of data from three monitoring points are compared for the peak period (a) and the off-peak period (b).

Because no crash occurred, the anomalies in the data consist of noise and vibration. The noise can come from natural disturbances, geomagnetic interference and inconsistencies in the operating behavior of the front-end sensor hardware and software. As the amount of data increases, a significant amount of noise obscures useful information at multiple scales. For example, sensor drift tends to vary slowly, as a near-constant deviation, while the vibration generated by a passing truck is high-frequency and abrupt, and is often masked by noise.

3.2. Improved Wavelet Threshold Noise Removal Algorithm

The improved wavelet threshold noise removal method proposed in this paper builds on previous research. Because those results were designed for other kinds of detectors, the threshold must be lowered and the sensitivity increased when the method is applied to barrier monitoring devices. This paper therefore modifies three aspects of the method in [13], namely the noise standard deviation estimation, the threshold setting and the wavelet coefficient adjustment function, to better suit barrier monitoring data.

3.2.1. Noise Standard Deviation Estimation Formula

The noise standard deviation measures the magnitude of the noise superimposed on the useful information. After the signal is decomposed over J scales, each scale yields a high-frequency signal and a low-frequency signal. For the high-frequency signal, the noise standard deviation estimate is defined as Equation (1):

where j is the scale, N is the total number of wavelet coefficients at scale j, and k is the index of the current wavelet coefficient.
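Equation (1) itself is not reproduced here. As a reference point only, the sketch below implements the conventional robust estimator that wavelet denoising usually starts from (Donoho and Johnstone), σ_j = median(|d_{j,k}|)/0.6745 over the N detail coefficients d_{j,k} of scale j. Whether the modified Equation (1) builds on this form is an assumption, and the function name is hypothetical.

```python
import statistics
from typing import Sequence


def noise_sigma_mad(detail: Sequence[float]) -> float:
    """Robust noise standard deviation for one scale of detail coefficients.

    Divides the median absolute coefficient by 0.6745, the median of |X|
    for a standard normal X, so the result estimates sigma for Gaussian noise.
    """
    if not detail:
        raise ValueError("need at least one detail coefficient")
    return statistics.median(abs(d) for d in detail) / 0.6745


print(noise_sigma_mad([0.3, -1.1, 0.7, -0.2, 0.9]))
```

The median is used rather than the sample standard deviation because the few large coefficients produced by a passing truck or an impact would otherwise inflate the noise estimate.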

3.2.2. Threshold Setting Functions

The threshold must be adapted to the actual signal-to-noise ratio of the original signal. The universal (uniform) threshold equation given in [13] is well known.

After the signal is decomposed over J scales, J sets of high-frequency wavelet coefficients are obtained. Each set is sorted in ascending order of absolute value to obtain a vector of N elements (1 ≤ n ≤ N). This vector is used to calculate the evaluation vector of the jth layer of wavelet coefficients, whose elements are likewise indexed by n (1 ≤ n ≤ N), where:

The elements of the evaluation vector are then sorted from largest to smallest, the smallest value is taken as the approximation error, and the corresponding wavelet coefficient is used to calculate the threshold of the jth layer of the wavelet decomposition. The threshold selection function at the jth layer is:

where one term is the average of the absolute values of the wavelet coefficients and the other is the minimal energy level of this wavelet coefficient vector, calculated as follows.
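The procedure above (sort the coefficients, evaluate a risk for every candidate, keep the coefficient with the smallest risk) closely resembles the classical SURE ("rigrsure") threshold of Donoho and Johnstone, and the uniform threshold of [13] is presumably the universal threshold σ√(2 ln N). The sketch below implements those two textbook rules under that assumption only; this paper's own evaluation-vector formula and its mean-absolute-value and energy terms are not reproduced, and the function names are hypothetical.

```python
import math
from typing import Sequence


def universal_threshold(sigma: float, n: int) -> float:
    """Universal threshold sigma * sqrt(2 ln n) for n coefficients."""
    return sigma * math.sqrt(2.0 * math.log(n))


def sure_threshold(coeffs: Sequence[float], sigma: float) -> float:
    """SURE ('rigrsure') soft threshold for one layer of detail coefficients.

    The coefficients are normalised by sigma, squared and sorted in ascending
    order. Thresholding at the k-th sorted value has estimated risk
    (N - 2k + sum of the first k squares + (N - k) * k-th square) / N;
    the value with the smallest risk is returned, rescaled by sigma.
    """
    n_total = len(coeffs)
    squares = sorted((c / sigma) ** 2 for c in coeffs)
    best_risk, best_square = math.inf, squares[0]
    running_sum = 0.0
    for k, sq in enumerate(squares, start=1):
        running_sum += sq
        risk = (n_total - 2 * k + running_sum + (n_total - k) * sq) / n_total
        if risk < best_risk:
            best_risk, best_square = risk, sq
    return sigma * math.sqrt(best_square)


layer = [0.1, -0.2, 0.15, 5.0, -4.0, 0.05]
print(sure_threshold(layer, sigma=1.0), universal_threshold(1.0, len(layer)))
```

In this toy layer the SURE rule settles just above the small noise-like coefficients, whereas the universal threshold depends only on σ and N; the lower, data-adaptive value is in line with the paper's aim of lowering the threshold to raise sensitivity.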

3.2.3. Calculation of Wavelet Coefficient Estimates

Wavelet coefficients suspected of being caused by noise must be shrunk so that only the signal remains. Through a series of calculations, the original wavelet coefficients are replaced by estimated values, and the signal is then reconstructed from them by the inverse wavelet transform to achieve denoising. A coefficient reflecting the noise intensity of the high-frequency signal of the jth wavelet layer is introduced; it is calculated from the coefficient amplitudes of the high-frequency part of the jth layer. The estimated values of the wavelet coefficients are given in Equation (6).

The detailed steps of the improved wavelet threshold denoising algorithm are described in [13].
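The overall decompose, shrink and reconstruct loop can be sketched in pure Python with an orthonormal Haar wavelet. This is an illustration under stated assumptions: the Haar basis, the soft-thresholding rule (used here in place of Equation (6), whose exact form is given in [13]) and the per-level thresholds supplied by the caller are all choices made for the sketch, not the paper's configuration.

```python
import math
from typing import List, Sequence, Tuple

SQRT2 = math.sqrt(2.0)


def haar_step(x: Sequence[float]) -> Tuple[List[float], List[float]]:
    """One level of the orthonormal Haar transform: (approximation, detail)."""
    approx = [(x[i] + x[i + 1]) / SQRT2 for i in range(0, len(x), 2)]
    detail = [(x[i] - x[i + 1]) / SQRT2 for i in range(0, len(x), 2)]
    return approx, detail


def haar_inverse_step(approx: Sequence[float], detail: Sequence[float]) -> List[float]:
    """Invert one Haar level, interleaving the reconstructed sample pairs."""
    out: List[float] = []
    for a, d in zip(approx, detail):
        out.extend(((a + d) / SQRT2, (a - d) / SQRT2))
    return out


def soft_threshold(c: float, lam: float) -> float:
    """Shrink c toward zero by lam; coefficients with |c| <= lam become 0."""
    return math.copysign(max(abs(c) - lam, 0.0), c)


def haar_denoise(signal: Sequence[float], thresholds: Sequence[float]) -> List[float]:
    """Decompose over len(thresholds) levels, shrink details, reconstruct.

    thresholds[j] is applied to the detail coefficients of level j + 1
    (finest level first). len(signal) must be divisible by 2 ** levels.
    """
    levels = len(thresholds)
    if len(signal) % (2 ** levels):
        raise ValueError("signal length must be divisible by 2 ** levels")
    approx = list(signal)
    details = []
    for _ in range(levels):
        approx, d = haar_step(approx)
        details.append(d)
    # Rebuild from the coarsest level back to the original resolution.
    for j in reversed(range(levels)):
        shrunk = [soft_threshold(c, thresholds[j]) for c in details[j]]
        approx = haar_inverse_step(approx, shrunk)
    return approx


noisy = [0.1, -0.2, 0.05, 0.15, 2.1, 1.9, 2.05, 1.95]
print(haar_denoise(noisy, [0.3, 0.3, 0.3]))
```

Soft thresholding is shown because it is continuous in the coefficient value, which avoids the artefacts of hard thresholding; the improved method described above modifies exactly this shrinkage step and the threshold that feeds it.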

3.3. PCA-Based Fault Diagnosis Model

The PCA model is a typical dimensionality reduction method: it combines correlated multidimensional variables linearly to extract their characteristic parameters, which are then used to determine fault information. The PCA-based fault diagnosis model is built by statistically modeling a large amount of normal offline data, which divides the measurement space into two orthogonal and complementary subspaces: the principal component subspace $S_p$ and the residual subspace $S_r$. During online monitoring, any sample x(t) can be decomposed into its projections onto the two subspaces:

$$x(t) = \hat{x}(t) + \tilde{x}(t), \qquad \hat{x}(t) = PP^{\mathsf T}x(t) \in S_p, \qquad \tilde{x}(t) = (I - PP^{\mathsf T})x(t) \in S_r,$$

where P is the loading matrix of the retained principal components, $\hat{x}(t)$ is the modeled part and $\tilde{x}(t)$ is the unmodeled part [23].

The squared prediction error (SPE) and Hotelling's $T^2$ statistic are usually used to detect whether a process is abnormal, i.e., to distinguish normal data from faulty data [24]. The SPE metric measures the change in the projection of the sample vector onto the residual space: $\mathrm{SPE} = \|\tilde{x}\|^2$, where $\tilde{x}$ is the projection of the sample onto the residual subspace.

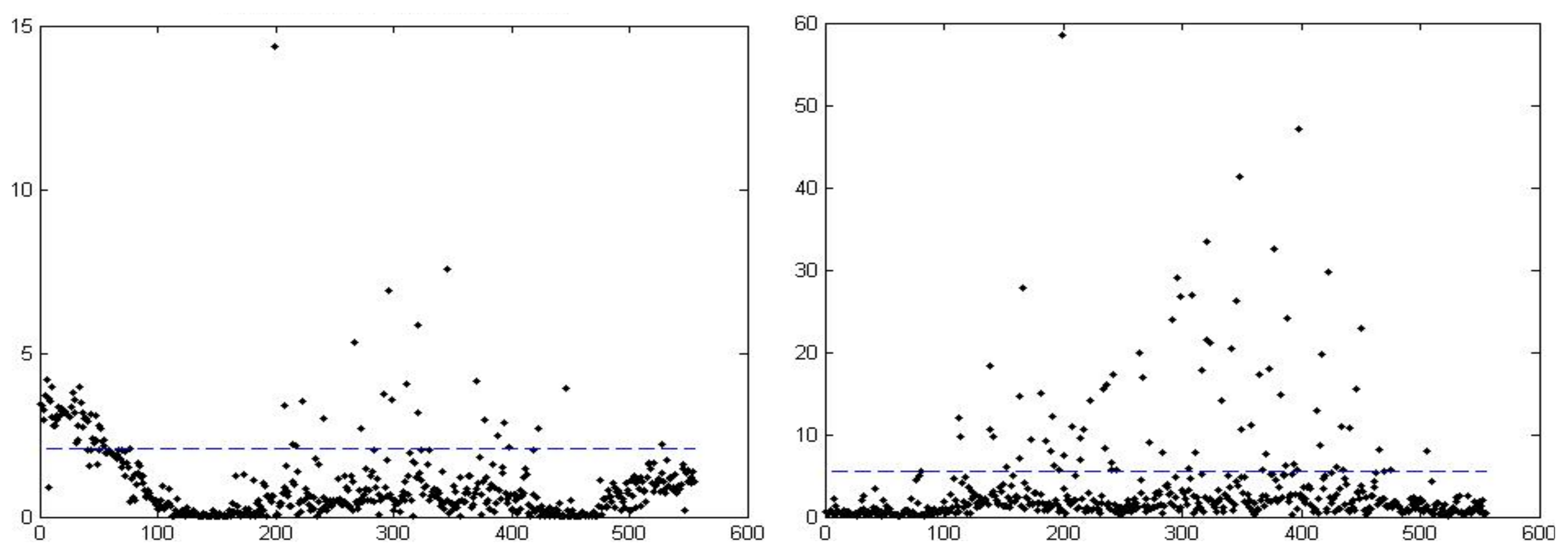

When faulty data push the statistic beyond its control limit, the change in the correlation among the data is reflected in the SPE value. In Figure 2, the radius of the control domain is the SPE control limit, and the black dots indicate the SPE values of the data points in the residual space; a data point falling outside the domain is considered a fault [25].

The $T^2$ statistic measures the variation of the variables in the principal component subspace: $T^2 = x^{\mathsf T}P\Lambda^{-1}P^{\mathsf T}x$, where P is the loading matrix and $\Lambda$ is the diagonal matrix of the retained eigenvalues. A sample is considered faulty when its projection onto the principal component subspace lies at a distance exceeding the radius of the control domain.

Because SPE and $T^2$ have different monitoring emphases, it often happens that a sample's $T^2$ value lies outside its control domain while its SPE value is within the normal range. In that case the sample may be a data fault, or the measurement range may have changed, so other means are needed to support the decision on fault information.
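A minimal NumPy sketch of the PCA monitoring statistics described above. Assumptions: the data are standardized with the mean and standard deviation of the normal training data, the number of retained components is chosen by the user, and control limits (the radius of the control domain) are omitted; in practice they are usually derived from F or chi-squared approximations. The per-variable squared residuals returned with SPE are the usual basis of contribution plots such as those in Section 5.1. The class name and the synthetic data are hypothetical.

```python
import numpy as np


class PCAMonitor:
    """PCA model fitted on normal (fault-free) offline data."""

    def __init__(self, X_normal, n_components):
        X = np.asarray(X_normal, dtype=float)
        self.mean = X.mean(axis=0)
        self.std = X.std(axis=0, ddof=1)
        Z = (X - self.mean) / self.std               # standardize each sensor channel
        eigvals, eigvecs = np.linalg.eigh(np.cov(Z, rowvar=False))
        order = np.argsort(eigvals)[::-1][:n_components]
        self.P = eigvecs[:, order]                   # loading matrix of retained PCs
        self.lam = eigvals[order]                    # retained eigenvalues

    def statistics(self, x):
        """Return (SPE, T2, per-variable SPE contributions) for one sample."""
        z = (np.asarray(x, dtype=float) - self.mean) / self.std
        t = self.P.T @ z                             # scores in the principal subspace
        residual = z - self.P @ t                    # (I - P P^T) z, residual subspace
        spe = float(residual @ residual)
        t2 = float(np.sum(t ** 2 / self.lam))
        return spe, t2, residual ** 2


rng = np.random.default_rng(0)
s = rng.normal(size=500)                             # shared synthetic vibration factor
X = np.column_stack([s, s + 0.01 * rng.normal(size=500), s + 0.01 * rng.normal(size=500)])
monitor = PCAMonitor(X, n_components=1)
print(monitor.statistics([1.0, 1.0, 1.0])[0])       # consistent sample: small SPE
print(monitor.statistics([1.0, 1.0, -1.0])[0])      # broken correlation: large SPE
```

The second sample has ordinary magnitudes on every channel, yet it breaks the learned correlation, so it shows up in SPE rather than in $T^2$; this is the kind of disagreement between the two indices discussed above.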

3.4. PLS-Based Fault Diagnosis Model

In the practical application of a barrier monitoring system, collision events can be judged from only one or two kinds of sensor data, while the data of the other sensors serve only as auxiliary information. The PLS model provides this capability. Building on the PCA model, PLS selects, from the process variables in X, the variables Y to focus on, and decomposes the space X into two subspaces: a Y-related principal subspace $S_{\hat{x}}$ and a residual subspace $S_{\tilde{x}}$. Faults are monitored in these two subspaces. If a fault occurs in the variable space Y, i.e., the fault affects the quality variables, it must appear in $S_{\hat{x}}$; if it does not affect the quality variables, it must appear in $S_{\tilde{x}}$. Usually, the $T^2$ indicator is used to detect faults in $S_{\hat{x}}$ and the Q indicator to detect faults in $S_{\tilde{x}}$ [26].

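In the calculation [27], given the PLS weight matrix W and loading matrix P, the matrix $R = W(P^{\mathsf T}W)^{-1}$ decomposes X into two subspaces, $S_{\hat{x}} = \mathrm{span}(P)$ and $S_{\tilde{x}} = \mathrm{span}(R)^{\perp}$. Unlike in the PCA model, $\hat{x}$ and $\tilde{x}$ are not orthogonal: $\hat{x}$ is the oblique projection of x along $S_{\tilde{x}}$ onto $S_{\hat{x}}$, while $\tilde{x}$ is the oblique projection of x along $S_{\hat{x}}$ onto $S_{\tilde{x}}$. During online monitoring, a new sample is decomposed into its principal and residual parts as $\hat{x} = PR^{\mathsf T}x$ and $\tilde{x} = (I - PR^{\mathsf T})x$, and the monitoring indices are computed as $T^2 = t^{\mathsf T}\Lambda^{-1}t$ with $t = R^{\mathsf T}x$, and $Q = \|\tilde{x}\|^2$, where $\Lambda$ is the covariance matrix of the training scores.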

In the above calculation, $T^2$ and Q turn out to be correlated, so a given fault can appear in both subspaces at the same time. This is similar to the use of the SPE and $T^2$ indicators in the PCA model, and such cases likewise require other means to support the decision on fault information. In addition, a PLS-based fault diagnosis model is more accurate and efficient than a PCA-based one only when the monitored system is well understood.
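A hedged NumPy sketch of the PLS monitoring scheme above, assuming a single focus variable y and the NIPALS algorithm for a PLS1 model. It computes $R = W(P^{\mathsf T}W)^{-1}$, the oblique decomposition $\hat{x} = PR^{\mathsf T}x$ and $\tilde{x} = (I - PR^{\mathsf T})x$, and the $T^2$ and Q indices; control limits are again omitted, and the function names and synthetic data are illustrative only.

```python
import numpy as np


def fit_pls_monitor(X, y, n_components):
    """Fit a PLS1 model with NIPALS and return what online monitoring needs."""
    X = np.asarray(X, dtype=float)
    y = np.asarray(y, dtype=float)
    x_mean, y_mean = X.mean(axis=0), y.mean()
    Xd, yd = X - x_mean, y - y_mean
    weights, loadings = [], []
    for _ in range(n_components):
        w = Xd.T @ yd
        w /= np.linalg.norm(w)                       # weight vector
        t = Xd @ w                                   # score vector
        tt = t @ t
        p = Xd.T @ t / tt                            # X loading
        q = (yd @ t) / tt                            # y loading
        Xd = Xd - np.outer(t, p)                     # deflate X
        yd = yd - q * t                              # deflate y
        weights.append(w)
        loadings.append(p)
    W, P = np.array(weights).T, np.array(loadings).T
    R = W @ np.linalg.inv(P.T @ W)                   # maps centred x straight to scores
    # Training scores are mutually orthogonal, so their covariance is diagonal.
    score_var = ((X - x_mean) @ R).var(axis=0, ddof=1)
    return x_mean, R, P, score_var


def pls_statistics(x, model):
    """Return (T2, Q) for one sample using the oblique PLS decomposition."""
    x_mean, R, P, score_var = model
    xc = np.asarray(x, dtype=float) - x_mean
    t = R.T @ xc                                     # scores, t = R^T x
    x_res = xc - P @ t                               # residual part, (I - P R^T) x
    return float(np.sum(t ** 2 / score_var)), float(x_res @ x_res)


rng = np.random.default_rng(1)
a, b = rng.normal(size=200), rng.normal(size=200)
X = np.column_stack([a, b, a + b])                  # third channel depends on the first two
model = fit_pls_monitor(X, y=a, n_components=2)
print(pls_statistics([1.0, 2.0, 3.0], model))      # respects the relation: Q close to 0
print(pls_statistics([1.0, 2.0, 10.0], model))     # violates it: large Q
```

Because $R^{\mathsf T}P = I$, the matrix $PR^{\mathsf T}$ is idempotent but not symmetric, which is exactly why the two parts are oblique rather than orthogonal projections.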

3.5. FDA-Based Fault Diagnosis Model

Like the PCA and PLS models, the FDA model uses training data to construct a reduced-dimensional space, projects online data into that space, and uses the projected feature parameters for fault diagnosis. It differs, however, in that it is trained on both normal data and labeled data from fault conditions [20]. If barrier impact data are treated as a type of data fault, various types of impact events can be created artificially, and the FDA model can be trained with both the collected impact data and the normal data.

Principle of the FDA-based fault diagnosis model [28]: the space X formed by the variables mentioned previously contains the normal data. The monitoring data from the created crash events are divided by class into a fault event data space G, and G is merged with X to train the FDA model. With n training samples $x_i$ in total, the total data dispersion is defined as:

$$S_t = \sum_{i=1}^{n} (x_i - \bar{x})(x_i - \bar{x})^{\mathsf T},$$

where $\bar{x}$ is the mean vector of the n samples. Let $\chi_j$ be the set of sample vectors belonging to the jth class of data. The intra-class dispersion $S_j$ of the jth class and the total intra-class dispersion $S_w$ are defined in Equation (7):

$$S_j = \sum_{x_i \in \chi_j} (x_i - \bar{x}_j)(x_i - \bar{x}_j)^{\mathsf T}, \qquad S_w = \sum_{j=1}^{p} S_j \tag{7}$$

In Equation (7), $\bar{x}_j$ is the mean vector of class j data and p is the number of classes. The interclass dispersion is defined as $S_b = \sum_{j=1}^{p} n_j (\bar{x}_j - \bar{x})(\bar{x}_j - \bar{x})^{\mathsf T}$, where $n_j$ is the number of samples in class j, so that the total dispersion equals the sum of the intraclass and interclass dispersions: $S_t = S_w + S_b$. FDA seeks projection directions that minimize the intraclass dispersion while maximizing the interclass dispersion, i.e., the objective function is Equation (8):

$$J(w) = \max_{w \neq 0} \frac{w^{\mathsf T} S_b w}{w^{\mathsf T} S_w w} \tag{8}$$

In Equation (8), assuming that $S_w$ is invertible, the FDA vectors are the eigenvectors of $S_w^{-1}S_b$, i.e., $S_b w_k = \lambda_k S_w w_k$. Since the rank of $S_b$ is less than p, there are at most p − 1 nonzero eigenvalues, and the corresponding FDA vectors form, by column, the projection matrix $W_p = [w_1, \ldots, w_{p-1}]$. Each sample $x_i$ can thus be projected into the (p − 1)-dimensional FDA space as $z_i = W_p^{\mathsf T} x_i$, achieving optimal separation of the classes. During online monitoring, an online sample x is projected into the low-dimensional space spanned by the columns of $W_p$ to obtain its FDA score $z = W_p^{\mathsf T} x$. This is combined with a metric such as the Mahalanobis distance [29] to find the fault class to which x belongs and thus achieve fault diagnosis.

Because the FDA model includes data from fault conditions in training, its fault diagnosis accuracy should theoretically exceed that of both the PCA and PLS models. In addition, it projects the data so as to minimize intra-class dispersion and maximize inter-class dispersion before diagnosing faults, which avoids the ambiguity between the two monitoring indices used by PCA and PLS.
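A minimal NumPy sketch of FDA training and online diagnosis as described above, assuming labeled training data (class 0 for normal operation, further labels for simulated impact classes). It forms $S_w$ and $S_b$, keeps the leading eigenvectors of $S_w^{-1}S_b$ (at most one fewer than the number of classes) as $W_p$, and assigns an online sample to the class whose centroid is nearest in Mahalanobis distance within the FDA space. Function names and toy data are hypothetical, and safeguards such as regularizing a near-singular $S_w$ are omitted.

```python
import numpy as np


def fit_fda(X, labels):
    """Fit FDA on labelled data; return (W_p, class centroids, inverse covariance)."""
    X = np.asarray(X, dtype=float)
    labels = np.asarray(labels)
    classes = np.unique(labels)
    overall_mean = X.mean(axis=0)
    m = X.shape[1]
    S_w = np.zeros((m, m))
    S_b = np.zeros((m, m))
    for c in classes:
        Xc = X[labels == c]
        mean_c = Xc.mean(axis=0)
        S_w += (Xc - mean_c).T @ (Xc - mean_c)       # intra-class dispersion
        d = (mean_c - overall_mean)[:, None]
        S_b += len(Xc) * (d @ d.T)                   # inter-class dispersion
    # Eigenvectors of S_w^{-1} S_b; only (number of classes - 1) are informative.
    eigvals, eigvecs = np.linalg.eig(np.linalg.solve(S_w, S_b))
    order = np.argsort(eigvals.real)[::-1][: len(classes) - 1]
    W_p = eigvecs[:, order].real
    Z = X @ W_p                                      # FDA scores of the training data
    centroids = {c: Z[labels == c].mean(axis=0) for c in classes}
    # Scatter of the scores around their own class centroids (pooled covariance).
    pooled = np.vstack([Z[labels == c] - centroids[c] for c in classes])
    cov_inv = np.linalg.inv(np.atleast_2d(np.cov(pooled, rowvar=False)))
    return W_p, centroids, cov_inv


def diagnose(x, W_p, centroids, cov_inv):
    """Assign sample x to the class with the smallest Mahalanobis distance."""
    z = np.asarray(x, dtype=float) @ W_p

    def mahalanobis_sq(c):
        diff = z - centroids[c]
        return float(diff @ cov_inv @ diff)

    return min(centroids, key=mahalanobis_sq)


normal = [[0.0, 0.0], [1.0, 0.0], [0.0, 1.0], [1.0, 1.0], [0.5, 0.5]]
impact = [[5.0, 5.0], [6.0, 5.0], [5.0, 6.0], [6.0, 6.0], [5.5, 5.4]]
W_p, centroids, cov_inv = fit_fda(normal + impact, [0] * 5 + [1] * 5)
print(diagnose([5.8, 5.9], W_p, centroids, cov_inv))
```

With two classes the FDA space is one-dimensional, matching the p − 1 bound above; adding more simulated impact classes would add further discriminant directions.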

5. Results and Discussion

5.1. PCA Fault Diagnosis Model Results

The PCA-based fault diagnosis model requires two statistics, SPE and $T^2$, to achieve fault diagnosis. The results for each control-limit indicator are shown in Figure 4.

To determine the location of the sensor where the fault occurred, the contribution plot method was used; the results are shown in Figure 5.

Owing to strong interference from vibration information, the PCA model cannot diagnose crash events, but it is more sensitive to vibration information. It detected 90 data faults, which means that the system estimated that 90 trucks passed through the experimental section.

5.2. PLS Fault Diagnosis Model Results

The PLS-based fault diagnosis model requires the $T^2$ and Q statistics to achieve fault diagnosis. The results for each control-limit indicator are shown in Figure 6. To determine the location of the sensor where the data fault occurred, the contribution plot method was used; the results are shown in Figure 7.

Like the PCA model, the PLS model has a high false alarm rate for collision event recognition; in addition, it suppresses vibration information, which leads to a larger error in estimating the proportion of trucks. The PLS model estimated that 35 trucks passed during the experiment, far from the actual count.

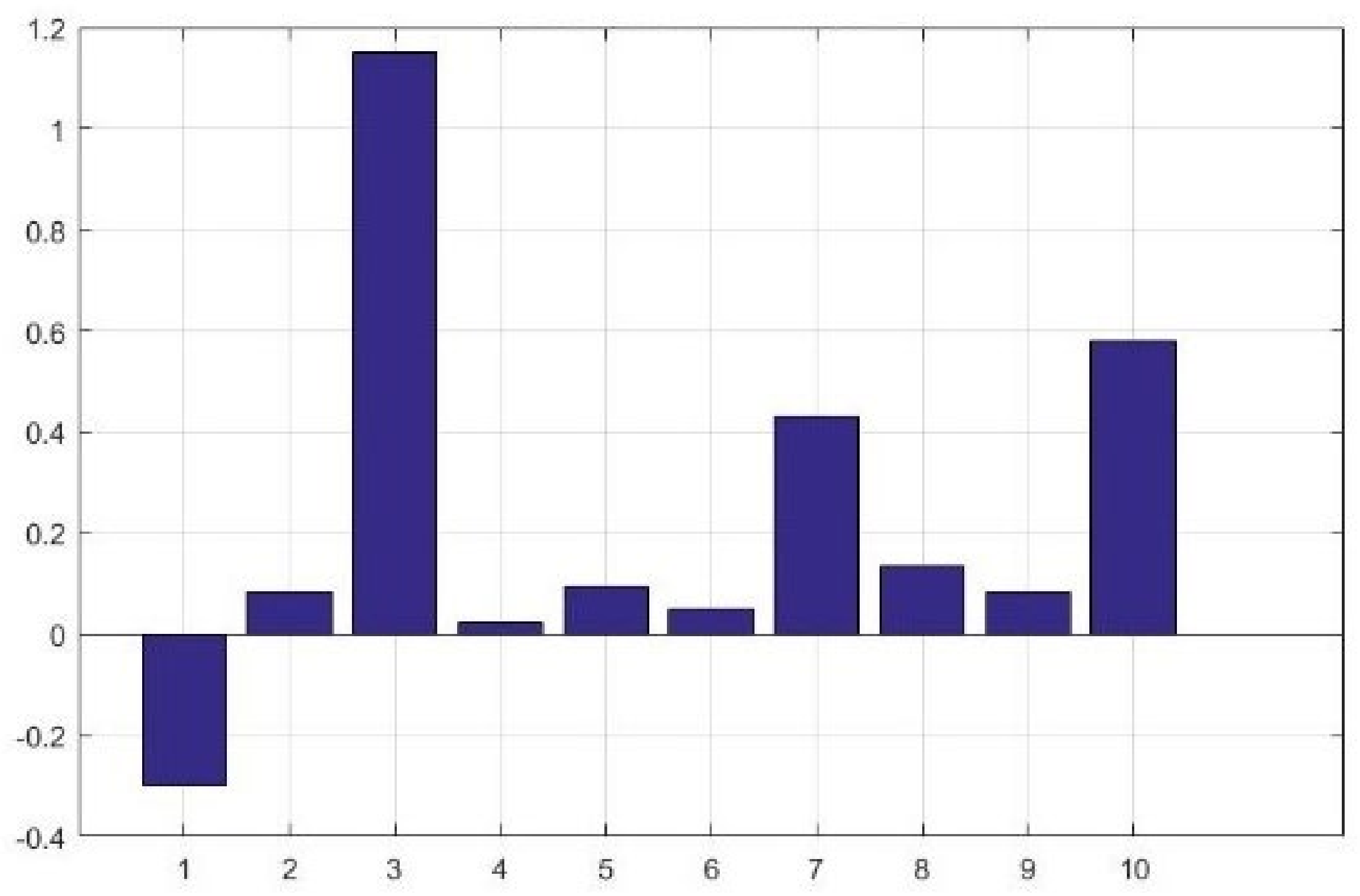

5.3. FDA Fault Diagnosis Model Results

Figure 8 shows the results of the FDA-based fault diagnosis. Because noise and fault data were included with the normal data during training, faults were accurately located at S1, S2 and S3 when they occurred, as shown on the right side of Figure 8. The fault location appears closest to S1, which is consistent with the simulated online data.

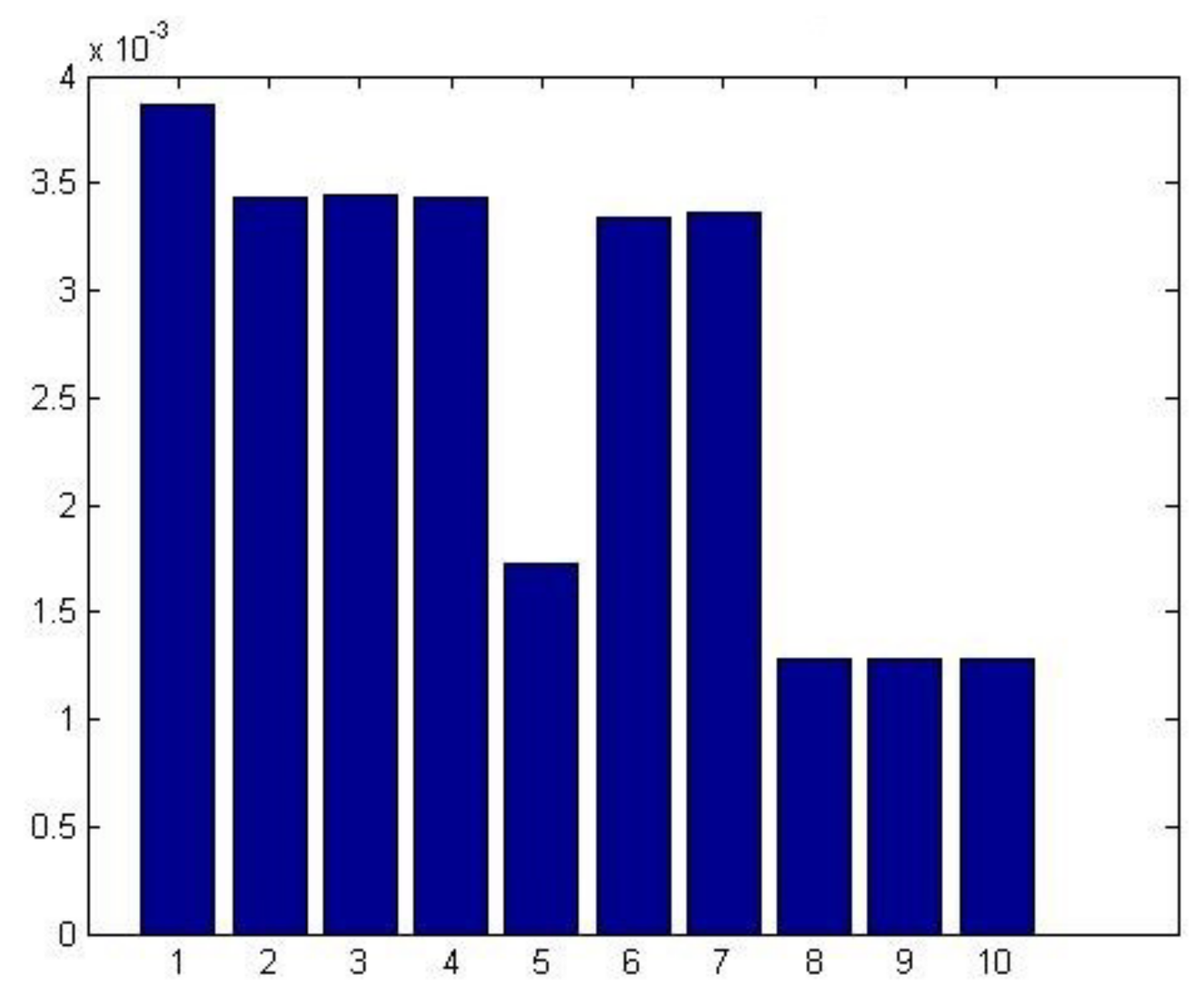

5.4. Error Comparison Results

Comparison Experiment 1: The algorithm best suited to truck proportion estimation was selected from the PCA, PLS and FDA models. Accuracy was evaluated by comparing the number of detected data faults with a manual traffic survey (466 pcu of traffic during the experiment, including 75 large vehicles, a large-vehicle proportion of 15%). The results are shown in Table 1.

Comparison Experiment 2: The algorithm best suited to identifying barrier impacts was selected from the PCA, PLS and FDA models by comparing their results on the simulated impact events. The results are shown in Table 2.

The results show that the PCA model is more suitable for truck proportion estimation, while the FDA model is more suitable for monitoring barrier impact events.

5.5. Application Effect Comparison

In 2021, we installed barrier monitoring devices on a highway section approximately 3 km long. The section starts at a system interchange and ends at a service interchange. The service interchange has a toll gate, with two ETC gantries upstream and two downstream that can be used to count traffic volume. A VMS upstream of the system interchange is used to post information. The experiment first extracted historical data from December 2020 to January 2021, as shown in Table 3. The average daily congestion index for this period was calculated, and Wang's method [2] was used to compute the hourly CO₂, CO, HC and NOx emissions from the congestion index.

In December 2021, we loaded the algorithm studied in this paper into the field computer of the barrier monitoring devices. When the barrier monitoring devices within 1 km detect 100 trucks passing within a 15 min period, the message “No overtaking trucks on the road ahead” is posted upstream of the system interchange. If this situation does not recur within 30 min, the message is cancelled automatically. This control policy operates between 6:00 and 21:00, because traffic flow is light at night and truck overtaking then does not reduce highway capacity.
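The deployed control rule can be sketched as a small state machine. Assumptions for illustration: detections arrive as ascending timestamps in seconds counted from midnight of the first day, the detections of the 1 km section are already merged, the 15 min window slides continuously, and the 30 min release timer restarts whenever the 100-truck condition is met again. The class and parameter names are hypothetical.

```python
from collections import deque
from typing import Iterable


class NoOvertakingSign:
    """Hypothetical VMS controller for the no-truck-overtaking message."""

    def __init__(self, window_s=900, trigger_count=100, release_s=1800,
                 active_hours=(6, 21)):
        self.window_s = window_s            # 15 min counting window
        self.trigger_count = trigger_count  # trucks needed within the window
        self.release_s = release_s          # cancel after 30 min without a trigger
        self.active_hours = active_hours    # policy runs from 6:00 to 21:00
        self.detections = deque()
        self.last_trigger = None
        self.sign_on = False

    def update(self, now_s: float, new_detections: Iterable[float]) -> bool:
        """Add new truck detections, then return whether the message is shown."""
        self.detections.extend(new_detections)
        # Drop detections that have left the sliding window.
        while self.detections and self.detections[0] <= now_s - self.window_s:
            self.detections.popleft()
        hour = int(now_s // 3600) % 24
        if not (self.active_hours[0] <= hour < self.active_hours[1]):
            self.sign_on = False            # policy disabled at night
        elif len(self.detections) >= self.trigger_count:
            self.sign_on = True
            self.last_trigger = now_s
        elif self.sign_on and now_s - self.last_trigger >= self.release_s:
            self.sign_on = False
        return self.sign_on


sign = NoOvertakingSign()
print(sign.update(26100, [25201 + 8 * i for i in range(100)]))  # 07:15, 100 trucks
```

Setting `release_s=0` instead reproduces the cancellation rule stated in the Introduction, where the message is withdrawn as soon as fewer than 100 trucks are counted in 15 min.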

Based on the statistical results of the ETC gantry data, the average daily traffic volume, truck volume, average daily congestion index and emissions for the same months in both years were compared. The results are shown in Table 3.

Comparing the traffic volumes, congestion indices and emissions for the same period in the two years shows that the reduction in emissions was not significant, whereas the average daily traffic volume increased by 12.7% and highway congestion decreased, despite a significant increase in the proportion of trucks. The small emission reduction may be due to the strong correlation between Zhao's method of calculating emissions and the congestion index.

6. Conclusions

The W-Beam barrier monitoring device is intended for real-time estimation of the truck ratio and real-time, accurate identification of traffic events in which vehicles hit the barrier. The monitoring results are shared with the highway operation management system and used to implement strategies that restrict truck overtaking, which can improve highway capacity, relieve congestion and detect traffic accidents in real time. However, when the sensor sensitivity is too low, many events go undetected even though the raw data contain little noise; when it is too high, the raw data contain a large amount of noise that obscures the useful information. A data-driven approach is therefore needed to process the data when the sensor sensitivity is increased.

We found the following. (1) The wavelet thresholding algorithm can effectively remove noise from the raw data, although the choice of threshold needs further optimization; in this paper, adjusting the threshold produced denoised data that support the subsequent diagnosis of data faults. (2) PCA, PLS, FDA and similar models have been widely used for process data monitoring, but they have not been studied for barrier monitoring. This paper selected these three representative models and tested them against the characteristics of the monitoring data. The experimental results show that the PCA model is better suited to estimating the truck proportion and the FDA model to accurately identifying collision events. Other suitable algorithms may nevertheless exist, and we will investigate them in future work. (3) In long-term application, adopting the proposed barrier monitoring device resulted in a 12.7% increase in highway capacity and slight reductions in the congestion index and emissions, despite an increase in the proportion of trucks. The data-driven processing method for barrier monitoring devices proposed in this paper provides an implementable solution for the sustainable development of highways.