Abstract

Traffic prediction is an important part of the Intelligent Transportation System (ITS) and has broad application prospects. However, traffic data are affected not only by time, but also by the traffic status of other nearby roads. They have complex temporal and spatial correlations. Developing a means for extracting specific features from them and effectively predicting traffic status such as road speed remains a huge challenge. Therefore, in order to reduce the speed prediction error and improve the prediction accuracy, this paper proposes a dual-GRU traffic speed prediction model based on neighborhood aggregation and the attention mechanism: NA-DGRU (Neighborhood aggregation and Attention mechanism–Dual GRU). NA-DGRU uses the neighborhood aggregation method to extract spatial features from the neighborhood space of the road, and it extracts the correlation between speed and time from the original features and neighborhood aggregation features through two GRUs, respectively. Finally, the attention model is introduced to collect and summarize the information of the road and its neighborhood in the global time to perform traffic prediction. In this paper, the prediction performance of NA-DGRU is tested on two real-world datasets, SZ-taxi and Los-loop. In the 15-, 30-, 45- and 60-min speed prediction results of NA-DGRU on the SZ-taxi dataset, the RMSE values were 4.0587, 4.0683, 4.0777 and 4.0851, respectively, and the MAE values were 2.7387, 2.728, 2.7393 and 2.7487; on the Los-loop dataset, the RMSE values for the speed prediction results were 5.1348, 6.1358, 6.7604 and 7.2776, respectively, and the MAE values were 3.0281, 3.6692, 4.0567 and 4.4256, respectively. On the SZ-taxi dataset, compared with other baseline methods, NA-DGRU demonstrated a maximum reduction in RMSE of 6.49% and a maximum reduction in MAE of 6.17%; on the Los-loop dataset, the maximum reduction in RMSE was 31.01%, and the maximum reduction in MAE reached 24.89%.

1. Introduction

The concept of the Intelligent Transportation System (ITS) represents the development direction of future transportation systems. Traffic safety, traffic congestion and traffic pollution are three major problems in the process of urban development. ITS can effectively use traffic resources, reduce traffic load, improve traffic efficiency, ensure traffic safety, and reduce traffic pollution, and has become an indispensable part of modern urban development and planning processes. Traffic forecasting is closely related to the development of ITS and is directly related to the service quality and operational efficiency of ITS. Traffic forecasting refers to the prediction of the traffic conditions in a future period on the basis of historical traffic road information, such as traffic flow, traffic speed and vehicle density. Although traffic data are considered to be time series data, they have complex spatio-temporal correlations, which are affected not only by time, but also by the traffic status of other nearby roads. Therefore, in order to improve the accuracy of traffic forecasting, it is necessary to consider both the temporal and spatial features of the road.

Existing traffic prediction methods can be divided into classical and deep learning methods. Classical methods can be subdivided into statistical-based methods and traditional machine learning methods [1]. Methods such as the historical average model (HA) [2], vector autoregressive (VAR) [3] and autoregressive integrated moving average (ARIMA) [4] are the most representative statistical methods. However, these methods require the data to meet certain conditions and are not suitable for complex traffic data. Traditional machine learning methods, such as least squares support vector machine (LSSVM) [5,6], support vector regression (SVR) [7,8], Bayesian network [9] and random forest (RFR) [10], are able to use historical data for learning and capturing relationships between data to achieve successful traffic prediction.

In recent years, with the rapid development of deep learning, various deep neural network methods have been applied to traffic prediction. The artificial neural network [11] is a computational model that imitates the structure and function of biological neural networks, and can model data. For example, Olayode et al. [12,13] used artificial neural network models to predict traffic flow at intersections. The extreme learning machine [14,15] has the advantages of high learning efficiency and strong generalization ability, so Wei et al. [16] used the extreme learning machine to predict short-term traffic flow. The adaptive neuro-fuzzy inference system (ANFIS) [17] combines the learning mechanism of neural networks and the linguistic reasoning ability of fuzzy systems, and is often applied to traffic prediction. For example, Olayode et al. [18] used ANFIS to predict the traffic flow of expressways. Recurrent neural networks (RNNs) can handle time series well, so many researchers have used RNNs to predict traffic. Li et al. [19] used an RNN for short-term traffic prediction. Pan et al. [20] used an RNN-based encoder–decoder model for traffic flow prediction. Because RNNs are less effective when dealing with long time series, improved RNN variants, such as long short-term memory (LSTM) [21] and the gated recurrent unit (GRU) [22], have also been applied in complex traffic data analysis. For example, Cui et al. [23] built a model of the correlation between speed and time by stacking unidirectional and bidirectional LSTMs to extract bidirectional time-dependent features. In addition, Fang et al. [24] and Yao et al. [25] captured temporal trends using extended causal convolution. However, these methods only consider changes in traffic state over time, ignoring the spatial characteristics of the road under consideration. Therefore, some researchers have used convolutional neural networks (CNNs) to extract spatial features. The work of Yu et al. [26] is representative in the field of transportation research, and uses pure convolution to extract spatial features from sequences of graph structures. Ma et al. [27] converted the data containing time and space into an image through a matrix, and extracted features from the image through a CNN. In [28,29], the CNN and RNN models were combined, using a CNN to extract spatial features and an RNN to further extract temporal features. However, these models do not consider the impact of feature changes over the global time period on the prediction results, and the ability of CNNs to extract spatial information from urban traffic networks is not as good as that of graph neural networks such as GCN [30] and GraphSage [31]. The research of Yu [32], Zhao et al. [33] and Bai et al. [34] showed that graph neural networks can extract the spatial features of roads in the traffic network and improve the accuracy of traffic prediction.

In summary, the problems faced when predicting traffic speed using traditional methods are as follows:

- (1) The traffic speed at each historical moment has a complex impact on the speed at future moments.

- (2) Traditional methods consider only the features of the road itself, that is, the temporal features; they ignore the spatial relationships between roads and seldom consider the spatial features of the road.

Therefore, in order to solve the above problems, this paper proposes a dual-GRU traffic speed prediction model based on neighborhood aggregation and the attention mechanism—NA-DGRU. NA-DGRU uses the idea of node neighborhood aggregation in GraphSage to extract spatial features for each road, and it uses two GRUs to model temporal features and spatial features. All hidden states output by the two GRUs are input into the attention model, and the attention model performs weighted summation on all hidden states to obtain the output of the attention model. Finally, the output of the attention model is input into the fully connected layer to obtain the final speed prediction value. Therefore, the speed prediction value obtained by NA-DGRU contains the temporal features and spatial features of the road at each historical moment. The main contributions of this paper are as follows:

- (1) NA-DGRU integrates neighborhood aggregation, GRU and attention models, which can effectively extract spatial and temporal feature information from road and urban networks and establish speed- and time-dependent models.

- (2) Using the attention mechanism to fuse the outputs of two GRUs, the temporal and spatial features of road historical moments are adjusted and summarized, and traffic predictions at various time lengths are implemented.

- (3) Tested on two real datasets and compared with other baseline methods, the results show that NA-DGRU can effectively reduce the prediction error.

2. Research Methods

2.1. Problem Definition

This study uses neighborhood information of urban roads and historical traffic speeds to predict future traffic speeds.

Definition 1.

Urban road topological graph G: The urban road topological graph can be expressed as G = {V,E}, where V = {v1,v2,v3,…,vN} is the set of N roads and E is the set of connected edges between roads. For a given road graph G, the connection relationship between the contained roads can be described by the adjacency matrix A. Each row and column in the adjacency matrix A represents a road, and if the value of the element in row i and column j of A is zero, it means that the i-th road and the j-th road are not connected. If the value is not zero, the two roads are connected: in the unweighted road graph the value is 1, and in the weighted road graph the magnitude of the value represents the weight of the connection between the i-th road and the j-th road. In this paper, the default value of the elements in row i and column i of A is 0. For a particular road vi, all roads connected to vi constitute the neighborhood of vi.

Definition 2.

Feature matrix $X \in \mathbb{R}^{N \times P}$: If each road in the road graph G is abstracted as a node, the traffic speed on the road can be regarded as the attribute of the node, which can be represented by the feature matrix $X \in \mathbb{R}^{N \times P}$, where N is the number of roads, that is, the number of nodes, and P is the length of the historical time series, that is, the number of features of the nodes. The traffic speed at the t-th moment on all roads is denoted by $X_t$.

In this study, the neighborhood aggregation feature of the road in the urban road topology graph G and the traffic speed feature of the road are used to learn the mapping function f, and this function is used to predict the traffic speed on the road at time T in the future. The calculation formula is shown in Formula (1):

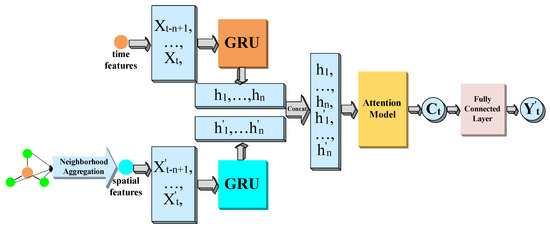

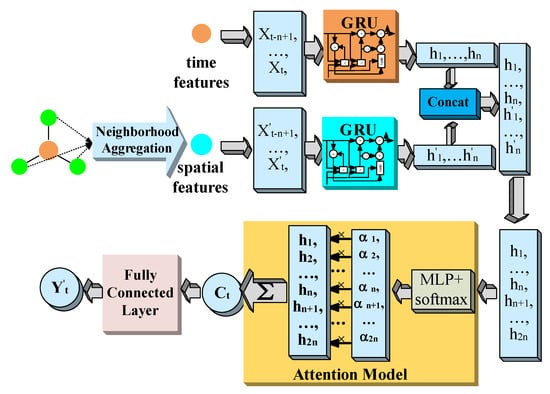

where T is the length of the time series to be predicted, n is the length of the given historical time series, $X_t$ is the original feature of all roads at time t, that is, the temporal feature, and $X'_t$ is the neighborhood aggregation feature of all roads at time t, that is, the corresponding spatial feature of all roads at time t. The prediction process of the model in this paper is shown in Figure 1. The temporal and spatial features of the road are sent to two GRUs, all the hidden states output from the two GRUs are input to the attention model, and the output of the attention model goes through the fully connected layer to give the final prediction output of the model.

Figure 1.

NA-DGRU prediction flowchart.

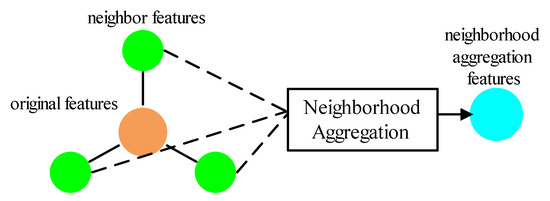

2.2. Neighborhood Aggregation

Neighborhood aggregation refers to the use of the features of the neighbor nodes of the target node to generate the aggregated features of the target node. Neighborhood aggregation can effectively extract the spatial information in the graph structure. A schematic diagram of neighborhood aggregation is shown in Figure 2. One of the key reasons why graph neural networks such as GCN [30], GAT [35] and GraphSage [31] are able to successfully complete various tasks on the graph is that they can effectively utilize the neighborhood information of each node. The features of adjacent nodes are similar, and the node neighborhood can reflect the homogeneity between a node and its neighbor nodes, that is, the trend of sharing features between nodes [36]. Through neighborhood aggregation, the traffic information contained in the neighborhood space, that is, the spatial features of the road, can be extracted.

Figure 2.

Schematic diagram of neighborhood aggregation.

This study uses mean aggregation on the neighborhood of each road to extract spatial features. Mean aggregation is adaptive to the number of roads in the neighborhood, that is, no matter how many roads are in the neighborhood, mean aggregation can ensure that the feature dimension of the aggregation output is consistent with the feature dimension of the original road. Let the feature of any road be $r_i$, and let the M roads in its neighborhood have features $r_{i1}, r_{i2}, r_{i3}, \ldots, r_{iM}$; $r_{im}(j)$ is the value of $r_{im}$ at time j, $r_i'$ is the neighborhood aggregation feature of $r_i$, and MEAN is the mean aggregation function. The operation of neighborhood mean aggregation can then be expressed as shown in Formula (2):

$$r_i' = \mathrm{MEAN}\left(r_{i1}, r_{i2}, r_{i3}, \ldots, r_{iM}\right) \tag{2}$$

Neighborhood mean aggregation calculates the mean value of $r_{i1}, r_{i2}, r_{i3}, \ldots, r_{iM}$ at each moment to obtain the neighborhood aggregation feature $r_i'$ of $r_i$. The calculation formula of mean aggregation is shown in Formula (3):

$$r_i' = \frac{1}{M}\sum_{m=1}^{M} r_{im} \tag{3}$$

The value of the mean aggregation feature $r_i'$ at time j can be calculated by Formula (4):

$$r_i'(j) = \frac{1}{M}\sum_{m=1}^{M} r_{im}(j) \tag{4}$$

The adjacency matrix reflects the connection relationship between roads, and the adjacency matrix can be used to obtain the neighborhood of each road. For all roads in the road network, the neighborhood mean aggregation method is used to extract spatial features, and the neighborhood aggregation feature matrix of the road can be obtained. The neighborhood aggregation features of all roads at time t are denoted by $X'_t$.

From Formulas (3) and (4), it can be seen that the neighborhood aggregation feature ri’ is not only related to the number of roads in the neighborhood, but also covers the features of all roads in the neighborhood space of ri. Therefore, in this study, the original feature of ri is regarded as the temporal feature, and the neighborhood aggregation feature ri’ is regarded as the spatial feature of ri.
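As an illustration of the neighborhood mean aggregation described above, the following is a minimal sketch in plain Python. The function name `neighborhood_mean`, the toy adjacency matrix and the speed values are hypothetical, and two details are assumptions rather than statements from the paper: edge weights are used only to decide whether two roads are connected, and a road with no neighbors receives an all-zero spatial feature.

```python
from typing import List

Matrix = List[List[float]]


def neighborhood_mean(adj: Matrix, features: Matrix) -> Matrix:
    """Mean-aggregate the features of each road's neighbors.

    adj:      N x N adjacency matrix (nonzero entry = connected).
    features: N x P matrix, row i is the speed series of road i.
    Returns an N x P matrix whose row i is the element-wise mean of the
    series of all roads in the neighborhood of road i.
    """
    n_steps = len(features[0])
    aggregated = []
    for i, row in enumerate(adj):
        # The neighborhood of road i: every other road with a nonzero entry.
        neighbors = [j for j, w in enumerate(row) if w != 0 and j != i]
        if not neighbors:
            # Assumption: an isolated road gets an all-zero spatial feature.
            aggregated.append([0.0] * n_steps)
            continue
        aggregated.append([
            sum(features[j][t] for j in neighbors) / len(neighbors)
            for t in range(n_steps)
        ])
    return aggregated


# Hypothetical 3-road graph: road 0 is connected to roads 1 and 2.
A = [[0, 1, 1],
     [1, 0, 0],
     [1, 0, 0]]
X = [[30.0, 32.0],   # road 0, two time steps
     [20.0, 24.0],   # road 1
     [40.0, 36.0]]   # road 2
X_agg = neighborhood_mean(A, X)
print(X_agg[0])  # [30.0, 30.0]
```

The output row for road 0 has the same length as its original series regardless of how many neighbors it has, which is the dimension-preserving property the text attributes to mean aggregation.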

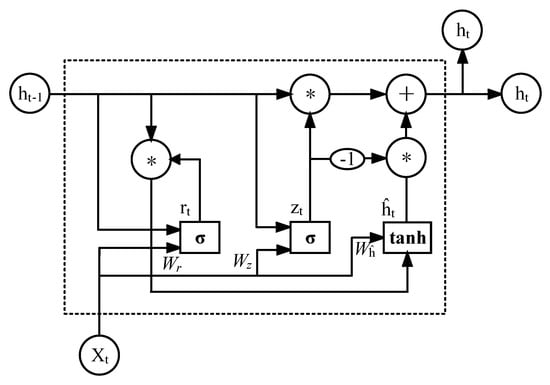

2.3. GRU Model

Traffic data are essentially temporal data. The traffic speed on the road changes with time, and analyzing the relationship between time and speed is a necessary step in traffic forecasting. There are a large number of loops in an RNN, such that information can be retained for a long time when it is transmitted in the network; therefore, an RNN has a better memory ability than ordinary neural networks. However, RNNs suffer from problems such as information transmission attenuation, vanishing gradients and exploding gradients, meaning that they are still greatly limited in dealing with long-term time series. LSTM [21], as a variant of RNN, can handle long-term time series well by designing special memory units. GRU [22] is an RNN variant that improves on LSTM by offering faster convergence while maintaining an accuracy close to that of the LSTM. GRU is characterized by a relatively simple model structure, fewer parameters, and easier calculation and implementation. Using two GRU models therefore does not make the structure of the model in this paper too complicated, or the calculation too cumbersome.

The model in this paper uses GRU to deal with the correlation between traffic speed and time on the road. The structure of GRU is shown in Figure 3, and the GRU calculation process is shown in Formulas (5)–(8), where $z_t$ is the update gate, with a value range of 0~1. The closer $z_t$ is to 1, the more the model will remember past information; the closer $z_t$ is to 0, the more past information is forgotten. $X_t$ is the speed of all roads at time t, and $h_{t-1}$ is the hidden state at time t − 1. $r_t$ is the reset gate, the value range of which is 0~1. The closer $r_t$ is to 0, the more the model will discard past hidden information; the closer $r_t$ is to 1, the more the model will add past information to the current information. $\tilde{h}_t$ is a candidate state, which contains the information of $X_t$ and selectively retains the information of $h_{t-1}$. $h_t$ is the output state at the current moment, calculated from $z_t$, $h_{t-1}$ and $\tilde{h}_t$. $\sigma$ is the sigmoid function, $[h_{t-1}, X_t]$ denotes the concatenation of $h_{t-1}$ and $X_t$, $\odot$ refers to the element-wise (dot) product, $\cdot$ refers to the matrix product, and $W_z$, $W_r$, $W_h$, $b_z$, $b_r$ and $b_h$ are learnable parameters. In the experiment, the dimension of $X_t$ is N × 1, the dimensions of the gates, the candidate state and the hidden states are all N × u, and the dimensions of $W_z$, $W_r$ and $W_h$ are all (u + 1) × u, where N is the number of all roads in the road network, and u is the number of hidden units used by the GRU.
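Formulas (5)–(8) are not reproduced in the text above. As a hedged reconstruction, a standard GRU formulation that matches the description (an update gate whose value near 1 retains past information, and weights of size (u + 1) × u applied to the concatenation of the hidden state and the input) could be written as follows. Here $z_t$, $r_t$, $\tilde{h}_t$ and $h_t$ stand for the update gate, reset gate, candidate state and output state; these symbol names, the order of concatenation and the use of tanh for the candidate state are assumptions, not taken from the paper.

```latex
\begin{aligned}
z_t &= \sigma\left([h_{t-1}, X_t] \cdot W_z + b_z\right) \\
r_t &= \sigma\left([h_{t-1}, X_t] \cdot W_r + b_r\right) \\
\tilde{h}_t &= \tanh\left([r_t \odot h_{t-1}, X_t] \cdot W_h + b_h\right) \\
h_t &= z_t \odot h_{t-1} + (1 - z_t) \odot \tilde{h}_t
\end{aligned}
```

With $h_{t-1}$ of size N × u and $X_t$ of size N × 1, the concatenation has size N × (u + 1), so multiplying by a (u + 1) × u weight matrix yields the stated N × u gates and states.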

Figure 3.

GRU structure diagram.

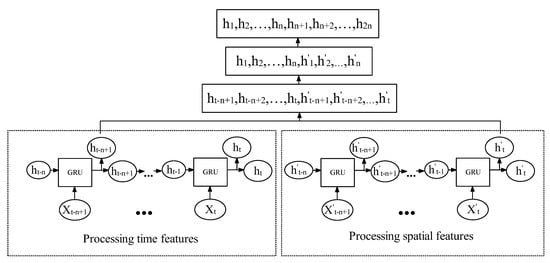

The model in this paper uses two GRUs to process the temporal and spatial features of the road, respectively, and to output the hidden state at each moment. Figure 4 shows the process by which the two GRUs process the temporal and spatial features of n historical moments. Each time the temporal or spatial feature of a historical moment is fed into its GRU, a hidden state is output. This hidden state is not only used in the calculation at the next moment but is also retained and input into the attention model. In the experiments, the number of hidden units and the dimensions of the learnable parameters are the same for both GRUs.
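The two-GRU flow described above can be sketched for a single road in plain Python. This is a minimal illustration, not the authors' implementation: the class name `GRU`, the random initialization, the tanh candidate activation and all numeric values are assumptions. Each GRU keeps every hidden state it produces, so n historical moments yield 2n hidden states in total.

```python
import math
import random
from typing import List

Vector = List[float]
Matrix = List[List[float]]


def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))


def affine(v: Vector, W: Matrix, b: Vector) -> Vector:
    """Row vector v (length k) times W (k x u), plus bias b (length u)."""
    return [sum(v[k] * W[k][j] for k in range(len(v))) + b[j]
            for j in range(len(b))]


class GRU:
    """Single-road GRU with u hidden units and a scalar input per time step."""

    def __init__(self, u: int, rng: random.Random) -> None:
        def weights() -> Matrix:
            # (u + 1) x u: u rows for the hidden state, 1 row for the input.
            return [[rng.uniform(-0.1, 0.1) for _ in range(u)]
                    for _ in range(u + 1)]
        self.u = u
        self.W_z, self.W_r, self.W_h = weights(), weights(), weights()
        self.b_z, self.b_r, self.b_h = [0.0] * u, [0.0] * u, [0.0] * u

    def step(self, x: float, h_prev: Vector) -> Vector:
        concat = h_prev + [x]
        z = [sigmoid(a) for a in affine(concat, self.W_z, self.b_z)]
        r = [sigmoid(a) for a in affine(concat, self.W_r, self.b_r)]
        gated = [ri * hi for ri, hi in zip(r, h_prev)] + [x]
        cand = [math.tanh(a) for a in affine(gated, self.W_h, self.b_h)]
        # z close to 1 keeps the previous state, z close to 0 takes the candidate.
        return [zi * hp + (1.0 - zi) * ci
                for zi, hp, ci in zip(z, h_prev, cand)]

    def run(self, series: Vector) -> List[Vector]:
        """Return the hidden state after every time step (all are kept)."""
        h = [0.0] * self.u
        states = []
        for x in series:
            h = self.step(x, h)
            states.append(h)
        return states


rng = random.Random(0)
temporal_gru, spatial_gru = GRU(4, rng), GRU(4, rng)
road_speeds = [0.50, 0.55, 0.60, 0.58]      # hypothetical normalized speeds
neighbor_means = [0.40, 0.45, 0.52, 0.50]   # hypothetical aggregated speeds
H = temporal_gru.run(road_speeds) + spatial_gru.run(neighbor_means)
print(len(H), len(H[0]))  # 8 4
```

The list `H` plays the role of the collection of all hidden states that is passed to the attention model.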

Figure 4.

Flow chart of two GRUs, processing temporal features and spatial features respectively.

2.4. Attention Model

The attention model is a neural network based on the encoding–decoding model. Originally applied to machine translation [37,38], attention models have now become an important component of neural networks, and are widely used in natural language processing [39], computer vision [40] and recommender systems [41], among other fields. The attention model assigns weights to information according to its importance, so that more important information is assigned a larger weight. Therefore, this paper introduces an attention model to assign weights to all hidden states output by the two GRUs.

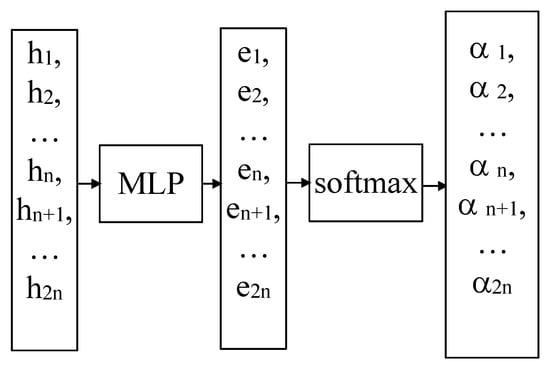

Let the hidden states of the original road features at each moment after GRU processing be $h_1, h_2, \ldots, h_n$, and the hidden states of the neighborhood aggregation features at each moment after GRU processing be $h'_1, h'_2, \ldots, h'_n$, where n is the length of the time series. If all the hidden states are denoted by H, then $H = \{h_1, h_2, \ldots, h_n, h'_1, h'_2, \ldots, h'_n\}$. For convenience in the subsequent formulas, let $H = \{H_1, H_2, \ldots, H_{2n}\}$. The process of calculating the weights of the attention model is shown in Figure 5.

Figure 5.

Calculation process of attention weight.

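To obtain the weight $\alpha_i$ (i = 1, 2, 3,…, 2n) for each hidden state, first compute the score $e_i$ (i = 1, 2, 3,…, 2n) for each hidden state $H_i$ (i = 1, 2, 3,…, 2n) using a multilayer perceptron [34] with two hidden layers:

$$e_i = w_{(2)} \cdot \left(w_{(1)} \cdot H_i + b_{(1)}\right) + b_{(2)} \tag{9}$$

In the formula, $i = 1, 2, 3, \ldots, 2n$, $\cdot$ represents the matrix dot product, and $w_{(1)}$, $b_{(1)}$ and $w_{(2)}$, $b_{(2)}$ are the learnable parameters of the two hidden layers.

Then, the softmax function is used to calculate the weights $\alpha_i$:

$$\alpha_i = \frac{\exp(e_i)}{\sum_{k=1}^{2n} \exp(e_k)} \tag{10}$$

Finally, the weights are multiplied by the corresponding hidden states and then summed to obtain the final output $C$ of the attention model:

$$C = \sum_{i=1}^{2n} \alpha_i H_i \tag{11}$$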
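The three steps described in this section (score each hidden state with a two-layer perceptron, normalize the scores with softmax, take the weighted sum) can be sketched in plain Python as follows. The function names `mlp_score`, `softmax` and `attend`, the absence of a nonlinearity between the two layers, and the toy parameter values are all assumptions made for illustration.

```python
import math
from typing import List, Tuple

Vector = List[float]
Matrix = List[List[float]]


def mlp_score(h: Vector, W1: Matrix, b1: Vector, w2: Vector, b2: float) -> float:
    """Two-layer perceptron mapping one hidden state to a scalar score."""
    hidden = [sum(h[k] * W1[k][j] for k in range(len(h))) + b1[j]
              for j in range(len(b1))]
    return sum(hj * wj for hj, wj in zip(hidden, w2)) + b2


def softmax(scores: Vector) -> Vector:
    peak = max(scores)  # subtracting the maximum avoids overflow in exp
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attend(H: List[Vector], W1: Matrix, b1: Vector,
           w2: Vector, b2: float) -> Tuple[Vector, Vector]:
    """Return (weights, context): softmax weights and the weighted sum of H."""
    weights = softmax([mlp_score(h, W1, b1, w2, b2) for h in H])
    context = [sum(a * h[d] for a, h in zip(weights, H))
               for d in range(len(H[0]))]
    return weights, context


# Hypothetical example: two hidden states that receive equal scores.
H = [[1.0, 0.0], [0.0, 1.0]]
W1 = [[1.0, 0.0], [0.0, 1.0]]
b1 = [0.0, 0.0]
w2 = [1.0, 1.0]
weights, context = attend(H, W1, b1, w2, 0.0)
print(weights, context)  # [0.5, 0.5] [0.5, 0.5]
```

Because the weights sum to 1, the context vector is a convex combination of the hidden states; a hidden state with a higher score contributes more to it.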

2.5. NA-DGRU Model

NA-DGRU uses two GRUs and an attention model, with an overall structure as shown in Figure 6. NA-DGRU first performs neighborhood aggregation on the temporal features $X_{t-n+1}, \ldots, X_t$ of the n input historical moments, and obtains the corresponding spatial features $X'_{t-n+1}, \ldots, X'_t$ of the n historical moments; the n temporal features and n spatial features are then input into the two GRUs, respectively. All hidden states output by the two GRUs are concatenated and fed into the attention model. The attention model first uses a multi-layer perceptron to calculate the score of each hidden state, and then uses the softmax function to calculate the attention weight of each hidden state. The output of the attention model is obtained by multiplying each weight by the corresponding hidden state and summing; finally, this output is input into the fully connected layer to obtain the final predicted value of the model.

Figure 6.

Overall structure diagram of NA-DGRU.

NA-DGRU uses the L2 norm of the difference between the actual speed value $Y$ and the predicted value $\hat{Y}$ to calculate the loss function L:

$$L = \lVert Y - \hat{Y} \rVert_2 \tag{12}$$

NA-DGRU uses the Adam optimizer to optimize the model to minimize the value of L as much as possible.

This paper proposes an NA-DGRU model to perform traffic prediction. The NA-DGRU model extracts spatial features through neighborhood mean aggregation, and it uses two GRUs to capture the original road features and the relationship between aggregated features and time; finally, the attention model is used to fuse the output of GRUs to adjust the importance of features at different time points and capture the changing trend of the road and its neighborhood at the global moment to achieve traffic speed prediction.

3. Experiments

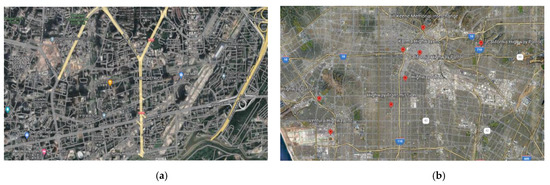

3.1. Data Description

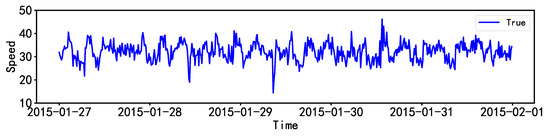

In order to evaluate the performance of the model, this paper uses two real traffic datasets, SZ-taxi and Los-loop, for the experiments. The SZ-taxi and Los-loop datasets have been used in previous studies [33,34,42,43,44,45]. The datasets can be downloaded from https://github.com/lehaifeng/T-GCN/tree/master/data. Each dataset consists of a feature matrix of the road and an adjacency matrix, where the feature matrix reflects the change in speed on the road with time and the adjacency matrix reflects the connectivity between the roads. SZ-taxi and Los-loop come from two different countries, China and the United States, and represent different road types and graph types. SZ-taxi comes from an urban traffic system, where the adjacency matrix describes an unweighted graph, and Los-loop comes from a highway traffic system, where the adjacency matrix describes a weighted graph. The data in SZ-taxi were sourced from taxi trajectories on 156 roads in Luohu District, Shenzhen, China, from 1 January to 31 January 2015; the size of the adjacency matrix is 156 × 156, and the speed on the road is summarized every 15 min. The data in Los-loop were sourced from 207 sensors on the highway in Los Angeles, USA, from 1 March to 7 March 2012; the size of the adjacency matrix is 207 × 207, and the speed is summarized every 5 min. Table 1 shows the statistics of the two datasets [44], and Figure 7 shows the geographic locations of Shenzhen and Los Angeles. Figure 8 provides a visualization of the speed on a certain road from 27 January 2015 to 31 January 2015 obtained from SZ-taxi. Figure 9 provides a visualization of the speed of a loop detector obtained from the Los-loop dataset over the course of a day. In this experiment, 80% of the data are used as the training set, and the other 20% of the data comprise the test set [33,34,42,43,44,45].

Table 1.

Statistics of the SZ-taxi and Los-loop datasets.

Figure 7.

Geographical location of Shenzhen and Los Angeles: (a) Luohu District, Shenzhen; (b) Los Angeles.

Figure 8.

The speed on a certain road on SZ-taxi from 27 January 2015 to 31 January 2015.

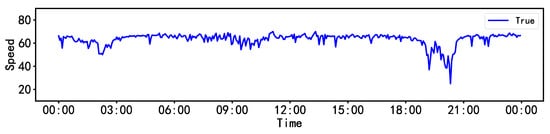

Figure 9.

The speed of a loop detector on the Los-loop dataset in one day.

3.2. Evaluation Metrics

In this study, three metrics were used to evaluate the prediction results of the NA-DGRU model: root mean squared error (RMSE), mean absolute error (MAE) and Accuracy, which are calculated as shown in Equations (13)–(15), respectively, where M is the number of time steps and N is the number of nodes, that is, the number of roads; $y_i^j$ and $\hat{y}_i^j$ denote the true and predicted values at the j-th moment on the i-th road, respectively; and $Y$ and $\hat{Y}$ are the sets of $y_i^j$ and $\hat{y}_i^j$, respectively.

- (1) Root Mean Squared Error (RMSE).

RMSE is the square root of the average squared error between predicted and true values:

$$\mathrm{RMSE} = \sqrt{\frac{1}{MN}\sum_{j=1}^{M}\sum_{i=1}^{N}\left(y_i^j - \hat{y}_i^j\right)^2} \tag{13}$$

- (2) Mean Absolute Error (MAE).

MAE is the average of the absolute values of the error between the predicted and true values:

$$\mathrm{MAE} = \frac{1}{MN}\sum_{j=1}^{M}\sum_{i=1}^{N}\left|y_i^j - \hat{y}_i^j\right| \tag{14}$$

- (3) Accuracy.

Accuracy is calculated using the F-norm (Frobenius norm), which is the square root of the sum of the squares of the absolute values of all elements in the matrix:

$$\mathrm{Accuracy} = 1 - \frac{\lVert Y - \hat{Y} \rVert_F}{\lVert Y \rVert_F} \tag{15}$$

RMSE is often used as a standard for measuring the prediction results of machine learning models, and MAE can well reflect the actual situation of prediction error. Accuracy is obtained by calculating the F norm, which can reflect the degree of difference between the predicted value and the real value. RMSE and MAE describe the error value. The smaller the value, the better the prediction effect of the model; Accuracy describes the prediction accuracy. The larger the value, the better the prediction effect of the model.
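The three metrics can be computed with a few lines of plain Python. The sketch below assumes that Accuracy takes the form 1 − ‖Y − Ŷ‖_F / ‖Y‖_F, the F-norm-based definition used in the T-GCN line of work that this paper follows; the function names and the toy values are hypothetical.

```python
import math
from typing import List, Tuple

Matrix = List[List[float]]


def _pairs(y: Matrix, y_hat: Matrix) -> List[Tuple[float, float]]:
    """Flatten two equally shaped matrices into (true, predicted) pairs."""
    return [(a, b) for row, row_hat in zip(y, y_hat)
            for a, b in zip(row, row_hat)]


def rmse(y: Matrix, y_hat: Matrix) -> float:
    pairs = _pairs(y, y_hat)
    return math.sqrt(sum((a - b) ** 2 for a, b in pairs) / len(pairs))


def mae(y: Matrix, y_hat: Matrix) -> float:
    pairs = _pairs(y, y_hat)
    return sum(abs(a - b) for a, b in pairs) / len(pairs)


def frobenius(m: Matrix) -> float:
    """Square root of the sum of squared entries."""
    return math.sqrt(sum(v * v for row in m for v in row))


def accuracy(y: Matrix, y_hat: Matrix) -> float:
    # Assumed form: one minus the relative Frobenius-norm error.
    diff = [[a - b for a, b in zip(row, row_hat)]
            for row, row_hat in zip(y, y_hat)]
    return 1.0 - frobenius(diff) / frobenius(y)


# Hypothetical true and predicted speeds for one road at two moments.
Y = [[3.0, 4.0]]
Y_hat = [[3.0, 1.0]]
print(mae(Y, Y_hat))  # 1.5
print(rmse(Y, Y_hat), accuracy(Y, Y_hat))
```

In this toy case the single error of 3 dominates RMSE more than MAE, which illustrates why RMSE is more sensitive to large individual errors.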

3.3. Experiment Settings

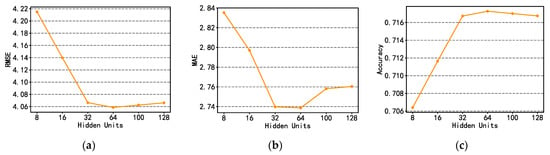

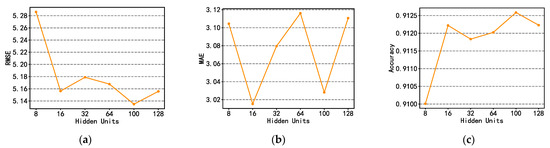

Following previous studies [33,34,42,43,44,45], this paper uses the historical traffic speed of the previous 60 min as input to predict the traffic speed for the next 15, 30, 45 and 60 min. On SZ-taxi, the values of 4 historical moments are used as input; on Los-loop, 12 historical moments are used as input. In the experiment, the speed data input to NA-DGRU are normalized to [0, 1]. The learning rate of the NA-DGRU model is set to 0.001, the number of training epochs is 2000, and the batch size is 32. In this paper, different numbers of GRU hidden units are used to predict the speed of the next 15 min on the two datasets, and the number of GRU hidden units used in subsequent experiments is selected according to the prediction results. Figure 10 and Figure 11 show the impact of different numbers of hidden units on the evaluation metrics on the SZ-taxi and Los-loop datasets, respectively. The horizontal axis indicates the number of hidden units, and the vertical axis indicates the value of the different evaluation metrics.
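The input setup described above (60 min of history, a fixed prediction horizon, normalization to [0, 1] and an 80/20 split) can be illustrated with a short plain-Python sketch. The helper names, the choice of dividing by the maximum to normalize, the chronological split and the toy series are assumptions; the paper states only the target range, the split ratio and the window lengths.

```python
from typing import List, Tuple

Sample = Tuple[List[float], List[float]]


def steps(minutes: int, interval_minutes: int) -> int:
    """Convert a duration to a number of sampling intervals."""
    return minutes // interval_minutes


def normalize(series: List[float]) -> List[float]:
    # Assumption: scale into [0, 1] by dividing by the maximum speed.
    peak = max(series)
    return [v / peak for v in series]


def make_samples(series: List[float], n_hist: int, horizon: int) -> List[Sample]:
    """Slide a window over the series: n_hist inputs, then horizon targets."""
    samples = []
    for start in range(len(series) - n_hist - horizon + 1):
        history = series[start:start + n_hist]
        target = series[start + n_hist:start + n_hist + horizon]
        samples.append((history, target))
    return samples


# Hypothetical single-road series with 30 observations at 15-min intervals.
series = normalize([float(v) for v in range(1, 31)])
split = len(series) * 8 // 10            # first 80% for training
train, test = series[:split], series[split:]
n_hist = steps(60, 15)                   # 4 historical moments (SZ-taxi setting)
horizon = steps(15, 15)                  # 15-min prediction = 1 step
train_samples = make_samples(train, n_hist, horizon)
test_samples = make_samples(test, n_hist, horizon)
print(len(train_samples), len(test_samples))  # 20 2
```

With the 5-min interval of Los-loop, the same helper gives `steps(60, 5) == 12` historical moments and `steps(15, 5) == 3` steps for a 15-min prediction.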

Figure 10.

The influence of different numbers of hidden units on RMSE, MAE and Accuracy on the SZ-taxi dataset. (a) The influence of different numbers of hidden units on RMSE. (b) The influence of different numbers of hidden units on MAE. (c) The influence of different numbers of hidden units on Accuracy.

Figure 11.

The influence of different numbers of hidden units on RMSE, MAE and Accuracy on the Los-loop dataset. (a) The influence of different numbers of hidden units on RMSE. (b) The influence of different numbers of hidden units on MAE. (c) The influence of different numbers of hidden units on Accuracy.

From Figure 10a–c, it can be seen that, on the SZ-taxi dataset, the values of RMSE and MAE are the smallest and Accuracy is the largest when the number of hidden units is 64. Therefore, the number of hidden units for each GRU in the NA-DGRU model is chosen to be 64 for the experiments on SZ-taxi. As seen in Figure 11a–c, on the Los-loop dataset, the value of RMSE is the smallest and the value of Accuracy is the largest when the number of hidden units is 100, whereas the value of MAE is the smallest when the number of hidden units is 16. Therefore, on the Los-loop dataset, NA-DGRU is tested with both 16 and 100 hidden units per GRU.

This paper compares NA-DGRU with the following methods:

- (1) Historical Average model (HA) [2]: HA uses the average value of traffic speed at historical moments as a prediction value for future traffic speed.

- (2) Support Vector Regression model (SVR) [46]: SVR uses a linear kernel function to predict traffic speeds at future moments.

- (3) Gated Recurrent Unit model (GRU) [22]: Uses a GRU for prediction.

- (4) Temporal Graph Convolutional Network (T-GCN) [33]: T-GCN uses a two-layer GCN and a GRU for traffic prediction.

- (5) Attention Temporal Graph Convolutional Network (A3T-GCN) [34]: A3T-GCN adds an attention model after T-GCN for traffic prediction.

The SVR model uses a linear kernel function with 20,000 iterations. Following [33,34], the learning rate, number of epochs and batch size of GRU, T-GCN and A3T-GCN are 0.001, 2000 and 32, respectively. The number of GRU hidden units of GRU, T-GCN and A3T-GCN is the same as that of NA-DGRU, namely 64 on SZ-taxi and 100 on Los-loop.

3.4. Experimental Results and Analysis

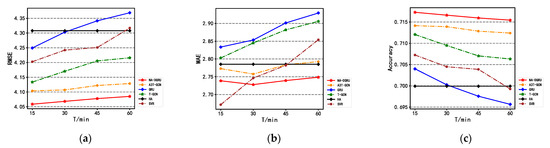

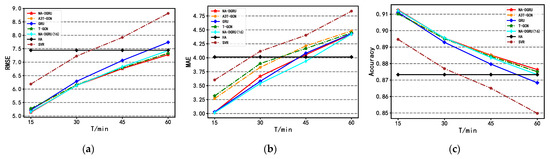

Table 2 shows the test results obtained using different methods on the SZ-taxi dataset at 15, 30, 45 and 60 min, where the best results are indicated in bold. Figure 12 shows the test result plots obtained using different methods on the SZ-taxi dataset at each moment.

Table 2.

Test results on the SZ-taxi dataset.

Figure 12.

The test result plots of different methods on the SZ-taxi dataset at each moment. (a) RMSE plots of different methods. (b) MAE plots of different methods. (c) Accuracy plots of different methods.

As can be seen from the data in Table 2, for 15-min traffic prediction on the SZ-taxi dataset, NA-DGRU performs best in terms of RMSE and Accuracy. Furthermore, NA-DGRU achieves the best results on all evaluation metrics for 30, 45 and 60 min traffic predictions. The optimization ratio of NA-DGRU at each moment is shown in Table 3, in comparison with other methods. Compared with GRU, NA-DGRU is able to reduce the RMSE by 4.49%, 5.45%, 6.07% and 6.49% for the 15 min, 30 min, 45 min and 60 min predictions, respectively.

Table 3.

Optimization ratio of NA-DGRU at each moment compared with other models on the SZ-taxi dataset.

Compared with T-GCN and A3T-GCN, NA-DGRU has the highest degree of MAE optimization for speed prediction at each moment. Compared with T-GCN, the MAE optimization ratios at each moment are 2.29%, 4.11%, 4.95% and 5.54% respectively; compared with A3T-GCN, the MAE optimization ratios at each moment are 1.24%, 1.07%, 1.59% and 1.57% respectively.
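The text does not state how the optimization ratio is computed. A natural reading, assumed here, is the relative change with respect to the baseline: a relative reduction for the error metrics (RMSE, MAE) and a relative increase for Accuracy. The function names and the numbers below are hypothetical and are not taken from the paper's tables.

```python
def error_reduction(baseline: float, model: float) -> float:
    """Percentage by which `model` lowers an error metric relative to `baseline`."""
    return 100.0 * (baseline - model) / baseline


def accuracy_gain(baseline: float, model: float) -> float:
    """Percentage by which `model` raises Accuracy relative to `baseline`."""
    return 100.0 * (model - baseline) / baseline


# Hypothetical values: a baseline RMSE of 5.0 lowered to 4.5.
print(error_reduction(5.0, 4.5))  # 10.0
```

Under this reading, a positive ratio always means that NA-DGRU improves on the baseline, whichever metric is being compared.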

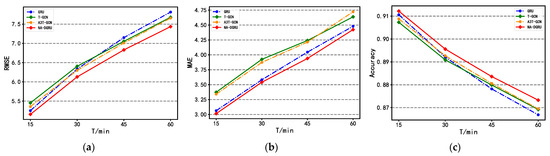

Table 4 shows the test results of different methods on the Los-loop dataset at 15, 30, 45 and 60 min, where the best results are indicated in bold. Figure 13 shows the result plots for the tests obtained using different methods on the Los-loop dataset at each moment. In Table 4 and Figure 13, NA-DGRU (16) indicates that the number of GRU hidden units in NA-DGRU is 16, while NA-DGRU indicates that the number of GRU hidden units of NA-DGRU is 100.

Table 4.

Test results on the Los-loop dataset.

Figure 13.

The test result plots obtained using different methods on the Los-loop dataset at each moment. (a) RMSE plots of different methods. (b) MAE plots of different methods. (c) Accuracy plots of different methods.

As can be seen from the data in Table 4, the prediction results of the GRU model are significantly better than those of HA and SVR on the Los-loop dataset, and the results of NA-DGRU (16) and NA-DGRU are both excellent. For the 30 min traffic prediction, NA-DGRU (16) achieves optimal values on all three evaluation metrics. Table 5 shows, for each prediction time, the optimization ratio of the best values obtained by NA-DGRU and NA-DGRU (16) compared with the other models. Compared with the GRU model, the optimal values of NA-DGRU demonstrate higher RMSE optimization ratios at each moment, namely 1.66%, 2.53%, 4.3% and 5.96%, respectively. Compared with the T-GCN model, NA-DGRU presents a higher percentage of MAE optimization at the first three moments, namely 9.11%, 9.15% and 5.47%, respectively. Similarly, the optimal values of NA-DGRU also have a higher percentage of MAE optimization at the first three moments compared with A3T-GCN, namely 7.77%, 7.52% and 6.51%, respectively.

Table 5.

The optimization ratio of NA-DGRU at each moment compared with other models on the Los-loop dataset.

It can be seen from Figure 12 and Figure 13 that, in the prediction results on the two datasets, the predictions provided by the HA model are constant values, and they are not able to reflect the dynamic relationship between speed and time. The RMSE and MAE of the other models show an increasing trend over time, and Accuracy shows a decreasing trend over time. This shows that the prediction error of the models gradually increases, and the prediction accuracy gradually decreases, as the prediction horizon lengthens.

It can be seen from Table 3 and Table 5 that, compared with the HA, SVR and GRU models, which only use temporal features, NA-DGRU has the highest RMSE optimization ratios for 15, 30, 45, and 60 min on the SZ-taxi dataset, corresponding to 5.78%, 5.56%, 6.07% and 6.49%, respectively, the highest MAE optimization ratios, corresponding to 3.35%, 4.39%, 5.59% and 6.17%, respectively, and the highest Accuracy optimization ratios, corresponding to 2.49%, 2.39%, 2.62% and 2.83%. The highest RMSE optimization ratios for 15, 30, 45 and 60 min on the Los-loop dataset are 31.01%, 17.65%, 14.7% and 17.44%, respectively; the highest MAE optimization ratios are 24.89%, 14%, 10.56% and 8.54%, respectively; and the highest Accuracy optimization ratios are 4.5%, 2.55%, 2.31% and 3.13%, respectively. From the above analysis, it can be concluded that, compared with the HA, SVR and GRU models, which only use time features, NA-DGRU is able to reduce prediction error at each time point and improve the prediction accuracy.

In addition, it can also be seen from Table 3 and Table 5 that, compared with models such as T-GCN and A3T-GCN, which also use spatio-temporal features for prediction, the highest RMSE optimization ratios of NA-DGRU for 15, 30, 45 and 60 min on the SZ-taxi dataset are 1.91%, 2.45%, 3.04% and 3.1%, respectively; the highest MAE optimization ratios are 2.29%, 4.11%, 4.95% and 5.41%, respectively; and the highest Accuracy optimization ratios are 0.74%, 1%, 1.26% and 1.29%, respectively. On the Los-loop dataset, the highest RMSE optimization ratios for 15, 30, 45 and 60 min are 2.7%, 0.5%, 0.46% and 0.78%, respectively; the highest MAE optimization ratios are 9.11%, 9.15%, 6.51% and 1.3%, respectively; and the highest Accuracy optimization ratios are 0.26%, 0.06%, 0.09% and 0.16%, respectively. From the above analysis, it can be concluded that NA-DGRU further reduces the error compared with models such as T-GCN and A3T-GCN that also use spatio-temporal features for prediction, indicating that NA-DGRU can effectively extract temporal and spatial information from roads and their neighborhoods and achieve traffic prediction.
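The optimization ratios discussed above can be recomputed from raw metric values with a short calculation. The sketch below assumes that the ratio is the relative change against the baseline (a reduction for RMSE and MAE, a gain for Accuracy). This definition, the function names and the sample numbers are illustrative assumptions and are not taken from Table 3 or Table 5.

```python
def error_reduction_ratio(baseline: float, model: float) -> float:
    """Relative reduction of an error metric (RMSE or MAE), in percent."""
    if baseline == 0:
        raise ValueError("baseline must be non-zero")
    return (baseline - model) / baseline * 100.0


def accuracy_gain_ratio(baseline: float, model: float) -> float:
    """Relative gain of a higher-is-better metric (Accuracy), in percent."""
    if baseline == 0:
        raise ValueError("baseline must be non-zero")
    return (model - baseline) / baseline * 100.0


# Hypothetical metric values, for illustration only.
print(round(error_reduction_ratio(baseline=8.0, model=6.0), 2))
print(round(accuracy_gain_ratio(baseline=0.80, model=0.82), 2))
```

Under this assumed definition, a positive ratio always means that the proposed model improves on the baseline, regardless of whether the metric is an error or an accuracy.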

On the basis of the above analysis, it can be found that NA-DGRU performs better on the SZ-taxi than on the Los-loop dataset, compared with the HA, SVR and GRU models that only use temporal features. Compared with models such as T-GCN and A3T-GCN, which also use spatio-temporal feature prediction, NA-DGRU sometimes performs better on the Los-loop dataset and it sometimes performs better on the SZ-taxi dataset. There may be two reasons for this phenomenon:

- (1) SZ-taxi and Los-loop are an urban traffic dataset and a highway traffic dataset, respectively. The sampling interval for speed differs between the two datasets: speed is sampled once every 15 min in SZ-taxi and once every 5 min in Los-loop. The preceding 60 min of historical speed is used as input in the experiments, i.e., four historical moments of speed are used in SZ-taxi and 12 historical moments of speed are used in Los-loop. Consequently, the speed of each road in SZ-taxi can be considered a discrete flow, while that in Los-loop can be considered a continuous flow, thus leading to a smaller boost in performance on SZ-taxi than on Los-loop.

- (2) The graph types of SZ-taxi and Los-loop are different. SZ-taxi is an unweighted graph, and each value in the adjacency matrix is 0 or 1; Los-loop is a weighted graph, and the values in the adjacency matrix lie in the range [0, 1]. The T-GCN and A3T-GCN models use the GCN model to extract spatial features and take the edge weights in the adjacency matrix into account. NA-DGRU, however, uses mean value aggregation to extract spatial features: it only considers whether an edge exists in the adjacency matrix, not its weight. Therefore, compared with T-GCN and A3T-GCN, NA-DGRU can be expected to perform worse on the weighted Los-loop dataset than on the unweighted SZ-taxi dataset.
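Reason (2) contrasts aggregation that only checks whether an edge exists with aggregation that uses the edge weights. The following minimal sketch illustrates the difference on a tiny graph. The three-road adjacency matrix, the speeds and both function names are hypothetical, and the row-normalized weighted mean is only a simple stand-in for weight-aware aggregation, not the GCN operator used by T-GCN or A3T-GCN.

```python
from typing import List

Matrix = List[List[float]]


def mean_aggregate(adj: Matrix, speeds: List[float]) -> List[float]:
    """Average the speeds of each road's neighbours, ignoring edge weights."""
    out = []
    for row in adj:
        # Any positive entry counts as an edge; its magnitude is discarded.
        neighbours = [speeds[j] for j, w in enumerate(row) if w > 0]
        out.append(sum(neighbours) / len(neighbours) if neighbours else 0.0)
    return out


def weighted_aggregate(adj: Matrix, speeds: List[float]) -> List[float]:
    """Weight each neighbour's speed by its edge weight, normalised per row."""
    out = []
    for row in adj:
        total = sum(row)
        out.append(sum(w * s for w, s in zip(row, speeds)) / total if total else 0.0)
    return out


# Hypothetical 3-road graph with edge weights in [0, 1].
weighted_adj = [[0.0, 0.9, 0.1],
                [0.9, 0.0, 0.0],
                [0.1, 0.0, 0.0]]
speeds = [60.0, 50.0, 20.0]

print(mean_aggregate(weighted_adj, speeds))      # road 0: (50 + 20) / 2
print(weighted_aggregate(weighted_adj, speeds))  # road 0: 0.9 * 50 + 0.1 * 20
```

On an unweighted graph the two functions return the same values, whereas on a weighted graph the mean variant treats a weakly connected neighbour the same as a strongly connected one.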

3.5. Experiments Using GRUs with a Low Number of Hidden Units

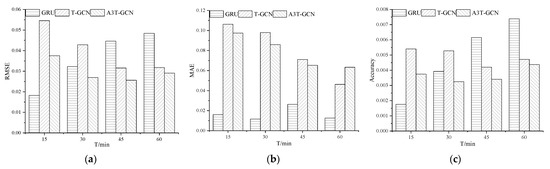

Different numbers of GRU hidden units will have different impacts on the prediction results. If the number of hidden units is too low, the GRU may not be able to fully extract information; if the number of hidden units is too high, it will increase the complexity and computational difficulty of the model, and could even cause overfitting. On the Los-loop dataset, when the number of GRU hidden units of NA-DGRU is 16, it can still achieve good prediction results, especially in short-term predictions of 15 and 30 min. In order to fairly discuss the traffic prediction ability of NA-DGRU on the Los-loop dataset, this paper also tests GRU, T-GCN and A3T-GCN when the number of GRU hidden units is 16. The test results are shown in Table 6, and the plot of the test results is shown in Figure 14. Compared with other models, the optimized ratio of NA-DGRU at each moment is shown in Table 7, and the histogram of the optimized ratio is shown in Figure 15.
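To give a rough sense of how model size grows with the number of hidden units, the sketch below counts the parameters of a single standard GRU layer (update gate, reset gate and candidate state, each with an input weight matrix, a recurrent weight matrix and one bias vector). The input size of 1 is an assumption made for illustration, and the count covers one generic GRU layer only, not the full NA-DGRU model.

```python
def gru_parameter_count(input_size: int, hidden_size: int) -> int:
    """Parameters of one standard GRU layer with a single bias per block.

    Three blocks (update gate, reset gate, candidate state), each with an
    input weight matrix, a recurrent weight matrix and a bias vector.
    """
    per_block = hidden_size * input_size + hidden_size * hidden_size + hidden_size
    return 3 * per_block


# input_size=1 is an illustrative assumption (one speed value per time step).
for hidden in (16, 100):
    print(hidden, gru_parameter_count(input_size=1, hidden_size=hidden))
```

Because of the recurrent weight matrices, the count grows roughly quadratically with the number of hidden units. Some frameworks keep two bias vectors per block, which adds a further 3 × hidden_size parameters.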

Table 6.

The prediction results of each model when the number of GRU hidden units is 16.

Figure 14.

The test result plots of different methods on the Los-loop dataset at each moment when the number of GRU hidden units is 16. (a) RMSE plots of different methods. (b) MAE plots of different methods. (c) Accuracy plots of different methods.

Table 7.

The improvement ratio of NA-DGRU on the Los-loop dataset at each moment compared with other models when the number of GRU hidden units is 16.

Figure 15.

Histogram of the improvement ratio of NA-DGRU on the Los-loop dataset compared with other models at each moment when the number of GRU hidden units is 16. (a) Histogram of RMSE improvement for different methods. (b) Histogram of MAE improvement for different methods. (c) Histogram of Accuracy improvement for different methods.

It can be seen from Figure 14 and Figure 15 that, when the number of GRU hidden units is 16, the prediction of NA-DGRU at each moment achieves the best results. Compared with GRU, NA-DGRU shows the highest RMSE improvement for speed prediction at each moment, with improvements of 1.82%, 3.23%, 4.46% and 4.84%, respectively. Compared with T-GCN, NA-DGRU shows the highest MAE improvement of speed prediction at each moment, with improvements of 10.62%, 9.79%, 7.10% and 4.62%, respectively. Compared with A3T-GCN, the speed prediction results of NA-DGRU at each moment also demonstrate the highest MAE improvement, with improvements of 9.75%, 8.59%, 6.52% and 6.34%, respectively.

In addition, by analyzing the data in Table 4 and Table 7, it can be found that NA-DGRU (16) performs better than T-GCN and A3T-GCN when using a hidden unit count of 100 for short-time prediction (15 and 30 min), proving that NA-DGRU has better short-time prediction ability when using a low number of GRU hidden units.

3.6. Visualization Analysis

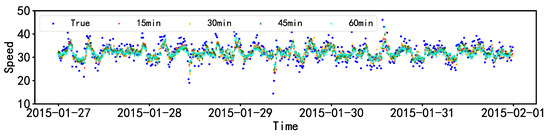

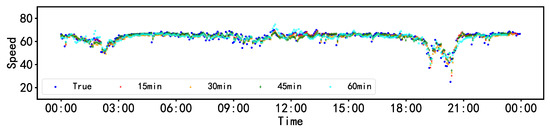

The prediction results of NA-DGRU on the two datasets are visualized. On the SZ-taxi dataset, the prediction results for one road from 27 January 2015 to 31 January 2015 are shown in Figure 16; on the Los-loop dataset, the prediction results for one loop detector over one day, obtained with 100 GRU hidden units, are shown in Figure 17.

Figure 16.

Visualization of the true value and 15-, 30-, 45- and 60-min prediction results on the SZ-taxi dataset.

Figure 17.

Visualization of the true value and 15-, 30-, 45- and 60-min prediction results on the Los-loop dataset.

Analysis of the speed trends in Figure 16 and Figure 17 reveals that NA-DGRU predicts values close to the actual values at different prediction time lengths. In addition, NA-DGRU captures the change trend of speed, effectively tracks the peaks and valleys of the speed curve, and accurately identifies the starting and ending points of the speed peak. In particular, in Figure 17, NA-DGRU closely tracks the sudden rise and fall of the speed curve, with two valleys and one peak, between 18:00 and 21:00, showing that NA-DGRU can effectively realize real-time traffic prediction.

4. Conclusions and Future Work

This paper proposes a new traffic prediction model: NA-DGRU. Different from traditional models, NA-DGRU can utilize the temporal and spatial characteristics of roads to realize traffic prediction. NA-DGRU integrates neighborhood aggregation, GRU and attention models. NA-DGRU extracts the spatial features of roads through the method of neighborhood aggregation, and it uses two GRUs to process temporal and spatial features respectively. The two GRUs output the hidden state of each historical moment to the attention model. The attention model weights and sums all hidden states and inputs the obtained results into the fully connected layer to obtain the predicted value. The prediction results obtained by NA-DGRU include the time and space features of the road at each historical moment, which can be used to realize the accurate prediction of traffic speed. In this paper, the predictive ability of NA-DGRU was tested on two datasets, SZ-taxi and Los-loop. Compared with the baseline methods of HA, SVR, GRU, T-GCN and A3T-GCN, the RMSE on SZ-taxi was reduced by up to 6.49%, the MAE was reduced by up to 6.17%, and Accuracy was improved by up to 2.83%; on the Los-loop dataset, the RMSE was reduced by up to 31.01%, the MAE was reduced by up to 24.89%, and Accuracy was improved by up to 4.5%. In addition, experiments were also conducted with GRU, T-GCN and A3T-GCN on the Los-loop dataset using a low number of GRU hidden units (16), and NA-DGRU showed maximum reductions of 5.46% in RMSE and 10.62% in MAE, demonstrating that NA-DGRU still has excellent traffic prediction ability when using a low number of GRU hidden units (16). Finally, the results of NA-DGRU were also visualized and analyzed on two datasets, and the results showed that NA-DGRU was able to capture the trend of the speed profile at all prediction moments and accurately identify the starting and ending points of speed peaks.
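The weighting-and-summing step of the attention model summarized above can be sketched as a softmax-weighted sum over the hidden states of the historical moments. This is only a minimal illustration: how the attention scores are produced is not shown here, so the scores are passed in directly, and the function names and sample values are hypothetical.

```python
import math
from typing import List


def softmax(scores: List[float]) -> List[float]:
    """Numerically stable softmax over a list of scores."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention_pool(hidden_states: List[List[float]], scores: List[float]) -> List[float]:
    """Weighted sum of per-moment hidden states using softmax weights."""
    weights = softmax(scores)
    dim = len(hidden_states[0])
    return [sum(w * h[k] for w, h in zip(weights, hidden_states)) for k in range(dim)]


# Hypothetical hidden states for 3 historical moments (hidden size 2).
states = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
context = attention_pool(states, scores=[0.0, 0.0, 0.0])  # equal scores give a plain average
print(context)
```

In a full model, the resulting context vector would then be passed to the fully connected layer to obtain the predicted value.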

In summary, it is feasible and effective to consider the spatial characteristics of roads for traffic prediction. NA-DGRU maintains excellent prediction results at all time lengths, which shows that it can effectively extract temporal and spatial information from urban road networks, achieve real-time prediction of traffic speed, reduce prediction error and improve prediction accuracy. It can be applied to speed prediction for both urban traffic and highway traffic. The model in this paper also has some limitations:

- (1) The information in the adjacency matrix is not fully utilized. For the weighted graph, the aggregation method in this paper only focuses on whether the roads are connected to each other, not on the weights of the connections.

- (2) The prediction effect over a long time is poor. The prediction effect of the model decreases with increasing prediction length.

In future work, the model will continue to be optimized in the following respects:

- (1) We will continue to explore new neighborhood aggregation methods, in order to aggregate more and richer spatial features, and consider the impact of noise in the data on the results.

- (2) The parameter selection in NA-DGRU may not be optimal, and there is room for optimization.

- (3) We will study other time series models and combine them with neighborhood aggregation to explore their application in traffic forecasting.

- (4) The position of the vehicle on the road affects the speed. Considering this is also very important for speed prediction. For example, the vehicle will slow down when approaching an intersection, there are fast and slow lanes on some highways, etc.

Author Contributions

Conceptualization, X.T.; data curation, X.T., C.Z. and Y.Z.; formal analysis, X.T. and Y.Z.; funding acquisition, X.T.; investigation, L.D. and S.W.; methodology, X.T. and C.Z.; project administration, C.Z.; resources, Y.Z. and L.D.; software, C.Z. and S.W.; supervision, X.T.; validation, C.Z. and L.D.; visualization, Y.Z. and S.W.; writing—original draft, C.Z.; writing—review and editing, X.T., Y.Z., L.D. and S.W. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the Key Laboratory of Police Internet of Things Application, Ministry of Public Security, People’s Republic of China, grant number JWWLWKFKT2022001.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

The data used in this study, including the SZ-taxi and Los-loop datasets, are openly available.

Conflicts of Interest

The authors declare no conflict of interest.


Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).