Towards Sustainable Virtual Reality: Gathering Design Guidelines for Intuitive Authoring Tools

Abstract

1. Introduction

2. Materials and Methods

2.1. Planning

2.2. Defining the Scope

2.3. Literature Search

2.4. Assessing the Evidence Base

- E1.1.: The entry title or abstract did not have one or more of the terms described in the search phrase;

- E1.2.: Published before 2018;

- E1.3.: Entry not written in the English language;

- E1.4.: Virtual reality is not a keyword;

- E1.5.: Duplicate entry.

- E2.1.: Entry is theoretical work (e.g., information system proposal, literature review, poster);

- E2.2.: Entry does not consider the development of authoring tools for creating immersive virtual reality experiences;

- E2.3.: Entry focuses on augmented reality;

- E2.4.: Entry develops authoring tools for virtual reality experience creation that are not based on the use of HMDs in virtual environments (e.g., CAVE, 360 video).

- E3.1.: Entry with fewer than 5 pages;

- E3.2.: Entry related to the development of authoring tools not directly defined as intuitive and easy to use for beginners and unskilled professionals;

- E3.3.: Entry limited to the development of authoring tools specific to an area of application (e.g., health, engineering, education, and culture).
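The first-stage criteria (E1.1 to E1.5) are mechanical enough to be sketched as a filter over bibliographic records. The Python sketch below is illustrative only and is not the authors' screening procedure: the `Entry` fields, the placeholder `SEARCH_TERMS`, and the title-based duplicate check are all assumptions, since the actual search phrase and record format are not reproduced in this section.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Entry:
    """Hypothetical bibliographic record; the field names are assumptions."""
    title: str
    abstract: str
    year: int
    language: str
    keywords: list = field(default_factory=list)


# Placeholder terms: the actual search phrase is not given in this section.
SEARCH_TERMS = ("virtual reality", "authoring tool")


def first_stage_exclusion(entry: Entry, seen_titles: set) -> Optional[str]:
    """Return the first matching exclusion code (E1.1 to E1.5), or None if the entry passes."""
    text = f"{entry.title} {entry.abstract}".lower()
    if any(term not in text for term in SEARCH_TERMS):
        return "E1.1"  # title/abstract lacks one or more search terms
    if entry.year < 2018:
        return "E1.2"  # published before 2018
    if entry.language.lower() != "english":
        return "E1.3"  # not written in English
    if "virtual reality" not in (k.lower() for k in entry.keywords):
        return "E1.4"  # virtual reality is not a keyword
    key = entry.title.strip().lower()
    if key in seen_titles:
        return "E1.5"  # duplicate entry (assumed: same normalized title)
    seen_titles.add(key)
    return None
```

Returning the exclusion code, rather than a plain boolean, would make it straightforward to tally how many entries each criterion removed.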

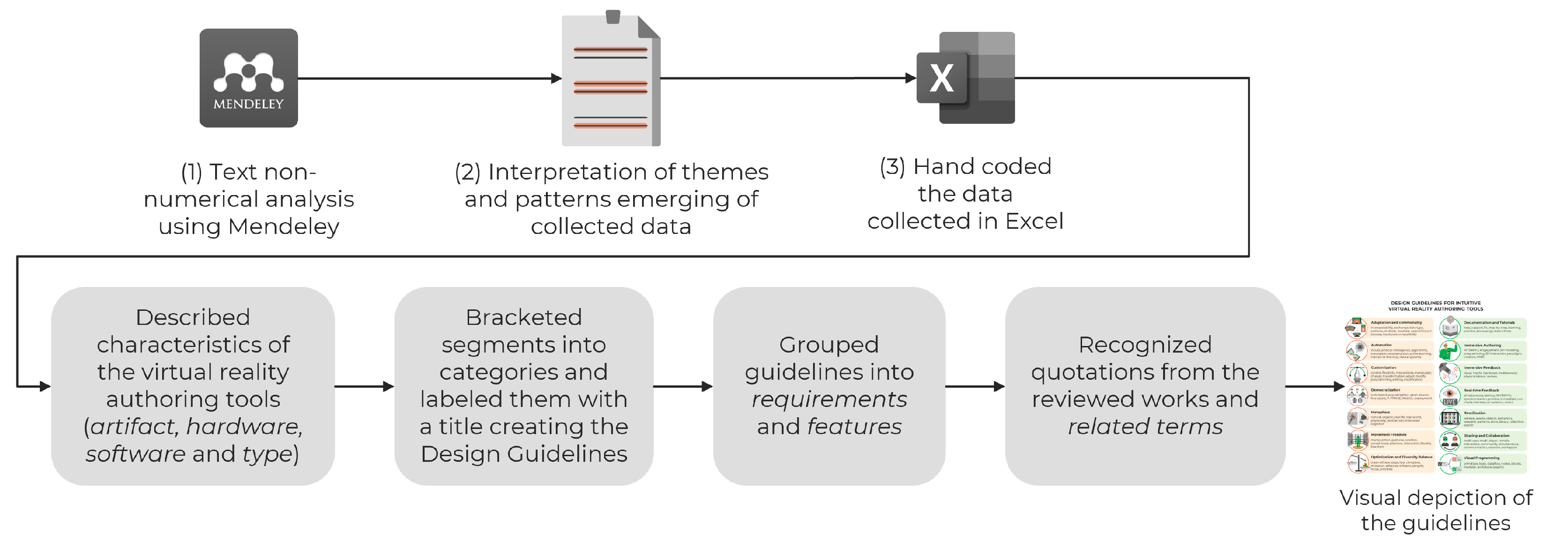

2.5. Synthesizing and Analyzing

3. Results

3.1. Characteristics of the Virtual Reality Authoring Tools

1. Virtual environment creation, where everything that the user sees is a 3D model, also containing collaborative interaction, visual programming, and immersive authoring [16];

2. Generic purpose, not developed for use in a specific field, such as Mechanical Engineering or Medicine [16];

3. Manipulating and importing 3D objects by searching online, either by text or with an immersive sketch in VR mode, editing assets, and adding behaviors;

4. Facilitating interaction between software and hardware through haptic feedback visualization and multisensory stimuli;

5. Interactive human character development, giving the user preset behaviors such as mouth movements to speak;

6. Artificial intelligence automation using different types of networks to help the user achieve their goals with more efficiency.

3.2. Definition of Intuitiveness in Virtual Reality Authoring Tools

| Ref. | Intuitiveness Quote |

|---|---|

| [32] | “[...] users can perform search more quickly and intuitively [...]” |

| [33] | “[...] rapidly create haptic feedback after a short training session.” |

| [34] | “The tool features an intuitive and easy to use graphical user interface appropriate for non-expert users.” |

| [35] | “[...] positive feedback from users regarding ease of use and acceptability.” “[...] an authoring tool that is intuitive and easy to use.” |

| [36] | “[...] most participants commented positively on this application and [...] expressed that the application is easier for beginners.” |

| [37] | “AffordIt! offers an intuitive solution [...], show high usability with low workload ratings.” |

| [38] | “[...] people with little or even no experience [...] can install VAIF and build interaction scenes from scratch, with relatively low rates of encountering problem episodes.” |

| [8] | “FlowMatic introduces [...] intuitive interactions, [...], reducing complexity, and programmatically creating/destroying objects in a scene.” |

| [17] | “[...] efficient data structure, for simple creation, easy maintenance and fast traversal [...] users can create VR training scenarios without advanced programming knowledge.” |

| [39] | “[...] An immersive nugget-based approach is facilitating the authoring of VR learning content for laymen authors.” |

| [10] | “The interaction procedures are simple, easy to understand and use, and don’t demand any specific skill expertise from users.” |

| [40] | “We choose user interface elements [...] to minimize learning time [...]” “it was useful to see each others’ work in real time to improve workspace awareness, and it was easy to share findings with one another.” |

| [41] | “Evaluation results indicate the positive adoption of non-experts in programming [...] participants felt somewhat comfortable using the system, considering it also as simple to use.” |

| [12] | “[...] we have analyzed the effectiveness, efficiency, and user satisfaction of VREUD which shows promising results to empower novices in creating their interactive VR scenes.” |

3.3. Defining the Design Guidelines

3.3.1. Adaptation and Commonality

- “To ensure a proper multisensory delivery, the authoring tool must communicate effectively with the output devices” [35];

- “using semantically data from heterogeneous resources” [17];

- “Establishing an exchange format and standardizing the concept of VR nuggets is a next step that can help to make it accessible for a greater community” [39].

3.3.2. Automation

- “The number of triangles on high polygon objects were reduced to optimize the cutting time to an order of magnitude of seconds” [37];

- “In other words, the interaction manager enables developers to create events that are easy to configure and are applied automatically to the characters” [38];

- “The idea is to provide users with a modeling tool that intuitively uses the reality scene as a modeling reference for derivative scene reconstruction with added interactive functionalities” [10].

3.3.3. Customization

- “While the state-of-the-art immersive authoring tools allow users to define the behaviors of existing objects in the scene, they cannot dynamically operate on 3D objects, which means that users are not able to author scenes that can programmatically create or destroy objects, react to system events, or perform discrete actions” [8] (missing customization);

- “The system workflow design of VRFromX that enables creation of interactive VR scenes [...] establishing functionalities and logical connections among virtual contents” [10];

- “Some requests were [...] more freedom to change the parameters of the experience, i.e., to right click on 3D models and change the parameters of the assets on the fly” [41].

3.3.4. Democratization

- “[...] the advances of WebVR have also given rise to libraries and frameworks such as Three.js and A-FRAME, which enable developers to build VR scenes as web applications that can be loaded by web browsers” [8];

- “FlowMatic is open source and publicly available for other researchers to build on and evaluate” [8];

- “[...] democratization is focused on providing people with access to technical expertise (application development) via a radically simplified experience and without requiring extensive and costly training” [41].

3.3.5. Metaphors

- “They can draw edges to and from these abstract models to specify dependencies and behaviors (for example, to specify the dynamics of where it should appear in the scene when it shows up)” [8];

- “Similar to Alice in Wonderland, the users will gradually shrink as they trigger the entry procedure. Authors can access the world in miniature model and experience it in full scale to make changes to the content” [39];

- “Compared to the logic used in the construction of interactions, the task construction uses generic activities which should be also clear to novices without a technical background since they are comparable to actions in the real world” [12].

3.3.6. Movement Freedom

- “One reason is that through direct manipulation users can feel more immersed—as if the wire is in their hands” [36];

- “A brush tool was developed which enables users to select regions on point cloud or sketch in mid-air in a free-form manner” [10];

- “Users can also perform simple hand gestures to grab and alter the position, orientation and scale of the virtual models based on their requirements” [10].

3.3.7. Optimization and Diversity Balance

- “To make our system more efficient, we have to limit the capabilities of the Action entity targeting simple but commonly used tasks in training” [17];

- “The construction uses two dialogs to create the task and the activities so that the novice only needs to focus on the current task or activity” [12];

- “We decreased further the complexity by using wizards to focus the user on smaller steps in the development” [12].

3.3.8. Documentation and Tutorials

- “For each step, instructions are visualized as text in the menu to help participants remember which step they are performing” [37];

- “We believe that more visual aid in the form of animations showing the movement path can help ease the thinking process of participants” [37];

- “Documentation would be another interesting direction in the future, as two participants said they preferred A-FRAME in the sense that the APIs documentation was detailed and easy to understand” [8].

3.3.9. Immersive Authoring

- “[...] expedites the process of creating immersive multisensory content, with real-time calibration of the stimuli, creating a “what you see is what you get (WYSWYG)” experience” [35];

- “[...] immersive authoring tools can leverage our natural spatial reasoning capabilities” [36];

- “With the lack of additional spatial information and the disconnection between developing environments (2D displays) and testing environments (3D worlds), users have to mentally translate between 3D objects and their 2D projections and predict how their code will execute in VR” [8] (missing immersive authoring).

3.3.10. Immersive Feedback

- “Rendering haptic feedback in virtual reality is a common approach to enhancing the immersion of virtual reality content” [33];

- “[...] various types of haptic feedback, such as thermal, vibrotactile, and airflow, are included; each was presented with a 2D iconic pattern. According to the type of haptic feedback, different properties, such as the intensity and frequency of the vibrotactile feedback, and the direction of the airflow feedback, are considered” [33];

- “The use of multisensory support is justified by the fact that the more the senses engaged in a VR application, the better and more effective is the experience” [35].
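The quote from [33] describes several feedback types, each with its own properties. One way to picture this is a small data model that validates properties per type. The sketch below is an assumption-laden illustration, not the data model of any reviewed tool: the class names are hypothetical, and the property set for thermal feedback is a guess, since the quote gives examples only for vibrotactile and airflow feedback.

```python
from dataclasses import dataclass
from enum import Enum


class HapticType(Enum):
    THERMAL = "thermal"
    VIBROTACTILE = "vibrotactile"
    AIRFLOW = "airflow"


# Vibrotactile and airflow properties follow the examples quoted from [33];
# the thermal entry is an assumption, since the quote lists none.
ALLOWED_PROPERTIES = {
    HapticType.VIBROTACTILE: {"intensity", "frequency"},
    HapticType.AIRFLOW: {"direction"},
    HapticType.THERMAL: {"intensity"},
}


@dataclass(frozen=True)
class HapticCue:
    """Hypothetical haptic cue: a feedback type plus its type-specific properties."""
    kind: HapticType
    properties: dict

    def __post_init__(self):
        # Reject properties that make no sense for this feedback type.
        unknown = set(self.properties) - ALLOWED_PROPERTIES[self.kind]
        if unknown:
            raise ValueError(f"{self.kind.value} does not support: {sorted(unknown)}")


buzz = HapticCue(HapticType.VIBROTACTILE, {"intensity": 0.8, "frequency": 120})
```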

3.3.11. Real-Time Feedback

- “AffordIt! offers an intuitive solution that allows a user to select a region of interest for the mesh cutter tool, assign an intrinsic behavior, and view an animation preview of their work” [37];

- “We believe that more visual aid in the form of animations showing the movement path can help ease the thinking process of participants” [37];

- “The novices are supported in the construction by visualizing the interactive VR scene in the development. This ensures direct feedback of added entities to the scene and modified representative parameters of the entities inside the scene. This enables the novice to spot mistakes immediately” [12].

3.3.12. Reutilization

- “We propose that by utilizing recent advances in virtual reality and by providing a guided experience, a user will more easily be able to retrieve relevant items from a collection of objects” [32];

- “[...] we propose intuitive interaction mechanisms for controlling programming primitives, abstracting and re-using behaviors” [8];

- “Users can also save the abstraction in the toolbox for future use by pressing a button on the controller” [8].
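The idea of saving an abstraction to a toolbox and reusing it later can be sketched as a small in-memory library. This is a minimal illustration under stated assumptions: the `Toolbox` class, its method names, and the dictionary representation of a behavior are hypothetical and do not reflect how FlowMatic [8] stores abstractions.

```python
import copy


class Toolbox:
    """Hypothetical in-memory library of reusable behavior abstractions."""

    def __init__(self):
        self._items = {}

    def save(self, name, abstraction):
        # Store a deep copy so later edits to the original do not leak in.
        self._items[name] = copy.deepcopy(abstraction)

    def instantiate(self, name, **overrides):
        # Hand out a fresh copy each time, optionally adjusting parameters.
        instance = copy.deepcopy(self._items[name])
        instance.update(overrides)
        return instance


toolbox = Toolbox()
toolbox.save("spin", {"action": "rotate", "axis": "y", "speed": 30})
fast_spin = toolbox.instantiate("spin", speed=90)
```

Copying on both save and instantiate keeps the stored abstraction unchanged no matter how individual instances are modified afterwards.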

3.3.13. Sharing and Collaboration

- “[...] directly transmitted to others, and they can observe the doings of others in real time. The users work together on a virtual scene where they can add, remove, and update 3D models” [34];

- “This is useful because multisensory VR experiences might require multiple features that are produced by different professionals, and a collaborative feature will enable the entire team to work simultaneously” [35];

- “Each user is uniquely identified by a floating nameplate and avatar color. The same color is also used for shared brush selections. This allows users to see the actions of others to support collaborative tasks and information sharing, as well as to avoid physical collisions” [40].
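The collaborative pattern quoted from [34], in which users add, remove, and update 3D models and others observe the changes in real time, can be sketched as a shared scene that notifies subscribers of every operation. The sketch is hypothetical and single-process: the `SharedScene` class and its methods are assumptions, and a real tool would send these notifications over a network transport rather than through local callbacks.

```python
class SharedScene:
    """Hypothetical shared scene: each change is applied, then announced to all subscribers."""

    def __init__(self):
        self.models = {}
        self._listeners = []

    def subscribe(self, callback):
        self._listeners.append(callback)

    def _broadcast(self, op, model_id, user):
        for callback in self._listeners:
            callback(op, model_id, user)

    def add(self, user, model_id, properties):
        self.models[model_id] = dict(properties)
        self._broadcast("add", model_id, user)

    def update(self, user, model_id, **changes):
        self.models[model_id].update(changes)
        self._broadcast("update", model_id, user)

    def remove(self, user, model_id):
        del self.models[model_id]
        self._broadcast("remove", model_id, user)


log = []
scene = SharedScene()
scene.subscribe(lambda op, model_id, user: log.append((op, model_id, user)))
scene.add("alice", "chair", {"position": (0, 0, 0)})
scene.update("bob", "chair", position=(1, 0, 0))
scene.remove("alice", "chair")
```

Including the acting user in each notification is what would let a client render per-user cues such as the nameplates and colors described in [40].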

3.3.14. Visual Programming

- “FlowMatic uses novel visual representations to allow these primitives to be represented directly in VR” [8];

- “Unreal Blueprint, a mainstream platform for developing 3D applications, also uses event graphs and function calls to assist novices in programming interactive behaviors related to system events” [8];

- “The development of a visual scripting system as an assistive tool aimed to visualize the VR training scenario in a convenient way, if possible fit everything into one window. The simplicity of this tool was carefully measured to provide tools used also from non-programmers. From the beginning of the project, one of the main design principles was to strategically abstract the software building blocks into basic elements” [17].
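The node-and-edge style of visual programming described above can be illustrated with a tiny dataflow graph, where each block is a function wired to upstream blocks. This is a sketch under assumptions: the `Node` class and the example blocks are hypothetical, and the graph is evaluated on demand (pull-based), which is a simplification and not the mechanism used by any of the reviewed tools.

```python
class Node:
    """A block in a hypothetical dataflow graph: a function plus its upstream nodes."""

    def __init__(self, name, func, *inputs):
        self.name = name
        self.func = func
        self.inputs = inputs

    def evaluate(self):
        # Pull values from upstream nodes first, then apply this block's function.
        return self.func(*(node.evaluate() for node in self.inputs))


# Source blocks produce values; downstream blocks combine them.
hand_height = Node("hand_height", lambda: 1.4)
threshold = Node("threshold", lambda: 1.0)
is_raised = Node("is_raised", lambda h, t: h > t, hand_height, threshold)
light_state = Node("light_state", lambda raised: "on" if raised else "off", is_raised)
```

Calling `light_state.evaluate()` walks the edges back to the source blocks, which mirrors how a user reads such a graph: from inputs, through logic, to an effect in the scene.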

3.3.15. Additional Considerations

- Engagement: “The system also provides an engaging and immersive experience inside VR through spatial and embodied interactions” [10];

- Fun-to-use: “[...] the results of the statement if the participant had fun constructing the interactive VR scene suggests that VREUD supplies novices with a playful construction of interactive VR scenes, which could motivate them to develop their first interactive VR scene” [12];

- Immersiveness: “[...] the majority of VR applications rely essentially on audio and video stimuli supported by desktop-like setups that do not allow to fully exploit all known VR benefits” [35];

- Physical comfort: “[...] some participants commented that navigating the virtual world could cause slight motion sickness” [8]/“[...] we could observe impatience and fatigue when the participants had to type in the text for the callouts using the immersive technology (a virtual keyboard) or had to connect the nuggets to bring them in chronological order” [39];

- Graphical quality: “One disadvantage of these tools is that they do not support highly photorealistic graphics and first person view edits which are achievable only by Unreal Engine and professional CAD software in runtime environments” [41].

4. Conclusions

Supplementary Materials

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

1. Matt, C.; Hess, T.; Benlian, A. Digital transformation strategies. Bus. Inf. Syst. Eng. 2015, 57, 339–343.

2. Ebert, C.; Duarte, C.H.C. Digital transformation. IEEE Softw. 2018, 35, 16–21.

3. Scurati, G.W.; Bertoni, M.; Graziosi, S.; Ferrise, F. Exploring the use of virtual reality to support environmentally sustainable behavior: A framework to design experiences. Sustainability 2021, 13, 943.

4. de Freitas, F.V.; Gomes, M.V.M.; Winkler, I. Benefits and challenges of virtual-reality-based industrial usability testing and design reviews: A patents landscape and literature review. Appl. Sci. 2022, 12, 1755.

5. da Silva, A.G.; Mendes Gomes, M.V.; Winkler, I. Virtual Reality and Digital Human Modeling for Ergonomic Assessment in Industrial Product Development: A Patent and Literature Review. Appl. Sci. 2022, 12, 1084.

6. Cassola, F.; Pinto, M.; Mendes, D.; Morgado, L.; Coelho, A.; Paredes, H. A novel tool for immersive authoring of experiential learning in virtual reality. In Proceedings of the 2021 IEEE Conference on Virtual Reality and 3D User Interfaces Abstracts and Workshops (VRW), Lisbon, Portugal, 27 March–1 April 2021; IEEE: Piscataway, NJ, USA, 2021; pp. 44–49.

7. Nebeling, M.; Speicher, M. The trouble with augmented reality/virtual reality authoring tools. In Proceedings of the 2018 IEEE International Symposium on Mixed and Augmented Reality Adjunct (ISMAR-Adjunct), Munich, Germany, 16–20 October 2018; IEEE: Piscataway, NJ, USA, 2018; pp. 333–337.

8. Zhang, L.; Oney, S. FlowMatic: An immersive authoring tool for creating interactive scenes in virtual reality. In Proceedings of the 33rd Annual ACM Symposium on User Interface Software and Technology, Virtual Event, 20–23 October 2020; pp. 342–353.

9. Sherman, W.R.; Craig, A.B. Understanding Virtual Reality: Interface, Application, and Design; Morgan Kaufmann: Burlington, MA, USA, 2018.

10. Ipsita, A.; Li, H.; Duan, R.; Cao, Y.; Chidambaram, S.; Liu, M.; Ramani, K. VRFromX: From scanned reality to interactive virtual experience with human-in-the-loop. In Proceedings of the Extended Abstracts of the 2021 CHI Conference on Human Factors in Computing Systems, Yokohama, Japan, 8–13 May 2021; pp. 1–7.

11. Krauß, V.; Boden, A.; Oppermann, L.; Reiners, R. Current practices, challenges, and design implications for collaborative AR/VR application development. In Proceedings of the 2021 CHI Conference on Human Factors in Computing Systems, Yokohama, Japan, 8–13 May 2021; pp. 1–15.

12. Yigitbas, E.; Klauke, J.; Gottschalk, S.; Engels, G. VREUD—An end-user development tool to simplify the creation of interactive VR scenes. In Proceedings of the 2021 IEEE Symposium on Visual Languages and Human-Centric Computing (VL/HCC), St. Louis, MO, USA, 10–13 October 2021; IEEE: Piscataway, NJ, USA, 2021; pp. 1–10.

13. Berns, A.; Reyes Sánchez, S.; Ruiz Rube, I. Virtual reality authoring tools for teachers to create novel and immersive learning scenarios. In Proceedings of the Eighth International Conference on Technological Ecosystems for Enhancing Multiculturality, Salamanca, Spain, 21–23 October 2020; pp. 896–900.

14. Velev, D.; Zlateva, P. Virtual reality challenges in education and training. Int. J. Learn. Teach. 2017, 3, 33–37.

15. Vergara, D.; Extremera, J.; Rubio, M.P.; Dávila, L.P. Meaningful learning through virtual reality learning environments: A case study in materials engineering. Appl. Sci. 2019, 9, 4625.

16. Coelho, H.; Monteiro, P.; Gonçalves, G.; Melo, M.; Bessa, M. Authoring tools for virtual reality experiences: A systematic review. Multimed. Tools Appl. 2022, 81, 28037–28060.

17. Zikas, P.; Papagiannakis, G.; Lydatakis, N.; Kateros, S.; Ntoa, S.; Adami, I.; Stephanidis, C. Immersive visual scripting based on VR software design patterns for experiential training. Vis. Comput. 2020, 36, 1965–1977.

18. Lynch, T.; Ghergulescu, I. Review of virtual labs as the emerging technologies for teaching STEM subjects. In Proceedings of the INTED2017 International Technology, Education and Development Conference, Valencia, Spain, 6–8 March 2017; Volume 6, pp. 6082–6091.

19. O’Connor, E.A.; Domingo, J. A practical guide, with theoretical underpinnings, for creating effective virtual reality learning environments. J. Educ. Technol. Syst. 2017, 45, 343–364.

20. Ashtari, N.; Bunt, A.; McGrenere, J.; Nebeling, M.; Chilana, P.K. Creating augmented and virtual reality applications: Current practices, challenges, and opportunities. In Proceedings of the 2020 CHI Conference on Human Factors in Computing Systems, Honolulu, HI, USA, 25–30 April 2020; pp. 1–13.

21. Pressman, R.S. Software Engineering: A Practitioner’s Approach; Palgrave Macmillan: London, UK, 2021.

22. Jerald, J. The VR Book: Human-Centered Design for Virtual Reality; Morgan & Claypool: San Rafael, CA, USA, 2015.

23. Wang, Y.; Su, Z.; Zhang, N.; Xing, R.; Liu, D.; Luan, T.H.; Shen, X. A survey on metaverse: Fundamentals, security, and privacy. IEEE Commun. Surv. Tutorials 2022.

24. Creswell, J.W.; Creswell, J.D. Research Design: Qualitative, Quantitative, and Mixed Methods Approaches; Sage Publications: Thousand Oaks, CA, USA, 2017.

25. Page, M.J.; McKenzie, J.E.; Bossuyt, P.M.; Boutron, I.; Hoffmann, T.C.; Mulrow, C.D.; Shamseer, L.; Tetzlaff, J.M.; Akl, E.A.; Brennan, S.E.; et al. The PRISMA 2020 statement: An updated guideline for reporting systematic reviews. Syst. Rev. 2021, 10, n71.

26. Booth, A.; Sutton, A.; Clowes, M.; Martyn-St James, M. Systematic Approaches to a Successful Literature Review; Sage Publications: Thousand Oaks, CA, USA, 2021.

27. How Scopus Works. Available online: https://www.elsevier.com/solutions/scopus/how-scopus-works/content (accessed on 7 December 2022).

28. Matthews, T. LibGuides: Resources for Librarians: Web of Science Coverage Details. Available online: https://clarivate.libguides.com/librarianresources/coverage (accessed on 7 December 2022).

29. Major HTC Vive VR “Breakthrough” to Be Shown at CES 2016. Available online: https://www.techradar.com/news/wearables/major-htc-vive-breakthrough-to-be-unveiled-at-ces-2016-1311518 (accessed on 7 December 2022).

30. HTC Vive to Demo a “Very Big” Breakthrough in VR at CES. Available online: https://www.engadget.com/2015-12-18-htc-vive-vr-big-breakthrough-ces.html (accessed on 7 December 2022).

31. Brief History of Virtual Reality until the Year 2020. Available online: https://ez-360.com/brief-history-of-virtual-reality-until-the-year-2020/ (accessed on 7 December 2022).

32. Giunchi, D.; James, S.; Steed, A. 3D sketching for interactive model retrieval in virtual reality. In Proceedings of the Joint Symposium on Computational Aesthetics and Sketch-Based Interfaces and Modeling and Non-Photorealistic Animation and Rendering, Victoria, BC, Canada, 17–19 August 2018; pp. 1–12.

33. Chan, L.; Chen, M.H.; Ho, W.C.; Peiris, R.L.; Chen, B.Y. SeeingHaptics: Visualizations for communicating haptic designs. In Proceedings of the 21st International Conference on Human-Computer Interaction with Mobile Devices and Services, Taipei, Taiwan, 1–4 October 2019; pp. 1–5.

34. Capece, N.; Erra, U.; Losasso, G.; D’Andria, F. Design and implementation of a web-based collaborative authoring tool for the virtual reality. In Proceedings of the 2019 15th International Conference on Signal-Image Technology & Internet-Based Systems (SITIS), Sorrento, Italy, 26–29 November 2019; IEEE: Piscataway, NJ, USA, 2019; pp. 603–610.

35. Coelho, H.; Melo, M.; Martins, J.; Bessa, M. Collaborative immersive authoring tool for real-time creation of multisensory VR experiences. Multimed. Tools Appl. 2019, 78, 19473–19493.

36. Zhang, L.; Oney, S. Studying the Benefits and Challenges of Immersive Dataflow Programming. In Proceedings of the 2019 IEEE Symposium on Visual Languages and Human-Centric Computing (VL/HCC), Memphis, TN, USA, 14–18 October 2019; IEEE: Piscataway, NJ, USA, 2019; pp. 223–227.

37. Masnadi, S.; González, A.N.V.; Williamson, B.; LaViola, J.J. AffordIt!: A tool for authoring object component behavior in VR. In Proceedings of the 2020 IEEE Conference on Virtual Reality and 3D User Interfaces Abstracts and Workshops (VRW), Atlanta, GA, USA, 22–26 March 2020; IEEE: Piscataway, NJ, USA, 2020; pp. 740–741.

38. Novick, D.; Afravi, M.; Martinez, O.; Rodriguez, A.; Hinojos, L.J. Usability of the Virtual Agent Interaction Framework. In Proceedings of the International Conference on Human-Computer Interaction, Copenhagen, Denmark, 5–8 October 2020; Springer: Berlin/Heidelberg, Germany, 2020; pp. 123–134.

39. Horst, R.; Naraghi-Taghi-Off, R.; Rau, L.; Dörner, R. Bite-Sized Virtual Reality Learning Applications: A Pattern-Based Immersive Authoring Environment. J. Univers. Comput. Sci. 2020, 26, 947–971.

40. Lee, B.; Hu, X.; Cordeil, M.; Prouzeau, A.; Jenny, B.; Dwyer, T. Shared surfaces and spaces: Collaborative data visualisation in a co-located immersive environment. IEEE Trans. Vis. Comput. Graph. 2020, 27, 1171–1181.

41. Ververidis, D.; Migkotzidis, P.; Nikolaidis, E.; Anastasovitis, E.; Papazoglou Chalikias, A.; Nikolopoulos, S.; Kompatsiaris, I. An authoring tool for democratizing the creation of high-quality VR experiences. Virtual Real. 2022, 26, 105–124.

42. Knapp, J.; Zeratsky, J.; Kowitz, B. Sprint: How to Solve Big Problems and Test New Ideas in Just Five Days; Simon and Schuster: New York, NY, USA, 2016.

43. Brooke, J. SUS: A retrospective. J. Usability Stud. 2013, 8, 29–40.

44. Park, S.M.; Kim, Y.G. A Metaverse: Taxonomy, components, applications, and open challenges. IEEE Access 2022, 10, 4209–4251.

45. Ball, M. The Metaverse and How it Will Revolutionize Everything; Liveright Publishing: New York, NY, USA, 2022.

| Ref | Artifact | Hardware | Software | Type |

|---|---|---|---|---|

| [32] | Tool for 3D assets search through immersive hand-made sketch in VR | Oculus Consumer Version 1 (HMD) and Oculus Touch Controllers | Unity3D Engine and Convolutional Neural Network (CNN) | Plugin |

| [33] | Tool for visual feedback of haptic properties in VR | HTC Vive Kit (HMD) | Unity3D Engine | Plugin |

| [34] | Collaborative web-based authoring tool for creating virtual environments in VR | Oculus Rift Consumer version and HTC Vive (HMD) | Node.js/EasyRTC, Socket.IO, WebRTC and A-FRAME (Three.js + WebVR) | Standalone |

| [35] | Architecture for a collaborative immersive tool for multisensory experiences creation in VR | HTC Vive (HMD), Bose QuietComfort 25 headphones, Sensory Co SmX-4D for Smell, Buttkickers LFE kit and wind simulator | Unity3D Engine | Plugin |

| [36] | Immersive authoring tool that allows applying reaction behaviors to objects using visual programming | Oculus Rift (HMD) | NOT INFORMED | Standalone |

| [37] | Tool for 3D assets editing and application of behaviors to objects | HTC Vive Pro Eye (HMD) and controllers | Unity3D Engine (C#) and SteamVR | Plugin |

| [38] | Authoring tool for developing interactive agents for VR applications | NOT INFORMED | Unity3D Engine and Cortana for speech recognition | Plugin |

| [8] | Immersive authoring tool that allows applying reaction behaviors to objects using visual programming | NOT INFORMED | A-FRAME (Three.js and WebVR), Node.js and RxJS | Standalone |

| [17] | Immersive authoring tool with visual programming to reproduce gamified training scenarios through modular architecture | Oculus Quest 2 (HMD), HTC Vive (HMD), Microsoft Hololens (HMD) and Magic Leap | Unity3D Engine and CodeDOM (.NET Framework) | Plugin |

| [39] | Authoring tool that uses “nugget tiles” (blocks) so authors can create reduced learning experiences in VR | HTC Vive (HMD) | Unity3D Engine and Virtual Reality Tool-kit (VRTK) | Plugin |

| [10] | Platform for interactive virtual objects creation in VR through physical objects presented in the real world, using AI | Oculus Quest (HMD), Oculus Link, Touch controllers and iPad Pro | Unity3D Engine and AppScanner | Plugin |

| [40] | Authoring tool that allows flexible control of work spaces for data analysis during collaborative activities in groups inside an immersive space in VR | HTC Vive Pro (HMD), Backpack | Unity3D Engine, Immersive Analytics Toolkit (IATK) and Oculus Avatar SDK (body) | Plugin |

| [41] | Web-based authoring tool with better graphic quality | NOT INFORMED | Unity3D Engine and WordPress/MySQL | Plugin |

| [12] | Web-based intuitive authoring tool for interactive 3D scenes creation in VR | NOT INFORMED | A-FRAME (Three.js), React and JavaScript | Standalone |

| N | Design Guidelines | Classification | Articles | Related Terms |

|---|---|---|---|---|

| 1 | Adaptation and Commonality | Requirement | [8,12,17,34,35,38,39,40,41] | interoperability, exchange, data type, patterns, multiple, modular, export/import process, hardware compatibility |

| 2 | Automation | Requirement | [10,12,17,32,37,38,41] | inputs, artificial intelligence, algorithms, translation, reconstruction, active learning, human-in-the-loop, neural systems |

| 3 | Customization | Requirement | [8,10,12,34,35,36,37,38,39,40,41] | control, flexibility, interactions, manipulate, change, transformation, adapt, modify, programming, editing, modification |

| 4 | Democratization | Requirement | [8,12,34,38,39,40,41] | web-based, popularization, open-source, free assets, A-FRAME, WebGL, deployment |

| 5 | Metaphors | Requirement | [8,10,12,17,32,33,34,36,37,39,40] | natural, organic, real life, real-world, physicality, abstraction, embodied cognition |

| 6 | Movement Freedom | Requirement | [8,10,32,34,36,37,39,40] | manipulation, gestures, position, unrestricted, selection, interaction, flexible, free-form |

| 7 | Optimization and Diversity Balance | Requirement | [8,10,12,17,32,35,37,39,40,41] | trade-off, less steps, fast, complete, limitation, effective, efficient, simplify, focus, priorities |

| 8 | Documentation and Tutorials | Feature | [8,12,17,37,38,41] | help, support, fix, step-by-step, learning, practice, knowledge, instructions |

| 9 | Immersive Authoring | Feature | [8,10,12,17,32,34,35,36,37,39,40] | WYSIWYG, engagement, 3D modeling, programming, 3D interaction, paradigm, creation, HMD |

| 10 | Immersive Feedback | Feature | [33,35,36,37] | visual, haptic, hardware, multisensory, physical stimuli, senses |

| 11 | Real-Time Feedback | Feature | [8,12,17,32,33,34,35,36,37,39,40] | simultaneous, latency, WYSIWYG, synchronization, preview, immediate, run-mode, liveness, compilation, direct |

| 12 | Reutilization | Feature | [8,10,12,17,32,34,36,38,39,41] | retrieve, assets, objects, behaviors, reusable, patterns, store, library, collection, search |

| 13 | Sharing and Collaboration | Feature | [12,34,35,40,41] | multi-user, multi-player, remote interaction, community, simultaneous, communication, network, workspace |

| 14 | Visual Programming | Feature | [8,17,36,39,41] | primitives, logic, dataflow, nodes, blocks, modular, prototype, graphic |
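As a reading aid for the design-guidelines table, the number of reviewed studies supporting each guideline can be tallied directly from its Articles column. The sketch below copies the article lists from the table; the variable names and the ranking step are illustrative choices, not an analysis reported by the authors.

```python
# Article lists copied from the Articles column of the design-guidelines table.
GUIDELINE_ARTICLES = {
    "Adaptation and Commonality": [8, 12, 17, 34, 35, 38, 39, 40, 41],
    "Automation": [10, 12, 17, 32, 37, 38, 41],
    "Customization": [8, 10, 12, 34, 35, 36, 37, 38, 39, 40, 41],
    "Democratization": [8, 12, 34, 38, 39, 40, 41],
    "Metaphors": [8, 10, 12, 17, 32, 33, 34, 36, 37, 39, 40],
    "Movement Freedom": [8, 10, 32, 34, 36, 37, 39, 40],
    "Optimization and Diversity Balance": [8, 10, 12, 17, 32, 35, 37, 39, 40, 41],
    "Documentation and Tutorials": [8, 12, 17, 37, 38, 41],
    "Immersive Authoring": [8, 10, 12, 17, 32, 34, 35, 36, 37, 39, 40],
    "Immersive Feedback": [33, 35, 36, 37],
    "Real-Time Feedback": [8, 12, 17, 32, 33, 34, 35, 36, 37, 39, 40],
    "Reutilization": [8, 10, 12, 17, 32, 34, 36, 38, 39, 41],
    "Sharing and Collaboration": [12, 34, 35, 40, 41],
    "Visual Programming": [8, 17, 36, 39, 41],
}

# Every study cited at least once across the guidelines.
all_studies = set().union(*GUIDELINE_ARTICLES.values())

# Number of distinct supporting studies per guideline.
coverage = {name: len(set(refs)) for name, refs in GUIDELINE_ARTICLES.items()}

# Most-supported guidelines first; ties broken alphabetically.
ranked = sorted(coverage.items(), key=lambda item: (-item[1], item[0]))

for name, count in ranked:
    print(f"{name}: {count} of {len(all_studies)} studies")
```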

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Chamusca, I.L.; Ferreira, C.V.; Murari, T.B.; Apolinario, A.L., Jr.; Winkler, I. Towards Sustainable Virtual Reality: Gathering Design Guidelines for Intuitive Authoring Tools. Sustainability 2023, 15, 2924. https://doi.org/10.3390/su15042924
