Tracking Visual Programming Language-Based Learning Progress for Computational Thinking Education

Abstract

1. Introduction

- (1) After participating in the Scratch programming course, is there any difference in how frequently students use computational thinking skills?

- (2) Does participation in the Scratch programming course affect learning motivation, learning anxiety, and learning confidence?

2. Research Method

2.1. Participants

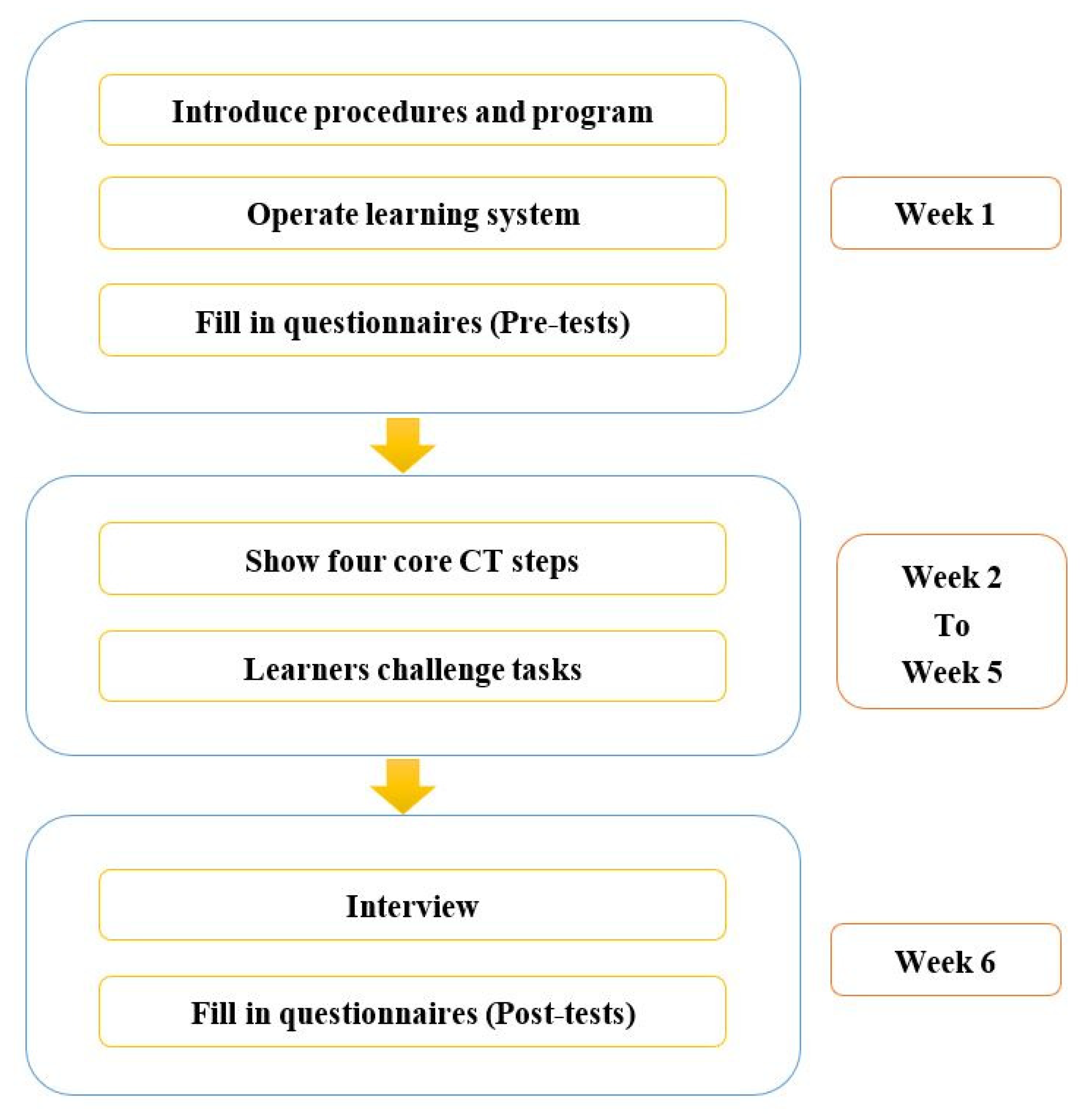

2.2. Experimental Design

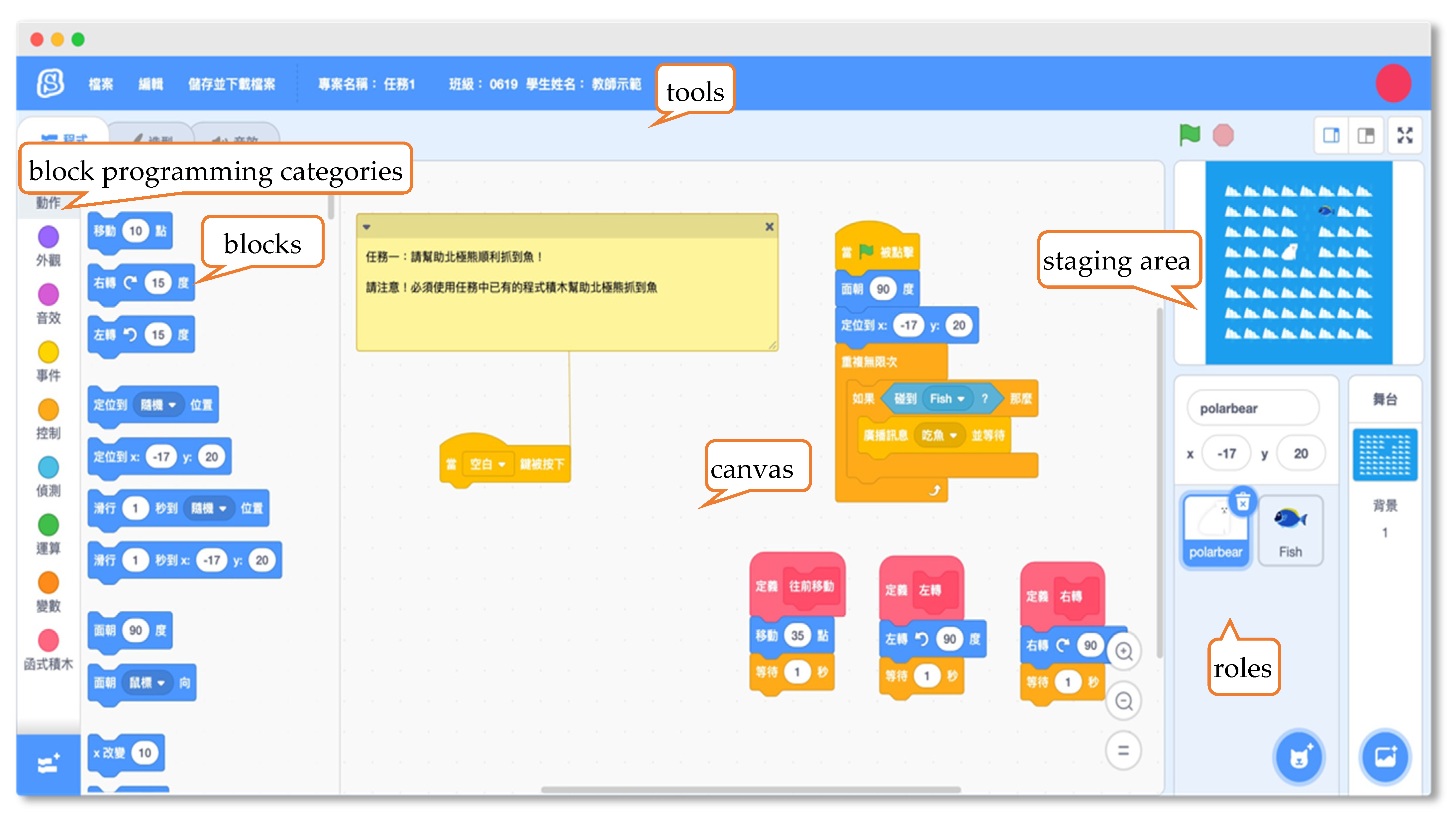

2.3. Learning Platform

2.4. Assessment Tools

1. Frequency of CT Skill Use (FCT)
2. Learning anxiety
3. Learning motivation
4. Learning confidence
5. Interviews

3. Research Results

3.1. FCTQ

3.2. Learning Anxiety

3.3. Learning Motivation

3.4. Learning Confidence

3.5. Findings from Interviews

4. Discussion

5. Conclusions and Recommendations for Future Work

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Core CT Step | Step Statement | System Interface |

|---|---|---|

| Decomposition |

|  |

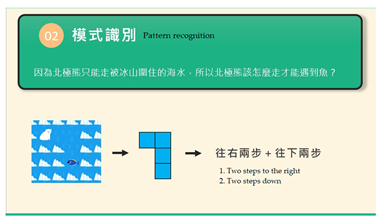

| Pattern recognition |

|  |

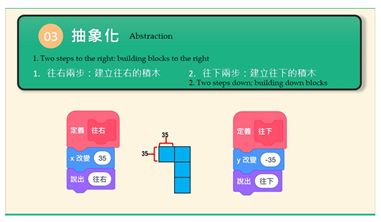

| Abstraction |

|  |

| Algorithms | Use program blocks to control the polar bear, have the polar bear move in the order of the blocks |  |
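The Algorithms step can be made concrete with a small simulation. The sketch below is hypothetical: the maze layout, the block names (`move`, `turn_left`, `turn_right`) and the rule that walking into a wall ends the attempt are illustrative assumptions, not the learning platform's actual implementation. It only shows the core idea that the polar bear executes the blocks strictly in the order they are stacked.

```python
from dataclasses import dataclass

# Hypothetical maze: '#' = wall, '.' = open cell, 'B' = polar bear start, 'F' = fish.
MAZE = [
    "#####",
    "#B..#",
    "###.#",
    "#F..#",
    "#####",
]

# Headings in clockwise order: north, east, south, west as (row delta, col delta).
HEADINGS = [(-1, 0), (0, 1), (1, 0), (0, -1)]


@dataclass
class Bear:
    row: int
    col: int
    heading: int = 1  # index into HEADINGS; the bear starts facing east


def find(maze: list[str], symbol: str) -> tuple[int, int]:
    """Return the (row, col) of the first cell holding `symbol`."""
    for r, line in enumerate(maze):
        c = line.find(symbol)
        if c != -1:
            return r, c
    raise ValueError(f"symbol {symbol!r} not in maze")


def run_blocks(maze: list[str], blocks: list[str]) -> bool:
    """Execute hypothetical blocks in order; True if the bear ends on the fish."""
    bear = Bear(*find(maze, "B"))
    for block in blocks:
        if block == "move":
            dr, dc = HEADINGS[bear.heading]
            nr, nc = bear.row + dr, bear.col + dc
            if maze[nr][nc] == "#":  # assumed rule: a wall ends the attempt
                return False
            bear.row, bear.col = nr, nc
        elif block == "turn_right":
            bear.heading = (bear.heading + 1) % 4
        elif block == "turn_left":
            bear.heading = (bear.heading - 1) % 4
        else:
            raise ValueError(f"unknown block {block!r}")
    return maze[bear.row][bear.col] == "F"


# One correct program: three straight runs joined by two right turns.
SOLUTION = ["move", "move", "turn_right", "move", "move", "turn_right", "move", "move"]
print(run_blocks(MAZE, SOLUTION))  # prints True
```

A repeat block, as used from Basic task 2 onward, corresponds to list repetition in this model: `["move"] * 2` expands to two stacked `move` blocks.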

| Level | Task | Statement | System Interface |

|---|---|---|---|

| Basic task 1 | Maze problem: polar bear eating fish | Complete tasks using blocks with defined topics |  |

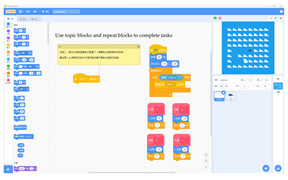

| Basic task 2 | Maze problem: polar bear eating fish | Use topic blocks and repeat blocks to complete tasks |  |

| Basic task 3 | Maze problem: polar bear eating fish | Define blocks and repeat blocks to complete tasks |  |

| Basic task 4 | Draw a rectangle | Repeat blocks to draw rectangles |  |

| Basic task 5 | Draw three squares | Define blocks and repeat blocks to draw three squares |  |

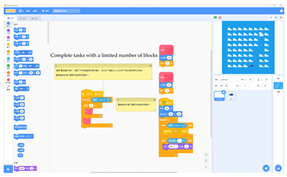

| Advanced task 1 | Maze problem: polar bear eating fish | Complete tasks with a limited number of blocks |  |

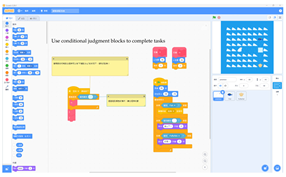

| Advanced task 2 | Maze problem: polar bear eating fish | Use conditional judgment blocks to complete tasks |  |

| Advanced task 3 | Maze problem: polar bear eating fish | Use specified blocks and conditional judgment blocks to complete tasks |  |

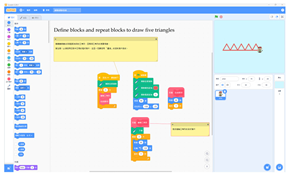

| Advanced task 4 | Draw five triangles | Define blocks and repeat blocks to draw five triangles |  |

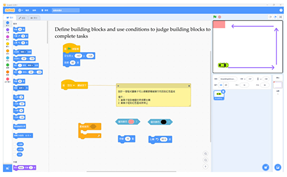

| Advanced task 5 | Automated obstacle-avoiding vehicle | Define building blocks and use conditions to judge building blocks to complete tasks |  |
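The drawing tasks combine two block types: a repeat block (a loop) and a define block (a custom block, i.e. a reusable procedure). The following Python sketch mirrors that structure under stated assumptions: `Pen` is a hypothetical stand-in for the Scratch pen that records the points it visits instead of drawing, and `rectangle`, `polygon` and `three_squares` are illustrative names, not blocks from the study's platform.

```python
import math
from dataclasses import dataclass, field


@dataclass
class Pen:
    """Hypothetical stand-in for the Scratch pen: records visited points instead of drawing."""
    x: float = 0.0
    y: float = 0.0
    heading: float = 0.0  # degrees; 0 points right, positive turns counter-clockwise
    trail: list = field(default_factory=list)

    def __post_init__(self) -> None:
        self.trail.append((self.x, self.y))

    def move(self, steps: float) -> None:
        self.x += steps * math.cos(math.radians(self.heading))
        self.y += steps * math.sin(math.radians(self.heading))
        # Round away floating-point noise so closed shapes compare cleanly.
        self.trail.append((round(self.x, 6), round(self.y, 6)))

    def turn(self, degrees: float) -> None:
        self.heading = (self.heading + degrees) % 360


def rectangle(pen: Pen, width: float, height: float) -> None:
    """Basic task 4 with one repeat block: repeat 2 { move width; turn 90; move height; turn 90 }."""
    for _ in range(2):
        pen.move(width)
        pen.turn(90)
        pen.move(height)
        pen.turn(90)


def polygon(pen: Pen, sides: int, length: float) -> None:
    """A custom ('define') block: repeat `sides` times { move length; turn 360 / sides }."""
    for _ in range(sides):
        pen.move(length)
        pen.turn(360 / sides)


def three_squares(pen: Pen, length: float) -> None:
    """Basic task 5: reuse the custom block inside a repeat, shifting right after each square."""
    for _ in range(3):
        polygon(pen, 4, length)
        pen.move(length)  # the pen stays down, so this retraces the shared bottom edge


pen = Pen()
rectangle(pen, 20, 10)
print(pen.trail[2])  # prints (20.0, 10.0)
```

The design choice the tasks exercise is reuse: once `polygon` is defined, Advanced task 4 reduces to calling `polygon(pen, 3, length)` inside a repeat of five, with some turn or shift between triangles (the arrangement is not specified in the table).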

| Items | N | Mean | SD | df | t | p |
|---|---|---|---|---|---|---|
| CT_F1 | 28 | 4.50 | 0.51 | 27 | 46.77 * | 0.00 |
| CT_F2 | 28 | 4.36 | 0.49 | 27 | 47.25 * | 0.00 |
| CT_F3 | 28 | 4.43 | 0.50 | 27 | 46.50 * | 0.00 |
| CT_F4 | 28 | 4.46 | 0.51 | 27 | 46.51 * | 0.00 |
| CT_F5 | 28 | 4.39 | 0.50 | 27 | 46.74 * | 0.00 |
| CT_F6 | 28 | 4.46 | 0.51 | 27 | 46.51 * | 0.00 |
| CT_F7 | 28 | 4.46 | 0.51 | 27 | 46.51 * | 0.00 |
| CT_F8 | 28 | 4.39 | 0.50 | 27 | 46.74 * | 0.00 |
| CT_F9 | 28 | 4.43 | 0.50 | 27 | 46.50 * | 0.00 |
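The FCT table reports a single mean per item with df = N − 1 = 27, which is consistent with a one-sample t-test, t = (M − μ0) / (SD / √N). The test value μ0 is not stated in this text; the sketch below assumes μ0 = 0 as its default, and `one_sample_t` is a hypothetical helper. With the rounded CT_F1 values (M = 4.50, SD = 0.51, N = 28) it gives t ≈ 46.7, close to the reported 46.77, which suggests, but does not confirm, that 0 was the test value.

```python
import math


def one_sample_t(mean: float, sd: float, n: int, mu0: float = 0.0) -> tuple[float, int]:
    """Return (t, df) for a one-sample t-test computed from summary statistics."""
    standard_error = sd / math.sqrt(n)
    return (mean - mu0) / standard_error, n - 1


# Rounded summary statistics for item CT_F1 from the FCT table.
t, df = one_sample_t(4.50, 0.51, 28)
print(f"t({df}) = {t:.2f}")
```

Because the table's means and SDs are rounded to two decimals, recomputed t values can differ from the reported ones in the first decimal place.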

| Items | Mean (Pre) | Mean (Post) | SD (Pre) | SD (Post) | t | p |
|---|---|---|---|---|---|---|
| A1 | 1.54 | 2.96 | 0.51 | 0.84 | −10.95 * | 0.00 |
| A2 | 1.46 | 2.82 | 0.51 | 0.61 | −12.85 * | 0.00 |
| A3 | 1.57 | 2.82 | 0.50 | 0.67 | −11.30 * | 0.00 |
| A4 | 1.54 | 2.82 | 0.51 | 0.55 | −14.79 * | 0.00 |
| A5 | 1.54 | 2.93 | 0.51 | 0.72 | −11.72 * | 0.00 |
| A6 | 1.57 | 2.89 | 0.50 | 0.69 | −12.76 * | 0.00 |
| A7 | 1.54 | 2.89 | 0.51 | 0.61 | −10.23 * | 0.00 |
| A8 | 1.46 | 2.64 | 0.51 | 0.62 | −11.38 * | 0.00 |
| A9 | 1.46 | 2.53 | 0.51 | 0.64 | −7.91 * | 0.00 |

| Items | Mean (Pre) | Mean (Post) | SD (Pre) | SD (Post) | t | p |
|---|---|---|---|---|---|---|
| A1 | 3.21 | 4.39 | 0.83 | 0.69 | −11.38 * | 0.00 |
| A2 | 3.29 | 4.36 | 0.85 | 0.62 | −9.38 * | 0.00 |
| A3 | 3.25 | 4.50 | 0.89 | 0.51 | −12.76 * | 0.00 |
| A4 | 3.29 | 4.50 | 0.85 | 0.51 | −12.89 * | 0.00 |
| A5 | 3.32 | 4.46 | 0.82 | 0.58 | −13.49 * | 0.00 |
| A6 | 3.36 | 4.57 | 0.78 | 0.50 | −15.38 * | 0.00 |
| A7 | 3.21 | 4.43 | 0.83 | 0.50 | −12.89 * | 0.00 |
| A8 | 3.32 | 4.50 | 0.82 | 0.51 | −13.11 * | 0.00 |

| Items | Mean (Pre) | Mean (Post) | SD (Pre) | SD (Post) | t | p |
|---|---|---|---|---|---|---|
| A1 | 3.18 | 4.32 | 0.82 | 0.67 | −10.23 * | 0.00 |
| A2 | 3.29 | 4.36 | 0.81 | 0.62 | −10.51 * | 0.00 |
| A3 | 3.21 | 4.43 | 0.83 | 0.50 | −11.31 * | 0.00 |
| A4 | 3.25 | 4.46 | 0.84 | 0.51 | −12.89 * | 0.00 |
| A5 | 3.29 | 4.46 | 0.81 | 0.58 | −15.99 * | 0.00 |
| A6 | 3.36 | 4.57 | 0.78 | 0.50 | −15.38 * | 0.00 |
| A7 | 3.21 | 4.39 | 0.79 | 0.57 | −13.11 * | 0.00 |
| A8 | 3.32 | 4.50 | 0.82 | 0.51 | −13.11 * | 0.00 |
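The three pre/post tables compare the same students before and after the course, which calls for a paired-samples t-test on each student's difference score. The negative t values are consistent with differences taken as pre minus post when post-test scores are higher. The sketch below assumes that convention; `paired_t` is a hypothetical helper, and the Likert responses are invented for illustration and are not the study's data. Note that per-student data are required: the group SDs in the tables are not enough to recompute t, because the test depends on the SD of the differences.

```python
import math
from statistics import mean, stdev


def paired_t(pre: list[float], post: list[float]) -> tuple[float, int]:
    """Paired-samples t statistic on the pre-minus-post differences; returns (t, df)."""
    if len(pre) != len(post) or len(pre) < 2:
        raise ValueError("need two equal-length samples with at least two pairs")
    diffs = [a - b for a, b in zip(pre, post)]
    # stdev() uses the n - 1 denominator. Identical differences give a standard
    # error of 0, so t is undefined and the division raises ZeroDivisionError.
    se = stdev(diffs) / math.sqrt(len(diffs))
    return mean(diffs) / se, len(diffs) - 1


# Hypothetical 5-point Likert responses for one item (illustrative only).
pre = [3, 2, 4, 3, 3, 2]
post = [4, 4, 5, 4, 5, 3]
t, df = paired_t(pre, post)
print(f"t({df}) = {t:.2f}")  # prints t(5) = -6.32
```

For p-values, SciPy's `scipy.stats.ttest_rel(pre, post)` computes the same statistic (on `pre - post`) together with a two-sided p-value.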


© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Wu, T.-T.; Lin, C.-J.; Wang, S.-C.; Huang, Y.-M. Tracking Visual Programming Language-Based Learning Progress for Computational Thinking Education. Sustainability 2023, 15, 1983. https://doi.org/10.3390/su15031983
