Educational Design Principles of Using AI Chatbot That Supports Self-Regulated Learning in Education: Goal Setting, Feedback, and Personalization

Abstract

1. Introduction

2. Theoretical Framework

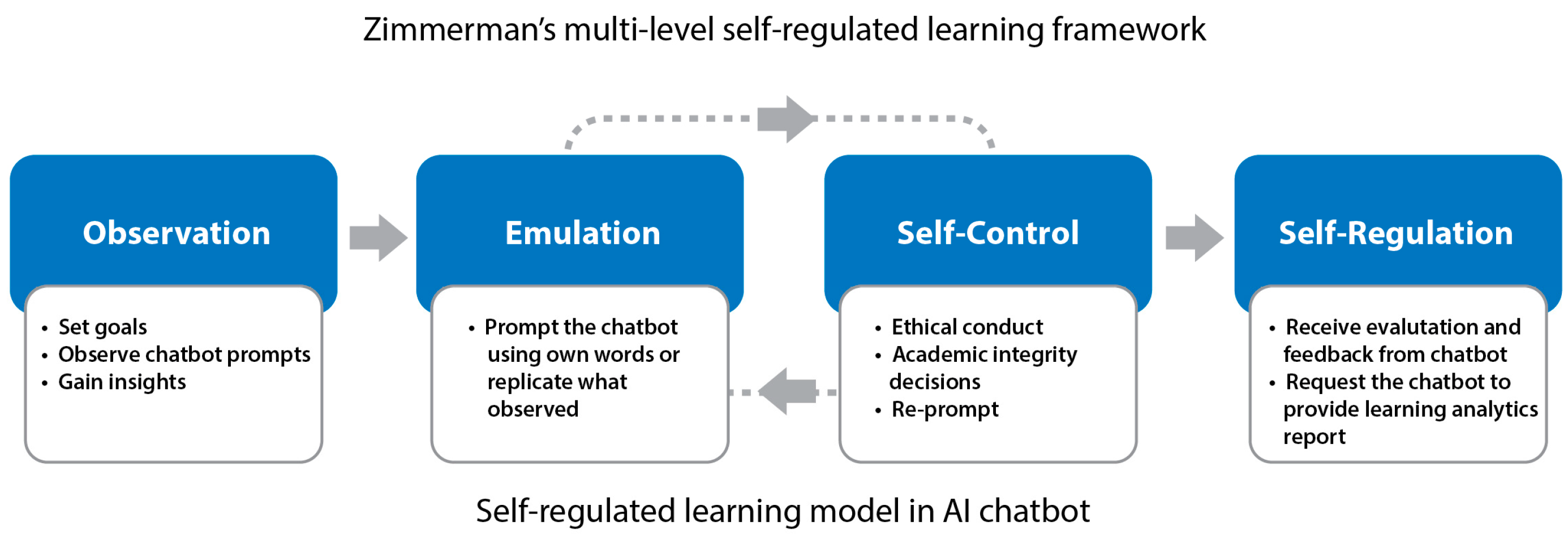

2.1. Review of Zimmerman’s Multi-Level Self-Regulated Learning Framework

2.2. Definition and Background of JOL

3. Educational Principles That Guide Integration of Chatbots

3.1. Define Chatbots and Describe Their Potential Use in Educational Settings

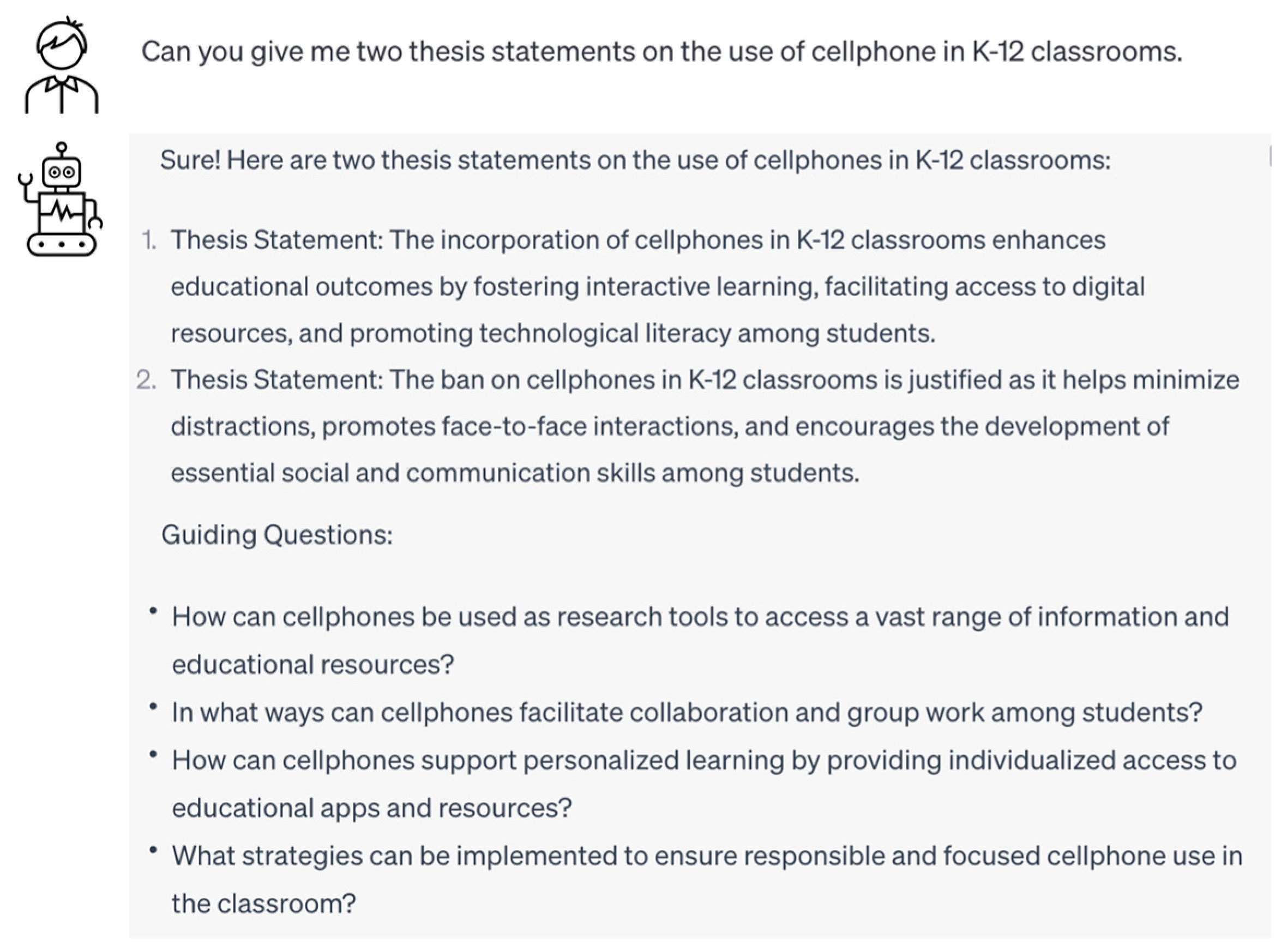

3.2. Goal Setting and Prompting

3.3. Feedback and Self-Assessment Mechanism

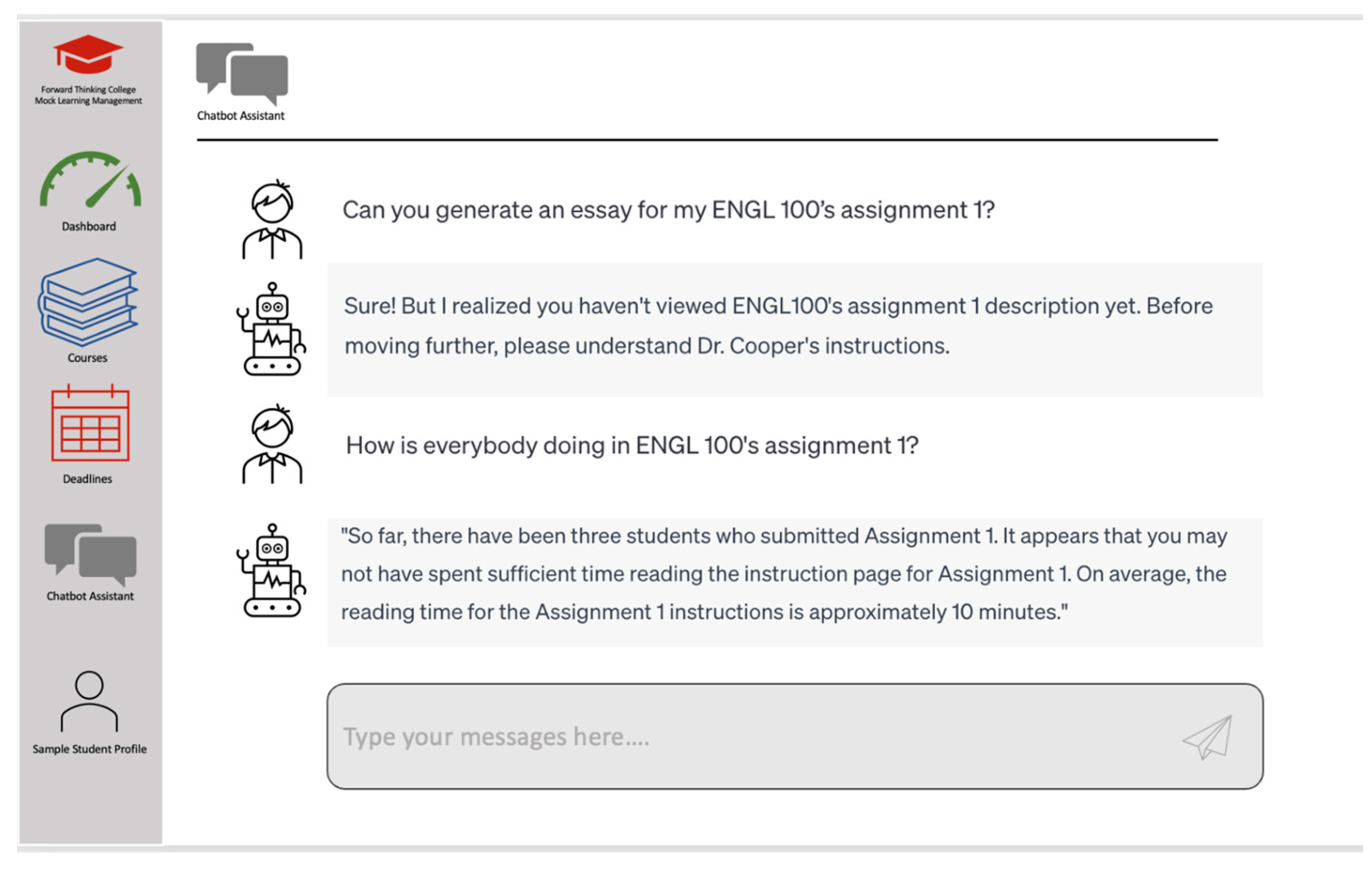

3.4. Facilitating Self-Regulation: Personalization and Adaptation

4. Limitations

5. Concluding Remarks

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

| Prompt Types | Process-Based | Outcome-Based |
|---|---|---|
| Cognitive | Understand, Remember | Create, Apply |
| Metacognitive | Evaluate | |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Chang, D.H.; Lin, M.P.-C.; Hajian, S.; Wang, Q.Q. Educational Design Principles of Using AI Chatbot That Supports Self-Regulated Learning in Education: Goal Setting, Feedback, and Personalization. Sustainability 2023, 15, 12921. https://doi.org/10.3390/su151712921
