6+: A Novel Approach for Building Extraction from a Medium Resolution Multi-Spectral Satellite

Abstract

1. Introduction

- (1) A novel approach named ‘6+’ is proposed for improving the building extraction performance of various deep learning models on medium spatial resolution (i.e., 10 m) satellite images.

- (2) A novel medium spatial resolution building extraction dataset is prepared using Sentinel-2 and OpenStreetMap (OSM) data.

- (3) Extensive experiments are conducted to validate the performance of the proposed approach.

2. Related Literature

3. Materials and Methodology

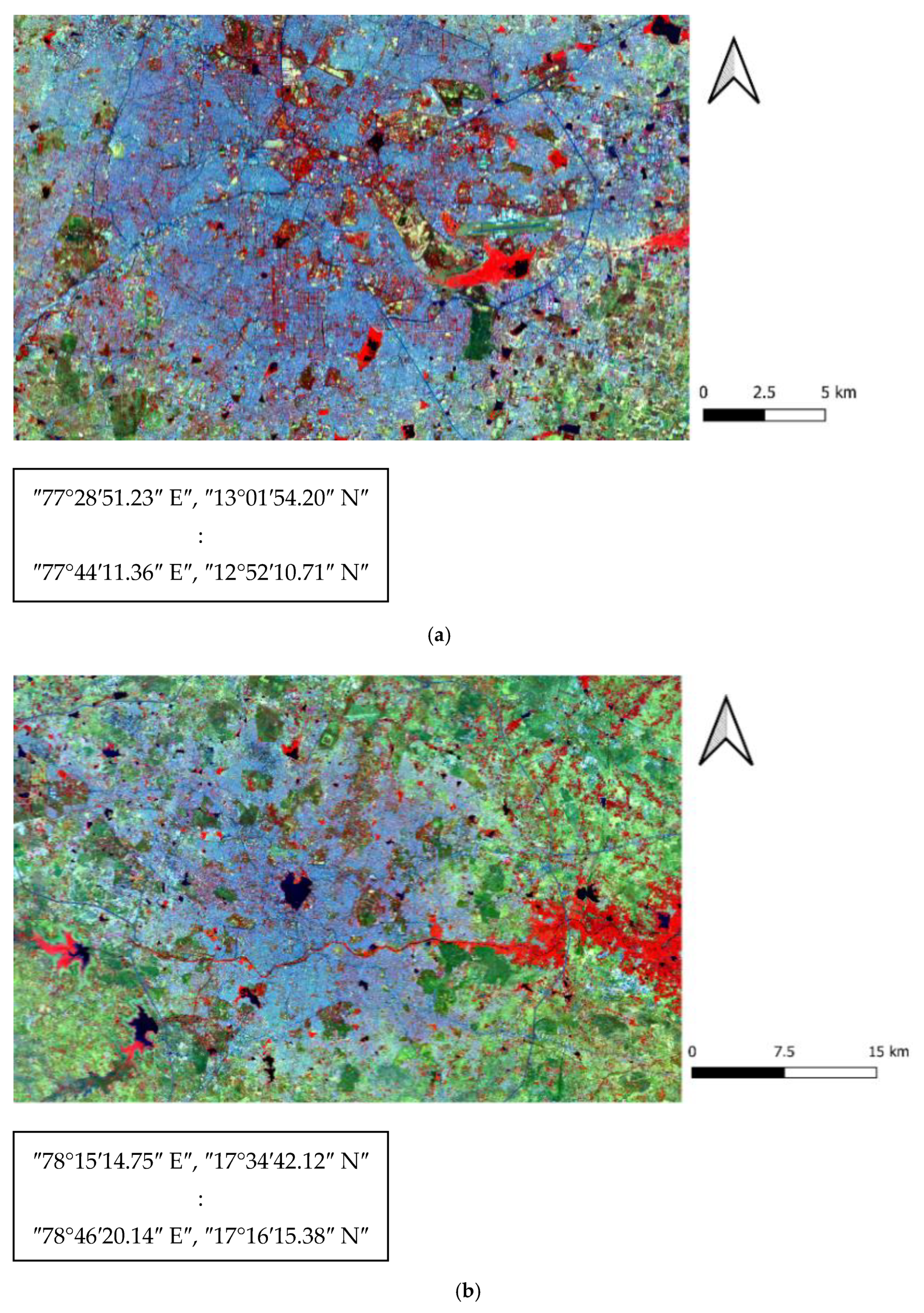

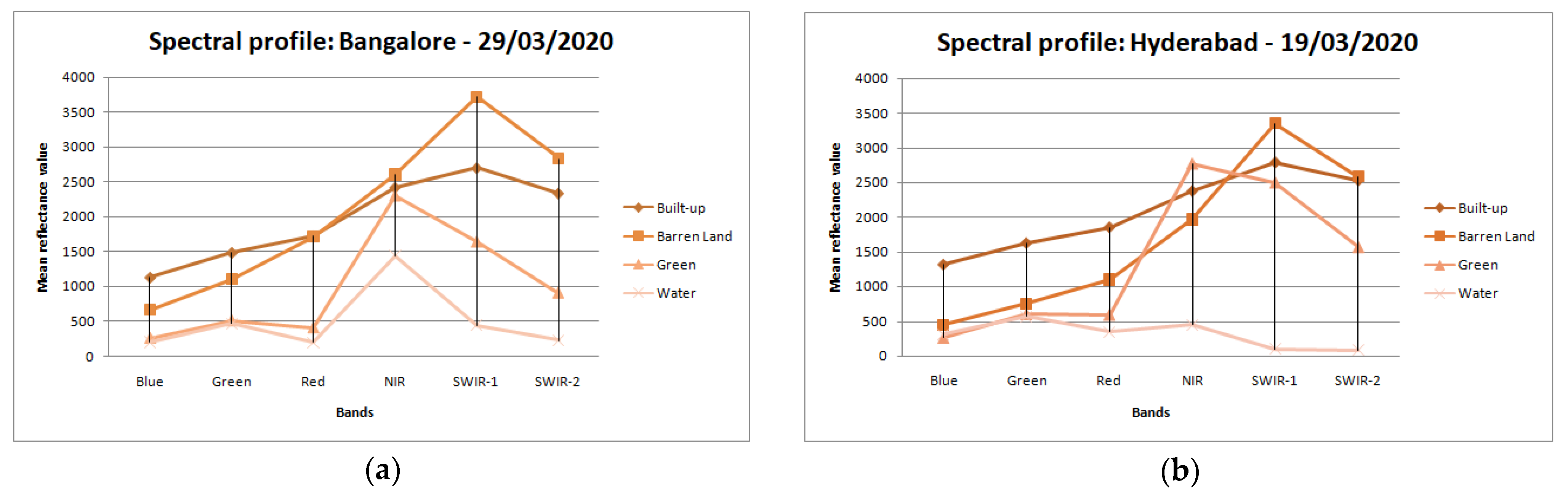

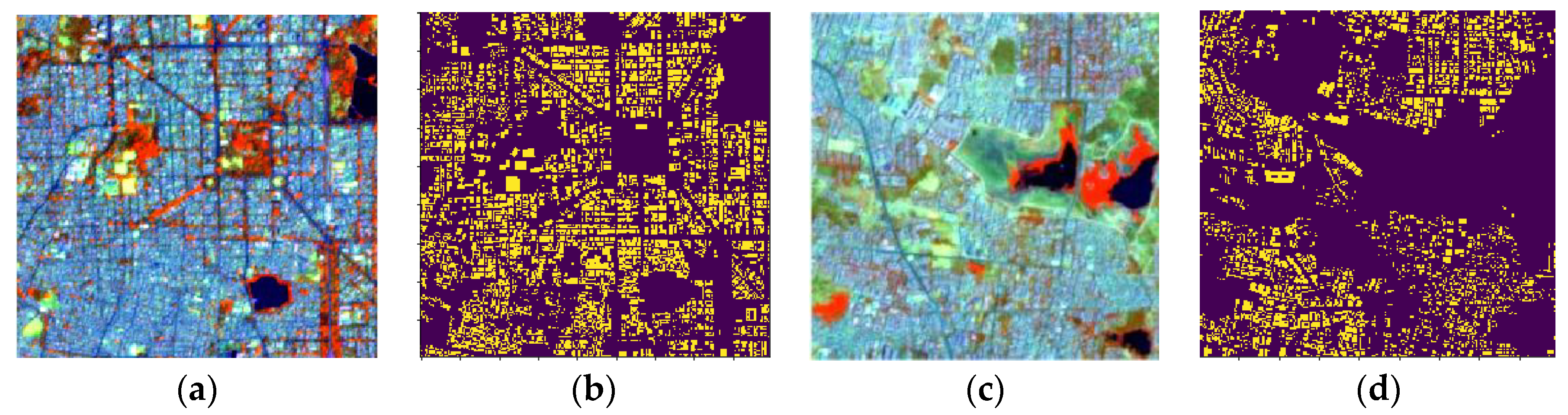

3.1. Study Areas and Data Resources

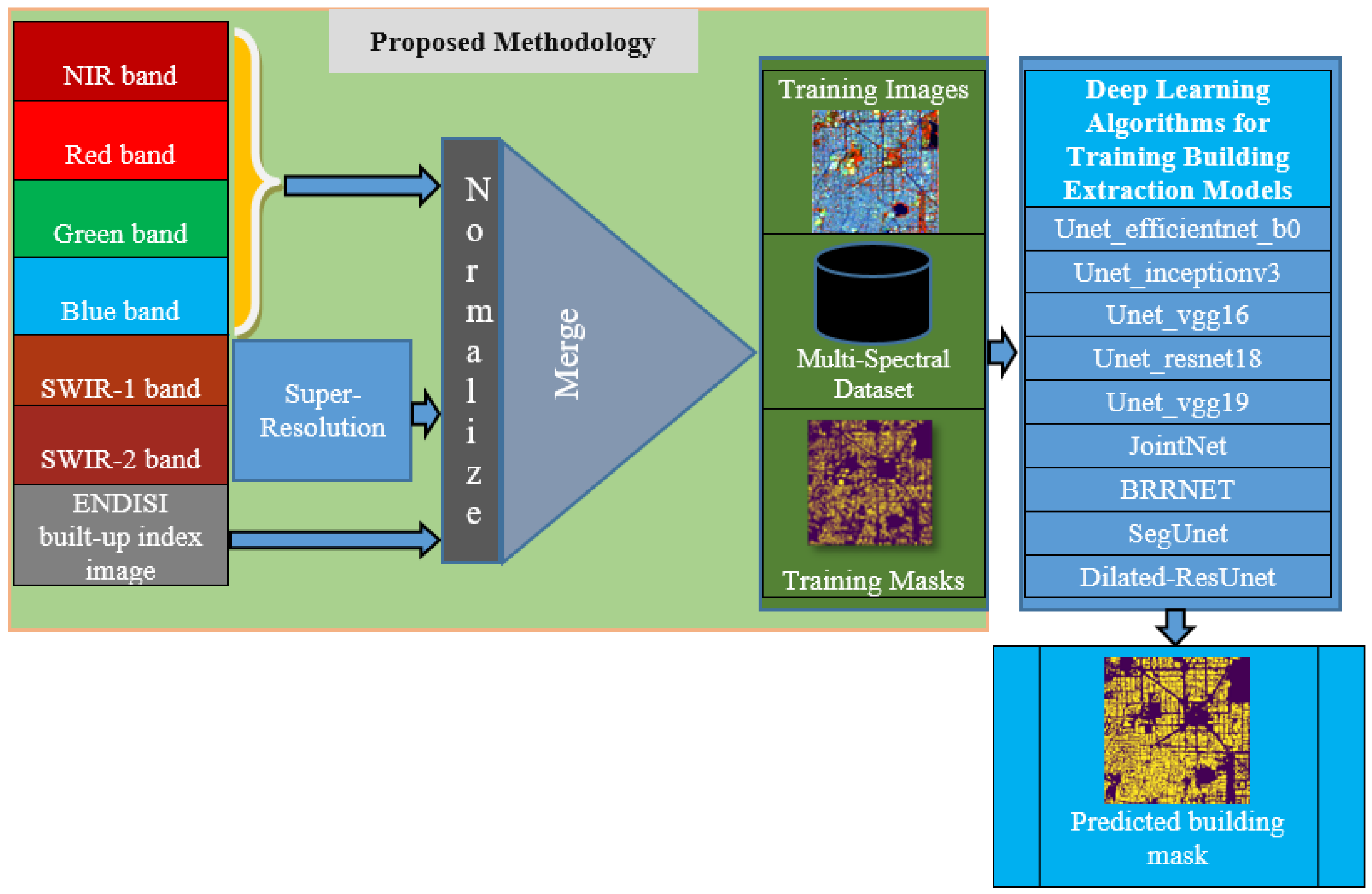

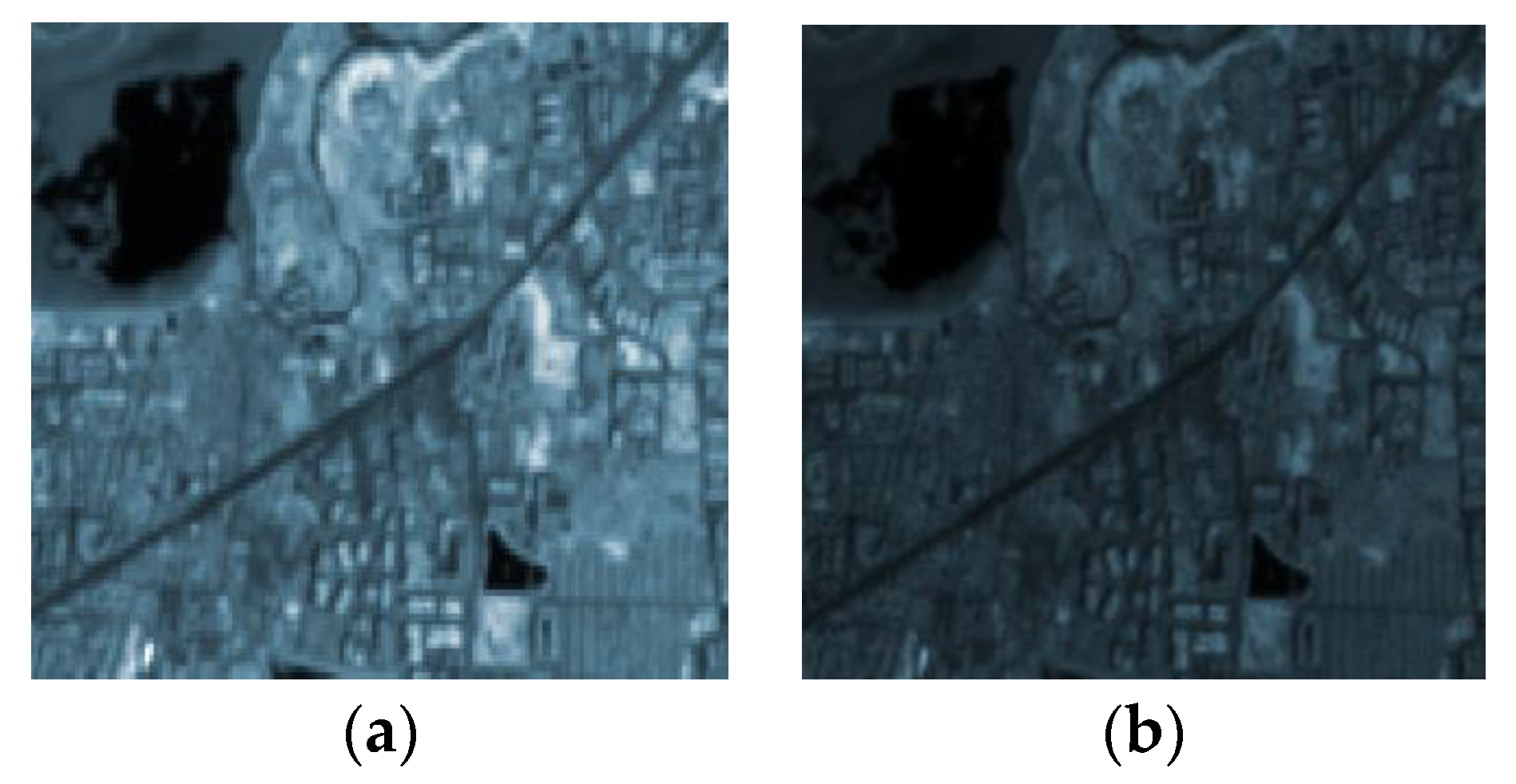
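The dataset pairs Sentinel-2 imagery with OpenStreetMap (OSM) building data, but the text here does not spell out how the OSM footprints become pixel labels. One common way, assumed here and not confirmed as the authors' procedure, is to mark every 10 m pixel whose centre falls inside a footprint polygon. The sketch below does this with a plain even-odd (ray-casting) test in NumPy. It assumes the footprints have already been reprojected into the same metric coordinate system as the image (for example the tile's UTM zone); the function name, its parameters, and the toy footprint are hypothetical.

```python
import numpy as np

def rasterize_footprints(polygons, x_min, y_max, width, height, pixel_size=10.0):
    """Burn polygons into a uint8 mask (1 = building) using the pixel-centre rule.

    polygons: list of vertex lists [(x, y), ...] in the image's metric CRS (assumed).
    x_min, y_max: map coordinates of the image's upper-left corner.
    """
    xs = x_min + (np.arange(width) + 0.5) * pixel_size   # pixel-centre eastings
    ys = y_max - (np.arange(height) + 0.5) * pixel_size  # pixel-centre northings (row 0 at top)
    px, py = np.meshgrid(xs, ys)                          # both shaped (height, width)
    mask = np.zeros((height, width), dtype=np.uint8)
    for poly in polygons:
        verts = np.asarray(poly, dtype=float)
        inside = np.zeros(px.shape, dtype=bool)
        for i in range(len(verts)):
            x1, y1 = verts[i]
            x2, y2 = verts[(i + 1) % len(verts)]          # wrap around to close the ring
            # Even-odd rule: toggle every centre whose rightward ray crosses this edge.
            crosses = (y1 > py) != (y2 > py)
            with np.errstate(divide="ignore", invalid="ignore"):
                # Horizontal edges give inf/nan here, but crosses is False for them.
                x_cross = x1 + (py - y1) * (x2 - x1) / (y2 - y1)
                inside ^= crosses & (px < x_cross)
        mask[inside] = 1
    return mask

# Hypothetical 30 m x 30 m footprint on a 5 x 5 tile whose upper-left corner is (0, 50)
square = [(0.0, 0.0), (30.0, 0.0), (30.0, 30.0), (0.0, 30.0)]
mask = rasterize_footprints([square], x_min=0.0, y_max=50.0, width=5, height=5)
print(mask)
```

With the pixel-centre rule, a footprint smaller than one 10 m pixel can miss every centre and vanish from the mask, which illustrates why building extraction at this resolution is difficult. In a real pipeline a library routine such as `rasterio.features.rasterize` would normally replace the hand-written loop.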

3.2. Proposed Methodology

3.3. Evaluation Metrics

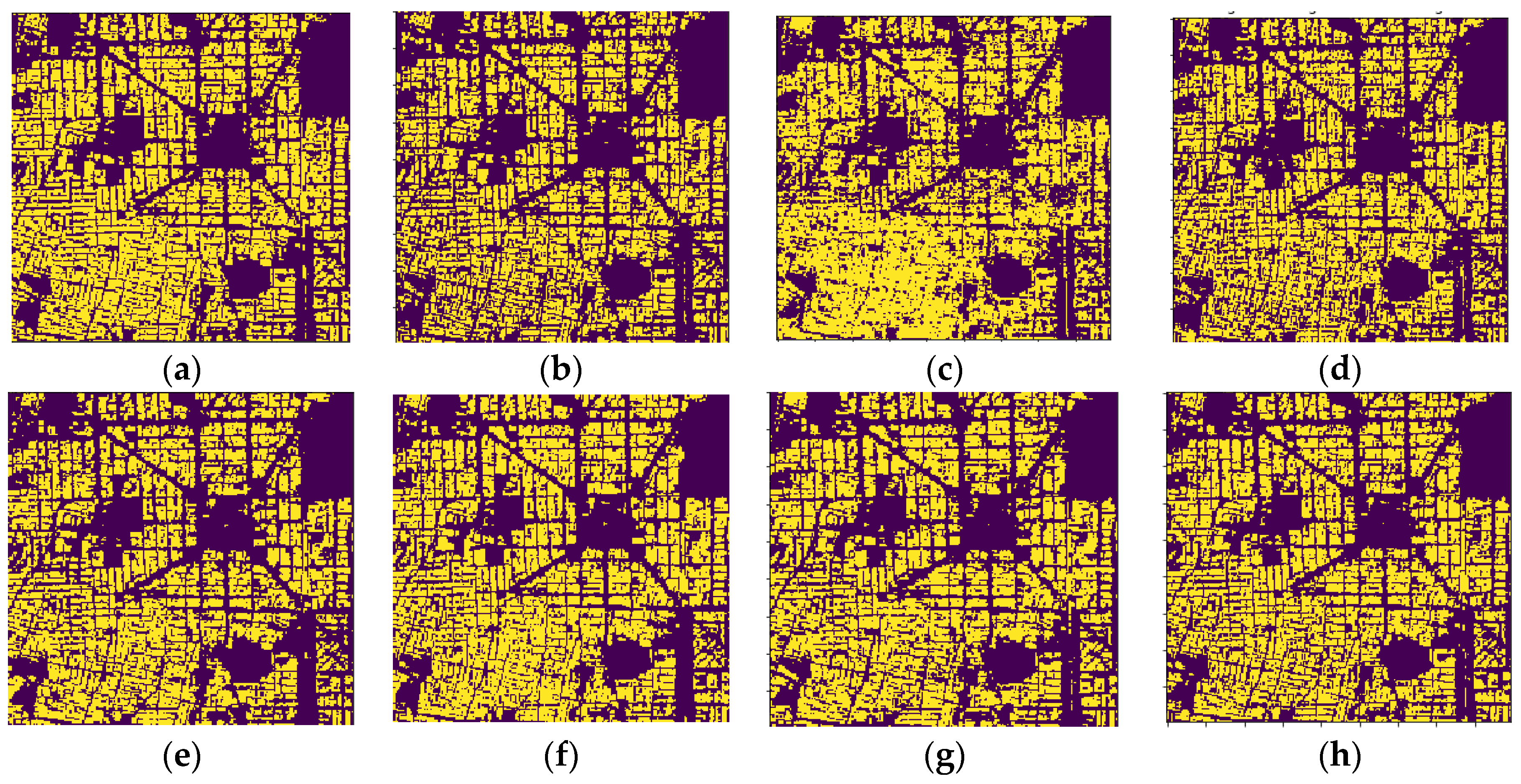

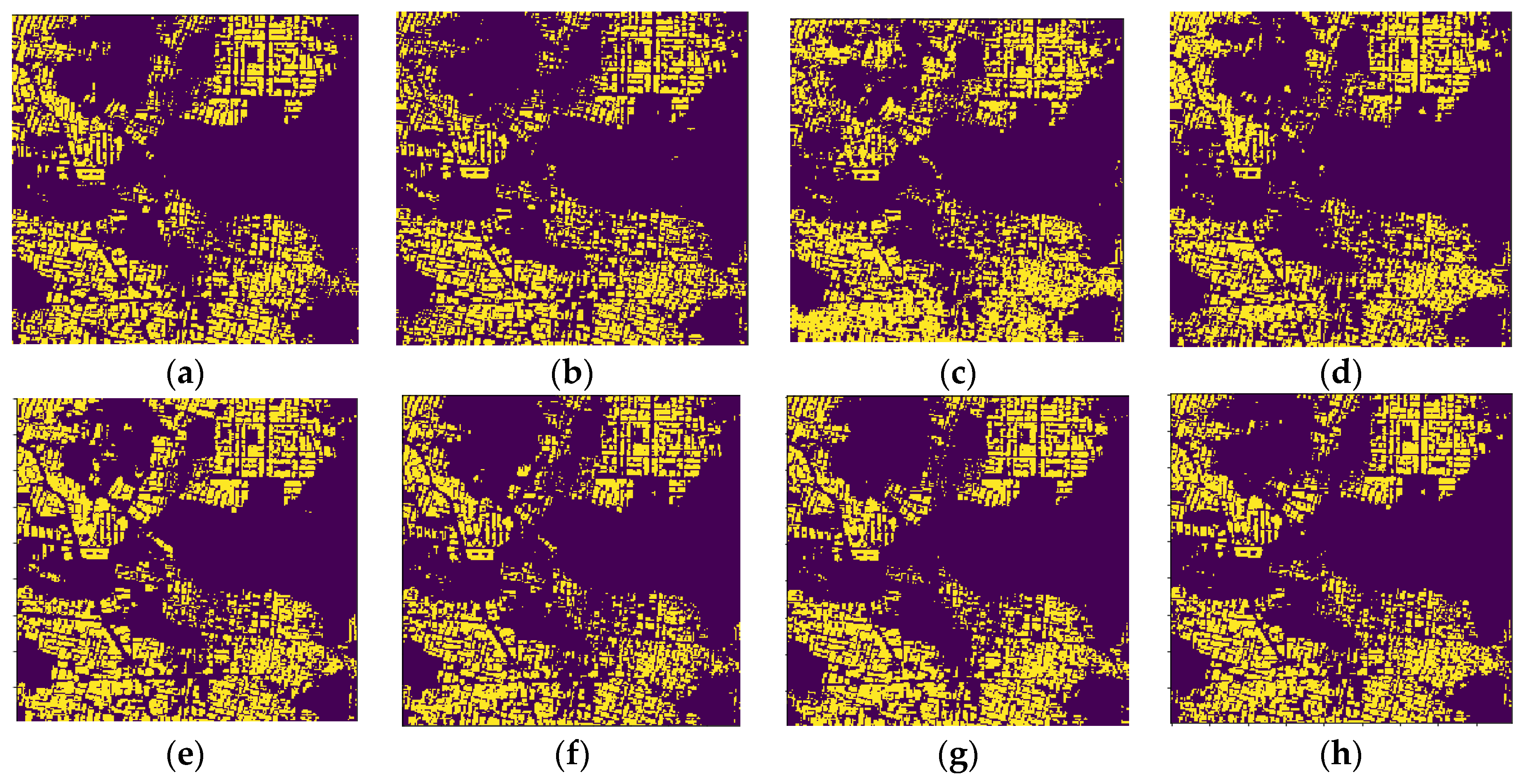
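The results are reported as F1-score and Mean IoU, but the text of this section does not spell the formulas out. The sketch below uses the standard definitions for a binary building/background mask. Treating "Mean IoU" as the average of the building-class and background-class IoU is an assumption; it is consistent with the reported Mean IoU values exceeding the F1-scores, since the building-class IoU alone can never exceed its F1-score (IoU = F1 / (2 - F1)). The helper names are hypothetical.

```python
import numpy as np

def confusion_counts(y_true, y_pred):
    """Pixel counts for binary masks where 1 = building and 0 = background."""
    t = np.asarray(y_true).astype(bool)
    p = np.asarray(y_pred).astype(bool)
    tp = int(np.sum(t & p))    # building predicted as building
    fp = int(np.sum(~t & p))   # background predicted as building
    fn = int(np.sum(t & ~p))   # building predicted as background
    tn = int(np.sum(~t & ~p))  # background predicted as background
    return tp, fp, fn, tn

def f1_score(y_true, y_pred):
    """F1 of the building class: 2TP / (2TP + FP + FN)."""
    tp, fp, fn, _ = confusion_counts(y_true, y_pred)
    denom = 2 * tp + fp + fn
    return 2 * tp / denom if denom else 1.0  # both masks empty: treat as perfect

def mean_iou(y_true, y_pred):
    """ASSUMED definition: mean of the building IoU and the background IoU."""
    tp, fp, fn, tn = confusion_counts(y_true, y_pred)
    iou_building = tp / (tp + fp + fn) if (tp + fp + fn) else 1.0
    # For the background class the roles swap: its correct pixels are tn,
    # and its errors are the same fp + fn pixels.
    iou_background = tn / (tn + fp + fn) if (tn + fp + fn) else 1.0
    return (iou_building + iou_background) / 2

y_true = np.array([[1, 1, 0, 0],
                   [1, 0, 0, 0]])
y_pred = np.array([[1, 0, 0, 0],
                   [1, 1, 0, 0]])
print(f1_score(y_true, y_pred), mean_iou(y_true, y_pred))
```

In this toy example TP = 2, FP = 1, FN = 1 and TN = 4, so F1 = 4/6 and the building IoU is 2/4 = 0.5, while the background IoU of 4/6 pulls the class-averaged value above the building IoU.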

4. Experimental Results and Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest


| Study Areas | Sensor | Bands Utilized | Central Wavelength (nm) | Spatial Resolution (m) |
|---|---|---|---|---|
| Bengaluru, Hyderabad | Sentinel-2A | Red, Green, Blue, NIR | 664.6 (Red), 559.8 (Green), 492.4 (Blue), 832.8 (NIR) | 10 |
| Bengaluru, Hyderabad | Sentinel-2A | SWIR-1, SWIR-2 | 1613.7 (SWIR-1), 2202.4 (SWIR-2) | 20 |
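The table lists four bands at 10 m and two SWIR bands at 20 m, so the six bands have to be brought onto a common grid before they can be stacked into one six-channel model input. The sketch below assumes the simplest option, nearest-neighbour upsampling of the 20 m bands by a factor of 2, and assumes the 20 m and 10 m grids cover exactly the same footprint; the resampling method actually used for the dataset is not stated in this section, and a bilinear or super-resolution step could be used instead. Function names are hypothetical.

```python
import numpy as np

def upsample_nearest(band, factor=2):
    """Nearest-neighbour upsampling: every input pixel becomes a factor x factor block."""
    return np.repeat(np.repeat(band, factor, axis=0), factor, axis=1)

def stack_six_bands(red, green, blue, nir, swir1_20m, swir2_20m):
    """Return an (H, W, 6) float32 array on the 10 m grid.

    Band order follows the table: Red, Green, Blue, NIR, SWIR-1, SWIR-2.
    The two SWIR bands are assumed to cover the same footprint as the
    10 m bands, with half as many pixels along each axis.
    """
    bands = [red, green, blue, nir,
             upsample_nearest(swir1_20m), upsample_nearest(swir2_20m)]
    for band in bands:
        if band.shape != red.shape:
            raise ValueError(f"band shape {band.shape} != 10 m shape {red.shape}")
    return np.stack(bands, axis=-1).astype(np.float32)

# Toy 4 x 4 tile: constant 10 m bands, plus 2 x 2 SWIR bands at 20 m
ten_m = [np.full((4, 4), v, dtype=np.uint16) for v in (1, 2, 3, 4)]
swir1 = np.arange(4, dtype=np.uint16).reshape(2, 2)
swir2 = swir1 + 10
cube = stack_six_bands(*ten_m, swir1, swir2)
print(cube.shape)
```

Nearest-neighbour upsampling keeps the original 20 m reflectance values unchanged (each value is copied into a 2 x 2 block), which makes the stacked cube easy to check, at the cost of blocky SWIR edges.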

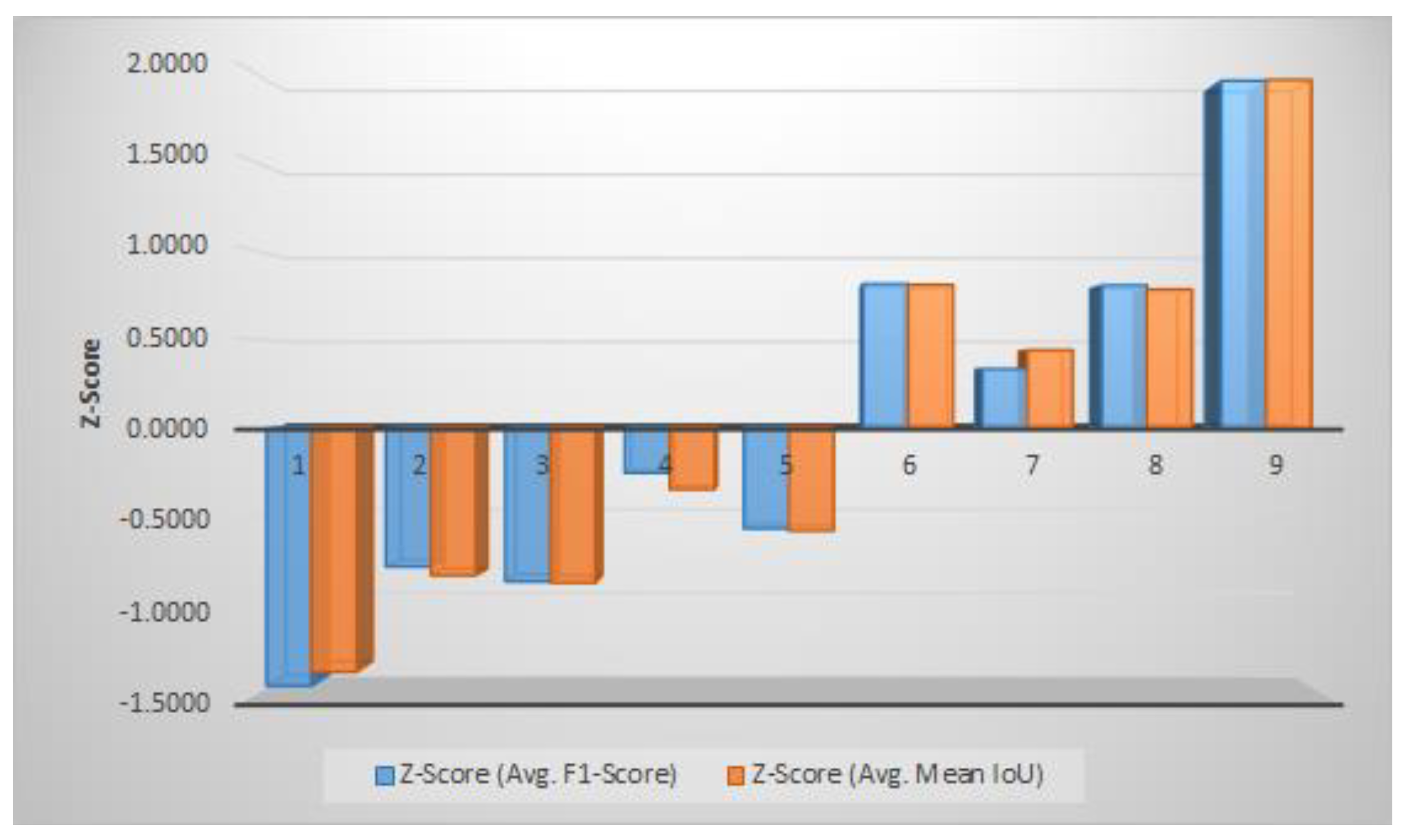

| S. No | Models | F1-Score (6 Band) | F1-Score (6+) | Mean IoU (6 Band) | Mean IoU (6+) |
|---|---|---|---|---|---|
| 1 | Unet_efficientnet_b0 | 0.5170 | 0.5220 | 0.622 | 0.626 |
| 2 | Unet_inceptionv3 | 0.5239 | 0.5379 | 0.625 | 0.632 |
| 3 | Unet_vgg16 | 0.5361 | 0.5396 | 0.633 | 0.634 |
| 4 | Unet_resnet18 | 0.5428 | 0.5489 | 0.637 | 0.639 |
| 5 | Unet_vgg19 | 0.5397 | 0.5514 | 0.633 | 0.640 |
| 6 | JointNet (Neural Network for Road and Building Extraction) | 0.5225 | 0.5522 | 0.624 | 0.643 |
| 7 | Building Residual Refine Network (BRRNet) | 0.5517 | 0.5543 | 0.642 | 0.645 |
| 8 | Dilated-ResUnet | 0.5572 | 0.5663 | 0.646 | 0.650 |
| 9 | SegUnet | 0.5728 | 0.5820 | 0.654 | 0.660 |
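The improvement brought by 6+ can be read directly off the results table as the difference between the 6+ and 6-band columns. The snippet below only repeats that subtraction on the values reported in the table (model names shortened for JointNet and BRRNet); it adds no new measurements.

```python
# F1-score and Mean IoU from the results table: (6 Band, 6+) for each model
RESULTS = {
    "Unet_efficientnet_b0": ((0.5170, 0.5220), (0.622, 0.626)),
    "Unet_inceptionv3": ((0.5239, 0.5379), (0.625, 0.632)),
    "Unet_vgg16": ((0.5361, 0.5396), (0.633, 0.634)),
    "Unet_resnet18": ((0.5428, 0.5489), (0.637, 0.639)),
    "Unet_vgg19": ((0.5397, 0.5514), (0.633, 0.640)),
    "JointNet": ((0.5225, 0.5522), (0.624, 0.643)),
    "BRRNet": ((0.5517, 0.5543), (0.642, 0.645)),
    "Dilated-ResUnet": ((0.5572, 0.5663), (0.646, 0.650)),
    "SegUnet": ((0.5728, 0.5820), (0.654, 0.660)),
}

def gains(results):
    """Absolute gain of 6+ over the 6-band input, rounded to the table's precision."""
    return {
        model: (round(f1_plus - f1_six, 4), round(iou_plus - iou_six, 3))
        for model, ((f1_six, f1_plus), (iou_six, iou_plus)) in results.items()
    }

GAINS = gains(RESULTS)
# Print models from largest to smallest F1 gain
for model, (d_f1, d_iou) in sorted(GAINS.items(), key=lambda kv: kv[1][0], reverse=True):
    print(f"{model:<22} dF1 = {d_f1:+.4f}   dMeanIoU = {d_iou:+.3f}")
```

On these figures every model improves under 6+ in both metrics: the F1 gain ranges from +0.0026 (BRRNet) to +0.0297 (JointNet), and the Mean IoU gain from +0.001 (Unet_vgg16) to +0.019 (JointNet).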

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Dixit, M.; Chaurasia, K.; Mishra, V.K.; Singh, D.; Lee, H.-N. 6+: A Novel Approach for Building Extraction from a Medium Resolution Multi-Spectral Satellite. Sustainability 2022, 14, 1615. https://doi.org/10.3390/su14031615
