1. Introduction

Education that prepares learners for skill development and employment in various economic fields is referred to as career, technical and vocational training [1]. The role of technical and vocational teachers is crucial in this regard and, therefore, emphasis must be placed on their development in both professional and pedagogical aspects. Teacher training improves teachers’ teaching practices, which results in better learning by their students [

2]. The majority of school reforms and strategies place emphasis on professional development and teacher quality [3,4,5]. The National Vocational and Technical Training Commission (NAVTTC) in Pakistan is the apex body at the federal level responsible for the development of technical and vocational education. Its core objective is to formulate policy to guide and direct the TVET sector in Pakistan and to provide solutions to its challenges. The National Skills Strategy 2009–2013 (NSS, 2009) is a document formulated by the NAVTTC to provide policy directions, together with the necessary support for implementation, to achieve the national goals of skill development, so that improved social and economic conditions can be achieved (NSS, 2009). According to the NAVTTC [

6], the TVET system of Pakistan faces many challenges: too few people are trained, and those who are trained are of sub-standard quality. They are therefore unable to compete in the national and international markets and, consequently, fewer people obtain jobs relevant to their occupation. The NAVTTC [6] came up with various solutions to provide quality education in the field of TVET. For this purpose, several actions were proposed, of which the training of in-service vocational teachers was of utmost importance. According to the authors of [

7], teacher training is the most effective and interactive way to provide a guarantee of the successful development of teachers, whether it happens face-to-face [

8,

9,

10] or online [

11].

Technology advancements in recent decades have forced many organizations to make their employees more productive to compete in a rapidly changing world [

12]. Fuller et al. [

13] argued that organizations provide their employees with opportunities for training to improve their knowledge, skills and attitude relevant to their job role. Consequently, the NAVTTC, in coordination with GIZ, launched a training program for in-service vocational teachers in the field of pedagogy that adopted an innovative approach of blended learning. Instructional staff who are committed to providing the best education to their students always try to practice new teaching strategies and tools [

14]. The blended learning approaches were adopted for the very first time in the history of Pakistan to train TVET teachers.

According to Nortvig et al. [

15], blended learning has been viewed in different ways, and its definition has changed over time; there was far less discussion of the terms “online” and “face-to-face” learning. Before technology was involved in education, blended learning was thought to be the use of different teaching strategies in the classroom to facilitate learning [

16]. When face-to-face teaching was linked with technology, such as e-learning and online learning for facilitating student learning, Nortvig, Petersen and Balle [

15] noted that the focus of blended learning then shifted to the use of a mixed learning environment, i.e., face-to-face and online [

16]. The generally accepted definition is the combination of online, face-to-face and offline learning in the same training course [17]. The blended learning format used in Pakistan to train TVET teachers also included online, offline and face-to-face learning. It was completed in two phases: in the first phase, 100 master trainers were trained in 2012 and 2013, and in the second phase, these master trainers further imparted training to 8500 TVET teachers across Pakistan. These in-service vocational teachers came from different subject areas, such as computing, electrical, dressmaking and paramedics.

There is little empirical research available on teachers’ professional development with the blended learning approach [

18]. Previous research covered blended learning in the general education sector and measured e-learning content, student achievement and the application of the innovative approach. For example, one study investigated the use of Android-based learning materials for junior school classes [19]. Another study used a blended learning approach with secondary school science teachers to ascertain their ICT knowledge [20]. Student perceptions of the application of blended learning were measured in previous research on university students [21]. The relationship between blended learning and student achievement was also measured among primary school students [22]. Thus, previous research shows that the effectiveness of the blended learning approach had not yet been measured in the vocational education sector. The present study aimed to evaluate the training effectiveness of the blended learning approach adopted for in-service vocational education teacher training in Punjab.

Different approaches have been applied to evaluate different programs and projects. Approximately 22 approaches were gathered into five categories by Stufflebeam and Shinkfield [

23]. These were categorized as pseudo-evaluations, quasi-evaluation studies, decision/accountability-oriented studies, improvement- and accountability-oriented evaluations and social agenda/advocacy-directed approaches. Various sub-categories are also available within these categories. Based on these approaches, various researchers have presented different evaluation models, such as Kirkpatrick’s, the CIPP, the experimental/quasi-experimental, the logic and Robert Stake’s countenance models [

24]. According to Royse et al. [

25], no model is of less importance, but each can be best used as per the needs and requirements of researchers. Kirkpatrick’s model was formulated, widely reviewed and applied in the social sciences for evaluating programs and their effectiveness [

26]. The Kirkpatrick model was designed for objectively measuring training effectiveness. Each successive level represents a more precise measure of the effectiveness of a training program. Other models cover only one or two aspects of a training program, such as the CIPP model, which is useful for providing information during program development. In contrast, the Kirkpatrick model is applied after a program is implemented. It also covers the evaluation of online learning. The blended learning approach includes online learning, so the Kirkpatrick evaluation model is well suited to evaluating the training program in its totality. The research question under study was “What is the effectiveness of the in-service vocational teachers’ training program through blended learning approaches?”.

This study adds to previous research on the effectiveness of in-service vocational education teacher training programs through blended learning approaches in Punjab, Pakistan. There was a gap in the research on teacher training with blended learning approaches in the vocational sector, so our paper could provide guidelines to teacher training providers for planning their professional development training programs in line with the recommendations of the current study. The present research provides knowledge about the provision of in-service TVET teacher training programs for TVET institutions in Pakistan, as well as the effectiveness of these programs for the vocational institutes of Pakistan. There is little research on the provision of in-service TVET training programs through blended learning. The contribution of this study to existing investigations lies in the application of a theoretical framework for evaluating in-service TVET teacher training programs. Moreover, two levels (reaction and learning) of the Kirkpatrick model have been used in previous research in different fields of education [27]. This research is a new addition in the application of the four levels of the Kirkpatrick model in the field of TVET teacher training.

This study focuses on exploring the effectiveness of existing TVET training programs. It provides guidelines for policymakers, including the political and TVET leadership, related to Pakistan’s existing skill development policies. Moreover, it provides some valuable suggestions to donor agencies. This study could potentially allow the NAVTTC to reflect on its provision of in-service TVET teacher training through blended learning approaches. It could also provide an opportunity to review the existing TVET training programs. This research can give policymakers an insight into the execution of Pakistan’s existing skill development policies, and prove to be a valuable source for politicians in formulating better TVET policies in the future. Finally, it could also raise awareness among donor agencies about the utilization of their aid in the field of TVET, so that they can channel their aid more effectively. Together, these points provide insight into how existing TVET training programs work and how they can be improved.

2. Theoretical Framework

Blended learning is a teaching model that encompasses both face-to-face and online learning, which means that students experience both real-time and independent engagement. Real-time student engagement takes place in synchronous environments, and independent student engagement takes place in asynchronous environments [

28,

29,

30]. Blended learning, which combines online and face-to-face learning, has been touted as having numerous benefits [

31,

32], since the various modes, i.e., online, offline and face-to-face, can complement and mutually reinforce one another [

33,

34]. Technology plays a key role in nurturing the blended learning approach [

35]. It also improves school accountability, teaching skills, communication skills, creation and the analytical approach [

36,

37]. According to Alvarado-Alcantar, Keeley and Sherrow [

28], enrolment in blended learning at the secondary level increased by 500% from 2002 to 2010 in America, which shows the acceptability of the blended learning approach.

This is the age of information technology, and many institutions have started to impart online learning [

38,

39]. Due to drastic changes in technology, students have multiple options for interacting with teachers and classmates. This is already practiced in the education system, but in technical and vocational education, online learning has not been fully adopted. Therefore, there was a pressing need to develop a teacher training program in this field. Online learning provides solutions to problems faced in conventional education; however, it also poses some problems of its own, e.g., separation, remoteness, inadequate feedback, distancing from group members and a lack of professionalism [

40,

41,

42]. In this critical situation, blended learning emerged as a solution to problems faced in both approaches, as it meets online and teaching needs [

43]. Teachers and students are more satisfied with the blended learning approach than with a single approach, owing to its effectiveness. It allows the use of different resources that improve both the interaction between students and teachers and self-paced learning [

43].

Moore, Robinson, Sheffield and Phillips [

37] argued that higher education institutions are targeted to develop their faculty by adopting the blended learning approach as presented in previous research. There is far less research available on the implementation of blended learning in the professional development of teachers at the secondary level, and only a few studies offer guidance on how the blended learning approach affects instructional design and planning [

37]. Different blended learning frameworks and models are available that provide standards to assess teachers’ integration of blended learning [

44], but research is still required for the evaluation of the in-service teacher training of vocational teachers through the use of the blended learning approach [

45]. According to Parks et al. [

46], teachers’ requirements are very important for any kind of training and should be considered when planning professional development courses. It was further suggested that a blended program should be research-oriented and relevant and must have a long-term impact. According to Shand and Farrelly [

47], a training program with a combination of pedagogy and technology results in effective learning. Ref. [

48] argued that the best practices of a conventional training program should be adopted along with online considerations in a blended learning program. The best way is the alignment of online standards, best teaching practices of conventional teaching and active learning [

46]. Ref. [

48] suggested that the time period for the teaching learning process should be increased through the inclusion of technology essential for a blended learning program. Thus, an effective and quality-oriented blended learning course would recognize the requirements of teachers, address reservations and fears about changes and highlight the need for new strategies to be adopted for a better teaching learning process [

45,

49].

There are a number of professional development training courses that support teaching learning activities through blended learning [

45,

47]. However, according to Alvarado-Alcantar, Keeley and Sherrow [28], blended learning programs at the secondary level have been increasing since 2002, but the growth of teachers’ professional development with blended learning is still very low, and there is a need to improve their technology acceptance behavior, which would help them to take advantage of the blended learning approach [50]. According to Archambault et al. [51], only 4.1% of teachers had previously attended courses on delivering online teaching. Additionally, few teachers have been provided with professional training support to teach blended learning courses [

51]. Moore, Robinson, Sheffield and Phillips [

37] are of the opinion that when teachers are not supported with professional development training, they search for their own opportunities to learn. According to Refs. [

46,

52], insufficient professional development training for teachers creates confusion regarding the effectiveness of the blended learning approach. The success of the blended learning approach for the professional development of teachers to teach in a blended learning environment is related to well-planned and organized teacher training [17]. Compared with higher education, limited research is available on the professional development of teachers with a blended learning approach. Only ten of the 176 results of a meta-analysis of online research conducted from 1996 to 2008 concerned teacher education [

18]. It is also pertinent to mention that there is no empirical study available on the professional development of teachers with a blended learning approach [

53]. The discussion thus far shows that blended learning approaches are available, but limited research has been conducted on their use in the professional development of teachers. Therefore, there was a pressing need to investigate the effectiveness of blended learning empirically.

2.1. Evaluation of Training

Employee productivity is critical for businesses to succeed in the market and outperform their competition. Businesses’ human resource departments or training departments can assist in achieving this goal by providing training opportunities for employees to increase productivity [

54]. Employee knowledge, abilities and attitudes are developed through training in such a way that existing job performance is improved and employees are prepared to deal with future issues [

55]. Employee performance is defined as the achievement of duties and assignments according to desired criteria [

56]. During training, individuals learn the skills required to complete the assigned tasks effectively [

57]. These perspectives show that every individual working in an organization is given a job description and is expected to perform the associated duties and tasks effectively; training is provided to enable them to achieve this.

The ability to bring out the required results or outputs is referred to as effectiveness. It shows the attainment of the desired need. According to Homklin [

58], the term training effectiveness implies improving the overall process of training in order to achieve established goals. Goals are first established for a training program, which is then delivered to the target audience, and the achievement of the set goals shows the effectiveness of that training program [59]. Thus, we can state that the effectiveness of a training program can be determined by examining the training program with respect to the established goals. There are two basic concepts attached to the term training effectiveness: one is the training itself, while the other is the effects it triggers in the trainees [

60]. Whatever is learnt from any executed training program can be implemented at the workplace to accomplish assigned tasks [

61]. According to Mohammed Saad and Mat [

62], one element of training effectiveness is the measurement of the process of delivering or executing the training program, while another element is the improvement in the performance of individuals.

According to Desimone [

59], a training evaluation is defined as the well-organized gathering, analysis and synthesis of the essential information required to make a decision about training effectiveness. This definition emphasizes detailed information about a training program, which can show what is happening or has happened in any training program or development intervention. The evaluation of training programs is very important and useful for various stakeholders, such as HRD professionals and managers, in taking appropriate measures regarding particular training programs and methods. Phillips [63] pointed out that there are also many benefits of training evaluations, including providing information about the achievement of objectives, identifying the strengths and weaknesses of a particular training program, highlighting the changes that are essential to improve training programs, identifying the individuals who can participate in future training programs, identifying the top and bottom performers of the development program, facilitating the marketing of the evaluated program and providing a readily available database for the management to make decisions. Zenger and Hargis [64] suggested two reasons for conducting a training evaluation. The first is the benefit to training providers’ budgets: an evaluation establishes the authenticity of the training, which matters because funds for human resource development are sometimes removed from budgets under tough financial conditions. The second is that it improves credibility with top managers in an organization.

A training evaluation and training effectiveness are both theoretical approaches for measuring learning outcomes and training effectiveness. A training evaluation depicts a micro-view of training results, and training effectiveness puts emphasis on the learning as a whole and, consequently, provides the macro-view. The training evaluation identifies the learning benefits gained by the participants and improvements in their job performance. Effectiveness shows the benefits that an organization gained as a result of the development program. The results of the evaluation of training programs elaborate what happened as a result of the training intervention. The results of the effectiveness describe why those results happened and also provide recommendations to improve the training program [

65].

According to Alvarez, Salas and Garofano [

65], there are several methods for evaluating the effectiveness of training programs, but the most popular and widely accepted method is the Kirkpatrick four-level evaluation model [

66]. It provides a clear picture regarding the training program and what was and was not achieved [

67].

2.2. Evaluation Models

The evaluation of programs and initiatives can be performed in a variety of ways. Approximately 22 approaches were gathered into five categories in Ref. [68]. These were categorized as pseudo-evaluations, quasi-evaluation studies, decision/accountability-oriented studies, improvement- and accountability-oriented evaluations and social agenda/advocacy-directed approaches. Various sub-categories are also available within these categories. According to Ref. [

69], there are four types of program evaluation methods. These include the program-oriented approach, consumer-oriented approach, decision-oriented approach and participant-oriented approach. According to Kashar [

2], comprehensive judgements of a program’s efficiency require expertise and a consumer-focused approach. The knowledge-based method is based on the technical competence of the program evaluator or a group of subject-matter experts. The main objective of both approaches is to find out the worth of the program, and both are applied in many fields. Kashar [

2] argued that major empirical research has not utilized these approaches for program assessments.

The program-oriented approach includes assessment methodologies that highlight a program’s qualities. Logic models and program theory are the focus of this approach. A logic model can be used to identify inputs, outputs and short-, medium- and long-term consequences. Logic models provide logical relationships, simplified visual explanations and the underlying reasoning for a program or project [

2].

In the decision-oriented approach, evaluators focus on decision making [69]. By identifying and examining a program’s decisions, evaluators can support managers in creating transparency and progress in a program [

2,

68]. Participants, sponsors, investors and program administrators, as well as those with a stake in the initiative, are targeted. One of this approach’s strengths is its ability to improve the program members’ awareness and utilization of program assessments [

2].

The many evaluation models based on various techniques include Kirkpatrick’s, the CIPP, the experimental/quasi-experimental, the logic and Robert Stake’s countenance models [24]. According to Royse, Thyer and Padgett [25], no model is of less importance, but each can be best used as per the needs and requirements of researchers.

A researcher can analyze four components of a program’s development and implementation using the CIPP program evaluation model, which includes the context, input, process and product [

70]. The context is generally thought of as a type of needs assessment that aids in determining an organization’s needs, assets and resources in order to develop programs that are appropriate for that organization. Evaluators work with stakeholders to identify program beneficiaries and create program goals in the context assessment component [

71]. Various programs are investigated during the input evaluation component to see which ones most closely address the evaluated needs. The program’s sufficiency, feasibility and viability, as well as the financial, time and personnel expenses to the organization, must all be considered [

71]. The process evaluation component focuses on the appropriateness and quality of the program’s implementation. A program’s intended and unexpected effects are identified via the product evaluation component [

72].

2.3. Kirkpatrick Model for Training Evaluation

A training evaluation is very important for making training programs more effective in increasing the productivity of individuals. Effective training programs have positive effects on employees, enabling them to meet current challenges in their respective fields. Thus, training researchers agree on the importance of training evaluations [73]. The Kirkpatrick model was used in this research for the training evaluation for the following reasons:

Academics and human resource development practitioners utilize the Kirkpatrick four-level model to measure training success, since it is more comprehensible and well-established. Kirkpatrick updated this model multiple times, including in 1959, 1976, 1994, 1996 and 1998 [

55]. According to [

74], Donald Kirkpatrick’s four-level assessment model is one of the most well-known and widely utilized evaluation models for the training and development of programs. The model provides a basic framework or vocabulary for talking about training outcomes and many sorts of data that can be used to assess how well training programs reached their objectives. Kashar [

2] discussed that more than two hundred leadership training programs have been evaluated by using different levels of the Kirkpatrick model according to a research review provided by Throgmorton et al. [

75]. This model provided a baseline for the emergence of other models of evaluation [

2,

55,

58,

76,

77]. One or two Kirkpatrick levels are very often used by organizations to measure the effectiveness of training programs. The latest model of four levels is very comprehensive, simple and easy to use, which is why business and academic circles prefer to use it for training evaluations. According to Miller [

78], the Kirkpatrick model provides an easy way of evaluating training programs. It is evident from various research works that finding out the training effectiveness by using the Kirkpatrick model has been accepted and well established [

2,

55,

77,

78]. This model also met their expectations. According to Homklin [

58], the four levels of the Kirkpatrick model provide the most useful theoretical information about the training program under study. The major success of this model lies in the fact that it provides an opportunity to find changes in learner behaviors in the form of knowledge and skills, and their application in the real working world [

55]. Reio, Rocco, Smith and Chang [

74] discussed that the Kirkpatrick model provides a well-organized and structured way for a formative and summative evaluation in such a way that the training process can be improved upon or completely reconstructed on the basis of the evaluation.

2.4. Criticism on Kirkpatrick Model

Though the four-level Kirkpatrick model has been greatly admired and well accepted, it has still attracted criticism. The first criticism is of its incompleteness and knowledge addition with the emergence of the four-level Kirkpatrick model training evaluation [

55]. Guerci et al. [

79] argued that a training evaluation is a dynamic process that is not as simple as that described by the Kirkpatrick model. They further argued that it is not possible to combine individual and company impacts in the assessment. Al-Mughairi [

55] disagreed with the argument by saying that it is inappropriate to consider such considerations while using the Kirkpatrick model for a training evaluation. A meta-analytic review of the literature of the training effectiveness of the Kirkpatrick model was conducted by Alliger et al. [

80], and it was found that the Kirkpatrick model provided a rough taxonomy of criteria and vocabulary. At the same time, it was found that overgeneralization and misunderstandings are possible due to this vocabulary and rough taxonomy of criteria [

80]. The Kirkpatrick model also has the problem of being unclear on training. However, the model under discussion is much more popular and influential among practitioners [

81]. According to Alliger, Tannenbaum, Bennett Jr, Traver and Shotland [

80], the Kirkpatrick model still communicates an understanding about training criteria. The model has also been discussed in light of the assumptions held by trainers and researchers: that each successive level of the evaluation provides more comprehensive information to organizations regarding training effectiveness, that every level is caused by the previous level and that the levels are positively interconnected. Owing to these assumptions, it has also been regarded as a hierarchical training evaluation model [

82].

According to Holton III [

76], the constructs involved are not properly defined in the four levels of the Kirkpatrick model, which thus simply provides a taxonomy of outcomes. Therefore, it is flawed as an evaluation model. He further argued that more tests are required to establish it as a completely defined and researchable evaluation model. Kirkpatrick [81] responded to this objection by saying that he was happy that his work was not considered a taxonomy, as trainers could not understand it completely. The aforementioned criticism brought a positive improvement in the model and, as a result, organizations still utilize this model to a considerable extent.

2.5. Components of the Kirkpatrick Model and Their Relationships

The Kirkpatrick model mainly comprises four levels of evaluation: reaction, learning, behavior and results. The details of all four levels are as follows:

2.5.1. Reaction Level

The first level deals with a participant’s reaction towards various aspects of the training, including the trainer, training materials and management of training. Participants at this level express their feelings about the value of the training program as to whether it was productive or not [

27]. This level is multi-dimensional because it measures trainee satisfaction with all aspects of the training program [

83]. Researchers identified 11 categorizations of this level due to its multi-dimensional nature [

27]. According to Reio, Rocco, Smith and Chang [

74], the educational content is the main focus of this level. If the participants react positively at level one, the training is judged to be good at this stage. However, success at this level does not guarantee success at level two and further levels [

78]. According to Miller [

78], the Kirkpatrick model stresses the value of the level one assessment in various organizations. He further elaborated that a level one achievement is not a guarantee of success in learning, behavior or results of the organization [

78]. According to Reio, Rocco, Smith and Chang [

74], no behavioral improvement, which is level three, is very crucial to assess all responses of level one and level two. This level can be evaluated by using questionnaires [

84]. The present study used the four-level Kirkpatrick model for the training evaluation to find out the effectiveness of the in-service training of vocational teachers.

2.5.2. Learning Level

This level deals with the assessment of the learning acquired by the participants in the form of knowledge, skills and attitude [

85]. According to Miller [

78], the learning level refers to the content achievement of training participants in the form of knowledge and skills as a result of participating in a training program. The second level of the model explains the success or failure of the training program, as it depicts the achievement of the set goals [

78]. According to Kirkpatrick et al. [

86], the second level determines what was gained by the training participants in the form of knowledge and acquired skills and competencies as a result of attending a training program. Level one and level two assessments are suggested by various researchers before moving onto level three [

58]. The previous research literature showed that level two is the most common assessment level of training programs [

78]. To demonstrate the value of a training program, it is crucial to provide evidence of the knowledge and skills developed as a result of training [

78]. The evaluation of learning is of utmost importance, because there can be no change in behavior without learning [

86]. Therefore, it is evident from the discussion above that an assessment of learning is very important to gauge the training effectiveness.

2.5.3. Behavior Level

This level assesses the application of the learned skills at the workplace [

27]. Kirkpatrick and Hoffman [

87] discussed that the behavior level measures how effectively the participants implement the knowledge and skills learned during the training program at their workplace. The assessment of the behavior level is very useful, because it shows the transfer of gained knowledge [

74,

78]. According to Reio, Rocco, Smith and Chang [

74], without measuring the change in behavior, it is not possible to measure the results of the training program. According to Kirkpatrick, Fenton, Carpenter and Marder [

86], level three is not well appreciated. Trainers work hard on levels one and two because they have control over those levels. The training participants, in turn, need sufficient time to implement new skills [

27].

2.5.4. Results Level

Level four measures the results attained as a consequence of the training delivered [

58]. According to Miller [

78], level four identifies the training effectiveness. The author of [

2], Al-Mughairi [

55] and the authors of [

58,

74,

78,

87] recognized level four as the most challenging and difficult level at which to evaluate training effectiveness. According to Miller [

78], Kirkpatrick was against evaluating only levels three and four while ignoring levels one and two. According to Al-Mughairi [

55,

58,

74,

78], all four levels of the Kirkpatrick model play an important role in evaluating the effectiveness of a training program. The Kirkpatrick training evaluation model is applied after the training has been delivered, so it is a summative evaluation that establishes the overall effectiveness of the training program and guides the decision on whether to continue the training or improve it [

78]. According to Pineda-Herrero et al. [

88], organizations invest a lot of resources in training and development, but hardly any of them evaluate whether the training served its purpose and whether it was worth the cost incurred.

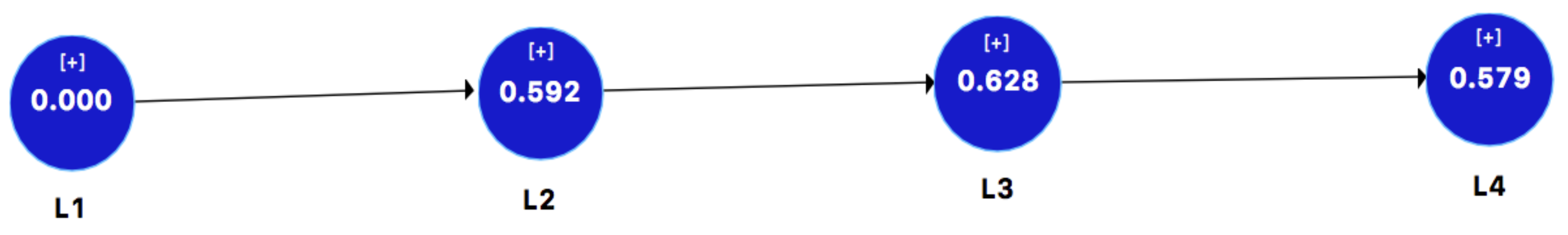

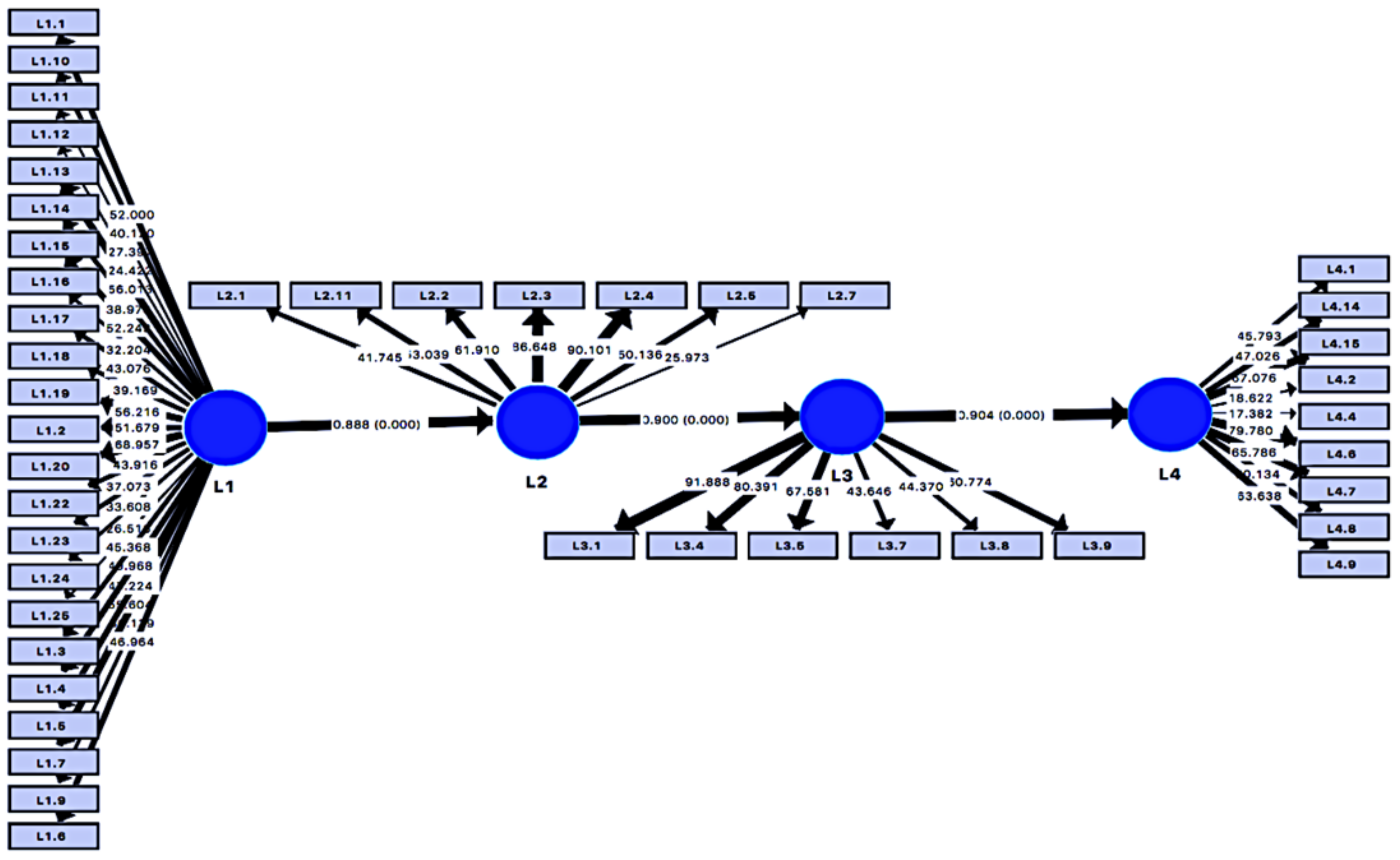

The overall hypothesis of the study under discussion is,

H0. There is a hierarchal relationship among the four levels of the Kirkpatrick model for the training evaluation.

H0 (a). Level one influences level two.

H0 (b). Level two influences level three.

H0 (c). Level three influences level four.

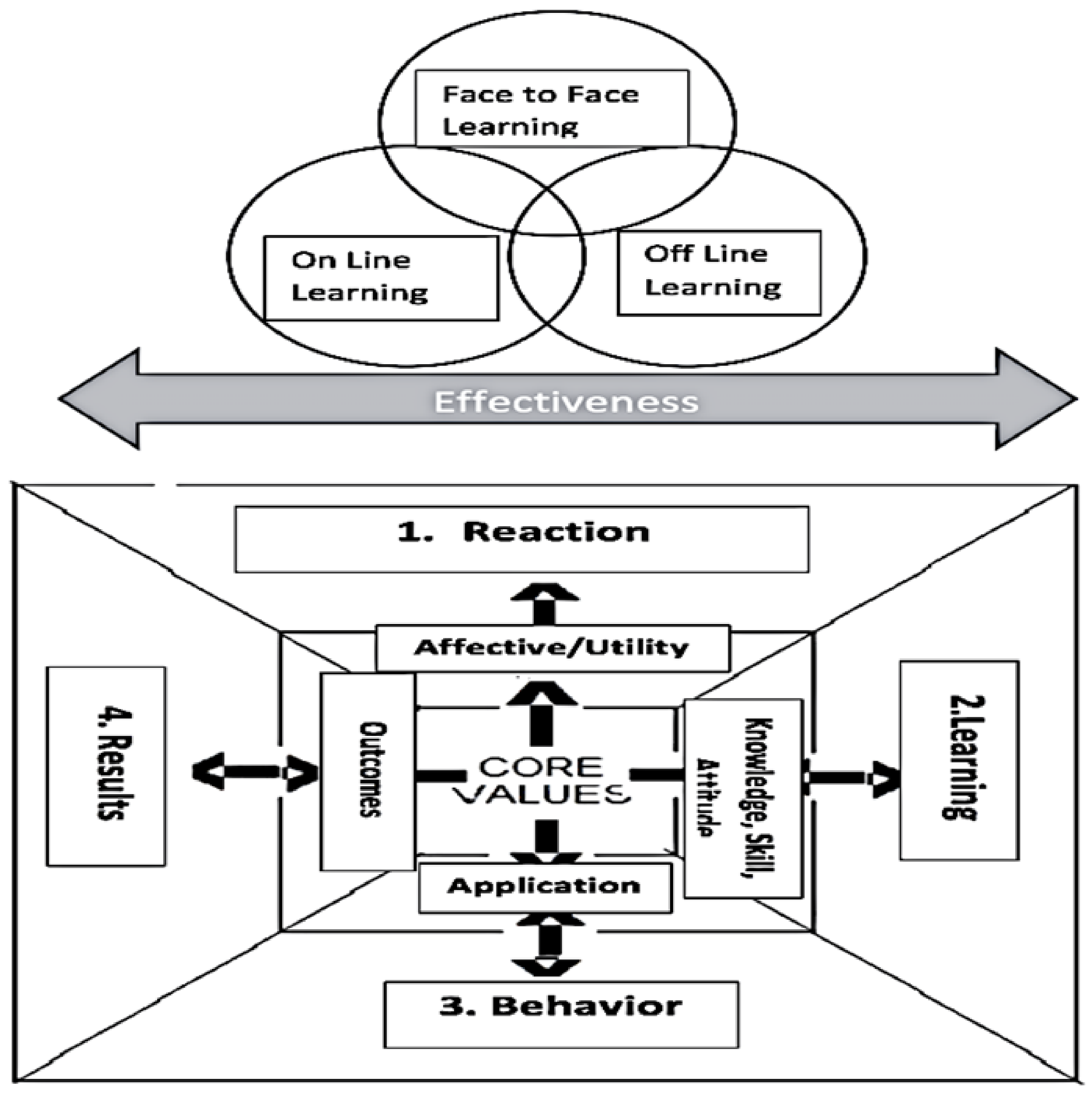

The overall conceptual framework of the study is given in Figure 1.

7. Discussion

The present study aimed at evaluating the effectiveness of in-service vocational education teacher training through blended learning in Punjab. A training evaluation was conducted by using the four levels of the Kirkpatrick model. Further, the aim was to find the hierarchal relationships among the four levels of the Kirkpatrick model, and to compare the effectiveness of the various modes of blended learning, i.e., online, offline and face-to-face. To the best of our knowledge, this was the first study in a developing country to evaluate the available in-service vocational teacher training programs through blended learning approaches. In this section, we discuss the knowledge contributions and practical implications of the study.

The Kirkpatrick training evaluation model was used to evaluate the training effectiveness of in-service vocational teacher training with blended learning approaches in Punjab. It was evident from the findings that respondents agreed on the effect of level one, the reaction, which meant satisfaction with the trainer, training materials, training environment and all modes of blended learning approaches, i.e., online, face-to-face and offline. Bates [

107] and Kirkpatrick, Fenton, Carpenter and Marder [

86] showed that the reaction level is very important in evaluating a training program. For the second level of the Kirkpatrick model, the results showed that the in-service vocational teachers agreed that they had gained knowledge, skills and work-related attitudes through the various modes of training with blended learning. The results indicated that vocational teachers learned different learning approaches, teaching methods, activity-based learning, the division of training content into sections to make it easier for students to understand, classroom management, time management, making lessons interesting, creating attractive PowerPoint presentations, lesson planning, working at the workshop floor level and the use of IT resources. The results for the behavior level, which is the third level of the Kirkpatrick model, revealed that participants also agreed that they implemented the learned skills at their workplace, which benefited them and their students. Finally, the respondents were also satisfied with the results level, which reflected the achievement of training objectives. The results of the current study confirmed the outcomes of the previous studies concerning the training effectiveness of in-service vocational teachers with blended learning approaches [

108,

109]. According to Mir [

110], the satisfaction level is very important, and predicts the successful achievement of the learning outcomes. Kashar [

2] argued that teacher training improves the teaching practices of teachers, which results in the better learning of their students. Puangrimaggalatung [

111] viewed teacher training programs as beneficial for their professional development in terms of improvements in the teaching process and practices. According to Jahari [

112], the teacher training improved the quality of teachers.

Four levels of the Kirkpatrick model were used in this study to find the hierarchal relationship for in-service vocational teacher training through blended learning approaches in Punjab. The results showed that all observed variables had sufficient correlations. The reaction level had a strong relationship with the knowledge level, the knowledge level had a strong relationship with the behavior level and the behavior level had a strong relationship with the results level. However, the results level and reaction level had a weak relationship. The hierarchal relationship was also measured in various other empirical research works in the past, such as [

2,

41,

58,

80,

110,

113,

114,

115,

116,

117,

118,

119,

120]. The hierarchal relation among the levels of the Kirkpatrick model was also extracted by various researchers in their research studies, e.g., [

58,

61,

121,

122,

123,

124,

125,

126,

127,

128] and meta-analyses of training evaluation studies by [

80,

113]. The current research endorsed the results of previous research works in the vocational sector.
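The hierarchal pattern described above, in which each level relates most strongly to the level that follows it, can be illustrated with a minimal sketch. This is not the analysis used in the study: it assumes that each participant has one mean score per level and uses a plain Pearson correlation between adjacent levels. The function names and the scores below are hypothetical and serve only as an illustration; they are not data from the study.

```python
from math import sqrt

# Order of the four Kirkpatrick levels, as described in the text.
LEVELS = ["reaction", "learning", "behavior", "results"]


def pearson(x, y):
    """Pearson correlation coefficient of two equal-length score lists."""
    n = len(x)
    if n != len(y) or n < 2:
        raise ValueError("need two lists of equal length with at least 2 values")
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    cov = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    var_x = sum((a - mean_x) ** 2 for a in x)
    var_y = sum((b - mean_y) ** 2 for b in y)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined for constant scores")
    return cov / sqrt(var_x * var_y)


def adjacent_level_correlations(scores):
    """Correlate each level with the next one (H0 (a), H0 (b), H0 (c))."""
    return {
        f"{lower}->{upper}": pearson(scores[lower], scores[upper])
        for lower, upper in zip(LEVELS, LEVELS[1:])
    }


# Hypothetical mean Likert scores (1-5) for six participants.
# Assumption for illustration only: NOT data from the study.
example_scores = {
    "reaction": [3.0, 3.5, 4.0, 4.5, 5.0, 2.5],
    "learning": [2.8, 3.6, 4.1, 4.4, 4.9, 2.7],
    "behavior": [3.0, 3.4, 4.0, 4.6, 4.8, 2.9],
    "results": [3.1, 3.3, 4.2, 4.5, 4.7, 3.0],
}

if __name__ == "__main__":
    for pair, r in adjacent_level_correlations(example_scores).items():
        print(f"{pair}: r = {r:.2f}")
```

A correlation of this kind only indicates association between adjacent levels. It does not by itself establish that one level influences the next, which would call for a model that tests the directional paths.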

8. Conclusions

The study conducted an evaluation of in-service vocational teacher training programs in the context of a blend of face-to-face, online and offline learning approaches. The study concluded that respondents agreed on the training effect at level one of the Kirkpatrick model, reaction, which meant satisfaction with the trainer, training materials, training environment and all modes of blended learning, i.e., online, face-to-face and offline. Moreover, it was concluded that training influenced the second level of the Kirkpatrick model for the in-service vocational teachers, which included gaining knowledge, skills and work-related attitudes through various modes of training with blended learning. It was also concluded that the vocational teachers learned different learning approaches, teaching methods, activity-based learning, the division of training content into sections to make it more understandable and easy for students, classroom management, time management, making lessons interesting, creating attractive PowerPoint presentations, lesson planning, working at the workshop floor level and the use of IT resources. We also concluded that the training had effects at the third level of the Kirkpatrick model, the behavior dimension: the participants agreed that they implemented the learned skills at their workplace, which benefited them and their students. It was also concluded that the participants were satisfied at the results level, which reflects the achievement of training objectives.

Vocational training institutes (VTIs) should provide at least one desktop computer with an internet connection in the lab of every trade taught at the institute. Different trades are taught at vocational centers, e.g., auto-mechanics, dress making, food and cooking, beauticians, computer operators, AutoCAD, auto electricians, etc. This could help teachers of non-computer trades to complete their assigned tasks during a non-presence phase and to implement the learned skills in real work environments. It would increase the effectiveness of in-service teacher training with the blended learning approach. For this purpose, VTIs should allocate a budget for IT resources in non-computer trades. As some VTIs already do, the heads of other institutes should also nominate IT instructors to provide guidance and support to the non-computer trade instructors. In order to implement this strategy, the head office should send instructions to the heads of vocational institutes to provide such technical support to the instructional staff participating in the in-service training with the blended learning approach. In addition to this support, IT instructors can provide basic IT skills training to non-IT trade instructors before they are nominated for in-service training through blended learning approaches. For this purpose, every institute could use its existing lab after the institute’s working hours. This would help to obtain the maximum benefits of the blended learning approaches.

Blended learning is an innovative approach that provides flexible learning opportunities in different learning environments. It is a new approach for the vocational sector of Pakistan, so future research recommendations are as follows: The present research was conducted to evaluate blended learning for the vocational stream; it is now recommended to conduct research in the technical stream, which comprises teaching at a diploma level. A comparative analysis of blended learning effectiveness for different occupational trades, e.g., fashion design, computer operators, electricians, clinical assistants, etc., can also be conducted.