Load Balanced Data Transmission Strategy Based on Cloud–Edge–End Collaboration in the Internet of Things

Abstract

1. Introduction

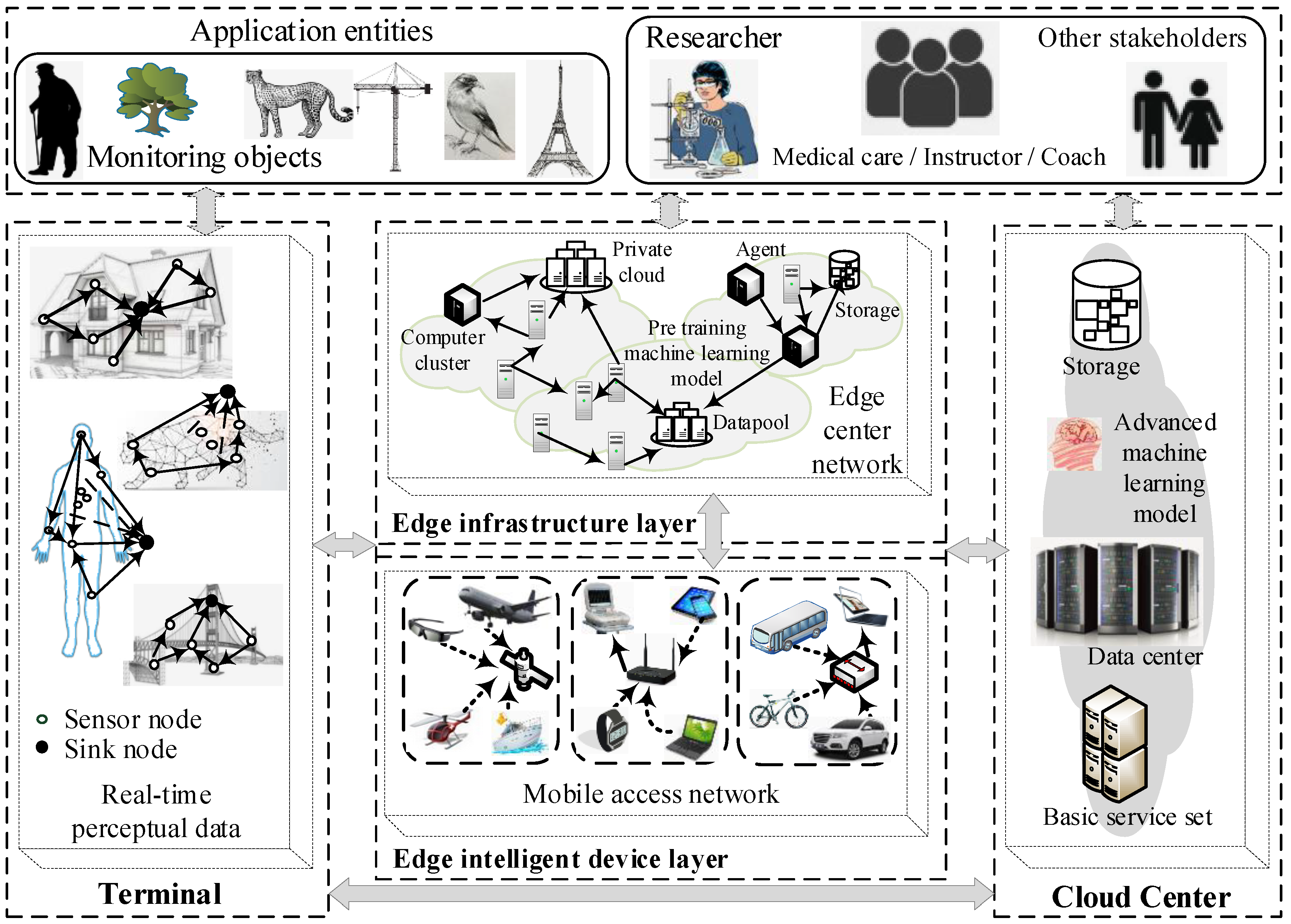

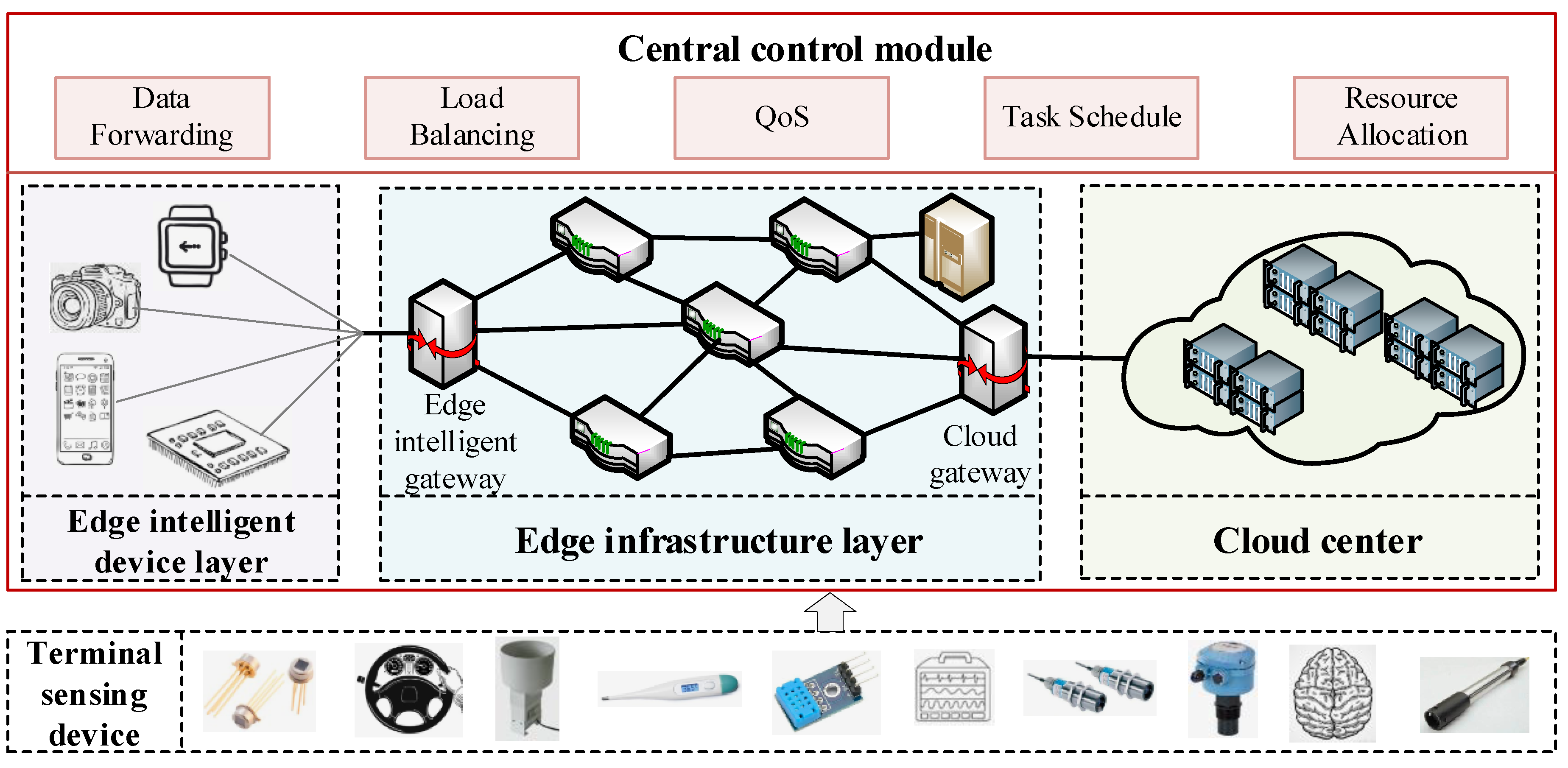

- Taking advantage of edge computing, this paper proposes a novel IoT architecture based on CEE collaboration. This heterogeneous framework comprises terminal sensing devices, edge devices, cloud center devices, and other related application entities. Its novelty lies in dividing the edge cloud into an edge intelligent device layer and an edge infrastructure layer according to the data transmission and processing capabilities of the edge devices, so that the different task response-time requirements of different data types and applications in the IoT ecosystem can be met.

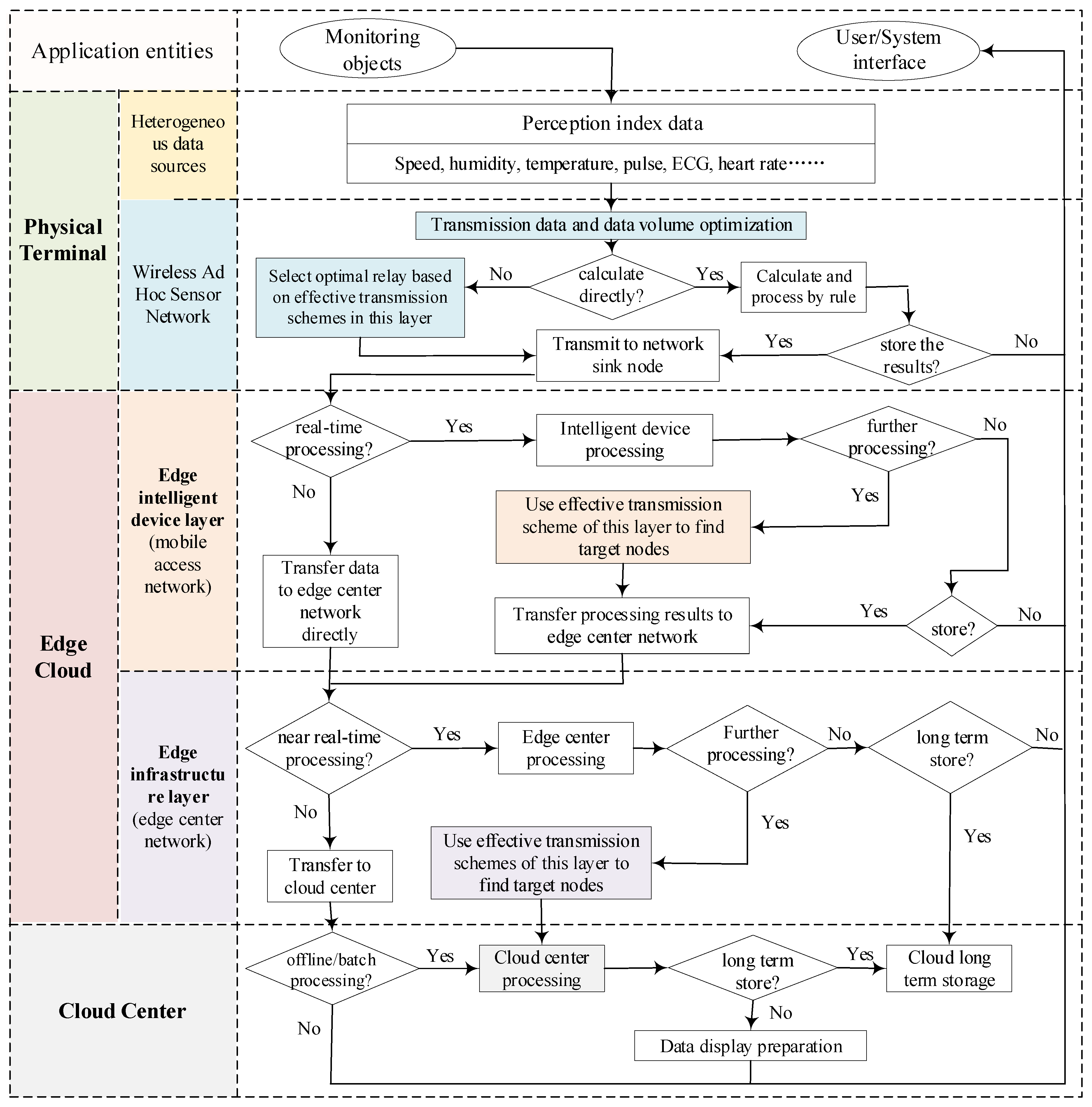

- Based on the collaborative CEE IoT architecture, a highly reliable layered data transmission strategy is designed. Following the principle of dynamic resource allocation, this strategy assigns a dedicated transmission path to each type of IoT service data (real-time, near-real-time, and offline/batch processing modes), optimizes system resources dynamically on demand, balances the network load, reduces data transmission delay, and improves the data delivery rate.

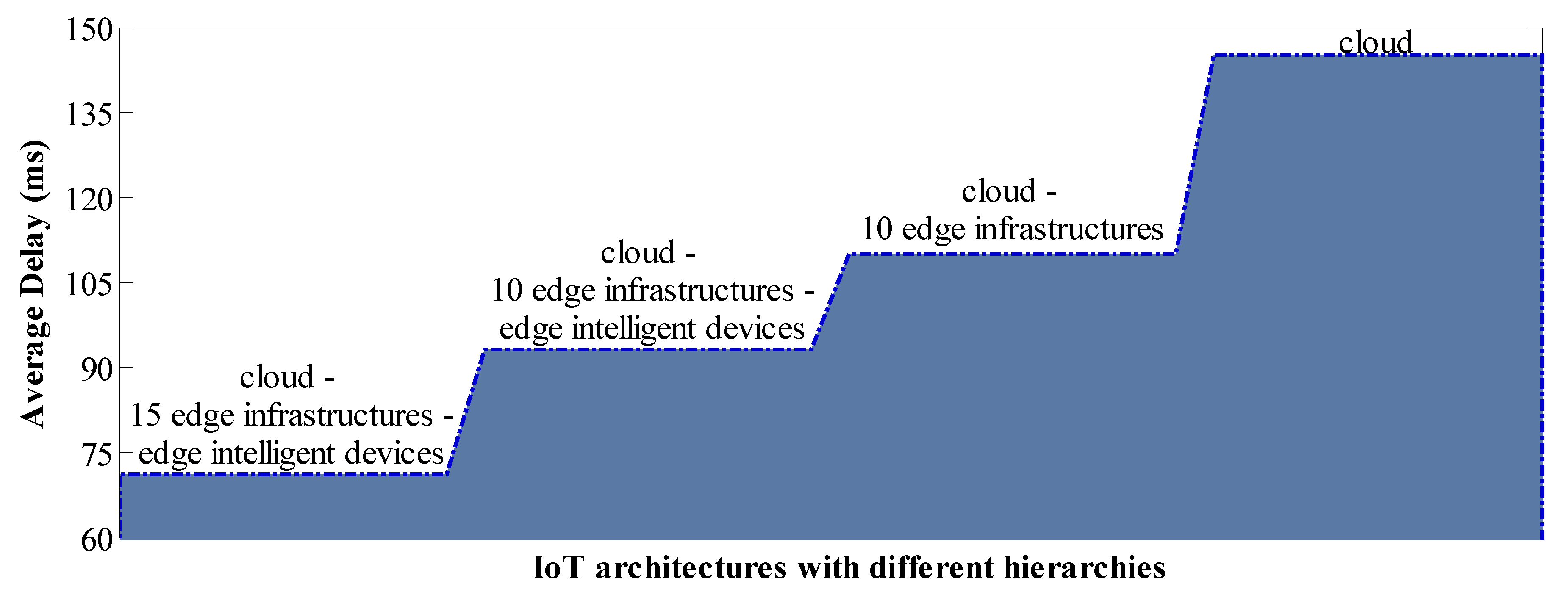

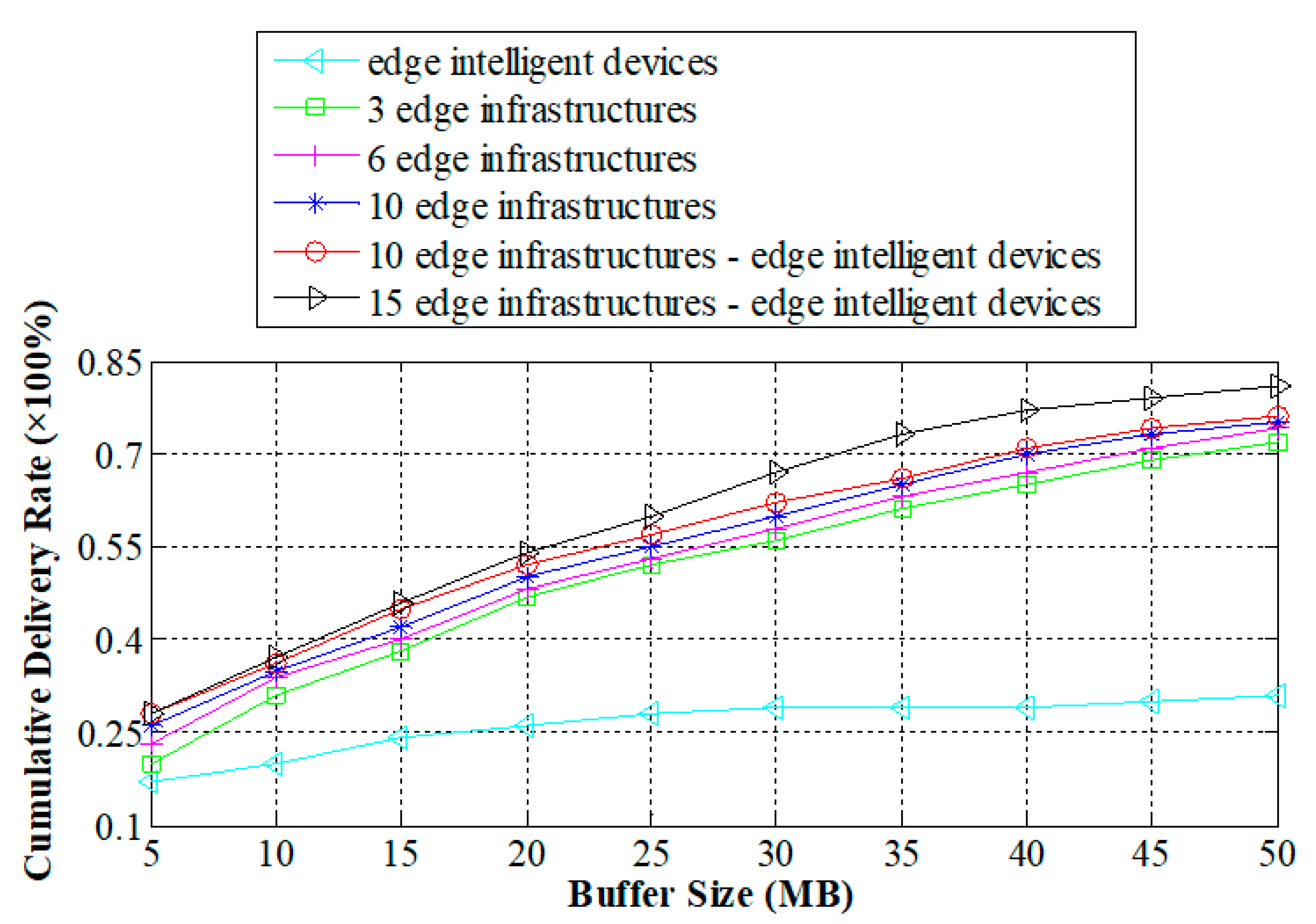

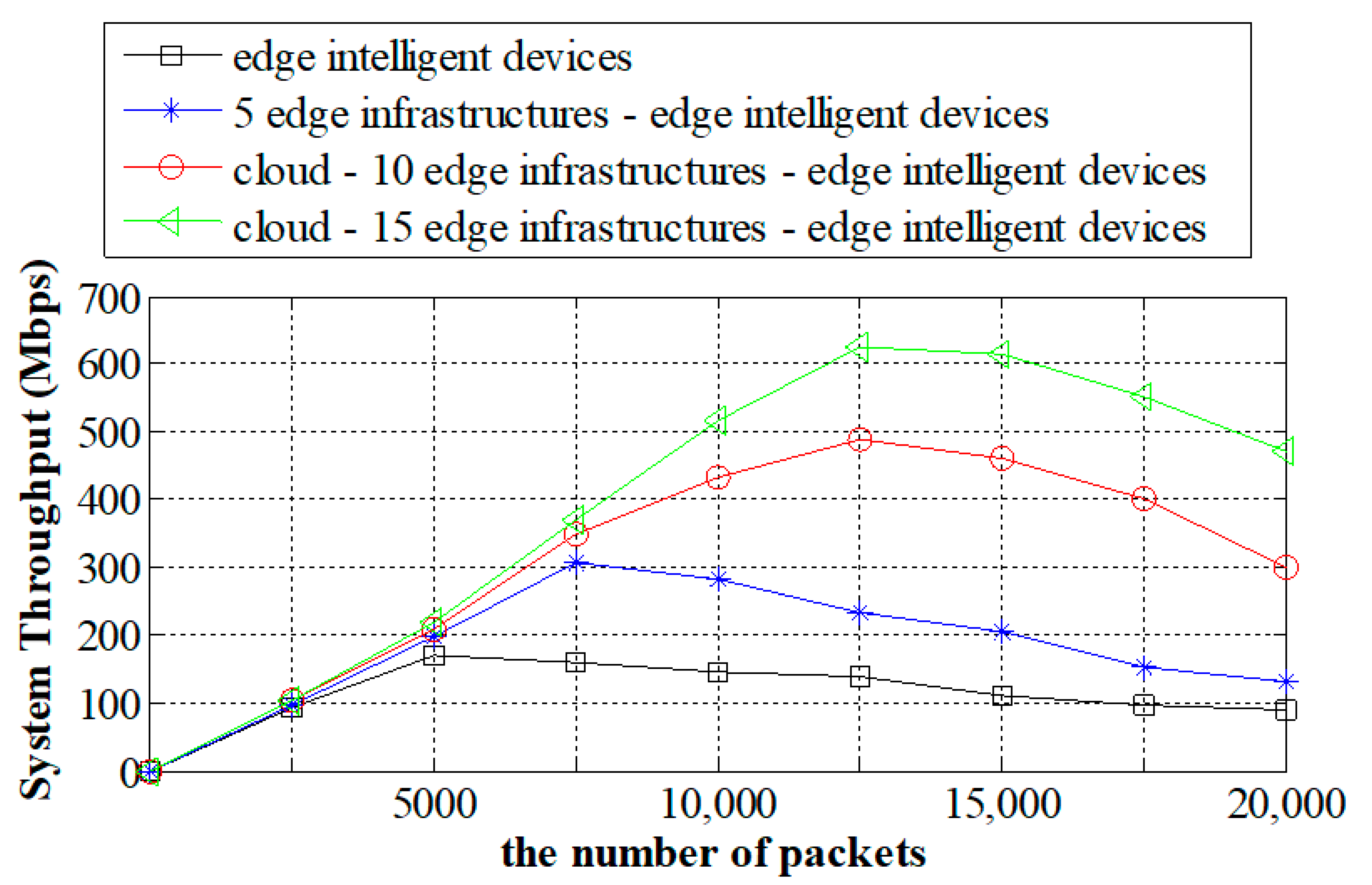

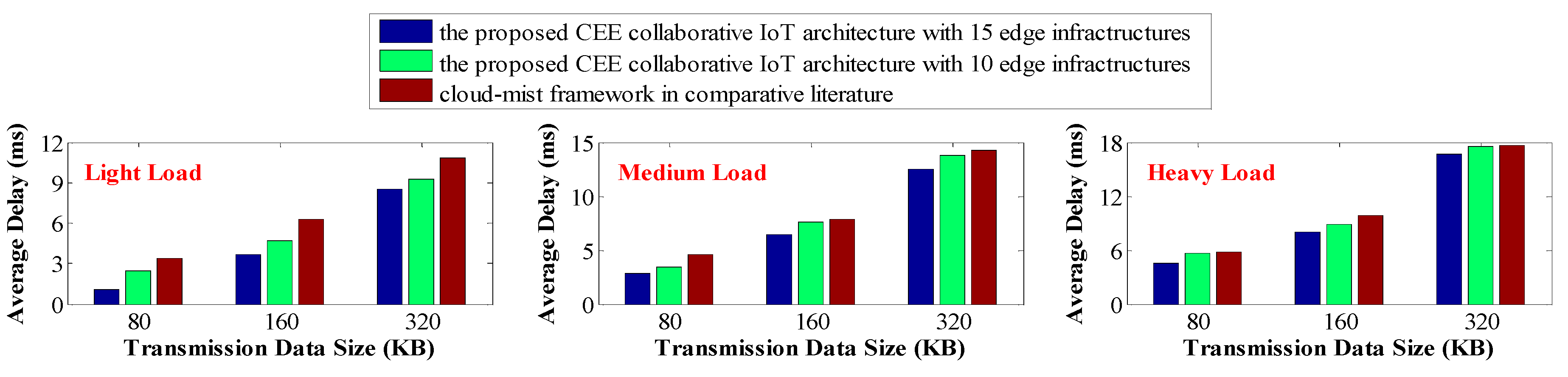

- Comparative simulations are used to verify the rationality and effectiveness of the layered data transmission strategy for the collaborative CEE IoT architecture proposed in this paper. The experimental results show that dividing the edge cloud into an edge intelligent device layer and an edge infrastructure layer gives the IoT data transmission service the best performance in terms of task load, data transmission delay, and data delivery rate. In particular, compared with the cloud–fog framework [36], which does not refine the edge layer, the data transmission rate under the CEE collaborative IoT architecture increases on average by about 27.3%, 12.7%, and 8% under light, medium, and heavy network loads, respectively.
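The layered transmission idea in the contributions above can be pictured as a dispatch table that sends each service mode to a different tier. The sketch below is hypothetical: the three service modes come from the text, but the specific assignment (real-time data to the edge intelligent device layer, near-real-time data to the edge infrastructure layer, offline/batch data to the cloud center) is an assumption made for illustration, not a mapping stated by the authors.

```python
from enum import Enum


class ServiceMode(Enum):
    REAL_TIME = "real-time"
    NEAR_REAL_TIME = "near-real-time"
    OFFLINE_BATCH = "offline/batch"


class Layer(Enum):
    EDGE_INTELLIGENT_DEVICE = "edge intelligent device layer"
    EDGE_INFRASTRUCTURE = "edge infrastructure layer"
    CLOUD_CENTER = "cloud center"


# ASSUMED mapping: the tighter the response-time requirement,
# the closer to the physical terminal the data is handled.
DISPATCH = {
    ServiceMode.REAL_TIME: Layer.EDGE_INTELLIGENT_DEVICE,
    ServiceMode.NEAR_REAL_TIME: Layer.EDGE_INFRASTRUCTURE,
    ServiceMode.OFFLINE_BATCH: Layer.CLOUD_CENTER,
}


def target_layer(mode: ServiceMode) -> Layer:
    """Return the (assumed) processing layer for a service mode."""
    return DISPATCH[mode]


if __name__ == "__main__":
    for mode in ServiceMode:
        print(f"{mode.value:>15} -> {target_layer(mode).value}")
```

A lookup table keeps the policy explicit and easy to change; a real deployment would presumably also consult current load before committing to a layer.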

2. Related Work

3. Load-Balanced, Data-Layered Transmission Strategy in IoT Architecture Based on Cloud–Edge–End (CEE) Collaboration

3.1. IoT Architecture under CEE Collaboration

- (1) Physical terminal
- (2) Edge cloud
  - Intelligent edge device layer
  - Edge infrastructure layer
- (3) Cloud center
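The three-tier hierarchy listed above can be modeled as an ordered list of tiers, which makes the uplink path from a physical terminal to any target tier explicit. This is an illustrative sketch only: it assumes strictly hop-by-hop upward forwarding (no tier is skipped), and the helper names `uplink_path` and `uplink_delay` and the per-hop delay values are hypothetical.

```python
from typing import List

# Tiers of the CEE architecture, ordered from the data source upward.
# The edge cloud is split into its two sub-layers.
TIERS: List[str] = [
    "physical terminal",
    "intelligent edge device layer",  # edge cloud, lower sub-layer
    "edge infrastructure layer",      # edge cloud, upper sub-layer
    "cloud center",
]


def uplink_path(target: str) -> List[str]:
    """Tiers a packet traverses from a physical terminal to `target`.

    ASSUMPTION: strictly hop-by-hop upward forwarding, no tier skipped.
    """
    if target not in TIERS:
        raise ValueError(f"unknown tier: {target!r}")
    return TIERS[: TIERS.index(target) + 1]


def uplink_delay(target: str, per_hop_ms: List[float]) -> float:
    """Sum hypothetical per-hop delays along the uplink path.

    per_hop_ms[k] is the (made-up) delay of the hop TIERS[k] -> TIERS[k + 1].
    """
    hops = len(uplink_path(target)) - 1
    if len(per_hop_ms) < hops:
        raise ValueError("not enough per-hop delays for this path")
    return sum(per_hop_ms[:hops])
```

For example, with assumed hop delays of 2, 5, and 40 ms, `uplink_delay("edge infrastructure layer", [2.0, 5.0, 40.0])` gives 7.0, while reaching the cloud center costs 47.0; this is the intuition behind keeping latency-critical work in the edge cloud.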

3.2. Data Layered Transmission Scheme

3.3. Optimal Resource Allocation and Load-Balancing Policy

3.3.1. Dynamic Resource Allocation Strategy

3.3.2. System Load-Balancing Scheme

| Algorithm 1 | Load-Balancing Scheme |
|---|---|
| Input: | Queue buffer length L; gateway node output link capacity C; bandwidth requirement eBd; buffer length requirement eBl |
| Goal: | Optimal load distribution |
| 1: | Initialize γ, χ, λ, δ; |
| 2: | The link scheduler selects N links based on the data requirements; |
| 3: | SWITCH (data transmission priority) { |
| 4: | CASE 1: γ = 1; BREAK; |
| 5: | CASE 2: γ = 0; BREAK; |
| 6: | CASE 3: γ = 0.5; BREAK; |
| 7: | } |
| 8: | FOR (n = 1; n ≤ N; n++) { |
| 9: | Calculate ξₙ according to Equation (6); |
| 10: | χₙ = ξₙ × eBd; λₙ = ξₙ × eBl; |
| 11: | SWITCH (γ) { |
| 12: | CASE 1: allocate load to link n based on χₙ; |
| 13: | BREAK; |
| 14: | CASE 0: allocate load to link n based on λₙ; |
| 15: | BREAK; |
| 16: | CASE 0.5: Ω = eBd/∑ₙBdₙ; ℧ = eBl/∑ₙBlₙ; δ = max(Ω, ℧); |
| 17: | IF δ = Ω |
| 18: | THEN allocate load to link n based on χₙ; |
| 19: | ELSE allocate load to link n based on λₙ; |
| 20: | BREAK; |
| 21: | } |
| 22: | } |
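Algorithm 1 can be sketched in Python as follows. This is a hypothetical rendering, not the authors' code: Equation (6) for ξₙ is not reproduced in this section, so the weights are passed in by the caller, and link selection (step 2) is assumed to have already happened. The names `Link`, `balance_load`, and `GAMMA_BY_PRIORITY` are illustrative.

```python
from dataclasses import dataclass
from typing import List, Sequence, Tuple


@dataclass
class Link:
    """One outgoing link chosen by the link scheduler (step 2)."""
    name: str
    bd: float  # available bandwidth Bd_n on this link
    bl: float  # available buffer length Bl_n on this link


# Steps 3-7: data transmission priority -> gamma.
GAMMA_BY_PRIORITY = {1: 1.0, 2: 0.0, 3: 0.5}


def balance_load(
    links: Sequence[Link],
    xi: Sequence[float],
    e_bd: float,
    e_bl: float,
    priority: int,
) -> List[Tuple[str, str, float]]:
    """Split a demand (e_bd, e_bl) across links, following Algorithm 1.

    xi[n] stands in for the weight xi_n of Equation (6), which is NOT
    reproduced here; the caller supplies it (weights should sum to 1).
    Returns (link name, allocation basis, allocated amount) per link.
    """
    if len(xi) != len(links):
        raise ValueError("need one weight per link")
    gamma = GAMMA_BY_PRIORITY[priority]

    if gamma == 0.5:
        # Step 16: how tight each resource is across the N selected links.
        omega = e_bd / sum(l.bd for l in links)  # bandwidth pressure (Omega)
        mho = e_bl / sum(l.bl for l in links)    # buffer pressure (mho)
        use_bandwidth = max(omega, mho) == omega  # steps 17-19
    else:
        use_bandwidth = gamma == 1.0              # steps 12-15

    plan: List[Tuple[str, str, float]] = []
    for link, w in zip(links, xi):  # step 8
        chi = w * e_bd              # step 10: chi_n
        lam = w * e_bl              # step 10: lambda_n
        if use_bandwidth:
            plan.append((link.name, "bandwidth", chi))
        else:
            plan.append((link.name, "buffer", lam))
    return plan
```

For instance, with two links `Link("a", 60.0, 40.0)` and `Link("b", 40.0, 60.0)`, weights `[0.75, 0.25]`, eBd = 20, and eBl = 50, a priority-3 (hybrid) demand gives Ω = 0.2 and ℧ = 0.5, so the buffer is the tighter resource and the load is split as 37.5 and 12.5 buffer units. Since Ω and ℧ do not depend on n, the sketch computes them once outside the loop.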

4. Experiments and Performance Analysis

4.1. Experimental Environment and Parameters Setting

4.2. Comparison Metrics

- Average delay Ad

- Cumulative delivery rate Cdr

- System throughput St

- Task distribution Td
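The four metrics listed above are named but not defined by formula in this section. The sketch below computes them under common textbook definitions, which are assumptions rather than the paper's own equations: Ad as the mean end-to-end delay of delivered packets, Cdr as delivered packets over generated packets, St as delivered bits per second, and Td as the share of tasks handled by each layer. `PacketRecord` and `compute_metrics` are hypothetical names.

```python
from collections import Counter
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass
class PacketRecord:
    size_bits: int
    layer: str                # layer that processed the task
    delay_s: Optional[float]  # end-to-end delay; None if the packet was lost


def compute_metrics(records: List[PacketRecord], duration_s: float) -> Dict[str, object]:
    """ASSUMED definitions of Ad, Cdr, St, and Td (see lead-in)."""
    delivered = [r for r in records if r.delay_s is not None]
    n = len(delivered)
    ad = sum(r.delay_s for r in delivered) / n if n else float("nan")
    cdr = n / len(records) if records else 0.0
    st = sum(r.size_bits for r in delivered) / duration_s
    counts = Counter(r.layer for r in records)
    td = {layer: c / len(records) for layer, c in counts.items()}
    return {"Ad": ad, "Cdr": cdr, "St": st, "Td": td}
```

With four packets where one is lost, Cdr is 0.75, and Td shows how evenly the simulated tasks were spread across layers, which is what a load-balancing comparison would inspect.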

4.3. Performance Analysis

5. Discussion

- (1) The proposed architecture does not account for differences in communication technology standards between device types, especially among devices with different purposes, so the experimental results may not fully reflect real deployments.
- (2) Target nodes are selected from multiple candidate nodes only at random, and no dedicated optimal data-forwarding model is provided for each layer of the proposed CEE collaborative IoT architecture, which limits the objectivity of the experimental results.
- (3) Although the experiments show that the IoT architecture with more edge infrastructure performs best in all respects, this paper does not test or evaluate the optimal proportion of each type of device. This may waste resources: because the number of data packets in the experiments is fixed, deploying too many edge facilities leaves some of them idle for long periods.

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Meaning |
|---|---|
| IoT | Internet of Things |
| CEE | Cloud–Edge–End |
| QoS | Quality of Service |
| RFID | Radio Frequency Identification |
| D2D | Device-to-Device |

References

- Yaqoob, I.; Hashem, I.A.T.; Ahmed, A.; Kazmi, S.A.; Hong, C.S. Internet of things forensics: Recent advances, taxonomy, requirements, and open challenges. Future Gener. Comput. Syst. 2019, 92, 265–275.

- Li, J.; Li, X.; Gao, Y.; Gao, Y.; Fang, B. Review on Data Forwarding Model in Internet of Things. J. Softw. 2018, 29, 196–224.

- Kandhoul, N.; Dhurandher, S.K. An Efficient and Secure Data Forwarding Mechanism for Opportunistic IoT. Wirel. Pers. Commun. 2021, 118, 217–237.

- Chi, Z.; Li, Y.; Sun, H.; Huang, Z.; Zhu, T. Simultaneous Bi-Directional Communications and Data Forwarding Using a Single ZigBee Data Stream. IEEE/ACM Trans. Netw. 2021, 29, 821–833.

- Jiang, Y.; Ge, X.; Yang, Y.; Wang, C.; Li, J. 6G oriented blockchain based Internet of things data sharing and storage mechanism. J. Commun. 2020, 41, 48–58.

- Haseeb, K.; Din, I.U.; Almogren, A.; Ahmed, I.; Guizani, M. Intelligent and Secure Edge-enabled Computing Model for Sustainable Cities using Green Internet of Things. Sustain. Cities Soc. 2021, 68, 102779.

- Cadger, F.; Curran, K.; Santos, J.; Moffett, S. Location and mobility-aware routing for multimedia streaming in disaster telemedicine. Ad Hoc Netw. 2016, 36, 332–348.

- Jamil, F.; Iqbal, M.A.; Amin, R.; Kim, D.H. Adaptive thermal-aware routing protocol for wireless body area network. Electronics 2019, 8, 47.

- Euchi, J.; Zidi, S.; Laouamer, L. A hybrid approach to solve the vehicle routing problem with time windows and synchronized visits in-home healthcare. Arab. J. Sci. Eng. 2020, 45, 10637–10652.

- Prasad, C.R.; Bojja, P. A non-linear mathematical model-based routing protocol for WBAN-based health-care systems. Int. J. Pervasive Comput. Commun. 2021, 17, 447–461.

- Singh, R.R.; Yash, S.M.; Shubham, S.C.; Indragandhi, V.; Vijayakumar, V.; Saravananp, P.; Subramaniyaswamy, V. IoT embedded cloud-based intelligent power quality monitoring system for industrial drive application. Future Gener. Comput. Syst. 2020, 112, 884–898.

- Mohiuddin, I.; Almogren, A. Security Challenges and Strategies for the IoT in Cloud Computing. In Proceedings of the 11th IEEE International Conference on Information and Communication Systems (ICICS), Irbid, Jordan, 7–9 April 2020.

- Alkadi, O.; Moustafa, N.; Turnbull, B.; Choo, K.K.R. A deep blockchain framework-enabled collaborative intrusion detection for protecting iot and cloud networks. IEEE Internet Things J. 2021, 8, 9463–9472.

- Arulanthu, P.; Perumal, E. An intelligent IoT with cloud centric medical decision support system for chronic kidney disease prediction. Int. J. Imaging Syst. Technol. 2020, 30, 815–827.

- Zhang, J.; Gao, F.; Ye, Z. Remote consultation based on mixed reality technology. Glob. Health J. 2020, 4, 31–32.

- Tong, J.; Wu, H.; Lin, Y.; He, Y.; Liu, J. Fog-computing-based short-circuit diagnosis scheme. IEEE Trans. Smart Grid 2020, 11, 3359–3371.

- Velu, C.M.; Kunar, T.R.; Manivannan, S.S.; Saravanan, M.S.; Babu, N.K.; Hameed, S. IoT enabled Healthcare for senior citizens using Fog Computing. Eur. J. Mol. Clin. Med. 2020, 7, 1820–1828.

- Zhou, Y.; Zhang, D. Near-end cloud computing: Opportunities and challenges in the post-cloud computing era. Chin. J. Comput. 2019, 42, 677–700.

- Patra, B.; Mohapatra, K. Cloud, Edge and Fog Computing in Healthcare; Springer: Singapore, 2021; pp. 553–564.

- Verba, N.; Chao, K.M.; James, A.; Goldsmith, D.; Fei, X.; Stan, S. Platform as a service gateway for the Fog of Things. Adv. Eng. Inform. 2017, 33, 243–257.

- Ma, L.; Liu, M.; Li, C.; Lu, Z.; Ma, H. A Cloud-Edge Collaborative Computing Task Scheduling Algorithm for 6G Edge Networks. J. Beijing Univ. Posts Telecommun. 2020, 43, 66–73.

- Abbasi, M.; Mohammadi-Pasand, E.; Khosravi, M.R. Intelligent workload allocation in IoT–Fog–cloud architecture towards mobile edge computing. Comput. Commun. 2021, 169, 71–80.

- Kaur, A.; Singh, P.; Nayyar, A. Fog Computing: Building a Road to IoT with Fog Analytics; Springer: Singapore, 2020; pp. 59–78.

- Rekha, G.; Tyagi, A.K.; Anuradha, N. Integration of Fog Computing and Internet of Things: An Useful Overview; Springer: Cham, Switzerland, 2020; pp. 91–102.

- Byers, C.C. Architectural imperatives for fog computing: Use cases, requirements, and architectural techniques for fog-enabled iot networks. IEEE Commun. Mag. 2017, 55, 14–20.

- Zahmatkesh, H.; Al-Turjman, F. Fog computing for sustainable smart cities in the IoT era: Caching techniques and enabling technologies—An overview. Sustain. Cities Soc. 2020, 59, 102139.

- El-Hasnony, I.M.; Mostafa, R.R.; Elhoseny, M.; Barakat, S. Leveraging mist and fog for big data analytics in IoT environment. Trans. Emerg. Telecommun. Technol. 2020, 32, e4057.

- Sood, S.K.; Kaur, A.; Sood, V. Energy efficient IoT-Fog based architectural paradigm for prevention of Dengue fever infection. J. Parallel Distrib. Comput. 2021, 150, 46–59.

- Lei, W.; Zhang, D.; Ye, Y.; Lu, C. Joint beam training and data transmission control for mmwave delay-sensitive communications: A parallel reinforcement learning approach. IEEE J. Sel. Top. Signal Process. 2022, 16, 447–459.

- Li, J.; Li, X.; Yuan, J.; Zhang, R.; Fang, B. Fog computing-assisted trustworthy forwarding scheme in mobile Internet of Things. IEEE Internet Things J. 2019, 6, 2778–2796.

- Ghosh, S.; Mukherjee, A.; Ghosh, S.K.; Buyya, R. Mobi-iost: Mobility-aware cloud-fog-edge-iot collaborative framework for time-critical applications. IEEE Trans. Netw. Sci. Eng. 2020, 7, 2271–2285.

- Manogaran, G.; Rawal, B.S. An Efficient Resource Allocation Scheme with Optimal Node Placement in IoT-Fog-Cloud Architecture. IEEE Sens. J. 2021, 21, 25106–25113.

- Wang, Y.; Ren, Z.; Zhang, H.; Hou, X.; Xiao, Y. “combat cloud-fog” network architecture for internet of battlefield things and load balancing technology. In Proceedings of the IEEE International Conference on Smart Internet of Things (SmartIoT), Xi’an, China, 17–19 August 2018.

- Xia, B.; Kong, F.; Zhou, J.; Tang, X.; Gong, H. A delay-tolerant data transmission scheme for internet of vehicles based on software defined cloud-fog networks. IEEE Access 2020, 8, 65911–65922.

- Amiri, I.S.; Prakash, J.; Balasaraswathi, M.; Sivasankaran, V.; Sundararjan, T.V.P.; Hindia, M.; Tilwari, V.; Dimyati, K.; Henry, O. DABPR: A large-scale internet of things-based data aggregation back pressure routing for disaster management. Wirel. Netw. 2020, 26, 2353–2374.

- Awaisi, K.S.; Hussain, S.; Ahamed, M.; Khan, A.A. Leveraging IoT and Fog Computing in Healthcare Systems. IEEE Internet Things Mag. 2020, 3, 52–56.

- Chinnasamy, P.; Deepalakshmi, P.; Dutta, A.K.; You, J.; Joshi, G.P. Ciphertext-Policy Attribute-Based Encryption for Cloud Storage: Toward Data Privacy and Authentication in AI-Enabled IoT System. Mathematics 2021, 10, 68.

- Mancini, R.; Tuli, S.; Cucinotta, T.; Buyya, R. iGateLink: A Gateway Library for Linking IoT, Edge, Fog, and Cloud Computing Environments; Springer: Singapore, 2021; pp. 11–19.

- Chinnasamy, P.; Rojaramani, D.; Praveena, V.; SV, A.J.; Bensujin, B. Data Security and Privacy Requirements in Edge Computing: A Systemic Review. In Cases on Edge Computing and Analytics; IGI Global: Hershey, PA, USA, 2021; pp. 171–187.

- Dastjerdi, A.V.; Buyya, R. Fog computing: Helping the Internet of Things realize its potential. Computer 2016, 49, 112–116.

- Sarkar, S.; Misra, S. Theoretical modelling of fog computing: A green computing paradigm to support IoT applications. IET Netw. 2016, 5, 23–29.

- An, J.G.; Li, W.; Le-Gall, F.; Kovac, E.; Kim, J.; Taleb, T.; Song, J. EiF: Toward an elastic IoT fog framework for AI services. IEEE Commun. Mag. 2019, 57, 28–33.

- Loffi, L.; Westphall, C.M.; Grüdtner, L.D.; Westphall, C.B. Mutual authentication with multi-factor in IoT-Fog-Cloud environment. J. Netw. Comput. Appl. 2021, 176, 102932.

- Mubarakali, A.; Durai, A.D.; Alshehri, M.; AlFarraj, O.; Ramakrishnan, J.; Mavaluru, D. Fog-based delay-sensitive data transmission algorithm for data forwarding and storage in cloud environment for multimedia applications. In Big Data; Mary Ann Liebert, Inc.: New Rochelle, NY, USA, 2020.

- Li, J.; Cai, J.; Khan, F.; Rehman, A.U.; Balasubramaniam, V.; Sun, J.; Venu, P. A secured framework for sdn-based edge computing in IOT-enabled healthcare system. IEEE Access 2020, 8, 135479–135490.

- Mahmud, M.; Kaiser, M.S.; Hussain, A.; Vassanelli, S. Applications of deep learning and reinforcement learning to biological data. IEEE Trans. Neural Netw. Learn. Syst. 2018, 29, 2063–2079.

- Singh, K.D.; Sood, S.K. 5G ready optical fog-assisted cyber-physical system for IoT applications. IET Cyber-Phys. Syst. Theory Appl. 2020, 5, 137–144.

- Balasubramanian, V.; Aloqaily, M.; Reisslein, M. An SDN architecture for time sensitive industrial IoT. Comput. Netw. 2021, 186, 107739.

- Thangaramya, K.; Kulothungan, K.; Logambigai, R.; Selvi, M.; Ganapathy, S.; Kannan, A. Energy aware cluster and neuro-fuzzy based routing algorithm for wireless sensor networks in IoT. Comput. Netw. 2019, 151, 211–223.

| Data Type | Data Rate | Queue Delay | Transmission Priority | Service Type | Typical Examples in Medical IoT |
|---|---|---|---|---|---|
| Delay-Sensitive | high | low | high | key data | real-time patient monitoring |
| | | | | multimedia conference | telephone conference |
| | | | | video data | video stream for elderly health monitoring or sports control |
| Loss-Sensitive | low | high | middle | judgement result | electronic health records |
| | | | | image/video | medical imaging |
| Hybrid | middle | middle | low | non-key professional parameters | measurement of routine physiological indexes of patients |
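The table above can be encoded as a small lookup that also links each data type to the resource Algorithm 1 balances on. This is a sketch under one loudly stated assumption: that the priority levels high, middle, and low correspond in order to CASE 1, 2, and 3 of the algorithm's priority switch. The names `PROFILES`, `CASE_BY_PRIORITY`, and `allocation_basis` are hypothetical.

```python
from typing import Dict, NamedTuple


class Profile(NamedTuple):
    data_rate: str
    queue_delay: str
    priority: str


# Rows of the data-type table (qualitative levels copied as-is).
PROFILES: Dict[str, Profile] = {
    "delay-sensitive": Profile("high", "low", "high"),
    "loss-sensitive": Profile("low", "high", "middle"),
    "hybrid": Profile("middle", "middle", "low"),
}

# ASSUMPTION: priority levels map to Algorithm 1's CASE numbers in order.
CASE_BY_PRIORITY = {"high": 1, "middle": 2, "low": 3}

# Resource each case balances on (gamma = 1, 0, 0.5 in Algorithm 1).
BASIS_BY_CASE = {1: "bandwidth", 2: "buffer", 3: "tighter of bandwidth/buffer"}


def allocation_basis(data_type: str) -> str:
    """Return the resource used to split load for a given data type."""
    case = CASE_BY_PRIORITY[PROFILES[data_type].priority]
    return BASIS_BY_CASE[case]
```

Under this reading, delay-sensitive traffic is balanced on bandwidth (shorter transmission time), loss-sensitive traffic on buffer space (fewer drops), and hybrid traffic on whichever resource is scarcer.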

| Symbol | Meaning |
|---|---|
| Psize | the size of a packet on each intelligent node |
| Bdi | the bandwidth demand of the i-th intelligent node |
| Bli | the buffer length requirement of the i-th intelligent node |
| Tdi | the total delay of all packets on the i-th intelligent node |
| Cdri | the cumulative delivery rate of all packets on the i-th intelligent node |
| Pic | the ratio of the bandwidth demand of the i-th intelligent device node to the maximum capacity of the edge intelligent gateway node |
| Pil | the ratio of the buffer length demand of the i-th intelligent device node to the total buffer length of the edge intelligent gateway node |
| Qic | the demand ratio of the bandwidth of the i-th intelligent node |
| Qil | the demand ratio of the buffer length of the i-th intelligent node |
| Սi | the total number of packets on the i-th intelligent node |
| Ri | the maximum resource assigned to the i-th intelligent device |
| tTd-i | the transmission delay of packets on the i-th intelligent node |
| pTd-i | the processing/calculation delay of packets on the i-th intelligent node |
| qTd-i | the queuing delay of packets on the i-th intelligent node |
| Aqli | the average queue length of packets on the i-th intelligent node |
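Several symbols in the table above are ratios or sums of per-node quantities, and a few lines of code make the relationships concrete. This is a sketch under stated assumptions, not the paper's equations: it assumes Tdi = tTd-i + pTd-i + qTd-i, takes Pic = Bdi / C and Pil = Bli / L with C and L as in the input of Algorithm 1, and assumes Qic and Qil are each node's share of the total demand over all nodes. `Node` and the helper functions are hypothetical.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class Node:
    bd: float     # Bd_i: bandwidth demand
    bl: float     # Bl_i: buffer length requirement
    t_td: float   # tTd-i: transmission delay
    p_td: float   # pTd-i: processing/calculation delay
    q_td: float   # qTd-i: queuing delay
    packets: int  # U_i: number of packets on the node


def total_delay(n: Node) -> float:
    # ASSUMED decomposition: Td_i = tTd-i + pTd-i + qTd-i
    return n.t_td + n.p_td + n.q_td


def mean_packet_delay(n: Node) -> float:
    # Td_i spread over the U_i packets on the node
    return total_delay(n) / n.packets


def gateway_ratios(n: Node, capacity: float, buffer_len: float) -> Tuple[float, float]:
    # P_i^c = Bd_i / C and P_i^l = Bl_i / L
    return n.bd / capacity, n.bl / buffer_len


def demand_ratios(nodes: List[Node]) -> List[Tuple[float, float]]:
    # ASSUMED: Q_i^c = Bd_i / sum_j Bd_j and Q_i^l = Bl_i / sum_j Bl_j
    sbd = sum(n.bd for n in nodes)
    sbl = sum(n.bl for n in nodes)
    return [(n.bd / sbd, n.bl / sbl) for n in nodes]
```

For a node demanding 30 bandwidth units and 10 buffer units behind a gateway with C = 100 and L = 50, `gateway_ratios` gives (0.3, 0.2), i.e., the node alone would consume 30% of the gateway's output capacity.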

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Li, J.; Li, X.; Yuan, J.; Li, G. Load Balanced Data Transmission Strategy Based on Cloud–Edge–End Collaboration in the Internet of Things. Sustainability 2022, 14, 9602. https://doi.org/10.3390/su14159602


Chicago/Turabian style: Li, Jirui, Xiaoyong Li, Jie Yuan, and Guozhi Li. 2022. "Load Balanced Data Transmission Strategy Based on Cloud–Edge–End Collaboration in the Internet of Things" Sustainability 14, no. 15: 9602. https://doi.org/10.3390/su14159602
