Computer Life-Cycle Management System for Avionics Software as a Tool for Supporting the Sustainable Development of Air Transport

Abstract

1. Introduction

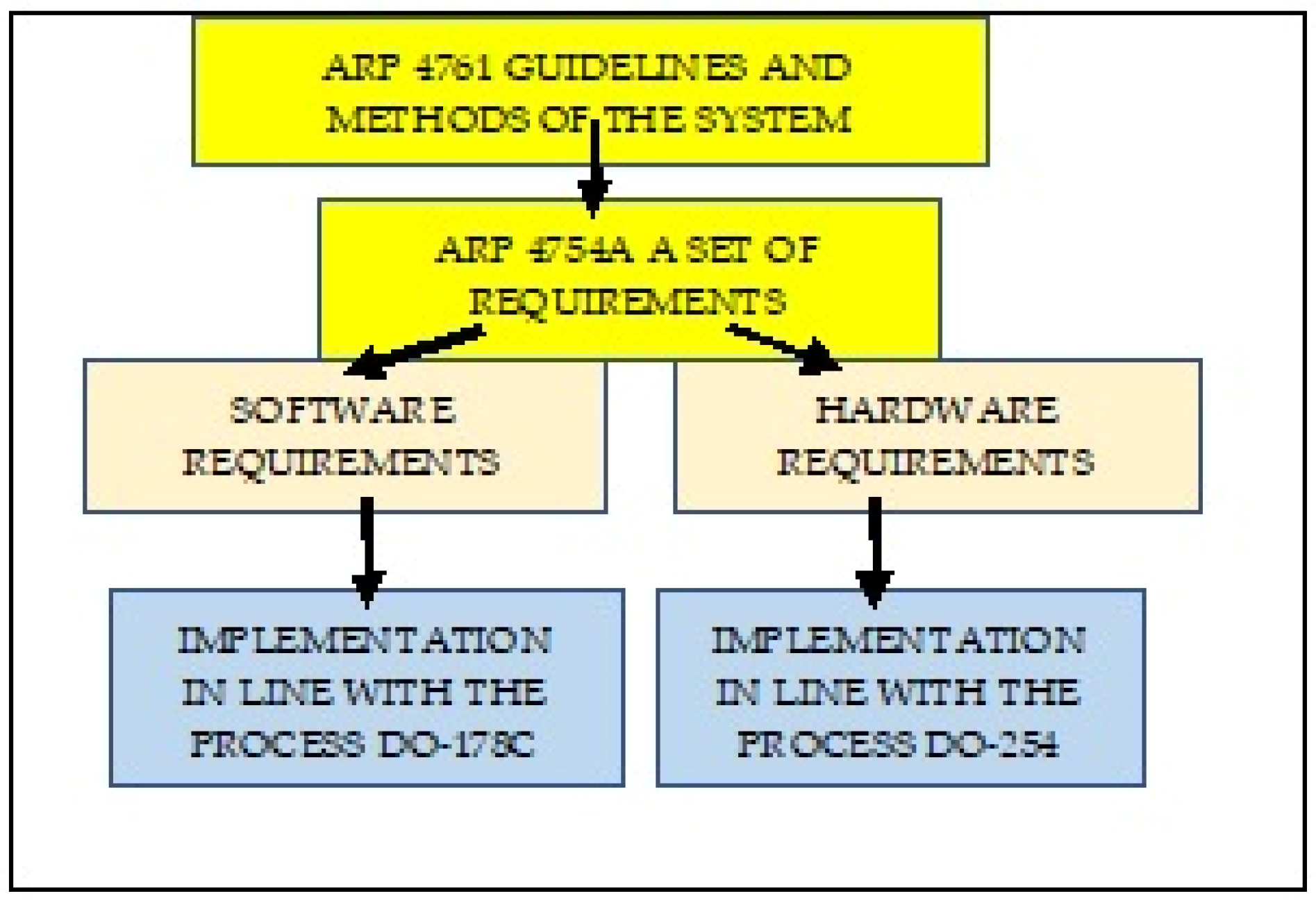

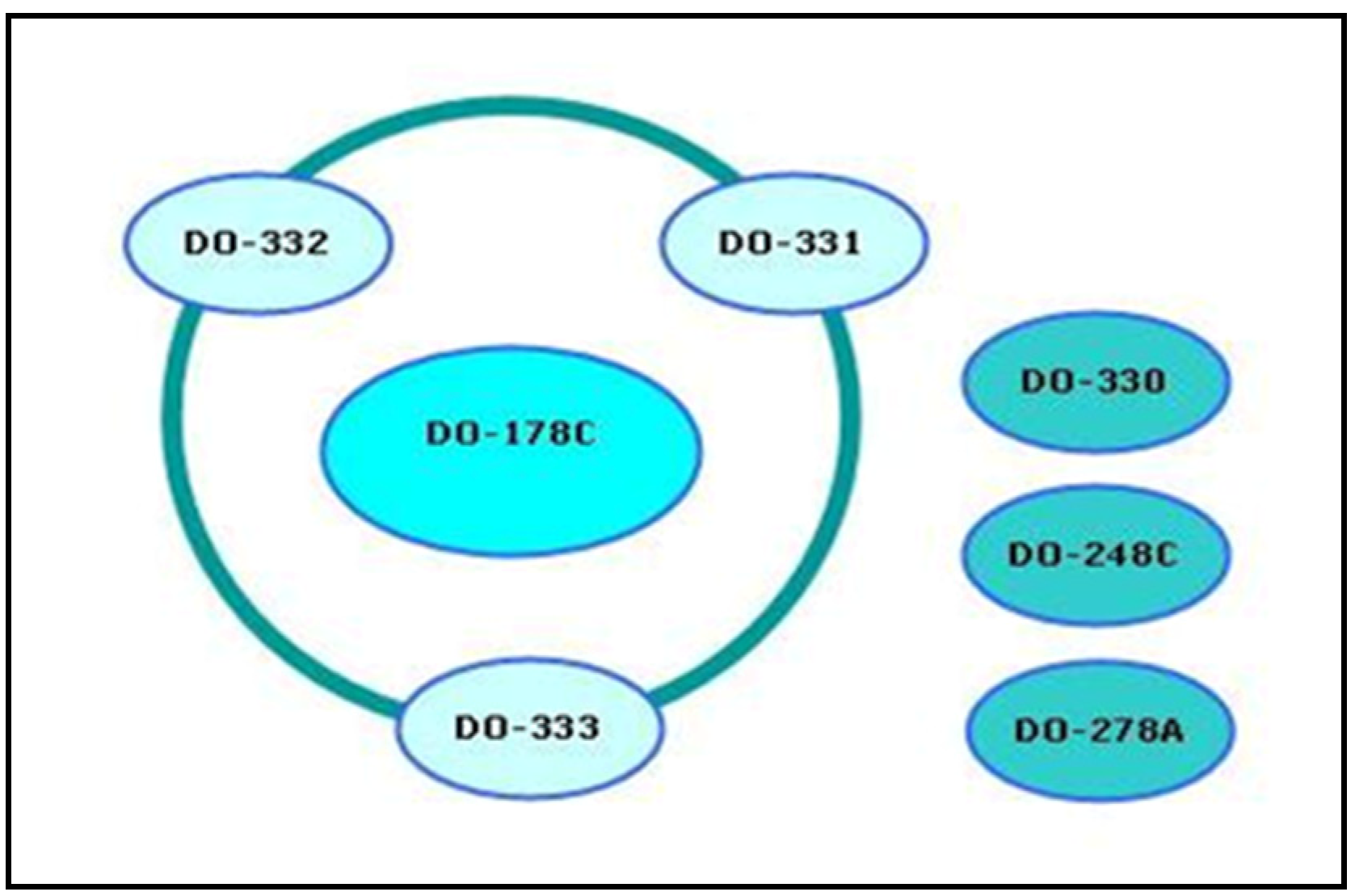

2. Materials and Methods

2.1. Avionics Software Planning Process and Possible Computer-Aided Support

- Plan for Software Aspects of Certification (PSAC): a kind of “contract” between the contractor and the certification body.

- Software Development Plan (SDP): contains the requirements concerning the software planning, coding, and integration stages. The SDP is written for software developers and serves as a guide to developing the software so that it satisfies the adopted requirements.

- Software Verification Plan (SVP): covers the aspects of verifying software functionality and is intended for software testers. The SVP is linked to the SDP because the assumptions made at the software development stage are verified at this stage.

- Software Configuration Management Plan (SCMP): defines the procedures, tools, and methods used to achieve the objectives associated with managing the requirements throughout the entire software life cycle. It covers procedures for establishing the baseline and identifying software versions, problem reporting, change control and review, archiving, and control of software loading and recovery.

- Software Quality Assurance Plan (SQAP): defines the procedures and methods to be applied in order to satisfy the quality requirements of the DO-178C standard. It specifies procedures for quality management, conducting audits, problem reporting, and corrective action.

- Software Requirements Standards (SRS): define the principles, methods, and tools to be applied when developing high-level requirements. They include the software development methods, the notations used to express requirements (algorithms, flow diagrams), limitations on the project tools to be used for software development, and criteria related to the requirements.

- Software Design Standards (SDSs): define the methods, tools, and limitations within the software design process, which produces the low-level requirements and the software architecture. They are intended for the software development team and explain how to implement a design effectively. They cover, among other things, naming conventions, design tool limitations, and software complexity limits (e.g., procedure length).

- Software Coding Standards (SCSs): define the methods, tools, and limitations within the software coding process. They include, among other things, coding rules, the programming languages used, code presentation standards, naming conventions, compiler limitations, and restrictions arising from programming standards.

The aforementioned documents have been implemented as reference templates within the constructed computer system, which supports the management of avionics software development and certification.
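The SCMP items on establishing a baseline and identifying software versions can be illustrated with a minimal Python sketch. It assumes, purely for illustration, that each configuration item (a plan, a source file, a test script) is identified by a name and that each of its versions is identified by a SHA-256 digest of its content; the names `Baseline`, `make_baseline`, and `changes_since` are hypothetical and do not describe the system's actual implementation.

```python
import hashlib
from dataclasses import dataclass, field


@dataclass
class Baseline:
    """A named snapshot of configuration items (hypothetical structure)."""
    name: str
    # Configuration-item identifier -> SHA-256 digest of its content.
    digests: dict[str, str] = field(default_factory=dict)


def digest(content: bytes) -> str:
    """Identify one version of a configuration item by its SHA-256 hash."""
    return hashlib.sha256(content).hexdigest()


def make_baseline(name: str, items: dict[str, bytes]) -> Baseline:
    """Freeze the current content of every configuration item into a baseline."""
    return Baseline(name, {ci: digest(data) for ci, data in items.items()})


def changes_since(baseline: Baseline, items: dict[str, bytes]) -> dict[str, str]:
    """Classify items as 'added', 'modified' or 'removed' relative to a baseline."""
    report: dict[str, str] = {}
    for ci, data in items.items():
        if ci not in baseline.digests:
            report[ci] = "added"
        elif baseline.digests[ci] != digest(data):
            report[ci] = "modified"
    for ci in baseline.digests:
        if ci not in items:
            report[ci] = "removed"
    return report
```

For example, `changes_since(make_baseline("BL-1", {"hud.c": b"v1"}), {"hud.c": b"v2"})` returns `{'hud.c': 'modified'}`; unchanged items are omitted from the report, so an empty result means the working set still matches the baseline.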

2.2. Avionics Software Development Process and Possible Computer-Aided Support

- The software requirement process, which leads to the development of high-level requirements (HLRs);

- The software design process, which develops low-level requirements (LLRs) and software architecture based on HLRs;

- The coding process, which leads to the creation of source code and non-integrated object code;

- The software integration process, which involves consolidating the software into executable programs and integrating it with external devices.
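These processes form a chain (HLRs, then LLRs and architecture, then source code, then the integrated executable) whose links must remain traceable. A minimal Python sketch of a traceability check over that chain follows, assuming that requirements and code modules are identified by simple string IDs; `TraceLink`, `trace_gaps`, and the other helpers are hypothetical illustrations, not part of the described system.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TraceLink:
    """A hypothetical trace link, e.g. HLR -> LLR or LLR -> source module."""
    source: str
    target: str


def untraced(sources: set[str], links: list[TraceLink]) -> set[str]:
    """Items with no link to the next life-cycle artifact (e.g. an HLR with no LLR)."""
    return sources - {link.source for link in links}


def orphans(targets: set[str], links: list[TraceLink]) -> set[str]:
    """Items that no upstream artifact traces to (e.g. an LLR with no parent HLR)."""
    return targets - {link.target for link in links}


def trace_gaps(hlrs: set[str], llrs: set[str], code: set[str],
               hlr_to_llr: list[TraceLink],
               llr_to_code: list[TraceLink]) -> dict[str, set[str]]:
    """Collect traceability gaps along the HLR -> LLR -> source-code chain."""
    return {
        "HLR without LLR": untraced(hlrs, hlr_to_llr),
        "LLR without parent HLR": orphans(llrs, hlr_to_llr),
        "LLR without code": untraced(llrs, llr_to_code),
        "code without LLR": orphans(code, llr_to_code),
    }
```

An LLR reported as having no parent HLR is not automatically an error: DO-178C allows derived requirements, but such an LLR would need to be identified as derived rather than left untraced. Code without an LLR, on the other hand, points to potentially unintended functionality.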

2.3. Avionics Software Integration Process and Possible Computer-Aided Support

- Communication within software certification;

- Requirement management within software certification;

- Verification within software certification;

- Quality assessment within software certification.

2.4. A Method for Predicting Avionics Software Vulnerabilities Using Branching Processes

- The use of software metrics, where a specific set of metrics serves as the input of a binary classifier. Preliminary studies aimed to evaluate empirically, and confirm, the expert opinion that software complexity is the enemy of software security. However, the generally weak correlation observed between complexity and security vulnerabilities has led to the investigation of other vulnerability prediction models, based on, e.g., code churn (modification), dependencies, code coverage, coupling, cohesion, and developer activity.

- The use of text mining techniques, where the source code of the tested software components is parsed and represented as a set of tokens (i.e., keywords). The tokens are combined into a dataset and used, together with the vulnerability data, to train vulnerability predictors. In the second phase (the prediction phase), the trained classifier uses such datasets to determine whether a future version of a studied code module is vulnerable to errors and attacks.
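The two-phase text-mining procedure described above (training on tokens labeled with vulnerability data, then predicting for a new module version) can be sketched in Python. Multinomial naive Bayes is used here only as a simple, self-contained stand-in classifier; it is an assumption of this sketch, not necessarily the classifier used in the cited studies, and the tokenizer, class name, and training data are hypothetical.

```python
import math
import re
from collections import Counter

# Identifiers and keywords only; operators, literals and punctuation are dropped.
TOKEN = re.compile(r"[A-Za-z_][A-Za-z0-9_]*")


def tokenize(source: str) -> list[str]:
    """Represent a code module as the list of its identifier/keyword tokens."""
    return TOKEN.findall(source)


class NaiveBayesVulnPredictor:
    """Multinomial naive Bayes over token counts; label True means 'vulnerable'."""

    def fit(self, modules: list[str], labels: list[bool]) -> "NaiveBayesVulnPredictor":
        # Training phase: count tokens per class and modules per class.
        if set(labels) != {True, False}:
            raise ValueError("training data must contain both classes")
        self.counts = {True: Counter(), False: Counter()}
        self.docs = Counter(labels)
        for src, label in zip(modules, labels):
            self.counts[label].update(tokenize(src))
        self.vocab = set(self.counts[True]) | set(self.counts[False])
        return self

    def _log_score(self, tokens: list[str], label: bool) -> float:
        # Log prior plus Laplace-smoothed log likelihood of each known token.
        total = sum(self.counts[label].values())
        score = math.log(self.docs[label] / sum(self.docs.values()))
        for tok in tokens:
            if tok in self.vocab:  # tokens never seen in training are ignored
                score += math.log((self.counts[label][tok] + 1) / (total + len(self.vocab)))
        return score

    def predict(self, source: str) -> bool:
        # Prediction phase: choose the class with the higher posterior score.
        tokens = tokenize(source)
        return self._log_score(tokens, True) > self._log_score(tokens, False)
```

Trained on a toy set such as `["strcpy(buf, input); ...", "gets(line); ..."]` (vulnerable) versus `["strncpy(buf, input, sizeof buf);", ...]` (not vulnerable), the model flags a module calling `strcpy` and clears one using `snprintf` with a size bound. Such a toy set only demonstrates the mechanics; it is not evidence of predictive quality.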

3. Results

3.1. Basic Tasks and Functions of a Computer System Supporting the Management Process

- Setting up a new project, which involves entering information on, among other things, the project title, personal data of the project manager and individual contractors, their authorizations, and their levels of access to the system;

- Entering data into the knowledge base regarding project implementation (details, finances, and limitations);

- Automatic generation of the document templates required by the DO-178C standard (e.g., plans, standards, verification procedures and methods, reports, and other entries);

- Automatic generation of tests for the developed software and archiving the test results;

- Archiving correspondence between project contractors, program files, and their test results;

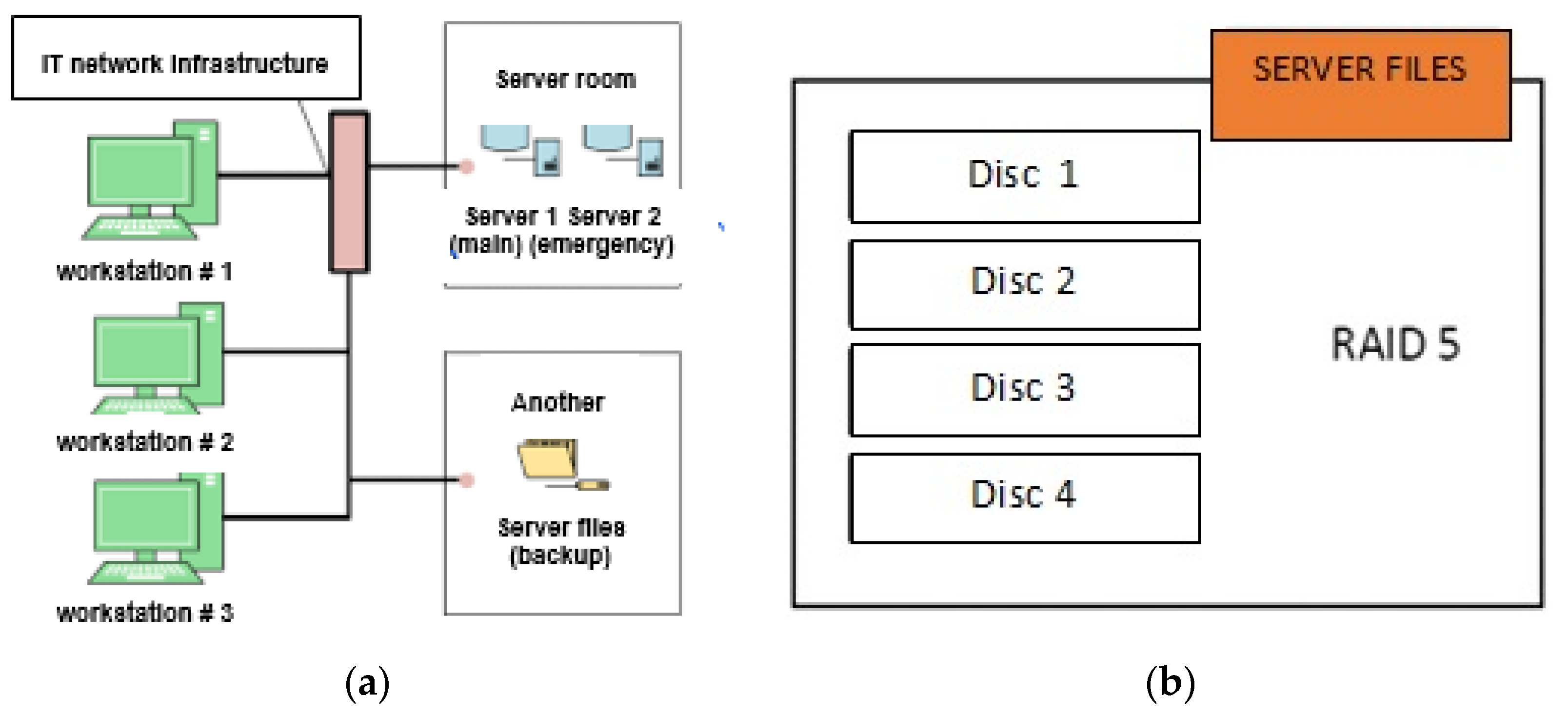

- Automatic backup of the files to a server located in another building within AFIT premises (protection against data loss);

- Providing project implementation data according to the authorizations assigned to system users;

- Reporting project status for the purposes of an audit or inspection, as per the entered guidelines.
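Two of the listed functions, providing data according to user authorizations and reporting project status, can be illustrated together. The Python sketch below assumes a simple ordered set of access levels; the level names, record categories, and functions are hypothetical and are not taken from the AFIT system.

```python
from dataclasses import dataclass
from enum import IntEnum


class AccessLevel(IntEnum):
    """Hypothetical ordered access levels; a higher level can read more."""
    OBSERVER = 1
    CONTRACTOR = 2
    MANAGER = 3


@dataclass(frozen=True)
class ProjectRecord:
    title: str
    category: str           # e.g. "plan", "test result", "finance"
    min_level: AccessLevel  # lowest access level allowed to read the record


@dataclass(frozen=True)
class User:
    name: str
    level: AccessLevel


def visible_records(user: User, records: list[ProjectRecord]) -> list[ProjectRecord]:
    """Return only the records the user's authorization permits, in original order."""
    return [r for r in records if user.level >= r.min_level]


def status_report(user: User, records: list[ProjectRecord]) -> dict[str, int]:
    """Count the records visible to the user per category (e.g. for an audit)."""
    report: dict[str, int] = {}
    for record in visible_records(user, records):
        report[record.category] = report.get(record.category, 0) + 1
    return report
```

Building the status report on top of `visible_records` means an audit summary can never disclose more than the requesting user is authorized to see.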

3.2. Structural Diagram of a Computer System Supporting the Management Process

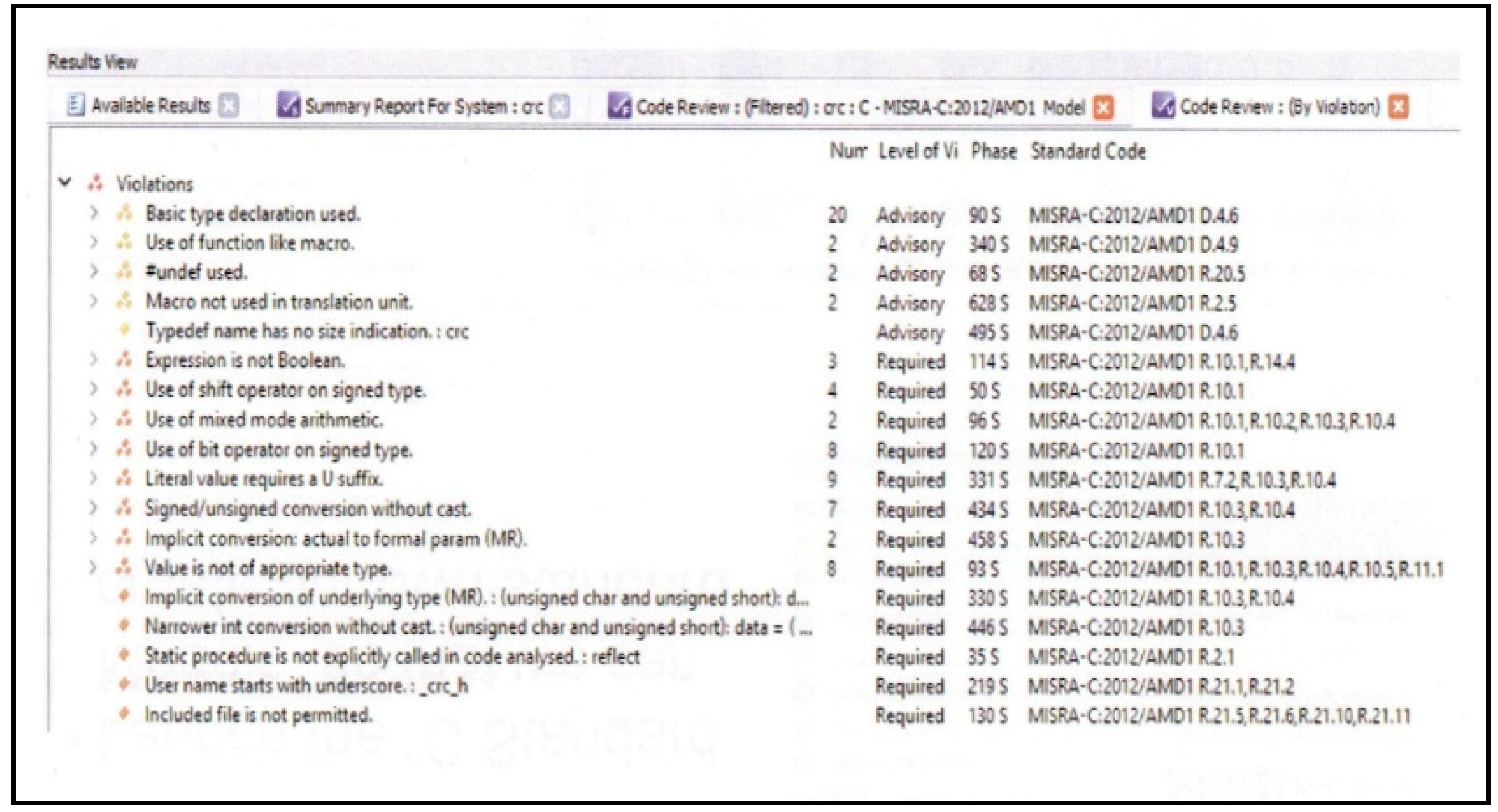

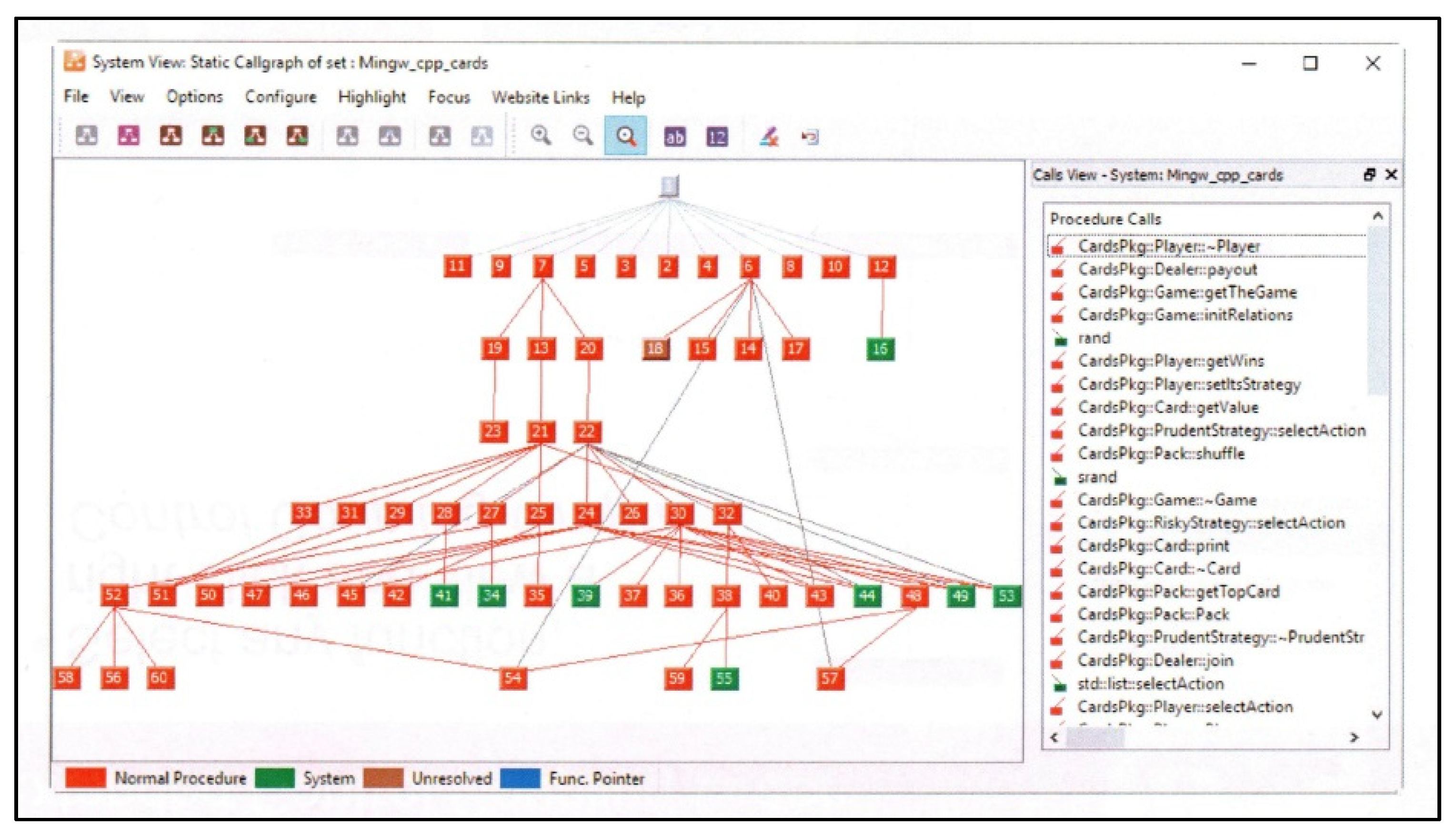

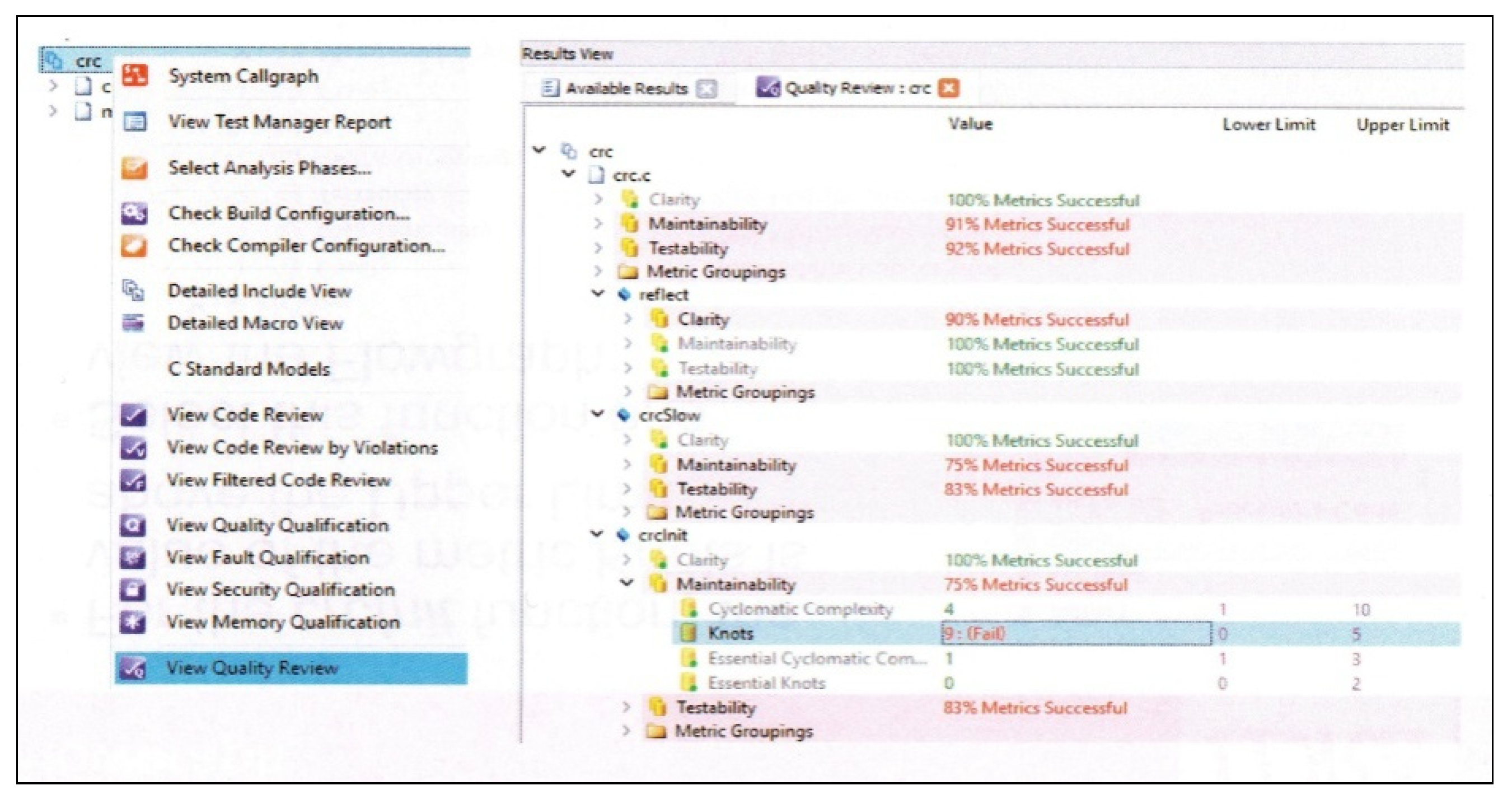

- Basic analysis for preliminary testing and verification of the developed software (Static Analysis, Dynamic Analysis, TBvision, TBrun, TBmisra, TBsafe);

- Advanced analysis for preliminary testing and verification of the developed software (Modified Condition/Decision Coverage, Information Flow Analysis, Dynamic Data Flow Coverage, Extract Semantic Analysis);

- Complementary analysis for preliminary testing and verification of the developed software (Test Vector Generation, TBeXtreme, TBmanager, Support for Target Testing, Tool Qualification);

- Additional testing software, which provides ongoing support for the process of developing individual software components.
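Modified Condition/Decision Coverage, listed among the advanced analyses, is the structural coverage criterion DO-178C requires for Level A software. The Python sketch below checks the unique-cause form of MC/DC for a decision given as a function of its conditions and a set of test vectors. It illustrates the criterion only; it says nothing about how the tools named above compute coverage, and the function names are hypothetical.

```python
from itertools import combinations
from typing import Callable, Sequence

Vector = tuple[bool, ...]


def independence_pairs(decision: Callable[..., bool],
                       tests: Sequence[Vector]) -> dict[int, list[tuple[Vector, Vector]]]:
    """For each condition, find test pairs showing its independent effect.

    Unique-cause MC/DC: the two vectors differ in exactly one condition,
    and the decision outcome differs between them.
    """
    if not tests:
        raise ValueError("at least one test vector is required")
    n = len(tests[0])
    pairs: dict[int, list[tuple[Vector, Vector]]] = {i: [] for i in range(n)}
    for a, b in combinations(tests, 2):
        differing = [i for i in range(n) if a[i] != b[i]]
        if len(differing) == 1 and decision(*a) != decision(*b):
            pairs[differing[0]].append((a, b))
    return pairs


def achieves_mcdc(decision: Callable[..., bool], tests: Sequence[Vector]) -> bool:
    """True if every condition has at least one independence pair."""
    return all(independence_pairs(decision, tests).values())
```

For the decision `a and (b or c)`, the four vectors (T,T,F), (F,T,F), (T,F,F), (T,F,T) pass the check, matching the commonly cited minimum of N + 1 tests for N conditions; dropping the last vector leaves condition `c` without an independence pair.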

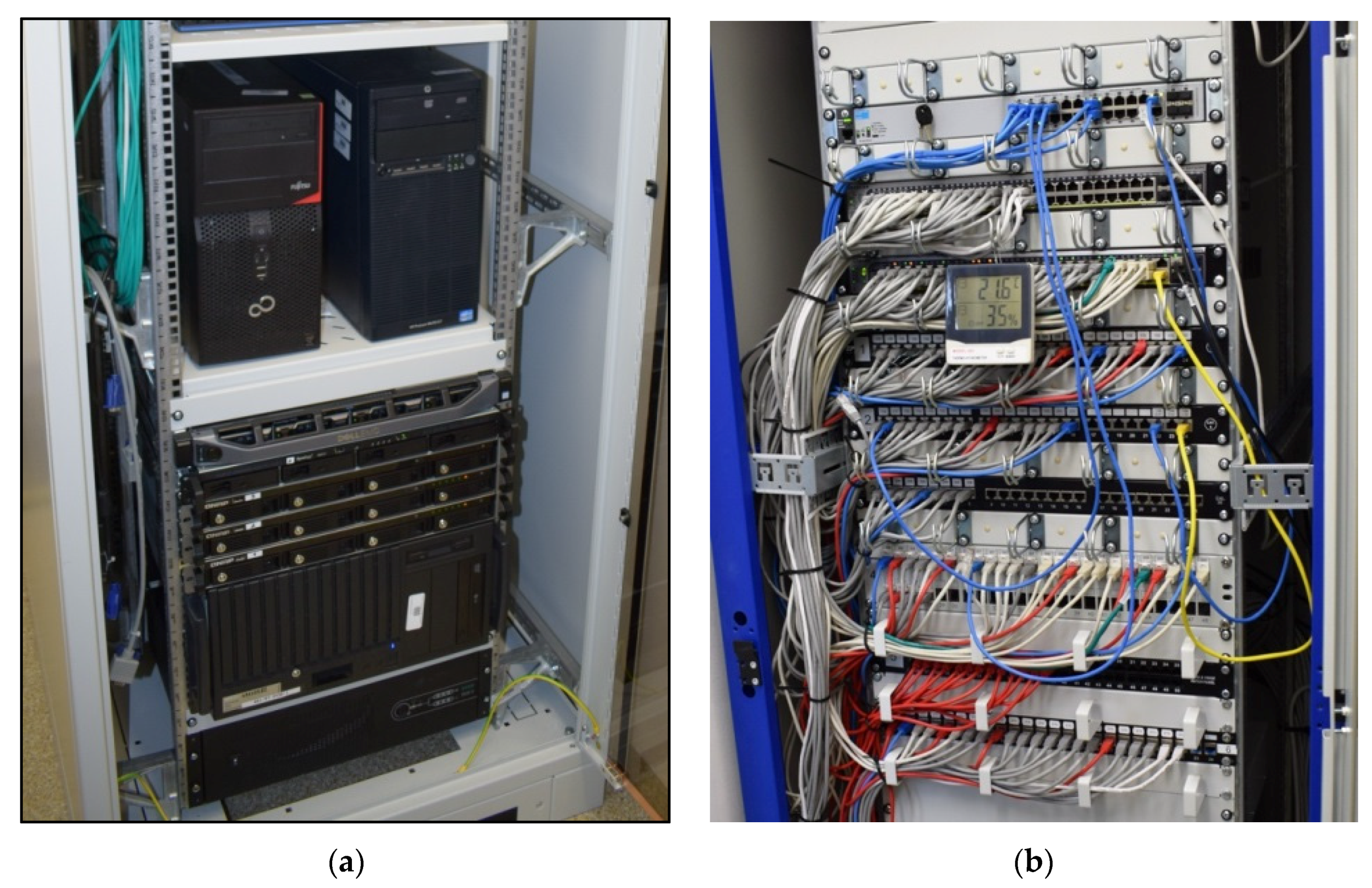

3.3. Technical Implementation of a Computer System Supporting the Management Process

4. Discussion

4.1. Meeting the Software Planning Requirements

- Internal study: “Preliminary requirements regarding the SWPL-1 flight parameter display system software”;

- Internal study: “Detailed requirements regarding the SWPL-1 flight parameter display system software”.

4.2. Meeting the Software Development Requirements

4.3. Meeting the Software Integration Requirements

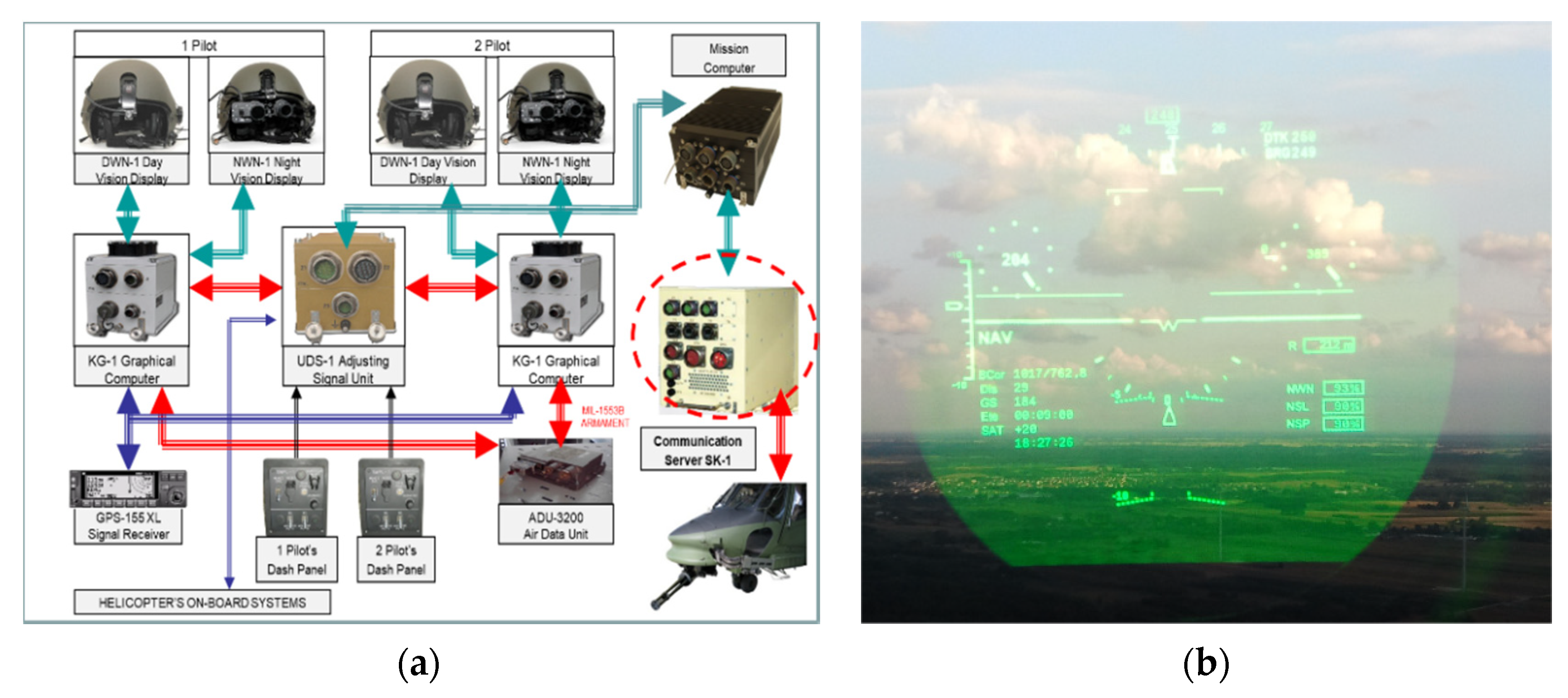

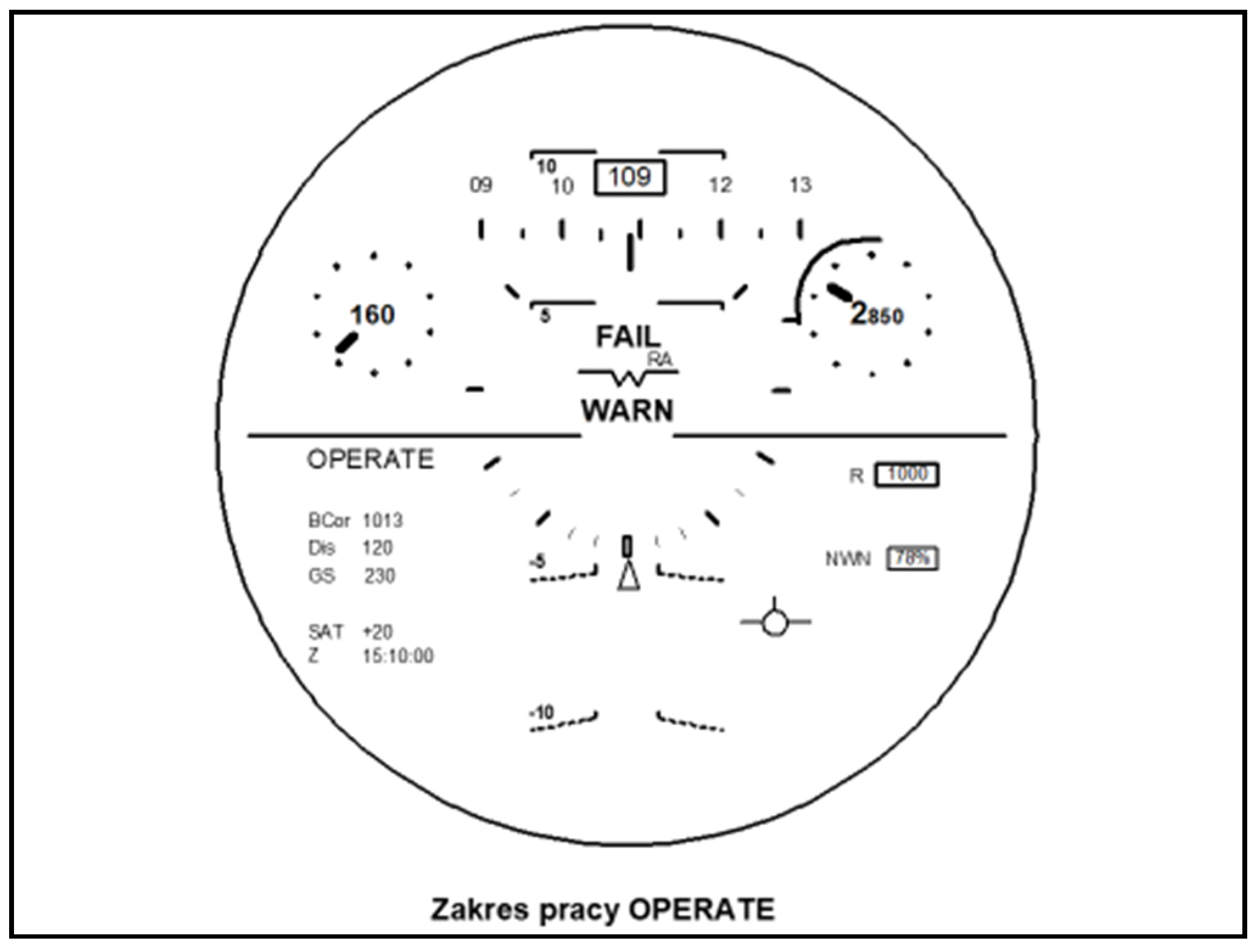

4.4. Selected Test Results Involving Software Developed for a Helmet-Mounted Flight-Data Display System

- BIOS processor direct operation system configuration;

- Windows XP Embedded operating system configuration;

- Graphic computer user software configuration;

- Graphic computer user software architecture (Mi17sys main module, Mi17konfigurator configuration module, and Mi17hud display module).

- Hardware/software integration testing in order to verify the correct functioning of software on the target computer;

- Software integration testing in order to verify the interrelationships between software requirements and components, and to verify the implementation of the software requirements and software components within the software architecture;

- Low-level testing in order to verify the implementation of low-level requirements.

- Level I is the test of system readiness and efficiency (reporting of and alerting about malfunctions); integrity is the system’s ability to notify the user in a timely manner whether it is fit for use in the navigation process;

- Level II is the correction of system errors and scaling; it concerns sensitivity to faulty measurement data sent to a pilot or system user as a result of processing individual datasets within the system;

- Level III is matching the indications and pilot support; reliability is the measure of the pilot’s confidence in the correctness of the information displayed on board the aircraft.

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Lewitowicz, J. Basics of Aircraft Operation. Operational Problems in Aircraft Design and Modernization; AFIT: Warsaw, Poland, 2012; ISBN 9788361021285.

- Dattatri, K. C++: Effective Object-Oriented Software Construction; Pearson Education Prentice: London, UK, 2000; ISBN 0130867691.

- Kasprzyk, R. Overview of software lifecycle models. Softw. Dev. J. 2006, 10, 52–57.

- RTCA DO-178C. Software Considerations in Airborne Systems and Equipment Certification. 2019. Available online: https://assets.vector.com/cms/content/know-how/aerospace/Documents/Complete_Verification_and_Validation_for_DO-178C.pdf (accessed on 29 January 2021).

- Rierson, L. Developing Safety-Critical Software. A Practical Guide for Aviation Software and DO-178C Compliance; Taylor & Francis Group: London, UK, 2013; ISBN 9781439813683.

- Szelmanowski, A.; Michalak, S.; Michałowski, P.; Kwiatkowski, T. Computer-Aided Management of Developing a Helmet-Mounted Display System Software as per DO-178C Requirements; AFIT: Warsaw, Poland, 2017.

- Borowski, J. Detailed Requirements Regarding the SWPL-1 Flight Parameter Display System Software; AFIT: Warsaw, Poland, 2011.

- Borowski, J. Preliminary Requirements Regarding the SWPL-1 Flight Parameter Display System Software; AFIT: Warsaw, Poland, 2008.

- Mell, P.; Scarfone, K.; Romanosky, S. A Complete Guide to the Common Vulnerability Scoring System, NIST. 2007. Available online: https://ws680.nist.gov/publication/get_pdf.cfm?pub_id=51198 (accessed on 29 January 2021).

- Mell, P.; Scarfone, K. The Common Configuration Scoring System (CCSS): Metrics for Software Security Configuration Vulnerabilities; NIST Interagency Report 7502; NIST: Gaithersburg, MD, USA, 2010.

- LeMay, E.; Scarfone, K.; Mell, P. The Common Misuse Scoring System (CMSS): Metrics for Software Misuse Vulnerabilities; NIST Interagency Report 7864; NIST: Gaithersburg, MD, USA, 2012.

- Anton, P.S.; Anderson, R.H.; Mesic, R.; Scheiern, M. Finding and Fixing Vulnerabilities in Information Systems: The Vulnerability Assessment and Mitigation Methodology; RAND: Pittsburgh, PA, USA, 2003.

- Kasprzyk, R.; Stachurski, A. A concept of standard-based vulnerability management automation for IT systems. Comput. Sci. Math. Model. 2016, 3, 33–38.

- The MITRE Corporation. Common Weakness Scoring System (CWSS). 2014. Available online: https://cwe.mitre.org/cwss/cwss_v1.0.1.html (accessed on 29 January 2021).

- Alhazmi, O.H.; Malaiya, Y.K.; Ray, I. Measuring, analyzing and predicting security vulnerabilities in software systems. Comput. Secur. 2007, 26, 219–228.

- Alhazmi, O.H.; Malaiya, Y.K. Application of Vulnerability Discovery Models to Major Operating Systems. IEEE Trans. Reliab. 2008, 57, 14–22.

- Moreno, J.A. AQAP 2105 (NATO Requirements for a Quality Plan for a Product Constituting the Subject of a Contract); NSO: Brussels, Belgium, 2009.

- Maj, J. AQAP 2210 (NATO Supplementary Requirements for AQAP 2110 on Ensuring Software Quality); NSO: Brussels, Belgium, 2006.

- Borowski, J. Quality Plan for an IT Project. SWPL-1 System Software; AFIT: Warsaw, Poland, 2015.

- Michalak, S. Software Documentation for SWPL-1 Flight Parameter Display System; AFIT: Warsaw, Poland, 2015.

- ISO/IEC. Information Technology–Security Techniques–Information Security Risk Management ISO/IEC FDIS 27005:2018. Available online: https://www.iso.org/standard/75281.html (accessed on 29 January 2021).

- Joint Task Force Transformation Initiative. Guide for Conducting Risk Assessments, NIST. 2012. Available online: https://nvlpubs.nist.gov/nistpubs/Legacy/SP/nistspecialpublication800-30r1.pdf (accessed on 29 January 2021).

- Shrivastava, A.K.; Sharma, R.; Kapur, P.K. Vulnerability Discovery Model for a Software System Using Stochastic Differential Equation. In Proceedings of the 2015 International Conference on Futuristic Trends on Computational Analysis and Knowledge Management (ABLAZE), Noida, India, 25–27 February 2015; pp. 199–205.

- Hoffmann, R. Vulnerability Discovery Models for a Software System Using Stochastic Differential Equations. Collegium Econ. Anal. Ann. 2017, 45, 177–187.

- Smith, B.; Williams, L. Using SQL hotspots in a prioritization heuristic for detecting all types of web application vulnerabilities. In Proceedings of the 2011 4th IEEE International Conference on Software Testing, Verification and Validation (ICST), Berlin, Germany, 21–25 March 2011.

- Shin, Y.; Meneely, A.; Williams, L.; Osborne, J.A. Evaluating complexity, code churn, and developer activity metrics as indicators of software vulnerabilities. IEEE Trans. Softw. Eng. 2011, 37, 772–787.

- Zieja, M.; Ważny, M.; Stępień, S. Outline of a method for estimating the durability of components or device assemblies while maintaining the required reliability level. Exploit. Reliab. Maint. Reliab. 2018, 20, 260–266.

- Zieja, M. A method of predicting reliability and lifetime of aeronautical hardware with characteristic function applied. In Proceedings of the Transport Means 2015: Proceedings of the International Scientific Conference, Kaunas, Lithuania, 22–23 October 2015.

- Zieja, M.; Ważny, M.; Stępień, S. Distribution determination of time of exceeding permissible condition as used to determine lifetimes of selected aeronautical devices/systems. Exploit. Reliab. 2016, 18, 57–64.

- Żurek, J.; Smalko, Z.; Zieja, M. Methods Applied to Identify Causes of Air Events. In Reliability, Risk and Safety: Theory and Applications; CRC Press: Boca Raton, FL, USA, 2010; pp. 1817–1822.

- Zieja, M.; Stachurski, A. An outline of the method for predicting IT vulnerabilities. In Proceedings of the MATEC Web of Conferences 210, 22nd International Conference on Circuits, Systems, Communications and Computers, Majorca, Spain, 14–17 July 2018.

- Shin, Y.; Williams, L. Can traditional fault prediction models be used for vulnerability prediction? Empir. Softw. Eng. 2013, 18, 25–29.

- Shin, Y.; Williams, L. Is complexity really the enemy of software security? In Proceedings of the 4th ACM Workshop on Quality of Protection (QoP), Alexandria, VA, USA, 27 October 2008.

- Shin, Y.; Williams, L. An empirical model to predict security vulnerabilities using code complexity metrics. In Proceedings of the ACM-IEEE International Symposium on Empirical Software Engineering and Measurement (ESEM), Kaiserslautern, Germany, 9–10 October 2008.

- Chowdhury, I.; Zulkernine, M. Using complexity, coupling, and cohesion metrics as early indicators of vulnerabilities. J. Syst. Archit. 2011, 57, 294–313.

- Neuhaus, S.; Zimmermann, T.; Holler, C.; Zeller, A. Predicting vulnerable software components. In Proceedings of the 14th ACM Conference on Computer and Communications Security (CCS), Alexandria, VA, USA, 29 October–2 November 2007.

- Zimmermann, T.; Nagappan, N.; Williams, L. Searching for a needle in a haystack: Predicting security vulnerabilities for Windows Vista. In Proceedings of the 2010 3rd International Conference on Software Testing, Verification and Validation (ICST), Paris, France, 6–9 April 2010.

- Scandariato, R.; Walden, J.; Hovsepyan, A.; Joosen, W. Predicting vulnerable software components via text mining. IEEE Trans. Softw. Eng. 2014, 40, 993–1006.

- Pang, Y.; Xue, X.; Namin, A.S. Predicting vulnerable software components through n-gram analysis and statistical feature selection. In Proceedings of the 2015 IEEE 14th International Conference on Machine Learning and Applications (ICMLA), Miami, FL, USA, 9–11 December 2015; pp. 543–548.

- Siavvas, M.; Gelenbe, E.; Kehagias, D.; Tzovaras, D. Static Analysis-Based Approaches for Secure Software Development. In Security in Computer and Information Sciences Euro-CYBERSEC 2018. Communications in Computer and Information Science; Gelenbe, E., Campegiani, P., Czachórski, T., Katsikas, S.K., Komnios, L., Romano, L., Tzovaras, D., Eds.; Springer: Cham, Switzerland, 2018; Volume 821.

- Pazur, A.; Szelmanowski, A.; Borowski, J.; Michałowski, P. Implementation of the DO-178C standard requirements in the process of creating avionics software dedicated to the helmet-mounted imaging system SWPL-1 CYKLOP. BUSES – Technol. Oper. Transp. Syst. 2017, 18, 351–358.

- Szelmanowski, A.; Pazur, A.; Cieślik, A.; Michalak, S. Computer-aided system for managing the life cycle of avionic software according to the requirements of the standard DO-178C. BUSES – Technol. Oper. Transp. Syst. 2017, 18, 442–449.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Zieja, M.; Szelmanowski, A.; Pazur, A.; Kowalczyk, G. Computer Life-Cycle Management System for Avionics Software as a Tool for Supporting the Sustainable Development of Air Transport. Sustainability 2021, 13, 1547. https://doi.org/10.3390/su13031547