Real-Time Traffic Flow Forecasting via a Novel Method Combining Periodic-Trend Decomposition

Abstract

1. Introduction

2. Literature Review

2.1. Traffic Flow Forecasting Models

- Statistical models. In early studies, many researchers proposed statistical models that predict traffic flow under the assumptions of linearity and stationarity. The classical statistical models include autoregressive integrated moving average (ARIMA) [14,15,33,44], Kalman filtering (KF) [11,26], historical average (HA) [33], and exponential smoothing [39,40]. These models are simple and have low computational complexity. However, traffic flow exhibits both linear and nonlinear behavior, which makes it complex and volatile [4,10]. For example, traffic volume rises rapidly during rush hours and falls rapidly afterwards. Because they assume linearity, these statistical models cannot capture such characteristics well.

- Artificial intelligence (AI) models. Later studies showed that traffic flow is nonlinear and nonstationary [4,5,31], and many researchers developed AI models to capture this nonlinearity. These models mainly include k-nearest neighbor (KNN) [27,28,33], support vector regression (SVR) [5,12,29,41], artificial neural network (ANN) [7,30,31,33], wavelet neural network (WNN) [7,37], and extreme learning machine (ELM) [23,40]. Owing to their nonlinear modelling ability, AI models usually outperform statistical models when the dataset is large. In recent years, deep learning models, which extend ANN with more complex network structures, have been introduced to achieve more accurate predictions. Well-known deep learning models include stacked autoencoders (SAE) [4], long short-term memory neural network (LSTM) [9,34,45], deep belief network (DBN) [16,42], and convolutional neural network (CNN) [32]. LSTM in particular has shown superior performance in traffic flow forecasting because it models temporal dependencies [9,34,45]. Thanks to their universal approximation capability, deep learning models can, in principle, approximate any continuous nonlinear function, and they have shown outstanding performance on multi-source data. Nevertheless, their high computational complexity makes training time-consuming.

- Hybrid models. Any method that combines two or more models can be treated as a hybrid model. Hybrid models have flexible frameworks and can integrate the strengths of different methods. Moreover, both theoretical and empirical findings indicate that hybridizing different models is an effective way to improve prediction accuracy. Hybrid models have therefore attracted more attention than the two categories above. Zeng et al. [35] and Tan et al. [36] both combined ARIMA with ANN to capture both the linearity and the nonlinearity of traffic flow. To mine the spatial-temporal features of traffic flow, Luo et al. [6] proposed a hybrid of KNN and LSTM, and Li et al. [38] proposed a hybrid of ARIMA and SVR. To enhance prediction performance, Hong et al. [41] and Zhang et al. [42] employed a genetic algorithm (GA) to optimize the hyperparameters of SVR and DBN, respectively, and Feng et al. [43] employed particle swarm optimization (PSO) to optimize the hyperparameters of SVR.

2.2. STL and the Proposed PTD Approach

2.3. Contributions

- A novel PTD approach is formulated specifically for real-time traffic flow decomposition. We develop PTD to extract the trend and periodic characteristics of traffic flow. To fully account for the dynamics of real-world traffic flow, PTD is adapted from STL to decompose traffic flow data. PTD decomposes the original traffic flow into three additive components: trend, periodicity, and remainder. These components reveal the inner characteristics of traffic flow.

- A novel method combining PTD is developed to predict traffic flow more accurately. After decomposition, the periodicity is predicted by cycling, i.e., by repeating the periodic pattern, while the trend and remainder are each predicted by a dedicated model. The three predicted components are then summed to obtain the final forecast.

- Multi-step prediction is implemented to provide more information about future traffic flow. Traditional single-step prediction cannot provide enough information for further planning and decision-making in ITS, so multi-step prediction is necessary. Based on an iterated strategy, a multi-step prediction approach is developed to extend the proposed hybrid method.
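The iterated strategy can be illustrated with a short Python sketch. It assumes a generic one-step predictor `one_step` that maps a window of the most recent values to the next value; in this paper that role would be played by a trained ARIMA, SVR, ANN or LSTM model, but the function and parameter names here are hypothetical stand-ins. Each prediction is fed back as an input for the next step, which is also why errors can accumulate over the horizon.

```python
from typing import Callable, List, Sequence


def iterated_forecast(history: Sequence[float],
                      one_step: Callable[[Sequence[float]], float],
                      horizon: int,
                      window: int) -> List[float]:
    """Iterated (recursive) multi-step forecasting with a one-step model.

    history  : observed values, oldest first
    one_step : hypothetical one-step predictor mapping a window to the next value
    horizon  : number of future steps to predict
    window   : number of most recent values the predictor consumes
    """
    buf = list(history[-window:])
    preds: List[float] = []
    for _ in range(horizon):
        y_hat = float(one_step(buf))
        preds.append(y_hat)
        buf = buf[1:] + [y_hat]   # slide the window: drop the oldest, append the prediction
    return preds
```

For instance, `iterated_forecast([1.0, 2.0, 3.0], lambda w: w[-1] + 1.0, horizon=3, window=3)` returns `[4.0, 5.0, 6.0]`: only one model is trained, and the horizon is covered by reusing its own outputs.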

3. Methodology

3.1. Periodic-Trend Decomposition (PTD) Approach

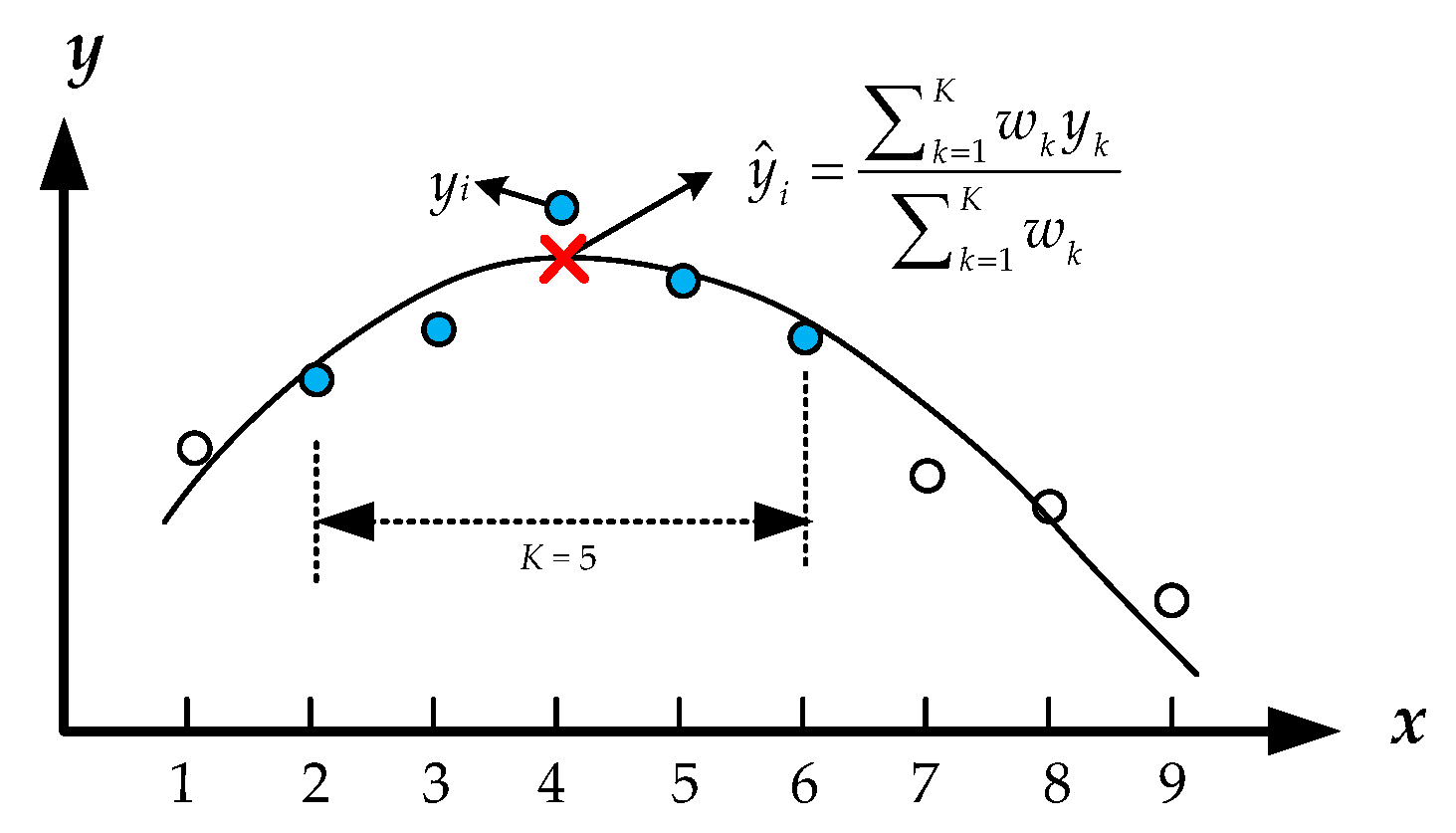

3.1.1. Locally Weighted Regression (LOWESS)

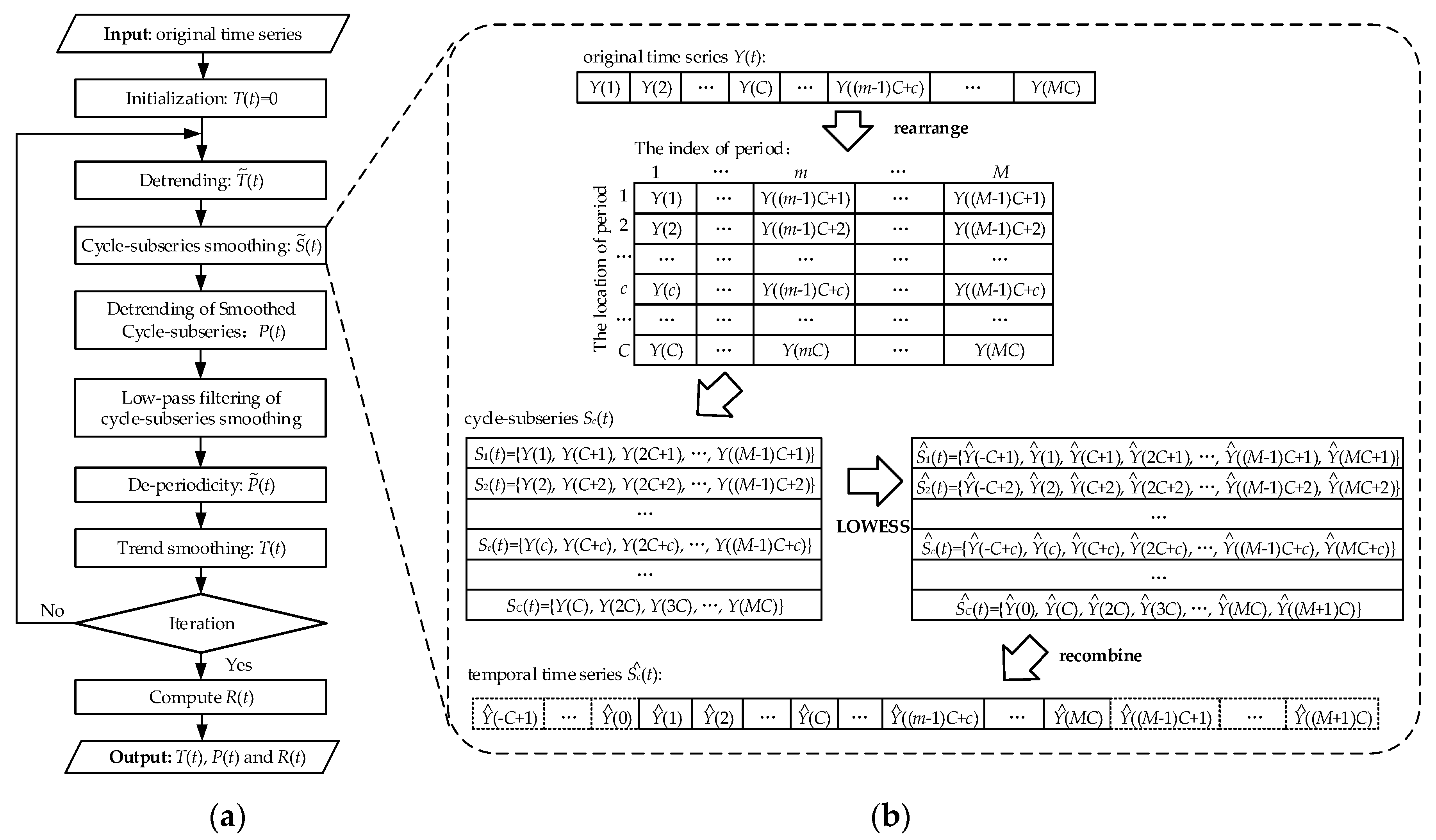
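Since the details of LOWESS are given only in the figure, the following Python/NumPy sketch shows a common form of it: for each evaluation point, a straight line is fitted by weighted least squares to the k nearest observations, with tricube weights that decrease with distance. It assumes that the smoothing parameters K1 to K4 used in the decomposition denote the number of neighbours in each local fit (analogous to the neighbourhood sizes in STL), and it omits the robustness iterations of the full procedure; the function name `lowess` is hypothetical. Because each local fit is a line, the smooth can also be evaluated slightly outside the observed range, which is what the one-period extension in Step 2 of the in-sample decomposition requires.

```python
import numpy as np


def lowess(x, y, x_eval, k):
    """Locally weighted linear regression (single pass, no robustness iterations).

    x, y   : 1-D samples of the independent and dependent variable
    x_eval : points at which the smooth is evaluated (may lie outside x)
    k      : number of nearest neighbours used in each local fit (assumed meaning of K)
    """
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    k = min(max(k, 3), len(x))                       # use at least 3 points per local fit
    fitted = []
    for x0 in np.atleast_1d(np.asarray(x_eval, dtype=float)):
        dist = np.abs(x - x0)
        idx = np.argsort(dist, kind="stable")[:k]    # k nearest neighbours of x0
        h = dist[idx].max()                          # local bandwidth
        # Tricube weights: 1 at distance 0, falling to 0 at the k-th nearest neighbour.
        w = np.clip(1.0 - (dist[idx] / h) ** 3, 0.0, None) ** 3 if h > 0 else np.ones(k)
        sw = np.sqrt(w)
        # Weighted least squares for y ~ b0 + b1 * (x - x0); b0 is the fitted value at x0.
        # lstsq returns the minimum-norm solution if the weighted design is rank-deficient.
        A = np.column_stack([np.ones(k), x[idx] - x0]) * sw[:, None]
        beta = np.linalg.lstsq(A, y[idx] * sw, rcond=None)[0]
        fitted.append(beta[0])
    return np.array(fitted)
```

On exactly linear data the local fits reproduce the line, so `lowess(range(1, 11), [2 * v + 1 for v in range(1, 11)], [0, 5, 11], k=4)` gives 1, 11 and 23 up to floating-point rounding, including the two points outside the observed range.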

3.1.2. Decomposition for In-Sample

- Step 1: Detrending. The trend T(t) is removed from the original data Y(t) to obtain the detrended series Y(t) − T(t).

- Step 2: Cycle-subseries smoothing. The details of this step are displayed in Figure 2b. Each cycle-subseries Sc(t) of the detrended series is smoothed by LOWESS with K1 and extended one period forward and one period backward. Specifically, with independent variables X = [1, 2, 3, 4, …, M]ᵀ and the values of the c-th cycle-subseries as dependent variables, LOWESS with K1 is evaluated at X = [0, 1, 2, 3, 4, …, M, M + 1]ᵀ. The smoothed subseries for c = 1, 2, ..., C are then arranged in chronological order and recombined into a temporary time series indexed by t = −C + 1, −C + 2, ..., MC + C. Here, the cycle-subseries Sc(t) is the series composed of the elements at the same position in each period: for position c, it collects the detrended values at t = c, c + C, c + 2C, ..., c + (M − 1)C.

- Step 3: Low-pass filtering of the smoothed cycle-subseries. The temporary time series, t = −C + 1, −C + 2, ..., MC + C, is smoothed by a low-pass filter to obtain the series L(t), t = 1, 2, ..., MC. The low-pass filter consists of a moving average of length C, followed by a second moving average of length C, a moving average of length 3, and finally a LOWESS smooth with K2. Since a moving average of length n shortens a series by n − 1 points, the (M + 2)C points of the temporary series shrink to (M + 2)C − 2(C − 1) − 2 = MC points.

- Step 4: Detrending of the smoothed cycle-subseries. The preliminary periodicity is obtained by subtracting L(t) from the temporary time series over t = 1, 2, ..., MC.

- Step 5: De-periodicity. The periodicity P(t) is subtracted from the original series Y(t) to obtain the periodically adjusted series Y(t) − P(t).

- Step 6: Trend smoothing. The periodically adjusted series is smoothed by LOWESS with K3 to obtain the trend T(t), t = 1, 2, ..., MC.
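The six steps can be condensed into a single-pass Python/NumPy sketch. Several details are assumptions rather than statements of the paper: K1, K2 and K3 are taken as neighbourhood sizes for a basic local linear LOWESS without robustness weights, the trend is initialised to zero as in the first inner-loop pass of STL, the series is assumed to contain M whole periods of length C, and the function names (`lowess`, `moving_average`, `ptd_in_sample`) are hypothetical. The temporary series is stored so that array index j corresponds to t = j − C + 1, which makes its in-sample part `temp[C:C + M * C]`.

```python
import numpy as np


def lowess(x, y, x_eval, k):
    """Local linear fit with tricube weights over the k nearest neighbours of each x0."""
    x, y = np.asarray(x, dtype=float), np.asarray(y, dtype=float)
    k = min(max(k, 3), len(x))
    fitted = []
    for x0 in np.atleast_1d(np.asarray(x_eval, dtype=float)):
        dist = np.abs(x - x0)
        idx = np.argsort(dist, kind="stable")[:k]
        h = dist[idx].max()
        w = np.clip(1.0 - (dist[idx] / h) ** 3, 0.0, None) ** 3 if h > 0 else np.ones(k)
        sw = np.sqrt(w)
        A = np.column_stack([np.ones(k), x[idx] - x0]) * sw[:, None]
        fitted.append(np.linalg.lstsq(A, y[idx] * sw, rcond=None)[0][0])
    return np.array(fitted)


def moving_average(s, n):
    """Moving average of length n; the output is n - 1 points shorter than s."""
    return np.convolve(s, np.ones(n) / n, mode="valid")


def ptd_in_sample(Y, C, K1, K2, K3, trend=None):
    """One pass of Steps 1-6 on a series Y made of M whole periods of length C.

    Returns trend T, periodicity P and remainder R = Y - T - P (each of length M * C).
    """
    Y = np.asarray(Y, dtype=float)
    M, extra = divmod(len(Y), C)
    if extra:
        raise ValueError("Y must contain a whole number of periods")
    T = np.zeros_like(Y) if trend is None else np.asarray(trend, dtype=float)

    detrended = Y - T                                   # Step 1: detrending
    X = np.arange(1, M + 1)                             # period index of each subseries value
    X_ext = np.arange(0, M + 2)                         # plus one period back and one forward
    temp = np.empty((M + 2) * C)                        # temporary series, t = -C+1 .. MC+C
    for c in range(C):                                  # Step 2: cycle-subseries smoothing
        temp[c::C] = lowess(X, detrended[c::C], X_ext, K1)

    low = moving_average(moving_average(temp, C), C)    # Step 3: low-pass filter
    low = moving_average(low, 3)                        # (M + 2)C points shrink to MC
    t = np.arange(1, M * C + 1)
    L = lowess(t, low, t, K2)

    P = temp[C:C + M * C] - L                           # Step 4: keep t = 1..MC, remove L(t)
    T = lowess(t, Y - P, t, K3)                         # Steps 5-6: de-periodicity, trend
    return T, P, Y - T - P
```

For a purely periodic input such as `np.tile([1.0, -1.0, 2.0, -2.0], 6)` with C = 4, each moving-average window spans one full period and sums to zero, so the sketch returns that pattern as the periodicity and a zero trend. Passing the returned trend back through `trend` would repeat Steps 1 to 6, mirroring STL's inner loop; the number of passes is a free choice in this sketch.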

3.1.3. Decomposition for Out-Of-Sample

- Step 1: Calculating periodicity. The periodicity $p_t$ is taken from Q(t) (obtained from Equation (9)) according to the position of time t within the period.

- Step 2: De-periodicity. The periodicity $p_t$ is subtracted from the original datum $z_t$ to obtain the periodically adjusted datum $z_t - p_t$.

- Step 3: Calculating the trend component. The periodically adjusted datum is appended to the end of the periodically adjusted series (obtained from Equation (10)), and LOWESS with K4 is evaluated at this newly appended point to obtain the trend $t_t$.

- Step 4: Calculating the remainder. The remainder $r_t$ is computed as $r_t = z_t - p_t - t_t$.
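A per-observation version of the out-of-sample steps might look as follows. It is a sketch under stated assumptions: `pattern` holds one period of the in-sample periodicity Q(t), the in-sample series starts at the first position of a period so that a datum at 0-based index n sits at position n mod C, K4 is a neighbourhood size for a basic local linear LOWESS, and `lowess_at` and `ptd_out_of_sample` are hypothetical names.

```python
import numpy as np


def lowess_at(x, y, x0, k):
    """Local linear fit with tricube weights over the k points nearest x0, evaluated at x0."""
    x, y = np.asarray(x, dtype=float), np.asarray(y, dtype=float)
    k = min(max(k, 3), len(x))
    dist = np.abs(x - x0)
    idx = np.argsort(dist, kind="stable")[:k]
    h = dist[idx].max()
    w = np.clip(1.0 - (dist[idx] / h) ** 3, 0.0, None) ** 3 if h > 0 else np.ones(k)
    sw = np.sqrt(w)
    A = np.column_stack([np.ones(k), x[idx] - x0]) * sw[:, None]
    return float(np.linalg.lstsq(A, y[idx] * sw, rcond=None)[0][0])


def ptd_out_of_sample(z, n, pattern, adjusted_history, K4):
    """Decompose one new observation z observed at 0-based time index n.

    pattern          : one period of the in-sample periodicity (length C)
    adjusted_history : list holding the periodically adjusted series so far;
                       the new adjusted datum is appended to it in place
    Returns (p, t, r) with z = p + t + r.
    """
    p = float(pattern[n % len(pattern)])            # Step 1: periodicity by position in period
    adjusted_history.append(z - p)                  # Step 2: periodically adjusted datum
    x = np.arange(len(adjusted_history))
    t = lowess_at(x, adjusted_history, x[-1], K4)   # Step 3: trend at the appended point
    r = z - p - t                                   # Step 4: remainder
    return p, t, r
```

Because the trend is evaluated at the newest point, the local fit only uses past observations, so each new datum can be decomposed as soon as it arrives without revising earlier components.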

3.2. Prediction Models for the Decomposed Components

3.2.1. Autoregressive Integrated Moving Average (ARIMA)

3.2.2. Support Vector Regression (SVR)

3.2.3. Artificial Neural Network (ANN)

3.2.4. Long Short-Term Memory Neural Network (LSTM)

3.3. Multi-Step Prediction

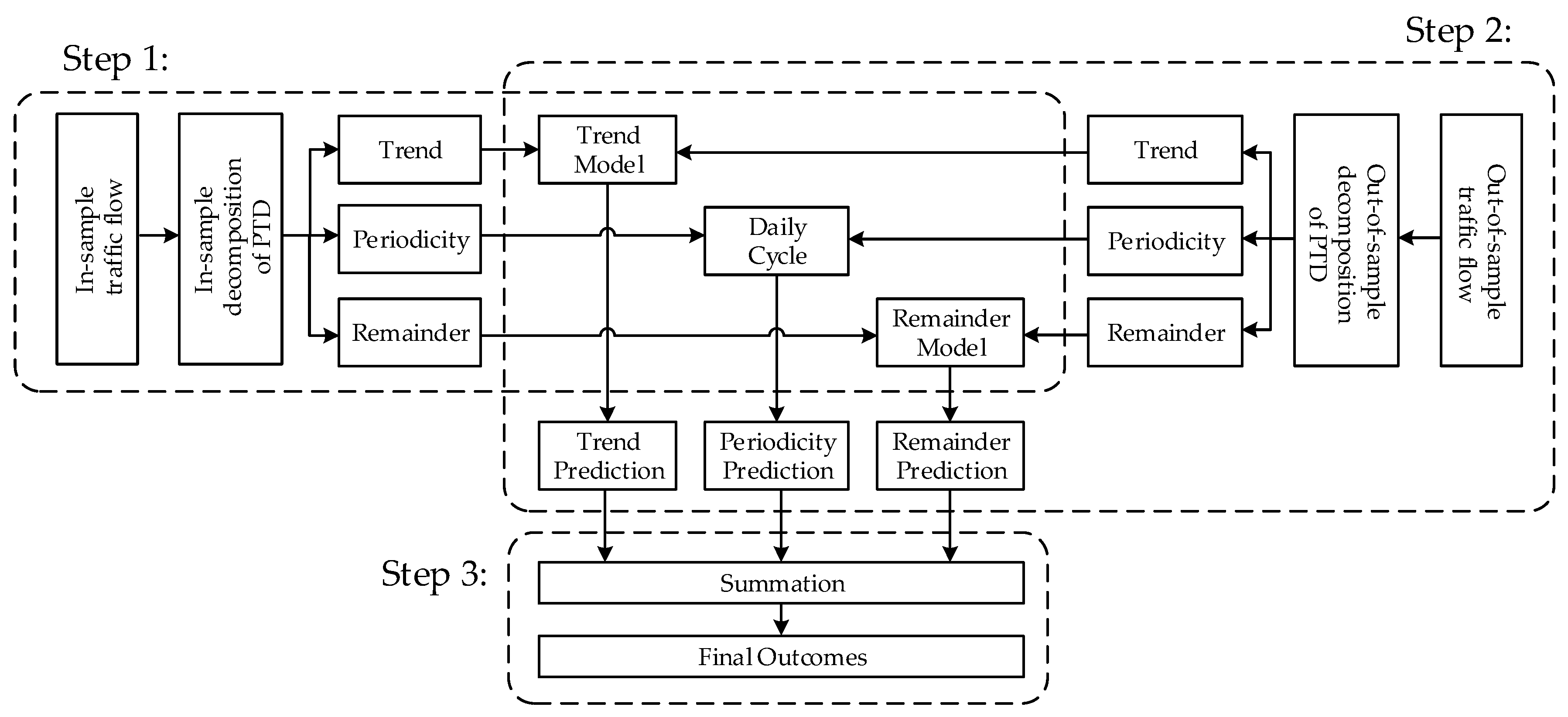

3.4. Hybrid Prediction Models

- Step 1: In-sample decomposition and model training. The in-sample data are decomposed by the in-sample PTD into three components: trend, periodicity, and remainder. The trend and remainder are then used to train separate models (described in Section 3.2), while the periodicity is retained for cyclic prediction.

- Step 2: Out-of-sample decomposition and multi-step prediction. The out-of-sample data are likewise decomposed by the out-of-sample PTD into trend, periodicity, and remainder. The trend and remainder are fed into their corresponding trained models, which output the multi-step predictions, and the periodicity is predicted by cycling.

- Step 3: Integration for the final outcomes. The predicted results of the three components are summed to obtain the final forecast.
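The three steps can be sketched in Python as below. The component models are hypothetical stand-ins (plain lambdas) for the trained ARIMA, SVR, ANN or LSTM models, the periodicity is forecast by cycling through one stored period `pattern` starting at position `next_pos`, and the trend and remainder are extended with the iterated strategy; none of the names come from the paper.

```python
from typing import Callable, List, Sequence

OneStep = Callable[[Sequence[float]], float]


def iterated(history: Sequence[float], model: OneStep, horizon: int, window: int) -> List[float]:
    """Recursive multi-step forecast: each prediction is fed back as the newest input."""
    buf = list(history[-window:])
    out: List[float] = []
    for _ in range(horizon):
        y = float(model(buf))
        out.append(y)
        buf = buf[1:] + [y]
    return out


def hybrid_forecast(pattern: Sequence[float], next_pos: int,
                    trend_hist: Sequence[float], rem_hist: Sequence[float],
                    trend_model: OneStep, rem_model: OneStep,
                    horizon: int, window: int) -> List[float]:
    """Sum of cycled periodicity and model-based trend and remainder forecasts."""
    C = len(pattern)
    periodic = [pattern[(next_pos + h) % C] for h in range(horizon)]   # cycling
    trend = iterated(trend_hist, trend_model, horizon, window)
    rem = iterated(rem_hist, rem_model, horizon, window)
    return [p + t + r for p, t, r in zip(periodic, trend, rem)]
```

For example, `hybrid_forecast([1.0, -1.0, 2.0, -2.0], 0, [20.0, 20.5, 21.0], [0.0, 0.0], lambda w: 2 * w[-1] - w[-2], lambda w: 0.0, horizon=3, window=2)` returns `[22.5, 21.0, 24.5]`: a linearly extrapolated trend of 21.5, 22.0 and 22.5 plus the cycled pattern values 1, −1 and 2.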

4. Experiments

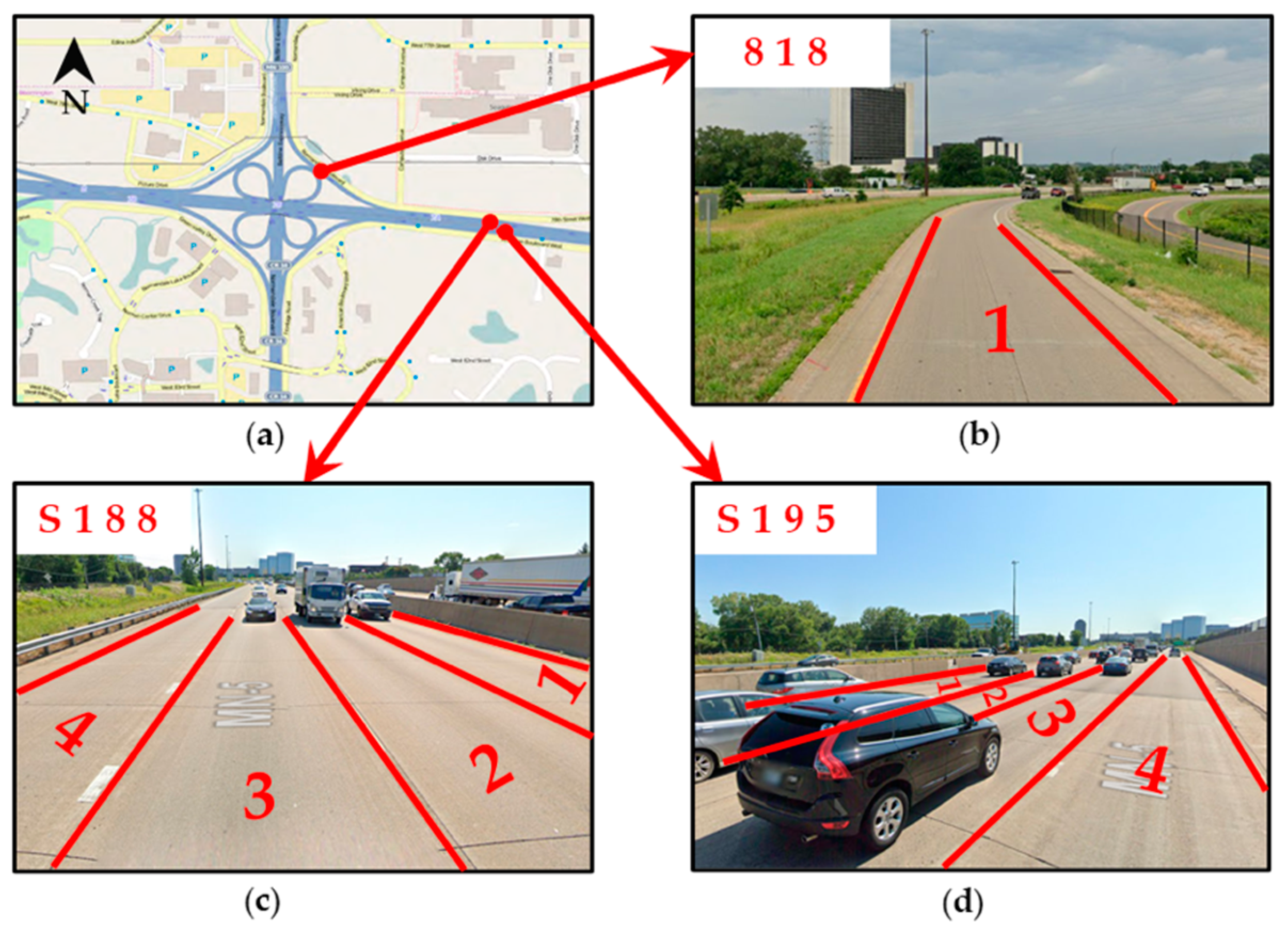

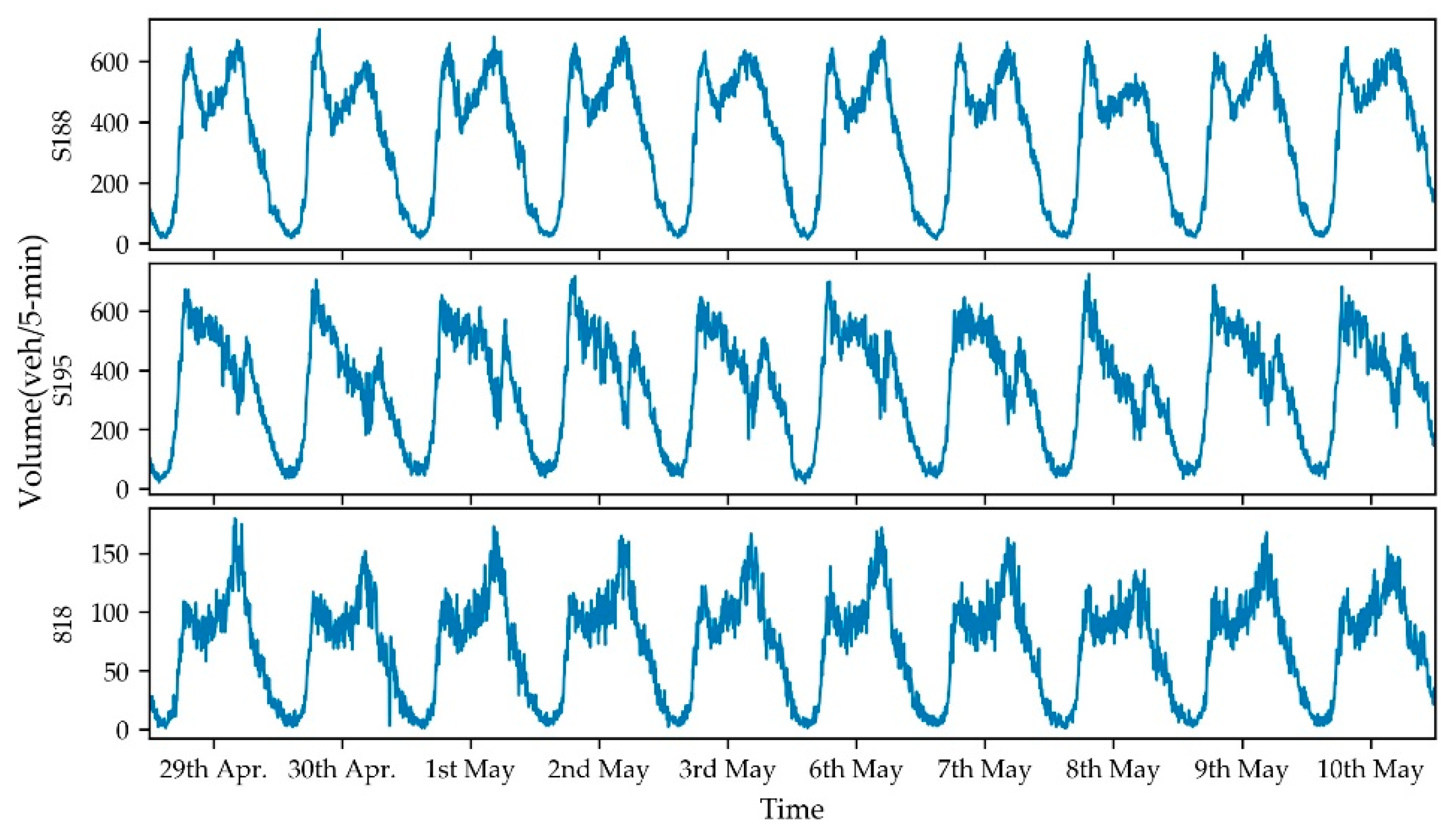

4.1. Data Description

4.2. Design of Experiments

- ARIMA: The differencing order d is set according to the augmented Dickey-Fuller (ADF) unit root test, and the autoregressive order p and the moving average order q are both selected from 0 to 24 by minimizing the BIC (see Equation (15)).

- SVR: The RBF kernel coefficient γ, the regularization factor H, and the tube width ε are each selected from {10⁻⁵, 10⁻⁴, 10⁻³, 10⁻², 10⁻¹, 1, 10, 10², 10³, 10⁴}.

- ANN: Following previous research [30,31], a neural network with one hidden layer, which is frequently used in the literature, is adopted in our experiment. The number of hidden neurons u is selected from 2 to 40 in steps of 2. The model is trained with the Adam optimizer using a mean squared error (MSE) loss, a learning rate of 0.001, a batch size of 256, and 500 epochs.
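The BIC-based order selection can be illustrated with a deliberately simplified Python/NumPy sketch. It swaps ARIMA for a pure autoregressive AR(p) model fitted by ordinary least squares (moving-average terms and the ADF-based choice of d are omitted) and uses the standard Gaussian form BIC = k ln n − 2 ln L̂, which up to constants equals n ln(RSS/n) + k ln n; Equation (15) is assumed to be equivalent. All orders are compared on the same effective sample so that their BIC values are comparable, and the function names are hypothetical.

```python
import numpy as np


def ar_bic(y, p, p_max):
    """BIC of an AR(p) model with intercept, fitted by OLS on observations p_max..len(y)-1."""
    y = np.asarray(y, dtype=float)
    n = len(y) - p_max                          # common effective sample size for all p
    target = y[p_max:]
    # Design matrix: intercept plus lags 1..p aligned with the target.
    lags = [y[p_max - j:len(y) - j] for j in range(1, p + 1)]
    X = np.column_stack([np.ones(n)] + lags)
    beta = np.linalg.lstsq(X, target, rcond=None)[0]
    rss = float(np.sum((target - X @ beta) ** 2))
    k = p + 2                                   # AR coefficients, intercept, noise variance
    return n * np.log(rss / n) + k * np.log(n)


def select_ar_order(y, p_max=24):
    """Return the order in 0..p_max with minimum BIC, together with all BIC values."""
    bics = [ar_bic(y, p, p_max) for p in range(p_max + 1)]
    return int(np.argmin(bics)), bics
```

With a full ARIMA estimator in place of `ar_bic`, the same loop would run over both p and q, i.e. 25 × 25 = 625 candidate models per series for the 0 to 24 range used here.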

4.3. Performance Measures

5. Results and Discussions

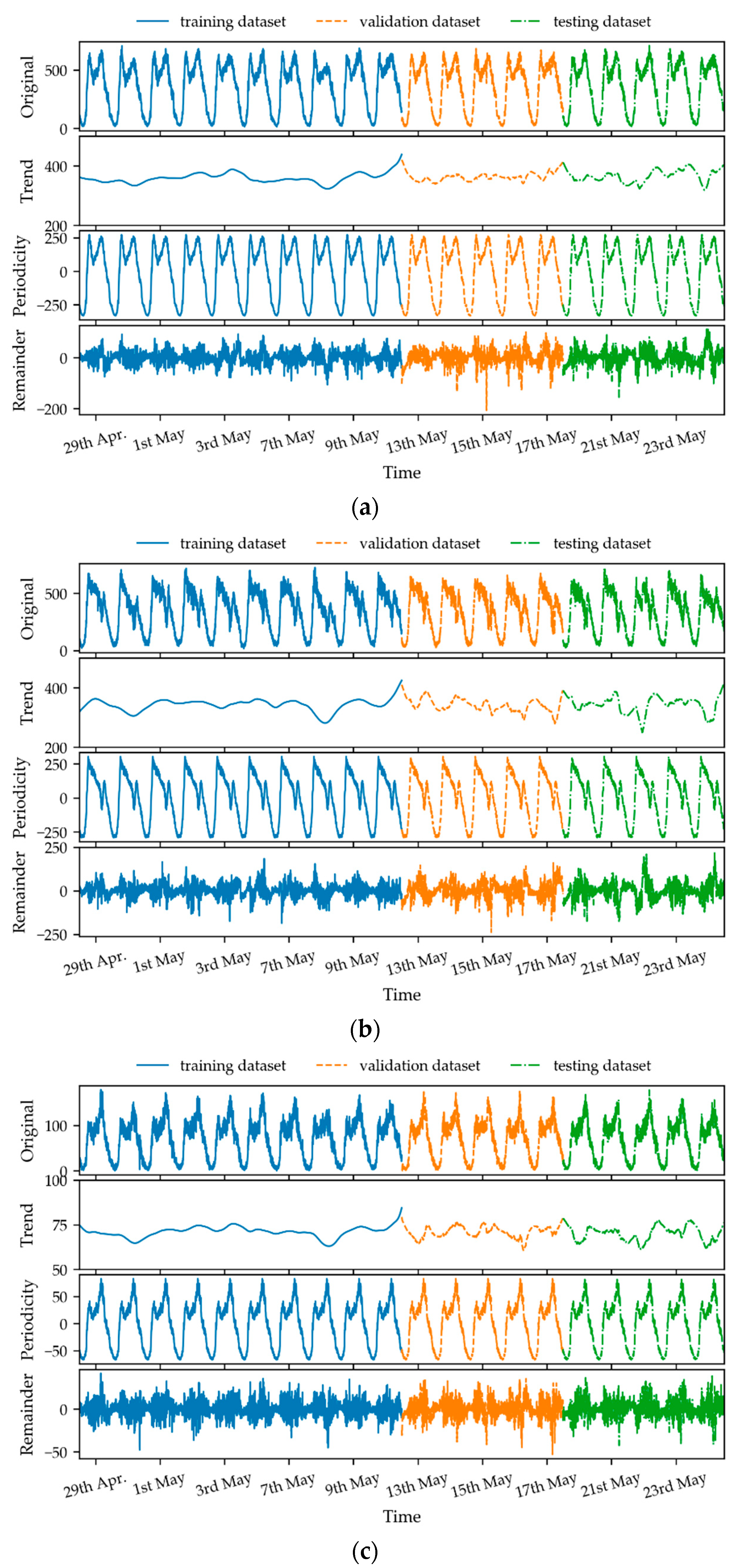

5.1. Analysis of Decomposition Results

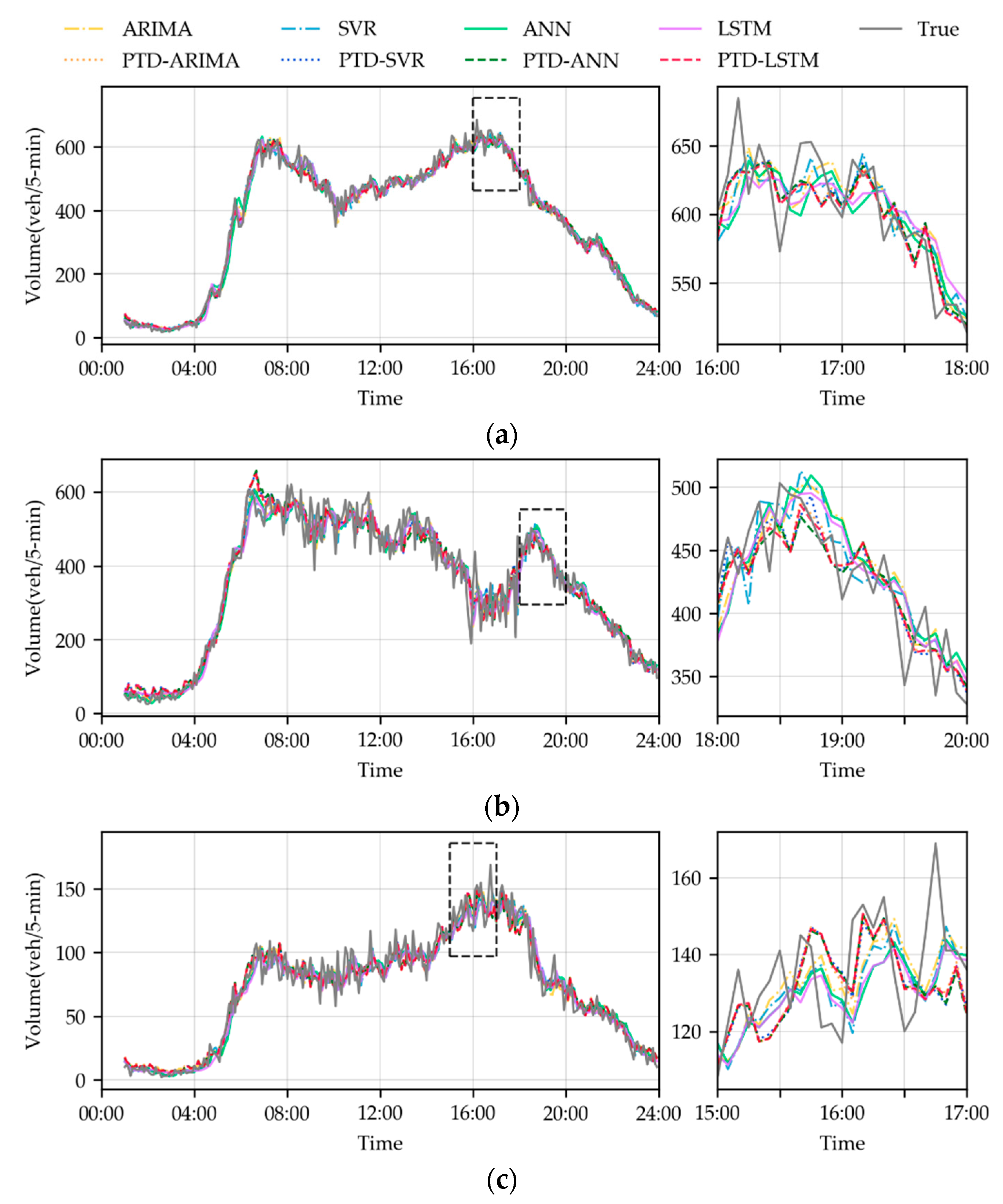

5.2. Analysis of Prediction Results

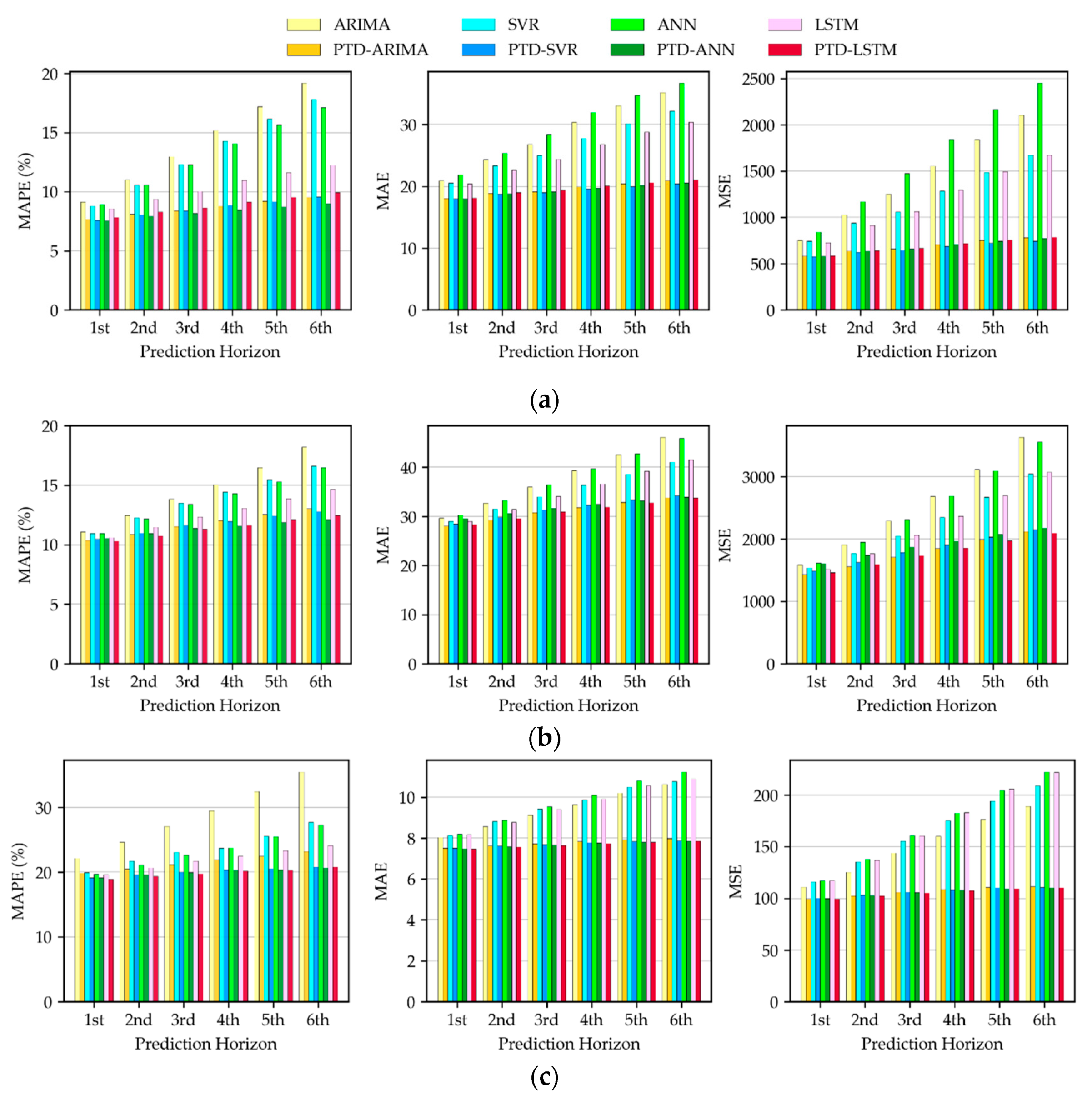

5.3. Analysis of Multi-Step Prediction Errors

6. Conclusions

- A novel decomposition approach for traffic flow, PTD, is formulated. To fully account for the dynamics of real-time traffic flow, PTD is designed to decompose both in-sample and out-of-sample data. It decomposes the original traffic flow into trend, periodicity, and remainder.

- A novel hybrid method combining PTD for traffic flow forecasting is developed. To demonstrate the general applicability of PTD, both statistical and AI models (i.e., ARIMA, SVR, ANN, and LSTM) are combined with PTD to establish hybrid models (i.e., PTD-ARIMA, PTD-SVR, PTD-ANN, and PTD-LSTM). Empirical results show that, compared with the individual models, the hybrid models reduce MAE, MAPE, and MSE by 17%, 17%, and 29% on average, respectively.

- The multi-step prediction results show that the hybrid models combining PTD not only reduce prediction errors but also reduce error accumulation over the prediction horizon. This suggests that the proposed hybrid method is robust for multi-step traffic flow forecasting.

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

References

- [1] Zahid, M.; Chen, Y.; Jamal, A.; Mamadou, C.Z. Freeway short-term travel speed prediction based on data collection time-horizons: A fast forest quantile regression approach. Sustainability 2020, 12, 646.

- [2] Vlahogianni, E.I.; Karlaftis, M.G.; Golias, J.C. Short-term traffic forecasting: Where we are and where we’re going. Transp. Res. Part C Emerg. Technol. 2014, 43, 3–19.

- [3] Chen, X.; Lu, J.; Zhao, J.; Qu, Z.; Yang, Y.; Xian, J. Traffic flow prediction at varied time scales via ensemble empirical mode decomposition and artificial neural network. Sustainability 2020, 12, 3678.

- [4] Lv, Y.; Duan, Y.; Kang, W.; Li, Z.; Wang, F.Y. Traffic Flow Prediction with Big Data: A Deep Learning Approach. IEEE Trans. Intell. Transp. Syst. 2015, 16, 865–873.

- [5] Zhang, M.; Zhen, Y.; Hui, G.; Chen, G. Accurate multisteps traffic flow prediction based on SVM. Math. Probl. Eng. 2013, 2013.

- [6] Luo, X.; Li, D.; Yang, Y.; Zhang, S. Spatiotemporal traffic flow prediction with KNN and LSTM. J. Adv. Transp. 2019, 2019.

- [7] Jiang, X.; Adeli, H. Dynamic wavelet neural network model for traffic flow forecasting. J. Transp. Eng. 2005, 131, 771–779.

- [8] Bratsas, C.; Koupidis, K.; Salanova, J.M.; Giannakopoulos, K.; Kaloudis, A.; Aifadopoulou, G. A comparison of machine learning methods for the prediction of traffic speed in Urban places. Sustainability 2020, 12, 142.

- [9] Ma, X.; Tao, Z.; Wang, Y.; Yu, H.; Wang, Y. Long short-term memory neural network for traffic speed prediction using remote microwave sensor data. Transp. Res. Part C 2015, 54, 187–197.

- [10] Wang, H.; Liu, L.; Dong, S.; Qian, Z.; Wei, H. A novel work zone short-term vehicle-type specific traffic speed prediction model through the hybrid EMD–ARIMA framework. Transp. B 2016, 4, 159–186.

- [11] Yang, J.S. Travel time prediction using the GPS test vehicle and Kalman filtering techniques. In Proceedings of the American Control Conference, Portland, OR, USA, 8–10 June 2005; Volume 3, pp. 2128–2133.

- [12] Zhang, Y.; Liu, Y. Traffic forecasting using least squares support vector machines. Transportmetrica 2009, 5, 193–213.

- [13] Zou, Y.; Hua, X.; Zhang, Y.; Wang, Y. Hybrid short-term freeway speed prediction methods based on periodic analysis. Can. J. Civ. Eng. 2015, 42, 570–582.

- [14] Min, W.; Wynter, L. Real-time road traffic prediction with spatio-temporal correlations. Transp. Res. Part C Emerg. Technol. 2011, 19, 606–616.

- [15] Ahmed, M.S.; Cook, A.R. Analysis of Freeway Traffic Time-Series Data By Using Box-Jenkins Techniques. Transp. Res. Rec. 1979, 1–9.

- [16] Li, L.; Qin, L.; Qu, X.; Zhang, J.; Wang, Y.; Ran, B. Day-ahead traffic flow forecasting based on a deep belief network optimized by the multi-objective particle swarm algorithm. Knowl.-Based Syst. 2019, 172, 1–14.

- [17] Tselentis, D.I.; Vlahogianni, E.I.; Karlaftis, M.G. Improving short-term traffic forecasts: To combine models or not to combine? IET Intell. Transp. Syst. 2015, 9, 193–201.

- [18] Lin, L.; Wang, Q.; Huang, S.; Sadek, A.W. On-line prediction of border crossing traffic using an enhanced Spinning Network method. Transp. Res. Part C Emerg. Technol. 2014, 43, 158–173.

- [19] Luo, X.; Li, D.; Zhang, S. Traffic flow prediction during the holidays based on DFT and SVR. J. Sens. 2019, 2019.

- [20] Kolidakis, S.; Botzoris, G.; Profillidis, V.; Lemonakis, P. Road traffic forecasting—A hybrid approach combining Artificial Neural Network with Singular Spectrum Analysis. Econ. Anal. Policy 2019, 64, 159–171.

- [21] Guo, F.; Krishnan, R.; Polak, J.W. Short-term traffic prediction under normal and incident conditions using singular spectrum analysis and the k-nearest neighbour method. In Proceedings of the IET and ITS Conference on Road Transport Information and Control (RTIC 2012), London, UK, 25–26 September 2012.

- [22] Chen, W.; Shang, Z.; Chen, Y.; Chaeikar, S.S. A Novel Hybrid Network Traffic Prediction Approach Based on Support Vector Machines. J. Comput. Netw. Commun. 2019, 2019.

- [23] Shang, Q.; Lin, C.; Yang, Z.; Bing, Q.; Zhou, X. A hybrid short-term traffic flow prediction model based on singular spectrum analysis and kernel extreme learning machine. PLoS ONE 2016, 11.

- [24] Zheng, C.; Li, L. The improvement of the forecasting model of short-term traffic flow based on wavelet and ARMA. In Proceedings of the SCMIS 2010—2010 8th International Conference on Supply Chain Management and Information Systems: Logistics Systems and Engineering, Hong Kong, China, 6–9 October 2010.

- [25] Zhang, N.; Guan, X.; Cao, J.; Wang, X.; Wu, H. Wavelet-HST: A Wavelet-Based Higher-Order Spatio-Temporal Framework for Urban Traffic Speed Prediction. IEEE Access 2019, 7, 118446–118458.

- [26] Okutani, I.; Stephanedes, Y.J. Dynamic prediction of traffic volume through Kalman filtering theory. Transp. Res. Part B 1984, 18, 1–11.

- [27] Gong, X.; Wang, F. Three improvements on KNN-NPR for traffic flow forecasting. In Proceedings of the IEEE Conference on Intelligent Transportation Systems, Singapore, 6 September 2002; pp. 736–740.

- [28] Yu, B.; Song, X.; Guan, F.; Yang, Z.; Yao, B. K-Nearest Neighbor Model for Multiple-Time-Step Prediction of Short-Term Traffic Condition. J. Transp. Eng. 2016, 142.

- [29] Xiao, J.; Wei, C.; Liu, Y. Speed estimation of traffic flow using multiple kernel support vector regression. Phys. A Stat. Mech. Appl. 2018, 509, 989–997.

- [30] Vlahogianni, E.I.; Karlaftis, M.G.; Golias, J.C. Optimized and meta-optimized neural networks for short-term traffic flow prediction: A genetic approach. Transp. Res. Part C Emerg. Technol. 2005, 13, 211–234.

- [31] Kumar, K.; Parida, M.; Katiyar, V.K. Short term traffic flow prediction in heterogeneous condition using artificial neural network. Transport 2015, 30, 397–405.

- [32] Ma, X.; Dai, Z.; He, Z.; Ma, J.; Wang, Y.; Wang, Y. Learning traffic as images: A deep convolutional neural network for large-scale transportation network speed prediction. Sensors 2017, 17, 818.

- [33] Smith, B.L.; Demetsky, M.J. Traffic flow forecasting: Comparison of modeling approaches. J. Transp. Eng. 1997, 123, 261–266.

- [34] Jia, Y.; Wu, J.; Xu, M. Traffic flow prediction with rainfall impact using a deep learning method. J. Adv. Transp. 2017, 2017.

- [35] Zeng, D.; Xu, J.; Gu, J.; Liu, L.; Xu, G. Short term traffic flow prediction using hybrid ARIMA and ANN models. In Proceedings of the 2008 Workshop on Power Electronics and Intelligent Transportation System, PEITS 2008, Guangzhou, China, 2–3 August 2008; pp. 621–625.

- [36] Tan, M.C.; Wong, S.C.; Xu, J.M.; Guan, Z.R.; Zhang, P. An aggregation approach to short-term traffic flow prediction. IEEE Trans. Intell. Transp. Syst. 2009, 10, 60–69.

- [37] Hou, Q.; Leng, J.; Ma, G.; Liu, W.; Cheng, Y. An adaptive hybrid model for short-term urban traffic flow prediction. Phys. A Stat. Mech. Appl. 2019, 527.

- [38] Li, L.; He, S.; Zhang, J.; Ran, B. Short-term highway traffic flow prediction based on a hybrid strategy considering temporal–spatial information. J. Adv. Transp. 2016, 50, 2029–2040.

- [39] Chan, K.Y.; Dillon, T.S.; Singh, J.; Chang, E. Traffic flow forecasting neural networks based on exponential smoothing method. In Proceedings of the 2011 6th IEEE Conference on Industrial Electronics and Applications, ICIEA 2011, Beijing, China, 21–23 June 2011; pp. 376–381.

- [40] Yang, H.F.; Dillon, T.S.; Chang, E.; Chen, Y.P.P. Optimized Configuration of Exponential Smoothing and Extreme Learning Machine for Traffic Flow Forecasting. IEEE Trans. Ind. Inform. 2019, 15, 23–34.

- [41] Hong, W.C.; Dong, Y.; Zheng, F.; Wei, S.Y. Hybrid evolutionary algorithms in a SVR traffic flow forecasting model. Appl. Math. Comput. 2011, 217, 6733–6747.

- [42] Zhang, Y.; Huang, G. Traffic flow prediction model based on deep belief network and genetic algorithm. IET Intell. Transp. Syst. 2018, 12, 533–541.

- [43] Feng, X.; Ling, X.; Zheng, H.; Chen, Z.; Xu, Y. Adaptive multi-kernel SVM with spatial-temporal correlation for short-term traffic flow prediction. IEEE Trans. Intell. Transp. Syst. 2019, 20, 2001–2013.

- [44] Nihan, N.L.; Holmesland, K.O. Use of the box and Jenkins time series technique in traffic forecasting. Transportation 1980, 9, 125–143.

- [45] Yang, B.; Sun, S.; Li, J.; Lin, X.; Tian, Y. Traffic flow prediction using LSTM with feature enhancement. Neurocomputing 2019, 332, 320–327.

- [46] Li, Z.; Li, Y.; Li, L. A comparison of detrending models and multi-regime models for traffic flow prediction. IEEE Intell. Transp. Syst. Mag. 2014, 6, 34–44.

- [47] Chen, C.; Wang, Y.; Li, L.; Hu, J.; Zhang, Z. The retrieval of intra-day trend and its influence on traffic prediction. Transp. Res. Part C Emerg. Technol. 2012, 22, 103–118.

- [48] Dai, X.; Fu, R.; Zhao, E.; Zhang, Z.; Lin, Y.; Wang, F.Y.; Li, L. DeepTrend 2.0: A light-weighted multi-scale traffic prediction model using detrending. Transp. Res. Part C Emerg. Technol. 2019, 103, 142–157.

- [49] Cleveland, R.B.; Cleveland, W.S.; McRae, J.E.; Terpenning, I. STL: A seasonal-trend decomposition procedure based on loess. J. Off. Stat. 1990, 6, 3–73.

- [50] Rojo, J.; Rivero, R.; Romero-Morte, J.; Fernández-González, F.; Pérez-Badia, R. Modeling pollen time series using seasonal-trend decomposition procedure based on LOESS smoothing. Int. J. Biometeorol. 2017, 61, 335–348.

- [51] Theodosiou, M. Forecasting monthly and quarterly time series using STL decomposition. Int. J. Forecast. 2011, 27, 1178–1195.

- [52] Stow, C.A.; Cha, Y.; Johnson, L.T.; Confesor, R.; Richards, R.P. Long-term and seasonal trend decomposition of maumee river nutrient inputs to western lake erie. Environ. Sci. Technol. 2015, 49, 3392–3400.

- [53] Xiong, T.; Li, C.; Bao, Y. Seasonal forecasting of agricultural commodity price using a hybrid STL and ELM method: Evidence from the vegetable market in China. Neurocomputing 2018, 275, 2831–2844.

- [54] Qin, L.; Li, W.; Li, S. Effective passenger flow forecasting using STL and ESN based on two improvement strategies. Neurocomputing 2019, 356, 244–256.

- [55] Bartholomew, D.J.; Box, G.E.P.; Jenkins, G.M. Time Series Analysis Forecasting and Control. Oper. Res. Q. (1970-1977) 1971, 22, 199.

- [56] Boser, B.E.; Guyon, I.M.; Vapnik, V.N. Training algorithm for optimal margin classifiers. In Proceedings of the Fifth Annual ACM Workshop on Computational Learning Theory, Pittsburgh, PA, USA, 27–29 July 1992; pp. 144–152.

- [57] Cortes, C.; Vapnik, V. Support-vector networks. Mach. Learn. 1995, 20, 273–297.

- [58] Hochreiter, S.; Schmidhuber, J. Long Short-Term Memory. Neural Comput. 1997, 9, 1735–1780.

- [59] The Minnesota Department of Transportation. Mn/DOT Traffic Data. Available online: http://data.dot.state.mn.us/datatools/ (accessed on 11 March 2020).

- [60] Tian, Y.; Pan, L. Predicting short-term traffic flow by long short-term memory recurrent neural network. In Proceedings of the 2015 IEEE International Conference on Smart City, Chengdu, China, 19–21 December 2015; pp. 153–158.

| Ref. | Method | Area | Traffic State | Prediction Interval (min) | Prediction Horizon (steps) |
|---|---|---|---|---|---|
| Statistical models | | | | | |
| [14] | ARIMA | arterial | volume; speed | 5 | 12 |
| [11] | KF | arterial | travel time | 5 | 1 |
| [26] | KF | expressway | volume | 5 | 1 |
| Artificial intelligence (AI) models | | | | | |
| [27] | KNN | highway | volume | 60 | 1 |
| [28] | KNN | urban road | speed | 5 | 5 |
| [12] | SVR | freeway | travel time | 60 | 1 |
| [29] | SVR | expressway | speed | 1 | 1 |
| [5] | SVR | urban road | volume | 5 | 3 |
| [30] | ANN | urban road | volume | 3 | 5 |
| [31] | ANN | highway | volume | 5 | 1 |
| [7] | WNN | freeway | volume | 60 | 1 |
| [9] | LSTM | expressway | speed | 2 | 4 |
| [4] | SAE | freeway | volume | 5; 15; 30; 45; 60 | 1 |
| [32] | CNN | urban road | speed | 10; 20 | 1 |
| [33] | HA; ARIMA; ANN; KNN | freeway | volume | 15 | 1 |
| [34] | DBN; LSTM | arterial | volume | 10; 30 | 1 |
| [8] | ANN; SVR; RF; LR | urban road | speed | 15 | 1 |
| Hybrid models | | | | | |
| [35] | ARIMA+ANN | highway | volume | 8 | 1 |
| [36] | ARIMA+ANN | highway | volume | 60 | 3 |
| [37] | ARIMA+WNN | urban road | volume | 15 | 1 |
| [38] | ARIMA+SVR | freeway | volume | 5 | 1 |
| [39] | ES+ANN | freeway | speed | 1 | 6 |
| [40] | ES+ELM | freeway | speed | 1 | 1 |
| [6] | KNN+LSTM | freeway | volume | 5 | 1 |
| [41] | GA+SVR | motorway | volume | 60 | 1 |
| [42] | GA+DBN | freeway | volume | 15 | 1 |
| [43] | PSO+SVR | freeway; urban road | volume | 5 | 1 |
| [19] | FT+SVR | freeway | volume | 60 | 1 |
| [10] | EMD+ARIMA | freeway | volume; speed | 5 | 4 |
| [3] | EMD+ANN | freeway | volume | 1; 2; 10 | 10 |
| [22] | EMD+SVR | urban road | volume | 5 | 1 |
| [21] | SSA+KNN | urban road | volume | 0.6 | 1 |
| [23] | SSA+ELM | urban road | volume | 5 | 1 |
| [25] | WD+GCRNN+ARIMA | urban road | speed | 15 | 1 |

| Model | Component | Hyperparameters | Station S188 | Station S195 | Station 818 |
|---|---|---|---|---|---|
| ARIMA | - | p, d, q | 14, 0, 1 | 2, 0, 4 | 6, 0, 11 |
| SVR | - | γ, H, ε | 10⁻¹, 10⁴, 10⁻⁴ | 1, 1, 10⁻² | 10⁻¹, 10, 10⁻² |
| ANN | - | u | 16 | 16 | 18 |
| LSTM | - | u | 30 | 12 | 12 |
| PTD-ARIMA | trend | p, d, q | 1, 0, 14 | 2, 0, 1 | 18, 0, 16 |
| | remainder | p, d, q | 1, 0, 1 | 1, 0, 1 | 1, 0, 1 |
| PTD-SVR | trend | γ, H, ε | 10, 10⁻¹, 10⁻⁴ | 10⁻³, 10³, 10⁻⁴ | 10⁻⁴, 10⁴, 10⁻⁴ |
| | remainder | γ, H, ε | 10⁻¹, 10, 10⁻¹ | 10⁻³, 10⁴, 10⁻³ | 10⁻², 10², 10⁻² |
| PTD-ANN | trend | u | 30 | 16 | 30 |
| | remainder | u | 16 | 40 | 40 |
| PTD-LSTM | trend | u | 20 | 32 | 16 |
| | remainder | u | 10 | 34 | 4 |

| Models | S188 MAE | S188 MAPE | S188 MSE | S195 MAE | S195 MAPE | S195 MSE | 818 MAE | 818 MAPE | 818 MSE |
|---|---|---|---|---|---|---|---|---|---|
| ARIMA | 28.47 | 14.12% | 1422.04 | 37.75 | 14.52% | 2536.03 | 9.37 | 28.55% | 151.08 |
| PTD-ARIMA | 19.56 | 8.62% | 685.39 | 31.09 | 11.73% | 1772.60 | 7.77 | 21.53% | 106.51 |
| improved | 31.32% | 38.95% | 51.80% | 17.65% | 19.21% | 30.10% | 17.10% | 24.60% | 29.50% |
| SVR | 26.53 | 13.32% | 1198.28 | 35.09 | 13.87% | 2234.97 | 9.59 | 23.61% | 164.26 |
| PTD-SVR | 19.30 | 8.60% | 665.39 | 31.60 | 11.72% | 1830.18 | 7.72 | 20.08% | 106.47 |
| improved | 27.25% | 35.41% | 44.47% | 9.96% | 15.53% | 18.11% | 19.44% | 14.93% | 35.18% |
| ANN | 29.87 | 13.11% | 1657.62 | 38.06 | 13.77% | 2534.79 | 9.79 | 23.33% | 171.05 |
| PTD-ANN | 19.40 | 8.31% | 682.92 | 31.89 | 11.40% | 1905.69 | 7.69 | 20.01% | 106.11 |
| improved | 35.05% | 36.62% | 58.80% | 16.22% | 17.17% | 24.82% | 21.45% | 14.21% | 37.96% |
| LSTM | 25.59 | 10.47% | 1194.49 | 35.31 | 12.67% | 2245.46 | 9.62 | 22.01% | 170.88 |
| PTD-LSTM | 19.73 | 8.90% | 692.90 | 31.23 | 11.44% | 1784.21 | 7.68 | 19.88% | 105.73 |
| improved | 22.89% | 15.02% | 41.99% | 11.57% | 9.74% | 20.54% | 20.20% | 9.65% | 38.12% |
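The error measures and the "improved" rows can be checked with a few lines of Python/NumPy. The metric definitions below are the standard ones and are assumed to match Section 4.3; the improvement is taken as the relative reduction (individual − hybrid) / individual × 100%, which is consistent with the table. The function names are hypothetical.

```python
import numpy as np


def mae(y, y_hat):
    """Mean absolute error."""
    return float(np.mean(np.abs(np.asarray(y, float) - np.asarray(y_hat, float))))


def mape(y, y_hat):
    """Mean absolute percentage error in percent (assumes no zero observations)."""
    y, y_hat = np.asarray(y, float), np.asarray(y_hat, float)
    return float(np.mean(np.abs((y - y_hat) / y)) * 100)


def mse(y, y_hat):
    """Mean squared error."""
    return float(np.mean((np.asarray(y, float) - np.asarray(y_hat, float)) ** 2))


def relative_reduction(individual, hybrid):
    """Percentage reduction of each error measure from an individual to a hybrid model."""
    individual, hybrid = np.asarray(individual, float), np.asarray(hybrid, float)
    return 100 * (individual - hybrid) / individual


# ARIMA vs PTD-ARIMA at station S188 (MAE, MAPE in %, MSE), taken from the table above.
print(np.round(relative_reduction([28.47, 14.12, 1422.04], [19.56, 8.62, 685.39]), 2))
```

For ARIMA at S188 this gives 31.30%, 38.95% and 51.80%, matching the MAPE and MSE entries exactly and the MAE entry to within 0.02 percentage points, presumably because the table was computed from unrounded errors.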

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Zhou, W.; Wang, W.; Hua, X.; Zhang, Y. Real-Time Traffic Flow Forecasting via a Novel Method Combining Periodic-Trend Decomposition. Sustainability 2020, 12, 5891. https://doi.org/10.3390/su12155891
