Reform of Chinese Universities in the Context of Sustainable Development: Teacher Evaluation and Improvement Based on Hybrid Multiple Criteria Decision-Making Model

Abstract

1. Introduction

2. Review of University Teacher Evaluation Models

2.1. Selection of Appropriate Criteria in the Evaluation Model

2.2. Building Decision-Making Models Using MCDM Methodology

2.3. Building Decision-Making Models Using Statistical or Data Analysis Methodologies

2.4. Research Gaps in Their Decision-Making Models

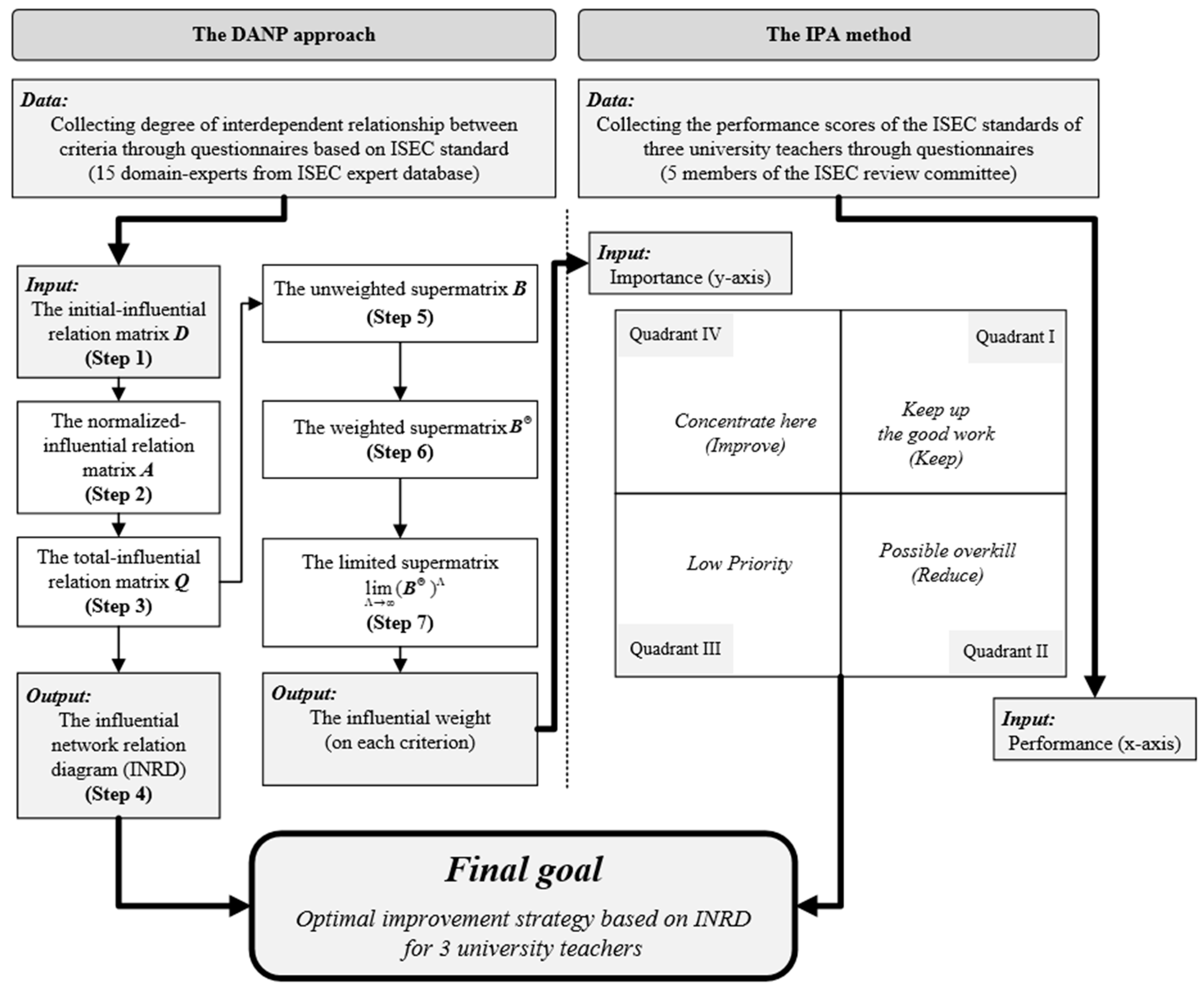

3. Our Proposed Hybrid DANP-IPA Model

3.1. DANP Method

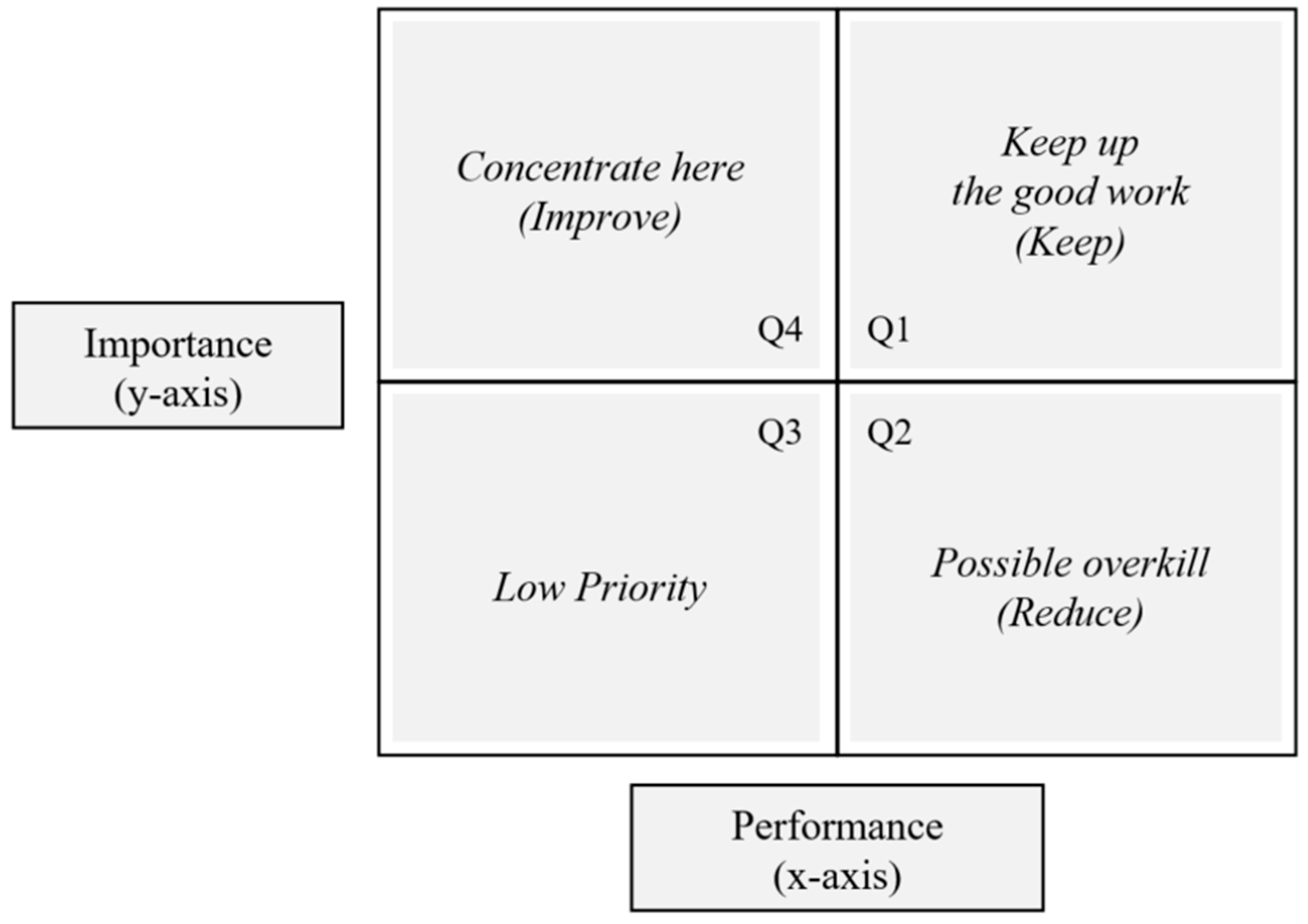

3.2. Importance–Performance Analysis Method

- (1) Q1: Keep up the good work. These criteria are the main strengths and potential competitive advantages of a product or service.

- (2) Q2: Possible overkill. These criteria are a low priority for customers, so the organization should shift its limited resources away from them.

- (3) Q3: Low priority. These criteria are neither important to customers nor performing exceptionally well, so the organization need not devote much attention to them.

- (4) Q4: Concentrate here. These criteria are the service’s primary weaknesses and threats to its competitiveness, so they have the highest priority for investment.
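The four quadrants can be read as a simple two-threshold rule on importance and performance. The following is a minimal sketch of that rule, not the authors' implementation: the function name `ipa_quadrant` and the two cut-off parameters are hypothetical, and the choice of cut-off values (for example, means or scale midpoints) is left to the caller.

```python
def ipa_quadrant(importance, performance, importance_cut, performance_cut):
    """Return the IPA quadrant label for one criterion.

    importance_cut and performance_cut are hypothetical parameters:
    the quadrant descriptions do not fix where the axes cross.
    """
    high_importance = importance >= importance_cut
    high_performance = performance >= performance_cut
    if high_importance and high_performance:
        return "Q1"  # keep up the good work
    if high_performance:
        return "Q2"  # possible overkill: performs well but matters little
    if not high_importance:
        return "Q3"  # low priority: matters little and performs modestly
    return "Q4"      # concentrate here: matters a lot but performs poorly


# Illustrative values only (not taken from the case study).
print(ipa_quadrant(0.20, 5.0, importance_cut=0.125, performance_cut=7.0))
```

Keeping the cut-offs as explicit arguments makes it easy to test how sensitive the quadrant assignment is to where the axes are placed.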

4. Empirical Case

4.1. Case Background and Problem Description

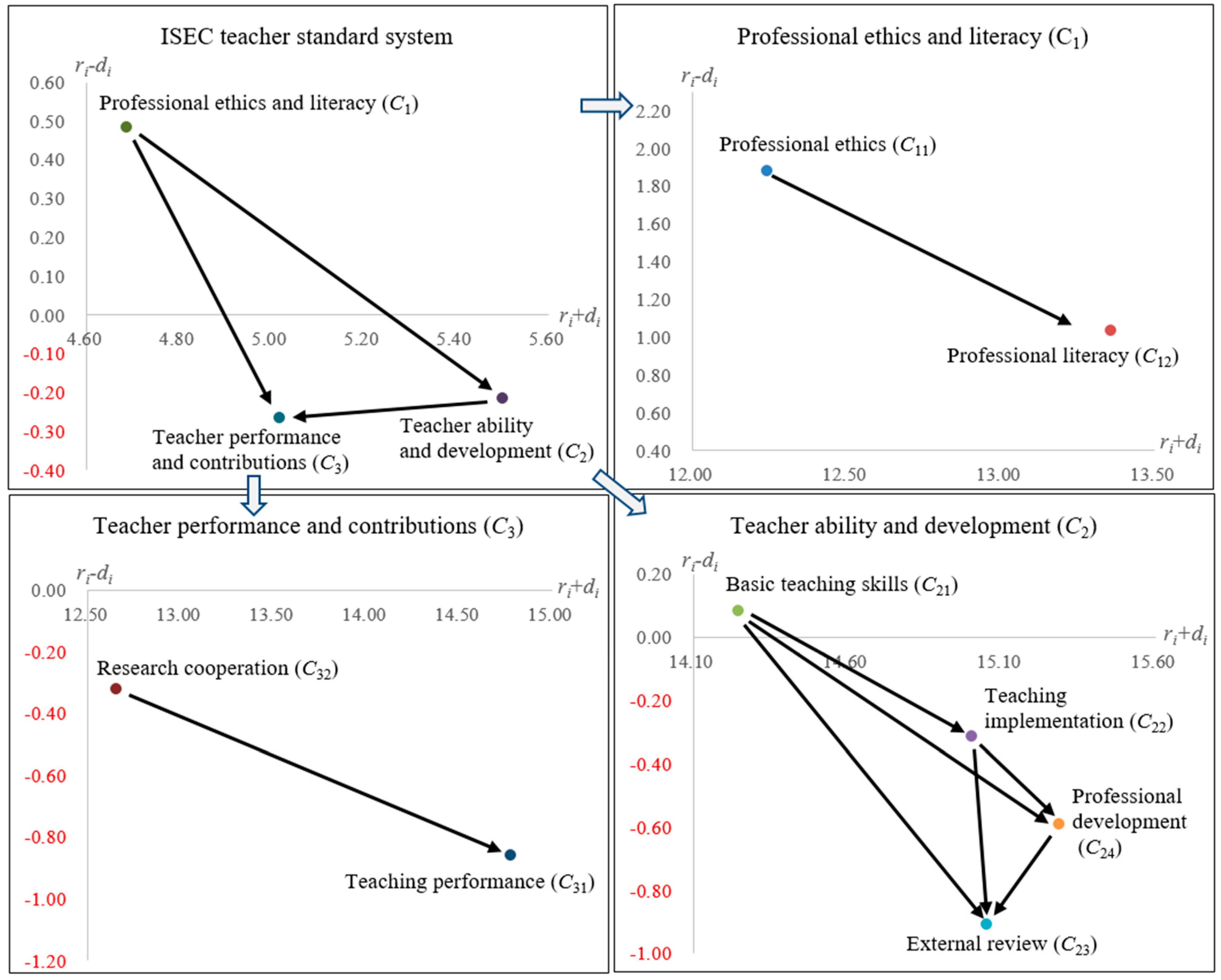

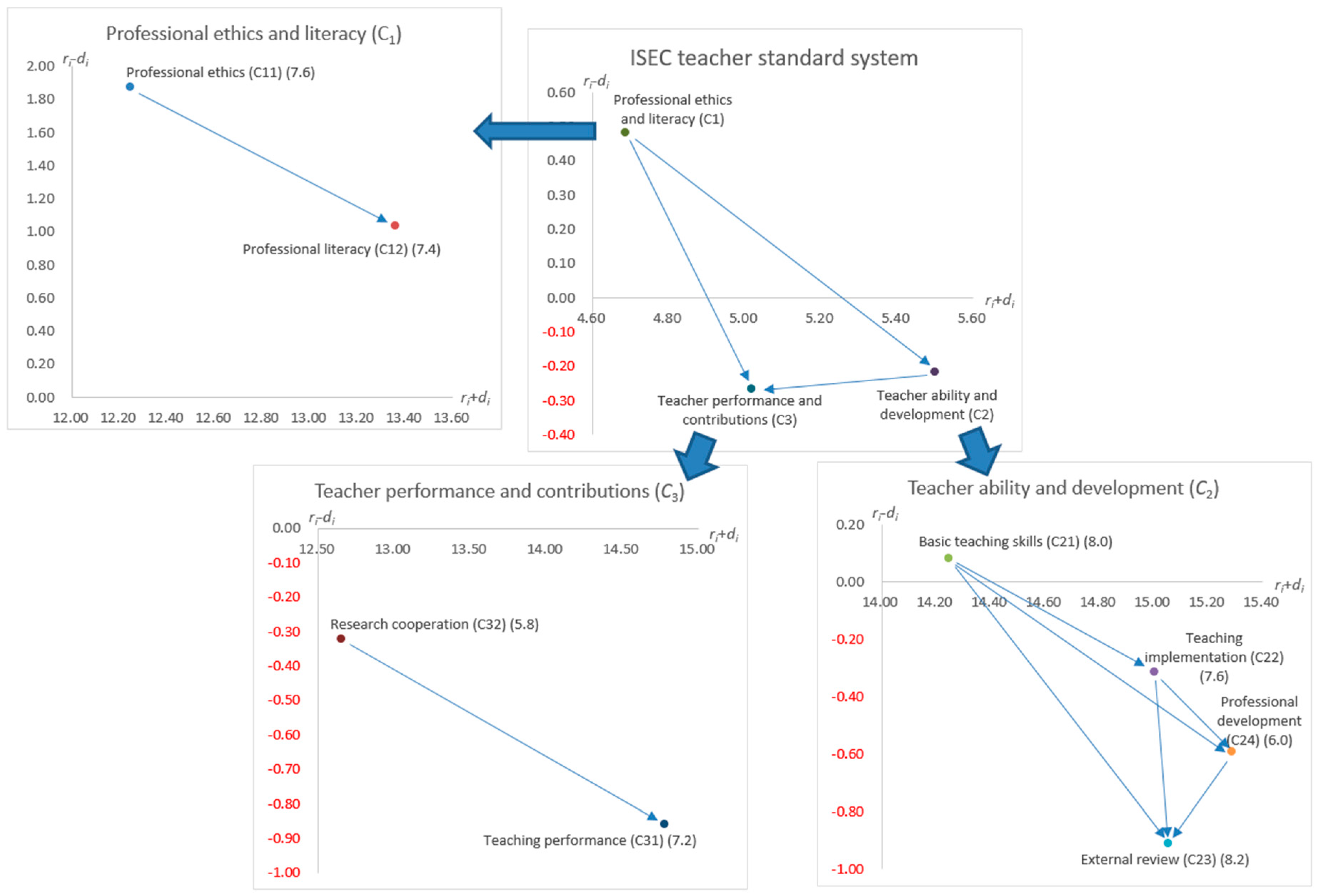

4.2. INRD and Influential Weight Using the DANP Method

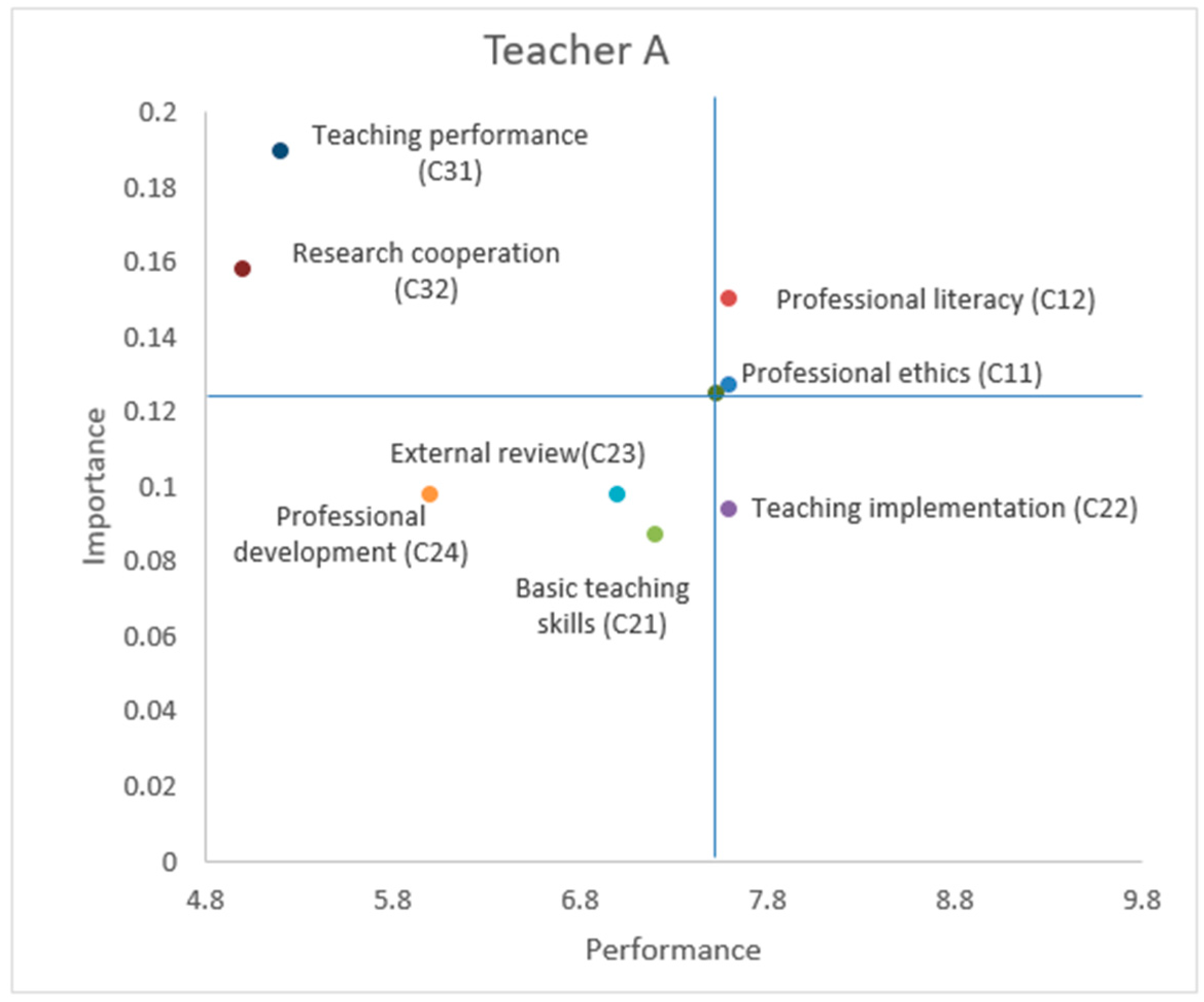

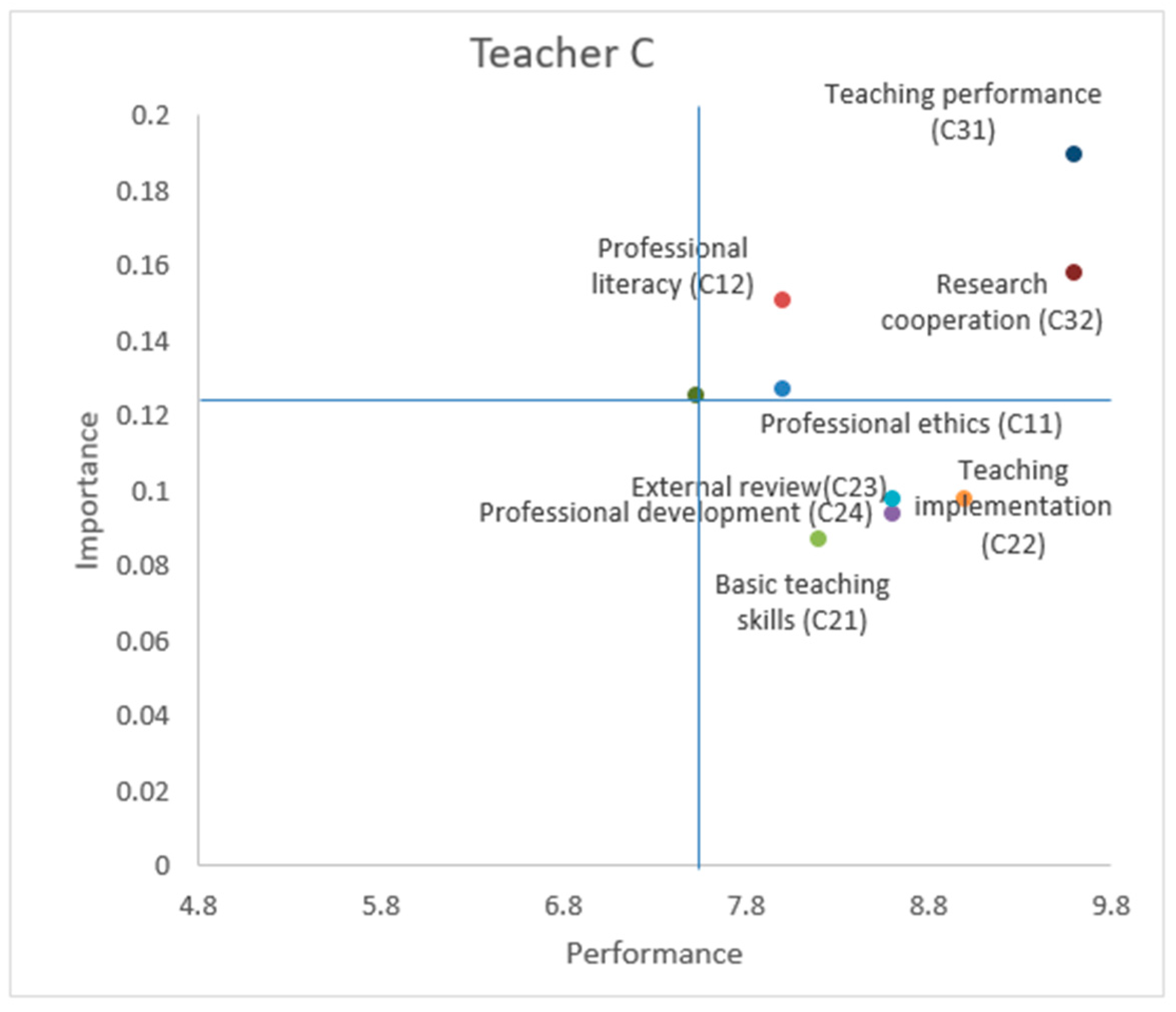

4.3. University Teacher Evaluation Using the IPA Method

5. Discussion

5.1. Proposal for Improvement Suggestions Based on the INRD

5.2. Comparison of Weights

6. Conclusions and Remarks

Author Contributions

Funding

Acknowledgments

Conflicts of Interest


| Category | Characteristic | Limitations or Current Defects |
|---|---|---|
| Selection of appropriate criteria in the evaluation model | The research is focused on the selection of subjective and objective indicators in an evaluation model. | |
| Building the decision-making model using MCDM methodology | The research used MCDM methods to construct various decision-making models for multi-criteria evaluation, selection, and improvement problems. | |
| Building the decision-making model using statistical or data analysis methodologies | The research used statistical or data analysis methods to construct decision-making models for overcoming the fuzzy defects of original MCDM models. | |

| Dimension | Criterion | Content |
|---|---|---|
| Professional ethics and literacy (C1) | Professional ethics (C11) | According to the Code of Professional Ethics of Teachers in Higher Education formulated by the MOE, teachers are examined for their moral performance in the teaching process. |
| | Professional literacy (C12) | Evaluation of a teacher’s professionalism, professional sense of belonging, and physical and mental health |
| Teacher ability and development (C2) | Basic teaching skills (C21) | Evaluation of future planning ability and level of teaching |
| | Teaching implementation (C22) | Achievement of teaching goals and amount of teaching resources used |
| | External review (C23) | Evaluation of teaching performance by students, peers, and experts |
| | Professional development (C24) | Planning and implementation of personal career development |
| Teacher performance and contributions (C3) | Teaching performance (C31) | Contributions to teaching performance, awards, research standards, and teaching teams |
| | Research cooperation (C32) | Status of personal and group research results |

| Criteria | C11 | C12 | C21 | C22 | C23 | C24 | C31 | C32 |
|---|---|---|---|---|---|---|---|---|
| C11 | 0.00 | 3.20 | 2.67 | 3.00 | 2.87 | 2.93 | 2.73 | 2.07 |
| C12 | 2.87 | 0.00 | 2.87 | 3.00 | 3.00 | 2.93 | 2.87 | 2.40 |
| C21 | 1.67 | 2.13 | 0.00 | 3.80 | 3.73 | 3.13 | 3.00 | 2.33 |
| C22 | 2.07 | 2.33 | 3.00 | 0.00 | 4.00 | 3.13 | 3.53 | 2.33 |
| C23 | 1.80 | 2.33 | 3.00 | 3.20 | 0.00 | 3.33 | 3.47 | 2.40 |
| C24 | 2.20 | 2.60 | 3.07 | 3.20 | 3.20 | 0.00 | 3.27 | 2.93 |
| C31 | 1.67 | 2.13 | 2.93 | 3.00 | 3.13 | 3.27 | 0.00 | 3.13 |
| C32 | 1.73 | 2.27 | 1.93 | 2.13 | 2.33 | 3.53 | 2.87 | 0.00 |
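As a hedged illustration of how a DEMATEL total influence matrix can be derived from a direct influence matrix such as the one above, the sketch below uses the common form $T = N(I - N)^{-1}$, where $N$ is the direct matrix divided by the larger of its maximum row sum and maximum column sum. That normalization and the names `A`, `N`, and `T` are assumptions made for illustration, so the printed values are not guaranteed to match the published matrix to the last digit.

```python
import numpy as np

# Direct influence matrix copied from the table above (order: C11 ... C32).
A = np.array([
    [0.00, 3.20, 2.67, 3.00, 2.87, 2.93, 2.73, 2.07],
    [2.87, 0.00, 2.87, 3.00, 3.00, 2.93, 2.87, 2.40],
    [1.67, 2.13, 0.00, 3.80, 3.73, 3.13, 3.00, 2.33],
    [2.07, 2.33, 3.00, 0.00, 4.00, 3.13, 3.53, 2.33],
    [1.80, 2.33, 3.00, 3.20, 0.00, 3.33, 3.47, 2.40],
    [2.20, 2.60, 3.07, 3.20, 3.20, 0.00, 3.27, 2.93],
    [1.67, 2.13, 2.93, 3.00, 3.13, 3.27, 0.00, 3.13],
    [1.73, 2.27, 1.93, 2.13, 2.33, 3.53, 2.87, 0.00],
])

# Assumed normalization: scale by the largest row or column sum,
# which keeps the series N + N^2 + N^3 + ... convergent here.
scale = max(A.sum(axis=1).max(), A.sum(axis=0).max())
N = A / scale

# Total influence matrix: T = N + N^2 + ... = N (I - N)^(-1).
T = N @ np.linalg.inv(np.eye(len(A)) - N)
print(np.round(T, 2))
```

Because $T$ accumulates indirect paths of every length, its diagonal is non-zero even though the direct matrix has zeros on the diagonal.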

| Criteria | C11 | C12 | C21 | C22 | C23 | C24 | C31 | C32 |
|---|---|---|---|---|---|---|---|---|
| C11 | 0.58 | 0.81 | 0.90 | 0.97 | 1.01 | 1.00 | 0.98 | 0.81 |
| C12 | 0.70 | 0.70 | 0.92 | 0.99 | 1.03 | 1.02 | 1.00 | 0.83 |
| C21 | 0.66 | 0.78 | 0.80 | 1.01 | 1.05 | 1.02 | 1.01 | 0.83 |
| C22 | 0.68 | 0.81 | 0.94 | 0.89 | 1.08 | 1.05 | 1.05 | 0.85 |
| C23 | 0.65 | 0.78 | 0.91 | 0.98 | 0.89 | 1.02 | 1.01 | 0.82 |
| C24 | 0.69 | 0.82 | 0.94 | 1.01 | 1.05 | 0.92 | 1.04 | 0.87 |
| C31 | 0.64 | 0.76 | 0.89 | 0.96 | 1.00 | 1.00 | 0.86 | 0.84 |
| C32 | 0.58 | 0.69 | 0.77 | 0.83 | 0.87 | 0.91 | 0.88 | 0.63 |

| Dimension | ri | di | ri + di | ri − di | Criterion | ri | di | ri + di | ri − di |
|---|---|---|---|---|---|---|---|---|---|
| C1 | 2.59 | 2.10 | 4.69 | 0.48 | C11 | 7.06 | 5.18 | 12.24 | 1.88 |
| | | | | | C12 | 7.20 | 6.16 | 13.36 | 1.04 |
| C2 | 2.64 | 2.86 | 5.50 | −0.22 | C21 | 7.16 | 7.08 | 14.25 | 0.08 |
| | | | | | C22 | 7.35 | 7.66 | 15.00 | −0.31 |
| | | | | | C23 | 7.07 | 7.98 | 15.05 | −0.91 |
| | | | | | C24 | 7.35 | 7.94 | 15.29 | −0.59 |
| C3 | 2.38 | 2.64 | 5.02 | −0.27 | C31 | 6.96 | 7.82 | 14.77 | −0.86 |
| | | | | | C32 | 6.17 | 6.49 | 12.65 | −0.32 |
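The criterion-level ri and di columns above can be read as the row sums and column sums of the preceding 8 × 8 matrix (the one with a non-zero diagonal, taken here to be the total influence matrix). That reading follows the usual DEMATEL definitions and is an assumption, although it agrees with the tabulated values up to rounding. A minimal sketch, with `T`, `r`, and `d` as illustrative names:

```python
import numpy as np

criteria = ["C11", "C12", "C21", "C22", "C23", "C24", "C31", "C32"]

# Two-decimal values copied from the 8 x 8 matrix preceding the table above.
T = np.array([
    [0.58, 0.81, 0.90, 0.97, 1.01, 1.00, 0.98, 0.81],
    [0.70, 0.70, 0.92, 0.99, 1.03, 1.02, 1.00, 0.83],
    [0.66, 0.78, 0.80, 1.01, 1.05, 1.02, 1.01, 0.83],
    [0.68, 0.81, 0.94, 0.89, 1.08, 1.05, 1.05, 0.85],
    [0.65, 0.78, 0.91, 0.98, 0.89, 1.02, 1.01, 0.82],
    [0.69, 0.82, 0.94, 1.01, 1.05, 0.92, 1.04, 0.87],
    [0.64, 0.76, 0.89, 0.96, 1.00, 1.00, 0.86, 0.84],
    [0.58, 0.69, 0.77, 0.83, 0.87, 0.91, 0.88, 0.63],
])

r = T.sum(axis=1)  # influence given by each criterion (row sums)
d = T.sum(axis=0)  # influence received by each criterion (column sums)

for name, ri, di in zip(criteria, r, d):
    # ri + di: overall involvement; ri - di > 0 suggests a net "cause".
    print(f"{name}: r={ri:.2f} d={di:.2f} r+d={ri + di:.2f} r-d={ri - di:+.2f}")
```

Small differences from the published columns are expected because the inputs here are already rounded to two decimals.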

| Criteria | C11 | C12 | C21 | C22 | C23 | C24 | C31 | C32 |
|---|---|---|---|---|---|---|---|---|
| C11 | 0.42 | 0.50 | 0.46 | 0.46 | 0.46 | 0.46 | 0.46 | 0.45 |
| C12 | 0.58 | 0.50 | 0.54 | 0.54 | 0.54 | 0.54 | 0.54 | 0.55 |
| C21 | 0.23 | 0.23 | 0.21 | 0.24 | 0.24 | 0.24 | 0.23 | 0.23 |
| C22 | 0.25 | 0.25 | 0.26 | 0.22 | 0.26 | 0.26 | 0.25 | 0.25 |
| C23 | 0.26 | 0.26 | 0.27 | 0.27 | 0.23 | 0.27 | 0.26 | 0.26 |
| C24 | 0.26 | 0.26 | 0.26 | 0.26 | 0.27 | 0.23 | 0.26 | 0.27 |
| C31 | 0.55 | 0.55 | 0.55 | 0.55 | 0.55 | 0.54 | 0.51 | 0.58 |
| C32 | 0.45 | 0.45 | 0.45 | 0.45 | 0.45 | 0.46 | 0.49 | 0.42 |

| Criteria | C11 | C12 | C21 | C22 | C23 | C24 | C31 | C32 |
|---|---|---|---|---|---|---|---|---|
| C11 | 0.11 | 0.14 | 0.13 | 0.13 | 0.13 | 0.13 | 0.13 | 0.13 |
| C12 | 0.16 | 0.13 | 0.15 | 0.15 | 0.15 | 0.15 | 0.15 | 0.15 |
| C21 | 0.09 | 0.09 | 0.08 | 0.09 | 0.09 | 0.09 | 0.09 | 0.09 |
| C22 | 0.10 | 0.09 | 0.10 | 0.08 | 0.10 | 0.10 | 0.09 | 0.09 |
| C23 | 0.10 | 0.10 | 0.10 | 0.10 | 0.09 | 0.10 | 0.10 | 0.10 |
| C24 | 0.10 | 0.10 | 0.10 | 0.10 | 0.10 | 0.09 | 0.10 | 0.10 |
| C31 | 0.19 | 0.19 | 0.19 | 0.20 | 0.19 | 0.19 | 0.17 | 0.20 |
| C32 | 0.16 | 0.16 | 0.16 | 0.16 | 0.16 | 0.16 | 0.17 | 0.14 |
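Assuming the matrix above is the weighted supermatrix of the DANP procedure (its columns sum to roughly 1), the influential weights can be approximated by raising it to a high power until every column converges to the same vector. The sketch below is one such approximation under that assumption. Re-normalizing the rounded columns and using an exponent of 64 are illustrative choices, not details taken from the article.

```python
import numpy as np

criteria = ["C11", "C12", "C21", "C22", "C23", "C24", "C31", "C32"]

# Two-decimal values copied from the matrix above.
W = np.array([
    [0.11, 0.14, 0.13, 0.13, 0.13, 0.13, 0.13, 0.13],
    [0.16, 0.13, 0.15, 0.15, 0.15, 0.15, 0.15, 0.15],
    [0.09, 0.09, 0.08, 0.09, 0.09, 0.09, 0.09, 0.09],
    [0.10, 0.09, 0.10, 0.08, 0.10, 0.10, 0.09, 0.09],
    [0.10, 0.10, 0.10, 0.10, 0.09, 0.10, 0.10, 0.10],
    [0.10, 0.10, 0.10, 0.10, 0.10, 0.09, 0.10, 0.10],
    [0.19, 0.19, 0.19, 0.20, 0.19, 0.19, 0.17, 0.20],
    [0.16, 0.16, 0.16, 0.16, 0.16, 0.16, 0.17, 0.14],
])

# Rounding leaves some column sums at 1.01, so make each column sum to 1.
W = W / W.sum(axis=0, keepdims=True)

# Limiting supermatrix: all columns converge to the same weight vector.
limit = np.linalg.matrix_power(W, 64)
weights = limit[:, 0]

for name, w in zip(criteria, weights):
    print(f"{name}: {w:.3f}")
```

Because the inputs are rounded to two decimals, the result should only be expected to agree with the reported global weights to about two decimal places.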

| Dimension | Local Weight | Ranking | Criterion | Local Weight | Ranking | Global Weight | Ranking |
|---|---|---|---|---|---|---|---|
| C1 | 0.277 | 3 | C11 | 0.458 | 2 | 0.127 | 4 |
| | | | C12 | 0.542 | 1 | 0.150 | 3 |
| C2 | 0.376 | 1 | C21 | 0.231 | 4 | 0.087 | 8 |
| | | | C22 | 0.249 | 3 | 0.094 | 7 |
| | | | C23 | 0.260 | 1 | 0.098 | 5 |
| | | | C24 | 0.260 | 2 | 0.098 | 6 |
| C3 | 0.347 | 2 | C31 | 0.545 | 1 | 0.189 | 1 |
| | | | C32 | 0.455 | 2 | 0.158 | 2 |
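The global weights in the table above appear to equal the dimension's local weight multiplied by the criterion's local weight. This relationship is inferred from the tabulated numbers rather than quoted from the method description, and the dictionary names below are illustrative. A short check:

```python
# Local weights copied from the table above.
dimension_weight = {"C1": 0.277, "C2": 0.376, "C3": 0.347}
criterion_local = {
    "C11": ("C1", 0.458), "C12": ("C1", 0.542),
    "C21": ("C2", 0.231), "C22": ("C2", 0.249),
    "C23": ("C2", 0.260), "C24": ("C2", 0.260),
    "C31": ("C3", 0.545), "C32": ("C3", 0.455),
}

# Global weight = dimension local weight x criterion local weight.
global_weight = {
    criterion: round(dimension_weight[dimension] * local, 3)
    for criterion, (dimension, local) in criterion_local.items()
}

for criterion, weight in global_weight.items():
    print(f"{criterion}: {weight:.3f}")
```

For example, C31 gives 0.347 × 0.545 ≈ 0.189, which matches the largest global weight in the table.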

| Criteria (Teacher A) | Member_1 | Member_2 | Member_3 | Member_4 | Member_5 | Average |
|---|---|---|---|---|---|---|
| C11 | 8 | 7 | 8 | 7 | 8 | 7.6 |
| C12 | 8 | 7 | 8 | 7 | 8 | 7.6 |
| C21 | 7 | 8 | 8 | 6 | 7 | 7.2 |
| C22 | 7 | 8 | 7 | 8 | 8 | 7.6 |
| C23 | 7 | 8 | 7 | 6 | 7 | 7.0 |
| C24 | 6 | 6 | 6 | 6 | 6 | 6.0 |
| C31 | 5 | 5 | 5 | 6 | 5 | 5.2 |
| C32 | 5 | 5 | 5 | 5 | 5 | 5.0 |

| Criteria (Teacher B) | Member_1 | Member_2 | Member_3 | Member_4 | Member_5 | Average |
|---|---|---|---|---|---|---|
| C11 | 8 | 7 | 8 | 7 | 8 | 7.6 |
| C12 | 8 | 7 | 7 | 7 | 8 | 7.4 |
| C21 | 9 | 7 | 8 | 8 | 8 | 8.0 |
| C22 | 8 | 7 | 8 | 8 | 7 | 7.6 |
| C23 | 8 | 8 | 8 | 9 | 8 | 8.2 |
| C24 | 6 | 6 | 6 | 6 | 6 | 6.0 |
| C31 | 8 | 8 | 6 | 7 | 7 | 7.2 |
| C32 | 6 | 6 | 5 | 6 | 6 | 5.8 |

| Criteria (Teacher C) | Member_1 | Member_2 | Member_3 | Member_4 | Member_5 | Average |
|---|---|---|---|---|---|---|
| C11 | 8 | 8 | 8 | 8 | 8 | 8.0 |
| C12 | 8 | 8 | 8 | 8 | 8 | 8.0 |
| C21 | 8 | 9 | 8 | 8 | 8 | 8.2 |
| C22 | 9 | 9 | 9 | 9 | 7 | 8.6 |
| C23 | 9 | 8 | 9 | 9 | 8 | 8.6 |
| C24 | 9 | 9 | 9 | 9 | 9 | 9.0 |
| C31 | 10 | 10 | 10 | 8 | 10 | 9.6 |
| C32 | 10 | 10 | 10 | 10 | 8 | 9.6 |

| Criterion | Weight | Teacher A Performance | Teacher A Group | Teacher B Performance | Teacher B Group | Teacher C Performance | Teacher C Group |
|---|---|---|---|---|---|---|---|
| C11 | 0.127 | 7.6 | I | 7.6 | I | 8.0 | I |
| C12 | 0.150 | 7.6 | I | 7.4 | IV | 8.0 | I |
| C21 | 0.087 | 7.2 | III | 8.0 | II | 8.2 | II |
| C22 | 0.094 | 7.6 | II | 7.6 | II | 8.6 | II |
| C23 | 0.098 | 7.0 | III | 8.2 | II | 8.6 | II |
| C24 | 0.098 | 6.0 | III | 6.0 | III | 9.0 | II |
| C31 | 0.189 | 5.2 | IV | 7.2 | IV | 9.6 | I |
| C32 | 0.158 | 5.0 | IV | 5.8 | IV | 9.6 | I |

| Dimension/Criterion | Real Case Local Weight | Real Case Ranking | DANP Local Weight | DANP Ranking | AHP Local Weight | AHP Ranking |
|---|---|---|---|---|---|---|
| Professional ethics and literacy (C1) | 0.2 | 3 | 0.277 | 3 | 0.459 | 1 |
| Professional ethics (C11) | 0.4 | 2 | 0.458 | 2 | 0.519 | 1 |
| Professional literacy (C12) | 0.6 | 1 | 0.542 | 1 | 0.481 | 2 |
| Teacher ability and development (C2) | 0.5 | 1 | 0.376 | 1 | 0.324 | 2 |
| Basic teaching skills (C21) | 0.2 | 2 | 0.231 | 4 | 0.237 | 2 |
| Teaching implementation (C22) | 0.3 | 1 | 0.249 | 3 | 0.220 | 3 |
| External review (C23) | 0.3 | 1 | 0.260 | 1 | 0.354 | 1 |
| Professional development (C24) | 0.2 | 2 | 0.260 | 2 | 0.188 | 4 |
| Teacher performance and contributions (C3) | 0.3 | 2 | 0.347 | 2 | 0.218 | 3 |
| Teaching performance (C31) | 0.7 | 1 | 0.545 | 1 | 0.816 | 1 |
| Research cooperation (C32) | 0.3 | 2 | 0.455 | 2 | 0.184 | 2 |

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Share and Cite

Weng, S.-S.; Liu, Y.; Chuang, Y.-C. Reform of Chinese Universities in the Context of Sustainable Development: Teacher Evaluation and Improvement Based on Hybrid Multiple Criteria Decision-Making Model. Sustainability 2019, 11, 5471. https://doi.org/10.3390/su11195471
