A Lightweight Traffic Sign Detection Model Based on Improved YOLOv8s for Edge Deployment in Autonomous Driving Systems Under Complex Environments

Abstract

1. Introduction

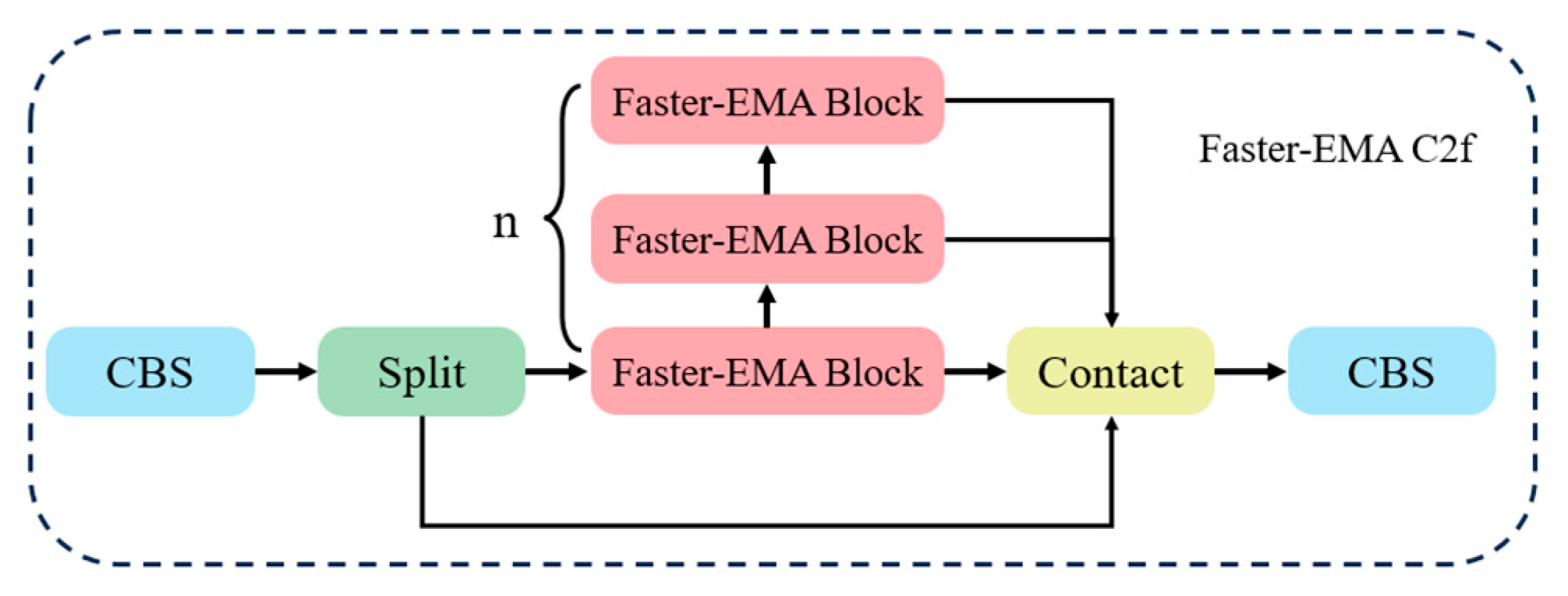

- The bottleneck block in the C2f module is replaced with the FasterNet block [23], whose co-design of partial convolution (PConv) and pointwise convolution (PWConv) reduces computational redundancy. An efficient multi-scale attention (EMA) mechanism is then integrated to strengthen multi-scale feature modeling through its parallel multi-branch structure and cross-spatial interaction. Together, these changes preserve feature extraction capability while the network is made lighter, and they improve robustness for small targets and occluded scenes.

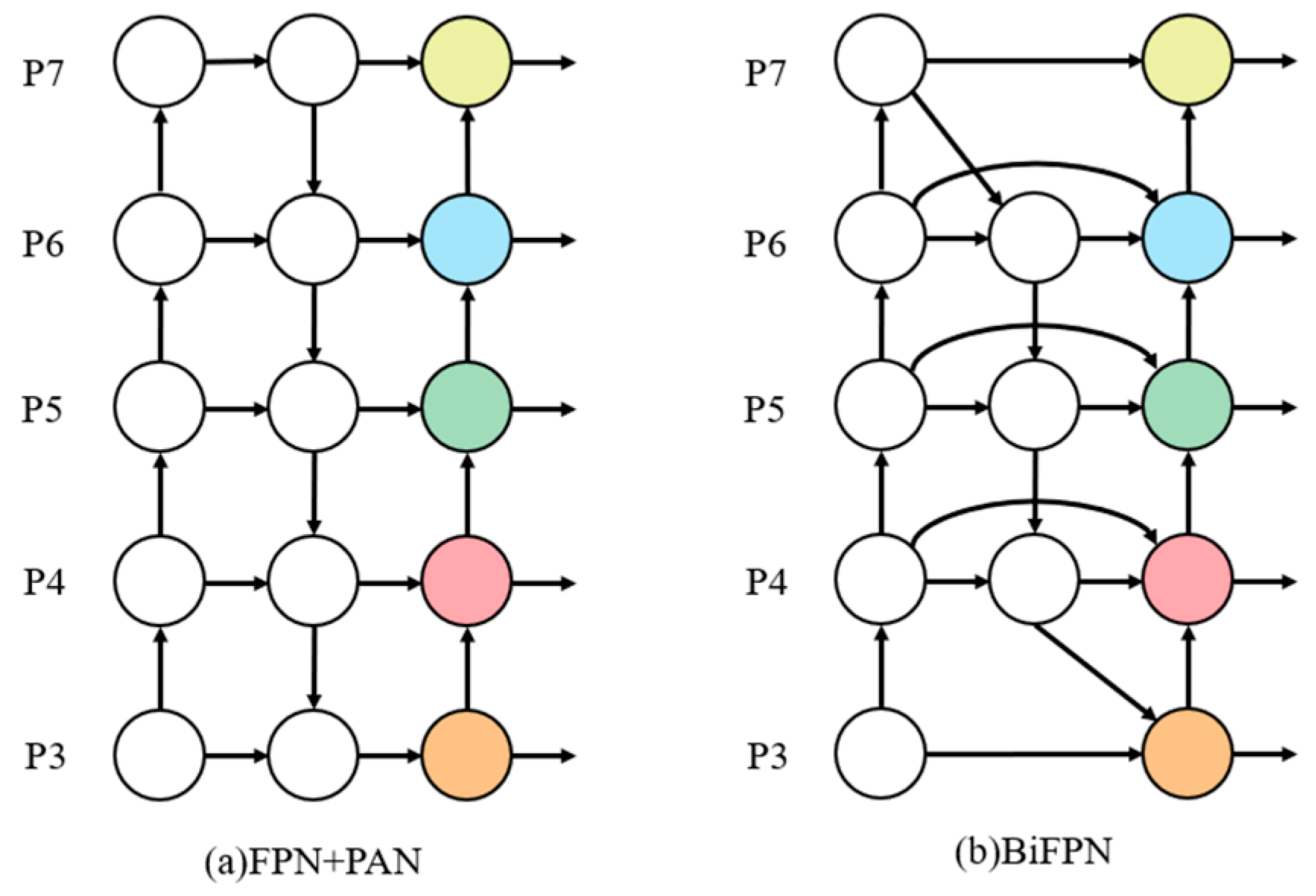

- The neck network is enhanced with a bidirectional feature pyramid network (BiFPN) structure. A Conv module is added to compress channel dimensions and strengthen nonlinear representation, and a downsampling module at the P4 layer provides cross-layer connections. This improves the interaction between shallow detail features and deep semantic features, so the refined model adapts to the scale variation between nearby and distant traffic signs.

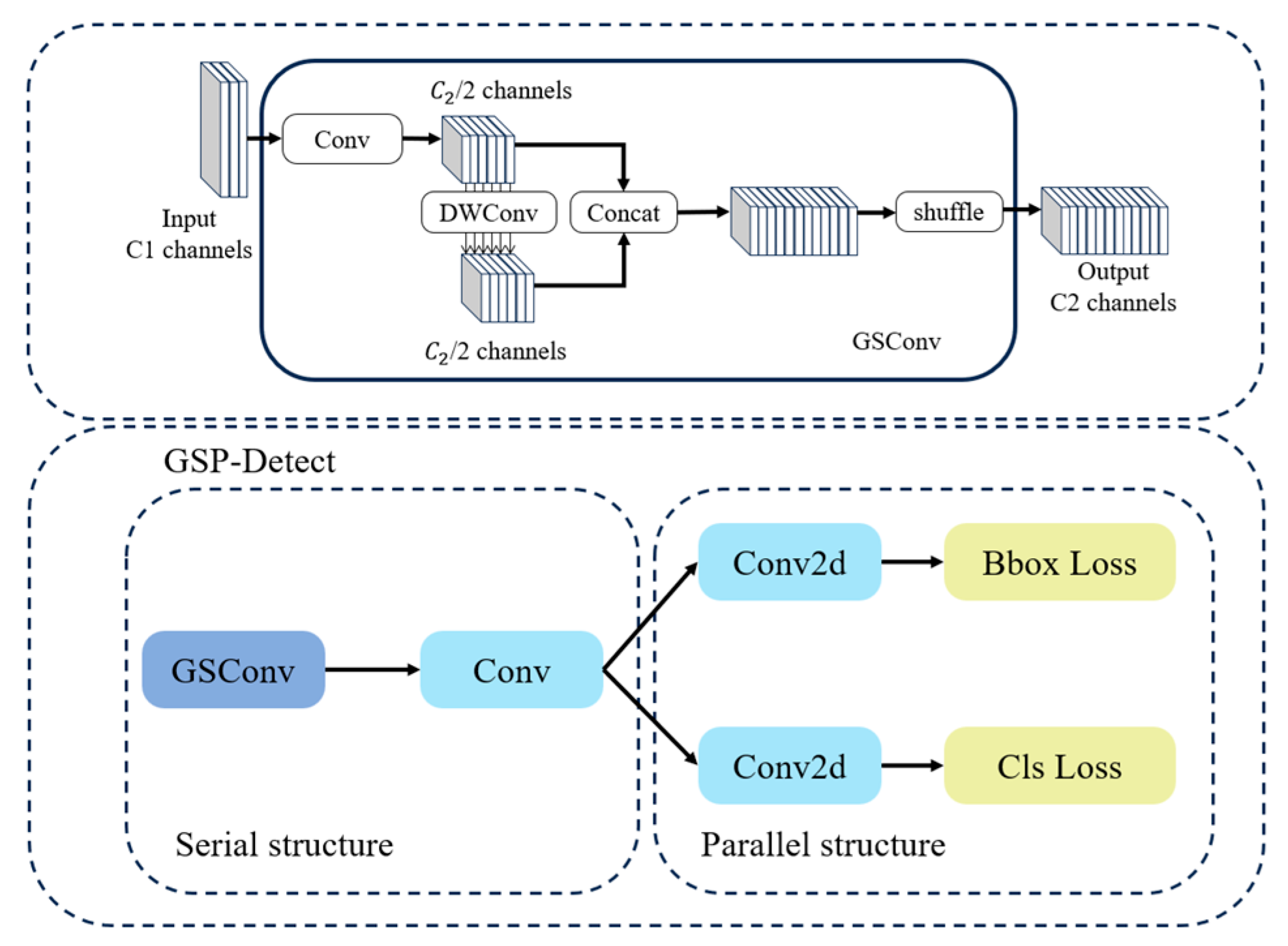

- A GSConv module is used to build a hybrid serial–parallel detection head. Its channel shuffle operation strengthens cross-channel information exchange while keeping the computational cost of the head low.
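As a rough illustration of two mechanisms named in the contributions above, the following sketch estimates how much convolution cost PConv saves and shows what a channel shuffle does to channel order. The 80 × 80 feature map, the 64 channels, the partial ratio of 1/4 (the typical value reported for FasterNet [23], not stated in this text), and all function names are assumptions chosen for illustration; this is not the authors' implementation.

```python
# Illustrative sketch only; sizes, ratio and names are assumptions.

def conv_flops(h, w, k, c_in, c_out):
    """Multiply-accumulate count of a dense k x k convolution (stride 1, same padding)."""
    return h * w * k * k * c_in * c_out


def pconv_flops(h, w, k, c, ratio=0.25):
    """PConv convolves only the first c * ratio channels; the rest pass through unchanged."""
    c_p = int(c * ratio)
    return conv_flops(h, w, k, c_p, c_p)


def channel_shuffle(channels, groups):
    """Interleave channels across groups: reshape to (groups, n), transpose, flatten."""
    if len(channels) % groups != 0:
        raise ValueError("channel count must be divisible by groups")
    n = len(channels) // groups
    rows = [channels[g * n:(g + 1) * n] for g in range(groups)]
    return [rows[g][i] for i in range(n) for g in range(groups)]


dense = conv_flops(80, 80, 3, 64, 64)
partial = pconv_flops(80, 80, 3, 64)
ratio = partial / dense                      # cost of PConv relative to a dense conv
shuffled = channel_shuffle(list(range(8)), groups=2)

print(f"PConv / dense conv cost: {ratio}")   # 0.0625, i.e. 1/16
print(f"shuffled channel order: {shuffled}") # [0, 4, 1, 5, 2, 6, 3, 7]
```

With two groups, the shuffle places channels from the first and second half next to each other, which is how information from separately computed branches gets mixed before the next layer.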

2. Methodology

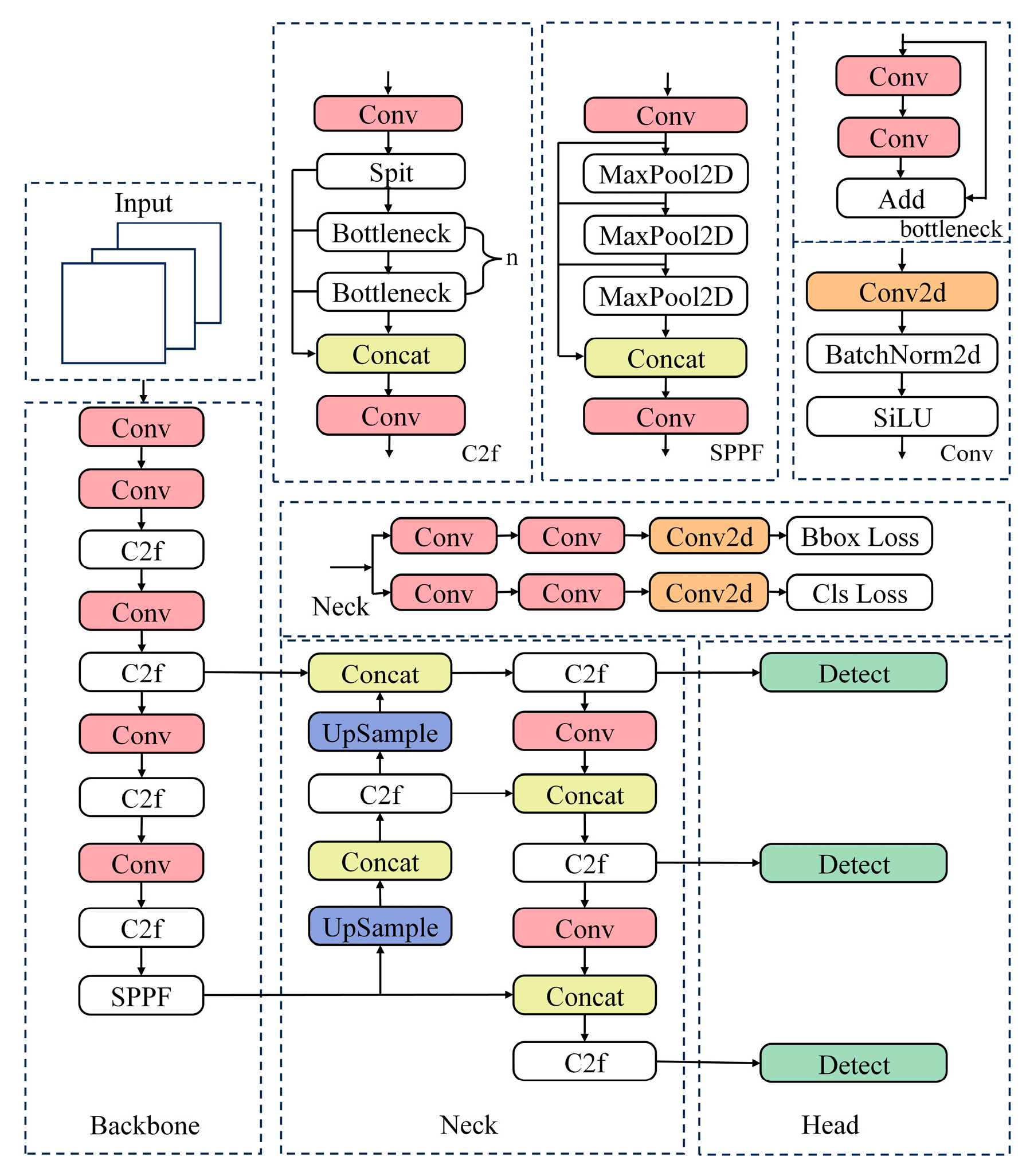

2.1. The YOLOv8 Model

2.2. FEBG-YOLOv8s Model

2.3. Backbone Network Design

2.3.1. C2f Lightweight Architecture

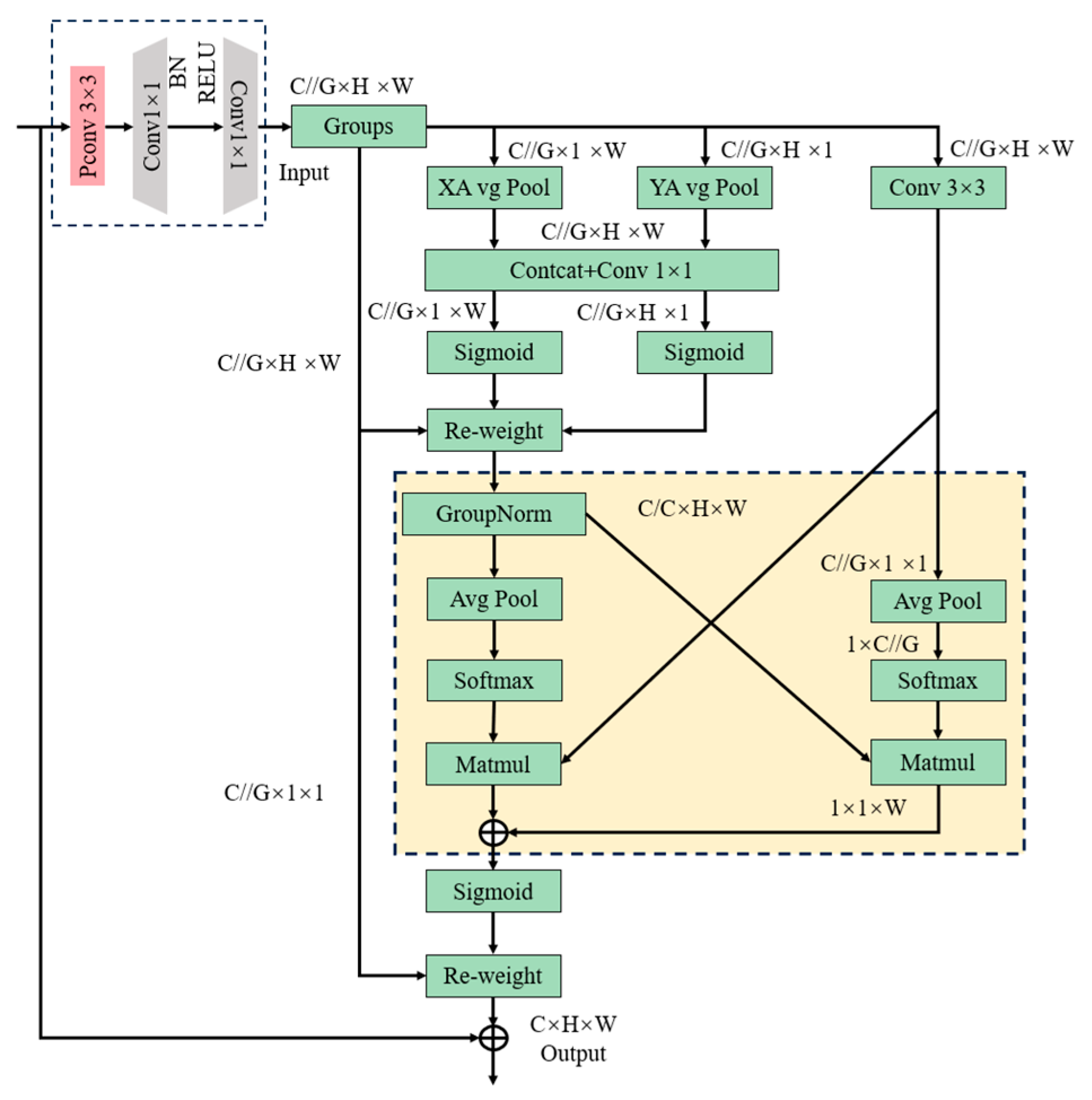

2.3.2. Integration with EMA Mechanisms

2.4. BiFPN-Enhanced Multi-Scale Fusion

2.5. Detection Head Enhancement

3. Datasets and Experimental Settings

3.1. Datasets

3.2. Experimental Settings

4. Results and Discussion

4.1. Evaluation Metrics

4.2. Ablation Experiments

4.3. Model Comparison

4.4. Comparison of Attention Mechanisms

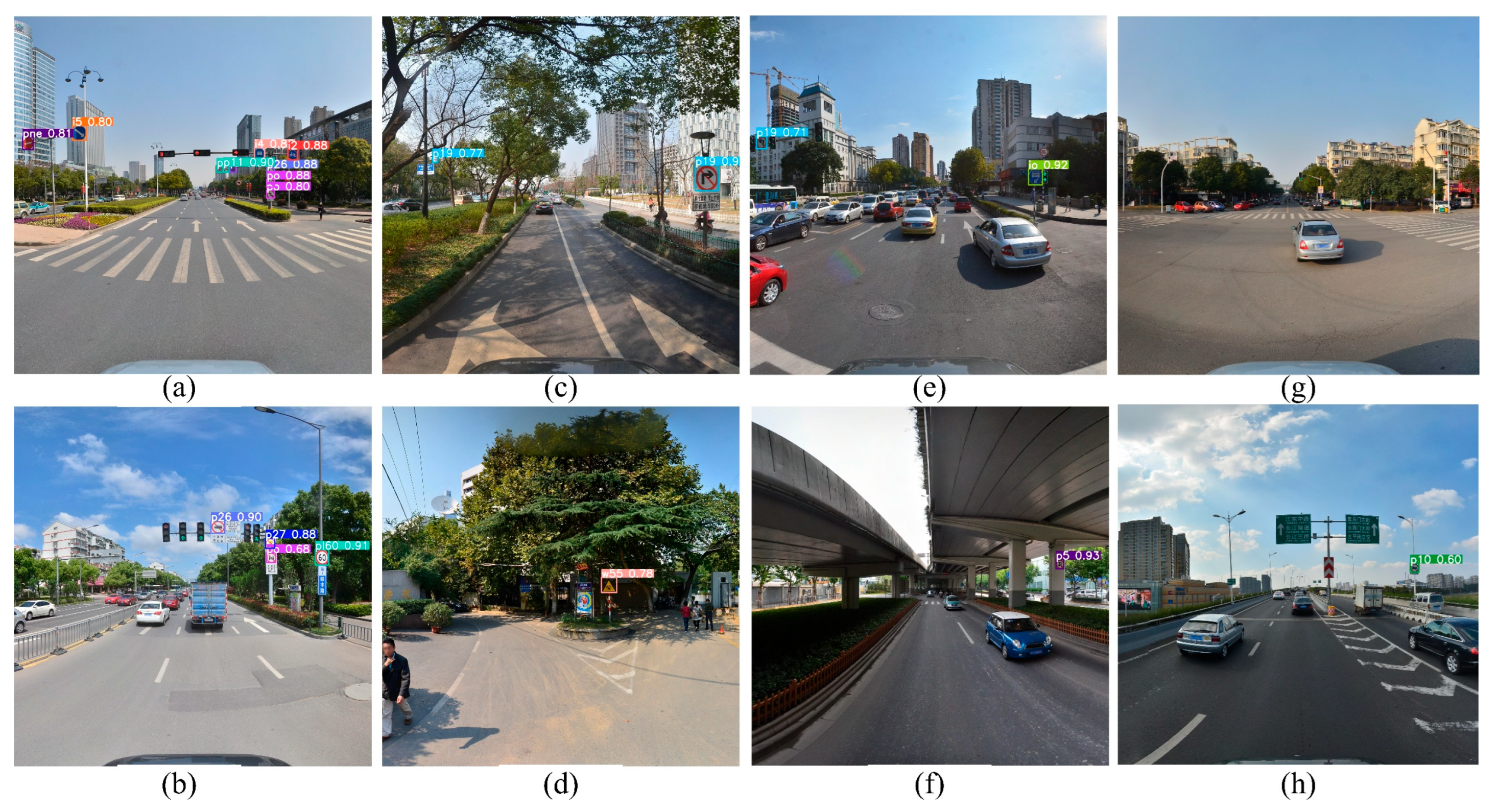

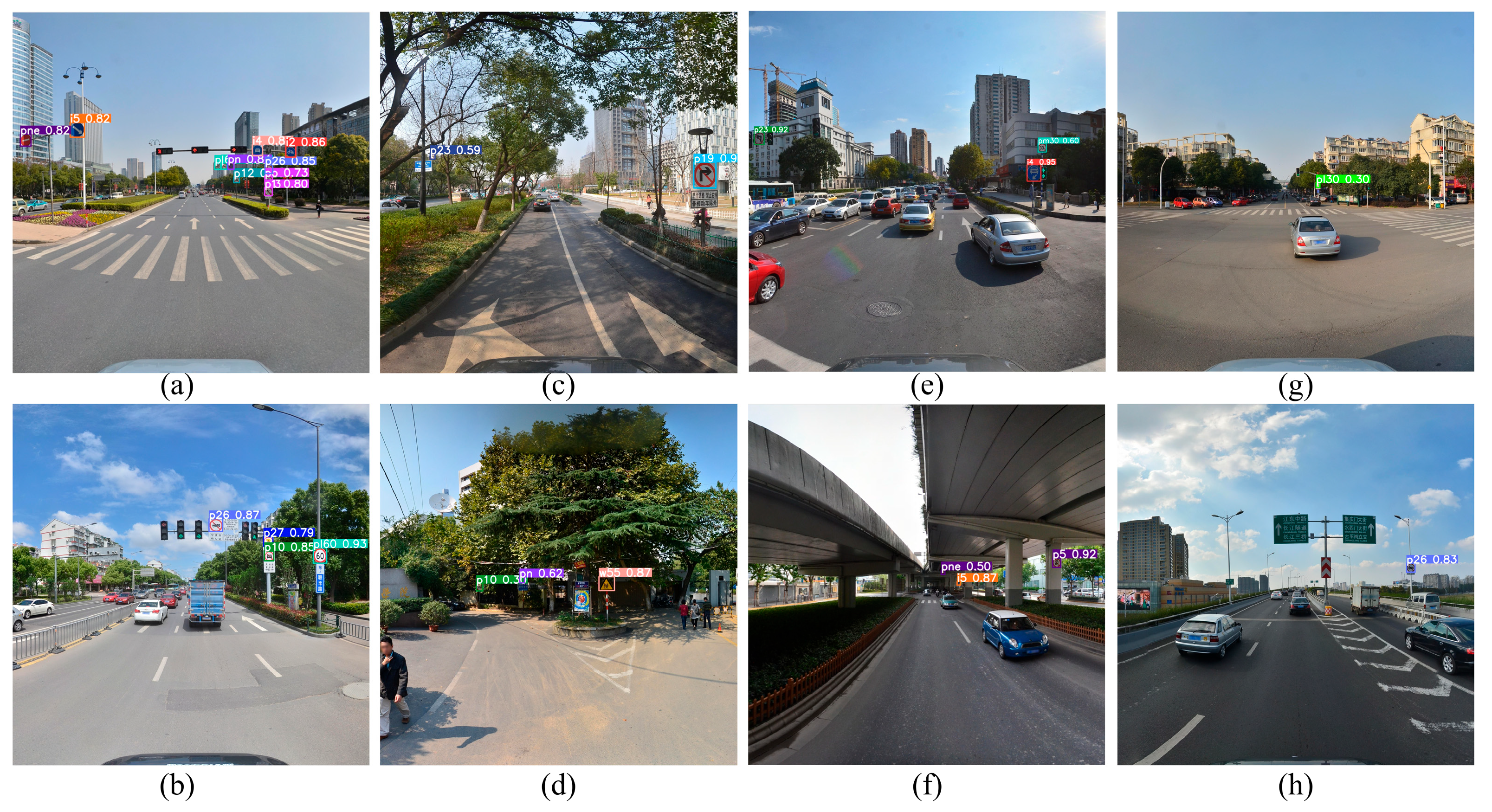

4.5. Visual Analysis

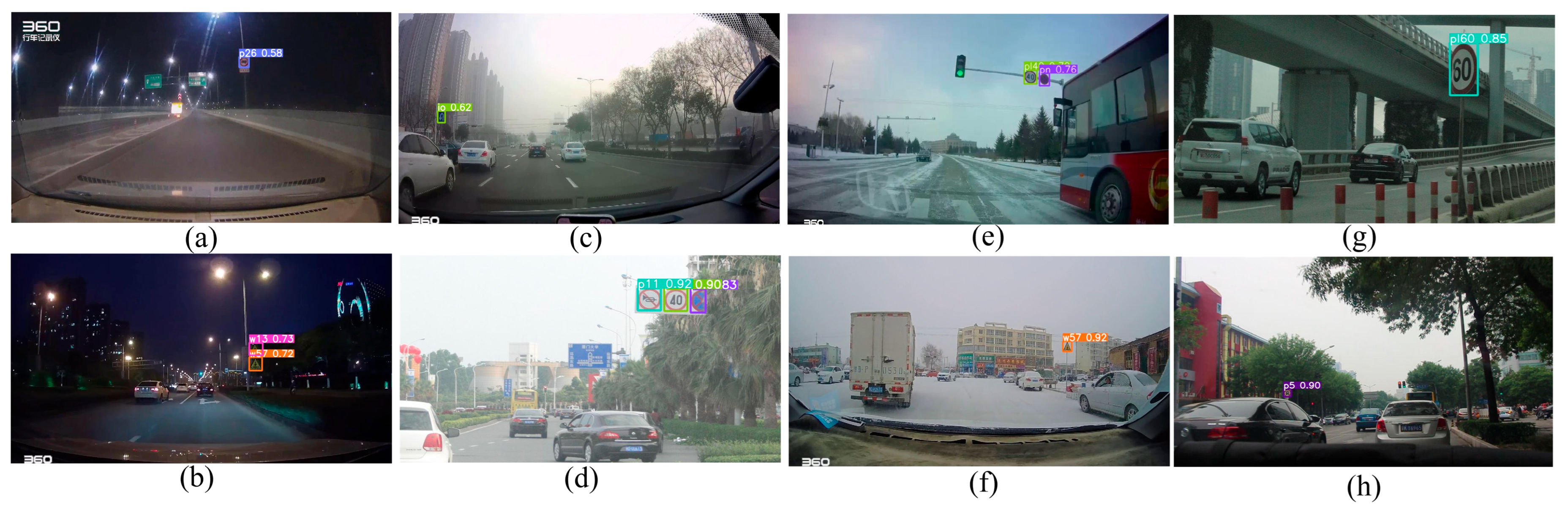

4.6. Generalizability Experiments

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

1. Dewi, C.; Chen, R.-C.; Yu, H.; Jiang, X. Robust Detection Method for Improving Small Traffic Sign Recognition Based on Spatial Pyramid Pooling. J. Ambient Intell. Humaniz. Comput. 2021, 14, 8135–8152.

2. Barodi, A.; Bajit, A.; Zemmouri, A.; Benbrahim, M.; Tamtaoui, A. Improved Deep Learning Performance for Real-Time Traffic Sign Detection and Recognition Applicable to Intelligent Transportation Systems. SSRN Electron. J. 2025, 12, 713–723.

3. Wang, Y.; Wang, Q. Robust Stacking Ensemble Model for Traffic Sign Detection and Recognition. IEEE Access 2024, 12, 178941–178950.

4. Zheng, Z.; Cheng, Y.; Xin, Z.; Yu, Z.; Zheng, B. Robust Perception Under Adverse Conditions for Autonomous Driving Based on Data Augmentation. IEEE Trans. Intell. Transp. Syst. 2023, 24, 13916–13929.

5. Wang, C.; Zhou, W.; Wang, G. ORD-WM: A Two-Stage Loop Closure Detection Algorithm for Dense Scenes. J. King Saud Univ.-Comput. Inf. Sci. 2024, 36, 102115.

6. Zhai, H.; Du, J.; Ai, Y.; Hu, T. Edge Deployment of Deep Networks for Visual Detection: A Review. IEEE Sens. J. 2025, 25, 18662–18683.

7. Apostolidis, K.D.; Papakostas, G.A. Delving into YOLO Object Detection Models: Insights into Adversarial Robustness. Electronics 2025, 14, 1624.

8. Qin, Y.; Li, X.; He, D.; Zhou, Y.; Li, L. RLGS-YOLO: An Improved Algorithm for Metro Station Passenger Detection Based on YOLOv8. Eng. Res. Express 2024, 6, 045263.

9. Zeng, J.; Wu, H.; He, M. Image Classification Combined with Faster R-CNN for the Peak Detection of Complex Components and Their Metabolites in Untargeted LC-HRMS Data. Anal. Chim. Acta 2023, 1238, 340189.

10. Bi, X.; Hu, J.; Xiao, B.; Li, W.; Gao, X. IEMask R-CNN: Information-Enhanced Mask R-CNN. IEEE Trans. Big Data 2023, 9, 688–700.

11. Zhai, S.; Shang, D.; Wang, S.; Dong, S. DF-SSD: An Improved SSD Object Detection Algorithm Based on DenseNet and Feature Fusion. IEEE Access 2020, 8, 24344–24357.

12. Redmon, J.; Divvala, S.; Girshick, R.; Farhadi, A. You Only Look Once: Unified, Real-Time Object Detection. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; IEEE: Piscataway, NJ, USA, 2016; pp. 779–788.

13. Wei, J.; As’arry, A.; Anas Md Rezali, K.; Zuhri Mohamed Yusoff, M.; Ma, H.; Zhang, K. A Review of YOLO Algorithm and Its Applications in Autonomous Driving Object Detection. IEEE Access 2025, 13, 93688–93711.

14. Shen, Q.; Li, Y.; Zhang, Y.; Zhang, L.; Liu, S.; Wu, J. CSW-YOLO: A Traffic Sign Small Target Detection Algorithm Based on YOLOv8. PLoS ONE 2025, 20, e0315334.

15. Cai, Y.; Min, R.; Huang, J. Research on Traffic Sign Detection Method Based on FLB-YOLOv8. In Proceedings of the Fifth International Conference on Computer Communication and Network Security (CCNS 2024), Guangzhou, China, 22 August 2024; SPIE: Bellingham, WA, USA, 2024; p. 35.

16. Chen, J.; Huang, H.; Zhang, R.; Lyu, N.; Guo, Y.; Dai, H.N.; Yan, H. YOLO-TS: Real-Time Traffic Sign Detection with Enhanced Accuracy Using Optimized Receptive Fields and Anchor-Free Fusion. arXiv 2024, arXiv:2410.17144.

17. Huang, L.; Cai, H.; Peng, Y.; Liao, J. RePCMA-YOLOv8n: A Lightweight Traffic Sign Detection Model. In Proceedings of the International Conference on Applied Intelligence, Zhengzhou, China, 22–25 November 2024; Springer Nature: Singapore, 2024; pp. 105–114.

18. Deng, Y.; Huang, L.; Gan, X.; Lu, Y.; Shi, S. A Heterogeneous Attention YOLO Model for Traffic Sign Detection. J. Supercomput. 2025, 81, 765.

19. Khalili, B.; Smyth, A.W. SOD-YOLOv8—Enhancing YOLOv8 for Small Object Detection in Aerial Imagery and Traffic Scenes. Sensors 2024, 24, 6209.

20. Liu, H.; Zhou, K.; Zhang, Y.; Zhang, Y. ETSR-YOLO: An Improved Multi-Scale Traffic Sign Detection Algorithm Based on YOLOv5. PLoS ONE 2023, 18, e0295807.

21. Du, S.; Pan, W.; Li, N.; Dai, S.; Xu, B.; Liu, H.; Xu, C.; Li, X. TSD-YOLO: Small Traffic Sign Detection Based on Improved YOLO V8. IET Image Process. 2024, 18, 2884–2898.

22. Xie, G.; Xu, Z.; Lin, Z.; Liao, X.; Zhou, T. GRFS-YOLOv8: An Efficient Traffic Sign Detection Algorithm Based on Multiscale Features and Enhanced Path Aggregation. Signal Image Video Process. 2024, 18, 5519–5534.

23. Chen, J.; Kao, S.; He, H.; Zhuo, W.; Wen, S.; Lee, C.-H.; Chan, S.-H.G. Run, Don’t Walk: Chasing Higher FLOPS for Faster Neural Networks. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Vancouver, BC, Canada, 17–24 June 2023.

24. Howard, A.; Sandler, M.; Chu, G.; Chen, L.-C.; Chen, B.; Tan, M.; Wang, W.; Zhu, Y.; Pang, R.; Vasudevan, V.; et al. Searching for MobileNetV3. In Proceedings of the 2019 IEEE/CVF International Conference on Computer Vision (ICCV), Seoul, Republic of Korea, 27 October–2 November 2019.

25. Han, K.; Wang, Y.; Tian, Q.; Guo, J.; Xu, C.; Xu, C. GhostNet: More Features from Cheap Operations. In Proceedings of the 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), Seattle, WA, USA, 13–19 June 2020.

26. Hu, J.; Shen, L.; Sun, G. Squeeze-and-Excitation Networks. In Proceedings of the 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; IEEE: Piscataway, NJ, USA, 2018.

27. Woo, S.; Park, J.; Lee, J.-Y.; Kweon, I.S. CBAM: Convolutional Block Attention Module. arXiv 2018, arXiv:1807.06521.

28. Ouyang, D.; He, S.; Zhang, G.; Luo, M.; Guo, H.; Zhan, J.; Huang, Z. Efficient Multi-Scale Attention Module with Cross-Spatial Learning. In Proceedings of the ICASSP 2023—2023 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Rhodes Island, Greece, 4–10 June 2023; IEEE: Piscataway, NJ, USA, 2023; pp. 1–5.

29. Li, H.; Li, J.; Wei, H.; Liu, Z.; Zhan, Z.; Ren, Q. Slim-Neck by GSConv: A Lightweight-Design for Real-Time Detector Architectures. J. Real-Time Image Process. 2024, 21, 62.

30. Zhu, Z.; Liang, D.; Zhang, S.; Huang, X.; Li, B.; Hu, S. Traffic-Sign Detection and Classification in the Wild. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; IEEE: Piscataway, NJ, USA, 2016; pp. 2110–2118.

31. Zhang, J.; Zou, X.; Kuang, L.D.; Wang, J.; Sherratt, R.S.; Yu, X. CCTSDB 2021: A More Comprehensive Traffic Sign Detection Benchmark. Hum.-Centric Comput. Inf. Sci. 2022, 12, 23.

32. Geoffroy, H.; Berger, J.; Colange, B.; Lespinats, S.; Dutykh, D. The Use of Dimensionality Reduction Techniques for Fault Detection and Diagnosis in a AHU Unit: Critical Assessment of Its Reliability. J. Build. Perform. Simul. 2022, 16, 249–267.

| Parameter | Specification |
|---|---|
| Operating System | Windows 10 |
| CPU | Intel(R) i7-12700K |
| GPU | RTX 3070 Ti |
| GPU Memory | 8 GB |
| RAM | 64 GB DDR |
| Storage | WD SN770 NVMe SSD, 1 TB |
| Programming Language | Python 3.8 |
| Deep Learning Framework | PyTorch 1.12.1 |
| CUDA Toolkit | CUDA 11.3.1 |
| cuDNN Version | cuDNN 8.0.5.39 |

| Hyperparameter | Setting |
|---|---|
| Input Size | 640 × 640 |
| Learning Rate | 0.01 |
| Batch Size | 16 |
| Momentum | 0.937 |
| Weight Decay | 0.0005 |
| Optimizer | SGD |
| Epochs | 300 |
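The settings in the table above could be collected into a single training configuration. The sketch below keys them by argument names used by the Ultralytics YOLO trainer (`imgsz`, `lr0`, `batch`, and so on); that the authors trained with that package is an assumption, and the helper `format_cli` is a hypothetical name introduced here.

```python
# Hyperparameters from the table above, keyed by Ultralytics-style argument
# names. Assumption: the source does not say which training script was used.
train_cfg = {
    "imgsz": 640,            # input size 640 x 640
    "lr0": 0.01,             # learning rate
    "batch": 16,             # batch size
    "momentum": 0.937,
    "weight_decay": 0.0005,
    "optimizer": "SGD",
    "epochs": 300,
}


def format_cli(cfg):
    """Render the settings as space-separated key=value pairs."""
    return " ".join(f"{key}={value}" for key, value in cfg.items())


cli_args = format_cli(train_cfg)
print(cli_args)
```

With the `ultralytics` package installed, the same dictionary could be passed as `YOLO("yolov8s.yaml").train(data="tt100k.yaml", **train_cfg)`, where `tt100k.yaml` is a hypothetical dataset description file.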

| Experiment | Faster-C2f | Faster-EMA C2f | Conv-BiFPN | GSP-Detect | P (%) | R (%) | mAP50 (%) | Param (M) | GFLOPs | FPS | Training Time (h) |
|---|---|---|---|---|---|---|---|---|---|---|---|
| Baseline | | | | | 83.6 | 76.5 | 83.1 | 11.1 | 28.8 | 176 | 45.2 |
| 1 | √ | | | | 84.8 | 76.2 | 85.3 | 7.4 | 25.3 | 181 | 40.1 |
| 2 | | √ | | | 85.3 | 79.7 | 86.3 | 8.2 | 26.6 | 180 | 42.4 |
| 3 | | √ | √ | | 85.0 | 81.0 | 87.3 | 9.3 | 27.2 | 178 | 43.1 |
| 4 | | √ | √ | √ | 88.3 | 78.5 | 86.2 | 7.1 | 22.3 | 183 | 35.3 |
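To make the trade-off in the ablation table easier to read, the following sketch computes the relative change between the baseline row and experiment 4, using only the values listed in the table. The dictionary keys and the helper `relative_change` are names introduced here for illustration.

```python
def relative_change(before, after):
    """Percentage change from `before` to `after` (negative means a reduction)."""
    return (after - before) / before * 100.0


# Values copied from the Baseline row and the Experiment 4 row of the table.
baseline = {"params_m": 11.1, "gflops": 28.8, "map50": 83.1, "fps": 176, "train_h": 45.2}
final = {"params_m": 7.1, "gflops": 22.3, "map50": 86.2, "fps": 183, "train_h": 35.3}

param_change = round(relative_change(baseline["params_m"], final["params_m"]), 1)
gflops_change = round(relative_change(baseline["gflops"], final["gflops"]), 1)
train_change = round(relative_change(baseline["train_h"], final["train_h"]), 1)
map50_gain = round(final["map50"] - baseline["map50"], 1)  # percentage points

print(f"parameters: {param_change}%")      # -36.0%
print(f"GFLOPs: {gflops_change}%")         # -22.6%
print(f"training time: {train_change}%")   # -21.9%
print(f"mAP50: +{map50_gain} points")      # +3.1 points
```

Note that the mAP50 figure is a difference in percentage points, while the other three are relative changes.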

| Model (mAP50, %) | i2 | i4 | i5 | il100 | il60 | il80 | io | ip | p3 | p5 | p6 | p10 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| YOLOv8s | 90.0 | 91.1 | 92.1 | 97.2 | 95.4 | 95.0 | 90.2 | 87.3 | 85.5 | 93.7 | 68.3 | 79.9 |
| FEBG-YOLOv8s | 90.2 | 91.5 | 94.2 | 98.0 | 95.5 | 96.4 | 92.1 | 87.4 | 86.5 | 94.3 | 70.2 | 79.9 |

| Model (mAP50, %) | p11 | p12 | p19 | p23 | p26 | p27 | pg | ph4 | ph5 | pl100 | pl120 | pl20 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| YOLOv8s | 86.2 | 84.1 | 85.7 | 87.4 | 87.8 | 90.9 | 85.3 | 76.0 | 85.4 | 97.5 | 93.2 | 50.6 |
| FEBG-YOLOv8s | 86.4 | 86.7 | 87.2 | 88.4 | 90.92 | 93.7 | 88.4 | 85.3 | 88.1 | 98.7 | 95.1 | 68.8 |
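The per-class results above can be summarised by ranking the classes by their mAP50 gain. The sketch below does this with the 24 values listed in the table; the variable names are introduced here for illustration.

```python
# Per-class mAP50 (%) copied from the table above, in column order.
classes = ["i2", "i4", "i5", "il100", "il60", "il80", "io", "ip", "p3", "p5", "p6", "p10",
           "p11", "p12", "p19", "p23", "p26", "p27", "pg", "ph4", "ph5", "pl100", "pl120", "pl20"]
yolov8s = [90.0, 91.1, 92.1, 97.2, 95.4, 95.0, 90.2, 87.3, 85.5, 93.7, 68.3, 79.9,
           86.2, 84.1, 85.7, 87.4, 87.8, 90.9, 85.3, 76.0, 85.4, 97.5, 93.2, 50.6]
febg = [90.2, 91.5, 94.2, 98.0, 95.5, 96.4, 92.1, 87.4, 86.5, 94.3, 70.2, 79.9,
        86.4, 86.7, 87.2, 88.4, 90.92, 93.7, 88.4, 85.3, 88.1, 98.7, 95.1, 68.8]

# Gain in percentage points for each class, rounded to remove float noise.
gains = {name: round(new - old, 2) for name, old, new in zip(classes, yolov8s, febg)}

# Classes with the largest improvement first.
top = sorted(gains.items(), key=lambda item: item[1], reverse=True)[:3]
worst = min(gains.values())

print("largest gains:", top)
print("smallest gain:", worst)
```

On these values the largest gains are for `pl20` (+18.2 points) and `ph4` (+9.3 points), the classes on which YOLOv8s scores lowest in the second table, and no listed class gets worse.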

| Model | P (%) | R (%) | mAP50 (%) | Param (M) | GFLOPs |
|---|---|---|---|---|---|
| SSD | 65.2 | 60.4 | 65.6 | 120 | 35.8 |
| Faster R-CNN | 58.7 | 52.3 | 55.7 | 42.6 | 134.5 |
| YOLOv3 | 62.2 | 59.7 | 81.5 | 63.0 | 185.3 |
| YOLOv4 | 64.8 | 62.3 | 82.1 | 95.9 | 141.8 |
| YOLOv5s | 71.2 | 69.2 | 82.5 | 6.8 | 16.5 |
| YOLOv7-tiny | 70.8 | 67.6 | 81.3 | 6.0 | 13.2 |
| YOLOv8s | 83.6 | 76.5 | 83.1 | 11.1 | 28.8 |
| YOLOv10s | 86.0 | 76.5 | 85.1 | 8.1 | 24.3 |
| YOLOv11s | 85.2 | 78.2 | 85.2 | 9.4 | 25.7 |
| ETSR-YOLO [20] | 88.5 | 77.4 | 88.2 | 7.5 | 37.6 |
| TSD-YOLO [21] | 90.8 | 83.8 | 90.6 | 8.8 | 65.7 |
| GRFS-YOLOv8 [22] | - | 95.0 | 71.2 | 1.71 | 10.9 |
| FEBG-YOLOv8s | 88.3 | 78.5 | 86.2 | 7.1 | 22.3 |

| Model | Param (M) | GFLOPs | mAP50 (%) |
|---|---|---|---|
| Baseline | 7.4 | 25.3 | 85.3 |
| +CBAM | 10.2 | 27.2 | 85.6 |
| +SE | 9.9 | 26.7 | 85.1 |
| +ECA | 9.9 | 26.7 | 85.2 |
| +CA | 10.1 | 27.0 | 85.4 |
| +EMA | 8.2 | 26.6 | 86.3 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Published by MDPI on behalf of the World Electric Vehicle Association. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Xing, C.; Sun, H.; Yang, J. A Lightweight Traffic Sign Detection Model Based on Improved YOLOv8s for Edge Deployment in Autonomous Driving Systems Under Complex Environments. World Electr. Veh. J. 2025, 16, 478. https://doi.org/10.3390/wevj16080478
