1. Introduction

The technological challenges that need to be solved to attain complete autonomy are divided into four areas by designers and researchers in the autonomous driving field: perception, localization, path planning, and controls [1]. Perception is concerned with the task of detecting where objects like cars [2], trucks [3], bikes [4], and pedestrians are [5]; which lane the egocar is driving in [6]; where its boundaries are [7]; and so on. The solutions to these perception subtasks are researched using machine learning techniques that co-ordinate various sensors to accurately detect the surroundings of the egocar [8]. Sonar, radar, lidar, cameras, and other sensors are among them [9]. Further, there is also an additional layer of complexity here: certain objects do not move (static elements), while others do (dynamic elements), and it is critical to distinguish between the two [10].

Localization, in turn, is a vital function for self-driving cars [11], allowing them to locate their position within centimeters on a reference map [12]. This high degree of precision is necessary because it allows a self-driving car to comprehend its surroundings and form an understanding of the road itself, road objects, and lane structures [13].

Additionally, the path-planning task is concerned with how an autonomous car drives from one point on the map (the initial position for the trip) to the final goal (the final position for the trip) [14]. It is divided into two subtasks: global path planning, which is the high-level path on the map for the desired trip, and local path planning, which generates a trajectory profile and a velocity profile for the egocar to maneuver across its environment while avoiding any obstacles, changing lanes, passing other vehicles, etc. The path-planning module receives information from both the perception and the localization modules and sends its generated trajectory to the control module [15].

Moreover, the controls task in autonomous cars is concerned with the automatic application of force on the car actuators to achieve the reference-trajectory tracking goals [16]. In self-driving cars, the controllers’ output is exerted on three actuators: steering, throttle, and brake systems [17]. The input information to these controllers takes several forms: the reference trajectory received from the egocar path-planning module, the egocar speed and acceleration signals, and the speed and acceleration of the preceding car [18].

This paper mainly focuses on localization and how to improve its accuracy, as it allows the autonomous car to better comprehend its surroundings and form an understanding of the road and lane structures (e.g., detecting where a lane forks or merges, scheduling lane changes, and determining lane routes even when markers are obscured) [19].

Sensors play a key role in autonomous vehicle localization, with vehicles typically equipped with a combination of GPS, IMU, cameras, lidar, radar, and odometry sensors. These sensors provide data about the vehicle’s surroundings and its own motion, forming the foundation for accurate localization. Sensor fusion is a crucial technique that integrates data from multiple sensors to enhance accuracy and reliability. By combining information from sources like GPS, IMU, and lidar, a vehicle can compensate for the limitations of individual sensors, improving overall localization performance. Odometry is another important component, estimating the vehicle’s position based on changes in motion, such as wheel speed and steering angle. While useful, odometry accumulates errors over time, so it is used in conjunction with other localization methods. Map matching compares sensor data with pre-existing maps of the environment to identify the vehicle’s location. This technique is particularly beneficial in environments with recognizable landmarks, contributing to precise localization. Particle filters, a probabilistic method, maintain a set of potential vehicle poses based on sensor measurements and motion models. As the vehicle moves, this set of poses is updated to converge toward the most likely position, enhancing the accuracy of localization.

Localization provides the 3D pose of an autonomous car inside a high-definition (HD) map, comprising 3D location, 3D orientation, and associated uncertainty [20]. Unlike navigation on a map with GPS, which only requires a precision of a few meters, the localization of a self-driving car requires a far greater level of accuracy relative to the map, generally in the range of centimeters and a few tenths of a degree [20].

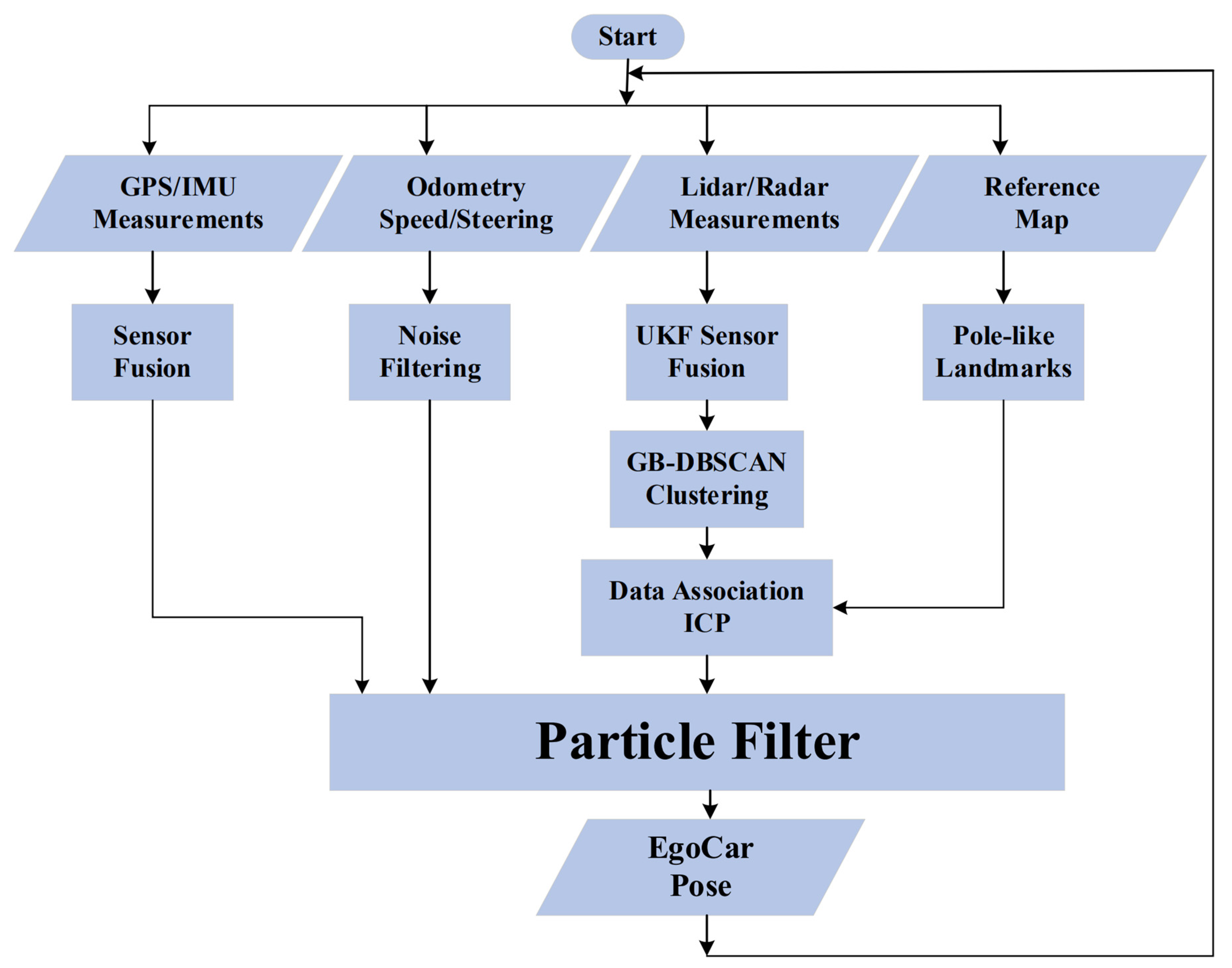

A landmark-based reference map represents a balanced approach between no-map (SLAM) techniques and detailed high-definition mapping techniques and is thus adopted in this study. Moreover, as a contribution, this work does not depend on a single sensor (lidar), with its high resolution but well-known limitations (e.g., limited reach; severely affected by fog, snow, or dust [21]), but fuses it with radar (e.g., long reach, not affected by fog, but with low resolution [22]) so that each sensor compensates for the weaknesses of the other. Additionally, by using a carefully tailored UKF as the fusion technique, pole-like landmarks can be detected with greater precision and robustness. As an additional contribution, this paper addresses the uncertainties in the detected pole-like landmark co-ordinates, which are represented on the reference map in a probabilistic form. This allows Bayesian inference, applied using a tailored version of a particle filter, to estimate the egocar pose.

2. Literature Review

Satellite-based localization has been available for decades and has undergone various upgrades. Newer systems, such as RTK-GPS [23] or DGPS [24], provide efficient options since they attain centimeter-level precision without the use of any extra techniques. Nonetheless, there are significant uncertainties concerning their consistency. Structures or other towering road obstacles that obscure the line of sight between the car and the satellites can reduce precision by some meters [25] in metropolitan areas [26]. Moreover, the latency for acquiring the signals usually comes with errors, which is a critical issue that needs to be addressed [27] by filtration, noise cancelation, and compensating for missing readings [28].

As an alternative to RTK-GPS, and according to [15], lidar approaches were shown to be the most promising in terms of performance for the localization of self-driving vehicles. Nevertheless, they demand high processing and computational power, in addition to their high cost. Thus, they are impractical in terms of commercialization and affordability. Therefore, further enhancement of lidar technology or alternative methodologies like vision-based localization or ground-penetrating radar localization within lidar maps may open the door for more feasible systems from a commercial point of view. Nevertheless, before these systems can be mass-deployed, further study will be needed to evaluate their robustness, validate their performance across a range of driving circumstances, and refine operation settings.

Accordingly, using reference-dense maps [20] could provide a more reliable localization option [29]. These maps may take several forms like point clouds [30], grid maps [31], or polygon meshes [32]. Nevertheless, map-based systems have the fundamental disadvantage of requiring a substantial amount of memory, which quickly becomes pricey when larger-scale maps are employed [33]. To address this issue, “landmark maps” have sparked significant attention [11]. These maps contain only a very limited number of recognizable and designated features that have been extracted from huge volumes of raw sensory data (collected from cameras, radars, and lidars) and condensed [34], which, in return, reduces the needed memory use by several orders of magnitude.

Subsequently, several efforts have been made in recent research to tackle the challenge of car localization by the employment of lidar point clouds from which pole-like markers are identified. This issue is separated into two sub-problems. The first sub-problem is concerned with the detection of poles and the estimation of their positions, while the second one is concerned with the estimation of the egocar pose based on the position of the detected poles. For example, a pole detector is created by Weng et al. [35] by dividing the region around the egocar and counting the reflected-scan points in each voxel. The detection of poles is accomplished by recognizing the stacks of voxels that are vertically linked and all surpass a predefined threshold. Additionally, the detector employs the RANSAC algorithm [36] to fit all of the points associated with the discovered stacks of voxels to a cylindrical shape. When it comes to the egocar pose estimation, the nearest-neighborhood data association is utilized and paired with a particle filter.

Furthermore, the main emphasis of the Sefati et al. pole-detection approach is to eliminate the ground plane from the point cloud generated by the sensors [37]. A horizontal regular grid is then constructed from the projection of the remaining point-cloud points. The occupancy and height parameters are used to cluster the neighboring cells, and a cylinder is fitted to each of the resulting clusters. Similarly, for the egocar pose estimation, the nearest-neighborhood data association is also employed and paired with a particle filter.

Kummerle et al. improved on the previous work by fitting planes to point-cloud-constructed building facades and also fitting lines to lane markings extracted from stereo camera images [34]. The previous improvements enhance the pole detection, which consequently improves the pose estimation by employing a Monte Carlo method to tackle the data association stage and a nonlinear least-squares optimization technique to refine the finally computed pose.

A more comprehensive work was conducted by Schaefer et al. [38] as they propose three phases to construct a full localization system based on landmark detection. The first phase is the pole extractor, the second one is the mapping, and the third one is the localization. The method used for pole detection considers both the endpoint of the laser beams as well as the available open space between the lidar and those beam endpoints. Therefore, based on a map of only pole landmarks, the presented method demonstrates precise and consistent car localization for large time scales. Experiments are carried out for 35 hours over the course of 15 months going through different circumstances like construction zones, weather and seasonal variations, different routes, and hordes of moving objects.

Several attempts have been made to remove the dependency on reference maps and only depend on the mounted sensors on the egocar. These endeavors focus on constructing a map and computing localization simultaneously in what is called simultaneous localization and mapping (SLAM) techniques [39]. However, these techniques are computationally much more expensive than the ones that depend on reference maps. Therefore, they usually rely on approximations to speed up processing and create a functional outcome [40]. Consequently, the lack of precision in these techniques hinders their applicability to autonomous driving.

The research gap addressed by this paper lies in the need for a nuanced and versatile mapping technique that strikes a balance between simultaneous localization and mapping (SLAM) methods, which lack mapping information, and high-definition mapping techniques, which can be overly detailed. In contrast to existing approaches, this work adopts a landmark-based reference map, providing a more comprehensive mapping solution.

Furthermore, the existing literature often relies on a single high-resolution sensor, such as lidar, which, despite its advantages, has well-known limitations, including restricted reach and vulnerability to environmental factors like fog, snow, or dust. This paper contributes by adopting a fusion approach, combining lidar with radar. While radar offers a longer reach and is less affected by adverse weather conditions, it has lower resolution. The integration of these complementary sensors is designed to capitalize on their individual strengths and mitigate their weaknesses.

Additionally, the paper introduces a carefully tailored Unscented Kalman Filter (UKF) as the fusion technique, enhancing precision and robustness in detecting pole-like landmarks. This aspect represents a research gap in sensor fusion techniques for landmark-based mapping.

Moreover, the paper addresses a crucial research gap by acknowledging and handling uncertainties in the detected pole-like landmark co-ordinates. Unlike existing methods, this study represents uncertainties in a probabilistic form on the reference map, enabling Bayesian inference. The application of a tailored particle filter allows for the estimation of egocar pose while considering uncertainties, contributing to the overall robustness and reliability of the localization system. This unique approach to handling uncertainties in landmark-based mapping represents a novel contribution to the existing body of research in autonomous vehicle localization.

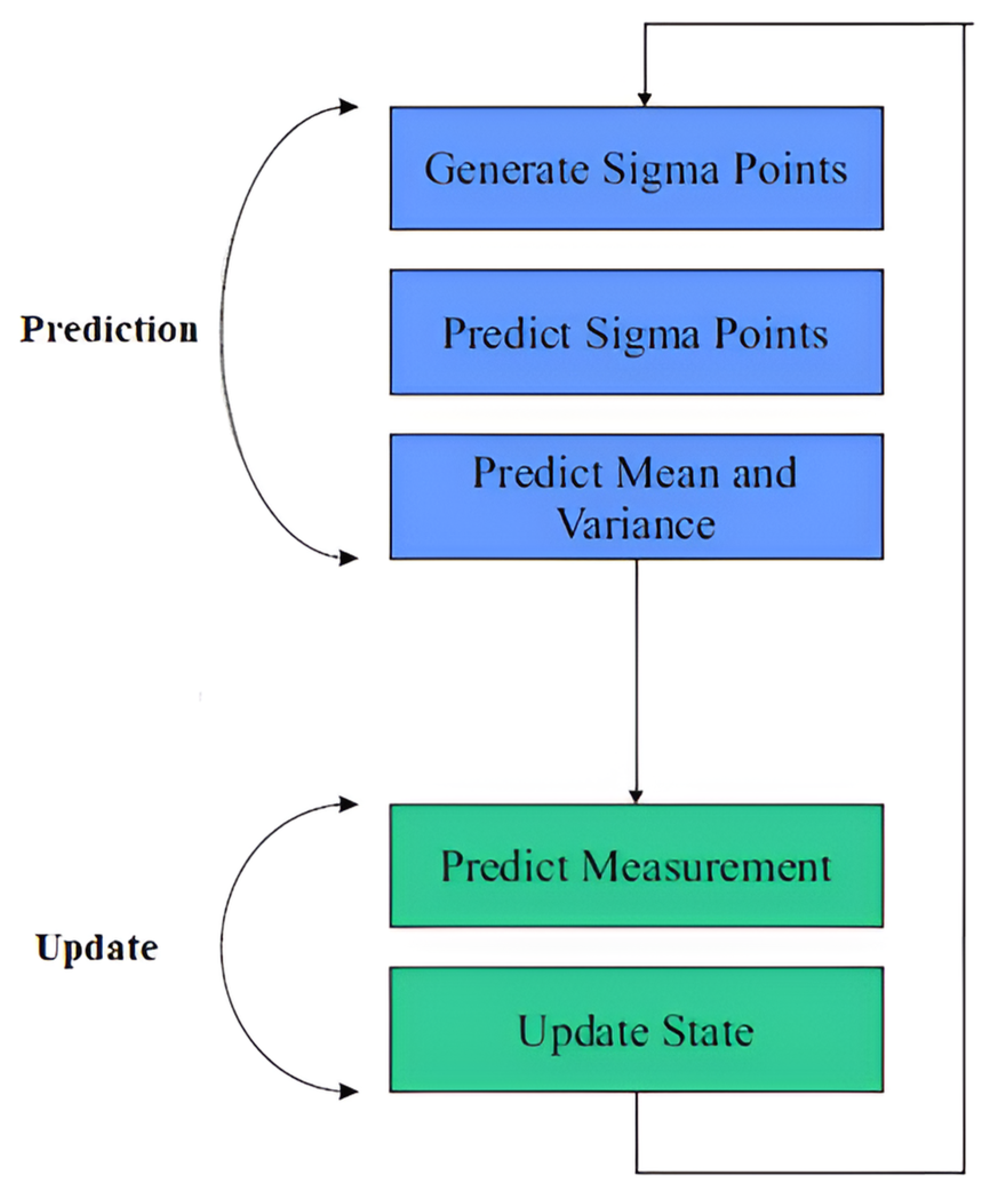

4. Overview of the UKF

The KF is an equation-based system that works as a predict–update cyclic optimum estimator that minimizes the estimated error covariance [44]. Given the measurement $z_k$ of a discrete-time-controlled process described by a set of linear stochastic difference equations, the Kalman filter predicts the state $x_k$.

However, because the KF is restricted to linear processes, it is incompatible with the radar measurement process, which is fundamentally nonlinear. To overcome this restriction, the UKF was developed [45]. The UKF is a deterministic, sampling-based, derivative-free alternative to the extended Kalman filter [46]. The UKF also employs the predict–update two-step procedure, but it is supplemented with additional processes such as sigma-point generation and prediction, as presented in Figure 2.

The state Gaussian distribution is represented in the UKF process by a small number of carefully chosen sample points known as sigma points. The $2n_x + 1$ sigma points, where $n_x$ is the state dimension, are chosen using Equation (1), where $P_{k|k}$ is the Kalman filter process estimate covariance matrix, $\mathcal{X}_{k|k}$ is the sigma-point matrix containing the $2n_x + 1$ sigma-point vectors, and $\lambda$ is a design parameter that defines the spread of the produced sigma points (a common choice in the UKF literature is $\lambda = 3 - n_x$).
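As a minimal sketch of the sigma-point construction described above, the Python snippet below builds the sigma points for a small two-dimensional state. It assumes the standard unscented-transform form (the state mean plus and minus the columns of a matrix square root of $(\lambda + n)P$) and the common heuristic $\lambda = 3 - n$. The function names `cholesky` and `sigma_points` are hypothetical, and the paper's own implementation is written in C++ with Eigen, so this is an illustration rather than the authors' code.

```python
import math


def cholesky(a):
    """Return lower-triangular L with a = L * L^T (a must be symmetric positive definite)."""
    n = len(a)
    low = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(i + 1):
            s = sum(low[i][k] * low[j][k] for k in range(j))
            if i == j:
                low[i][j] = math.sqrt(a[i][i] - s)
            else:
                low[i][j] = (a[i][j] - s) / low[j][j]
    return low


def sigma_points(x, P, lam=None):
    """Build the 2n + 1 sigma points for mean x (length n) and covariance P (n x n)."""
    n = len(x)
    if lam is None:
        lam = 3.0 - n  # assumed heuristic for the spreading parameter
    scaled = [[(lam + n) * P[i][j] for j in range(n)] for i in range(n)]
    root = cholesky(scaled)  # matrix square root of (lam + n) * P
    points = [list(x)]  # the first sigma point is the mean itself
    for sign in (1.0, -1.0):
        for j in range(n):
            # Shift the mean along column j of the square root, in both directions.
            points.append([x[i] + sign * root[i][j] for i in range(n)])
    return points


pts = sigma_points([1.0, 2.0], [[4.0, 0.0], [0.0, 9.0]])
for p in pts:
    print(p)
```

The Cholesky factor is used here as the matrix square root because it is cheap and well defined for a positive-definite covariance; other square roots would give a different but equally valid set of sigma points.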

Each produced sigma point is entered into the UKF nonlinear process model described in Equation (2) to build the matrix of predicted sigma points $\mathcal{X}_{k+1|k}$, with an $n_x \times (2n_x + 1)$ dimension, in what is called the sigma-point prediction phase. In Equation (2), $\nu_k$ is the white noise of the process, which is modeled as a Gaussian distribution ($\mathcal{N}(0, Q)$) with zero mean and covariance matrix $Q$.

The mean and covariance matrix of the predicted state are then computed from the predicted sigma points using Equation (3), where $w_i$ are the sigma-point weights, which are applied to invert the sigma-point spreading. These weights are computed as given in Equation (4).
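Equations (3) and (4) are not reproduced in this text. A commonly used form, assumed here, sets the weight of the central sigma point to $\lambda/(\lambda + n)$ and every other weight to $1/(2(\lambda + n))$, and then recovers the mean and covariance as weighted sums over the sigma points. The sketch below illustrates that assumed form in Python; the names `sigma_weights`, `weighted_mean`, and `weighted_covariance` are hypothetical, and angle normalization and the addition of process noise are deliberately left out.

```python
import math


def sigma_weights(n, lam):
    """Weights for 2n + 1 sigma points: one central weight, then 2n equal weights."""
    central = lam / (lam + n)
    other = 1.0 / (2.0 * (lam + n))
    return [central] + [other] * (2 * n)


def weighted_mean(points, weights):
    """Weighted sum of the sigma points, one state component at a time."""
    dim = len(points[0])
    return [sum(w * p[d] for w, p in zip(weights, points)) for d in range(dim)]


def weighted_covariance(points, weights, mean):
    """Weighted sum of outer products of the deviations from the mean."""
    dim = len(mean)
    cov = [[0.0] * dim for _ in range(dim)]
    for w, p in zip(weights, points):
        diff = [p[d] - mean[d] for d in range(dim)]
        for r in range(dim):
            for c in range(dim):
                cov[r][c] += w * diff[r] * diff[c]
    return cov


# Sigma points of a 2D state with mean (1, 2) and covariance diag(4, 9), lam = 3 - n = 1.
n, lam = 2, 1.0
a, b = math.sqrt(12.0), math.sqrt(27.0)
points = [[1.0, 2.0], [1.0 + a, 2.0], [1.0, 2.0 + b], [1.0 - a, 2.0], [1.0, 2.0 - b]]
weights = sigma_weights(n, lam)
mean = weighted_mean(points, weights)
cov = weighted_covariance(points, weights, mean)
print(mean, cov)
```

Because the points were spread with the same $\lambda$, the weighted sums return the original mean and covariance, which is the sense in which the weights invert the spreading.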

Each predicted sigma point is entered into the UKF’s nonlinear measurement model described by Equation (5) to build the matrix of predicted measurement sigma points $\mathcal{Z}_{k+1|k}$, with an $n_z \times (2n_x + 1)$ dimension (where $n_z$ is the measurement dimension), in the measurement prediction phase.

The mean and covariance matrix of the predicted measurement are then computed using the predicted sigma points and the covariance matrix $R$ of the measurement noise, as shown in Equation (6), where $w_i$ are the sigma-point weights computed in Equation (4), $S$ is the measurement covariance matrix, and $R = E[\omega_k \omega_k^T]$ is the expected value of the outer product of the measurement white noise $\omega_k$, which is modeled as a Gaussian distribution ($\mathcal{N}(0, R)$) with zero mean and covariance matrix $R$.

The final stage is the UKF state update, in which the gain matrix ($K$) of the UKF is produced using the estimated cross-correlation matrix ($T$) between the sigma points in the state space and the measurement space, as in Equation (7). The gain is applied to both the UKF state vector ($x$) and the state covariance matrix ($P$).

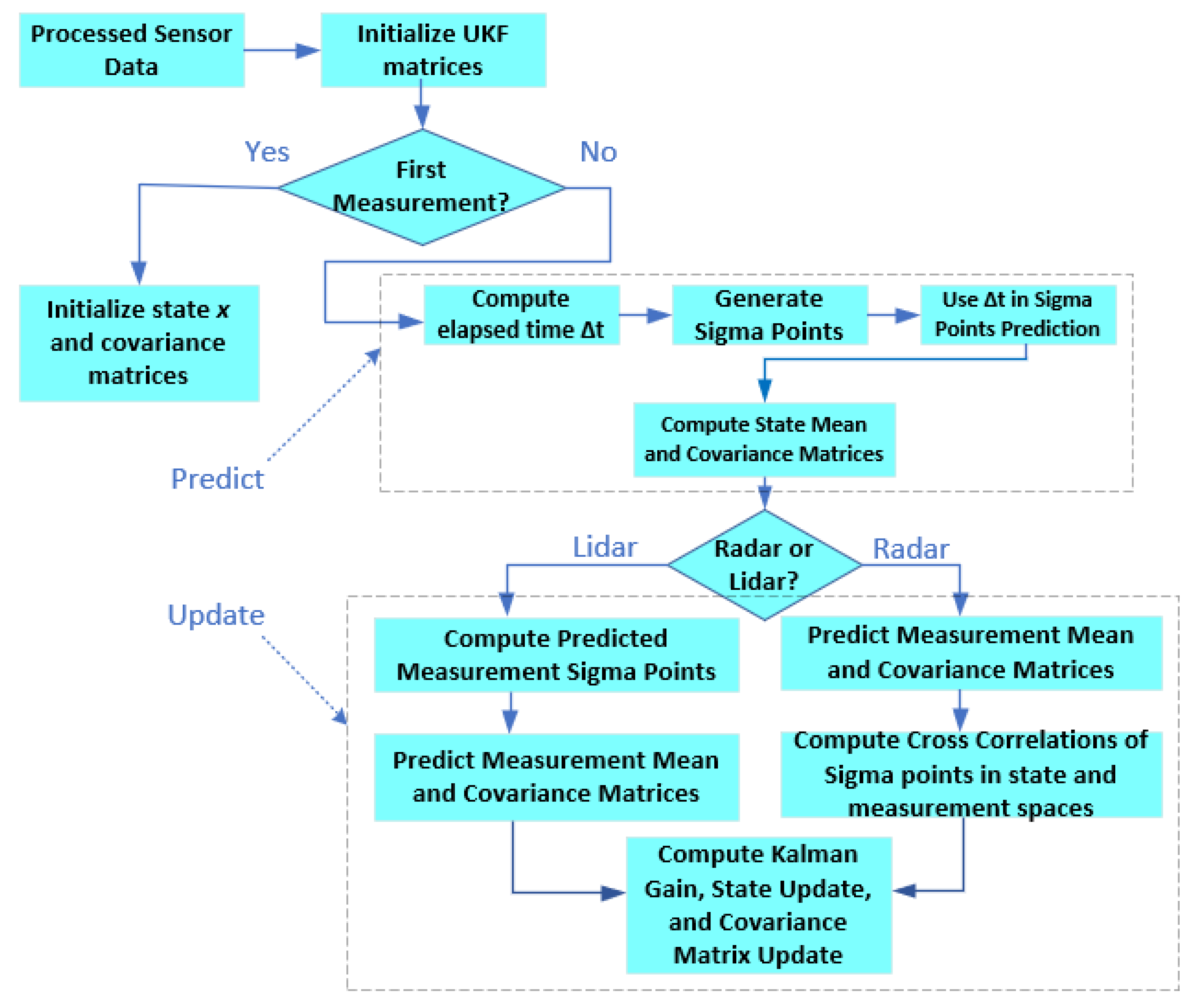

6. UKF-Based Lidar/Radar Fusion

The lidar measures the centroid of the object’s position (moving or stationary) in Cartesian co-ordinates ($p_x$ and $p_y$), as given by Equation (13), while the radar measures the same object’s centroid position in polar co-ordinates ($\rho$, $\varphi$). Moreover, the radar also measures the object’s velocity ($\dot{\rho}$), as given by Equation (14). Therefore, to unify the way of measurement, a mapping function is used (in Equation (15)) to convert the lidar Cartesian co-ordinates to polar form.
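The Cartesian-to-polar mapping mentioned above can be sketched as follows. This is an illustrative Python version under the usual convention (range as the Euclidean norm, bearing from `atan2`); the function name `cartesian_to_polar` and the symbol names are assumptions, not taken from Equation (15) itself.

```python
import math


def cartesian_to_polar(px, py):
    """Convert a Cartesian position (px, py) to polar form (range, bearing in radians)."""
    rho = math.hypot(px, py)   # radial distance from the sensor origin
    phi = math.atan2(py, px)   # bearing, in the interval (-pi, pi]
    return rho, phi


rho, phi = cartesian_to_polar(3.0, 4.0)
print(rho, phi)
```

Using `atan2` rather than a plain arctangent of `py / px` keeps the bearing in the correct quadrant and avoids a division by zero when the object lies directly to the side of the sensor.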

As shown in Figure 4, the prediction step is conducted for both lidar and radar simultaneously. However, the update step is specific to each sensor since each sensor has its own measurement model. Moreover, the belief is updated upon the arrival of each new sensor measurement (the two sensors are not synchronized).

After the initialization steps for both the UKF and the measurement models of the lidar and radar, the time step ($\Delta t$) is computed as shown in Figure 4 and, at the same time, the sigma points ($\mathcal{X}_{k|k}$) are created using Equation (1). The predicted sigma points ($\mathcal{X}_{k+1|k}$) for the next time step are calculated using Equation (2), utilizing the object’s model given by Equation (9). Then, the predicted state mean vector ($x_{k+1|k}$) and its covariance matrix ($P_{k+1|k}$) are calculated by applying Equation (3).

For the update step in the fusion process, there are two branches: the lidar branch and the radar branch. The most recently received measurement decides which branch the workflow follows. If a radar signal is received, the predicted measurement sigma points ($\mathcal{Z}_{k+1|k}$) are computed based on the model in Equation (14) and from $\mathcal{X}_{k+1|k}$ in Equation (5). Then, the predicted measurement mean ($z_{k+1|k}$), its covariance ($S$), and the noise covariance ($R$) are computed based on Equation (6). The $R$ covariance matrix is further defined in Equation (16), in which the three standard deviations are the noise SD of the object yaw rate, the noise SD of the object heading, and the noise SD of the object radial distance, respectively.

The cross-correlation matrix ($T$) is then computed from the predicted state sigma points and state mean ($\mathcal{X}_{k+1|k}$ and $x_{k+1|k}$) together with their measurement-space counterparts ($\mathcal{Z}_{k+1|k}$ and $z_{k+1|k}$, respectively) using Equation (7). $T$ is then used to compute the UKF gain ($K$), which is consequently used to compute the updated state vector ($x_{k+1|k+1}$) and covariance matrix ($P_{k+1|k+1}$), as shown in Equation (7). Then, $x_{k+1|k+1}$ and $P_{k+1|k+1}$ are used to generate the new sigma points in the next iteration, and so on.

If, instead, a lidar signal is received, the predicted measurement sigma points ($\mathcal{Z}_{k+1|k}$) are computed based on the linear lidar measurement model in Equation (17) and directly from $\mathcal{X}_{k+1|k}$. Then, the predicted measurement mean ($z_{k+1|k}$), its covariance ($S$), and the noise covariance ($R$) are computed based on Equation (6), with more detail of $R$ given in Equation (17), in which the two standard deviations are the noise SDs of the object’s $p_x$ and $p_y$ positions, respectively. As in the radar branch, the cross-correlation matrix ($T$), the UKF gain ($K$), and the covariance matrix ($P$) are computed from Equation (7).

7. Point-Cloud Clustering and Association

The point cloud resulting from the application of the UKF fusion algorithm presented in Figure 4 offers details about the objects in the surroundings of the egocar. To extract each object’s information (geometrical shape and pose), clustering is employed on the UKF point cloud to characterize each object in a source-point model form, which significantly lowers the computation overhead and memory requirements.

The clustering in this work is performed using the GB-DBSCAN algorithm, a variation of the original DBSCAN algorithm [47], which is an unsupervised learning algorithm that groups densely packed data points together. Two parameters are used to tune the DBSCAN algorithm and define the allowed density. The first parameter, “ε”, defines the allowed radial distance from the point under evaluation, “p”. The second parameter, “minPts”, determines the lowest number of detection points that must be located within a distance “ε” from “p”, including “p” itself, to form a cluster. Therefore, the density of points to be grouped together to form a cluster is determined by the proper selection of “ε” and “minPts”. However, if the objects to be detected take several topologies (other than circular), as is the case with road objects, the DBSCAN algorithm is not enough. An improvement came from Dietmayer et al. [48], who proposed not using fixed parameters like “ε” and “minPts” in the GB-DBSCAN but, instead, forming a polar grid that takes into account the angular and radial resolution of the sensor. The search area is not necessarily a circular one with a fixed radius but can be a dynamic elliptic one. Therefore, this algorithm is very appropriate for pole-like objects, as their distinctive feature is that they produce a high density of detection points, much higher than their surroundings.
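To make the roles of “ε” and “minPts” concrete, the sketch below implements the original fixed-parameter DBSCAN in Python. It is not the grid-based GB-DBSCAN variant adopted in this work (the polar grid and elliptic search area are omitted), and the names `region_query` and `dbscan` as well as the sample points are hypothetical.

```python
import math


def region_query(points, idx, eps):
    """Indices of all points within distance eps of points[idx] (including idx itself)."""
    return [j for j, q in enumerate(points) if math.dist(points[idx], q) <= eps]


def dbscan(points, eps, min_pts):
    """Plain DBSCAN. Returns one label per point: 0, 1, ... for clusters, -1 for noise."""
    labels = [None] * len(points)  # None means "not visited yet"
    cluster = 0
    for i in range(len(points)):
        if labels[i] is not None:
            continue
        neighbors = region_query(points, i, eps)
        if len(neighbors) < min_pts:
            labels[i] = -1  # provisionally noise; may later become a border point
            continue
        labels[i] = cluster
        queue = [j for j in neighbors if j != i]
        while queue:
            j = queue.pop()
            if labels[j] == -1:
                labels[j] = cluster  # noise reached from a core point becomes a border point
            if labels[j] is not None:
                continue  # already assigned; do not expand again
            labels[j] = cluster
            j_neighbors = region_query(points, j, eps)
            if len(j_neighbors) >= min_pts:
                queue.extend(j_neighbors)  # j is a core point, so keep growing the cluster
        cluster += 1
    return labels


detections = [(0.0, 0.0), (0.1, 0.0), (0.0, 0.1),
              (5.0, 5.0), (5.1, 5.0), (5.0, 5.1),
              (20.0, 20.0)]
labels = dbscan(detections, eps=0.3, min_pts=3)
print(labels)
```

In this toy input, the two tight groups stand in for dense pole returns and the isolated detection is rejected as noise, which is the behavior the density parameters are tuned to achieve.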

The outcome of the GB-DBSCAN algorithm is a coarse clustering of the UKF fusion data. The application of the RANSAC algorithm fine-tunes these clusters and associates geometrical shape proposals with them [36]. For the pole-like landmarks, the most appropriate geometrical shape is the circular one, which is fitted to all the points in each cluster. The RANSAC algorithm then finds the parameters of each fitted circle (the radius ($r$) and the centroid ($c_x$, $c_y$)), which will represent a pole landmark, by finding the optimal solution of Equation (18).
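A generic RANSAC circle fit of the kind described above can be sketched in Python as follows. Since Equation (18) is not reproduced in this text, the sketch assumes the textbook procedure: repeatedly fit a circle through three randomly sampled points and keep the circle with the most inliers. The function names, the tolerance, the iteration count, and the fixed random seed are all illustrative assumptions.

```python
import math
import random


def circle_from_three(p1, p2, p3):
    """Circle (cx, cy, r) through three points, or None if they are (nearly) collinear."""
    ax, ay = p1
    bx, by = p2
    cx, cy = p3
    d = 2.0 * (ax * (by - cy) + bx * (cy - ay) + cx * (ay - by))
    if abs(d) < 1e-12:
        return None
    a2, b2, c2 = ax * ax + ay * ay, bx * bx + by * by, cx * cx + cy * cy
    ux = (a2 * (by - cy) + b2 * (cy - ay) + c2 * (ay - by)) / d
    uy = (a2 * (cx - bx) + b2 * (ax - cx) + c2 * (bx - ax)) / d
    return ux, uy, math.hypot(ax - ux, ay - uy)


def ransac_circle(points, iterations=100, tol=0.05, seed=0):
    """Return the sampled circle with the most inliers, plus its inlier count."""
    rng = random.Random(seed)  # fixed seed so the sketch is repeatable
    best, best_inliers = None, -1
    for _ in range(iterations):
        model = circle_from_three(*rng.sample(points, 3))
        if model is None:
            continue
        cx, cy, r = model
        # A point is an inlier if it lies within tol of the circle's circumference.
        inliers = sum(1 for x, y in points if abs(math.hypot(x - cx, y - cy) - r) <= tol)
        if inliers > best_inliers:
            best, best_inliers = model, inliers
    return best, best_inliers


# Eight points on a circle centered at (1, 1) with radius 2, plus two outliers.
pole_points = [(1.0 + 2.0 * math.cos(k * math.pi / 4), 1.0 + 2.0 * math.sin(k * math.pi / 4))
               for k in range(8)]
pole_points += [(5.0, 5.0), (-4.0, 3.0)]
model, inliers = ransac_circle(pole_points)
print(model, inliers)
```

A production version would typically refit the circle to all inliers by least squares after the sampling loop; that refinement is left out to keep the sketch short.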

Once the pole-like landmarks are identified in the source UKF fusion data, the data association step takes place by linking these identified landmarks with their matched counterpart in the target (the supplied reference map). The employed PF relies heavily on precision in this step. The association is implemented using the ICP algorithm [42]. The standard ICP searches the whole point clouds in both the source and the target; however, here, only the centroids are considered during the objects’ matching process, which, in return, saves a significant amount of memory and processing overhead.

The ICP algorithm is composed of two phases that run iteratively until convergence is reached. In our case, we have a set X representing the source points (UKF data) and another set Y representing the target points (a point-cloud map). The first phase matches each point in X with the closest point in Y. The second phase finds the optimal transform (X→Y) given the matched association. Storing the X and Y sets in a KD tree data structure [49] is crucial for the efficient distance-based matching performed by the ICP.

$x_i$ and $y_i$ are two matched points from the X and Y sets, respectively. The 2D ICP algorithm finds the rotation angle “$\theta$” and the translation parameter “t” that minimize the sum of the squared distances between the target and the source points, as presented by Equation (19). Note that the rotation matrix $R$ uses angle “$\theta$” as a variable.
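For already-matched point pairs, the second ICP phase has a closed-form solution in 2D. The Python sketch below shows that alignment step only, assuming the usual least-squares objective (sum of squared distances after rotating and translating the source points); the KD-tree matching phase and the outer iteration are omitted, and the name `best_rigid_transform` is hypothetical.

```python
import math


def best_rigid_transform(source, target):
    """Rotation angle and translation minimizing sum |R(theta) x_i + t - y_i|^2 for matched pairs."""
    n = len(source)
    src_cx = sum(p[0] for p in source) / n
    src_cy = sum(p[1] for p in source) / n
    tgt_cx = sum(q[0] for q in target) / n
    tgt_cy = sum(q[1] for q in target) / n
    dot = cross = 0.0
    for (px, py), (qx, qy) in zip(source, target):
        ax, ay = px - src_cx, py - src_cy  # centered source point
        bx, by = qx - tgt_cx, qy - tgt_cy  # centered target point
        dot += ax * bx + ay * by
        cross += ax * by - ay * bx
    theta = math.atan2(cross, dot)
    c, s = math.cos(theta), math.sin(theta)
    # The translation maps the rotated source centroid onto the target centroid.
    t = (tgt_cx - (c * src_cx - s * src_cy), tgt_cy - (s * src_cx + c * src_cy))
    return theta, t


# Build a target set by rotating the source by 30 degrees and shifting it by (1, -2).
true_theta, true_t = math.radians(30.0), (1.0, -2.0)
source = [(0.0, 0.0), (2.0, 0.0), (2.0, 1.0), (0.0, 3.0)]
target = [(math.cos(true_theta) * x - math.sin(true_theta) * y + true_t[0],
           math.sin(true_theta) * x + math.cos(true_theta) * y + true_t[1])
          for x, y in source]
theta, t = best_rigid_transform(source, target)
print(math.degrees(theta), t)
```

In a full ICP loop, this step would alternate with nearest-neighbor matching until the transform stops changing.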

The co-ordinate system must be unified between the egocar co-ordinates ($x_c$ and $y_c$) and the reference map co-ordinates ($x_m$ and $y_m$) to perform the data association correctly. Therefore, the homogeneous transformation is used (in the form of a transformation matrix), as given by Equation (20). The translation and rotation are performed using the map particle/egocar co-ordinates ($x_p$ and $y_p$) and the rotation angle $\theta$.
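The homogeneous transformation from egocar to map co-ordinates can be sketched as below. The Python function assumes the standard 2D form (rotate the observation by the heading angle, then translate by the particle position); the function name `car_to_map` and the argument names are illustrative and may differ from the notation of Equation (20).

```python
import math


def car_to_map(x_c, y_c, x_p, y_p, theta):
    """Map an observation (x_c, y_c) in the car frame into the map frame.

    (x_p, y_p) is the particle (car) position in the map and theta its heading in radians.
    """
    x_m = x_p + math.cos(theta) * x_c - math.sin(theta) * y_c
    y_m = y_p + math.sin(theta) * x_c + math.cos(theta) * y_c
    return x_m, y_m


# A car at (4, 5) heading at -90 degrees observes a landmark at (2, 2) in its own frame.
x_m, y_m = car_to_map(2.0, 2.0, 4.0, 5.0, -math.pi / 2)
print(x_m, y_m)
```

Applying this transform to every observation of a particle puts the observations and the reference-map landmarks in the same frame, so that distances between them are meaningful.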

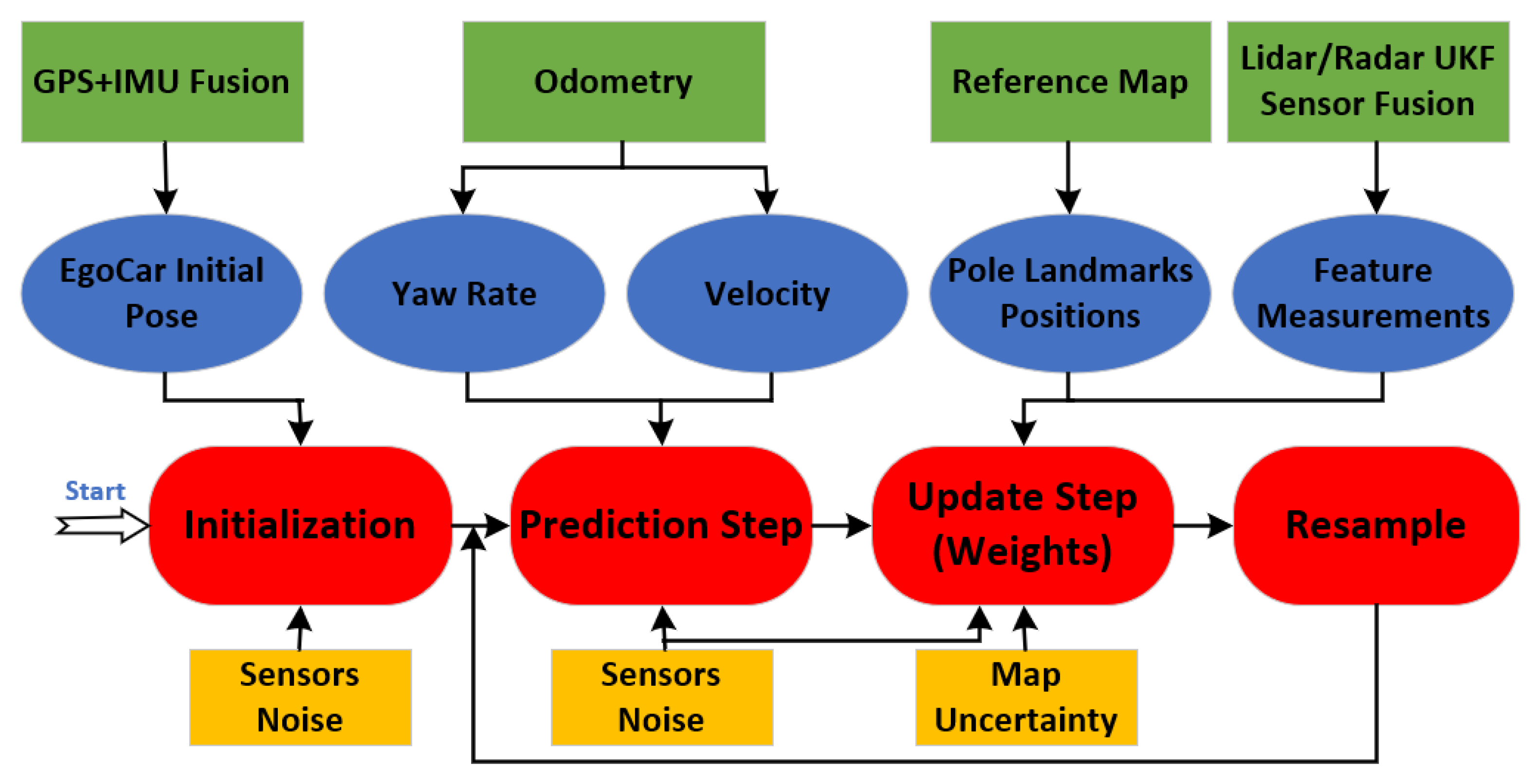

8. Details of the Particle Filter

For a stochastic process that has noisy observations $z_t$, the posterior distribution $bel(x_t)$ could be represented by a finite set of particles. This is considered an approximate implementation of the Bayesian filter in a recursive mode with a normalization factor $\eta$, as given by Equation (21).

The higher the number of particles $M$, the more accurate the representation of the belief distribution $bel(x_t)$, where t denotes the time step at which the state of the set of particles is considered, as given by Equation (22).

The actual state (the optimal solution) will be one of the particles included in the set $\mathcal{X}_t$ at time t. Therefore, each particle $x_t^{[m]}$ represents a hypothesis for the optimal solution. The implementation of the PF employed in this work is presented in Algorithm 1. Moreover, the workflow of the PF is illustrated in Figure 5. Upon receipt of a new measurement (odometry) signal ($u_t$) or a pole-like object measurement update ($z_t$) from the UKF, a new search for an optimum egocar pose is initiated.

| Algorithm 1. Workflow of the employed PF. |
| --- |
| Procedure Particle Filter ($\mathcal{X}_{t-1}$, $u_t$, $z_t$): |
| Input: Set of particles at time $t-1$ ($\mathcal{X}_{t-1}$), control inputs $u_t$, and a set of measurements $z_t$. |
| Output: The updated set of particles at time $t$ ($\mathcal{X}_t$). |
| Begin |
| End. |

The PF implementation is a predict–update cycle, in which the prediction portion of the cycle is implemented by step (2) in the procedure. For each particle (out of the $M$ particles randomly generated at the initiation phase of the procedure), which represents a possible egocar pose, two values are calculated: the first is the state hypothesis ($x_t^{[m]}$), and the second is the state transition distribution $p(x_t \mid u_t, x_{t-1}^{[m]})$, which is calculated using the object’s motion model explained in Section 5. A weight is created for each particle based on the importance of its generated state hypothesis relative to the state hypotheses of the other particles, as given in Equation (23). Each weight takes the form of a multivariate Gaussian probability density function that is computed for each observation, and the likelihoods of all the observations are combined by multiplying them together.

In Equation (23), $J$ is the count of measurements for particle $m$, $\Sigma$ is the measurement covariance matrix, $\mu_t^{j}$ is the mean predicted measurement for the pole corresponding to the $j$-th observation at step $t$, and $z_t^{j}$ is the $j$-th pole observation for particle $m$ at step $t$.
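The weight computation can be illustrated with the following Python sketch. It assumes a two-dimensional observation with a diagonal covariance (independent noise in x and y), which may be simpler than the covariance used in Equation (23); the names `gaussian_2d` and `particle_weight` and the sample values are hypothetical.

```python
import math


def gaussian_2d(obs, mean, sigma_x, sigma_y):
    """Bivariate Gaussian density with independent x and y components."""
    dx, dy = obs[0] - mean[0], obs[1] - mean[1]
    norm = 1.0 / (2.0 * math.pi * sigma_x * sigma_y)
    exponent = dx * dx / (2.0 * sigma_x ** 2) + dy * dy / (2.0 * sigma_y ** 2)
    return norm * math.exp(-exponent)


def particle_weight(observations, predicted, sigma_x, sigma_y):
    """Product of the per-observation likelihoods for one particle."""
    weight = 1.0
    for z, mu in zip(observations, predicted):
        weight *= gaussian_2d(z, mu, sigma_x, sigma_y)
    return weight


# Map positions of two poles already associated with the two observations.
poles = [(5.0, 0.0), (0.0, 5.0)]
near = particle_weight([(5.1, 0.0), (0.0, 4.9)], poles, 0.3, 0.3)  # good pose hypothesis
far = particle_weight([(6.0, 1.0), (1.0, 6.0)], poles, 0.3, 0.3)   # poor pose hypothesis
print(near, far)
```

Because the likelihoods are multiplied, a single badly matched pole can drive a particle's weight toward zero; implementations with many observations often sum log-likelihoods instead to avoid numerical underflow.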

After that, the set of particles ($\mathcal{X}_t$) is resampled with probability proportional to the previously produced weights ($\eta\, w_t^{[m]}$), where $\eta$ is a normalization coefficient, generating new particles; hence, $bel(x_t)$ (the updated posterior approximation) is produced. The resampled set will usually contain multiple copies of the strongest particles, which replace the weak particles (those with small weights, i.e., the less important ones) that are left out. The algorithm continues in this way until the set contains copies of a single particle (convergence), which represents the solution, i.e., the required egocar pose.
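A minimal sketch of weight-proportional resampling is given below in Python. It uses simple multinomial resampling via the standard library, which is one of several possible schemes and is not necessarily the one used in this work; the function name `resample` and the example particles are hypothetical.

```python
import random


def resample(particles, weights, rng):
    """Draw len(particles) new particles with probability proportional to their weights."""
    total = sum(weights)
    probabilities = [w / total for w in weights]  # 1 / total plays the role of the normalizer
    return rng.choices(particles, weights=probabilities, k=len(particles))


rng = random.Random(42)
particles = [(0.0, 0.0, 0.0), (1.0, 1.0, 0.1), (2.0, 2.0, 0.2)]

# When one particle carries all the weight, every resampled particle is a copy of it.
converged = resample(particles, [0.0, 0.0, 1.0], rng)
print(converged)

# With mixed weights, strong particles tend to be duplicated and weak ones dropped.
mixed = resample(particles, [0.1, 0.1, 0.8], rng)
print(mixed)
```

Lower-variance schemes such as systematic resampling are common alternatives when particle diversity matters.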

The convergence indicator for the PF is the weighted mean error computed over all the particles, as shown in Equation (24). It is obtained by calculating the RMSE between the ground truth and the state of each particle, multiplying it by the particle’s weight, summing the products over all particles, and dividing the result by the sum of all the weights.
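The weighted mean error described above can be sketched in Python as follows. The per-particle error is taken here as the root of the mean squared difference across the pose components, which is one reading of the text and an assumption about Equation (24); the function name `weighted_mean_error` is hypothetical.

```python
import math


def weighted_mean_error(particles, weights, ground_truth):
    """Weight-averaged RMSE between each particle's state and the ground-truth state."""
    weighted_sum = 0.0
    for state, weight in zip(particles, weights):
        squared = [(s - g) ** 2 for s, g in zip(state, ground_truth)]
        rmse = math.sqrt(sum(squared) / len(squared))
        weighted_sum += weight * rmse
    return weighted_sum / sum(weights)


particles = [(0.0, 0.0), (3.0, 4.0)]
weights = [1.0, 3.0]
error = weighted_mean_error(particles, weights, (0.0, 0.0))
print(error)
```

As the particles collapse onto the true pose, this indicator approaches zero regardless of how the weights are scaled, since the division by the weight sum normalizes them.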

9. Realization of the RTMCL

The RTMCL is developed and coded in the C++ programming language [50], which is well known for its high performance, especially in real-time applications [51]. The developed code runs on the Ubuntu Linux operating system [52]. Moreover, the development used the Eigen numerical package to perform all the vector and matrix computations involved in the execution of the objects’ model and the prediction and update steps [53].

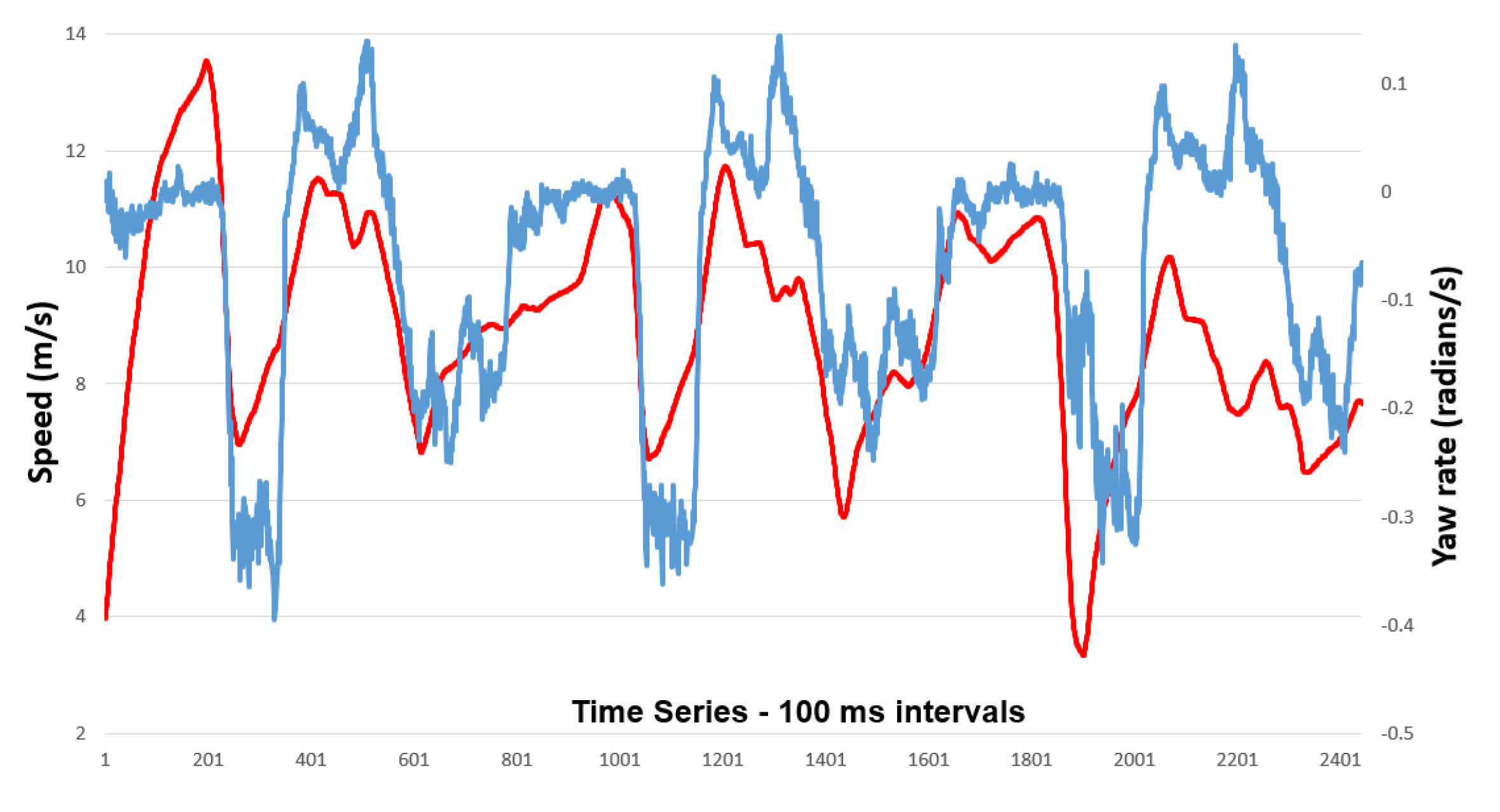

The sensor data are processed using the NVIDIA DRIVE AGX platform. The lidar is a Velodyne VLP-16 (16 channels, 100 m range, 360° horizontal field of view (FoV), 30° vertical FoV, 300,000 points/s), and the radar is a Continental ARS430 (77 GHz, 250 m range, wide azimuth and elevation coverage, 0.1 m accuracy).

To execute the motion models for several objects accurately (given by Equations (9)–(11)), various noise parameters must be carefully determined. Their fine-tuned values are presented in Table 1.

To evaluate the consistency of the UKF design, the NIS measure averaged over time is employed [54] to fine-tune the above noise parameters. Moreover, to make sure the UKF design is unbiased and consistent, the estimation error is aggregated and its mean value is calculated. This value should be around zero, and the UKF’s actual MSE should correspond to the UKF-computed state covariance. Equation (25) calculates the NIS value at each time sample $k$ and then uses a moving N-sample window of measurements to compute its average value.
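The NIS check can be sketched in Python as below, assuming the standard definition (the innovation weighted by the inverse of the predicted measurement covariance). To stay dependency-free, the sketch is restricted to a two-dimensional measurement with an explicit 2 x 2 inverse; the names `nis_2d` and `MovingAverage` and the sample values are hypothetical.

```python
from collections import deque


def nis_2d(z, z_pred, S):
    """Normalized innovation squared for a 2D measurement: d^T S^-1 d with d = z - z_pred."""
    d0, d1 = z[0] - z_pred[0], z[1] - z_pred[1]
    det = S[0][0] * S[1][1] - S[0][1] * S[1][0]
    inv = [[S[1][1] / det, -S[0][1] / det],
           [-S[1][0] / det, S[0][0] / det]]
    return d0 * (inv[0][0] * d0 + inv[0][1] * d1) + d1 * (inv[1][0] * d0 + inv[1][1] * d1)


class MovingAverage:
    """Average of the most recent n values."""

    def __init__(self, n):
        self.window = deque(maxlen=n)

    def update(self, value):
        self.window.append(value)
        return sum(self.window) / len(self.window)


nis = nis_2d([1.0, 2.0], [0.0, 0.0], [[1.0, 0.0], [0.0, 4.0]])
average = MovingAverage(2)
history = [average.update(v) for v in (2.0, 4.0, 6.0)]
print(nis, history)
```

For a consistent filter, the NIS follows a chi-squared distribution with as many degrees of freedom as the measurement dimension, so a windowed average far above or below that dimension suggests that the noise parameters are under- or overestimated.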

The UKF performance depends heavily on how properly it is initialized [54]. The estimated state vector ($x$) and its estimated state covariance matrix ($P$) are considered the most important initialized variables. $p_x$ and $p_y$ (the first and second terms of $x$ in Equation (8)) are simply initialized from the earliest unrefined sensor measurements. For the following three terms of $x$, trial and error together with some intuition is used to initialize these variables, as given in Table 2. Moreover, the $P$ matrix is constructed as a diagonal matrix, as given in Equation (26), and includes the covariance values of the estimate of each term in $x$.

The RMSE, as given in Equation (27), is used to evaluate the performance of the UKF, which is defined as how close the estimated ranges are to the true ranges (the ground truth). An N-sample moving window of estimates is used to calculate this metric. In Equation (27), the ground-truth state vector is generated by the motion driving simulator [55] or supplied as training data during the UKF design phase, and the estimated state vector is the UKF’s output.

Regarding the PF, like the UKF, the proper initialization is very critical for its successful execution. The initialization process is as follows:

- (a) The PF particles’ count usually falls in the range from 100 to 1000 [20], as per the literature [43]. The higher the particles’ count, the higher the accuracy but the slower the computation, and vice versa. Therefore, a compromise must be made. After many trials, the particle count is selected so that it shows acceptable real-time performance with the required precision.

- (b) The result of the GPS/IMU fusion is the initial pose of the egocar, which will be used by the PF in the initialization of all the particles’ state vectors, as given in Equation (28). In Equation (28), the initialized pose of each particle is represented by its $x$ position, $y$ position, and heading $\theta$; the standard deviations of the noise of the GPS/IMU fusion are specified for each of these three pose variables; and artificial noise amounts are added to each pose variable. The artificial noise adds randomization to these variables to improve the chances of convergence of the PF. Table 3 lists the values used to initialize these parameters.

- (c) The particles’ weights, which quantify their importance, are initialized using the uniform distribution.

- (d) The pole-shaped landmarks in the reference map that are used by the RTMCL algorithm are represented by Gaussian distributions for both their x and y positions. These distributions are used to model the uncertainties of these positions. The values of their standard deviations are shown in Table 3.
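The initialization steps listed above can be sketched in Python as follows. The sketch draws each particle around the GPS/IMU pose with one Gaussian standard deviation per pose variable and assigns uniform weights. How Equation (28) combines the GPS/IMU noise with the artificial noise is not recoverable from this text, so a single combined standard deviation per variable is assumed; the function name `init_particles`, the particle count, and all numeric values are hypothetical.

```python
import random


def init_particles(pose, sigmas, count, rng):
    """Sample `count` particles around an initial pose (x, y, theta) and give them uniform weights."""
    x, y, theta = pose
    sigma_x, sigma_y, sigma_theta = sigmas  # assumed combined SD per pose variable
    particles = [(rng.gauss(x, sigma_x), rng.gauss(y, sigma_y), rng.gauss(theta, sigma_theta))
                 for _ in range(count)]
    weights = [1.0 / count] * count  # uniform initial importance
    return particles, weights


rng = random.Random(7)
particles, weights = init_particles((10.0, -3.0, 0.5), (0.3, 0.3, 0.01), 100, rng)
print(particles[0], weights[0])

# With zero noise, every particle coincides with the initial pose.
exact, _ = init_particles((10.0, -3.0, 0.5), (0.0, 0.0, 0.0), 5, rng)
print(exact[0])
```

Spreading the particles with some noise, rather than placing them all on the GPS/IMU pose, gives the filter distinct hypotheses to weigh against the first landmark observations.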

Equation (29) presents the aggregated mean absolute error (MAE) of each estimated pose variable compared to the ground truth. This metric is used to evaluate the performance of the PF. It is computed by employing an N-measurement window that moves across the incoming pose estimates. In Equation (29), the ground-truth variables are generated by the motion driving simulator [56] or provided as training data throughout the particle filter design phase, and the estimated variables are the best-estimated variables of the PF particles’ poses.
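A windowed MAE of this kind can be sketched in Python as below. The class name `WindowedMAE`, the window length, and the sample poses are hypothetical, and the exact form of Equation (29) may differ.

```python
from collections import deque


class WindowedMAE:
    """Mean absolute error per pose variable over the most recent n estimates."""

    def __init__(self, n):
        self.errors = deque(maxlen=n)  # old entries drop out as new ones arrive

    def update(self, estimate, truth):
        self.errors.append(tuple(abs(e - t) for e, t in zip(estimate, truth)))
        count = len(self.errors)
        # zip(*...) groups the stored errors by pose variable (x, y, theta).
        return tuple(sum(column) / count for column in zip(*self.errors))


mae = WindowedMAE(2)
truth = (0.0, 0.0, 0.0)
first = mae.update((1.0, 1.0, 0.0), truth)
second = mae.update((3.0, 1.0, 0.0), truth)
third = mae.update((5.0, 1.0, 0.0), truth)  # the first sample has left the window
print(first, second, third)
```

Keeping the error per variable, rather than collapsing it to one number, shows whether position or heading is the weaker part of the pose estimate.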

10. Testing and Evaluation Results

To fine-tune the hyperparameters of the RTMCL algorithm, extensive trial-and-error experiments have been carried out. For a proper assessment, numerical KPIs are proposed and implemented, as given by Equations (24), (26), and (28), to assess the RTMCL under several hyperparameter configurations.

Moreover, through an iterative tuning process, the performance of the RTMCL is evaluated on several test tracks under various sets of hyperparameters.

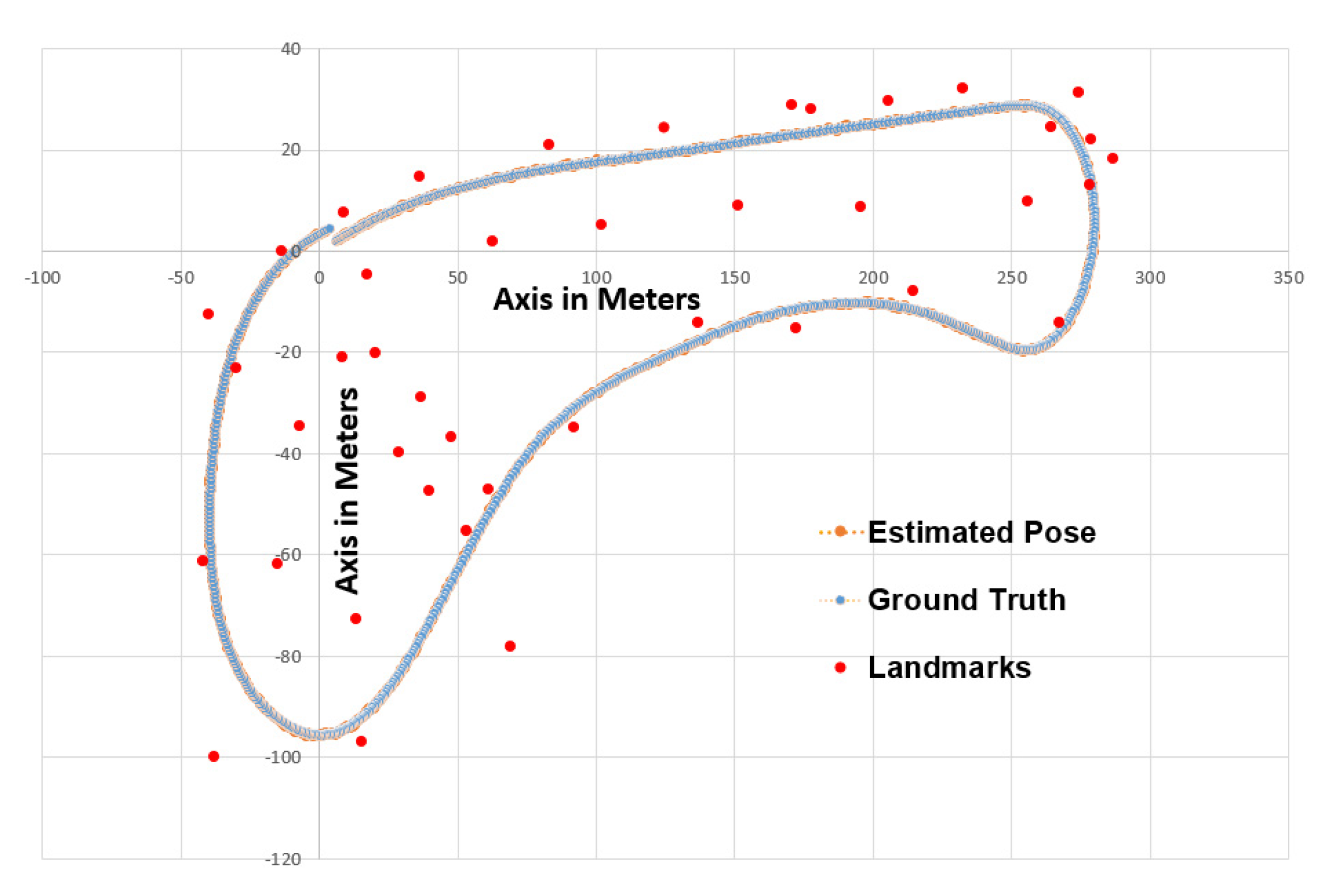

Figure 6 presents an example of one of the test tracks employed. The track is 754 m long and has curves at several points. It also contains 42 pole-like landmarks to sufficiently emulate an urban driving environment.

The results of testing the UKF that fuses the lidar and radar data are presented in Table 4. Several objects are detected, including pole-like landmarks, cars, cyclists, and pedestrians. The five state variables are estimated, and their RMSE values are listed in Table 5 as a KPI (Equation (26)). This KPI compares the estimated value of each state variable with its ground truth and aggregates the resulting error. A lower value of this KPI indicates better detection.
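As a minimal sketch of such a KPI, the function below computes the RMSE of each state variable over a sequence of estimates. It assumes the standard RMSE definition; the function name `rmse` and the sample values are hypothetical and are not taken from Table 5.

```python
import math

def rmse(estimates, ground_truth):
    """Return the RMSE of each state variable over all time steps.

    estimates and ground_truth are equally long sequences of state tuples.
    """
    n = len(estimates)
    dims = len(estimates[0])
    return [
        math.sqrt(
            sum((e[k] - g[k]) ** 2 for e, g in zip(estimates, ground_truth)) / n
        )
        for k in range(dims)
    ]

# Two time steps of a two-variable state (placeholder numbers).
errors = rmse([(1.0, 0.0), (3.0, 0.0)], [(0.0, 0.0), (0.0, 0.0)])
```

Because the errors are squared before averaging, a few large deviations raise the RMSE more than they would raise a mean absolute error.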

The UKF is tested in three configurations: with lidar signals only, with radar signals only, and with the fusion of the lidar and the radar. This comparison evaluates how significant the fusion is for the accuracy of object detection and tracking.

Table 5 presents the results of the three test configurations on the bicycle track. Fusion reduces the RMSE of every state variable and makes a big difference in the accuracy of detection. For example, the error of the detected position along the x-axis is 60% lower than with the lidar alone and 70% lower than with the radar alone. Similarly, the error of the detected velocity along the x-axis is 30% lower than with the lidar alone and 26% lower than with the radar alone. Furthermore, the NIS KPI is computed for the same three cases and shows that the fusion significantly improves the UKF's consistency: the share of NIS values above the 95% threshold is 31% lower than in the lidar-only case and 38.5% lower than in the radar-only case.
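The NIS consistency check can be illustrated with a short sketch. It assumes the usual definition of the normalized innovation squared (the innovation weighted by the inverse of the innovation covariance) and the standard 95% chi-square quantiles for 2 and 3 degrees of freedom; the function names and the sample values are hypothetical.

```python
# 95% chi-square quantiles, indexed by measurement dimension
# (for example, 2 for a planar position and 3 for range, bearing, range rate).
CHI2_95 = {2: 5.991, 3: 7.815}

def nis_2d(innovation, S):
    """NIS = y^T S^-1 y for a 2-D innovation y and a 2x2 covariance S."""
    (a, b), (c, d) = S
    det = a * d - b * c
    y0, y1 = innovation
    # Closed-form inverse of a 2x2 matrix: (1/det) * [[d, -b], [-c, a]].
    return (y0 * (d * y0 - b * y1) + y1 * (-c * y0 + a * y1)) / det

def fraction_above(nis_values, dof):
    """Share of NIS samples that exceed the 95% threshold."""
    threshold = CHI2_95[dof]
    return sum(v > threshold for v in nis_values) / len(nis_values)

sample = nis_2d((1.0, 2.0), ((1.0, 0.0), (0.0, 4.0)))
share = fraction_above([1.0, 6.5, 2.0, 7.0], dof=2)
```

For a consistent filter, roughly 5% of the NIS samples are expected to exceed the threshold; a much larger share suggests that the filter underestimates its uncertainty.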

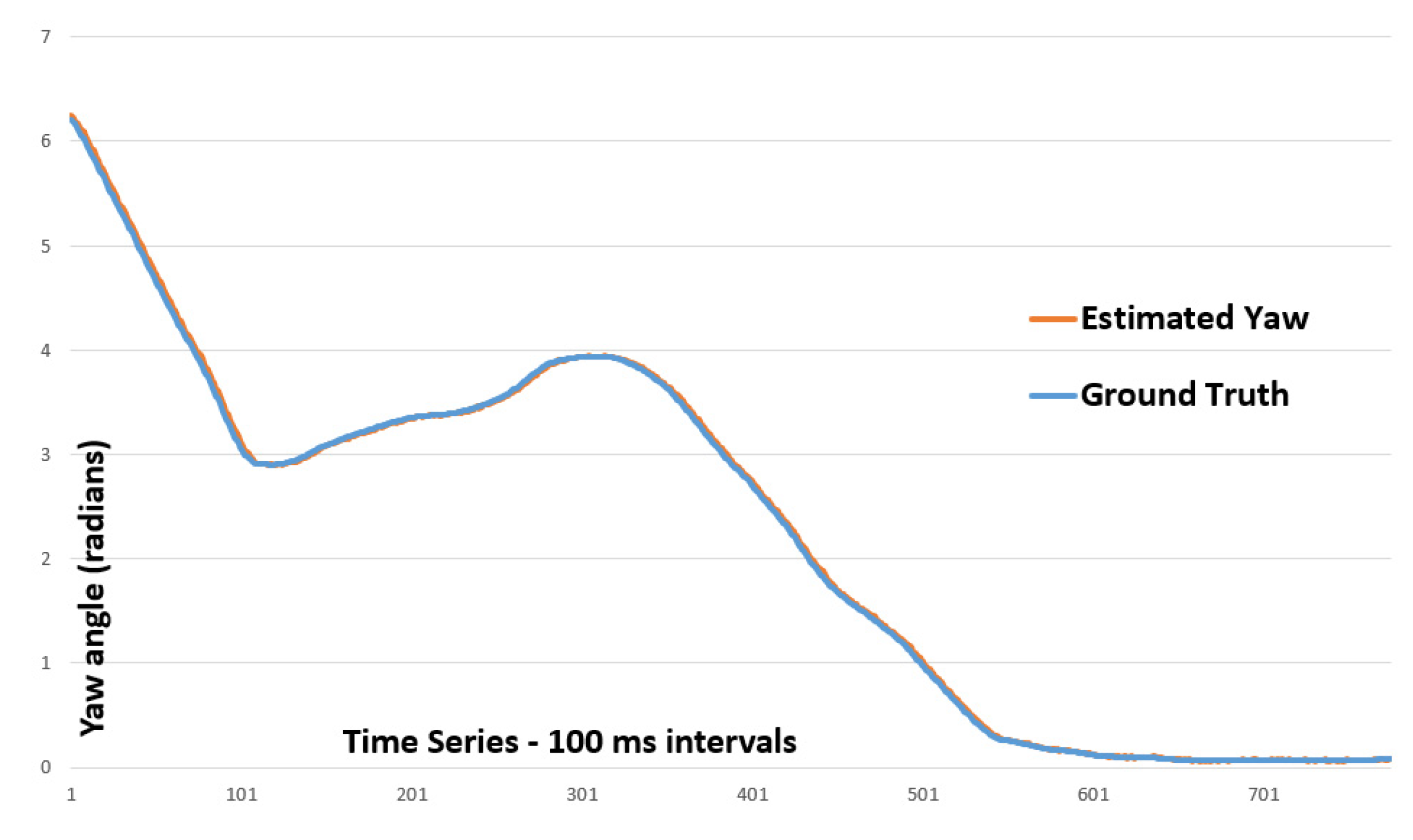

Figure 6, Figure 7, and Figure 8 illustrate an example of testing the whole RTMCL pipeline, which combines the PF and the UKF with the probabilistic reference map. They present the simulation results of the egocar driving on the test track shown in Figure 6. The GB-DBSCAN is used in the data association step for the localization of the egocar on the global map.

Figure 7 and Figure 8 show the ground truth and the estimated egocar pose values, which overlap because the computed errors are small, as reported in Table 6. The PF performance is evaluated using the RMSE KPI given in Equation (28). This KPI is computed for the three pose variables. It evaluates each estimated value against its ground truth and aggregates the resulting errors; lower KPI values indicate better PF performance. Several particle counts are tested to choose the most appropriate configuration for the PF and to test its performance in real time. Table 6 shows that the minimum acceptable count is around 25 particles; below this number, the PF starts to diverge. However, to gain robustness and further improve the accuracy, 50 particles are selected in this work, as higher counts add little accuracy at the expense of a much higher execution time. A count of 50 particles is therefore considered a good balance between robustness and execution cost (real-time performance), and it converged in all of the extensive trials performed.

The uncertainties in the reference map are modeled by the standard deviations associated with the pole-like landmarks. The results of using the uncertainties in the map positions are reported in Table 7. Several standard deviations are tested to show how strongly they affect the final egocar pose estimate produced by the PF. The table shows that the RTMCL can handle a significant amount of uncertainty in the landmark positions, which can reach up to 2.0 m, while the computed error in the egocar localization remains below 30 cm.

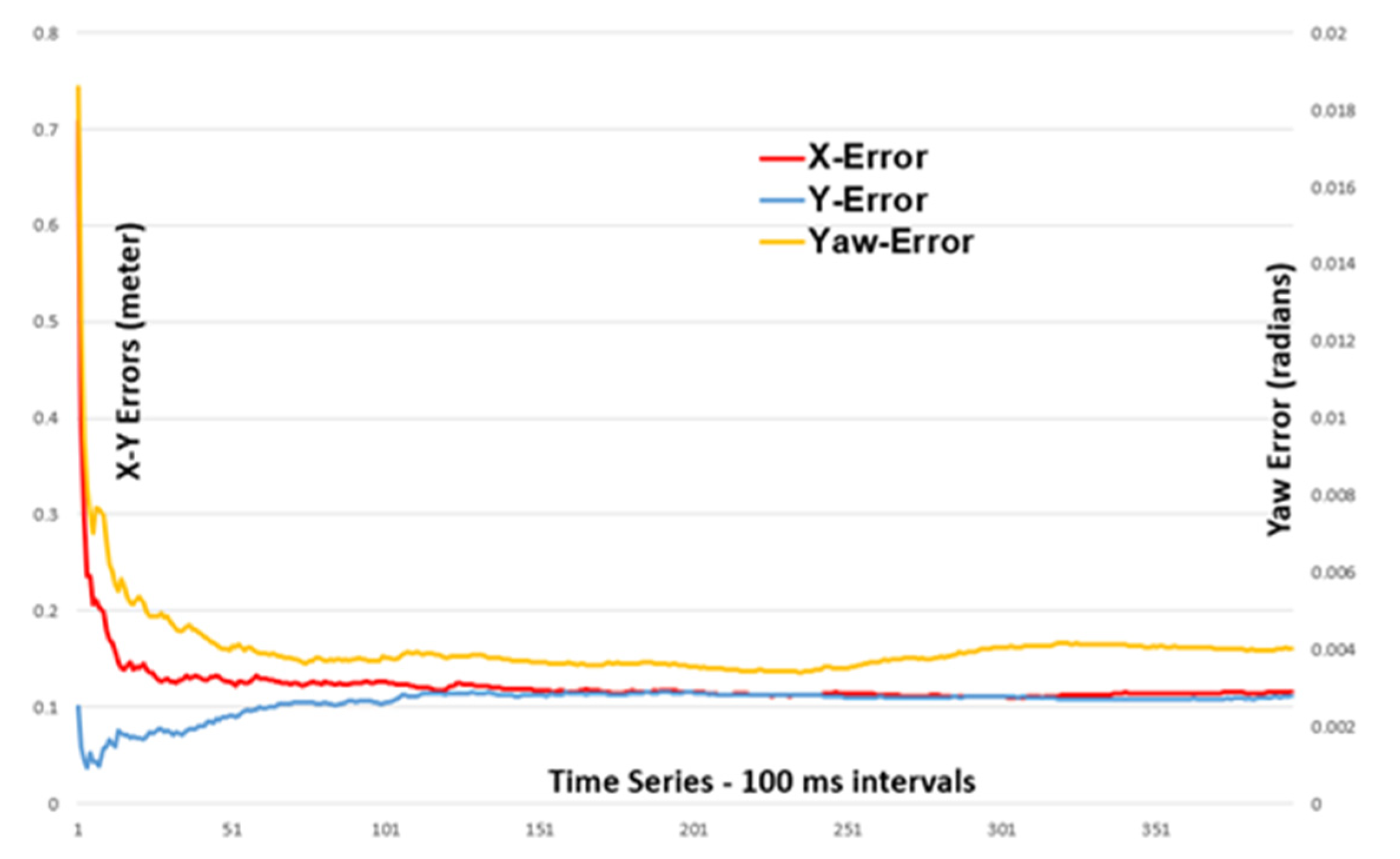

The performance of the PF during the start-up phase is crucial for its convergence, stability, and later performance. Therefore, Figure 9 presents the start-up segment of the PF with 50 particles until it stabilizes (after around 100 time steps). As an indicator of the robustness of the PF, Figure 10 presents the computed particle weights throughout a single lap on the test track. The best weight at each time step is significantly higher than the average weight, which is considered a good indicator of robustness [57].

It is observed that there is an inverse relation between these weight values (best and average) and the number of detected poles. Equation (30) gives a streamlined form of Equation (22), in which each weight is computed as the product of the observation likelihoods of the pole-like landmarks, each represented by a multivariate Gaussian probability density function. Consequently, there is a suitable range for the number of observed poles: if this number is high, there is a chance that one or more poles have small Gaussian probability density values that bring down the whole product and, with it, the associated weight. After many trials, the most appropriate count of identified poles at each time step for the smooth operation of the RTMCL is found to be in the range of 4 to 12 poles, as shown in Figure 11.
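The effect of the pole count on the weight can be illustrated with a small sketch. It assumes independent Gaussian distributions in x and y (a diagonal covariance), which matches how the landmark uncertainties are described but is not confirmed to be the exact form of Equation (30); the function names, the residuals, and the 1.0 m standard deviations are placeholders.

```python
import math

def gaussian_2d(dx, dy, sx, sy):
    """Density of a 2-D Gaussian with independent x and y components."""
    norm = 1.0 / (2.0 * math.pi * sx * sy)
    return norm * math.exp(-0.5 * ((dx / sx) ** 2 + (dy / sy) ** 2))

def particle_weight(residuals, sx, sy):
    """Product of the observation likelihoods of all matched poles.

    residuals holds one (dx, dy) pair per pole: observed minus map position.
    """
    weight = 1.0
    for dx, dy in residuals:
        weight *= gaussian_2d(dx, dy, sx, sy)
    return weight

good = (0.1, 0.1)        # a well-matched pole
outlier = (3.0, 3.0)     # a poorly matched pole
w_4 = particle_weight([good] * 4, 1.0, 1.0)
w_12 = particle_weight([good] * 12, 1.0, 1.0)
w_outlier = particle_weight([good] * 3 + [outlier], 1.0, 1.0)
```

With these placeholder values every factor is below 1, so the weight shrinks as poles are added, and a single poorly matched pole lowers it further. For very small standard deviations the density can exceed 1, so the trend depends on the chosen uncertainties.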

The real-time performance of the RTMCL is tested through extensive experimentation, which shows that its execution is fast enough. Table 8 presents the measured execution times of several tasks within the RTMCL pipeline on a modest computational platform: an Intel 1.6-GHz Core i5 with 8 GB of RAM. These measurements are collected for a single estimation of the egocar pose based on the detection of 12 poles.

The literature recommends that the localization pipeline iterate at a rate of 10 Hz to 30 Hz. Accordingly, the measured RTMCL execution time per iteration, which is 8.2 ms (122 Hz) in Table 8, comfortably meets even the upper limit of the recommendation.

Moreover, the values in Table 8 show that more than 50 pole detections can be employed while the UKF still meets the requirement of collecting the lidar and radar measurements at a rate of 30 Hz (33.3 ms cycle) according to [1]. All the above analyses show that the real-time performance of the RTMCL leaves enough headroom to improve its robustness by either increasing the number of particles or allowing more pole detections.
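The timing figures quoted above can be checked with simple arithmetic. The helper names below are hypothetical; the inputs are the 8.2 ms execution time and the 30 Hz target stated in the text.

```python
def rate_hz(period_ms):
    """Convert an execution time per iteration into an iteration rate."""
    return 1000.0 / period_ms

def headroom(exec_ms, target_hz):
    """How many iterations fit into one cycle at the target rate."""
    cycle_ms = 1000.0 / target_hz
    return cycle_ms / exec_ms

achieved = rate_hz(8.2)        # about 122 Hz
margin = headroom(8.2, 30.0)   # about 4 iterations per 33.3 ms cycle
```

In other words, one RTMCL iteration uses roughly a quarter of the 33.3 ms cycle at 30 Hz, which is the budget that additional particles or pole detections would draw from.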

Moreover, the results achieved in this work compare favorably with other works in the literature. The mean error for both the lateral and longitudinal positions (11 cm) is less than the 20 cm obtained in [58] using a pole-based reference map, a stereo camera system as the main sensor, GPS as a secondary sensor, a particle filter, and a Kalman filter.

Additionally, the mean error achieved by the RTMCL is also lower than the one achieved by [35], which is 16.4 cm (with an absolute max. error of 99.6 cm), obtained using pole-based mapping, a lidar fused with RTK-GPS, an IMU, a wheel encoder, a Bayesian filter, and the egocar's CTRV motion model.

Furthermore, the RTMCL outperforms the localization system proposed by [41], which fuses a digital map, GPS, and IMU, along with lanes and symbolic road markings (SRMs) recognized by a front camera, via a particle filter. That system produced a 95 cm longitudinal error and a 49 cm lateral error. The full comparative analysis is presented in Table 9.

Moreover, the proposed RTMCL is compared with other research works based on fusion techniques, as summarized in Table 10. The first is Gao et al.'s deep learning method for object detection in autonomous vehicles [59], which fuses vision and lidar data to enhance classification accuracy. The lidar point clouds are upsampled and converted into a depth feature map, which is integrated with the RGB data as the CNN input. The study employs a vehicle-mounted camera and lidar and uses an NVIDIA® GeForce GTX Titan X and an NVIDIA® Jetson TX1 (NVIDIA, Santa Clara, CA, USA) for offline detection and classification on a public dataset.

The second work is Gao et al.'s integrated framework for predicting cyclist trajectories at unsignalized intersections [60]. It infers intent using a Dynamic Bayesian Network (DBN) that considers motion, ego-vehicle, and environmental features. An LSTM with an encoder–decoder structure performs online trajectory prediction, achieving predictions within 0.9 s of entering the intersection. The approach outperforms the baseline methods (KF and DBN + KF) across various prediction horizons and can benefit intelligent vehicles in road-user protection and path planning. Future research considerations include addressing the cyclist–ego vehicle interaction and enhancing the robustness and interpretability of the method.

11. Conclusions

The proposed real-time Monte Carlo localization (RTMCL) approach for self-driving vehicles is a pipeline of tasks. It starts with the fusion of the GPS and the IMU to produce an unrefined egocar pose, which is used to initialize the particle filter. In the next task, a tailored UKF fuses the data collected from the radar and lidar sensors installed on the egocar. The output of the UKF contains information about objects in the surroundings of the egocar and, thus, needs further processing to detect and identify these objects. This task is carried out using the GB-DBSCAN and RANSAC algorithms, which produce the poses of the detected pole-like landmarks. In another task, the ICP algorithm associates the poles identified in the previous task with the ones embedded in the provided reference map. In the final task, a tailored particle filter uses the outputs of all the previous tasks to generate the fine-tuned egocar pose as the final localization, referenced to the co-ordinates of the global map.

The whole RTMCL is implemented in C++ to ensure real-time performance. The testing results show that the RTMCL reaches an accuracy of around 11 cm of mean error using merely 50 particles. The execution times recorded on affordable CPUs show that the number of particles can be increased further without much effect on the real-time performance.

Furthermore, testing the RTMCL pipeline has shown that it can run smoothly at 30 Hz while handling up to 50 poles at a time. Moreover, the RTMCL can successfully handle up to 2.0 m of uncertainty in the positions of the pole-like landmarks in the reference map, as its estimated egocar pose error does not exceed 30 cm in such a case.

In the future, it is planned to add a front camera as well as range finders [61] to the current fusion approach and to examine what they contribute to the overall localization performance [62]. In addition, the RTMCL will be supplemented with additional road objects such as curbs, sidewalks, guardrails, and intersection features, among others. It is also worth considering the use of deep learning and other machine learning approaches.