Off-Road Environment Semantic Segmentation for Autonomous Vehicles Based on Multi-Scale Feature Fusion

Abstract

1. Introduction

- A gated fusion module fuses point-level PointTensor features with cylindrical voxel (Cylinder) features, yielding an efficient end-to-end semantic segmentation network.

- A multi-layer receptive field module is designed to extract features of objects at different scales, especially small objects.

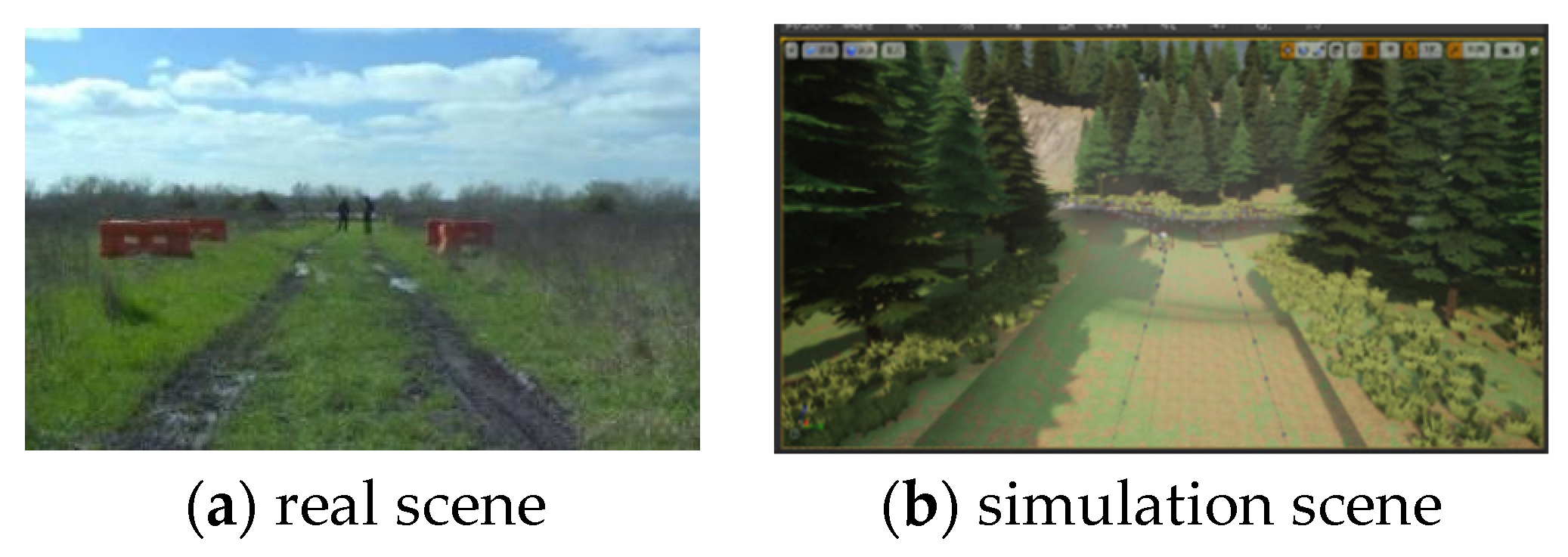

- The CARLA simulator is used to build an off-road environment dataset. In addition, linear interpolation is used to enhance the off-road dataset to improve the training effect.
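The gated fusion named in the first contribution can be illustrated with a small NumPy sketch. It assumes a Cylinder3D-style partition in which each point is assigned to a cell in cylindrical coordinates (radius, azimuth, height), that the voxel feature of a cell is the mean of its point features, and that a sigmoid gate computed from the concatenated point and voxel features decides, per channel, how much of each branch to keep. The grid size, the mean pooling, the gate parameters `w` and `b`, and the helper names (`cylinder_indices`, `scatter_mean`, `gated_fusion`) are all hypothetical choices for illustration, not the network described in this paper.

```python
import numpy as np


def cylinder_indices(xyz, rho_max, z_min, z_max, grid=(8, 8, 4)):
    """Map each point (x, y, z) to a flat cell index on a cylindrical grid.

    grid = (radial bins, azimuth bins, height bins); the sizes are hypothetical.
    """
    rho = np.hypot(xyz[:, 0], xyz[:, 1])       # distance from the sensor's vertical axis
    phi = np.arctan2(xyz[:, 1], xyz[:, 0])     # azimuth in [-pi, pi]
    z = xyz[:, 2]
    r_idx = np.clip((rho / rho_max * grid[0]).astype(int), 0, grid[0] - 1)
    p_idx = np.clip(((phi + np.pi) / (2 * np.pi) * grid[1]).astype(int), 0, grid[1] - 1)
    z_idx = np.clip(((z - z_min) / (z_max - z_min) * grid[2]).astype(int), 0, grid[2] - 1)
    return (r_idx * grid[1] + p_idx) * grid[2] + z_idx


def scatter_mean(feats, idx, num_cells):
    """Average the (N, C) point features that fall into each of num_cells cells."""
    sums = np.zeros((num_cells, feats.shape[1]))
    np.add.at(sums, idx, feats)                 # unbuffered add handles repeated indices
    counts = np.bincount(idx, minlength=num_cells).astype(float)
    return sums / np.maximum(counts, 1.0)[:, None]   # empty cells stay zero


def gated_fusion(point_feat, voxel_feat, w, b):
    """Blend point and voxel features with a learned per-channel sigmoid gate.

    point_feat, voxel_feat: (N, C), voxel features already gathered back to points
    w: (2C, C), b: (C,)  hypothetical gate parameters
    """
    concat = np.concatenate([point_feat, voxel_feat], axis=1)   # (N, 2C)
    gate = 1.0 / (1.0 + np.exp(-(concat @ w + b)))              # (N, C), values in (0, 1)
    return gate * point_feat + (1.0 - gate) * voxel_feat
```

Voxel features are brought back to the points by plain indexing (`vox[idx]`). With zero gate weights and bias the gate is 0.5 everywhere, an equal blend; training would push each channel toward whichever branch is more informative for it.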

2. Related Work

2.1. Structured Road Scene 3D Semantic Segmentation

2.2. Off-Road Point Cloud Semantic Segmentation

3. Methods

3.1. Network Overview

3.2. Multi-Layer Receptive Field Fusion Module
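A block that extracts features at several scales, as the multi-layer receptive field module is designed to do, is often built from parallel convolution branches with different dilation rates whose outputs are combined. The sketch below shows that general idea on a dense single-channel 2D grid (for example a bird's-eye-view feature map) in plain NumPy. The dense 2D setting, the dilation rates (1, 2, 3), the per-branch ReLU, the residual sum, and all function names are assumptions for illustration, not the module's actual design. A 3×3 kernel with dilation d covers (3 − 1)·d + 1 = 2d + 1 cells per side, so the three branches see 3×3, 5×5, and 7×7 neighborhoods while keeping nine weights each.

```python
import numpy as np


def receptive_field(kernel_size: int, dilation: int) -> int:
    """Side length covered by one dilated convolution: (k - 1) * d + 1."""
    return (kernel_size - 1) * dilation + 1


def dilated_conv2d(x: np.ndarray, k: np.ndarray, dilation: int) -> np.ndarray:
    """'Same'-size 2D cross-correlation with zero padding and an odd-sized kernel."""
    kh, kw = k.shape
    ph, pw = dilation * (kh // 2), dilation * (kw // 2)
    xp = np.pad(x, ((ph, ph), (pw, pw)))
    h, w = x.shape
    out = np.zeros((h, w), dtype=float)
    for i in range(kh):
        for j in range(kw):
            # shift the padded input so kernel tap (i, j) lines up with every output cell
            out += k[i, j] * xp[i * dilation:i * dilation + h, j * dilation:j * dilation + w]
    return out


def multi_receptive_field_block(x, kernels, dilations=(1, 2, 3)):
    """Hypothetical block: parallel dilated branches (ReLU each), summed, plus a residual."""
    branches = [np.maximum(dilated_conv2d(x, k, d), 0.0) for k, d in zip(kernels, dilations)]
    return x + np.sum(branches, axis=0)
```

Feeding a single impulse through `dilated_conv2d` with an all-ones kernel makes the spacing visible: with dilation 3 the response appears only at offsets 0 and ±3, which is how small and large context can be gathered side by side without growing the kernel.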

3.3. Data Augmentation
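Linear interpolation is used to augment the off-road dataset, and the class-distribution table shows the shares of mud and person rising while every other class shrinks. A minimal sketch of one way to do this is shown below. It assumes new points are generated only for a chosen rare class, by interpolating between a randomly sampled point of that class and its nearest same-class neighbor with a uniform weight t ∈ [0, 1). The nearest-neighbor pairing, the weight distribution, and the function names are assumptions; the brute-force distance matrix is only suitable for small classes, and a KD-tree would replace it on full scans.

```python
import numpy as np


def interpolate_class_points(points, labels, target_label, num_new, rng):
    """Add num_new synthetic points of class target_label (hypothetical helper).

    Each new point lies on the segment between a sampled class point and its
    nearest neighbor of the same class; extra columns (e.g. intensity) are
    interpolated the same way as x, y, z.
    """
    cls = points[labels == target_label]
    if len(cls) < 2 or num_new <= 0:
        return points, labels
    # brute-force pairwise distances within the class
    d = np.linalg.norm(cls[:, None, :] - cls[None, :, :], axis=-1)
    np.fill_diagonal(d, np.inf)                 # a point is not its own neighbor
    nn = d.argmin(axis=1)
    src = rng.integers(0, len(cls), size=num_new)
    t = rng.uniform(0.0, 1.0, size=(num_new, 1))
    new_pts = cls[src] + t * (cls[nn[src]] - cls[src])
    new_lbl = np.full(num_new, target_label, dtype=labels.dtype)
    return np.vstack([points, new_pts]), np.concatenate([labels, new_lbl])


def class_shares(labels):
    """Percentage of points per class label."""
    values, counts = np.unique(labels, return_counts=True)
    return dict(zip(values.tolist(), (100.0 * counts / counts.sum()).tolist()))
```

Because only the target class gains points, the shares of all other classes drop by the same relative amount, which is the pattern visible in the class-distribution table.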

3.4. Loss Function and Optimizer

4. Experiment

4.1. Data Preparation

4.2. Evaluation Metrics and Experimental Setup

4.3. Experimental Results

4.3.1. Network Comparison Experiment

4.3.2. Data Augmentation Experiment Results

4.3.3. Multi-Layer Receptive Field Module Experiment Results

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest


|  | Network | Grass IoU (%) | Mud IoU (%) | Vegetation IoU (%) | Rock IoU (%) | Water IoU (%) | People IoU (%) | mIoU (%) | Time (ms) | Params (M) |
|---|---|---|---|---|---|---|---|---|---|---|
| A | SalsaNext | 99.82 | 74.85 | 20.06 | 39.71 | 51.71 | 54.26 | 56.74 | 38 | 6.7 |
| A | PVCNN | 99.56 | 82.35 | 24.19 | 41.93 | 56.54 | 57.04 | 60.35 | 151 | 2.5 |
| A | Our work | 99.98 | 83.70 | 23.57 | 50.76 | 68.11 | 56.30 | 63.74 | 165 | 58.6 |
| B | SalsaNext | 64.74 | 9.58 | 79.04 | 75.89 | 23.20 | 83.17 | 55.94 | 42 | 6.7 |
| B | PVCNN | 63.58 | 13.27 | 86.79 | 82.93 | 27.65 | 84.63 | 59.81 | 152 | 2.5 |
| B | Our work | 65.18 | 16.09 | 85.40 | 84.58 | 31.09 | 88.51 | 61.81 | 159 | 58.6 |
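The mIoU column is the unweighted mean of the six per-class IoU values; for "Our work" in row group A, (99.98 + 83.70 + 23.57 + 50.76 + 68.11 + 56.30) / 6 ≈ 63.74. The sketch below computes per-class IoU = TP / (TP + FP + FN) from a confusion matrix and then averages it. It follows the standard IoU definition; the function names are illustrative, and because the handling of classes absent from a scan is not stated here, this version marks such classes as NaN and skips them in the mean.

```python
import numpy as np


def confusion_matrix(gt: np.ndarray, pred: np.ndarray, num_classes: int) -> np.ndarray:
    """conf[i, j] counts points whose ground truth is i and prediction is j."""
    idx = gt.astype(np.int64) * num_classes + pred.astype(np.int64)
    return np.bincount(idx, minlength=num_classes ** 2).reshape(num_classes, num_classes)


def per_class_iou(conf: np.ndarray) -> np.ndarray:
    """IoU_c = TP / (TP + FP + FN); NaN for classes that neither occur nor are predicted."""
    tp = np.diag(conf).astype(float)
    fp = conf.sum(axis=0) - tp   # predicted as c, but ground truth is another class
    fn = conf.sum(axis=1) - tp   # ground truth is c, but predicted as another class
    denom = tp + fp + fn
    return np.divide(tp, denom, out=np.full_like(tp, np.nan), where=denom > 0)


def mean_iou(ious) -> float:
    """Unweighted mean over classes, ignoring NaN entries."""
    return float(np.nanmean(np.asarray(ious, dtype=float)))


if __name__ == "__main__":
    # Per-class IoU (%) of "Our work", row group A, copied from the table
    print(round(mean_iou([99.98, 83.70, 23.57, 50.76, 68.11, 56.30]), 2))
```

Because every class has equal weight, the rare classes (mud, people) move mIoU as much as the dominant grass class does, which is why gains on those classes matter in the comparisons.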

| Classes | Before | After | Change Rate |
|---|---|---|---|
| grass | 32.609% | 30.874% | −5.321% |
| vegetation | 27.945% | 26.458% | −5.321% |
| rocks | 22.204% | 21.023% | −5.319% |
| water | 11.452% | 10.843% | −5.318% |
| mud | 5.313% | 9.922% | 86.749% |
| person | 0.211% | 0.628% | 197.630% |
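The change rate is the relative change of each class's share, (after − before) / before × 100%; for mud, (9.922 − 5.313) / 5.313 ≈ 86.749%. The four classes whose share falls all fall by about 5.32%, which is what happens when points are added only to mud and person: every other class keeps its point count while the total grows by a factor of about 32.609 / 30.874 ≈ 1.056. The short check below reproduces the table's change rates and that common factor; the dictionary is simply a hypothetical encoding of the table values.

```python
# Class shares (%) copied from the table: (before, after)
SHARES = {
    "grass": (32.609, 30.874),
    "vegetation": (27.945, 26.458),
    "rocks": (22.204, 21.023),
    "water": (11.452, 10.843),
    "mud": (5.313, 9.922),
    "person": (0.211, 0.628),
}


def change_rate(before: float, after: float) -> float:
    """Relative change of a class share, in percent."""
    return (after - before) / before * 100.0


def growth_factor(before: float, after: float) -> float:
    """For a class whose point count is unchanged, before/after equals N_after/N_before."""
    return before / after


if __name__ == "__main__":
    for name, (b, a) in SHARES.items():
        print(f"{name:<10} {change_rate(b, a):+9.3f}%")
```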

|  | Augmentation | Grass IoU (%) | Mud IoU (%) | Vegetation IoU (%) | Rocks IoU (%) | Water IoU (%) | People IoU (%) | mIoU (%) |
|---|---|---|---|---|---|---|---|---|
| A | Before | 99.98 | 76.56 | 22.34 | 51.89 | 64.78 | 44.25 | 59.97 |
| A | After | 99.98 | 83.70 | 23.57 | 50.76 | 68.11 | 56.30 | 63.74 |
| B | Before | 65.40 | 10.67 | 82.07 | 81.99 | 30.61 | 64.94 | 55.95 |
| B | After | 65.18 | 16.09 | 85.40 | 84.58 | 31.09 | 88.51 | 61.81 |

|  | Network | Grass IoU (%) | Mud IoU (%) | Vegetation IoU (%) | Rocks IoU (%) | Water IoU (%) | People IoU (%) | mIoU (%) |
|---|---|---|---|---|---|---|---|---|
| A | MAPC-Net-1 | 97.86 | 84.24 | 24.37 | 45.63 | 65.82 | 50.74 | 61.44 |
| A | MAPC-Net | 99.98 | 83.70 | 23.57 | 50.76 | 68.11 | 56.30 | 63.74 |
| B | MAPC-Net-1 | 62.37 | 17.28 | 81.07 | 75.52 | 32.24 | 72.65 | 56.86 |
| B | MAPC-Net | 65.18 | 16.09 | 85.40 | 84.58 | 31.09 | 88.51 | 61.81 |


© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Zhou, X.; Feng, Y.; Li, X.; Zhu, Z.; Hu, Y. Off-Road Environment Semantic Segmentation for Autonomous Vehicles Based on Multi-Scale Feature Fusion. World Electr. Veh. J. 2023, 14, 291. https://doi.org/10.3390/wevj14100291
