Abstract

The security of Cloud applications is a major concern for application developers and operators. Protecting users’ data confidentiality requires methods to avoid leakage from vulnerable software and unreliable Cloud providers. Recently, trusted execution environments (TEEs) emerged in Cloud settings to isolate applications from the privileged access of Cloud providers. Such hardware-based technologies exploit separation kernels, which aim at safely isolating the software components of applications. In this article, we propose a methodology to determine safe partitionings of Cloud applications to be deployed on TEEs. Through a probabilistic cost model, we enable application operators to select the best trade-off partitioning in terms of future re-partitioning costs and the number of domains. To the best of our knowledge, no previous proposal exists addressing such a problem. We exploit information-flow security techniques to protect the data confidentiality of applications by relying on declarative methods to model applications and their data flow. The proposed solution is assessed by executing a proof-of-concept implementation that shows the relationship among the future partitioning costs, number of domains and execution times.

1. Introduction

Cloud computing has grown considerably in the last few decades to support applications by remotely providing computation, storage and networking resources [1]. Nowadays, Cloud technologies are extensively adopted but open problems and issues still remain. Among them, the security aspects of Cloud computing represent a major concern of both fundamental research and development [2,3].

There are several lines of research in Cloud computing security [4], spanning from network layers [5] to virtualisation and multi-tenancy [6], from the software stack to specialised hardware [7], and from regulations to responsibility models [8]. A major concern about adopting the Cloud relates to the difficulty of guaranteeing the data confidentiality and code integrity of applications running on Cloud resources.

Most modern applications consist of large codebases that rely on third-party software, subject to regular updates and short development time. Such complexity makes it difficult to verify or certify the security assurances of the deployed software, also exposing applications to bugs that lead to exploitable vulnerabilities. Moreover, application operators manage, run and maintain the software relying on Cloud providers. Those providers deliver hardware and software infrastructure capabilities, maintaining high privileges on the access to the infrastructure [9]. From the data security point of view, Cloud infrastructure providers cannot be considered fully reliable, e.g., a malicious insider could abuse her access rights to steal secret data [10].

Consider a public Cloud scenario, where providers deliver hardware and software infrastructure to their customers managing applications made from software components (e.g., microservice architectures) and hardware components (e.g., network devices). Each software component of an application has a set of security-relevant characteristics, properties or software dependencies of the component, e.g., the use of a non-verified third-party library. Those characteristics determine the degree of trust of a component in order to establish whether the component can manage its data without leaks.

The usage of software with a low degree of trust can pave the way for attacks that compromise the confidentiality of applications’ data. Hence, application developers need mechanisms to: (i) identify how reliable software manages sensitive data, (ii) securely isolate components in order to avoid data leaks from unreliable software and (iii) be protected from unreliable infrastructure providers. To fulfil these requirements and protect the application data from the deployment phase onwards, developers can leverage dedicated hardware to separate the application components into isolated environments. This approach enables data flow solely through explicit communication channels and eludes the privileges of the hardware platform providers.

The most common technologies to provide this kind of isolation are separation kernels (SK). They create a computational environment indistinguishable from a distributed system, where information can only flow from one isolated machine to another through explicit communication channels [11]. In a nutshell, SKs are (hardware or software) mechanisms that partition the available resources in isolated domains (or partitions), mediate the information flow between them and protect all the resources from unauthorised accesses.

In Cloud settings, the hardware is not directly available to customers and trusted execution environments (TEEs) [12,13,14] are emerging to exploit the SK technology. TEEs are tamper-resistant processing environments that run on a separation kernel [15]. They allow customers to create isolated memory domains for code and data that are also not accessible by the privileged software that is controlled by Cloud providers. TEEs provide in the Cloud the same memory and register isolation that separation kernel (SK) technologies provide in local machines.

In this work, we focus on the data separation provided by SKs. We do not consider other sophisticated mechanisms offered, such as the timed scheduling of domains. Given that our discussion is not dependent on the hardware that isolates the domains, in the rest of our article we refer to SKs as the supporting technology that encompasses TEEs and other similar mechanisms.

To effectively adopt these technologies, developers must face several design choices. Deciding which components must be grouped together is particularly important and challenging. Developers must solve the problem of how to partition their applications, i.e., how to separate the software components of the application to be placed in different SKs’ domains. Our ultimate objective is to avoid potential data leaks after the deployment phase by exploiting applications’ information flow. Indeed, there is a research gap in addressing this problem. There exist information-flow security methods to address complementary problems, such as checking the correct labelling of software or monitoring the inputs and storage accesses by the applications. Other proposals rely on specialised hardware to tackle unreliable Cloud providers. As far as we know, however, there is no work that employs both aspects (viz., information-flow security and SK protection mechanisms in the Cloud) aiming at supporting the deployment of Cloud applications on specialised hardware such as SKs.

In our previous work [16], we presented a methodology to determine a (minimal) eligible partitioning of an application onto an SK. Namely, [16] presents:

- (a) a declarative model to represent multi-component applications, exploiting information-flow security to check whether components can manage their data without leaks, and

- (b) a (formal) definition of safely partitionable applications and eligible partitioning, also considering the performance cost of exploiting SKs and the cost of migrating software, prototyped in SKnife (open-sourced at: https://github.com/di-unipi-socc/sk, accessed on 20 June 2023).

In this article, we extend (a) and (b), with the following original contributions:

- (c) the sketch of the proof that the partitioning determined by SKnife is minimal and unique,

- (d) a cost model based on the probability of deployment migration, which depends on user-defined parameters, viz., the upper limit of SK domains and the number of admitted changes in data secrecy and components’ trust,

- (e) a novel (Prolog and Python) prototype, ProbSKnife (open-sourced at: https://github.com/di-unipi-socc/ProbSKnife, accessed on 20 June 2023), that exploits SKnife to determine the eligible partitionings and implements the above probabilistic cost model to support the decision-making related to the initial deployment of the application, and

- (f) the experimental assessment of ProbSKnife considering the execution times, future costs and probability of not finding a safely partitionable application at varying input parameters.

The goal of our methodology is to support the decision-making of application operators for the deployment of their applications in Cloud settings where the infrastructure provider is considered not trusted, by exploiting the SK technology. Our prototype tool is able to determine the deployment using the minimum number of domains, e.g., with the lowest SK performance impact, or to recommend different solutions considering the trade-off between the number of domains and costs of future re-deployments.

The rest of this article is organised as follows. Section 2 deeply analyses the related literature. Section 3 introduces essential notions to prepare the ground for our methodology and a realistic motivating example. Section 4 describes our declarative modelling of multi-component applications and information-flow methods. Section 5 presents our partitioning methodology aiming at minimising the number of SK domains, prototyped in the Prolog open-source tool SKnife. Section 6 introduces the cost model of a deployment migration and the Prolog implementation ProbSKnife. Section 7 shows the results of the experimental assessment of ProbSKnife aiming at investigating the performance and future costs of the motivating example. Finally, Section 8 concludes the article by pointing to some lines for future work.

2. Related Work

2.1. Specialised Hardware for Cloud Security

The adoption of specialised hardware for security in Cloud scenarios is widespread [17,18,19,20,21] and addresses the issue of entrusting applications and their data to Cloud providers. The two main technologies adopted are TEEs and enclaves, which share the idea of reserving a separate and protected region of memory for executing code. This region cannot be accessed or tampered with by the owner of the machine, which enables secure data processing.

Zheng et al. [22] survey the literature that exploits enclave-based hardware and TEEs to build secure applications in the Cloud and possible attacks on such applications. Moreover, the authors highlight the overheads brought by those technologies in terms of memory usage, data and code encryption and the limited computing resources of the secure area. Relatedly, Zhao et al. [23] conduct an experimental study to evaluate enclave technology’s impact on application performance, measuring the overhead on function and system calls, memory accesses and data exchanged from and to the enclave. The authors conclude that such specialised hardware surely enhances the security of Cloud applications but it is very important to take care of the performance overhead. Arfaoui et al. [24] deeply analyse TEEs by comparing the currently available solutions, focusing on the security aspects.

2.2. Partitioning and Separation

Lind et al. [25] propose a framework to automatically partition C applications for deployment on enclaves to protect data confidentiality from an untrusted operating system. Differently from our work, they do not employ information-flow techniques to determine the partitioning but rely on annotations of the source code as an indication of what should be placed in the enclave. Without considering specialised hardware, other works perform least-privilege application partitioning on source code relying on static analysis [26,27], dynamic analysis [28,29] or their combination [30].

In Cloud settings, Watson et al. [31] propose a methodology to support the deployment of applications composed of service workflows that are partitioned on Cloud nodes based on the security requirements of the data flow. Other approaches aim at verifying the data separation and the data flow of SKs [32,33,34,35]. In mobile settings, Rubinov et al. [36] propose a partitioning framework for placing Android applications on TEEs. By mixing source code annotations and taint analysis, the framework indicates to the user a refactoring of the application in order to isolate the most sensitive parts. Differently from our work, this framework needs to analyse the source code and it is language-specific and OS-specific.

2.3. Information-Flow Security

Information-flow security assigns labels to the variables of a program to follow its data flow in order to verify the desired properties (e.g., non-interference [37]) and avoid covert channels. Labels are ordered in a security lattice that represents the relations among labels, from the highest ones (e.g., top secret) to the lowest ones (e.g., public data). Security lattices can be arbitrarily complex and define total or partial orders, e.g., a three-label total order that represents data secrecy from top secret to low secret is represented as top ≻ medium ≻ low.
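As a minimal sketch of the three-label total order mentioned above, the following Python snippet encodes the lattice as a ranked list. The names `LATTICE`, `rank`, `dominates` and `lowest` are illustrative assumptions introduced here, not identifiers of any tool discussed in this article, and the encoding only covers total orders.

```python
from typing import Sequence

# Illustrative encoding of the total order top > medium > low.
# All names are hypothetical helpers introduced for this sketch.
LATTICE = ["low", "medium", "top"]  # ascending order


def rank(label: str) -> int:
    """Position of a label in the lattice (higher means more secret)."""
    return LATTICE.index(label)


def dominates(a: str, b: str) -> bool:
    """True when label a is at least as high as label b."""
    return rank(a) >= rank(b)


def lowest(labels: Sequence[str]) -> str:
    """Greatest lower bound of a non-empty collection of labels."""
    return min(labels, key=rank)


print(dominates("top", "medium"))  # True
print(lowest(["top", "medium"]))   # medium
```

A partial order would require an explicit relation between labels rather than a single ranked list.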

Some approaches use information-flow security to address problems that are complementary to our techniques, for example, checking the correct labelling of software or monitoring inputs and storage accesses by the applications. Elsayed and Zulkernine [38] propose a framework to deliver Information-Flow-Control-as-a-Service (IFCaaS) in order to protect the confidentiality and integrity of the information flow in a Software-as-a-Service application. The framework works as a trusted party that creates a call graph of an application from the source code and applies information-flow security based on dependence graphs to detect violations of the non-interference policy.

At the Function-as-a-Service (FaaS) level, Alpernas et al. [39] present an approach for dynamic information-flow control, monitoring the inputs of serverless functions to tag them with suitable security labels in order to check access to data storage and communication channels to prevent leaks of the data managed by the functions. Similarly, Datta et al. [40] propose to monitor serverless functions by starting to learn the information flow of an application, showing the detected flows to the developers and enforcing the selected ones.

In the Cloud–Edge continuum, our previous work [41] exploits information-flow security to place FaaS orchestrations on Fog infrastructures. Functions are labelled with security types according to the input received and infrastructure nodes are labelled according to user-defined security policies. The placements are considered eligible if every node involved has a security type greater than or equal to the security type of all the hosted serverless functions. Developers assign a level of trust to infrastructure providers that concurs to rank the eligible placements.

Differently from our approach, all these proposals but [38] consider Cloud–Edge providers reliable. Recently, a few proposals have leveraged information-flow analyses to enforce data security in Cloud applications when the Cloud–Edge provider is untrusted. For example, Oak et al. [42] have extended Java with information-flow annotations that allow verification of whether partitioning an application into components that run inside and outside an SGX enclave violates confidentiality security policies. In this proposal, partitioning is decided by the programmer.

2.4. Declarative Approaches

As in our work, declarative techniques have been employed to resolve different Cloud-related problems. There are proposals to manage Cloud resources (e.g., [43]), to improve network usage (e.g., [44]), to assess the security and trust levels of different application placements (e.g., [45]) and to securely place VNF chains and steer traffic across them (e.g., [46]).

To the best of our knowledge, there are currently no proposals that employ information-flow security to partition applications to support the deployment on SKs.

3. Preliminaries

3.1. Threat Model

Table 1 shows the threat model considered hereinafter. Our goal is to protect the data confidentiality of multi-component Cloud applications from external attackers and unreliable Cloud providers.

Table 1. Threat model.

Application developers are assumed to be trusted, and the information they give about the application to protect is considered reliable. Our trusted computing base (TCB) leverages the SK technology to isolate the software components of an application into separate domains, guaranteeing that the information flows only along the explicit communication lines given by the application developers and avoiding other side channels. This model is consistent with the threat model of several trusted execution environments (e.g., [47]).

Software components can be hacked by external attackers by exploiting their vulnerabilities to leak application data. As an example, an attacker can gain control of a software component from a malicious or bugged library and steal the data managed by that component itself or by the components in the same environment, i.e., domain.

Moreover, a component under the control of an attacker can create covert channels, e.g., by sending stolen data via the network. For these reasons, we need to take particular care in identifying less-trusted components and isolating them from components managing important data. Exploiting SKs guarantees that communication only happens through explicit channels, avoiding the creation of side channels. However, we need to pay particular attention to software components having explicit channels toward the hardware, since compromised software components can exploit these channels to create a path to leak the data.

On the other hand, unreliable infrastructure providers can exploit their superuser privileges on the infrastructure to steal application data. We exploit TEEs to protect the software components, since TEEs guarantee a tamper-resistant processing environment and provide remote attestation that proves their trustworthiness to third parties [15]. What is not protected by TEEs is the data exchange with unreliable hardware components, such as network interfaces or storage disks. As an example, an explicit channel to save secret data on a disk is admitted by TEEs independently of the partitioning of the software components, and such data are leaked to the provider as the owner of the disk. Therefore, we need to take care of the secrecy of the data exchanged with hardware components considered not trusted.

3.2. Problem Formulation

We define a partitioning as the structuring of the software components of an application in non-empty parts called domains. Intuitively, a partitioning domain represents an isolated environment where data can flow (inbound or outbound) only by explicit communication channels. Inside every domain, data can potentially be shared.

Domains must satisfy the following two properties:

- data consistency, meaning that the software components of the same domain manage data with the same secrecy level, and

- reliability, meaning that the components of the same domain have the same trust from the operator to manage their data.

A partitioning is safe if and only if each of its domains is data-consistent and reliable.
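The two domain properties and the resulting notion of safe partitioning can be sketched in a few lines of Python. This is only an illustration under a simplifying assumption: each component is summarised by a single secrecy label for its data and a single trust label. The function names and the labels in the example are hypothetical and are not taken from SKnife.

```python
from typing import Dict, List, Tuple

# component name -> (secrecy label of its data, trust label).
# The labels used below are hypothetical values chosen for illustration.
Labelling = Dict[str, Tuple[str, str]]
Partitioning = List[List[str]]


def is_data_consistent(domain: List[str], labelling: Labelling) -> bool:
    """All components of the domain manage data with the same secrecy level."""
    return len({labelling[c][0] for c in domain}) == 1


def is_reliable(domain: List[str], labelling: Labelling) -> bool:
    """All components of the domain have the same trust level."""
    return len({labelling[c][1] for c in domain}) == 1


def is_safe(partitioning: Partitioning, labelling: Labelling) -> bool:
    """A partitioning is safe iff every non-empty domain has both properties."""
    return all(
        len(d) > 0 and is_data_consistent(d, labelling) and is_reliable(d, labelling)
        for d in partitioning
    )


labelling = {
    "appManager": ("top", "top"),
    "userConfig": ("top", "top"),
    "aiLearning": ("top", "low"),
}
print(is_safe([["appManager", "userConfig"], ["aiLearning"]], labelling))  # True
print(is_safe([["appManager", "aiLearning"], ["userConfig"]], labelling))  # False
```

In the second call, the first domain mixes a top-trust and a low-trust component, so it is not reliable and the partitioning is rejected.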

An application is safely partitionable if it does not leak sensitive data from software components to untrusted hardware components. When an application is non-safely partitionable, exploiting the SK isolation is not enough to protect the data confidentiality of the application. Our approach detects this situation and suggests how to improve the software reliability or the data-secrecy level to make the application safely partitionable.

Overall, the problem addressed by this work can be stated as follows:

“Given a multi-component application A consisting of a set S of software components, find safe partitionings of S (if any) in order to deploy the application A to an SK technology while minimising the considered costs and protecting A from data leaks.”

While tackling this problem, two main challenges are addressed. On one hand, the data confidentiality of applications must be considered during the deployment phase. On the other, the performance of applications should not be degraded by the supporting hardware technology that enforces security. We tackle the partitioning problem by employing information-flow security techniques [48]: (i) to understand whether the software components leak sensitive data outside the SK and (ii) to partition the application in order to avoid data leaks between components hosted on the same SK domain.

Moreover, to meet the security requirements it is enough to find a partitioning that isolates unreliable software components of the application in different domains, but doing so blindly can lead to unexpected performance and deployment costs. SKs bring overheads that can heavily impact the application performance [34], e.g., switching domains during the execution has a cost in terms of time that is influenced by sanitising the used resources before re-using them and by the domain-scheduling algorithm of the SK. Moreover, changing software domains after deployment requires working on the explicit communication channels that become intra-domain from extra-domain and vice versa. All of these call for partitionings that allow application operators to reduce those kinds of costs.

We consider two different cost parameters to determine safe partitioning:

- the number of domains used by the safe partitioning in order to reduce the SK overhead, and

- the expected migration cost of switching from one partitioning to another following new evidence about the software components in our application (e.g., disclosure of a new bug or defect bringing a vulnerability).

We also consider the combination of the above costs to support application operators in determining the best trade-off in terms of SK overhead and potential migration cost.

We propose two declarative methodologies and open-source prototypes:

- SKnife, which finds the minimal safe partitioning (Section 4) that minimises the number of domains, and

- ProbSKnife (Section 6.2), which exploits SKnife to find all the safe partitionings with their expected migration cost, up to a user-defined limit of the number of domains.

The first methodology, prototyped in SKnife, exploits logic programming to define partitionings and domains in such a way that they satisfy the data-consistency and reliability properties. By applying inference rules to the application model, our Prolog prototype determines a minimal partitioning.

The second methodology, prototyped in ProbSKnife, determines all the possible initial partitionings (not only the minimal one) and computes the cost of changing deployment based on probability distributions that describe changes in the data classification and component trustability.

3.3. Motivating Example

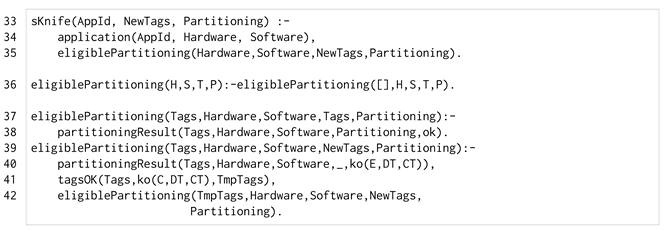

Consider a Cloud-centralised IoT system that collects data sampled by sensors and sends them to a Cloud application, where the data are stored and used to decide which commands to issue to IoT actuators. The users of this application can make requests on the status of the devices and can remotely configure the application. Nowadays, these kinds of applications are well-established in the home-automation field [49], service-device compositions [50] and platforms offered by Cloud providers [51,52].

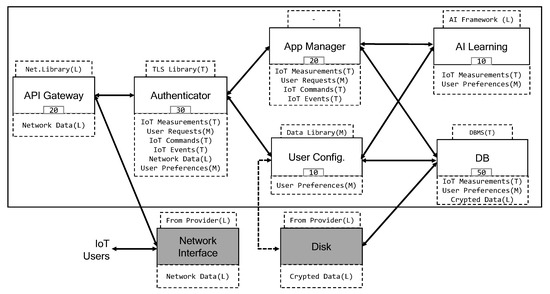

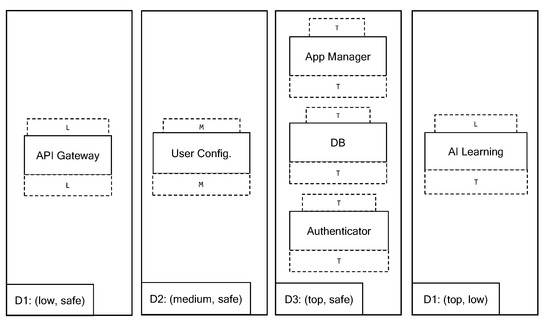

The architecture of the example application is depicted in Figure 1. Consider six software components and two hardware components—depicted in grey—that are used by the application, connected by edges representing explicit communication links between components.

Figure 1. Application architecture (labels: T = top, M = medium, L = low).

Users and IoT devices perform REST invocations toward the considered application. All inbound communication passes through the Network Interface and is received by the API Gateway. The intra-application communication is performed by message passing between components, exploiting explicit channels supplied by the underlying hardware. The Authenticator decrypts and authenticates the inbound data and forwards them to the intended recipient.

Application users can send General requests and Configuration requests. The former are requests for explicit actuation or data previously sampled and are delivered to the App Manager, which is the main component of the application that implements the business logic. The latter are requests for reading or updating the current application configuration and are delivered to the User Configuration component, which manages the configuration of the application. The IoT devices send either sampled data or events, which are dispatched to the App Manager component. The outbound communication consists of responses to the users based on their requests or IoT commands from the App Manager toward the IoT devices. To store relevant application data—IoT Measurement and User Preferences—the application relies on the component DB, a database that is the only one connected to the Disk. Finally, AI Learning is a machine-learning module that uses IoT Measurement and User Preferences to perform predictions and support the decision-making of the App Manager.

Each component has explicit links to other components, its own data (depicted in the lower boxes of the components) and its relevant characteristics (depicted in the upper boxes of the components). For instance, the component AI Learning has data IoT Measurement and User Preferences, its relevant characteristic is AI Framework and it is linked to App Manager and User Configuration. The characteristics are properties, third-party libraries, etc., that impact the trust of the components. For instance, the Disk is owned by the Cloud provider, which in our setting makes the component unreliable. Measuring the trust level of the components is necessary to determine whether they can manage their data without leaks. For instance, the API Gateway must be able to manage its data so as to avoid leaking them toward the Network Interface. The dotted arrow between User Configuration and Disk represents a link that alters the application architecture. Figure 1 thus represents two application architectures that differ only in that link. The base application, identified as iotApp1, does not have the dotted link, whereas the modified application, identified as iotApp2, does. We will use these two slightly different architectures in Section 5.4 when discussing the partitioning of the two applications.

This application results in a large codebase that includes the operating system, communication stacks, AI frameworks, etc., and also requires frequent updates. These factors make it hard to verify or certify the security of the released software. To determine the level of trust of the software components, we assign security labels to the relevant characteristics of the application. We also assign security labels to the application data in order to establish a direct relationship between the data and the trust of the software components. We adopt a totally ordered security lattice (viz., top ≻ medium ≻ low) modelling the labels pertaining to both sensitive data and trusted characteristics. The top label denotes both secret data and high-trust characteristics, the medium label denotes both medium-secret data and medium-trust characteristics and the low label denotes public data and non-reliable characteristics.

A component having characteristics considered unreliable by the application developer is not able to manage secret data. This could cause a leak of its data toward the directly connected components or toward the software components hosted in the same isolation environment, i.e., container, virtual machine or SK domain. For instance, if the DBMS used by DB is not reliable, either because it is malicious or because it has vulnerabilities, the data of DB can leak toward the Disk, a component owned by the Cloud provider. Furthermore, if DB is placed in the same SK domain as API Gateway (assuming an unreliable Net Library), the data could leak from the DB to the Network Interface through the API Gateway.

We emphasise again that we aim at protecting the data confidentiality of applications placed on the Cloud by finding safe partitionings, i.e., groupings of the software components into non-empty subsets that allow placement of the application in SK domains in such a way that the data and trust of the components are homogeneous in every domain. This avoids having less-trusted components share the environment with components that manage sensitive data. For instance, AI Framework is a library of AI Learning considered not reliable; it may contain malicious code or its vulnerabilities may be exploited by an external attacker. Placing all the software components in the same domain exposes the data of the application to be read by AI Framework and sent outside such domain. Partitioning the application components to isolate their data and exploiting the SKs’ isolation mitigates those kinds of threats.

To support the application operator’s decision, we aim at determining the expected migration costs of the software components. Migrating the software components could be needed after the deployment of the partitioned applications due to events such as bug discovery or data declassification that bring a change in the labelling of the application. With the new labelling, the partitioning might not be safe anymore. For instance, a bug discovered in the TLS Library declassifies its trust from top to medium and the Authenticator must be migrated to a different domain to preserve the data confidentiality of the application.

For these reasons, every software component is also annotated with its migration cost, represented as an integer number. The migration cost represents how many hours of work are needed to change the communication links of each software component during a migration that changes the status of a link from intra-domain to extra-domain or vice versa. For instance, the User Configuration needs 10 person-hours to change its communication links.
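To make the notion of migration cost more concrete, the following Python sketch compares two partitionings and sums the costs of the components whose links change status. It relies on one possible reading, assumed here and not confirmed by the text above: a component pays its cost once if at least one of its links switches between intra-domain and extra-domain. The function names are hypothetical. The costs of `userConfig` (10) and `db` (50) follow the examples in this article, while those of `appManager` and `aiLearning` are made up.

```python
from typing import Dict, List, Tuple


def domain_of(partitioning: List[List[str]]) -> Dict[str, int]:
    """Map every component to the index of the domain hosting it."""
    return {c: i for i, dom in enumerate(partitioning) for c in dom}


def migration_cost(
    old: List[List[str]],
    new: List[List[str]],
    links: List[Tuple[str, str]],
    cost: Dict[str, int],
) -> int:
    """Sum the costs of components with at least one link changing status."""
    old_dom, new_dom = domain_of(old), domain_of(new)
    affected = set()
    for a, b in links:
        was_intra = old_dom[a] == old_dom[b]
        is_intra = new_dom[a] == new_dom[b]
        if was_intra != is_intra:  # intra -> extra or extra -> intra
            affected.update((a, b))
    return sum(cost[c] for c in affected)


# Hypothetical costs for appManager and aiLearning.
cost = {"userConfig": 10, "db": 50, "appManager": 20, "aiLearning": 30}
links = [
    ("aiLearning", "appManager"),
    ("aiLearning", "userConfig"),
    ("db", "userConfig"),
    ("db", "appManager"),
]
old = [["userConfig", "appManager", "aiLearning", "db"]]
new = [["userConfig", "appManager", "db"], ["aiLearning"]]
print(migration_cost(old, new, links, cost))  # 60
```

Here isolating `aiLearning` turns its two links into extra-domain links, so `aiLearning`, `appManager` and `userConfig` are affected, while `db` keeps all its links intra-domain and contributes nothing.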

To evaluate the expected costs of migration, we need to know how data and characteristics can change the label over time. Application operators may determine the probability distribution of the change over time by their experience or by exploiting statistical analyses from the open-source community [53].

Table 2 shows a probability distribution to change the label for each data type and characteristic of the application.

Table 2. Probability distributions to change the label for each data type and characteristic.

For instance, we can assume that in the future there is a certain probability of discovering a side channel that is not easy to exploit in the TLS Library, reducing its trust from top to medium. Alternatively, there is a certain probability of discovering a vulnerability in the cryptography algorithm of the same library, which reduces its trust to low. We represent this by assigning to the TLS Library three probabilities (reported in Table 2): that of changing its label to medium, that of changing its label to low and that of remaining top.

From Table 2, we can also deduce that the application operator does not exclude the possibility of considering the Cloud provider reliable in the future. Indeed, the From Provider characteristic has a non-zero probability of changing to medium trust and a non-zero probability of changing to top trust. Network Data and Crypted Data will always be considered low data, as they remain low with certainty. This means that the application operator assumes that the data arriving from the network and the data stored on the Disk will never be secret.
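The role of such distributions can be illustrated with a short Python sketch that validates a distribution and computes the probability of a joint relabelling. Two assumptions are made and should be read as such: label changes are treated as independent, so that probabilities multiply, and every numeric value below is invented for the example and does not reproduce Table 2. The identifiers are hypothetical.

```python
import math
from typing import Dict

# characteristic or data name -> {label: probability}.
# Every number here is invented for illustration, not taken from Table 2.
distributions: Dict[str, Dict[str, float]] = {
    "tlsLibrary": {"top": 0.8, "medium": 0.15, "low": 0.05},
    "networkData": {"low": 1.0},
}


def is_valid(dist: Dict[str, float]) -> bool:
    """A distribution has probabilities in [0, 1] that sum to 1."""
    in_range = all(0.0 <= p <= 1.0 for p in dist.values())
    return in_range and math.isclose(sum(dist.values()), 1.0)


def scenario_probability(
    scenario: Dict[str, str], dists: Dict[str, Dict[str, float]]
) -> float:
    """Probability of a joint relabelling, assuming independent changes."""
    prob = 1.0
    for name, label in scenario.items():
        prob *= dists[name].get(label, 0.0)  # unlisted labels have probability 0
    return prob


print(all(is_valid(d) for d in distributions.values()))
print(scenario_probability({"tlsLibrary": "medium", "networkData": "low"}, distributions))
```

A scenario that relabels `networkData` to anything other than low gets probability zero, mirroring the operator's assumption that such data will never be secret.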

4. A Declarative Solution: SKnife

This section describes the methodology implemented in SKnife to determine the minimal safe partitioning of an application for the deployment onto an SK by exploiting simple examples excerpted from the motivating example of Section 3.3. The model code (Section 4.1) is presented and discussed inline within the main text. The declarative implementation of our methodology (Section 4.2 and Section 5) is instead presented through code listings featuring line numbers to facilitate a more thorough discussion.

We recall that a Prolog program is a finite set of clauses of the form a :- b1, …, bn stating that a holds when b1 ∧ … ∧ bn holds, where n ≥ 0 and a, b1, …, bn are atomic literals. Clauses with an empty condition are also called facts. Prolog variables begin with upper-case letters, lists are denoted by square brackets and negation is denoted by \+. We write pname/n to indicate a predicate with name pname and arity n. Prolog programs can be queried, and the Prolog interpreter tries to answer each query by applying SLD resolution and by returning a computed answer substitution instantiating the variables in the query.

4.1. Declarative Modelling Applications and Labelling

Application developers model their applications through suitable Prolog facts and clauses, as

| application(AppId,Hardware,Software). |

where AppId is the application identifier, Hardware is the list of hardware components interacting with the application and Software is the list of software components to place on the SK.

- Example.

- The iotApp1 application of our example is declared by the fact

| application(iotApp1,[network,disk],[userConfig,appManager, |

| authenticator,aiLearning,apiGateway,db]). |

Software and hardware components are declared as in

| software(SwId,Data,Characts,Cost,(LinkedHW,LinkedSW)). |

| hardware(HwId,Data,Characts,(LinkedHW,LinkedSW)). |

where SwId and HwId are the unique identifiers of each component, Data is the list of names of the data managed by the component, Characts is the list of names of the component characteristics, LinkedHW is the list of linked hardware components and LinkedSW is the list of linked software components. The only difference between the two declarations is Cost, an integer value representing the migration cost of the software component.

- Example.

- The db and disk components are declared as

| software(db,[iotMeasurements,userPreferences,cryptedData], |

| [dbms],50,([disk],[userConfig,appManager])). |

| hardware(disk,[cryptedData],[fromProvider],([],[db])). |

Application developers must also declare a security lattice formed by ordered labels and they have to label the relevant data of the application and the relevant characteristics of the components. The higher the label of the data, the higher the secrecy of the data. Similarly, the higher the label of a characteristic, the higher the trust in the characteristic. We call the labels assigned to the data secrecy labels and the labels assigned to the characteristics trust labels.

Every data type and characteristic can be labelled using

| tag(Name, Label). |

where Name is the name of the data or characteristic to be labelled and Label is the assigned label. Obviously, the labels must be part of the lattice. We call labelling the set of all pairs ⟨Name,Label⟩ where all data and characteristics have been assigned a security label.

- Example.

- The labels of the data and of the characteristic of the Disk are declared as

| tag(cryptedData, low). |

| tag(fromProvider, low). |

The probability of changing the label for a data type or characteristic is declared as

| tagChange(DC,Label,P). |

representing that the data or characteristic DC changes to Label with probability P. To have the full distribution for DC, we need one fact for every label of the security lattice, and the probabilities of those facts must sum to 1.

- Example.

- The probabilities of changing the label of the data and the characteristics of the Disk are declared as

| tagChange(cryptedData, top, 0.0). |

| tagChange(cryptedData, medium, 0.0). |

| tagChange(cryptedData, low, 1.0). |

| tagChange(fromProvider, top, 0.1). |

| tagChange(fromProvider, medium, 0.3). |

| tagChange(fromProvider, low, 0.6). |
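The requirement that each distribution covers every label and sums to 1 can be checked mechanically. The following is a minimal sketch and not part of SKnife: the predicates label/1 and validDistribution/1 are hypothetical names, and the three-label lattice (low, medium, top) is assumed from the running example.

```prolog
:- use_module(library(aggregate)).

% Assumed three-label security lattice of the running example.
label(low).
label(medium).
label(top).

% tagChange/3 facts for the Disk, copied from the example above.
tagChange(cryptedData, top, 0.0).
tagChange(cryptedData, medium, 0.0).
tagChange(cryptedData, low, 1.0).
tagChange(fromProvider, top, 0.1).
tagChange(fromProvider, medium, 0.3).
tagChange(fromProvider, low, 0.6).

% validDistribution(+DC): DC has a tagChange/3 fact for every label of
% the lattice and its probabilities sum to 1 (up to rounding errors).
validDistribution(DC) :-
    forall(label(L), tagChange(DC, L, _)),
    aggregate_all(sum(P), tagChange(DC, _, P), Sum),
    abs(Sum - 1.0) < 1.0e-9.
```

Under these assumptions, the queries validDistribution(cryptedData) and validDistribution(fromProvider) both succeed, while a name with a missing label fact fails the check.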

4.2. Safe Partitioning

Our methodology assigns to every component a pair of labels, one indicating its secrecy level and one indicating its trust level. A component is trusted if its trust label is greater than or equal to its secrecy label; otherwise, it is considered untrusted. All the comparisons between labels are based on the ordering of the security lattice. A trusted component is able to manage its data without the risk of leaking them.

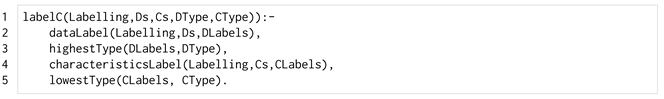

The label assignment to a component is performed by the predicate labelC/4 of Listing 1, using the lists of data and characteristics of the component and the labelling of the application.

| Listing 1. The labelC/4 predicate. |


The secrecy label is determined by the highest label of its data in order to consider the most critical data managed by the component. The trust label is determined by the lowest label of its characteristics because the worst characteristic could compromise the trust of the component, e.g., a component using a simple logging library and a certified encryption software could be endangered by a bug in the former.

A component without relevant characteristics is considered reliable and its trust label is the highest of the security lattice. We choose this level of granularity (i.e., the developer labels the data and characteristics instead of directly labelling the components) to have a better insight into the application and to have a more accurate understanding of the situations in which the application is non-safely partitionable.
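The labelling rule described above (highest data label as secrecy, lowest characteristic label as trust) can be sketched as follows. This is an illustrative reconstruction and not the authors' Listing 1: the predicate componentLabels/4, the rank/2 encoding of the lattice and the representation of the labelling as a list of (Name,Label) pairs are assumptions. Treating a component without labelled data as low is also an assumption of this sketch.

```prolog
:- use_module(library(lists)).
:- use_module(library(apply)).

% Assumed total order low < medium < top, encoded as ranks.
rank(low, 0).
rank(medium, 1).
rank(top, 2).

% componentLabels(+Data, +Characts, +Labelling, -(Secrecy,Trust))
componentLabels(Data, Characts, Labelling, (Secrecy, Trust)) :-
    labelsOf(Data, Labelling, DataLabels),
    labelsOf(Characts, Labelling, CharLabels),
    highestLabel(DataLabels, Secrecy),
    lowestLabel(CharLabels, Trust).

% Collect the labels of the names that appear in the labelling.
labelsOf(Names, Labelling, Labels) :-
    findall(L, (member(N, Names), member((N, L), Labelling)), Labels).

% No labelled data: lowest secrecy (assumption of this sketch).
highestLabel([], low).
highestLabel([L|Ls], Max) :- foldl(maxLabel, Ls, L, Max).

% No relevant characteristics: highest trust, as stated in the text.
lowestLabel([], top).
lowestLabel([L|Ls], Min) :- foldl(minLabel, Ls, L, Min).

maxLabel(A, B, M) :- rank(A, RA), rank(B, RB), ( RA >= RB -> M = A ; M = B ).
minLabel(A, B, M) :- rank(A, RA), rank(B, RB), ( RA =< RB -> M = A ; M = B ).
```

For the Disk of the example, componentLabels([cryptedData], [fromProvider], [(cryptedData,low),(fromProvider,low)], Labels) binds Labels to (low,low), so the component is trusted because its trust is not lower than its secrecy.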

Untrusted components can leak their data to directly linked components. If such components have a trust label lower than the secrecy label of the leaked data, they can propagate the leakage through their links. If such data reach a hardware component, then an external leak occurs. An external leak is a path from an untrusted software component to a hardware component where all the components of the path have a trust label lower than the secrecy label of the first software component of the path. The presence of such paths indicates the potential for data leakage from an untrusted component toward the outside of the SK that is not avoidable by the partitioning.

Recall that an application is called safely partitionable when there is no leakage outside the SK, i.e., all its hardware components are trusted and all its untrusted software components do not incur any external leaks.
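The definition of external leak can be read as a path search over the component links. The sketch below is a simplified illustration and not the SKnife code: it works on hypothetical, already-labelled facts sw/3, hw/3 and link/2, with labels taken from the running example as far as the text states them, and it treats links as bidirectional, which is an assumption.

```prolog
:- use_module(library(lists)).
:- dynamic link/2.

% Assumed total order low < medium < top.
rank(low, 0).
rank(medium, 1).
rank(top, 2).

% Hypothetical labelled model: sw(Id, Secrecy, Trust), hw(Id, Secrecy, Trust).
sw(aiLearning, top, low).
sw(userConfig, medium, medium).
sw(db, top, top).
hw(disk, low, low).
link(aiLearning, userConfig).
link(userConfig, db).
link(db, disk).

lower(L1, L2) :- rank(L1, R1), rank(L2, R2), R1 < R2.

connected(A, B) :- link(A, B).
connected(A, B) :- link(B, A).

% externalLeak(+Sw, -Path): Sw is untrusted and Path reaches a hardware
% component through components whose trust is lower than the secrecy of Sw.
externalLeak(Sw, Path) :-
    sw(Sw, Secrecy, Trust),
    lower(Trust, Secrecy),
    leakPath(Sw, Secrecy, [Sw], RevPath),
    reverse(RevPath, Path).

% The path ends at a hardware component that cannot be trusted with the data.
leakPath(Current, Secrecy, Visited, [Hw|Visited]) :-
    connected(Current, Hw),
    hw(Hw, _, HwTrust),
    lower(HwTrust, Secrecy).
% Otherwise the leak propagates through an unvisited, insufficiently trusted software.
leakPath(Current, Secrecy, Visited, Path) :-
    connected(Current, Next),
    sw(Next, _, NextTrust),
    lower(NextTrust, Secrecy),
    \+ memberchk(Next, Visited),
    leakPath(Next, Secrecy, [Next|Visited], Path).
```

With the facts above, externalLeak(aiLearning, Path) fails because db has trust top and stops the propagation. After adding link(userConfig, disk), the same query yields the path [aiLearning, userConfig, disk].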

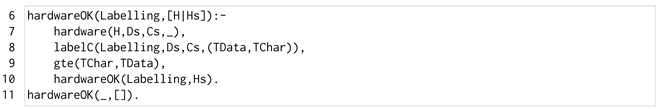

The predicate hardwareOk/2 of Listing 2 checks that all the hardware components of the application are trusted, which avoids hardware attacks that cannot be countered by the SK partitioning.

| Listing 2. The hardwareOk/2 predicate. |


The predicate recursively scans the list of hardware components to check their labelling. Initially, information about a single component is retrieved (line 7), and then the labelling of the hardware component is determined (line 8). The predicate checks for the component trustability (line 9), where gte/2 checks if the trust is greater than or equal to the secrecy. Finally, hardwareOk/2 recurs on the rest of the list (line 10) until it is empty (line 11).
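The recursive scan just described can be sketched as follows. This is a hedged illustration and not the authors' Listing 2: the predicate hwTrusted/2 and the helpers secrecyRank/3 and trustRank/3 are hypothetical, labels are compared through an assumed numeric rank, and the labelling is assumed to be a list of (Name,Label) pairs.

```prolog
:- use_module(library(lists)).

% Assumed total order low < medium < top.
rank(low, 0).
rank(medium, 1).
rank(top, 2).

% Hardware fact in the format of Section 4.1 (the Disk of the example).
hardware(disk, [cryptedData], [fromProvider], ([], [db])).

% Highest rank among the labelled data (0, i.e., low, if none is labelled).
secrecyRank(Data, Labelling, Rank) :-
    findall(R, (member(N, Data), memberchk((N, L), Labelling), rank(L, R)), Rs),
    max_list([0|Rs], Rank).

% Lowest rank among the labelled characteristics (2, i.e., top, if none is labelled).
trustRank(Characts, Labelling, Rank) :-
    findall(R, (member(N, Characts), memberchk((N, L), Labelling), rank(L, R)), Rs),
    min_list([2|Rs], Rank).

% hwTrusted(+HwIds, +Labelling): every listed hardware component is trusted.
hwTrusted([], _).
hwTrusted([Hw|Hws], Labelling) :-
    hardware(Hw, Data, Characts, _),         % retrieve the component
    secrecyRank(Data, Labelling, Secrecy),   % determine its labels
    trustRank(Characts, Labelling, Trust),
    Trust >= Secrecy,                        % trust at least as high as secrecy
    hwTrusted(Hws, Labelling).               % recur on the rest of the list
```

With the starting labels of the Disk, hwTrusted([disk], [(cryptedData,low),(fromProvider,low)]) succeeds. Raising cryptedData to medium while fromProvider stays low makes the check fail.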

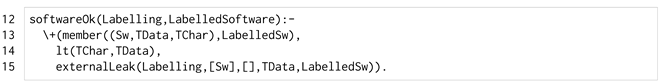

Similarly, the predicate softwareOk/2 of Listing 3 checks that no software component (line 13) that is untrusted (line 14) has an external leak toward an untrusted hardware component (line 15).

| Listing 3. The softwareOk/2 predicate. |


5. Determining the Minimal Safe Partitioning

In this section, we first show how SKnife determines a minimal safe partitioning of an application onto an SK. We then also present a technique to support the application operator when the application is non-safely partitionable.

5.1. Minimal Number of Domains

As mentioned above, we initially consider the number of domains in a partitioning P as its cost, as shown in Equation (1), where $|P|$ indicates the cardinality of the partitioning:

$$\mathit{cost}(P) = |P| \qquad (1)$$

Solving the partitioning problem with such a cost corresponds to determining a safe partitioning with the minimum number of domains. Given an application a, a labelling and a security lattice L,

Such a partitioning is unique (see proof in Section 5.2) and its cost depends on the number of labels of the security lattices. Note that, the minimum number of domains needed to safely partition an application is equal to the number of label configurations to satisfy the data-consistency and reliability properties. By counting all possible configurations, an upper bound to the minimum number of domains is . We will prove later how, by construction, SKnife determines the minimal safe partitioning.

5.2. Declarative Strategy for the Minimal Safe Partitioning

We use the notation to indicate that a partitioning P satisfies a labelling when the partitioning is safe with .

Safe partitionings split safely partitionable applications into a set of data-consistent and reliable domains.

The software components of a safely partitionable application can be split and placed on SK domains. A domain is a triple (DTData, DTChar, HostedSw) where DTData is the secrecy label of the domain, DTChar is the trust label of the domain and HostedSw is the list of the software components hosted by the domain. Inside a domain, the software components share the same environment. To avoid placing components in an environment containing data that they are not able to manage, a domain must be data-consistent, as defined in Equation (4)

meaning that in a domain there is no software component with a secrecy label different from the domain secrecy label, i.e., all the software components hosted by a domain have the same secrecy label as the domain. This property avoids placing a software component in a domain that contains data more sensitive than those the component is supposed to deal with.

Another aspect to consider is that untrusted components introduce the risk of leaking sensitive data to other components of the domain or to linked components outside the domain. In order to isolate such components, the domains must be reliable, as defined in Equation (5)

meaning that all the software components of a domain are either trusted or have the same trust label as the domain.

Domains hosting only trustable software components are considered secure from data leaks. Every component can manage its data and can exchange it outside the domain without the risk of leaks, according to the trust assigned by the developer. Untrusted components must be strongly isolated, and they can share a domain only with other untrusted components having the same trust label, in order to have a homogeneous level of trust inside the domain and mitigate the danger of a data leak.

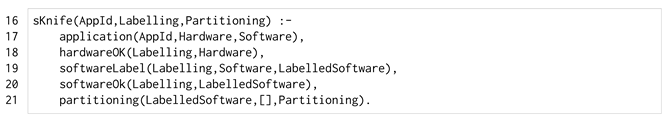

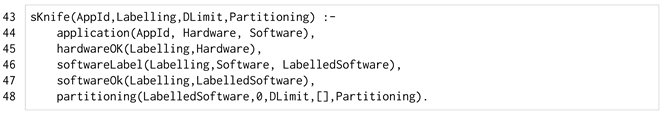

The top-level sKnife/3 predicate (Listing 4) finds the safe partitioning of a safely partitionable application. After retrieving the application information (line 17), it performs two main steps. First, it checks whether the application is safely partitionable (lines 18–20). Then, it creates the set of data-consistent and reliable domains, splitting the software components across them (line 21), starting from an empty partitioning ([] of line 21).

| Listing 4. The sKnife/3 predicate. |


The partitioning/3 predicate (Listing 5) splits the labelled software components, placing them in data-consistent and reliable domains. The predicate recursively scans the list of labelled software components (LabelledSoftware) to place every component, starting from a partitioning (Partitioning) that is updated into the resulting partitioning (NewPartitioning). The domains of the resulting partitioning are data-consistent and reliable by construction. Every software component is placed in a domain with the same secrecy label to satisfy the data consistency of the domain.

| Listing 5. The partitioning/3 predicate. |


Trusted components are placed together in domains with the trust label named safe, indicating that all the hosted components are trusted. Untrusted components are placed in the domain with the same trust label, in order to create reliable domains. If the domain needed by a component is not in the starting partitioning, it is created with the correct labels and added to the partitioning.

partitioning/3 has two main clauses (lines 22 and 27) and the empty software list case that leaves the partitioning unmodified (line 32). The first case describes the situation in which a software element can be placed on a domain already created. After determining the labelling of the hosting domain (line 23), the library predicate select/3 checks if such a domain is already created in the partitioning (line 24) and extracts it. Then, an updated domain is created by adding the current software component (line 25). Finally, partitioning/3 recurs on the rest of the software list, giving as the starting partitioning the old partitioning with the updated domain ([DNew|TmpPartitioning] of line 26).

The second clause of the predicate (line 27) describes the situation in which the domain that has to host the software component is not already in the input partitioning. The initial step to determine the hosting-domain labelling is the same as the previous clause (line 28). Then, there is an explicit check that such a domain is not already in the partitioning (line 29). At this point, the new domain is created (line 30) and it is included in the partitioning during the recursive call ([DNew | Partitioning] of line 31).
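The two clauses and the base case described above can be sketched as follows. This is a reconstruction from the prose and not the authors' Listing 5: the predicate names splitSw/3 and domainLabels/3, the (SwId,Secrecy,Trust) representation of labelled software and the rank/2 encoding are assumptions. Domains are written as ((DTData,DTChar),HostedSw), following the output format shown for SKnife.

```prolog
:- use_module(library(lists)).

% Assumed total order low < medium < top.
rank(low, 0).
rank(medium, 1).
rank(top, 2).

% domainLabels(+Secrecy, +Trust, -Labels): trusted components go to a
% 'safe' domain, untrusted ones to a domain with their own trust label.
domainLabels(S, T, (S, safe)) :- rank(S, RS), rank(T, RT), RT >= RS.
domainLabels(S, T, (S, T))    :- rank(S, RS), rank(T, RT), RT < RS.

% splitSw(+LabelledSoftware, +Partitioning, -NewPartitioning)
% Base case: no software left, the partitioning is unchanged.
splitSw([], P, P).
% First case: the hosting domain already exists and is extended.
splitSw([(Sw, S, T)|Rest], P, NewP) :-
    domainLabels(S, T, Labels),
    select((Labels, Hosted), P, TmpP),
    splitSw(Rest, [(Labels, [Sw|Hosted])|TmpP], NewP).
% Second case: the hosting domain does not exist yet and is created.
splitSw([(Sw, S, T)|Rest], P, NewP) :-
    domainLabels(S, T, Labels),
    \+ member((Labels, _), P),
    splitSw(Rest, [(Labels, [Sw])|P], NewP).
```

As a usage example, calling splitSw/3 on [(userConfig,medium,medium), (appManager,top,top), (authenticator,top,top), (aiLearning,top,low), (apiGateway,low,low), (db,top,top)] with an empty starting partitioning produces four domains: (top,safe), (medium,safe), (low,safe) and (top,low). The trust label low for apiGateway is a hypothetical value chosen for illustration.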

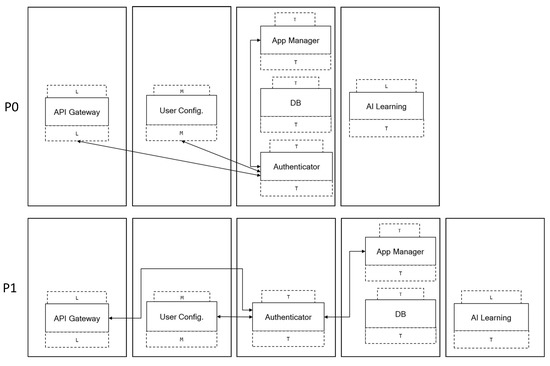

Figure 2 sketches the steps followed by our methodology to determine the minimal partitioning.

Figure 2.

Overview of SKnife methodology.

As a safe partitioning example, the output of SKnife is represented by the four domains

- ((top,safe),[appManager,db,authenticator]),

- ((medium,safe),[userConfig]),

- ((low,safe),[apiGateway]),

- ((top,low),[aiLearning]).

where each domain is represented by its triple (DTData, DTChar, HostedSw), in which DTData and DTChar form the pair of labels of the domain (DTChar is safe for domains hosting only trusted components) and HostedSw is a list of software components.

In the following, we outline a proof to demonstrate that SKnife computes the minimal safe partitioning of the input application, if any.

Proof (of Partitioning Minimality).

As aforementioned, SKnife’s main predicate fails if the application is non-safely partitionable. This is checked by hardwareOk/2 of Listing 2 and softwareOk/2 of Listing 3. The minimum number of domains is not fixed for all possible applications, because it depends on the number of security types of the lattice and on the labelling of the application to be placed.

To prove that the result of SKnife is the minimal partitioning, it is enough to prove that the predicate partitioning/3 of Listing 5 creates the minimal partitioning. We sketch the proof of minimality by defining the invariant of the partitioning/3 predicate, which maintains the safety of the partitioning. Then, we show by induction that the invariant is preserved and that the resulting partitioning is minimal and unique.

A partitioning is a set of domains. We denote a domain as a triple where d is the secrecy label given by data, c is the trust label given by characteristics and is the list of software components hosted by the domain. The predicate partitioning/3 implements a function

where S is the software components set and P is the partitioning set.

We indicate with the function that gives the labelling of a software component and the function that gives the labelling of the domain needed by a software component. The function has the following invariant:

Equations (6) and (7) indicate the labelling of software components and domains to satisfy the data-consistent and reliable-domain properties. Equation (8) indicates that no domains with the same labelling are admitted. Equation (9) indicates that empty domains do not exist.

We can prove by induction that the function creates the minimal partitioning.

Base Case

An empty list of software components does not modify the partitioning.

Induction Step

We assume, by the inductive hypothesis, that the invariant holds up to the i-th step, i.e., for the domains of components [$s_1$, …, $s_i$]. It is easy to see that at step $i+1$ the current $s_{i+1}$ is suitably placed into an existing domain (first clause of partitioning/3) or inserted into a new one (second clause of partitioning/3) by following the rule above. Assume that after this step, the invariant does not hold, i.e., that the resulting partitioning is non-minimal. Then, as $s_{i+1}$ was correctly placed, it means that some of the software in [$s_1$, …, $s_i$] were not. This implies that the invariant did not hold in some of the previous steps, which contradicts the inductive hypothesis.

Note that the minimal partitioning is unique. At any step i, the software must go in a domain having d and c equal to those of . Given that we do not admit domains with the same labelling (Equation (8)), there is only one domain having the labels required by . Thus, there is a unique way to determine the partitioning. □

5.3. Labels Suggestions

Not all existing applications are safely partitionable, which precludes the possibility of finding a safe partitioning. To assist application developers in these situations, we show how SKnife can suggest relaxed labellings of application data or characteristics that make the application safely partitionable. These suggestions reduce the secrecy or increase the trustability of components, relaxing the labelling of an application in order to find a safe partitioning. This feature is intended to help the review of an application, reducing the risk of compromising data confidentiality.

The basic version of SKnife either finds the minimal partitioning or fails if there is a risk of a data leak given by untrusted components. To support the suggestions feature, we suitably modified SKnife to identify the source of a failure and to retry the partitioning after relaxing the labelling of such a source.

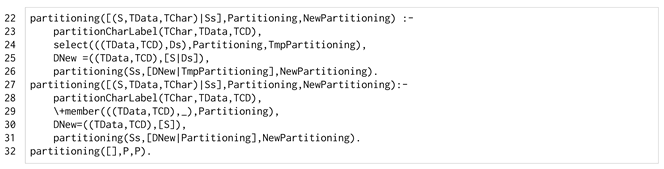

Listing 6 lists the main code of the labelling-suggestion feature. The main predicate of the refinement prototype is sKnife/3 (line 33). It has as the first variable the application identifier AppId, as in the base version. The second variable is the list of relaxed labelling NewTags, pairs of data/characteristic names with new labels. The third variable Partitioning is the safe partitioning found with the relaxed labelling. Note that this predicate does not compute a unique solution. Indeed, for every query, a different relaxed labelling with the associated safe partitioning is computed. This allows SKnife to give the developer different suggestions.

| Listing 6. The sKnife and eligiblePartitioning predicates. |


The eligiblePartitioning/4 predicate (line 36) initialises the relaxed labelling as an empty list []. The eligiblePartitioning/5 predicate (lines 37–42) has two clauses. The first one determines an eligible partitioning, indicated by the ok fact (line 38). The second one acknowledges that the application is non-safely partitionable with the current labelling, indicated by the ko(C, DT, CT) fact (line 40). This fact carries information about the untrusted component C involved in the data leak and its pair of labels DT and CT. That information is used to relax the labelling through the tagsOK/3 predicate (line 41), which generates alternative new labels (TmpTags) to be added to the previous ones (Tags). These labels are generated by increasing the characteristic labels of the component C and decreasing its data labels. Then, the new labels are used to determine an eligible placement, recursively querying eligiblePartitioning/5 (line 42). Note that an eligible partitioning is eventually determined. In the worst-case scenario, the data labels are relaxed to the lowest security label (i.e., low) and the characteristic labels are relaxed to the highest security label (i.e., top). For the sake of presentation, the full code is omitted.

The predicates that check if an application is safely partitionable are hardwareOk/2 and softwareOk/2. These predicates are modified in order to return the fact ko(C, DT, CT) for every component responsible for a failure when the application is non-safely partitionable.
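The direction of the relaxation (lower labels for data, higher labels for characteristics) can be illustrated with a small sketch. It does not reproduce tagsOK/3, whose code is omitted in the text. The predicates relaxData/2 and relaxCharact/2 and the rank/2 encoding are hypothetical.

```prolog
% Assumed total order low < medium < top.
rank(low, 0).
rank(medium, 1).
rank(top, 2).

% relaxData(+Current, -Relaxed): any strictly lower secrecy label.
relaxData(Current, Relaxed) :-
    rank(Current, RC),
    rank(Relaxed, RR),
    RR < RC.

% relaxCharact(+Current, -Relaxed): any strictly higher trust label.
relaxCharact(Current, Relaxed) :-
    rank(Current, RC),
    rank(Relaxed, RR),
    RR > RC.
```

For example, relaxData(top, L) enumerates low and medium on backtracking, whereas relaxCharact(low, L) enumerates medium and top. Data already labelled low and characteristics already labelled top admit no further relaxation.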

5.4. Motivating Example Revisited

In this section, we will solve the partitioning problem of the architecture iotApp1 of the motivating example of Section 3.3 given a suitable set of labels for every data type and characteristic. Then, we will consider the slightly different iotApp2 architecture, showing that it is non-safely partitionable, and we will apply the relaxed labelling feature of SKnife. In both cases, the application architecture, the (software and hardware) components and the security lattice can be expressed as per the modelling of Section 4. The data and characteristics are labelled as indicated by the letters between brackets in Figure 1 using the tag/2 predicate.

5.4.1. Finding the Minimal Partitioning

To find the minimal partitioning of iotApp1 we can simply query the sKnife/3 predicate after retrieving the starting labelling, as in

| startingLabelling(StartingLabelling), |

| sKnife(iotApp1,StartingLabelling,Partitioning) |

Initially, SKnife labels all the application components as depicted in Figure 3, where a pair of labels is assigned for each component, one for data and one for characteristics. For instance, App Manager is labelled top for its data (the T above the component) and top for its characteristics (the T below the component). Then, SKnife checks if the application is safely partitionable.

Figure 3.

Labelling of application components.

The application is safely partitionable because the hardware components manage only low data and no path able to leak top or medium data exists from AI Learning (the only untrusted component) to the hardware components.

Figure 4 sketches the obtained partitioning. The safe partitioning is composed of four domains, three with trusted components (D1–D3) and one with an untrusted component (D4). It is a minimal partitioning because we have at least three software components with different secrecy labels and only one untrusted component and it is not possible to divide those components into fewer than four domains that are data-consistent and reliable.

Figure 4.

Minimal safe partitioning.

As mentioned above, SKnife outputs a single solution because the minimal safe partitioning is unique, i.e., there is no other partitioning of the application with a lower or equal number of data-consistent and reliable domains.

5.4.2. Relaxing the Labelling

To show the relaxing labelling feature we consider the architecture iotApp2 with an additional link between the User Configuration and the Disk. This link creates a path from the AI Learning to the Disk that can leak the top data IoT measurements and medium data User Preferences, making the application non-safely partitionable. This happens because AI Learning is an untrusted component and can leak its data via its explicit links. The linked component User Configuration has the trust label medium and it is not reliable enough to manage top data; thus, IoT measurements can be leaked to the Disk through the newly added link. In this situation, it is not possible to find a safe partitioning.

To use the relaxing labelling feature on the application iotApp2 we can query the sKnife/3 predicate as sKnife(iotApp2, S, Partitioning). As expected, the check performed by softwareOk/2 finds a path with an external leak and identifies all the components involved in the path, triggering the retry behaviour explained in Section 5.3.

For clarity, we only show the results of the query for the suggestion variable S, avoiding displaying the safe partitioning generated by applying the suggestions. The obtained results are

| S=[(iotMeasurements,low),(userPreferences,low)]; |

| S=(aiFramework,top); |

| S=(dataLibrary,top); |

| S=(iotMeasurements,medium); |

| S=(fromProvider,top); |

| S=(iotMeasurements,low). |

For this specific situation, we can see that a solution is to reduce the security of IoT measurements and User Preferences managed by AI Learning, cutting the path toward the Disk. When AI Learning is analysed, the suggestion is to label the data low. When the second component of the path, User Configuration, is analysed, the suggestion is to reduce IoT measurements to medium. Finally, when the last component of the path—Disk—is analysed, the suggestion is to reduce IoT measurements to low. The alternatives increase the trust of each component of the path to cut the possible leak, increasing the characteristics AI Framework, Data Library and From Provider to top.

These suggestions can support application developers in changing the data labelling if it is acceptable to change the secrecy of IoT measurements by reducing it. Otherwise, the suggestions could lead to changing the characteristics involved in the leak, for instance, using a more reliable Data Library for the component User Configuration.

6. Partitioning with a Look Ahead on Migration Costs

We mentioned before that reducing the number of domains corresponds to reducing the used resources and avoids degrading the performance. In this section, we want to consider another type of cost aside from the number of domains.

After the deployment of an application, changing the isolation of the components during the execution could bring unexpected costs, for example, the downtime of components that must be stopped and redeployed or the work time to change the explicit channels from intra-domain to extra-domain. Unfortunately, there are situations in which the labels of data or characteristics must be changed due to specific circumstances. Data labels can change due to new privacy regulations that can classify or declassify categories of data. Regarding the characteristics, an exploitable vulnerability of a library can be discovered that drastically reduces its trust. Then, the deployed partitioning may no longer be safe with the changed labelling and the data confidentiality protected by the isolation is compromised. In those situations, the software must be migrated to different domains to preserve data confidentiality, at the cost of such a migration.

For example, consider the motivating example deployed as per the safe partitioning calculated in Section 5.4. Assume that a bug is discovered in the TLS Library, a characteristic of the component Authenticator. This event can reduce the library security label from top to medium, making this software component untrusted and the partitioning not safe anymore. To avoid leaks of data, the application operator should calculate the new safe partitioning, stop the application (or at least the components that change domain), work on the communication code of the components that change domain and re-deploy the application. In Figure 5 we show the partitioning change from the starting partitioning to the final partitioning , indicating the communication links of the Authenticator component. The downtime of the application and the work on the communication code are a cost for the application developer that can be predicted and optimised with the partitioning.

Figure 5.

Partitioning change due to Authenticator labelling change.

What if the application was deployed with the partitioning from the start? This partitioning is not minimal with the initial labelling, but it is safe. The Authenticator component is isolated in a domain and from the point of view of a safe partitioning its labelling does not matter. What really changes from partitioning to are

- the number of domains, four to five,

- the link connecting the Authenticator and the App Manager components, which is intra-domain in and extra-domain in , and

- the cost of changing the partitioning when the TLS Library changes its label from top to another one; this cost is 50 in (given by summing the costs of Authenticator and App Manager) and 0 with .

Indeed, the partitioning is safe for both the starting labelling and for a labelling with the component Authenticator untrusted. Thus, with as the starting partitioning, the label change does not require stopping the application for changing the deployment and the cost of working on the communication links of Authenticator and the App Manager is 0.

This analysis is the basis of our refined cost model, which captures the future cost of migrating software components from one partitioning to another.

6.1. A Refined Probabilistic Cost Model

We start by illustrating the cost of migrating from one partitioning to another and then we define the probabilistic model that describes the labelling change.

6.1.1. Migration Cost

Two partitionings and differ in the distribution of software components in their domains and the status of their links, from intra-domain to extra-domain and vice versa. The cost of migrating from to is defined in Equation (10):

where we represent the link l as a pair of connected software and its status in the partitioning P as . The notation is adopted to express the migration cost c of the single software s.
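One possible reading of the migration cost can be sketched as follows, consistent with the Authenticator example, where the cost of 50 is obtained by summing the costs of the two software components attached to the link that changes status. The sketch is not the authors' definition: counting each software at most once is an assumption, and the facts swCost/2 and swLink/2 are hypothetical. Only the sum of 50 for Authenticator and App Manager and the cost of db come from the text.

```prolog
:- use_module(library(lists)).
:- use_module(library(apply)).

% Hypothetical migration costs and links of a toy application.
swCost(authenticator, 20).
swCost(appManager, 30).
swCost(db, 50).
swLink(authenticator, appManager).
swLink(appManager, db).

% sameDomain(+Partitioning, +S1, +S2): both software share one domain.
sameDomain(P, S1, S2) :-
    member((_, Hosted), P),
    memberchk(S1, Hosted),
    memberchk(S2, Hosted).

% status(+Partitioning, +S1, +S2, -Status): intra- or extra-domain link.
status(P, S1, S2, intra) :- sameDomain(P, S1, S2), !.
status(_, _, _, extra).

% migrationCost(+P0, +P1, -Cost): sum of the costs of the software
% attached to links whose status differs between P0 and P1.
migrationCost(P0, P1, Cost) :-
    findall(S,
            ( swLink(A, B),
              status(P0, A, B, St0),
              status(P1, A, B, St1),
              St0 \== St1,
              member(S, [A, B]) ),
            Involved),
    sort(Involved, Unique),                 % count each software once
    foldl(addCost, Unique, 0, Cost).

addCost(S, Acc0, Acc) :- swCost(S, C), Acc is Acc0 + C.
```

Migrating from a single domain hosting appManager, db and authenticator to a partitioning where authenticator is isolated changes only the status of the link between authenticator and appManager, giving a cost of 50 under these hypothetical costs. Staying in the same partitioning costs 0.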

6.1.2. The Probabilistic Model

To estimate the probability of a migration from a partitioning to a partitioning we define a probabilistic model.

For every data type and characteristic , we introduce a discrete random variable

representing the event that the data or characteristic has the label l of the security lattice, forming the element of a labelling . Every variable has its probability mass function defined in Equation (11):

representing the probability that the data or characteristic has label . The following holds:

We now introduce a second random variable

representing the probability that the labelling is the labelling ; thus, every

and

has l specified and contained in the security lattice L.

Every variable has its probability mass function defined in Equation (12)

which represents that the probability of a specific labelling is given by the joint probabilities of its labelled data and characteristics.
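The probability of a whole labelling can be sketched as the product of the probabilities of its individual labels, which assumes that label changes are independent. The predicate labellingProbability/2 is a hypothetical name, and the labelling is assumed to be a list of (Name,Label) pairs. The tagChange/3 facts are those of the Disk example in Section 4.1.

```prolog
:- use_module(library(apply)).

% tagChange/3 facts of the Disk example.
tagChange(cryptedData, top, 0.0).
tagChange(cryptedData, medium, 0.0).
tagChange(cryptedData, low, 1.0).
tagChange(fromProvider, top, 0.1).
tagChange(fromProvider, medium, 0.3).
tagChange(fromProvider, low, 0.6).

% labellingProbability(+Labelling, -P): product of the probabilities
% of every (Name,Label) pair, assuming independent label changes.
labellingProbability(Labelling, P) :-
    foldl(timesTag, Labelling, 1.0, P).

timesTag((Name, Label), Acc0, Acc) :-
    tagChange(Name, Label, Pr),
    Acc is Acc0 * Pr.
```

For instance, the labelling [(cryptedData,low),(fromProvider,medium)] has probability 1.0 × 0.3, while any labelling in which cryptedData is not low has probability 0.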

Finally, we introduce the random variable

representing the event that the application starts with the labelling and evolves into the labelling with at most k labels changed (considering that ) and the number of labels changed is given by .
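The number of changed labels between two labellings, and the bound k on it, can be computed as in the following sketch. The predicates changedLabels/3 and withinK/3 are hypothetical names. Labellings are assumed to be lists of (Name,Label) pairs over the same names, and the atom tlsLibrary is an illustrative identifier for the TLS Library characteristic.

```prolog
:- use_module(library(lists)).
:- use_module(library(aggregate)).

% changedLabels(+Start, +Final, -N): number of names whose label in
% Final differs from the one in Start.
changedLabels(Start, Final, N) :-
    aggregate_all(count,
                  ( member((Name, L0), Start),
                    memberchk((Name, L1), Final),
                    L0 \== L1 ),
                  N).

% withinK(+Start, +Final, +K): at most K labels changed.
withinK(Start, Final, K) :-
    changedLabels(Start, Final, N),
    N =< K.
```

For example, moving from [(tlsLibrary,top),(fromProvider,low)] to [(tlsLibrary,medium),(fromProvider,low)] changes one label, so the final labelling is admitted for k = 1.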

Having the limit k on the number of possible changes admitted during the evolution of a labelling is not unrealistic. Considering the components’ characteristics, a label change can represent a bug found in a library, and waiting for a bug in another library before changing the deployment is implausible. If we consider the data, it can happen that a group of data is classified (or declassified) by new privacy laws or by changes in the agreement with clients. However, it is unlikely that all the data change labels, and it is even less likely that this happens simultaneously with a bug discovery. For those reasons, having the parameter k decided from the start is reasonable and the probabilistic model takes into account every k from 1 (one label change admitted) to the number of data types and characteristics (every label can change).

The probability mass function for every variable is defined in Equation (13).

In this setting the initial labelling is given; thus, for every labelling the first term has the probability

To define the probability of the final labelling from the starting one and given k

we, first of all, discriminate the case of remaining in the starting labelling from all the others

This represents the probability that a labelling does not change and it is independent of the value of k.

For all the other labellings different from the starting one we use the conditional probability formula

where

and thus, the event that the final labelling is the but not the starting one. A is the set of all the labellings with probability

to be the final labelling.

The event B is

thus, the event that we have a specific starting labelling and a fixed number of the maximum labels k different from to . B is the set of all the labellings that has at most k different labels from :

Those events have the probability

The intersection between the events A and B is

and its probability is

Putting everything together we have

To summarise, the probability mass function is defined in Equation (14).

This distribution represents the probability of transitioning from the starting labelling to another, assuming that at most k labels have changed. The probability of remaining in the same labelling is fixed for every k and the probability of reaching another labelling is guided by the probability of every label ( variables).

The sum of this distribution over all possible is 1 (note that ), as shown in Equation (15).
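As a rough illustration of how a distribution with these properties could be computed, the following Python sketch assumes that labels change independently, that the probability of remaining in the starting labelling is the product of the per-label probabilities of the starting labels, and that the remaining mass is shared among the labellings with between 1 and k changed labels in proportion to their own products. The function name, the data layout and this particular normalisation are assumptions made for illustration; they are not taken from ProbSKnife.

```python
from itertools import product
from math import prod


def labelling_distribution(start, label_probs, k):
    """Return {labelling: probability} for labellings within k changes of start.

    start       -- dict mapping each data type/characteristic to its label
    label_probs -- dict mapping each item to {label: probability}
    k           -- maximum number of labels allowed to change (k >= 1)
    """
    items = sorted(start)

    def weight(labels):
        # Product of the independent per-label probabilities.
        return prod(label_probs[i][lab] for i, lab in zip(items, labels))

    start_key = tuple(start[i] for i in items)
    p_stay = weight(start_key)  # does not depend on k

    # Labellings that differ from the starting one in 1..k positions.
    reachable = [
        combo
        for combo in product(*(sorted(label_probs[i]) for i in items))
        if 1 <= sum(a != b for a, b in zip(combo, start_key)) <= k
    ]
    total = sum(weight(combo) for combo in reachable)
    if total == 0:
        raise ValueError("no labelling is reachable with the given k")

    # (assumption) the mass not kept by the start is shared proportionally.
    dist = {start_key: p_stay}
    for combo in reachable:
        dist[combo] = (1 - p_stay) * weight(combo) / total
    return dist
```

Under these assumptions the probabilities sum to 1 for every k, and the entry of the starting labelling is the same whatever k is chosen.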

6.1.3. Partitioning Migration

When a labelling changes to , the starting partitioning can satisfy the new labelling or not. In the former case, there is no need to change the partitioning. In the latter case, the partitioning must be changed, migrating to the new partitioning . The best migration is defined in Equation (16):

stating that if the partitioning does not satisfy the new labelling , the software is rearranged in the new partitioning that satisfies and has the minimum cost of migration from .

We want to highlight two facts about the best partitioning. First, the partitioning with the minimum cost of migration is not necessarily unique; there could be multiple partitionings that satisfy the new labelling and are the best migration. Second, when the starting partitioning already satisfies the new labelling, the best migration is to stay in it, because the cost is always 0.
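The selection rule can be sketched in a few lines of Python. This is a minimal sketch, not the prototype's code: `best_migration`, `satisfies` and `cost` are hypothetical names, and the two callables stand in for the safety check against the new labelling and for the migration cost model.

```python
def best_migration(current, candidates, satisfies, cost):
    """Return (minimum cost, list of best targets) for a new labelling.

    current    -- the starting partitioning
    candidates -- iterable of partitionings to consider as targets
    satisfies  -- callable(partitioning) -> bool, checked against the NEW labelling
    cost       -- callable(source, target) -> non-negative migration cost
    """
    # Staying put is always the best choice when it is still safe: cost 0.
    if satisfies(current):
        return 0, [current]

    safe = [p for p in candidates if satisfies(p)]
    if not safe:
        # The application is not safely partitionable for this labelling.
        return None, []

    best = min(cost(current, p) for p in safe)
    # Several targets may share the minimum cost, so all of them are returned.
    return best, [p for p in safe if cost(current, p) == best]
```

Returning every target that reaches the minimum makes the non-uniqueness explicit, while callers interested only in the expected cost can ignore the list and use the first element.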

The probability of migrating from the partitioning to the partitioning given the starting labelling is defined in Equation (17),

which represents the sum of the probabilities of changing the labelling from to with as the best migration that satisfies .

Given that the best migration is not always unique, the sum of the migration probabilities over all the target partitionings is not necessarily 1.

Another important aspect to highlight is that the application could be non-safely partitionable for every possible labelling, making the migration impossible.

6.1.4. Future Cost

Fixing the maximum number of changes k and given a starting partitioning that satisfies the initial labelling , its cost is defined in Equation (18):

where the future cost is the expected cost of migrating the partitioning over all the possible labellings, taking for each labelling the best migration toward a partitioning that satisfies it. The fact that the best migration is not unique poses no problem for the expected cost: only the value of the cost is considered, and which partitioning achieves the best migration is not relevant.

We give some intuitions for the complexity of calculating the future cost of all the partitionings that satisfy the initial labelling. The search space of a single partitioning depends on

and the number of labellings l is exponential in the number of data and characteristics:

representing all the possible values that the labels of a labelling can assume.

The number of partitionings satisfying the initial labelling depends on the application architecture. We consider the worst-case scenario where all the possible partitionings satisfy the initial labelling. The number of partitionings p is exponential in the number of software components n decreased by the partitioning dimension i:

representing the sum of all the subsets of n with dimension i from (the lower bound of the number of domains needed) to n (every component is in a different domain).

Thus, the overall search space is the combination of all the partitionings that satisfy the initial labelling and the number of possible labellings. To reduce this search space and be able to calculate the future cost of the partitionings of an application, we use two configurable parameters:

- d: the maximum number of domains admitted and

- k: the maximum number of label changes admitted during the evolution of a labelling.

The first element of the pair is the number of domains of a partitioning. It does make sense to have an upper bound from the start to reduce the SK overhead. This upper bound is represented by d. The search space of the number of partitionings p becomes

which is also exponential in the number of software components, but the decrease in the possible subsets is considerable. However, having a low value of d reduces the possibility of satisfying some of the labelling changes, increasing the probability of having a non-safely partitionable application.
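To get a feeling for how much a domain limit shrinks the space, the following Python sketch counts the ways of splitting n components into non-empty, unordered domains using Stirling numbers of the second kind. This is a standard combinatorial count used here as an assumption about how to size the space; it ignores labels and safety constraints and is not necessarily the bound used in the formalisation above. The function names are hypothetical.

```python
from functools import lru_cache


@lru_cache(maxsize=None)
def stirling2(n, i):
    """Number of ways to split n distinct components into i non-empty domains."""
    if n == i:
        return 1
    if i == 0 or i > n:
        return 0
    # Component n either joins one of the i existing domains or opens a new one.
    return i * stirling2(n - 1, i) + stirling2(n - 1, i - 1)


def count_partitionings(n, d_min, d_max):
    """Count partitionings of n components using between d_min and d_max domains."""
    return sum(stirling2(n, i) for i in range(d_min, min(d_max, n) + 1))
```

For ten components, `count_partitionings(10, 1, 10)` gives 115975 candidate partitionings, while capping the number of domains at three with `count_partitionings(10, 1, 3)` leaves 9842.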

Concerning the limit k on the number of label changes, a low value clearly reduces exponentially the number of labellings reachable from the starting one, reducing the effort of calculating the future cost. We emphasise a property of our probabilistic model. After fixing k, the probability of reaching a specific labelling is guided by the distribution of each single label, given by its random variable, i.e., the most probable reachable labelling is the one with the most probable values for each label.
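The effect of k on the number of reachable labellings can be made concrete with a small Python sketch. It assumes that each of m data types and characteristics can take any of v labels, so that a labelling with exactly j changed labels is obtained by choosing j positions and one of the v - 1 other labels for each; `count_labellings` and its parameters are hypothetical names introduced for illustration.

```python
from math import comb


def count_labellings(m, v, k=None):
    """Count labellings of m items over v labels.

    With k=None every labelling is counted (v ** m). Otherwise only the
    labellings with at most k labels changed from a fixed starting one
    are counted, the starting labelling included.
    """
    if k is None:
        return v ** m
    # Choose which j items change, then one of the v - 1 other labels for each.
    return sum(comb(m, j) * (v - 1) ** j for j in range(min(k, m) + 1))
```

With hypothetical figures of six items and three labels, the unrestricted space has 729 labellings, while `count_labellings(6, 3, 1)` counts only 13.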

In Section 7 we assess how d and k impact the time to search safe partitionings and the probability of having a non-safely partitionable application.

6.1.5. Look-Ahead Safe Partitionings

Solving the partitioning problem with the second cost model consists in determining the list of future costs of all the possible partitionings that satisfy the starting labelling and have up to d domains, while limiting the number of label changes to k.

We propose the prototype ProbSKnife to find the list . Given an application a, a lattice L and the distribution for every random variable ,

where every element of is

where is the lower bound of the number of domains needed to partition the application.

6.2. Probabilistic SKnife

ProbSKnife is a declarative prototype that builds on SKnife to determine safe partitionings and contains new Prolog predicates to determine the migration costs and new labellings. Moreover, it includes a Python script to execute multiple Prolog queries, parse the outputs and aggregate the results.

The Python code simplifies the execution flow of ProbSKnife and is better suited than Prolog to calculating the probabilistic model and the future cost. The exponential size of the calculation makes a pure Prolog prototype infeasible, so we combine the two languages: the declarative modelling and the determination of partitionings, costs and evolving labellings are written in Prolog, while the execution flow and the expected cost processing are written in Python.

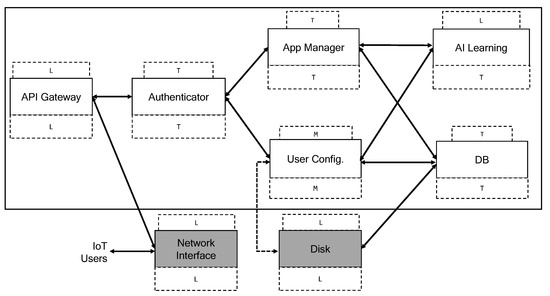

To find all the partitionings that satisfy a labelling with a limit on the number of domains, we use the refined predicate sKnife/4, listed in Listing 7.

| Listing 7. The sKnife/4 predicate. |

|

The new variable DLimit (d in the above formalisation) represents the maximum number of domains admitted for a partitioning. This variable is used to find a safe partitioning (line 48), together with the initial number of domains (0).

The partitioning/5 predicate is listed in Listing 8, with the main differences from the previous version of Listing 5 coloured in cyan. As before, the predicate has two main clauses that scan the list of labelled software components. The first one (lines 49–54) determines the domain labels needed by the software component (line 51) and inserts the software component in a domain already created in the current partitioning (Partitions) (lines 52–53), without increasing the number of domains of the new partitioning ([PNew|TmpPartitions]). The second clause (lines 55–62), after determining the domain labels needed by the software component (line 57), creates a new domain (P) hosting only the software component (line 59). Then, the number of domains of the partitioning is incremented (line 60) and checked to be less than or equal to the domain limit (line 61).

| Listing 8. The partitioning/5 predicate. |

|

Note that the two clauses are not mutually exclusive, due to the missing check in the second clause about the presence in the partitioning of the domain needed by the software component (commented line 58). This creates a backtracking point in the Prolog engine search, covering all the possible partitionings with different queries that retrieve all the partitionings of an application that satisfy a specific labelling and have a limited number of domains.
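The two-clause search can be mimicked in Python with a recursive generator. The sketch below is a simplification, not a translation of Listing 8: it assumes each component already comes with the single domain label it needs (the Prolog predicate derives it from the labelling), and the name `partitionings` and the tuple layout are hypothetical.

```python
def partitionings(components, dlimit):
    """Yield partitionings of labelled components using at most dlimit domains.

    components -- list of (component_name, required_domain_label) pairs
    dlimit     -- maximum number of domains admitted
    Each result is a list of (domain_label, [component_names]) pairs.
    """
    def place(idx, domains):
        if idx == len(components):
            yield [(label, list(members)) for label, members in domains]
            return
        name, label = components[idx]
        # First clause: join any existing domain with the required label.
        for j, (dom_label, members) in enumerate(domains):
            if dom_label == label:
                joined = domains[:j] + [(dom_label, members + [name])] + domains[j + 1:]
                yield from place(idx + 1, joined)
        # Second clause: open a new domain, even if a matching one exists,
        # as long as the domain limit is respected.
        if len(domains) + 1 <= dlimit:
            yield from place(idx + 1, domains + [(label, [name])])

    yield from place(0, [])
```

Because the second branch is tried regardless of the first, the generator plays the role of the backtracking point: iterating over it enumerates every partitioning within the limit rather than only the one with the fewest domains.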

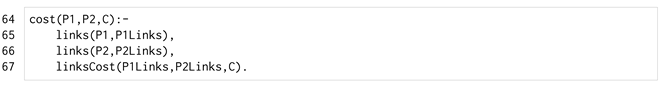

The cost/3 predicate is listed in Listing 9 and is used to calculate the cost C of migrating from the partitioning P1 to the partitioning P2. For each partitioning, the list of links is created to determine the status of every link (lines 65–66). The cost is then calculated by using the predicate linksCost/3 (line 67), which checks the links with different statuses and sums the migration cost of every software component.

| Listing 9. The cost/3 predicate. |

|

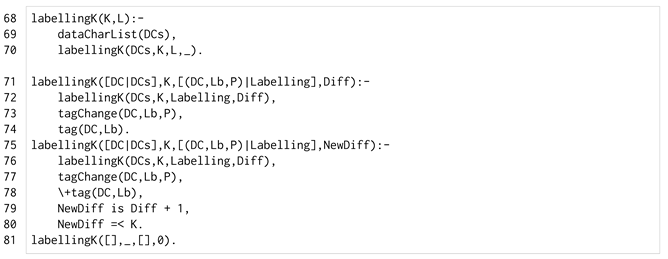

The last predicates we present are used to calculate the labellings with at most K differences from the starting one, listed in Listing 10.

| Listing 10. The labellingK/2 and labellingK/4 predicates. |

|

The top-level predicate is labellingK/2. By specifying the parameter K, the resulting labelling L is a list of elements (DC,Lb,P) representing the name of the data or characteristic (DC), its label (Lb) and the probability (P) of having that label. This predicate retrieves the list of all data and characteristics DCs (line 69) and then calls the sub-predicate with it (line 70). The predicate labellingK/4 recursively creates a labelling starting from an empty list and initialising the number of differences from the starting one at 0 (line 81). Then, it either includes a pair of data/characteristic and label of the starting labelling (first clause of line 71) or includes a new pair, incrementing the number of differences (second clause of line 75).
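The recursive scheme of labellingK/4 can be illustrated by an analogous Python generator. This is a sketch under assumptions: the function name `labellings_k`, the dictionary inputs and the ordering of results are hypothetical, and only the keep-or-change recursion with a difference counter mirrors the description above.

```python
def labellings_k(start, label_probs, k):
    """Yield labellings with at most k labels different from the starting one.

    start       -- dict mapping each data type/characteristic to its starting label
    label_probs -- dict mapping each item to {label: probability}
    Each labelling is a list of (item, label, probability) triples.
    """
    items = list(start)

    def build(idx, changes, acc):
        if idx == len(items):
            yield list(acc)
            return
        item = items[idx]
        keep = start[item]
        # First option: keep the label of the starting labelling.
        yield from build(idx + 1, changes, acc + [(item, keep, label_probs[item][keep])])
        # Second option: pick a different label, if a change is still allowed.
        if changes < k:
            for label, prob in label_probs[item].items():
                if label != keep:
                    yield from build(idx + 1, changes + 1, acc + [(item, label, prob)])

    yield from build(0, 0, [])
```

Carrying the probability inside each triple keeps the output close to the (DC,Lb,P) elements described for the Prolog predicate, so a caller can multiply the third fields to weigh a labelling.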

ProbSKnife is usable through a Python script that queries the Prolog code and, by parsing the results, builds the probabilistic model and calculates the future cost of the partitionings. The usage of this script is as follows:

| python3 main.py APPID [-d DLIMIT] [-k K] [-f] [-l] [-h] |

| APPID identifier of the application |

| DLIMIT an integer |

| K an integer |

| -f shows full results in tables |

| -l shows partitioning labels |

| -h shows the help |

The main arguments of the program are the application identifier used in the Prolog definition (APPID), the desired maximum limit of domains (DLIMIT) and the maximum number of label changes from the starting one (K).
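A command-line interface with this shape could be declared with Python's standard argparse module as sketched below. Only the argument names and their meanings come from the usage text above; the destination names, the absence of defaults and the function `build_parser` are assumptions, and the actual main.py may be organised differently.

```python
import argparse


def build_parser():
    """Build a parser matching the usage line of the ProbSKnife script."""
    parser = argparse.ArgumentParser(prog="main.py")
    parser.add_argument("appid", metavar="APPID",
                        help="identifier of the application")
    parser.add_argument("-d", dest="dlimit", metavar="DLIMIT", type=int,
                        help="maximum number of domains (an integer)")
    parser.add_argument("-k", dest="k", metavar="K", type=int,
                        help="maximum number of label changes (an integer)")
    parser.add_argument("-f", dest="full", action="store_true",
                        help="shows full results in tables")
    parser.add_argument("-l", dest="labels", action="store_true",
                        help="shows partitioning labels")
    # The -h option is added automatically by argparse.
    return parser
```

For instance, `build_parser().parse_args(["exampleApp", "-d", "5", "-k", "1"])` yields a namespace with `dlimit == 5` and `k == 1`, where `exampleApp` is a placeholder identifier.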

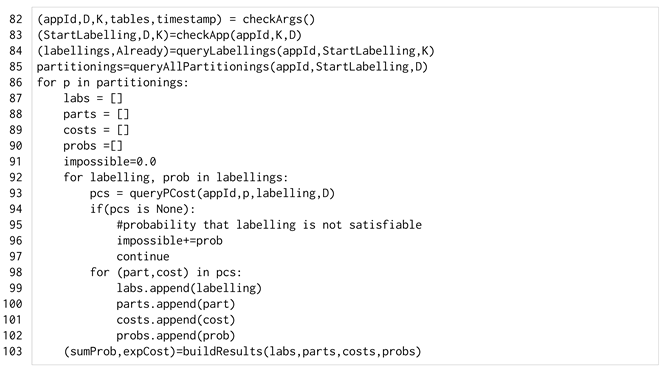

The code of the Python program is listed in Listing 11, omitting the logging and timestamp calculations. The first Prolog query retrieves the starting labelling and finds the maximum values for K and DLimit (line 83). Then, the labellingK/2 predicate is queried to retrieve all the labellings with at most K different labels from the starting one. For each of them, the probabilistic model (the random variables and ) is calculated (line 84). To find all the possible partitionings that satisfy the starting labelling, the sKnife/4 predicate is queried (line 85). For each partitioning p (line 86), all the labellings are scanned (line 11). All the partitionings with at most DLimit domains that satisfy each labelling are calculated by the sKnife/4 predicate, and the migration cost from p is calculated by the cost/3 predicate (line 93).

If a labelling makes the application non-safely partitionable (line 94), the probability of reaching this labelling is accumulated to the total probability of impossible migration (line 96). Otherwise, all the results—partitioning, costs, labellings and probabilities—are recorded (lines 99–102).

| Listing 11. The Python program of ProbSKnife. |

|

Finally, the future cost of p is calculated (line 103) by grouping the results by labelling, taking the minimum cost and multiplying it by the labelling probability. Summing up for all the labellings, we have the future cost as formulated in Section 6.1.
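The aggregation step can be sketched as follows, assuming the recorded results for a starting partitioning are available as plain tuples. The record layout, the function name `future_cost` and the treatment of labellings without any record as non-safely partitionable are assumptions made for illustration, not the structure of Listing 11.

```python
def future_cost(records, labelling_probs):
    """Aggregate migration records into a future cost for one starting partitioning.

    records         -- iterable of (labelling, target_partitioning, cost) tuples,
                       one per safe target found for each reachable labelling
    labelling_probs -- dict mapping every reachable labelling to its probability
    Returns (expected cost, probability of an impossible migration).
    """
    # Group by labelling and keep only the minimum migration cost.
    cheapest = {}
    for labelling, _target, cost in records:
        if labelling not in cheapest or cost < cheapest[labelling]:
            cheapest[labelling] = cost

    # Weigh each minimum cost by the probability of reaching its labelling.
    expected = sum(labelling_probs[lab] * c for lab, c in cheapest.items())

    # (assumption) labellings with no safe target add to the impossible mass.
    impossible = sum(p for lab, p in labelling_probs.items() if lab not in cheapest)
    return expected, impossible
```

The target partitioning is carried in each record but not used in the sum, which reflects the fact that only the value of the minimum cost matters when several targets tie.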

The output of ProbSKnife is the list of all the partitionings satisfying the initial labelling with up to DLimit domains, their cost in terms of the number of domains and future cost and the total probability of reaching a labelling that makes the application non-safely partitionable.

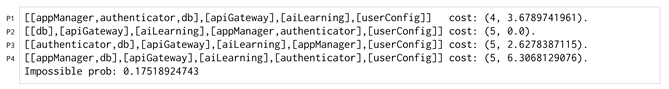

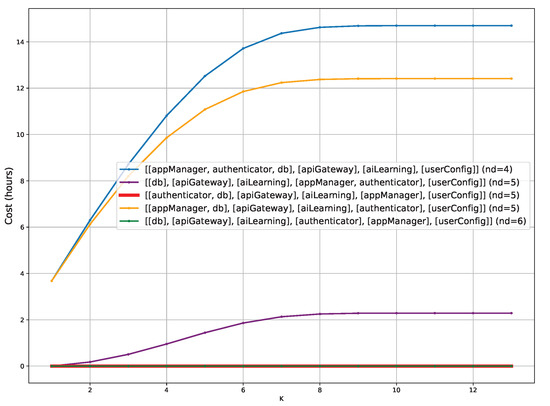

- Example. Listing 12 shows an example of the ProbSKnife output for a Cloud application with K = 1 and DLimit = 5. The probability of reaching labellings that make the application non-safely partitionable does not depend on the initial partitioning; it is the same once K and DLimit are fixed. The output shows four safe partitionings with their future costs: the minimal one (P1) with four domains and three others (P2–P4) with five domains. Now it is up to the application operator to choose the starting deployment between one partitioning with fewer domains (P1) but a future cost of about h and one (P2) with more domains but a future cost of 0 h.

| Listing 12. ProbSKnife for the Cloud application with K = 1 and DLimit = 5. |

|

Even with our motivating example, which is relatively small for the sake of presentation, determining by hand the safe partitionings with a limited number of domains is not a trivial operation. Calculating the future cost of each partitioning that satisfies the initial labelling is even harder. In the above example, limiting K to 1 yields a space of 13 possible labellings, and the probability of reaching each of them from the starting one must be calculated. Moreover, after fixing a starting partitioning, determining the cheapest migration for each possible labelling is also a non-trivial operation. For these reasons, we argue that our approach and the ProbSKnife prototype support the deployment of multi-component applications onto technologies based on SKs.

7. Experimental Assessment

In this section, we report the experimental assessment of ProbSKnife concerning the impact on performance and future cost of the parameters K and DLimit, used to reduce the complexity of the solution search space. As mentioned in Section 2, to the best of our knowledge, there are no proposals that employ information-flow security to determine eligible partitionings onto SKs. Thus, we focused our assessment on the performance of ProbSKnife and the determination of costs and probabilities.

In particular, our goal is to answer the following questions:

- Q1

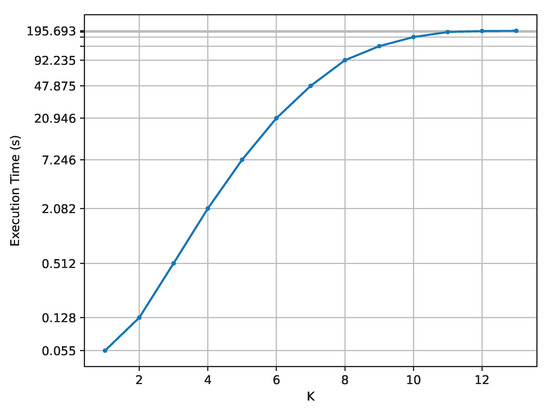

- How much does K impact the creation time of the probabilistic model?

- Q2

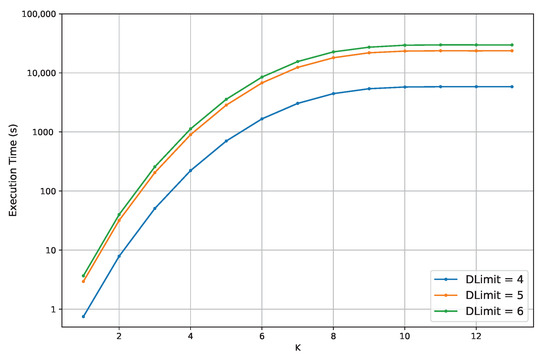

- How much do K and impact the execution time of ProbSKnife?

- Q3

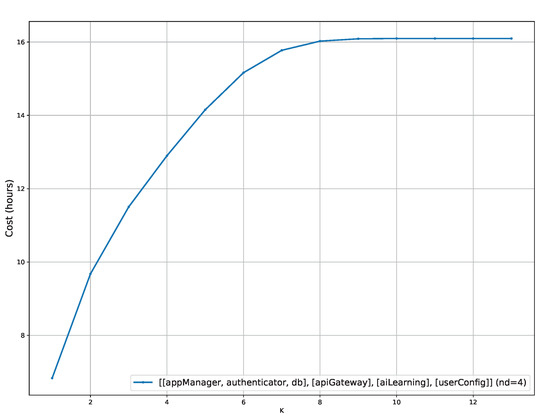

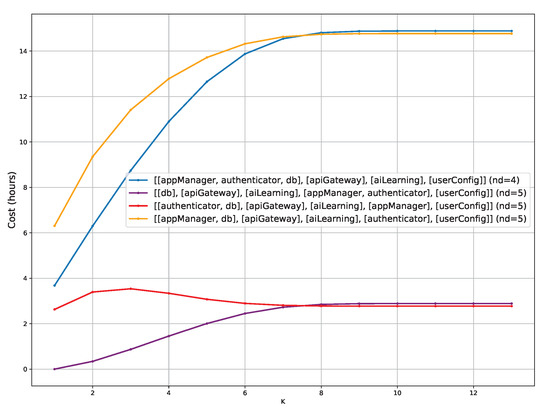

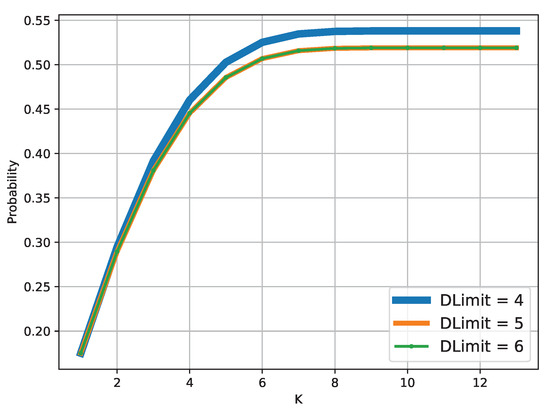

- How much do K and impact the safe partitioning costs?

- Q4

- How much do K and impact the probability of not having a safe partitioning?