Abstract

Since the COVID-19 pandemic began, there have been several efforts to create new technology to mitigate its impact around the world. One of those efforts is to design a new task force, robots, to deal with fundamental goals such as public safety, clinical care, and continuity of work. However, meeting those goals requires new products whose features are designed in a more innovative and creative way. Those products could be designed using the S4 concept (sensing, smart, sustainable, and social features), which has been presented as a concept able to create a new generation of products. This paper presents a low-cost robot, Robocov, designed as a rapid response against the COVID-19 pandemic at Tecnologico de Monterrey, Mexico, with implementations of artificial intelligence and the S4 concept for the design. Robocov can achieve numerous tasks using the S4 concept, which provides flexibility in hardware and software. Thus, Robocov can positively impact public safety, clinical care, continuity of work, quality of life, laboratory and supply chain automation, and non-hospital care. The mechanical structure and software development allow Robocov to complete support tasks effectively, so Robocov can be integrated as a technological tool for achieving the new normality’s required conditions according to government regulations. Besides, reconfiguring the robot to move from one task (disinfecting) to another (detecting face masks) is an easy endeavor that a single operator can carry out. Robocov is a teleoperated system that transmits information from cameras and an ultrasonic sensor to the operator. In addition, pre-recorded paths can be executed autonomously. In terms of communication channels, Robocov includes a speaker and a microphone. Moreover, a machine learning algorithm for detecting face masks and social distance is incorporated using a pre-trained model for the classification process.
One of the most important contributions of this paper is to show how a reconfigurable robot can be designed under the S3 concept and integrate AI methodologies. Besides, it is important to note that this paper does not show specific details about each subsystem in the robot.

1. Introduction

In 2019, a case of severe pneumonia was reported in Wuhan, Hubei, China. The study of this case identified a new coronavirus, severe acute respiratory syndrome coronavirus 2 (SARS-CoV-2) []. The spread of this coronavirus has caused millions of deaths worldwide, and a pandemic was declared in March 2020 by the World Health Organization [,]. Several technologies have been developed as a response to stop the COVID-19 pandemic. In particular, human-robot interaction has grown extremely fast, since COVID-19 limits human-to-human interaction: an aggressive spread of COVID-19 can be promoted when there are persons with undetected COVID-19, so people have to limit their close contact with others [].

On the other hand, designing robots using the S4 concept can be beneficial, since several levels of the sensing, smart, sustainable, and social features can be defined to boost the design process. Products designed using the S4 concept are presented in [,]. These products show a significant advantage since they can be designed using different levels of sensing, smart, social, and sustainable features. Thus, those products can be customized, and the selection of materials can also be constrained. Besides, it is essential to consider that the concept of S4 products allows increasing the speed of the design process and decreasing the product’s cost. When the product is designed, the level selected for each S4 feature allows decreasing the total cost of the product. For instance, if the cost of sensors is unaffordable, the level of the smart feature can be increased to create a more suitable design with a low-cost digital system.

In [], several examples of S4 products are presented in agriculture applications. In addition, several communication channels between robots and humans can be used according to specific requirements, and those communication channels also have to be tailored. The communication channels between the end-user and the robot can be classified by the stimulus signal received by the robot, such as visual, tactile, smell, taste, and auditory. Furthermore, the signal can be interpreted and adjusted to determine the actions that need to be taken.

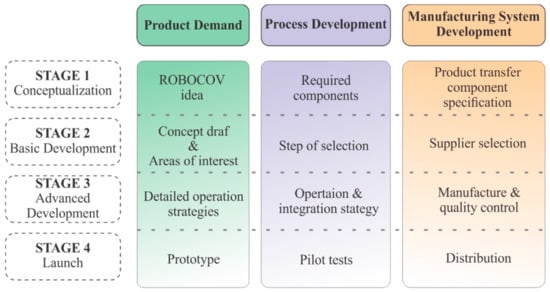

The communication channels are designed to identify and address the end-users’ needs, so the communication channel is considered part of the social, smart, and sensing system. Moreover, robots must follow moral and cultural behaviors according to defined geographical regions or applications; in the case of COVID-19, they must be designed to be adopted and accepted swiftly by communities. Figure 1 shows the design process stages for building the reconfigurable robot.

Figure 1.

Design and manufacturing stages.

In addition, artificial intelligence (AI) has emerged as a powerful computational tool when data are available; according to [], it is possible to automatically extract features for object classification. Thus, AI is implemented to detect the face mask’s correct position, and it could also be used for determining the social distance. Moreover, a fuzzy logic controller was designed to avoid collisions with objects. In repetitive tasks such as sanitization, it is possible to use a line follower algorithm that detects a line on the ground, which allows recording the trajectory and completing it autonomously (see Figure 2).

Figure 2.

Following a line for repetitive tasks.

In this paper, the robot itself is not considered autonomous since it does not move autonomously with AI. Instead, it uses AI algorithms for assistive purposes such as mask detection, crowd detection, and obstacle detection. Therefore, AI is considered a new feature added to the S4 products. This feature can be considered assistive for determining complex conditions that human operators cannot deal with. For example, in a crowded space it is almost impossible for one person to determine manually who is using the face mask correctly. In contrast, the robot’s operator has all the information at once.

The paper’s main contribution is to show how to design a reconfigurable robot using S3 features and AI methodologies. The S3 design methodology allows creating rapid prototypes in a short period that can be evaluated in real scenarios []; Robocov has been validated in a Mexican university. The additional scenarios presented can also be reached, since the robot was designed around a central platform that incorporates specific modules according to the required task. Besides, to avoid spreading the COVID-19 virus, technological developments could require reconfigurable robots that balance several characteristics such as cost, compatibility between components, multitasking, etc. Hence, this paper shows how a reconfigurable robot can be designed and how different sensors, actuators, and methodologies are integrated into a reconfigurable robot that helps to avoid spreading COVID-19. This paper does not show an exhaustive analysis of AI methodologies or specific elements inside the robot. However, it shows the design process that can be followed for achieving a reconfigurable robot that can be used during the COVID-19 pandemic.

On the other hand, it is important to mention that designing an autonomous robot is not always the best alternative, since AI classification algorithms require specific databases and digital processors that increase the cost of the design; exploring different ways in which AI algorithms can be used in the robot is therefore also a valuable design alternative.

Thus, the interaction between robots and humans could be more effective when the robot is designed to know the end-users’ cultural and social characteristics under the AI and S4 features.

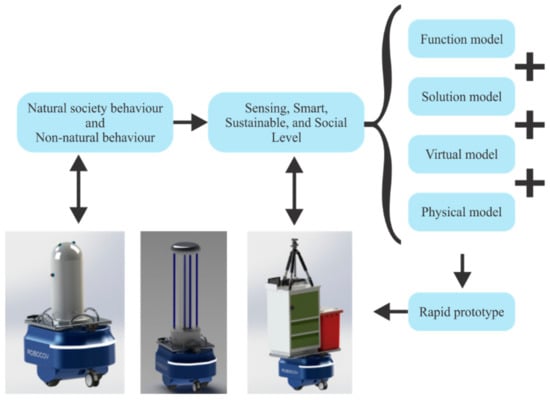

2. The Basic Design of an S4 Robot

To get S4 products from S3 products with sensing, smart, and sustainable features, social features were included to interact with operators and end-users, such as social sensors and controls as proposed in []. Those features are elements previously described in social robotics [,]. The social robots used during COVID-19 play a fundamental role since they can detect medical conditions for stopping the propagation of COVID-19 [,]. As a result, the four basic models, namely the function model, the solution model, the virtual model, and the physical model, are determinant factors that must be considered when a robot is designed to mitigate the COVID-19 pandemic.

- Function model. This model describes all the functions that have to be performed by the robot; the user’s requirements and commercial design factors define those functions.

- Solution model. This model presents the S4 components of the solution through its software and mechanical components; the virtual and physical components are grouped according to the S4 concept.

- Virtual model. This model determines the software components required for meeting the end-user’s needs; this model is the core of the smart and social features.

- Physical model. This model defines all the physical components, and the mechanical structure with sensors and actuators is integrated. In this model, it is also vital to integrate the sustainability features such as economic and environmental impact.

As a result, a first rapid prototype can be achieved using mechanical and software simulations when the four blocks are finished.

Social features are integrated into the robot to increase interaction between the end-users and teleoperators, such as bidirectional and visual communication. The robot also has social responses when someone interacts with it; for example, at the vigilance module, if someone takes a temperature measurement, the screen displays the measurement together with a color code, and a sound notifies the person of the result. Another example is the displayed eyes, which help people around the robot have the social feeling of talking and interacting with someone instead of just a robot. This allows the robot to have social features and responses that improve the interaction with the operator. Thus, the robot can send messages to change the driving conditions.

Moreover, additional messages can be shown if the operator drives the robot at high speed for long periods. Thus, the end-user could change the way the robot is controlled. When communicating with more robots, they can collect data that can be used to determine the social behavior and preferences of end-users, so end-user actions that are not acceptable could be evaluated in order to promote a change in those actions. The robots could also establish communication channels with other social products to better define end-user behavior and improve the interaction between the robot and the end-user.

Besides, it is essential to mention that social features are not always included in the conventional design methodologies for building robots. Usually, the design is focused on the task, and it is not considered part of the design process to adjust the end-user behavior. These conditions can decrease the robot’s performance since it is a teleoperated robot that depends on human interaction. However, if these social features are positive, they can increase the robot’s whole performance when the operators are involved. Sometimes, it is required to collect information about the end-user to know more about their behavior when controlling the robot. The social features are based on two social communication classes between robots and end-users. The two types of social communication are shown below []:

- Natural Society Behavior; this is defined by the end-user information from the robot when the robot is working.

- Non-Natural behavior; this social feature makes it possible to induce changes in the end-user behavior or reinforce positive behavior to improve the robot’s performance.

If the social features promote changes in behavior or reinforce conduct, non-natural behavior is implemented. For example, when the robot detects that someone is not using a face mask, that person is prompted to change their behavior. As a result, detecting face masks alone is not enough; the robot must also include support programs, such as a gamification strategy, to promote the use of face masks. In this first approach, messages are integrated to promote the use of face masks and keeping social distance. However, the next version of the robot will include the gamification stage as presented in []. Since the designed robot can read the student’s badge in the university, a student who is not using a face mask or is not following the social distance conditions could receive an email explaining why using a face mask and preserving social distance are essential rules that must be followed in some physical spaces. As a result, the sensing, smart, and sustainable features are elements of the social design since these features will allow robots to receive new information during their operation.

In addition, the social communication between the robot and other devices permits us to know more about the end-users, such as preferences. For instance, when a robot is operating, the robot can set a social communication with other devices to know more about the work preferences of the end-user. Thus, a social structure could be established to improve the quality of teamwork between robots and end-users. This social information requires specific design elements to complete those tasks. Moreover, the robots have to transmit and receive social data about the social activity between end-users and them, then make decisions according to hierarchical specifications. When the robot is designed using the S4 concept, each feature must be selected to achieve specific goals []. Table 1 shows the S4 levels selected in the robot, while Figure 3 illustrates the flowchart to design a rapid prototype.

Table 1.

S4 Selected Levels for ROBOCOV.

Figure 3.

Flow chart to design a rapid prototype of a reconfigurable robot.

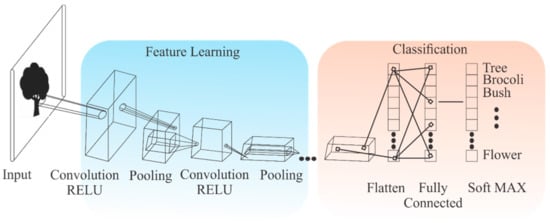

3. AI Integrated on the Robot

AI has increased its impact since new methodologies, advanced digital systems, and data for training and evaluating the AI methodologies have become available []. Convolutional neural networks have been used in different applications related to image classification. During the COVID-19 pandemic, face mask and social distance detection have been essential to incorporate in public places. Hence, convolutional neural networks have been shown to be a good alternative for achieving the recognition requirements in the COVID-19 pandemic [,,,].

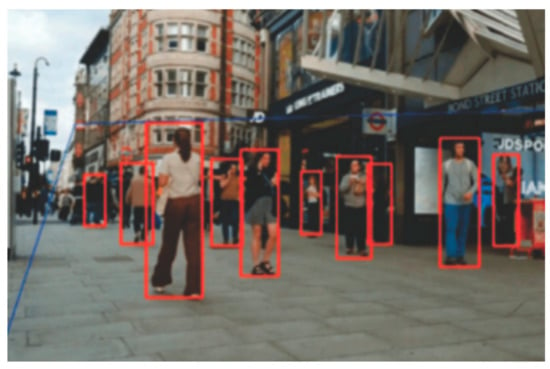

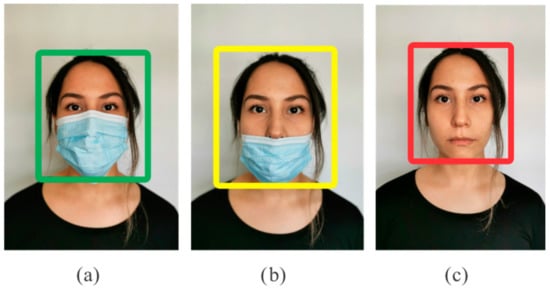

The designed robot integrates essential features needed to control the spread of COVID-19, such as keeping social distance and detecting face masks. Those tasks were selected as autonomous tasks since they are difficult to evaluate in crowded spaces and are not easy for the human operator to perform. Besides, there are enough databases for training on those conditions, so a convolutional neural network was implemented [,]. In addition, the same navigation camera deployed on the robot can be used to evaluate social distance and detect face masks. Since the operator requires a backup for detecting those conditions, the robot integrates autonomous detection. Figure 4 shows the general topology of a convolutional neural network (CNN), Figure 5 illustrates the detection of social distance, and Figure 6 illustrates the face mask detection. The inference process uses a model pre-trained on approximately 2000 images, to which some data augmentation techniques were applied. The robot only sends the image to be evaluated to the PC, and the CNN is then run locally on the PC, which frees the onboard processor from this computational load. The minimum specifications of the PC to run the CNN are:

Figure 4.

The general CNN topology, from the input to the classification result.

Figure 5.

Social distance detection.

Figure 6.

Face mask detection: (a) correct use of the face mask; (b) incorrect use of the face mask; (c) face mask not detected.

- Intel i7 gen 8 (or better)

- 8 GB RAM

- 256 GB SSD

- GPU NVIDIA GeForce 1050 (or better)

The training method was back-propagation, which updates the weights using gradient descent; gradient descent calculates the weights by Equation (1):

$$w \leftarrow w - \eta \nabla Q(w) \qquad (1)$$

where $\eta$ is established as the learning rate, $Q$ represents the learning function, and $w$ stands for the weights of the neural network.
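As a minimal illustration of the gradient descent update referred to as Equation (1), the following Python sketch applies the rule to a one-dimensional quadratic. The function names, the quadratic objective, and the learning rate value are assumptions chosen only for illustration; this is not the training code used for Robocov.

```python
def gradient_descent(grad, w0, learning_rate=0.1, steps=100):
    """Repeatedly apply the update w <- w - learning_rate * grad(w)."""
    w = w0
    for _ in range(steps):
        w = w - learning_rate * grad(w)
    return w


# Hypothetical objective Q(w) = (w - 3)^2, whose gradient is 2 * (w - 3).
def grad_q(w):
    return 2.0 * (w - 3.0)


# Starting from w = 0, the iterates approach the minimizer w = 3.
w_final = gradient_descent(grad_q, w0=0.0)
```

In a neural network, the same update is applied to every weight, with back-propagation supplying the gradient of the learning function with respect to each weight.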

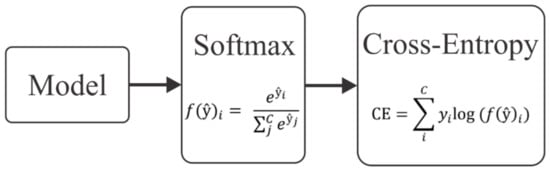

Then back-propagation is used to calculate the weights for each input and output example presented. The softmax function describes the probability for each class in a multi-classification problem.

The loss function is categorical cross-entropy (CCE), a combination of softmax function and cross-entropy function, as described in Figure 7.

Figure 7.

Softmax and cross-entropy loss function.
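The combination of softmax and cross-entropy can be sketched in a few lines of Python. The example below is only illustrative: the function names and the raw scores are assumptions, and the three outputs are taken to correspond to the three facemask classes used in this work.

```python
import math


def softmax(logits):
    """Convert raw class scores into probabilities that sum to one."""
    shift = max(logits)  # subtract the maximum for numerical stability
    exps = [math.exp(z - shift) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def categorical_cross_entropy(probs, true_index):
    """Loss for one sample whose correct class is `true_index`."""
    return -math.log(probs[true_index])


# Hypothetical raw scores for three classes: W Mask, W/O Mask, Mask Inc.
logits = [2.0, 0.5, 0.1]
probs = softmax(logits)
loss = categorical_cross_entropy(probs, true_index=0)
```

The loss is small when the probability assigned to the correct class is close to one and grows without bound as that probability approaches zero.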

As mentioned before, the training process for the facemask recognition model used 2000 different images of people in three different cases: wearing a facemask properly (W Mask), not wearing a facemask (W/O Mask) and wearing a facemask in a wrong way (Mask Inc). Data augmentation techniques like flipping, rotating, change in brightness and contrast, cropping, etc., were applied to increase the number of images for the training process.
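Two of the augmentation techniques mentioned above, flipping and brightness change, can be sketched as follows. This is a simplified example on a grayscale image stored as nested lists; the function names and the brightness offset of 40 are assumptions, and the actual augmentation pipeline used for training is not detailed in this paper.

```python
def flip_horizontal(image):
    """Mirror a grayscale image (a list of pixel rows) left to right."""
    return [list(reversed(row)) for row in image]


def adjust_brightness(image, delta):
    """Add `delta` to every pixel, clamping the result to the 0..255 range."""
    return [[min(255, max(0, pixel + delta)) for pixel in row] for row in image]


def augment(image, delta=40):
    """Return the original image plus three augmented variants."""
    return [
        image,
        flip_horizontal(image),
        adjust_brightness(image, delta),
        adjust_brightness(image, -delta),
    ]


# Hypothetical 2 x 2 grayscale image used only to exercise the functions.
sample = [[0, 100], [200, 250]]
variants = augment(sample)
```

Each original image therefore yields several training samples that keep the same class label.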

Figure 8 shows the confusion matrix obtained for the model’s training process, whereas the results for precision, recall, and f1 scores are shown in Table 2.

Figure 8.

Confusion matrix for facemask detection.

Table 2.

Evaluation results of precision, recall, and f1 score.
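For reference, precision, recall, and f1 score can be derived from a confusion matrix as in the following Python sketch. The matrix values are hypothetical and are not the results reported in Figure 8 or Table 2; the function name and the row and column ordering are also assumptions.

```python
def per_class_metrics(confusion):
    """Compute (precision, recall, f1) for each class.

    `confusion[i][j]` counts samples of true class i predicted as class j.
    """
    n = len(confusion)
    metrics = []
    for k in range(n):
        tp = confusion[k][k]
        fp = sum(confusion[i][k] for i in range(n)) - tp  # column minus diagonal
        fn = sum(confusion[k]) - tp                       # row minus diagonal
        precision = tp / (tp + fp) if (tp + fp) else 0.0
        recall = tp / (tp + fn) if (tp + fn) else 0.0
        denom = precision + recall
        f1 = 2 * precision * recall / denom if denom else 0.0
        metrics.append((precision, recall, f1))
    return metrics


# Hypothetical counts, NOT the values of Figure 8.
# Row and column order: W Mask, W/O Mask, Mask Inc.
example = [
    [8, 1, 1],
    [0, 9, 1],
    [2, 0, 8],
]
results = per_class_metrics(example)
```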

The mask detection is based on image processing, so all the illumination issues impact the detection of the mask. On the other hand, different objects can be classified as face masks when many persons are in the studied frame. Thus, automatic face mask detection must be implemented in a controlled environment and with a limited number of persons per frame.

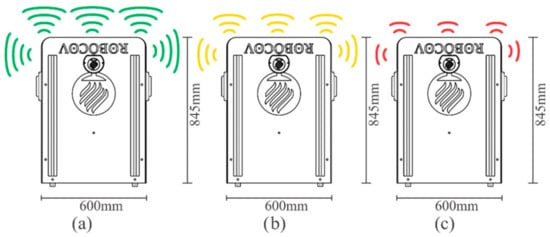

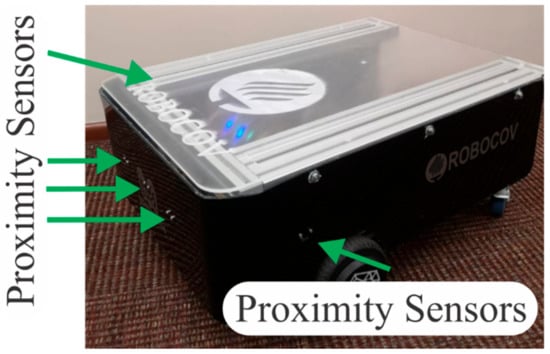

On the other hand, navigation can be a computationally intensive and time-consuming process, and it requires advanced sensors []. As a result, autonomous navigation was not integrated into the robot. However, AI can be deployed in the robot’s subsystems, for example to avoid obstacles that the operator cannot observe or dynamic obstacles that could suddenly appear. Moreover, the decision to deploy AI in the low-cost robot is determined strategically by the tasks for which it dramatically increases the robot’s performance. For instance, the obstacle avoidance system using low-cost ultrasonic sensors is integrated so that the operator can confidently maneuver the robot. The obstacle avoidance system uses an array of ultrasonic sensors (see Figure 9 and Figure 10) distributed along the robot to measure the presence of nearby objects within a range of 1.2 m to prevent collisions and help the user steer the electric mobility device safely in any type of environment. The main goal of the navigation system is to prevent a collision in the presence of mobile or fixed objects or persons.

Figure 9.

Proximity sensor placement and obstacle detection output: (a) no obstacle detected; (b) obstacle detected close; (c) obstacle detected nearby.

Figure 10.

Proximity sensors in the prototype.

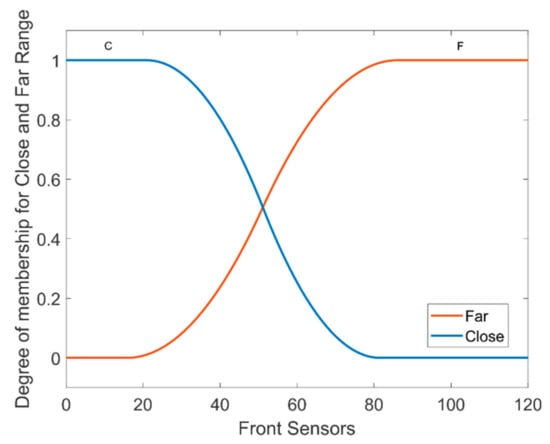

The obstacle avoidance system is based on a Mamdani-type fuzzy logic controller similar to the one presented in []. The input fuzzification stage (Figure 11) has two membership functions, labeled Close and Far, for the distance measured by each of the ultrasonic sensors deployed around the robot. For the Close membership function, a Z-shaped input membership function was selected to represent the input of the ultrasonic sensor within a range of 0 m to 0.9 m. On the other hand, an S-shaped input membership function was selected to represent the Far membership function when the sensor reading is within a range of 0.1 m to 1.2 m.

Figure 11.

Input membership function for the ultrasonic sensor readings fuzzification.

The Z and S shapes have a saturation section in which the membership values are equal to one, so the universe of discourse is well segmented with this type of function; triangular functions, in contrast, have no saturation region and therefore do not give the same membership value over a section of the universe of discourse.
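The Close and Far input sets can be sketched in Python as follows. The spline form of the Z and S shapes and the use of the range limits (0 m to 0.9 m and 0.1 m to 1.2 m) as breakpoints are assumptions made for illustration, since the exact parameters of the implemented membership functions are not listed here; the function names are also hypothetical.

```python
def z_shaped(x, a, b):
    """Z-shaped membership: 1 for x <= a, 0 for x >= b, smooth in between."""
    if x <= a:
        return 1.0
    if x >= b:
        return 0.0
    mid = (a + b) / 2.0
    if x <= mid:
        return 1.0 - 2.0 * ((x - a) / (b - a)) ** 2
    return 2.0 * ((x - b) / (b - a)) ** 2


def s_shaped(x, a, b):
    """S-shaped membership: mirror image of the Z shape (0 up to a, 1 from b)."""
    return 1.0 - z_shaped(x, a, b)


def fuzzify(distance_m):
    """Return the Close and Far membership degrees for one sensor reading."""
    return {
        "Close": z_shaped(distance_m, 0.0, 0.9),  # assumed breakpoints
        "Far": s_shaped(distance_m, 0.1, 1.2),    # assumed breakpoints
    }
```

For example, `fuzzify(0.45)` gives a Close degree of 0.5, while readings at or beyond 1.2 m are fully Far and not Close at all.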

The if-then rules were generated to avoid obstacles that the end-user cannot see when controlling the robot from a remote terminal. Those rules involve the inputs (ultrasonic sensors) and the outputs (signals to the actuators). When the sensors detect an obstacle, the fuzzy logic system can send an output signal to avoid the obstacle, so the robot can navigate in narrow spaces with obstacles that are not shown in the remote terminal (screen).

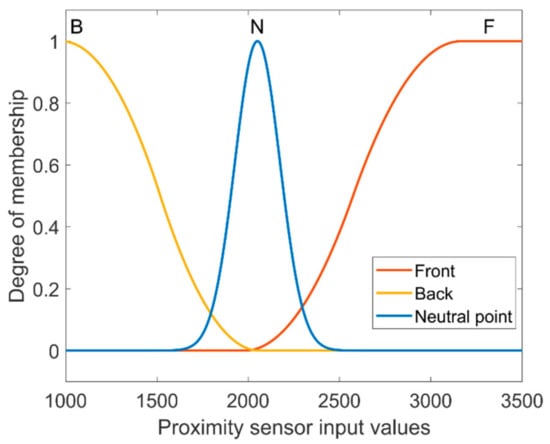

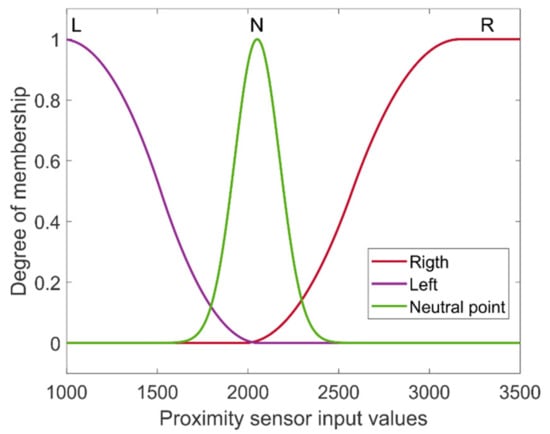

For the defuzzification stage (Figure 12), three membership functions were selected to represent the neutral (no movement of the robot), the forward, and the backward movements of the robot; therefore, the array of ultrasonic sensors deployed around the robot can provide control of its steering in the presence of obstacles. The defuzzification membership functions are shown in Figure 13, and the inference engine is presented in Table 3.

Figure 12.

Defuzzification stage for the back and forward movements.

Figure 13.

Defuzzification membership functions for the right and left movements.

Table 3.

Inference engine rules for the obstacle avoidance controller.
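A Mamdani-type inference step (minimum for the rule conjunction, maximum for aggregation, and centroid defuzzification) can be sketched in Python as shown below. This is a reduced example with only two hypothetical sensors (front and rear); the four rules, the triangular output sets, and the sign convention (negative for backward, positive for forward) are assumptions for illustration and do not reproduce the rules of Table 3.

```python
def z_shaped(x, a, b):
    """Z-shaped membership: 1 for x <= a, 0 for x >= b."""
    if x <= a:
        return 1.0
    if x >= b:
        return 0.0
    if x <= (a + b) / 2.0:
        return 1.0 - 2.0 * ((x - a) / (b - a)) ** 2
    return 2.0 * ((x - b) / (b - a)) ** 2


def close(d):
    return z_shaped(d, 0.0, 0.9)        # assumed breakpoints


def far(d):
    return 1.0 - z_shaped(d, 0.1, 1.2)  # assumed breakpoints


def triangle(x, left, peak, right):
    """Triangular output set (assumed shape)."""
    if x <= left or x >= right:
        return 0.0
    if x <= peak:
        return (x - left) / (peak - left)
    return (right - x) / (right - peak)


# Assumed output sets on a normalized command axis from -1 to 1.
OUTPUT_SETS = {
    "backward": (-2.0, -1.0, 0.0),
    "neutral": (-0.5, 0.0, 0.5),
    "forward": (0.0, 1.0, 2.0),
}


def avoid(front_m, rear_m, samples=201):
    """Return a normalized motion command from two distance readings."""
    # Hypothetical rules; AND is evaluated with min, OR with max.
    strength = {
        "backward": min(close(front_m), far(rear_m)),
        "forward": min(far(front_m), close(rear_m)),
        "neutral": max(min(far(front_m), far(rear_m)),
                       min(close(front_m), close(rear_m))),
    }
    num = den = 0.0
    for i in range(samples):
        u = -1.0 + 2.0 * i / (samples - 1)
        # Clip each output set by its rule strength, then aggregate with max.
        mu = max(min(strength[name], triangle(u, *OUTPUT_SETS[name]))
                 for name in OUTPUT_SETS)
        num += u * mu
        den += mu
    return num / den if den else 0.0  # centroid of the aggregated set
```

With these assumed rules, an obstacle close to the front and free space behind yields a negative (backward) command, and free space on both sides yields a command near zero.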

This proposal does not consider the AI system to be part of the smart feature, since it requires sensors, digital systems, etc. These AI subsystems can be integrated into or removed from the robot in a modular way. Hence, the structure of the robot can preserve a low budget. Besides, the AI classification algorithms run on the PC, so the onboard processor only sends real-time video from a frontal camera (see Figure 14).

Figure 14.

Onboard camera for the real-time video feed.

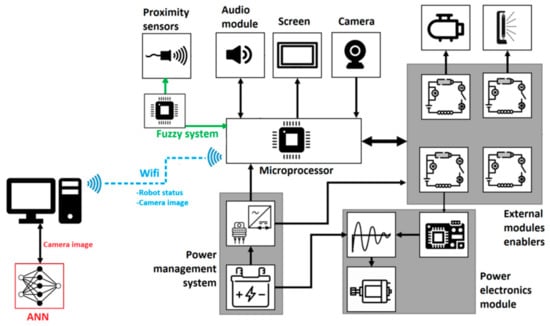

The interconnection diagram between each system and module is illustrated in Figure 15. Such a diagram shows that the artificial neural network (ANN) is carried out in the operator’s computer as an external process; also, the process of the fuzzy system is carried out in a separate microprocessor. In the end, the commands from the teleoperator’s computer and the output of the secondary microprocessor are fed directly into the central processing unit to control the other systems and modules within the ROBOCOV system.

Figure 15.

Block diagram of interconnection between elements inside Robocov.

4. General Description of the Robocov Systems and Subsystems

Robocov is a reconfigurable robot that has multiple subsystems (modules). Each module is designed to fulfill a specific objective. This section analyzes each module according to the S4 features concerning the sensing, smart, sustainable, and social attributes. Specifically, Sensing (Level 1) describes each subsystem’s measuring and monitoring components. Smart (Level 2) lists the primary actuators or subsystems. Sustainable (Level 3) analyzes the economic, social, and environmental aspects behind the operation of each module. Finally, the social level describes the interaction of the subsystems with their environments and different users. This analysis is described in Table 4.

Table 4.

S3 solution according to the implemented modules in the ROBOCOV unit.

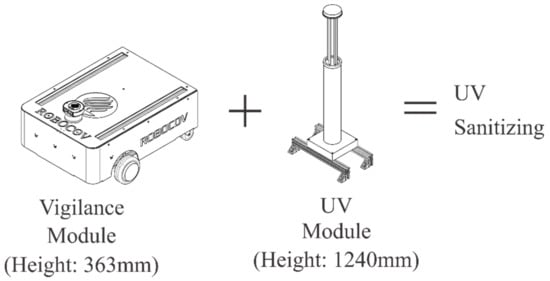

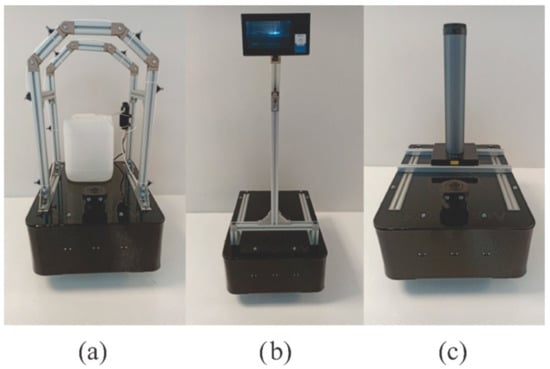

In Table 4, the first module is the disinfection system. The main objective of this module is to sanitize public spaces in an efficient and non-invasive manner; this can be achieved with two separate modules. The first disinfection module is based on UV light for non-invasive disinfection. This module allows Robocov to sanitize any surface and space within reach of the UV light (2.5 m from the source), given the correct exposure time (around 30 min). It is essential to clarify that this module should only be used in empty spaces; it has been demonstrated that the UV light used for sanitizing tasks can be harmful to a human user or operator in case of exposure. The Robocov UV light module allows safe and efficient use of this disinfection method owing to its remote control operation. Figure 16 shows how this module is integrated into the Robocov platform.

Figure 16.

UV disinfection system.

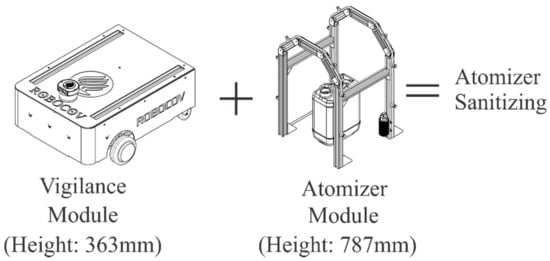

Alternatively, the second disinfection module consists of atomizers that distribute a sanitizing solution in the robot’s surroundings using a mechanically designed arch-type structure. This module requires a sanitizer container to transport and provide the sanitizing solution. Moreover, a hose system is designed to reach the distributed atomizers. These components are paired with a mini water pump that drives the sanitizing solution from the container into the hose system. In this case, the remote operation of the atomizer module and the safe disinfection are suited for public use. Figure 17 shows the atomized disinfection module and system. The control of both modules within the disinfection system is achieved with onboard Arduino and Raspberry Pi boards; these controller boards and the battery-powered system make these modules a safe, uncomplicated, and economical solution, with minimum environmental impact.

Figure 17.

Atomizer disinfection system.

The second system is the Vigilance system. This system can be understood as the central driving unit, and its main objective is to move the robot as intended by the operator as efficiently as possible. To achieve this, onboard cameras and proximity sensors are installed to guide the operator in the maneuvering tasks. The operator interaction is carried out via an Xbox controller for ergonomic and intuitive commands. This base module can be paired with any other Robocov module, operating cooperatively or individually.

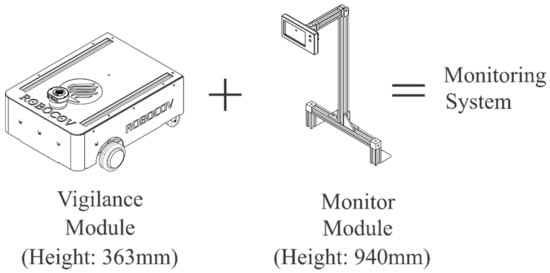

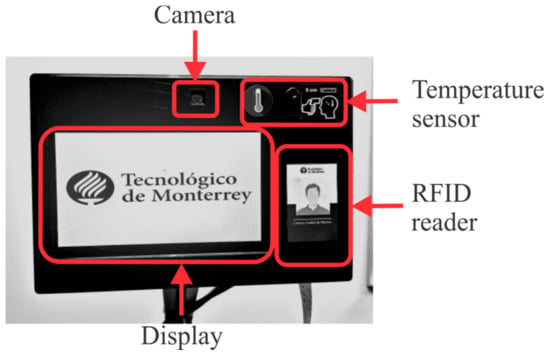

The third system is the Monitoring module. This module measures the temperature of individual users using an installed proximity temperature sensor; it also interacts with the students via a two-way communication channel and a graphical user interface on the front display. The onboard speaker and microphone provide a user-friendly interface for remote interaction between the users and the operator, allowing a personalized interaction for every student. The personalized attention to the students is achieved with an RFID card reader, which identifies the users when presented with a student or personnel ID. Figure 18 illustrates how this module can be assembled.

Figure 18.

Monitoring system.

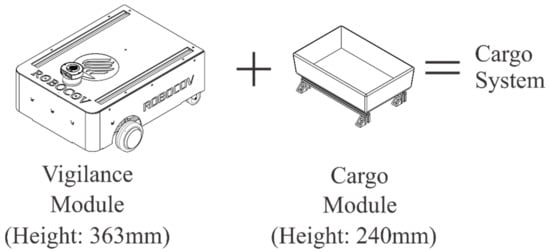

Lastly, the cargo module is designed to function as a safe and reliable transportation service. By taking advantage of the control and maneuverability of the vigilance system, the cargo module can be used to deliver hazardous material, standard packages, or documents from point A to point B without the need for direct interaction between peers. This helps to reduce the direct interaction and number of exposures in a crowded community such as universities or offices. This module is shown in Figure 19.

Figure 19.

Cargo system.

5. Interface and Control Platform

The central Robocov processor is a Raspberry Pi 4, which receives control signals through a local Wi-Fi connection. Different actuators can be controlled, and sensors can be read, depending on the connected module. Both actuators and sensors are connected to the Raspberry Pi through a PCB that has the necessary circuits to interface the digital signals with the power elements. This interaction is done through the General Purpose Input Output (GPIO) ports.

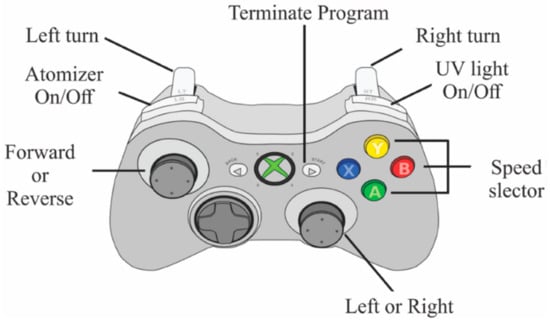

The user can control the robot using an Xbox One controller connected to the main computer. With both triggers and joysticks, it is possible to move Robocov and indicate the movement of both motors. With the buttons “A”, “B” and “Y”, the speed mode can be changed between low, medium, and high speed. With the buttons above the triggers, the UV and sanitizing liquid modules can be activated and deactivated. This configuration gives the operator complete control of Robocov without the need to release the Xbox controller. The controller commands are illustrated in Figure 20.

Figure 20.

Robocov controller mapping in the layout of an Xbox controller.
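The button handling described above can be sketched in Python as a small state holder. The assignment of “A”, “B”, and “Y” to low, medium, and high speed in that order, the bumper labels `LB` and `RB`, and the module names are assumptions for illustration; the actual mapping is the one shown in Figure 20.

```python
# Assumed order: the text lists the buttons and the speed modes in this sequence.
SPEED_BUTTONS = {"A": "low", "B": "medium", "Y": "high"}
# Hypothetical labels for the two buttons above the triggers.
MODULE_BUTTONS = {"LB": "uv_lamp", "RB": "sanitizer_pump"}


class OperatorState:
    """Tracks the speed mode and the on/off state of the disinfection modules."""

    def __init__(self):
        self.speed_mode = "low"
        self.modules = {"uv_lamp": False, "sanitizer_pump": False}

    def press(self, button):
        """Update the state for one button press; unknown buttons are ignored."""
        if button in SPEED_BUTTONS:
            self.speed_mode = SPEED_BUTTONS[button]
        elif button in MODULE_BUTTONS:
            name = MODULE_BUTTONS[button]
            self.modules[name] = not self.modules[name]  # toggle on/off


# Example sequence: select high speed, switch the UV lamp on,
# then switch the sanitizer pump on and off again.
state = OperatorState()
for button in ["Y", "LB", "RB", "RB"]:
    state.press(button)
```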

The Robocov interface is shown on the main computer. It is designed to show all the necessary information in a single window and has eight parts: (i) Controls, which indicates the function of every button; (ii) Phrases, from which a signal can be sent to the robot to play a specific audio file; (iii) Line follower, which can activate, pause, or stop the line-following mode; (iv) Camera, which shows the image received from the robot and allows activating or deactivating the computer’s microphone and speakers; (v) Connection status, which shows whether Robocov is connected, connected with a low signal, or disconnected; (vi) Speed mode, which shows which of the three levels (low, medium, or high speed) is activated; (vii) Sensors, which lets the user see the distance detected by each sensor to the nearest object; and (viii) the module’s part, which displays different information depending on the connected module. A screenshot of the operator’s computer is shown in Figure 21.

Figure 21.

Robocov interface on the main computer.

All the information presented on the interface allows the user/operator to know what happens in the robot’s surroundings. With all these indicators, the operator can control the robot and make sound decisions to correct its use. Since all the information is presented in one window, the operator does not need to switch views to get the feedback from all the sensors.

Each user interface component helps the user in different ways; the control indicator shows the user/operator the mapping of every button in the controller. The phrase section lets the operator give specific instructions to subjects near the robot, such as asking them to take their temperature or telling them to use their masks. The line follower mode allows Robocov to do sanitizing tasks by itself. At the same time, the operator can still see the image received from the camera and see the sensor’s status. With this information, the operator can pause or stop the robot.

As mentioned before, the module’s interface changes depending on which module is connected. If the UV module is connected, the interface will show the lamp status. If the sanitizing liquid module is connected, it will show the liquid level and whether the module is activated or not. This helps the operator know the status and decide what to do. If the monitoring module is connected, the interface will show a temperature indicator and a label with the card ID information if a card has been scanned.

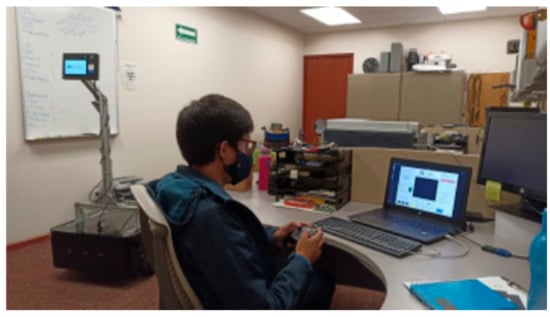

The programming also includes safety algorithms that help control the robot, such as stopping if the connection is lost. This allows the operator to move the robot with confidence and not worry about connection problems. Similarly, when any sensor detects something closer than 20 cm, one or two motors are blocked depending on which sensor detected the object. Figure 22 illustrates the use case of the robot being operated by an operator.

Figure 22.

A teleoperator controlling the Robocov.
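The two safety behaviors described above, stopping on connection loss and blocking motors when an object is closer than 20 cm, can be sketched as a single filtering function. The sensor labels and the rule that a left-side sensor blocks the left motor (and likewise for the right) are assumptions for illustration; the real sensor-to-motor assignment is not specified here.

```python
STOP_DISTANCE_M = 0.20  # threshold stated in the text (20 cm)


def apply_safety(left_cmd, right_cmd, connected, distances_m):
    """Return the (left, right) motor commands after the safety checks.

    `distances_m` maps a hypothetical sensor side ("left" or "right")
    to its latest reading in meters; missing sensors are treated as clear.
    """
    if not connected:
        return 0.0, 0.0  # stop both motors when the operator link is lost
    if distances_m.get("left", float("inf")) < STOP_DISTANCE_M:
        left_cmd = 0.0   # assumed mapping: left sensor blocks the left motor
    if distances_m.get("right", float("inf")) < STOP_DISTANCE_M:
        right_cmd = 0.0  # assumed mapping: right sensor blocks the right motor
    return left_cmd, right_cmd
```

Applying the check to every command just before it reaches the motor drivers keeps the safety behavior independent of the operator interface.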

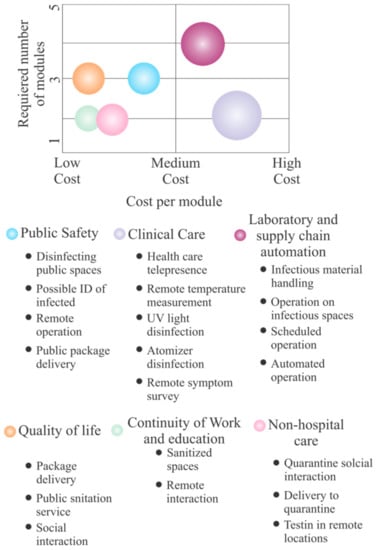

6. The Tasks That Robocov Can Perform

As a modular system, the proposed Robocov platform can accomplish a fair number of tasks. As shown by the bubble chart in Figure 23 [], each installed module makes specific tasks attainable, which increases the number of tasks the robot can perform. However, the robot is based on a main platform, so only the required module needs to be selected, which keeps the cost of the robot low. For instance, when the UV module is selected, a specific UV lamp can be installed according to the sanitization requirements, such as the area to be sanitized and the time available for achieving this task. Thus, the designer can tailor the elements of each module based on the requirements and the primary platform limits.

Figure 23.

Design and manufacturing stages.

- Public Safety

- Clinical Care

- Continuity of work and education

- Quality of Life

- Laboratory and supply chain automation

- Non-hospital care

Public safety is an area of concern in today’s pandemic panorama. The contribution of Robocov in this particular area includes (i) disinfection of public spaces; (ii) possible identification of infected people; (iii) remote monitoring of symptoms; and (iv) public package delivery. These tasks can be achieved with the disinfecting, monitoring, and cargo modules. Next, the clinical care tasks are identified as (i) health care telepresence; (ii) remote temperature measurement; (iii) UV light sanitizing; (iv) atomizer sanitizing; and (v) remote symptoms survey. The clinical care tasks can be carried out with only two modules, the disinfecting and monitoring systems.

Regarding the continuity of work and education area, Robocov can sanitize spaces and interact remotely with students. These tasks have a low cost of operation and require a minimum number of modules. Robocov can also impact the quality of life in the academic sector, where a package delivery service can be implemented owing to its remote operation. Moreover, a public sanitation service can be provided instead of a programmed operation. The social features of Robocov, such as two-way interaction, also help to include it as an interactive asset; this affects how users perceive the robot daily, making it part of academic life.

Finally, the laboratory and supply chain automation and non-hospital care areas of application are analyzed. The former can use the cargo module for remote handling of hazardous materials in a laboratory environment, and the robot can also operate in infectious spaces, minimizing the risk of transmission. In addition, its operation can be scheduled and automated owing to the onboard line follower feature, which allows scheduled routes for disinfecting and cargo tasks. On the other hand, non-hospital care includes using the Robocov platform for testing in remote and hazardous locations. It can be delivered to quarantine zones and used as a safe two-way communication line for social interaction during quarantine.
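The relation between application areas and modules described in this section can be captured in a small lookup table. The sketch below is illustrative only: the names `AREA_MODULES` and `modules_for` are hypothetical, and it lists only the three areas whose modules the text names explicitly.

```python
from typing import Dict, FrozenSet, Iterable, Set

# Modules per application area, as stated in the text. Areas for which the
# text does not name the modules are intentionally left out.
AREA_MODULES: Dict[str, FrozenSet[str]] = {
    "public safety": frozenset({"disinfecting", "monitoring", "cargo"}),
    "clinical care": frozenset({"disinfecting", "monitoring"}),
    "laboratory and supply chain automation": frozenset({"disinfecting", "cargo"}),
}


def modules_for(areas: Iterable[str]) -> Set[str]:
    """Return the smallest set of modules covering all requested areas."""
    needed: Set[str] = set()
    for area in areas:
        needed |= AREA_MODULES[area.lower()]  # KeyError for an unlisted area
    return needed
```

Taking the union of the per-area sets reflects the cost argument of the paper: only the modules actually required by the selected areas are installed.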

Overall, regardless of the application area, each module’s fundamental task and objectives remain unchanged. These basic tasks can be summarized as disinfection and monitoring, and Figure 24 shows the functional prototypes built to achieve them.

Figure 24.

Prototype of Robocov modules: (a) sanitizing solution module; (b) monitoring module; (c) UV light module.

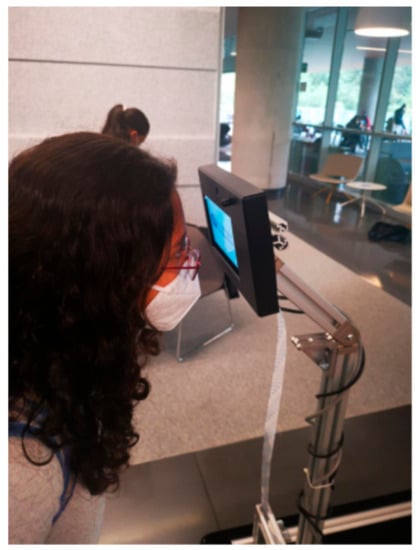

The basic monitoring features are provided through a purpose-designed GUI, which is used to interact with users and identify them through the RFID card reader. Figure 25 shows the implemented interface as well as the positioning of the card reader and the temperature sensor used for the symptoms survey. In addition, Figure 26 shows users operating this module in the field.

Figure 25.

GUI for interaction and identification of users.

Figure 26.

User interaction with the temperature sensor.
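The monitoring flow described above (identify the user with the RFID card, then read the temperature) might be sketched as follows. The names `CheckResult` and `symptom_check` and the 37.5 °C threshold are assumptions made for illustration; the paper does not state the threshold or the data that the GUI stores.

```python
from dataclasses import dataclass
from typing import Optional

FEVER_THRESHOLD_C = 37.5  # assumed threshold; not specified in the paper


@dataclass
class CheckResult:
    card_id: str
    temperature_c: float
    flagged: bool  # True when the reading reaches the assumed threshold


def symptom_check(card_id: Optional[str],
                  temperature_c: Optional[float]) -> Optional[CheckResult]:
    """Combine a card scan and a temperature reading into one record.

    Returns None while either the card or the temperature is still missing,
    so the GUI can keep prompting the user.
    """
    if not card_id or temperature_c is None:
        return None
    return CheckResult(card_id, temperature_c, temperature_c >= FEVER_THRESHOLD_C)
```

Returning `None` for an incomplete check keeps the identification and measurement steps explicit, so a record is never created for an unidentified user.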

An experimental field operation of the disinfection system is illustrated in Figure 27 and Figure 28. The former shows the UV light disinfection module during operation time, while the latter displays the atomizer disinfection module operating in a public space.

Figure 27.

UV module operating in the library.

Figure 28.

Atomizer disinfection module operating in open spaces.

7. User Experience

Robocov was tested in several environments, although it was explicitly designed to be controlled inside the campus. End-users were trained for approximately 15 min; then, they could control Robocov from a remote terminal (see Figure 29) in several tasks. Some end-users’ testimonials are presented below. End-users pointed out that controlling the robot was quite challenging at the beginning because the physical dimensions of the robot are hard to judge on a personal computer’s display. After maneuvering the robot for around 10 min, they could control it. The robot’s controller is based on a video game controller, so usability issues with the controller were avoided, and end-users could operate it without errors. The main problem was judging, from the remote perspective, the actual physical dimensions of the robot and the obstacles. This project does not include a virtual training system that could be used before operating the actual robot to minimize the training time, as was presented in []. The goal of this paper is not to provide a complete study of the usability and functionality of the robot; thus, only general opinions are presented.

Figure 29.

A remote terminal controls Robocov.

David Hernández Martinez (end-user):

“It’s like being inside of a video game. At first, you cannot control it because it is not correctly calculated the distance from the robot to the objects; it becomes easier for you with practice. To control it is easy for me, I am familiar with videogames”.

Sandra Silva Carranza (end-user):

“It was effortless for me to control it. Watching the robot by the screen was difficult because I could not calculate the distances from the robot to the objects well, but I could control it well in a short period”.

Bruno Gómez (end-user):

“It was quite difficult for me at the beginning when I only had to watch the screen because the perspective changes, and you have to be careful not to collide against the walls. But after a while, you can control it well”.

8. Discussion

Since the outbreak of COVID-19, many industrial and educational sectors have been severely affected, shutting down most of their activities to minimize the spread of the disease. Although some countries have attempted to resume particular academic, business, and tourism activities, these have been shut down intermittently due to the resurgence of infections. This indicates that preventive measures beyond social distancing and sanitizing points need to be implemented to better manage the reactivation of these activities. However, given the variability in the possible scenarios, it would be a mistake to assume that one solution will fit all reemerging sectors. In that sense, the introduction of Robocov as a modular system, designed to follow the S4 concept with AI features, can be highly beneficial to the reactivation paradigm. Robocov was designed around a central platform that considers smart, sensing, sustainable, and social features. Because it follows a platform concept, the elements required for specific tasks can be connected to it, making it a reconfigurable system. If Robocov had been designed using a product concept, it would be tailored for a specific task, and the robotic system would be closed to new applications; hence, it could not cover additional end-user needs. Besides, this robotic platform could be used in agriculture or space tasks once the COVID-19 pandemic is over.

The modularity of Robocov is advantageous because its modules can be easily interchanged to fit a specific scenario. Disinfection tasks can be accomplished during and after working hours owing to the available disinfection methods, and the easy interchangeability of these modules makes the disinfection system a flexible solution. For example, the atomizer system can operate in most public spaces; however, this method may not be the best option in certain areas. In those cases, the disinfection modules can be interchanged to fulfill the sanitizing task. Similarly, the UV disinfection module may not perform optimally in highly illuminated spaces. In such cases, the atomizer module can be a better fit for fulfilling the sanitizing objective. It is essential to mention that the interchangeability of these modules does not interfere with the S4 features behind the disinfection system.

In addition to the sanitizing capabilities, Robocov can also be switched to a monitoring system. With this switch, the S4 features change, since the objective of each module is different and requires a different solution at each S4 level. For example, the actuators at the Smart level change from sanitizing devices to speakers when switching from the disinfection to the monitoring system. The monitoring system acts as a second filter inside the institution, helping to supervise students and personnel by running an AI algorithm capable of detecting the correct and incorrect use of face masks and compliance with social distancing norms, and by measuring temperature to avoid infectious scenarios.
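As an illustration of the social distancing check mentioned above, the sketch below flags pairs of people who stand closer than a minimum distance. It is not the algorithm used in Robocov: it assumes that an upstream detector has already produced ground-plane positions in meters, and the 1.5 m limit and the function name are hypothetical.

```python
import math
from itertools import combinations
from typing import List, Sequence, Tuple

MIN_DISTANCE_M = 1.5  # assumed limit; the paper does not state the value


def distance_violations(
    positions_m: Sequence[Tuple[float, float]],
    min_distance_m: float = MIN_DISTANCE_M,
) -> List[Tuple[int, int]]:
    """Return index pairs (i, j), i < j, of people closer than the limit.

    positions_m holds one (x, y) ground-plane position per detected person,
    assumed to come from a separate detection step.
    """
    return [
        (i, j)
        for (i, p), (j, q) in combinations(enumerate(positions_m), 2)
        if math.dist(p, q) < min_distance_m
    ]
```

The pairwise comparison grows quadratically with the number of people, which is acceptable for the small groups a single camera view typically contains.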

Furthermore, the monitoring system can be paired with the disinfection and cargo modules. This cooperative work improves the productivity of the Robocov system, since more tasks can be accomplished by carrying two or more modules at the same time. Overall, the productivity and flexibility of a modular design can benefit the reactivation of the academic and business sectors, allowing a better reintegration of students and workers into a safer and disinfected working area. As mentioned before, the modular S4 design and the AI engines can be combined to design a robot that helps to minimize the impact of COVID-19.

9. Conclusions

The proposed robot, Robocov, is a low-cost robot that can perform several tasks to decrease the impact of COVID-19 using a reconfigurable structure. It integrates S4 features and AI, allowing it to meet end-user requirements such as sanitization and package delivery. Temperature is a critical variable for detecting infected people, so temperature sensors were selected instead of thermal cameras, which would increase the cost of the whole robot. Moreover, face mask detection and social distance detection are essential tasks that were added to the robot’s detection system. The remote navigation system has a supportive, intelligent control system to avoid obstacles. In future work, the navigation could be made completely autonomous with another implementation of AI. Social features are integrated, since the interaction with the operator must be improved according to the operator’s behavior and needs. In addition, the robot is reconfigurable around a central element, the main platform with the traction system, and a central microprocessor that allows different devices to connect to the robot. Using the S4 concept and AI, it is possible to design high-performance, low-cost robots that can perform the complex tasks required to decrease the impact of the COVID-19 pandemic.

Author Contributions

Conceptualization, P.P., O.M., E.P., J.R.L., A.M. and T.M.; Data curation, P.P., O.M., E.P., J.R.L. and A.M.; Formal analysis, P.P., O.M., J.R.L. and A.M.; Funding acquisition, P.P., O.M., E.P. and A.M.; Investigation, P.P., E.P., J.R.L., A.M. and T.M.; Methodology, P.P., O.M., E.P., A.M. and T.M.; Project administration, P.P., O.M. and A.M.; Resources, P.P. and A.M.; Software, P.P., O.M., E.P. and J.R.L.; Supervision, P.P. and T.M.; Validation, P.P., E.P., J.R.L. and T.M.; Visualization, P.P., O.M., J.R.L. and T.M.; Writing—original draft, P.P. and J.R.L.; Writing—review & editing, P.P. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Data Availability Statement

Not applicable; the study does not report any data.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Ciotti, M.; Ciccozzi, M.; Terrinoni, A.; Jiang, W.C.; Wang, C.B.; Bernardini, S. The COVID-19 pandemic. Crit. Rev. Clin. Lab. Sci. 2020, 57, 365–388.

- Tandon, R. The COVID-19 pandemic, personal reflections on editorial responsibility. Asian J. Psychiatry 2020, 50, 102100.

- Yang, G.Z.; Nelson, B.J.; Murphy, R.R.; Choset, H.; Christensen, H.; Collins, S.H.; Dario, P.; Goldberg, K.; Ikuta, K.; Jacobstein, N.; et al. Combating COVID-19—The role of robotics in managing public health and infectious diseases. Sci. Robot. 2020, 5, eabb5589.

- Méndez Garduño, I.; Ponce, P.; Mata, O.; Meier, A.; Peffer, T.; Molina, A.; Aguilar, M. Empower saving energy into smart homes using a gamification structure by social products. In Proceedings of the 2020 IEEE International Conference on Consumer Electronics (ICCE), Taoyuan, Taiwan, 28–30 September 2020; pp. 1–7.

- Ponce, P.; Meier, A.; Miranda, J.; Molina, A.; Peffer, T. The Next Generation of Social Products Based on Sensing, Smart and Sustainable (S3) Features: A Smart Thermostat as Case Study. IFAC-PapersOnLine 2019, 52, 2390–2395.

- Miranda, J.; Ponce, P.; Molina, A.; Wright, P. Sensing, smart and sustainable technologies for Agri-Food 4.0. Comput. Ind. 2019, 108, 21–36.

- Spampinato, C.; Palazzo, S.; Kavasidis, I.; Giordano, D.; Shah, M.; Souly, N. Deep Learning Human Mind for Automated Visual Classification. arXiv 2019, arXiv:1609.00344.

- Suresh, K.; Palangappa, M.B.; Bhuvan, S. Face Mask Detection by using Optimistic Convolutional Neural Network. In Proceedings of the 2021 6th International Conference on Inventive Computation Technologies (ICICT), Coimbatore, India, 20–22 January 2021; IEEE: Piscataway, NJ, USA, 2021; pp. 1084–1089.

- Barfoot, T.; Burgner-Kahrs, J.; Diller, E.; Garg, A.; Goldenberg, A.; Kelly, J.; Liu, X.; Naguib, H.E.; Nejat, G.; Schoellig, A.P.; et al. Making Sense of the Robotized Pandemic Response: A Comparison of Global and Canadian Robot Deployments and Success Factors. arXiv 2020, arXiv:2009.08577.

- Chow, L. Care homes and COVID-19 in Hong Kong: How the lessons from SARS were used to good effect. Age Ageing 2020, 50, 21–24.

- Miranda, J.; Pérez-Rodríguez, R.; Borja, V.; Wright, P.K.; Molina, A. Sensing, smart and sustainable product development (S3product) reference framework. Int. J. Prod. Res. 2019, 57, 4391–4412.

- Aymerich-Franch, L.; Ferrer, I. The implementation of social robots during the COVID-19 Pandemic. arXiv 2021, arXiv:2007.03941.

- Ponce, P.; Meier, A.; Méndez, J.I.; Peffer, T.; Molina, A.; Mata, O. Tailored gamification and serious game framework based on fuzzy logic for saving energy in connected thermostats. J. Clean. Prod. 2020, 262, 121167.

- Molina, A.; Ponce, P.; Miranda, J.; Cortés, D. Enabling Systems for Intelligent Manufacturing in Industry 4.0: Sensing, Smart and Sustainable Systems for the Design of S3 Products, Processes, Manufacturing Systems, and Enterprises. In Production & Process Engineering; Springer: Berlin/Heidelberg, Germany, 2021; p. 376.

- Wang, F.; Preininger, A. AI in Health: State of the Art, Challenges, and Future Directions. Yearb. Med. Inform. 2019, 28, 16–26.

- Mata, B.U. Face Mask Detection Using Convolutional Neural Network. J. Nat. Remedies 2021, 21, 14–19.

- Tomás, J.; Rego, A.; Viciano-Tudela, S.; Lloret, J. Incorrect Facemask-Wearing Detection Using Convolutional Neural Networks with Transfer Learning. In Healthcare; Multidisciplinary Digital Publishing Institute: Basel, Switzerland, 2021; Volume 9, p. 1050.

- Ramadass, L.; Arunachalam, S.; Sagayasree, Z. Applying deep learning algorithm to maintain social distance in public place through drone technology. Int. J. Pervasive Comput. Commun. 2020, 16, 223–234.

- Vo, N.; Jacobs, N.; Hays, J. Revisiting IM2GPS in the Deep Learning Era. arXiv 2017, arXiv:1705.04838.

- Gao, X.W.; Hui, R.; Tian, Z. Classification of CT brain images based on deep learning networks. Comput. Methods Programs in Biomed. 2017, 138, 49–56.

- OwaisAli Chishti, S.; Riaz, S.; BilalZaib, M.; Nauman, M. Self-Driving Cars Using CNN and Q-Learning. In Proceedings of the 2018 IEEE 21st International Multi-Topic Conference (INMIC), Karachi, Pakistan, 1–2 November 2018; pp. 1–7.

- Ponce, P.; Ramirez Figueroa, F.D. Intelligent Control Systems with LabVIEW™; Springer Science & Business Media: Berlin/Heidelberg, Germany, 2010; pp. 1–216.

- Murphy, R.R.; Gandudi, V.B.M.; Adams, J. Applications of Robots for COVID-19 Response. arXiv 2020, arXiv:2008.06976.

- Ponce, P.; Molina, A.; Mendoza, R.; Ruiz, M.A.; Monnard, D.G.; Fernández del Campo, L.D. Intelligent Wheelchair and Virtual Training by LabVIEW. In Mexican International Conference on Artificial Intelligence; Sidorov, G., Hernández Aguirre, A., Reyes García, C.A., Eds.; Springer: Berlin/Heidelberg, Germany, 2010; pp. 422–435.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).