1. Introduction

The term big data refers to data whose characteristics, such as volume, velocity, veracity, and variety, have grown so massively that traditional data management systems cannot handle them. Modern data analytics techniques are used to manage such massive data. With the advent of next generation networks, the number of wireless devices is increasing rapidly. According to the index issued by Cisco, the number of wireless devices already exceeded the world population in 2014 [1]. With such a diverse range of connected devices, an explosion in data is to be expected. To handle this massive source of data efficiently and extract valuable insights from it, modern data analytics algorithms (big data analytics) will be applied.

During the last few years, next generation networks (5G) have gained widespread attention within the research community. Given that 4G is now a well-established technology across the globe, the opportunities and challenges of 5G and its underlying technologies are being explored. Several breakthroughs have been discussed in the literature, the most significant of which are ultra-dense networks, massive MIMO (Multiple-Input Multiple-Output), and millimeter waves (mmWaves) [2]. Besides these, other technologies such as device-to-device communication, two-layer architecture, cognitive radio based architecture, and cloud based architecture [3] are also important for next generation networks. For these technologies, big data analytics and the machine learning paradigm will play a key enabling role. Due to the rapidly growing wave of big data in wireless networks, big data analytics is becoming central both to wireless communication and to efficient network management [4]. Some efforts have already shown the importance of big data analytics in next generation networks, such as a big data based model for optimizing next generation networks [5], and the importance of machine learning in enabling next generation networks [6,7].

With the advent of 5G, the number of wireless devices is projected to be in hundreds of billions [

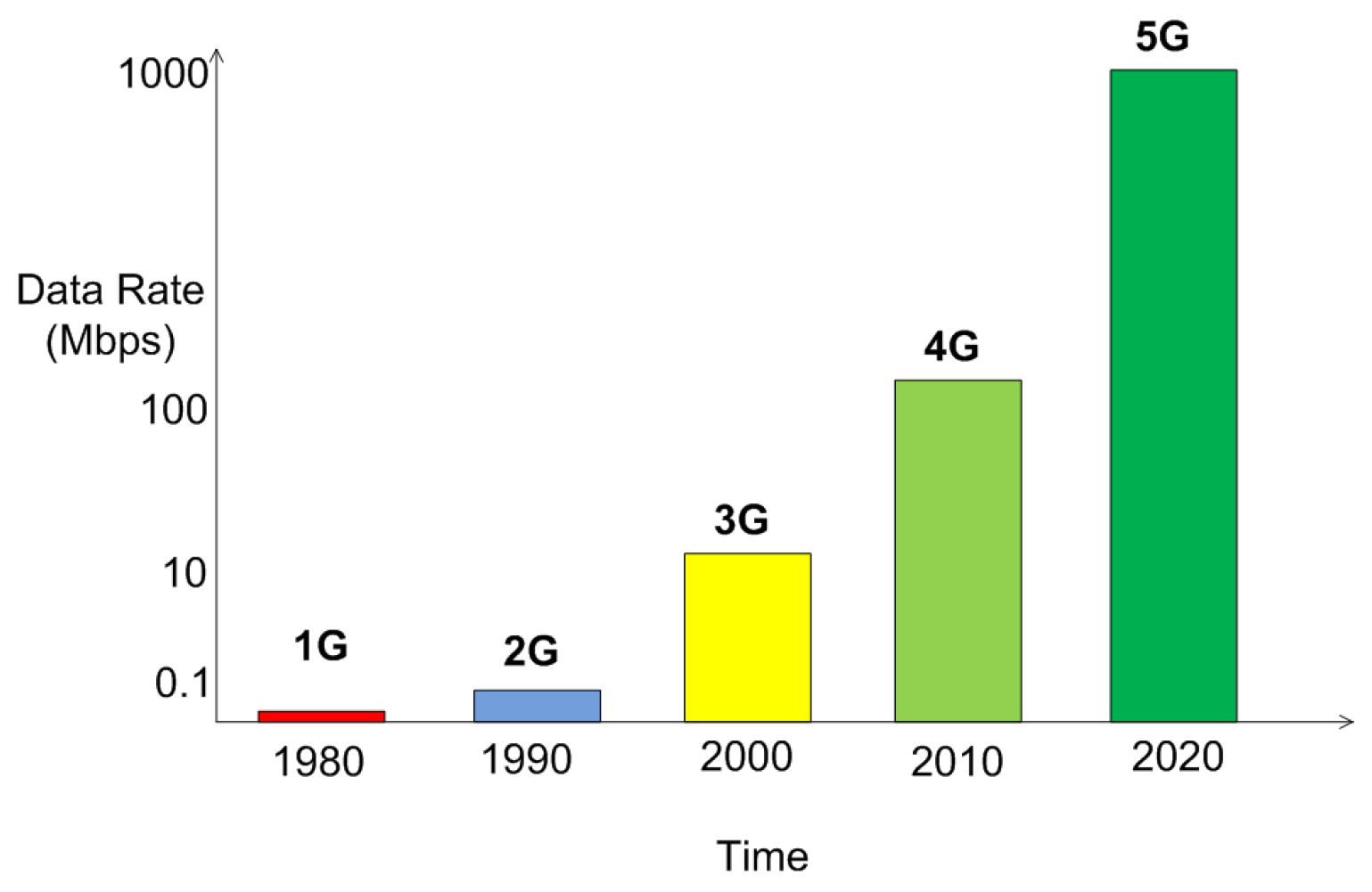

2]. Consequently, the required data rates are also increasing because the applications running on these devices are bandwidth-hungry. A comparison of the exponential growth in data rate is shown in

Figure 1.

Challenges for 5G include the use of high frequency spectrum (millimeter waves), much higher data rates (on the order of gigabits per second), and efficient allocation and re-allocation of bandwidth. 5G is of great interest to the research community because it will open a new era in communication and because one of its major backbones is the Internet of Things (IoT), which will connect billions of devices through a high-rate, ubiquitous, low-latency network. In summary, three major technologies will contribute significantly to 5G: ultra densification, millimeter wave (mmWave), and massive MIMO. Waveform transmission, the underlying network infrastructure, and the energy efficiency of communication are also significant issues for 5G.

5G networks are expected to be intelligent and proactive. Artificial intelligence technologies such as machine learning, advanced machine learning techniques, and big data analytics could therefore play a significant part in 5G networks. In this work, we discuss the significance of big data, big data analytics, and the machine learning paradigm in bringing next generation networks from theory to reality. To the best of our knowledge, this is the first work that presents all these issues together. The rest of this work is organized as follows. In Section 2, we present the key features required for a 5G network and the major technologies that will be adopted in 5G. We then provide insight into 5G from the perspective of big data analysis in Section 3. We briefly highlight some open issues in Section 4. Finally, we conclude the paper in Section 5.

2. Major Milestones of Next Generation Networks

While several requirements can be set for 5G, not all of them need to be fulfilled simultaneously, and they may vary across scenarios. For example, video transmission requires a high data rate, whereas its latency and reliability requirements can be relaxed. In contrast, autonomous cars require low latency and high reliability, whereas the data rate can be compromised slightly. A visual representation of the required features of 5G is shown in Figure 2. According to the literature, the engineering requirements for 5G include higher data rates, close-to-zero latency, device scalability, spectral efficiency, and cost efficiency.

Close-to-zero latency and maximum throughput are key features of next generation networks. Achieving them requires efficient and reliable inter-cell and intra-cell hand-off (or handover).

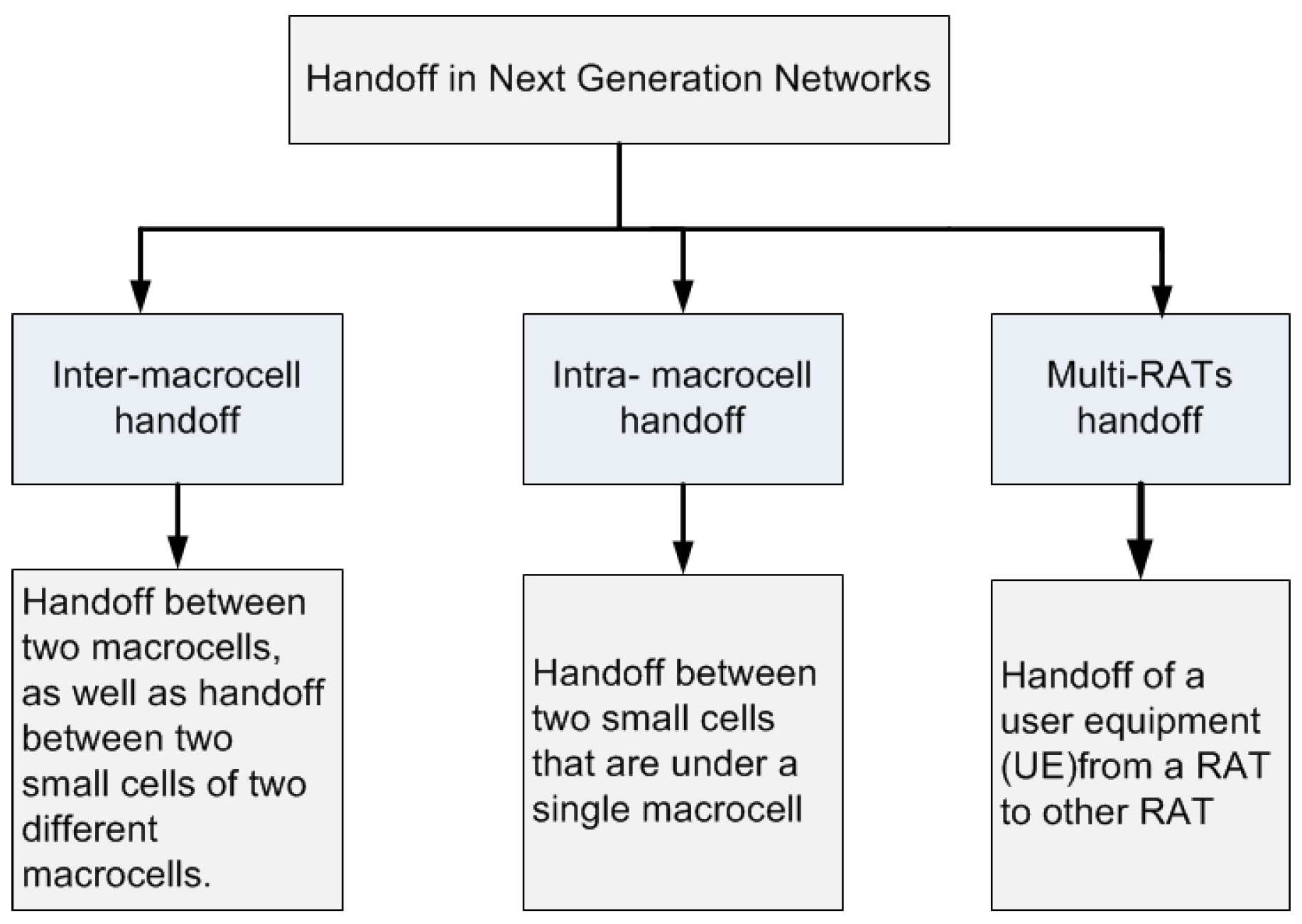

Hand-off refers to the process of transferring an ongoing connection from one channel (a frequency, time slot, spreading code, or a combination of them) or cell to another without degrading the quality of service. Hand-off management is therefore critical for next generation networks, and their core characteristics, namely ultra-densification, high mobility, and access to multiple radio access technologies (RATs), make it more complex. Next generation networks will be ultra-dense, combining microcells and macrocells, and will thus involve different types of hand-offs, such as inter-macrocell, intra-macrocell, and multi-RAT hand-offs, as shown in Figure 3.

Hand-off management has recently attracted considerable attention in the research community [8]. Arani et al. [9] proposed an algorithm based on the estimated load of base stations (BSs), in which hand-offs are shifted from heavily loaded BSs to lightly loaded ones, thereby improving the quality of service. A user-centered multi-objective hand-off scheme has been presented in [10]. In this technique, the user calculates the achievable data receiving rate and the blocking probability of each candidate BS before selecting a new BS for hand-off. Through this mechanism, the achievable data receiving rate increases and the data blocking probability decreases. Other useful studies on hand-off include hand-off management in software-defined 5G networks, predictive hand-off mechanisms, and fast and secure handover for 5G [11,12,13].
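To make the two selection criteria concrete, the following Python sketch contrasts a purely load-based choice of target BS, in the spirit of [9], with a user-centric choice that trades achievable rate against blocking probability, in the spirit of [10]. The `Candidate` fields, the weighted-sum score, and its weights are hypothetical assumptions for illustration, not the formulations used in those papers.

```python
from dataclasses import dataclass

@dataclass
class Candidate:
    name: str
    load: float                  # estimated load, 0 (idle) to 1 (full); hypothetical
    achievable_rate_mbps: float  # rate the user expects from this BS; hypothetical
    blocking_prob: float         # probability a new session is blocked; hypothetical

def load_aware_target(candidates):
    """Load-based rule: hand off to the least-loaded candidate BS."""
    return min(candidates, key=lambda c: c.load)

def user_centric_target(candidates, rate_weight=1.0, blocking_weight=100.0):
    """User-centric rule: reward achievable rate, penalize blocking probability.
    The weighted sum and its weights are an assumption made for this sketch."""
    def score(c):
        return rate_weight * c.achievable_rate_mbps - blocking_weight * c.blocking_prob
    return max(candidates, key=score)

cands = [
    Candidate("BS-A", load=0.9, achievable_rate_mbps=120.0, blocking_prob=0.50),
    Candidate("BS-B", load=0.4, achievable_rate_mbps=80.0, blocking_prob=0.02),
    Candidate("BS-C", load=0.2, achievable_rate_mbps=40.0, blocking_prob=0.01),
]
print(load_aware_target(cands).name)    # BS-C
print(user_centric_target(cands).name)  # BS-B
```

With these made-up numbers the two rules disagree: the load-based rule picks the nearly idle BS-C, while the user-centric rule accepts the moderately loaded BS-B because it offers a much higher rate at a low blocking probability.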

One of the aims of 5G networks is to achieve a thousand-fold higher data rate than existing cellular networks, together with close-to-zero latency. To achieve these goals, 5G will rely mainly on the following key technologies:

Ultra Densification

Millimeter Wave

Massive MIMO

In the following text, we discuss these key technologies in more detail.

2.1. Ultra Dense Networks

Cell shrinking has been the most effective way of improving network performance and has therefore gained considerable attention within the research community [14,15]. In the early 1980s, cell sizes were on the order of hundreds of square kilometers. With the growth of new technologies, cells have shrunk steadily, e.g., to micro-cells and pico-cells. (In Japan, the spacing between BSs is about two hundred meters, with coverage areas of about one-tenth of a square kilometer [2].) Cell shrinking has numerous benefits. One of the most significant is frequency reuse: small cells that are sufficiently far apart can use the same frequency spectrum without significant interference. Another benefit is a reduction in the traffic load on each base station [16]. However, network densification also raises challenges, such as optimal user association, mobility support, and cost.

2.1.1. Base Station Densification Gain

Suppose a network with base station density $\lambda_1$ achieves a data rate $R_1$, and a denser network with base station density $\lambda_2 > \lambda_1$ achieves a data rate $R_2$. The base station densification gain $\rho$ can then be written as:

$$\rho = \frac{(R_2 - R_1)/R_1}{(\lambda_2 - \lambda_1)/\lambda_1}$$

“The densification gain is the slope of the rate increase over the density range.” For example, if the density of a network is doubled and its data rate increases by 50 percent, then the densification gain $\rho$ will be 0.5. For cellular networks, the densification gain is generally assumed to satisfy $\rho \le 1$ [2]. For mmWave frequencies, a densification gain $\rho > 1$ might be possible: because mmWave communication is largely noise-limited, increasing the density may increase the signal-to-interference-plus-noise ratio (SINR) dramatically [17].
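The densification gain, defined as the relative rate increase divided by the relative density increase, rho = ((R2 - R1)/R1) / ((lambda2 - lambda1)/lambda1), can be checked numerically with a few lines of Python. The function name and the units in the example (BSs per square kilometer and Mbps) are illustrative assumptions; only the ratios matter.

```python
def densification_gain(density_1, density_2, rate_1, rate_2):
    """rho = ((R2 - R1) / R1) / ((lambda2 - lambda1) / lambda1), for lambda2 > lambda1."""
    if density_2 <= density_1:
        raise ValueError("density_2 must exceed density_1")
    relative_rate_increase = (rate_2 - rate_1) / rate_1
    relative_density_increase = (density_2 - density_1) / density_1
    return relative_rate_increase / relative_density_increase

# Worked example from the text: doubling the density raises the rate by 50 percent.
print(densification_gain(10.0, 20.0, 100.0, 150.0))  # 0.5
```

A value above 1 would mean the rate grows faster than the density, the super-linear regime that the text suggests may be reachable at mmWave frequencies.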

2.1.2. Optimal User Association

As we move towards 5G, network heterogeneity will increase considerably. 5G networks will be integrated with heterogeneous networks, enabling devices of different standards and protocols to interoperate [18]. Hence, a key challenge in 5G networks is to determine which standard and spectrum will be used and which users will be associated with which base station. This problem is called optimal user association. In general, optimal user association depends on the Signal-to-Interference plus Noise Ratio (SINR) from every user to every base station, the instantaneous load at each base station, and the choices of the other users in the network. Because these factors are coupled, the exact problem is complex, and simpler approaches are required [19,20]. An existing approach for tackling this problem is biasing and blanking [2].

Biasing means pushing users off heavily loaded macrocells onto lightly loaded small cells. In this way, the offloaded users can access more resources in the small cells, and the users remaining in the macrocells also obtain resources more easily. Biasing is thus a way to ensure that every user gets resources.

Blanking means shutting off macrocell transmissions for a fraction of time while the network serves the biased small-cell users. Because these users were pushed away from a stronger macrocell, that macrocell is their dominant interferer, so blanking it increases their SINR. This simple approach of biasing the small cells and blanking the macrocells can increase the edge rate by as much as 500 percent. Biasing and blanking is thus a very useful and simple approach for resource allocation in 5G networks [21,22].
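Biasing and blanking can be sketched as follows, assuming a single user that hears one macrocell and one small cell, received powers in dBm, and a fixed noise floor; the class, the function names, and all numbers are hypothetical. Biasing adds a fixed offset (in dB) to small-cell received power during association, and blanking is modeled by removing macrocell interference while the small-cell user is served.

```python
import math
from dataclasses import dataclass

@dataclass
class BaseStation:
    name: str
    tier: str            # "macro" or "small"
    rx_power_dbm: float  # received power at this user, in dBm (hypothetical)

def dbm_to_mw(dbm: float) -> float:
    return 10 ** (dbm / 10)

def associate(stations, small_cell_bias_db: float) -> BaseStation:
    """Biasing: choose the BS with the highest received power after adding
    a fixed bias (in dB) to every small cell."""
    def biased_power(bs):
        return bs.rx_power_dbm + (small_cell_bias_db if bs.tier == "small" else 0.0)
    return max(stations, key=biased_power)

def sinr_db(serving, stations, noise_dbm: float, blank_macro: bool) -> float:
    """SINR of a user served by `serving`; with blanking, macrocells are silent
    and contribute no interference during the blanked fraction of time."""
    interference_mw = sum(
        dbm_to_mw(bs.rx_power_dbm)
        for bs in stations
        if bs is not serving and not (blank_macro and bs.tier == "macro")
    )
    signal_mw = dbm_to_mw(serving.rx_power_dbm)
    return 10 * math.log10(signal_mw / (interference_mw + dbm_to_mw(noise_dbm)))

macro = BaseStation("macro", "macro", -70.0)
small = BaseStation("small", "small", -78.0)
stations = [macro, small]
print(associate(stations, 0.0).name)   # macro: strongest unbiased signal
print(associate(stations, 10.0).name)  # small: -78 dBm + 10 dB bias beats -70 dBm
print(round(sinr_db(small, stations, -100.0, blank_macro=False), 1))  # -8.0
print(round(sinr_db(small, stations, -100.0, blank_macro=True), 1))   # 22.0
```

The sketch ignores the cost of blanking: macrocell users receive nothing during the blanked fraction of time, so in practice the bias and the blanked fraction need to be chosen jointly.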

2.1.3. Mobility Support

Due to the increasing heterogeneity and densification of networks, mobility support has become a major challenge. As ever more data are served to users, mobility support and always-on connectivity have become critically important features of cellular networks. An ad hoc solution, referred to as the concept of a specific virtual cell, was adopted in LTE Release 11 [23]. With virtual cells, the user can move and communicate with any BS without the need for hand-off. In 5G, however, the use of the mmWave spectrum makes hand-off more challenging: mmWave links rely on narrow beams that must be aligned to communicate, so conventional seamless hand-off may not be possible in 5G. A beam alignment scheme for communication with multiple BSs has been proposed in [24].

2.1.4. Cost

In 5G, the emphasis is on cell shrinking: cells will be small, and BSs will likewise be smaller and low-powered. Small cell deployment raises cost challenges, e.g., the rent for small cell sites, ensuring a reliable backhaul connection, and providing enterprise-grade quality of service [25]. Besides cell shrinking, another approach, device-to-device (D2D) communication, is useful for reducing capital and operational expenditures. In this approach, users located close enough to each other establish a direct connection instead of communicating via base stations, which tends to reduce power consumption, increase the data rate, and improve latency. A scheme for protection against interference is proposed in [26].

2.2. Millimeter Wave

Extremely high frequency (EHF) waves are termed mmWaves. mmWaves have wavelengths ranging from 10 mm down to 1 mm (corresponding to frequencies from 30 GHz to 300 GHz). Much of this spectrum would otherwise lie idle. In the past, the mmWave spectrum was considered unsuitable for communication because of factors such as strong path loss, atmospheric and rain absorption, poor diffraction and penetration, and high equipment cost. However, with the increasing demand for higher data rates, the focus soon shifted toward using the mmWave spectrum, and the idea of using it in future communication networks (5G) has become widespread [27,28,29]. Nevertheless, there are some challenges in adopting the mmWave spectrum, which we discuss in the subsequent section.
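The wavelength range quoted for mmWaves follows from lambda = c/f, and the strong path loss mentioned as a historical obstacle can be illustrated with the Friis free-space path loss, 20*log10(4*pi*d*f/c), which assumes isotropic antennas. The short Python sketch below computes both; the 100 m link distance is an arbitrary example value.

```python
import math

SPEED_OF_LIGHT = 299_792_458.0  # m/s

def wavelength_mm(freq_hz: float) -> float:
    """Free-space wavelength in millimeters."""
    return SPEED_OF_LIGHT / freq_hz * 1e3

def free_space_path_loss_db(distance_m: float, freq_hz: float) -> float:
    """Friis free-space path loss between isotropic antennas: 20*log10(4*pi*d*f/c)."""
    return 20 * math.log10(4 * math.pi * distance_m * freq_hz / SPEED_OF_LIGHT)

for f_ghz in (3, 30, 300):
    f = f_ghz * 1e9
    print(f"{f_ghz:>3} GHz: wavelength {wavelength_mm(f):7.2f} mm, "
          f"FSPL at 100 m {free_space_path_loss_db(100, f):6.1f} dB")
```

Each tenfold increase in frequency adds 20 dB of free-space loss between isotropic antennas. For a fixed physical antenna size, however, achievable antenna gain grows with frequency, which is one reason mmWave systems rely on large antenna arrays and beamforming.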

2.3. Massive MIMO

The concept of MIMO was proposed earlier by Foschini et al. [32], but it was only deployed in WiFi systems and 3G cellular networks around 2006. In a MIMO system, multiple antennas are available at the BSs and at the mobile devices. In single-user MIMO (SU-MIMO), a transmitter with multiple antennas communicates with a single user or mobile device. In multi-user MIMO (MU-MIMO), by contrast, a transmitter with multiple antennas communicates concurrently with multiple users or devices. A comparison of some technologies for 5G is shown in Table 1. When LTE was developed, there were two to four antennas per mobile device and as many as eight antennas per base station sector [33]. With further developments in MIMO, the term massive MIMO was gradually adopted.
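The benefit of multiple antennas can be illustrated with the standard capacity expression for a narrowband MIMO channel with equal power per transmit antenna and no channel knowledge at the transmitter, C = log2 det(I + (SNR/N_t) H H^H). The sketch below assumes NumPy and an i.i.d. Rayleigh fading channel; it is an illustration of the spatial multiplexing idea, not a model of any system in Table 1.

```python
import numpy as np

def mimo_capacity(H, snr_linear):
    """Capacity (bit/s/Hz) of y = Hx + n with equal power per transmit antenna
    and no channel knowledge at the transmitter."""
    n_rx, n_tx = H.shape
    gram = np.eye(n_rx) + (snr_linear / n_tx) * (H @ H.conj().T)
    _, logdet = np.linalg.slogdet(gram)  # numerically stable log-determinant (natural log)
    return float(logdet / np.log(2))

rng = np.random.default_rng(0)
snr = 10 ** (10 / 10)  # 10 dB
for n in (1, 2, 4, 8):
    # i.i.d. Rayleigh fading: unit-variance circularly symmetric complex Gaussian entries
    H = (rng.standard_normal((n, n)) + 1j * rng.standard_normal((n, n))) / np.sqrt(2)
    print(f"{n}x{n}: {mimo_capacity(H, snr):.2f} bit/s/Hz")
```

Individual random realizations fluctuate, but capacity grows roughly in proportion to the number of antennas, which is the spatial multiplexing gain that motivates scaling MIMO up.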

2.3.1. Features of Massive MIMO

Massive MIMO is expected to provide improved spectral efficiency, a smooth channel response, and a simplified transceiver design. Because of these promising benefits, massive MIMO has become an important part of 5G technology. Moreover, the front end could be cheaper in massive MIMO, as many low-power amplifiers are cheaper than a single high-power amplifier, and cabling in the RF chain also becomes cheaper. In addition, massive MIMO is expected to be highly power efficient, so the operating cost of the BS should be lower. However, there are several challenges in implementing massive MIMO, which we briefly outline in the following text.

2.3.2. Challenges with Massive MIMO

The massive MIMO technique has become an important part of 5G given its promising features [34]. However, several challenges must be addressed before massive MIMO can be deployed in practice.

Pilot Contamination: “The interference among pilots in different cells is called pilot contamination.” Pilot contamination degrades channel estimation because the channel state information (CSI) in one cell becomes contaminated by interference from users in other cells that reuse the same pilot sequences. Unlike noise and uncorrelated interference, pilot contamination does not average out as the number of BS antennas increases, which makes it a fundamental problem for massive MIMO design. Although various methods have been proposed for reducing pilot contamination [35], a more suitable design for mitigating it is still required.
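Why pilot contamination does not disappear with more antennas can be seen in a toy least-squares (LS) channel estimate. The sketch assumes NumPy, i.i.d. Rayleigh channels, unit pilot power, one desired user, and one user in a neighboring cell that reuses the same pilot with a hypothetical path-loss factor `interferer_gain`; all parameter values are illustrative.

```python
import numpy as np

def complex_gaussian(rng, size, variance=1.0):
    """Circularly symmetric complex Gaussian samples with the given variance."""
    return np.sqrt(variance / 2) * (rng.standard_normal(size) + 1j * rng.standard_normal(size))

def ls_estimation_error(num_antennas, interferer_gain, pilot_snr, shared_pilot, seed=0):
    """Mean per-antenna squared error of an LS channel estimate at a BS with
    `num_antennas` antennas (unit pilot power, so the LS estimate is the received pilot)."""
    rng = np.random.default_rng(seed)
    h_own = complex_gaussian(rng, num_antennas)                     # desired user
    h_other = complex_gaussian(rng, num_antennas, interferer_gain)  # neighbouring-cell user
    noise = complex_gaussian(rng, num_antennas, 1.0 / pilot_snr)
    received_pilot = h_own + noise
    if shared_pilot:
        received_pilot = received_pilot + h_other  # same pilot reused in the other cell
    h_est = received_pilot
    return float(np.mean(np.abs(h_est - h_own) ** 2))

for m in (16, 256, 4096):
    shared = ls_estimation_error(m, 0.5, 100.0, shared_pilot=True)
    orthogonal = ls_estimation_error(m, 0.5, 100.0, shared_pilot=False)
    print(f"M={m:5d}: shared pilot {shared:.3f}, orthogonal pilots {orthogonal:.3f}")
```

The per-antenna error stays close to `interferer_gain + 1/pilot_snr` (about 0.51 here) no matter how many antennas are added when the pilot is shared, whereas orthogonal pilots leave only the noise term (about 0.01).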

Architectural Design: One of the key challenges for massive MIMO is the design of its overall architecture. In previous technologies, a high-power amplifier fed a sector antenna, whereas massive MIMO will use thousands of small antennas fed by low-power amplifiers, most likely with a separate power amplifier for each antenna. Thus, coupling between antennas, correlation, and scalability are the main issues in designing massive MIMO [2].

Full Dimension MIMO: Existing BS antennas use horizontal beamforming, which can accommodate only a limited number of antennas. In full dimension MIMO (FD-MIMO), azimuth and elevation beamforming work simultaneously, so FD-MIMO can accommodate many more antennas. Besides this, vertical beamforming increases the signal power and reduces interference to neighboring cells. Hence, both the average data rate and the edge rate increase in FD-MIMO [2]. Although FD-MIMO has promising benefits, some challenges regarding implementation and complexity must be considered. The challenges in FD-MIMO are:

Channel estimation for a large number of channels,

Channel estimation complexity,

Interference between the azimuth and elevation domains, and

MU-MIMO scheduling issues.
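The difference between horizontal-only and full-dimension beamforming can be sketched with the response of a uniform planar array (UPA), formed as the Kronecker product of a horizontal and a vertical uniform linear array response. The sketch assumes NumPy, half-wavelength element spacing, a vertical array plane, azimuth measured from broadside, and elevation measured from the horizon; these conventions and the 8 x 4 array size are our assumptions.

```python
import numpy as np

def ula_response(num_elements, phase_per_element):
    """Unit-norm uniform linear array response; phase step is pi * phase_per_element."""
    n = np.arange(num_elements)
    return np.exp(1j * np.pi * n * phase_per_element) / np.sqrt(num_elements)

def upa_response(n_h, n_v, azimuth, elevation):
    """UPA response: the horizontal phase depends on azimuth and elevation,
    the vertical phase on elevation only (half-wavelength spacing)."""
    a_h = ula_response(n_h, np.sin(azimuth) * np.cos(elevation))
    a_v = ula_response(n_v, np.sin(elevation))
    return np.kron(a_h, a_v)

def array_gain(weights, n_h, n_v, azimuth, elevation):
    """Beamforming gain |w^H a|^2 scaled by the element count (N for a matched beam)."""
    a = upa_response(n_h, n_v, azimuth, elevation)
    return float(np.abs(np.vdot(weights, a)) ** 2 * n_h * n_v)

n_h, n_v = 8, 4
target = (np.deg2rad(20), np.deg2rad(-10))  # user slightly below the horizon
w = upa_response(n_h, n_v, *target)          # matched 3D (azimuth + elevation) beam
g_target = array_gain(w, n_h, n_v, *target)
g_other = array_gain(w, n_h, n_v, np.deg2rad(20), np.deg2rad(15))  # same azimuth, other elevation
print(round(g_target, 2), round(g_other, 2))
```

The matched beam reaches the full array gain of 32 toward the target, while a user at the same azimuth but a different elevation receives far less, which is the elevation selectivity that horizontal-only beamforming cannot provide.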

Channel Modeling: Besides architectural design issues, channel modeling for massive MIMO systems is also a challenge. Under non-ideal conditions, whether the channels of different users are actually close to orthogonal needs to be verified. A further road map for channel modeling is expected to emerge from the 3D channel modeling study [36]. Channel models that may be adopted for 5G networks have been proposed in [37,38].
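Massive MIMO analyses often assume that user channels become nearly orthogonal as the number of BS antennas grows (so-called favorable propagation), which is what measured channels must confirm. Under the idealized assumption of i.i.d. Rayleigh channels, the NumPy sketch below shows the normalized inner product between two users' channels shrinking with the antenna count; correlated real-world channels may behave differently, and the trial count is arbitrary.

```python
import numpy as np

def mean_channel_correlation(num_antennas, trials=200, seed=0):
    """Average |h1^H h2| / (||h1|| ||h2||) for two users with i.i.d. Rayleigh channels."""
    rng = np.random.default_rng(seed)
    shape = (trials, num_antennas)
    h1 = rng.standard_normal(shape) + 1j * rng.standard_normal(shape)
    h2 = rng.standard_normal(shape) + 1j * rng.standard_normal(shape)
    inner = np.abs(np.sum(h1.conj() * h2, axis=1))
    norms = np.linalg.norm(h1, axis=1) * np.linalg.norm(h2, axis=1)
    return float(np.mean(inner / norms))

for m in (4, 64, 1024):
    print(m, round(mean_channel_correlation(m), 3))
```

If measured channels show a correlation that stays high as antennas are added, the orthogonality assumption behind simple linear precoders would not hold, which is why channel modeling remains an open issue.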

The challenges and key enablers are shown in Figure 4.

2.4. Current Standard and Technology Enablers for Next-Generation Networks

Evolutionary technologies such as the mmWave spectrum, ultra-dense networking, and massive MIMO, together with novel application requirements, drive the advancements in next generation networks (5G) [39]. The ongoing industry standardization efforts and visions from [40] are summarized in Table 2.

3. Data Analytics Perspective on Next Generation Networks

With the massive amount of data in 5G and the intelligent decision making it involves, big data and machine learning can rightly be called the two pillars of 5G.

3.1. Big Data in Next Generation Networks

Given the massive advancement in cellular technology and the increase in mobile devices, congestion of mobile networks is to be expected. The resulting big data is challenging to process because it differs significantly from ordinary data. As mentioned above, the number of devices in next generation networks will increase by a factor of one thousand [49,50]. It follows that the data in 5G networks will be massive as well as diverse in nature. Consequently, solutions for handling such big data need to be explored in order to optimize 5G networks. The huge flood of data will hinder the fulfillment of the core requirements and features of 5G networks, so it is very difficult to deploy 5G networks efficiently without handling big data issues. Big data analytics techniques can be used to handle this type of data; we discuss them in the subsequent sections.

Significance of Big Data Analytics

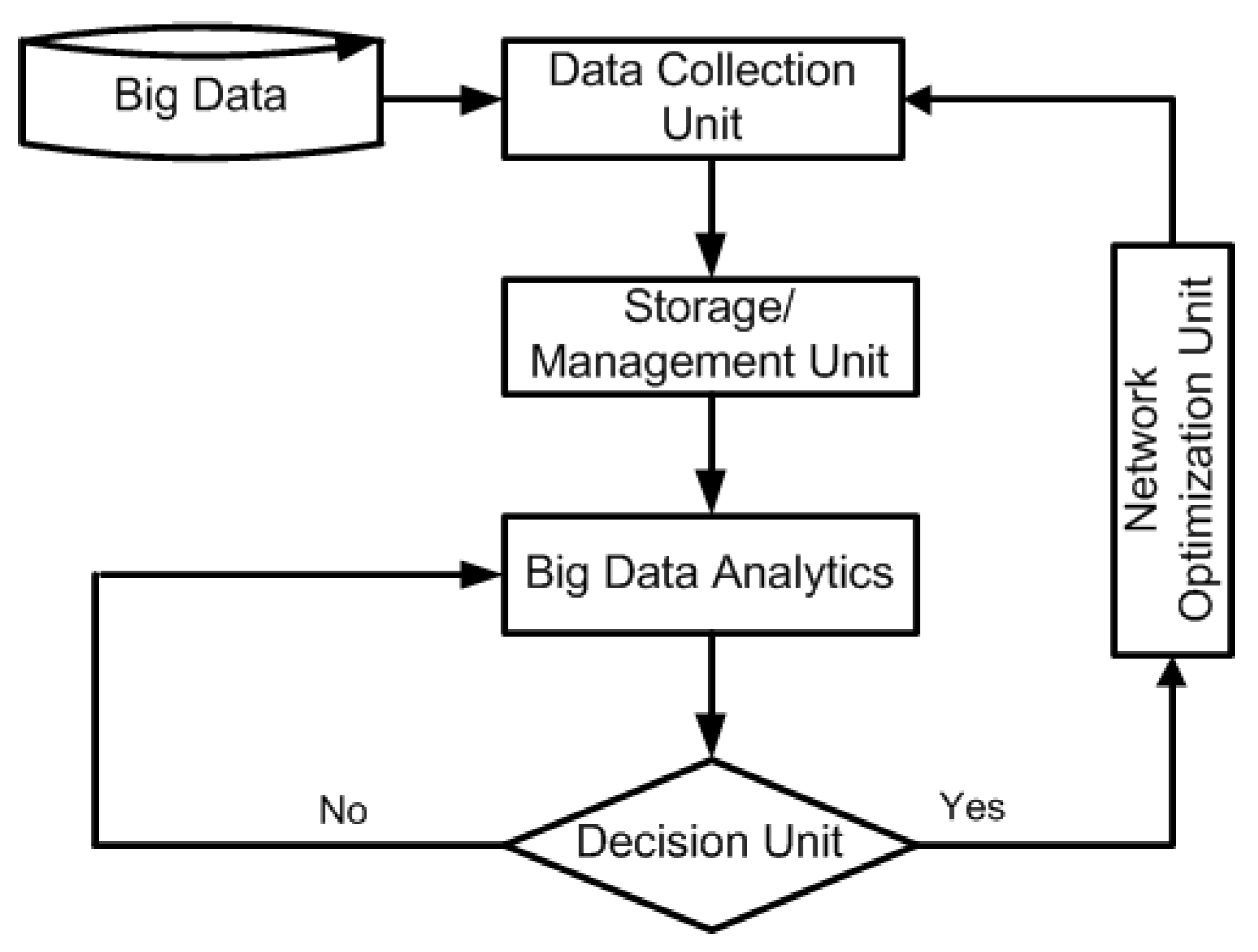

Data analytics is not a new phenomenon, as data analytics algorithms have been in use for decades. However, typical data analytics algorithms cannot handle big data. Big data handling therefore calls for novel big data analytics and advanced machine learning techniques such as deep learning, neural networks, representation learning, transfer learning, active learning, and online learning. With such techniques, big data can be processed efficiently and decisions made accordingly, and raw data can be converted into actionable data. Recently, some frameworks have been proposed that show the significance of big data and big data analytics in next generation networks; we discuss these models below. The flow and significance of big data in 5G networks are illustrated in Figure 5.

Figure 5 represents the flow structure of big data for optimizing next generation networks. It is a closed-loop process. First, big data are collected from user equipment as well as from the network. After collection, the storage and management of such huge data are also critical tasks. The data collected by the collection unit are in raw form, containing irregularities, misleading patterns, and noise, so they cannot be sent directly to the big data analytics unit for processing. Instead, the big data storage/management unit performs data pre-processing and filtering to prepare the data for the analytics unit. On the pre-processed and filtered data, the big data analytics unit performs intelligent decision making, using advanced data analytics and machine learning algorithms to transform the data into useful information. Finally, the useful, actionable data are fed to the network optimization unit. Because the process is a closed loop, the data generated by the optimized network are fed back to the big data storage unit, and the cycle repeats. In this way, the network is continuously optimized.
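The closed loop of Figure 5 can be sketched as a chain of functions, one per unit: collection, storage/management (pre-processing and filtering), analytics, and optimization. In this hypothetical Python sketch the raw data are per-cell load samples and the optimization step simply splits bandwidth in proportion to average load; real analytics units would use far richer models and data.

```python
from statistics import mean

def preprocess(raw):
    """Storage/management unit: drop missing values and physically impossible loads."""
    return [(cell, load) for cell, load in raw if load is not None and 0.0 <= load <= 1.0]

def analyze(clean):
    """Analytics unit: average observed load per cell."""
    per_cell = {}
    for cell, load in clean:
        per_cell.setdefault(cell, []).append(load)
    return {cell: mean(loads) for cell, loads in per_cell.items()}

def optimize(avg_load, total_bandwidth_mhz):
    """Optimization unit: split bandwidth in proportion to average load."""
    total = sum(avg_load.values())
    if total == 0:
        return {cell: 0.0 for cell in avg_load}
    return {cell: total_bandwidth_mhz * load / total for cell, load in avg_load.items()}

def closed_loop(measure, total_bandwidth_mhz, rounds):
    """Each round collects data from the (re-configured) network and re-optimizes it."""
    allocation = None
    for _ in range(rounds):
        raw = measure(allocation)  # collection unit: UE and network measurements
        allocation = optimize(analyze(preprocess(raw)), total_bandwidth_mhz)
    return allocation

def fake_measure(allocation):
    # hypothetical raw samples (cell, load) with a missing value and an outlier
    return [("A", 0.6), ("A", None), ("B", 0.2), ("B", 1.7), ("A", 0.8), ("B", 0.4)]

print(closed_loop(fake_measure, 100.0, rounds=3))  # approximately {'A': 70.0, 'B': 30.0}
```

Here `fake_measure` ignores the previous allocation; in a deployed system the collection unit would observe the network after the last allocation was applied, and that feedback is what closes the loop.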

Recently, Yan et al. [51] presented a similar closed-loop approach for proactive caching and pushing technology. The authors in [51] explained that maximum spectrum utilization and improved capacity gain are made possible by accurately predicting user demands with the aid of big data analytics. A similar idea is presented in [52].

For optimizing next generation networks, Zheng et al. [5] proposed a big data-driven model consisting of four layers:

data acquisition layer,

data management and storage layer,

data analyzing layer, and

network optimization layer.

In the layered model, data are collected from user equipment, the core network, radio access nodes, and Internet service providers. Once collected, the data are moved to the management and storage layer, where performance and capacity can be scaled; this layer performs storage and management operations. After storing the data, the next challenge is to analyze the massive data and extract meaningful information from them. To transform raw data into actionable data, machine learning and data analytics techniques are applied. Lastly, these meaningful data are passed to the network optimization layer, which identifies issues and makes intelligent decisions, e.g., efficient allocation of resources. Hence, the big data-driven model can optimize performance in next generation networks.

Fan et al. [53] proposed an architecture for optimizing next generation networks with the help of mobile big data. In this architecture, the authors analyzed the big data collected from subscribers as well as networks and proposed a dynamic algorithm for bandwidth distribution. In the proposed approach, the information obtained from big data is used to form clusters of subscribers, where users accessing the same content are put into the same cluster. First, the cluster head is chosen. Then, the users adjacent to the cluster head that attempt to access the same content as the cluster head become members of the cluster. Finally, resources are assigned to the cluster head, which then distributes them among the cluster members. Such an architecture increases the throughput of the network. The framework is applicable in scenarios where several users try to access the same content. For example, if a football match is being streamed on the Internet and watched by multiple users, the architecture would help to ensure the best QoS for all of them.
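The clustering and bandwidth-distribution steps described for [53] can be sketched as below. The head-selection rule (best channel quality first), the distance radius, and the equal split of bandwidth among cluster heads are our assumptions for illustration; [53] may use different criteria.

```python
import math
from collections import defaultdict
from dataclasses import dataclass

@dataclass(frozen=True)
class User:
    uid: str
    content: str            # content being requested
    x: float                # position in meters (hypothetical)
    y: float
    channel_quality: float  # hypothetical metric used to choose the cluster head

def form_clusters(users, radius_m):
    """Group users requesting the same content around a cluster head."""
    by_content = defaultdict(list)
    for u in users:
        by_content[u.content].append(u)
    clusters = []
    for group in by_content.values():
        # assumption: the remaining user with the best channel becomes the next head
        remaining = sorted(group, key=lambda u: u.channel_quality, reverse=True)
        while remaining:
            head = remaining.pop(0)
            members = [u for u in remaining
                       if math.dist((head.x, head.y), (u.x, u.y)) <= radius_m]
            remaining = [u for u in remaining if u not in members]
            clusters.append((head, members))
    return clusters

def allocate_to_heads(clusters, total_bandwidth_mhz):
    """The BS grants bandwidth only to cluster heads (equal split, an assumption);
    each head then relays the shared content to its members."""
    if not clusters:
        return {}
    share = total_bandwidth_mhz / len(clusters)
    return {head.uid: share for head, _ in clusters}

users = [
    User("u1", "match", 0, 0, 0.9),
    User("u2", "match", 10, 5, 0.7),
    User("u3", "match", 15, -5, 0.6),
    User("u4", "news", 5, 5, 0.8),
    User("u5", "match", 500, 500, 0.5),
]
clusters = form_clusters(users, radius_m=50)
for head, members in clusters:
    print(head.uid, "->", [m.uid for m in members])
print(allocate_to_heads(clusters, 100.0))
```

In this example five users produce three clusters, so the BS serves three cluster heads instead of five individual users, and the head of the largest cluster relays the shared stream to its two members.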

Self-organizing networks (SON) are another dimension of next generation networks that can be optimized with big data analytics. A SON architecture based on big data was proposed by Imran et al. [54]. The proposed architecture transforms a typical SON from a reactive into a proactive one, which is useful for next generation networks. Such a proactive, big data based SON architecture can help meet the close-to-zero latency requirement of next generation networks and maximize network throughput. The goal of this architecture is to build end-to-end visibility of the network by extracting meaningful information from big data.

3.2. Optimization of Next-Generation Cellular Networks

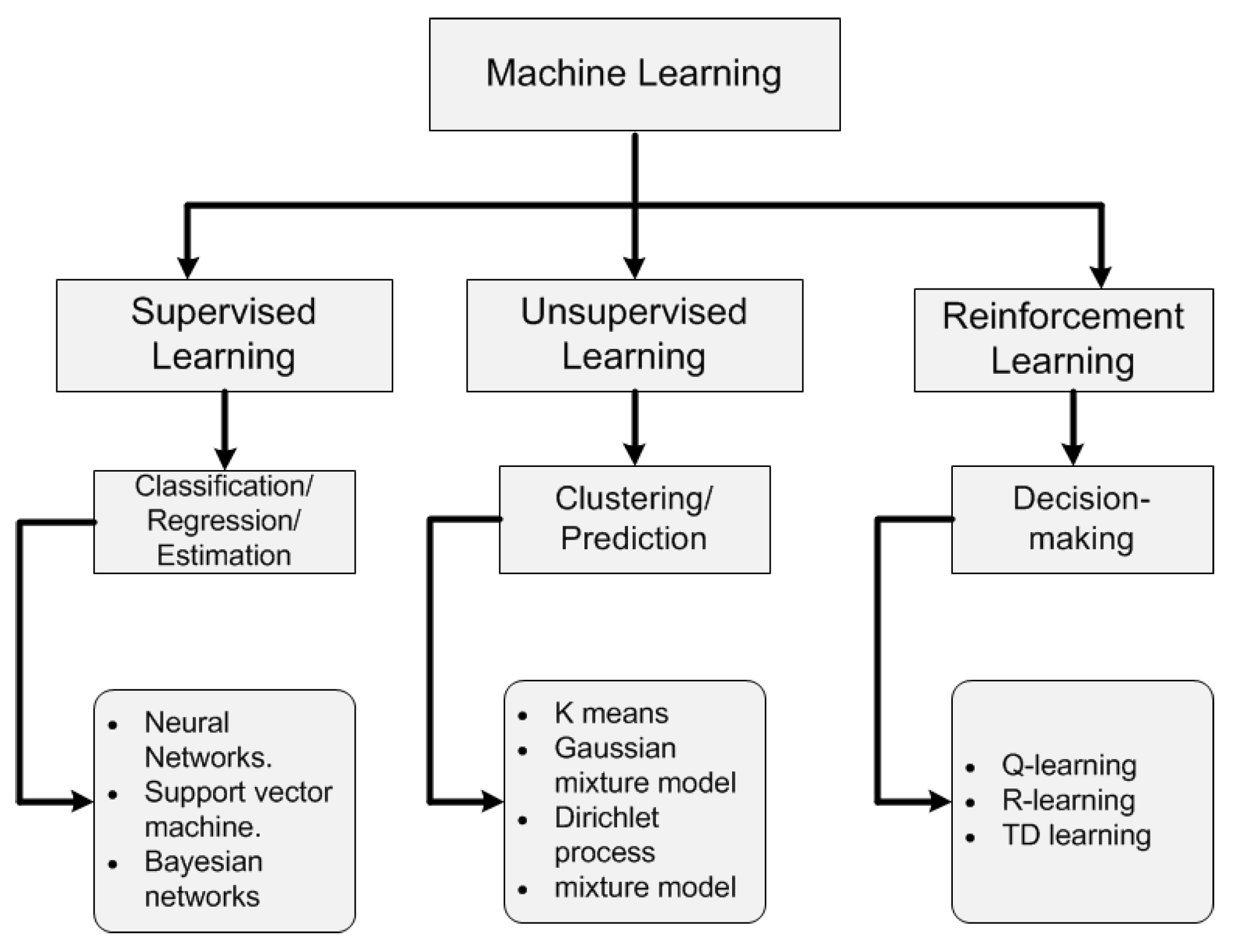

Machine learning is a branch of artificial intelligence that enables computers to make (and improve) decisions on the basis of input data. These input data can be diverse and can come from different sources, and machine learning can use them to predict future outcomes. For more details, the reader is referred to [55]. The types of machine learning and their associated algorithms are shown in Figure 6.

Mobile networks are already complex, and 5G networks are expected to be much more complex than their predecessors. Besides being more complex, 5G networks will be smarter and more intelligent in terms of network management, resource distribution, load balancing, cost efficiency, power efficiency, etc. These features can be realized with machine learning techniques, so machine learning can play a major role in the deployment of 5G networks. For example, in the cellular networks of past decades, telecommunication engineers had to perform drive tests covering hundreds of miles after every software update, or climb 50-meter-high cell towers to adjust the antenna tilt for the best coverage. As networks have become more intelligent, engineers can now use advanced software tools for remote drive tests instead of such tiring physical effort, and drones can be sent to inspect high-rise sites.

5G will provide seamless and limitless connectivity of anything to anything, from everywhere to everywhere [54]. According to Nokia's Technology Vision 2020 [56], the number of events will increase from 400 to 10,000 events per hour for a network serving 10 million users. Such a huge number of events cannot be handled manually, so the network must be intelligent. Previously, self-organizing networks (SON) have been used for improving network efficiency. However, a SON works reactively, and because 5G networks will be more complex and different from previous networks, SON alone is not enough to fulfill 5G network requirements. Nokia's Vision 2020 [56] therefore presents a new concept for 5G, called self-aware networks, which we discuss next.

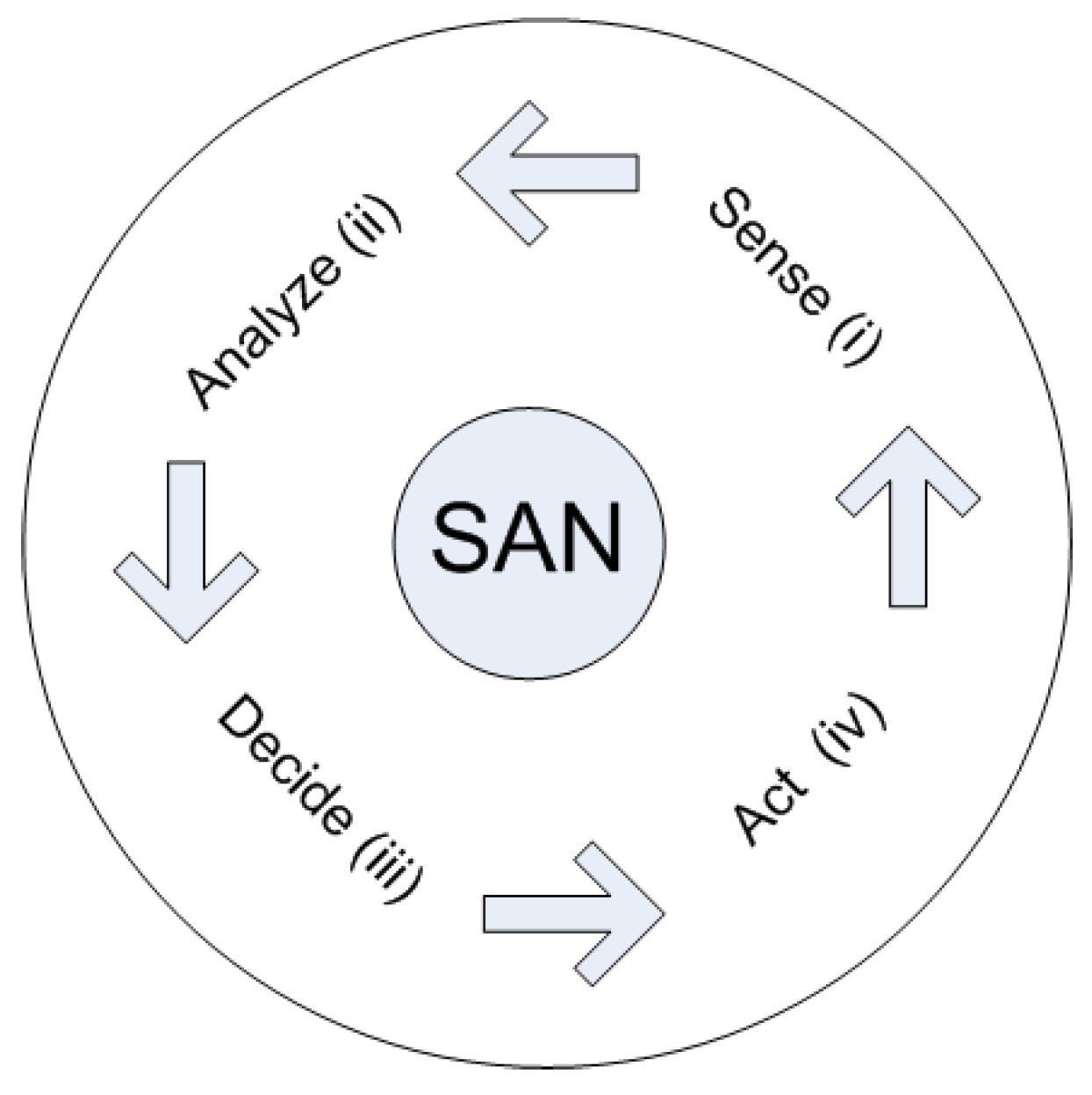

3.2.1. Self-Aware Networks

SON alone is not enough to fulfill 5G network requirements. Self-aware networking therefore combines the benefits of artificial intelligence (machine learning tools) and SON for the efficient deployment of 5G networks. Such networks can predict changes in the demands of users and of the network itself, and make intelligent decisions and predictions for the future. In this way, a problem can be solved before it occurs in the network, so subscribers get the best quality of service and quality of experience. Self-aware networks (SAN) will reduce capital expenditures (CAPEX) and operational expenditures (OPEX) while adapting dynamically to subscriber demands. The major steps in SAN are:

sense,

analyze,

decide and

act.

Sensing refers to acquiring data from the network and from user devices such as smartphones, sensor nodes, and touch-pad devices. These input data fuel the SAN, and all its other functions start from here. In the analyzing stage, the gathered data are analyzed to determine what is happening: which events are important and which are not, which users require resources, and when and where resources are required. Decisions and predictions are made after analyzing the input events. Decisions are made from knowledge of past events, while predictions anticipate the future; multi-dimensional optimization is performed in this stage. In the last stage of SAN, action is taken on the basis of the decisions and predictions. Actions can be of different types, such as configuration settings, coordination, or multi-vendor orchestration. The block diagram of SAN is shown in Figure 7.
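The sense, analyze, decide, and act stages can be sketched as a small control loop. In this hypothetical Python example, cells report loads between 0 and 1, the analysis step uses naive linear extrapolation as its prediction, and the actions are placeholder strings passed to a configuration hook; none of these names come from the Nokia vision [56].

```python
def sense(history, new_samples):
    """Sense: append the latest per-cell load measurements (0 to 1) to the history."""
    for cell, load in new_samples.items():
        history.setdefault(cell, []).append(load)

def analyze(history):
    """Analyze: report the current and a naively predicted next load per cell."""
    report = {}
    for cell, loads in history.items():
        current = loads[-1]
        # assumption: linear extrapolation from the last two samples
        predicted = current + (current - loads[-2]) if len(loads) >= 2 else current
        report[cell] = (current, predicted)
    return report

def decide(report, threshold):
    """Decide: react to cells overloaded now; pre-empt cells predicted to overload."""
    decisions = {}
    for cell, (current, predicted) in report.items():
        if current > threshold:
            decisions[cell] = "offload_users"  # decision based on observed events
        elif predicted > threshold:
            decisions[cell] = "pre_allocate"   # decision based on prediction
    return decisions

def act(decisions, apply):
    """Act: push each decision to the network through a configuration hook."""
    for cell, action in sorted(decisions.items()):
        apply(cell, action)

history = {}
sense(history, {"A": 0.5, "B": 0.9, "C": 0.3})
sense(history, {"A": 0.7, "B": 0.85, "C": 0.3})
decisions = decide(analyze(history), threshold=0.8)
act(decisions, lambda cell, action: print(f"configure {cell}: {action}"))
```

Cell B is handled reactively because it is already overloaded, while cell A is handled proactively because its trend predicts an overload, mirroring the distinction between decisions and predictions drawn in the text.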

3.2.2. Big Data Aware Next Generation Networks

As discussed earlier, the insights extracted from big data will be used to improve network service quality as well as to generate new mobile applications.

Figure 8 represents a big data enabled network architecture consisting of three major stages. The first stage consists of the data sources, which include user devices, the core network, and base stations. The data generated by these sources are in raw form. In intelligent big data enabled architectures such as [57], this stage also contains storage and computing units responsible for edge computing and caching, which provide optimization solutions. The second stage is the core big data platform, which collects all the data from the different sources and performs data processing, storage, filtering, and management. Lastly, the results generated by the big data platform are sent to the optimization unit for purposes such as resource optimization and RAN optimization. Such a big data-driven architecture is key to implementing next generation networks. Han et al. [58] proposed a big data enabled framework for next generation networks. This architecture is promising for improving the performance of next generation networks, as it has implications for signaling procedures, simplified design, and standardization procedures. Another framework showing the viability of a big data-driven architecture for mobile communication is presented in [51], where accurate prediction of user demands, provided by big data analytics, enables maximum spectrum utilization and improved capacity gain. We discuss some major advantages of big data aware next generation networks below.

Cache Management: In an ultra-dense and hyper-fast network, cache contents need to be organized efficiently. Contents are classified by popularity: the most popular contents, such as music or videos, are stored in the cache, while contents that are no longer popular are removed from it. In this way, bandwidth is utilized efficiently and cache space is preserved [59].
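A popularity-based cache of the kind described above can be sketched in Python by counting requests and admitting a new item only when it has become more popular than the least popular cached item. The class name and the tie-breaking rule are illustrative assumptions, not the scheme of [59].

```python
from collections import Counter

class PopularityCache:
    """Keeps the `capacity` most requested items seen so far (counts never age)."""

    def __init__(self, capacity):
        self.capacity = capacity
        self.counts = Counter()  # request count per content item
        self.cached = set()

    def request(self, item):
        """Record a request for `item` and return True on a cache hit."""
        self.counts[item] += 1
        if item in self.cached:
            return True
        if self.capacity <= 0:
            return False
        if len(self.cached) < self.capacity:
            self.cached.add(item)  # free slot: admit the item
        else:
            # least popular cached item; ties broken by name for determinism
            victim = min(self.cached, key=lambda c: (self.counts[c], c))
            if self.counts[item] > self.counts[victim]:
                self.cached.remove(victim)  # evict content that is no longer popular
                self.cached.add(item)
        return False

cache = PopularityCache(capacity=2)
requests = ["video1", "video1", "song", "news", "news", "news"]
hits = [cache.request(r) for r in requests]
print(hits)                  # [False, True, False, False, False, True]
print(sorted(cache.cached))  # ['news', 'video1']
```

Because the counts never decay, content that was popular long ago is evicted only slowly; a practical design would age or window the counts so that items that are no longer popular leave the cache sooner.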

Resource Optimization: Mobile subscribers accessing similar contents will share resources. Meanwhile, if a connection to the BS is not available, a subscriber or device could ask its neighbouring devices or subscribers for assistance. A similar idea is presented in [60], where a crowd-enabled data transmission technique is proposed.

Cloud Processing: Cloud processing is an emerging paradigm for big data analysis in big data aware networks, where distributed frameworks such as Hadoop are used. Based on mobile traffic patterns, channel resources can be reserved in advance and contents can be pushed to the BSs. Likewise, mobile traffic patterns could be used to allocate resources (bandwidth and cache) to a particular location for an ongoing event.
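Hadoop processes data with map and reduce phases. The plain-Python sketch below mimics that MapReduce pattern on a single machine to turn traffic logs into per-cell, per-hour reservations; it does not use Hadoop's API, and the record format, the averaging rule, and the 20 percent safety margin are hypothetical assumptions.

```python
from collections import defaultdict

def map_phase(records):
    """Map: emit ((cell, hour), megabytes) for every traffic log record."""
    for cell, hour, megabytes in records:
        yield (cell, hour), megabytes

def reduce_phase(pairs):
    """Reduce: sum the traffic volume for each (cell, hour) key."""
    totals = defaultdict(float)
    for key, megabytes in pairs:
        totals[key] += megabytes
    return dict(totals)

def reservations(totals, num_days, margin=1.2):
    """Reserve the mean daily volume per (cell, hour) plus a safety margin (assumption)."""
    return {key: margin * total / num_days for key, total in totals.items()}

logs = [  # hypothetical (cell, hour-of-day, MB) records collected over two days
    ("cell-1", 20, 100.0), ("cell-1", 20, 140.0),
    ("cell-1", 9, 10.0), ("cell-1", 9, 30.0),
    ("cell-2", 20, 50.0),
]
res = reservations(reduce_phase(map_phase(logs)), num_days=2)
print(res)
```

In a real deployment the map and reduce phases would run in parallel across many nodes, and the reservation rule would come from a forecasting model rather than a simple average.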

Software Defined Networking: In Software Defined Networking (SDN), a network administrator or engineer can shape network traffic from a centralized control plane, without touching individual switches or routers, and can deliver services wherever they are needed in the network. This is possible because SDN decouples the data plane from the control plane. Such decoupling makes network traffic management flexible, since packets can be forwarded on the basis of new criteria such as payload length, application type, and quality of service. These advantages are summarized in Table 3.
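The separation of control and data planes can be sketched with a toy flow table: a central controller installs prioritized match-action rules, and switches forward packets using only those rules. This Python sketch is loosely inspired by match-action tables such as those in OpenFlow but is not the OpenFlow API; the field names, queue names, equality-only matching, and drop-on-miss behaviour are assumptions.

```python
from dataclasses import dataclass

@dataclass
class FlowRule:
    match: dict   # header fields that must all equal the given values
    action: str   # e.g. an output queue name (hypothetical)
    priority: int = 0

class Switch:
    """Data plane: forwards packets using only the rules it has been given."""

    def __init__(self):
        self.rules = []

    def forward(self, packet):
        for rule in sorted(self.rules, key=lambda r: r.priority, reverse=True):
            if all(packet.get(k) == v for k, v in rule.match.items()):
                return rule.action
        return "drop"  # no matching rule (table-miss behaviour is an assumption)

class Controller:
    """Control plane: a central point that installs rules on every switch."""

    def __init__(self, switches):
        self.switches = switches

    def install(self, rule):
        for sw in self.switches:
            sw.rules.append(rule)

switches = [Switch(), Switch()]
ctrl = Controller(switches)
ctrl.install(FlowRule({"app": "video"}, "queue:high", priority=10))
ctrl.install(FlowRule({}, "queue:best_effort", priority=0))  # default rule matches anything
print(switches[1].forward({"app": "video", "payload_len": 1400}))  # queue:high
print(switches[0].forward({"app": "email", "payload_len": 300}))   # queue:best_effort
```

Changing the treatment of video traffic across the whole network requires a single `install` call at the controller, which is the flexibility that the decoupling of the two planes provides.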

3.2.3. Machine Learning for Cognitive Networks

Cognitive networks (CN) are intelligent wireless networks that analyze the communication channels and determine which channels are free or occupied. Transmission can then be carried out over a vacant channel while busy channels are avoided. Cognitive networks thereby optimize network performance in terms of interference avoidance and spectrum management, and they can be implemented with the help of machine learning techniques. Like a machine learning algorithm, a CN analyzes the communication channel, makes intelligent decisions about it, and carries out transmission on the basis of those decisions. Hence, all cognitive networks have three properties:

sensing,

learning and decision, and

adaptation [66].
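The sensing, learning and decision, and adaptation cycle can be sketched as a multi-armed bandit, a technique commonly applied to opportunistic spectrum access. The Python below uses the UCB1 rule to choose a channel each slot. The channel idle probabilities are made-up values, and we do not claim this is the exact algorithm used in [66].

```python
import math
import random

def ucb1_channel_access(free_prob, rounds, seed=0):
    """Opportunistic spectrum access with the UCB1 bandit rule.

    free_prob[k] is the probability (unknown to the agent) that channel k is
    idle in a slot; transmitting on an idle channel yields reward 1.
    """
    rng = random.Random(seed)
    n = len(free_prob)
    plays = [0] * n
    successes = [0] * n
    for t in range(1, rounds + 1):
        if t <= n:
            k = t - 1  # try every channel once
        else:
            # Decision: pick the channel with the highest upper confidence bound.
            k = max(range(n), key=lambda c: successes[c] / plays[c]
                    + math.sqrt(2 * math.log(t) / plays[c]))
        # Sensing: observe whether the chosen channel was idle in this slot.
        idle = rng.random() < free_prob[k]
        # Learning/adaptation: update the statistics that drive future decisions.
        plays[k] += 1
        successes[k] += int(idle)
    return plays, successes

plays, successes = ucb1_channel_access([0.1, 0.3, 0.9, 0.5], rounds=3000)
```

Over time the agent concentrates its transmissions on the channel that is most often idle, while still occasionally probing the others in case conditions change.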

3.3. Some Advanced Learning Methods

In next generation networks, the data will be massive, complex and even incomplete. Data from mobile networks, for instance call detail record (CDR) datasets, are also massive and incomplete and may contain misleading patterns, which makes their analysis challenging [61]. Advanced machine learning techniques such as active learning, online learning, deep learning, distributed and parallel learning, transfer learning and representation learning can be explored to make sense of such complex data. We briefly discuss these advanced learning techniques below.

3.3.1. Active Learning

In many real-world scenarios, data are abundant but seldom labeled. Acquiring labels is generally costly, and learning from partially labeled data is time-consuming and complex. In such cases, active learning techniques are a better fit. In active learning, the most informative instances are selected for labeling and learning [67], so high accuracy can be achieved with only a few labeled instances [68]. Owing to the high accuracy of active learning (AL), Rashidi et al. [69] proposed a framework for analyzing Android applications, adopting a hybrid SVM-AL model to detect malicious applications on Android devices. Since the number of Android devices is increasing rapidly and is likely to grow further with the deployment of next-generation networks, active learning will be a valuable tool for such applications.
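The core of pool-based active learning is its query strategy. The sketch below applies uncertainty sampling to a toy one-dimensional problem: the learner repeatedly asks a hypothetical `oracle` to label the unlabeled point closest to its current decision boundary. The threshold classifier, the data and the oracle are illustrative assumptions, not the SVM-AL model of [69].

```python
import random

TRUE_THRESHOLD = 0.37  # hypothetical ground truth, hidden from the learner

def oracle(x):
    """Stand-in for a costly labeler (e.g. a human analyst)."""
    return 1 if x >= TRUE_THRESHOLD else 0

def fit_threshold(labeled):
    """1-D threshold classifier: midpoint between the largest negative and
    the smallest positive labeled example (defaults cover the empty case)."""
    neg = [x for x, y in labeled if y == 0]
    pos = [x for x, y in labeled if y == 1]
    lo = max(neg) if neg else 0.0
    hi = min(pos) if pos else 1.0
    return (lo + hi) / 2

def active_learn(pool, budget):
    labeled, unlabeled = [], list(pool)
    for _ in range(budget):
        t = fit_threshold(labeled)
        # Uncertainty sampling: query the point closest to the current boundary.
        x = min(unlabeled, key=lambda u: abs(u - t))
        unlabeled.remove(x)
        labeled.append((x, oracle(x)))
    return fit_threshold(labeled), len(labeled)

rng = random.Random(0)
pool = [rng.random() for _ in range(1000)]
estimate, n_labels = active_learn(pool, budget=12)
```

With 12 queries from a pool of 1000 points, the estimated threshold lands close to the hidden value because each query roughly halves the uncertain interval. Labeling randomly chosen points would typically need many more labels for the same precision.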

3.3.2. Representation Learning

Datasets with high-dimensional features are becoming increasingly common. Extracting and organizing useful information from such datasets is challenging if only traditional learning methods are used, and for such datasets representation learning can outperform other techniques [70,71]. Representation learning obtains a meaningful and useful representation of the data, which reduces the complexity of extracting and organizing information from it. With this approach, a large number of inputs can be mapped to a small number of learned representation dimensions, which reduces computational complexity and improves statistical efficiency [70].
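Principal component analysis (PCA) is one of the simplest forms of representation learning: it learns a low-dimensional linear code for high-dimensional inputs. The pure-Python sketch below finds the leading principal component by power iteration and maps six-dimensional samples to a single learned dimension. The synthetic data and the choice of one component are assumptions for illustration; the methods surveyed in [70] include far more expressive, non-linear models.

```python
import random

def first_principal_component(X, iters=100):
    """Learn a 1-D linear representation of the rows of X (PCA).

    Returns the leading eigenvector of the sample covariance (found by power
    iteration), the 1-D codes of the centered samples, and the fraction of
    the total variance explained by the learned dimension.
    """
    n, d = len(X), len(X[0])
    mu = [sum(row[j] for row in X) / n for j in range(d)]
    Xc = [[row[j] - mu[j] for j in range(d)] for row in X]
    C = [[sum(r[i] * r[j] for r in Xc) / (n - 1) for j in range(d)] for i in range(d)]
    v = [1.0 / d ** 0.5] * d
    for _ in range(iters):  # power iteration: v <- C v / ||C v||
        w = [sum(C[i][j] * v[j] for j in range(d)) for i in range(d)]
        norm = sum(x * x for x in w) ** 0.5
        v = [x / norm for x in w]
    eigval = sum(v[i] * C[i][j] * v[j] for i in range(d) for j in range(d))
    explained = eigval / sum(C[i][i] for i in range(d))
    codes = [sum(r[j] * v[j] for j in range(d)) for r in Xc]
    return v, codes, explained

# Synthetic 6-D measurements driven by one hidden factor plus small noise.
rng = random.Random(0)
loading = [1.0, 2.0, -1.0, 0.5, 3.0, -2.0]
X = []
for _ in range(300):
    t = rng.gauss(0, 1)
    X.append([t * a + rng.gauss(0, 0.1) for a in loading])
v, codes, explained = first_principal_component(X)
```

Each six-dimensional sample is reduced to one number in `codes`, and `explained` reports how much of the original variance that single dimension retains.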

3.3.3. Transfer Learning

Traditional machine learning techniques assume that the training and test data share the same distribution and feature space. Because of the veracity and heterogeneity of big data, this assumption often does not hold. Transfer learning has been proposed to overcome this problem: it extracts knowledge from one or more source domains and applies it to a target domain [72,73]. New problems can thus be solved quickly by reusing the extracted knowledge, which improves problem-solving efficiency. Recently, Hou et al. [74] proposed a proactive caching mechanism, learning-based cooperative caching, built on transfer learning. The authors of [74] reported that their transfer-learning-aided caching mechanism improves quality of experience and reduces transmission cost in next-generation networks.
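One simple way to transfer knowledge for caching is to use content popularity learned in a data-rich source cell as a prior for a new target cell that has seen only a few requests. The sketch below blends the two with a hypothetical `strength` parameter (a number of pseudo-requests borrowed from the source). This is a generic prior-based transfer, not the learning-based cooperative caching scheme of [74].

```python
from collections import Counter

def transfer_popularity(source_pop, target_requests, strength):
    """Blend sparse target-cell request counts with a source-cell popularity
    prior; `strength` acts like a number of pseudo-requests from the source."""
    counts = Counter(target_requests)
    n = len(target_requests)
    items = set(source_pop) | set(counts)
    return {c: (counts[c] + strength * source_pop.get(c, 0.0)) / (n + strength)
            for c in items}

def choose_cache(popularity, k):
    """Cache the k most popular items (ties broken alphabetically)."""
    return sorted(popularity, key=lambda c: (-popularity[c], c))[:k]

source_pop = {"a": 0.5, "b": 0.25, "c": 0.15, "d": 0.10}  # learned in a busy source cell
target_requests = ["d", "b", "d"]                          # few requests seen in the new cell

transferred = transfer_popularity(source_pop, target_requests, strength=10)
target_only = transfer_popularity(source_pop, target_requests, strength=0)
print(choose_cache(transferred, 2), choose_cache(target_only, 2))
```

With only three target requests, the target-only estimate (strength 0) would cache items d and b, whereas the transferred estimate still favours item a, which dominates in the source cell. As more target requests arrive, the observed counts gradually outweigh the prior.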

3.3.4. Deep Learning

Deep learning is a recently emerged area of machine learning that has become widely popular for knowledge extraction and representation learning tasks, particularly when huge amounts of raw data are available [75,76,77]. Deep learning approaches can be tailored to the requirements of a task, and they have recently been successful in fields such as computer vision, medical and health care systems, bioinformatics, security and privacy systems, search engine optimization, and information and communication systems. The core purpose of these algorithms is to process large datasets, extract useful information and then, based on these insights, make decisions and predict future outcomes. Xu et al. [78] presented a deep reinforcement learning (DRL) assisted architecture for cloud radio access networks (C-RAN). Because of the exponential growth in data traffic, C-RAN has become a key enabler for next-generation networks. The authors of [78] reported that the DRL-based resource allocation architecture can improve the power efficiency of C-RAN and the satisfaction of mobile subscribers. Another deep learning based framework, for detecting cyber threats in networks, has been proposed in [79].

3.3.5. Distributed and Parallel Learning

With advances in distributed computing and systems, distributed and parallel learning approaches are becoming a significant research field for big data analytics. In these approaches, the learning process is distributed among different workstations, which is a natural way to improve the efficiency of learning algorithms [80]. In traditional learning, a huge volume of data must be collected at a central processing unit. In distributed learning, by contrast, data processing is performed in a distributed manner, so failure of the entire system due to a single point of failure can be avoided and the load of processing huge volumes of data can be shared [81]. Another major advancement is in the field of parallel learning [82]. Readers may refer to [83] for a detailed description of distributed and parallel learning.
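Data-parallel training is one common form of distributed learning. In the sketch below, each worker (simulated sequentially here) computes the gradient of a linear-regression loss on its own data shard, and an aggregator averages the gradients before each update. The data, learning rate and number of rounds are illustrative assumptions. Note that a single aggregator is itself a point of failure; fully decentralized schemes, such as gossip averaging between workers, remove it.

```python
import random

def local_gradient(shard, w, b):
    """Gradient of the mean squared error of the model y = w*x + b on one shard."""
    gw = gb = 0.0
    for x, y in shard:
        err = w * x + b - y
        gw += 2 * err * x
        gb += 2 * err
    return gw / len(shard), gb / len(shard)

def distributed_gd(shards, lr=0.5, rounds=500):
    w = b = 0.0
    for _ in range(rounds):
        # Each worker computes a gradient on its local data (in parallel in a real system).
        grads = [local_gradient(shard, w, b) for shard in shards]
        # The aggregator averages the workers' gradients; raw data never leave the workers.
        w -= lr * sum(g[0] for g in grads) / len(grads)
        b -= lr * sum(g[1] for g in grads) / len(grads)
    return w, b

rng = random.Random(1)
data = [(x, 2.0 * x + 1.0) for x in (rng.random() for _ in range(400))]
shards = [data[i::4] for i in range(4)]  # four equally sized worker shards
w, b = distributed_gd(shards)
```

Because the four shards have equal size, the averaged gradient equals the full-batch gradient, so the result matches centralized training while the raw data stay on the workers.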

3.3.6. Online Learning

Processing non-stationary time series data is challenging because the data distribution changes over time. Online learning techniques are applied to analyze such dynamic data: the data arrive sequentially, and at each step the model predicts upcoming data and is then updated with the new observation. This makes online learning suitable for real-time processing of streaming data [84,85].
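A minimal online learner predicts each new sample before seeing it and then takes one gradient step on the squared prediction error. For a constant predictor this reduces to an exponentially weighted moving average, as in the sketch below. The step size and the level-shifted stream are illustrative assumptions.

```python
class OnlineMeanPredictor:
    """Online gradient descent on the loss 0.5 * (estimate - x)**2.

    Each update moves the estimate a fraction `lr` of the way towards the
    newest sample, i.e. an exponentially weighted moving average.
    """
    def __init__(self, lr=0.2, initial=0.0):
        self.lr = lr
        self.estimate = initial

    def predict(self):
        return self.estimate

    def update(self, x):
        grad = self.estimate - x  # derivative of the loss w.r.t. the estimate
        self.estimate -= self.lr * grad

def run_stream(stream, model):
    """Predict each sample before it is revealed, then learn from it."""
    errors = []
    for x in stream:
        errors.append(abs(model.predict() - x))
        model.update(x)
    return errors

# Hypothetical traffic load whose level jumps from 10 to 30 halfway through.
stream = [10.0] * 100 + [30.0] * 100
errors = run_stream(stream, OnlineMeanPredictor(lr=0.2))
```

Right after the level shift the prediction error is about 20, and it then decays geometrically (by a factor of 0.8 per sample here) as the model adapts to the new distribution.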

Table 4 briefly summarizes different advanced learning methods and their associated tasks. Recently, AlQerm et al. [86] proposed an online learning based framework for energy-efficient resource allocation in next-generation heterogeneous C-RANs. In this architecture, the spectrum is divided into two resource blocks (RBs), and each RB is assigned to a specific group of users according to their position and QoS requirements. The framework operates in both centralized and decentralized online learning modes and hence addresses the single-point-of-failure issue. Another architecture, based on cell outage management, has been presented in [87] to meet the requirements of next-generation heterogeneous networks; it uses an online learning approach for cell outage compensation.

We close the discussion of advanced learning methods with Table 4, which summarizes these methods and the solutions they provide for next generation networks.

3.3.7. Role of Neural Networks in Next Generation Networks

A neural network is a branch of artificial intelligence that works in a way similar to the human brain. The human brain contains billions of neurons that make decisions after analyzing a particular stimulus. In the same way, a neural network contains many nodes, organized into an input layer, one or more hidden layers and an output layer. The output of a neural network is determined by the organization and weights of these connected nodes; in this sense, neural networks can be regarded as non-digital computers. Neural networks have been studied for several decades, and their success has been widely reported in computer vision, speech recognition, control science and robotic systems, medical and health care systems, and data analytics systems. Recently, Ahad et al. [88] presented the importance of neural networks in wireless systems. The applications of neural networks in wireless networks highlighted in [88] include:

modeling of non-linearity,

localization issues,

improving quality of service and quality of experience,

efficient routing,

load sharing,

efficient security and privacy policy,

spectrum sensing,

signal detection and classification,

pattern based communication,

improved wireless sensor network structure,

network optimization by event prediction, and

fault detection.

To achieve the required features of 5G, such as near-zero latency and maximum throughput, 5G networks must be intelligent and proactive. Based on the literature [88], neural networks can be expected to play a major role in realizing 5G networks. Ahad et al. [88] discussed different types of artificial neural networks, such as feedback neural networks, deep neural networks, recurrent neural networks, fuzzy neural networks, wavelet neural networks and random neural networks. The authors of [88] also showed how these neural networks can be applied in wireless networks and how they can improve the efficiency of wireless networks.
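The layered organization of nodes described at the start of this subsection can be made concrete with a forward pass through a tiny feed-forward network: each node forms a weighted sum of its inputs plus a bias and applies an activation. The weights below are arbitrary illustrative values rather than trained ones, and ReLU is an assumed choice of activation.

```python
def relu(z):
    return max(0.0, z)

def dense(inputs, weights, biases, activation=None):
    """One fully connected layer: each node sums its weighted inputs plus a bias."""
    outputs = []
    for w_row, b in zip(weights, biases):
        z = sum(w * x for w, x in zip(w_row, inputs)) + b
        outputs.append(activation(z) if activation else z)
    return outputs

def forward(x, params):
    """Input layer -> hidden layer (ReLU) -> linear output layer."""
    hidden = dense(x, params["W1"], params["b1"], relu)
    return dense(hidden, params["W2"], params["b2"])

params = {
    "W1": [[0.5, -1.0], [1.0, 1.0], [-0.5, 0.25]],  # 3 hidden nodes, 2 inputs
    "b1": [0.0, -1.0, 0.0],
    "W2": [[1.0, 0.5, -2.0]],                        # 1 output node
    "b2": [0.1],
}
y = forward([1.0, 2.0], params)  # hidden = [0.0, 2.0, 0.0], so y is [1.1] up to rounding
```

Changing any weight changes the output, which is what training adjusts; stacking more `dense` layers gives the deep networks mentioned above.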

With the introduction of 3G networks, i.e., wideband code division multiple access (WCDMA) and the universal mobile telecommunications system (UMTS), modulation schemes had to change. These modulation schemes are affected by non-linearity, which complicates power amplifier design, and neural networks have been adopted to overcome it. Zheng [89] suggested that impairments such as inter-symbol interference and multipath fading in code division multiple access (CDMA) systems can be overcome by using multilayer feed-forward neural networks (ML-FFNN), and described how an ML-FFNN can efficiently reconstruct the signal by removing such non-linearity. Similarly, 4G LTE networks adopted MIMO-OFDM, in which channel estimation and channel equalization are needed to minimize signal fading. The least squares (LS) method was previously used for channel estimation, but its drawback is a relatively high mean square error. Omri et al. [90] suggested that an ML-FFNN can be used for channel estimation in MIMO-OFDM and reported that it improved channel estimation compared with the LS method. These results suggest that if MIMO-OFDM or another novel multiple access technique is used in 5G, neural networks could be adapted to improve its performance.
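For reference, the LS baseline against which [90] compares its ML-FFNN is simple to state: with a known pilot X[k] on subcarrier k and received sample Y[k] = H[k]X[k] + N[k], the estimate is Ĥ[k] = Y[k]/X[k]. The Python sketch below simulates this with unit-magnitude QPSK pilots. The channel and noise model are assumptions, and the neural estimator itself is not reproduced.

```python
import cmath
import random

def ls_channel_estimate(received, pilots):
    """Least-squares estimate per pilot subcarrier: H_hat[k] = Y[k] / X[k]."""
    return [y / x for y, x in zip(received, pilots)]

def simulate_ls_mse(num_subcarriers=2000, noise_var=0.01, seed=0):
    rng = random.Random(seed)
    s = 0.5 ** 0.5  # per-component std for a unit-variance complex Gaussian
    # Assumed channel: independent complex Gaussian gain on each subcarrier.
    h = [complex(rng.gauss(0, s), rng.gauss(0, s)) for _ in range(num_subcarriers)]
    # Unit-magnitude QPSK pilots, known to the receiver.
    pilots = [cmath.exp(1j * (cmath.pi / 4 + cmath.pi / 2 * rng.randrange(4)))
              for _ in range(num_subcarriers)]
    sn = (noise_var / 2) ** 0.5
    noise = [complex(rng.gauss(0, sn), rng.gauss(0, sn)) for _ in range(num_subcarriers)]
    received = [hk * xk + nk for hk, xk, nk in zip(h, pilots, noise)]
    h_hat = ls_channel_estimate(received, pilots)
    return sum(abs(e - a) ** 2 for e, a in zip(h_hat, h)) / num_subcarriers

mse = simulate_ls_mse()
```

Because the pilots have unit magnitude, the LS error is N[k]/X[k], so the measured MSE stays close to the noise variance (0.01 here). LS makes no use of channel or noise statistics, which is one shortcoming that learned estimators aim to reduce.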

4. Current State-of-the-Art and Open Issues

In this section, we briefly discuss some open issues. These issues also point to directions for further studies at the crossroads of big data and 5G, as addressing them could lead to major breakthroughs.

Proactive caching and computing: The computing and caching costs of next generation networks can be minimized through modern big data analytics approaches, so that resources are distributed and utilized efficiently and the caching and computing overhead is balanced. For instance, intermediate and final results should be stored only if they are meaningful, as storing all information is costly.

Security and Privacy: Big data analytics uncovers hidden information within huge volumes of data. Consequently, massive data analysis can raise security and privacy issues. During the storage, management and processing stages, data should be encrypted and integrity-protected to ensure that they cannot be read, manipulated or altered by unauthorized parties. Moreover, access to the data should be granted only to authorized entities via secure channels. Security and privacy are thus key concerns for such massive data analysis and should be addressed intelligently.
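Encryption keeps data confidential but does not by itself reveal tampering. As a sketch of the integrity side, the standard-library Python below attaches an HMAC-SHA256 tag to each record and rejects records whose tag no longer matches. The record format and key handling are assumptions; confidentiality would additionally require an authenticated cipher such as AES-GCM, which is not shown.

```python
import hashlib
import hmac
import secrets

TAG_LEN = 32  # bytes in an HMAC-SHA256 tag

def protect(key: bytes, record: bytes) -> bytes:
    """Return record || HMAC-SHA256 tag so that tampering can be detected."""
    tag = hmac.new(key, record, hashlib.sha256).digest()
    return record + tag

def verify(key: bytes, blob: bytes):
    """Return the record if its tag is valid, otherwise None."""
    record, tag = blob[:-TAG_LEN], blob[-TAG_LEN:]
    expected = hmac.new(key, record, hashlib.sha256).digest()
    # compare_digest avoids leaking information through timing differences.
    return record if hmac.compare_digest(tag, expected) else None

key = secrets.token_bytes(32)  # in practice, provisioned by a key-management service
blob = protect(key, b"cell=42;ue_count=317")
tampered = blob[:5] + b"9" + blob[6:]  # an attacker changes "cell=42" to "cell=92"
```

`verify(key, blob)` returns the original record, while `verify(key, tampered)` returns `None` because the modified record no longer matches its tag.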

Big Heterogeneous data: Big data sources differ in type, data rate, mobility and packet loss, which makes the analysis of heterogeneous data in wireless networks challenging. Heterogeneous data also bring spatio-temporal dynamics, so unconventional approaches are required for big spatio-temporal data analysis in mobile networks.

5. Conclusions

In this survey, we present an introduction to the 5G communication networks of the future. We also present the various requirements, challenges and possible design issues that need to be addressed for the realization of 5G networks. The key technologies discussed include ultra-dense networking, millimeter wave spectrum and massive MIMO. We identify the challenges that need to be overcome and possible architecture designs towards 5G implementation. We also outline energy concerns in 5G networks and find that energy remains among the foremost challenges for 5G networks. Device designs and service models for 5G are still under consideration, and their backward compatibility will be of great importance for both users and service providers. We also present a big data perspective on 5G and the opportunities that machine learning techniques offer for learning, inference and decision making on 5G data. Several other domains of 5G networks are of key importance but have not been covered in detail here. In particular, the security and privacy of the candidate architectures, hardware and data transfer protocols in 5G networks pose major challenges and require further research. We hope that this survey gives readers useful insight into future generation networks and helps beginners develop an understanding of the opportunities and challenges in 5G networks.

Author Contributions

Conceptualization, K.S. and H.A.; Methodology, K.S. and H.A.; Resources, H.A. and Z.Z.; Writing–Original Draft Preparation, K.S. and H.A.; Writing–Review & Editing, K.S., H.A. and Z.Z.; Supervision, H.A. and Z.Z.; Funding Acquisition, Z.Z.

Funding

This work was supported by the key project of the National Natural Science Foundation of China (No. 61431001), Beijing Natural Science Foundation (L172026), Key Laboratory of Cognitive Radio and Information Processing, Ministry of Education (Guilin University of Electronic Technology), and the Foundation of Beijing Engineering and Technology Center for Convergence Networks and Ubiquitous Services.

Conflicts of Interest

The authors declare no conflict of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| 3GPP | 3rd Generation Partnership Project |

| AL | Active Learning |

| BS | Base Station |

| CP | Cyclic Prefix |

| CDMA | Code Division Multiple Access |

| CoMP | Coordinated Multipoint |

| C-RAN | Cloud-Radio Access Network |

| CRN | Cognitive Radio Network |

| DRL | Deep Reinforcement Learning |

| EHF | Extremely High Frequency |

| FSPL | Free Space Path Loss |

| GFDM | Generalized Frequency Division Multiplexing |

| FFT | Fast Fourier Transform |

| HSPA | High Speed Packet Access |

| IoT | Internet of Things |

| Mbps | Megabits per second |

| MBS | Macro-Cell Base Station |

| MIMO | Multiple Input Multiple Output |

| mmWave | millimeter wave |

| NFV | Network Function Virtualization |

| OFDM | Orthogonal Frequency Division Multiplexing |

| RAT | Radio Access Technology |

| RRH | Remote Radio Head |

| SBS | Small-Cell Base Station |

| SDN | Software Defined Networking |

| SINR | Signal-to-Interference-plus-Noise Ratio |

| TDMA | Time Division Multiple Access |

| UE | User Equipment |

| UHF | Ultra High Frequency |

References

- Cisco. Cisco Visual Networking Index: Global Mobile Data Traffic Forecast Update 2010–2015; White Paper. Available online: https://www.cisco.com (accessed on 22 November 2015).

- Andrews, J.; Buzzi, S.; Choi, W.; Hanly, S.; Lozano, A.; Soong, A.; Zhang, J. What Will 5G Be? IEEE J. Sel. Areas Commun. 2014, 32, 1065–1082.

- Panwar, N.; Sharma, S.; Singh, A.K. A survey on 5G: The next generation of mobile communication. Phys. Commun. 2016, 18, 64–84.

- Bi, S.; Zhang, R.; Ding, Z.; Cui, S. Wireless communications in the era of big data. IEEE Commun. Mag. 2015, 53, 190–199.

- Zheng, K.; Yang, Z.; Zhang, K.; Chatzimisios, P.; Yang, K.; Xiang, W. Big data-driven optimization for mobile networks toward 5G. IEEE Netw. 2016, 30, 44–51.

- Buda, T.S.; Assem, H.; Xu, L.; Raz, D.; Margolin, U.; Rosensweig, E.; Lopez, D.R.; Corici, M.I.; Smirnov, M.; Mullins, R.; et al. Can machine learning aid in delivering new use cases and scenarios in 5G? In Proceedings of the 2016 IEEE/IFIP Network Operations and Management Symposium (NOMS), Istanbul, Turkey, 25–29 April 2016; pp. 1279–1284.

- Jiang, C.; Zhang, H.; Ren, Y.; Han, Z.; Chen, K.C.; Hanzo, L. Machine learning paradigms for next-generation wireless networks. IEEE Wirel. Commun. 2017, 24, 98–105.

- Agyapong, P.K.; Iwamura, M.; Staehle, D.; Kiess, W.; Benjebbour, A. Design considerations for a 5G network architecture. IEEE Commun. Mag. 2014, 52, 65–75.

- Arani, A.H.; Omidi, M.J.; Mehbodniya, A.; Adachi, F. A handoff algorithm based on estimated load for dense green 5G networks. In Proceedings of the IEEE Global Communications Conference (GLOBECOM), San Diego, CA, USA, 6–10 December 2015; pp. 1–7.

- Qiang, L.; Li, J.; Touati, C. A user centered multi-objective handoff scheme for hybrid 5G environments. IEEE Trans. Emerg. Top. Comput. 2017, 5, 380–390.

- Bilen, T.; Canberk, B.; Chowdhury, K.R. Handover Management in Software-Defined Ultra-Dense 5G Networks. IEEE Netw. 2017, 31, 49–55.

- Ghosh, S.K.; Ghosh, S.C. A predictive handoff mechanism for 5G ultra dense networks. In Proceedings of the 2017 IEEE 16th International Symposium on Network Computing and Applications (NCA), Cambridge, MA, USA, 30 October–1 November 2017; pp. 1–5.

- Sharma, V.; You, I.; Leu, F.Y.; Atiquzzaman, M. Secure and efficient protocol for fast handover in 5G mobile Xhaul networks. J. Netw. Comput. Appl. 2018, 102, 38–57.

- Chandrasekhar, V.; Andrews, J.; Gatherer, A. Femtocell networks: A survey. IEEE Commun. Mag. 2008, 46, 59–67.

- Dohler, M.; Heath, R.; Lozano, A.; Papadias, C.; Valenzuela, R. Is the PHY layer dead? IEEE Commun. Mag. 2011, 49, 159–165.

- Dhillon, H.; Ganti, R.; Baccelli, F.; Andrews, J. Modeling and Analysis of K-Tier Downlink Heterogeneous Cellular Networks. IEEE J. Sel. Areas Commun. 2012, 30, 550–560.

- Larew, S.G.; Thomas, T.A.; Cudak, M.; Ghosh, A. Air Interface Design and Ray Tracing Study for 5G Millimeter Wave Communications. In Proceedings of the IEEE GLOBECOM Workshop, Atlanta, GA, USA, 9–13 December 2013; Volume 1, pp. 117–122.

- Qualcomm. Extending LTE Advanced to Unlicensed Spectrum. White Paper. Available online: https://www.qualcomm.com/media/documents/files/white-paper-extending-lte-advanced-to-unlicensed-spectrum.pdf (accessed on 22 January 2016).

- Corroy, S.; Falconetti, L.; Mathar, R. Cell association in small heterogeneous networks: Downlink sum rate and min rate maximization. In Proceedings of the 2012 IEEE Wireless Communications and Networking Conference (WCNC), Shanghai, China, 1–4 April 2012; pp. 888–892.

- Ye, Q.; Rong, B.; Chen, Y.; Al-Shalash, M.; Caramanis, C.; Andrews, J. User Association for Load Balancing in Heterogeneous Cellular Networks. IEEE Trans. Wirel. Commun. 2013, 12, 2706–2716.

- Singh, S.; Andrews, J. Joint Resource Partitioning and Offloading in Heterogeneous Cellular Networks. IEEE Trans. Wirel. Commun. 2014, 13, 888–901.

- Ye, Q.; Al-Shalashy, M.; Caramanis, C.; Andrews, J. On/Off macrocells and load balancing in heterogeneous cellular networks. In Proceedings of the 2013 IEEE Global Communications Conference (GLOBECOM), Atlanta, GA, USA, 9–13 December 2013; pp. 3814–3819.

- Lee, J.; Kim, Y.; Lee, H.; Ng, B.L.; Mazzarese, D.; Liu, J.; Xiao, W.; Zhou, Y. Coordinated multipoint transmission and reception in LTE-advanced systems. IEEE Commun. Mag. 2012, 50, 44–50.

- Kim, J.; Lee, H.W.; Chong, S. Virtual Cell Beamforming in Cooperative Networks. IEEE J. Sel. Areas Commun. 2014, 32, 1126–1138.

- Andrews, J. Seven ways that HetNets are a cellular paradigm shift. IEEE Commun. Mag. 2013, 51, 136–144.

- Naderializadeh, N.; Avestimehr, A. ITLinQ: A new approach for spectrum sharing in device-to-device communication systems. IEEE Int. Sympos. Inf. Theory 2014, 32, 1573–1577.

- Rappaport, T.; Sun, S.; Mayzus, R.; Zhao, H.; Azar, Y.; Wang, K.; Wong, G.; Schulz, J.; Samimi, M.; Gutierrez, F. Millimeter Wave Mobile Communications for 5G Cellular: It Will Work! IEEE Access 2013, 1, 335–349.

- Roh, W.; Seol, J.Y.; Park, J.; Lee, B.; Lee, J.; Kim, Y.; Cho, J.; Cheun, K.; Aryanfar, F. Millimeter-wave beamforming as an enabling technology for 5G cellular communications: Theoretical feasibility and prototype results. IEEE Commun. Mag. 2014, 52, 106–113.

- Rangan, S.; Rappaport, T.; Erkip, E. Millimeter-Wave Cellular Wireless Networks: Potentials and Challenges. Proc. IEEE 2014, 102, 366–385.

- Marcus, M.; Pattan, B. Millimeter wave propagation; spectrum management implications. IEEE Microw. Mag. 2005, 6, 54–62.

- Bai, T.; Alkhateeb, A.; Heath, R.W., Jr. Coverage and Capacity of Millimeter-Wave Cellular Networks. IEEE Commun. Mag. 2014, 52, 70–77.

- Foschini, G.; Gans, M. On limits of wireless communications in a fading environment when using multiple antennas. Wirel. Pers. Commun. 1998, 6, 311–335.

- Ghosh, A.; Zhang, J.; Andrews, J.G. Fundamentals of LTE; Prentice-Hall: Englewood Cliffs, NJ, USA, 2010.

- Boccardi, F.; Heath, R.; Lozano, A.; Marzetta, T.; Popovski, P. Five disruptive technology directions for 5G. IEEE Commun. Mag. 2014, 52, 74–80.

- Ashikhmin, A.; Marzetta, T. Pilot contamination precoding in multi-cell large scale antenna systems. In Proceedings of the 2012 IEEE International Symposium on Information Theory Proceedings (ISIT), Cambridge, MA, USA, 1–6 July 2012; pp. 1137–1141.

- 3GPP TSG RAN Plenary. Study on 3D-Channel Model for Elevation Beamforming and FD-MIMO Studies for LTE. Available online: http://www.3gpp.org/DynaReport/FeatureOrStudyItemFile-580042.htm (accessed on 12 December 2015).

- Wu, S.; Wang, C.X.; Aggoune, E.H.M.; Alwakeel, M.M.; He, Y. A non-stationary 3-D wideband twin-cluster model for 5G massive MIMO channels. IEEE J. Sel. Areas Commun. 2014, 32, 1207–1218.

- Kammoun, A.; Khanfir, H.; Altman, Z.; Debbah, M.; Kamoun, M. Preliminary results on 3D channel modeling: From theory to standardization. IEEE J. Sel. Areas Commun. 2014, 32, 1219–1229.

- Agiwal, M.; Roy, A.; Saxena, N. Next generation 5G wireless networks: A comprehensive survey. IEEE Commun. Surv. Tutor. 2016, 18, 1617–1655.

- 3GPP. The Mobile Broadband Standard. 2015. Available online: http://www.3gpp.org/news-events/3gpp-news/1674-timeline_5g (accessed on 12 December 2015).

- Wunder, G.; Jung, P.; Kasparick, M.; Wild, T.; Schaich, F.; Chen, Y.; Brink, S.; Gaspar, I.; Michailow, N.; Festag, A.; et al. 5GNOW: Non-orthogonal, asynchronous waveforms for future mobile applications. IEEE Commun. Mag. 2014, 52, 97–105.

- 5G Infrastructure Association. The 5G Infrastructure Public Private Partnership: The Next Generation of Communication Networks and Services. Available online: https://5g-ppp.eu/ (accessed on 15 July 2016).

- Osseiran, A.; Boccardi, F.; Braun, V.; Kusume, K.; Marsch, P.; Maternia, M.; Queseth, O.; Schellmann, M.; Schotten, H.; Taoka, H.; et al. Scenarios for 5G mobile and wireless communications: The vision of the METIS project. IEEE Commun. Mag. 2014, 52, 26–35.

- Argentati, S.; Cusumano, N. Horizon 2020: The EU Framework Programme for Research and Innovation; European Commission: Brussels, Belgium, 2015.

- Samsung Electronics R&D Center. 5G Vision; White Paper; Samsung Electronics Co., Ltd.: Suwon, Korea, 2015.

- Nokia Networks. Looking Ahead to 5G: Building a Virtual Zero Latency Gigabit Experience; White Paper; Nokia Networks, 2014.

- Huawei. 5G: A Technology Vision; White Paper; Huawei: Shenzhen, China, 2014.

- Ericsson. 5G Radio Access; White Paper; Ericsson: Stockholm, Sweden, 2015.

- Ericsson. More Than 50 Billion Connected Devices; White Paper; Ericsson: Stockholm, Sweden, 2011.

- Sultan, K.; Ali, H. Where Big Data Meets 5G? In Proceedings of the Second International Conference on Internet of Things, Data and Cloud Computing, Cambridge, UK, 22–23 March 2017; pp. 103:1–103:4.

- Yan, Q.; Chen, W.; Poor, H.V. Big Data Driven Wireless Communications: A Human-in-the-Loop Pushing Technique for 5G Systems. IEEE Wirel. Commun. 2018, 25, 64–69.

- Chen, M.; Qian, Y.; Hao, Y.; Li, Y.; Song, J. Data-driven computing and caching in 5G networks: Architecture and delay analysis. IEEE Wirel. Commun. 2018, 25, 70–75.

- Fan, B.; Leng, S.; Yang, K. A dynamic bandwidth allocation algorithm in mobile networks with big data of users and networks. IEEE Netw. 2016, 30, 6–10.

- Imran, A.; Zoha, A.; Abu-Dayya, A. Challenges in 5G: How to empower SON with big data for enabling 5G. IEEE Netw. 2014, 28, 27–33.

- Burch, C. A Survey of Machine Learning; Technical Report; Pennsylvania Governor’s School for the Sciences: Pittsburgh, PA, USA, 2001; Volume 4.

- Nokia Networks. Technology Vision 2020: Teaching Networks to Be Self-Aware. Technical Report. Available online: https://networks.nokia.com/innovation/technology-vision/teach-networks-to-be-self-aware (accessed on 22 March 2017).

- I, C.-L.; Sun, Q.; Liu, Z.; Zhang, S.; Han, S. The Big-Data-Driven Intelligent Wireless Network: Architecture, Use Cases, Solutions, and Future Trends. IEEE Veh. Technol. Mag. 2017, 12, 20–29.

- Han, S.; I, C.-L.; Li, G.; Wang, S.; Sun, Q. Big Data Enabled Mobile Network Design for 5G and Beyond. IEEE Commun. Mag. 2017, 55, 150–157.

- Baştuğ, E.; Bennis, M.; Zeydan, E.; Kader, M.A.; Karatepe, I.A.; Er, A.S.; Debbah, M. Big data meets telcos: A proactive caching perspective. J. Commun. Netw. 2015, 17, 549–557.

- Guo, B.; Chen, C.; Zhang, D.; Yu, Z.; Chin, A. Mobile crowd sensing and computing: When participatory sensing meets participatory social media. IEEE Commun. Mag. 2016, 54, 131–137.

- Zoha, A.; Saeed, A.; Farooq, H.; Rizwan, A.; Imran, A.; Imran, M.A. Leveraging Intelligence from Network CDR Data for Interference aware Energy Consumption Minimization. IEEE Trans. Mobile Comput. 2017.

- Taleb, T.; Samdanis, K.; Mada, B.; Flinck, H.; Dutta, S.; Sabella, D. On multi-access edge computing: A survey of the emerging 5G network edge cloud architecture and orchestration. IEEE Commun. Surv. Tutor. 2017, 19, 1657–1681.

- Zeydan, E.; Bastug, E.; Bennis, M.; Kader, M.A.; Karatepe, I.A.; Er, A.S.; Debbah, M. Big data caching for networking: Moving from cloud to edge. IEEE Commun. Mag. 2016, 54, 36–42.

- Liyanage, M.; Ahmad, I.; Okwuibe, J. Software Defined Security Monitoring in 5G Networks. In A Comprehensive Guide to 5G Security; John Wiley & Sons: Hoboken, NJ, USA, 2018; p. 231.

- Huang, X.; Yu, R.; Kang, J.; Gao, Y.; Maharjan, S.; Gjessing, S.; Zhang, Y. Software Defined Energy Harvesting Networking for 5G Green Communications. IEEE Wirel. Commun. 2017, 24, 38–45.

- Moy, C.; Legouable, R. Machine Learning Proof-of-Concept for Opportunistic Spectrum Access. Technical Report. 2014. Available online: https://hal-supelec.archives-ouvertes.fr/hal-01115860/document (accessed on 29 May 2018).

- Fu, Y.; Li, B.; Zhu, X.; Zhang, C. Active learning without knowing individual instance labels: A pairwise label homogeneity query approach. IEEE Trans. Knowl. Data Eng. 2014, 26, 808–822.

- Settles, B. Active Learning Literature Survey; University of Wisconsin: Madison, WI, USA, 2010; Volume 52, p. 11.

- Rashidi, B.; Fung, C.; Bertino, E. Android malicious application detection using support vector machine and active learning. In Proceedings of the 2017 13th International Conference on Network and Service Management (CNSM), Tokyo, Japan, 26–30 November 2017; pp. 1–9.

- Bengio, Y.; Courville, A.; Vincent, P. Representation learning: A review and new perspectives. IEEE Trans. Pattern Anal. Mach. Intell. 2013, 35, 1798–1828.

- Huang, F.; Yates, A. Biased representation learning for domain adaptation. In Proceedings of the 2012 Joint Conference on Empirical Methods in Natural Language Processing and Computational Natural Language Learning. Association for Computational Linguistics, Jeju Island, Korea, 12–14 July 2012; pp. 1313–1323.

- Xiang, E.W.; Cao, B.; Hu, D.H.; Yang, Q. Bridging domains using world wide knowledge for transfer learning. IEEE Trans. Knowl. Data Eng. 2010, 22, 770–783.

- Pan, S.J.; Yang, Q. A survey on transfer learning. IEEE Trans. Knowl. Data Eng. 2010, 22, 1345–1359.

- Hou, T.; Feng, G.; Qin, S.; Jiang, W. Proactive Content Caching by Exploiting Transfer Learning for Mobile Edge Computing. In Proceedings of the 2017 IEEE Global Communications Conference (GLOBECOM 2017), Singapore, 4–8 December 2017; pp. 1–6.

- Schmidhuber, J. Deep learning in neural networks: An overview. Neural Netw. 2015, 61, 85–117.

- LeCun, Y.; Bengio, Y.; Hinton, G. Deep learning. Nature 2015, 521, 436–444.

- Ali, H.; Tran, S.N.; Benetos, E.; d’Avila Garcez, A.S. Speaker recognition with hybrid features from a deep belief network. Neural Comput. Appl. 2018, 29, 13–19.

- Xu, Z.; Wang, Y.; Tang, J.; Wang, J.; Gursoy, M.C. A deep reinforcement learning based framework for power-efficient resource allocation in cloud RANs. In Proceedings of the 2017 IEEE International Conference on Communications (ICC), Paris, France, 21–25 May 2017; pp. 1–6.

- Maimó, L.F.; Gómez, L.P.; Clemente, F.J.G.; Pérez, M.G.; Pérez, G.M. A Self-Adaptive Deep Learning-Based System for Anomaly Detection in 5G Networks. IEEE Access 2018, 6, 7700–7712.

- Peteiro-Barral, D.; Guijarro-Berdiñas, B. A survey of methods for distributed machine learning. Progress Artif. Intell. 2013, 2, 1–11.

- Zheng, H.; Kulkarni, S.R.; Poor, H.V. Attribute-distributed learning: Models, limits, and algorithms. IEEE Trans. Signal Process. 2011, 59, 386–398.

- Upadhyaya, S.R. Parallel approaches to machine learning—A comprehensive survey. J. Parallel Distrib. Comput. 2013, 73, 284–292.

- Bekkerman, R.; Bilenko, M.; Langford, J. Scaling Up Machine Learning: Parallel and Distributed Approaches; Cambridge University Press: Cambridge, UK, 2011.

- Shalev-Shwartz, S. Online learning and online convex optimization. Found. Trends Mach. Learn. 2012, 4, 107–194.

- Wang, J.; Zhao, P.; Hoi, S.C.; Jin, R. Online feature selection and its applications. IEEE Trans. Knowl. Data Eng. 2014, 26, 698–710.

- AlQerm, I.; Shihada, B. Sophisticated Online Learning Scheme for Green Resource Allocation in 5G Heterogeneous Cloud Radio Access Networks. IEEE Trans. Mobile Comput. 2018.

- Onireti, O.; Zoha, A.; Moysen, J.; Imran, A.; Giupponi, L.; Imran, M.A.; Abu-Dayya, A. A Cell Outage Management Framework for Dense Heterogeneous Networks. IEEE Trans. Veh. Technol. 2016, 65, 2097–2113.

- Ahad, N.; Qadir, J.; Ahsan, N. Neural networks in wireless networks: Techniques, applications and guidelines. J. Netw. Comput. Appl. 2016, 68, 1–27.

- Zheng, Z.W. Receiver design for uplink multiuser code division multiple access communication system based on neural network. Wirel. Pers. Commun. 2010, 53, 67–79.

- Omri, A.; Hamila, R.; Hasna, M.O.; Bouallegue, R.; Chamkhia, H. Estimation of highly Selective Channels for Downlink LTE MIMO-OFDM System by a Robust Neural Network. J. Ubiquitous Syst. Pervasive Netw. 2011, 2, 31–38.

© 2018 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).