Comparative Calculation of Spectral Indices for Post-Fire Changes Using UAV Visible/Thermal Infrared and JL1 Imagery in Jinyun Mountain, Chongqing, China

Abstract

1. Introduction

- The thermal infrared-enhanced Normalized Burn Ratio (NBRT) [13].

- Complex transformations (e.g., the Excess Green Index [EXG], Green Leaf Index [GLI], Triangular Greenness Index [TGI]) [16].

- Delineating fire scars using M-statistic separability metrics [7].

2. Materials and Methods

2.1. Overview of Study Area and Technical Workflow

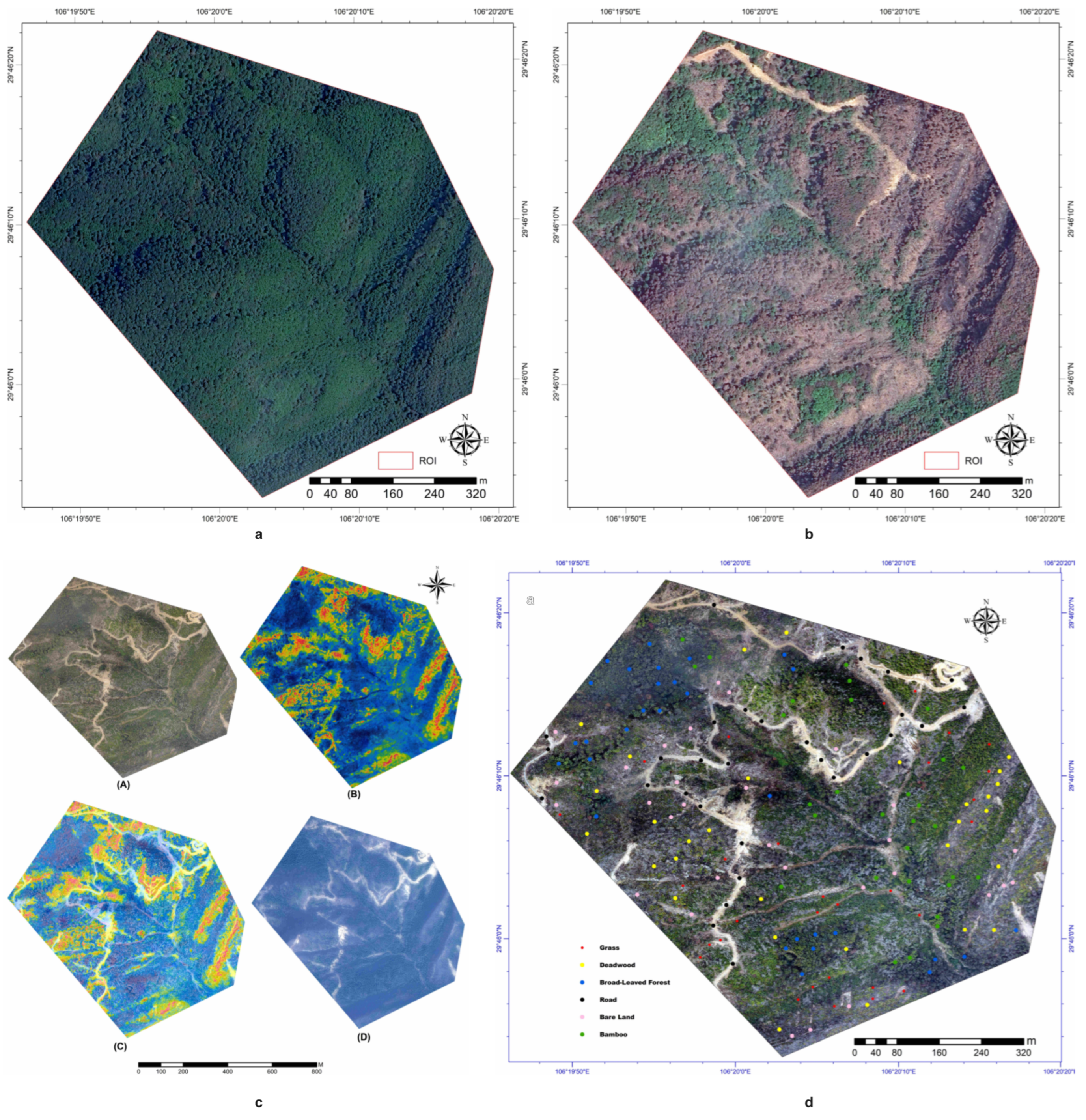

2.1.1. Study Area

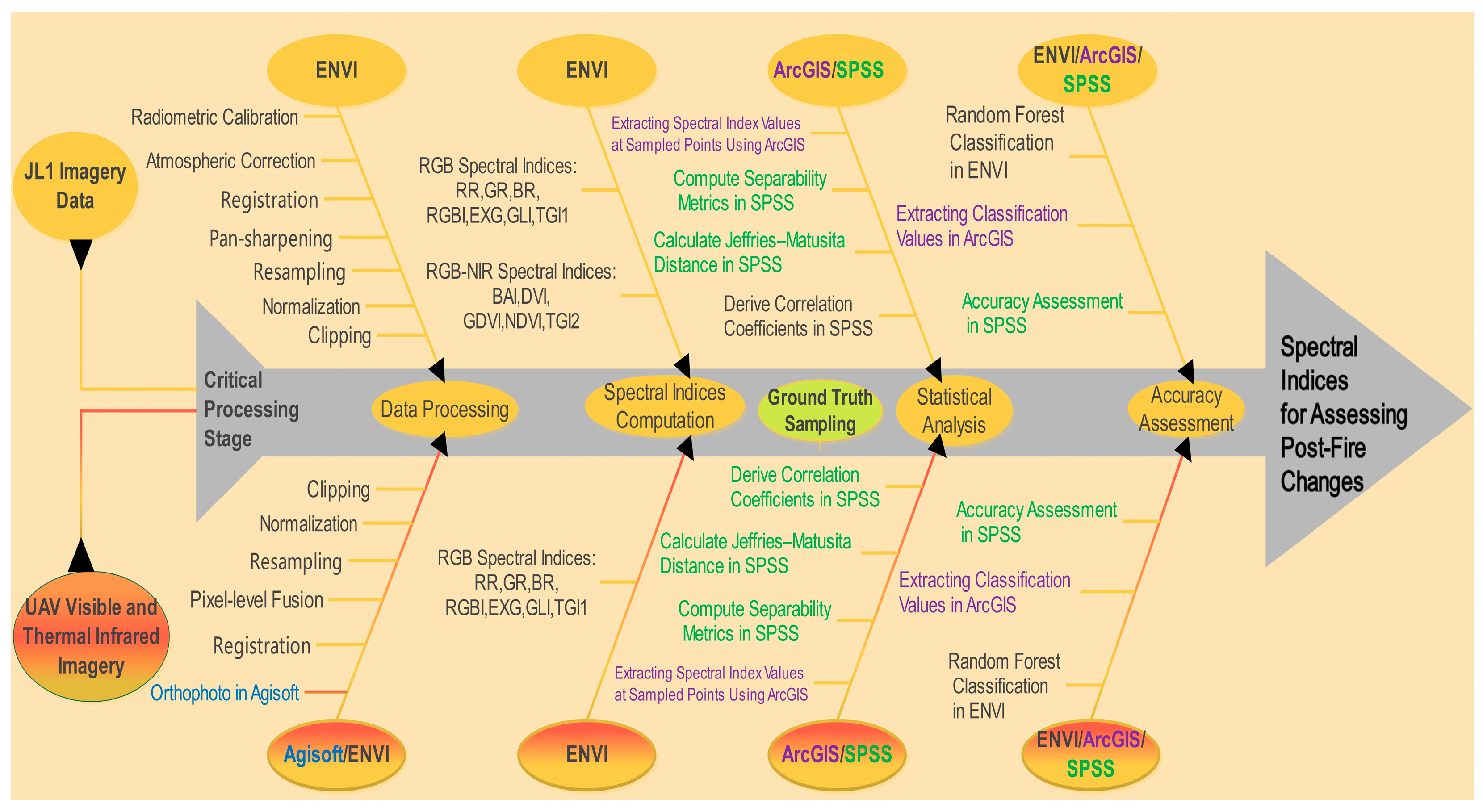

2.1.2. Technical Workflow

2.2. Image Data Acquisition and Preprocessing

2.2.1. Image Data Acquisition

2.2.2. Image Preprocessing

2.3. Spectral Index Calculation

2.4. Data Processing and Analysis

2.4.1. Distinguishing Indices

2.4.2. Jeffries–Matusita (JM) Distance
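
The Jeffries–Matusita distance is commonly computed from the Bhattacharyya distance B between two class distributions as JM = 2(1 − exp(−B)), so it ranges from 0 (identical classes) to 2 (fully separable). The sketch below is a minimal illustration, assuming each class is summarized by a univariate Gaussian over a single spectral index. The function name `jm_distance`, the Gaussian assumption, and the sample values are hypothetical and are not taken from the study.

```python
import math


def jm_distance(mean_a, std_a, mean_b, std_b):
    """Jeffries-Matusita distance between two univariate Gaussian classes.

    Assumption: each class (e.g., burned vs. unburned pixels of one index)
    is described only by its mean and standard deviation.
    """
    if std_a <= 0 or std_b <= 0:
        raise ValueError("standard deviations must be positive")
    var_sum = std_a ** 2 + std_b ** 2
    # Bhattacharyya distance for two 1-D Gaussians:
    # a mean-separation term plus a variance-mismatch term.
    mean_term = (mean_a - mean_b) ** 2 / (4.0 * var_sum)
    var_term = 0.5 * math.log(var_sum / (2.0 * std_a * std_b))
    bhattacharyya = mean_term + var_term
    return 2.0 * (1.0 - math.exp(-bhattacharyya))


if __name__ == "__main__":
    # Hypothetical class statistics, for illustration only.
    print(jm_distance(0.30, 0.05, 0.30, 0.05))  # identical classes, close to 0
    print(jm_distance(0.10, 0.05, 0.60, 0.05))  # well-separated classes, close to 2
```

Because JM saturates at 2, it compresses differences between classes that are already well separated, which is one reason it is often reported alongside an unbounded measure of separability.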

2.4.3. Relevance

2.5. Accuracy Evaluation

3. Results

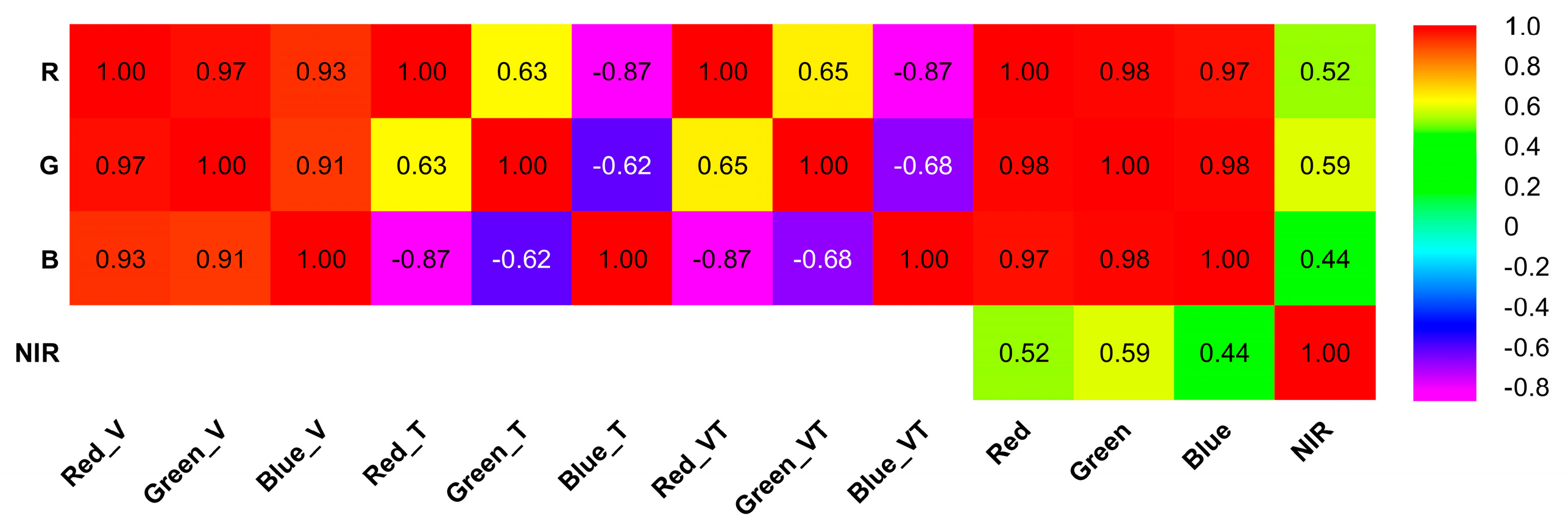

3.1. Spectral Band Comparison

3.2. Comparison of Distinguishing Indices

3.3. Comparison of Spectral Indices of Different Ground Objects

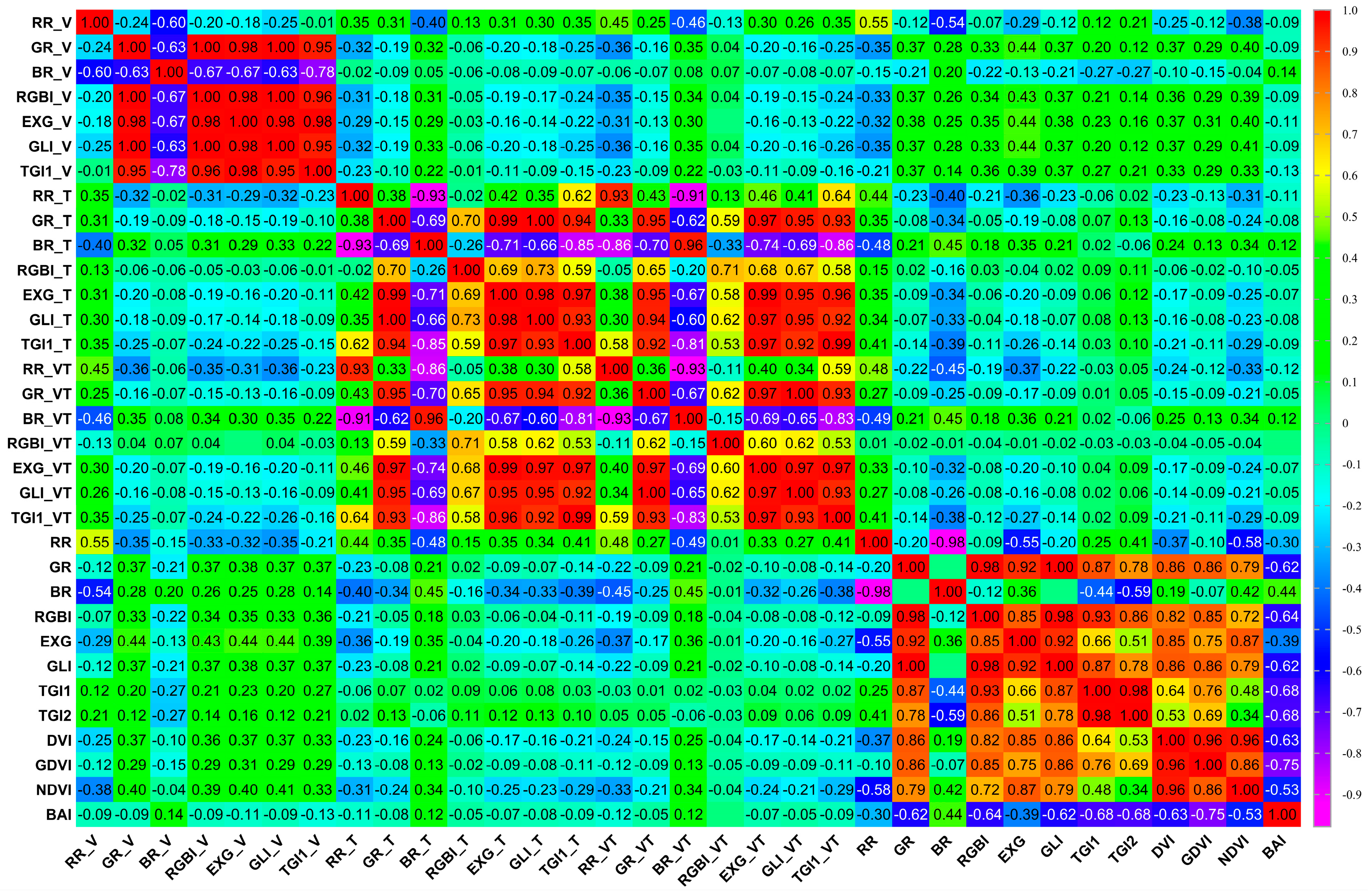

3.4. Correlation of UAVs with JL1-27 Spectral Index

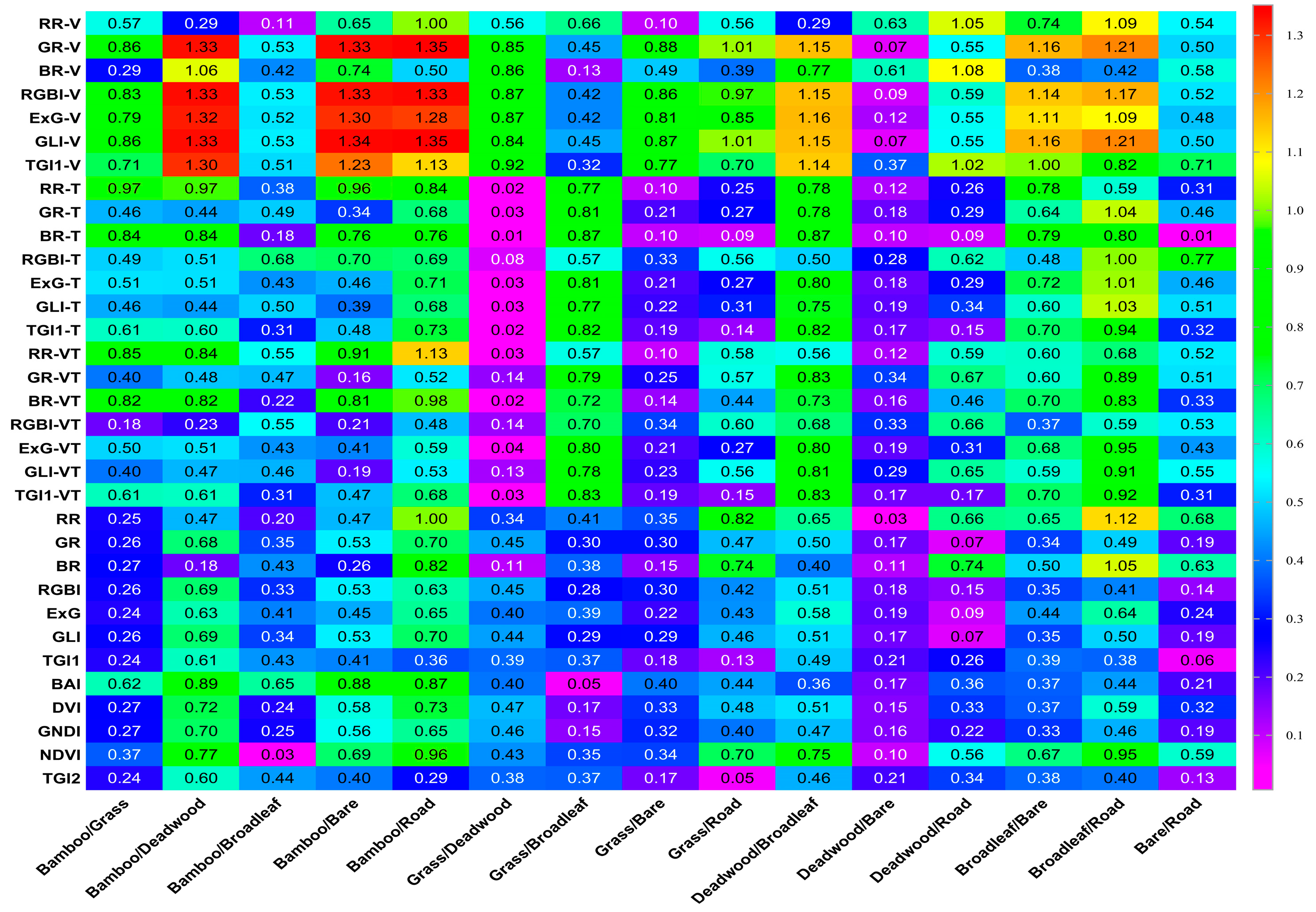

3.5. JM Distance and Spectral Indices

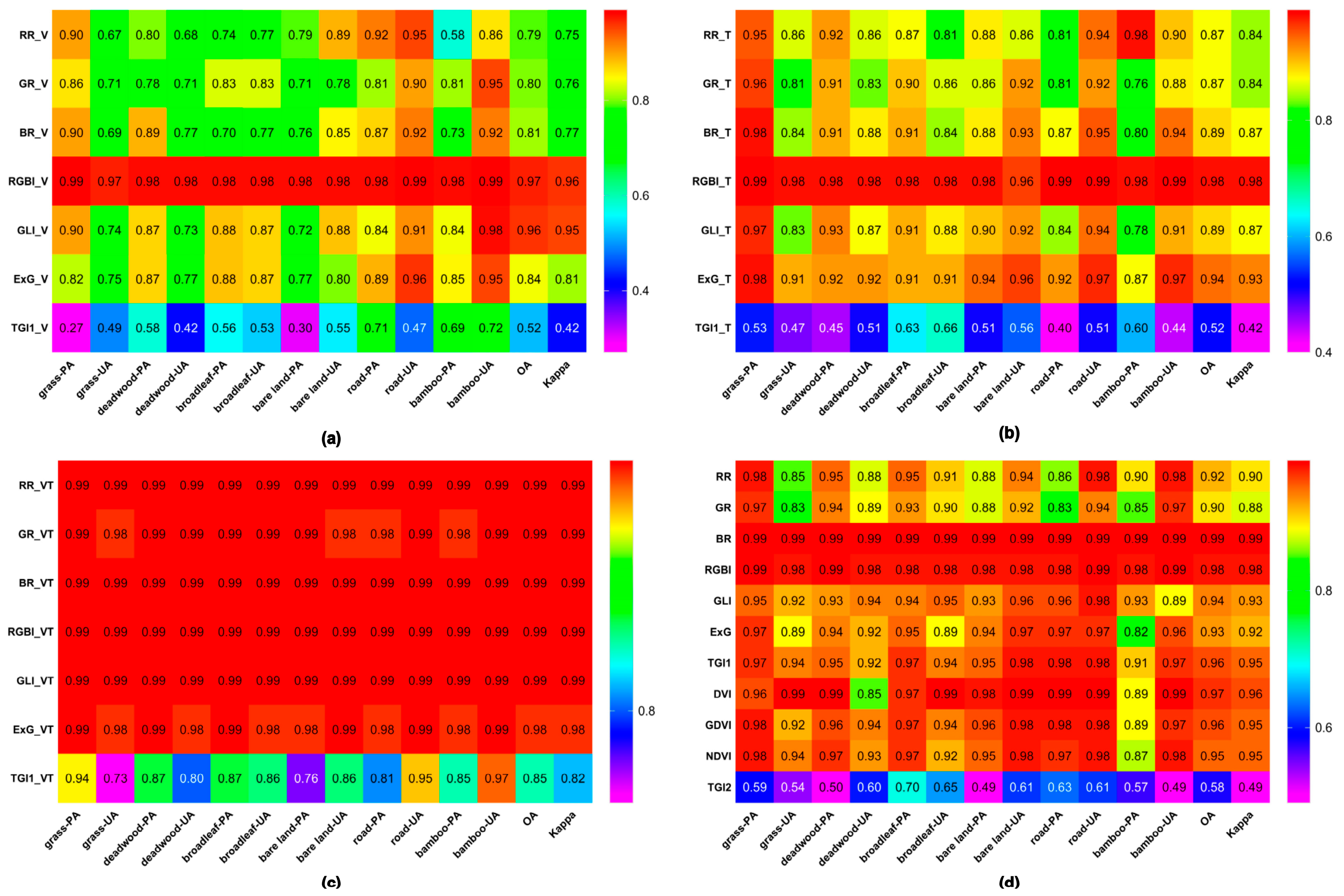

3.6. Accuracy Evaluation

4. Discussion

4.1. Differences in the Extraction of Forest Fire Scars at the Pixel Scale Between UAV and JL1 Images

4.2. Relationship Between Spectral Indices

4.3. Advantages and Disadvantages of Ground Surveys, Unmanned Aerial Vehicles, and Satellite Imagery

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

1. Han, Y.; Zheng, C.; Liu, X.; Tian, Y.; Dong, Z. Burned Area and Burn Severity Mapping with a Transformer-Based Change Detection Model. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2024, 17, 13866–13880.

2. Zhang, L.; Wang, M.; Fu, Y.; Ding, Y. A Forest Fire Recognition Method Using UAV Images Based on Transfer Learning. Forests 2022, 13, 975.

3. Xu, Y.; Li, J.; Zhang, F. A UAV-Based Forest Fire Patrol Path Planning Strategy. Forests 2022, 13, 1952.

4. Li, X.; Gao, H.; Zhang, M.; Zhang, S.; Gao, Z.; Liu, J.; Sun, S.; Hu, T.; Sun, L. Prediction of Forest Fire Spread Rate Using UAV Images and an LSTM Model Considering the Interaction between Fire and Wind. Remote Sens. 2021, 13, 4325.

5. Larrinaga, A.R.; Brotons, L. Greenness Indices from a Low-Cost UAV Imagery as Tools for Monitoring Post-Fire Forest Recovery. Drones 2019, 3, 6.

6. Guimaraes, N.; Padua, L.; Marques, P.; Silva, N.; Peres, E.; Sousa, J.J. Forestry Remote Sensing from Unmanned Aerial Vehicles: A Review Focusing on the Data, Processing and Potentialities. Remote Sens. 2020, 12, 1046.

7. Chuvieco, E.; Martín, M.P.; Palacios, A. Assessment of different spectral indices in the red-near-infrared spectral domain for burned land discrimination. Int. J. Remote Sens. 2002, 23, 5103–5110.

8. Fraser, R.H.; van der Sluijs, J.; Hall, R.J. Calibrating Satellite-Based Indices of Burn Severity from UAV-Derived Metrics of a Burned Boreal Forest in NWT, Canada. Remote Sens. 2017, 9, 279.

9. Quintano, C.; Fernandez-Manso, A.; Stein, A.; Bijker, W. Estimation of area burned by forest fires in Mediterranean countries: A remote sensing data mining perspective. For. Ecol. Manag. 2011, 262, 1597–1607.

10. Perez, C.C.; Olthoff, A.E.; Hernandez-Trejo, H.; Rullan-Silva, C.D. Evaluating the best spectral indices for burned areas in the tropical Pantanos de Centla Biosphere Reserve, Southeastern Mexico. Remote Sens. Appl. Soc. Environ. 2022, 25, 100664.

11. Pacheco, A.d.P.; da Silva, J.A., Jr.; Ruiz-Armenteros, A.M.; Henriques, R.F.F.; Santos, I.d.O. Analysis of Spectral Separability for Detecting Burned Areas Using Landsat-8 OLI/TIRS Images under Different Biomes in Brazil and Portugal. Forests 2023, 14, 663.

12. Ji, X.; Zhou, Z.; Gouda, M.; Zhang, W.; He, Y.; Ye, G.; Li, X. A novel labor-free method for isolating crop leaf pixels from RGB imagery: Generating labels via a topological strategy. Comput. Electron. Agric. 2024, 218, 108631.

13. Roy, D.R.; Boschetti, L.; Trigg, S.N. Remote sensing of fire severity: Assessing the performance of the normalized Burn ratio. IEEE Geosci. Remote Sens. Lett. 2006, 3, 112–116.

14. Burnett, J.D.; Wing, M.G. A low-cost near-infrared digital camera for fire detection and monitoring. Int. J. Remote Sens. 2018, 39, 741–753.

15. Kedia, A.C.; Kapos, B.; Liao, S.; Draper, J.; Eddinger, J.; Updike, C.; Frazier, A.E. An Integrated Spectral-Structural Workflow for Invasive Vegetation Mapping in an Arid Region Using Drones. Drones 2021, 5, 19.

16. Trencanova, B.; Proenca, V.; Bernardino, A. Development of Semantic Maps of Vegetation Cover from UAV Images to Support Planning and Management in Fine-Grained Fire-Prone Landscapes. Remote Sens. 2022, 14, 1262.

17. Mpakairi, K.S.; Kadzunge, S.L.; Ndaimani, H. Testing the utility of the blue spectral region in burned area mapping: Insights from savanna wildfires. Remote Sens. Appl. Soc. Environ. 2020, 20, 100365.

18. Xiao, W.; Deng, X.; He, T.; Guo, J. Using POI and time series Landsat data to identify and rebuilt surface mining, vegetation disturbance and land reclamation process based on Google Earth Engine. J. Environ. Manag. 2023, 327, 116920.

19. Ji, S.; Wang, Y.; He, L.; Zhang, Z.; Meng, F.; Li, X.; Chen, Y.; Wang, D.; Gong, Z. Greenhouse gas emission in the whole process of forest fire including rescue: A case of forest fire in Beibei District of Chongqing. Environ. Sci. Pollut. Res. 2023, 30, 113105–113117.

20. Fu, B.; Zuo, P.; Liu, M.; Lan, G.; He, H.; Lao, Z.; Zhang, Y.; Fan, D.; Gao, E. Classifying vegetation communities karst wetland synergistic use of image fusion and object-based machine learning algorithm with Jilin-1 and UAV multispectral images. Ecol. Indic. 2022, 140, 108989.

21. Beltran-Marcos, D.; Suarez-Seoane, S.; Fernandez-Guisuraga, J.M.; Fernandez-Garcia, V.; Marcos, E.; Calvo, L. Relevance of UAV and sentinel-2 data fusion for estimating topsoil organic carbon after forest fire. Geoderma 2023, 430, 116290.

22. Udelhoven, T. TimeStats: A Software Tool for the Retrieval of Temporal Patterns from Global Satellite Archives. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2011, 4, 310–317.

23. Wu, B.; Zheng, H.; Xu, Z.; Wu, Z.; Zhao, Y. Forest Burned Area Detection Using a Novel Spectral Index Based on Multi-Objective Optimization. Forests 2022, 13, 1787.

24. Woebbecke, D.M.; Meyer, G.E.; Vonbargen, K.; Mortensen, D.A. Color indexes for weed identification under various soil, residue, and lighting conditions. Trans. ASAE 1995, 38, 259–269.

25. Bendig, J.; Yu, K.; Aasen, H.; Bolten, A.; Bennertz, S.; Broscheit, J.; Gnyp, M.L.; Bareth, G. Combining UAV-based plant height from crop surface models, visible, and near infrared vegetation indices for biomass monitoring in barley. Int. J. Appl. Earth Obs. Geoinf. 2015, 39, 79–87.

26. Ma, D.; Wang, Q.; Huang, Q.; Lin, Z.; Yan, Y. Spatio-Temporal Evolution of Vegetation Coverage and Eco-Environmental Quality and Their Coupling Relationship: A Case Study of Southwestern Shandong Province, China. Forests 2024, 15, 1200.

27. Kaufman, Y.J.; Remer, L.A. Detection of forests using mid-IR reflectance: An application for aerosol studies. IEEE Trans. Geosci. Remote Sens. 1994, 32, 672–683.

28. Hao, P.; Zhan, Y.; Wang, L.; Niu, Z.; Shakir, M. Feature Selection of Time Series MODIS Data for Early Crop Classification Using Random Forest: A Case Study in Kansas, USA. Remote Sens. 2015, 7, 5347–5369.

29. Zhao, Y.; Huang, Y.; Sun, X.; Dong, G.; Li, Y.; Ma, M. Forest Fire Mapping Using Multi-Source Remote Sensing Data: A Case Study in Chongqing. Remote Sens. 2023, 15, 2323.

30. Sripada, R.P. Determining In-Season Nitrogen Requirements for Corn Using Aerial Color-Infrared Photography. Ph.D. Thesis, North Carolina State University, Raleigh, NC, USA, 2005.

31. Vargas-Sanabria, D.; Campos-Vargas, C. Multitemporal comparison of burned areas in a tropical dry forest using the burned area index (BAI). Rev. For. Mesoam. Kuru-Rfmk 2020, 17, 29–36.

32. Richardson, A.D.; Jenkins, J.P.; Braswell, B.H.; Hollinger, D.Y.; Ollinger, S.V.; Smith, M.-L. Use of digital webcam images to track spring green-up in a deciduous broadleaf forest. Oecologia 2007, 152, 323–334.

33. Pereira, J.M.C.; Mota, B.; Privette, J.L.; Caylor, K.K.; Silva, J.M.N.; Sá, A.C.L.; Ni-Meister, W. A simulation analysis of the detectability of understory burns in miombo woodlands. Remote Sens. Environ. 2004, 93, 296–310.

34. Carvajal-Ramirez, F.; Marques da Silva, J.R.; Aguera-Vega, F.; Martinez-Carricondo, P.; Serrano, J.; Jesus Moral, F. Evaluation of Fire Severity Indices Based on Pre- and Post-Fire Multispectral Imagery Sensed from UAV. Remote Sens. 2019, 11, 993.

35. Padua, L.; Guimaraes, N.; Adao, T.; Sousa, A.; Peres, E.; Sousa, J.J. Effectiveness of Sentinel-2 in Multi-Temporal Post-Fire Monitoring When Compared with UAV Imagery. ISPRS Int. J. Geo-Inf. 2020, 9, 225.

36. Hendel, I.-G.; Ross, G.M. Efficacy of Remote Sensing in Early Forest Fire Detection: A Thermal Sensor Comparison. Can. J. Remote Sens. 2020, 46, 414–428.

37. de Jesus Marcial-Pablo, M.; Gonzalez-Sanchez, A.; Ivan Jimenez-Jimenez, S.; Ernesto Ontiveros-Capurata, R.; Ojeda-Bustamante, W. Estimation of vegetation fraction using RGB and multispectral images from UAV. Int. J. Remote Sens. 2019, 40, 420–438.

38. Tucker, C.J. Red and photographic infrared linear combinations for monitoring vegetation. Remote Sens. Environ. 1979, 8, 127–150.

39. Sun, J.; Zhu, R.; Gong, J.; Qu, C.; Guo, F. Cross-Comparison Between Jilin-1GF03B and Sentinel-2 Multi-Spectral Measurements and Phenological Monitors. IEEE Access 2024, 12, 43540–43551.

40. Zhang, X.; Sun, Y.; Shang, K.; Zhang, L.; Wang, S. Crop Classification Based on Feature Band Set Construction and Object-Oriented Approach Using Hyperspectral Images. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2016, 9, 4117–4128.

41. Louhaichi, M.; Borman, M.M.; Johnson, D.E. Spatially located platform and aerial photography for documentation of grazing impacts on wheat. Geocarto Int. 2001, 16, 65–70.

42. Hunt, E.R., Jr.; Daughtry, C.S.T.; Eitel, J.U.H.; Long, D.S. Remote sensing leaf chlorophyll content using a visible band index. Agron. J. 2011, 103, 1090–1099.

43. Rouse, J.W.; Haas, R.H.; Schell, J.A.; Deering, D.W. Monitoring vegetation systems in the great plains with ERTS. In Proceedings of the 3rd Earth Resources Technology Satellite Symposium, Washington, DC, USA, 10–14 December 1973.

44. Hunt, E.R., Jr.; Doraiswamy, P.C.; McMurtrey, J.E.; Daughtry, C.S.T.; Perry, E.M.; Akhmedov, B. A visible band index for remote sensing leaf chlorophyll content at the canopy scale. Int. J. Appl. Earth Obs. Geoinf. 2013, 21, 103–112.

45. Bourgoin, C.; Betbeder, J.; Couteron, P.; Blanc, L.; Dessard, H.; Oszwald, J.; Le Roux, R.; Cornu, G.; Reymondin, L.; Mazzei, L.; et al. UAV-based canopy textures assess changes in forest structure from long-term degradation. Ecol. Indic. 2020, 115, 106386.

46. Alpar, O.; Krejcar, O. Quantization and Equalization of Pseudocolor Images in Hand Thermography. In Bioinformatics and Biomedical Engineering, Proceedings of the IWBBIO 2017, Granada, Spain, 26–28 April 2017, Part I; Rojas, I., Ortuno, F., Eds.; Lecture Notes in Bioinformatics; Springer: Cham, Switzerland, 2017; Volume 10208, pp. 397–407.

| Bands | Channels | Wavelength/μm | Spatial Resolution/m | Acquisition Date | Satellite Sensor | Source |
|---|---|---|---|---|---|---|
| Pan | | 0.45~0.80 | 0.5/0.75 | 27 November 2023 | JL1GF02B | https://www.jl1mall.com/ (Available from 12 August 2024 to 15 October 2024) |
| Band1 | Blue | 0.45~0.51 | 2/3 | 12 August 2024 | JL1KF01B | |
| Band2 | Green | 0.51~0.58 | | 6 September 2022 | JL1KF01C | |
| Band3 | Red | 0.63~0.69 | | 3 August 2022 | | |
| Band4 | NIR | 0.77~0.895 | | | | |
| RGB-V | Red/Green/Blue | 0.40~0.70 | 0.07 | 28 December 2023 | M2D visible sensor | This study |
| RGB-T | Red/Green/Blue | 8~14 | 0.36 | 28 December 2023 | M2D thermal infrared | |

| Index | Abbreviation | Expression | Reference |
|---|---|---|---|
| Red Ratio | RR | | [32] |
| Green Ratio | GR | | [32] |
| Blue Ratio | BR | | [32] |
| Red/Green/Blue Index | RGBI | | [25] |
| Excess Green | EXG | | [24] |
| Green Leaf Index | GLI | | [41] |
| Triangular Greenness Index 1 | TGI1 | | [44] |
| Triangular Greenness Index 2 | TGI2 | | [42] |
| Difference Vegetation Index | DVI | | [38] |
| Green Difference Vegetation Index | GDVI | | [30] |
| Normalized Difference Vegetation Index | NDVI | | [43] |
| Burned Area Index | BAI | | [7] |
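
The expressions for these indices are not recoverable from the table itself, so the sketch below uses the forms commonly given in the cited literature: chromatic coordinates for RR, GR, and BR, the Green Leaf Index, the Bendig et al. red-green-blue vegetation index for RGBI, and the usual NIR-based DVI, GDVI, NDVI, and BAI. Treat every formula and function name here as an assumption about what the study computed. As a sanity check, the bamboo class means reported for the UAV visible sensor (RR_V 0.344, GR_V 0.378, BR_V 0.278) give GLI ≈ 0.097 and RGBI ≈ 0.198 under these definitions, close to the tabulated 0.096 and 0.197. EXG, TGI1, and TGI2 are left out because their exact forms cannot be confirmed in the same way.

```python
def chromatic_ratios(red, green, blue):
    """Return (RR, GR, BR): each band divided by the sum of the three bands."""
    total = red + green + blue
    if total == 0:
        raise ValueError("band sum is zero; ratios are undefined")
    return red / total, green / total, blue / total


def gli(red, green, blue):
    """Green Leaf Index, assumed form: (2G - R - B) / (2G + R + B)."""
    return (2 * green - red - blue) / (2 * green + red + blue)


def rgbi(red, green, blue):
    """Red/Green/Blue index, assumed form: (G^2 - R*B) / (G^2 + R*B)."""
    return (green ** 2 - red * blue) / (green ** 2 + red * blue)


def dvi(nir, red):
    """Difference Vegetation Index: NIR - R."""
    return nir - red


def gdvi(nir, green):
    """Green Difference Vegetation Index: NIR - G."""
    return nir - green


def ndvi(nir, red):
    """Normalized Difference Vegetation Index: (NIR - R) / (NIR + R)."""
    return (nir - red) / (nir + red)


def bai(red, nir):
    """Burned Area Index, assumed form with convergence point (0.1, 0.06)."""
    return 1.0 / ((0.1 - red) ** 2 + (0.06 - nir) ** 2)


if __name__ == "__main__":
    # Bamboo class means for the UAV visible sensor, taken from the results table.
    r, g, b = 0.344, 0.378, 0.278
    print("GLI  =", round(gli(r, g, b), 3))
    print("RGBI =", round(rgbi(r, g, b), 3))
```

Small differences from the tabulated values are expected, since an index computed from class-mean bands is not the same as the class mean of a per-pixel index when the index is nonlinear.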

| Index | Pre-Fire (JL1-83) Mean | Pre-Fire (JL1-83) STD | JL1-96 Mean | JL1-96 STD | JL1-96 M | JL1-27 M83 | JL1-27 M96 | JL1-812 M83 | JL1-812 M96 | UAV-V M83 | UAV-V M96 | UAV-T M83 | UAV-T M96 | UAV-VT M83 | UAV-VT M96 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| RR | 0.2354 | 0.0080 | 0.3394 | 0.0288 | 2.8252 | 2.0791 | 0.2985 | 2.4236 | 0.0723 | 5.0145 | 0.1690 | 0.3745 | 0.8441 | 0.1593 | 0.6919 |
| GR | 0.3797 | 0.0099 | 0.3342 | 0.0094 | 2.3585 | 1.2249 | 0.1724 | 0.8112 | 1.6797 | 1.0421 | 0.5855 | 0.0296 | 0.4474 | 0.1007 | 0.4061 |
| BR | 0.3849 | 0.0117 | 0.3264 | 0.0212 | 1.7803 | 1.1318 | 0.2598 | 6.4715 | 2.8322 | 2.4962 | 0.5538 | 0.3049 | 0.5318 | 0.1680 | 0.4228 |
| RGBI | 0.2280 | 0.0380 | 0.0065 | 0.0418 | 2.7736 | 1.4518 | 0.1729 | 0.7546 | 1.8912 | 1.2695 | 0.5819 | 1.1210 | 1.8623 | 0.4743 | 1.0486 |
| EXG | 0.0216 | 0.0057 | 0.0000 | 0.0059 | 1.8650 | 0.9334 | 0.1372 | 0.7498 | 1.5413 | 0.5847 | 0.8778 | 0.4910 | 0.5559 | 0.4220 | 0.4863 |
| GLI | 0.1007 | 0.0207 | 0.0018 | 0.0210 | 2.3672 | 1.2350 | 0.1652 | 0.7927 | 1.7044 | 1.0505 | 0.5875 | 0.0849 | 0.4079 | 0.1536 | 0.3729 |
| TGI1 | 0.0083 | 0.0028 | 0.0004 | 0.0021 | 1.6018 | 0.7240 | 0.0881 | 1.2039 | 1.9514 | 0.7877 | 1.0091 | 0.2650 | 0.3054 | 0.2308 | 0.2709 |
| BAI | 38.5388 | 30.1057 | 161.2208 | 86.5482 | 1.0517 | 0.0938 | 1.1439 | 0.5757 | 1.4923 | | | | | | |
| DVI | 0.2049 | 0.0681 | 0.0669 | 0.0230 | 1.5156 | 0.0503 | 1.1111 | 0.6005 | 2.0382 | | | | | | |
| GDVI | 0.1826 | 0.0654 | 0.0688 | 0.0253 | 1.2552 | 0.0791 | 1.1287 | 0.7489 | 2.1332 | | | | | | |
| NDVI | 0.7228 | 0.0663 | 0.3115 | 0.0671 | 3.0831 | 0.8030 | 1.0378 | 0.0810 | 2.0397 | | | | | | |
| TGI2 | 0.6851 | 0.2523 | 0.0503 | 0.1724 | 1.4947 | 0.6613 | 0.0720 | 1.3304 | 2.0627 | | | | | | |
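
The M values for JL1-96 in this table are consistent with the usual separability statistic, M = |μ₁ − μ₂| / (σ₁ + σ₂), applied to the pre-fire (JL1-83) and post-fire (JL1-96) means and standard deviations. The sketch below recomputes two rows as a check; the function name `m_statistic` is illustrative, and the assumption that the study used exactly this form rests only on the agreement with the tabulated numbers.

```python
def m_statistic(mean_a, std_a, mean_b, std_b):
    """Separability index M = |mean_a - mean_b| / (std_a + std_b)."""
    spread = std_a + std_b
    if spread <= 0:
        raise ValueError("sum of standard deviations must be positive")
    return abs(mean_a - mean_b) / spread


if __name__ == "__main__":
    # (pre-fire mean, pre-fire STD, JL1-96 mean, JL1-96 STD) from the table.
    rows = {
        "RR": (0.2354, 0.0080, 0.3394, 0.0288),
        "NDVI": (0.7228, 0.0663, 0.3115, 0.0671),
    }
    for name, stats in rows.items():
        print(name, round(m_statistic(*stats), 2))
    # prints: RR 2.83, then NDVI 3.08 (tabulated: 2.8252 and 3.0831)
```

By convention, M above 1 indicates good separation between the two classes and M below 1 indicates substantial overlap of their histograms.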

| Indices | Road | Bare Land | Deadwood | Grass | Bamboo | Broad-Leaved Forest |
|---|---|---|---|---|---|---|
| RR_V | 0.372 | 0.360 | 0.346 | 0.359 | 0.344 | 0.341 |
| GR_V | 0.338 | 0.335 | 0.334 | 0.349 | 0.378 | 0.363 |
| BR_V | 0.291 | 0.305 | 0.320 | 0.292 | 0.278 | 0.296 |
| RGBI_V | 0.027 | 0.012 | 0.005 | 0.077 | 0.197 | 0.132 |
| GLI_V | 0.009 | 0.004 | 0.002 | 0.035 | 0.096 | 0.065 |
| EXG_V | 0.027 | 0.008 | 0.003 | 0.064 | 0.164 | 0.109 |
| TGI1_V | 0.033 | 0.014 | 0.005 | 0.042 | 0.092 | 0.061 |
| RR_T | 0.230 | 0.311 | 0.313 | 0.314 | 0.079 | 0.098 |
| GR_T | 0.457 | 0.369 | 0.414 | 0.412 | 0.350 | 0.285 |
| BR_T | 0.313 | 0.320 | 0.273 | 0.274 | 0.571 | 0.616 |
| RGBI_T | 0.718 | 0.436 | 0.542 | 0.560 | 0.497 | 0.220 |
| GLI_T | 0.246 | 0.058 | 0.156 | 0.152 | 0.029 | −0.119 |
| EXG_T | 0.484 | 0.157 | 0.327 | 0.312 | 0.073 | −0.078 |
| TGI1_T | 0.235 | 0.086 | 0.178 | 0.168 | −0.022 | −0.083 |
| RR_VT | 0.335 | 0.348 | 0.326 | 0.329 | 0.124 | 0.209 |
| GR_VT | 0.382 | 0.360 | 0.416 | 0.399 | 0.347 | 0.301 |
| BR_VT | 0.283 | 0.292 | 0.258 | 0.273 | 0.529 | 0.490 |
| RGBI_VT | 0.252 | 0.260 | 0.539 | 0.482 | 0.370 | 0.016 |
| GLI_VT | 0.104 | 0.048 | 0.161 | 0.129 | 0.023 | −0.079 |
| EXG_VT | 0.356 | 0.140 | 0.321 | 0.292 | 0.055 | −0.114 |
| TGI1_VT | 0.192 | 0.085 | 0.176 | 0.161 | −0.026 | −0.092 |
| RR | 0.397 | 0.347 | 0.340 | 0.331 | 0.319 | 0.315 |
| GR | 0.318 | 0.318 | 0.316 | 0.319 | 0.325 | 0.321 |
| BR | 0.285 | 0.335 | 0.345 | 0.350 | 0.356 | 0.363 |
| RGBI | −0.053 | −0.069 | −0.079 | −0.064 | −0.034 | −0.052 |
| GLI | −0.035 | −0.036 | −0.040 | −0.033 | −0.018 | −0.028 |
| EXG | −0.028 | −0.021 | −0.023 | −0.018 | −0.010 | −0.014 |
| TGI1 | −0.006 | −0.010 | −0.012 | −0.010 | −0.006 | −0.009 |
| TGI2 | −0.301 | −0.873 | −1.058 | −0.903 | −0.620 | −0.858 |
| DVI | 0.056 | 0.077 | 0.073 | 0.094 | 0.171 | 0.153 |
| GDVI | 0.107 | 0.091 | 0.084 | 0.100 | 0.168 | 0.151 |
| NDVI | 0.101 | 0.185 | 0.189 | 0.238 | 0.389 | 0.372 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Zhu, J.; Liu, Y.; Liang, X.; Liu, F. Comparative Calculation of Spectral Indices for Post-Fire Changes Using UAV Visible/Thermal Infrared and JL1 Imagery in Jinyun Mountain, Chongqing, China. Forests 2025, 16, 1147. https://doi.org/10.3390/f16071147
