Edge AI for Industrial Visual Inspection: YOLOv8-Based Visual Conformity Detection Using Raspberry Pi

Abstract

1. Introduction

2. Related Works

3. Materials and Methods

3.1. Theoretical Background

3.1.1. Computer Vision and Convolutional Neural Networks in Industry 4.0

- Convolution Layer: Uses kernels (filters) to extract features from the input image. As the kernel slides across the image, it computes a dot product with each local subregion, producing a feature map. The step by which the kernel moves is controlled by a parameter called the stride, and zero padding around the borders can preserve the spatial dimensions of the input [8].

- Pooling Layer: Reduces the dimensionality of feature maps while retaining the most relevant information. The most common operations are max pooling (selects the maximum value in each region) and average pooling (computes the mean of each region) [8].

- Flattening Layer: Converts the two-dimensional feature maps into a one-dimensional vector so that the extracted information can be passed to the dense (fully connected) layers, which perform the final classification [8].

- Dense Layers (Fully Connected Layers): Perform the decision process based on the extracted and flattened features. These layers connect all the neurons in the previous layer to all those in the next layer, as in a traditional neural network [8].

- Activation Function: After each convolution or fully connected operation, activation functions such as the Rectified Linear Unit (ReLU), defined as ReLU(x) = max(0, x), are applied to introduce non-linearities, thereby enhancing the network’s modeling capability [8].
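To make the layer descriptions above concrete, the following pure-Python sketch chains a convolution (with stride and zero padding), ReLU, pooling, flattening, and a dense layer on a tiny 4 × 4 input. It illustrates the arithmetic only: the input, kernel, and weights are made-up values, and real frameworks (YOLOv8 included) rely on optimized tensor libraries rather than nested lists.

```python
# Pure-Python sketch of the CNN building blocks described above (illustration only).
# Like most deep-learning frameworks, conv2d does not flip the kernel
# (strictly speaking, it computes a cross-correlation).


def conv2d(image, kernel, stride=1, padding=0):
    """Slide `kernel` over `image`, taking a dot product with each subregion."""
    if padding:
        width = len(image[0]) + 2 * padding
        top = [[0.0] * width for _ in range(padding)]
        middle = [[0.0] * padding + list(row) + [0.0] * padding for row in image]
        bottom = [[0.0] * width for _ in range(padding)]
        image = top + middle + bottom
    kh, kw = len(kernel), len(kernel[0])
    out_h = (len(image) - kh) // stride + 1
    out_w = (len(image[0]) - kw) // stride + 1
    return [
        [
            sum(image[i * stride + a][j * stride + b] * kernel[a][b]
                for a in range(kh) for b in range(kw))
            for j in range(out_w)
        ]
        for i in range(out_h)
    ]


def relu(fmap):
    """Element-wise ReLU(x) = max(0, x)."""
    return [[max(0, v) for v in row] for row in fmap]


def _mean(values):
    return sum(values) / len(values)


def pool2d(fmap, size=2, mode="max"):
    """Non-overlapping pooling over size x size windows (stride = size).

    Rows/columns that do not fill a complete window are dropped (floor behavior).
    """
    if mode not in ("max", "avg"):
        raise ValueError("mode must be 'max' or 'avg'")
    reducer = max if mode == "max" else _mean
    out = []
    for i in range(0, len(fmap) - size + 1, size):
        row = []
        for j in range(0, len(fmap[0]) - size + 1, size):
            window = [fmap[i + a][j + b] for a in range(size) for b in range(size)]
            row.append(reducer(window))
        out.append(row)
    return out


def flatten(fmap):
    """Turn a 2-D feature map into a 1-D feature vector (row-major order)."""
    return [v for row in fmap for v in row]


def dense(x, weights, biases):
    """Fully connected layer: every input feeds every output neuron."""
    return [sum(w * xi for w, xi in zip(w_row, x)) + b
            for w_row, b in zip(weights, biases)]


if __name__ == "__main__":
    image = [[1, 2, 0, 1],
             [0, 1, 3, 1],
             [2, 1, 0, 0],
             [1, 0, 1, 2]]
    kernel = [[1, 0], [0, -1]]           # made-up 2x2 "diagonal difference" filter
    fmap = relu(conv2d(image, kernel))   # 3x3 map: [[0, 0, 0], [0, 1, 3], [2, 0, 0]]
    pooled = pool2d(fmap, size=2)        # [[1]] (last row/column dropped)
    features = flatten(pooled)           # [1]
    print(dense(features, weights=[[2.0], [-1.0]], biases=[0.5, 0.5]))  # [2.5, -0.5]
```

Note that a 3 × 3 kernel with `padding=1` and `stride=1` returns a map the same size as the input, which is the dimension-preserving effect of padding mentioned in the convolution bullet.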

In industry, CNNs serve a range of functions, including the following:

- Automated Inspection of Surface Defects: CNNs are widely used to detect surface defects on industrial materials, including steel, fabrics, ceramics, photovoltaic panels, magnetic tiles, and LCD screens. These networks can extract relevant visual features directly from images captured during production, enabling fast and accurate decisions about product quality [9].

- Vision Systems for Quality Control: The combination of deep learning and computer vision has transformed industrial inspection systems, replacing manual methods and simple automation. The result is improved real-time quality control that can adapt to varying lighting conditions and variations in product patterns. Examples include the visual inspection of automotive surfaces, electronics, and textile items using embedded cameras and CNNs trained to recognize anomalies [10].

- Integration with Industry 4.0: CNNs are considered essential technologies within the Industry 4.0 ecosystem, as they support intelligent automation, predictive maintenance, real-time process control, and customized manufacturing. With visual data collection and real-time analysis, CNNs facilitate decentralized and efficient decision-making. Applications in embedded devices, edge computing, and cyber–physical systems allow the use of CNNs even in industrial environments with limited computational resources [9].

- Visual Inspection in the Automotive Industry: In the automotive sector, CNNs are applied at several stages of production, from inspecting raw materials to verifying the final vehicle. They detect tiny defects, such as corrosion, paint flaws, cracks, or structural deformations, that can escape human inspection [10].

- Automated Inspection with CNNs in the Industrial Life Cycle: CNNs play a crucial role in various phases of the product life cycle, including visual inspection, assembly, process control, and logistics. The architecture of industrial vision systems integrates sensing modules, vision algorithms, and automated decision-making, optimizing production [7].

- Continuous Improvement in Complex Manufacturing Environments: With the advent of Industry 4.0, CNNs enable adaptive visual inspections that respond dynamically to changes in production processes. This significantly reduces response time and rework costs, promotes intelligent manufacturing based on visual data, and supports real-time inspection using embedded sensors and edge computing [10].

- Monitoring and Diagnostics in Critical Infrastructure Environments: CNNs are also applied in the automated inspection of overhead contact lines (OCLs) used in railways. Such applications demonstrate the potential of computer vision in highly critical industrial sectors and complex infrastructure [11].

3.1.2. YOLOv8 Architecture: Nano vs. Small

3.1.3. Hardware Architectures for AI: CPU vs. GPU

- Reduction in Training Time: The joint use of CPUs and GPUs drastically reduces the training time of AI models, enabling faster development and tuning cycles [19].

- Energy Savings: Combining CPUs and GPUs optimizes energy consumption by pairing the efficient parallel processing of GPUs with the flow control of CPUs, which is particularly important in data centers and large-scale computing infrastructures [19].

3.2. Methodological Framework

3.2.1. Research Approach: Design Science Research (DSR)

3.2.2. Problem Identification and Motivation

3.2.3. Objectives and Success Criteria

- The general geometric shape of the tube (contour and curvature).

- The presence and correct position of the connectors.

- Visual fault detection, such as deformed, missing, or poorly fitted parts.

3.3. System Development

3.3.1. Dataset Collection and Annotation

- Conforming (class 1 with 800 images).

- Non-conforming (class 2 with 1227 images).
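The class counts above imply a moderately imbalanced dataset. The sketch below computes each class's share and parses one line of a YOLO-format label file, in which each object is stored as `<class_id> <x_center> <y_center> <width> <height>` with coordinates normalized to [0, 1]. It assumes the study used this standard Ultralytics label layout, where class ids are zero-indexed, so "class 1" and "class 2" above would typically be stored as ids 0 and 1; the helper names are hypothetical.

```python
# Sketch (assumption): class balance of the dataset described above and a
# validator for one line of a YOLO-format label file. Helper names are hypothetical.

CLASS_COUNTS = {"conforming": 800, "non-conforming": 1227}


def class_shares(counts):
    """Fraction of the dataset that each class represents."""
    total = sum(counts.values())
    return {name: n / total for name, n in counts.items()}


def parse_yolo_label(line):
    """Parse '<class_id> <x_center> <y_center> <width> <height>' (normalized)."""
    parts = line.split()
    if len(parts) != 5:
        raise ValueError(f"expected 5 fields, got {len(parts)}: {line!r}")
    class_id = int(parts[0])
    x, y, w, h = (float(p) for p in parts[1:])
    if not all(0.0 <= v <= 1.0 for v in (x, y, w, h)):
        raise ValueError(f"coordinates must be normalized to [0, 1]: {line!r}")
    return class_id, x, y, w, h


if __name__ == "__main__":
    shares = class_shares(CLASS_COUNTS)
    print({k: round(v, 3) for k, v in shares.items()})
    print(parse_yolo_label("1 0.5 0.5 0.2 0.3"))
```

With 2027 images in total, the non-conforming class accounts for roughly 60.5% of the data (about 1.53 non-conforming images per conforming one), which is worth keeping in mind when reading per-class precision and recall.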

3.3.2. Training Environment and Parameters

- Number of epochs: 100;

- Image size: 640 × 640 px;

- Batch size: 8;

- Optimizer: SGD with a learning rate of 0.01;

- Early stopping: enabled after 10 epochs without mAP improvement.
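The hyperparameters listed above map naturally onto Ultralytics training arguments (`epochs`, `imgsz`, `batch`, `optimizer`, `lr0`, `patience`). The sketch below is an assumption about how such a run could be configured, not the authors' script; the dataset file `data.yaml` is hypothetical. It also includes a pure-Python illustration of patience-based early stopping. Ultralytics itself tracks a combined fitness score weighted mostly toward mAP@0.5:0.95, so tracking a single mAP value here is a simplification.

```python
# Sketch (assumption): the listed training settings expressed as Ultralytics
# arguments, plus a toy illustration of patience-based early stopping.

TRAIN_ARGS = {
    "data": "data.yaml",   # hypothetical dataset config file
    "epochs": 100,
    "imgsz": 640,
    "batch": 8,
    "optimizer": "SGD",
    "lr0": 0.01,           # initial learning rate
    "patience": 10,        # epochs without improvement before stopping
}


def train(weights="yolov8n.pt"):
    """Launch training; requires `pip install ultralytics` and the dataset."""
    from ultralytics import YOLO  # imported lazily so the rest runs without it
    return YOLO(weights).train(**TRAIN_ARGS)


def early_stop_epoch(val_map_history, patience=10):
    """Return the 1-based epoch at which training stops (or the last epoch).

    Training stops once `patience` consecutive epochs pass without the
    validation mAP exceeding the best value seen so far.
    """
    best = float("-inf")
    epochs_without_improvement = 0
    for epoch, value in enumerate(val_map_history, start=1):
        if value > best:
            best = value
            epochs_without_improvement = 0
        else:
            epochs_without_improvement += 1
            if epochs_without_improvement >= patience:
                return epoch
    return len(val_map_history)
```

For example, a run whose validation mAP peaks at epoch 3 and then stagnates would stop at epoch 13 with `patience=10`, well before the 100-epoch budget.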

3.3.3. YOLOv8 Model Implementation

3.3.4. Embedded System Deployment on Raspberry Pi 500

3.4. Evaluation and Testing

3.4.1. Inference Testing on GPUs and CPUs

- “Conforming” for parts conforming to the standard;

- “Non-conforming” for parts that deviate from the standard, with the non-conforming elements visually highlighted.
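The two output labels above imply a mapping from raw detections to a per-part verdict. The sketch below shows one possible rule under stated assumptions: class id 0 is "Conforming", class id 1 is "Non-conforming", and detections below a 0.5 confidence threshold are ignored. The ids, the threshold, and the "Uncertain" fallback are hypothetical choices, not taken from the study. The adapter assumes the Ultralytics `Results.boxes` tensors `cls`, `conf`, and `xyxy`.

```python
# Hypothetical decision rule: turn YOLO detections into the verdict shown to
# the operator, returning the boxes that should be highlighted.

CLASS_NAMES = {0: "Conforming", 1: "Non-conforming"}  # hypothetical class ids


def detections_from_ultralytics(result):
    """Adapt one Ultralytics Results object into (class_id, confidence, box) tuples."""
    boxes = result.boxes
    return [
        (int(c), float(p), tuple(b))
        for c, p, b in zip(boxes.cls.tolist(), boxes.conf.tolist(), boxes.xyxy.tolist())
    ]


def verdict(detections, conf_threshold=0.5):
    """detections: iterable of (class_id, confidence, (x1, y1, x2, y2)).

    Any confident non-conforming detection marks the part as non-conforming and
    its boxes are returned for highlighting. If nothing clears the threshold,
    the part is labeled "Uncertain" (e.g., for manual review).
    """
    confident = [d for d in detections if d[1] >= conf_threshold]
    flagged = [box for cls, _, box in confident if cls == 1]
    if flagged:
        return CLASS_NAMES[1], flagged
    if any(cls == 0 for cls, _, _ in confident):
        return CLASS_NAMES[0], []
    return "Uncertain", []
```

Giving priority to non-conforming detections reflects a conservative quality-control stance: a single confident defect outweighs any number of conforming detections on the same part.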

3.4.2. Evaluation and Performance Metrics

- Accuracy measures the proportion of correct predictions among all predictions made by the model.

- Precision and Recall are defined using the following terms:

- True Positives (TP): the number of correctly predicted positive detections.

- False Positives (FP): the number of incorrect positive predictions (i.e., predicted as positive but actually negative).

- False Negatives (FN): the number of missed detections (i.e., predicted as negative but actually positive).

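- Precision (Equation (1)) quantifies the proportion of correctly predicted positive detections among all positive predictions:

$$\mathrm{Precision} = \frac{TP}{TP + FP} \tag{1}$$

- Recall (Equation (2)) measures the ability of the model to detect all relevant objects, that is, the proportion of actual positives that are detected:

$$\mathrm{Recall} = \frac{TP}{TP + FN} \tag{2}$$

- F1-score (Equation (3)) is the harmonic mean of Precision and Recall, balancing both measures:

$$F_1 = 2 \times \frac{\mathrm{Precision} \times \mathrm{Recall}}{\mathrm{Precision} + \mathrm{Recall}} \tag{3}$$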

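- Mean Average Precision (mAP) is the mean of the average precision (AP) values computed for each class, where AP is the area under that class's precision–recall curve. A detection is matched to a ground-truth object according to the intersection over union (IoU) of their bounding boxes. Two variants are reported:

  - mAP@0.5:0.95: mAP averaged over ten IoU thresholds from 0.5 to 0.95 in steps of 0.05, the primary metric of the COCO benchmark.

  - mAP@0.5: mAP computed at a single IoU threshold of 0.5.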
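The metric definitions above can be made concrete with a few plain-Python helpers: IoU between two boxes, Precision/Recall/F1 from TP, FP, and FN counts (Equations (1) to (3)), all-point interpolated AP, and the mean over classes. This is a simplified sketch. Evaluation toolkits differ in interpolation details (COCO-style evaluation, for instance, samples 101 recall points), so values may not match a given library exactly, and the counts in the usage note are invented.

```python
# Simplified sketch of detection metrics (not a drop-in replacement for COCO or
# Ultralytics evaluators). Boxes are (x1, y1, x2, y2).


def iou(box_a, box_b):
    """Intersection over union of two axis-aligned boxes."""
    ix1, iy1 = max(box_a[0], box_b[0]), max(box_a[1], box_b[1])
    ix2, iy2 = min(box_a[2], box_b[2]), min(box_a[3], box_b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def precision_recall_f1(tp, fp, fn):
    """Equations (1)-(3). A prediction counts as TP when its class matches and
    its IoU with a not-yet-matched ground-truth box reaches the threshold."""
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return precision, recall, f1


def average_precision(recalls, precisions):
    """All-point interpolated AP: area under the precision-recall curve.

    `recalls` must be sorted ascending, with `precisions` aligned to them.
    """
    r = [0.0] + list(recalls) + [1.0]
    p = [0.0] + list(precisions) + [0.0]
    # Precision envelope: make precision non-increasing as recall grows.
    for i in range(len(p) - 2, -1, -1):
        p[i] = max(p[i], p[i + 1])
    return sum((r[i + 1] - r[i]) * p[i + 1] for i in range(len(r) - 1))


def mean_ap(per_class_aps):
    """mAP: mean of the per-class AP values (at one IoU threshold)."""
    return sum(per_class_aps) / len(per_class_aps)


# IoU thresholds averaged by mAP@0.5:0.95 (0.50, 0.55, ..., 0.95).
COCO_IOU_THRESHOLDS = [round(0.5 + 0.05 * k, 2) for k in range(10)]
```

For instance, `precision_recall_f1(tp=8, fp=2, fn=2)` gives 0.8 for all three measures, and mAP@0.5:0.95 is obtained by computing `mean_ap` once per threshold in `COCO_IOU_THRESHOLDS` and averaging the ten results.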

3.4.3. Practical Demonstration in Industrial Scenario

4. Results and Discussion

4.1. YOLOv8 Model Selection (Small vs. Nano)

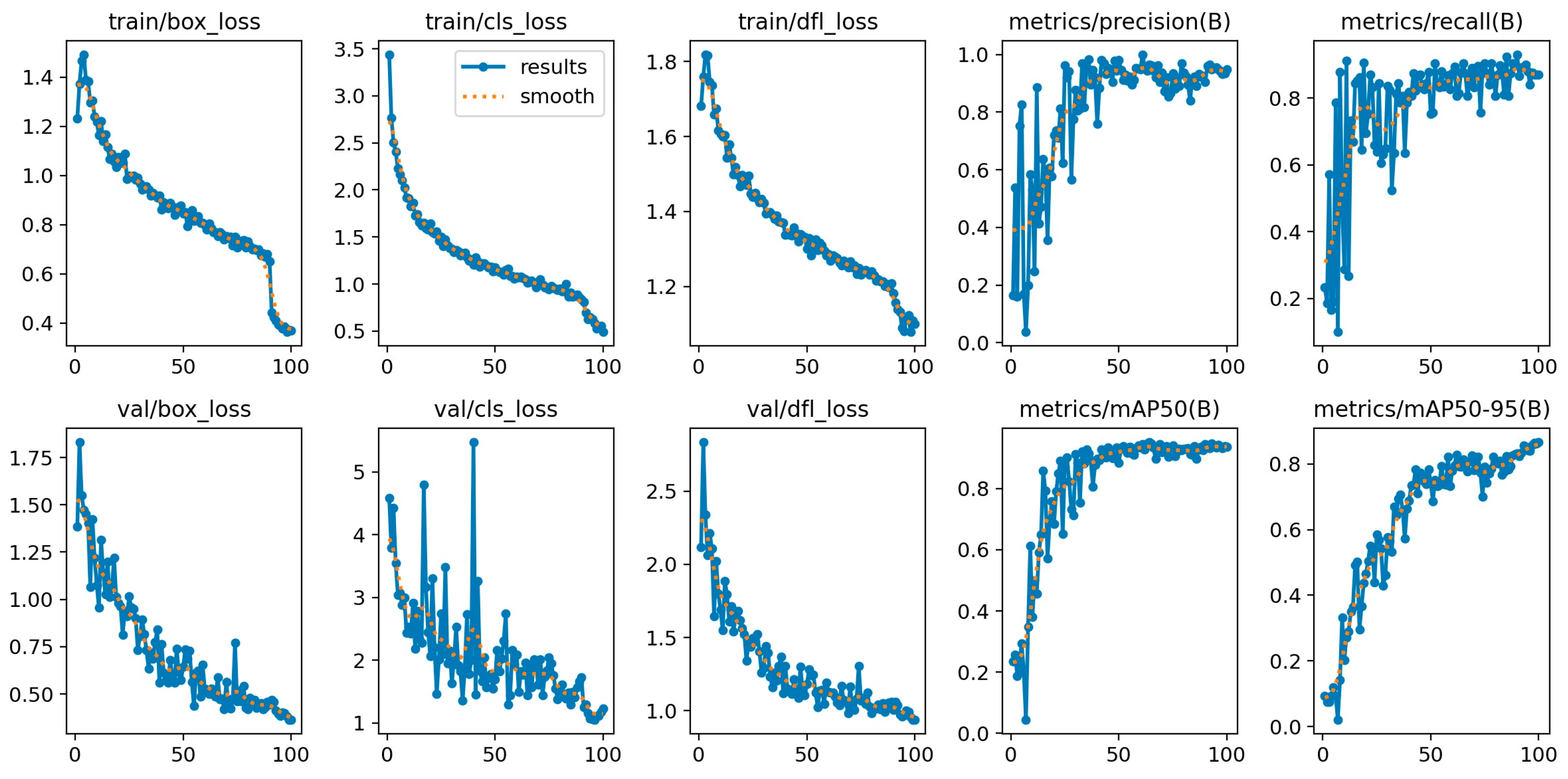

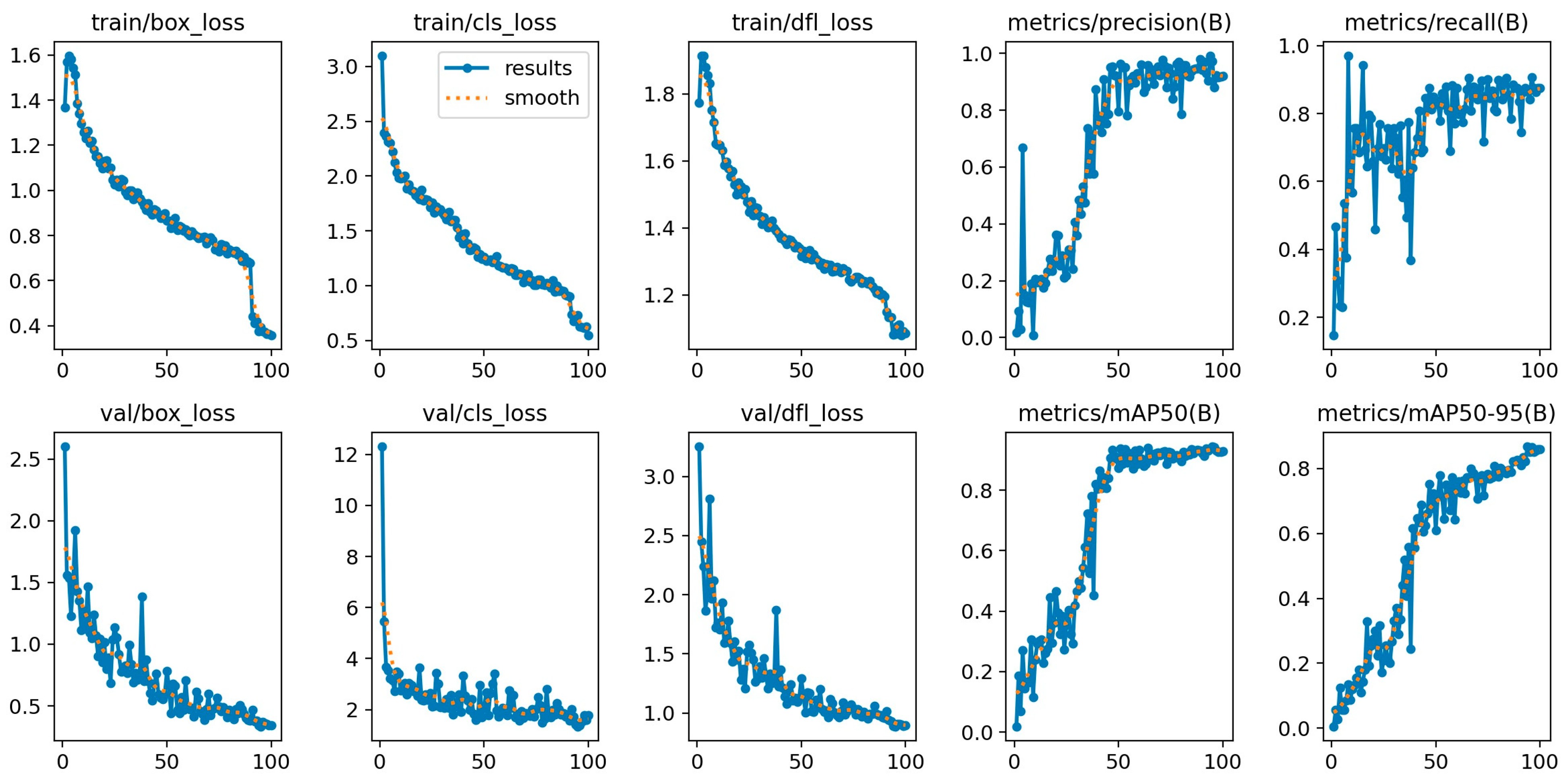

4.2. Performance of YOLOv8 Nano and Small Models

Overfitting Behavior of YOLOv8 Small

4.3. IoT Performance—Pi 500

4.4. Performance Comparison: GPU vs. CPU

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| CPU | Central Processing Unit |
| GPU | Graphics Processing Unit |
| CNN | Convolutional Neural Network |
| YOLO | You Only Look Once |
| DSR | Design Science Research |
| ReLU | Rectified Linear Unit |
| mAP | Mean Average Precision |
| FLOP | Floating-Point Operation |
| IoT | Internet of Things |

References

1. Yang, D.; Cui, Y.; Yu, Z.; Yuan, H. Deep learning based steel pipe weld defect detection. Appl. Artif. Intell. 2021, 35, 1237–1249.

2. Mazzetto, M.; Teixeira, M.; Rodrigues, É.O.; Casanova, D. Deep learning models for visual inspection on automotive assembling line. arXiv 2020, arXiv:2007.01857.

3. Qi, Z.; Ding, L.; Li, X.; Hu, J.; Lyu, B.; Xiang, A. Detecting and classifying defective products in images using YOLO. arXiv 2024, arXiv:2412.16935.

4. Huang, H.; Zhu, K. Automotive parts defect detection based on YOLOv7. Electronics 2024, 13, 1817.

5. Zhang, K.; Qin, L.; Zhu, L. PDS-YOLO: A Real-Time Detection Algorithm for Pipeline Defect Detection. Electronics 2025, 14, 208.

6. Zhao, X.; Wang, L.; Zhang, Y.; Han, X.; Deveci, M.; Parmar, M. A review of convolutional neural networks in computer vision. Artif. Intell. Rev. 2024, 57, 99.

7. Zhou, L.; Zhang, L.; Konz, N. Computer vision techniques in manufacturing. IEEE Trans. Syst. Man Cybern. Syst. 2022, 53, 105–117.

8. Anumol, C.S. Advancements in CNN architectures for computer vision: A comprehensive review. In Proceedings of the 2023 Annual International Conference on Emerging Research Areas: International Conference on Intelligent Systems (AICERA/ICIS), Kanjirapally, India, 16–18 November 2023; IEEE: New York, NY, USA, 2023; pp. 1–7.

9. Khanam, R.; Hussain, M.; Hill, R.; Allen, P. A comprehensive review of convolutional neural networks for defect detection in industrial applications. IEEE Access 2024, 12, 94250–94295.

10. Islam, M.R.; Zamil, M.Z.H.; Rayed, M.E.; Kabir, M.M.; Mridha, M.F.; Nishimura, S.; Shin, J. Deep Learning and Computer Vision Techniques for Enhanced Quality Control in Manufacturing Processes. IEEE Access 2024, 12, 121449–121479.

11. Yu, L.; Gao, S.; Zhang, D.; Kang, G.; Zhan, D.; Roberts, C. A survey on automatic inspections of overhead contact lines by computer vision. IEEE Trans. Intell. Transp. Syst. 2021, 23, 10104–10125.

12. Redmon, J.; Divvala, S.; Girshick, R.; Farhadi, A. You only look once: Unified, real-time object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 779–788.

13. Hussain, M. YOLO-v1 to YOLO-v8: The Rise of YOLO and Its Complementary Nature toward Digital Manufacturing and Industrial Defect Detection. Machines 2023, 11, 677.

14. Karthika, B.; Venkatesan, R.; Dharssinee, M.; Sujarani, R.; Reshma, V. Object Detection Using YOLO-V8. In Proceedings of the 15th International Conference on Computing, Communication and Networking Technologies (ICCCNT), Kamand, India, 24–28 June 2024; IEEE: New York, NY, USA, 2024.

15. Ahmed, T.; Maaz, A.; Mahmood, D.; Abideen, Z.U.; Arshad, U.; Ali, R.H. The yolov8 edge: Harnessing custom datasets for superior real-time detection. In Proceedings of the 2023 18th International Conference on Emerging Technologies (ICET), Peshawar, Pakistan, 6–7 November 2023; IEEE: New York, NY, USA, 2023.

16. Lin, J.; Chen, Y.; Huang, S. YOLO-Nano: A Highly Compact You Only Look Once Convolutional Neural Network for Object Detection. arXiv 2020, arXiv:2004.07676.

17. Jocher, G. YOLOv5 by Ultralytics Documentation. Ultralytics. 2023. Available online: https://docs.ultralytics.com (accessed on 10 July 2025).

18. Chandrashekar, B.N.; Geetha, V.; Shastry, K.A.; Manjunath, B.A. Performance Model of HPC Application on CPU-GPU Platform. In Proceedings of the IEEE 2nd Mysore Sub Section International Conference (MysuruCon), Mysuru, India, 16–17 October 2022.

19. Kaur, R.; Mohammadi, F. Power Estimation and Comparison of Heterogeneous CPU-GPU Processors. In Proceedings of the IEEE 25th Electronics Packaging Technology Conference (EPTC), Singapore, 5–8 December 2023.

20. Venkat, R.A.; Oussalem, Z.; Bhattacharya, A.K. Training Convolutional Neural Networks with Differential Evolution using Concurrent Task Apportioning on Hybrid CPU-GPU Architectures. In Proceedings of the IEEE Congress on Evolutionary Computation (CEC), Kraków, Poland, 28 June–1 July 2021.

21. Kimm, H.; Paik, I.; Kimm, H. Performance Comparison of TPU, GPU, CPU on Google Colaboratory over Distributed Deep Learning. In Proceedings of the IEEE 14th International Symposium on Embedded Multicore/Many-core Systems-on-Chip (MCSoC), Singapore, 20–23 December 2021.

22. Wang, Y.; Li, Y.; Guo, J.; Fan, Y.; Chen, L.; Zhang, B.; Wang, W.; Zhao, Y.; Zhang, J. Cost-effective computing power provisioning for video streams in a Computing Power Network with mixed CPU and GPU. In Proceedings of the 2023 Asia Communications and Photonics Conference (ACP/POEM), Wuhan, China, 4–7 November 2023.

23. Jay, M.; Ostapenco, V.; Lefèvre, L.; Trystram, D.; Orgerie, A.C.; Fichel, B. An experimental comparison of software-based power meters: Focus on CPU and GPU. In Proceedings of the 23rd IEEE/ACM International Symposium on Cluster, Cloud and Internet Computing (CCGrid 2023), Bangalore, India, 1–4 May 2023.

24. Peffers, K.; Tuunanen, T.; Rothenberger, M.A.; Chatterjee, S. A design science research methodology for information systems research. J. Manag. Inf. Syst. 2007, 24, 45–77.

25. Antunes, S.N.; Okano, M.T.; Nääs, I.d.A.; Lopes, W.A.C.; Aguiar, F.P.L.; Vendrametto, O.; Fernandes, J.C.L.; Fernandes, M.E. Model Development for Identifying Aromatic Herbs Using Object Detection Algorithm. AgriEngineering 2024, 6, 1924–1936.

| Criterion | YOLO Nano | YOLO Small |
|---|---|---|
| Architecture | Optimized CNN with separable convolutions | CSPNet/C2f + FPN or PAN |
| Parameters | ~1–2 million | ~7–11 million |
| FLOPs | <1 GFLOP | 5–10 GFLOPs |
| Accuracy (mAP) | 25–35% | 35–45% |
| Speed | Very high, ideal for edge | High, with real-time support |
| Applications | IoT, drones, mobile apps | Industry 4.0, autonomous vehicles, manufacturing |
| Compatible Frameworks | Limited support (YOLO Nano is academic research) | Broad support (Ultralytics, PyTorch 2.x, ONNX, CoreML) |
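The Architecture row above attributes part of the Nano variant's compactness to separable convolutions. The back-of-the-envelope sketch below shows why: it counts the weights and multiply-accumulate operations (MACs) of a standard convolution versus a depthwise separable one. The layer sizes (3 × 3 kernel, 64 to 128 channels, 80 × 80 output) are hypothetical and do not describe either network's actual layers.

```python
# Back-of-the-envelope comparison: standard vs. depthwise separable convolution.
# Layer sizes used below are hypothetical.


def conv_params(k, c_in, c_out, bias=False):
    """Weights in a standard k x k convolution mapping c_in -> c_out channels."""
    return k * k * c_in * c_out + (c_out if bias else 0)


def separable_params(k, c_in, c_out, bias=False):
    """Depthwise k x k conv (one filter per input channel) + 1 x 1 pointwise conv."""
    depthwise = k * k * c_in
    pointwise = c_in * c_out
    return depthwise + pointwise + ((c_in + c_out) if bias else 0)


def macs(params_without_bias, out_h, out_w):
    """Multiply-accumulates for a stride-1 layer: each weight is applied once
    per output spatial position."""
    return params_without_bias * out_h * out_w


if __name__ == "__main__":
    std = conv_params(3, 64, 128)         # 73,728 weights
    sep = separable_params(3, 64, 128)    # 576 + 8,192 = 8,768 weights
    print(std, sep, round(std / sep, 1))  # roughly 8.4x fewer weights
    print(macs(std, 80, 80), macs(sep, 80, 80))
```

Because the spatial filtering and the channel mixing are factored into two cheap steps, the saving grows with the number of output channels. Published FLOP figures are often quoted as about 2 × MACs, though some tools report MACs directly, so cross-model comparisons should use a consistent convention.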

| Criterion | CPU | GPU |
|---|---|---|
| Performance | High in complex sequential tasks; low parallelization [18] | Very high in highly parallelizable tasks; ideal for deep learning and matrix computations [18,21] |
| Cost | Generally, a lower initial cost; good value for money for general tasks [19] | Higher initial cost; excellent cost–benefit in specialized applications requiring acceleration [22] |
| Energy Efficiency | Moderate energy efficiency due to less parallelization [23] | High energy efficiency in parallel applications that reduce total execution time [19,23] |
| Recommended Applications | Sequential applications with high logical complexity [18] | Parallelizable applications, deep learning, intensive numerical computation [20] |

| Model | Epochs | Precision (P) | Recall (R) | mAP@0.5 | mAP@0.5:0.95 | F1-Score | Inference Time per Image |
|---|---|---|---|---|---|---|---|
| YOLOv8 Small | 100 | 0.951 | 0.904 | 0.941 | 0.851 | 0.907 | ~13–14 ms |
| YOLOv8 Small | 200 | 0.925 | 0.871 | 0.922 | 0.847 | 0.911 | ~13–14 ms |
| YOLOv8 Nano | 100 | 0.922 | 0.841 | 0.934 | 0.865 | 0.925 | ~13–14 ms |
| YOLOv8 Nano | 200 | 0.932 | 0.862 | 0.938 | 0.868 | 0.914 | ~13–14 ms |

| Model | Epochs | Image | Detected Class | mAP@0.5 | Confidence Score | Inference Time (Pi 500) |
|---|---|---|---|---|---|---|
| YOLOv8 Nano | 100 | ok.jpg | Conforming | 0.934 | 0.91 | ~470 ms |
| YOLOv8 Nano | 100 | nok.jpg | Non-conforming | 0.934 | 0.88 | ~460 ms |
| YOLOv8 Nano | 100 | ok1.jpg | Conforming | 0.934 | 0.41 (low) | ~470 ms |
| YOLOv8 Nano | 100 | nok1.jpg | Non-conforming | 0.934 | 0.63 | ~470 ms |
| YOLOv8 Nano | 100 | ok2.jpg | Conforming | 0.934 | 0.71 | ~460 ms |
| YOLOv8 Nano | 100 | nok2.jpg | Non-conforming | 0.934 | 0.83 | ~470 ms |
| YOLOv8 Nano | 100 | ok3.jpg | Conforming | 0.934 | 0.86 | ~460 ms |
| YOLOv8 Nano | 100 | nok3.jpg | Non-conforming | 0.934 | 0.89 | ~470 ms |
| YOLOv8 Nano | 200 | ok.jpg | Conforming | 0.938 | 0.91 | ~470 ms |
| YOLOv8 Nano | 200 | nok.jpg | Non-conforming | 0.938 | 0.88 | ~460 ms |
| YOLOv8 Nano | 200 | ok1.jpg | Conforming | 0.938 | 0.62 | ~470 ms |
| YOLOv8 Nano | 200 | nok1.jpg | Non-conforming | 0.938 | 0.85 | ~470 ms |
| YOLOv8 Nano | 200 | ok2.jpg | Conforming | 0.938 | 0.71 | ~460 ms |
| YOLOv8 Nano | 200 | nok2.jpg | Non-conforming | 0.938 | 0.89 | ~470 ms |
| YOLOv8 Nano | 200 | ok3.jpg | Conforming | 0.938 | 0.92 | ~460 ms |
| YOLOv8 Nano | 200 | nok3.jpg | Non-conforming | 0.938 | 0.94 | ~470 ms |
| YOLOv8 Small | 100 | ok.jpg | Conforming | 0.941 | 0.98 | ~1337 ms |
| YOLOv8 Small | 100 | nok.jpg | Non-conforming | 0.941 | 0.87 | ~1315 ms |
| YOLOv8 Small | 100 | ok1.jpg | Conforming | 0.941 | 0.61 | ~1319 ms |
| YOLOv8 Small | 100 | nok1.jpg | Non-conforming | 0.941 | 0.64 | ~1322 ms |
| YOLOv8 Small | 100 | ok2.jpg | Conforming | 0.941 | 0.89 | ~1337 ms |
| YOLOv8 Small | 100 | nok2.jpg | Non-conforming | 0.941 | 0.81 | ~1316 ms |
| YOLOv8 Small | 100 | ok3.jpg | Conforming | 0.941 | 0.72 | ~1321 ms |
| YOLOv8 Small | 100 | nok3.jpg | Non-conforming | 0.941 | 0.74 | ~1313 ms |
| YOLOv8 Small | 200 | ok.jpg | Conforming | 0.922 | 0.95 | ~1339 ms |
| YOLOv8 Small | 200 | nok.jpg | Non-conforming | 0.922 | 0.82 | ~1326 ms |
| YOLOv8 Small | 200 | ok1.jpg | Conforming | 0.922 | 0.67 | ~1314 ms |
| YOLOv8 Small | 200 | nok1.jpg | Non-conforming | 0.922 | 0.68 | ~1305 ms |
| YOLOv8 Small | 200 | ok2.jpg | Conforming | 0.922 | 0.83 | ~1327 ms |
| YOLOv8 Small | 200 | nok2.jpg | Non-conforming | 0.922 | 0.80 | ~1319 ms |
| YOLOv8 Small | 200 | ok3.jpg | Conforming | 0.922 | 0.69 | ~1334 ms |
| YOLOv8 Small | 200 | nok3.jpg | Non-conforming | 0.922 | 0.71 | ~1329 ms |

| Model | Epochs | Inference Time (GPU) | Inference Time (CPU, Pi 500) | Approximate Difference |
|---|---|---|---|---|
| YOLOv8 Nano | 100 | ~13 ms | ~470 ms | ~36× slower |
| YOLOv8 Nano | 200 | ~13 ms | ~470 ms | ~36× slower |
| YOLOv8 Small | 100 | ~13 ms | ~1315 ms | ~101× slower |
| YOLOv8 Small | 200 | ~13 ms | ~1315 ms | ~101× slower |
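The slowdown factors in the table above follow directly from the latency ratio, and the same latencies can be restated as throughput. The sketch below reproduces that arithmetic and adds a generic latency-measurement helper; it assumes `infer` is any callable wrapping a model's prediction (a hypothetical placeholder). On a GPU, CUDA kernels run asynchronously, so `infer` should synchronize (for example, with `torch.cuda.synchronize()`) before returning, or the timings will be underestimated. Ultralytics also reports per-stage timings in each result's `speed` attribute.

```python
# Sketch: latency measurement and the CPU-vs-GPU arithmetic behind the table.
import statistics
import time


def measure_latency_ms(infer, sample, warmup=3, runs=20):
    """Median wall-clock latency of `infer(sample)` in milliseconds.

    Warm-up calls are excluded so one-time costs (model loading, caching,
    memory allocation) do not distort the measurement.
    """
    for _ in range(warmup):
        infer(sample)
    timings = []
    for _ in range(runs):
        start = time.perf_counter()
        infer(sample)
        timings.append((time.perf_counter() - start) * 1000.0)
    return statistics.median(timings)


def slowdown(cpu_ms, gpu_ms):
    """How many times slower the CPU run is than the GPU run."""
    return cpu_ms / gpu_ms


def throughput_fps(latency_ms):
    """Images per second for sequential, one-image-at-a-time inference."""
    return 1000.0 / latency_ms


if __name__ == "__main__":
    for name, cpu_ms in (("YOLOv8 Nano", 470), ("YOLOv8 Small", 1315)):
        print(name, round(slowdown(cpu_ms, 13)), "x slower,",
              round(throughput_fps(cpu_ms), 2), "images/s on the Pi 500")
```

With the reported latencies, the Nano model processes about 2.1 images per second on the Pi 500 and the Small model about 0.76, which is the practical budget an inspection station would have per part when running either model sequentially.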

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Okano, M.T.; Lopes, W.A.C.; Ruggero, S.M.; Vendrametto, O.; Fernandes, J.C.L. Edge AI for Industrial Visual Inspection: YOLOv8-Based Visual Conformity Detection Using Raspberry Pi. Algorithms 2025, 18, 510. https://doi.org/10.3390/a18080510

Okano MT, Lopes WAC, Ruggero SM, Vendrametto O, Fernandes JCL. Edge AI for Industrial Visual Inspection: YOLOv8-Based Visual Conformity Detection Using Raspberry Pi. Algorithms. 2025; 18(8):510. https://doi.org/10.3390/a18080510

Chicago/Turabian Style: Okano, Marcelo T., William Aparecido Celestino Lopes, Sergio Miele Ruggero, Oduvaldo Vendrametto, and João Carlos Lopes Fernandes. 2025. "Edge AI for Industrial Visual Inspection: YOLOv8-Based Visual Conformity Detection Using Raspberry Pi" Algorithms 18, no. 8: 510. https://doi.org/10.3390/a18080510

APA Style: Okano, M. T., Lopes, W. A. C., Ruggero, S. M., Vendrametto, O., & Fernandes, J. C. L. (2025). Edge AI for Industrial Visual Inspection: YOLOv8-Based Visual Conformity Detection Using Raspberry Pi. Algorithms, 18(8), 510. https://doi.org/10.3390/a18080510