4.1. Beta Distribution Assumptions

The theory of binary subjective logic is well established [5]. The two mutually exclusive and exhaustive states represent a Bernoulli distribution, and the conjugate prior is a beta distribution. However, the ML methods applied as ensembles are not guaranteed to produce a set of predicted probabilities of class membership that fit a beta distribution. The fit to a beta distribution was tested with the Kolmogorov–Smirnov (K-S) test, whose statistic is the K-S distance, $D$ [43]. The test statistic compares the empirical cumulative probability distribution to the cumulative probabilities for the reference beta distribution. The reference beta distribution is based on the fitted $\alpha$ and $\beta$ shape parameters for each validation sample. The null hypothesis is that the empirical distribution was drawn from the reference distribution. If the measured statistic is greater than the critical value, the null hypothesis is rejected. The critical value is calculated as described in [43].
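The fit-and-test step described above can be sketched in Python, assuming SciPy is available. The simulated array `ensemble_probs` is a hypothetical stand-in for the 100 ensemble predictions of one validation sample, and all names are illustrative rather than taken from the study. The sketch reports SciPy's p-value rather than the critical value from [43].

```python
import numpy as np
from scipy import stats

# Hypothetical stand-in for the 100 ensemble predictions of P(class x)
# for a single validation sample (simulated here, not study data).
rng = np.random.default_rng(0)
ensemble_probs = rng.beta(5.0, 2.0, size=100)

# Fit the two shape parameters; loc and scale are fixed so the support is (0, 1).
alpha_hat, beta_hat, _, _ = stats.beta.fit(ensemble_probs, floc=0, fscale=1)

# K-S distance D between the empirical CDF and the fitted beta CDF.
ks = stats.kstest(ensemble_probs, "beta", args=(alpha_hat, beta_hat))
print(f"alpha={alpha_hat:.2f}, beta={beta_hat:.2f}, "
      f"D={ks.statistic:.3f}, p={ks.pvalue:.3f}")
```

Because the reference parameters are estimated from the same sample that is tested, the nominal p-value printed here is only approximate.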

Table 2 gives the results for the K-S test on the beta distribution fitting data for LDA, RF and SVM base models. The “Samples” column gives the number of in silico samples used to train each of the 100 ensemble members. The “Mean $D$” column gives the average value of $D$ calculated for the 1117 validation samples. The “Non-Beta Fits” and “% Non-Beta Fits” columns give the number and percentage, respectively, of the 1117 samples where the predicted probabilities of class $x$ membership were statistically different from a beta distribution at the

significance level. Increasing the training set size for the LDA models improved the fraction of validation samples that fit a beta distribution. The RF ML models showed the opposite trend, with an increasing number of validation samples’ predicted class membership probabilities not conforming to a beta distribution. This trend is not understood; nonetheless, the percentage of non-beta distributions remained below

up to a training set size of 2000. However, as the size of the RF training set increased, the median uncertainty showed statistically significant improvement, and the ROC AUC also exhibited statistically significant improvement up to a training set size of 60,000. These trends are discussed further in Section 4.2, Table 3 and Table 4. The decreasing median uncertainty for the RF models indicates a narrowing of the fitted beta distribution, which results from increased similarity among the predicted probabilities from all members of the ensemble. The SVM ML models gave the poorest performance regarding the beta distribution fit. Further analysis of the observed trend for the RF base models is beyond the scope of this work and is left for future study.

4.2. Training Data Set Size Effects

The results from varying the total number of samples in the training data set are shown in Table 3 and Table 4. The total number of samples (first column) in each case consists of an equal number of samples containing ILR (class $x$) and samples containing no ILR (class $\bar{x}$). Data sets with equal representation from classes $x$ and $\bar{x}$ are balanced. The number of base models in each ensemble was 100. The results shown in the “Bimodal” column in Table 3 reflect the number of the 1117 validation samples that produced a set of posterior probabilities that were best fitted by a beta distribution with both $\alpha$ and $\beta$ less than 1, which corresponds to a bimodal distribution. In the LDA model, training data sets composed of 2000 randomly chosen samples were required to eliminate the bimodal fits. When the beta distribution fit parameters for a single validation sample both fell below a value of 1, the model was considered as showing significant uncertainty. In those cases, each fitting parameter was assigned a value of 1, which results in an uncertainty of 1 and belief and disbelief each equal to 0. In contrast, even the minimum training data set sample size (50 $x$ and 50 $\bar{x}$) did not produce bimodal posterior probability distributions from the ensemble of RF and SVM models. This is attributed to the greater complexity of these models. The RF models each contained 500 trees and optimized the mtry parameter. The SVM models produce a complex support vector, with the tuning parameter `sigma` held constant at a value of 0.1325 and the tuning parameter `C` held constant at a value of 2.
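The handling of bimodal fits can be illustrated with a short Python sketch. It assumes the standard subjective-logic mapping between a beta distribution and a binomial opinion, with a non-informative prior weight of 2 and a base rate of 0.5; this section does not restate the mapping, so both values, and the names `Opinion` and `beta_to_opinion`, are assumptions made for illustration. Under this mapping, resetting both parameters to 1 reproduces the outcome stated above (uncertainty of 1, belief and disbelief of 0).

```python
from dataclasses import dataclass


@dataclass
class Opinion:
    belief: float
    disbelief: float
    uncertainty: float
    base_rate: float


def beta_to_opinion(alpha, beta, base_rate=0.5, prior_weight=2.0):
    """Map fitted beta shape parameters to a binomial opinion (assumed mapping)."""
    # Rule from the text: a bimodal fit (both parameters below 1) is reset to
    # alpha = beta = 1, i.e. the uniform distribution.
    if alpha < 1.0 and beta < 1.0:
        alpha, beta = 1.0, 1.0
    r = alpha - base_rate * prior_weight          # evidence for class x
    s = beta - (1.0 - base_rate) * prior_weight   # evidence against class x
    if r < 0.0 or s < 0.0:
        # The case of a single small parameter is not discussed in the text.
        raise ValueError("negative evidence; case not handled in this sketch")
    total = r + s + prior_weight
    return Opinion(r / total, s / total, prior_weight / total, base_rate)


bimodal = beta_to_opinion(0.4, 0.7)   # reset to the vacuous opinion
confident = beta_to_opinion(9.0, 1.0)
print(bimodal, confident)
```

With these assumed defaults, narrower fitted distributions (larger $\alpha + \beta$) give smaller uncertainty, which is the behavior the text attributes to larger training sets.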

The “Median” uncertainty column in Table 3 provides the median uncertainty value obtained for the 1117 validation samples. The “Max.” uncertainty column gives the maximum uncertainty from the validation opinions. The Wilcoxon rank sum test was applied to each pair of successive sample sizes to determine whether the median uncertainty decreased significantly as the number of training samples increased. The Wilcoxon $p$-value is given in the last column of Table 3. The $p$-values were very small and indicate that the decreases in median uncertainty were statistically significant. Shapiro–Wilk normality tests showed that, in every case, the uncertainty values were not normally distributed. The Wilcoxon rank sum test is non-parametric and does not rely on an assumption of normality.
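A minimal sketch of this comparison in Python, assuming SciPy is available. The two arrays are simulated, right-skewed stand-ins for the uncertainty values at two successive training sizes, not study data. The Wilcoxon rank sum test is run through `scipy.stats.mannwhitneyu`, which is the equivalent Mann–Whitney U formulation; the one-sided alternative is an assumption about how the test was set up.

```python
import numpy as np
from scipy import stats

rng = np.random.default_rng(1)
# Simulated uncertainty values for 200 validation samples at two training sizes.
u_small = rng.beta(2.0, 20.0, size=200)   # smaller training set
u_large = rng.beta(2.0, 40.0, size=200)   # larger training set

# Shapiro-Wilk: a small p-value argues against normality of the values.
_, shapiro_p = stats.shapiro(u_small)

# One-sided rank sum test: are the values from the smaller training set
# stochastically larger than those from the larger training set?
u_stat, p_value = stats.mannwhitneyu(u_small, u_large, alternative="greater")
print(f"Shapiro-Wilk p={shapiro_p:.3g}, rank sum p={p_value:.3g}")
```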

The LDA results show a maximum of total uncertainty (an uncertainty of 1) for some validation samples predicted with models developed with training data sets of 1000 or fewer samples. The maximum uncertainty of the LDA model ensemble falls to a very low value of 5.60

at a training data set size of 60,000 samples. The training samples were bootstrapped to ensure that the 100 training sets were not identical. The very low uncertainty for the LDA base model is attributed to the in silico data creation method [7], which mixes IL into SUB at varying contributions. The covariances for $x$ and $\bar{x}$ are nearly identical, which is a requirement for the LDA model; however, the training data set size must be large enough to sufficiently represent the validation data. The low uncertainty of the LDA base model does not necessarily mean that the model discriminates well between the $x$ and $\bar{x}$ classes.

By comparison, the maximum uncertainty for the RF model starts at 3.52 for the smallest training data sets and decreases to 1.20 for the largest training size. The RF is a much more sophisticated ML model than LDA; however, the slightly larger maximum uncertainty, in comparison to that of the LDA model, indicates that the RF ML models are giving a slightly broader distribution of the posterior probabilities of class $x$ membership. The same trend can be seen in the median uncertainty values for the RF and LDA models.

The SVM models gave larger median and maximum values than either the LDA or RF methods. This is attributed to the complexity of the support vectors for each ensemble member, which results in a larger range of posterior probabilities of class x membership for each validation sample predicted by each of the 100 ensemble members.

The last three columns of Table 4 directly address the class separation performance on the fire debris validation data by the three ML models as a function of training data set size. The $\mathrm{AUC}_{s,m,w}$ is the area under the ROC curve generated from all validation samples. The subscripts on AUC indicate the relative contribution (strong, moderate and weak) of the ILR in the fire debris validation samples. The ratio was calculated from the most intense chromatographic peaks corresponding to IL and SUB contributions. Ratios of IL/SUB correspond to

,

and

. The AUC is equivalent to the probability that a randomly selected $x$ sample will have a greater score than a randomly selected $\bar{x}$ sample. A higher AUC corresponds to better class separation.
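The probabilistic reading of the AUC can be checked directly by counting pairs. The Python sketch below uses made-up scores and a hypothetical function name, `auc_by_pairs`; counting ties as one half is the usual convention and is assumed here.

```python
def auc_by_pairs(pos_scores, neg_scores):
    """Fraction of (class x, complementary class) pairs ranked correctly."""
    wins = 0.0
    for p in pos_scores:
        for n in neg_scores:
            if p > n:
                wins += 1.0      # class x sample scores higher
            elif p == n:
                wins += 0.5      # ties count as half (assumed convention)
    return wins / (len(pos_scores) * len(neg_scores))


# Illustrative scores only: three class x samples, three complementary samples.
auc = auc_by_pairs([0.9, 0.8, 0.4], [0.7, 0.3, 0.2])
print(round(auc, 3))
```

Here 8 of the 9 possible pairs are ordered correctly, so the pairwise AUC is 8/9.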

In Table 4, the “95% CI” column gives the confidence interval for the AUC and the “$p$-value” column gives the $p$-value for the two-sided DeLong test. The LDA ROC curves did not improve significantly for models exceeding 200 training samples. The RF ROC curves did not improve significantly for models exceeding 20,000 training samples. The SVM ROC curves continued to improve significantly for models up to 20,000 training samples. The SVM ML was not applied to models exceeding 20,000 training samples due to limited computational facilities.

Table 5 shows the change in ROC AUC as the size of the training set increased and the ratio of IL/SUB was changed. For all three ML models, at all training data set sizes, the AUC is seen to increase as the IL/SUB ratio incorporated into the model goes from all samples in the second column from the left (i.e., $\mathrm{AUC}_{s,m,w}$) to only the high IL/SUB ratio (i.e., $\mathrm{AUC}_{s}$) in the rightmost column. The standard error for each AUC is given in parentheses. The increase in AUC at each training sample size, proceeding from $\mathrm{AUC}_{s,m,w}$ to $\mathrm{AUC}_{s,m}$, and from $\mathrm{AUC}_{s,m}$ to $\mathrm{AUC}_{s}$, is statistically significant based on a DeLong test, with each $p$ < 2.2

. This result demonstrates that all three ML methods are producing better class separation at strong ILR contribution. Notably, at even the smallest training size, the RF model produces better class separation than the LDA model at the largest training size. At the largest training size, the RF model produces an $\mathrm{AUC}_{s,m,w}$ of 0.849, and the $\mathrm{AUC}_{s}$ of 0.962 indicates very good class separation. The SVM ML model is producing a similar AUC to the RF ML model at a training size of 20,000.

All models are performing similarly to what would be expected from a human analyst (i.e., better ILR detection as the ILR relative contribution increases). The most significant differences in the models are the excessive uncertainty produced by LDA at very small training sizes, the higher overall uncertainty by SVM, and the long training times required by SVM. The optimal model studied in this research is the RF ensemble trained on 60,000 training samples.

4.3. Visualizing Opinions and Performance

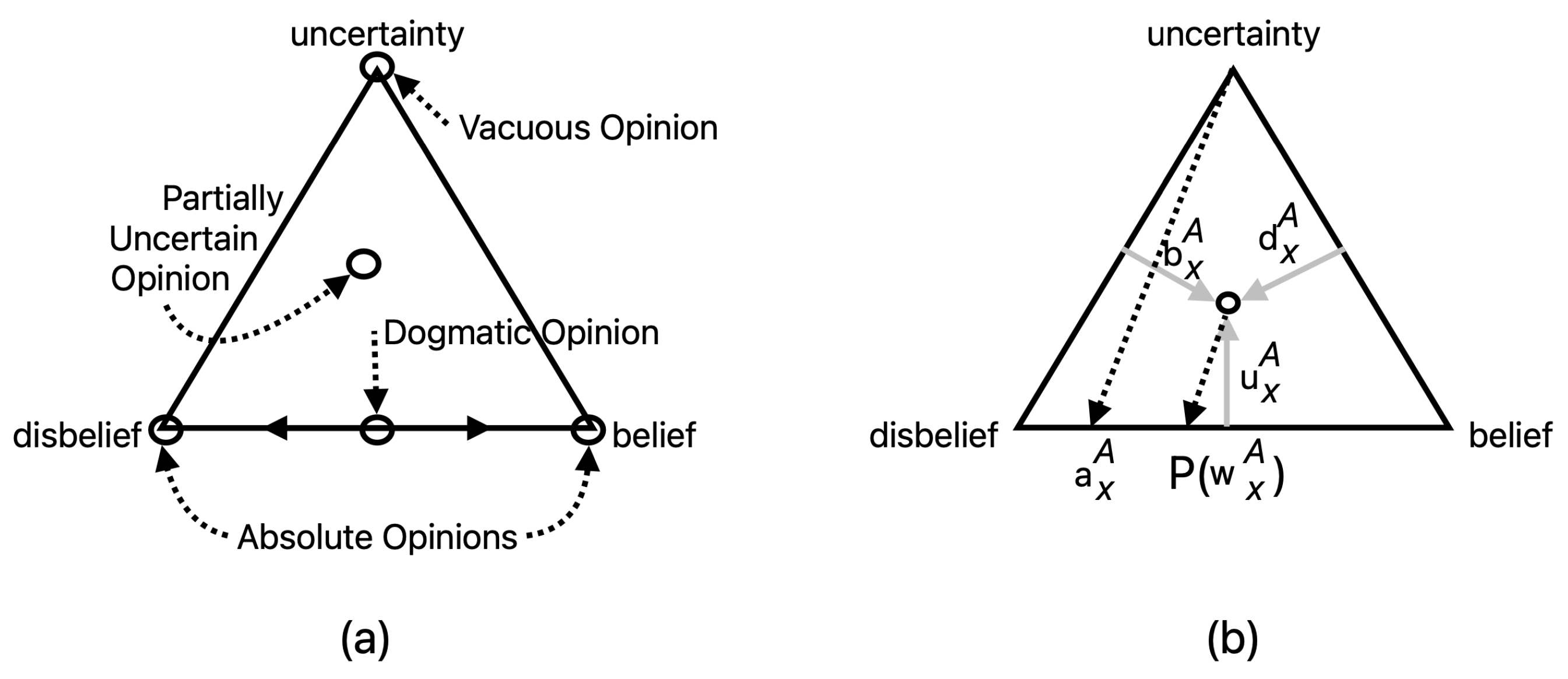

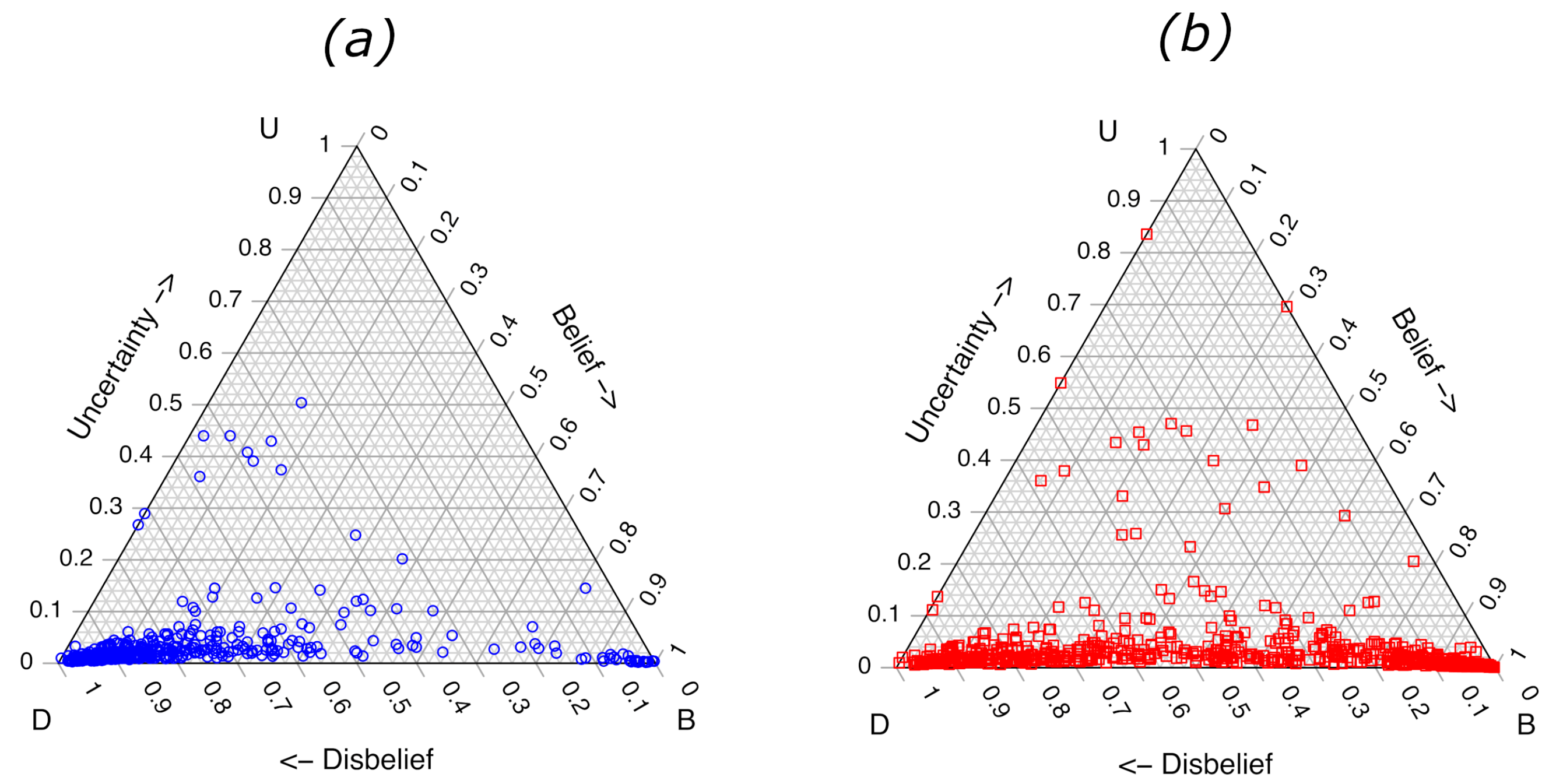

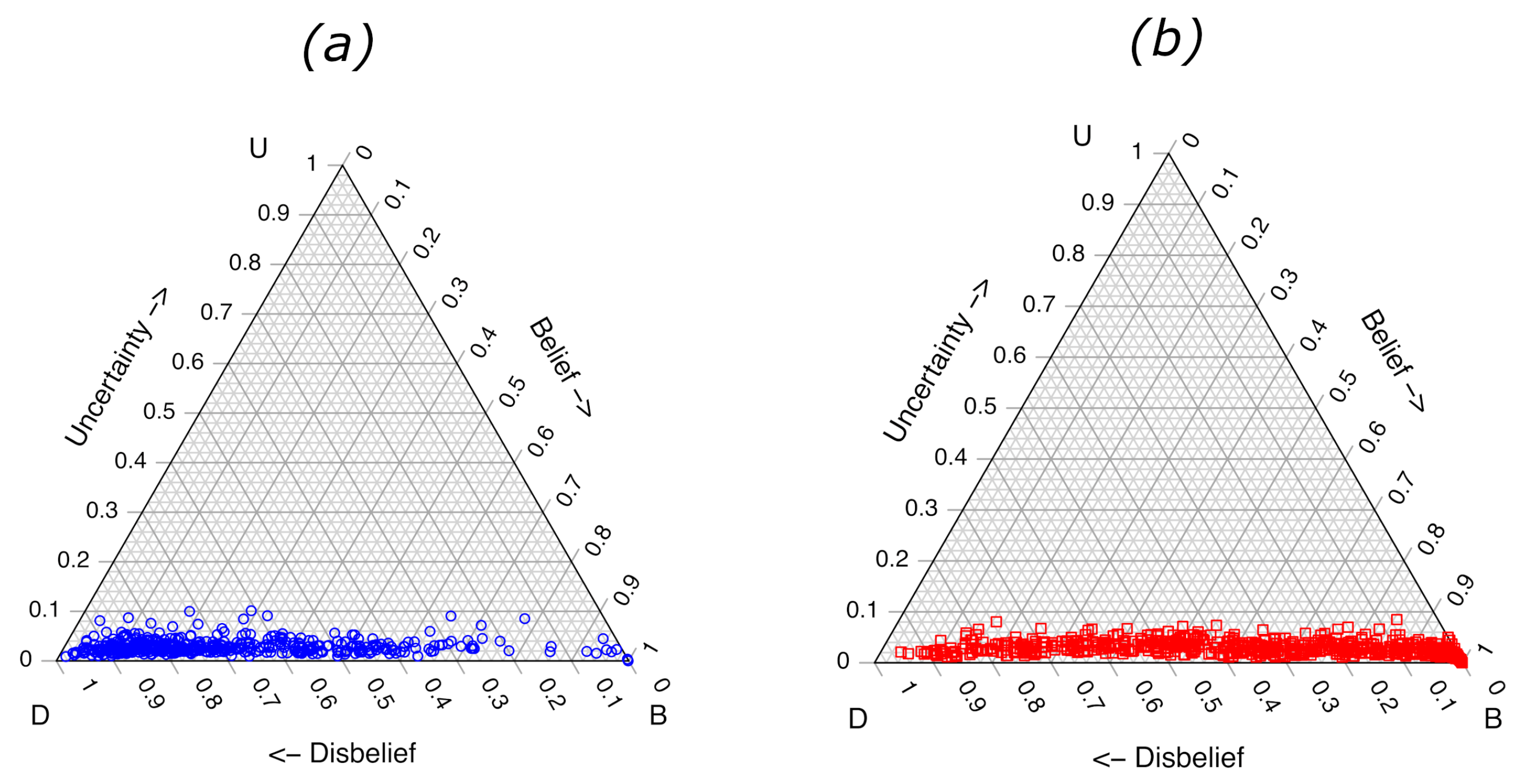

As shown in

Figure 1, the visualization of binary subjective opinions is possible in a ternary plot. The calculated

values for the validation samples, obtained from models trained on 1000 $x$ and 1000 $\bar{x}$ samples, are shown in Figure 2. Figure 2a shows the values for validation samples belonging to class $\bar{x}$, and Figure 2b shows the values for validation samples belonging to class $x$. The plots allow an immediate visual assessment of

for any sample. Other general trends are observed from the ternary plots in Figure 2. The uncertainty values for some of the validation samples belonging to class $x$ are larger than those for some of the class $\bar{x}$ samples. The three class $x$ samples with the highest uncertainty values all contained moderate or strong levels of the same aromatic solvent that had been weathered to 75% loss of volume, and they all contained “glue stick” as one of the substrate components. The samples correspond to reference numbers 694, 695 and 696 in the Fire Debris Database [24]. In this case, the identity and composition of the samples with the highest predicted uncertainty do not assist in understanding shortcomings in the LDA ML model, but the information could be helpful to the forensic science interpretation of model performance.

It is also evident from the plots that projecting each point straight down onto the bottom axis results in substantial overlap of the two classes. A quantitative analysis of the extent of class separation requires visualizing the values on an ROC graph.
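The straight-down projection can be made concrete with a small Python sketch. It assumes the common ternary layout for binomial opinions (disbelief vertex at the lower left, belief vertex at the lower right, uncertainty vertex at the top) and a base rate of 0.5, under which the horizontal coordinate of a point equals its projected probability. The layout, the base rate and the function names are assumptions for illustration and are not taken from the figures.

```python
import math


def ternary_xy(belief, disbelief, uncertainty):
    """Cartesian coordinates of an opinion in an equilateral ternary plot.

    Assumed vertices: disbelief (0, 0), belief (1, 0), uncertainty (0.5, sqrt(3)/2).
    """
    if abs(belief + disbelief + uncertainty - 1.0) > 1e-9:
        raise ValueError("belief, disbelief and uncertainty must sum to 1")
    x = belief + 0.5 * uncertainty
    y = uncertainty * math.sqrt(3.0) / 2.0
    return x, y


def projected_probability(belief, uncertainty, base_rate=0.5):
    """Projected probability of class x (standard subjective-logic form)."""
    return belief + base_rate * uncertainty


x, y = ternary_xy(0.6, 0.3, 0.1)
p = projected_probability(0.6, 0.1)
print(x, y, p)   # x and p coincide when the base rate is 0.5
```

Two opinions with very different uncertainty can therefore land on the same point of the bottom axis, which is why overlap of the projected values is better judged on an ROC graph.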

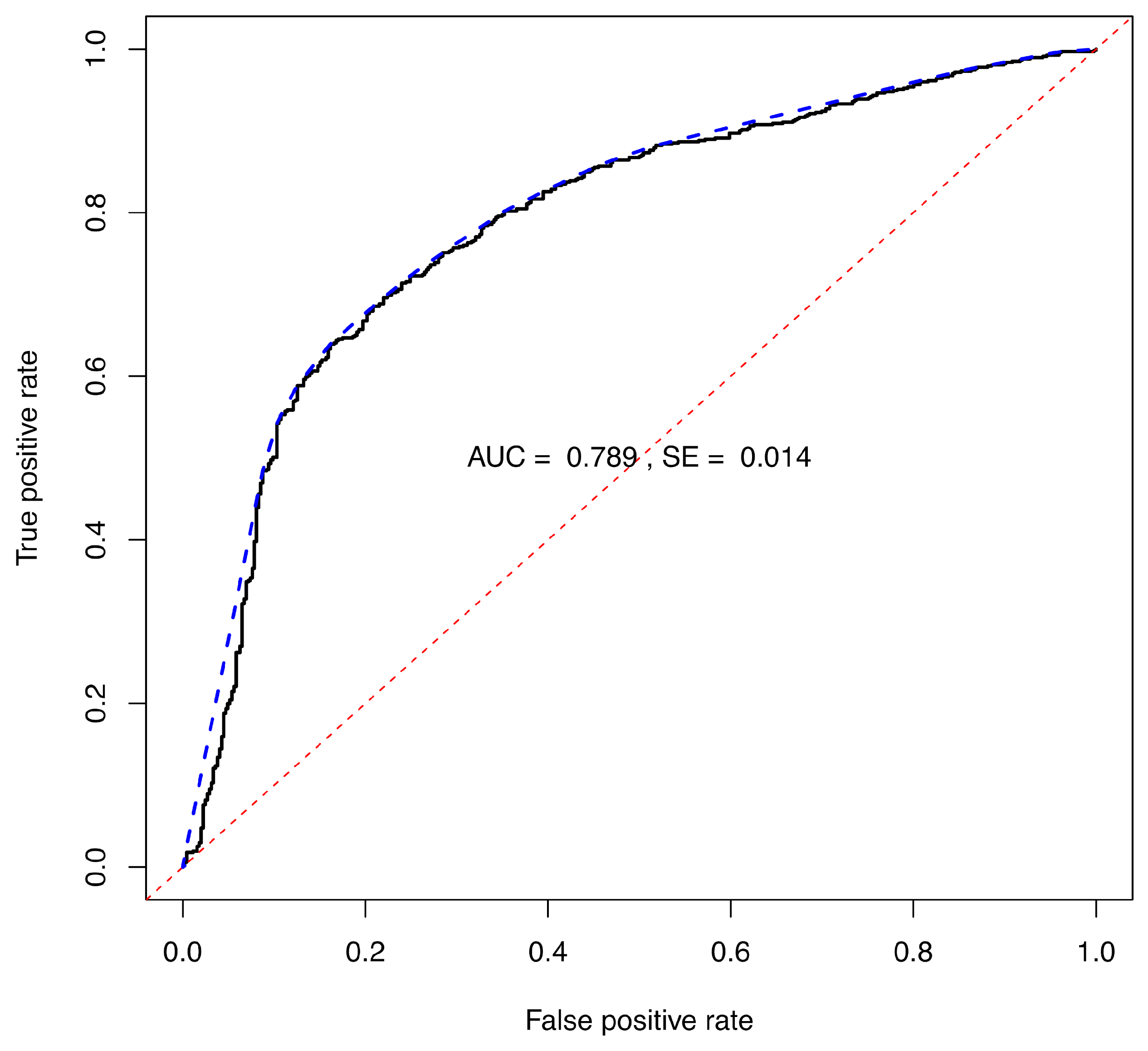

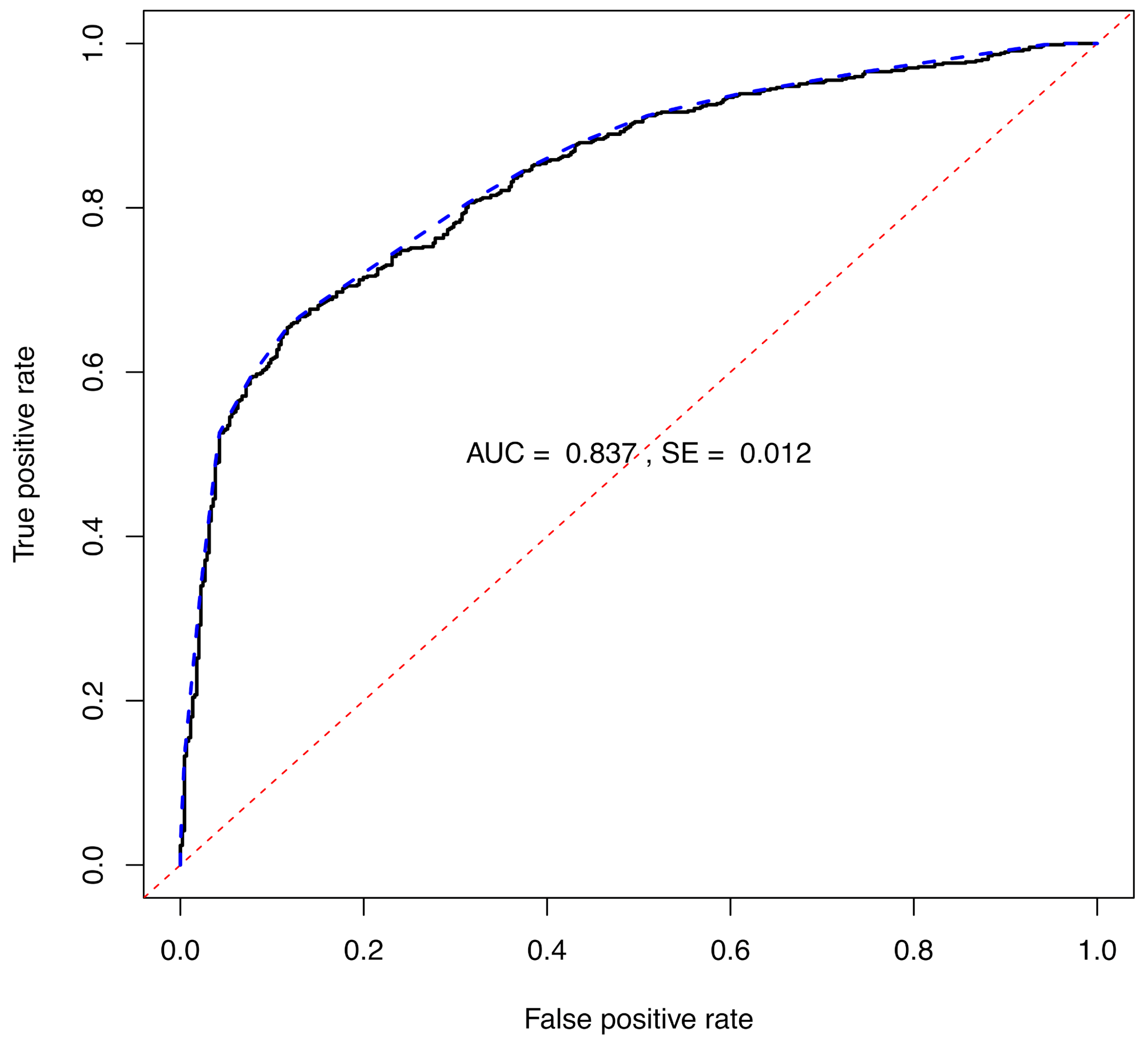

The

values for the binary opinions were used to calculate scores to generate an ROC curve. The ROC curve resulting from the LDA ensemble trained on 2000 samples, including strong, moderate and weak IL contributions, is shown in Figure 3. The corresponding $\mathrm{AUC}_{s,m,w}$ is 0.789 (see Table 4).

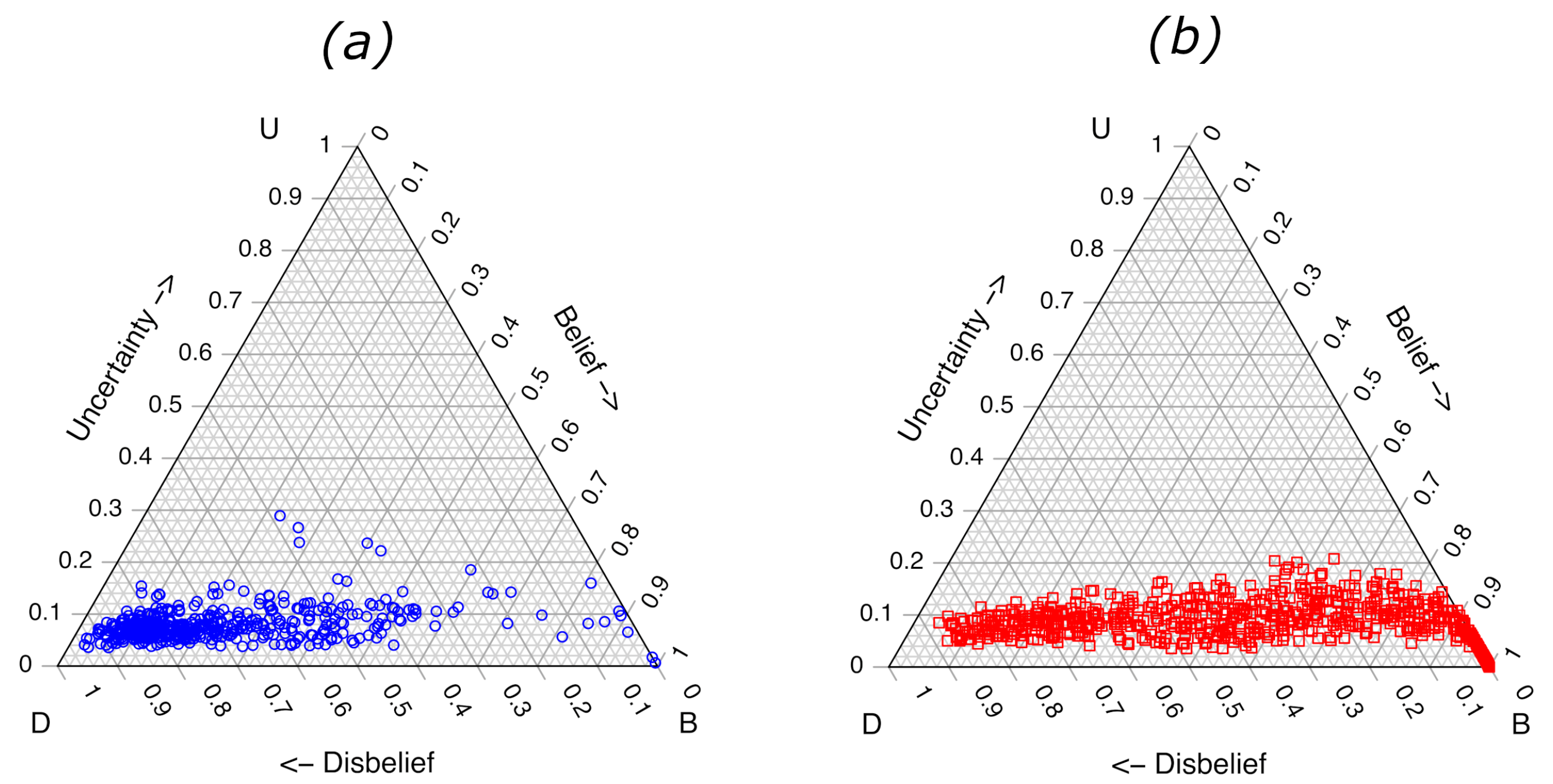

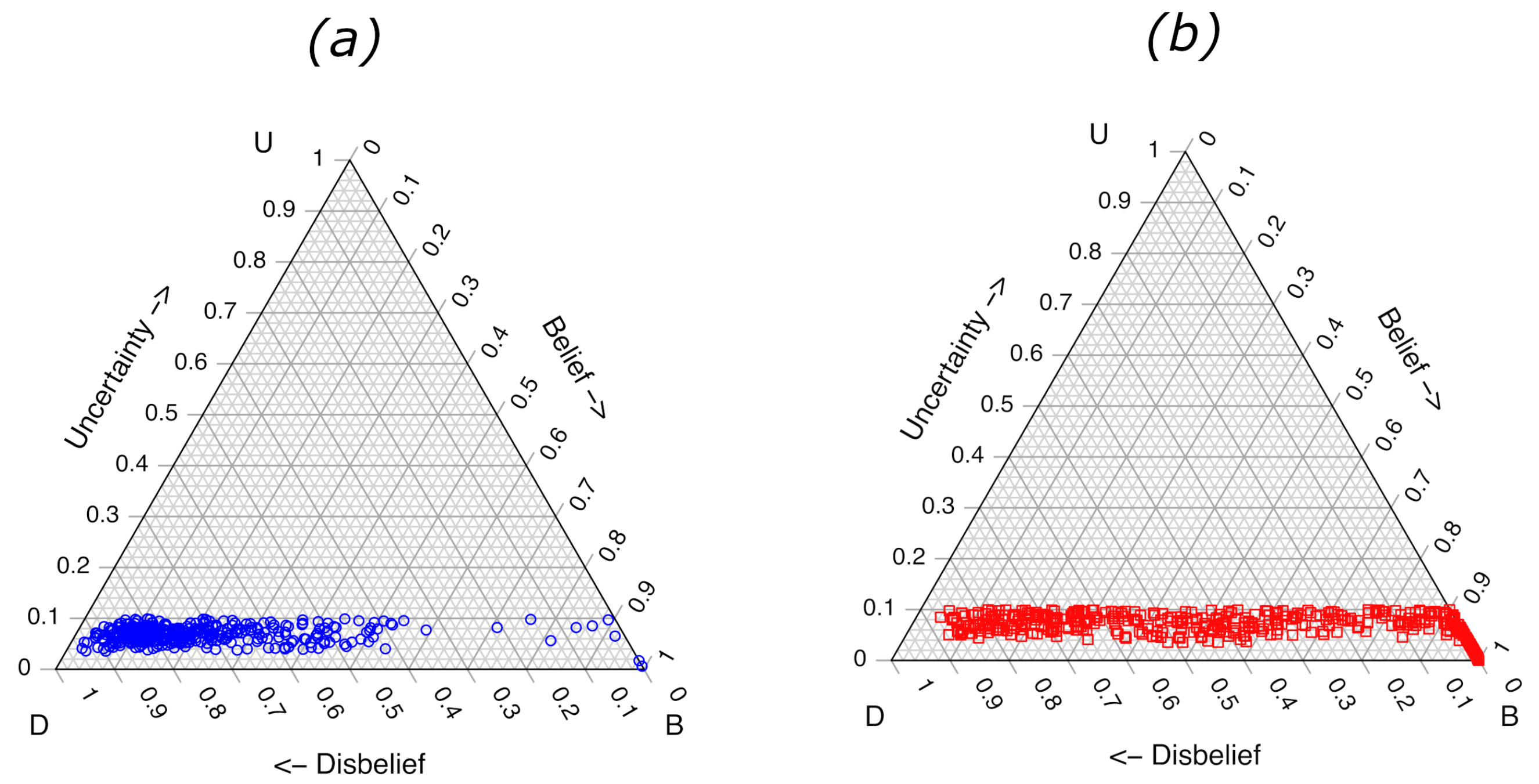

The ternary plots and ROC curve for the RF model trained with 2000 samples in each of the 100 base models of the ensemble are shown in Figure 4 and Figure 5, respectively. The ternary plots demonstrate the lower uncertainties arising from the RF model, with the highest uncertainty values of approximately 0.20–0.30 calculated for several of the class $\bar{x}$ samples. The largest uncertainties for the $x$ samples were approximately 0.20. Again, the

values are obtained by projecting each point straight down to the base of the ternary plot (

). The class separation of the LLR scores calculated from the

values, Equation (9), produces an ROC plot, as shown in Figure 5, with an AUC of 0.837 (see also Table 4).

Increasing the number of samples in each training set leads to a further decrease in the median and maximum observed uncertainty, as shown in Table 3. The maximum observed uncertainty plateaus around a value of 0.1. The ternary plots for the models trained on 20,000 in silico samples for each member of the ensemble are shown in Figure 6. Although increasing the training sample size decreases the maximum observed uncertainty, the ROC AUC only increases to 0.846, as shown in Table 4. Similar results are observed for the LDA method.

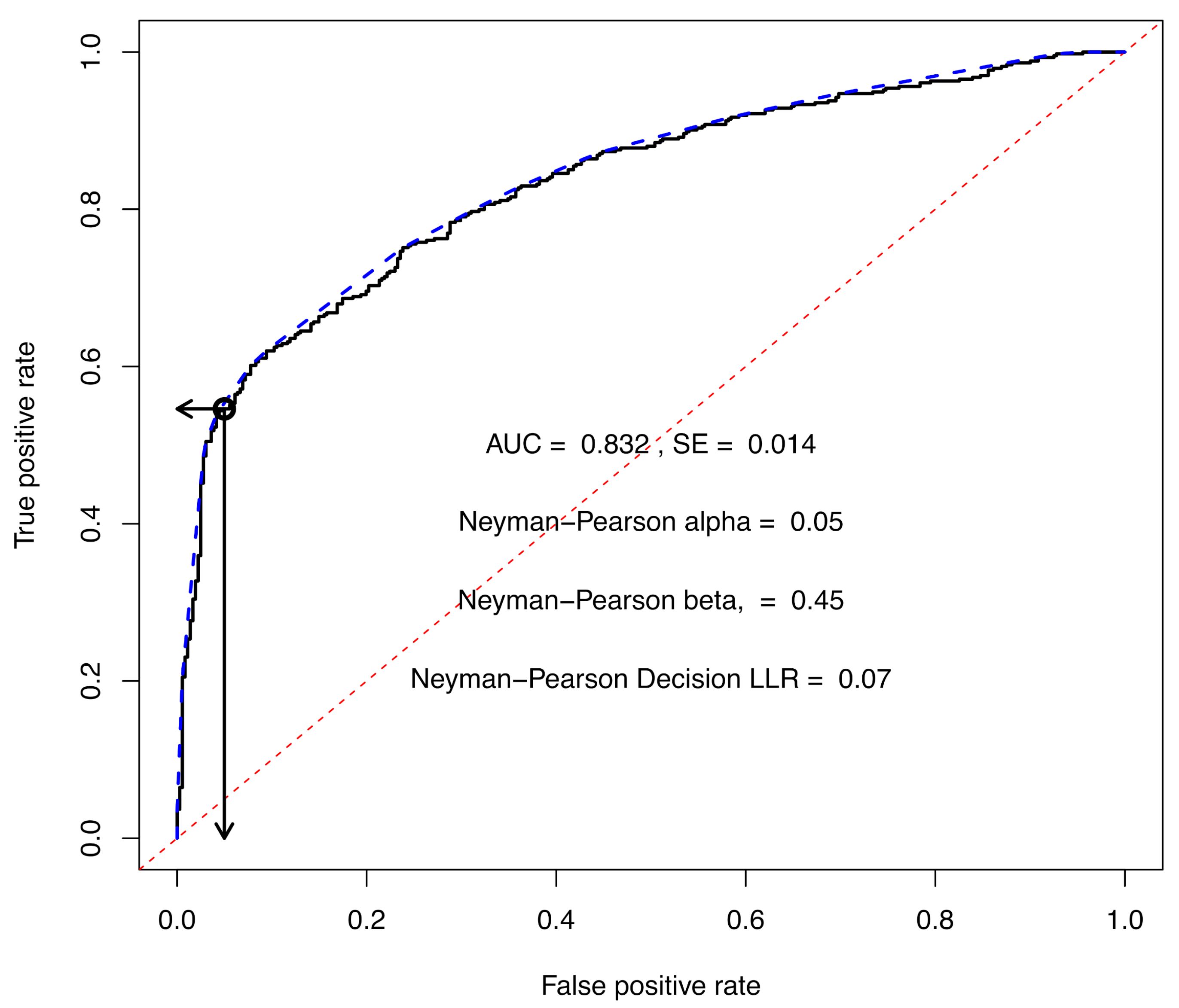

4.4. Extending the Opinions to Classification Decisions and Evidentiary Value

This approach is demonstrated for the RF base method where each ensemble member is trained on 2000 in silico samples. An uncertainty threshold of 0.10 was set for this example. The uncertainty threshold is subjective and should be adjusted based on the amount of uncertainty the application can tolerate. By setting a threshold of 0.10, 322 of the 1117 validation samples are determined to be ineligible for a decision. The opinions of the 795 validation samples that are decision eligible are shown in Figure 7. The ROC curve that was generated from the decision-eligible validation samples is shown in Figure 8. The ROC AUC for the curve in Figure 8 has decreased slightly from 0.837 (see Table 4) to 0.832. The LLR decision threshold of 0.07 was obtained by setting the maximum false positive rate at 0.05, which corresponds to the Neyman–Pearson $\alpha$ value. Projecting vertically from a false positive rate of 0.05, the score at the point of intersection with the ROC curve is the decision threshold LLR of 0.07. The decision LLR corresponds to a projected probability of 0.54 in the ternary plots. Projecting from the decision threshold horizontally to the true positive rate axis gives an intersection of 0.55, which is equal to the quantity $1 - \beta$, where $\beta$ is the Neyman–Pearson $\beta$ value of 0.45.
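The threshold selection described above can be sketched in Python as a search for the lowest score threshold whose false positive rate does not exceed the chosen maximum. The scores and labels below are made up, the function name is hypothetical, and the rule that a sample is assigned to class $x$ when its score is greater than or equal to the threshold is an assumption of this sketch.

```python
def neyman_pearson_threshold(scores, labels, max_fpr=0.05):
    """Return (threshold, fpr, tpr) for the lowest threshold with fpr <= max_fpr.

    labels: 1 for class x (ILR present), 0 for the complementary class.
    A sample is called class x when its score is >= threshold (assumed rule).
    """
    pos = [s for s, y in zip(scores, labels) if y == 1]
    neg = [s for s, y in zip(scores, labels) if y == 0]
    best = None
    # Lowering the threshold can only raise the false positive rate, so the
    # search stops at the first threshold that exceeds the allowed rate.
    for t in sorted(set(scores), reverse=True):
        fpr = sum(s >= t for s in neg) / len(neg)
        if fpr > max_fpr:
            break
        tpr = sum(s >= t for s in pos) / len(pos)
        best = (t, fpr, tpr)
    return best


# Illustrative data: 20 complementary-class scores and 4 class x scores.
neg_scores = list(range(-20, 0))
pos_scores = [-1.5, 2, 5, 8]
scores = neg_scores + pos_scores
labels = [0] * len(neg_scores) + [1] * len(pos_scores)

threshold, fpr, tpr = neyman_pearson_threshold(scores, labels, max_fpr=0.05)
print(threshold, fpr, tpr)
```

The returned true positive rate plays the role of $1 - \beta$ at the chosen $\alpha$.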

The significance of

Figure 7 and

Figure 8 is that a previously unseen sample with a calculated

from the same model may be classified as belonging to class $x$ or class $\bar{x}$ with known true and false positive rates. Samples with

should be classified into class $x$ only if a decision is required. Otherwise, the analyst should report an opinion, which may include the strength of the evidence expressed as a likelihood ratio.

Although a 5% false positive rate is often employed in hypothesis testing, it can be argued that a 5% false positive rate in forensic science could call into question the admissibility of the evidence. Obtaining a validated false positive rate in fire debris analysis is difficult due in part to the challenge of obtaining casework-realistic ground truth samples. Sampat et al. reported an 89% true positive rate for IL detection and a 7% false positive rate for tests limited to three classes of IL while using two-dimensional gas chromatography with time-of-flight mass spectrometry [44]. When setting a decision threshold on an ROC curve, a lower false positive rate will correspond to a lower true positive rate. This trade-off becomes less problematic as the ROC AUC increases.

The calibrated likelihood ratio for any point can be determined from the slope of the covering segment of the ROC convex hull (CH). The ROC CH in Figure 8 comprises 20 segments, as shown in Table 6. For example, if a sample is calculated to have a

, it would meet the eligibility requirement for proceeding to a decision if a decision were required. Further, if the sample had a

, the score calculated from Equation (9) would be 0.421. The calculated score is greater than the Neyman–Pearson decision score of 0.07, as shown in Figure 8, and the sample would be classified as belonging to class $x$. The calculated score would fall between points 3 and 4 in Table 6 and would be covered by segment 3. The calibrated LR for the sample would be 12.715 (

). This procedure can be followed for any sample

in this work that was calculated by the RF model trained on 2000 balanced in silico samples for each of the 100 ensemble RF base-functions.
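The convex-hull procedure can be sketched in Python as follows. The sketch builds the ROC vertices from scores and labels, keeps the upper convex hull, and returns the slope of the hull segment that covers a given score. The data are made up, the function names are hypothetical, and the convention that a segment covers scores at or above the threshold of its right-hand vertex is an assumption; the values in Table 6 are not reproduced here.

```python
import math


def roc_points(scores, labels):
    """ROC vertices as (fpr, tpr, threshold), from the strictest threshold down."""
    n_pos = sum(labels)
    n_neg = len(labels) - n_pos
    points = [(0.0, 0.0, math.inf)]
    for t in sorted(set(scores), reverse=True):
        tp = sum(1 for s, y in zip(scores, labels) if y == 1 and s >= t)
        fp = sum(1 for s, y in zip(scores, labels) if y == 0 and s >= t)
        points.append((fp / n_neg, tp / n_pos, t))
    return points


def _cross(o, a, b):
    return (a[0] - o[0]) * (b[1] - o[1]) - (a[1] - o[1]) * (b[0] - o[0])


def roc_convex_hull(points):
    """Upper convex hull of ROC vertices ordered by increasing fpr."""
    hull = []
    for p in points:
        # Drop the previous vertex while it lies on or below the new chord.
        while len(hull) >= 2 and _cross(hull[-2], hull[-1], p) >= 0:
            hull.pop()
        hull.append(p)
    return hull


def _slope(left, right):
    d_fpr = right[0] - left[0]
    return math.inf if d_fpr == 0 else (right[1] - left[1]) / d_fpr


def calibrated_lr(score, hull):
    """Slope of the hull segment covering the score (assumed covering rule)."""
    for left, right in zip(hull, hull[1:]):
        if score >= right[2]:
            return _slope(left, right)
    return _slope(hull[-2], hull[-1])  # below every observed score


# Illustrative data: label 1 is class x, label 0 is the complementary class.
scores = [0.9, 0.8, 0.6, 0.3, 0.7, 0.4, 0.2, 0.1]
labels = [1, 1, 1, 1, 0, 0, 0, 0]
hull = roc_convex_hull(roc_points(scores, labels))
print(len(hull) - 1, calibrated_lr(0.5, hull))
```

In this toy example the hull has three segments, and a score of 0.5 falls on the middle segment, whose slope of 1 corresponds to a calibrated LR of 1.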