Clinical Validation of a Computed Tomography Image-Based Machine Learning Model for Segmentation and Quantification of Shoulder Muscles

Abstract

1. Introduction

2. Materials and Methods

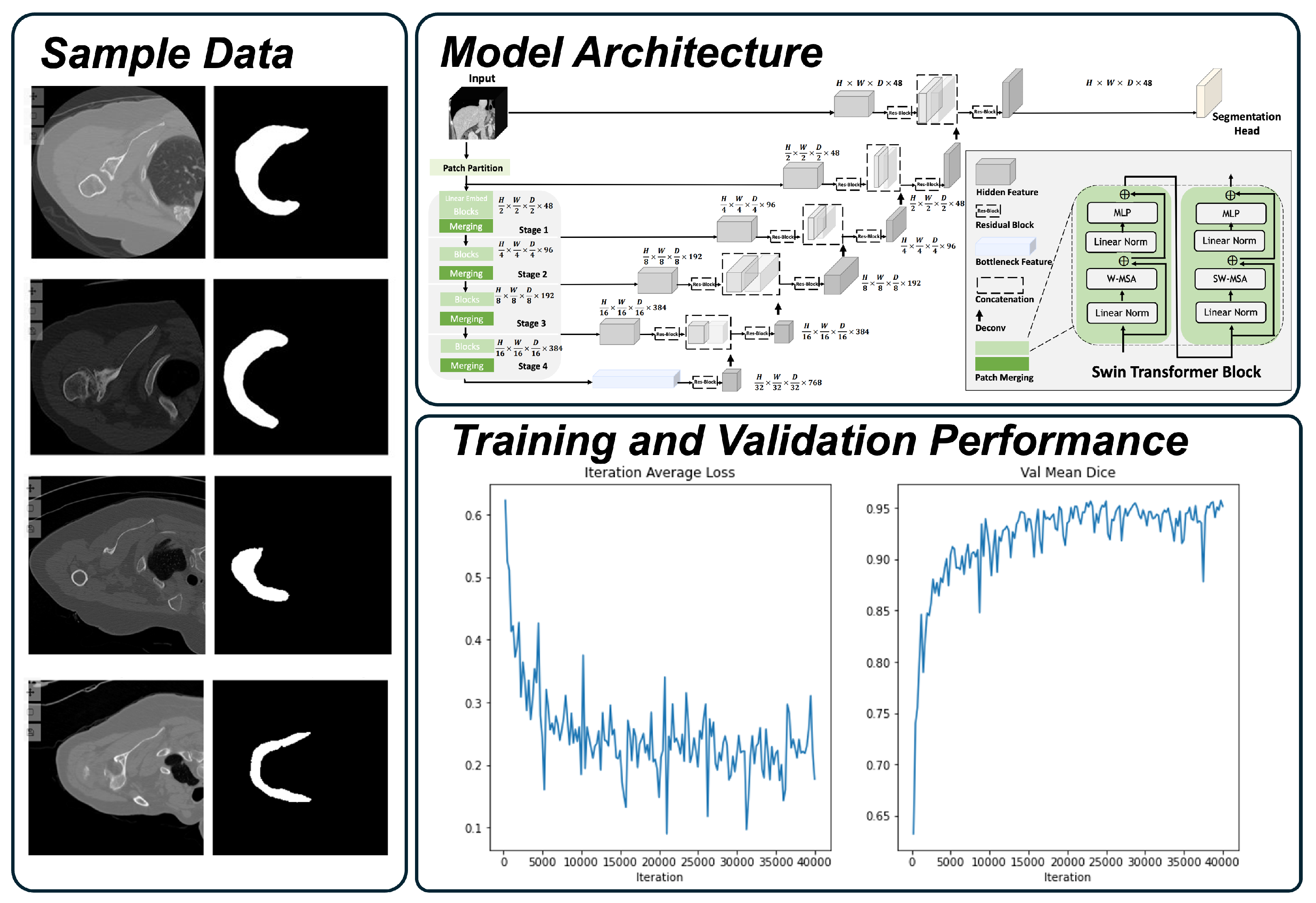

2.1. Deltoid Segmentation Model

2.2. Internal Development

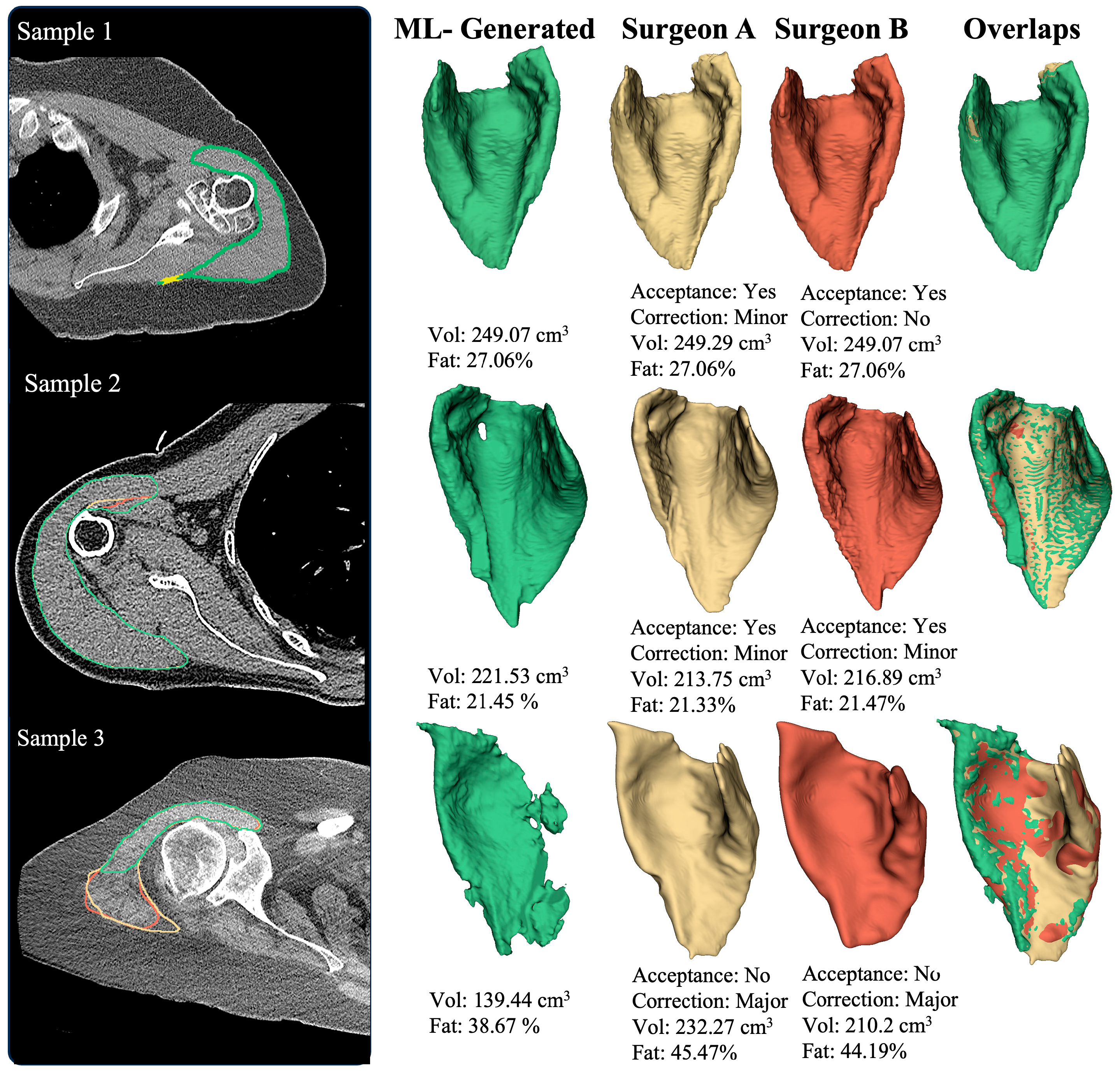

2.3. Expert Validation

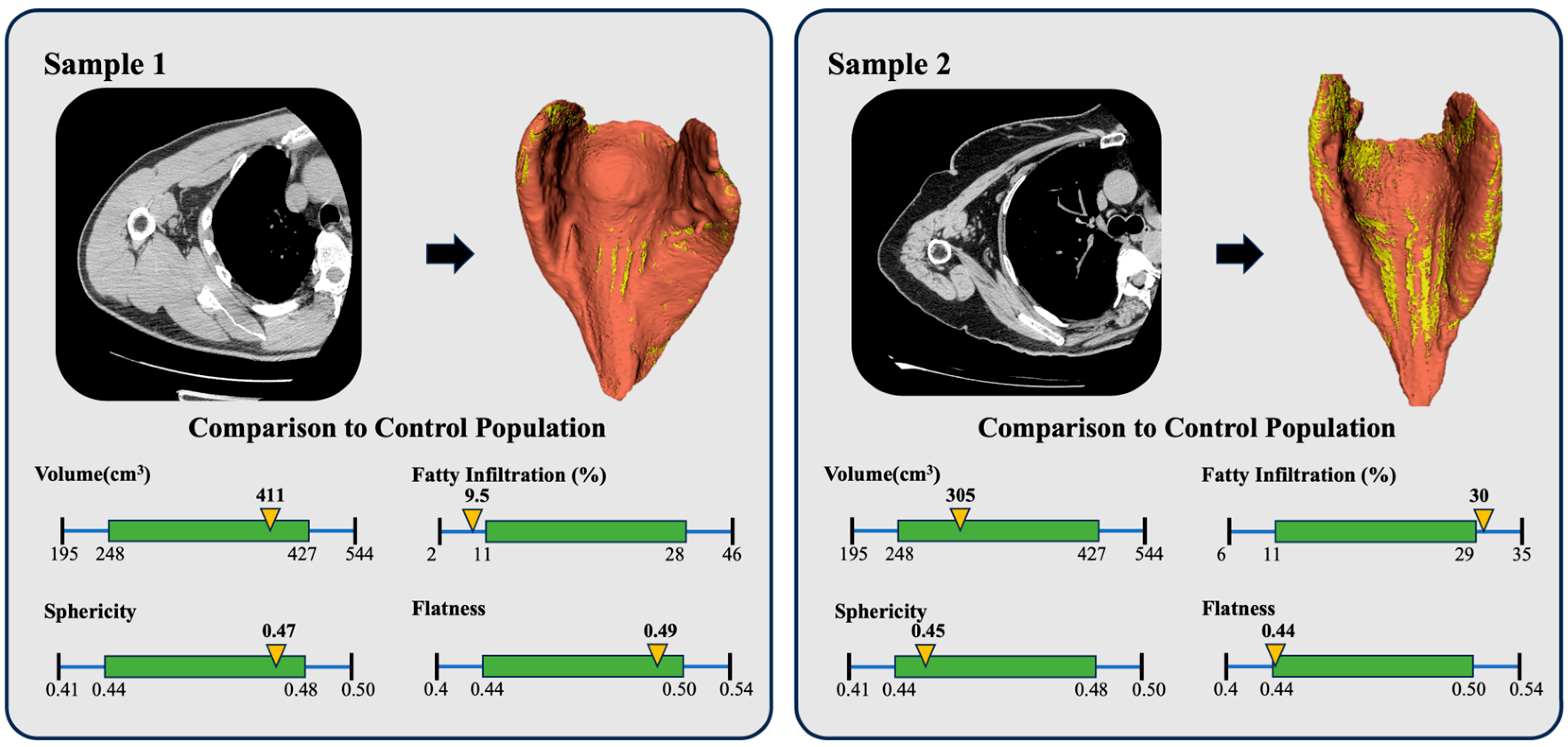

3. Results

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| aTSA | Anatomic total shoulder arthroplasty |
| rTSA | Reverse total shoulder arthroplasty |
| ML | Machine learning |
| CT | Computed tomography |
| OA | Osteoarthritis |
| RCA | Rotator cuff arthropathy |
| RCT | Rotator cuff tears |


| Considered Populations | |
|---|---|
| Patient Variable | |
| Gender | [M, F] |
| Age cohort | <60, 60–70, 70–80, >80 |
| Diagnosis | [OA, RCA, RCT] |
| Image Variable | |
| Kernels * | [Bone, Bone+, FC30, [I31s, 3], B60] |
| Manufacturer * | [GE, Siemens, Toshiba] |
| Exclusion Criteria | |
| Included in training process | None |
| Deltoid insertion cut off | None |
| Metal artifact around shoulder | None |
| Low image quality | None |
| Other diagnoses (fracture, ON, RA, PTA) ** | None |
| Revision (shoulder) | None |
| Image with pixel size | Smaller than 0.3 mm or larger than 1 mm |

| | Internal Development | External Validation |
|---|---|---|
| Patients | 97 (100%) | 32 (100%) |
| Male | 28 (29%) | 15 (47%) |
| Female | 69 (71%) | 17 (53%) |
| Age | | |
| <60 | 7 (7%) | 7 (22%) |
| ≥60 and <70 | 36 (37%) | 15 (47%) |
| ≥70 and <80 | 45 (46%) | 6 (19%) |
| ≥80 | 9 (9%) | 4 (13%) |
| Diagnosis | | |
| OA | 61 (63%) | 25 (78%) |
| RCA | 30 (31%) | 5 (16%) |
| RCT | 24 (25%) | 6 (19%) |
| Device | | |
| aTSA | 17 (18%) | 10 (31%) |
| rTSA | 80 (82%) | 22 (69%) |
| Kernel | | |
| Bone | 52 (54%) | 10 (31%) |
| BonePlus | 8 (8%) | 6 (19%) |
| FC30 | 16 (16%) | 7 (22%) |
| [‘I31s’, ‘3’] | 2 (2%) | 6 (19%) |
| B60s | 17 (18%) | 3 (9%) |
| Standard | 2 (2%) | |
| Scanner Manufacturer | | |
| Toshiba | 16 (16%) | 7 (22%) |
| Siemens | 19 (20%) | 9 (28%) |
| GE | 62 (64%) | 16 (50%) |

| | Surgeon A | Surgeon B | Surgeon C | Total |
|---|---|---|---|---|
| Number of cases | 21 | 20 | 21 | 31 |
| Accepted | 20 (95%) | 20 (100%) | 20 (95%) | 30 (97%) |
| No correction needed | 9 (42.9%) | 7 (35%) | 5 (23.8%) | 4 (13%) |
| Minor correction | 11 (52.4%) | 12 (60%) | 14 (66.7%) | 25 (81%) |
| Major correction | 1 (4.8%) | 1 (5%) | 2 (9.5%) | 2 (6%) |

| | Total | Dice Coefficient | Distance Map Error (%) | Correction Ratio (%) | Volume Diff (%) | FI Diff (%) |
|---|---|---|---|---|---|---|
| All Patients | 31 (100%) | 1.0 [0.97, 1.0] | 1.58 [0.0, 8.97] | 0.55 [0.0, 5.49] | 0.28 [0.0, 8.97] | 0.06 [0.0, 2.19] |
| Accepted | 30 (97%) | 1.0 [0.98, 1.0] | 1.54 [0.0, 7.24] | 0.5 [0.0, 3.66] | 0.22 [0.0, 3.32] | 0.06 [0.0, 1.42] |
| Rejected | 1 (3%) | 0.74 | 48.11 | 67.2 | 58.66 | 15.93 |
| Minor Correction | 25 (81%) | 1.0 [0.98, 1.0] | 2.38 [0.0, 6.38] | 0.88 [0.0, 3.55] | 0.49 [0.0, 2.64] | 0.08 [0.0, 1.38] |
| Major Correction | 2 (6%) | 0.86 [0.73, 0.98] | 26.92 [6.56, 49.93] | 32.74 [3.4, 71.17] | 27.34 [3.4, 64.2] | 8.23 [0.55, 17.09] |
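The Dice coefficient and percent volume difference in the table above compare the ML segmentation with the surgeon-corrected segmentation. This excerpt does not state the exact formulas used in the study, so the following is a minimal Python sketch under two assumptions: it uses the standard Dice definition, 2|A ∩ B| / (|A| + |B|), and it treats volume difference as the absolute difference in voxel count relative to the corrected (reference) mask. The function names are hypothetical. Both masks are assumed to be co-registered binary NumPy arrays on the same voxel grid.

```python
import numpy as np


def dice_coefficient(pred: np.ndarray, ref: np.ndarray) -> float:
    """Standard Dice similarity coefficient: 2|A ∩ B| / (|A| + |B|)."""
    pred = pred.astype(bool)
    ref = ref.astype(bool)
    denom = int(pred.sum()) + int(ref.sum())
    if denom == 0:
        # Both masks empty: conventionally treated as perfect agreement.
        return 1.0
    overlap = int(np.logical_and(pred, ref).sum())
    return 2.0 * overlap / denom


def volume_diff_percent(pred: np.ndarray, ref: np.ndarray) -> float:
    """Absolute volume difference as a percentage of the reference volume.

    Assumption: `ref` is the surgeon-corrected mask. With a uniform voxel
    size, the voxel volume cancels in the ratio, so voxel counts suffice.
    """
    v_pred = int(pred.astype(bool).sum())
    v_ref = int(ref.astype(bool).sum())
    if v_ref == 0:
        raise ValueError("reference mask is empty")
    return 100.0 * abs(v_pred - v_ref) / v_ref


if __name__ == "__main__":
    # Toy 3D masks: the "ML" mask misses one 6x6 slice of a 6x6x6 cube.
    ref = np.zeros((10, 10, 10), dtype=bool)
    ref[2:8, 2:8, 2:8] = True          # 216 voxels
    pred = ref.copy()
    pred[2, 2:8, 2:8] = False          # 180 voxels remain
    print(round(dice_coefficient(pred, ref), 3))     # 0.909
    print(round(volume_diff_percent(pred, ref), 2))  # 16.67
```

In the toy example, a Dice of about 0.91 corresponds to a volume difference of about 17%. This shows why an overlap metric and a volume metric are reported side by side.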

| Category / Variable | # | Dice Coefficient | Correction Ratio (%) | Distance-Map Error (%) | Volume Diff (%) | FI Diff (%) |
|---|---|---|---|---|---|---|
| All | 32 (100%) | 1.0 [0.97, 1.0] | 0.55 [0.0, 5.49] | 1.58 [0.0, 8.97] | 0.28 [0.0, 8.97] | 0.06 [0.0, 2.19] |
| Gender | | | | | | |
| Female | 17 (53%) | 1.0 [0.87, 1.0] | 0.4 [0.0, 30.76] | 1.38 [0.0, 25.43] | 0.16 [0.0, 24.76] | 0.06 [0.0, 7.18] |
| Male | 15 (47%) | 1.0 [0.98, 1.0] | 0.7 [0.0, 3.8] | 2.0 [0.0, 7.82] | 0.38 [0.0, 3.36] | 0.08 [0.0, 2.19] |
| Age | | | | | | |
| <60 | 7 (22%) | 1.0 [0.99, 1.0] | 0.86 [0.0, 2.5] | 2.26 [0.0, 5.35] | 0.8 [0.0, 2.05] | 0.08 [0.0, 1.66] |
| (60, 70] | 15 (47%) | 1.0 [0.98, 1.0] | 0.32 [0.0, 4.5] | 1.04 [0.0, 8.57] | 0.21 [0.0, 3.44] | 0.04 [0.0, 1.66] |
| (70, 80] | 6 (19%) | 1.0 [0.75, 1.0] | 0.4 [0.0, 68.9] | 1.33 [0.0, 48.89] | 0.4 [0.0, 61.84] | 0.08 [0.0, 16.43] |
| >80 | 4 (13%) | 1.0 [0.99, 1.0] | 1.13 [0.12, 2.11] | 2.66 [0.4, 4.0] | 0.61 [0.02, 1.95] | 0.07 [0.01, 0.71] |
| Diagnosis | | | | | | |
| OA | 25 (78%) | 1.0 [0.97, 1.0] | 0.88 [0.0, 5.95] | 2.38 [0.0, 9.01] | 0.76 [0.0, 3.74] | 0.07 [0.0, 2.92] |
| RCA | 5 (16%) | 1.0 [0.99, 1.0] | 0.26 [0.0, 2.07] | 0.94 [0.0, 5.86] | 0.04 [0.0, 0.83] | 0.03 [0.0, 0.94] |
| RCT | 6 (19%) | 1.0 [0.74, 1.0] | 0.0 [0.0, 67.77] | 0.0 [0.0, 48.37] | 0.0 [0.0, 59.45] | 0.0 [0.0, 16.1] |
| Device Type | | | | | | |
| rTSA | 22 (69%) | 1.0 [0.97, 1.0] | 0.34 [0.0, 6.14] | 1.32 [0.0, 8.98] | 0.18 [0.0, 3.9] | 0.05 [0.0, 3.45] |
| aTSA | 10 (31%) | 0.99 [0.98, 1.0] | 1.58 [0.0, 3.75] | 3.54 [0.0, 5.58] | 1.56 [0.0, 3.42] | 0.1 [0.0, 1.42] |
| Kernel | | | | | | |
| FC30 | 7 (22%) | 1.0 [0.76, 1.0] | 0.36 [0.0, 66.64] | 1.06 [0.0, 47.85] | 0.36 [0.0, 57.87] | 0.06 [0.0, 16.77] |
| BonePlus | 6 (19%) | 1.0 [0.99, 1.0] | 0.0 [0.0, 2.12] | 0.0 [0.0, 5.18] | 0.0 [0.0, 1.99] | 0.0 [0.0, 0.15] |
| Bone | 10 (31%) | 0.99 [0.99, 1.0] | 1.36 [0.0, 2.84] | 3.76 [0.0, 7.27] | 0.66 [0.0, 2.37] | 0.11 [0.0, 2.26] |
| [‘I31s’, ‘3’] | 6 (19%) | 1.0 [0.98, 1.0] | 0.72 [0.0, 3.78] | 1.98 [0.0, 7.19] | 0.6 [0.0, 3.64] | 0.19 [0.0, 1.74] |
| B60s | 3 (9%) | 1.0 [0.99, 1.0] | 0.1 [0.0, 1.03] | 0.58 [0.0, 3.35] | 0.1 [0.0, 1.02] | 0.02 [0.0, 0.32] |
| Scanner Manufacturer | | | | | | |
| Toshiba | 7 (22%) | 1.0 [0.76, 1.0] | 0.36 [0.0, 66.64] | 1.06 [0.0, 47.85] | 0.36 [0.0, 57.87] | 0.06 [0.0, 15.77] |
| GE | 16 (50%) | 0.99 [0.99, 1.0] | 1.23 [0.0, 2.59] | 2.58 [0.0, 6.2] | 0.28 [0.0, 2.19] | 0.05 [0.0, 1.74] |
| Siemens | 9 (28%) | 1.0 [0.98, 1.0] | 0.34 [0.0, 3.69] | 1.32 [0.0, 6.56] | 0.34 [0.0, 3.49] | 0.01 [0.0, 1.5] |

| Error metric | Surgeon A: ML to Surgeon | Surgeon A: Inter-Surgeon | Surgeon A: Non-Inferiority p-Value | Surgeon B: ML to Surgeon | Surgeon B: Inter-Surgeon | Surgeon B: Non-Inferiority p-Value | Surgeon C: ML to Surgeon | Surgeon C: Inter-Surgeon | Surgeon C: Non-Inferiority p-Value |
|---|---|---|---|---|---|---|---|---|---|
| Dice coefficient | 1.00 [0.97, 1.00] | 1.00 [0.98, 1.00] | p = 0.001 | 1.00 [0.98, 1.00] | 1.00 [0.98, 1.00] | p < 0.001 | 1.00 [0.98, 1.00] | 1.00 [0.98, 1.00] | p < 0.001 |
| Distance Map Error (%) | 0.56 [0.00, 9.01] | 2.84 [0.00, 8.45] | p < 0.001 | 1.025 [0.00, 7.24] | 3.00 [0.00, 8.88] | p < 0.001 | 2.84 [0.00, 6.25] | 2.84 [0.00, 10.3] | p < 0.001 |
| Correction Ratio (%) | 0.16 [0.00, 6.26] | 0.96 [0.00,.46] | p < 0.001 | 0.30 [0.00, 3.345] | 0.725 [0.00, 3.58] | p < 0.001 | 0.8 [0.00, 3.31] | 0.65 [0.00, 3.51] | p < 0.001 |
| Volume Diff (%) | 0.16 [0.00, 3.51] | 0.53 [0.00, 3.77] | p < 0.001 | 0.20 [0.00, 3.44] | 0.39 [0.00, 1.98] | p < 0.001 | 0.79 [0.00, 3.31] | 0.30 [0.00, 1.85] | p < 0.001 |
| FI Diff (%) | 0.04 [0.00, 3.52] | 0.12 [0.00, 2.38] | p < 0.001 | 0.09 [0.00, 1.42] | 0.105 [0.00, 2.47] | p < 0.001 | 0.06 [0.00, 2.19] | 0.08 [0.00, 2.49] | p < 0.001 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Rajabzadeh-Oghaz, H.; Elwell, J.; Schoch, B.; Aibinder, W.; Gobbato, B.; Wessell, D.; Kumar, V.; Roche, C.P. Clinical Validation of a Computed Tomography Image-Based Machine Learning Model for Segmentation and Quantification of Shoulder Muscles. Algorithms 2025, 18, 432. https://doi.org/10.3390/a18070432

Rajabzadeh-Oghaz H, Elwell J, Schoch B, Aibinder W, Gobbato B, Wessell D, Kumar V, Roche CP. Clinical Validation of a Computed Tomography Image-Based Machine Learning Model for Segmentation and Quantification of Shoulder Muscles. Algorithms. 2025; 18(7):432. https://doi.org/10.3390/a18070432

Chicago/Turabian Style: Rajabzadeh-Oghaz, Hamidreza, Josie Elwell, Bradley Schoch, William Aibinder, Bruno Gobbato, Daniel Wessell, Vikas Kumar, and Christopher P. Roche. 2025. "Clinical Validation of a Computed Tomography Image-Based Machine Learning Model for Segmentation and Quantification of Shoulder Muscles" Algorithms 18, no. 7: 432. https://doi.org/10.3390/a18070432

APA Style: Rajabzadeh-Oghaz, H., Elwell, J., Schoch, B., Aibinder, W., Gobbato, B., Wessell, D., Kumar, V., & Roche, C. P. (2025). Clinical Validation of a Computed Tomography Image-Based Machine Learning Model for Segmentation and Quantification of Shoulder Muscles. Algorithms, 18(7), 432. https://doi.org/10.3390/a18070432