A Weight Assignment-Enhanced Convolutional Neural Network (WACNN) for Freight Volume Prediction of Sea–Rail Intermodal Container Systems

Abstract

1. Introduction

1.1. Background and Motivation

1.2. Multimethodological Approach and Gaps

1.3. Contribution and Paper Organization

- First, we screen the candidate indicators using qualitative analysis, finalize the selection by formulating explicit classification criteria, and use missing-value analysis to exclude variables whose missing rate is too high. The remaining gaps are then filled by multiple imputation.

- For feature engineering, we perform Pearson and Spearman correlation analyses on the selected indicators. Based on the resulting correlations, the indicators are divided into groups by k-means clustering, and each group is assigned a weight according to its combined correlation results. In addition, quadratic interpolation is used to enlarge the dataset.

- To select an appropriate model structure, we propose several candidate network structures and choose the best structure and parameters through a comparative analysis. To verify the prediction performance of the WACNN model, we compare it with a range of mainstream DL and ML models; the comparison shows that WACNN achieves the best prediction performance.

2. Literature Review

2.1. Indicator Selection

2.2. Feature Engineering

2.3. Prediction Model Selection Strategy

2.4. Summary

3. Methodology

3.1. Problem Statement

3.2. Feature Selection Method

- Step 1. Preliminary selection of indicators

- Step 2. Data processing

- Step 3. Correlation analysis

- Step 4. K-means

- Step 5. Upsampling

- Step 6. Weight assignment
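Steps 3, 4, and 6 can be illustrated with a minimal sketch. It rests on several assumptions that are not stated in the source: the "combined" score of an indicator is taken as the mean of its absolute Pearson and Spearman coefficients, k-means runs on that one-dimensional score with two clusters, and the weight is applied as the upper bound of a min-max normalization interval (1.5 for the higher-correlation group, 1.0 for the other). The function names are hypothetical.

```python
import numpy as np

def pearson(x, y):
    """Pearson correlation coefficient of two 1-D sequences."""
    x, y = np.asarray(x, dtype=float), np.asarray(y, dtype=float)
    xc, yc = x - x.mean(), y - y.mean()
    return float((xc @ yc) / np.sqrt((xc @ xc) * (yc @ yc)))

def average_ranks(x):
    """Ranks starting at 1; tied values share their average rank."""
    x = np.asarray(x, dtype=float)
    order = np.argsort(x, kind="mergesort")
    ranks = np.empty(len(x), dtype=float)
    ranks[order] = np.arange(1, len(x) + 1)
    _, inverse, counts = np.unique(x, return_inverse=True, return_counts=True)
    sums = np.bincount(inverse, weights=ranks)
    return sums[inverse] / counts[inverse]

def spearman(x, y):
    """Spearman correlation: Pearson correlation of the ranks."""
    return pearson(average_ranks(x), average_ranks(y))

def kmeans_1d(values, k=2, iters=100):
    """Plain Lloyd iterations on scalars, initialised at evenly spaced quantiles."""
    values = np.asarray(values, dtype=float)
    centers = np.quantile(values, np.linspace(0.0, 1.0, k))
    for _ in range(iters):
        labels = np.argmin(np.abs(values[:, None] - centers[None, :]), axis=1)
        new_centers = np.array([
            values[labels == j].mean() if np.any(labels == j) else centers[j]
            for j in range(k)
        ])
        if np.allclose(new_centers, centers):
            break
        centers = new_centers
    return labels, centers

def weighted_minmax(column, upper):
    """Min-max normalisation of one column to the interval [0, upper]."""
    column = np.asarray(column, dtype=float)
    span = column.max() - column.min()
    if span == 0:
        return np.zeros_like(column)
    return upper * (column - column.min()) / span

def assign_weights(X, y, uppers=(1.0, 1.5)):
    """Score each column of X against y, cluster the scores, scale by cluster."""
    k = len(uppers)
    scores = np.array([
        0.5 * (abs(pearson(X[:, j], y)) + abs(spearman(X[:, j], y)))  # assumed combination
        for j in range(X.shape[1])
    ])
    labels, centers = kmeans_1d(scores, k)
    upper_of_cluster = np.empty(k)
    upper_of_cluster[np.argsort(centers)] = sorted(uppers)  # best cluster gets largest bound
    Xw = np.column_stack([
        weighted_minmax(X[:, j], upper_of_cluster[labels[j]])
        for j in range(X.shape[1])
    ])
    return Xw, scores, labels

# Synthetic demonstration: column 0 tracks the target, column 1 is noise.
rng = np.random.default_rng(0)
y = np.linspace(0.0, 10.0, 50)
X = np.column_stack([y + rng.normal(0.0, 0.1, 50), rng.normal(0.0, 1.0, 50)])
Xw, scores, labels = assign_weights(X, y)
```

In this synthetic case the informative column ends up in [0, 1.5] and the noise column in [0, 1], so the higher-correlation indicator carries a larger numerical range into the network.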

3.3. CNN Architecture Design

3.4. Evaluation

3.5. The Framework of This Paper

- (1) The framework first applies four classification criteria to preliminarily select the evaluation indicators. It then collects monthly data for each indicator group, performs missing-value and outlier analyses, and completes the data using multiple imputation.

- (2) Next, it runs Pearson and Spearman correlation analyses and uses k-means to group the indicators by correlation. During normalization, the group with the higher correlation is assigned the higher weight.

- (3) Quadratic interpolation converts the collected monthly data into daily data.

- (4) The CNN models are built separately, and the weight-assigned (WA) data are then fed into the constructed CNN.

- (5) The proposed WACNN is compared with related models and mainstream models, and the analysis is validated using five evaluation criteria.
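The monthly-to-daily conversion in item (3) could look like the sketch below. The source does not say which quadratic scheme is used, so this example assumes a local three-point quadratic (a parabola fitted through the nearest three monthly observations); a quadratic spline would be an equally plausible reading. The function name, the 30-day spacing, and the sample values are all hypothetical.

```python
import numpy as np

def quadratic_upsample(t_known, v_known, t_query):
    """Evaluate a local 3-point quadratic interpolant at each query time."""
    t_known = np.asarray(t_known, dtype=float)
    v_known = np.asarray(v_known, dtype=float)
    if len(t_known) < 3:
        raise ValueError("need at least three known points")
    out = np.empty(len(t_query), dtype=float)
    for i, t in enumerate(t_query):
        # Centre the stencil on the nearest known point, kept inside the data range.
        c = int(np.clip(np.argmin(np.abs(t_known - t)), 1, len(t_known) - 2))
        coeffs = np.polyfit(t_known[c - 1:c + 2], v_known[c - 1:c + 2], 2)
        out[i] = np.polyval(coeffs, t)
    return out

# Hypothetical monthly observations placed 30 days apart.
month_days = np.array([0, 30, 60, 90, 120])
monthly = np.array([100.0, 130.0, 150.0, 160.0, 155.0])
days = np.arange(0, 121)
daily = quadratic_upsample(month_days, monthly, days)
```

The interpolant passes through every monthly observation, but it is not guaranteed to be smooth where the stencil switches from one triple of months to the next.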

4. Case Study

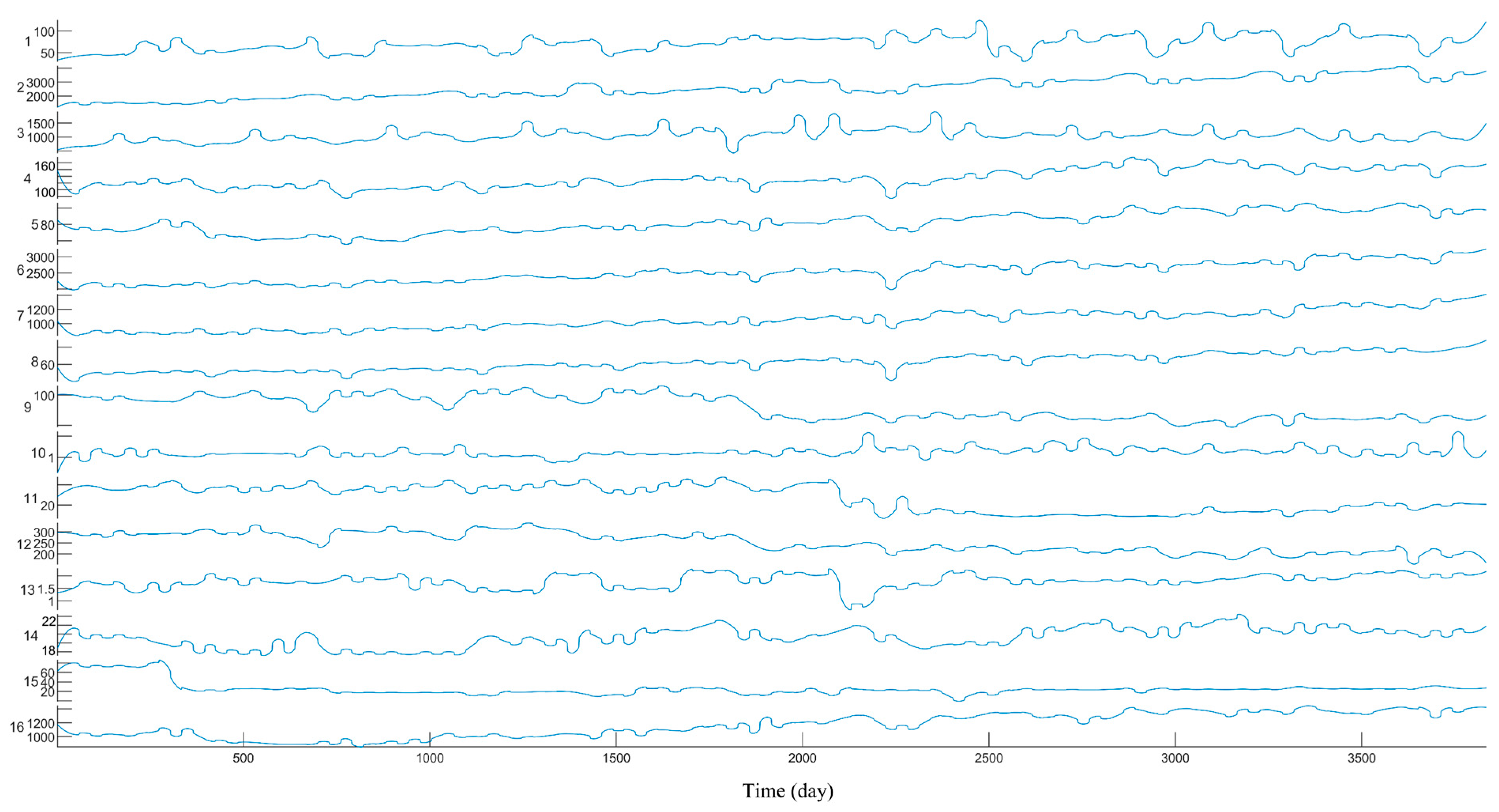

4.1. Data Description

4.2. Data Processing

4.3. Parameter Selection

4.4. Experimental Results

4.5. Cross-Validation

4.6. Results Analysis

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

1. Li, B.; Wen, C.; Yang, S.; Ma, M.; Cheng, J.; Li, W. Measuring high-speed train delay severity: Static and dynamic analysis. PLoS ONE 2024, 19, e0301762.

2. Guo, J.; Guo, J.; Kuang, T.; Wang, Y.; Li, W. The short-term economic influence analysis of government regulation on railway freight transport in continuous time. PLoS ONE 2025, 20, e0298614.

3. Liu, Z.; Zhou, S.; Liu, S. Optimization of Multimodal Transport Paths Considering a Low-Carbon Economy Under Uncertain Demand. Algorithms 2025, 18, 92.

4. Pellicer, D.S.; Larrodé, E. Analysis of the Effectiveness of a Freight Transport Vehicle at High Speed in a Vacuum Tube (Hyperloop Transport System). Algorithms 2024, 17, 17.

5. Xie, G.; Zhang, N.; Wang, S. Data characteristic analysis and model selection for container throughput forecasting within a decomposition-ensemble methodology. Transp. Res. E Logist. Transp. Rev. 2017, 108, 160–178.

6. Milenković, M.; Milosavljevic, N.; Bojović, N.; Val, S. Container flow forecasting through neural networks based on metaheuristics. Oper. Res. 2021, 21, 965–997.

7. Dragan, D.; Keshavarzsaleh, A.; Intihar, M.; Popović, V.; Kramberger, T. Throughput forecasting of different types of cargo in the Adriatic seaport Koper. Marit. Policy Manag. 2020, 48, 19–45.

8. Homayoonmehr, R.; Rahai, A.; Ramezanianpour, A.A. Predicting the chloride diffusion coefficient and surface electrical resistivity of concrete using statistical regression-based models and its application in chloride-induced corrosion service life prediction of RC structures. Constr. Build. Mater. 2022, 357, 1–25.

9. Zhang, Y.; Wu, J.; Zhang, S.; Li, G.; Jeng, D.S.; Xu, J.; Tian, Z.; Xu, X. An optimal statistical regression model for predicting wave-induced equilibrium scour depth in sandy and silty seabeds beneath pipelines. Ocean Eng. 2022, 258, 1–14.

10. Castro-Neto, M.; Jeong, Y.S.; Jeong, M.K.; Han, L.D. Online-SVR for short-term traffic flow prediction under typical and atypical traffic conditions. Expert Syst. Appl. 2009, 36, 6164–6173.

11. Zeng, Z.; Ying, G.; Zhang, Y.; Gong, Y.; Mei, Y.; Li, X.; Sun, H.; Li, B.; Ma, J.; Li, S. Classification of failure modes, bearing capacity, and effective stiffness prediction for corroded RC columns using machine learning algorithm. J. Build. Eng. 2025, 102, 111982.

12. Tang, L.; Zhao, Y.; Cabrera, J.; Ma, J.; Tsui, K.L. Forecasting Short-Term Passenger Flow: An Empirical Study on Shenzhen Metro. IEEE Trans. Intell. Transp. Syst. 2019, 20, 3613–3622.

13. Haas, C.; Budin, C.; D’arcy, A. How to select oil price prediction models—The effect of statistical and financial performance metrics and sentiment scores. Energy Econ. 2024, 133, 1–14.

14. Zhou, W.; Zhou, Y.; Liu, R.; Yin, H.; Nie, H. Predictive modeling of river blockage severity from debris flows: Integrating statistical and machine learning approaches with insights from Sichuan Province, China. Catena 2025, 248, 1–15.

15. Tan, M.C.; Wong, S.C.; Xu, J.M.; Guan, Z.R.; Zhang, P. An aggregation approach to short-term traffic flow prediction. IEEE Trans. Intell. Transp. Syst. 2009, 10, 60–69.

16. Khashei, M.; Bijari, M. A novel hybridization of artificial neural networks and ARIMA models for time series forecasting. Appl. Soft Comput. 2011, 11, 2664–2675.

17. Ruiz-Aguilar, J.; Turias, I.; Jiménez-Come, M. Hybrid approaches based on SARIMA and artificial neural networks for inspection time series forecasting. Transp. Res. E Logist. Transp. Rev. 2014, 67, 1–13.

18. Temizceri, F.T.; Kara, S.S. Towards sustainable logistics in Turkey: A bi-objective approach to green intermodal freight transportation enhanced by machine learning. Res. Transp. Bus. Manag. 2024, 55, 101145.

19. Bassiouni, M.M.; Chakrabortty, R.K.; Sallam, K.M.; Hussain, O.K. Deep learning approaches to identify order status in a complex supply chain. Expert Syst. Appl. 2024, 250, 1–28.

20. Gao, L.; Lu, P.; Ren, Y. A deep learning approach for imbalanced crash data in predicting highway-rail grade crossings accidents. Reliab. Eng. Syst. Saf. 2021, 216, 108019.

21. Zhuang, X.; Li, W.; Xu, Y. Port planning and sustainable development based on prediction modelling of port throughput: A case study of the deep-water Dongjiakou Port. Sustainability 2022, 14, 4276.

22. Shankar, S.; Ilavarasan, P.V.; Punia, S.; Singh, S.P. Forecasting container throughput with long short-term memory networks. Ind. Manag. Data Syst. 2020, 120, 425–441.

23. Shankar, S.; Punia, S.; Ilavarasan, P.V. Deep learning-based container throughput forecasting: A triple bottom line approach. Ind. Manag. Data Syst. 2021, 121, 2100–2117.

24. Awah, P.C.; Nam, H.; Kim, S. Short term forecast of container throughput: New variables application for the Port of Douala. J. Mar. Sci. Eng. 2021, 9, 720.

25. Tang, S.; Xu, S.; Gao, J. An optimal model based on multifactors for container throughput forecasting. KSCE J. Civ. Eng. 2019, 23, 4124–4131.

26. Li, F.; Tong, W.; Yang, X. Short-term forecasting for port throughput time series based on multi-modal fuzzy information granule. Appl. Soft Comput. 2025, 174, 112957.

27. Semenoglou, A.A.; Spiliotis, E.; Assimakopoulos, V. Data augmentation for univariate time series forecasting with neural networks. Pattern Recognit. 2023, 134, 109132.

28. Wang, W.; Zhao, C.; Wu, Y. Spatial weighting—An effective incorporation of geological expertise into deep learning models. Geochemistry 2024, 84, 126212.

29. Dai, Y.; Yu, W.; Leng, M. A hybrid ensemble optimized BiGRU method for short-term photovoltaic generation forecasting. Energy 2024, 299, 131458.

30. Shukla, D.; Chowdary, C.R. A model to address the cold-start in peer recommendation by using k-means clustering and sentence embedding. J. Comput. Sci. 2024, 83, 102465.

31. Xiao, J.; Wen, Z.; Liu, B.; Chen, M.; Wang, Y.; Hang, J. A hybrid model based on selective deep-ensemble for container throughput forecasting. Syst. Eng. Theory Pract. 2022, 42, 1107–1128.

32. Gao, H.; Jia, H.; Huang, Q.; Wu, R.; Tian, J.; Wang, G.; Liu, C. A hybrid deep learning model for urban expressway lane-level mixed traffic flow prediction. Eng. Appl. Artif. Intell. 2024, 133, 1–21.

33. Pan, Y.A.; Guo, J.; Chen, Y.; Cheng, Q.; Li, W.; Liu, Y. A fundamental diagram based hybrid framework for traffic flow estimation and prediction by combining a Markovian model with deep learning. Expert Syst. Appl. 2024, 238, 122219.

34. Zhang, Y.; Zhou, Q.; Wang, J.; Kouvelas, A.; Makridis, M.A. CASAformer: Congestion-aware sparse attention transformer for traffic speed prediction. Commun. Transp. Res. 2025, 5, 100174.

35. Nguyen, T.; Cho, G. Forecasting the Busan container volume using XGBoost approach based on machine learning model. J. Internet Things Converg. 2024, 10, 39–45.

36. Lee, E.; Kim, D.; Bae, H. Container volume prediction using time-series decomposition with a long short-term memory models. Appl. Sci. 2021, 11, 8995.

37. Cui, J.; Liu, B.; Xu, Y.; Guo, X. Regional collaborative forecast of cargo throughput in China’s Circum-Bohai-Sea Region based on LSTM model. Comput. Intell. Neurosci. 2022, 2022, 5044926.

38. Hirata, E.; Matsuda, T. Forecasting Shanghai Container Freight Index: A deep-learning-based model experiment. J. Mar. Sci. Eng. 2022, 10, 593.

39. Cuong, T.N.; Long, L.N.B.; Kim, H.S.; You, S.S. Data analytics and throughput forecasting in port management systems against disruptions: A case study of Busan Port. Marit. Econ. Logist. 2023, 25, 61–89.

40. Liu, B.; Wang, X.; Liang, X. Neural network-based prediction system for port throughput: A case study of Ningbo-Zhoushan Port. Res. Transp. Bus. Manag. 2023, 51, 101067.

41. Zhang, L.; Schacht, O.; Liu, Q.; Ng, A.K. Predicting inland waterway freight demand with a dynamic spatio-temporal graph attention-based multi attention network. Transp. Res. E Logist. Transp. Rev. 2025, 199, 104139.

| Literature | Data Filtering | Data Enhancement | Prediction Model | Best Model |
|---|---|---|---|---|
| Dragan [7] | ✓ | ✓ | SM | ARIMAX |
| Shankar [22] | ✕ | ✓ | ML | LSTM |
| Awah [24] | ✕ | ✕ | ML | RF |
| Tang [25] | ✓ | ✕ | DL | BP |
| Lee [36] | ✕ | ✓ | DL | LSTM |
| Cui [37] | ✕ | ✕ | DL | LSTM |
| Cuong [39] | ✕ | ✓ | DL | LSTM and GRU |
| Liu [40] | ✕ | ✓ | DL | BiLSTM |
| This study | ✓ | ✓ | DL | WACNN |

| Indicator Category | Evaluation Indicators |
|---|---|
| Transportation capacity | Yingkou port container throughput |
| | Yingkou port cargo throughput |
| | Yingkou port container terminal throughput |
| | Road freight traffic in Liaoning Province |
| | Liaoning waterway cargo volume |
| | Liaoning waterway cargo turnover |
| | Liaoning highway cargo turnover |
| | Liaoning port cargo throughput |
| | Liaoning port container throughput |
| | China coastal container throughput |
| | China railway cargo delivery |
| | China railway cargo turnover |
| | China coastal cargo throughput |
| Fixed-asset investment and construction | Liaoning fixed-asset investment |
| | Fixed-asset investment in road, water, and land transportation in Liaoning |
| | Fixed-asset investment in road, water, and land transportation in Yingkou |
| | China highways and waterways fixed investment |
| | China railway fixed-asset investment |
| | China fixed-asset investment |
| Economic development level | China primary GDP |
| | China secondary GDP |
| | China tertiary GDP |
| | Yingkou primary GDP |
| | Yingkou secondary GDP |
| | Yingkou tertiary GDP |
| | Yingkou GDP |
| | Liaoning GDP |
| | China GDP |
| International trade and openness | China coastal foreign trade cargo throughput |
| | Yingkou port foreign trade cargo throughput |
| | Liaoning total imports and exports |
| | Yingkou total imports and exports |
| | China total imports and exports |

| Influencing Factor | Pearson | Spearman |
|---|---|---|
| China highways and waterways fixed investment | 0.536 | 0.495 |
| China GDP | 0.689 | 0.655 |
| China fixed-asset investment | −0.031 | −0.027 |
| China primary GDP | −0.101 | −0.110 |
| China secondary GDP | −0.417 | −0.423 |
| China tertiary GDP | 0.412 | 0.347 |
| China total imports and exports | 0.698 | 0.635 |
| China railway cargo delivery | 0.767 | 0.776 |
| China railway cargo turnover | 0.722 | 0.703 |
| China railway fixed-asset investment | 0.180 | 0.186 |
| China coastal cargo throughput | 0.685 | 0.651 |
| China coastal foreign trade cargo throughput | 0.709 | 0.662 |
| China coastal container throughput | 0.659 | 0.641 |
| Yingkou port cargo throughput | −0.571 | −0.660 |
| Yingkou port foreign trade cargo throughput | 0.293 | 0.293 |
| Yingkou port container throughput | −0.298 | −0.271 |
| Yingkou total imports and exports | 0.465 | 0.400 |
| Yingkou port container terminal throughput | −0.011 | 0.018 |
| Liaoning highway cargo throughput | −0.370 | −0.356 |
| Liaoning highway cargo turnover | −0.309 | −0.190 |
| Liaoning waterway cargo throughput | −0.655 | −0.744 |
| Liaoning waterway cargo turnover | −0.684 | −0.671 |
| Liaoning port cargo throughput | −0.667 | −0.684 |
| Liaoning port container throughput | −0.671 | −0.685 |
| Yingkou GDP | 0.288 | 0.329 |
| Yingkou primary GDP | 0.348 | 0.389 |
| Yingkou secondary GDP | 0.131 | 0.189 |
| Yingkou tertiary GDP | 0.230 | 0.200 |
| Liaoning GDP | 0.441 | 0.445 |
| Liaoning fixed-asset investment | −0.035 | −0.251 |
| Liaoning total imports and exports | 0.548 | 0.525 |

| Layer | Parameter | Value |
|---|---|---|
| Input layer | Sample size | Training set: 2800 / test set: 1034 |
| | Step size | [1, 1] |
| | Number of features | 16 |
| CNN layer | Number of convolutional layers | 5 |
| | Number of convolution kernels per layer | 16-16-32-32-64 |
| | Convolution kernel size per layer | [10, 1] [3, 1] [3, 1] [3, 1] [2, 1] |
| | Convolutional layer activation function | ReLU |
| | Convolutional layer padding method | Same padding |
| | Number of pooling layers | 4 |
| | Pooling window size per layer | [5, 1] [2, 1] [2, 1] [2, 1] |
| Output layer | Number of neurons | 1 |

| Layer | Parameter | LSTM | BiLSTM | GRU |
|---|---|---|---|---|
| Input layer | Number of samples | Training set: 2800 / test set: 1034 | 2800 / 1034 | 2800 / 1034 |
| LSTM layer | Number of LSTM layers | 2 | 1 | 2 |
| | Number of neurons in the first layer | 30 | 40 | 128 |
| | Number of neurons in the second layer | 40 | - | - |
| | LSTM layer activation function | ReLU | ReLU | ReLU |
| | Output mode | last | last | last |
| Output layer | Number of neurons | 1 | 1 | 1 |

| Hyperparameter | Candidate Values |
|---|---|
| Batch size | 16/32/64/128 |
| Initial learning rate | 0.005/0.01/0.02 |
| Learning rate descent factor | 0.2/0.1/0.05 |
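For orientation, the candidate values in this table span 36 combinations if every value is paired with every other. The sketch below enumerates them; it assumes an exhaustive grid, which the source does not confirm, and the dictionary keys are hypothetical names.

```python
from itertools import product

# Candidate values copied from the table above.
batch_sizes = [16, 32, 64, 128]
initial_learning_rates = [0.005, 0.01, 0.02]
drop_factors = [0.2, 0.1, 0.05]

# One dictionary per candidate configuration (exhaustive grid is an assumption).
grid = [
    {"batch_size": b, "initial_lr": lr, "drop_factor": f}
    for b, lr, f in product(batch_sizes, initial_learning_rates, drop_factors)
]
print(len(grid))  # 4 * 3 * 3 = 36
```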

| Hyperparameter | WACNN | CNN | LSTM |
|---|---|---|---|
| Optimization algorithm | SGDM | SGDM | Adam |
| Normalization interval | [0, 1.5] and [0, 1] | [0, 1] | [0, 1] |
| Batch size | 32 | 32 | - |
| Maximum number of training epochs | 1500 | 1500 | 1500 |
| Initial learning rate | 0.01 | 0.01 | 0.01 |
| Learning rate policy | Piecewise | Piecewise | Piecewise |
| Learning rate descent factor | 0.1 | 0.1 | 0.1 |
| Learning rate drop period (epochs) | 1200 | 1200 | 1200 |
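The piecewise policy in this table can be read as a step schedule. The sketch below assumes the usual interpretation, which the source does not spell out: the rate is multiplied by the descent factor once every 1200 epochs, with epochs counted from 1. The function name is hypothetical.

```python
def piecewise_lr(epoch, initial_lr=0.01, drop_factor=0.1, drop_period=1200):
    """Learning rate for a 1-based epoch under a step (piecewise) schedule."""
    if epoch < 1:
        raise ValueError("epoch is 1-based")
    completed_periods = (epoch - 1) // drop_period
    return initial_lr * drop_factor ** completed_periods

# Rates at the boundaries of the 1500-epoch run.
for epoch in (1, 1200, 1201, 1500):
    print(epoch, piecewise_lr(epoch))
```

Under these assumptions, training runs at 0.01 for epochs 1 to 1200 and at roughly 0.001 for the final 300 epochs.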

| Model | Training Set RMSE | Test Set RMSE | Difference | Difference Percentage |
|---|---|---|---|---|
| WACNN | 13.228 | 15.596 | 2.368 | 17.9% |
| CNN | 14.7255 | 16.3521 | 1.6266 | 11.05% |
| LSTM | 68.7971 | 69.3158 | 0.5187 | 0.75% |
| BiLSTM | 65.0653 | 71.7462 | 6.6809 | 10.27% |
| GRU | 56.0796 | 61.0531 | 4.9735 | 8.87% |
| RF | 19.4782 | 29.9312 | 10.453 | 53.67% |
| SVR | 28.3884 | 28.6886 | 0.5384 | 1.04% |
| PLS | 289.5629 | 286.2715 | 3.2914 | 1.1% |
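The difference percentage appears to be the absolute train-test gap relative to the training RMSE. This definition is inferred from the table values rather than stated in the source; it reproduces the WACNN row as follows, and the CNN row follows the same arithmetic (1.6266 / 14.7255 ≈ 11.05%).

```latex
\[
\text{Difference percentage}
  = \frac{\left|\mathrm{RMSE}_{\text{test}} - \mathrm{RMSE}_{\text{train}}\right|}
         {\mathrm{RMSE}_{\text{train}}} \times 100\%,
\qquad
\text{WACNN: }
  \frac{15.596 - 13.228}{13.228} \times 100\%
  = \frac{2.368}{13.228} \times 100\%
  \approx 17.9\%.
\]
```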

| Model | R² | MBE | MAPE | RMSE | RMSE Improvement Rate | MAE | MAE Improvement Rate |
|---|---|---|---|---|---|---|---|
| WACNN | 0.99973 | 0.1194 | 0.0081 | 15.596 | - | 11.8649 | - |
| CNN | 0.99972 | −2.0177 | 0.0079 | 16.3521 | 4.62% | 12.1138 | 2.05% |
| LSTM | 0.99502 | 11.436 | 0.0335 | 69.3158 | 77.5% | 51.7759 | 77.08% |
| BiLSTM | 0.99458 | 1.6356 | 0.0378 | 71.7462 | 78.26% | 53.2867 | 77.73% |
| GRU | 0.99646 | −9.8724 | 0.0319 | 61.0531 | 74.46% | 45.3181 | 73.82% |
| RF | 0.99892 | 1.0652 | 0.0085 | 29.9312 | 47.89% | 14.0925 | 15.81% |
| SVR | 0.99913 | 0.1067 | 0.0175 | 28.6886 | 45.64% | 26.1449 | 54.62% |
| PLS | 0.91261 | 10.0184 | 0.1597 | 286.2715 | 94.55% | 228.0626 | 94.8% |
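The five criteria in this table can be computed as in the sketch below, using the textbook definitions. Two conventions are assumptions rather than facts from the source: MBE is taken as the mean of (prediction minus observation), and MAPE is reported as a fraction rather than a percentage. The improvement-rate formula is inferred from the table (WACNN's error reduction relative to each comparison model), and the function names are hypothetical.

```python
import numpy as np

def regression_metrics(y_true, y_pred):
    """R², MBE, MAPE, RMSE and MAE under standard definitions."""
    y_true = np.asarray(y_true, dtype=float)
    y_pred = np.asarray(y_pred, dtype=float)
    err = y_pred - y_true  # sign convention for MBE is an assumption
    ss_res = float(np.sum(err ** 2))
    ss_tot = float(np.sum((y_true - y_true.mean()) ** 2))
    return {
        "R2": 1.0 - ss_res / ss_tot,
        "MBE": float(np.mean(err)),
        "MAPE": float(np.mean(np.abs(err / y_true))),  # fraction, not percent
        "RMSE": float(np.sqrt(np.mean(err ** 2))),
        "MAE": float(np.mean(np.abs(err))),
    }

def improvement_rate(baseline_error, wacnn_error):
    """Percentage by which WACNN reduces a comparison model's error."""
    return (baseline_error - wacnn_error) / baseline_error * 100.0

# Reproduce the CNN row of the table from its RMSE and MAE values.
print(round(improvement_rate(16.3521, 15.596), 2))   # 4.62
print(round(improvement_rate(12.1138, 11.8649), 2))  # 2.05
```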

| Group | Training Set | Test Set |
|---|---|---|
| Group 1 | Years 1–4 | Year 5 |
| Group 2 | Years 2–5 | Year 6 |
| Group 3 | Years 3–6 | Year 7 |
| Group 4 | Years 4–7 | Year 8 |
| Group 5 | Years 5–8 | Year 9 |
| Group 6 | Years 6–9 | Year 10 |
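The grouping in this table is a rolling-window split: four consecutive training years followed by one test year, shifted forward one year at a time. A minimal sketch that generates the same six groups is given below; the function name and the year-number representation are hypothetical.

```python
def rolling_year_splits(n_years=10, train_years=4):
    """Return (training years, test year) pairs for a sliding window."""
    splits = []
    for start in range(1, n_years - train_years + 1):
        train = list(range(start, start + train_years))
        test = start + train_years
        splits.append((train, test))
    return splits

for group, (train, test) in enumerate(rolling_year_splits(), start=1):
    print(f"Group {group}: train years {train[0]}-{train[-1]}, test year {test}")
```

Because each test year always follows its training window, no future observations leak into training, which is the usual reason for preferring this scheme over shuffled k-fold splits on time series.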

| Group | Train-RMSE | Test-RMSE | Train-MAE | Test-MAE | Train-MAPE | Test-MAPE |
|---|---|---|---|---|---|---|
| 1 | 13.9999 | 17.1086 | 10.6182 | 12.2826 | 1.06% | 1.27% |
| 2 | 14.4681 | 17.2236 | 11.6847 | 13.2206 | 1.13% | 1.27% |
| 3 | 13.0655 | 13.8913 | 10.1827 | 10.5585 | 0.72% | 0.75% |
| 4 | 21.0117 | 25.8002 | 16.3482 | 18.8678 | 0.87% | 0.98% |
| 5 | 15.8201 | 22.8937 | 12.1842 | 16.1985 | 0.51% | 0.68% |
| 6 | 18.0196 | 21.7799 | 13.8753 | 16.8584 | 0.57% | 0.71% |
| Average | 16.0642 | 19.7829 | 12.4822 | 14.6644 | 0.81% | 0.94% |
| Difference (test − train) | 3.7187 | | 2.1822 | | 0.13% | |
| Difference (% of train) | 23.15% | | 17.48% | | | |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Wang, Y.; Li, W.; Qi, X.; Yu, Y. A Weight Assignment-Enhanced Convolutional Neural Network (WACNN) for Freight Volume Prediction of Sea–Rail Intermodal Container Systems. Algorithms 2025, 18, 319. https://doi.org/10.3390/a18060319
