An Interactive Digital-Twin Model for Virtual Reality Environments to Train in the Use of a Sensorized Upper-Limb Prosthesis

Abstract

1. Introduction

2. The Sensorized Prosthesis

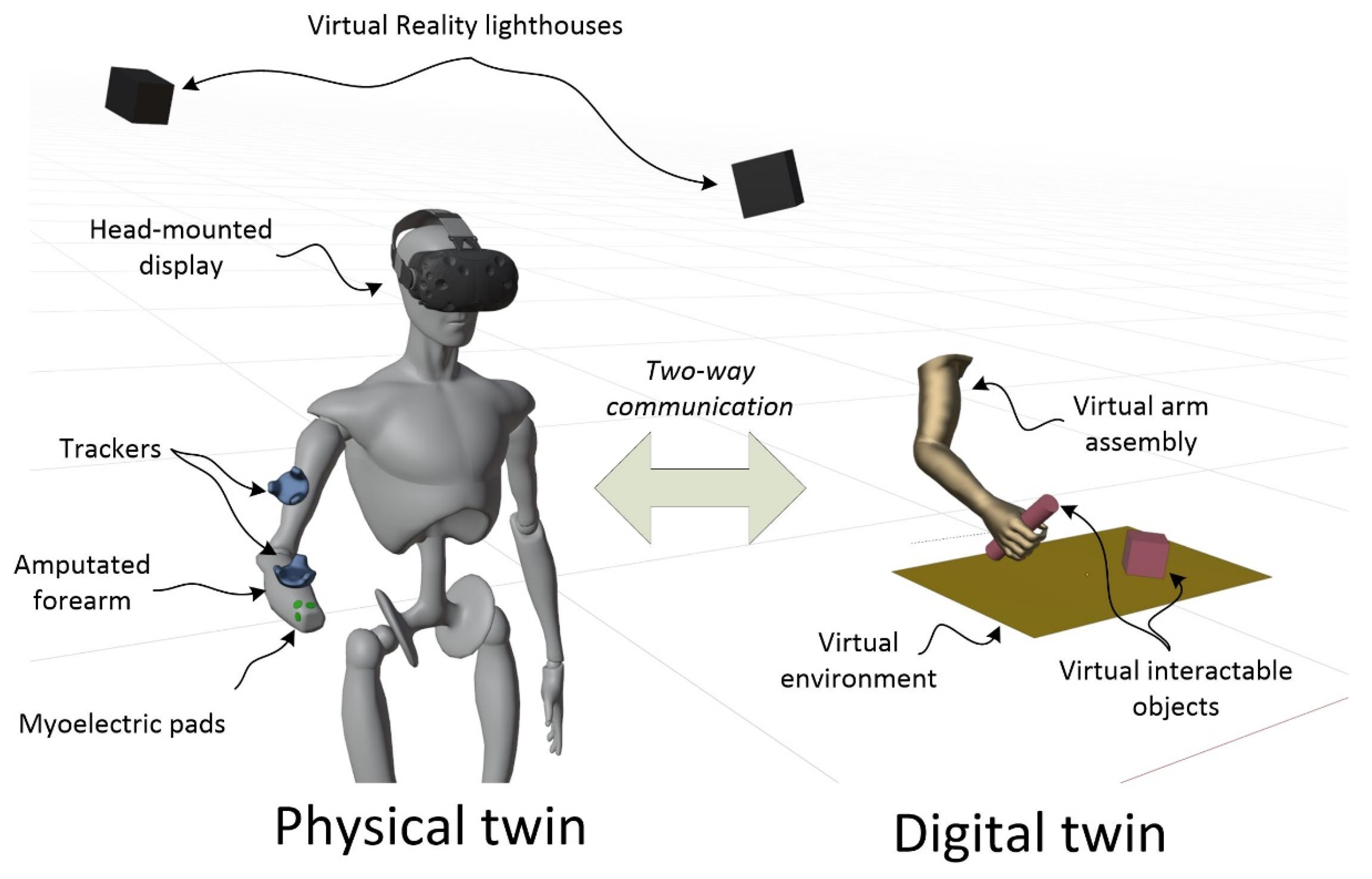

3. Digital Twin and Physical Twin Integration

- The DT communicates (preferably bidirectionally) with its physical counterpart, the physical twin (PT), exchanging information with real sensors;

- The DT augments the information provided by its PT through mathematical models, supplying augmented information in real time;

- The DT is conceived to accompany the PT throughout its life cycle.

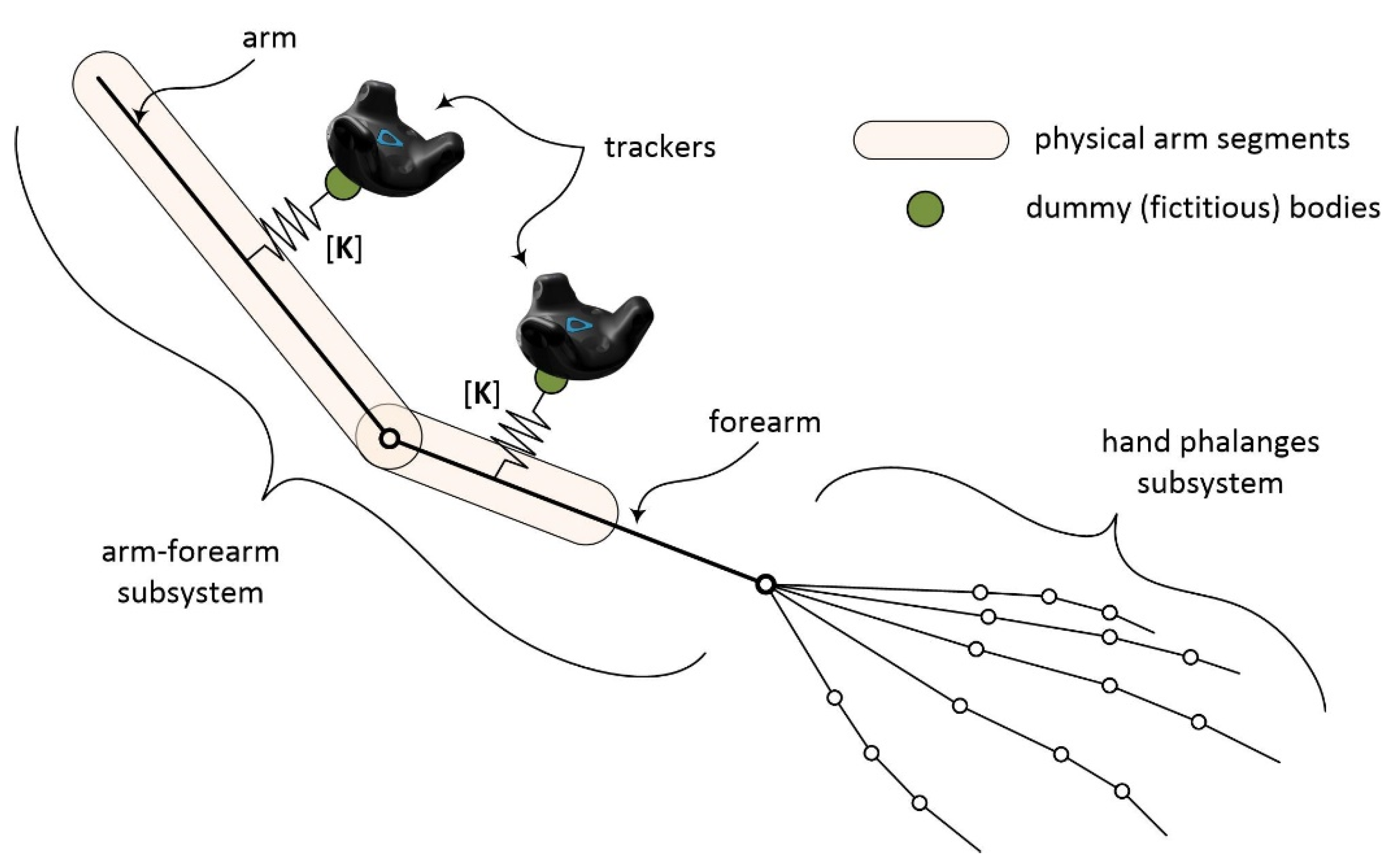

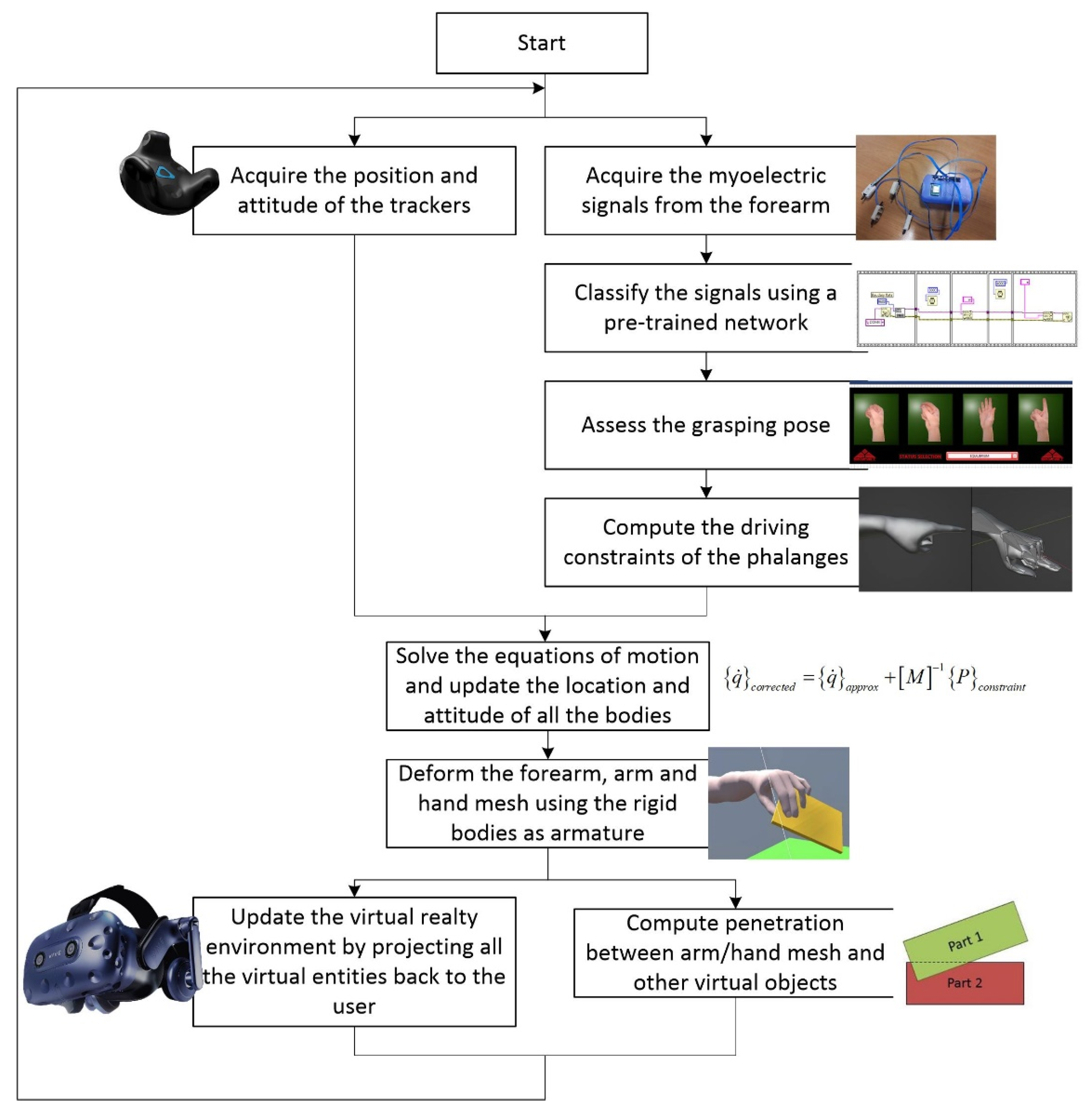

3.1. Virtual Prosthesis Dynamic Model and Trackers Interface

- 24 rigid bodies (4 in the first subassembly and 20 in the second subassembly);

- 17 kinematic constraints with 54 scalar equations (1 spherical joint between the arm and the forearm, 1 fixed constraint between the forearm and the palm, and 15 spherical joints between adjacent phalanges of the hand);

- 57 motion constraint equations (6 scalar equations for each of the 2 trackers and 3 scalar equations for each of the 15 spherical joints between adjacent phalanges, controlling their relative rotation);

- 2 bushing elements (one for each of the trackers).
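The equation totals in the list follow from the standard count per joint type (a spherical joint imposes 3 scalar equations, a fixed constraint 6) and from the number of controlled quantities. The symbols $n_{\mathrm{kin}}$ and $n_{\mathrm{mot}}$ are introduced here only for this check; the 16 spherical joints are the arm–forearm joint plus the 15 joints between adjacent phalanges:

```latex
\begin{aligned}
n_{\mathrm{kin}} &= \underbrace{16 \times 3}_{\text{spherical joints}} + \underbrace{1 \times 6}_{\text{fixed constraint}} = 54,\\
n_{\mathrm{mot}} &= \underbrace{2 \times 6}_{\text{trackers}} + \underbrace{15 \times 3}_{\text{phalanx rotations}} = 57.
\end{aligned}
```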

- First, the equations of motion are solved neglecting the kinematic constraints (free-body equations, considering only the external and internal forces applied to the bodies);

- Subsequently, a series of impulses is applied to all the bodies, one at a time, sequentially and iteratively, to update their kinematic state until the constraint equations are fulfilled within a specified tolerance.
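The two-phase procedure above (free-body update, then sequential impulses) can be illustrated with a minimal Python sketch. It is not the authors' solver: to stay short, it replaces the rigid bodies and the spherical/fixed joints with point masses linked by rigid rods and applies only gravity, and the function name `impulse_step`, the tolerance and the iteration cap are assumptions. Each impulse is chosen so that the positions predicted at the end of the step satisfy the linearized constraint, and the sweep over the joints repeats until every violation is below the tolerance.

```python
import numpy as np

def impulse_step(x, v, inv_m, joints, dt, gravity=(0.0, -9.81, 0.0),
                 tol=1e-9, max_iter=100):
    """Advance point masses linked by rigid rods by one time step.

    Hypothetical illustration of an impulse-based scheme.
    x, v   : (n, 3) arrays of positions and velocities
    inv_m  : (n,) inverse masses (0 marks a fixed anchor)
    joints : list of (a, b, length) distance constraints
    """
    v = v.copy()
    # Phase 1: free-body update, constraints neglected (only gravity here).
    v[inv_m > 0.0] += dt * np.asarray(gravity)
    # Phase 2: apply impulses joint by joint, sweeping repeatedly, until the
    # positions predicted at the end of the step satisfy every constraint.
    for _ in range(max_iter):
        max_err = 0.0
        for a, b, length in joints:
            d = (x[b] + dt * v[b]) - (x[a] + dt * v[a])  # predicted a->b vector
            dist = np.linalg.norm(d)
            err = dist - length                          # constraint violation
            w = inv_m[a] + inv_m[b]
            max_err = max(max_err, abs(err))
            if w == 0.0 or dist == 0.0:
                continue
            n = d / dist
            J = err / (dt * w)      # impulse cancelling the linearized error
            v[a] += J * inv_m[a] * n
            v[b] -= J * inv_m[b] * n
        if max_err < tol:           # all constraints met within tolerance
            break
    # Positions follow from the corrected velocities.
    return x + dt * v, v
```

For instance, a two-link pendulum hanging from a fixed anchor can be advanced with `x, v = impulse_step(x, v, np.array([0.0, 1.0, 1.0]), [(0, 1, 1.0), (1, 2, 1.0)], 0.01)`. Because the correction acts on the predicted end-of-step positions, each step restores the rod lengths instead of letting the error accumulate.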

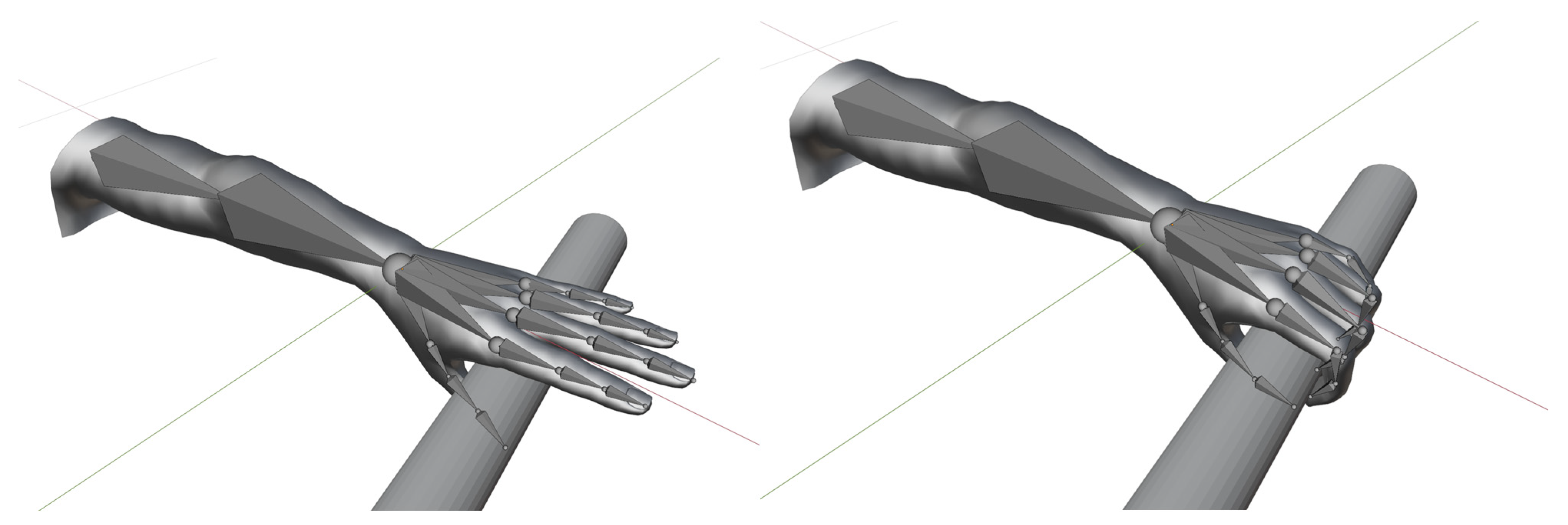

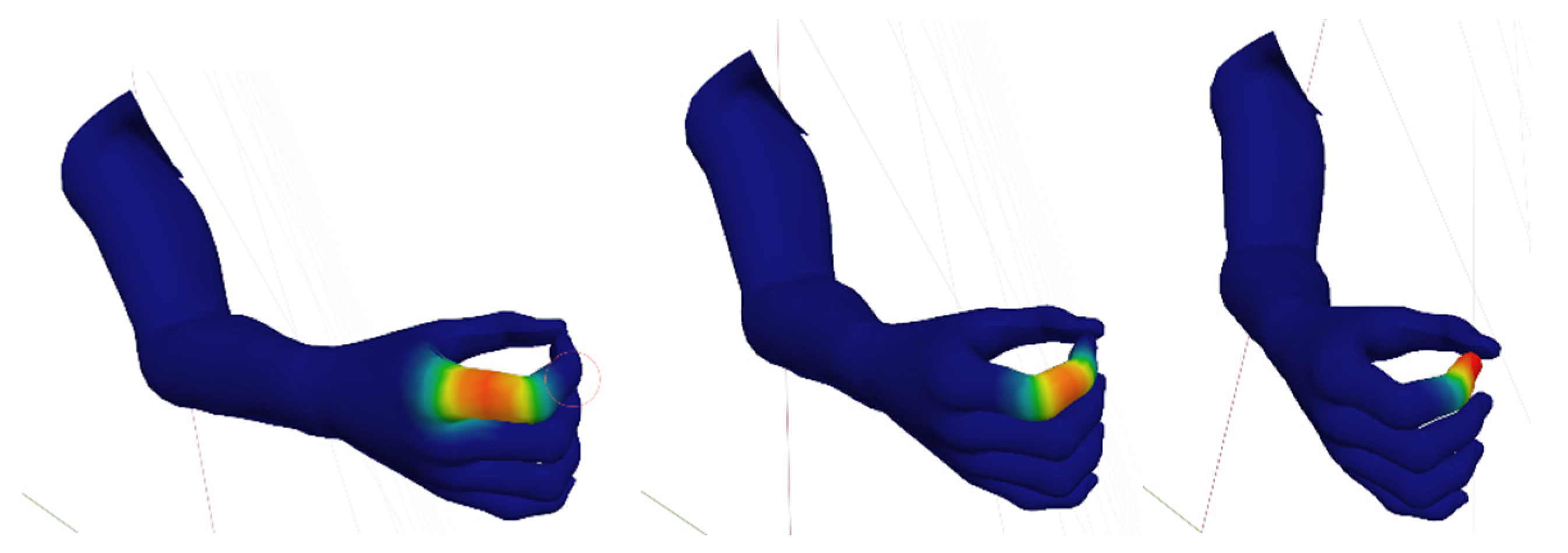

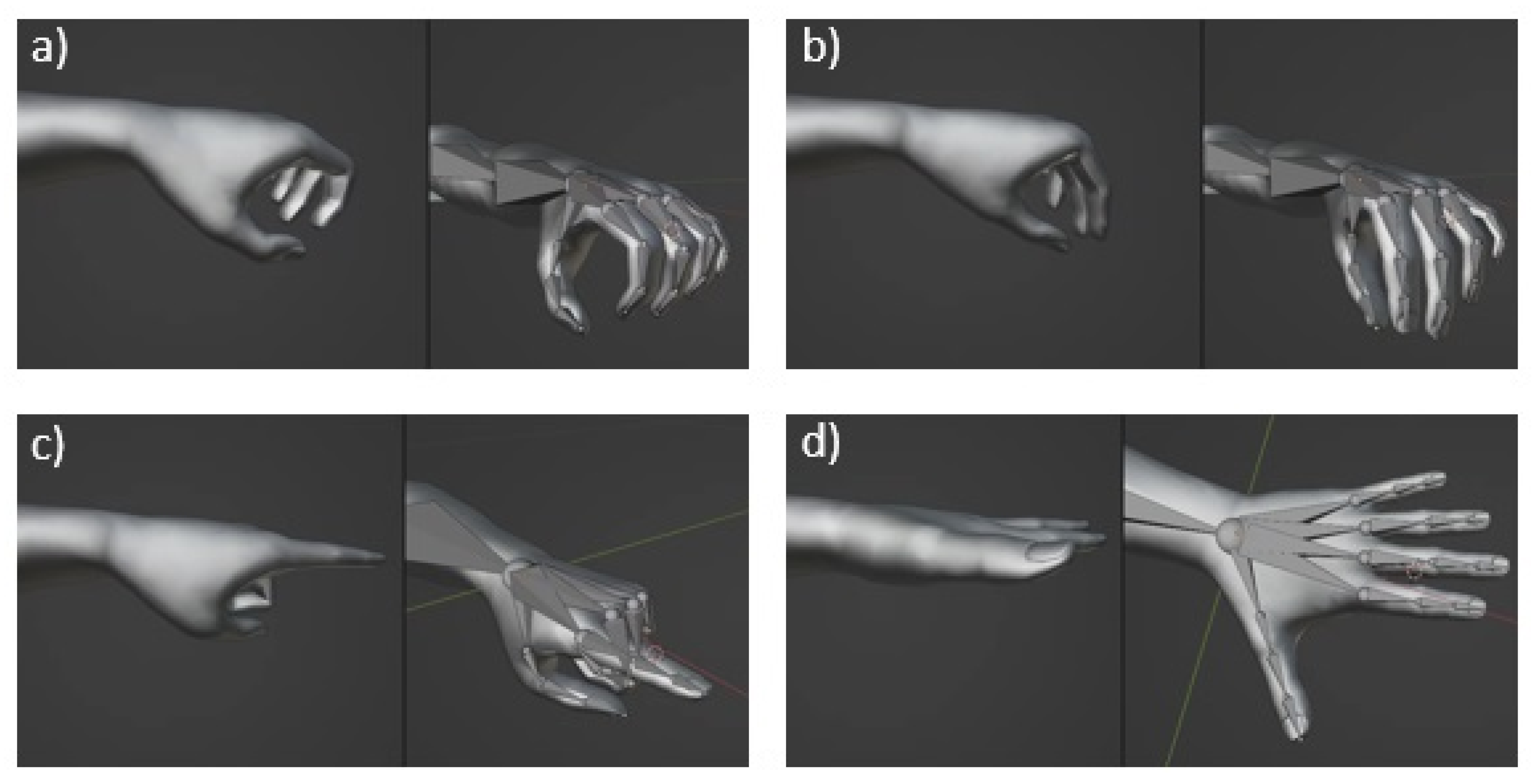

3.2. Arm and Hand Mesh Skinning

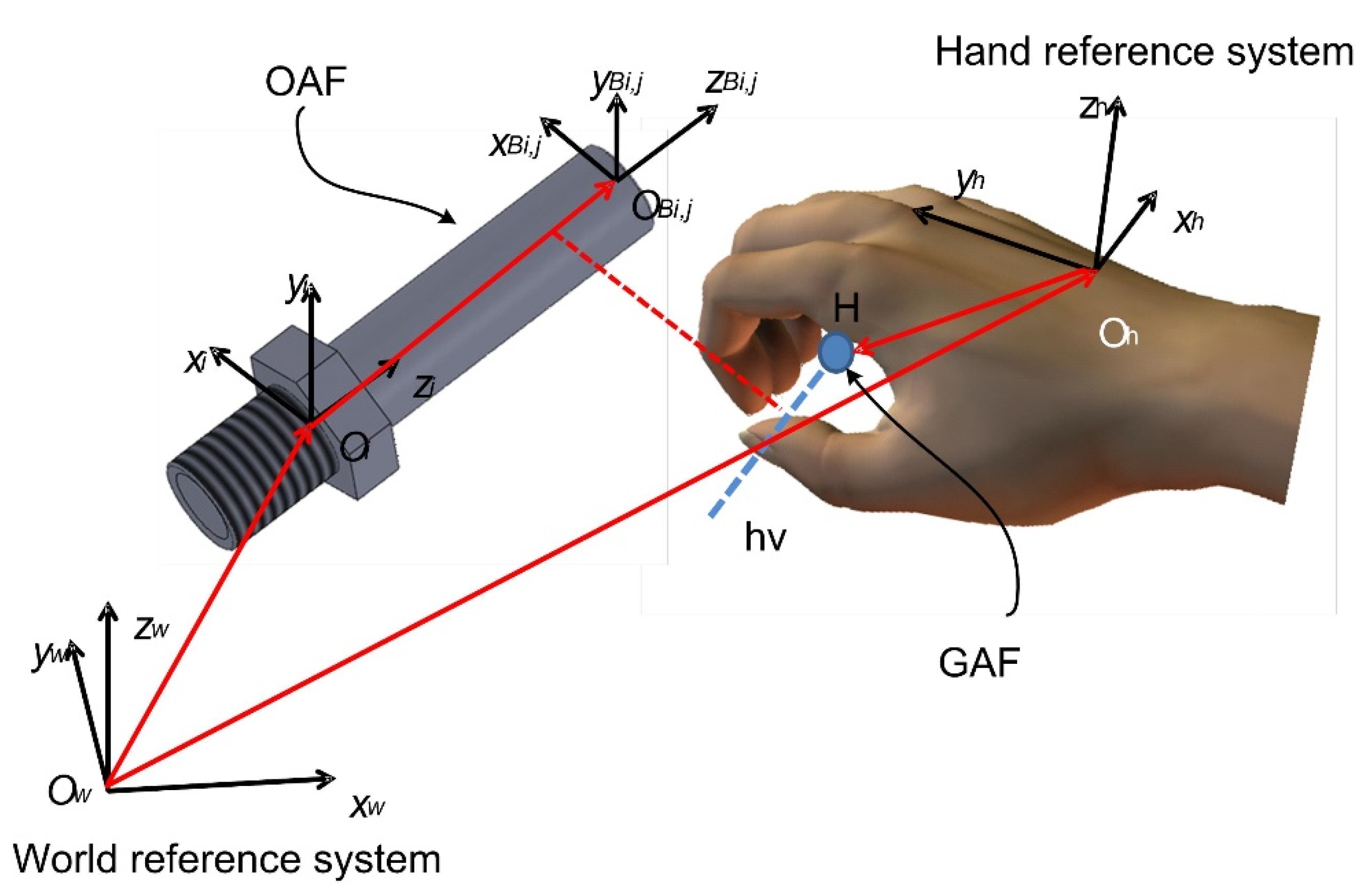

3.3. Implementation of Stable Grasping Actions

- when the user expresses the intention to grasp by means of a specific pose, the corresponding GAF is activated;

- the relative position and attitude of the activated GAF with respect to all the OAFs of the objects in the scene are checked;

- if the check produces a positive match (GAF and OAF are close and aligned), the grasp is confirmed, a constraint between the two reference frames is enforced, and the vector of constraint equations is updated accordingly;

- if the check produces a negative match (GAF and OAF are far apart and/or misaligned), the grasp is cancelled and the integration proceeds without modifying the equations.
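The matching logic above can be sketched in Python, representing each frame as a position vector and a 3×3 rotation matrix. This is a hedged illustration, not the authors' code: the 3 cm distance and 20° angle thresholds, the choice of the closest OAF when several qualify, the function names and the `("rigid", "GAF", i)` constraint record are all hypothetical. The text only states that a constraint between the two reference systems is enforced on a positive match; a rigid (fixed) constraint is assumed here.

```python
import numpy as np

def rotation_angle(R_rel):
    """Angle (rad) of the rotation described by a 3x3 rotation matrix."""
    c = (np.trace(R_rel) - 1.0) / 2.0
    return float(np.arccos(np.clip(c, -1.0, 1.0)))

def find_grasp(gaf, oafs, max_dist=0.03, max_angle=np.radians(20.0)):
    """Index of the closest OAF that is near and aligned with the active GAF,
    or None when the check is negative. Frames are (position, rotation) pairs.
    Thresholds are hypothetical."""
    p_g, R_g = gaf
    best, best_d = None, np.inf
    for i, (p_o, R_o) in enumerate(oafs):
        d = float(np.linalg.norm(p_o - p_g))
        aligned = rotation_angle(R_g.T @ R_o) <= max_angle
        if d <= max_dist and aligned and d < best_d:
            best, best_d = i, d
    return best

def grasp_step(gaf, oafs, constraints, **thresholds):
    """Positive match: append an assumed rigid GAF-OAF constraint
    (6 scalar equations). Negative match: leave the list untouched."""
    i = find_grasp(gaf, oafs, **thresholds)
    if i is not None:
        constraints.append(("rigid", "GAF", i))
    return i
```

Comparing orientations through the angle of the relative rotation $R_g^{T} R_o$ gives a single scalar alignment measure that does not depend on how the frames are parameterized.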

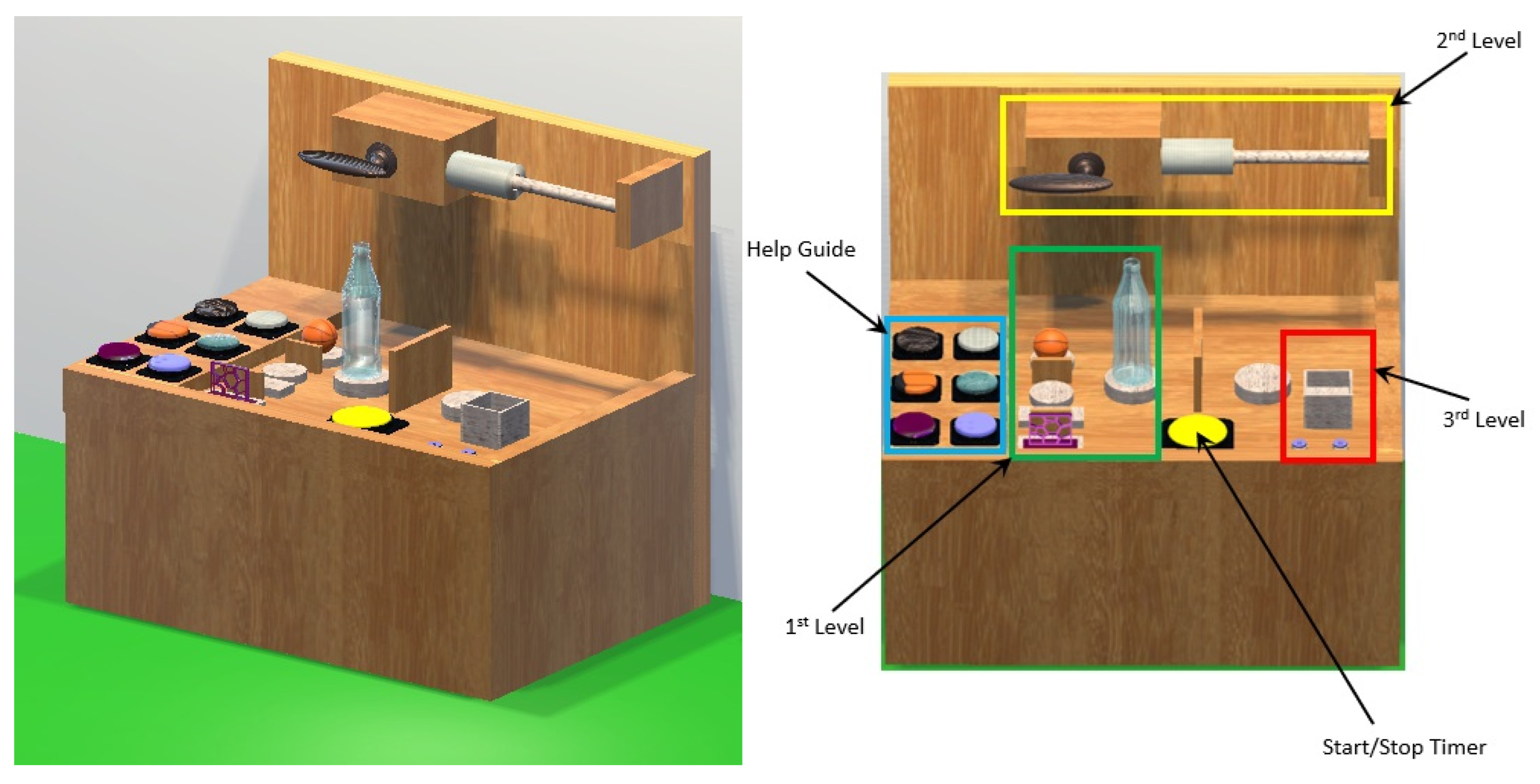

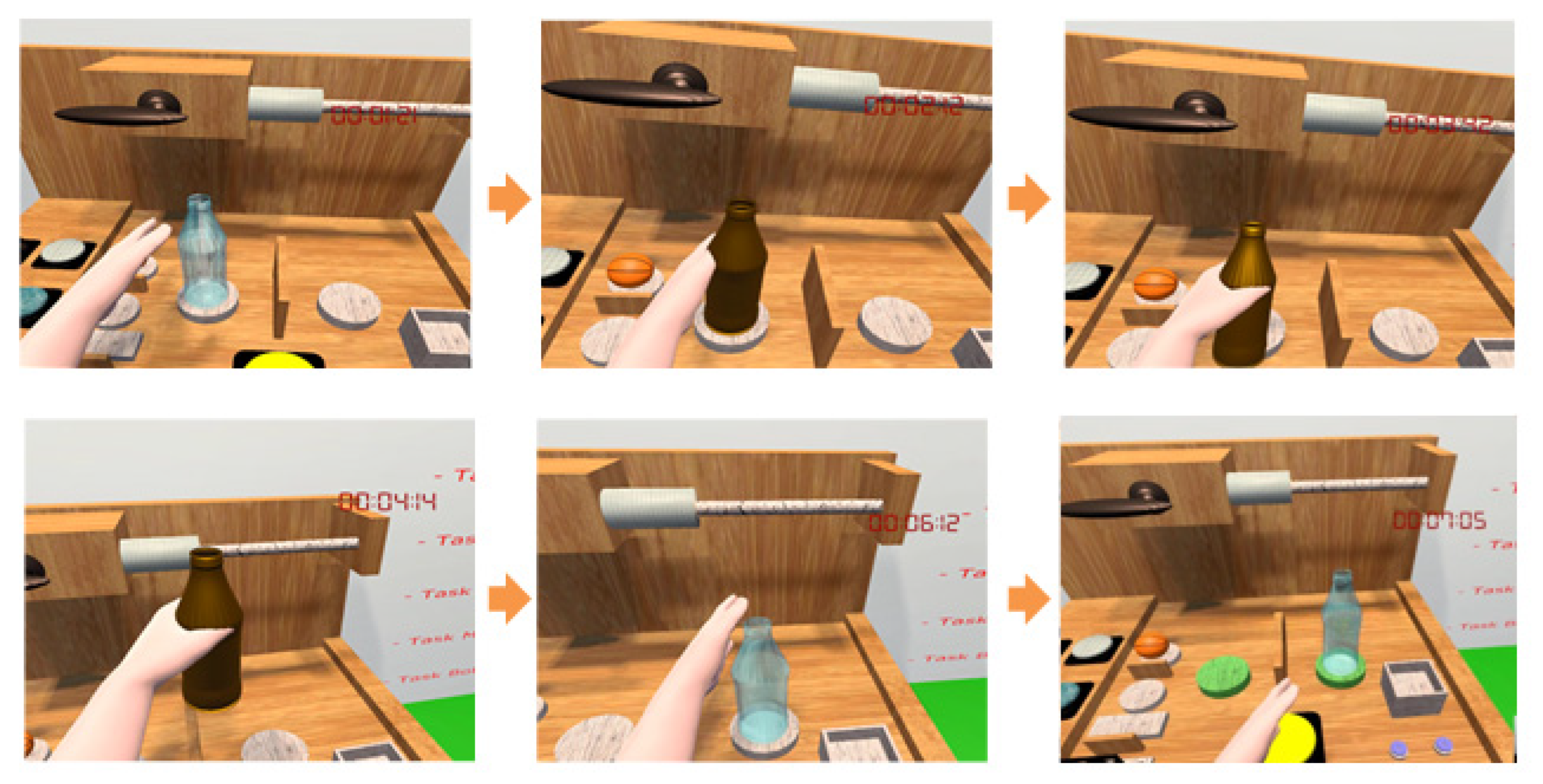

4. Training Tasks

- Updating the meshed virtual arm according to the user's movements and to the classification outputs obtained from myoelectric signal data, as for the actual prosthesis;

- Implementing a series of grasping and manipulation exercises to improve dexterity, based on the Southampton Hand Assessment Procedure (SHAP) [38], through a virtual replica of its tasks (named the VR-SHAP test).
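The first task requires turning each classifier output into a hand configuration for the virtual arm. A minimal sketch of one way to do this follows, assuming the classifier emits one discrete gesture label per frame. The labels in `POSES`, the target angles, the joint ordering (thumb and index first) and the 180°/s rate limit are all hypothetical. In this sketch, the resulting angles would serve as targets for the relative-rotation motion constraints between adjacent phalanges described in Section 3.1.

```python
import numpy as np

# Hypothetical mapping from classifier labels to target flexion angles (rad)
# for the 15 inter-phalanx joints (5 fingers x 3 joints, thumb and index first).
POSES = {
    "rest":  np.zeros(15),
    "power": np.full(15, np.radians(70.0)),
    "pinch": np.concatenate([np.full(6, np.radians(45.0)), np.zeros(9)]),
}

def drive_hand(angles, label, dt, max_rate=np.radians(180.0)):
    """Move the current joint angles toward the pose of the classified
    gesture, limiting the angular speed (rad/s) like a real actuator."""
    target = POSES.get(label, angles)   # unknown label: hold the current pose
    step = np.clip(target - angles, -max_rate * dt, max_rate * dt)
    return angles + step
```

Rate-limiting the update keeps the virtual hand from jumping between poses when the classifier output changes abruptly, which is closer to how a motorized prosthesis responds.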

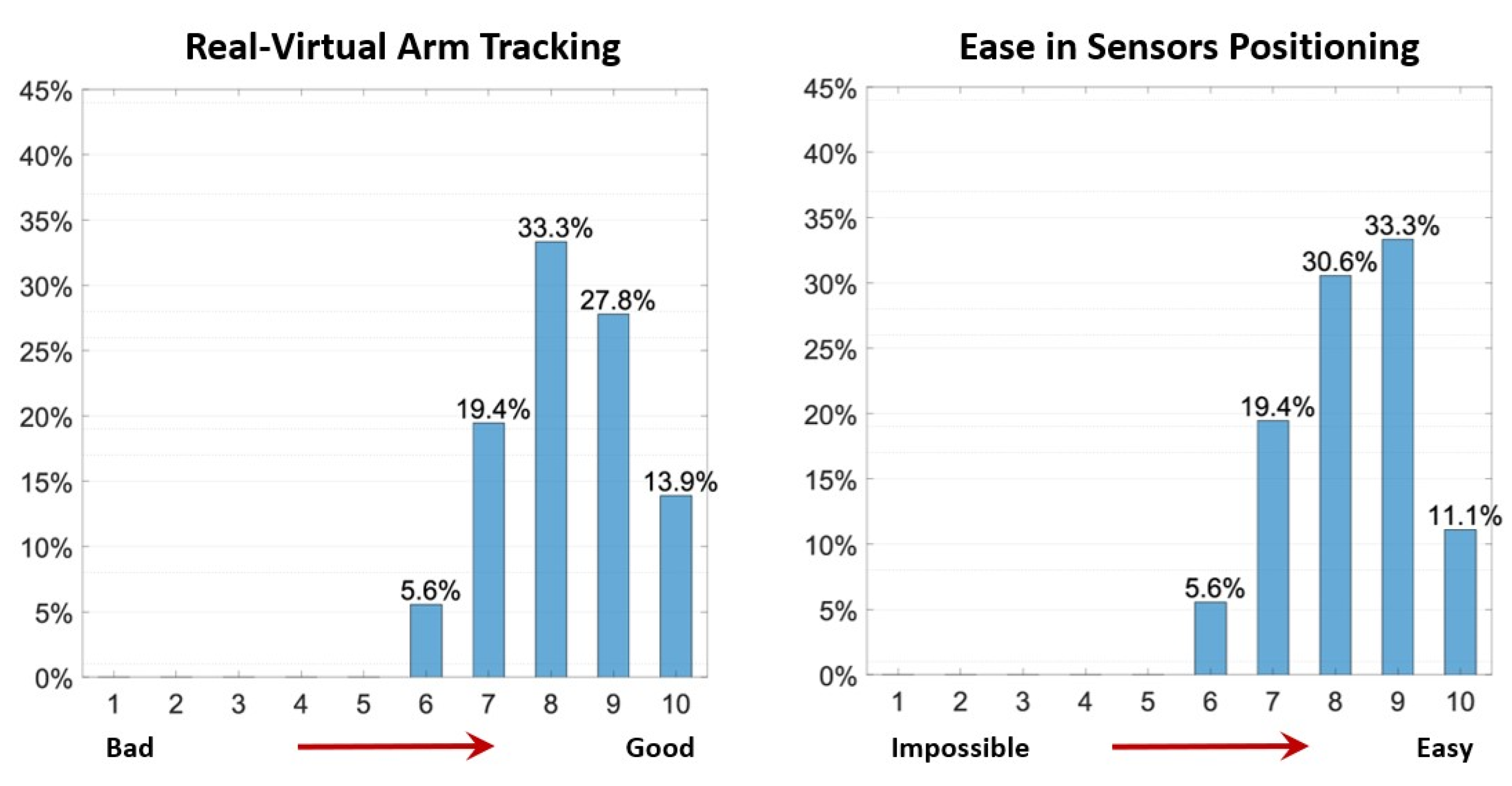

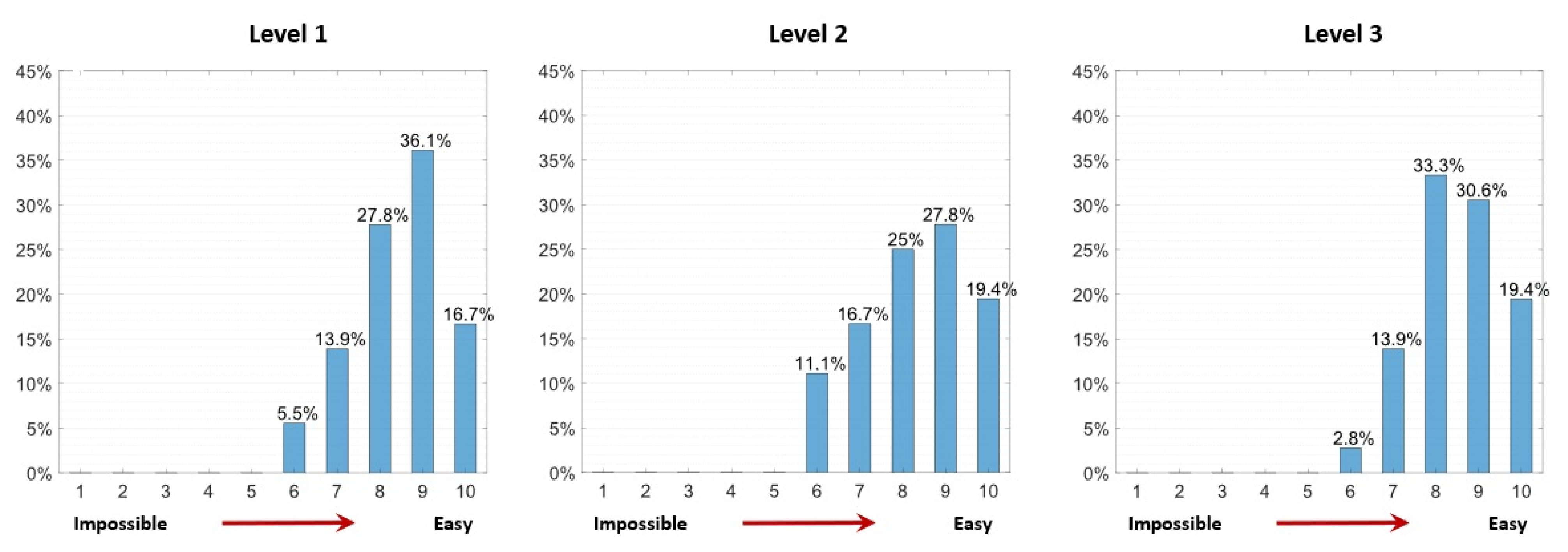

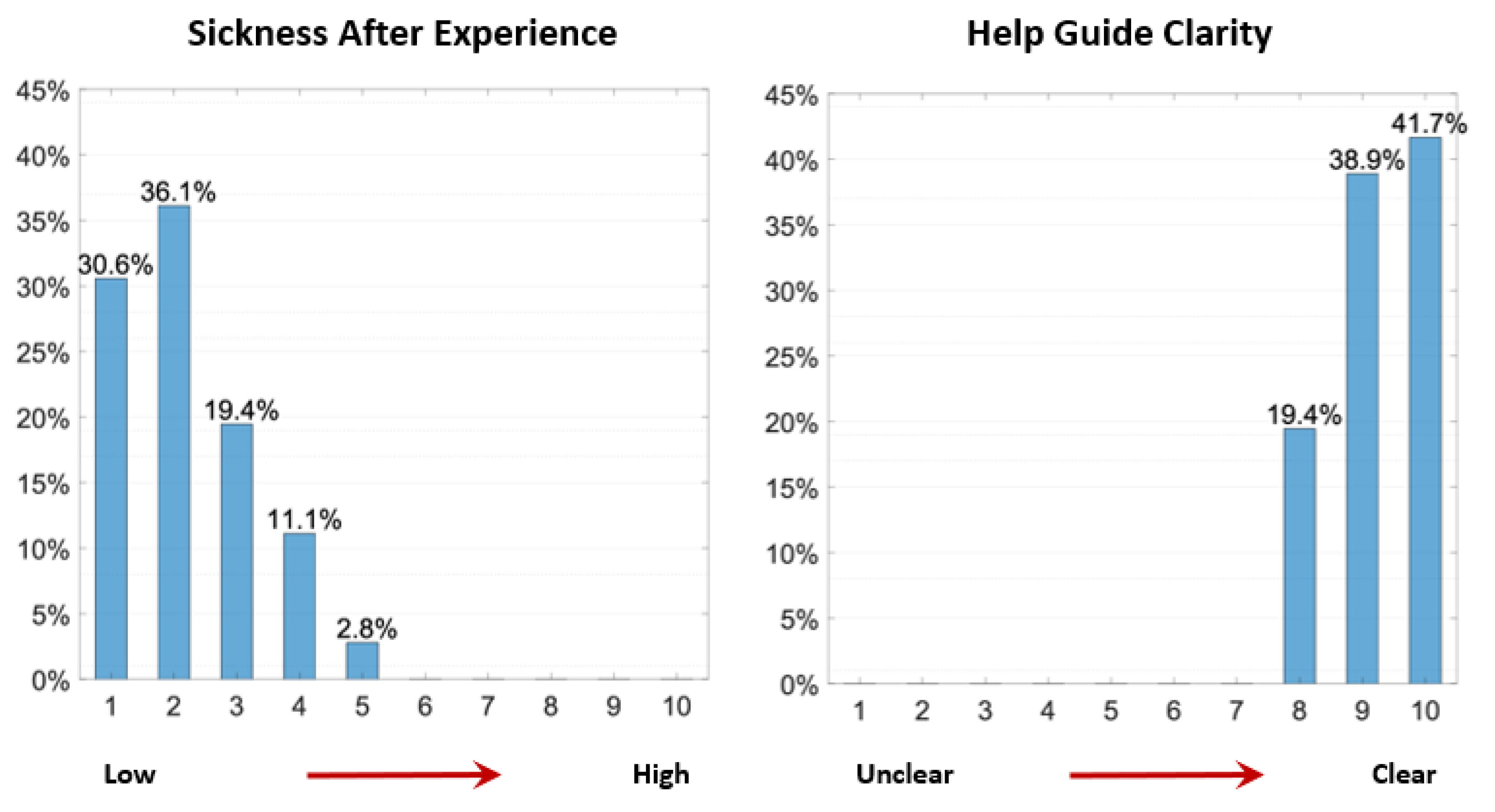

5. Usability Assessment Results

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

References

1. Castellini, C. Upper limb active prosthetic systems-overview. In Wearable Robotics: Systems and Applications; Academic Press: Cambridge, MA, USA, 2019; pp. 365–376.

2. Controzzi, M.; Cipriani, C.; Carrozza, M. Design of artificial hands: A review. In Springer Tracts in Advanced Robotics; Springer: Berlin/Heidelberg, Germany, 2014; pp. 219–246.

3. Vujaklija, I.; Farina, D.; Aszmann, O.C. New developments in prosthetic arm systems. Orthop. Res. Rev. 2016, 8, 31–39.

4. Bouwsema, H.; van der Sluis, C.K.; Bongers, R.M. Learning to control opening and closing a myoelectric hand. Arch. Phys. Med. Rehabil. 2010, 91, 1442–1446.

5. D’Anna, E.; Valle, G.; Mazzoni, A.; Strauss, I.; Iberite, F.; Patton, J.; Petrini, F.M.; Raspopovic, S.; Granata, G.; Di Iorio, R.; et al. A closed-loop hand prosthesis with simultaneous intraneural tactile and position feedback. Sci. Robot. 2019, 4.

6. Pistohl, T.; Cipriani, C.; Jackson, A.; Nazarpour, K. Abstract and proportional myoelectric control for multi-fingered hand prostheses. Ann. Biomed. Eng. 2013, 41, 2687–2698.

7. Pylatiuk, C.; Schulz, S.; Döderlein, L. Results of an Internet survey of myoelectric prosthetic hand users. Prosthet. Orthot. Int. 2007, 31, 362–370.

8. Biddiss, E.; Chau, T. Upper limb prosthesis use and abandonment: A survey of the last 25 years. Prosthet. Orthot. Int. 2007, 31, 236–257.

9. Bicchi, A. Hands for dexterous manipulation and robust grasping: A difficult road toward simplicity. IEEE Trans. Robot. Autom. 2000, 16, 652–662.

10. Bunderson, N.E. Real-Time Control of an Interactive Impulsive Virtual Prosthesis. IEEE Trans. Neural Syst. Rehabil. Eng. 2013, 22, 363–370.

11. Hargrove, L.; Miller, L.; Turner, K.; Kuiken, T. Control within a virtual environment is correlated to functional outcomes when using a physical prosthesis. J. NeuroEngineering Rehabil. 2018, 15, 1–7.

12. Barresi, G.; Marinelli, A.; Caserta, G.; de Zambotti, M.; Tessadori, J.; Angioletti, L.; Boccardo, N.; Freddolini, M.; Mazzanti, D.; Deshpande, N.; et al. Exploring the Embodiment of a Virtual Hand in a Spatially Augmented Respiratory Biofeedback Setting. Front. Neurorobotics 2021, 15.

13. Lambrecht, J.M.; Pulliam, C.L.; Kirsch, R.F. Virtual reality environment for simulating tasks with a myoelectric prosthesis: An assessment and training tool. J. Prosthet. Orthot. 2011, 23, 89–94.

14. Semprini, M.; Boccardo, N.; Lince, A.; Traverso, S.; Lombardi, L.; Succi, A.; Canepa, M.; Squeri, V.; Saglia, J.A.; Ariano, P.; et al. Clinical evaluation of Hannes: Measuring the usability of a novel polyarticulated prosthetic hand. In Tactile Sensing, Skill Learning, and Robotic Dexterous Manipulation; Academic Press: Cambridge, MA, USA, 2022; pp. 205–225.

15. Di Domenico, D.; Marinelli, A.; Boccardo, N.; Semprini, M.; Lombardi, L.; Canepa, M.; Stedman, S.; Della Casa, A.B.; Chiappalone, M.; Gruppioni, E.; et al. Hannes Prosthesis Control Based on Regression Machine Learning Algorithms. In Proceedings of the IEEE International Conference on Intelligent Robots and Systems, Prague, Czech Republic, 27 September–1 October 2021.

16. Weiner, P.; Starke, J.; Hundhausen, F.; Beil, J.; Asfour, T. The KIT Prosthetic Hand: Design and Control. In Proceedings of the IEEE International Conference on Intelligent Robots and Systems, Madrid, Spain, 1–5 October 2018.

17. Cipriani, C.; Controzzi, M.; Carrozza, M. The SmartHand transradial prosthesis. J. NeuroEngineering Rehabil. 2011, 8, 1–14.

18. Zecca, M.; Micera, S.; Carrozza, M.C.; Dario, P. Control of Multifunctional Prosthetic Hands by Processing the Electromyographic Signal. Crit. Rev. Biomed. Eng. 2017, 45, 383–410.

19. Costantini, G.; Todisco, M.; Casali, D.; Carota, M.; Saggio, G.; Bianchi, L.; Quitadamo, L. SVM classification of EEG signals for brain computer interface. In Proceedings of the Neural Nets WIRN09, Vietri sul Mare, Italy, 28–30 May 2009.

20. Riillo, F.; Quitadamo, L.R.; Cavrini, F.; Saggio, G.; Pinto, C.A.; Pastò, N.C.; Gruppioni, E. Evaluating the influence of subject-related variables on EMG-based hand gesture classification. In Proceedings of the IEEE International Symposium on Medical Measurements and Applications (MeMeA), Jeju, Republic of Korea, 14–16 June 2014.

21. Grieves, M. Digital Twin of Physical Systems: Opportunities and Challenges. In Proceedings of the ASME 2002 International Mechanical Engineering Congress and Exposition, New Orleans, LA, USA, 17–22 November 2002.

22. Merienne, F. Human factors consideration in the interaction process with virtual environment. Int. J. Interact. Des. Manuf. 2000, 4, 83–86.

23. Martínez-Gutiérrez, A.; Díez-González, J.; Verde, P.; Perez, H. Convergence of Virtual Reality and Digital Twin technologies to enhance digital operators’ training in industry 4.0. Int. J. Hum. Comput. Stud. 2023, 180, 103136.

24. Kamel, F.A.H.; Basha, M.A. Effects of Virtual Reality and Task-Oriented Training on Hand Function and Activity Performance in Pediatric Hand Burns: A Randomized Controlled Trial. Arch. Phys. Med. Rehabil. 2021, 102, 1059–1066.

25. Lam, J.-F.; Gosselin, L.; Rushton, P.W. Use of Virtual Technology as an Intervention for Wheelchair Skills Training: A Systematic Review. Arch. Phys. Med. Rehabil. 2018, 99, 2313–2341.

26. Rathinam, C.; Mohan, V.; Peirson, J.; Skinner, J.; Nethaji, K.S.; Kuhn, I. Effectiveness of virtual reality in the treatment of hand function in children with cerebral palsy: A systematic review. J. Hand Ther. 2018, 32, 426–434.e1.

27. Kecskeméthy, A.; Weinberg, A. An Improved Elasto-Kinematic Model of the Human Forearm for Biofidelic Medical Diagnosis. Multibody Syst. Dyn. 2005, 14, 1–21.

28. Valentini, P.P. Effects of the dimensional and geometrical tolerances on the kinematic and dynamic performances of the Rzeppa ball joint. Proc. Inst. Mech. Eng. Part D J. Automob. Eng. 2013, 228, 37–49.

29. Mariti, L.; Belfiore, N.P.; Pennestrì, E.; Valentini, P.P. Comparison of Solution Strategies for Multibody Dynamics Equations. Int. J. Numer. Methods Eng. 2011, 88, 637–656.

30. Mirtich, B.V. Impulse-Based Dynamic Simulation of Rigid Body Systems. Ph.D. Thesis, University of California, Berkeley, CA, USA, 1996.

31. Schmitt, A.; Bender, J. Impulse-based dynamic simulation of multibody systems: Numerical comparison with standard methods. In Proceedings of the Automation of Discrete Production Engineering, Sozopol, Bulgaria, 21–23 September 2005; pp. 324–329.

32. Valentini, P.P.; Pezzuti, E. Interactive Multibody Simulation in Augmented Reality. J. Theor. Appl. Mech. 2010, 48, 733–750.

33. Baumgarte, J. Stabilization of constraints and integrals of motion in dynamical systems. Comput. Methods Appl. Mech. Eng. 1972, 1, 1–16.

34. Rumman, N.A.; Fratarcangeli, M. State of the art in skinning techniques for articulated deformable characters. In Proceedings of the Eleventh International Conference on Computer Graphics Theory and Application, GRAPP 2016, Rome, Italy, 27–29 February 2016; Part of the Eleventh Joint Conference On Computer Vision, Imaging and Computer Graphics Theory and Applications, VISIGRAPP 2016. SciTePress: Setubal, Portugal, 2016.

35. Sujar, A.; Casafranca, J.J.; Serrurier, A.; Garcia, M. Real-time animation of human characters’ anatomy. Comput. Graph. 2018, 74, 268–277.

36. de Aguiar, E.; Ukita, N. Representing mesh-based character animations. Comput. Graph. 2014, 38, 10–17.

37. Valentini, P.P. Interactive virtual assembling in augmented reality. Int. J. Interact. Des. Manuf. 2009, 3, 109–119.

38. Light, C.M.L.; Chappell, P.H.; Kyberd, P. Assessment of hand functionality using the Southampton Hand Assessment Procedure. In Proceedings of the 12th World Congress of the International Society for Prosthetics and Orthotics, Vancouver, BC, Canada, 29 July–3 August 2007.

| Task | Average Time [s] | Standard Deviation [s] | Level of Difficulty |
|---|---|---|---|
| Bottle | 8.4 | 2.4 | Level 1 |
| Ball | 7.2 | 3.2 | Level 1 |
| Plate | 6.8 | 2.4 | Level 1 |
| Handle | 6.0 | 4.0 | Level 2 |
| Slider | 19.2 | 4.0 | Level 2 |
| Buttons | 17.6 | 4.0 | Level 3 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Cellupica, A.; Cirelli, M.; Saggio, G.; Gruppioni, E.; Valentini, P.P. An Interactive Digital-Twin Model for Virtual Reality Environments to Train in the Use of a Sensorized Upper-Limb Prosthesis. Algorithms 2024, 17, 35. https://doi.org/10.3390/a17010035
