Abstract

In this paper, a new nonsmooth optimization-based algorithm for solving large-scale regression problems is introduced. The regression problem is modeled as a fully connected feedforward neural network with one hidden layer, piecewise linear activation, and the $L_1$-loss function. A modified version of the limited memory bundle method is applied to minimize this nonsmooth objective. In addition, a novel constructive approach for the automated determination of the proper number of hidden nodes is developed. Finally, large real-world data sets are used to evaluate the proposed algorithm and to compare it with some state-of-the-art neural network algorithms for regression. The results demonstrate the superiority of the proposed algorithm as a predictive tool in most data sets used in the numerical experiments.

1. Introduction

Regression models and methods are extensively utilized for prediction and approximation in various real-world scenarios. When data involve complex relationships between the response and explanatory variables, regression methods designed using neural networks (NNs) have emerged as powerful alternatives to traditional regression methods [1]. Hence, we focus on NNs for regression (NNR): we introduce a new approach for modeling and solving regression problems using fully connected feedforward NNs with the rectified linear unit (ReLU) activation function, the $L_1$-loss function, and $L_1$-regularization. We call this problem the ReLU-NNR problem, and the method for solving it the limited memory bundle NNR (LMBNNR) algorithm.

The ReLU-NNR problem is nonconvex and nonsmooth. Note that ReLU itself is nonsmooth. Thus, even if we used a smooth loss function and regularization, the underlying optimization problem would still be nonsmooth. Conventional global optimization methods, including those based on global random search, become time-consuming as this problem contains a large number of variables. In addition, the authors in [2] showed that it is impossible to give any guarantee that the global minimizer is found by a general global (quasi-)random search algorithm with reasonable accuracy when the dimension is large. On the other hand, local search methods are sensitive to the choice of starting points and, in general, end up at the closest local solutions, which may be significantly different from the global ones. To address the nonconvexity of the ReLU-NNR problem, we propose a constructive approach. More specifically, we construct initial weights by using the solution from the previous iteration. Such an approach allows us to find either global or deep local minimizers for the ReLU-NNR problem. To solve the underlying nonsmooth optimization problems, we apply a slightly modified version of the limited memory bundle method (LMBM) developed by Karmitsa (née Haarala) [3,4]. We use this method since it is one of the few algorithms capable of handling large dimensions, nonconvexity, and nonsmoothness all at once. In addition, the LMBM has already proven itself in solving machine learning problems such as clustering [5], cardinality and clusterwise linear regression [6,7], and missing value imputation [8].

We consider NNs with only one hidden layer, but the number of hidden nodes is determined automatically using a novel constructive approach and an automated stopping procedure (ASP). More precisely, the number of nodes is determined incrementally, starting from one node. ASP relies on an intelligent selection of initial weights and on an iteratively self-updated regularization parameter. It is applied at each iteration of the constructive algorithm to stop training when there is no further improvement in the model. Thus, there are no tuneable hyperparameters in LMBNNR.

To summarize, the LMBNNR algorithm has some remarkable features, including:

- It takes full advantage of nonsmooth models and nonsmooth optimization in solving NNR problems (no need for smoothing etc.);

- It is hyperparameter-free due to the automated determination of the proper number of nodes;

- It is applicable to large-scale regression problems;

- It is an efficient and accurate predictive tool.

The structure of the paper is as follows. An overview of the related work is given in Section 2, while Section 3 provides the theoretical background on nonsmooth optimization and NNR. The problem statement—the ReLU-NNR problem—is given in Section 4, and in Section 5, the LMBNNR algorithm together with ASP is introduced. In Section 6, we present the performance of the LMBNNR algorithm and compare it with some state-of-the-art NNR algorithms. Section 7 concludes the paper.

2. Related Work

Optimization is at the core of machine learning. Although it is well known that many machine learning problems lead to nonsmooth optimization problems (e.g., hinge loss, lasso, and ReLU), nonsmooth optimization methods are scarcely used in the machine learning community. The common practice is to minimize nonsmooth functions either by ignoring the nonsmoothness and employing a popular and simple smooth solver (such as the Newton method; see, e.g., [9]) or by applying smoothing techniques (see, e.g., [10,11]). However, there are some successful exceptions. For example, abs-linear forms of prediction tasks are solved using a successive piecewise linearization method in [12,13], a primal-dual prox method for problems in which both the loss function and the regularizer are nonsmooth is developed in [14], various nonsmooth optimization methods are applied to solve clustering, classification, and regression problems in [5,6,15,16,17], and finally, nonsmooth optimization approaches are combined with support vector machines in [18,19,20].

NNs are among the most popular and powerful machine learning techniques. Every NN has two main components: an activation function and an error (loss) function. The simplest activation function is linear, and NNs with this function are easy to train. Nevertheless, they cannot learn complex mapping functions, which results in poor performance in the testing phase. On the other hand, smooth nonlinear activation functions, such as the sigmoid and hyperbolic tangent functions, may lead to highly complex nonconvex loss functions and require numerous training iterations as well as many hidden nodes [21].

In [22], it is argued theoretically that NNs with nonsmooth activation functions demonstrate high performance. Among these functions, the piecewise linear ReLU, $\max\{0, t\}$, has lately become the default activation function for many types of NNs. This function is nonsmooth, but it has a simple mathematical form. ReLU provides more sensitivity to the summed activation of the node than the traditional smooth sigmoid and hyperbolic tangent functions, and it avoids easy saturation. Due to the computational advantages of its simple structure and, thus, its strong training ability, ReLU is preferable for learning complex relationships in NNs. In practice, instead of minimizing the resulting nonsmooth loss function directly, it is often replaced with a smoothed surrogate loss function (see, e.g., [11]). However, algorithms based on smoothing techniques involve the choice of smoothing parameters. The number of such parameters becomes large in large data sets, and their choice becomes problematic, which affects the accuracy of the algorithms.

The stochastic (sub)gradient descent method (SGD) is another commonly used method for minimizing the loss function in NN algorithms. Although this method has been used intuitively for years to solve machine learning problems with nonsmooth activation and loss functions, its convergence for such functions has been proved only very recently [23]. SGD is efficient since its per-iteration cost does not depend on the size of the data, but it is not accurate as an optimization method and may require a large number of function and subgradient evaluations. Moreover, SGD may easily diverge if the learning rate (step size) is too large. These drawbacks stem from the fact that SGD is based on the subgradient method for convex problems, while NN training problems are highly nonconvex. For more discussion on NNs, we refer to [24].

The most commonly used NNR algorithms are the feedforward backpropagation network (FFBPN) [25,26,27,28] and the radial-basis network (RBN) [29,30,31]. FFBPN and most of its variants converge to only locally optimal solutions [26,27], and they only work under the precondition that all the functions involved in NNR are differentiable. RBN and its modifications can be trained without local minima issues [32], but they involve a heuristic procedure to select parameters and hyperparameters.

The choice of the hyperparameters in NNs is a challenging problem. These parameters define the structure of NNs, and their optimal choice leads to an accurate model. Generally, the hyperparameters can be determined either by an algorithm or manually by the user. Tuning the hyperparameters manually is a tedious and time-consuming process, and thus, several algorithms with varying levels of automaticity have been proposed for this purpose (see, e.g., [33,34,35,36,37,38]). Nevertheless, there is no guarantee that in these algorithms the selected number of hidden units (usually the number of nodes) is optimal, and the question of a good hyperparameter tuning procedure remains open. Therefore, it is imperative to develop algorithms that can minimize nonsmooth loss functions as they are (i.e., without smoothing) and automate the calibration of the hyperparameters.

3. Theoretical Background and Notations

In this section, we provide some theoretical background and notations that are used throughout the paper.

3.1. Nonsmooth Optimization

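Nonsmooth optimization refers to the problem of minimizing (or maximizing) functions that are not continuously differentiable [39]. We denote the $d$-dimensional Euclidean space by $\mathbb{R}^d$ and the inner product by $\langle \mathbf{x}, \mathbf{y} \rangle = \sum_{i=1}^{d} x_i y_i$, where $\mathbf{x}, \mathbf{y} \in \mathbb{R}^d$. The associated $L_1$- and $L_2$-norms are $\|\mathbf{x}\|_1 = \sum_{i=1}^{d} |x_i|$ and $\|\mathbf{x}\|_2 = \langle \mathbf{x}, \mathbf{x} \rangle^{1/2}$, respectively.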

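A function $f: \mathbb{R}^d \to \mathbb{R}$ is called locally Lipschitz continuous on $\mathbb{R}^d$ if for any bounded subset $X \subset \mathbb{R}^d$ there exists $L > 0$ such that

$$|f(\mathbf{x}) - f(\mathbf{y})| \le L \|\mathbf{x} - \mathbf{y}\|_2 \quad \text{for all } \mathbf{x}, \mathbf{y} \in X.$$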

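The Clarke subdifferential of a locally Lipschitz continuous function $f$ at a point $\mathbf{x} \in \mathbb{R}^d$ is given by [39,40]

$$\partial f(\mathbf{x}) = \operatorname{conv}\left\{ \lim_{i \to \infty} \nabla f(\mathbf{x}_i) : \mathbf{x}_i \to \mathbf{x} \text{ and } \nabla f(\mathbf{x}_i) \text{ exists} \right\},$$

where “conv” denotes the convex hull of a set. A vector $\boldsymbol{\xi} \in \partial f(\mathbf{x})$ is called a subgradient. The point $\mathbf{x}^* \in \mathbb{R}^d$ is stationary if $\mathbf{0} \in \partial f(\mathbf{x}^*)$. Note that stationarity is a necessary condition for local optimality [39].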
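To make the definition concrete, the Python sketch below approximates the Clarke subdifferential of a one-dimensional locally Lipschitz function by collecting finite-difference gradients at points near $x$ (where the function is differentiable) and taking their convex hull, which in one dimension is simply an interval. The function names, the sampling radius, and the step size are illustrative assumptions; this is a numerical illustration of the definition, not a procedure used in the paper.

```python
from typing import Callable, Tuple


def clarke_interval_1d(f: Callable[[float], float], x: float,
                       radius: float = 1e-6, samples: int = 20) -> Tuple[float, float]:
    """Approximate the Clarke subdifferential of a 1-D locally Lipschitz f at x.

    Gradients are estimated by central differences at points near x (but never
    at x itself); their convex hull in 1-D is the interval [min, max].
    """
    h = radius / (1000.0 * samples)  # finite-difference step, smaller than the closest sample offset
    grads = []
    for j in range(1, samples + 1):
        for direction in (-1.0, 1.0):
            y = x + direction * radius * j / samples  # sample point near x
            grads.append((f(y + h) - f(y - h)) / (2.0 * h))
    return min(grads), max(grads)


def is_stationary(f: Callable[[float], float], x: float, tol: float = 1e-6) -> bool:
    """Check the stationarity condition: 0 lies in the (approximate) Clarke subdifferential."""
    lo, hi = clarke_interval_1d(f, x)
    return lo - tol <= 0.0 <= hi + tol


def relu(t: float) -> float:
    return max(0.0, t)


print(clarke_interval_1d(abs, 0.0))   # approximately (-1.0, 1.0)
print(clarke_interval_1d(relu, 0.0))  # approximately (0.0, 1.0)
print(is_stationary(abs, 0.0), is_stationary(lambda t: abs(t - 1.0), 0.0))  # True False
```

Both $|t|$ and $\max\{0, t\}$ are stationary at $t = 0$ because $0$ lies in their subdifferential intervals $[-1, 1]$ and $[0, 1]$, whereas $|t - 1|$ has subdifferential $\{-1\}$ at $t = 0$.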

3.2. Neural Networks for Regression

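Let $A$ be a given data set with $n$ samples: $A = \{(\mathbf{x}_i, y_i) : \mathbf{x}_i \in \mathbb{R}^m,\ y_i \in \mathbb{R},\ i = 1, \ldots, n\}$, where $\mathbf{x}_i$ are the values of $m$ input features and $y_i$ are their outputs. In regression analysis, the aim is to find a function $\varphi: \mathbb{R}^m \to \mathbb{R}$ such that $\varphi(\mathbf{x}_i)$ is a “good approximation” of $y_i$. In other words, the following regression error is minimized:

$$\frac{1}{n} \sum_{i=1}^{n} \left| \varphi(\mathbf{x}_i) - y_i \right|^p, \quad p \ge 1. \qquad (1)$$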

Applying NNs to the regression problem can lead to significantly higher predictive power than traditional regression. In addition, NNs can model more complex scenarios simply by increasing the number of nodes. This is supported by the universal approximation theorem, which states that a feedforward network with a single hidden layer and sufficiently many hidden nodes can approximate any continuous function on a compact set to arbitrary accuracy [41].

In practice, an NN takes several input features and, as part of the learning process, multiplies them by weights, passes the results through an activation function, and evaluates a loss function that closely resembles the regression error (1). The loss function is used to estimate the error of the current model so that the weights can be updated to reduce this error in the next evaluation. The most commonly used loss function for NNR problems is the mean squared error (MSE or $L_2$-norm). However, there are at least two valid reasons to choose the mean absolute error (MAE or $L_1$-norm) over the $L_2$-norm: first, the $L_1$-norm makes the loss function with the ReLU activation function simpler (both are piecewise linear), and second, regression models with the $L_1$-norm are more robust to outliers (see, e.g., [42]). Once the NN is trained, the optimal weights of the model (the regression coefficients) that fit the data have been found.
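The robustness argument can be illustrated with the simplest possible regression model, a constant prediction $c$. The $L_2$ loss is minimized by the sample mean and the $L_1$ loss by the sample median, so a single outlier shifts the $L_2$ fit much more than the $L_1$ fit. The Python sketch below checks this numerically by grid search; the data and the helper name `best_constant` are illustrative assumptions, not taken from the experiments.

```python
from statistics import mean, median


def best_constant(y, loss, step=0.01):
    """Grid-search the constant prediction c that minimizes sum(loss(c - y_i))."""
    lo, hi = min(y), max(y)
    n_steps = int(round((hi - lo) / step))
    grid = [lo + k * step for k in range(n_steps + 1)]
    return min(grid, key=lambda c: sum(loss(c - yi) for yi in y))


y = [1.0, 2.0, 3.0, 4.0, 100.0]               # the last value is an outlier
c_l1 = best_constant(y, abs)                  # L1 (MAE-type) fit
c_l2 = best_constant(y, lambda r: r * r)      # L2 (MSE-type) fit
print(round(c_l1, 2), median(y))  # 3.0 3.0
print(round(c_l2, 2), mean(y))    # 22.0 22.0
```

Without the outlier both fits would equal 2.5; the outlier moves the $L_2$ fit to 22 but the $L_1$ fit only to 3.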

4. Nonsmooth Optimization Model of ReLU-NNR

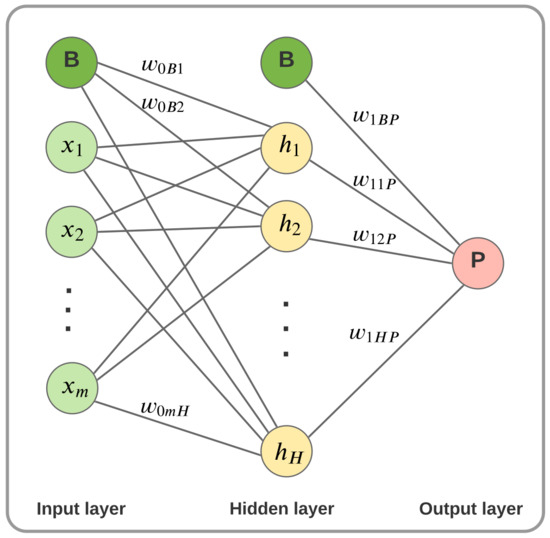

In this section, we formulate the nonsmooth optimization model for the ReLU-NNR problem, but first, we introduce some notation. Let us denote the number of hidden nodes in the NN by $H$, let $\mathbf{w}$ be the weight connection vector, and let $w_s$ be its element in the index place $s$, where $s = (a, b, c)$ is an index triplet (see Figure 1). Then $w_{(a,b,c)}$ denotes the weight that connects the $a$-th layer’s $b$-th node to the $(a+1)$-th layer’s $c$-th node. In addition, denote by $P$ the output index value for the weights connecting the hidden layer’s nodes to the output node of the NN. In contrast to the hidden nodes, no activation function is applied to the output node. Finally, let $B$ denote the middle index value for the weights connecting a layer’s bias term to a node in the next layer. The bias terms with this index can be thought of as the last node in each layer. The weight connection vector $\mathbf{w}$ for $m$ input features and $H$ hidden nodes has $(m+2)H + 1$ components. For the sake of clarity, we organize $\mathbf{w}$ in an “increasing order” as

$$\mathbf{w} = \big(w_{(1,1,1)}, \ldots, w_{(1,m,1)}, w_{(1,B,1)}, \ldots, w_{(1,1,H)}, \ldots, w_{(1,m,H)}, w_{(1,B,H)}, w_{(2,1,P)}, \ldots, w_{(2,H,P)}, w_{(2,B,P)}\big).$$

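We define the output of the NN for the $i$-th sample as

$$\hat{y}_i(\mathbf{w}) = \sum_{c=1}^{H} w_{(2,c,P)} \max\Big\{0,\ \sum_{k=1}^{m} w_{(1,k,c)}\, x_{ik} + w_{(1,B,c)}\Big\} + w_{(2,B,P)},$$

where $x_{ik}$ denotes the $k$-th coordinate of the $i$-th sample. The loss function using the $L_1$-norm (cf. regression error (1) with $p = 1$) is

$$\ell(\mathbf{w}) = \frac{1}{n} \sum_{i=1}^{n} \big| \hat{y}_i(\mathbf{w}) - y_i \big|. \qquad (2)$$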

Figure 1.

A simple NN model with one output, one hidden layer and H hidden nodes.

To avoid overfitting in the learning process, we add an extra term to the loss function $\ell$ in (2). In most cases, $L_1$-regularization is preferable, as it reduces the weight values of less important input features. In addition, it is robust and insensitive to outliers. Thus, we rewrite (2) as

$$f(\mathbf{w}) = \ell(\mathbf{w}) + \lambda \|\mathbf{w}\|_1,$$

where $\lambda > 0$ is an iteratively self-updated regularization parameter (to be described later). Furthermore, the nonsmooth, nonconvex optimization formulation for ReLU-NNR can be expressed as

$$\min_{\mathbf{w} \in \mathbb{R}^{(m+2)H+1}} f(\mathbf{w}). \qquad (3)$$

5. LMBNNR Algorithm

To solve the ReLU-NNR problem (3), we now introduce the LMBNNR algorithm. In addition, we recall the LMBM in the form used here and briefly discuss its convergence. A more detailed description of the algorithm can be found in the technical report [43].
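Bundle methods such as the LMBM only need the value of the objective and one subgradient at each trial point. The Python sketch below computes both for the objective of problem (3), that is, the mean absolute error of a one-hidden-layer ReLU network with a linear output node plus the $L_1$ penalty $\lambda\|\mathbf{w}\|_1$. It assumes that the penalty is applied to all $(m+2)H+1$ weights and stores the weights layer by layer rather than as one flat vector; all function names are hypothetical. At kinks it uses the conventions $\operatorname{sign}(0) = 0$ and $\mathrm{ReLU}'(0) = 0$, so at nondifferentiable points the returned vector is a backpropagation-style generalized gradient that is not guaranteed to be an exact Clarke subgradient of the composite function.

```python
from typing import List, Tuple

Matrix = List[List[float]]
Vector = List[float]


def num_weights(m: int, H: int) -> int:
    """m*H input weights + H hidden biases + H output weights + 1 output bias."""
    return (m + 2) * H + 1


def sgn(t: float) -> float:
    """Sign function with the convention sgn(0) = 0."""
    return float((t > 0.0) - (t < 0.0))


def objective_and_subgradient(W1: Matrix, b1: Vector, v: Vector, b2: float,
                              X: Matrix, y: Vector,
                              lam: float) -> Tuple[float, Tuple[Matrix, Vector, Vector, float]]:
    """Return f(w) = mean |pred_i - y_i| + lam * ||w||_1 and a generalized gradient.

    W1[c][k]: weight from input k to hidden node c;  b1[c]: bias of hidden node c;
    v[c]: weight from hidden node c to the output;   b2: output bias.
    """
    n, H, m = len(X), len(v), len(X[0])
    gW1 = [[0.0] * m for _ in range(H)]
    gb1, gv, gb2, loss = [0.0] * H, [0.0] * H, 0.0, 0.0
    for xi, yi in zip(X, y):
        z = [sum(W1[c][k] * xi[k] for k in range(m)) + b1[c] for c in range(H)]
        h = [max(0.0, zc) for zc in z]                      # ReLU activations
        r = sum(v[c] * h[c] for c in range(H)) + b2 - yi    # residual (linear output node)
        loss += abs(r) / n
        s = sgn(r) / n                                      # derivative of |r|/n, with sgn(0) = 0
        gb2 += s
        for c in range(H):
            gv[c] += s * h[c]
            if z[c] > 0.0:                                  # ReLU'(z) = 1 for z > 0, taken as 0 at z = 0
                gb1[c] += s * v[c]
                for k in range(m):
                    gW1[c][k] += s * v[c] * xi[k]
    # Assumed L1 penalty on all weights and its sign-based subgradient.
    reg = lam * (sum(abs(w) for row in W1 for w in row)
                 + sum(abs(w) for w in b1) + sum(abs(w) for w in v) + abs(b2))
    gW1 = [[g + lam * sgn(w) for g, w in zip(grow, wrow)] for grow, wrow in zip(gW1, W1)]
    gb1 = [g + lam * sgn(w) for g, w in zip(gb1, b1)]
    gv = [g + lam * sgn(w) for g, w in zip(gv, v)]
    gb2 += lam * sgn(b2)
    return loss + reg, (gW1, gb1, gv, gb2)


# Tiny usage example: one input feature, one hidden node.
f, (gW1, gb1, gv, gb2) = objective_and_subgradient(
    [[1.0]], [0.0], [1.0], 0.0, X=[[1.0], [2.0]], y=[1.0, 3.0], lam=0.1)
print(num_weights(1, 1), round(f, 6))  # 4 0.7
```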

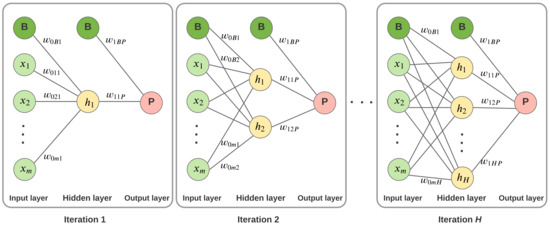

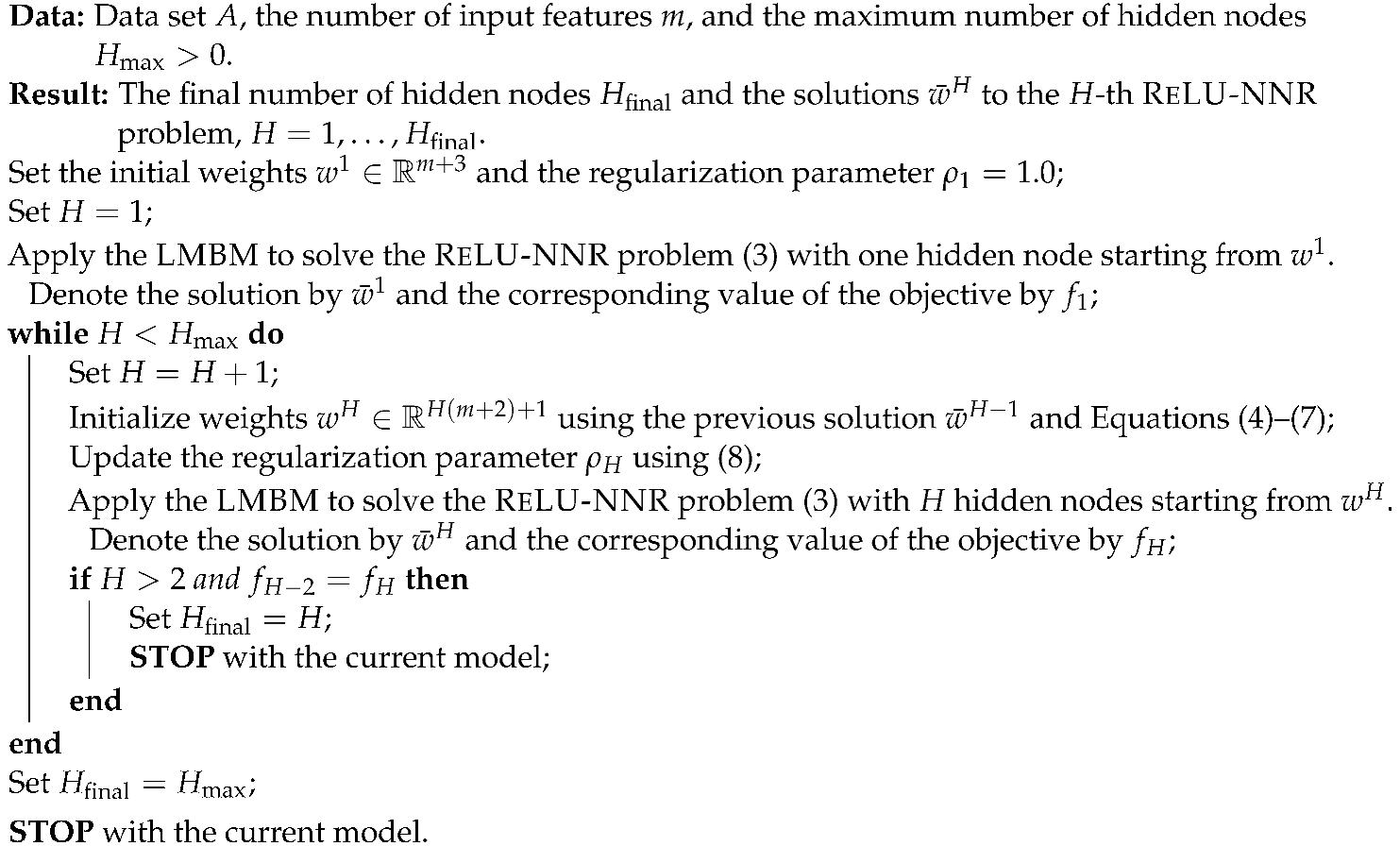

The LMBNNR algorithm computes hidden nodes incrementally and uses ASP to detect the proper number of these nodes. More precisely, it starts with one node in the hidden layer and adds a new node to that layer at each iteration of the algorithm. It is worth noting that each time a node is added, new connection weights appear. Starting from the initial weights, the LMBNNR algorithm (Algorithm 1) applies the LMBM (Algorithm 2) to solve the underlying ReLU-NNR problem. The solution is employed to generate initial weights for the next iteration. This procedure is repeated until ASP is activated or the maximum number of hidden nodes is reached. ASP is based on the value of the objective function: if the value of the objective has not improved in three subsequent iterations, then the LMBNNR algorithm is stopped. Note that the initialization of weights and the regularization parameter (described below) are chosen in such a way that $f_{H+1}^* \le f_H^*$ for all $H \ge 1$, where $f_H^*$ is an optimal value of (3) with $H$ nodes.
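The outer loop can be summarized as follows. In this Python sketch, `solve(H, w_prev)` is a hypothetical stand-in for running the LMBM on problem (3) with $H$ hidden nodes from initial weights built from the previous solution `w_prev` (`None` when $H = 1$); it must return the optimized weights and the objective value. The patience of three iterations follows the description of ASP above; treating “not improved” as a strict decrease (optionally by more than `tol`) is our assumption.

```python
from typing import Callable, List, Optional, Tuple, TypeVar

W = TypeVar("W")


def constructive_with_asp(solve: Callable[[int, Optional[W]], Tuple[W, float]],
                          max_nodes: int, patience: int = 3,
                          tol: float = 0.0) -> List[Tuple[int, W, float]]:
    """Add hidden nodes one at a time; stop when the objective stalls for `patience` iterations.

    Returns the whole history (H, weights, objective), so that the models with
    fewer nodes are available as a side product.
    """
    history: List[Tuple[int, W, float]] = []
    best_f = float("inf")
    stalled = 0
    w_prev: Optional[W] = None
    for H in range(1, max_nodes + 1):
        w, f = solve(H, w_prev)          # warm start from the previous solution
        history.append((H, w, f))
        if f < best_f - tol:             # objective improved
            best_f, stalled = f, 0
        else:
            stalled += 1
            if stalled >= patience:      # ASP: no improvement in `patience` iterations
                break
        w_prev = w
    return history


# Usage with a fake solver whose objective stops improving after H = 3.
fake_values = [5.0, 4.0, 3.0, 3.0, 3.0, 3.0, 2.0]
hist = constructive_with_asp(lambda H, w: (f"w{H}", fake_values[H - 1]), max_nodes=7)
print([H for H, _, _ in hist])  # [1, 2, 3, 4, 5, 6]
```

Keeping the full history mirrors the remark in Section 6 that results with smaller numbers of nodes are obtained as a side product of a single run.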

We select the initial weights as

Here, the first $m$ components are the weights from the nodes of the input layer to the node of the hidden layer. The weights from the bias terms, $w_{(1,B,1)}$ and $w_{(2,B,P)}$, are set to zero, and the weight from the hidden layer to the output, $w_{(2,1,P)}$, is set to one. Figure 2 illustrates the weights in different iterations and the progress of the LMBNNR algorithm.

Figure 2.

Construction of model by LMBNNR.

At subsequent iterations (i.e., for $H = 2, 3, \ldots$, each time a new node is added to the hidden layer, until the final number of hidden nodes is reached), we initialize the weights as follows: let $\mathbf{w}^*_{H-1}$ be the solution to the $(H-1)$-th ReLU-NNR problem. First, we set the weights connecting all but the last two hidden nodes to the output node as

and the weights from the input layer to all but the last two hidden nodes as

Note that these weights are set only in the case of $H > 2$; otherwise, we skip this step, as there are only two nodes in the hidden layer. Then we take the most recent weights obtained at the previous iteration and split them to get the initial weights connecting the input layer and the last two hidden nodes of the current iteration, that is,

Weights from the last two hidden nodes to the output are

To update the regularization parameter $\lambda$, we use the value of the objective at the previous iteration and the weight connecting the newest node of the hidden layer to the output as follows:

Remark 1.

With the initialization of weights and the regularization parameter used, we always have $f_{H+1}^* \le f_H^*$ for all $H \ge 1$. ASP is activated when the value of the objective no longer improves, in other words, when adding more nodes to the hidden layer does not give a better model after the weights are optimized.
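Remark 1 relies on initial weights that do not make the model worse when a node is added. The exact splitting formulas used in LMBNNR are not reproduced here; the Python sketch below shows only one hypothetical way to split the newest hidden node into two nodes without changing the network output: both copies inherit the node's input weights and bias, and its output weight is divided equally between them. The names `split_newest_node` and `predict` are illustrative.

```python
from typing import List, Tuple

Matrix = List[List[float]]
Vector = List[float]


def predict(W1: Matrix, b1: Vector, v: Vector, b2: float, x: Vector) -> float:
    """Output of a one-hidden-layer ReLU network with a linear output node."""
    return sum(vc * max(0.0, sum(w * xk for w, xk in zip(row, x)) + bc)
               for row, bc, vc in zip(W1, b1, v)) + b2


def split_newest_node(W1: Matrix, b1: Vector, v: Vector,
                      b2: float) -> Tuple[Matrix, Vector, Vector, float]:
    """Hypothetical function-preserving split: duplicate the last hidden node, halve its output weight."""
    W1_new = [row[:] for row in W1] + [W1[-1][:]]   # copy incoming weights of the newest node
    b1_new = b1 + [b1[-1]]                          # copy its bias
    v_new = v[:-1] + [v[-1] / 2.0, v[-1] / 2.0]     # share its output weight between the two copies
    return W1_new, b1_new, v_new, b2


W1, b1, v, b2 = [[1.0, -2.0], [0.5, 0.5]], [0.1, -0.3], [2.0, -1.5], 0.25
W1s, b1s, vs, b2s = split_newest_node(W1, b1, v, b2)
for x in ([1.0, 0.0], [0.3, -0.7], [2.0, 2.0]):
    assert abs(predict(W1, b1, v, b2, x) - predict(W1s, b1s, vs, b2s, x)) < 1e-12
print(len(vs))  # 3
```

Note that two exact copies receive identical subgradient components, so a symmetric update would keep them identical; the sketch therefore illustrates only the output-preserving part of the idea, not how LMBNNR makes the two new nodes diverge.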

Now we are ready to give the LMBNNR algorithm.

Algorithm 1: LMBNNR

Remark 2.

There are no tuneable hyperparameters in LMBNNR. Hence, there is no need for a validation set. It is sufficient to choose a large enough maximum number of hidden nodes (see Section 6 for suitable values).

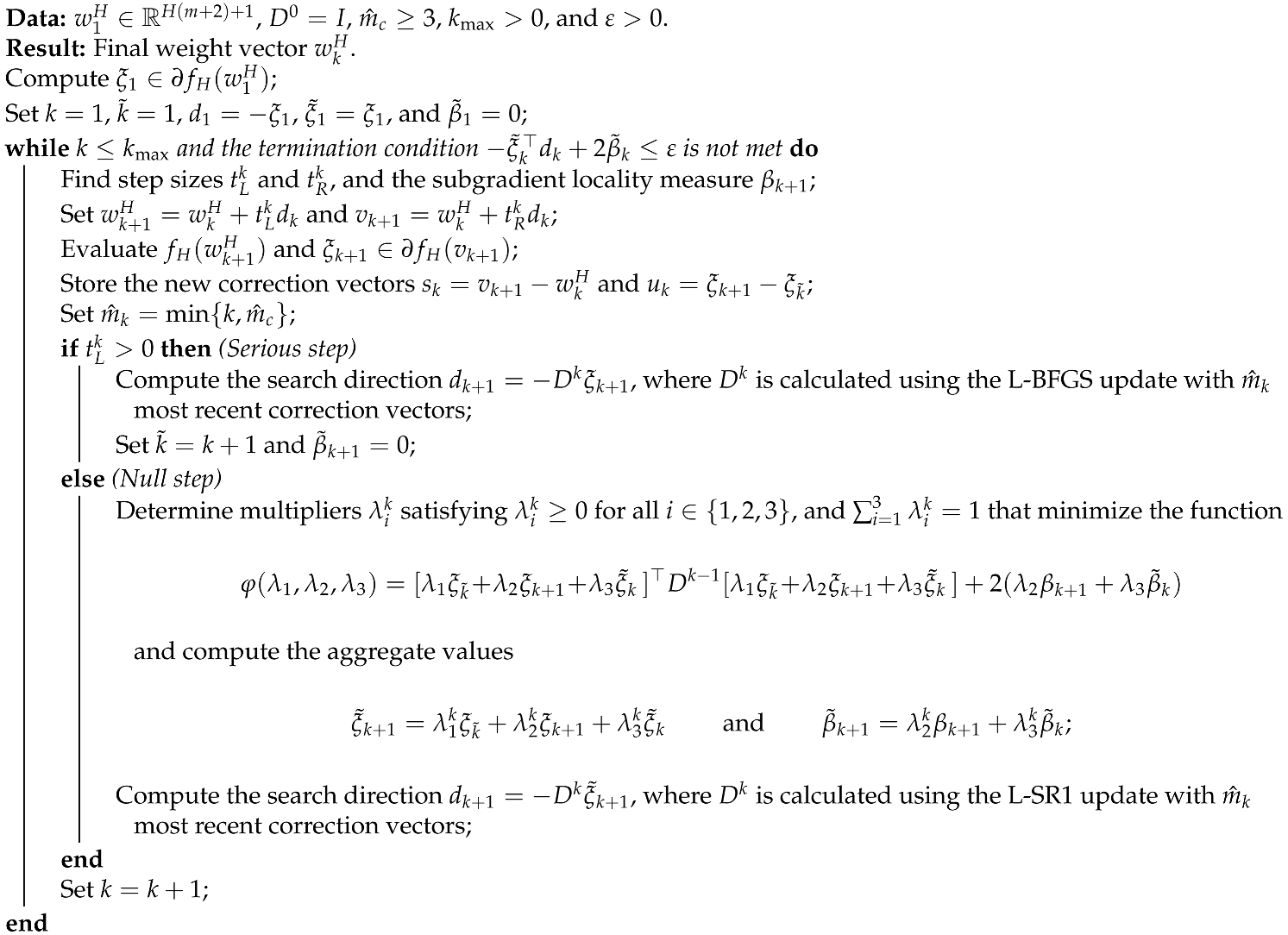

Next, we describe the LMBM with slight modifications to its original version for solving the underlying ReLU-NNR problems in the LMBNNR algorithm. This method is called at every iteration of LMBNNR. For more details of the LMBM, we refer to [3,4].

Remark 3.

We use a nonmonotone line search [4,44] to find the step sizes $t_L^k$ and $t_R^k$ when Algorithm 2 is combined with Algorithm 1. In addition, as in [9], we use a relatively low maximum number of iterations to avoid overfitting.

Remark 4.

The search direction in Algorithm 2 is computed using the L-BFGS update after a serious step and the L-SR1 update after a null step. The updating formulae are similar to those in the classical limited memory variable metric methods for smooth optimization [45]. However, the correction vectors $\mathbf{s}_k$ and $\mathbf{u}_k$ are obtained using subgradients instead of gradients and the auxiliary point instead of the new iteration point.
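For readers unfamiliar with limited memory updates, the Python sketch below shows the standard L-BFGS two-loop recursion, which computes $\mathbf{d} = -D\boldsymbol{\xi}$, where $D$ is the implicitly stored inverse Hessian approximation, from stored correction pairs $(\mathbf{s}_i, \mathbf{u}_i)$ of step differences and subgradient differences. This is a generic textbook version, not the LMBM code: the aggregation, the L-SR1 update used after null steps, and the LMBM's own safeguards are omitted, and skipping pairs with $\mathbf{u}_i^T\mathbf{s}_i \le 0$ is our simplifying assumption.

```python
from typing import List, Sequence

Vector = List[float]


def dot(a: Sequence[float], b: Sequence[float]) -> float:
    return sum(x * y for x, y in zip(a, b))


def lbfgs_direction(xi: Vector, S: List[Vector], U: List[Vector]) -> Vector:
    """L-BFGS two-loop recursion: return d = -D xi.

    S[i] are step differences and U[i] subgradient differences, oldest first.
    Pairs violating the curvature condition u^T s > 0 are skipped.
    """
    pairs = [(s, u) for s, u in zip(S, U) if dot(u, s) > 1e-12]
    q = list(xi)
    alphas = []
    for s, u in reversed(pairs):                     # newest to oldest
        a = dot(s, q) / dot(u, s)
        alphas.append(a)
        q = [qi - a * ui for qi, ui in zip(q, u)]
    if pairs:                                        # scaled identity as the initial matrix
        s, u = pairs[-1]
        gamma = dot(s, u) / dot(u, u)
    else:
        gamma = 1.0
    r = [gamma * qi for qi in q]
    for (s, u), a in zip(pairs, reversed(alphas)):   # oldest to newest
        b = dot(u, r) / dot(u, s)
        r = [ri + (a - b) * si for ri, si in zip(r, s)]
    return [-ri for ri in r]


# Quadratic check: with u_i = A s_i for A = diag(2, 4), the direction is -A^{-1} xi.
print(lbfgs_direction([2.0, 4.0], S=[[1.0, 0.0], [0.0, 1.0]], U=[[2.0, 0.0], [0.0, 4.0]]))
# [-1.0, -1.0]
```

Only the correction pairs are stored, so the memory and work per direction grow linearly with the number of variables, which is what makes limited memory methods attractive for the large weight vectors of ReLU-NNR.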

Remark 5.

The classical linearization error may be negative when the objective function is nonconvex. Therefore, a subgradient locality measure $\beta_k$, which generalizes the linearization error to nonconvex functions (see, e.g., [46]), is used in Algorithm 2.
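As a small numerical illustration, the Python sketch below evaluates the linearization error and a subgradient locality measure assumed to have the form used in the original LMBM [3], $\beta = \max\{|f(\mathbf{x}) - f(\mathbf{y}) - \boldsymbol{\xi}^T(\mathbf{x} - \mathbf{y})|,\ \gamma\|\mathbf{y} - \mathbf{x}\|_2^2\}$, where $\boldsymbol{\xi}$ is a subgradient at the trial point $\mathbf{y}$ and $\gamma \ge 0$ is a distance measure parameter. The exact form and parameter values used in Algorithm 2 should be checked against [3,4]; the nonconvex test function $f(x) = -x^2$ is our own example.

```python
from typing import Sequence


def linearization_error(f_x: float, f_y: float, xi_y: Sequence[float],
                        x: Sequence[float], y: Sequence[float]) -> float:
    """alpha = f(x) - [f(y) + xi_y^T (x - y)]: gap at x of the linearization built at y."""
    return f_x - f_y - sum(g * (a - b) for g, a, b in zip(xi_y, x, y))


def locality_measure(f_x: float, f_y: float, xi_y: Sequence[float],
                     x: Sequence[float], y: Sequence[float], gamma: float) -> float:
    """beta = max(|alpha|, gamma * ||y - x||^2); always nonnegative."""
    alpha = linearization_error(f_x, f_y, xi_y, x, y)
    dist2 = sum((b - a) ** 2 for a, b in zip(x, y))
    return max(abs(alpha), gamma * dist2)


def f(t: float) -> float:
    """Nonconvex example function f(t) = -t^2."""
    return -t * t


x, y = [0.0], [1.0]          # current point and trial point
xi_y = [-2.0 * y[0]]         # gradient of f at y
print(linearization_error(f(x[0]), f(y[0]), xi_y, x, y))           # -1.0 (negative)
print(locality_measure(f(x[0]), f(y[0]), xi_y, x, y, gamma=0.5))   # 1.0
```

For a convex function the linearization error is always nonnegative; the negative value here shows why bundle methods for nonconvex problems replace it with a nonnegative locality measure.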

Algorithm 2: LMBM for ReLU-NNR problems

We now recall the convergence properties of the LMBM in the case of ReLU-NNR problems. Since the objective function $f$ is locally Lipschitz continuous and upper semi-smooth (see, e.g., [47]), and the level set $\{\mathbf{w} : f(\mathbf{w}) \le f(\mathbf{w}^0)\}$ is bounded for every starting weight vector $\mathbf{w}^0$, all the assumptions needed for the global convergence of the original LMBM are satisfied. Therefore, the theorems on the convergence of the LMBM proved in [3,4] can be modified for the ReLU-NNR problems as follows.

Theorem 1.

If the LMBM terminates after a finite number of iterations, say at iteration $k$, then the weight vector $\mathbf{w}_k$ is a stationary point of the ReLU-NNR problem (3).

Theorem 2.

Every accumulation point of the sequence $(\mathbf{w}_k)$ generated by the LMBM is a stationary point of the ReLU-NNR problem (3).

6. Numerical Experiments

Using some real-world data sets and performance measures, we evaluate the performance of the proposed LMBNNR algorithm. In addition, we compare it with three widely used NNR algorithms whose implementations are freely available, namely, the backpropagation NNR (BPN) algorithm utilizing TensorFlow (https://www.tensorflow.org/ (accessed on 10 September 2023)), Extreme Learning Machine (ELM) [48], and Monotone Multi-Layer Perceptron Neural Networks (MONMLP) [49].

6.1. Data Sets and Performance Measures

A brief description of the data sets is given in Table 1 and the references therein. The data sets are divided randomly into training (80%) and test (20%) sets. To get comparable results, we use the same training and test sets for all the methods. We apply the following performance measures: root mean square error (RMSE), mean absolute error (MAE), coefficient of determination ($R^2$), and Pearson’s correlation coefficient (r) (see the Supplementary Material for more details).
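For reference, the Python sketch below computes the four measures using their standard textbook definitions. The paper's exact definitions are given in the Supplementary Material; here we assume that $R^2 = 1 - \mathrm{SS}_{\mathrm{res}}/\mathrm{SS}_{\mathrm{tot}}$ and that $r$ is the sample Pearson correlation between observed and predicted values. The function name is hypothetical.

```python
import math
from typing import Dict, Sequence


def regression_measures(y: Sequence[float], p: Sequence[float]) -> Dict[str, float]:
    """RMSE, MAE, coefficient of determination R^2, and Pearson's r for targets y and predictions p."""
    n = len(y)
    res = [yi - pi for yi, pi in zip(y, p)]
    y_mean, p_mean = sum(y) / n, sum(p) / n
    ss_res = sum(e * e for e in res)                        # residual sum of squares
    ss_tot = sum((yi - y_mean) ** 2 for yi in y)            # total sum of squares
    cov = sum((yi - y_mean) * (pi - p_mean) for yi, pi in zip(y, p))
    var_p = sum((pi - p_mean) ** 2 for pi in p)
    return {
        "RMSE": math.sqrt(ss_res / n),
        "MAE": sum(abs(e) for e in res) / n,
        "R2": 1.0 - ss_res / ss_tot,
        "r": cov / math.sqrt(ss_tot * var_p),
    }


print(regression_measures([1.0, 2.0, 3.0, 4.0], [1.0, 2.0, 3.0, 5.0]))
# RMSE 0.5, MAE 0.25, R2 0.8, r about 0.983
```

Note that RMSE penalizes large residuals more heavily than MAE, which is one reason the two measures can rank the $L_1$-trained LMBNNR models slightly differently.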

Table 1.

Brief description of data sets.

6.2. Implementation of Algorithms

The proposed LMBNNR algorithm was implemented in Fortran 2003, and the computational experiments were carried out on an iMac (macOS Big Sur 11.6) with a 4.0 GHz Intel(R) Core(TM) i7 machine and 16 GB of RAM. The source code is available at http://napsu.karmitsa.fi/lmbnnr (accessed on 10 September 2023). There are no tuneable parameters in the LMBNNR algorithm.

The BPN algorithm is implemented using TensorFlow in Google Colab. We use the ReLU activation for the hidden layer, the linear activation for the output layer, and the MSE loss function. We use MSE since it usually worked better than MAE in our preliminary experiments with the BPN algorithm. This is probably due to the smoothness of MSE. Naturally, with the proposed LMBNNR algorithm, we do not have difficulties with the nonsmoothness as it applies the nonsmooth optimization solver LMBM. In the BPN algorithm, the Keras optimizer SGD with the default parameters and the following three different combinations of batch size (the number of samples that will be propagated through the network) and number of epochs (the number of complete passes through the training data) are used:

- Mini-Batch Gradient Descent (MBGD): batch size and number of epochs These are the default values for the BPN algorithm;

- Batch Gradient Descent (BGD): batch size = size of the training data and number of epochs . These choices mimic the proposed LMBNNR algorithm;

- Stochastic Gradient Descent (SGD): batch size and number of epochs for data sets with fewer than 100,000 samples and number of epochs for larger data sets. We reduce the number of epochs in the latter case due to very long computational times and because a larger number of epochs often leads to NaN loss function values. SGD is intended to be as stochastic a version of the BPN algorithm as possible.

The algorithms ELM and MONMLP are implemented in R using the packages “elmNNRcpp” (ELM is available at https://CRAN.R-project.org/package=elmNNRcpp (accessed on 10 September 2023)) and “monmlp” (MONMLP is available at https://CRAN.R-project.org/package=monmlp (accessed on 10 September 2023)). The parameters are set to the default values provided in the given references, except that the number of hidden layers in MONMLP was set to one. The tests with ELM and MONMLP were run on Windows with a 2.6 GHz Intel(R) Core(TM) i7-9750H. We run ELM, MONMLP, and the BPN algorithms with the number of hidden nodes set to 2, 5, 10, 50, 100, 200, 500, and 5000. Moreover, since the BPN algorithms use the stochastic optimizer SGD, we run them ten times for all problems and report the average. Note that LMBNNR needs to be applied to solve the ReLU-NNR problem only once for each data set.

Remark 6.

At every iteration of the LMBNNR algorithm, we solve an optimization problem with the dimension , where H is the number of hidden nodes and m is the number of features in training samples. The convergence rate of the LMBM, employed as an underlying solver in the proposed LMBNNR algorithm, has not been studied extensively, but, as a bundle method, it is at most linear (see, e.g., [62]), while the search direction can be computed within operations [3]. For comparison purposes, we mention that the time complexity of a BPN algorithm is typically , where n is the number of training samples. A more specific analysis of the time complexity of LMBNNR remains a subject for future research.

6.3. Results and Discussion

The results of our experiments are given in Table 2 and Table 3, where we provide the RMSE values for the test set and the CPU time used, in seconds. The results using the other three performance measures (MAE, $R^2$, and r) are given in the Supplementary Material for this paper. Since the algorithms are implemented in different programming languages and run on different platforms, the CPU times reported here are not directly comparable. Nevertheless, we can still compare the orders of magnitude of the computational times. For the BPN algorithms, the results (including CPU times) are averages over ten runs, unless some of the runs lead to NaN loss function values; in that case, the results are averages over the successful runs. Note that obtaining a NaN loss function value is a natural property of SGD and related methods if the learning rate is too large. If “NaN” appears in the tables, then all ten runs led to a NaN solution. In all separate runs, the maximum time limit is set to 1 h when (n and m are the number of samples and features, respectively) and 2 h otherwise. In the tables, “t-lim” means that an algorithm gives no solution within the time limit.

Table 2.

Results with relatively small numbers of features.

Table 3.

Results with large numbers of features.

To evaluate the reliability of ASP, we force LMBNNR to solve ReLU-NNR problems up to 200 nodes. With LMBNNR, the results with smaller numbers of nodes are obtained as a side product. In tables, we report the results obtained with different numbers of nodes (the same numbers that we used for testing the other algorithms), the best solution with respect to the RMSE of the test set, and the results when applying ASP. The last two are denoted by “Best” and “ASP”, respectively.

As we report all the results with different model parameter combinations (i.e., results with different numbers of nodes or different combinations of batch sizes and epochs), we do not use separate validation sets in our experiments. Instead, in our comparison we use the best result, namely the smallest RMSE on the test set, obtained with any NNR algorithm other than LMBNNR. Naturally, in real-world predictions, we would not know which result is the best, and a separate validation set would be needed to tune the hyperparameters of all NNR algorithms except LMBNNR. Note that this kind of experimental setting favors the other methods over the proposed one. In the tables, the best results obtained with any NNR algorithm other than LMBNNR are shown in boldface. To make the comparison even more challenging for the LMBNNR algorithm, we compare the result it gives with ASP to the best result obtained with any other tested algorithm. We point out that the results using the other three performance measures support the conclusions drawn from RMSE. Nevertheless, MAE often indicates slightly better performance of the LMBNNR algorithm than RMSE does (see the Supplementary Material). This is mainly due to the loss function and the regularization term used in LMBNNR, both of which are based on the $L_1$-norm.

The predictions of the LMBNNR algorithm at termination (i.e., with ASP) are better than those of any other tested NNR algorithm in 5 data sets out of 12. In addition, MONMLP with the best number of hidden nodes is the most accurate algorithm in five data sets, and SGD and ELM are each the most accurate in one data set. MBGD and BGD never produce the most accurate results in our experiments. Therefore, in terms of accuracy, the only noteworthy challenger for LMBNNR is MONMLP. However, the pairwise comparison of these two algorithms shows that the required computational times are clearly in favor of LMBNNR. Indeed, MONMLP is the most time-consuming of the algorithms tested (sometimes together with SGD): in the largest data sets, CIFAR-10 and Greenhouse, MONMLP finds no solution within the time limit, and even in the smallest data set tested, it fails to give a solution with the largest numbers of hidden nodes (including 5000). Moreover, the prediction accuracy of MONMLP varies widely with the number of hidden nodes. For example, in the residential building data set, it gives the most accurate prediction of all tested algorithms (RMSE = 60.932) with two hidden nodes and almost the worst prediction (RMSE = 1200.323) with 50 hidden nodes. In practice, this means that finding good hyperparameters for MONMLP may become an issue.

Although SGD is the most accurate version of the BPN algorithm when the number of features is relatively low, it usually requires a lot more computational time than LMBNNR. Moreover, SGD fails almost always when the number of features is large.

If we only consider computational times, then MBGD, BGD, and ELM would be our choices. Of these, BGD is out of the question, as the predictions it produces are not at all accurate. Obviously, the parameter choices used in BGD are not suitable for the BPN algorithm, and the main reason to keep this version here is purely theoretical, as these parameters mimic the LMBNNR algorithm. In addition, MBGD, the version using the default parameters of the BPN algorithm, often fails to produce accurate predictions, although it is clearly better than BGD. The pairwise comparison with LMBNNR shows that MBGD produces more accurate predictions in only one data set: online news popularity. Moreover, similar to SGD, MBGD fails when the number of features is large. Therefore, in large data sets, the only real challenger for LMBNNR is ELM. Indeed, ELM is a very efficient method, usually needing only a few seconds to solve an individual problem. Nevertheless, LMBNNR produces more accurate predictions than ELM in 9 data sets out of 12, and it is fairly efficient as well.

ASP seems to work pretty well: it usually triggers within a few iterations after the best solution is obtained, and the accuracy of the prediction is very close to the best one. The average number of hidden nodes used before ASP is 40. This is considerably less than what is needed to obtain an accurate prediction with any of the BPN algorithms.

It is worth noting that finding good hyperparameters for most NNR algorithms is time-consuming and may require a separate validation set. For instance, if we run an NNR algorithm with just two different numbers of nodes, the computational time doubles, not to mention the time needed to prepare the separate runs and validate the results. With the LMBNNR algorithm, the intermediate results are obtained within the time required for the largest number of nodes, without the need for separate runs. In addition, there is no need for a validation set to fit hyperparameters in LMBNNR, as it has none.

7. Conclusions

In this paper, we introduce a novel neural network (NN) algorithm, LMBNNR, to solve regression problems in large data sets. The regression problem is modeled using NNs with one hidden layer, the piecewise linear activation function known as ReLU, and the $L_1$-based loss function. We utilize a modified version of the limited memory bundle method to minimize the objective, as this method is able to handle large dimensions, nonconvexity, and nonsmoothness very efficiently.

The proposed algorithm requires no hyperparameter tuning. It starts with one hidden node and gradually adds more nodes with each iteration. The solution of the previous iteration is used as a starting point for the next one to obtain either global or deep local minimizers for the ReLU-NNR problem. The algorithm terminates when the optimal value of the loss function cannot be improved in several successive iterations or when the maximum number of hidden nodes is reached.

The LMBNNR algorithm is tested using 12 large real-world data sets and compared with the backpropagation NNR (BPN) algorithm using TensorFlow, Extreme Learning Machine (ELM), and Monotone Multi-Layer Perceptron Neural Networks (MONMLP). The results show that the proposed algorithm outperforms the BPN algorithm, even though we tested the latter with different hyperparameter settings and used the best results in the comparison. In addition, the LMBNNR algorithm is more accurate than ELM in 9 of the 12 data sets and significantly faster than MONMLP. Thus, we conclude that the proposed algorithm is accurate and efficient for solving regression problems with large numbers of samples and large numbers of input features.

The results presented in this paper demonstrate the importance of nonsmooth optimization for NNs: the use of simple but nonsmooth activation functions together with powerful nonsmooth optimization methods can lead to the development of accurate and efficient hyperparameter-free NN algorithms. The approach proposed in this paper can be extended to model NNs for classification and to build NNs with more than one hidden layer in order to develop robust and effective deep learning algorithms.

Supplementary Materials

The following supporting information can be downloaded at: https://www.mdpi.com/article/10.3390/a16090444/s1, Description of the performance measures used, with Tables S1–S12 including the obtained MAE, and r results on the various data sets.

Author Contributions

Investigation, N.K. and S.T.; Methodology, N.K., P.P. and A.M.B.; Software, N.K., K.J. and P.P.; Writing—original draft, N.K., S.T., K.J., A.M.B. and M.M.M. All authors have read and agreed to the published version of the manuscript.

Funding

This work was financially supported by the Research Council of Finland grants #289500, #319274, #345804, and #345805, and by the Australian Government through the Australian Research Council’s Discovery Projects funding scheme (Project no. DP190100580).

Data Availability Statement

The proposed LMBNNR algorithm is available at http://napsu.karmitsa.fi/lmbnnr (accessed on 10 September 2023). The algorithms ELM and MONMLP are implemented in R using the packages “elmNNRcpp” and “monmlp” available on CRAN. More precisely, ELM is available at https://CRAN.R-project.org/package=elmNNRcpp (accessed on 10 September 2023) and MONMLP is available at https://CRAN.R-project.org/package=monmlp (accessed on 10 September 2023). The data sets used are from the UC Irvine Machine Learning Repository (UCI, https://archive.ics.uci.edu/, accessed on 10 September 2023).

Conflicts of Interest

The authors declare no conflict of interest.

References

- Jahn, M. Artificial neural network regression models in a panel setting: Predicting economic growth. Econ. Model. 2020, 91, 148–154.

- Pepelyshev, A.; Zhigljavsky, A.; Žilinskas, A. Performance of global random search algorithms for large dimensions. J. Glob. Optim. 2018, 71, 57–71.

- Haarala, N.; Miettinen, K.; Mäkelä, M.M. Globally Convergent Limited Memory Bundle Method for Large-Scale Nonsmooth Optimization. Math. Program. 2007, 109, 181–205.

- Karmitsa, N. Limited Memory Bundle Method and Its Variations for Large-Scale Nonsmooth Optimization. In Numerical Nonsmooth Optimization: State of the Art Algorithms; Bagirov, A.M., Gaudioso, M., Karmitsa, N., Mäkelä, M.M., Taheri, S., Eds.; Springer: Cham, Switzerland, 2020; pp. 167–200.

- Bagirov, A.M.; Karmitsa, N.; Taheri, S. Partitional Clustering via Nonsmooth Optimization: Clustering via Optimization; Springer: Cham, Switzerland, 2020.

- Halkola, A.; Joki, K.; Mirtti, T.; Mäkelä, M.M.; Aittokallio, T.; Laajala, T. OSCAR: Optimal subset cardinality regression using the L0-pseudonorm with applications to prognostic modelling of prostate cancer. PLoS Comput. Biol. 2023, 19, e1010333.

- Karmitsa, N.; Bagirov, A.M.; Taheri, S.; Joki, K. Limited Memory Bundle Method for Clusterwise Linear Regression. In Computational Sciences and Artificial Intelligence in Industry; Tuovinen, T., Periaux, J., Neittaanmäki, P., Eds.; Springer: Cham, Switzerland, 2022; pp. 109–122.

- Karmitsa, N.; Taheri, S.; Bagirov, A.M.; Mäkinen, P. Missing value imputation via clusterwise linear regression. IEEE Trans. Knowl. Data Eng. 2022, 34, 1889–1901.

- Airola, A.; Pahikkala, T. Fast Kronecker product kernel methods via generalized vec trick. IEEE Trans. Neural Netw. Learn. Syst. 2018, 29, 3374–3387.

- Bian, W.; Chen, X. Neural network for nonsmooth, nonconvex constrained minimization via smooth approximation. IEEE Trans. Neural Netw. Learn. Syst. 2014, 25, 545–556.

- JunRu, L.; Hong, Q.; Bo, Z. Learning with smooth Hinge losses. Neurocomputing 2021, 463, 379–387.

- Griewank, A.; Rojas, A. Treating Artificial Neural Net Training as a Nonsmooth Global Optimization Problem. In Machine Learning, Optimization, and Data Science. LOD 2019; Nicosia, G., Pardalos, P., Umeton, R., Giuffrida, G., Sciacca, V., Eds.; Springer: Cham, Switzerland, 2019; Volume 11943.

- Griewank, A.; Rojas, A. Generalized Abs-Linear Learning by Mixed Binary Quadratic Optimization. In Proceedings of African Conference on Research in Computer Science CARI 2020, Thiès, Senegal, 14–17 October 2020; Available online: https://hal.science/hal-02945038 (accessed on 10 September 2023).

- Yang, T.; Mahdavi, M.; Jin, R.; Zhu, S. An efficient primal dual prox method for non-smooth optimization. Mach. Learn. 2015, 98, 369–406.

- Astorino, A.; Gaudioso, M. Ellipsoidal separation for classification problems. Optim. Methods Softw. 2005, 20, 267–276.

- Bagirov, A.M.; Taheri, S.; Karmitsa, N.; Sultanova, N.; Asadi, S. Robust piecewise linear L1-regression via nonsmooth DC optimization. Optim. Methods Softw. 2022, 37, 1289–1309.

- Gaudioso, M.; Giallombardo, G.; Miglionico, G.; Vocaturo, E. Classification in the multiple instance learning framework via spherical separation. Soft Comput. 2020, 24, 5071–5077.

- Astorino, A.; Fuduli, A. Support vector machine polyhedral separability in semisupervised learning. J. Optim. Theory Appl. 2015, 164, 1039–1050.

- Astorino, A.; Fuduli, A. The proximal trajectory algorithm in SVM cross validation. IEEE Trans. Neural Netw. Learn. Syst. 2015, 27, 966–977.

- Joki, K.; Bagirov, A.M.; Karmitsa, N.; Mäkelä, M.M.; Taheri, S. Clusterwise support vector linear regression. Eur. J. Oper. Res. 2020, 287, 19–35.

- Selmic, R.R.; Lewis, F.L. Neural-network approximation of piecewise continuous functions: Application to friction compensation. IEEE Trans. Neural Netw. 2002, 13, 745–751.

- Imaizumi, M.; Fukumizu, K. Deep Neural Networks Learn Non-Smooth Functions Effectively. In Proceedings of the Machine Learning Research, Naha, Okinawa, Japan, 16–18 April 2019; Volume 89, pp. 869–878.

- Davis, D.; Drusvyatskiy, D.; Kakade, S.; Lee, J. Stochastic subgradient method converges on tame functions. Found. Comput. Math. 2020, 20, 119–154.

- Aggarwal, C. Neural Networks and Deep Learning; Springer: Berlin, Germany, 2018.

- Rumelhart, D.; Hinton, G.; Williams, R. Learning representations by back-propagating errors. Nature 1986, 323, 533–536.

- Huang, G.B. Learning capability and storage capacity of two-hidden-layer feedforward networks. IEEE Trans. Neural Netw. 2003, 14, 274–281.

- Reed, R.; Marks, R.J. Neural Smithing: Supervised Learning in Feedforward Artificial Neural Networks; The MIT Press: Cambridge, MA, USA, 1998.

- Vicoveanu, P.; Vasilache, I.; Scripcariu, I.; Nemescu, D.; Carauleanu, A.; Vicoveanu, D.; Covali, A.; Filip, C.; Socolov, D. Use of a feed-forward back propagation network for the prediction of small for gestational age newborns in a cohort of pregnant patients with thrombophilia. Diagnostics 2022, 12, 1009.

- Broomhead, D.; Lowe, D. Radial Basis Functions, Multi-Variable Functional Interpolation and Adaptive Networks; Royal Signals and Radar Establishment: Great Malvern, UK, 1988.

- Olusola, A.O.; Ashiribo, S.W.; Mazzara, M. A machine learning prediction of academic performance of secondary school students using radial basis function neural network. Trends Neurosci. Educ. 2022, 22, 100190.

- Zhang, D.; Zhang, N.; Ye, N.; Fang, J.; Han, X. Hybrid learning algorithm of radial basis function networks for reliability analysis. IEEE Trans. Reliab. 2021, 70, 887–900.

- Haykin, S. Neural Networks: A Comprehensive Foundation; Prentice Hall: Upper Saddle River, NJ, USA, 2007.

- Faris, H.; Mirjalili, S.; Aljarah, I. Automatic selection of hidden neurons and weights in neural networks using grey wolf optimizer based on a hybrid encoding scheme. Int. J. Mach. Learn. Cybern. 2019, 10, 2901–2920.

- Huang, D.S.; Du, J.X. A constructive hybrid structure optimization methodology for radial basis probabilistic neural networks. IEEE Trans. Neural Netw. 2008, 19, 2099–2115.

- Odikwa, H.; Ifeanyi-Reuben, N.; Thom-Manuel, O.M. An improved approach for hidden nodes selection in artificial neural network. Int. J. Appl. Inf. Syst. 2020, 12, 7–14.

- Leung, F.F.; Lam, H.K.; Ling, S.H.; Tam, P.S. Tuning of the structure and parameters of a neural network using an improved genetic algorithm. IEEE Trans. Neural Netw. 2003, 14, 79–88.

- Stathakis, D. How many hidden layers and nodes? Int. J. Remote Sens. 2009, 30, 2133–2147.

- Tsai, J.T.; Chou, J.H.; Liu, T.K. Tuning the structure and parameters of a neural network by using hybrid Taguchi-genetic algorithm. IEEE Trans. Neural Netw. 2006, 17, 69–80.

- Bagirov, A.M.; Karmitsa, N.; Mäkelä, M.M. Introduction to Nonsmooth Optimization: Theory, Practice and Software; Springer: Cham, Switzerland, 2014.

- Clarke, F.H. Optimization and Nonsmooth Analysis; Wiley-Interscience: New York, NY, USA, 1983.

- Wilamowski, B.M. Neural Network Architectures. In The Industrial Electronics Handbook; CRC Press: Boca Raton, FL, USA, 2011.

- Kärkkäinen, T.; Heikkola, E. Robust formulations for training multilayer perceptrons. Neural Comput. 2004, 16, 837–862.

- Karmitsa, N.; Taheri, S.; Joki, K.; Mäkinen, P.; Bagirov, A.; Mäkelä, M.M. Hyperparameter-Free NN Algorithm for Large-Scale Regression Problems; TUCS Technical Report, No. 1213; Turku Centre for Computer Science: Turku, Finland, 2020; Available online: https://napsu.karmitsa.fi/publications/lmbnnr_tucs.pdf (accessed on 10 September 2023).

- Zhang, H.; Hager, W. A nonmonotone line search technique and its application to unconstrained optimization. SIAM J. Optim. 2004, 14, 1043–1056.

- Byrd, R.H.; Nocedal, J.; Schnabel, R.B. Representations of quasi-Newton matrices and their use in limited memory methods. Math. Program. 1994, 63, 129–156.

- Kiwiel, K.C. Methods of Descent for Nondifferentiable Optimization; Lecture Notes in Mathematics 1133; Springer: Berlin, Germany, 1985.

- Bihain, A. Optimization of upper semidifferentiable functions. J. Optim. Theory Appl. 1984, 44, 545–568.

- Huang, G.B.; Zhou, H.; Ding, X.; Zhang, R. Extreme learning machine for regression and multiclass classification. IEEE Trans. Syst. Man Cybern. 2011, 42, 513–529.

- Lang, B. Monotonic Multi-Layer Perceptron Networks as Universal Approximators. In Artificial Neural Networks: Formal Models and Their Applications—ICANN 2005; Duch, W., Kacprzyk, J., Oja, E., Zadrożny, S., Eds.; Springer: Berlin/Heidelberg, Germany, 2005; Volume 3697.

- Tüfekci, P. Prediction of full load electrical power output of a base load operated combined cycle power plant using machine learning methods. Int. J. Electr. Power Energy Syst. 2014, 60, 126–140.

- Kaya, H.; Tüfekci, P.; Gürgen, S.F. Local and Global Learning Methods for Predicting Power of a Combined Gas & Steam Turbine. In Proceedings of the International Conference on Emerging Trends in Computer and Electronics Engineering ICETCEE 2012, Dubai, United Arab Emirates, 24–25 March 2012; pp. 13–18.

- Dua, D.; Karra Taniskidou, E. UCI Machine Learning Repository. 2017. Available online: http://archive.ics.uci.edu/ml (accessed on 25 November 2020).

- Yeh, I. Modeling of strength of high performance concrete using artificial neural networks. Cem. Concr. Res. 1998, 28, 1797–1808.

- Harrison, D.; Rubinfeld, D. Hedonic prices and the demand for clean air. J. Environ. Econ. Manag. 1978, 5, 81–102.

- Paredes, E.; Ballester-Ripoll, R. SGEMM GPU kernel performance (2018). In UCI Machine Learning Repository. Available online: https://doi.org/10.24432/C5MK70 (accessed on 10 September 2023).

- Nugteren, C.; Codreanu, V. CLTune: A Generic Auto-Tuner for OpenCL Kernels. In Proceedings of the MCSoC: 9th International Symposium on Embedded Multicore/Many-core Systems-on-Chip, Turin, Italy, 23–25 September 2015.

- Fernandes, K.; Vinagre, P.; Cortez, P. A Proactive Intelligent Decision Support System for Predicting the Popularity of Online News. In Proceedings of the 17th EPIA 2015—Portuguese Conference on Artificial Intelligence, Coimbra, Portugal, 8–11 September 2015.

- Rafiei, M.; Adeli, H. A novel machine learning model for estimation of sale prices of real estate units. ASCE J. Constr. Eng. Manag. 2015, 142, 04015066.

- Buza, K. Feedback Prediction for Blogs. In Data Analysis, Machine Learning and Knowledge Discovery; Springer International Publishing: Cham, Switzerland, 2014; pp. 145–152.

- Krizhevsky, A. Learning Multiple Layers of Features from Tiny Images. 2009. Available online: https://www.cs.toronto.edu/~kriz/cifar.html (accessed on 14 November 2021).

- Lucas, D.; Yver Kwok, C.; Cameron-Smith, P.; Graven, H.; Bergmann, D.; Guilderson, T.; Weiss, R.; Keeling, R. Designing optimal greenhouse gas observing networks that consider performance and cost. Geosci. Instrum. Methods Data Syst. 2015, 4, 121–137.

- Diaz, M.; Grimmer, B. Optimal convergence rates for the proximal bundle method. SIAM J. Optim. 2023, 33, 424–454.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).