Abstract

Heart rate variability (HRV) has emerged as an essential non-invasive tool for understanding cardiac autonomic function over the last few decades. This can be attributed to the direct connection between the heart’s rhythm and the activity of the sympathetic and parasympathetic nervous systems. The cost-effectiveness and ease with which one may obtain HRV data also make it an exciting and potential clinical tool for evaluating and identifying various health impairments. This article comprehensively describes a range of signal decomposition techniques and time-series modeling methods recently used in HRV analyses apart from the conventional HRV generation and feature extraction methods. Various weight-based feature selection approaches and dimensionality reduction techniques are summarized to assess the relevance of each HRV feature vector. The popular machine learning-based HRV feature classification techniques are also described. Some notable clinical applications of HRV analyses, like the detection of diabetes, sleep apnea, myocardial infarction, cardiac arrhythmia, hypertension, renal failure, psychiatric disorders, ANS Activity of Patients Undergoing Weaning from Mechanical Ventilation, and monitoring of fetal distress and neonatal critical care, are discussed. The latest research on the effect of external stimuli (like consuming alcohol) on autonomic nervous system (ANS) activity using HRV analyses is also summarized. The HRV analysis approaches summarized in our article can help future researchers to dive deep into their potential diagnostic applications.

1. Introduction

Heart rate variability, often known as HRV, measures time fluctuation corresponding to two consecutive heartbeats [1]. This fluctuation is estimated by measuring the distance between succeeding R-peaks (RR interval) of the electrocardiogram signals, resulting in the generation of the RR interval or HRV features [2]. HRV is denoted to reflect the heart’s ability to adapt to changing circumstances by recognizing and responding quickly to different stimuli. HRV features can be used as a valuable marker of cardiac autonomic function. It provides information on the autonomic nervous system (ANS) state, which helps to tune the circulatory system and heart, thereby inducing a natural fluctuation in the heart rate (HR) [3]. The ANS encompasses the parasympathetic nervous system (PNS) and the sympathetic nervous system (SNS). Together, these branches are responsible for controlling the heart rate. Increased activity of SNS or decreased activity of PNS causes cardio-acceleration. Contrastingly, cardio-deceleration can be caused by two different reasons: increased PNS activity and decreased SNS activity.

In the last few decades, there has been a growing consciousness in the scientific community to understand the connection between the ANS and cardiovascular pathophysiology that may lead to cardiac morbidity and mortality due to cardiac failure [4,5,6]. In many recent published studies, HRV has been highlighted as a marker of overall cardiac well-being, which may be explored as a diagnostic tool. The measurements of HRV are easy to perform [1]. It does not require any invasive procedure. HRV analysis reliability is dependent on the consistency in ECG recording conditions, electrode placement, and ECG signal quality [7]. An HRV analysis is usually performed using the time and frequency domain linear approaches. Nevertheless, various researchers have considerably explored the application of some non-linear methods like recurrence and Poincare plots for HRV analyses. In recent years, new dynamic procedures of HRV measurement (e.g., Lyapunov exponents [8], approximation entropy (ApEn) [9], and a detrended fluctuation analysis (DFA) [10]) have been proposed to detect the complex variations in HRV features, providing deeper comprehension of an HRV analysis.

An analysis of HRV features has allowed clinicians to identify a diverse range of abnormalities, illnesses, and possible indications of impending death. For example, Kim et al. (2018) described that an HRV analysis can function as a label for psychological stress when necessary [2]. HRV has been explored for monitoring car drivers’ drowsiness, exhaustion, and anxiety levels [11]. Similarly, an HRV analysis has also found applications in detecting athletic performance and fatigue and assessing depression, anxiety, and other chronic conditions [12]. It has also been reported that HRV can correlate between a sedentary life and a person’s mental/physical well-being. The current study reviews various signal processing strategies used for processing and analyzing HRV features, popular ML models employed in association with HRV features for classifying pathologies, and the different applications of HRV research.

2. Generation of RR Interval Time Series

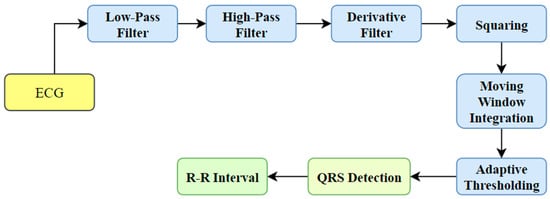

HRV denotes the RR interval time sequence resulting from an ECG signal. The process requires the recognition of the QRS complex from the ECG signal. Various techniques have been developed for detecting the QRS waves, like Pan-Tompkin’s algorithm [13,14] and wavelet transform-based algorithms [15]. Pan-Tompkin’s algorithm analyzes the angle, amplitude, and QRS width using a sequence of filters and mathematical operators. The process includes band-pass filtering, derivative, squaring, integration, and adaptive thresholding. The block diagram representation of QRS complex recognition using Pan-Tompkin’s system is shown in Figure 1. On the other hand, the wavelet transform-based approach involves the disintegration of the ECG signals using a suitable mother wavelet followed by reconstruction using the required sub-bands [15]. Then, the envelope is computed, smoothing is performed, and peak detection takes place to extract the QRS complexes.

Figure 1.

QRS complex detection using Pan-Tompkin’s algorithm.

Historically, the examination of HRV has been conducted using conventional ECG recordings, which typically have a duration of several minutes or longer. Nevertheless, technological progress has facilitated the acquisition of ultra-short ECG recordings, characterized by significantly shorter durations ranging from 10 s to 150 s [16]. The appeal of ultra-short recordings lies in their convenience and efficiency, rendering them more viable in specific contexts, including ambulatory monitoring, remote patient monitoring, and wearable devices.

A predicament arises when attempting to compare the outcomes derived from ultra-short ECG recordings with those acquired from normal ECG recordings. There exist multiple aspects that contribute to the issue of comparability.

- Signal quality can be compromised in ultra-short ECG recordings as a result of a shorter length, leading to the presence of noise, artifacts, and a reduction in signal quality [17,18]. The potential consequences of this phenomenon include potential inconsistencies in an HRV analysis when compared to standard recordings, which may compromise the accuracy of the results.

- An HRV analysis can be conducted through two distinct approaches, namely a time-domain analysis and frequency-domain analysis [19]. The selection of the analytical approach can have an impact on the outcomes and the capacity to compare ultra-short recordings with regular recordings.

- The examination of HRV in research often operates under the assumption that the fundamental physiological mechanisms remain constant during the duration of the recording [2]. The validity of this premise may be compromised when dealing with long-term recordings, as the longer duration could potentially impact the outcomes due to motion artifacts, electrode movements, signal drift, and patient movements, etc. [2].

- The statistical power of ultra-short recordings may be diminished due to a restricted number of data points, resulting in a decrease in comparison to lengthier conventional recordings. The data points are representative of the voltage measurements acquired from the electrodes positioned on the body. The frequency spectrum of the ECG data depicts the allocation of various frequencies that are present within the signal [20]. Increased recording duration leads to enhanced frequency resolution, enabling the detection and capture of lower-frequency elements of the cardiac signal, including the T-wave and QRS complex.

- The identification and rectification of artifacts in recordings can vary depending on whether the recordings are ultra-short or standard in duration, resulting in discrepancies in the resulting heart rate variability (HRV) measures [16].

In order to effectively tackle these concerns and enhance the level of comparison, scholars have the option of employing diverse methodologies, such as the following:

- Standardization refers to the establishment of rules and protocols that dictate the proper procedures for conducting a heart rate variability (HRV) analysis on ultra-short recordings [21]. The purpose of standardization is to ensure uniformity in the methods employed across different studies and platforms.

- Validation Studies: This research aims to compare the measures of heart rate variability (HRV) derived from ultra-short recordings with standard recordings in the same individuals. The purpose is to assess the level of agreement and identify any potential inconsistencies between the two methods [22].

- The application of data augmentation techniques enables the generation of lengthier recordings from ultra-short segments, hence expanding the available dataset for analysis purposes [23].

The resolution of the comparability issue is crucial in order to maintain the use and dependability of an HRV analysis using ultra-short ECG records for clinical and research purposes. It is imperative for researchers and practitioners to possess a comprehensive understanding of the constraints and difficulties linked to ultra-short recordings, while simultaneously persisting in their exploration of the potential advantages they offer.

3. Pressure Support Ventilation (PSV)

The procedure of adapting raw information into numerical attributes that can be employed in place of the original data is referred to as feature extraction. It helps to reduce the computational burden during a signal analysis while maintaining the integrity of the data confined to the original statistics. The feature extraction from HRV data is commonly accomplished using various methods, including but not limited to (i) time-domain methods, (ii) frequency-domain methods, and (iii) non-linear methods. The HRV features extracted from both time and frequency domain approaches are considered linear HRV features [24].

The linear HRV characteristics are studied following the international guidelines made available by the North American Society of Pacing and Electrophysiology task force and the European Society of Cardiology [6]. On the other hand, the non-linear HRV features are calculated per the recommendations presented in the most recent research literature [25,26,27]. Apart from the methods mentioned above, researchers have also attempted to extract proper HRV parameters using signal decomposition techniques and time-series modelling techniques [28]. The following subsections describe the popular HRV feature extraction methods.

3.1. Time-Domain Methods

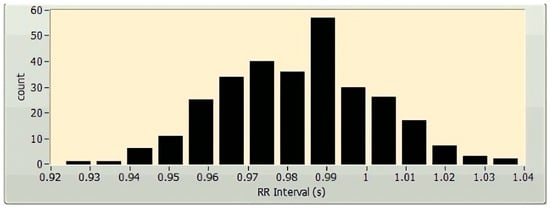

The time-domain HRV parameter extraction methods comprise the geometrical and statistical methods [6]. The statistical methods provide the parameters derived either explicitly from the RR intervals or indirectly after the alteration in the length of the RR intervals [29]. Parameters like standard deviation (SD) of the heart rate (HR SD), average heart rate, mean of the NN intervals (or RR intervals), and standard deviation of the NN intervals (SD NN) can be attained straight from the RR intervals. In this instance, the constraints HR SD and SD NN reveal details related to the dispersion of the HR and NN intermission values from their respective mean values. Conversely, the statistical constraints RMSSD, NN50, and pNN50 offer additional material about the high-frequency aberration in the HR. These parameters are based on the variances amid the RR intervals. The root-mean-square standard deviation, or RMSSD, is the average variation in the intermission between beats and is represented by the square root of the consecutive variance involving the RR intervals. The value of NN50 reveals data regarding the number of successive NN interval differences with more than 50 milliseconds. The value of the parameter pNN50 can be calculated by dividing NN50 by the overall number of NN intervals that are longer than 50 milliseconds. TINN, which stands for “triangular interpolation of NN interval histogram,” and the HRV triangular index, are examples of time-domain geometrical parameters. TINN denotes the reference size of the RRI (or NN intermission) histogram when it is calculated using triangular interpolation [30]. The HRV triangular index is obtained from the RRI histogram (Figure 2) by computing the proportion of the entire amount of RRIs to the stature of the RRI histogram [6].

Figure 2.

A sample histogram of a 5 min HRV, plotted using LabVIEW (National Instruments Corporation, Austin, TX, USA).

3.2. Frequency-Domain Methods

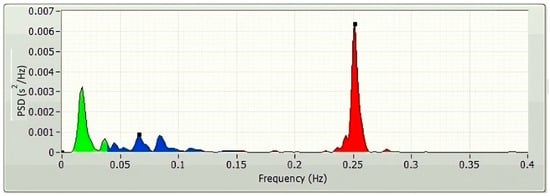

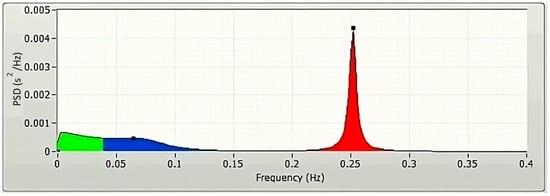

The frequency-domain technique uses the estimation of power spectral density (PSD) of the HRVs as the basis for the parameter’s extraction. The parameters of the frequency domain are determined through the application of the Fast Fourier transform (FFT) (Figure 3) and autoregressive modelling (AR) (Figure 4). The PSDs of the very low frequency (VLF; 0.003–0.04 Hz), the low frequency (LF; 0.04–0.15 Hz), and the high frequency (HF; 0.15–0.4 Hz) are considered as the frequency domain HRV features. Absolute power components, like ms2, and relative power components, such as percent, are typically used to measure the VLF, LF, and HF power components, respectively. However, one may also use the normalized units to measure the LF and HF components (n.u.). The power dispersal over numerous constituents of the PSD is unfixed and changes with the autonomic variation of the heart [6]. This is because the PSD is not a fixed function. It has not been determined what precise functional procedure is accountable for producing the VLF power constituent [6], but research is continuing in this direction. Atropine has been reported to eliminate the VLF component. As a result, the VLF power constituent is regarded as a pointer of parasympathetic commotion [29]. Nevertheless, the nature of the LF power constituent is complicated. Both sympathetic and parasympathetic nerve innervations influence the LF power [29]. This makes the LF power component a problematic variable to analyze. Accordingly, the LF/HF proportion is generally utilized to describe the sympathetic activity [29] or the sympathovagal balance [31] rather than the LF power component.

Figure 3.

A typical FFT spectrum of a 5 min HRV, plotted using LabVIEW.

Figure 4.

A typical AR spectrum of a 5 min HRV, plotted using LabVIEW.

3.3. Non-Linear Methods

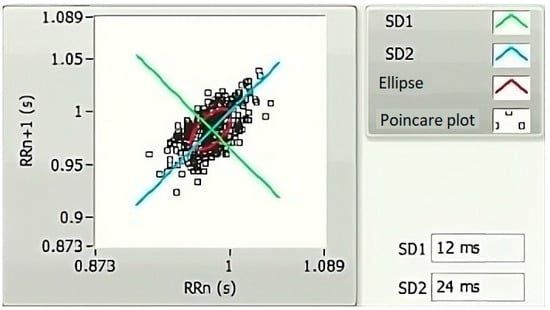

The heart has been represented as an intricate control system [32]. Hence, the HRV description, which relies solely on linear methods, is insufficient to analyze the signals in many cases. Hence, non-linear approaches are also suggested to represent the HRVs’ properties [32]. Popular non-linear methods include the Poincare plot, detrended fluctuation analysis (DFA), and recurrence plot. The Poincare plot visually represents the correlation between the consecutive RRIs (Figure 5). In the Poincare plot, an ellipse is drawn along the line of identity, and then it is tailored to the statistics points. The width (SD1) and the length (SD2) of the ellipse are used to calculate two non-linear HRV features. Such values are used as indicators of both short- and long-term variance [33].

Figure 5.

A representative Poincare graph of a 5 min HRV, plotted using LabVIEW.

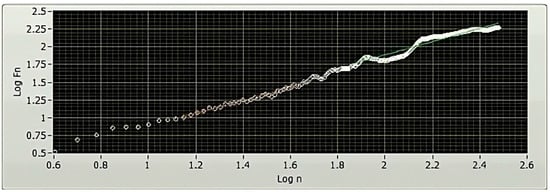

Various non-linear techniques exist for quantifying the correlations in nonstationary physiological time series data [34]. The DFA method, developed in the 1970s, is a technique divulging correlation information. In the context of the HRV study, associations are split up into short-term and long-term variations, depending on the time frame under consideration. These variations are measured and quantified with the DFA method using parameters like alpha1 (α1) and alpha2 (α2), respectively [30]. The slope of the log–log graph is utilized to determine the parameters α1 and α2. These values offer a measurement of association (in terms of fluctuation, Fn) that is dependent on the amount of collected data (n) (Figure 6).

Figure 6.

A representative DFA plot of a 5 min HRV, plotted using LabVIEW. The x-axis of a DFA plot indicates the logarithmically scaled window widths, also known as time scales. Meanwhile, the y-axis represents the logarithmically scaled root-mean-square variation of the detrended data. The analysis of the slope of the plot offers valuable insights into the existence and characteristics of correlations within the examined HRV time series data.

Poincare introduced the concept of recurrence at the end of the 19th century. Recurrence is a fundamental characteristic of every deterministic dynamic scheme [35]. It refers to the phenomenon in which the stages of a dynamic scheme repeatedly appear in phase space [36]. A phase space plot is an imaginary multi-dimensional region where in every point indicates a state of the system. Taken’s theorem [37] has served as the foundation for reconstructing the phase region graphs of the dynamical systems. The theorem proposes that if a variety of parameters governs the dynamics of a system, but a solitary parameter is recognized, at that point, the solitary parameter can still represent the entire dynamics of the system. However, the prediction of the entire system dynamics requires drawing a graph using the quantities of the recognized parameter compared to themselves for a definite amount of times at a predetermined time delay. The selection of appropriate quantities for the embedding dimension and the time delay plays a significant role during the rebuilding of the phase space graph of a dynamic arrangement. The visualization of the vital recurrence property of a phase space plot in two dimensions (2D) has been reported by Eckmann et al. (1987), from whom the idea of recurrence plots was proposed [38]. A recurrence graph is typically described as a symmetrical square matrix in two dimensions. This matrix illustrates the time at which two states of the arrangement become state–space neighbors depending on a cut-off threshold space. However, the information that can be gleaned from this graph is quality-based [39,40]. A recurrence quantification study (abbreviated as RQA) is developed to extract quantity-based variables out of the recurrence plot. The concentration of the reappearance loci and the diagonal, vertical, and horizontal lines present in the recurrence plot are employed for the extraction of quantitative parameters using the RQA method. The popular signal parameters extracted using the RQA method include the recurrence rate (RR), the average number of neighbors (AN), determinism (DET), the length of the longest diagonal line (Lmax), entropy (ENT), laminarity (LAM), trapping time (TT), the maximum length of vertical lines (Vmax), the average length of diagonal lines (AD), the ratio of determinism to the recurrence rate (RATIO), and so on [41].

Chaos theory, a mathematical discipline concerned with the study of intricate and unpredictable systems, has also been used for HRV feature extraction. The utilization of chaos theory principles, including fractals and non-linear dynamics, has been employed in the examination of the intricacy and fluctuation of patterns in HRV [42]. Lyapunov exponents are a sophisticated mathematical construct that originate from the field of chaos theory. These notions find utility in the realm of HRV feature extraction, enabling a deeper understanding of the intricate dynamics inherent in the cardiovascular system. The metric quantifies the speed at which neighboring trajectories in a dynamical system either move apart or come together. A Lyapunov exponent with a larger positive value signifies the presence of chaotic dynamics, whereas a Lyapunov exponent with a negative value shows convergence towards a stable attractor. Through the utilization of Lyapunov exponents derived from time series data of HRV, scholars are able to evaluate the presence of chaotic patterns or ascertain the degree of determinism and stability within the dynamics of heart rate. The provided information can be utilized to gain insights into the regulatory mechanisms of the autonomic nervous system on the heart rate and to detect possible anomalies in cardiovascular well-being [43].

3.4. Signal Decomposition Methods

3.4.1. Empirical Mode Decomposition (EMD)

EMD is a comparatively recent signal processing method utilized to decay the signals into their parts. The processing of non-linear and nonstationary signals is one of its many applications [44]. In this method, the principal (basis) functions are deduced out of the signal, contrasting the Fourier transform or the wavelet transform, which has a fixed basis function. A process known as sifting is implemented in the EMD method to break down a signal into many intrinsic mode functions (IMFs) and a residue (Equation (1)) [45].

The IMFs are a collection of AM-FM (amplitude-modulated–frequency-modulated) signal constituents. They fulfil two criteria: (i) the count of maximum and minimum points has to be similar or slightly differ by one to the count of zero crossings, and (ii) the mean of the covers designed by linking local maxima and minima of the signal is null [46]. Many researchers have reported using the EMD method to decompose the HRVs to extract useful parameters [47]. This may be attributed to the non-linear and nonstationary nature of the HRV features [42].

where M signifies the amount of IMFs, fk(t) shows the kth IMF, and rM links to the residual value.

3.4.2. Discrete Wavelet Transform (DWT)

Discrete wavelet transform (DWT) is a popular combined time–frequency analysis technique [42]. In this context, the “wavelet” denotes a non-symmetric, irregular, and small-duration waveform that possesses a zero mean, finite energy, and a real value of the Fourier transform [43]. Wavelets can be utilized to inspect the occurrences of typically hidden events within a signal. In the last few decades, the DWT method has emerged as an effective tool for analyzing nonstationary signals. In this method, the signals are subjected to a sequence of high- and low-pass filtering at every stage of disintegration to extract the detail and approximation coefficients, respectively. The detail coefficient does not participate in the following levels of decomposition. However, the estimation coefficient is involved in the subsequent disintegration level, leading to generating a fresh pair of detail and approximation coefficients. This procedure is sustained until the essential stage of signal breakdown is achieved. Therefore, the signal can be mathematically expressed, as revealed in Equation (2) [44]. The disintegration of HRVs utilizing the DWT method has been employed by researchers for various diagnostic applications like an automated diagnosis of diabetes [48] and sleep staging classification [49] in the last few decades.

where xm0(t) represents the signal, xm0(t) represents the estimation coefficient at measurer m0, and dm(t) corresponds to the feature coefficient at scale m.

3.4.3. Wavelet Packet Decomposition (WPD)

WPD corresponds to a generalization of the DWT technique that offers a more efficient breakdown of the high-frequency constituents of the input signal [50]. It contrasts with the DWT technique in that both the approximation and the detail constants participate in disintegration at each WPD decomposition level. This is in contrast to DWT decomposition, where just the approximation coefficient takes part in decomposition. WPD causes the segregation of the time–frequency space into boxes with a fixed characteristic proportion as the decomposition process continues [51]. To put it another way, as the level of disintegration increases, the side of the box that represents the time axis expands, while the side that represents the frequency axis contracts along with it. The WPD method has been gaining more popularity for HRV analyses in recent years than other decomposition methods mentioned above [52]. Notable applications of a WPD-based HRV analysis include automatic sleep apnea detection [53], depression [54], etc.

3.5. Parametric Modeling Techniques

The parametric models refer to the techniques that need some parameters to be specified before becoming eligible for making predictions [55]. A number of parametric modelling approaches, including autoregressive (AR), moving average (MA), autoregressive moving average (ARMA), and autoregressive integrated moving average (ARIMA), have been proposed for the analysis of RR intervals [56]. AR, MA, and ARMA models have been used to analyze RR intervals of a small duration (e.g., 5 s) [57]. This may be attributed to the stationary nature of the small-duration RR intervals. The stationarity of the RR intervals is usually verified using techniques like Augmented Dickey–Fuller (ADF). On the other hand, models like ARIMA have been suggested for the relatively longer RR intervals, which are nonstationary [56].

4. Weight-Based Feature Selection Methods

The feature assortment on the basis of weight refers to a set of approaches used to evaluate each input feature’s contribution to the output variable. These algorithms use the weight matrix to determine the relative significance of every input feature in relation to the outcome. They rank the features in order of their importance. This section describes several weight-based feature selection methods that can be performed in Rapidminer software (Rapidminer Inc., Boston, MA, USA).

4.1. Information Gain (IG)

IG is a weighted attribute-selecting approach that uses continuous progress related to the entropy diminution to define the relationship among a parameter X and a category indicator Y. In other words, it is the mutual information of X and Y, where mutual information is the total entropy for classifying attributes [58]. IG is also known as the Kullback–Leibler divergence, a probability distribution proposed by Solomon Kullback and Richard Leibler. It is an entropy-based feature selection model for selecting the exact number of data values to be processed to reduce redundancy and improve memory space [59]. IG can be calculated using Equation (3):

where H(Y) is the entropy of Y, and H(X) is the entropy of X. H (Y|X) represents the conditional entropy of Y given X.

IG provides the importance of an attribute to be noted in the case of feature vectors. It is used to measure the amount of information in bits while class prediction is carried out. The properties of IG include (i) inequality, (ii) symmetry, and (iii) distance function, which are briefly discussed below.

4.1.1. Inequality

The IG is always greater than or equal to zero according to Jensen’s inequality, and it is represented as Equation (4) [60]:

4.1.2. Symmetry

IG follows the symmetrical property, and it can be evaluated from either of the variables regardless of its class variable (Equation (5)).

4.1.3. Distance Function

The metric or distance function measures the distance between two random variables from a set of points. This is mainly used in finding the Euclidean distance in various algorithms used in ML. The distance function for IG can be computed using Equation (6).

4.2. Information Gain Ratio (IGR)

Inverse gradient descent (IGR) is a technique for selecting attributes developed by reducing IG using the attribute entropy. The bias inherent in the IG approach is mitigated with the use of IGR [61]. It maintains accuracy in the IG by considering the intrinsic information (i.e., the instant entropy distribution) from the split data. The intrinsic information reduces the value of IGR, as they are inversely proportional. The intrinsic information of the split data from entropy is given in Equation (7) [61]. This method is widely used during the implementation of a decision tree algorithm.

where S is the split data, and A is the attribute in S.

4.3. Uncertainty

Uncertainty is a feature-selecting technique that emphasizes removing the intrinsic predisposition presented with the IG technique. It is calculated as the proportion of twice of IG to the entirety of the entropies of the feature (X) and the category parameter (Y) (Equation (8)) [58]:

where U is the uncertainty of Y, and H(X) and H(Y) are the entropies of X and Y.

The uncertainty sources are found in the test and training data. It is predominant when the classes tend to overlap or there is a mismatch in the data. The predictions made without the uncertainty quantification may not be considered reliable and may lead to lower accuracy outputs. Uncertainty is widely used in traditional ML applications and deep learning [62].

4.4. Gini Index (GI)

GI is a feature collection technique based on contamination. It designates the possibility of incorrectly classifying a randomly selected variable [58]. It is also referred to as the Gini Impurity or coefficient, which is an alternative to the information gain (IG). Italian statistician Corrado Gini proposed GI. GI is based on the Lorenz curve, which depicts a graphical representation of inequality between two attributes. At times, the Lorenz curve does not provide a complete analysis. So, GI can be improved by interpolating the missing data in the analysis to function properly. It works on the frequency distribution among the values to be computed. GI values range from 0 to 1, with 0 as the complete equality and 1 as the absolute inequality [63]. For assumed information S (i.e., s1, s2, s3, …, sn) and a category parameter Ci (1 ≤ i ≤ k), GI is computed using Equation (9). When the samples are uniformly distributed, GI attains a maximum value [64].

where Pi is the likelihood of any instance in Ci and m is termed to be different classes.

4.5. Chi-Squared Statistics (CSS)

CSS is a widespread non-parametric process of feature choice used in ML algorithms. This works out the significance of a feature by means of the chi-squared statistics (χ2) [58]. CSS is used to calculate the similarity between two probability distributions. If two distributions are similar, then the resultant value is 0. Otherwise, it obtains a larger value compared to 0. It compares and contrasts the independence of two events using the expected and the observed values [65]. The formula for chi-squared statistics is given in Equation (10):

where Oij is the forecasted frequency, and Eij is the anticipated value of the same.

4.6. Correlation

We may think of correlation as a technique for selecting characteristics by how similar they are. Correlation coefficients can take on values between −1 and 1, with the sign indicating the nature of the relationship (negative or positive) [58]. As soon as there is no correlation between the attributes, its quantity reaches 0. The r-value of a pair of variables (X, Y) indicates their level of resemblance. It is characterized by Equation (11):

where i designates the augmentation parameter and n denotes the amount of examples of the features X and Y.

The correlation coefficient is an efficient method in feature selection processes [66]. If the correlation feature is extended in a graphical format, and the points at each instant tend to form a straight line, it is termed linear correlation. If they cluster around a curve other than a straight line, then the correlation is non-linear.

4.7. Deviation

The deviation is the standard deviation (SD) of the characteristics after normalization. Formula (12) is used to obtain SD for variable X, and then the variable is normalized by taking either the highest or lowest result [58]. Standard deviation is an analysis method to measure variability [67]. When the value of SD is small, the feature is mapped to its mean. On the other hand, when the SD returns a higher value, it is spread over a broad range from its mean value.

where i designates the augmentation parameter and n denotes the amount of instances of the attribute X.

4.8. Relief

Kira and Rendall introduced “relief” as a supervised attribute-selecting technique [68]. This technique chooses the examples randomly out of the provided database. Once this is carried out, we can locate the closest instances that fall into both the identical category (near-Hit) and the opposite category (near-Miss) as the original one. Applying formula 13, we may give the attribute in evaluation a rating (St). After comparing their S ratings, all of the attributes are narrowed down to the highest K. The disadvantage of the relief-based algorithm is that its susceptibility to noise is higher. It is generally used in classification problems.

where xt designates the indiscriminately selected instance from the provided input information at repetition figure t, n signifies the entire figure of examples, and d (.) parallels Euclidean detachment.

4.9. Rule

The “rule” signifies a feature-choosing technique that creates a rule for each feature and calculates the fault for them. Every feature is allotted an error-based weight related to it. The significance of the attributes is marked using the score of the weights allocated to them. The other names for “rule” are “OneR” or “One Rule” [58]. Practically, a rule-set is designed to identify the relevance of the features. Each rule is represented as a single region named Ri. Unlike trees, rules can have non-disjoint regions. Some applications require ordered rule-sets, and such rule-sets are termed “decision lists.” Rule induction is processed by adding a single component at a time. The component must be the best minimizer of the distance.

4.10. Support Vector Machine (SVM)

SVM is a popular technique of ML that utilizes hyperplanes (also known as normal vectors) to classify the sample data of a signal into numerous categories. The coefficients linked with the hyperplanes are utilized to rank the features and assign weights [58]. On the other hand, for SVM to function as a method for feature selection, the features themselves must have numeric values. In two-dimensional representations, the linearly and non-linearly separable components are categorized using the SVM. When viewed from a linear perspective, the discriminate function of SVM is given with Equation (14) [69]. For constructing the hyperplane, Equation (14) can be re-written as Equation (15).

where w corresponds to the slope of the hyperplane and b refers to the margin.

The value of g(x) determines the further process in classification or regression problems. If the value of g(x) = 1, then the distance between the two separate classes or class intervals would be calculated as .

5. Dimensionality Reduction Techniques

Different researchers have employed various dimensionality reduction methods to decrease the feature dimension of the HRV features. The following section describes the dimensionality reduction methods that can be performed in Rapidminer software (Rapidminer Inc., USA).

5.1. Principal Component Analysis (PCA)

PCA is a renowned unsupervised ML technique that employs an orthogonal conversion algorithm to alter many associated features to a set of unrelated features identified as principal components [58]. The orthogonal alteration is performed utilizing the eigenvalue breakdown of the covariance matrix, which is produced using the features of the specified signal. PCA selects the dimensions containing the most significant information while discarding the dimensions containing the least important information. This, sequentially, assists to lessen the dimensionality of the data [70]. The samples in the PCA-based approach are placed in a lower-dimensional space, such as 2D or 3D. Because it makes use of the signal’s variation and changes it to alternate dimensions with lesser characteristics, it is a useful tool for attribute choosing. However, the transformation preserves the variance of the signal components.

The PCA technique is generally executed via either the matrix or data methods. The matrix method uses all the data contained in the signal to compute the variance–covariance structure and represents it in the matrix form. Here, the raw data are transformed using matrix operations through linear algebra and statistical methodologies. And yet, the data process works directly on the information and matrix-based operations are not required [70]. Various features like the mean, deviation, and covariance are involved in implementing a PCA-based algorithm. The mean or average (µ) value of the data samples (xi) calculated during the implementation of PCA is given in Equation (16) [70].

where xi represents the data samples.

The deviation () for the dataset can be mathematically expressed using Equation (17) [70].

where xi represents the data samples, and µ represents the deviation.

Covariance (C) determines the variable value that has changed randomly and how much it has deviated from the original data [71]. This value may be either positive or negative and is based on the deviation it has gone through in the previous steps. The covariance matrix is calculated using the deviation formula and then transposing it [70].

where A= [Φ1, Φ2... Φn] is the set of deviations observed from the original data.

In order to apply PCA for dimensionality reduction purposes, the eigenvalues and the respective eigenvectors of the covariance matrix C need to be computed [70]. Among all the eigenvectors (say m), the first k number of eigenvectors with the highest eigenvalues are selected. This corresponds to the inherent dimensionality of the subspace regulating the signal. The rest of the dimensions (m-k) contain noise. For the representation of the signal using principal components, the signal is projected into the k-dimensional subspace using the rule given in Equation (19) [70].

where U represents an m × k matrix whose columns comprise the k eigenvectors.

PCA helps to obtain a set of uncorrelated linear combinations as given in the matrix form below (Equation (20)) [71].

where Y = (Y1, Y2, …, Yp)T, Y1 represents the first principal component, Y2 represents the second principal component, and so on. A is an orthogonal matrix having ATA = I.

5.2. Kernel PCA (K-PCA)

K-PCA is an extension of the principal component analysis (PCA) approach that may be used with non-linear information by employing several filters, including linear, polynomial, and Gaussian [58]. This method transforms the input signal into a novel feature space employing a non-linear transformation. A kernel matrix K is formed through the dot product of the newly generated features in the transformed space, which act as the covariance matrix [72]. For the construction of the kernel matrix, a non-linear transformation (say φ(x)) from the original D-dimensional feature space to an M-dimensional feature space (where M >> D) is performed. It is then assumed that the new features detected in the transformed domain have a zero mean (Equation (21)) [73]. The covariance matrix (C) of the newly projected features has an M × M dimension and is calculated using Equation (22) [73]. The eigenvectors and eigenvalues of the covariance matrix represented in Equation (23) are calculated using Equation (24) [73]. The eigenvector term vk in Equation (23) is expanded using Equation (24) [73]. By replacing the term vk in Equation (23) with Equation (24), we obtain the expression in Equation (25) [73]. By defining a kernel function, , and multiplying both sides of Equation (25), we obtain Equation (26) [73]. Using matrix notation for the terms mentioned in Equation (26) and solving the equation, we obtain the principal components of the kernel (Equation (27)) [73]. If the projected dataset does not have a zero mean feature, the kernel matrix is replaced using a Gram matrix represented in Equation (28) [73].

where k = 1, 2, …, M, vk = kth eigenvector, and λk corresponds to its eigenvalue.

where aki represents the coefficient of the kth eigenvector and k(xl, xi) represents the kernel function.

where 1N represents the matrix with N × N elements that equals 1/N.

Lastly, PCA is executed on the kernel matrix (K). In the K-PCA technique, the principal components are correlated, meaning the eigenvector is projected in an orthogonal direction with more variance than any other vectors in the sample data. The mean-square approximation error and the entropy representation are minimal in the principal components. Identifying new directions using the kernel matrix enhances accuracy compared to the traditional PCA algorithm.

5.3. Independent Component Analysis (ICA)

An Independent Component Analysis (ICA) is an analysis system used in ML to separate independent sources from the input mixed signal, which is also known as blind source separation (BSS) or the blind signal parting problem [74,75]. The test signal is transformed linearly into components that are independent of each other. In ICA, the hidden factors are analyzed, viz., sets of random variables. ICA is more similar to PCA. ICA is proficient in discovering the causal influences or foundations. Before the implementation of the ICA technique on the data, a few preprocessing steps, like whitening, centering, and filtering, are usually performed on the data to improve the value of the signal and eliminate the noise.

5.3.1. Centering

Centering is regarded as the most basic and essential preprocessing step for implementing ICA [74]. In this step, the mean value is subtracted from each data point to transform the data into a zero-mean variable and simplify the implementation of the ICA algorithm. Later, the mean can be estimated and added to the independent components.

5.3.2. Whitening

A data value is referred to as white when its constituents become uncorrelated, and their alterations are equal to one. The purpose of whitening or sphering is to transform the data so that their covariance matrix becomes an identity matrix [74]. The eigenvalue decomposition (EVD) of the covariance matrix is a popular method for the implementation of whitening. Whitening eliminates redundancy, and the features to be estimated are also reduced. Therefore, the memory space requirement is reduced accordingly.

5.3.3. Filtering

The filtering-based preprocessing step is generally used depending on the application. For example, if the input is time-series data, then some band-pass filtering may be used. Even if we linearly filter the input signal xi(t) and obtain a modified signal xi*(t), the ICA model remains the same [74].

5.3.4. Fast ICA Algorithm

Fast ICA is an algorithm designed by AapoHyvärinen at the Helsinki University of Technology to implement ICA effectively [62]. The Fast ICA algorithm is simple and requires minimal memory space. This algorithm assumes that the data (say x) are already subjected to preprocessing like centering and whitening. The algorithm aims to find a direction (in terms of a unit vector w) so that the non-Gaussianity of the projection wTx is maximized, where wTx represents an independent component. The non-Gaussianity is computed in negentropy, as discussed in [74]. A fixed-point repetition system is used to discover the highest non-Gaussianity of the wTx projection. Since several independent components (like w1Tx, …, wnTx) need to be calculated for the input data, the decorrelation between them is crucial. The decorrelation is generally achieved using a deflation scheme developed using Gram–Schmidt decorrelation [74]. The estimation of independent components is performed one after another. This is similar to a statistical technique called a projection pursuit, which involves finding the possible number of projections in multi-dimensional data. The Fast ICA algorithm possesses the advantage of being parallel and distributed, and possesses computational ease and less of a memory requirement.

5.4. Singular Value Decomposition (SVD)

Another approach that builds on PCA is singular value decomposition (SVD), which uses attribute elimination to cut down on overlap [58]. Its consequence is a smaller number of components than PCA but it retains the maximum variance of the signal features. The process is based on the factorization principle of real or complex matrices of linear algebra. This method performs the algebraic transformation of data and is regarded as a reliable method of orthogonal matrix decomposition. The SVD method can be employed to any matrix, making it more stable and robust. This method can be used for a dimension reduction in big data, thereby reducing the time for computing [76]. SVD is used for a dataset having linear relationships between the transformed vectors.

The SVD algorithm is a powerful method for splitting a system into a set of linearly independent components where each component has its energy contribution. To understand the principle of SVD, let us consider a matrix A of dimensions M × N and another matrix U of M × M dimensions. The vectors of A and U are assumed to be orthogonal. Further, let us assume another matrix S, which has a dimension of M × N. Another orthogonal matrix, VT, having a dimension of N × N is also assumed. Then, matrix A is represented by Equation (29) using the SVD method [77].

where the columns of U are called left singular vectors, and those of V are called right singular vectors.

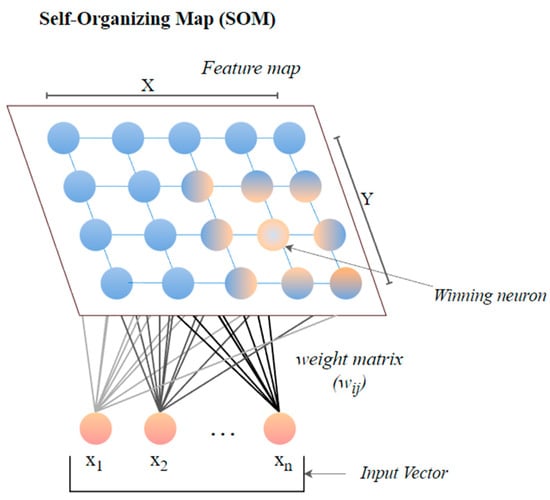

5.5. Self-Organizing Map (SOM)

The self-organizing map (SOM) or the Kohonen map corresponds to a neural network that helps in dimensionality-reduction-based feature selection. The map here signifies the low-dimensional depiction of the features of the specified signal. SOM is based on unsupervised ML with node arrangement as a two-dimensional grid. Each node in the SOM is connected with a weighted vector to make computing easier [78]. SOM implements the notion of a race network, which aims to determine the utmost alike detachment between the input vector and the neuron with weight vector wi. The architecture of SOM comprising both the input vector ‘x’ and output vector ‘y’ is shown in Figure 7.

Figure 7.

The architecture of SOM.

The size of the weight vector ‘wi’ in SOM is controlled using the learning rate functions like the linear, the inverse of time, and the power series as given in Equations (30)–(32) [79].

where T represents the number of iterations and t is the order number of a certain it.

It is distinct from the other ANNs in the case of implementing the neighborhood function. A neighborhood function is a function that computes the rate of neighborhood change around the winner neuron in a neural network. Usually, the bubble and Gaussian functions (Equations (33) and (34)) are used as neighborhood functions in SOM.

where Nc = the index set of neighbor nodes close to the node with indices c, Rc, and Rij = indices of wc and wij, respectively, and ηcij = the neighborhood rank between nodes wc and wij.

6. Classification Techniques

The classification techniques are used to determine the category of new observations based on the training data. In the classification process, an algorithm first learns from the dataset or observations provided and then sorts each new observation into one of the classes. It enables us to divide enormous amounts of data into distinct classes. Various classification techniques that can be used for HRV features and can be executed in RapidMiner software are described below.

6.1. Generalized Linear Model

A generalized linear model is based on the global generalization of simple linear regression (GLM). Nelder and Wedderburn created it to consolidate a wide variety of statistical methods [80]. GLM consists of three elements, i.e., an error dispersal function, a linear forecaster, and an algorithm [81]. The probability distribution functions like Gaussian, binomial, and Poisson are used as the error distribution function. The linear predictor reveals the consistent impact sequence, and the technique delivers the best estimate of the design variables possible.

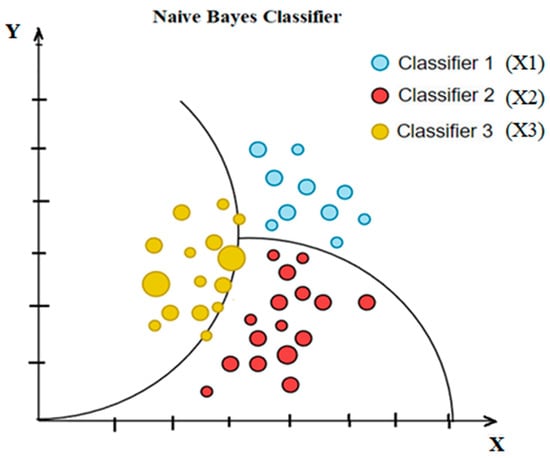

6.2. Naive Bayes

An example of a likelihood classifier, Naive Bayes (NB), is one that makes strong (naive) independent assertions across attributes and relies on Bayes’ theorem. With the class variable in place, it presumes that each attribute may be considered separately. If the fruit is red, round, and around 10 cm in diameter, we call it an apple. An NB technique would attribute equal weight to every parameter when calculating the chance that this item is an apple, irrespective of any correlations among color and sphericity or length. In numerous practical applications, the maximum likelihood method estimates parameters for the NB model (Figure 8). Despite its naïve design and simplistic assumptions, NB classifiers have performed well in various challenging real-world scenarios. Mathematically, the NB model can be expressed with the help of the discriminant function, as shown in Equation (35) [82].

where X = (x1, x2, …, xN) corresponds to the feature vector, ci indicates the class levels, P(ci) represents the prior likelihood, and P(xj|ci) denotes the restrictive likelihood.

Figure 8.

Simple diagram of Naïve Bayes classifier.

6.3. Support Vector Machine

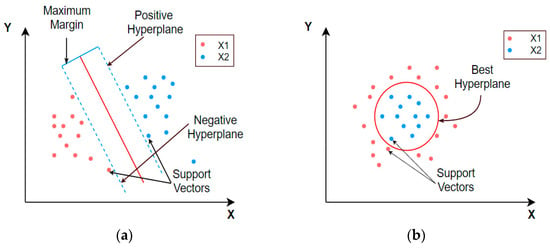

One standard supervised learning method, a support vector machine (SVM), may be used to address categorization and regression issues simultaneously. Its purpose is to find the ideal contour (called the hyperplane) for categorizing n-dimensional space into categories. The features in the dataset determine the dimension of the hyperplane. The SVM can be linear or non-linear based on the nature of the dataset. Data that can be split into two groups in a linear fashion are said to be differentiable, and the SVM classifier used in such cases is known as a linear SVM. A non-linear support vector machine can be employed if a linear classification scheme fails to work on a given dataset. A simple graphical representation of both the linear and non-linear SVM is presented in Figure 9 below.

Figure 9.

Graphical representation of (a) linear and (b) non-linear support vector machines.

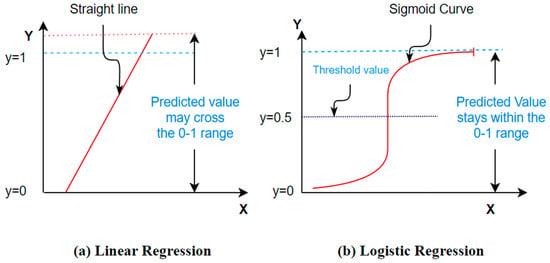

6.4. Logistic Regression

Logistic regression (LR) corresponds to a probabilistic statistical model widely used in classification problems. It aims at defining the connotation among a dichotomous dependent attribute and numerous independent attributes of the dataset [83]. The use of the logistic function f(z) (given with Equation (36)) mainly contributes to the wide acceptance of the LR classifier as its values always lie in the range of 0 to 1 [84]. In addition, the logistic function gives an attractive S-shaped representation of the cumulative outcome (z) of the independent attributes on the dependent attribute f(z) (Figure 10) [85]. It is clear from Figure 10 that the logistic regression is different from the linear regression in the case of its ability to limit the output in the range of 0 to 1.

where z is the cumulative effect of the independent variables, and f(z) is the dependent variable.

Figure 10.

A standard S–shaped illustration of the logistic function.

Equation (36) can be written as

Let us take .

where [p/(1 − p)] indicates the odds of the event occurring.

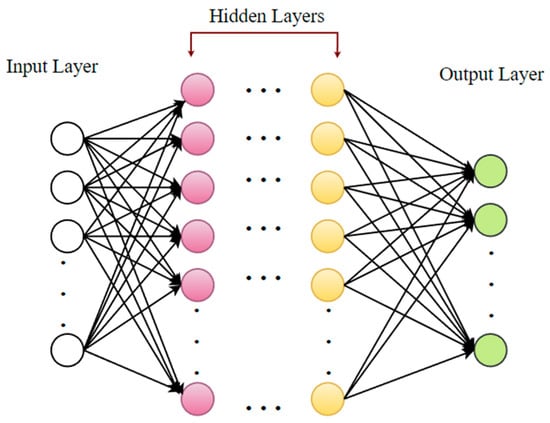

6.5. Deep Learning

Deep learning (DL) is a progressive unsupervised strategy for knowledge features or representations. It has created a novel tendency in ML and pattern identification [86,87]. The hypothetical foundation for DL is the notion of neural nets. DL models employ several hidden neurons and layers (often more than two) associated with conventional neural networks. In addition, the DL models incorporate advanced learning techniques, such as the autoencoder technique and restricted Boltzmann machine (RBM), which are not used in conventional neural networks [87]. A DL network enables automated attribute choosing by abstracting raw data at a high level. These networks can reveal hidden input attributes, enhancing classification performance [88]. Figure 11 displays the overall DL framework [73].

Figure 11.

A standard illustration of a deep neural network.

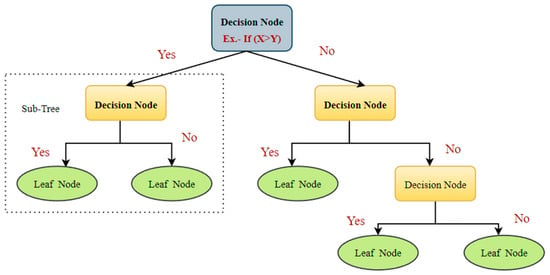

6.6. Decision Tree

The decision tree (DT) procedures are widespread categorization techniques in ML [89]. They separate the data recursively into two categories to construct a tree on the basis of its input attributes [82]. Various established mathematical procedures, such as an information gain, chi-square test, Ginni index, etc., are used to partition the data [89]. These methods provide the variables and threshold for subdividing the dataset input into distinct subgroups. The process of splitting is continued until a mature tree is created. A DT categorizer typically consists of internal nodes, divisions, and leaf nodes (Figure 12) [90]. The internal node represents a test on a feature, while the branches represent the test’s outcome and the leaf nodes denote class levels.

Figure 12.

A standard illustration of the DT classifier.

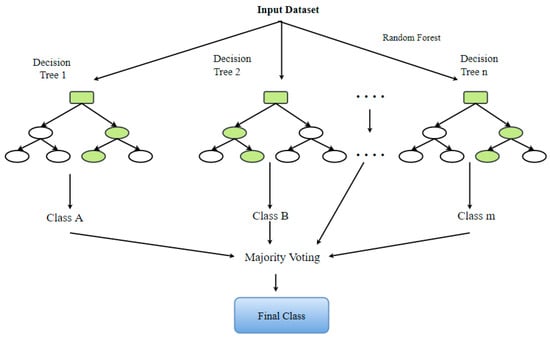

6.7. Random Forest (RF)

The RF model belongs to the composite classifications’ group of algorithms. It is useful for making predictions on the basis of the combination of a number of different decision trees [89]. All decision trees are produced using the portrayal of a subsection of the drilling subsets via replacing values or bagging. The concluding estimate is founded upon the most gained of the votes these decision trees give (Figure 13). The choice of two variables, specifically, the number of decision trees to be produced and the number of attributes to be nominated for budding the trees, shows a critical part in determining the efficiency of the RF architecture [91].

Figure 13.

The graphic illustration of a standard RF classifier.

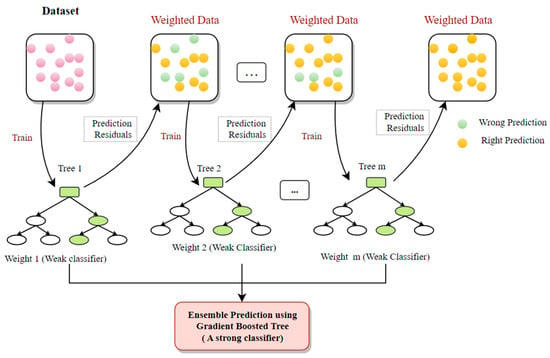

6.8. Gradient Boosted Tree (GBT)

A gradient boosted tree (GBT) is an advanced decision tree approach that divides the dataset into subgroups using an optimization model like XGBoost. It is one of the popular models due to its capacity to deal with absent data and reduce the loss function [92]. It can determine the higher-order relationships between the input attributes [93] too. In each time step, the gradient-boosted tree builds a new tree to minimalize the residual of the existing system (Figure 14).

Figure 14.

The graphic depiction of an archetypal GBT classifier.

6.9. Fast Large Margin (FLM)

The FLM algorithm is a form of linear SVM. It originated from the fast-margin learner developed by Fan et al. (2008) [94]. This classifier yields similar results to traditional SVM and LR approaches. Nevertheless, the FLM classifier can deal with datasets, including millions of samples and attributes.

7. Applications of HRV Analysis

There are various applications of an HRV analysis. It has emerged as an important diagnostic tool in fields as diverse as cardiology, endocrinology, psychiatry, fetal monitoring, neonatal care, and many others. An HRV analysis can also help in understanding the effects of various external consumption factors, like alcohol, drugs, smoking, etc., on the pursuit of the autonomic nervous system. Some of the prominent applications are described in this section.

7.1. Diabetes Detection

Diabetes has emerged as one of the most common diseases worldwide. When blood sugar levels are not under control, the extra blood sugar builds up in the blood vessels and impacts how blood flows to different organs in the body. An inappropriate amount of secretion of insulin causes diabetes [94]. Primarily, two forms of diabetes exist.

Type 1 diabetes: If a person has type 1 diabetes, they need insulin injections or other treatments to receive enough insulin into the body. This is because the body loses the ability to make the right amount of insulin, which can cause high blood sugar levels and other health issues.

Type 2 diabetes: An individual with this form of diabetes does not need insulin and is not resistant to it. In this case, the body makes enough insulin, but it does not use that insulin for energy conversion. Since there is not enough energy, the body makes even more insulin, which causes the blood sugar to rise to abnormal levels [95].

Diabetes has been proven to be the leading cause of heart disease, renal malfunction, strokes, limb paralysis, and vision loss in individuals all over the globe. The majority of diabetes patients have other co-morbidities, such as obesity or hypertension. Diabetes has been linked to cholesterol, blocked arteries, myocardial infarction, and other serious consequences [96].

Recent studies reveal a strong link between HRV and glucose levels, indicating that diabetes causes gradual autonomic dysfunction and reduced heart rate variability [97]. A reduction in HRV features can indicate the potential of a volunteer being diabetic. Several studies attempt to develop viable solutions to the problem of diabetes detection based on an HRV analysis. Yildirim et al. (2019) proposed the automatic identification of diabetic patients by employing deep learning models on spectrogram images of RR intervals. The deep learning (DL) approach included pre-trained CNN models like DenseNet, AlexNet, ResNet, and VGGNet [98]. The spectrogram images were constructed utilizing the short-time Fourier transform (STFT) on the RR intervals. Among all the DL models, the DenseNet model presented an accuracy of 97.62% and a sensitivity of 100% for diagnosing diabetic patients. In another study, Rathod et al. (2021) analyzed the effect of type 2 diabetes mellitus (T2DM) on HRV features to determine the resultant autonomic dysfunction in diabetic patients [99]. The time-domain attributes (mean RRI and SDNN), frequency-domain features (total power, LF, and HF), and non-linear features (SD1 and SD2) were lower in diabetic patients than those of the control group in the resting state. When an orthostatic challenge was conducted as a stimulus to study the ANS reactivity, a blunted response of the ANS reactivity was exhibited by the T2DM patients. The authors then implemented the ML algorithms to detect autonomic dysfunction in T2DM patients. The Classification and Regression Tree (CART) model was able to identify the extent of autonomic dysfunction in individuals with TD2M with the highest accuracy of 84.04%. Thus, the authors recommended the use of HRV features in amalgamation with the CART model for the detection of autonomic dysfunction in TD2M patients. Table 1 lists some other papers issued in the last 5 years discussing the application of an HRV analysis in diabetes detection.

Table 1.

List of published papers in the last 5 years with novel approaches for diabetes detection based on HRV analysis.

7.2. Sleep Apnea Detection

Sleep is important for the proper functioning of the human body [109]. The brain passes through various stages of sleep as a person sleeps. They are mainly classified as rapid eye movement (REM) and non-rapid eye movement (NREM) [110]. Both of these phases are continually repeated during sleep [109]. When a person does not have typical cycles of REM and non-REM sleep, the body may face a variety of negative impacts, including weariness, a decrease in capacity to focus, disruptions in body metabolism, and other similar symptoms, even stroke [111]. Sleep apnea is one of the many diseases that disrupt these healthy sleeping cycles. It is a sleeping ailment categorized by recurrent starting and stopping of breathing while sleeping. Sleep apnea patients experience muscles at the back of their throats getting stretched and becoming more constricted, thus disrupting the normal breathing process [112]. This is a highly prevalent condition often observed more frequently in males than in women. Patients of any age can be affected by this ailment. However, middle-aged individuals are more likely to have it [113]. Sleep apnea can be treated if the patient is aware of the symptoms of sleep apnea, which reduces risk factors, and discusses future treatment options with their doctor [114]. Snoring and occasional breathing disruptions during sleep are the common symptoms of this disorder. Other indications include an abrupt awakening accompanied by shortness of breath, a headache upon waking, insomnia, focus issues, irritability, and hypersomnia. Sleep apnea has been related to various significant health problems such as diabetes, stroke, hypertension, obesity [115], and high blood pressure. Although this cannot be cured, it can be treated with various methods such as behavioral therapy, positive pressure therapy, oral breathing equipment, and even surgery [116,117]. Several pieces of research have been executed in the arena of sleep apnea diagnoses since an early diagnosis of the disease can reduce patient mortality. An HRV analysis has found several applications in the field of sleep, including assessing the severity of sleep apnea, categorizing sleep stages, and detecting sleep apnea events [118,119]. The consequence of disruptive sleep apnea (OSA) severity on HRV features was examined by Qin et al. (2021) [120]. The research was conducted on 1247 persons, among which 426 were healthy and 821 had OSA. The time-domain and non-linear HRV features exhibited less complexity and increased sympathetic dominance in OSA patients. Thus, the authors proposed an HRV analysis as a potential marker of cardiovascular activity in such patients. Recently, Nam et al. (2022) studied the relationship between the apnea–hypopnea index (AHI) and HRV in OSA patients. The authors analyzed the 24 h Holter monitoring-based HRV data and polysomnography data of 62 OSA patients. The results suggested that day/night VLF and LF ratios decreased with the increased severity of OSA. These features were also observed to associate independently with the AHI. Table 2 shows some HRV analysis research conducted for sleep apnea detection.

Table 2.

Studies conducted for sleep apnea diagnoses utilizing HRV analysis in the last 5 years.

7.3. Myocardial Infarction Detection

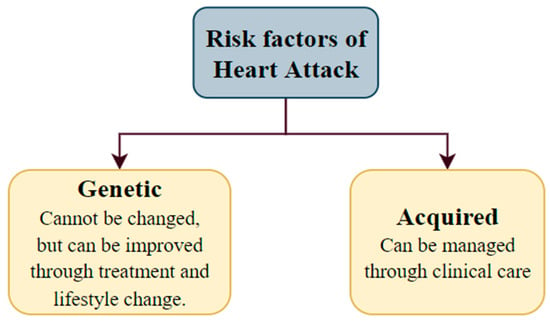

Myocardial infarction (MI) is a widespread illness known as a heart attack. The prevalence of heart attacks in India is 64.37 cases per 1000 individuals. According to a recent assessment, around 1.5 million myocardial infarctions occur annually in the United States (US) [1]. Myocardial infarction takes place when a segment or segments of the heart muscle are deprived of oxygen. This happens when there is a restriction in the blood supply to the heart’s muscles. The leading cause of MI is complete or partial artery blockage. Plaque deposits in coronary arteries can rupture and cause a blood clot. If the clot stops the arterial blood flow, the cardiac muscle will not receive enough blood, causing a heart attack. The risk factors for this cardiac issue have two aspects, i.e., genetic and acquired (from lifestyle, diseases, age, trauma, etc.) (Figure 15).

Figure 15.

The risk factors for heart attack.

The HRV features of patients who suffered from an acute myocardial infarction (AMI) are being analyzed extensively. According to most studies, patients with a decreased or abnormal HRV risk are dying within a few years of having an AMI. Many measures of HRV, including time-domain, spectral, and non-linear, have been employed in the risk stratification of post-AMI patients [126]. Sharma et al. (2019) proposed a model for accurately detecting heart failure utilizing decomposition-based attributes mined via HRV features. Three healthy volunteer datasets and two CHF datasets were used in the study. The Eigen Value Decomposition of the Hankel Matrix (EVDHM) technique was applied in this case for feature extraction. It employed least-square SVM with a radial basis function kernel as the classifier. The suggested approach produced an accuracy of 93.33%, a sensitivity of 91.41%, and a specificity of 94.90% utilizing 500 HRV samples [127]. Further, Shahnawaz et al. (2021) presented an ML model for automated uncovering of myocardial infarction founded on ultra-short-term HRV [128]. They used artificial neural networks (ANN), random forest, KNN, and SVM for categorization purposes and the Relu Activation Function to select and reduce features for the model. This model yielded an accuracy of 99.01% and a sensitivity of 100%.

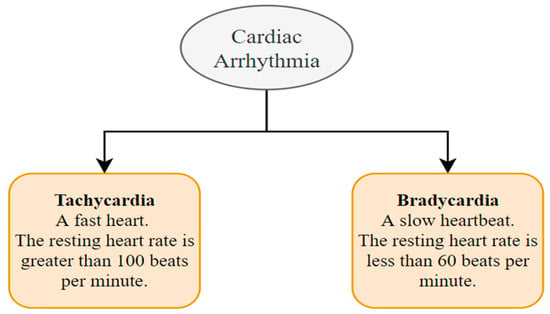

7.4. Cardiac Arrhythmia Detection

A cardiac arrhythmia is when the heart beats erratically [129]. The primary reason for an arrhythmia can be a heart rate that is abnormally high, abnormally low, or irregular [130]. This happens due to abnormal signals from the heart. Mainly two kinds of heart arrhythmias exist. They are usually categorized using the heart rate, namely tachycardia and bradycardia (Figure 16) [131]. Tachycardia is a medical term used to describe an elevated heart rate that exceeds the normal range, often above 100 beats per minute in adult individuals. In contrast, bradycardia is characterized by a diminished heart rate, typically falling below 60 beats per minute among adult individuals. Both tachycardia and bradycardia can manifest within the context of sinus rhythm, wherein the heart’s electrical impulses originate from the sinus node, which serves as the heart’s intrinsic pacemaker. Various approaches to analyzing HRV signals may exhibit distinct behaviors when employed on individuals experiencing tachycardia or bradycardia as discussed below.

Figure 16.

Types of cardiac arrhythmias.

- Time-Domain Analysis:

In subjects with tachycardia, shorter RR intervals can result in reduced variability between consecutive intervals, potentially affecting time-domain measures such as the standard deviation of NN intervals (SDNN) and the root mean square of successive NN interval differences (RMSSD) [29].

In subjects with bradycardia, longer RR intervals might lead to increased variability, potentially influencing the same time-domain measures [132].

- Frequency-Domain Analysis:

In subjects with tachycardia, the faster heart rate can lead to higher-frequency power dominating the spectrum [133], potentially obscuring the interpretation of low-frequency (LF) and high-frequency (HF) components.

In subjects with bradycardia, the opposite might occur, making frequency-domain interpretation more straightforward [134].

- Non-linear Analysis:

Non-linear HRV analysis methods, such as Poincaré plots and fractal analyses, might be affected by the irregularity of the heart rate in tachycardia and the extended RR intervals in bradycardia [135]. These methods might need adjustments or specific considerations for accurate interpretation.

- Geometric Analysis:

Geometric methods, like the triangular index and Lorenz plot, might be sensitive to the distribution of RR intervals [136], which can differ in tachycardia and bradycardia. These methods might need normalization or transformation to account for such differences.

In general, the issues that might arise from arrhythmias include the risk of having a stroke and heart failure [137]. Arrhythmias have also been linked to a higher risk of blood clots. A blood clot can move from the heart to the brain and cause a stroke if it breaks away [138]. Many researchers have suggested different detection methods for cardiac arrhythmias. Atrial fibrillation (AF) is an arrhythmia that may modify heart rhythm dynamics and ECG morphology. It is distinguished by the presence of irregular and rapid electrical impulses inside the atria, resulting in an irregular and frequently elevated heart rate. The measurement of HRV in individuals diagnosed with AF might offer valuable insights into the impact of the autonomic nervous system on the development and progression of this condition. Furthermore, such an analysis may have significant consequences for the process of assessing an individual’s risk level and managing their treatment plan. Nevertheless, it is crucial to acknowledge that the study of HRV in patients with AF can present challenges owing to the irregularity of the cardiac rhythm. Conventional approaches for measuring HRV may not be well-suited for examining datasets characterized by irregular intervals between successive heartbeats. Various algorithms and methodologies have been specifically designed to tackle these challenges and derive significant metrics related to HRV from the data of individuals with AF.

The examination of HRV in patients with AF has the potential to provide significant insights into the role of the autonomic nervous system in the development of the arrhythmia, as well as its potential for predicting unfavorable outcomes or informing treatment strategies. However, it is imperative to use caution when interpreting the findings and to consider them in connection with other clinical and diagnostic factors. Relying just on an HRV analysis may not yield a thorough understanding of a patient’s cardiac well-being. A study by Christov et al. (2018) ranked HRV features for atrial fibrillation detection [139]. They found that the PNN50, SD1/SD2, and RR mean are the three top ranked HRV features, as well as the full set of HRV features achieving a 13%-point higher arrhythmia detection performance compared to the set of beat morphology features. Other recent studies for arrhythmia detection with an HRV analysis can be found in [140,141,142,143]. The experimental results revealed that the HRV descriptors are effective measures for AF identification. In another study, Singh et al. (2019) proposed an arrhythmia detection technique using a time–frequency (T-F) analysis of HRV features [144]. The back-propagation neural networks, in combination with three different types of decision rules, were employed to achieve the classification accuracy of 95.98% for the low-frequency (LF) band and 97.13% for the high-frequency (HF) band [144]. A study by Ahmed et al. (2022) highlighted the development of a client–server paradigm for analyzing HRV features to detect arrhythmias [145]. The proposed platform performed all the real-time steps, from feature extraction to classification. Smoothed Pseudo Wigner–Ville Distribution (SPWVD) was used for the HRV analysis, and SVM was used as the classifier to achieve a classification accuracy up to 97.82%. The classification result can be further sent to clinicians for an interpretation and diagnosis.

7.5. Blood Pressure/Hypertension Detection

Blood pressure (BP) refers to the force of pumping blood against the walls of the arteries. The condition is called hypertension when this pressure is too high [146]. Two numbers are used to express someone’s blood pressure. The first number, the systolic pressure, indicates the pressure in the blood arteries whenever the heart beats or contracts. The second number, the diastolic pressure, indicates the pressure in the blood vessels while the heart rests between the beats [147]. If the systolic BP and the diastolic BP are ≥140 mmHg and ≥90 mmHg, respectively, on two separate days, the patient is said to be suffering from hypertension [148]. Numerous structural and functional alterations of the cardiac muscles are induced in individuals with hypertension due to more workload on the heart. These alterations might increase the cardiovascular risk of hypertensive persons over and above the danger caused by an increase in blood pressure on its own. In recent years, many researchers have studied the feasibility of an HRV analysis as a marker for hypertension detection [149]. In a study by Lan et al. (2018), it was shown that an HRV analysis can predict hypertension at an early stage [150]. The study used 3 months of PPG-based HRV statistics from hypertensive and non-hypertensive participants to calculate HRV features. Six HRV features were utilized in data mining to predict hypertension. It was found that the SDNN feature had the best predictive potential with an accuracy of 85.47%. In light of the findings, the researchers recommended that the PPG-based HRV statistics composed via the wearable devices can be a potential candidate for hypertension prediction with acceptable accuracy. An HRV analysis was tested as a prognostic tool for identifying high-risk patients suffering from hypertension by Deka et al. (2021) [151]. Their study used a hybrid method founded on the dual-tree complex wavelet packet transform (DTCWPT) and other time-domain and non-linear HRV analysis methods to extract features. These features were then fed to the cost-sensitive RUSBoost algorithm, which produced a G-mean and F1 score of 0.9352 and 0.9347, respectively. Table 3 shows selected recent articles on an HRV analysis for hypertension detection.

Table 3.

List of published papers in recent years that have used HRV analysis methods for the detection of hypertension.

7.6. Detection of Renal Failure

An HRV analysis is a valuable tool for assessing autonomic dysfunction, which is vital in predicting the cardiovascular morbidity and mortality associated with kidney failure patients [156]. HRV indices have been analyzed [157] by researchers on individuals diagnosed with renal failure. The association between HRV features and the concentrations of electrolyte ions before and after dialysis was presented in a paper by Tsai et al. (2002) [158]. The HRV features from 5 min ECG signals of twenty patients with chronic kidney failure (CRF) were evaluated. After hemodialysis, the study showed calcium negatively correlated with the average RR intervals and the normalized HF power. Chou et al. (2019) investigated the diagnostic importance of HRV on renal function in kidney failure patients [156]. The authors involved 326 non-dialysis chronic kidney disease (CKD) patients in experimental research of 2.02 years, and their HRV features were analyzed regularly. It was found that the values of HRV features became reduced with the increased severity of CKD. The computation of LF/HF divulged useful information on the progress of CKD apart from the other risk factors. Some more recent articles on the application of HRV features in renal failure are summarized in Table 4.

Table 4.

List of published papers in recent years that have used novel HRV analysis methods for the detection of renal failure.

7.7. Psychiatric Disorder Detection

The dynamic regulation of the heart can be significantly affected by psychological emotions and activities [162,163]. Hence, people with psychiatric problems are at risk of developing cardiovascular diseases [164]. Multiple symptoms of mental disorders are also associated with the disruption of ANS. For instance, depressed people frequently have dry mouth, constipation/diarrhea, and sleeplessness. In the last few decades, many researchers have examined the feasibility of an HRV analysis to elucidate the physiology of psychiatric diseases and their relation to the cardiovascular system [165].

A study by Carney et al. (2001) found that patients with depression and cardiovascular conditions have lower short- and long-term HRV feature values [166]. Other usual mental ailments, such as anxiety disorders, frequently coexist with ANS disorders because of their close relationship. An analysis of HRV data was used in a work by Miu et al. (2009) to understand the relationship between anxiety and malfunction in the ANS [167]. It has been shown that several antipsychotic medications can harm the functioning of the ANS. In particular, ANS dysfunction and abnormal cardiac repolarizations are detected in people medicated with the antipsychotic drug clozapine [168]. These findings point to the possibility that both the schizophrenia disease and the medication prescribed for its therapy could add to the increased likelihood of coronary sickness. Patients who are untreated for their schizophrenia exhibit a reduction in HRV feature values like RMSSD, pNN50, and high-frequency spectral power compared to healthy controls, suggesting a decreased vagal modulation [168]. Some more papers on the application of an HRV analysis in psychiatric disorders are discussed in Table 5.

Table 5.

Recent published papers on the application of HRV analysis in psychiatric disorders.

7.8. Monitoring of Fetal Distress and Neonatal Critical Care

Fetal inspection is vital and must precisely mirror embryonic well-being, identify the most plausible development process, and correctly envisage critical perinatal consequences. Thus, the primary goal of fetal monitoring is to offer precise and efficient care units for an early diagnosis of the onset of potentially life-threatening diseases. The fetus’s ability to survive, thrive, and progress into the neonatal stage depends on proper blood pressure, volume, and fluid dynamics. Prenatal cardiovascular control disturbances can increase fetal and neonatal mortality and morbidity.