Abstract

Digital assistants, such as chatbots, facilitate the interaction between persons and machines and are increasingly used in the web pages of enterprises and organizations. This paper presents a methodology for the creation of chatbots that offer access to museum information. The paper introduces a model for the information offered through the chatbot and subsequently maps the museum’s modeled information to the structures of DialogFlow, Google’s chatbot engine. Means for automating the chatbot generation process are also presented. The methodology is evaluated through its application to a real case, in which we developed a chatbot for the Archaeological Museum of Tripolis, Greece.

1. Introduction

The cultural heritage domain is increasingly adopting state-of-the-art technologies to disseminate its content to a wider audience and to increase the quality of the visitor experience. Digital assistants, such as chatbots, facilitate the interaction between persons and machines and are increasingly used in the web pages of enterprises and organizations. This paper presents a methodology for the creation of chatbots that offer access to museum information; the proposed method also comprises an algorithm for mapping this information to the structures of chatbot engines. The methodology can be fully automated, using queries to information repositories to retrieve the information relevant to each step of the algorithm and then mapping the retrieved information to the files or structures representing the chatbot engine concepts and the linkages between these concepts. The methodology is evaluated through its application to a real case, in which we created a chatbot for the Archaeological Museum of Tripolis, Greece.

The proposed work advances the state of the art by (a) providing a framework through which chatbots can provide full guidance and a complete museum experience conveying the intended museum message [1], in contrast to supporting ad hoc queries for individual exhibits or fragmented experiences; (b) accommodating the multiple facets of museum exhibits, which can be organized under different itineraries with specifically tailored storytelling and exhibit sequences; (c) providing an automation framework for museum chatbot generation, allowing museums to create and maintain their own chatbots in a code-free environment; and (d) harnessing the power of existing chatbot engines, directly exploiting all available features, including augmented user interfaces and natural speech-based interaction.

1.1. Chatbots in Enterprises and Organizations

In recent years, chatbots have been broadly used in various enterprises and organizations as one of the top-tier technologies that provide a practical and reasonable way of exchanging business data and of communicating directly between users or clients and machines or companies [2,3]. The growing number of APIs enables digital exchange and communication between different systems, primarily in the context of instant messengers, in both the consumer and the enterprise context [4]. The two main factors of their success are their malleability and integrability [5,6]. Examples include the Telegram Bot Platform, Slack apps, HipChat bots and Microsoft Teams. Overall, chatbots aim to improve both efficiency and productivity in agile and digital enterprises and organizations.

1.2. Chatbots for Museums

The exponential growth in the use of chatbots by enterprises and organizations is creating further opportunities in the cultural sector. More specifically, there is a growing number of works concerning the use of chatbots for physical and/or virtual visitors in museum contexts.

Gaia et al. [7] utilized chatbot technologies and combined them with gamification to promote visitor engagement and facilitate interaction. They present a chatbot developed as an outsourced project to support visitors of the House Museums of Milan, a group of four historic house museums in Milan, Italy. After successive alterations of its design, it was finally released as a gamified chatbot, developed using Facebook Messenger and mainly targeted at teenagers. The evaluation presented by the authors indicates high acceptance, since 72% of the respondents characterized the application as highly entertaining, while 66% assessed the chatbot to be a useful learning tool, particularly when used cooperatively in pairs or in small groups.

The Field Museum has implemented a chatbot under the name ‘Máximo the Titanosaur’, which offers information about itself, its lifetime, the relevant historical era, how it ended up in the museum, and so forth [8,9]. The chatbot was developed using the DialogFlow platform offered by Google, exploiting the NLP and machine learning techniques that the platform offers. The chatbot is delivered as a separate web page and is also integrated into other platforms such as Facebook and Viber; an SMS gateway is provided as well.

The Anne Frank House museum [10] in Amsterdam has implemented a chatbot using the Facebook Messenger app as a frontend. This chatbot allows the user to access information about Anne Frank and her family, her life before and after the Nazi invasion, the time she and her family were in hiding, etc. The msg.ai technology used in this chatbot applies learning techniques to discern responses that are considered successful and increase the rate at which they are reused in similar contexts. It can also operate when user input is not complete or contextualized, probing the user for the missing information or context elements.

The Hello!Guide startup company based in Hamburg, Germany (https://helloguide.ai/, accessed on 13 July 2023) offers chatbot development services to museums, and a number of museums, including the Historical Museums of Hamburg, the German Historical Museum and the Museum für Angewandte Kunst Köln, have utilized these services to develop chatbots for their visitors. Chatbots developed by Hello!Guide can be integrated into Facebook pages and the WhatsApp system and are provided under the software-as-a-service model.

Ref. [11] presents a historical overview of chatbot implementations introduced by museums from 2004 onwards.

However, deployed museum chatbots are typically custom developments outsourced to IT companies, and besides the cost incurred for their development, they are static in content and rigid and cannot be easily maintained by museum personnel.

Ref. [12] presents a work on the design and development of AI chatbots for museums using knowledge graphs (KGs). This paper reports on the development of a chatbot for the Heracleum Archaeological Museum, which mainly employs natural language processing, natural language generation and entity recognition to realize a chatbot on top of a triple store describing the exhibits. Ref. [13] presents a chatbot employing a multi-chatbot graph-based conversational AI approach, aiming to facilitate natural language dialogues concerning the life and works of the Greek writer Nikolaos Kazantzakis, which was created in the context of a research and development project for the Nikos Kazantzakis Museum in Greece.

Ref. [14] presents the development of a chatbot that employs three distinct modes: (a) a question answering system, similar to kiosk interaction; (b) a docent mode, where an avatar responds to questions narrating in natural language like a human docent would; and (c) a historical figure mode, where an avatar of a person of the past talks and responds to users using first-person syntax. The proposed chatbot explores how chatbots affect history education and enhance the overall experience based on their language style and appearance. After conducting a user study, the authors present how the behavior of the users (visitors) in the museum changes based on the chatbot model, as well as how users with different learning styles communicate with the chatbot models.

Ref. [15] presents a museum chatbot design based on semantics, aiming mainly to explore the alignment between designer intentions and user perceptions. The presented design explores different purposes (exhibit presentation, exhibit/museum exploration and learning), with corresponding designer aims and user expectations. The authors report on the findings of an experimental study for the design of the chatbot, whose results indicate that the formulation of design and acceptance criteria is manifested on a semantic rather than a technical level.

Toumanidis et al. [16] studied the use of AI in the cultural heritage domain. They introduce an application that enables users to conduct a dialogue with a museum’s exhibits through their smartphone. Furthermore, they present an AI algorithm which translates dialogue to Greek in order to increase the participation.

Zhou et al. [17] present AIMuBot, an interactive system for querying and accessing information from the museum’s knowledge base using natural language, both for query formulation and for response delivery, employing voice-based interaction. The presented system includes a voice-based GUI which assists visitors to refine their queries, supports voice-based interaction and is able to retrieve information concerning the exhibits from the museum’s knowledge base.

Schaffer et al. [18] present a museum conversational UI, namely Chatbot in the Museum (ChiM). The presented system includes a natural language understanding subsystem which converts user input (user queries concerning the museum exhibits) into intentions and generates textual and oral output. Casillo et al. [19] present a context-aware chatbot which is able to suggest services and content based on tourist contexts and profiles. The presented chatbot is able to support a conversation with the tourist, using context and pattern recognition algorithms, as well as to control the presentation and evolution of the information given to the user. Tsepapadakis and Gavalas [20] present a personalized guide system for heritage sites, namely Exhibot. The presented system utilizes audio augmented reality to offer immersive experiences to cultural site visitors, who take part in conversational interactions with AI chatbots. These chatbots play the role of ‘talking’ artifacts and exhibits. Furthermore, the proposed system promotes a transparent and natural model of visitor–exhibit interaction and motivates visitors to play an exploratory role in information seeking.

Barth et al. [21] considered the conversation logs of visitors who interacted with “The Voice of Art”, a voice-based chatbot used in the Pinacoteca Museum in Brazil, and found that the main topics that visitors ask about when using a conversational chatbot are the meanings of the artworks and the intentions of the authors. The authors used the feedback that visitors provided about their overall experience when answering a survey, while the visitors’ voice was transcribed by the system in real time using Speech to Text. Wirawan et al. [22] present a chatbot that combines a full-text search method with the Artificial Intelligence Markup Language. The presented chatbot, namely Bali Historian Chatbot, provides information concerning Balinese history and, as its users mentioned, interacting with it feels like communicating directly with historians.

The research works presented above overcome the issues of rigidity and static content; however, they exhibit the following limitations: (a) most of them focus only on exhibits, where users are expected to ask proper questions, with interactions being dependent only on the user’s intuition; (b) many implementations do not provide any means to guide the user through the museum; and (c) when guidance through the museum is available, it supports only the option of showing an overview of the museum or following a single path. However, museum content is highly multi-faceted, with each exhibit having different aspects of interest, which correspondingly can be highlighted in different storytelling. For instance, a garment exhibit may be involved in narratives related, indicatively, to (a) contemporary style and fashion for a specific historical era, (b) daily life (in which circumstances/activities of daily life is this type of garment worn?), (c) appearance (which messages does the use of the specific garment convey?), and (d) technologies and practices for garment production within a specific era or the evolution of these technologies and practices along the historic timeline [23].

The CrossCult project provides a knowledge base model for exhibits [24] that accommodates their multi-faceted nature under the ‘reflective topics’ concept: reflective topics represent the topics of interest for which reflection and re-interpretation is pursued by the museum, and each exhibit is associated with a number of reflective topics, according to the choices of the knowledge base content curators. The concept of reflective topics has been utilized in a number of applications, underpinning the organization of exhibits in different narratives, which are available for users to follow [25,26,27].

1.3. Chatbot Platforms

Nowadays, numerous technologies and platforms have been made available for organizations and individuals to create their own chatbots. These technologies and platforms are mainly available as cloud services, under the ‘Software-as-a-Service’ (SaaS) model, while some platforms are available for self-hosted installations. The available platforms have different characteristics related to their ease of use, available features and amount of technical knowledge that is required for their use and successful modeling of a chatbot. In the following, we will briefly review platforms with widespread use.

BotPress [28] is a platform for creating chatbots that are embeddable in web pages, and applications such as Facebook and WhatsApp, or any other context. BotPress focuses on the ease of use for users with low technical expertise, allowing the creation of chatbot agents by populating an activity diagram with elements corresponding to intents. This is accomplished by dragging tools from a toolchest onto the activity diagram and interconnecting the activities via conditional transitions. Each element within the activity diagram may contain a number of actions, such as communicating a message, receiving input, executing code, determining intents and transitions and so forth.

Microsoft Bot Framework [29,30] (also known as Azure Bot Service) is a comprehensive framework for building conversational AI experiences [31]. Chatbots built with the Microsoft Bot Framework can be directly integrated with Azure Cognitive Services, delivering features such as speech synthesis, voice control, natural language analysis and understanding and so forth. Virtual assistants, customer care and Q&A chatbots are typical agents implemented with the Microsoft Bot Framework; however, any application domain can be served. Integration with a multitude of third-party tools and services is also possible.

IBM Watson Assistant [32,33] is a conversational AI platform based on NLP, machine learning, deep learning and entity recognition technologies. It processes user input to determine their meaning and intent, and subsequently finds the best answers and may guide the user through the process of information retrieval and/or task completion. When user intents cannot be determined with a high level of confidence, the IBM Watson Assistant framework automatically injects clarification questions.

Amazon Lex is an AI service comprising advanced NLP models which can be used to support the full lifecycle of conversational interfaces in applications, including the design, implementation, testing, deployment and integration of such interfaces [34,35]. Amazon Lex-based chatbots can handle either textual or voice input and similarly produce textual or speech-oriented output. They can also easily be integrated with other AWS services, while integration with third-party tools and platforms is also supported.

Google Cloud DialogFlow [36] employs NLP techniques to facilitate the design, development and testing of chatbots. Chatbots developed using Google Cloud DialogFlow can be integrated into a wide range of applications and platforms, including mobile and web applications, automated call centers, etc. DialogFlow accepts and processes input either in textual or audible format, while its responses can also be either textual or audible. Finally, DialogFlow offers a collection of ready-made agents to bootstrap the chatbot development process.

OpenDialog.ai [37] is a platform for designing, developing and testing conversational applications. OpenDialog.ai-based chatbots may be deployed as standalone full-page web applications or as in-page popup experiences, while integrations with mobile environments are available too. OpenDialog.ai provides a code-free conversation designer, through which the interaction with the user may be modeled, and the messages returned by the chatbot to the user can be defined. Interactions modeled in the code-free editor are translated into the OpenDialog Conversation Description Language, and executed using the relevant conversation engine of OpenDialog.ai.

RASA Version 3 is a conversational AI platform comprising both an open-source and a commercial version [38]. RASA includes NLP features that allow it to understand and hold conversations, while its interaction with users may be performed through multiple communication channels, to which RASA-based chatbots connect through specialized APIs. RASA supports a set of conversation patterns, including question answering and FAQs, as well as the implementation of custom business logic based on intent recognition; provisions for contextualizing conversations, handling errors or incomplete information and handing off sessions to human agents are also available.

2. Materials and Methods

The chatbot creation methodology presented in this paper entails two parts: firstly, the information that will be presented through the chatbot needs to be structured, following the CrossCult knowledge base paradigm [24], and secondly, the structured information must be mapped to the elements of the Google DialogFlow (https://dialogflow.cloud.google.com/, accessed on 13 July 2023) engine. The Google DialogFlow engine was chosen because it provides all the necessary functionality, while additionally being user friendly, enabling its direct use by non-IT specialists (especially curators) that work for museums. While user interaction with chatbots implemented by the free version of Google DialogFlow is performed through a simple text-based user interface, it is possible to integrate these chatbots with front-ends that offer better user experience, including the use of the mouse to select among possible choices, such as the one offered by Kommunicate (https://www.kommunicate.io/, accessed on 13 July 2023). In the chatbot used to evaluate the methodology, presented in Section 3 of this paper, the front-end provided by Kommunicate was used and is illustrated.

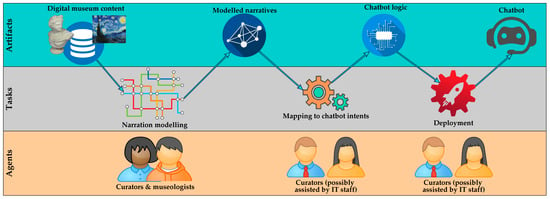

Figure 1 illustrates the overall workflow prescribed by the methodology proposed for the creation of the chatbot. The step of information structuring is discussed in Section 2.1, while the mapping of the structured information to Google DialogFlow elements is presented in Section 2.2.

Figure 1. Overview of the chatbot creation tasks included in the proposed workflow.

2.1. Information Structuring

In order to enable the museum chatbots to effectively convey the cultural information hosted by the museum to the chatbot users, this information needs to be appropriately structured. While numerous chatbots nowadays can effectively retrieve data corresponding to user queries and present them to the user, this mode of operation does not appropriately highlight the interconnections between exhibits and does not present cohesive stories conveying a concrete museum message [1]. To this end, the concept of ‘reflective topics’ was adopted to model different narratives that the museum provides to its visitors through the chatbot, with each reflective topic being a key concept characterizing a subset of the museum’s exhibits and modeling an itinerary through this subset. To accommodate the multi-faceted nature of museum exhibits, a museum exhibit may participate in different reflective topics, with each membership highlighting a particular viewpoint towards the exhibit.

The selection of reflective topics, the correlation of exhibits to reflective topics, the order in which each exhibit is presented within each reflective topic and the associated texts are determined by curators. It is important to note that when each exhibit is correlated with a reflective topic via the ‘Reflective topic element’ class, the description of the exhibit within the narrative of the particular reflective topic is initialized to the generic description of the exhibit (hosted in ‘Exhibit’ class); however, the curator may customize the description within the narrative to best emphasize the features of the exhibit that are relevant to the reflective topic.
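The relationship between an exhibit’s generic description and its description within a narrative can be sketched as follows. This is a minimal illustration in Python and not the CrossCult ontology itself: the class and field names (`Exhibit`, `ReflectiveTopicElement`, `ReflectiveTopic`, `custom_description`, `order`) are assumptions made for the example, and the initialization to the generic description is modeled here as a fallback rather than as a copy.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass
class Exhibit:
    name: str
    description: str  # generic description, shared by all reflective topics


@dataclass
class ReflectiveTopicElement:
    exhibit: Exhibit
    order: int  # position of the exhibit within the narrative
    custom_description: Optional[str] = None  # set by the curator, if at all

    @property
    def description(self) -> str:
        # Use the generic description until the curator customizes it.
        if self.custom_description is not None:
            return self.custom_description
        return self.exhibit.description


@dataclass
class ReflectiveTopic:
    title: str
    elements: List[ReflectiveTopicElement] = field(default_factory=list)

    def itinerary(self) -> List[ReflectiveTopicElement]:
        # Exhibits are presented in the curator-defined order.
        return sorted(self.elements, key=lambda element: element.order)
```

Under these assumptions, the same `Exhibit` instance can appear in several `ReflectiveTopic` objects, each time wrapped in its own `ReflectiveTopicElement` with a description tailored to that narrative.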

In addition to the organization of the exhibits according to reflective topics, which is mainly targeted to visitors, parallel organizations may be accommodated according to different dimensions; e.g., the following organizations could be used to serve the needs of students and researchers or even model special exhibitions:

- according to the exhibit type (e.g., statues, reliefs, funerary arts, etc.);

- according to the historic period (e.g., Archaic Greece, Classical Greece, and the Hellenistic period);

- according to the area of discovery.
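As a rough illustration of such parallel organizations, the sketch below groups the same exhibit records along different dimensions. The record layout, the `group_exhibits` helper and the sample values are hypothetical and serve only to show that one set of exhibits can back several organizations.

```python
from collections import defaultdict
from typing import Dict, List


def group_exhibits(exhibits: List[dict], dimension: str) -> Dict[str, List[str]]:
    """Group exhibit names by the value of one dimension (e.g. 'type')."""
    groups: Dict[str, List[str]] = defaultdict(list)
    for exhibit in exhibits:
        groups[exhibit[dimension]].append(exhibit["name"])
    return dict(groups)


# Hypothetical records; names and values are illustrative only.
exhibits = [
    {"name": "Exhibit A", "type": "statue", "period": "Classical Greece"},
    {"name": "Exhibit B", "type": "relief", "period": "Classical Greece"},
    {"name": "Exhibit C", "type": "statue", "period": "Hellenistic period"},
]
by_type = group_exhibits(exhibits, "type")
by_period = group_exhibits(exhibits, "period")
```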

Overall, a visitor’s experience within the museum is a journey carefully prepared by museologists, content curators and museum guides through a workflow of specific tasks: (a) decision on the core storytelling theme, (b) selection of exhibits that are related to the storytelling theme and that best serve its presentation to the target audience, (c) identification of the aspects of the exhibit documentation that are of interest to the target audience, highlighting the aspects supporting the museum message that is desired to be conveyed and (d) weaving the above aspects into a coherent story that will be delivered to the target audience. This fabric underpinning the visitor’s experience is introduced under the concept of narratives [39,40] and is adopted as the key concept in the proposed modeling and implementation of chatbots. For more information on the concept of narratives, the interested reader is referred to [39,40].

The modeling of the information that needs to be captured can be accommodated in a semantic database in the form of an ontology. The semantic database is maintained by curators, using specialized tools, and therefore technical knowledge on ontology modeling and ontology idiosyncrasies is not required. The basic element of this organization is the narration axis, which models a unique organization of presentation of selected museum exhibits that conveys a certain museum message. The narration axis is correlated to the concept it narrates through the ‘isNarratedBy’ relation (and its inverse, termed ‘narrates’) and is realized through a unique navigation path, which effectively constitutes a sequential presentation of selected museum exhibits meticulously crafted by the content curators. Each step within the navigation path is a navigation path element, which is a unique presentation of an exhibit, tailored to the needs of a specific navigation path. Table 1 presents the classes of the ontological model, along with a concise description of each one.

Table 1. Classes of the ontological model used for the structuring of information.

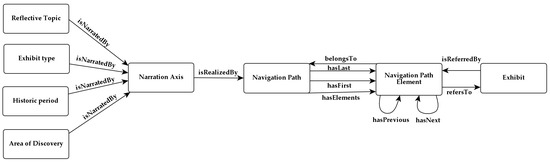

Figure 2 depicts an excerpt of the ontology used by curators to represent the reflective topics and the corresponding organization of exhibits under those topics, while parallel organizations of the exhibits according to (a) the exhibit type, (b) historic period and (c) area of discovery are also accommodated. The CrossCult ontology [24,26] includes many additional concepts related to reflective topics, exhibit types, historic periods, areas of discovery and exhibits that may be utilized according to the goals of the museums and the curators’ approaches. Additional concepts may be accommodated by exploiting the inherent extensibility of the ontology-based models [42].

Figure 2. Ontology excerpt for narration reflective topics.

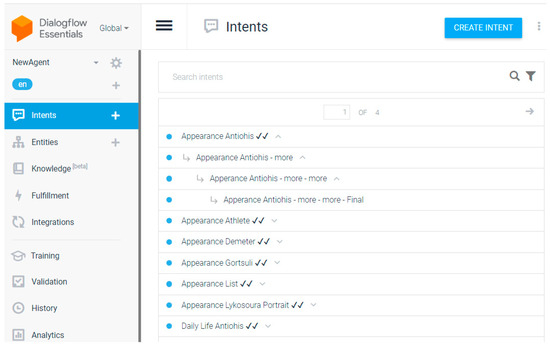

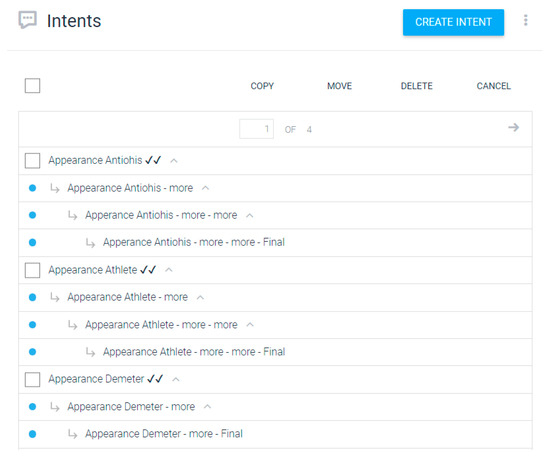

2.2. Mapping of Structured Information to Google DialogFlow Elements

In this section, we present the mapping between the information structure presented in Section 2.1 and the concepts of the DialogFlow platform, which was used for the implementation of the chatbot. The DialogFlow workbench is illustrated in Figure 3. To simplify the user interaction and the procedure of chatbot creation, and to additionally promote the portability of the mapping algorithm to other chatbot platforms, only the intent concept of the DialogFlow engine was exploited; this concept corresponds to the purpose that a user has within the context of a conversation with a customer service chatbot, and is present in most chatbot engine implementations [43]. To provide contextualized interactions, the associated concept of follow-up intents was used, where a follow-up intent is activated in the context of its parent intent when certain conditions are met (e.g., when specific user input is given).

Figure 3. The DialogFlow tool workbench.

In Figure 3 and subsequent DialogFlow screenshots, top-level intents (default/starting intent, navigation path menu intent, navigation path intents and intents with the main exhibit information) are marked with a double checkmark (✓✓), while this markup is not used in the name of intents that contain additional details for exhibits.
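The distinction between top-level intents and follow-up intents can be pictured as a simple tree. The sketch below is an engine-neutral illustration in Python; the `Intent` class and its fields are assumptions made for the example and do not reproduce the DialogFlow API or its export format.

```python
from dataclasses import dataclass, field
from typing import List, Optional


@dataclass(eq=False)  # identity-based equality avoids recursive comparisons
class Intent:
    name: str
    training_phrases: List[str] = field(default_factory=list)
    responses: List[str] = field(default_factory=list)
    parent: Optional["Intent"] = field(default=None, repr=False)
    follow_ups: List["Intent"] = field(default_factory=list)

    def add_follow_up(self, child: "Intent") -> "Intent":
        # A follow-up intent is only reachable in the context of its parent.
        child.parent = self
        self.follow_ups.append(child)
        return child

    @property
    def is_top_level(self) -> bool:
        return self.parent is None


# Illustrative structure; the intent names are placeholders.
welcome = Intent("Default Welcome Intent", training_phrases=["Hi", "Hey"])
ask_name = welcome.add_follow_up(Intent("Ask visitor name"))
topics = Intent("Reflective topics")
```

Restricting the model to this single concept is what keeps the mapping portable: any engine that offers intents with some notion of parent context could, in principle, host the same tree.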

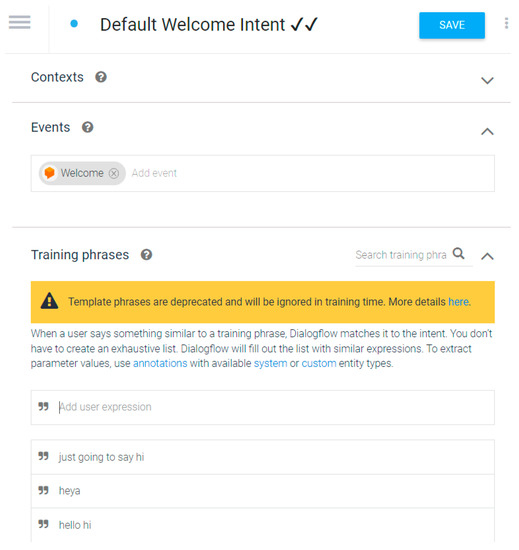

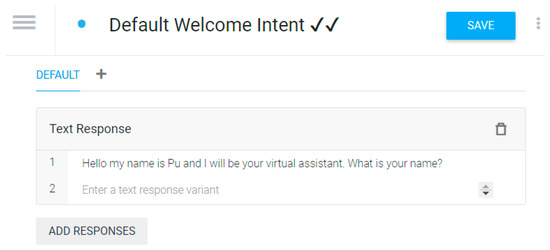

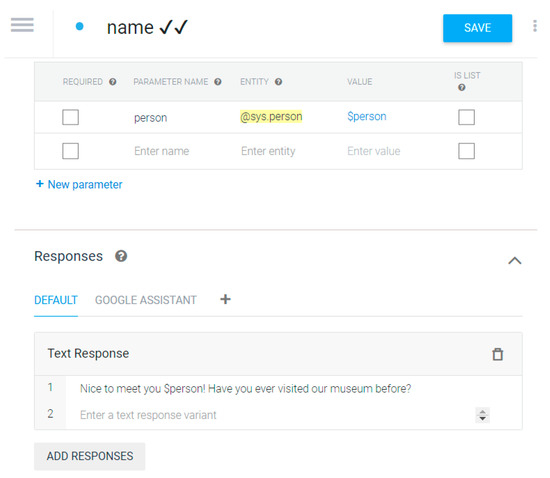

DialogFlow-based chatbots begin their interaction with a default welcome intent. Typically, this is triggered upon initialization of the interaction or when specific greeting texts are entered by the user; e.g., «Hi», «Hey», etc. When the default welcome intent is triggered, the chatbot presents itself and subsequently triggers a follow-up intent, which asks for the visitor’s name. The visitor’s name is subsequently used during the interaction to create a more friendly and personalized communication atmosphere. Figure 4 and Figure 5 depict the modeling of the default welcome event for the cultural venue chatbot, while Figure 6 illustrates the modeling of the follow-up intent which collects the visitor’s name.
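The welcome exchange described above can be mimicked with a toy dialogue step function. This is only a sketch of the visible behavior, under the assumption that a plain dictionary holds the session state; the `step` function, the state keys and the reply texts are hypothetical and are not taken from the deployed chatbot.

```python
GREETINGS = {"hi", "hey"}  # trigger phrases taken from the examples in the text


def step(state: dict, user_input: str) -> str:
    """Advance a toy welcome dialogue by one turn and return the reply."""
    text = user_input.strip()
    if state.get("awaiting_name"):
        # Follow-up intent: store the name for use in later responses.
        state["visitor_name"] = text
        state["awaiting_name"] = False
        return f"Nice to meet you, {text}! What would you like to explore?"
    if text.lower() in GREETINGS or not state.get("started"):
        # Default welcome intent: introduce the chatbot, then ask for the name.
        state["started"] = True
        state["awaiting_name"] = True
        return "Hello, I am the museum chatbot. What is your name?"
    # Any later reply can be personalized with the stored name.
    name = state.get("visitor_name", "visitor")
    return f"Sorry {name}, I did not understand that."
```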

Figure 4. Activation conditions for the default welcome event.

Figure 5. Chatbot responses when the default welcome event is activated.

Figure 6. Modeling of the follow-up intent which collects the visitor’s name.

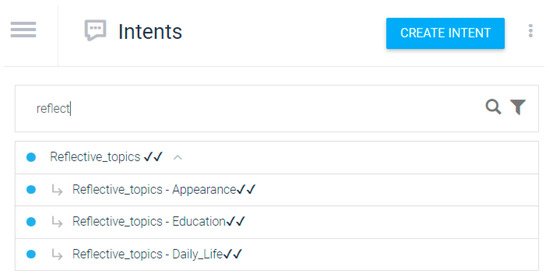

The list of reflective topics, i.e., the narration axes determined by the curators, was modeled as an intent named ‘Reflective topics’; for each distinct reflective topic, a separate follow-up intent is added to the ‘Reflective topics’ intent, allowing the visitor to select and follow the narrative. The modeling of the reflective topic list and the reflective topics as an intent and follow-up intents, respectively, is depicted in Figure 7.

Figure 7. Modeling of the list of reflective topics and the individual reflective topics.

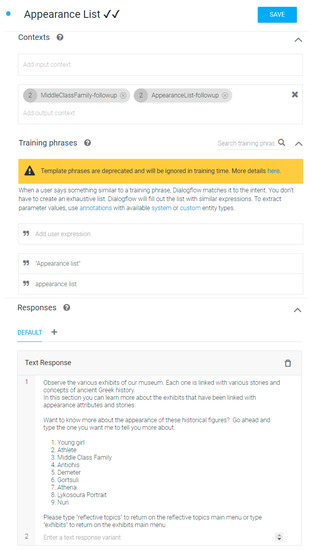

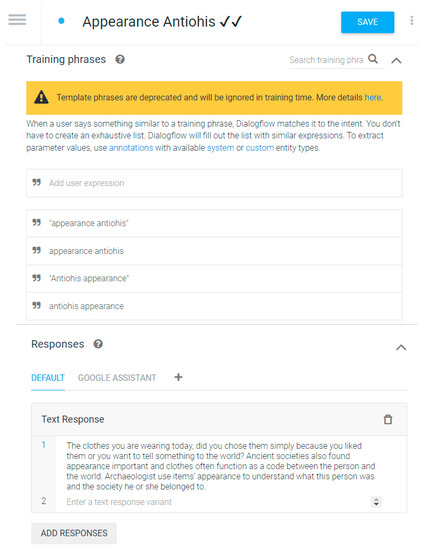

When the ‘Reflective topics’ intent is activated, an introductory text followed by the list of individual reflective topics is presented to the visitor to choose from; this is accomplished through a menu and/or by entering phrases that match the names of the reflective topics or concepts related to them. The text fragments that trigger the selection of reflective topics are entered as training phrases for the follow-up intents, which is similar to the procedure depicted in Figure 4 concerning the activation conditions for the default welcome intent. Figure 8 illustrates the detailed modeling of a reflective topic.

Figure 8. Detailed modeling of a reflective topic.

Since the list of reflective topics is a top-level intent (in contrast to a ‘follow-up intent’), it can be invoked at any point within the user interaction. This allows the visitor to abort the traversal of an itinerary corresponding to some specific reflective topic and return to the list of reflective topics to choose a different one. On the other hand, the visitor is not expected to memorize the names of the reflective topics of the museum, and hence these are used as triggering phrases only when the ‘Reflective topics’ intent is activated and the menu listing the reflective topics is displayed. This setting also allows the chatbot to display a more concise and usable menu, since displaying the full set of choices at all interaction phases would result in menus of excessive length, which degrades usability.
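The scoping rule described above (top-level intents match everywhere, follow-up intents match only while their parent is active) can be sketched as follows. The intent table and the `match_intent` function are hypothetical; ‘Daily life’ is an illustrative topic name, and exact phrase comparison stands in for the engine’s natural language matching. DialogFlow realizes this scoping through its own context mechanism, which the sketch does not attempt to reproduce.

```python
from typing import Dict, List, Optional, Tuple

# Hypothetical intent table: name -> (parent name or None, trigger phrases).
INTENTS: Dict[str, Tuple[Optional[str], List[str]]] = {
    "Reflective topics": (None, ["reflective topics", "topics"]),
    "Appearance": ("Reflective topics", ["appearance"]),
    "Daily life": ("Reflective topics", ["daily life"]),
}


def match_intent(user_input: str, active_intent: Optional[str]) -> Optional[str]:
    """Return the intent triggered by the input, or None if nothing matches."""
    text = user_input.strip().lower()
    for name, (parent, phrases) in INTENTS.items():
        # Top-level intents are always candidates; follow-up intents are
        # candidates only while their parent intent is the active one.
        in_scope = parent is None or parent == active_intent
        if in_scope and text in phrases:
            return name
    return None
```

With this table, the input "appearance" is ignored outside the menu but selects the topic once ‘Reflective topics’ is active, whereas "topics" returns the visitor to the menu from anywhere.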

When the user chooses a reflective topic, the corresponding intent is activated and an introductory text for the intent is displayed, followed by the list of exhibits associated with the intent, in the specified order. The exhibits associated with the selected reflective topic are modeled as follow-up intents of the reflective topic; therefore, upon the activation of their parent intent (i.e., the reflective topic), they are activated and may be selected. The user may navigate directly to a specific exhibit through the following means:

- by selecting from the list/menu;

- by entering the number corresponding to the exhibit’s sequence;

- by entering phrases that match the names of the exhibits or concepts related to them, which are recorded as training phrases for the corresponding intents;

- by using the ‘next’ and ‘previous’ buttons, where applicable, which are generated according to the order of exhibits specified in the reflective topic information modeling (cf. Section 2.1).
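The navigation means listed above can be summarized in a single resolution routine. The sketch below is an assumption-laden illustration: `resolve_exhibit` is a hypothetical helper, a menu selection is treated as if the exhibit name had been typed, and a plain name comparison stands in for training-phrase matching.

```python
from typing import List, Optional


def resolve_exhibit(user_input: str, exhibits: List[str],
                    current: Optional[int]) -> Optional[int]:
    """Map user input to an exhibit index within the itinerary, if possible."""
    text = user_input.strip().lower()
    if text.isdecimal():
        # Sequence numbers shown to the visitor are 1-based.
        index = int(text) - 1
        return index if 0 <= index < len(exhibits) else None
    if text in ("next", "previous"):
        if current is None:
            return None
        index = current + (1 if text == "next" else -1)
        # 'next'/'previous' apply only where a neighboring exhibit exists.
        return index if 0 <= index < len(exhibits) else None
    for index, name in enumerate(exhibits):
        # Stand-in for training-phrase matching: a plain name comparison.
        if text == name.lower():
            return index
    return None
```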

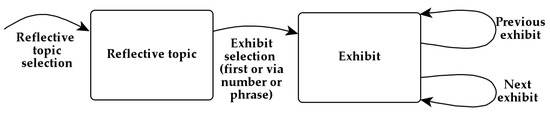

The navigation model for visiting exhibits within a reflective topic is illustrated in Figure 9, while the modeling of a reflective topic as an intent, registering related exhibits as follow-up intents, is exemplified in Figure 10.

Figure 9. Navigation model for visiting exhibits within a reflective topic.

Figure 10. Modeling of a reflective topic as an intent, registering related exhibits as follow-up intents.

Some exhibits may have lengthy descriptions and/or associated multimedia content (images, videos, etc.), which would not be optimally presented as a single chatbot response when the exhibit is selected, since this would be impractical to read and could also discourage the user. To this end, lengthy information may be split into a number of sequential segments, which are then modeled as a list of cascading follow-up intents, starting from the initial intent that corresponds to the exhibit; in Figure 10, exhibit ‘Demeter’ is modeled using this approach, comprising the initial exhibit intent (labeled as ‘Appearance List—Demeter’) and two cascading intents (labeled ‘Appearance List—Demeter—more’ and ‘Appearance List—Demeter—more—Final’). The initial exhibit intent provides navigation controls (a) to the first detail page (‘Appearance List—Demeter—more’ in the example), allowing the user to review the details; (b) to the next exhibit (‘Appearance List—Gotsuli’ in the example), allowing the user to skip the details; and (c) back to the intent of the reflective topic. Intermediate detail intents are furnished with navigation controls (a) to the next and previous detail pages, (b) to the next exhibit and (c) back to the intent of the reflective topic. Finally, the last detail intent is equipped with navigation controls (a) to the previous detail page, (b) to the next exhibit and (c) back to the intent of the reflective topic. Figure 11 illustrates the detailed modeling of the participation of an exhibit in a reflective topic.
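The splitting of lengthy content and the navigation controls attached to each resulting page can be sketched as follows. Both functions are hypothetical helpers written for this illustration: the paper does not prescribe a splitting criterion, so a character limit on word boundaries is assumed, and the case of the last exhibit in an itinerary (which has no next exhibit) is not handled.

```python
from typing import List


def split_to_chunks(text: str, max_len: int) -> List[str]:
    """Split text on word boundaries into chunks of roughly max_len characters.

    A single word longer than max_len is kept whole in its own chunk.
    """
    chunks: List[str] = []
    current = ""
    for word in text.split():
        candidate = f"{current} {word}".strip()
        if len(candidate) > max_len and current:
            chunks.append(current)
            current = word
        else:
            current = candidate
    if current:
        chunks.append(current)
    return chunks


def navigation_controls(chunk_count: int) -> List[List[str]]:
    """List the controls offered by each page (intent) of one exhibit."""
    controls = []
    for i in range(chunk_count):
        page = []
        if i > 0:
            page.append("previous detail page")
        if i < chunk_count - 1:
            page.append("next detail page")
        # Every page can skip to the next exhibit or return to the topic.
        page += ["next exhibit", "back to reflective topic"]
        controls.append(page)
    return controls
```

For an exhibit split into three pages, as in the ‘Demeter’ example, `navigation_controls(3)` yields the control sets of the initial, intermediate and final intents in that order.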

Figure 11.

Detailed modeling of the participation of an exhibit in a reflective topic.

As explained in Section 2.1, alternative organizations for presenting the museum content to the users can be adopted; e.g., by exhibit historical period, place of discovery, type of exhibit, etc. For each such organization, the same methodology presented above can be applied; then, the entry point of each organization is linked to the welcome intent as a follow-up intent to allow the user to traverse the corresponding itinerary.

Another type of content that the chatbot should be able to offer is information about the museum itself, its opening hours, the museum administration, etc. This content is organized under a top-level intent ‘About the museum’, which is linked under the default welcome intent as a follow-up intent.

Listing 1 presents the algorithm for creating the chatbot using the information model presented in Section 2.1.

Listing 1. Algorithm for creating the chatbot.

startingPoint = createDefaultWelcomeIntent();

museumInfo = createMuseumInfoIntent();

startingPoint.addFollowUpIntent(museumInfo);

FOR EACH navigationPath IN getAllNavigationPaths() DO

itineraryEntryIntent = createEntryIntent(navigationPath);

exhibitsInItinerary = navigationPath.getNavigationPathElements();

// the ‘previous’ variable will be used to provide for next/previous navigation style

previous = itineraryEntryIntent;

FOR EACH exhibit IN exhibitsInItinerary DO

content = exhibit.getInformation();

IF (content.length < PRESENTATION_LENGTH_THRESHOLD) THEN

exhibitIntent = content.createIntent();

ELSE

//Split the content to chunks

contentChunks = content.splitToChunks();

// the entry point for the exhibit is the first information chunk

exhibitIntent = contentChunks[0].createIntent();

previousChunk = exhibitIntent;

// iterate over remaining chunks

FOR EACH chunk IN contentChunks[1:] DO

chunkIntent = chunk.createIntent();

// add ‘previous/next’ buttons; previousChunk initially refers to the exhibit intent

previousChunk.addNavigation(chunkIntent, 'Next');

chunkIntent.addNavigation(previousChunk, 'Previous');

chunkIntent.addNavigation(exhibitIntent, 'Return to exhibit');

// advance, so that the next chunk is linked to this one

previousChunk = chunkIntent;

END FOR // FOR EACH chunk

END IF

// link to itinerary entry point

exhibitIntent.addNavigation(itineraryEntryIntent, 'Return to reflective point');

itineraryEntryIntent.addFollowUpIntent(exhibitIntent);

// add ‘previous/next’ buttons

IF (previous <> itineraryEntryIntent) THEN

previous.addNavigation(exhibitIntent, 'Next');

exhibitIntent.addNavigation(previous, 'Previous');

// If the previous exhibit is split into chunks, add navigation to chunks,

// allowing the user to skip detail chunks, moving to the next exhibit

IF (previous.IsChunked()) THEN

FOR EACH chunk IN previous.getChunks() DO

chunk.addNavigation(exhibitIntent, 'Next exhibit');

END FOR // FOR EACH chunk

END IF

END IF

previous = exhibitIntent;

END FOR // FOR EACH exhibit

// Link the itinerary to the welcome intent

startingPoint.addFollowUpIntent(itineraryEntryIntent);

END FOR // FOR EACH navigationPath

// publish the chatbot

publishChatbot(startingPoint);
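The core of Listing 1 (chunking long descriptions and wiring the navigation controls) can be sketched in Python as follows. This is a minimal illustration under stated assumptions, not the implementation used for the chatbot: the `Intent` class, the helper names, the fixed-size splitter and the threshold value are all hypothetical, and the button labels are made distinct ('More' versus 'Next exhibit') so that an exhibit intent never carries two identically labeled controls.

```python
from dataclasses import dataclass, field

# Hypothetical threshold (in characters); the paper does not state a value.
PRESENTATION_LENGTH_THRESHOLD = 300


@dataclass
class Intent:
    """Minimal in-memory stand-in for a chatbot intent (not a DialogFlow type)."""
    title: str
    text: str
    follow_ups: list = field(default_factory=list)
    navigation: list = field(default_factory=list)  # (label, target title) pairs
    chunks: list = field(default_factory=list)      # detail intents, if chunked

    def add_navigation(self, target, label):
        self.navigation.append((label, target.title))


def split_to_chunks(text, size):
    """Naive fixed-size split; a real splitter would respect sentence boundaries."""
    return [text[i:i + size] for i in range(0, len(text), size)]


def build_exhibit_intent(title, text, size=PRESENTATION_LENGTH_THRESHOLD):
    """Create the exhibit intent and, for long texts, its cascading detail intents."""
    if len(text) < size:
        return Intent(title, text)
    parts = split_to_chunks(text, size)
    exhibit = Intent(title, parts[0])  # the first chunk is the exhibit entry point
    previous = exhibit
    for number, part in enumerate(parts[1:], start=1):
        detail = Intent(f"{title} - more {number}", part)
        previous.add_navigation(detail, "More")
        detail.add_navigation(previous, "Previous page")
        detail.add_navigation(exhibit, "Return to exhibit")
        exhibit.chunks.append(detail)
        previous = detail  # the next chunk is linked to this one
    return exhibit


def build_itinerary(name, exhibits):
    """Link exhibit intents under an itinerary entry intent with next/previous controls."""
    entry = Intent(name, f"Itinerary: {name}")
    previous = None
    for title, text in exhibits:
        exhibit = build_exhibit_intent(title, text)
        exhibit.add_navigation(entry, "Return to reflective topic")
        entry.follow_ups.append(exhibit)
        if previous is not None:
            previous.add_navigation(exhibit, "Next exhibit")
            exhibit.add_navigation(previous, "Previous exhibit")
            # Let the user skip the detail pages of the previous exhibit.
            for detail in previous.chunks:
                detail.add_navigation(exhibit, "Next exhibit")
        previous = exhibit
    return entry


# Usage with placeholder texts: a long description followed by a short one.
entry = build_itinerary("Appearance", [("Demeter", "x" * 700), ("Gotsuli", "short")])
```

With the placeholder input above, the 700-character description is split into an entry chunk plus two detail intents, each of which also offers a direct jump to the following exhibit.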

2.3. Automating the Creation of Chatbots

The procedure described in Section 2.2 prescribes a method for utilizing the data from an appropriately modeled information repository and working through the interactive environment of Google DialogFlow to create the chatbot. This work is tedious and error-prone, especially when working with repositories with a large number of exhibits and/or multiple navigation paths; moreover, whenever exhibit content is updated, additional work is needed to mirror the updates in the chatbot. To this end, we have explored methods for automating the chatbot creation process; these are presented in this section by:

- describing how the necessary data can be extracted from the repository hosting the information model presented in Section 2.1;

- detailing how the data can be mapped to appropriate representations that can be directly imported to the Google DialogFlow engine.

At this point, it is worth noting that chatbots running on top of the Google DialogFlow engine automatically utilize the advanced features of the Google DialogFlow platform for user interaction with the chatbot, including natural language processing for input, text-to-speech for output, and so forth. Therefore, in the following paragraphs we do not address issues related to the user interaction with the chatbot, including NLP features, but only focus on the mapping of the chatbot model to the structures of the Google DialogFlow engine.

The automation of chatbot creation speeds up the creation or update of chatbots and removes the risk of human errors in the process of mapping the information model to chatbot constructs, including omissions of paths or exhibits, erroneous linking, and missing or misplaced textual or visual resources. It is noted, though, that chatbots still need to be tested before deployment to ascertain that itineraries are correctly modeled and presented, since some errors may evade detection when checks are performed only at the information-model level.

2.3.1. Data Extraction from the Information Repository

In Section 2.1, ontology excerpts for the representation of the information model used for the creation of the chatbot are presented. Currently, RDF triple stores are the predominant systems for storing and managing semantically annotated data, and, typically, information is retrieved from these systems using the SPARQL query language [44,45]. In this subsection, we present how different information elements are extracted using SPARQL queries.

Firstly, the reflective topics modeled by the museum curators need to be extracted. Reflective topics are instances of the ‘ReflectiveTopic’ class; hence, the relevant SPARQL query retrieves all instances of the ‘ReflectiveTopic’ class, as illustrated in Listing 2.

Listing 2. SPARQL query for retrieving the reflective topics.

PREFIX rdfs: <http://www.w3.org/2000/01/rdf-schema#>

PREFIX rdf: <http://www.w3.org/1999/02/22-rdf-syntax-ns#>

PREFIX cc: <http://www.crosscult.eu/KB#>

PREFIX crm: <http://erlangen-crm.org/current/>

SELECT ?iri ?name ?description ?firstElement ?lastElement

WHERE {{?iri rdf:type cc:ReflectiveTopic} .

{?iri rdfs:label ?name} .

{?iri crm:P3_has_note ?description} .

{?iri cc:isNarratedBy ?narrationAxis} .

{?narrationAxis cc:isRealizedBy ?navigationPath} .

{?navigationPath cc:hasFirst ?firstElement} .

{?navigationPath cc:hasLast ?lastElement}

}

Similar queries can be used to retrieve elements of other navigation paths, e.g., historic periods or locations of discovery. Note that the ‘iri’ element returned by the query (the first variable in the select list) is effectively the unique identifier of the reflective topic. The fields ‘firstElement’ and ‘lastElement’ returned by the query will be used for ordering the exhibits participating in the itinerary in the following steps.
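SPARQL endpoints commonly return SELECT results in the W3C SPARQL 1.1 Query Results JSON format. The sketch below flattens such a document into plain records keyed by the variable names of Listing 2; the function name and the sample IRIs are hypothetical, and the sample data are placeholders rather than content of the museum's knowledge base.

```python
import json


def bindings_to_rows(sparql_json):
    """Flatten a SPARQL 1.1 JSON result document into a list of plain dicts."""
    doc = json.loads(sparql_json)
    variables = doc["head"]["vars"]
    rows = []
    for binding in doc["results"]["bindings"]:
        # Unbound variables are simply absent from a binding; map them to None.
        rows.append({v: (binding[v]["value"] if v in binding else None)
                     for v in variables})
    return rows


# Placeholder result document (hypothetical IRIs, not real museum data).
SAMPLE = """
{
  "head": {"vars": ["iri", "name", "description", "firstElement", "lastElement"]},
  "results": {"bindings": [
    {
      "iri": {"type": "uri", "value": "http://example.org/topic/appearance"},
      "name": {"type": "literal", "value": "Appearance"},
      "firstElement": {"type": "uri", "value": "http://example.org/element/1"},
      "lastElement": {"type": "uri", "value": "http://example.org/element/3"}
    }
  ]}
}
"""

topics = bindings_to_rows(SAMPLE)
```

Each record then carries the topic identifier together with the first and last elements of its navigation path, which is the information the subsequent ordering step relies on.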

It is also possible to retrieve all narration axes from the knowledge base, exploiting the modeling property that each narration axis that should be realized by the chatbot is linked to its concept through a ‘narrates’ relation (the inverse of the ‘isNarratedBy’ relation, omitted from Figure 2 to avoid clutter). This is accomplished using the SPARQL query illustrated in Listing 3.

Listing 3. SPARQL query for retrieving all concepts having an associated narration axis.

PREFIX rdfs: <http://www.w3.org/2000/01/rdf-schema#>

PREFIX rdf: <http://www.w3.org/1999/02/22-rdf-syntax-ns#>

PREFIX cc: <http://www.crosscult.eu/KB#>

PREFIX crm: <http://erlangen-crm.org/current/>

SELECT ?narAxisIri ?conceptIri ?conceptName ?conceptDescription

?firstElement ?lastElement

WHERE {{?narAxisIri rdf:type cc:NarrationAxis} .

{?narAxisIri cc:narrates ?conceptIri} .

{?conceptIri rdfs:label ?conceptName} .

{?conceptIri crm:P3_has_note ?conceptDescription} .

{?narAxisIri cc:isRealizedBy ?navigationPath} .

{?navigationPath cc:hasFirst ?firstElement} .

{?navigationPath cc:hasLast ?lastElement}

}

When processing a reflective topic (or any other concept with an associated narration axis) whose IRI is ID, the list of exhibits associated with it needs to be retrieved. This can be accomplished by following the ‘isNarratedBy’ and ‘isRealizedBy’ relations and finally using the ‘hasElements’ relation to retrieve all navigation path elements. The ‘refersTo’ relation can be used to obtain the information of the associated exhibit. The relevant SPARQL query is depicted in Listing 4. Note that the query retrieves both the exhibit label (title) and description, as well as the relevant fields from the navigation path element instance, which are specifically tailored to the needs of the specific narration axis. The chatbot will use the fields from the navigation path element instance; however, it may make available the respective elements of the exhibit to the user depending on the user profile, e.g., researchers may have all the pertinent data available, while descriptions with specialized terminology or complex wording may not be presented to the general public.

Listing 4. SPARQL query for retrieving the exhibits associated with a reflective topic.

PREFIX rdfs: <http://www.w3.org/2000/01/rdf-schema#>

PREFIX rdf: <http://www.w3.org/1999/02/22-rdf-syntax-ns#>

PREFIX cc: <http://www.crosscult.eu/KB#>

PREFIX crm: <http://erlangen-crm.org/current/>

SELECT ?elementIRI ?elementName ?elementDescription

?exhibitIri ?exhibitName ?exhibitDescription

?previous ?next

WHERE {{<ID> cc:isNarratedBy ?narrationAxis} .

{?narrationAxis cc:isRealizedBy ?navigationPath} .

{?navigationPath cc:hasElements ?elementIRI} .

{?elementIRI rdfs:label ?elementName} .

{?elementIRI crm:P3_has_note ?elementDescription} .

{?elementIRI cc:hasPrevious ?previous} .

{?elementIRI cc:hasNext ?next} .

{?elementIRI cc:refersTo ?exhibitIri} .

{?exhibitIri rdfs:label ?exhibitName} .

{?exhibitIri crm:P3_has_note ?exhibitDescription}

}
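When the query of Listing 4 is issued programmatically, the `<ID>` placeholder has to be replaced by the IRI of the concept being processed. The following sketch shows one cautious way of doing so; the function name is hypothetical, the query is restated with plain triple patterns (equivalent to the grouped form of Listing 4), and the validation simply rejects the characters that the SPARQL grammar does not allow between angle brackets, so that a malformed identifier cannot alter the query.

```python
import re

# Characters the SPARQL grammar forbids inside <...> (the IRIREF production).
_FORBIDDEN_IN_IRI = re.compile(r'[<>"{}|^`\\\x00-\x20]')

# Listing 4, restated with plain triple patterns; <ID> is the placeholder.
EXHIBITS_QUERY_TEMPLATE = """\
PREFIX rdfs: <http://www.w3.org/2000/01/rdf-schema#>
PREFIX cc: <http://www.crosscult.eu/KB#>
PREFIX crm: <http://erlangen-crm.org/current/>
SELECT ?elementIRI ?elementName ?elementDescription
       ?exhibitIri ?exhibitName ?exhibitDescription
       ?previous ?next
WHERE {
  <ID> cc:isNarratedBy ?narrationAxis .
  ?narrationAxis cc:isRealizedBy ?navigationPath .
  ?navigationPath cc:hasElements ?elementIRI .
  ?elementIRI rdfs:label ?elementName .
  ?elementIRI crm:P3_has_note ?elementDescription .
  ?elementIRI cc:hasPrevious ?previous .
  ?elementIRI cc:hasNext ?next .
  ?elementIRI cc:refersTo ?exhibitIri .
  ?exhibitIri rdfs:label ?exhibitName .
  ?exhibitIri crm:P3_has_note ?exhibitDescription
}
"""


def exhibits_query_for(topic_iri):
    """Return the Listing 4 query with <ID> replaced by the given IRI."""
    if not topic_iri or _FORBIDDEN_IN_IRI.search(topic_iri):
        raise ValueError(f"not a valid IRI for a SPARQL query: {topic_iri!r}")
    return EXHIBITS_QUERY_TEMPLATE.replace("<ID>", f"<{topic_iri}>")


# Usage with a hypothetical instance name in the CrossCult namespace.
query = exhibits_query_for("http://www.crosscult.eu/KB#Appearance")
```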

Once the information from Listing 2 (or Listing 3) and Listing 4 has been retrieved, all data needed by the algorithm presented in Listing 1 are available, and therefore the relevant steps can be performed. The ‘firstElement’ and ‘lastElement’ fields retrieved by Listing 2 (or Listing 3), which hold the values of the ‘hasFirst’ and ‘hasLast’ relations, combined with the ‘previous’ and ‘next’ fields of the individual navigation path elements retrieved by Listing 4, can be used to fully determine the order of the exhibits within the itinerary.
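The ordering step can be sketched as a walk over the ‘next’ links, starting from the first element and stopping at the last one. The sketch assumes that the query results are available as dicts keyed by the variable names of Listing 4 and that every element of the path, including the first and the last, appears among them; how the missing ‘previous’ of the first element and the missing ‘next’ of the last element are represented in the knowledge base is not stated in the text, so `None` is used here as a placeholder. The function name and the sample rows are hypothetical.

```python
def order_elements(rows, first_iri, last_iri):
    """Return the navigation path elements in itinerary order by following 'next'."""
    by_iri = {row["elementIRI"]: row for row in rows}
    ordered, seen = [], set()
    current = first_iri
    while current is not None:
        if current in seen:
            raise ValueError(f"cycle detected at {current}")
        if current not in by_iri:
            raise ValueError(f"dangling link to {current}")
        seen.add(current)
        ordered.append(by_iri[current])
        if current == last_iri:
            break
        current = by_iri[current].get("next")
    if not ordered or ordered[-1]["elementIRI"] != last_iri:
        raise ValueError("path does not end at the expected last element")
    return ordered


# Placeholder rows in arbitrary order (hypothetical identifiers).
rows = [
    {"elementIRI": "e2", "elementName": "B", "previous": "e1", "next": "e3"},
    {"elementIRI": "e3", "elementName": "C", "previous": "e2", "next": None},
    {"elementIRI": "e1", "elementName": "A", "previous": None, "next": "e2"},
]
itinerary = order_elements(rows, first_iri="e1", last_iri="e3")
```

Raising an error on cycles, dangling links or a path that stops short of the expected last element surfaces modeling mistakes before any intent is generated.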

2.3.2. Automating Mapping of Data to DialogFlow Information Elements

The DialogFlow engine allows for exporting and importing chatbot agents; this feature can be used to automatically create an appropriate representation for the chatbot, which can then be directly imported into the engine and thus become operational.

More specifically, the format used for importing a chatbot agent is a zip file, where each chatbot intent is represented as a distinct JSON file within the zip file. The representation of the chatbot discussed in this paper is available at https://github.com/costasvassilakis/museumChatbot (accessed on 13 July 2023). To create the representation of the chatbot in a format that is directly importable to the DialogFlow engine, the following procedure must be executed:

- initialize an empty directory on the file system;

- for each intent created by the algorithm, create a JSON representation of the intent according to the schema of the DialogFlow engine and store the representation in a file, within the created directory;

- create a zip file containing all the files within the populated directory.
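The three steps above can be sketched with the Python standard library as follows. The function name is hypothetical, each intent is assumed to be a plain dictionary carrying at least an 'id' key, and the sketch only covers the per-intent files described in the text; whether the resulting archive can be imported into DialogFlow without further files or fields has not been verified here.

```python
import json
import tempfile
import zipfile
from pathlib import Path


def package_intents(intents, zip_path):
    """Write each intent dict to '<id>.json' in a fresh directory, then zip it."""
    # Step 1: initialize an empty directory on the file system.
    with tempfile.TemporaryDirectory() as directory:
        # Step 2: store one JSON file per intent within the directory.
        for intent in intents:
            target = Path(directory) / f"{intent['id']}.json"
            target.write_text(
                json.dumps(intent, indent=2, ensure_ascii=False),
                encoding="utf-8",
            )
        # Step 3: create a zip file containing all files of the directory.
        with zipfile.ZipFile(zip_path, "w", zipfile.ZIP_DEFLATED) as archive:
            for file in sorted(Path(directory).glob("*.json")):
                archive.write(file, arcname=file.name)
    return zip_path
```

A call such as `package_intents(all_intents, "museum.zip")` would then produce the archive, with the temporary directory being removed automatically once the zip file has been written.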

In the second step, the creation of the intent representation needs to comply with the JSON schema used by the DialogFlow engine; the documentation of this schema provided by the Google Cloud platform has been analyzed and the mapping procedure has been crafted accordingly. The documentation is provided at https://cloud.google.com/dialogflow/es/docs/reference/rest/v2/projects.agent.intents#Intent (accessed on 13 July 2023). It should be noted that the documentation actually pertains to the Google Cloud API, not the export/import procedures of the DialogFlow engine, and small discrepancies have been identified; e.g., the name of the intent is listed as ‘displayName’ in the documentation, while in the JSON files it is listed as ‘name’. The schemas have been analyzed and compared and the automatic generation procedure has been tailored accordingly. The full presentation of the DialogFlow engine’s JSON schema is beyond the scope of this paper. Table 2 lists the most important elements of the JSON schema, describing the functionality of each element.

Table 2.

Elements of the DialogFlow engine’s JSON schema.

Listing 5 presents the algorithm used for mapping intents created by the algorithm in Listing 1 to DialogFlow representation; this representation can be then imported to the DialogFlow platform.

Listing 5. Algorithm for mapping intents created by the algorithm in Listing 1 to DialogFlow representation.

FUNCTION mapToDialogFlow(chatbot)

directory = createEmptyDirectory();

FOR EACH intent IN chatbot.getAllIntents() DO

// Create and populate a new dialogFlow intent construct

dialogFlowIntent = new DialogFlowIntent();

dialogFlowIntent.id = intent.getId().toUUID();

dialogFlowIntent.name = intent.getTitle();

// create the content of the element

content = new DialogFlowResponse();

content.messages[0].type = 0; // 0 means that this is the content

content.messages[0].title = intent.getTitle();

content.messages[0].speech = intent.getContent();

// add the content to the DialogFlow intent construct

dialogFlowIntent.responses.append(content);

// map navigation links

FOR EACH link IN intent.getLinks() DO

// Create the DialogFlow structure of the link

dialogFlowLink = new DialogFlowResponse();

dialogFlowLink.action = link.target();

dialogFlowLink.messages[0].type = "suggestion_chips";

dialogFlowLink.messages[0].suggestions[0].title = link.getNavigationText();

// add the link to the DialogFlow intent construct

dialogFlowIntent.responses.append(dialogFlowLink);

END FOR // FOR EACH link

// add parent link, if present

IF (intent.getParentIntent() <> NULL) THEN

dialogFlowIntent.parentId = intent.getParentIntent().getId().toUUID();

END IF

// Create appropriate JSON file

filename = directory.path + '/' + dialogFlowIntent.id + '.json';

dialogFlowIntent.saveAsJSON(filename);

END FOR // FOR EACH intent

// create zip file

createZipFile(chatbot.name + ".zip", directory.path);

END FUNCTION // FUNCTION mapToDialogFlow
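The per-intent mapping of Listing 5 can be sketched in Python as a function that returns a plain dictionary ready for JSON serialization. The sketch follows the structure of Listing 5 (one response for the content, then one response per navigation link). Two points are assumptions: the text does not explain how `toUUID()` derives identifiers, so a name-based UUID computed from the intent's IRI is used here to keep identifiers stable across regenerations, and the function and parameter names are hypothetical.

```python
import uuid


def intent_uuid(iri):
    """Derive a stable UUID from an IRI (assumed stand-in for toUUID())."""
    return str(uuid.uuid5(uuid.NAMESPACE_URL, iri))


def map_intent(iri, title, content, links, parent_iri=None):
    """Mirror Listing 5 for one intent.

    `links` is a list of (target action, button text) pairs.
    """
    # The content of the element; type 0 marks the content message.
    responses = [{"messages": [{"type": 0, "title": title, "speech": content}]}]
    # One response per navigation link, rendered as a suggestion chip.
    for target, text in links:
        responses.append({
            "action": target,
            "messages": [
                {"type": "suggestion_chips", "suggestions": [{"title": text}]}
            ],
        })
    intent = {"id": intent_uuid(iri), "name": title, "responses": responses}
    # Add the parent link, if present.
    if parent_iri is not None:
        intent["parentId"] = intent_uuid(parent_iri)
    return intent


# Usage with hypothetical identifiers and placeholder text.
demeter = map_intent(
    "http://example.org/intent/demeter",
    "Appearance List - Demeter",
    "Placeholder description of the exhibit.",
    [("demeter-more", "More..."), ("gotsuli", "Next exhibit")],
    parent_iri="http://example.org/intent/appearance",
)
```

Deriving the identifier from the IRI means that regenerating the chatbot after a content update yields the same intent and parent identifiers, so links between files remain consistent.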

Listing 6 presents an excerpt of a JSON file, corresponding to an exhibit of the museum.

Listing 6. Excerpt from the JSON file created via the mapping procedure.

{

"id": "0e6a460b-3206-4d06-a8d2-9999172f82d4",

"parentId": "778473fc-857b-4216-a00b-4e2d4d3e8853",

"name": "Appearance Antiohis - more ",

"contexts": [

"AntiohisApperance-followup"

],

"responses": [

{

"action": "AntiohisApperance.AntiohisApperance-more",

"messages": [

{

"type": "suggestion_chips",

"suggestions": [

{

"title": "More..."

}

]

},

{

"type": "0",

"title": "Appearance Antiohis - more",

"speech": [

"The clothes you are wearing today, did you choose them simply because you liked them or you want to tell something to the world? Ancient societies also found appearance important and clothes often function as a code between the person and the world. Archaeologist use items’ appearance to understand what this person was and the society he or she belonged to."

]

}

]

}

]

}

3. Results

In this section we present the results of the application of the methodology described in Section 2 for the implementation of a chatbot for the Museum of Tripolis, Greece. The chatbot was named ‘Pu’, after the Greek word ‘Πού’ meaning ‘where’. The data used for the chatbot were collected in the context of the CrossCult project [46]. On top of the DialogFlow implementation, the Kommunicate user interface was integrated (https://www.kommunicate.io/product/dialogflow-integration, accessed on 13 July 2023), to offer a more interactive and user-friendly environment.

3.1. Implementation of the Chatbot

This chatbot is based on a model that uses two distinct approaches for the organization of exhibits. The first one is based on ‘Reflective Topics’, where the following navigation paths are discerned:

- Education;

- Appearance;

- Daily life;

- Religion and rituals;

- Immortality/mortality;

- Social status;

- Names/animals/myths.

Due to resource constraints, only the first three reflective topics in the list above were implemented in ‘Pu’.

The second approach is based on the types of the exhibits, where exhibits are classified in the following categories:

- Statues;

- Bas-reliefs;

- Figurines;

- Funerary art;

- Man-made objects;

- Reliefs;

- Sculptures;

- Tombstones;

- Tondi (circular sculptures);

- Votive offerings.

The steps that were followed to realize the chatbot were as follows:

- The material that had been prepared for the narratives of the museum in the context of developing a digital application was studied and the three most prominent reflective topics for implementation within the chatbot engine were chosen.

- The exhibit type dimension was also selected for implementation, as this was considered to be the most comprehensible for users.

- The methodology presented in Section 2.2 was followed to create the chatbot.

All features listed in Section 2.2 were accommodated in the implementation, i.e., (a) presentation of the museum profile; (b) selection of the narration axis/dimension that will be followed (specific reflective topic or specific exhibit type); (c) traversal of the chosen narration axis; (d) ability to skip detailed presentation of an exhibit and move directly to the next one; and (e) ability to return to the start of a narration axis. Additionally, as noted above, the Kommunicate user interface was integrated on top of the DialogFlow engine, to offer a more interactive and user-friendly environment.

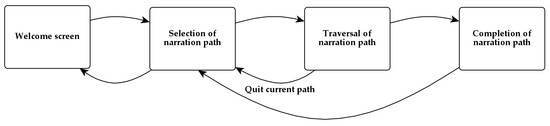

Figure 12 illustrates the overall mode of interaction of users with the chatbot.

Figure 12.

User interaction with the chatbot.

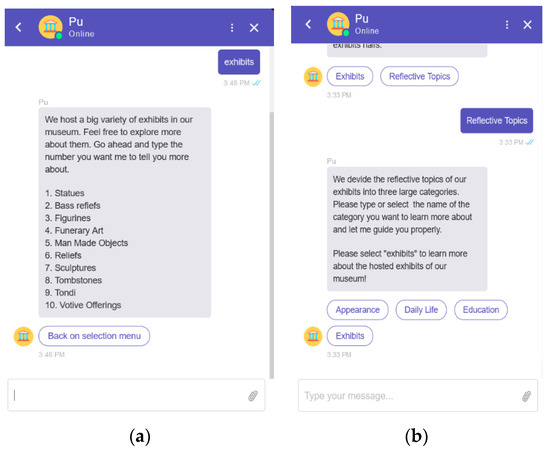

Figure 13 illustrates the chatbot dialogues for listing and selecting itineraries according to (a) the exhibit type and (b) the reflective topic.

Figure 13.

(a) List of exhibit types, (b) list of reflective topics.

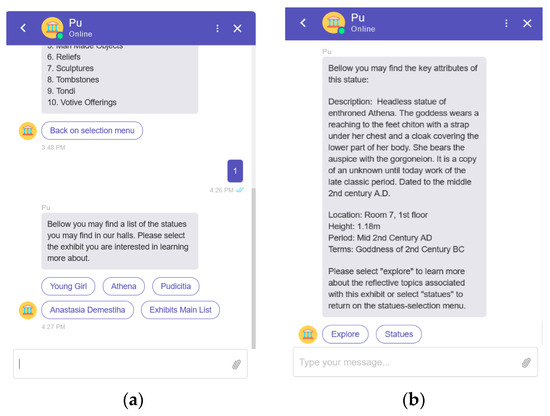

Figure 14 presents chatbot dialogue excerpts for (a) displaying the description of an exhibit category according to the exhibit type and its contents and (b) displaying details about an exhibit within the category.

Figure 14.

(a) Selecting the first type of exhibits (statues), (b) displaying detailed information about an exhibit.

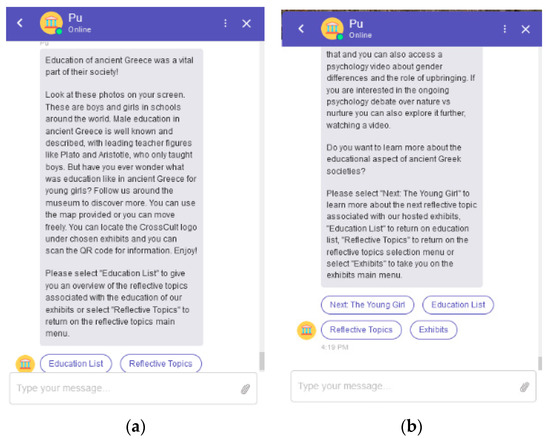

Finally, Figure 15 presents chatbot dialogue excerpts for (a) selecting a reflective topic and displaying the introductory information and (b) displaying information about an exhibit in the itinerary related to the reflective topic.

Figure 15.

(a) Selecting the ‘Education’ reflective topic, (b) displaying detailed information about an exhibit.

3.2. Evaluation

In order to gain insight into users’ perception of the chatbot, we conducted two user studies: one aimed at assessing the chatbot creation process and one aimed at evaluating the chatbot use. The results of these studies are presented in the following two subsections.

3.2.1. Evaluation of the Chatbot Creation Process

The first part of the evaluation was targeted to the stakeholders involved in the chatbot creation process. For the determination of the aspects to be evaluated and the formulation of the pertinent questionnaire, relevant public service creation user surveys were considered [47,48]; in particular, the aspects of learnability, efficiency, memorability, few errors and satisfaction were discerned as relevant for inclusion in this evaluation. The aspects of “few errors” and “efficiency” were measured as objective parameters, counting the number of mistakes made in the process and the time needed to perform the assigned task, respectively; the remaining aspects were assessed via subjective expression of opinion through the questionnaire illustrated in Table 3.

Table 3.

Questionnaire for assessing the subjective aspects of the chatbot creation process.

In this evaluation, four participants were asked to perform a part of the chatbot creation process and express their opinion regarding the different aspects of this process. Two participants were postgraduate students of cultural studies, one participant was a graduate of humanistic studies and one of them was a postgraduate student of computer science. None of them had prior experience with using the DialogFlow console.

The participants were first introduced to the DialogFlow console, covering only the agent (chatbot) creation and the intent creation steps. Participants were instructed to ignore the other elements of the DialogFlow console, including the entities and knowledge menus. Afterwards, participants were presented with the process of mapping narratives and exhibits to intents, including the chunking of lengthy descriptions into multiple, sequentially connected intents. Participants were allowed to ask questions and request clarifications and were also given the opportunity to practice with the creation of intents. The duration of this introduction ranged between 23 and 36 min.

Afterwards, each participant was asked to create a new narrative and insert four exhibits into it. The data needed for the creation of the narratives and the exhibits (title and description) were made available as word processor files, and participants could copy and paste text from the word processor file to the DialogFlow console. Users were asked to think aloud during the chatbot creation process and were permitted to turn to the interviewer for help. The time that each participant needed to perform each individual step and the whole process was recorded, and after the process was completed, participants filled in a short questionnaire (cf. Table 3), rating aspects on a 1–5 Likert scale, and were asked to express their opinion about the process.

The time needed for the participants to complete the task varied significantly, ranging from 28 min (for the computer science postgraduate student) to 1 h and 10 min. This difference is attributable not only to proficiency in using the (browser-based) DialogFlow interface but also to the practices used for copying text between the word processor and the browser; in particular, the computer science postgraduate student used the key combination ALT + TAB to rapidly switch between the word processor and the browser, whereas other participants used the mouse, which increased the time needed. It is worth noting that the time needed to complete the entry of an exhibit decreased by up to 23% between the entry of the first exhibit and the entry of the last (fourth) exhibit, indicating that the process is quickly learnable.

Users responded that the process was fairly straightforward (average: 4.25, min: 3, and max: 5) and learnable (average: 4.5, min: 4, and max: 5). The user interface received lower grades (average: 3.25, min: 3, and max: 4), mainly due to the fact that a number of fields in the intent modeling forms were unused and this encumbered the whole process. All users noted that the process was of low interest (average: 1.75, min: 1, and max: 3) and were positive towards the prospect of being assisted by an automation procedure.

Only a few errors, between 0 and 3 per subject, were observed; about 60% of the errors were related to entering information in the wrong user interface field, and the remaining 40% were related to omissions (i.e., some information was not entered in the DialogFlow console). Notably, most errors were observed in the creation of the narrative and the first two exhibits, i.e., in the first tasks that the users performed, while in the modeling of the last two exhibits only two errors were observed, further supporting the subjective view that the system is characterized by high learnability.

3.2.2. Evaluation of Chatbot Use

The second part of the evaluation was targeted toward the use of the chatbot by end-users. For the determination of the aspects to be evaluated and the formulation of the pertinent questionnaire, relevant chatbot usage surveys were consulted [7,49,50,51,52]; the aspects of ease of use, dialogue fluidity [49,53], user interface intuitiveness and pleasantness, and user willingness to use the chatbot were selected for measurement. All aspects were evaluated using subjective expression of opinions, via the questionnaire illustrated in Table 4. Willingness to use the chatbot was also explored via objective observations, through the actual self-motivated involvement of the subject in the exploration of additional narratives, beyond the tasks that the subjects were requested to complete.

Table 4.

Questionnaire for assessing the subjective aspects of the chatbot use.

Thirty-eight persons took part in the chatbot use evaluation survey. The participants were initially briefed for approximately four minutes on the use of the chatbot (presentation of the overall navigation structure, explanation of the narrative concept, and introduction of chatbot interaction and controls), and were then requested to choose and follow one narrative. Participants were allowed to select and follow a second narrative if they wished to. After the experience, participants were requested to complete a questionnaire.

All participants completed the first narrative, and six of the participants proceeded to traverse a second one. Users found the chatbot easy to use (average: 4.24, standard deviation: 0.75, min: 2, and max: 5) and reported that dialogues flowed fluidly (average: 4.03, standard deviation: 0.78, min: 2, and max: 5). The user interface was also found to be intuitive (average: 4.34, standard deviation: 0.63, min: 3, and max: 5) and quite pleasant (average: 3.87, standard deviation: 0.66, min: 2, and max: 5). The pleasant user interface criterion received the lowest marks, indicating that a more sophisticated user interface implementation is required. Ten users exploited the functionality of skipping additional exhibit details when information about an exhibit was split into chunks, i.e., they only viewed the first screenful of exhibit information and then moved to the next exhibit. Finally, users stated that they would like to follow additional narratives to explore additional content, including different aspects of exhibits they had already viewed (average: 4.45, standard deviation: 0.60, min: 3, and max: 5).

4. Discussion

The proposed work advances the state of the art by providing a framework through which chatbots can provide full guidance and a complete museum experience conveying the intended museum message, in contrast to supporting ad hoc queries for individual exhibits or fragmented experiences. It is also worth noting that the proposed approach is based on the theoretical framework of narratives, which formalizes the successful practice used by human museum guides, and has also been defined and successfully tested [39,40]. Additionally, the proposed approach accommodates the multiple facets of museum exhibits, which can be organized under different itineraries with specifically tailored storytelling and exhibit sequences. Furthermore, an algorithm is presented to automate museum chatbot generation, allowing the museums to create and maintain their own chatbots in a code-free environment. This algorithm utilizes the DialogFlow chatbot engine, directly exploiting all available features including augmented user interfaces and natural speech-based interaction.

The methodology presented in this paper has both practical and research implications. Practitioners and professionals may use the proposed methodology to construct chatbots for museums with low effort and cost. This is of particular importance for small- and medium-sized museums where budget is limited and IT personnel resources are scarce. The automation of the chatbot creation procedure can relieve the museum staff from a tedious and uninteresting task, necessitating, however, the introduction of a narration modeling application. In the research domain, several aspects of the proposed method may be further examined and/or extended. Firstly, additional concepts of the DialogFlow engine, such as Knowledge Connectors (components that analyze documents such as exhibit descriptions (https://cloud.google.com/dialogflow/es/docs/knowledge-connectors, accessed on 13 July 2023)), can be used to underpin the finding of automated responses. Additional platforms can be surveyed to explore how concepts offered therein (e.g., scenes offered by OpenDialog (https://docs.opendialog.ai/scenes, accessed on 13 July 2023)) can be exploited to offer more features or richer interaction. The chatbot may also analyze interaction patterns or profile information of users to adjust the content presented to the user; e.g., prioritize certain reflective topics or exhibits according to the user profile, or adjust the level of detail of the presented content to match the preferences of the user (e.g., when the user consistently chooses to skip screenfuls with additional details, the relevant navigations can be concealed).

A limitation of the proposed methodology is that it requires a specifically crafted information repository, accommodating the narration axes and exhibit information. However, many museums have bought and used specific applications to catalogue information in proprietary repositories whose schema does not match the one required by the proposed approach. To this end, schema transformations will need to be developed to align the data to the needed storage schema and avoid excessive manual work. Moreover, the proposed work automates the chatbot generation process and results in a functional but quite basic user interface. This is also true when the chatbot is manually created through the DialogFlow console and has been noted by users during the evaluation. While the user interface can be improved and be made more aesthetically pleasing, a user interface created by a user experience expert is bound to be more attractive or include more features tailored to the specific user needs. This tradeoff is common in automatically generated software, and the museum management should weigh the pros and cons of each approach for the specific implementation to decide on the most suitable option. Decoupling the chatbot logic from the user interface is a path that will be explored to allow for automating the creation of the chatbot logic, based on the algorithms presented in this work, and linking this logic to custom-made user interfaces (which can be developed more rapidly and at limited cost), combining the positive features of the two approaches.

5. Conclusions

In this paper, we have presented a methodology for creating chatbots that are able to guide their users along carefully prepared itineraries, with each itinerary corresponding to a story told by museum exhibits and conveying a specific message. This methodology overcomes the limitations of the existing approaches, which may (a) focus only on exhibits, where users are expected to ask proper questions, with interactions being dependent only on the user’s intuition; (b) fail to provide any means to guide the user through the museum; or (c) offer only a single navigation path, even when guidance through the museum is available.

The presented methodology entails two phases, with the first one providing guidance on how the museum information can be structured to best support the chatbot creation process, and the second one comprising an algorithm for using the structured information to create an intent-based chatbot. The proposed methodology can be fully automated, using queries to the information repositories to retrieve the information relevant to each step of the algorithm, and then mapping the information retrieved to the chatbot engine concepts. The mappings of the information elements to the concepts of the DialogFlow chatbot engine are outlined. Finally, a proof-of-concept implementation is provided concerning a chatbot for the Museum of Tripolis, using data curated in the context of the CrossCult H2020 project.

Different ways of handling and presenting exhibits that participate in multiple navigation paths may have diverse repercussions on user experience and the cognitive imprint of the interaction. Currently, navigation paths are treated in isolation: if an exhibit participating in multiple navigation paths is visited along a selected itinerary, the links to the other navigation paths are concealed. This allows the visitor to focus on the relation of the exhibit to the reflective topic or concept corresponding to the itinerary being followed, but it also deprives the visitor of a more holistic perspective on the exhibit. Whether the inclusion of this additional information (i.e., the relationship with other navigation paths) would indeed allow the user to acquire a more holistic perception of the exhibit, or would instead perplex navigation and weaken the cognitive imprint of the navigation path followed, is an issue that needs to be experimentally tested.
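The two presentation policies discussed above can be contrasted with a small sketch. The data layout (a `narrations` dictionary keyed by itinerary name) and the function `present_exhibit` are hypothetical; the default setting corresponds to the current behavior, in which links to other navigation paths are concealed, while the alternative setting is the variant that would need to be evaluated experimentally.

```python
from typing import Dict


def present_exhibit(exhibit: Dict, current_itinerary: str,
                    show_other_paths: bool = False) -> str:
    """Return the narration of an exhibit for the itinerary being followed."""
    text = exhibit["narrations"][current_itinerary]
    if show_other_paths:
        # Alternative policy: mention the other itineraries the exhibit belongs to
        others = sorted(
            name for name in exhibit["narrations"] if name != current_itinerary
        )
        if others:
            text += " This exhibit also appears in: " + ", ".join(others) + "."
    return text
```

Exposing the policy as a single flag would make it straightforward to assign visitors to either condition in a comparative user study.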

Author Contributions

Conceptualization, V.B., D.S., D.M., C.V., K.K., A.A., G.L., M.W. and V.P.; methodology, V.B., D.S., D.M., C.V., K.K., A.A., G.L., M.W. and V.P.; software, V.B., D.S., D.M., C.V., K.K., A.A., G.L., M.W. and V.P.; validation, V.B., D.S., D.M., C.V., K.K., A.A., G.L., M.W. and V.P.; data curation, V.B., D.S., D.M., C.V., K.K., A.A., G.L., M.W. and V.P.; writing—original draft preparation, V.B., D.S., D.M., C.V., K.K., A.A., G.L., M.W. and V.P.; writing—review and editing, V.B., D.S., D.M., C.V., K.K., A.A., G.L., M.W. and V.P. All authors have read and agreed to the published version of the manuscript.

Funding

Part of this work has been funded by the EU, under Grant #693150 (project ‘CrossCult: Empowering reuse of digital cultural heritage in context-aware crosscuts of European history’).

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Participants in the user study were informed about the purpose of the study and that the data they provided would be fully anonymized to remove any personal details and then used only for the computation of statistical measures.

Data Availability Statement

The chatbot implementation presented in this paper is available at https://github.com/costasvassilakis/museumChatbot (accessed on 13 July 2023).

Conflicts of Interest

The authors declare no conflict of interest.
