Abstract

It is well known that, for a fixed $\omega \neq 0$, all ω-circulant matrices can be simultaneously diagonalized by a transform matrix, which can be factored as the product of a diagonal matrix, depending on $\omega$, and of the unitary matrix associated with the Fast Fourier Transform. Hence, all the sets of ω-circulants form algebras whose computational power, in terms of complexity, is the same as that of the classical circulants ($\omega = 1$). However, stability is a delicate issue, since the condition number of the transform is equal to that of the diagonal part, which tends to infinity as $\omega$ tends to $0$. For $\omega = 0$, the set of related matrices is still an algebra, namely the algebra of lower triangular Toeplitz matrices, but its elements do not admit a common transform, since most of them (all except the multiples of the identity) are non-diagonalizable. In the present work, we review two modern applications, namely parallel computing in the preconditioning of PDE approximations and algorithms for subdivision schemes, and we emphasize the role of such algebras. For the two problems, a few numerical tests are conducted and critically discussed, and the related conclusions are drawn.

1. Introduction

When dealing with structured matrices of Toeplitz type [1,2] not related to fast trigonometric transforms, the computation of the solution of large linear systems, of matrix-vector products, or of eigenvalues can be greatly accelerated by using approximation matrices that belong to algebras associated with fast trigonometric transforms [3,4,5,6]. Among them, a very classical choice is given by the algebras of ω-circulant matrices (see the seminal book [7] and references therein). In this work, after briefly introducing these matrices and reviewing a few related applications, we focus on new, challenging problems, such as parallel computing in the preconditioning of approximated partial differential equations (PDEs) and algorithms for subdivision schemes, highlighting the benefits and drawbacks of employing ω-circulant matrices.

The paper is organized as follows. In Section 2 we introduce the basic notions on circulant and ω-circulant matrices. In Section 3, after recalling a technique for the parallel computation of the matrix-vector product and of the inverse of ω-circulant matrices, we present a general procedure (introduced by Bini [8]) to precondition (block) triangular Toeplitz linear systems. Then, we move on to scalar subdivision schemes, introducing them in Section 4 and presenting the interpolation model as a direct problem with its associated inverse problem in Section 5. Section 6 is devoted to selected numerical experiments and the related critical discussion. Finally, in Section 7 we draw conclusions and discuss a few open problems, with special attention to the use of structured matrices in subdivision schemes.

2. Some Remarks on Circulant and ω-Circulant Matrices

In this introductory section, we lay the groundwork for the main part of this paper by recalling the basic definitions and properties of both circulant and ω-circulant matrices. More information can be found in Ref. [7]. We recall that circulant and ω-circulant matrices have played a major role in the last four decades in the fast solution of structured linear systems, mainly of Toeplitz type, but also stemming from approximated PDEs. Classical references are Refs. [2,9,10,11,12,13,14], where preconditioned Krylov methods, local Fourier analysis, and its GLT generalizations are treated with attention to the circulant structure and to the related fast Fourier transform (FFT); see Ref. [15]. More specific references for the numerical treatment of PDEs via ω-circulant preconditioning, directly by multigrid solvers, or by a combination of them can be found in Refs. [10,16,17,18].

Definition 1.

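Consider $c = (c_0, c_1, \ldots, c_{n-1})^T$ with $c_j \in \mathbb{C}$. A square matrix is called circulant and it is denoted with $C_n(c)$ if we have

$$C_n(c) = \begin{pmatrix} c_0 & c_{n-1} & \cdots & c_2 & c_1 \\ c_1 & c_0 & c_{n-1} & & c_2 \\ \vdots & c_1 & c_0 & \ddots & \vdots \\ c_{n-2} & & \ddots & \ddots & c_{n-1} \\ c_{n-1} & c_{n-2} & \cdots & c_1 & c_0 \end{pmatrix}.$$

An equivalent and compact representation of $C_n(c)$ is given by

$$C_n(c) = \sum_{j=0}^{n-1} c_j Z_n^j,$$

where $Z_n$ is the permutation matrix

$$Z_n = \begin{pmatrix} 0 & & & 1 \\ 1 & 0 & & \\ & \ddots & \ddots & \\ & & 1 & 0 \end{pmatrix}.$$

Note that for any fixed $k \geq 0$ and any coefficients $a_0, \ldots, a_k$ the matrix $\sum_{j=0}^{k} a_j Z_n^j$ is circulant, since $Z_n^n = I_n$ and therefore this sum can always be traced back to the form in the above definition.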

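Denoting with $^*$ the conjugate transpose, circulant matrices admit the following spectral decomposition.

Proposition 1.

$C_n(c)$ can be diagonalized as

$$C_n(c) = F_n D_n(c) F_n^*, \qquad (1)$$

where

$$D_n(c) = \mathrm{diag}\left(\sqrt{n}\, F_n^* c\right)$$

is the diagonal matrix containing the eigenvalues of $C_n(c)$ and

$$F_n = \frac{1}{\sqrt{n}} \left( e^{-\mathrm{i} j \theta_k} \right)_{j,k=0}^{n-1}, \qquad \theta_k = \frac{2\pi k}{n},$$

is the unitary Fourier matrix.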

Proof.

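By a direct computation of the eigenvalues, it is easy to verify that $Z_n$ factorizes as

$$Z_n = F_n \Lambda_n F_n^*$$

with

$$\Lambda_n = \mathrm{diag}\left( e^{\mathrm{i}\theta_k} \right)_{k=0}^{n-1}.$$

Hence $C_n(c) = \sum_{j=0}^{n-1} c_j Z_n^j = F_n \left( \sum_{j=0}^{n-1} c_j \Lambda_n^j \right) F_n^*$, where $\sum_{j=0}^{n-1} c_j \Lambda_n^j = D_n(c)$, and the thesis follows. □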

Remark 1.

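The eigenvalues of $C_n(c)$, namely the diagonal elements of $D_n(c)$, are given by the (discrete) Fourier transform of the first column $c$ of $C_n(c)$. Alternatively, they can be seen as

$$\lambda_k\left(C_n(c)\right) = f(\theta_k), \qquad \theta_k = \frac{2\pi k}{n}, \quad k = 0, \ldots, n-1,$$

where the function $f(\theta) = \sum_{j=0}^{n-1} c_j e^{\mathrm{i} j \theta}$ is called (spectral) symbol of $C_n(c)$.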
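Proposition 1 and Remark 1 can be checked numerically with a short sketch. The Python code below (standard library only) is illustrative and is not the authors' implementation: a naive O(n²) discrete Fourier transform stands in for the FFT, and the helper names `dft`, `circulant`, `circulant_eigenvalues` and `circulant_matvec` are hypothetical. It computes $C_n(c)\,x$ as $F_n D_n(c) F_n^* x$ and compares the result with the dense product.

```python
import cmath


def dft(x, sign):
    """Naive O(n^2) DFT: y_k = sum_m x_m * exp(sign * 2*pi*i*m*k/n); a stand-in for an FFT."""
    n = len(x)
    return [sum(x[m] * cmath.exp(sign * 2j * cmath.pi * m * k / n) for m in range(n))
            for k in range(n)]


def circulant(c):
    """Dense C_n(c) with first column c: entry (r, s) is c[(r - s) mod n]."""
    n = len(c)
    return [[c[(r - s) % n] for s in range(n)] for r in range(n)]


def circulant_eigenvalues(c):
    """Diagonal of D_n(c) = diag(sqrt(n) F_n^* c), i.e. f(theta_k) = sum_m c_m e^{i m theta_k}."""
    return dft(c, +1)


def circulant_matvec(c, x):
    """C_n(c) x = F_n D_n(c) F_n^* x; the two 1/sqrt(n) factors of F_n are merged into 1/n."""
    n = len(c)
    lam = circulant_eigenvalues(c)
    xh = dft(x, +1)                          # sqrt(n) * F_n^* x
    yh = [l * v for l, v in zip(lam, xh)]    # multiply by D_n(c)
    return [v / n for v in dft(yh, -1)]      # sqrt(n) * F_n (.), then divide by n


c = [4.0, 1.0, 0.0, 2.0]
x = [1.0, -2.0, 3.0, 0.5]
dense = [sum(row[s] * x[s] for s in range(len(x))) for row in circulant(c)]
fast = circulant_matvec(c, x)
print(max(abs(d - f) for d, f in zip(dense, fast)))   # rounding-level difference
```

With an actual FFT in place of `dft`, the same three lines of `circulant_matvec` cost O(n log n).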

ω-circulant matrices represent an extension of the notion of circulant matrix (see, e.g., Refs. [6,19,20] for more details).

Definition 2.

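Consider $c = (c_0, c_1, \ldots, c_{n-1})^T$ with $c_j \in \mathbb{C}$, and $\omega \in \mathbb{C}$. A square matrix is called ω-circulant and it is denoted with $C_{n,\omega}(c)$ if it holds

$$C_{n,\omega}(c) = \begin{pmatrix} c_0 & \omega c_{n-1} & \cdots & \omega c_2 & \omega c_1 \\ c_1 & c_0 & \omega c_{n-1} & & \omega c_2 \\ \vdots & c_1 & c_0 & \ddots & \vdots \\ c_{n-2} & & \ddots & \ddots & \omega c_{n-1} \\ c_{n-1} & c_{n-2} & \cdots & c_1 & c_0 \end{pmatrix}.$$

A compact representation for $C_{n,\omega}(c)$ is given by

$$C_{n,\omega}(c) = \sum_{j=0}^{n-1} c_j Z_{n,\omega}^j,$$

where $Z_{n,\omega}$ is the matrix

$$Z_{n,\omega} = \begin{pmatrix} 0 & & & \omega \\ 1 & 0 & & \\ & \ddots & \ddots & \\ & & 1 & 0 \end{pmatrix}.$$

Note that setting $\omega = 1$ leads back to Definition 1. An analogous spectral decomposition can be derived.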

Proposition 2.

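If $\omega \neq 0$, $C_{n,\omega}(c)$ can be diagonalized as

$$C_{n,\omega}(c) = \Omega_n^{-1} F_n D_{n,\omega}(c) F_n^* \Omega_n, \qquad (2)$$

where

$$D_{n,\omega}(c) = \mathrm{diag}\left(\sqrt{n}\, F_n^* \Omega_n c\right) \qquad (3)$$

is the diagonal matrix containing the eigenvalues of $C_{n,\omega}(c)$ and $\Omega_n = \mathrm{diag}\left(1, \alpha, \alpha^2, \ldots, \alpha^{n-1}\right)$, with $\alpha \in \mathbb{C}$ such that $\alpha^n = \omega$.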

Proof.

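Let us write $\omega = \alpha^n$ with $\alpha \in \mathbb{C}$ and consider $\Omega_n = \mathrm{diag}(1, \alpha, \ldots, \alpha^{n-1})$. A direct computation shows that $\Omega_n Z_{n,\omega} \Omega_n^{-1} = \alpha Z_n$, so that $\Omega_n C_{n,\omega}(c) \Omega_n^{-1} = \sum_{j=0}^{n-1} c_j \alpha^j Z_n^j = C_n(\Omega_n c)$. Applying (1), the matrix is diagonalized as

$$\Omega_n C_{n,\omega}(c) \Omega_n^{-1} = F_n D_n(\Omega_n c) F_n^*, \qquad D_n(\Omega_n c) = \mathrm{diag}\left(\sqrt{n}\, F_n^* \Omega_n c\right),$$

and the thesis follows. □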

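The above factorization is equivalent to

$$C_{n,\omega}(c) = \Omega_n^{-1} C_n(\Omega_n c)\, \Omega_n,$$

with $\Omega_n c = (c_0, \alpha c_1, \ldots, \alpha^{n-1} c_{n-1})^T$, which, compared with (2), gives a deeper understanding of the role of $\Omega_n$: every ω-circulant matrix is similar, through the diagonal matrix $\Omega_n$, to the circulant matrix whose first column is $\Omega_n c$. As in the circulant case, we stress that for any fixed $k \geq 0$ and any coefficients $a_0, \ldots, a_k$ the matrix $\sum_{j=0}^{k} a_j Z_{n,\omega}^j$ is ω-circulant, since $Z_{n,\omega}^n = \omega I_n$.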

Remark 2.

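With $\omega = |\omega| e^{\mathrm{i}\psi}$ and $\alpha = |\omega|^{1/n} e^{\mathrm{i}\psi/n}$, the eigenvalues of $C_{n,\omega}(c)$ can be expressed as

$$\lambda_k\left(C_{n,\omega}(c)\right) = f_\omega(\theta_k), \qquad k = 0, \ldots, n-1,$$

where the function $f_\omega(\theta) = \sum_{j=0}^{n-1} c_j |\omega|^{j/n} e^{\mathrm{i} j (\theta + \psi/n)}$ is called (spectral) symbol of $C_{n,\omega}(c)$.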
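A similar sketch, under the same assumptions (naive DFT in place of the FFT, hypothetical helper names), applies the factorization of Proposition 2: the vector is scaled by $\Omega_n$, transformed, multiplied by the eigenvalues, transformed back and scaled by $\Omega_n^{-1}$. The principal n-th root of $\omega$ is used for $\alpha$; any other root would work as well, since it only reorders the eigenvalues.

```python
import cmath


def dft(x, sign):
    """Naive O(n^2) DFT: y_k = sum_m x_m * exp(sign * 2*pi*i*m*k/n); a stand-in for an FFT."""
    n = len(x)
    return [sum(x[m] * cmath.exp(sign * 2j * cmath.pi * m * k / n) for m in range(n))
            for k in range(n)]


def omega_circulant(c, omega):
    """Dense C_{n,omega}(c): Toeplitz with first column c, entries above the diagonal times omega."""
    n = len(c)
    return [[c[r - s] if r >= s else omega * c[n + r - s] for s in range(n)]
            for r in range(n)]


def omega_scaling(omega, n):
    """Diagonal of Omega_n = diag(1, alpha, ..., alpha^{n-1}), alpha = principal n-th root of omega."""
    alpha = complex(omega) ** (1.0 / n)
    return [alpha ** m for m in range(n)]


def omega_eigenvalues(c, omega):
    """Diagonal of D_{n,omega}(c) = diag(sqrt(n) F_n^* Omega_n c), i.e. f_omega(theta_k)."""
    w = omega_scaling(omega, len(c))
    return dft([wm * cm for wm, cm in zip(w, c)], +1)


def omega_circulant_matvec(c, omega, x):
    """C_{n,omega}(c) x = Omega_n^{-1} F_n D_{n,omega}(c) F_n^* Omega_n x (omega != 0)."""
    n = len(c)
    w = omega_scaling(omega, n)
    lam = omega_eigenvalues(c, omega)
    xh = dft([wm * xm for wm, xm in zip(w, x)], +1)   # sqrt(n) F_n^* Omega_n x
    y = dft([l * v for l, v in zip(lam, xh)], -1)     # n F_n D (F_n^* Omega_n x)
    return [y[m] / (n * w[m]) for m in range(n)]      # Omega_n^{-1} and normalization


c = [3.0, 1.0, -1.0, 2.0]
x = [1.0, 2.0, -1.0, 0.5]
for omega in (1.0, -1.0, 0.5, 0.3j):
    C = omega_circulant(c, omega)
    dense = [sum(C[r][s] * x[s] for s in range(4)) for r in range(4)]
    fast = omega_circulant_matvec(c, omega, x)
    print(omega, max(abs(d - f) for d, f in zip(dense, fast)))
```

Setting `omega = 1.0` reproduces the circulant case, while `omega = -1.0` gives the skew-circulant one.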

To conclude this section, we extend the above definitions and properties to the block case.

Definition 3.

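Consider $c = (c_0, c_1, \ldots, c_{n-1})$ with $c_j \in \mathbb{C}^{d \times d}$. A square matrix of size $nd$ is called ω-block circulant (with blocks of size $d$) and it is denoted with $C_{n,\omega}^{(d)}(c)$ if it holds

$$C_{n,\omega}^{(d)}(c) = \begin{pmatrix} c_0 & \omega c_{n-1} & \cdots & \omega c_1 \\ c_1 & c_0 & \ddots & \vdots \\ \vdots & \ddots & \ddots & \omega c_{n-1} \\ c_{n-1} & \cdots & c_1 & c_0 \end{pmatrix}.$$

A compact representation for $C_{n,\omega}^{(d)}(c)$ is given by

$$C_{n,\omega}^{(d)}(c) = \sum_{j=0}^{n-1} Z_{n,\omega}^j \otimes c_j.$$

For $\omega = 1$, Definition 3 describes the notion of $d$-block circulant matrices, while, as expected, for $d = 1$ it reduces to Definition 2. As in the case $d = 1$, for any fixed $k \geq 0$ and any coefficients $a_0, \ldots, a_k \in \mathbb{C}^{d \times d}$ the matrix $\sum_{j=0}^{k} Z_{n,\omega}^j \otimes a_j$ is ω-block circulant.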

Proposition 3.

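If $\omega \neq 0$, $C_{n,\omega}^{(d)}(c)$ can be block diagonalized as

$$C_{n,\omega}^{(d)}(c) = \left(\Omega_n^{-1} F_n \otimes I_d\right) D_{n,\omega}^{(d)}(c) \left(F_n^* \Omega_n \otimes I_d\right),$$

where $\Omega_n = \mathrm{diag}(1, \alpha, \ldots, \alpha^{n-1})$ with $\alpha^n = \omega$,

$$D_{n,\omega}^{(d)}(c) = \mathrm{blockdiag}_{k=0,\ldots,n-1} \left( \sum_{j=0}^{n-1} \alpha^j e^{\mathrm{i} j \theta_k} c_j \right),$$

and $I_d$ is the identity of size d.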

Remark 3.

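With $\omega = |\omega| e^{\mathrm{i}\psi}$, the eigenvalues of $C_{n,\omega}^{(d)}(c)$ can be expressed as

$$\lambda_\ell\left(f_\omega(\theta_k)\right), \qquad k = 0, \ldots, n-1, \quad \ell = 1, \ldots, d,$$

where $f_\omega(\theta) = \sum_{j=0}^{n-1} c_j |\omega|^{j/n} e^{\mathrm{i} j (\theta + \psi/n)}$ is a $d \times d$ matrix-valued function, called (spectral) symbol of $C_{n,\omega}^{(d)}(c)$, and $\lambda_\ell(f_\omega)$, $\ell = 1, \ldots, d$, are its eigenvalue functions.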

3. Parallel Solution and Preconditioning of Triangular Toeplitz Linear Systems

In this section, we present a parallel model to perform computations involving ω-circulant matrices and show an application to the preconditioning of triangular Toeplitz linear systems. The procedure, summarized in the following subsection, was introduced in 1987 by Bini, who in Ref. [8] addressed the problem of inverting a triangular Toeplitz matrix and proposed a parallel algorithm that exploits the properties of ω-circulant matrices.

3.1. Parallel Solution of (Block) Triangular Toeplitz Systems

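Since a diagonalization of ω-circulant matrices through the Fourier matrix is available, it can be used to solve a linear system of the form $C_{n,\omega}(c)\, x = b$, with $C_{n,\omega}(c)$ as in Definition 2, $\omega \neq 0$ and $b \in \mathbb{C}^n$, in an efficient way. More precisely, supposing that $C_{n,\omega}(c)$ is invertible and deriving from Proposition 2 the spectral decomposition

$$C_{n,\omega}(c)^{-1} = \Omega_n^{-1} F_n D_{n,\omega}(c)^{-1} F_n^* \Omega_n,$$

the solution $x = C_{n,\omega}(c)^{-1} b$ can be calculated as a matrix-vector product via the following algorithm:

- Compute $y = F_n^* (\Omega_n b)$ via the FFT, with sequential cost $O(n \log n)$;

- Compute $z = D_{n,\omega}(c)^{-1} y$, with sequential cost $O(n)$;

- Compute $x = \Omega_n^{-1} (F_n z)$ via the FFT, with sequential cost $O(n \log n)$.

This algorithm has a total sequential cost of $O(n \log n)$, including the computation of the eigenvalues in $D_{n,\omega}(c)$, which requires one more FFT, but, since all the steps can be fully parallelized, it can be lowered to $O(\log n)$ in a parallel model of computation.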
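The three steps above can be sketched as follows. This is a sequential illustration that assumes $\omega \neq 0$ and $C_{n,\omega}(c)$ invertible; a naive DFT stands in for the FFT, so the sketch costs O(n²) rather than O(n log n), and the helper names are hypothetical. Step 2 consists of n independent divisions, which is where the parallelism is most evident.

```python
import cmath


def dft(x, sign):
    """Naive O(n^2) DFT: y_k = sum_m x_m * exp(sign * 2*pi*i*m*k/n); a stand-in for an FFT."""
    n = len(x)
    return [sum(x[m] * cmath.exp(sign * 2j * cmath.pi * m * k / n) for m in range(n))
            for k in range(n)]


def omega_circulant_solve(c, omega, b):
    """Solve C_{n,omega}(c) x = b via Proposition 2 (omega != 0, matrix assumed invertible)."""
    n = len(c)
    alpha = complex(omega) ** (1.0 / n)                   # any alpha with alpha^n = omega
    w = [alpha ** m for m in range(n)]                    # diagonal of Omega_n
    lam = dft([w[m] * c[m] for m in range(n)], +1)        # eigenvalues: sqrt(n) F_n^* Omega_n c
    y = dft([w[m] * b[m] for m in range(n)], +1)          # step 1: sqrt(n) F_n^* Omega_n b
    z = [ym / lm for ym, lm in zip(y, lam)]               # step 2: n independent divisions
    u = dft(z, -1)                                        # step 3: sqrt(n) F_n z ...
    return [u[m] / (n * w[m]) for m in range(n)]          # ... then Omega_n^{-1} and 1/n


def omega_circulant(c, omega):
    """Dense C_{n,omega}(c), used only to check the result."""
    n = len(c)
    return [[c[r - s] if r >= s else omega * c[n + r - s] for s in range(n)]
            for r in range(n)]


c = [4.0, -1.0, 0.5, 0.0, 0.25]     # hypothetical first column
b = [1.0, 0.0, 2.0, -1.0, 3.0]
omega = 0.5
x = omega_circulant_solve(c, omega, b)
C = omega_circulant(c, omega)
residual = max(abs(sum(C[r][s] * x[s] for s in range(5)) - b[r]) for r in range(5))
print(residual)
```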

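Now, the key idea to compute the solution of a (lower) triangular Toeplitz linear system $A x = b$, with $b \in \mathbb{C}^n$ and

$$A = \begin{pmatrix} a_0 & & & \\ a_1 & a_0 & & \\ \vdots & \ddots & \ddots & \\ a_{n-1} & \cdots & a_1 & a_0 \end{pmatrix}, \qquad a_0 \neq 0,$$

consists in completing $A$ to the following ω-circulant

$$C_{n,\omega}(a) = \begin{pmatrix} a_0 & \omega a_{n-1} & \cdots & \omega a_1 \\ a_1 & a_0 & \ddots & \vdots \\ \vdots & \ddots & \ddots & \omega a_{n-1} \\ a_{n-1} & \cdots & a_1 & a_0 \end{pmatrix}$$

and observing that

$$\lim_{\omega \to 0} C_{n,\omega}(a) = A, \qquad \lim_{\omega \to 0} C_{n,\omega}(a)^{-1} b = A^{-1} b.$$

Hence, applying the algorithm described above, the solution of interest can be approximated by computing $C_{n,\omega}(a)^{-1} b$, in theory up to an arbitrarily small error for $\omega \to 0$. Nevertheless, in practice an arbitrarily small error may not be attainable, since the condition number of the transform $\Omega_n^{-1} F_n$ equals the condition number of $\Omega_n$, namely $|\omega|^{-(n-1)/n}$ for $0 < |\omega| < 1$, which tends to $|\omega|^{-1}$ as $n \to \infty$ and thus tends to infinity as $\omega \to 0$, causing stability issues. See the next subsection for a discussion in the setting of preconditioning.

The cost of this computation amounts to $O(n \log n)$ in a sequential implementation and to $O(\log n)$ in a parallel implementation. We refer the reader to Ref. [8] for details and for two strategies that allow for the retrieval of the exact solution.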

We stress that, although for the sake of clarity the above discussion takes into account the lower triangular case only, the technique can be easily extended to block triangular and upper triangular Toeplitz matrices.
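The completion trick can be observed on a small example. The sketch below embeds a lower triangular Toeplitz matrix with a hypothetical first column into $C_{n,\omega}(a)$, solves with an FFT-style ω-circulant solver (naive DFT in place of the FFT) for decreasing $\omega$, and compares the result with forward substitution. For moderate $\omega$ the error should decrease roughly proportionally to $\omega$; for very small $\omega$ the rounding errors amplified by the condition number of $\Omega_n$ are expected to take over, so the printed errors are worth inspecting rather than assuming that a smaller $\omega$ is always better.

```python
import cmath


def dft(x, sign):
    """Naive O(n^2) DFT: y_k = sum_m x_m * exp(sign * 2*pi*i*m*k/n); a stand-in for an FFT."""
    n = len(x)
    return [sum(x[m] * cmath.exp(sign * 2j * cmath.pi * m * k / n) for m in range(n))
            for k in range(n)]


def omega_circulant_solve(c, omega, b):
    """x = Omega_n^{-1} F_n D^{-1} F_n^* Omega_n b (Proposition 2), omega != 0."""
    n = len(c)
    alpha = complex(omega) ** (1.0 / n)
    w = [alpha ** m for m in range(n)]
    lam = dft([w[m] * c[m] for m in range(n)], +1)
    y = dft([w[m] * b[m] for m in range(n)], +1)
    u = dft([ym / lm for ym, lm in zip(y, lam)], -1)
    return [u[m] / (n * w[m]) for m in range(n)]


def forward_substitution(a, b):
    """Reference solution of the lower triangular Toeplitz system A x = b (a_0 != 0)."""
    x = []
    for r in range(len(b)):
        x.append((b[r] - sum(a[r - s] * x[s] for s in range(r))) / a[0])
    return x


n = 16
a = [1.0, 0.5, 0.25] + [0.0] * (n - 3)    # hypothetical first column of A
b = [1.0] * n
x_ref = forward_substitution(a, b)


def rel_err(y):
    return max(abs(u - v) for u, v in zip(y, x_ref)) / max(abs(v) for v in x_ref)


errors = {}
for omega in (1e-1, 1e-2, 1e-3, 1e-6, 1e-12):
    # C_{n,omega}(a) differs from A only by omega * a_{n+r-s} above the diagonal.
    errors[omega] = rel_err(omega_circulant_solve(a, omega, b))
    print(f"omega = {omega:.0e}  relative error = {errors[omega]:.2e}")
```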

3.2. Preconditioning for (Block) Triangular Toeplitz Systems

The algorithm described in the previous subsection can be applied in a very straightforward way to the preconditioning of linear systems with (block) triangular Toeplitz structure, allowing for an extremely efficient computation of the solution. On the other hand, special attention must be paid to the issue of stability, which strongly depends on the parameter $\omega$. We consider an example to present the approach, but we emphasize that the same basic ideas pertain to a variety of situations.

The example we use is taken from Ref. [21]. Here, Liu and Wu seek the numerical solution of the linear wave equation model

where the spatial domain is a bounded and open subset of $\mathbb{R}^d$ with Lipschitz boundary, the two initial conditions prescribe the solution and its time derivative at the initial time, and f is a given source term. The equations are discretized all-at-once in time by means of implicit finite-difference schemes (see Ref. [21] for details). In the two-dimensional case with a rectangular domain, the attained matrix shows the following block lower triangular Toeplitz structure

in which

- m is the number of grid nodes in the spatial mesh and $h$ is the spatial step size;

- $\tau$ is the time step size, obtained as $\tau = T/n$, where $T$ is the final time and n is the number of time steps;

- $I_m$ is the identity matrix of size m;

- $\Delta_h$ is the matrix obtained by discretizing the Laplacian operator in (4) with the central finite difference method.

The associated linear system encompasses all the time steps at once, and its solution corresponds to the solution to (4) at every time step simultaneously. In other words, if $u_j$ denotes the approximation of the solution to (4) at the j-th time step, the system has the form

and therefore the vectors $u_j$, $j = 1, \ldots, n$, are computed in parallel as the solution to the all-at-once linear system with the block lower triangular Toeplitz coefficient matrix.

To solve this linear system, the authors adopt a preconditioned GMRES method and construct a class of block ω-circulant preconditioners as follows. Defining two auxiliary matrices as

as described in Ref. [22], the coefficient matrix can be expressed in the form

Then, given the matrix as in Section 2 and

the generalized preconditioner is defined for as

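By exploiting the simultaneous diagonalization of the two ω-circulant matrices involved and the properties of the Kronecker product, the preconditioner can be written as

where $F_n$ is the Fourier matrix, $\Omega_n$ is the diagonal matrix of Proposition 2, and the diagonal factors contain the eigenvalues of the two ω-circulant matrices, computed from their first columns as in Proposition 2.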

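Therefore, given a vector $r$, the proposed algorithm for applying the inverse of the preconditioner to $r$ is the following:

- Compute the transformed vector $s = (F_n^* \Omega_n \otimes I_m)\, r$ via the FFT;

- For $k = 1, \ldots, n$, solve the $m \times m$ linear system whose coefficient matrix is the k-th diagonal block of the middle factor and whose right-hand side is the k-th block of dimension m of $s$, obtaining the k-th block of a vector $t$;

- Compute the solution $(\Omega_n^{-1} F_n \otimes I_m)\, t$ via the FFT.

This algorithm is, in its essence, identical to the one proposed by Bini, although we must observe that Ref. [8] is not cited in the work by Liu and Wu [21].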
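To make the three steps concrete, the following sketch solves a system with a block ω-circulant matrix of the form $C_{n,\omega}(a) \otimes I_m + C_{n,\omega}(g) \otimes K$. This form, the first columns `a` and `g`, and the matrix `K` (a stand-in for a scaled discrete Laplacian) are hypothetical and do not reproduce the specific matrices of Ref. [21]; what the sketch shows is the structure, i.e., one transform per spatial index, n independent m × m solves, and one inverse transform per spatial index, as allowed by Proposition 3. A naive DFT replaces the FFT and a small dense Gaussian elimination replaces a sparse spatial solver.

```python
import cmath


def dft(x, sign):
    """Naive O(n^2) DFT: y_k = sum_m x_m * exp(sign * 2*pi*i*m*k/n); a stand-in for an FFT."""
    n = len(x)
    return [sum(x[m] * cmath.exp(sign * 2j * cmath.pi * m * k / n) for m in range(n))
            for k in range(n)]


def solve_dense(M, rhs):
    """Gaussian elimination with partial pivoting for a small complex system."""
    n = len(rhs)
    A = [list(row) + [rhs[r]] for r, row in enumerate(M)]
    for col in range(n):
        piv = max(range(col, n), key=lambda r: abs(A[r][col]))
        A[col], A[piv] = A[piv], A[col]
        for r in range(col + 1, n):
            f = A[r][col] / A[col][col]
            for k in range(col, n + 1):
                A[r][k] -= f * A[col][k]
    x = [0j] * n
    for r in range(n - 1, -1, -1):
        x[r] = (A[r][n] - sum(A[r][k] * x[k] for k in range(r + 1, n))) / A[r][r]
    return x


def block_preconditioner_solve(a, g, K, omega, rhs):
    """Solve P x = rhs for the hypothetical P = C_{n,omega}(a) (x) I_m + C_{n,omega}(g) (x) K.

    rhs is a list of n blocks of length m (block j ~ time step j); omega != 0."""
    n, m = len(a), len(K)
    alpha = complex(omega) ** (1.0 / n)
    w = [alpha ** j for j in range(n)]                       # diagonal of Omega_n
    lam_a = dft([w[j] * a[j] for j in range(n)], +1)         # eigenvalues of C_{n,omega}(a)
    lam_g = dft([w[j] * g[j] for j in range(n)], +1)         # eigenvalues of C_{n,omega}(g)
    # Step 1: (F_n^* Omega_n (x) I_m) rhs, one length-n transform per spatial index i.
    hat = [dft([w[j] * rhs[j][i] for j in range(n)], +1) for i in range(m)]
    # Step 2: n independent m x m systems (lam_a[k] I_m + lam_g[k] K) t_k = s_k.
    t = []
    for k in range(n):
        Mk = [[(lam_a[k] if i == p else 0) + lam_g[k] * K[i][p] for p in range(m)]
              for i in range(m)]
        t.append(solve_dense(Mk, [hat[i][k] for i in range(m)]))
    # Step 3: (Omega_n^{-1} F_n (x) I_m) t, again one transform per spatial index.
    back = [dft([t[k][i] for k in range(n)], -1) for i in range(m)]
    return [[back[i][j] / (n * w[j]) for i in range(m)] for j in range(n)]


def omega_circulant(c, omega):
    n = len(c)
    return [[c[r - s] if r >= s else omega * c[n + r - s] for s in range(n)]
            for r in range(n)]


n, m, omega = 4, 3, 0.5
a = [1.0, -2.0, 1.0, 0.0]                 # hypothetical first columns (time direction)
g = [0.5, 0.25, 0.0, 0.0]
K = [[2.0, -1.0, 0.0], [-1.0, 2.0, -1.0], [0.0, -1.0, 2.0]]   # stand-in for a scaled Laplacian
rhs = [[float(j * m + i + 1) for i in range(m)] for j in range(n)]
x = block_preconditioner_solve(a, g, K, omega, rhs)

# Check: block j of P x is sum_l Ca[j][l] x_l + Cg[j][l] K x_l.
Ca, Cg = omega_circulant(a, omega), omega_circulant(g, omega)
residual = max(
    abs(sum(Ca[j][l] * x[l][i] + Cg[j][l] * sum(K[i][p] * x[l][p] for p in range(m))
            for l in range(n)) - rhs[j][i])
    for j in range(n) for i in range(m))
print(residual)
```

In a real implementation the n solves in Step 2 are independent and can be distributed across processors.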

We conclude this section by discussing the matter of stability when dealing with ω-circulants. While theoretically the aforementioned algorithm may be applied whenever $\omega \neq 0$, in practical implementations exceedingly small values of the parameter $\omega$ cause stability issues, which should be taken into account when stating the global precision of a solution method. In fact, as we mentioned in the previous subsection, the condition number of the transform is equal to that of $\Omega_n$, which tends to $|\omega|^{-1}$ as $n \to \infty$ and therefore tends to infinity as $\omega \to 0$.

We tested the stability of the procedure described above by fixing a vector, computing its product with the ω-circulant matrix, and retrieving the starting vector through the spectral decomposition of the same matrix, using the algorithm described above and in Ref. [21].

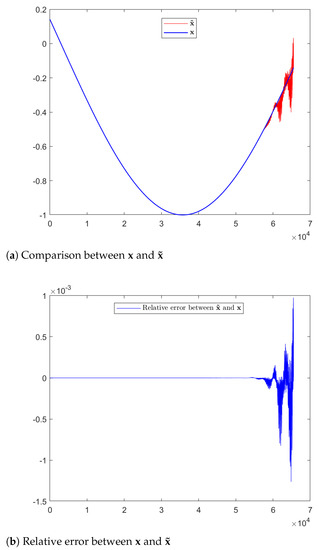

As a first example, we set , , and show in Table 1 the relative errors between the starting and the retrieved vectors as the parameter $\omega$ decreases. Clearly, as $\omega$ gets closer to zero, the error grows and the retrieved vector ceases to be an accurate approximation of the starting one, especially in its last components, in accordance with the accumulation of errors in the solution of a lower triangular system. In particular, for very small values of $\omega$ the error becomes even worse than that obtained with a direct, inherently sequential algorithm for triangular systems (). Figure 1 shows the plots of the two vectors for .

Table 1.

The relative error between the starting and the retrieved vectors as $\omega$ decreases.

Figure 1.

The starting and the retrieved vectors for .

Our second example, where the starting vector is this time chosen at random, yields similar results, collected in Table 2. We observe that this matter is not addressed in Ref. [21].

Table 2.

The relative error between the randomly chosen starting vector and the retrieved vector as $\omega$ decreases.
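A round-trip test of the kind described above can be sketched as follows. The first column, the starting vector, and the values of $\omega$ are hypothetical and do not reproduce the setting of Tables 1 and 2; the product $C_{n,\omega}(c)\,x$ is formed densely and then inverted through Proposition 2, with a naive DFT in place of the FFT. The printed values of $|\omega|^{-(n-1)/n}$, the condition number of $\Omega_n$ for $0 < \omega < 1$, indicate how much rounding errors can be amplified.

```python
import cmath


def dft(x, sign):
    """Naive O(n^2) DFT: y_k = sum_m x_m * exp(sign * 2*pi*i*m*k/n); a stand-in for an FFT."""
    n = len(x)
    return [sum(x[m] * cmath.exp(sign * 2j * cmath.pi * m * k / n) for m in range(n))
            for k in range(n)]


def omega_circulant(c, omega):
    n = len(c)
    return [[c[r - s] if r >= s else omega * c[n + r - s] for s in range(n)]
            for r in range(n)]


def omega_circulant_solve(c, omega, b):
    """x = Omega_n^{-1} F_n D^{-1} F_n^* Omega_n b (Proposition 2), omega != 0."""
    n = len(c)
    alpha = complex(omega) ** (1.0 / n)
    w = [alpha ** m for m in range(n)]
    lam = dft([w[m] * c[m] for m in range(n)], +1)
    y = dft([w[m] * b[m] for m in range(n)], +1)
    u = dft([ym / lm for ym, lm in zip(y, lam)], -1)
    return [u[m] / (n * w[m]) for m in range(n)]


def round_trip_error(c, x, omega):
    """Relative max-norm error of C^{-1}(C x): C x formed densely, C^{-1} applied spectrally."""
    n = len(x)
    C = omega_circulant(c, omega)
    b = [sum(C[r][s] * x[s] for s in range(n)) for r in range(n)]
    x_tilde = omega_circulant_solve(c, omega, b)
    return max(abs(u - v) for u, v in zip(x_tilde, x)) / max(abs(v) for v in x)


n = 64
c = [1.0, 0.5, 0.25] + [0.0] * (n - 3)    # hypothetical first column
x = [1.0] * n                             # hypothetical starting vector
errors = {}
for omega in (0.5, 1e-2, 1e-6, 1e-10):
    cond_omega = omega ** (-(n - 1) / n)   # cond(Omega_n) for 0 < omega < 1
    errors[omega] = round_trip_error(c, x, omega)
    print(f"omega = {omega:.0e}  cond(Omega_n) = {cond_omega:.1e}  error = {errors[omega]:.1e}")
```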

Because of these instability issues, the use of preconditioned MINRES has recently emerged, where the preconditioner is taken from an algebra whose eigenvector matrix can be chosen as a real symmetric orthogonal and very stable transform (see Ref. [22] and the references therein). These methods, called all-at-once, have attracted remarkable attention in the last 10 years for their potential use in parallel-in-time methods for evolutionary PDEs; see Refs. [23,24,25,26] and their references. Further works on the matter can be found in Refs. [27,28], also for fractional differential equations.

We now consider the second application of ω-circulants, in the context of subdivision schemes for generating curves and surfaces.

4. Basic Ideas on Scalar Subdivision Schemes

A subdivision scheme is an iterative method that generates curves and surfaces based on successive refinements of a polygon or a mesh. The rules that determine these refinements can be formulated by linear, non-linear, or geometrical operators [29,30,31,32,33]. The case of linear rules is related to refinable functions in wavelet theory [34]. In this setting, the vertices of the polygon or mesh are the coefficients, in a particular basis, of the so-called subdivision curve or subdivision surface. In what follows we focus on the case of subdivision curves.

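Linear uniform subdivision schemes are based on the notion of refinable function, i.e., a function $\varphi$ satisfying a relation of the form

$$\varphi(t) = \sum_{j \in \mathbb{Z}} a_j \, \varphi(2t - j), \qquad (5)$$

and such that, given an initial set of control points $p^0 = \{p^0_j\}_{j=0}^{n-1}$ and the periodisation $p^0_{j+n} = p^0_j$, $j \in \mathbb{Z}$, the closed curve

$$c(t) = \sum_{j \in \mathbb{Z}} p^0_j \, \varphi(t - j)$$

can also be written as

$$c(t) = \sum_{j \in \mathbb{Z}} p^k_j \, \varphi(2^k t - j), \qquad k \geq 0, \qquad (6)$$

with

$$p^{k+1}_i = \sum_{j \in \mathbb{Z}} a_{i - 2j} \, p^k_j, \qquad i \in \mathbb{Z}, \qquad (7)$$

where for the set of new points the periodisation modulo $2^k n$ holds, i.e., $p^k_{j + 2^k n} = p^k_j$, $j \in \mathbb{Z}$.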

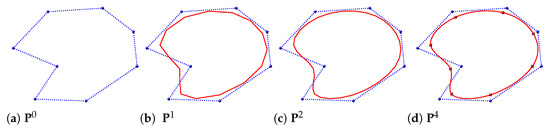

The relation in (7) is known as the subdivision rule and defines a subdivision scheme, while the coefficients $a_j$ in (5) form the so-called subdivision mask. Thus, a subdivision scheme is an iterative method where a curve is generated by consecutive refinements of an initial polygon (see Figure 2). Regarding convergence, under suitable conditions on the mask it has been proven that the sequence of polygons with vertices $p^k$ converges uniformly to the curve $c$, whose smoothness depends on the mask, although in practice a few iterations are sufficient to produce a polygon that appears smooth to the human eye.

Figure 2.

Iterations of a non-interpolatory subdivision scheme. (a) Control points $p^0$ (blue balls). (b) First refinement, with vertices $p^1$. (c) Second refinement, with vertices $p^2$. (d) Fourth refinement and interpolated points $c(i)$ in the limit (red squares).
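The subdivision rule (7) on a closed polygon can be sketched in a few lines. The mask of the cubic B-spline scheme, $(a_{-2}, \ldots, a_2) = (1, 4, 6, 4, 1)/8$, is used only as a familiar odd-symmetric example; the function name `refine` is hypothetical, and each coordinate of the control points is refined separately.

```python
def refine(points, mask):
    """One step of rule (7) on a closed polygon: new[i] = sum_j a_{i-2j} points[j mod n].

    points: list of numbers (one coordinate; apply per coordinate for curves in R^2 or R^3).
    mask: dict {l: a_l} holding the finitely many non-zero mask coefficients.
    """
    n = len(points)
    new = []
    for i in range(2 * n):
        acc = 0.0
        for l, a in mask.items():
            if (i - l) % 2 == 0:                     # a_{i-2j} with j = (i - l) / 2
                acc += a * points[((i - l) // 2) % n]
        new.append(acc)
    return new


# Cubic B-spline mask (odd-symmetric, a_{-j} = a_j), used here for illustration.
cubic = {-2: 1 / 8, -1: 4 / 8, 0: 6 / 8, 1: 4 / 8, 2: 1 / 8}
p0 = [0.0, 1.0, 0.0, 0.0]
p1 = refine(p0, cubic)
print(p1)   # [0.125, 0.5, 0.75, 0.5, 0.125, 0.0, 0.0, 0.0]
```

Each step doubles the number of points, in agreement with the periodisation modulo $2^k n$.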

The sampling of the curve at integer parameters is derived from (5) and reads as

$$c(i) = \sum_{j \in \mathbb{Z}} s_{i-j} \, p^0_j, \qquad s_\ell = \varphi(\ell), \qquad i \in \mathbb{Z}. \qquad (8)$$

The set of values $s = \{s_\ell\}_{\ell \in \mathbb{Z}}$ is called first limit stencil and it can be obtained from a linear system of equations stemming from (5) (see Ref. [35]) or by performing the spectral analysis of the local subdivision matrix (see Refs. [33,36,37]). In a similar way, the second limit stencil and the higher order stencils, which collect the values at the integers of the derivatives of $\varphi$, can be determined. Hence, by (6) the k-th derivative of the curve at dyadic parameters is

The mask and the stencils have compact support and, therefore, the refinable function $\varphi$ in (5) has compact support. Even though we use indices ranging over $\mathbb{Z}$, we are dealing with finite masks and stencils and, correspondingly, only a finite number of elements are non-zero.

Given the control points $p = (p^0_0, \ldots, p^0_{n-1})^T$, let us denote with $q = (q_0, \ldots, q_{n-1})^T$ the points obtained by evaluating the subdivision curve with (8), i.e., $q_i = c(i)$, $i = 0, \ldots, n-1$ (see Figure 2d). This problem is modeled by the linear operator $A$ such that

$$A p = q. \qquad (10)$$

The matrix $A$ represents the point interpolation operator for linear subdivision schemes. Note that $A$ is circulant, according to Definition 1, and its first row is the vector

Depending on the symmetry of the stencils, some particular cases may be analysed.

Definition 4.

A subdivision scheme is called

- Odd-symmetric if $a_{-j} = a_j$, $j \in \mathbb{Z}$;

- Even-symmetric if $a_{1-j} = a_j$ for $j \in \mathbb{Z}$.

This definition contains particular cases of the primal [38] and dual [39] form of subdivision schemes, respectively, and the corresponding limit stencils show the same type of symmetries [33]. As a consequence, the odd-order limit stencil inherits the odd or even symmetry:

Then, for the even-order limit stencil we get

For it holds and .

Now, let us consider the interpolation of n points $q_0, \ldots, q_{n-1}$ with a subdivision curve. It is natural to think of those points as a sampling of the curve at integer parameters, $q_i = c(i)$, $i = 0, \ldots, n-1$, as in (8). By doing so, we get the inverse problem associated with (10), i.e., the computation of the control points $p$ from the data $q$ (see Ref. [40]).

In this setting, an interpolation problem is said to be singular if the operator $A$ is singular, so that the problem is ill-posed for the selected stencil. In Section 5, we show that in some cases the singularity depends on the value of n.

To solve a non-singular interpolation problem where $A$ has a circulant structure, one can take advantage of the diagonalization through the Fourier matrix recalled in Section 2. However, the singular case requires a different strategy. In the literature on curve and surface schemes, such cases are treated by a fitting model [41] or by a fairness functional [42], introducing more points as degrees of freedom. Another possible approach consists in a regularization in the Tikhonov sense [40,43]. Moreover, it is possible to consider a non-singular perturbation of the matrix $A$. This is the strategy that we explore in Section 5.2, using a computationally convenient ω-circulant matrix.
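As an illustration of the operator in (10), the following sketch assembles $A$ from a first limit stencil with finitely many non-zero values, summing the contributions that fall on the same column after the periodisation modulo n. The values $(1/6, 2/3, 1/6)$ of the cubic B-spline at the integers are used only as an example, and the helper name is hypothetical.

```python
def interpolation_matrix(stencil, n):
    """Circulant A of (10): (A p)_i = c(i) = sum_l s_l p_{(i - l) mod n}, cf. (8).

    stencil: dict {l: s_l = phi(l)} with the non-zero values of the first limit stencil.
    Entries that fall on the same column after reduction mod n are summed (periodisation).
    """
    A = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for l, s in stencil.items():
            A[i][(i - l) % n] += s
    return A


# Values of the centered cubic B-spline at the integers (illustrative choice).
cubic_stencil = {-1: 1 / 6, 0: 2 / 3, 1: 1 / 6}
n = 5
A = interpolation_matrix(cubic_stencil, n)
p = [0.0, 1.0, 0.0, 0.0, 0.0]
q = [sum(A[i][j] * p[j] for j in range(n)) for i in range(n)]
print(q)   # samples c(0), ..., c(4) of the curve generated by a single unit control point
```

Solving $A p = q$ for given data $q$ is the inverse problem discussed above.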

The Block-Circulant Case for Hermite Interpolation

Considering the case of interpolating points and associated derivatives up to the -th order, with tangent interpolation as the first instance, Equation (9) provides some insight. Let be the data that we wish to interpolate and suppose that there exists a sequence such that the subdivision curve interpolates the data , i.e.,

If the curve is defined by n control points as in (10), we may get a solution to the point interpolation problem (10) that contradicts the values of higher order derivatives. Thus, in order to interpolate all the information in with an equal amount of variables and equations, we need to use control points with the periodisation , . A natural choice is to set the parameters in (13) as , . Then, from (9) we get the equations

which can be represented in matrix form as

where the blocks of the matrix satisfy for and

The structure of the matrix in (14) is the block adaptation of the scalar version (10), i.e., it is a block circulant matrix, as described in Definition 3. Indeed, when , and . Therefore, the Hermite problem represents the block extension of the point interpolation problem in the matrix sense and we can exploit the corresponding linear algebra tools to solve both inverse problems.

5. Interpolation Model with Scalar Subdivision Schemes

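Given the problem in (10), let us apply the tools available for circulant matrices to solve it. Indeed, by Remark 1 we deduce that the spectrum of $A$ is

$$\Lambda(A) = \left\{ f(\theta_k) : \theta_k = \frac{2\pi k}{n}, \; k = 0, \ldots, n-1 \right\}, \qquad (15)$$

and for stencils with compact support in $\mathbb{Z}$ the symbol can be written as

$$f(\theta) = \sum_{j \in \mathbb{Z}} s_j \, e^{\mathrm{i} j \theta}, \qquad (16)$$

independent of n. Thus, the singularity of $A$ depends on the existence of roots of $f$ in the grid $\{\theta_k\}_{k=0}^{n-1}$.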

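For odd-symmetric schemes, $s_{-j} = s_j$ and thus

$$f(\theta) = s_0 + 2 \sum_{j \geq 1} s_j \cos(j\theta). \qquad (17)$$

Conversely, for even-symmetric schemes $s_{1-j} = s_j$, hence

$$f(\theta) = 2\, e^{\mathrm{i}\theta/2} \sum_{j \geq 1} s_j \cos\left( \left(j - \tfrac{1}{2}\right) \theta \right). \qquad (18)$$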

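Either way, the first limit stencil satisfies $\sum_{j \in \mathbb{Z}} s_j = 1$. Note that for odd-symmetric schemes it holds

$$f(2\pi - \theta) = f(\theta),$$

while for even-symmetric schemes

$$f(2\pi - \theta) = \overline{f(\theta)}, \qquad \text{hence} \qquad |f(2\pi - \theta)| = |f(\theta)|.$$

Therefore, the study of the symbol can be restricted to the interval $[0, \pi]$ instead of $[0, 2\pi]$. In particular, for both (17) and (18), $\sum_{j} s_j = 1$ implies $f(0) = 1$.

From (18), for even-symmetric schemes we find $f(\pi) = 0$ independently of n and of the stencil. As $\pi$ belongs to the grid $\{\theta_k\}_{k=0}^{n-1}$ for even values of n, then from (16) we obtain the following result.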

Proposition 4.

For any even-symmetric subdivision scheme, if the number n of interpolated points is even, then the interpolation matrix $A$ in (10) is singular.
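Proposition 4 can be checked numerically by sampling the symbol (16) on the grid, since by (15) these samples are the eigenvalues of $A$. The sketch below uses the quadratic B-spline stencil $s_0 = s_1 = 1/2$ and a second, hypothetical even-symmetric stencil; for even n the smallest modulus should be at rounding level, while for odd n, for these two stencils, it stays away from zero.

```python
import cmath


def symbol(stencil, theta):
    """f(theta) = sum_l s_l e^{i l theta}, cf. (16)."""
    return sum(s * cmath.exp(1j * l * theta) for l, s in stencil.items())


def smallest_eigenvalue_modulus(stencil, n):
    """min_k |f(theta_k)|, theta_k = 2*pi*k/n: by (15), the smallest |eigenvalue| of A."""
    return min(abs(symbol(stencil, 2 * cmath.pi * k / n)) for k in range(n))


# Even-symmetric stencils (s_{1-j} = s_j); the second one is a hypothetical example.
quadratic = {0: 0.5, 1: 0.5}                 # quadratic B-spline values at the integers
other = {-1: 0.1, 0: 0.4, 1: 0.4, 2: 0.1}
for n in (6, 7, 8, 9):
    print(n, smallest_eigenvalue_modulus(quadratic, n), smallest_eigenvalue_modulus(other, n))
```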

On the other hand, in the context of odd-symmetric schemes the symbol may also vanish in the grid $\{\theta_k\}_{k=0}^{n-1}$. As an example, consider the primal family of J-spline schemes [38] for the particular case that generates subdivision curves

with first limit stencil

The symbol

has a root at $\theta = \pi$, which belongs to the grid $\{\theta_k\}_{k=0}^{n-1}$ when n is even.

In the Hermite interpolation scenario, the point and tangent interpolation operator can be singular even when the point interpolation operator is not. Let us consider for instance the cubic B-spline scheme, whose first and second limit stencils are , respectively. Then the corresponding matrices are

which is not singular, and

which is singular with kernel of dimension 1.

As a matter of fact, in the specific case of point and tangent interpolation with odd-symmetric schemes, the following proposition holds.

Proposition 5.

Using an odd-symmetric subdivision scheme and setting , the attained matrix is singular.

Proof.

5.1. A Solution by Shifting Parameters

Propositions 4 and 5 state that, for even-symmetric and odd-symmetric masks respectively, the point interpolation matrix (with n even) and the point and tangent interpolation matrix are singular, whatever the specific mask in the class. In this section, we propose a different interpolation model that avoids this inconvenience. What follows is motivated by Plonka's work [44] on Hermite interpolation with B-spline parametric curves.

Let us consider the dual subdivision schemes again. In Section 4, by interpolating at the integer parameters $t = i$, $i \in \mathbb{Z}$, we obtained the first limit stencil as

$$s_j = \varphi(j), \qquad j \in \mathbb{Z}.$$

Here we consider the variant

with . More specifically, given a positive integer , we investigate the method for varying in . The evaluation of the basic function is done as in [35].

Proposition 6.

For an odd-symmetric subdivision scheme with mask where , the stencil obtained with the set is even-symmetric.

Analogously, for an even-symmetric subdivision scheme with mask where , the stencil obtained with the set is odd-symmetric.

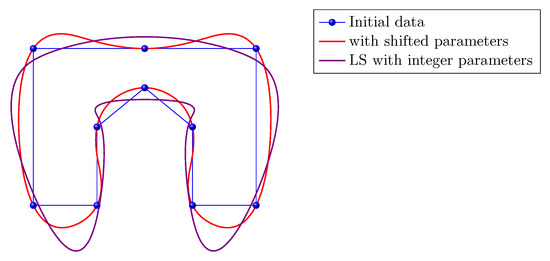

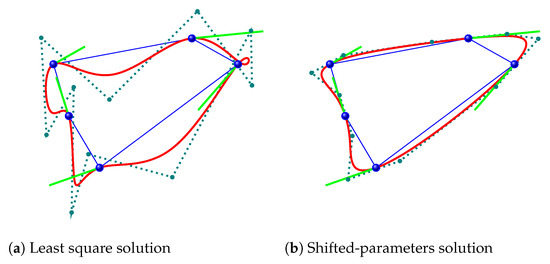

Our proposal consists in modifying the interpolation models (10) and (14) to . For point interpolation, the symbol changes from (18) to (17) and this way we resolve the singularity in Proposition 4. Figure 3 portrays an example of even-symmetric subdivision scheme where after a -shift the new matrix is nonsingular. However, when the new stencil leads to a trigonometric polynomial with roots in the grid it is not possible to avoid the singularity of the corresponding matrix. In such situations, one may consider the general approach , with or treat the singularity as discussed in the next subsection.
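The effect of the shift can be illustrated with the quadratic B-spline, i.e., the limit function of Chaikin's dual (even-symmetric) scheme, taken here with support $[-1, 2]$. This example and the half-integer shift are illustrative choices. Sampling at the integers gives the even-symmetric stencil $(1/2, 1/2)$, whose symbol vanishes at $\pi$; sampling at the half-integers gives the odd-symmetric stencil $(1/8, 3/4, 1/8)$, whose symbol $3/4 + \cos(\theta)/4$ never drops below $1/2$.

```python
import cmath


def symbol(stencil, theta):
    """f(theta) = sum_l s_l e^{i l theta}, cf. (16)."""
    return sum(s * cmath.exp(1j * l * theta) for l, s in stencil.items())


def smallest_eigenvalue_modulus(stencil, n):
    return min(abs(symbol(stencil, 2 * cmath.pi * k / n)) for k in range(n))


def quadratic_bspline(t):
    """Uniform quadratic B-spline supported on [-1, 2], symmetric about t = 1/2."""
    u = t + 1.0                       # shift to the standard support [0, 3]
    if 0 <= u < 1:
        return u * u / 2
    if 1 <= u < 2:
        return (-2 * u * u + 6 * u - 3) / 2
    if 2 <= u < 3:
        return (3 - u) ** 2 / 2
    return 0.0


def shifted_stencil(phi, tau, support=range(-3, 4)):
    """s^tau_j = phi(j + tau): stencil for interpolation at the parameters i + tau."""
    return {j: phi(j + tau) for j in support if phi(j + tau) != 0.0}


s0 = shifted_stencil(quadratic_bspline, 0.0)       # {0: 0.5, 1: 0.5}: even-symmetric
s_half = shifted_stencil(quadratic_bspline, 0.5)   # {-1: 0.125, 0: 0.75, 1: 0.125}: odd-symmetric
for n in (6, 8, 10):
    print(n, smallest_eigenvalue_modulus(s0, n), smallest_eigenvalue_modulus(s_half, n))
```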

Figure 3.

Point interpolation with a dual subdivision scheme [39] considering the shifted parameters.

Shifting the interpolation parameters provides an additional degree of freedom for the geometry of the interpolating curve (see Figure 4). Nevertheless, the symmetry provided by the subdivision scheme might be lost.

Figure 4.

Interpolation with quintic uniform B-spline at different parameter values.

Now let us consider the point and tangent interpolation with the interpolation at the parameters for odd-symmetric schemes. The first block (20) in the factorization of becomes

with

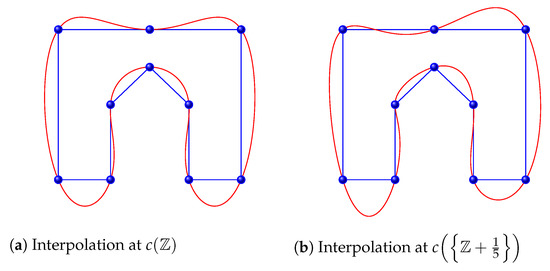

Unlike the unshifted case of Proposition 5, this block is no longer singular for every stencil. Figure 5b shows an example of an odd-symmetric subdivision scheme where, after the shift, the new matrix is nonsingular, while Figure 5a shows the least-squares solution of the unshifted problem, which in this case presents artifacts, namely loops.

Figure 5.

Interpolating points and tangents directions with a cubic B-spline curve by shifting the parameters.

In the cases of point interpolation with odd-symmetric schemes and point-tangent interpolation with even-symmetric schemes, we stick with the choice of interpolation at integer parameters.

5.2. Our Regularizing Strategy

We have already observed that in some cases the matrix obtained by interpolating at integer parameters is singular and that sometimes the singularity cannot be avoided even by a proper shift of the parameters. In those cases, independently of the interpolated points, the inverse of the point (or point and tangent) interpolation matrix is not defined and an alternative solution has to be chosen. The first idea is to use the pseudo-inverse of the matrix, providing a least-squares solution [41]. Another approach is regularization, which approximates the ill-posed problem by a family of neighboring well-posed problems [40]. Among the regularization methods for solving an ill-posed problem whose discrete form is the linear system $A p = q$, we mention the Tikhonov approach, which consists in solving the family of problems

$$\min_{p} \left\{ \|A p - q\|_2^2 + \mu \|L p\|_2^2 \right\}$$

depending on the regularization parameter $\mu > 0$. The latter controls the relative weight given to the residual norm and to the regularization term. The optimal value for $\mu$ can be chosen by the discrepancy principle, generalized cross-validation, or the L-curve method [43]. The operator L, which can be taken as the identity operator or a differential operator (for instance, the first or second derivative operator), is chosen to modify the least-squares solution so as to enforce special features of the regularized approximations [40,43]. To ensure that the solution is unique, it is required that $\ker(A) \cap \ker(L) = \{0\}$. This problem is equivalent to solving the normal equations $(A^* A + \mu L^* L)\, p = A^* q$ for each $\mu > 0$.
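For $L = I$ and a circulant $A$, the normal equations diagonalize in the Fourier basis, so each Tikhonov solve costs a few transforms. The sketch below assumes this special case (the choice $L = I$, the data and $\mu$ are illustrative), uses the singular even-symmetric example with n = 6, and verifies the normal equations with dense products; a naive DFT again stands in for the FFT.

```python
import cmath


def dft(x, sign):
    """Naive O(n^2) DFT: y_k = sum_m x_m * exp(sign * 2*pi*i*m*k/n); a stand-in for an FFT."""
    n = len(x)
    return [sum(x[m] * cmath.exp(sign * 2j * cmath.pi * m * k / n) for m in range(n))
            for k in range(n)]


def tikhonov_circulant(c, q, mu):
    """argmin_p ||A p - q||^2 + mu ||p||^2 for A = C_n(c) and L = I.

    With A = F_n D F_n^*, the normal equations (A^* A + mu I) p = A^* q decouple:
    p_hat_k = conj(lambda_k) q_hat_k / (|lambda_k|^2 + mu).
    """
    n = len(c)
    lam = dft(c, +1)
    qh = dft(q, +1)
    ph = [l.conjugate() * v / (abs(l) ** 2 + mu) for l, v in zip(lam, qh)]
    return [v / n for v in dft(ph, -1)]


n = 6
c = [0.5, 0.5] + [0.0] * (n - 2)      # singular A: even-symmetric stencil, n even
q = [1.0, 2.0, 3.0, 4.0, 5.0, 6.0]   # hypothetical data (one coordinate)
mu = 1e-2
p = tikhonov_circulant(c, q, mu)

# Dense check of the normal equations (A is real, so A^* = A^T).
A = [[c[(r - s) % n] for s in range(n)] for r in range(n)]
Ap = [sum(A[r][s] * p[s] for s in range(n)) for r in range(n)]
lhs = [sum(A[s][r] * Ap[s] for s in range(n)) + mu * p[r] for r in range(n)]
rhs = [sum(A[s][r] * q[s] for s in range(n)) for r in range(n)]
print(max(abs(u - v) for u, v in zip(lhs, rhs)))
```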

Since the quality of the solution obtained either by least squares or by Tikhonov regularization is not acceptable in many cases, in the following we study a new regularized problem depending on a parameter $\omega$, in which a (possibly block) ω-circulant matrix replaces the original circulant coefficient matrix.

First, we consider the basic interpolation case, where $A_\omega$ is the ω-circulant counterpart of $A$ with $\omega = e^{\mathrm{i}\psi}$, $\psi \in \mathbb{R}$. By Remark 3, the eigenvalues of the new matrix are given by the standard uniform sampling of the function $f(\theta + \psi/n)$. In this manner, the spectrum of $A$ is shifted. We observe that $A$ and $A_\omega$ are both singular if $f$ has a root in the grid $\{\theta_k\}_{k=0}^{n-1}$ and another root in the shifted grid $\{\theta_k + \psi/n\}_{k=0}^{n-1}$.

If the latter condition is not satisfied while $A$ is singular, then, although the initial system is singular, its perturbation has a unique solution, which is complex even though the original problem is defined in the real domain. However, for $\psi$ small enough in modulus, the imaginary part of the solution becomes negligible and the perturbed system is close to the original one.
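The shift of the spectrum can be checked on the singular example of Proposition 4. The sketch below takes the quadratic B-spline stencil with n = 8 (so that $A$ is singular), a hypothetical value $\psi = 0.1$, and $\omega = e^{\mathrm{i}\psi}$; it verifies that the eigenvalues of $A_\omega$ are the samples $f(\theta_k + \psi/n)$, whose smallest modulus is $\sin(\psi/(2n))$, and solves the perturbed system. The imaginary part of the computed solution is printed rather than asserted, since its size depends on the data.

```python
import cmath


def dft(x, sign):
    """Naive O(n^2) DFT: y_k = sum_m x_m * exp(sign * 2*pi*i*m*k/n); a stand-in for an FFT."""
    n = len(x)
    return [sum(x[m] * cmath.exp(sign * 2j * cmath.pi * m * k / n) for m in range(n))
            for k in range(n)]


def omega_weights(omega, n):
    """Diagonal of Omega_n; the principal n-th root of e^{i psi} is e^{i psi / n}."""
    alpha = complex(omega) ** (1.0 / n)
    return [alpha ** m for m in range(n)]


def omega_eigenvalues(c, omega):
    w = omega_weights(omega, len(c))
    return dft([w[m] * c[m] for m in range(len(c))], +1)


def omega_circulant_solve(c, omega, b):
    n = len(c)
    w = omega_weights(omega, n)
    lam = omega_eigenvalues(c, omega)
    y = dft([w[m] * b[m] for m in range(n)], +1)
    u = dft([ym / lm for ym, lm in zip(y, lam)], -1)
    return [u[m] / (n * w[m]) for m in range(n)]


def omega_circulant(c, omega):
    n = len(c)
    return [[c[r - s] if r >= s else omega * c[n + r - s] for s in range(n)]
            for r in range(n)]


n = 8
c = [0.5, 0.5] + [0.0] * (n - 2)      # first column of the singular A (s_0 = s_1 = 1/2, n even)
psi = 0.1                             # hypothetical angle; omega = e^{i psi} lies on the unit circle
omega = cmath.exp(1j * psi)
lam = omega_eigenvalues(c, omega)
shifted = [0.5 + 0.5 * cmath.exp(1j * (2 * cmath.pi * k / n + psi / n)) for k in range(n)]
print(min(abs(l) for l in lam))       # sin(psi / (2 n)) > 0: A_omega is nonsingular

q = [1.0, 0.0, 2.0, 1.0, 0.5, 3.0, -1.0, 2.0]   # hypothetical data (one coordinate)
p = omega_circulant_solve(c, omega, q)
A_omega = omega_circulant(c, omega)
residual = max(abs(sum(A_omega[r][s] * p[s] for s in range(n)) - q[r]) for r in range(n))
print(residual, max(abs(v.imag) for v in p))
```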

Secondly, we consider the interpolation of points and associated tangent vectors. The block (20) in the corresponding ω-block circulant matrix is

Then, this block is not necessarily singular, even if the corresponding block of the original matrix is, and we can solve the perturbed ω-block circulant system rather than (14). In this case, the structure of the symbol differs from (17) and (18).

Remark 4.

For the particular case of cubic B-spline curves, in Ref. [45] the point and tangent interpolation problem is solved by considering only the case of unit tangent vectors. Their proposal consists of a non-linear iterative method, whose convergence has not been proven.

6. Numerical Tests

In the present section we perform a few numerical tests in order to assess the quality of the solution obtained by adopting the ω-circulant regularization, in the setting of a singular interpolation operator. In all the following examples we employ the J-spline family [38].

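We denote by $p^\dagger$ and $p_\omega$ the control point vectors obtained by applying to the data the pseudo-inverse of the original interpolation matrix and of its ω-circulant counterpart, respectively (the corresponding block matrices are used in the Hermite case). The pseudo-inverses are computed through the spectral decompositions recalled in Section 2, in which each eigenvalue $\lambda_k$ is replaced by

$$\lambda_k^\dagger = \begin{cases} 1/\lambda_k, & \lambda_k \neq 0, \\ 0, & \lambda_k = 0, \end{cases}$$

where $\lambda_k$ is the k-th diagonal element in (3). For $\omega = 1$ the two pseudo-inverses, and hence the two control point vectors, immediately coincide.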
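For a circulant matrix the pseudo-inverse above amounts to inverting only the non-zero eigenvalues in the Fourier domain. The following sketch does so for the singular quadratic B-spline example with n = 6; in floating point an eigenvalue is treated as zero below a relative threshold, which is an assumption of this sketch and not a choice documented in the text. The result satisfies the normal equations and has no component along the kernel, as expected from a minimum-norm least-squares solution.

```python
import cmath


def dft(x, sign):
    """Naive O(n^2) DFT: y_k = sum_m x_m * exp(sign * 2*pi*i*m*k/n); a stand-in for an FFT."""
    n = len(x)
    return [sum(x[m] * cmath.exp(sign * 2j * cmath.pi * m * k / n) for m in range(n))
            for k in range(n)]


def pinv_circulant_solve(c, q, rel_tol=1e-12):
    """p = F_n D^dagger F_n^* q for A = C_n(c), with lambda^dagger = 1/lambda or 0.

    An eigenvalue counts as zero when |lambda_k| <= rel_tol * max_k |lambda_k| (assumed threshold).
    """
    n = len(c)
    lam = dft(c, +1)
    tol = rel_tol * max(abs(l) for l in lam)
    lam_dag = [0.0 if abs(l) <= tol else 1.0 / l for l in lam]
    qh = dft(q, +1)
    return [v / n for v in dft([ld * v for ld, v in zip(lam_dag, qh)], -1)]


n = 6
c = [0.5, 0.5] + [0.0] * (n - 2)          # singular A: lambda_3 = f(pi) = 0
q = [1.0, 2.0, 3.0, 4.0, 5.0, 6.0]       # hypothetical data, not in the range of A
p = pinv_circulant_solve(c, q)

A = [[c[(r - s) % n] for s in range(n)] for r in range(n)]
res = [sum(A[r][s] * p[s] for s in range(n)) - q[r] for r in range(n)]
normal = [sum(A[s][r] * res[s] for s in range(n)) for r in range(n)]   # A^T (A p - q)
kernel_component = sum(((-1) ** j) * p[j] for j in range(n))          # ker(A) = span{((-1)^j)_j}
print(max(abs(v) for v in normal), abs(kernel_component))
```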

We compare the subdivision curves generated from the two control point vectors, firstly in terms of interpolation, which is the main goal. With this purpose, consider the residuals , , and which are vectors of points. Notice that the last two residuals are independent of the systems of equations, but they are related to the interpolation problems (10) and (14) with the regularized solution.

By reasoning in local terms, we choose the norms

with $A_j$ the j-th row of matrix $A$ and $\|\cdot\|_2$ the vector 2-norm. This way we measure how far the interpolated information in , is from the approximations in , and by the maximum distance.

On the other hand, reasoning in global terms we consider the relative error with Frobenius matrix norms
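Assuming the residuals are stored as matrices with one point per row, and assuming that the relative error takes the form $\|R\|_F / \|Q\|_F$, with $R$ the residual matrix and $Q$ the matrix of interpolated data (an interpretation, since the exact formula is not reproduced here), the two measures can be sketched as follows; the example values are hypothetical. Moduli are used so that complex residuals from the ω-circulant solution are also handled.

```python
import math


def local_residual_norm(R):
    """max_j ||R_j||_2: largest Euclidean length among the residual rows (one row per point)."""
    return max(math.sqrt(sum(abs(v) ** 2 for v in row)) for row in R)


def relative_frobenius_error(R, Q):
    """||R||_F / ||Q||_F, with Q the matrix of interpolated data (one point per row)."""
    def fro(M):
        return math.sqrt(sum(abs(v) ** 2 for row in M for v in row))
    return fro(R) / fro(Q)


Q = [[6.0, 8.0], [0.0, 0.0]]      # hypothetical data points in R^2
R = [[3.0, 4.0], [0.0, 1.0]]      # hypothetical residuals
print(local_residual_norm(R))     # 5.0
print(relative_frobenius_error(R, Q))
```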

In order to evaluate the quality of each curve, proper fairness measures could be used [46,47], penalizing artifacts such as loops (see Figure 5a).

In each figure, the curve obtained with the least-squares solution is portrayed as a solid green line, while the one obtained with the regularized solution is represented as a dashed red line.

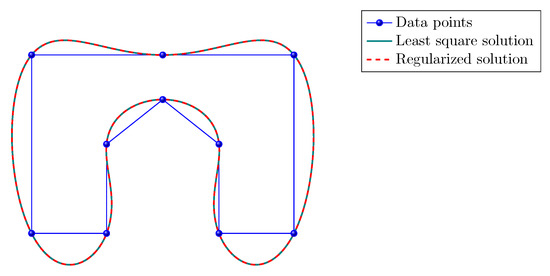

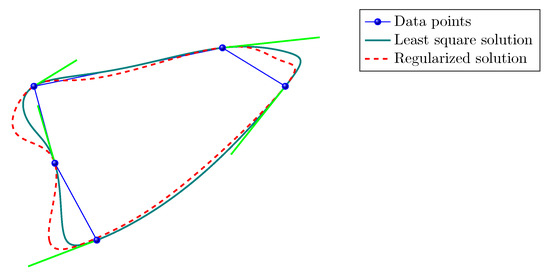

The solution computed with the ω-circulant matrix interpolates the given points, even though the singular matrix has been replaced by a nonsingular one. Indeed, in Figure 6, where we set , we only see one line because the two solutions visually match. We get similar results with values of $\omega$ close to . Hence, the quality of the interpolating solution is not affected by the perturbation.

Figure 6.

Point interpolation with a quintic B-spline curve (that belongs to the J-spline family). Green solid line: least square solution. Red dashed line: regularized solution.

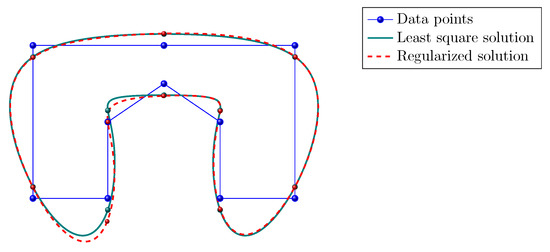

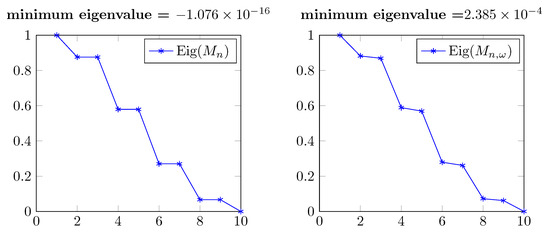

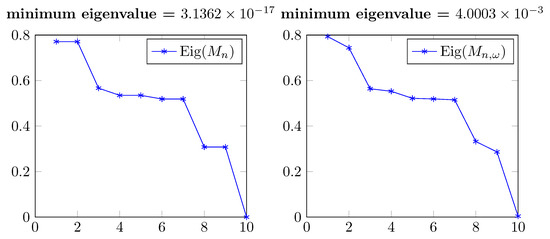

When a primal scheme with odd-symmetric mask as in (19) yields a singular interpolation operator, the data points are not interpolated by the least-squares solution (see Figure 7). The regularized solution corresponds to perturbing the spectrum of $A$, shifting the null eigenvalue as shown in Figure 8. As a result, the condition number is improved, but the approximation is not. In Figure 7 the results are shown for . The residual norms are

Figure 7.

Point interpolation with a J-spline curve (19) with singular interpolation operator (that belongs to the J-spline family). Green solid line: least square solution. Red dashed line: regularized solution.

Figure 8.

Eigenvalues of and (real part) in Figure 7.

It is worth noticing that the regularized curve is closer to the interpolation points, although this is not reflected in the norms.

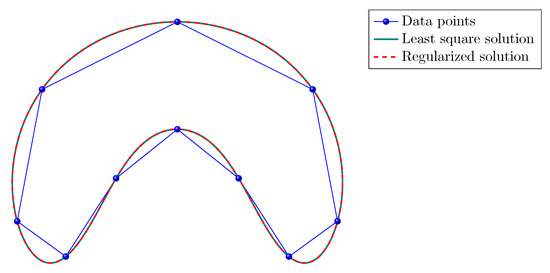

In the block setting for point and tangent interpolation (see Figure 9) the situation is similar. Even though the spectrum of the ω-block circulant matrix is perturbed with respect to that of the original block matrix and, unlike the latter, does not contain a null eigenvalue (see Figure 10), the solution is not improved; refer to Figure 9, in which . We observe that the points are interpolated, but the tangent interpolation is less accurate. In this case the residual norms are

Figure 9.

Point and tangent vectors interpolation with a quintic B-spline curve (that belongs to the J-spline family). Green solid line: least square solution. Red dashed line: regularized solution.

Figure 10.

Eigenvalues of and (real part) in Figure 9.

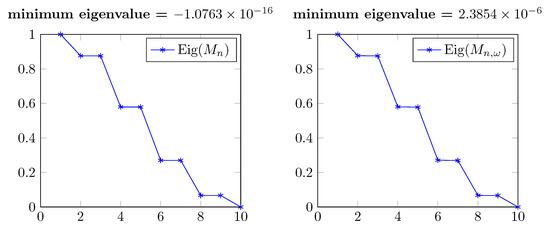

However, if we consider a known solution , we can generate from the columns of and obtain, for a suitable parameter , a solution that interpolates the points as accurately as the least square solution (see Figure 11 in which ), even though the spectra of and are different (see Figure 12).

Figure 11.

Point interpolation with a J-spline curve (19) with a singular interpolation operator for a known solution. Green solid line: least square solution. Red dashed line: regularized solution.

Figure 12.

Eigenvalues of and (real part) in Figure 11.

7. Conclusions

In the present work we explored two distinct applications of -circulant matrices.

After a brief introduction of this matrix algebra and its fundamental properties, we first described its role in the parallel solution and preconditioning of (block) triangular linear systems of Toeplitz type. We recalled an efficient classical algorithm, which relies on the well-known diagonalization of ω-circulants through the FFT, and studied the stability issue, which needs special attention because the condition number of the fast transform strongly depends on the parameter $\omega$.

Then we analyzed subdivision schemes, for which a few questions remain open.

Indeed, formulating the interpolation of points and tangent vectors with scalar subdivision schemes as an inverse problem may lead to a system of equations with a singular matrix. As a first remedy, we proposed to depart from the common approach of interpolating at integer parameters and to choose the parameter values according to the symmetry of the subdivision mask. In this way the model no longer contains a singular matrix, and we still benefit from the Fourier factorization of a non-singular circulant or block-circulant matrix.
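To illustrate why the choice of parameter values matters, the sketch below uses a closed uniform quadratic B-spline curve, an example of our own choosing and not one of the paper's test cases. Sampling the curve where the basis stencil is centred between two control points gives the weights (1/2, 1/2), whose circulant has a zero eigenvalue whenever the number n of control points is even; sampling where the stencil is centred at a control point gives the symmetric weights (1/8, 3/4, 1/8), whose circulant eigenvalues 3/4 + (1/4)cos(2πk/n) never drop below 1/2. Which of the two corresponds to "integer parameters" depends on how the curve is parametrized, and the helper names are hypothetical.

```python
import numpy as np


def circulant_column(n, stencil):
    """First column of the n x n circulant whose row j applies weight
    stencil[k] to control point p[j + k] (indices modulo n)."""
    col = np.zeros(n)
    for k, w in stencil.items():
        col[(-k) % n] += w
    return col


def circulant_matrix(col):
    """Dense circulant with first column col (column k is col rolled by k)."""
    return np.column_stack([np.roll(col, k) for k in range(len(col))])


def interpolate_closed(col, q):
    """Control points p with C p = q, solved with the FFT (C must be non-singular)."""
    lam = np.fft.fft(col)                      # eigenvalues of the circulant C
    return np.fft.ifft(np.fft.fft(q, axis=0) / lam[:, None], axis=0).real


n = 8
between = circulant_column(n, {0: 0.5, 1: 0.5})                  # centred between p[j], p[j+1]
centred = circulant_column(n, {-1: 0.125, 0: 0.75, 1: 0.125})    # centred at p[j]

print("min |eig|, stencil between nodes:", np.abs(np.fft.fft(between)).min())  # zero up to round-off
print("min |eig|, stencil at nodes     :", np.abs(np.fft.fft(centred)).min())  # 1/2 up to round-off

t = 2 * np.pi * np.arange(n) / n
q = np.column_stack([np.cos(t), np.sin(t)])    # data points on the unit circle
p = interpolate_closed(centred, q)             # control points of the interpolating curve
print("interpolation conditions met:", np.allclose(circulant_matrix(centred) @ p, q))
```

Only the non-singular choice can be inverted through the Fourier factorization; with the other one the same FFT division would hit a zero eigenvalue.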

In some cases, the singularity cannot be avoided while keeping the symmetry of the stencil. In such situations, we considered the least squares solution and the related -circulant regularization of the interpolation problem. More precisely, since the matrix of the interpolation model is singular, we perturbed its spectrum by replacing it with its -circulant counterpart.

As observed in the numerical experiments, the -regularization approach is not sufficient to solve the problem. When the original is singular, the -perturbation is well conditioned, but the interpolation condition is no longer imposed exactly, and this degrades the approximation quality. However, at least in some cases where the solution is known, the numerical solution of the -circulant linear system does interpolate the data points.
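A toy version of this behaviour is sketched below under assumptions of our own. A hypothetical singular circulant (first column (1/2, 1/2, 0, …, 0), n even) stands in for the subdivision matrix, and the right-hand side is generated from a known vector so that the system is consistent. The minimum-norm least squares solution is computed with `numpy.linalg.lstsq`, and the ε-circulant system (convention: c[i−j] on and below the diagonal, ε·c[i−j+n] above) is solved with the FFT. Here the two matrices differ only in the top-right entry, by (1 − ε)/2, so the residual of the regularized solution in the original system is confined to the first equation and equals ((1 − ε)/2)·x_ε[n−1].

```python
import numpy as np


def eps_circulant(c, eps):
    """Dense real eps-circulant: c[i - j] on/below the diagonal, eps * c[i - j + n] above."""
    n = len(c)
    return np.array([[c[i - j] if i >= j else eps * c[i - j + n] for j in range(n)]
                     for i in range(n)], dtype=float)


def eps_circulant_solve(c, eps, b):
    """FFT solve of C_eps x = b for real eps > 0 (the solution is then real up to round-off)."""
    n = len(c)
    d = eps ** (np.arange(n) / n)              # diagonal of D, delta = eps**(1/n) > 0
    lam = np.fft.fft(c * d)                    # eigenvalues of C_eps
    return (np.fft.ifft(np.fft.fft(d * b) / lam) / d).real


n, eps = 8, 0.5
c = np.zeros(n)
c[0] = c[1] = 0.5                              # hypothetical singular circulant (n even)
A = eps_circulant(c, 1.0)
x_true = np.cos(2 * np.pi * np.arange(n) / n) + 0.1 * np.arange(n)
b = A @ x_true                                 # consistent data from a known solution

x_ls = np.linalg.lstsq(A, b, rcond=None)[0]    # minimum-norm least squares solution
x_eps = eps_circulant_solve(c, eps, b)         # eps-circulant regularized solution

r_ls = A @ x_ls - b
r_eps = A @ x_eps - b                          # nonzero only in the first equation
print("least squares residual:", np.linalg.norm(r_ls))
print("eps-circulant residual:", np.linalg.norm(r_eps))
print("predicted first entry :", (1 - eps) / 2 * x_eps[-1], "computed:", r_eps[0])
```

Moving ε towards 1 shrinks the factor (1 − ε)/2, but in this toy model it also pushes the smallest eigenvalue of the perturbed matrix, of modulus (1 − ε^(1/n))/2, towards zero.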

A further open problem is the study of the solution Sol(: in this setting, we would like to explore the existence of an asymptotic expansion of the form

when a small parameter is considered.

An expansion of this type would open the door to simple and inexpensive extrapolation procedures for computing very accurate solutions. Preliminary numerical experiments have been performed, and the results are encouraging. We are convinced that this line of research is worth investigating in future work.
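The precise form of the expansion is not reproduced here, so the following sketch only illustrates the kind of extrapolation meant, under the explicitly hypothetical assumption that the solution behaves like Sol(h) = s0 + s1·h^p + O(h^(p+1)) in a small parameter h. One step of classical Richardson extrapolation then combines two solves, at h and h/2, so that the leading error term cancels. The function `richardson` and the toy `sol` are illustrative names, not part of the paper.

```python
import numpy as np


def richardson(sol, h, p=1):
    """One Richardson step, assuming sol(h) = s0 + s1 * h**p + O(h**(p + 1)).

    Combines sol(h) and sol(h / 2) so that the h**p term cancels.
    """
    fine = np.asarray(sol(h / 2.0))
    coarse = np.asarray(sol(h))
    return (2.0**p * fine - coarse) / (2.0**p - 1.0)


# Toy vector-valued "solution" with known limit s0 (purely illustrative data).
s0 = np.array([1.0, 2.0])
s1 = np.array([3.0, -1.0])
s2 = np.array([5.0, 4.0])


def sol(h):
    return s0 + s1 * h + s2 * h**2


h = 0.1
print("plain error       :", np.abs(sol(h) - s0).max())
print("extrapolated error:", np.abs(richardson(sol, h) - s0).max())
```

With p = 1 the first-order term disappears and the remaining error is −s2·h²/2, so each extra solve buys one order of accuracy, provided the assumed expansion actually holds.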

Author Contributions

Conceptualization, all the authors equally; methodology, all the authors equally; software, R.D.F.; validation, R.D.F. and R.L.S.; formal analysis, all the authors equally; investigation, all the authors equally; resources, all the authors equally; writing—original draft preparation, all the authors equally; writing—review and editing, all the authors equally; visualization, all the authors equally; supervision, S.S.-C.; project administration, all the authors equally; funding acquisition, S.S.-C. All authors have read and agreed to the published version of the manuscript.

Funding

The work of the three authors is partly supported by the Italian Agency INdAM-GNCS. The work of Stefano Serra-Capizzano is funded by the European High-Performance Computing Joint Undertaking (JU) under grant agreement No 955701. The JU receives support from the European Union’s Horizon 2020 research and innovation programme and Belgium, France, Germany, and Switzerland. Furthermore, Stefano Serra-Capizzano is grateful for the support of the Laboratory of Theory, Economics and Systems–Department of Computer Science at Athens University of Economics and Business.

Institutional Review Board Statement

Not applicable.

Data Availability Statement

The data were obtained using our software and are available upon request.

Acknowledgments

The three authors are members of the INdAM research group GNCS.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Garoni, C.; Serra-Capizzano, S. Generalized Locally Toeplitz Sequences: Theory and Applications, Vol. I; Springer: Cham, Switzerland, 2017.

- Ng, M.K. Iterative Methods for Toeplitz Systems. Numerical Mathematics and Scientific Computation; Oxford University Press: New York, NY, USA, 2004.

- Aricò, A.; Serra-Capizzano, S.; Tasche, M. Fast and numerically stable algorithms for discrete Hartley transforms and applications to preconditioning. Commun. Inf. Syst. 2005, 5, 21–68.

- Hansen, P.C.; Nagy, J.G.; O’Leary, D.P. Deblurring images. Matrices, spectra, and filtering. In Fundamentals of Algorithms; Society for Industrial and Applied Mathematics: Philadelphia, PA, USA, 2006; Volume 3.

- Kailath, T.; Olshevsky, V. Displacement structure approach to discrete-trigonometric-transform based preconditioners of G. Strang type and of T. Chan type. Toeplitz matrices: Structures, algorithms and applications. (Cortona, 1996). Calcolo 1998, 33, 191–208.

- Serra-Capizzano, S. A Korovkin-type theory for finite Toeplitz operators via matrix algebras. Numer. Math. 1999, 82, 117–142.

- Davis, P. Circulant Matrices; John Wiley and Sons: Hoboken, NJ, USA, 1979.

- Bini, D. Matrix structures in parallel matrix computations. Calcolo 1988, 25, 37–51.

- Chan, R.H.F.; Jin, X.Q. An introduction to iterative Toeplitz solvers. In Fundamentals of Algorithms; Society for Industrial and Applied Mathematics (SIAM): Philadelphia, PA, USA, 2007; Volume 5.

- Chan, R.H.; Ng, M.K. Conjugate gradient methods for Toeplitz systems. SIAM Rev. 1996, 38, 427–482.

- Chan, T.F.; Elman, H.C. Fourier analysis of iterative methods for elliptic problems. SIAM Rev. 1989, 31, 20–49.

- Huckle, T. A note on skewcirculant preconditioners for elliptic problems. Numer. Algorithms 1992, 2, 279–286.

- Huckle, T. Circulant and skewcirculant matrices for solving Toeplitz matrix problems. Iterative methods in numerical linear algebra (Copper Mountain, CO, 1990). SIAM J. Matrix Anal. Appl. 1992, 13, 767–777.

- Serra-Capizzano, S. The GLT class as a generalized Fourier analysis and applications. Linear Algebra Appl. 2006, 419, 180–233.

- Van Loan, C. Computational frameworks for the fast Fourier transform. In Frontiers in Applied Mathematics; Society for Industrial and Applied Mathematics (SIAM): Philadelphia, PA, USA, 1992; Volume 10.

- Aricò, A.; Donatelli, M.; Serra-Capizzano, S. V-cycle optimal convergence for certain (multilevel) structured linear systems. SIAM J. Matrix Anal. Appl. 2004, 26, 186–214.

- Bertaccini, D.; Ng, M.K. Block ω-circulant preconditioners for the systems of differential equations. Calcolo 2003, 40, 71–90.

- Serra-Capizzano, S.; Tablino-Possio, C. Multigrid methods for multilevel circulant matrices. SIAM J. Sci. Comput. 2004, 26, 55–85.

- Bini, D. Parallel solutions of certain Toeplitz linear systems. SIAM J. Comput. 1984, 13, 268–276.

- Cline, R.E.; Plemmons, R.J.; Worm, G. Generalized inverses of certain Toeplitz matrices. Linear Algebra Its Appl. 1974, 8, 25–33.

- Liu, J.; Wu, S.L. A fast block α-circulant preconditioner for all-at-once systems from wave equations. SIAM J. Matrix Anal. Appl. 2020, 41, 1912–1943.

- Hon, S.; Serra-Capizzano, S. A block Toeplitz preconditioner for all-at-once systems from linear wave equations. Electron. Trans. Numer. Anal. 2023, 58, 177–195.

- Danieli, F.; Wathen, A.J. All-at-once solution of linear wave equations. Numer. Linear Algebra Appl. 2021, 28, 16.

- Gander, M.J.; Halpern, L.; Rannou, J.; Ryan, J. A direct time parallel solver by diagonalization for the wave equation. SIAM J. Sci. Comput. 2019, 41, A220–A245.

- Gander, M.J.; Wu, S.L. A diagonalization-based parareal algorithm for dissipative and wave propagation problems. SIAM J. Numer. Anal. 2020, 58, 2981–3009.

- Soszyńska, M.; Richter, T. Adaptive time-step control for a monolithic multirate scheme coupling the heat and wave equation. BIT 2021, 61, 1367–1396.

- Bertaccini, D.; Durastante, F. Limited memory block preconditioners for fast solution of fractional partial differential equations. J. Sci. Comput. 2018, 77, 950–970.

- Jiang, Y.; Liu, J. Fast parallel-in-time quasi-boundary value methods for backward heat conduction problems. Appl. Numer. Math. 2023, 184, 325–339.

- Andersson, L.E.; Stewart, N.F. Introduction to the Mathematics of Subdivision Surfaces; Society for Industrial and Applied Mathematics: Philadelphia, PA, USA, 2010.

- Dyn, N. Linear and Nonlinear Subdivision Schemes in Geometric Modeling; School of Mathematical Sciences, Tel Aviv University: Tel Aviv, Israel, 2008.

- Dyn, N.; Levin, D. Subdivision schemes in geometric modelling. Acta Numer. 2002, 11, 73–144.

- Dyn, N.; Levin, D.; Gregory, J.A. A 4-point interpolatory subdivision scheme for curve design. Comput. Aided Geom. Des. 1987, 4, 257–268.

- Sabin, M. Analysis and Design of Univariate Subdivision Schemes; Springer: Berlin/Heidelberg, Germany, 2010.

- Chui, C.; de Villiers, J. Wavelet Subdivision Methods: Gems for Rendering Curves and Surfaces; CRC Press: Boca Raton, FL, USA, 2010.

- Schaefer, S.; Warren, J. Exact evaluation of limits and tangents for non-polynomial subdivision schemes. Comput. Aided Geom. Des. 2008, 25, 607–620.

- Daubechies, I.; Guskov, I.; Sweldens, W. Commutation for irregular subdivision. Constr. Approx. 2001, 17, 479–514.

- Dyn, N.; Gregory, J.A.; Levin, D. Analysis of uniform binary subdivision schemes for curve design. Constr. Approx. 1991, 7, 127–147.

- Rossignac, J.; Schaefer, S. J-splines. Comput. Aided Des. 2008, 40, 1024–1032.

- Dyn, N.; Floater, M.S.; Hormann, K. A C2 four-point subdivision scheme with fourth order accuracy and its extensions. In Mathematical Methods for Curves and Surfaces: TROMSØ 2004, Modern Methods in Mathematics; Nashboro Press: Brentwood, TN, USA, 2005; pp. 145–156.

- Engl, H.W.; Hanke, M.; Neubauer, A. Regularization of Inverse Problems; Springer: New York, NY, USA, 1996.

- Hoppe, H.; DeRose, T.; Duchamp, T.; Halstead, M.; Jin, H.; McDonald, J.; Schweitzer, J.; Stuetzle, W. Piecewise smooth surface reconstruction. In Proceedings of the 21st Annual Conference on Computer Graphics and Interactive Techniques, Orlando, FL, USA, 24–29 July 1994; pp. 295–302.

- Halstead, M.A.; Kass, M.; DeRose, T. Efficient, fair interpolation using Catmull-Clark surfaces. In Proceedings of the 20th Annual Conference on Computer Graphics and Interactive Techniques, Anaheim, CA, USA, 2–6 August 1993; pp. 35–44.

- Hansen, P.C. Rank-Deficient and Discrete Ill-Posed Problems: Numerical Aspects of Linear Inversion; Society for Industrial and Applied Mathematics: Philadelphia, PA, USA, 1999.

- Plonka, G. An efficient algorithm for periodic Hermite spline interpolation with shifted nodes. Numer. Algorithms 1993, 5, 51–62.

- Okaniwa, S.; Nasri, A.; Lin, H.; Abbas, A.; Kineri, Y.; Maekawa, T. Uniform B-spline curve interpolation with prescribed tangent and curvature vectors. IEEE Trans. Vis. Comput. Graph. 2012, 18, 1474–1487.

- Albrecht, G. Invariante Gütekriterien im Kurvendesign–Einige neuere Entwicklungen. Effiziente Methoden der Geometrischen Modellierung und der Wissenschaftlichen Visualisierung; Springer: Berlin/Heidelberg, Germany, 1999; pp. 134–148.

- Veltkamp, R.C.; Wesselink, W. Modeling 3D curves of minimal energy. Comput. Graph. Forum 1995, 14, 97–110.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).