Method for Determining the Dominant Type of Human Breathing Using Motion Capture and Machine Learning

Abstract

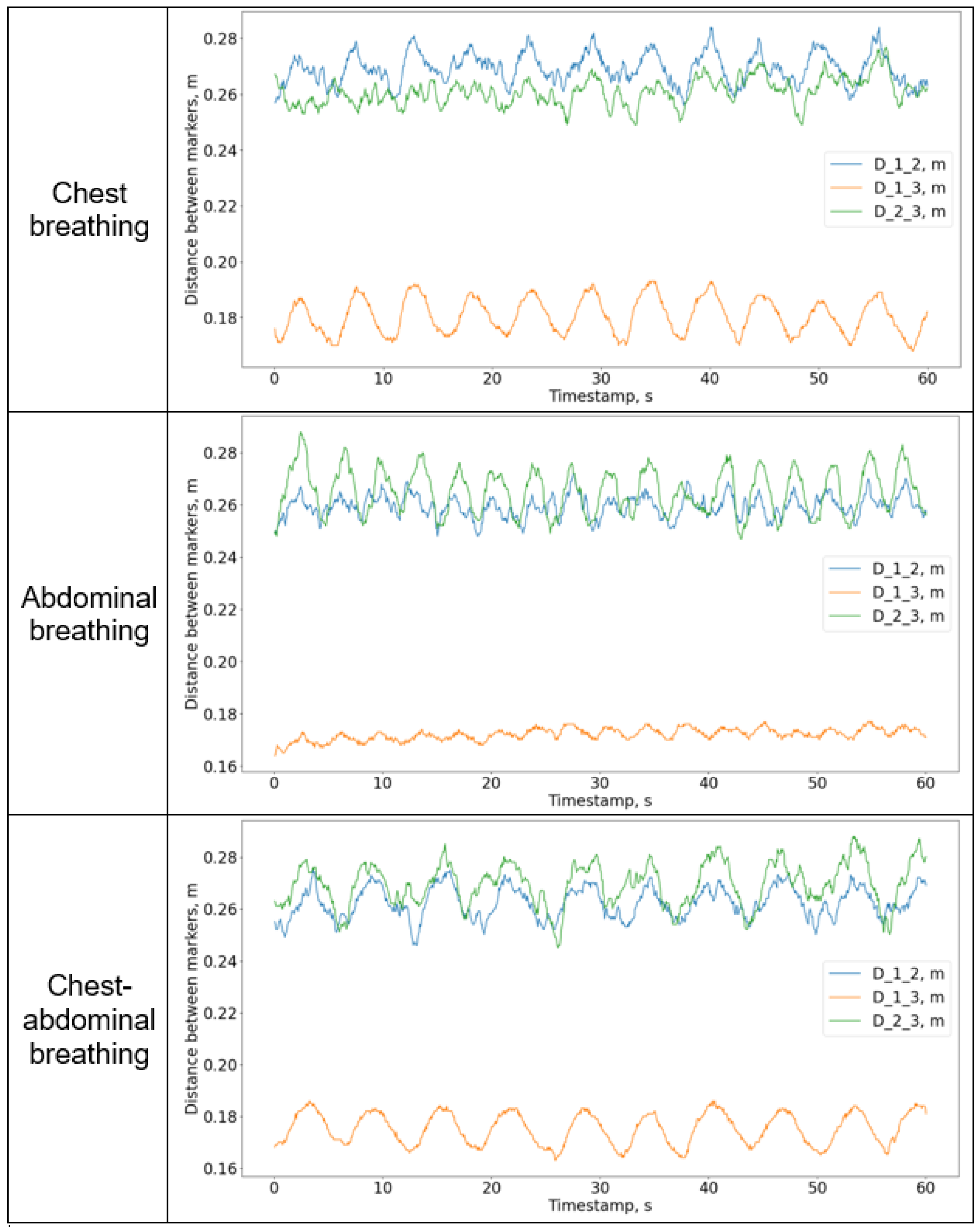

1. Introduction

- RQ1. Can kinematic data taken from key points of the torso be used to determine the person’s characteristic type of breathing with satisfactory accuracy for respiratory rehabilitation?

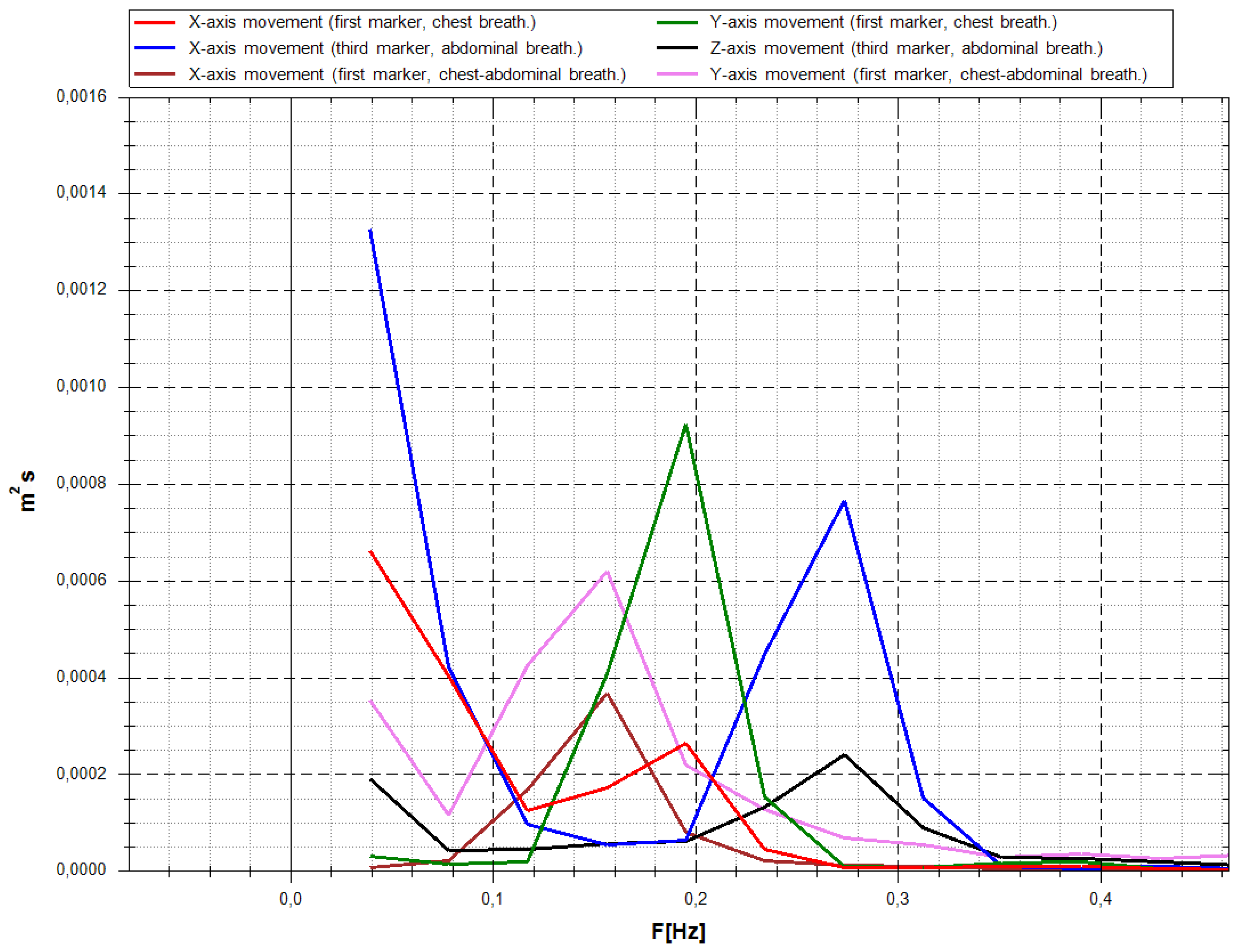

- RQ2. How do the frequency responses of the torso markers' movements differ between types of breathing?

2. Equipment and Methods of Capturing and Preparing Data

2.1. Motion Capture System

- Three VIVE Tracker 3.0 markers (the minimum number required to track the movements of the chest and abdomen);

- Three waist elastic belts with fasteners for markers;

- Two SteamVR Base Stations 2.0.

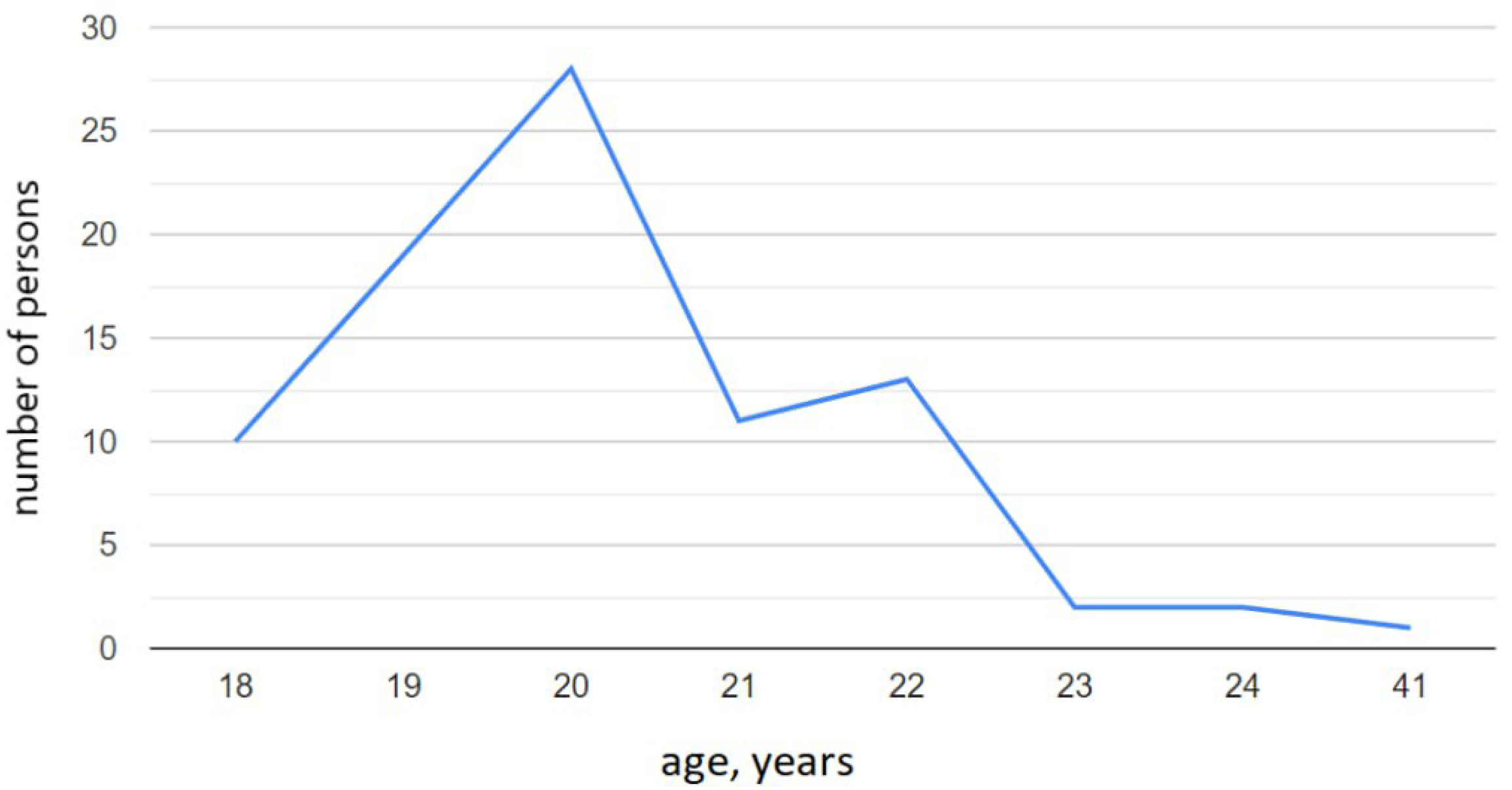

2.2. Study Design

- date of birth;

- biological sex;

- the most recent period of COVID-19 infection (if any);

- percentage of lung damage;

- the presence of respiratory diseases at the time of data collection.

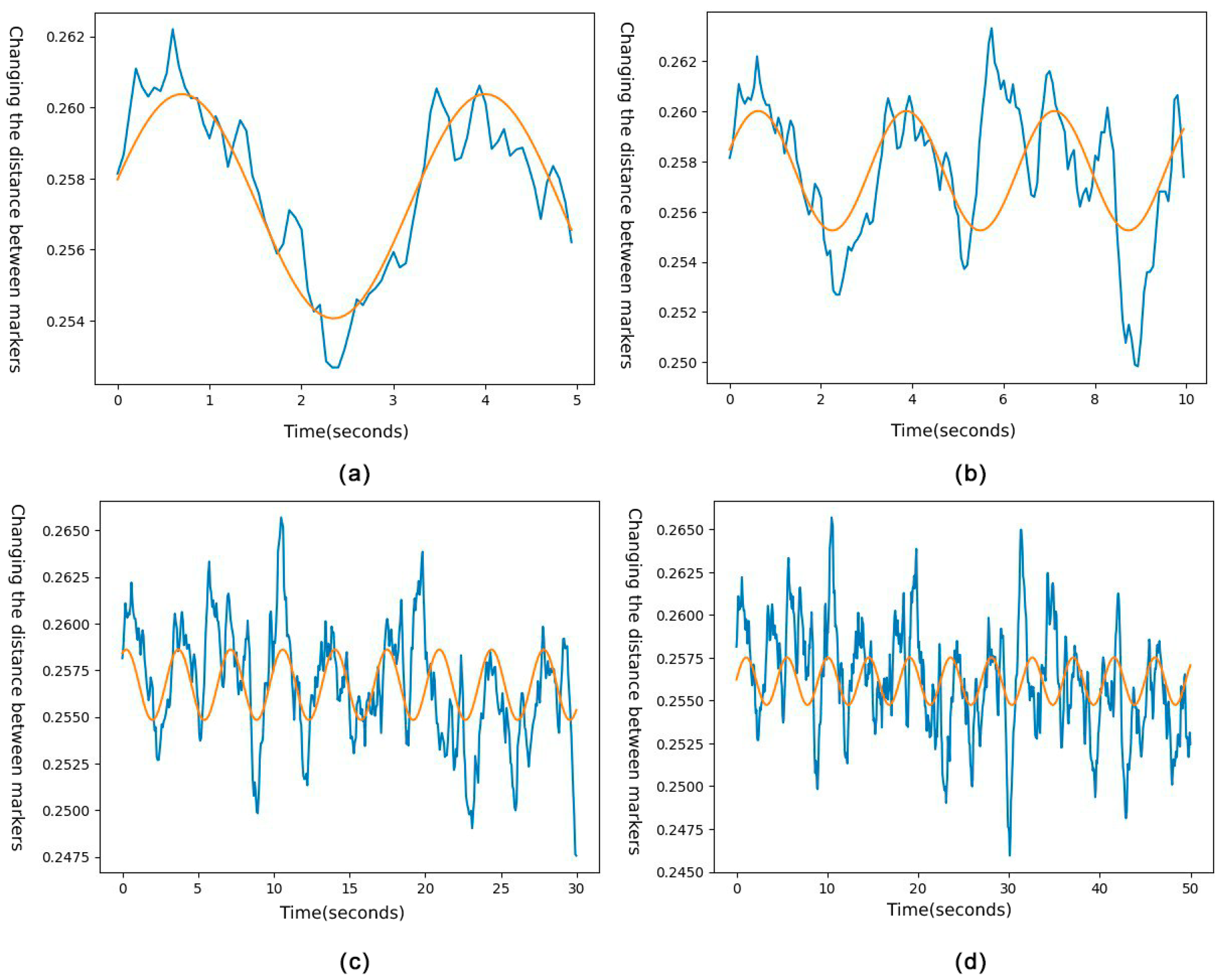

2.3. Data Preprocessing

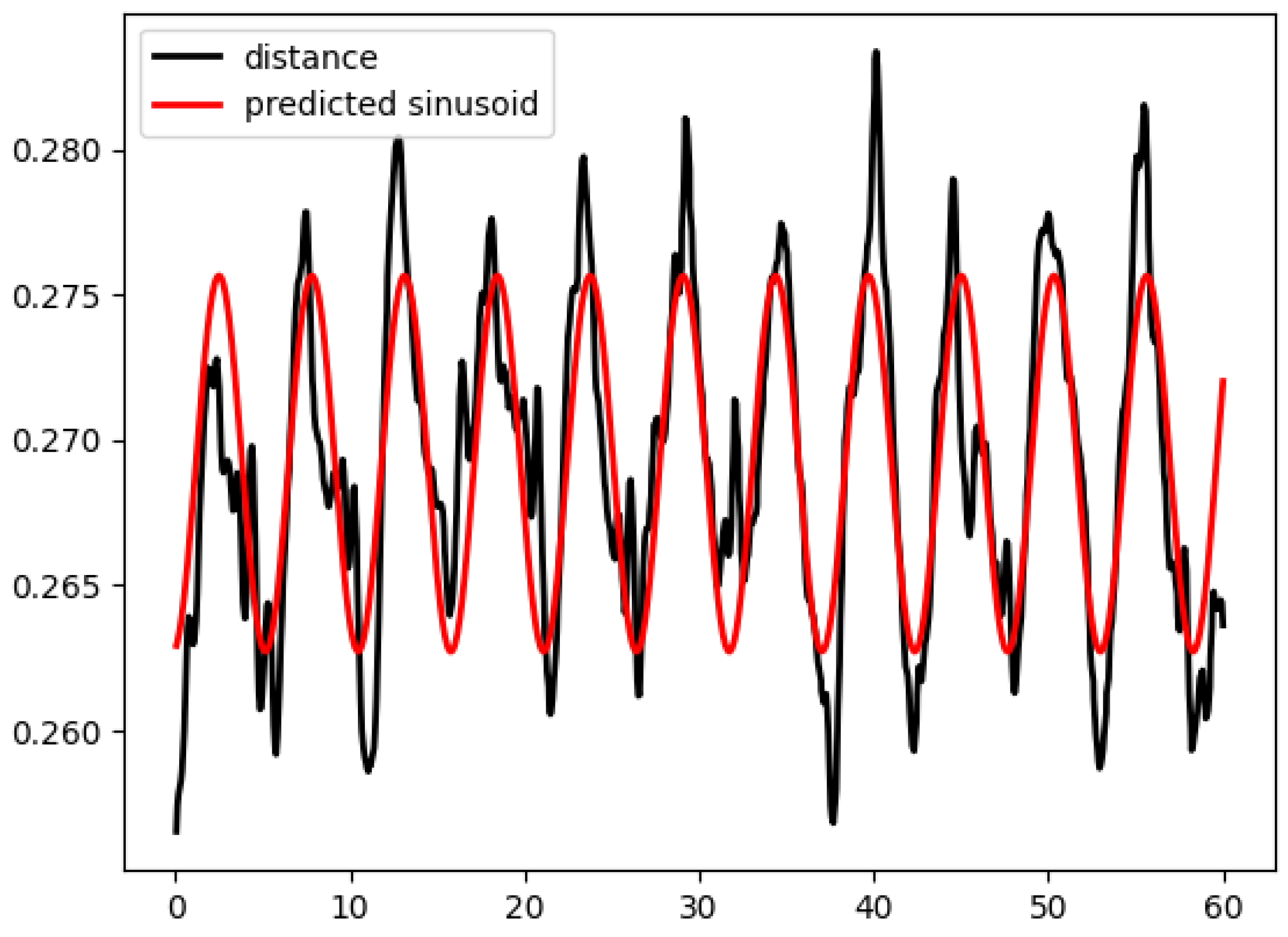

2.4. Signal Spectrum Analysis
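Section 2.4 compares the frequency content of marker movements (RQ2). As a minimal sketch, and not the authors' code, the amplitude spectrum of one marker coordinate can be computed with NumPy's real FFT, with the highest non-zero peak read off as the breathing frequency. The sampling rate, the synthetic signal and the function names `amplitude_spectrum` and `dominant_frequency` are hypothetical.

```python
import numpy as np


def amplitude_spectrum(signal, fs):
    """One-sided amplitude spectrum of a real, uniformly sampled signal."""
    x = np.asarray(signal, dtype=float)
    x = x - x.mean()  # drop the DC offset (the marker's mean position)
    n = x.size
    # Scale by 2/n so that a sine of amplitude A fitting an integer number of
    # periods into the window gives a peak of height A (DC/Nyquist bins excepted).
    spectrum = np.abs(np.fft.rfft(x)) * 2.0 / n
    freqs = np.fft.rfftfreq(n, d=1.0 / fs)
    return freqs, spectrum


def dominant_frequency(signal, fs):
    """Frequency (Hz) and height of the largest non-zero-frequency peak."""
    freqs, spectrum = amplitude_spectrum(signal, fs)
    k = int(np.argmax(spectrum[1:])) + 1  # skip the zero-frequency bin
    return freqs[k], spectrum[k]


# Hypothetical recording: 60 s of one chest-marker coordinate (metres) sampled
# at 50 Hz, breathing at 0.25 Hz (15 breaths/min) plus sensor noise.
fs = 50.0
t = np.arange(3000) / fs
rng = np.random.default_rng(0)
z = 0.004 * np.sin(2 * np.pi * 0.25 * t) + 0.0005 * rng.standard_normal(t.size)

f0, amp = dominant_frequency(z, fs)
print(f"dominant frequency: {f0:.3f} Hz ({60 * f0:.1f} breaths/min), amplitude {amp * 1000:.1f} mm")
```

Comparing such spectra for chest, abdominal and mixed recordings is one way to look at the differences RQ2 asks about; window length fixes the frequency resolution (here fs/n = 1/60 Hz).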

3. Coordinate-Based Machine Learning Models

3.1. Decision Tree Model

3.2. Random Forest Model

3.3. K-Neighbors Model

3.4. Catch 22 Classifier Model

3.5. Rocket Classifier Model

3.6. Hist Gradient Boosting Classifier

3.7. Other Models

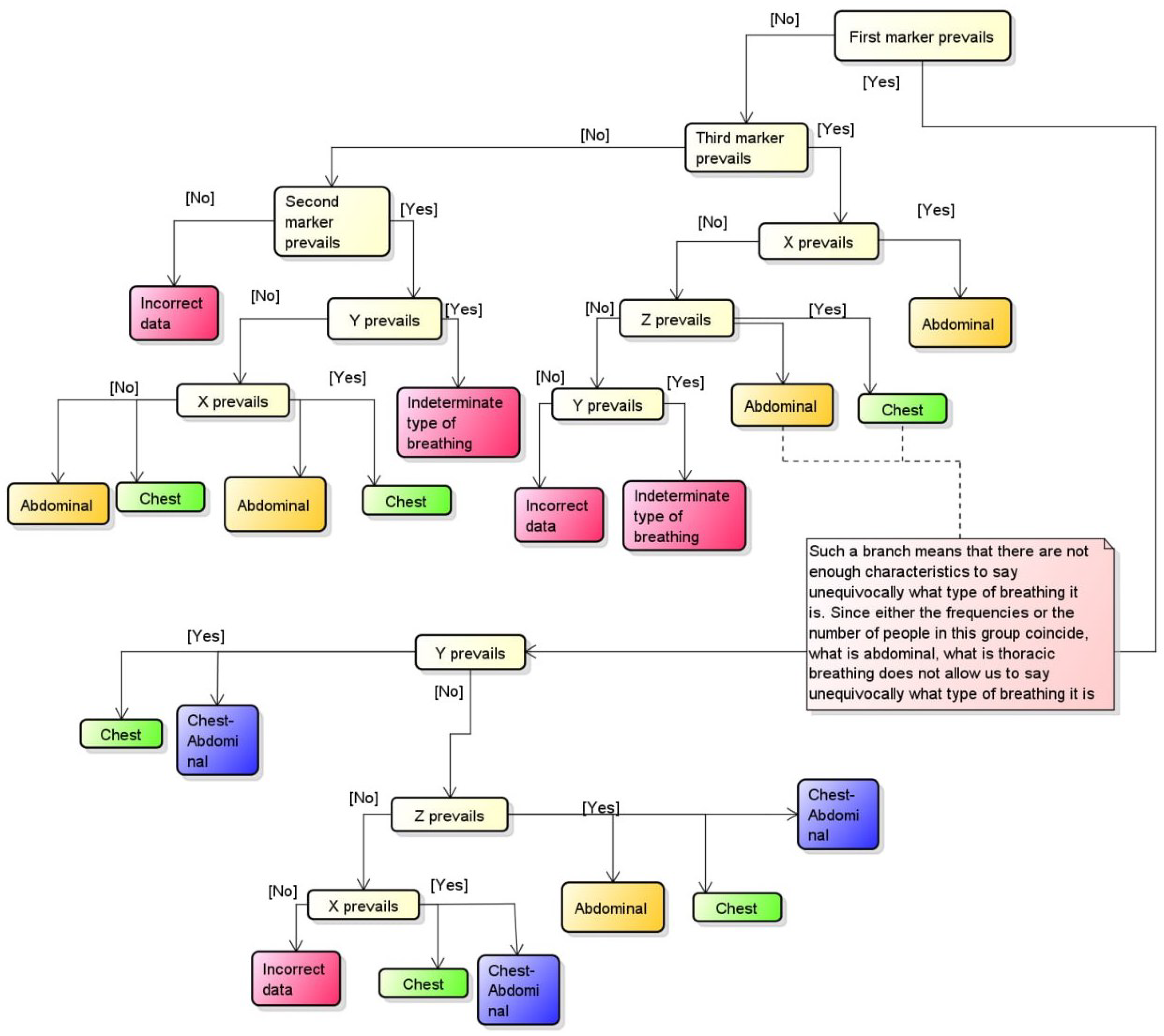

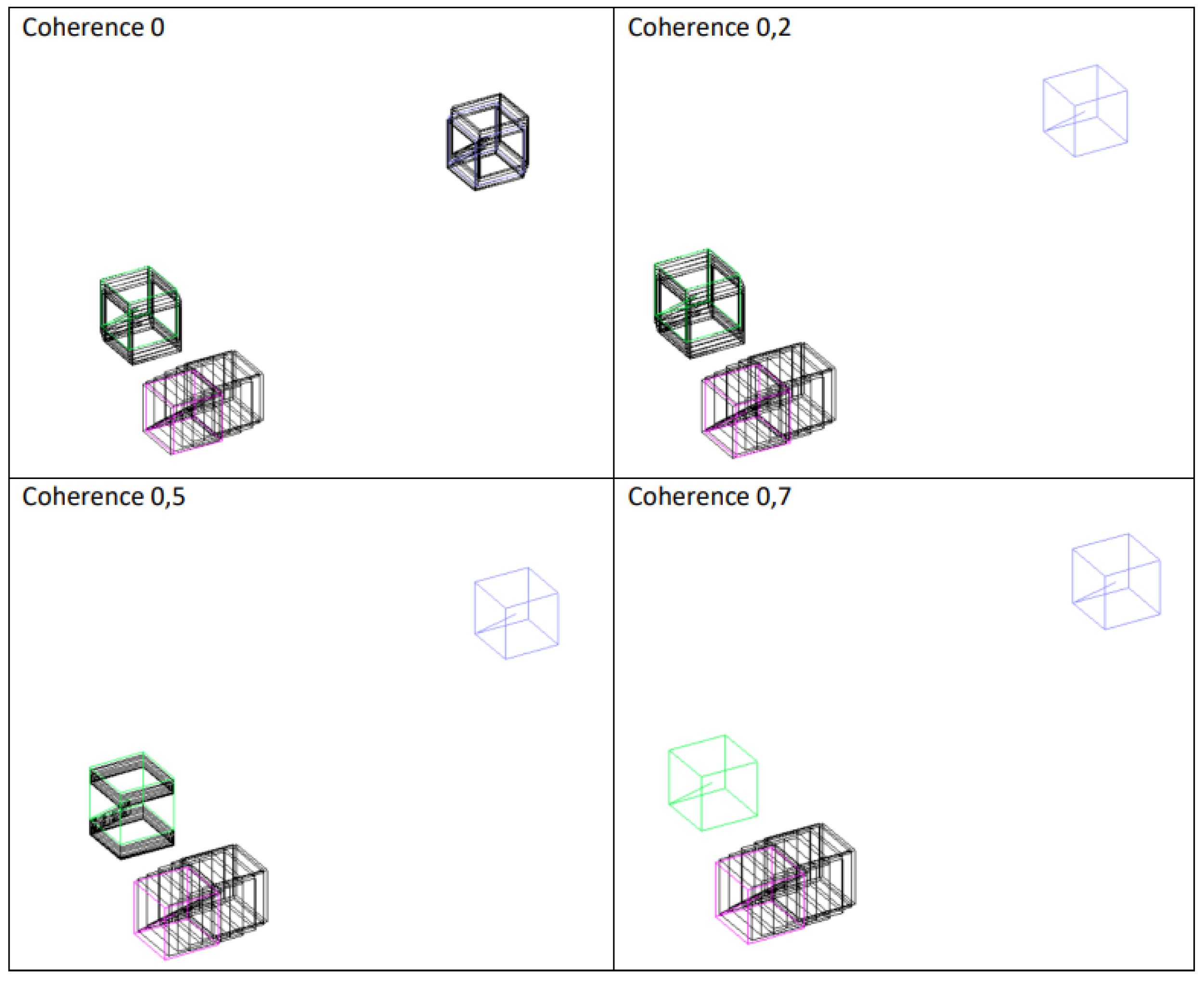

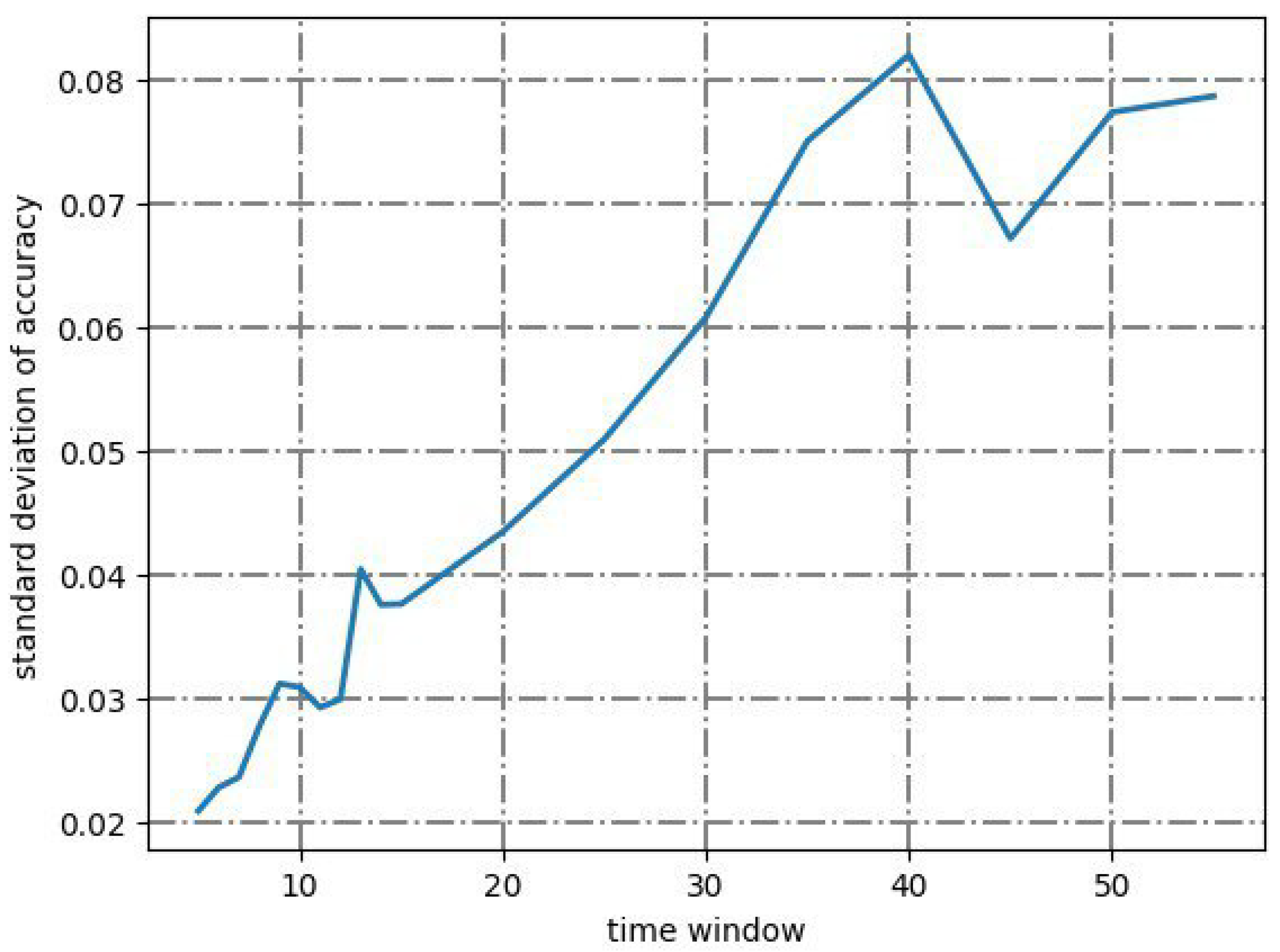

4. Two-Stage Method for Determining the Type of Human Breathing

4.1. Algorithm

Algorithm 1. Algorithm for determining the type of human breathing.

- AMD Ryzen 7 5700U (8 cores, 1.80 GHz);

- AMD Radeon™ RX 640;

- 16 GB RAM, 3200 MHz.

4.2. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| API | Application programming interface |
| AUC | Area under curve |
| EDA | Electrodermal activity |
| FCN | Fully convolutional network |
| GRU | Gated recurrent unit |
| IQR | Interquartile range |
| LR | Logistic regression |
| LSTM | Long short-term memory |
| MLP | Multilayer perceptron |
| RGB | Red, green, and blue |
| SpO2 | Peripheral oxygen saturation |
| SVM | Support vector machines |
| WSN | Wireless sensor network |

References

1. Tuah, N.M.; Ahmedy, F.; Gani, A.; Yong, L.N. A Survey on Gamification for Health Rehabilitation Care: Applications, Opportunities, and Open Challenges. Information 2021, 12, 91.

2. Proffitt, R. Gamification in rehabilitation: Finding the “just-right-challenge”. In Handbook of Research on Holistic Perspectives in Gamification for Clinical Practice; IGI Global: Hershey, PA, USA, 2015; pp. 132–157.

3. Courtney, R. The functions of breathing and its dysfunctions and their relationship to breathing therapy. Int. J. Osteopath. Med. 2009, 12, 78–85.

4. Bradley, D. Chapter 3: Patterns of breathing dysfunction in hyperventilation and breathing pattern disorders. In Recognizing and Treating Breathing Disorders, 2nd ed.; Chaitow, L., Bradley, D., Gilbert, C., Eds.; Churchill Livingstone: London, UK, 2014; pp. 51–59.

5. Lee, C.C. Security and Privacy in Wireless Sensor Networks: Advances and Challenges. Sensors 2020, 20, 744.

6. BenSaleh, M.S.; Saida, R.; Kacem, Y.H.; Abid, M. Wireless Sensor Network Design Methodologies: A Survey. J. Sens. 2020, 2020, 9592836.

7. Fascista, A. Toward Integrated Large-Scale Environmental Monitoring Using WSN/UAV/Crowdsensing: A Review of Applications, Signal Processing, and Future Perspectives. Sensors 2022, 22, 1824.

8. Pragadeswaran, S.; Madhumitha, S.; Gopinath, S. Certain Investigations on Military Applications of Wireless Sensor Networks. Int. J. Adv. Res. Sci. Commun. Technol. 2021, 3, 14–19.

9. Darwish, A.; Hassanien, A.E. Wearable and Implantable Wireless Sensor Network Solutions for Healthcare Monitoring. Sensors 2011, 11, 5561–5595.

10. Brown, C.; Chauhan, J.; Grammenos, A.; Han, J.; Hasthanasombat, A.; Spathis, D.; Xia, T.; Cicuta, P.; Mascolo, C. Exploring Automatic Diagnosis of COVID-19 from Crowdsourced Respiratory Sound Data. In Proceedings of the 26th ACM SIGKDD International Conference on Knowledge Discovery & Data Mining, New York, NY, USA, 6–10 July 2020; pp. 3474–3484.

11. Manzella, F.; Pagliarini, G.; Sciavicco, G.; Stan, I. The voice of COVID-19: Breath and cough recording classification with temporal decision trees and random forests. Artif. Intell. Med. 2023, 137, 102486.

12. Prpa, M.; Stepanova, E.R.; Schiphorst, T.; Riecke, B.E.; Pasquier, P. Inhaling and Exhaling: How Technologies Can Perceptually Extend Our Breath Awareness. In CHI ’20: Proceedings of the 2020 CHI Conference on Human Factors in Computing Systems; Association for Computing Machinery: New York, NY, USA, 2020; pp. 1–15.

13. Wang, H. “SOS Signal” in Breathing Sound: Rapid COVID-19 Diagnosis Based on Machine Learning. In CSSE ’22: Proceedings of the 5th International Conference on Computer Science and Software Engineering; Association for Computing Machinery: New York, NY, USA, 2022; pp. 522–526.

14. Alikhani, I.; Noponen, K.; Hautala, A.; Gilgen-Ammann, R.; Seppänen, T. Spectral fusion-based breathing frequency estimation; experiment on activities of daily living. BioMed. Eng. OnLine 2018, 17, 99.

15. Avuthu, B.; Yenuganti, N.; Kasikala, S.; Viswanath, A.; Sarath. A Deep Learning Approach for Detection and Analysis of Respiratory Infections in Covid-19 Patients Using RGB and Infrared Images. In IC3-2022: Proceedings of the 2022 Fourteenth International Conference on Contemporary Computing; Association for Computing Machinery: New York, NY, USA, 2022; pp. 367–371.

16. Gong, Y.; Zhang, Q.; Ng, B.H.; Li, W. BreathMentor: Acoustic-Based Diaphragmatic Breathing Monitor System. Proc. ACM Interact. Mob. Wearable Ubiquitous Technol. 2022, 6, 1–28.

17. Tran, H.A.; Ngo, Q.T.; Pham, H.H. An Application for Diagnosing Lung Diseases on Android Phone. In SoICT ’15: Proceedings of the 6th International Symposium on Information and Communication Technology; Association for Computing Machinery: New York, NY, USA, 2015; pp. 328–334.

18. Schoun, B.; Transue, S.; Choi, M.H. Real-Time Thermal Medium-Based Breathing Analysis with Python. In PyHPC’17: Proceedings of the 7th Workshop on Python for High-Performance and Scientific Computing; Association for Computing Machinery: New York, NY, USA, 2017.

19. Zubkov, A.; Donsckaia, A.; Busheneva, S.; Orlova, Y.; Rybchits, G. Razrabotka metoda opredeleniya dominiruyushchego tipa dykhaniya cheloveka na baze tekhnologiy komp’yuternogo zreniya, sistemy zakhvata dvizheniya i mashinnogo obucheniya. Model. Optim. Inf. Tekhnol. 2022, 10, 15. (In Russian)

20. Di Tocco, J.; Lo Presti, D.; Zaltieri, M.; Bravi, M.; Morrone, M.; Sterzi, S.; Schena, E.; Massaroni, C. Investigating Stroke Effects on Respiratory Parameters Using a Wearable Device: A Pilot Study on Hemiplegic Patients. Sensors 2022, 22, 6708.

21. Massaroni, C.; Cassetta, E.; Silvestri, S. A Novel Method to Compute Breathing Volumes via Motion Capture Systems: Design and Experimental Trials. J. Appl. Biomech. 2017, 33, 361–365.

22. Menolotto, M.; Komaris, D.S.; Tedesco, S.; O’Flynn, B.; Walsh, M. Motion Capture Technology in Industrial Applications: A Systematic Review. Sensors 2020, 20, 5687.

23. Gilbert, C. Chapter 5: Interaction of psychological and emotional variables with breathing dysfunction. In Recognizing and Treating Breathing Disorders, 2nd ed.; Chaitow, L., Bradley, D., Gilbert, C., Eds.; Churchill Livingstone: London, UK, 2014; pp. 79–91.

24. Cuña-Carrera, I.; Alonso Calvete, A.; González, Y.; Soto-González, M. Changes in abdominal muscles architecture induced by different types of breathing. Isokinet. Exerc. Sci. 2021, 30, 15–21.

25. Kristalinskiy, V.R. Teoriya Veroyatnostey v Sisteme Mathematica: Uchebnoye Posobiye; Lan: Saint Petersburg, Russia, 2021; p. 136. (In Russian)

26. Fulcher, B.D.; Jones, N.S. Highly Comparative Feature-Based Time-Series Classification. IEEE Trans. Knowl. Data Eng. 2014, 26, 3026–3037.

27. Kotu, V.; Deshpande, B. Chapter 4: Classification. In Predictive Analytics and Data Mining; Kotu, V., Deshpande, B., Eds.; Morgan Kaufmann: Boston, MA, USA, 2015; pp. 63–163.

28. Lindholm, A.; Wahlström, N.; Lindsten, F.; Schön, T.B. Machine Learning: A First Course for Engineers and Scientists; Cambridge University Press: Cambridge, UK, 2022.

29. Jenkins, G.M.; Watts, D.G. Spectral Analysis and Its Applications; Holden-Day Series in Time Series Analysis and Digital Signal Processing; Holden-Day: San Francisco, CA, USA, 1969; p. 525.

30. Michaelson, E.D.; Grassman, E.D.; Peters, W.R. Pulmonary mechanics by spectral analysis of forced random noise. J. Clin. Investig. 1975, 56, 1210–1230.

31. FRUND: A System for Solving Non-Linear Dynamic Equations. Available online: http://frund.vstu.ru/ (accessed on 24 January 2023).

32. Fujita, O. Metrics Based on Average Distance Between Sets. Jpn. J. Ind. Appl. Math. 2011, 30, 1–19.

33. Wainer, J.; Cawley, G. Nested cross-validation when selecting classifiers is overzealous for most practical applications. Expert Syst. Appl. 2021, 182, 115222.

34. Szczerbicki, E. Management of Complexity and Information Flow. In Agile Manufacturing: The 21st Century Competitive Strategy; Gunasekaran, A., Ed.; Elsevier Science Ltd.: Oxford, UK, 2001; pp. 247–263.

35. Han, J.; Kamber, M.; Pei, J. Chapter 8, Classification: Basic Concepts. In Data Mining, 3rd ed.; Han, J., Kamber, M., Pei, J., Eds.; The Morgan Kaufmann Series in Data Management Systems; Morgan Kaufmann: Boston, MA, USA, 2012; pp. 327–391.

36. Song, Y.Y.; Lu, Y. Decision tree methods: Applications for classification and prediction. Shanghai Arch. Psychiatry 2015, 27, 130–135.

37. Suthaharan, S. Chapter 6: A Cognitive Random Forest: An Intra- and Intercognitive Computing for Big Data Classification Under Cune Condition. In Cognitive Computing: Theory and Applications; Gudivada, V.N., Raghavan, V.V., Govindaraju, V., Rao, C., Eds.; Handbook of Statistics; Elsevier: Amsterdam, The Netherlands, 2016; Volume 35, pp. 207–227.

38. Cunningham, P.; Delany, S.J. k-Nearest neighbour classifiers. ACM Comput. Surv. 2007, 54, 1–25.

39. Lubba, C.H.; Sethi, S.S.; Knaute, P.; Schultz, S.R.; Fulcher, B.D.; Jones, N.S. catch22: CAnonical Time-series CHaracteristics. Data Min. Knowl. Discov. 2019, 33, 1821–1852.

40. Dempster, A.; Petitjean, F.; Webb, G.I. ROCKET: Exceptionally fast and accurate time series classification using random convolutional kernels. Data Min. Knowl. Discov. 2020, 34, 1454–1495.

41. Fawaz, H.I.; Forestier, G.; Weber, J.; Idoumghar, L.; Muller, P.A. Deep learning for time series classification: A review. Data Min. Knowl. Discov. 2019, 33, 917–963.

42. Piryonesi, S.M.; El-Diraby, T.E. Data Analytics in Asset Management: Cost-Effective Prediction of the Pavement Condition Index. J. Infrastruct. Syst. 2020, 26, 04019036.

43. Windeatt, T. Accuracy/Diversity and Ensemble MLP Classifier Design. IEEE Trans. Neural Netw. 2006, 17, 1194–1211.

44. Mboga, N.; Georganos, S.; Grippa, T.; Lennert, M.; Vanhuysse, S.; Wolff, E. Fully Convolutional Networks and Geographic Object-Based Image Analysis for the Classification of VHR Imagery. Remote Sens. 2019, 11, 597.

45. Charnes, A.; Frome, E.L.; Yu, P.L. The Equivalence of Generalized Least Squares and Maximum Likelihood Estimates in the Exponential Family. J. Am. Stat. Assoc. 1976, 71, 169–171.

46. Rakesh Kumar, S.; Gayathri, N.; Muthuramalingam, S.; Balamurugan, B.; Ramesh, C.; Nallakaruppan, M. Chapter 13: Medical Big Data Mining and Processing in e-Healthcare. In Internet of Things in Biomedical Engineering; Balas, V.E., Son, L.H., Jha, S., Khari, M., Kumar, R., Eds.; Academic Press: Cambridge, MA, USA, 2019; pp. 323–339.

47. Lipton, Z.; Elkan, C.; Narayanaswamy, B. Optimal Thresholding of Classifiers to Maximize F1 Measure. In Machine Learning and Knowledge Discovery in Databases: European Conference, ECML PKDD 2014; Springer: Berlin/Heidelberg, Germany, 2014; Volume 8725, pp. 225–239.

48. Yuan, C.; Cui, Q.; Sun, X.; Wu, Q.J.; Wu, S. Chapter Five: Fingerprint liveness detection using an improved CNN with the spatial pyramid pooling structure. In AI and Cloud Computing; Hurson, A.R., Wu, S., Eds.; Advances in Computers; Elsevier: Amsterdam, The Netherlands, 2021; Volume 120, pp. 157–193.

49. Bishop, C.M.; Nasrabadi, N.M. Pattern Recognition and Machine Learning. J. Electron. Imaging 2007, 16, 049901.

50. Massaroni, C.; Senesi, G.; Schena, E.; Silvestri, S. Analysis of breathing via optoelectronic systems: Comparison of four methods for computing breathing volumes and thoraco-abdominal motion pattern. Comput. Methods Biomech. Biomed. Eng. 2017, 20, 1678–1689.

| Study | Pros | Cons |
|---|---|---|
| Brown et al. [10] | + High accuracy (>80% AUC). + Convenient data collection: web and Android applications record data through a microphone. | - Does not determine the type of breathing. |
| Schoun et al. [18] | + No additional equipment is attached to the patient; the method can be used to analyze breathing during sleep and is not constrained by the patient's age. + High accuracy (>70%). | - Analyzes general respiratory metrics whose values can already be collected more accurately with established devices (spirometry, etc.). - The installation must be assembled around the patient. |
| Gong et al. [16] | + Higher accuracy than state-of-the-art models (approximately 95%). + The system consists of only one speaker. | - Determines only one type of breathing (diaphragmatic). |
| Tran et al. [17] | + Low operating cost: only an Android phone is required. + The average error in determining the type of breathing is 8%. | - Determines only one type of breathing. - Small sample: 17 students aged 23–24 years. |

| Hyperparameter | Short Description | Value |
|---|---|---|
| criterion | Function that measures the quality of a split at each node of the decision tree. | "gini" |
| splitter | Strategy used to choose the split at each node, based on the scores from the function given in criterion. | "best" |
| max_depth | Maximum depth of the decision tree. | 5 |
| min_samples_split | Minimum number of samples required to split an internal node. | 2 |
| min_samples_leaf | Minimum number of samples required at a leaf node. | 1 |
| max_features | Number of features considered when searching for the best split. | 3 |
| random_state | Seed that fixes the state of the random number generator. | 12 |
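With criterion = "gini" and splitter = "best", each node evaluates candidate thresholds and keeps the one whose two children have the lowest weighted Gini impurity, G = 1 − Σ p_k², where p_k is the share of class k in the node. The NumPy sketch below shows that search for a single feature; the helper names and the toy data are hypothetical, and a library implementation would additionally repeat the search over max_features randomly chosen features at every split.

```python
import numpy as np


def gini(labels):
    """Gini impurity 1 - sum_k p_k^2 of an array of class labels."""
    _, counts = np.unique(labels, return_counts=True)
    p = counts / counts.sum()
    return 1.0 - np.sum(p ** 2)


def split_impurity(feature, labels, threshold):
    """Weighted Gini impurity of the children produced by feature <= threshold."""
    mask = feature <= threshold
    left, right = labels[mask], labels[~mask]
    n = labels.size
    return left.size / n * gini(left) + right.size / n * gini(right)


def best_threshold(feature, labels):
    """Exhaustive search over midpoints between consecutive distinct values."""
    values = np.unique(feature)
    candidates = (values[:-1] + values[1:]) / 2.0
    scores = np.array([split_impurity(feature, labels, c) for c in candidates])
    i = int(np.argmin(scores))
    return candidates[i], scores[i]


# Hypothetical toy data: one amplitude feature and two breathing-type labels.
feature = np.array([0.1, 0.2, 0.3, 0.8, 0.9, 1.0])
labels = np.array(["chest", "chest", "chest", "abdominal", "abdominal", "abdominal"])

print(gini(labels))                     # 0.5 for a 50/50 parent node
print(best_threshold(feature, labels))  # threshold 0.55 gives two pure children
```

If the authors used scikit-learn (the parameter names in the table match its API), the whole configuration would read `DecisionTreeClassifier(criterion="gini", splitter="best", max_depth=5, min_samples_split=2, min_samples_leaf=1, max_features=3, random_state=12)`.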

| Hyperparameter | Short Description | Value |
|---|---|---|
| criterion | Function that measures the quality of a split at each node of a decision tree. | "gini" |
| n_estimators | Number of decision trees in the random forest. | 10 |
| max_depth | Maximum depth of the decision trees. | 5 |
| min_samples_split | Minimum number of samples required to split an internal node. | 2 |
| max_features | Number of features considered when searching for the best split; with "sqrt", max_features = sqrt(n_features). | "sqrt" |
| bootstrap | Whether each tree is trained on a bootstrap sample (random sampling with replacement) of the training set instead of the whole set. | True |
| random_state | Seed that fixes the state of the random number generator. | 12 |
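Assuming scikit-learn, whose `RandomForestClassifier` uses the same parameter names as the table, the configuration can be reproduced as a sketch on synthetic data. The data, the nine-feature layout (for example x, y, z of three markers) and the class labels are hypothetical; the printed attributes show what max_features = "sqrt" and max_depth = 5 imply for the individual trees.

```python
import numpy as np
from sklearn.ensemble import RandomForestClassifier

# Hypothetical data: 9 features per sample drawn around two class centres.
rng = np.random.default_rng(0)
n_per_class = 40
X = np.vstack([rng.normal(loc=c, scale=1.0, size=(n_per_class, 9)) for c in (0.0, 3.0)])
y = np.repeat([0, 1], n_per_class)

# Hyperparameters copied from the random forest table.
forest = RandomForestClassifier(
    criterion="gini",
    n_estimators=10,
    max_depth=5,
    min_samples_split=2,
    max_features="sqrt",
    bootstrap=True,
    random_state=12,
)
forest.fit(X, y)

# Each tree considers floor(sqrt(9)) = 3 candidate features per split
# and is grown on its own bootstrap sample of the 80 training rows.
print(len(forest.estimators_), forest.estimators_[0].max_features_)
print(max(tree.get_depth() for tree in forest.estimators_))
print(forest.score(X, y))
```

Training-set accuracy is printed only to show the model runs; it says nothing about generalization, which the paper evaluates separately.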

| Hyperparameter | Short Description | Value |
|---|---|---|
| n_neighbors | Number of neighbors. | 4 |
| weights | Weight function used in prediction; with "distance", each neighbor's vote is weighted by the inverse of its distance. | "distance" |
| metric | Metric used to compute the distance to neighbors. | "minkowski" |
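The settings n_neighbors = 4, weights = "distance" and metric = "minkowski" mean that the four closest training samples vote, each with a weight inversely proportional to its distance. The from-scratch NumPy sketch below illustrates that rule; it is not the library implementation, `knn_predict` and the toy points are hypothetical, and the Minkowski order p = 2 (Euclidean distance) is an assumption because the table does not list p.

```python
import numpy as np
from collections import defaultdict


def knn_predict(X_train, y_train, x, k=4, p=2, weighted=True):
    """Classify one query point x by a (distance-weighted) k-nearest-neighbour vote."""
    # Minkowski distance of order p; p = 2 is the Euclidean distance.
    d = np.sum(np.abs(X_train - x) ** p, axis=1) ** (1.0 / p)
    nearest = np.argsort(d)[:k]
    dist = d[nearest]
    if not weighted:
        w = np.ones(nearest.size)       # plain majority vote
    elif np.any(dist == 0):
        w = (dist == 0).astype(float)   # exact matches take the whole vote
    else:
        w = 1.0 / dist                  # closer neighbours vote more strongly
    votes = defaultdict(float)
    for idx, weight in zip(nearest, w):
        votes[y_train[idx]] += weight
    return max(votes, key=votes.get)


# Hypothetical 2-D training points (e.g. two summary features per recording).
X_train = np.array([[0, 0], [0, 2], [2, 0], [3, 3], [10, 10]], dtype=float)
y_train = np.array(["chest", "chest", "chest", "abdominal", "abdominal"])
query = np.array([2.9, 2.9])

print(knn_predict(X_train, y_train, query, weighted=False))  # chest
print(knn_predict(X_train, y_train, query))                  # abdominal
```

Three of the four nearest points are "chest", so a plain majority vote returns "chest", but the single "abdominal" point is more than 20 times closer than the others and wins the distance-weighted vote.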

| Hyperparameter | Short Description | Value |
|---|---|---|
| outlier_norm | Whether to normalize each series when computing the two outlier-related catch22 features. | True |
| n_jobs | Number of parallel jobs used for computation (−1 uses all available processors). | −1 |
| random_state | Seed that fixes the state of the random number generator. | 12 |

| Hyperparameter | Short Description | Value |
|---|---|---|
| num_kernels | Number of random convolutional kernels. | 500 |
| n_jobs | Number of parallel jobs used for computation (−1 uses all available processors). | −1 |
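ROCKET (Dempster et al. [40]) turns each series into features by convolving it with many random kernels (num_kernels = 500 above) and passing two statistics per kernel, the proportion of positive values (ppv) and the maximum, to a linear classifier. The NumPy sketch below is a simplified single-channel re-implementation written for illustration, not the library classifier the authors may have used. The helper names and the synthetic series are hypothetical, and the ridge step uses a fixed penalty instead of the cross-validated one of the original method.

```python
import numpy as np


def generate_kernels(input_length, num_kernels, rng):
    """Sample random kernels following the ROCKET recipe."""
    kernels = []
    for _ in range(num_kernels):
        length = int(rng.choice([7, 9, 11]))
        weights = rng.normal(0.0, 1.0, length)
        weights -= weights.mean()  # mean-centred weights
        bias = rng.uniform(-1.0, 1.0)
        # Dilation sampled on an exponential scale so the kernel fits the series.
        max_exponent = np.log2((input_length - 1) / (length - 1))
        dilation = int(2 ** rng.uniform(0.0, max_exponent))
        # Zero padding on both ends with probability 1/2.
        padding = (length - 1) * dilation // 2 if rng.integers(2) else 0
        kernels.append((weights, bias, dilation, padding))
    return kernels


def apply_kernel(x, weights, bias, dilation, padding):
    """Dilated convolution of series x; returns (ppv, max)."""
    xp = np.pad(x, padding)
    n_out = xp.size - (weights.size - 1) * dilation
    # Row i holds the dilated window starting at position i.
    idx = np.arange(n_out)[:, None] + dilation * np.arange(weights.size)[None, :]
    out = xp[idx] @ weights + bias
    return np.mean(out > 0), out.max()


def transform(X, kernels):
    """Z-normalise each series, then compute 2 features per kernel."""
    X = (X - X.mean(axis=1, keepdims=True)) / (X.std(axis=1, keepdims=True) + 1e-8)
    features = np.empty((X.shape[0], 2 * len(kernels)))
    for i, x in enumerate(X):
        for j, kernel in enumerate(kernels):
            features[i, 2 * j:2 * j + 2] = apply_kernel(x, *kernel)
    return features


def fit_ridge(F, y, alpha=10.0):
    """Ridge regression on one-vs-rest +/-1 targets (a ridge classifier)."""
    classes = np.unique(y)
    Y = np.where(y[:, None] == classes[None, :], 1.0, -1.0)
    mu, sd = F.mean(axis=0), F.std(axis=0)
    sd[sd < 1e-12] = 1.0  # constant features stay at 0 after centring
    Z = np.hstack([(F - mu) / sd, np.ones((F.shape[0], 1))])  # intercept (penalised too, for brevity)
    W = np.linalg.solve(Z.T @ Z + alpha * np.eye(Z.shape[1]), Z.T @ Y)
    return classes, mu, sd, W


def predict_ridge(model, F):
    classes, mu, sd, W = model
    Z = np.hstack([(F - mu) / sd, np.ones((F.shape[0], 1))])
    return classes[np.argmax(Z @ W, axis=1)]


def make_series(period, n, rng, length=150):
    """Hypothetical noisy sinusoids with random phase."""
    t = np.arange(length)
    phase = rng.uniform(0.0, 2 * np.pi, size=(n, 1))
    return np.sin(2 * np.pi * t / period + phase) + 0.3 * rng.standard_normal((n, length))


rng = np.random.default_rng(1)
# Class 0 oscillates slowly (period 40 samples), class 1 quickly (period 10).
X_train = np.vstack([make_series(40, 20, rng), make_series(10, 20, rng)])
X_test = np.vstack([make_series(40, 20, rng), make_series(10, 20, rng)])
y_train = y_test = np.repeat([0, 1], 20)

kernels = generate_kernels(150, num_kernels=500, rng=rng)  # 500 as in the table
model = fit_ridge(transform(X_train, kernels), y_train)
accuracy = np.mean(predict_ridge(model, transform(X_test, kernels)) == y_test)
print(f"test accuracy: {accuracy:.2f}")
```

Because the kernels are random and never trained, the transform is cheap to define but its cost grows linearly with num_kernels and series length, which is in line with Rocket's long training and working times in the results table.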

| Hyperparameter | Short Description | Value |
|---|---|---|
| random_state | Seed that fixes the state of the random number generator. | 15 |
| learning_rate | Learning rate (shrinkage factor applied to the leaf values of each new tree). | 1 |
| max_depth | Maximum depth of each decision tree. | 15 |
| loss | Loss function minimized during boosting. | "log_loss" |

| Model | Number of Models | Accuracy | Precision | Recall | F1-Measure | Training Time, s | Working Time, s | Log Loss |
|---|---|---|---|---|---|---|---|---|
| Random Forest Classifier | 1 | 0.53 | 0.5 | 0.54 | 0.47 | 0.018 | 0.002 | 8.67 |
| Decision Tree Classifier | 1 | 0.51 | 0.46 | 0.51 | 0.46 | 0.004 | 0.001 | 13.24 |
| Catch 22 Classifier | 1 | 0.63 | 0.65 | 0.63 | 0.64 | 8.926 | 8.665 | 0.94 |
| Rocket Classifier | 1 | 0.46 | 0.45 | 0.46 | 0.46 | 11.507 | 5.437 | 18.68 |
| K-Neighbors Classifier | 1 | 0.41 | 0.38 | 0.41 | 0.36 | 0.006 | 0.001 | 10.8 |
| Hist Gradient Boosting Classifier | 1 | 0.42 | 0.51 | 0.42 | 0.37 | 0.324 | 0.009 | 4.22 |
| Random Forest Classifier | 2 | 0.46 | 0.46 | 0.46 | 0.46 | 0.047 | 0.485 | 6.78 |
| Decision Tree Classifier | 2 | 0.52 | 0.52 | 0.52 | 0.52 | 0.004 | 0.11 | 13.21 |
| Catch 22 Classifier | 2 | 0.57 | 0.57 | 0.57 | 0.57 | 19.02 | 34.609 | 0.96 |
| Rocket Classifier | 2 | 0.48 | 0.48 | 0.48 | 0.48 | 35.318 | 64.687 | 17.83 |
| K-Neighbors Classifier | 2 | 0.4 | 0.4 | 0.4 | 0.4 | 0.005 | 0.407 | 7.89 |
| Hist Gradient Boosting Classifier | 2 | 0.81 | 0.83 | 0.82 | 0.82 | 0.875 | 0.75 | 1.98 |
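The results table does not state how per-class Precision, Recall and F1 were averaged. In 10 of the 12 rows Accuracy and Recall coincide, which is consistent with support-weighted averaging, because weighted recall always equals accuracy. The NumPy sketch below computes the metrics under that assumption; the helper names and predictions are hypothetical. It also shows that hard 0/1 probabilities make log loss large, which is one plausible reason the tree-based rows show much higher log-loss values than Catch 22.

```python
import numpy as np


def weighted_prf(y_true, y_pred):
    """Precision, recall and F1 averaged over classes, weighted by class support."""
    classes, support = np.unique(y_true, return_counts=True)
    prf = []
    for c in classes:
        tp = np.sum((y_pred == c) & (y_true == c))
        n_pred = np.sum(y_pred == c)
        precision = tp / n_pred if n_pred else 0.0
        recall = tp / np.sum(y_true == c)
        f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
        prf.append((precision, recall, f1))
    weights = support / support.sum()
    return tuple(float(v) for v in weights @ np.array(prf))


def log_loss(y_true, proba, eps=1e-15):
    """Mean negative log-probability assigned to the true class (clipped at eps)."""
    p_true = np.clip(proba[np.arange(y_true.size), y_true], eps, 1.0)
    return float(-np.mean(np.log(p_true)))


# Hypothetical predictions for 6 samples and 3 breathing types (0, 1, 2).
y_true = np.array([0, 0, 1, 1, 2, 2])
y_pred = np.array([0, 1, 1, 1, 2, 0])

accuracy = float(np.mean(y_true == y_pred))
precision, recall, f1 = weighted_prf(y_true, y_pred)
print(accuracy, precision, recall, f1)  # weighted recall equals accuracy

# A classifier that outputs hard 0/1 probabilities (e.g. a tree with pure
# leaves) pays -log(eps), about 34.5, for every wrong answer.
hard_proba = np.eye(3)[y_pred]
print(log_loss(y_true, hard_proba))
```

Here two of six answers are wrong, so the log loss is 2 × 34.5 / 6 ≈ 11.5 even though accuracy is 0.67; a model with softer probabilities and the same accuracy would score far lower.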

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

How to Cite

Orlova, Y.; Gorobtsov, A.; Sychev, O.; Rozaliev, V.; Zubkov, A.; Donsckaia, A. Method for Determining the Dominant Type of Human Breathing Using Motion Capture and Machine Learning. Algorithms 2023, 16, 249. https://doi.org/10.3390/a16050249
